PLearn 0.1

Layer in an RBM consisting of rate-coded units.

#include <RBMRateLayer.h>

Public Member Functions | |

| RBMRateLayer (real the_learning_rate=0.) | |

| Default constructor. | |

| virtual void | generateSample () |

| generate a sample, and update the sample field | |

| virtual void | generateSamples () |

| batch version | |

| virtual void | computeExpectation () |

| compute the expectation | |

| virtual void | computeExpectations () |

| batch version | |

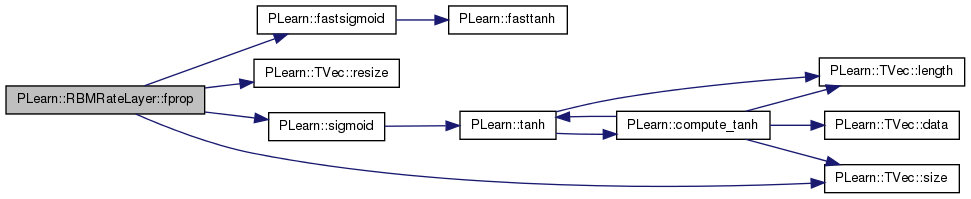

| virtual void | fprop (const Vec &input, Vec &output) const |

| forward propagation | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| back-propagates the output gradient to the input | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| back-propagates the output gradient to the input, in mini-batch mode | |

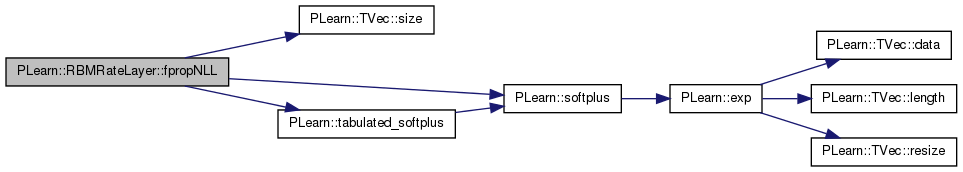

| virtual real | fpropNLL (const Vec &target) |

| Computes the negative log-likelihood of target given the internal activations of the layer. | |

| virtual void | bpropNLL (const Vec &target, real nll, Vec &bias_gradient) |

| Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations. | |

| virtual real | energy (const Vec &unit_values) const |

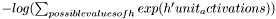

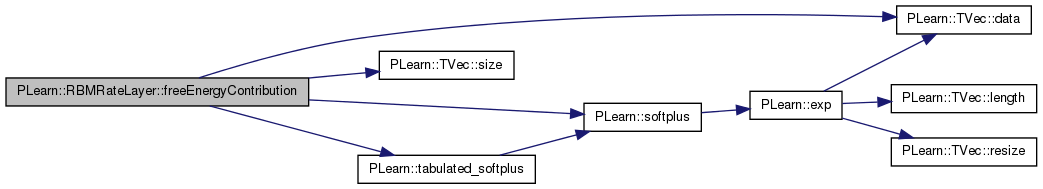

| virtual real | freeEnergyContribution (const Vec &unit_activations) const |

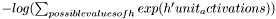

| Computes the contribution of this layer to the free energy. This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed. | |

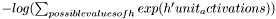

| virtual void | freeEnergyContributionGradient (const Vec &unit_activations, Vec &unit_activations_gradient, real output_gradient=1, bool accumulate=false) const |

| Computes the gradient of the result of freeEnergyContribution with respect to unit_activations. | |

| virtual int | getConfigurationCount () |

| Returns the number of different configurations the layer can be in. | |

| virtual void | getConfiguration (int conf_index, Vec &output) |

| Computes the conf_index configuration of the layer. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMRateLayer * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| int | n_spikes |

| Maximum number of spikes for each neuron. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| Vec | tmp_softmax |

Private Types | |

| typedef RBMLayer | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Layer in an RBM consisting of rate-coded units.

Definition at line 51 of file RBMRateLayer.h.

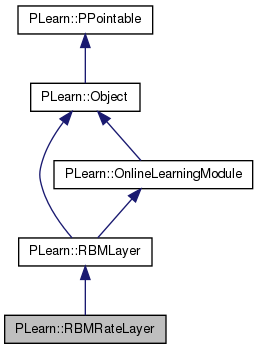

typedef RBMLayer PLearn::RBMRateLayer::inherited [private] |

Reimplemented from PLearn::RBMLayer.

Definition at line 53 of file RBMRateLayer.h.

| PLearn::RBMRateLayer::RBMRateLayer | ( | real | the_learning_rate = 0. | ) |

Default constructor.

Definition at line 53 of file RBMRateLayer.cc.

| string PLearn::RBMRateLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 51 of file RBMRateLayer.cc.

| OptionList & PLearn::RBMRateLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 51 of file RBMRateLayer.cc.

| RemoteMethodMap & PLearn::RBMRateLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 51 of file RBMRateLayer.cc.

| bool PLearn::RBMRateLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 51 of file RBMRateLayer.cc.

| Object * PLearn::RBMRateLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 51 of file RBMRateLayer.cc.

| void PLearn::RBMRateLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 51 of file RBMRateLayer.cc.

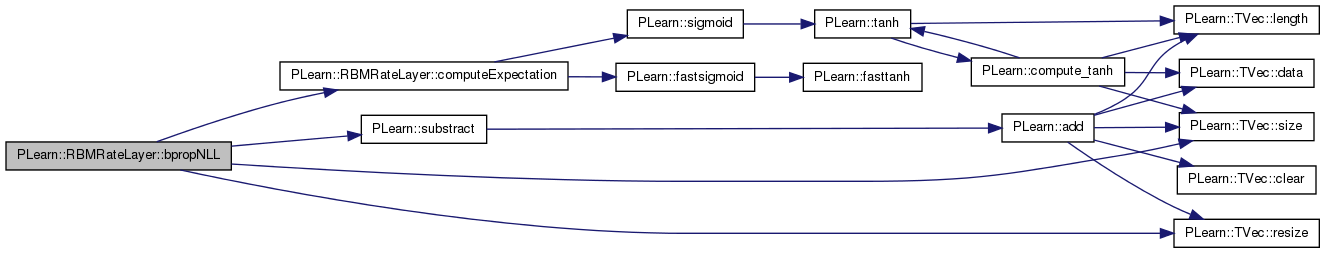

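| void PLearn::RBMRateLayer::bpropNLL | ( | const Vec & target, real nll, Vec & bias_gradient | ) | [virtual] |

Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations.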

Reimplemented from PLearn::RBMLayer.

Definition at line 236 of file RBMRateLayer.cc.

References computeExpectation(), PLearn::RBMLayer::expectation, PLearn::OnlineLearningModule::input_size, PLASSERT, PLERROR, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, and PLearn::substract().

    {
        PLERROR("In RBMRateLayer::bpropNLL(): not implemented");
        computeExpectation();
        PLASSERT( target.size() == input_size );
        bias_gradient.resize( size );
        // bias_gradient = expectation - target
        substract(expectation, target, bias_gradient);
    }

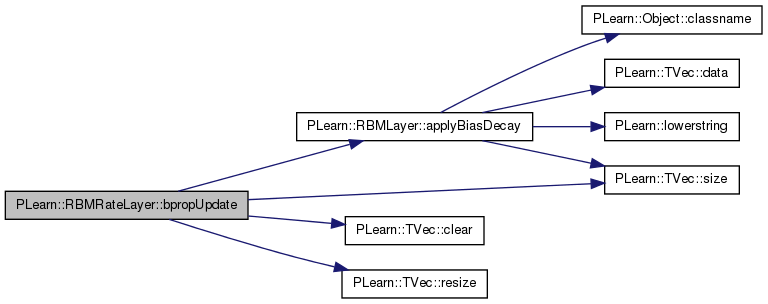

| void PLearn::RBMRateLayer::bpropUpdate | ( | const Vec & input, const Vec & output, Vec & input_gradient, const Vec & output_gradient, bool accumulate = false | ) | [virtual] |

back-propagates the output gradient to the input

Implements PLearn::RBMLayer.

Definition at line 147 of file RBMRateLayer.cc.

References PLearn::RBMLayer::applyBiasDecay(), PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_inc, PLearn::TVec< T >::clear(), i, PLearn::RBMLayer::learning_rate, PLearn::RBMLayer::momentum, n_spikes, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::RBMLayer::size, and PLearn::TVec< T >::size().

    {
        PLASSERT( input.size() == size );
        PLASSERT( output.size() == size );
        PLASSERT( output_gradient.size() == size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradient.size() == size,
                          "Cannot resize input_gradient AND accumulate into it" );
        }
        else
        {
            input_gradient.resize( size );
            input_gradient.clear();
        }

        if( momentum != 0. )
            bias_inc.resize( size );

        for( int i=0 ; i<size ; i++ )
        {
            real output_i = output[i];
            real in_grad_i;
            in_grad_i = output_i * (1-output_i) * output_gradient[i] * n_spikes;
            input_gradient[i] += in_grad_i;

            if( momentum == 0. )
            {
                // update the bias: bias -= learning_rate * input_gradient
                bias[i] -= learning_rate * in_grad_i;
            }
            else
            {
                // The update rule becomes:
                // bias_inc = momentum * bias_inc - learning_rate * input_gradient
                // bias += bias_inc
                bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;
                bias[i] += bias_inc[i];
            }
        }

        applyBiasDecay();
    }
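The two bias update rules named in the comments above (plain gradient step when `momentum` is zero, momentum step otherwise) can be isolated in a small standalone sketch. `BiasState` and `updateBias` are hypothetical names introduced here for illustration; they are not part of PLearn, and bias decay is left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the bias-related fields of the layer (not PLearn's RBMLayer).
struct BiasState {
    std::vector<double> bias;
    std::vector<double> bias_inc;   // momentum buffer, same length as bias
    double learning_rate;
    double momentum;
};

// Applies one update step, given the gradient with respect to the layer input.
inline void updateBias(BiasState& s, const std::vector<double>& in_grad) {
    for (std::size_t i = 0; i < s.bias.size(); ++i) {
        if (s.momentum == 0.0) {
            // bias -= learning_rate * input_gradient
            s.bias[i] -= s.learning_rate * in_grad[i];
        } else {
            // bias_inc = momentum * bias_inc - learning_rate * input_gradient
            // bias += bias_inc
            s.bias_inc[i] = s.momentum * s.bias_inc[i] - s.learning_rate * in_grad[i];
            s.bias[i] += s.bias_inc[i];
        }
    }
}
```

With `momentum == 0` the buffer `bias_inc` is never read, which matches the listing resizing it only when momentum is non-zero.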

| void PLearn::RBMRateLayer::bpropUpdate | ( | const Mat & inputs, const Mat & outputs, Mat & input_gradients, const Mat & output_gradients, bool accumulate = false | ) | [virtual] |

back-propagates the output gradient to the input, in mini-batch mode

Implements PLearn::RBMLayer.

Definition at line 194 of file RBMRateLayer.cc.

References PLERROR.

    {
        PLERROR("In RBMRateLayer::bpropUpdate(): mini-batch version of bpropUpdate is not "
                "implemented yet");
    }

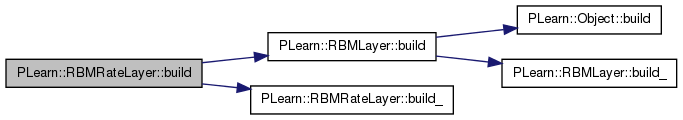

| void PLearn::RBMRateLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

Definition at line 266 of file RBMRateLayer.cc.

References PLearn::RBMLayer::build(), and build_().

    {
        inherited::build();
        build_();
    }
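The build()/build_() convention described above can be sketched outside PLearn as follows. `BaseLayer` and `RateLayer` are hypothetical classes, and the `n_spikes >= 1` check is an assumption about what a build_() might validate; the body of the real build_() is not shown on this page.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical base class: build() is public and virtual, build_() is private.
class BaseLayer {
public:
    virtual ~BaseLayer() = default;
    int size = 0;
    bool base_built = false;
    virtual void build() { build_(); }
private:
    void build_() { base_built = (size > 0); }
};

// Hypothetical derived class following the same convention.
class RateLayer : public BaseLayer {
    typedef BaseLayer inherited;
public:
    int n_spikes = 1;
    bool rate_built = false;
    void build() override {
        inherited::build();   // parent state first
        build_();             // then this class's own checks
    }
private:
    void build_() {
        // Assumed validation, for illustration only.
        if (n_spikes < 1)
            throw std::invalid_argument("n_spikes must be >= 1");
        rate_built = true;
    }
};
```

Because build_() only derives state from the current option values, calling build() again after changing an option is safe in this sketch.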

| void PLearn::RBMRateLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

Definition at line 260 of file RBMRateLayer.cc.

References n_spikes, and PLERROR.

Referenced by build().

| string PLearn::RBMRateLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file RBMRateLayer.cc.

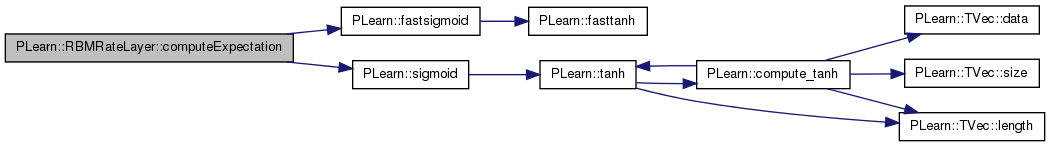

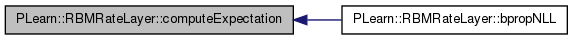

| void PLearn::RBMRateLayer::computeExpectation | ( | ) | [virtual] |

compute the expectation

Implements PLearn::RBMLayer.

Definition at line 98 of file RBMRateLayer.cc.

References PLearn::RBMLayer::activation, PLearn::RBMLayer::expectation, PLearn::RBMLayer::expectation_is_up_to_date, PLearn::fastsigmoid(), i, n_spikes, PLearn::sigmoid(), PLearn::RBMLayer::size, and PLearn::OnlineLearningModule::use_fast_approximations.

Referenced by bpropNLL().

    {
        if( expectation_is_up_to_date )
            return;

        if (use_fast_approximations)
            for(int i=0; i<size; i++)
                expectation[i] = n_spikes*fastsigmoid(activation[i]);
        else
            for(int i=0; i<size; i++)
                expectation[i] = n_spikes*sigmoid(activation[i]);

        expectation_is_up_to_date = true;
    }
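The caching behaviour in the listing above (skip the work when `expectation_is_up_to_date` is set, otherwise compute `n_spikes * sigmoid(activation)`) can be sketched as a standalone struct. `RateUnits` and `setActivation` are hypothetical names; how the real layer invalidates the flag is not shown on this page, so the setter is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Hypothetical container mirroring the fields used by computeExpectation().
struct RateUnits {
    int n_spikes;
    std::vector<double> activation;
    std::vector<double> expectation;
    bool expectation_is_up_to_date;

    RateUnits(int n, std::vector<double> act)
        : n_spikes(n), activation(std::move(act)),
          expectation(activation.size(), 0.0),
          expectation_is_up_to_date(false) {}

    // Assumed invalidation point: new activations make the cache stale.
    void setActivation(std::vector<double> act) {
        activation = std::move(act);
        expectation.resize(activation.size());
        expectation_is_up_to_date = false;
    }

    void computeExpectation() {
        if (expectation_is_up_to_date)
            return;                       // cached value is reused
        for (std::size_t i = 0; i < activation.size(); ++i)
            expectation[i] = n_spikes * sigmoid(activation[i]);
        expectation_is_up_to_date = true;
    }
};
```

Writing to `activation` directly without clearing the flag leaves the old expectation in place, which is why the sketch routes changes through a setter.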

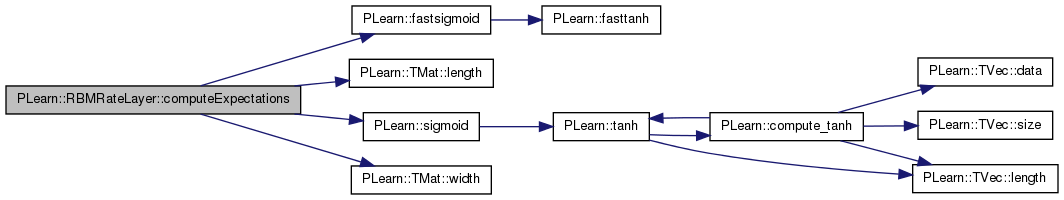

| void PLearn::RBMRateLayer::computeExpectations | ( | ) | [virtual] |

batch version

Implements PLearn::RBMLayer.

Definition at line 112 of file RBMRateLayer.cc.

References PLearn::RBMLayer::activations, PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, PLearn::fastsigmoid(), i, PLearn::TMat< T >::length(), n_spikes, PLASSERT, PLearn::sigmoid(), PLearn::RBMLayer::size, PLearn::OnlineLearningModule::use_fast_approximations, and PLearn::TMat< T >::width().

    {
        if( expectations_are_up_to_date )
            return;

        PLASSERT( expectations.width() == size
                  && expectations.length() == batch_size );

        if (use_fast_approximations)
            for (int k = 0; k < batch_size; k++)
                for(int i=0; i<size; i++)
                    expectations(k,i) = n_spikes*fastsigmoid(activations(k,i));
        else
            for (int k = 0; k < batch_size; k++)
                for(int i=0; i<size; i++)
                    expectations(k,i) = n_spikes*sigmoid(activations(k,i));

        expectations_are_up_to_date = true;
    }

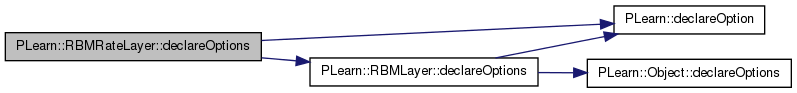

| void PLearn::RBMRateLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

Definition at line 249 of file RBMRateLayer.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::RBMLayer::declareOptions(), and n_spikes.

    {
        declareOption(ol, "n_spikes", &RBMRateLayer::n_spikes,
                      OptionBase::buildoption,
                      "Maximum number of spikes for each neuron.\n");

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::RBMRateLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 127 of file RBMRateLayer.h.


| RBMRateLayer * PLearn::RBMRateLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 51 of file RBMRateLayer.cc.

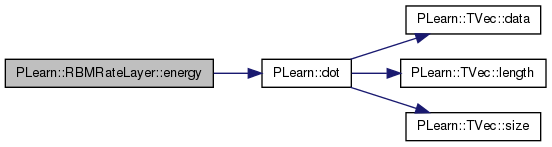

| real PLearn::RBMRateLayer::energy | ( | const Vec & | unit_values | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 279 of file RBMRateLayer.cc.

References PLearn::RBMLayer::bias, and PLearn::dot().

| void PLearn::RBMRateLayer::fprop | ( | const Vec & input, Vec & output | ) | const [virtual] |

forward propagation

Reimplemented from PLearn::RBMLayer.

Definition at line 132 of file RBMRateLayer.cc.

References PLearn::RBMLayer::bias, PLearn::fastsigmoid(), i, PLearn::OnlineLearningModule::input_size, n_spikes, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::sigmoid(), PLearn::RBMLayer::size, PLearn::TVec< T >::size(), and PLearn::OnlineLearningModule::use_fast_approximations.

    {
        PLASSERT( input.size() == input_size );
        output.resize( output_size );

        if (use_fast_approximations)
            for(int i=0; i<size; i++)
                output[i] = n_spikes*fastsigmoid(input[i]+bias[i]);
        else
            for(int i=0; i<size; i++)
                output[i] = n_spikes*sigmoid(input[i]+bias[i]);
    }
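As a minimal standalone sketch of the forward pass above, each output is `n_spikes * sigmoid(input + bias)`, so it lies between 0 and `n_spikes`. `rateFprop` is a hypothetical free function using `std::vector<double>` in place of PLearn's `Vec`; the fast-approximation branch is omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Hypothetical free function; the real method reads bias and n_spikes from the layer.
inline std::vector<double> rateFprop(const std::vector<double>& input,
                                     const std::vector<double>& bias,
                                     int n_spikes) {
    assert(input.size() == bias.size());
    std::vector<double> output(input.size());
    for (std::size_t i = 0; i < input.size(); ++i)
        output[i] = n_spikes * sigmoid(input[i] + bias[i]);
    return output;
}
```

For example, with `n_spikes = 4`, an input of 0 and a bias of 0 give an output of 2, half the maximum rate.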

| real PLearn::RBMRateLayer::fpropNLL | ( | const Vec & | target | ) | [virtual] |

Computes the negative log-likelihood of target given the internal activations of the layer.

Reimplemented from PLearn::RBMLayer.

Definition at line 206 of file RBMRateLayer.cc.

References PLearn::RBMLayer::activation, i, PLearn::OnlineLearningModule::input_size, PLASSERT, PLERROR, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::softplus(), PLearn::tabulated_softplus(), and PLearn::OnlineLearningModule::use_fast_approximations.

    {
        PLERROR("In RBMRateLayer::fpropNLL(): not implemented");
        PLASSERT( target.size() == input_size );

        real ret = 0;
        real target_i, activation_i;
        if(use_fast_approximations){
            for( int i=0 ; i<size ; i++ )
            {
                target_i = target[i];
                activation_i = activation[i];
                ret += tabulated_softplus(activation_i) - target_i * activation_i;
                // nll = - target*log(sigmoid(act)) -(1-target)*log(1-sigmoid(act))
                // but it is numerically unstable, so use instead the following identity:
                //     = target*softplus(-act) +(1-target)*(act+softplus(-act))
                //     = act + softplus(-act) - target*act
                //     = softplus(act) - target*act
            }
        } else {
            for( int i=0 ; i<size ; i++ )
            {
                target_i = target[i];
                activation_i = activation[i];
                ret += softplus(activation_i) - target_i * activation_i;
            }
        }
        return ret;
    }
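The identity quoted in the comments above, `nll = softplus(act) - target*act`, can be checked numerically against the direct cross-entropy form. The sketch below uses hypothetical helper names (`nllNaive`, `nllStable`) and a softplus written to avoid overflow; it covers the sigmoid cross-entropy for a single unit only. Note that the listing itself raises PLERROR ("not implemented") before reaching this computation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// log(1 + exp(x)), arranged so that exp() never overflows.
inline double softplus(double x) {
    return std::max(x, 0.0) + std::log1p(std::exp(-std::fabs(x)));
}

// Direct form: -t*log(sigmoid(a)) - (1-t)*log(1-sigmoid(a)).
inline double nllNaive(double t, double a) {
    const double p = sigmoid(a);
    return -t * std::log(p) - (1.0 - t) * std::log(1.0 - p);
}

// Rewritten form from the listing's comments: softplus(a) - t*a.
inline double nllStable(double t, double a) {
    return softplus(a) - t * a;
}
```

For moderate activations the two forms agree to rounding error; for a large activation such as 800 with target 0, the direct form evaluates `log(0)` and is no longer finite, while the rewritten form returns 800.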

| real PLearn::RBMRateLayer::freeEnergyContribution | ( | const Vec & | unit_activations | ) | const [virtual] |

Computes the contribution of this layer to the free energy; in this implementation, the negated sum over units of n_spikes * softplus(unit_activations[i]). This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed.

Reimplemented from PLearn::RBMLayer.

Definition at line 284 of file RBMRateLayer.cc.

References a, PLearn::TVec< T >::data(), i, n_spikes, PLASSERT, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::softplus(), PLearn::tabulated_softplus(), and PLearn::OnlineLearningModule::use_fast_approximations.

    {
        PLASSERT( unit_activations.size() == size );

        // result = -\sum_{i=0}^{size-1} softplus(a_i)
        real result = 0;
        real* a = unit_activations.data();
        for (int i=0; i<size; i++)
        {
            if (use_fast_approximations)
                result -= n_spikes*tabulated_softplus(a[i]);
            else
                result -= n_spikes*softplus(a[i]);
        }
        return result;
    }
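A minimal standalone sketch of the computation above follows. It keeps the `n_spikes` factor that the loop applies (the inline comment in the listing omits it). `freeEnergyContribution` here is a hypothetical free function over `std::vector<double>`, not the PLearn method, and the tabulated approximation is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// log(1 + exp(x)), arranged so that exp() never overflows.
inline double softplus(double x) {
    return std::max(x, 0.0) + std::log1p(std::exp(-std::fabs(x)));
}

// result = -n_spikes * sum_i softplus(a_i)
inline double freeEnergyContribution(const std::vector<double>& a, int n_spikes) {
    double result = 0.0;
    for (double ai : a)
        result -= n_spikes * softplus(ai);
    return result;
}
```

With two units at activation 0 and `n_spikes = 3`, each unit contributes `-3 * log(2)`, so the total is `-6 * log(2)`.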

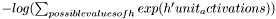

| void PLearn::RBMRateLayer::freeEnergyContributionGradient | ( | const Vec & unit_activations, Vec & unit_activations_gradient, real output_gradient = 1, bool accumulate = false | ) | const [virtual] |

Computes the gradient of the result of freeEnergyContribution with respect to unit_activations.

Optionally, a gradient with respect to freeEnergyContribution can be given.

Reimplemented from PLearn::RBMLayer.

Definition at line 302 of file RBMRateLayer.cc.

References a, PLearn::TVec< T >::clear(), PLearn::TVec< T >::data(), PLearn::fastsigmoid(), i, n_spikes, PLASSERT, PLearn::TVec< T >::resize(), PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, and PLearn::OnlineLearningModule::use_fast_approximations.

    {
        PLASSERT( unit_activations.size() == size );
        unit_activations_gradient.resize( size );
        if( !accumulate ) unit_activations_gradient.clear();

        real* a = unit_activations.data();
        real* ga = unit_activations_gradient.data();
        for (int i=0; i<size; i++)
        {
            if (use_fast_approximations)
                ga[i] -= output_gradient * n_spikes *
                    fastsigmoid( a[i] );
            else
                ga[i] -= output_gradient * n_spikes *
                    sigmoid( a[i] );
        }
    }
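Since the derivative of softplus is the sigmoid, the gradient above should match a finite-difference estimate of the free-energy contribution. The sketch below checks this with central differences; all function names are hypothetical stand-ins using `std::vector<double>`, and the fast-approximation branch is omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }
inline double softplus(double x) {
    return std::max(x, 0.0) + std::log1p(std::exp(-std::fabs(x)));
}

// -n_spikes * sum_i softplus(a_i), as in freeEnergyContribution().
inline double freeEnergy(const std::vector<double>& a, int n_spikes) {
    double result = 0.0;
    for (double ai : a) result -= n_spikes * softplus(ai);
    return result;
}

// Analytic gradient: grad[i] -= output_gradient * n_spikes * sigmoid(a_i).
inline void freeEnergyGradient(const std::vector<double>& a, int n_spikes,
                               std::vector<double>& grad,
                               double output_gradient = 1.0,
                               bool accumulate = false) {
    grad.resize(a.size());
    if (!accumulate) std::fill(grad.begin(), grad.end(), 0.0);
    for (std::size_t i = 0; i < a.size(); ++i)
        grad[i] -= output_gradient * n_spikes * sigmoid(a[i]);
}

// Central finite differences, used only to cross-check the analytic form.
inline std::vector<double> numericalGradient(std::vector<double> a, int n_spikes,
                                             double eps = 1e-6) {
    std::vector<double> g(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double saved = a[i];
        a[i] = saved + eps;
        const double up = freeEnergy(a, n_spikes);
        a[i] = saved - eps;
        const double down = freeEnergy(a, n_spikes);
        a[i] = saved;
        g[i] = (up - down) / (2.0 * eps);
    }
    return g;
}
```

Calling `freeEnergyGradient` a second time with `accumulate = true` adds to the existing values instead of overwriting them, mirroring the flag in the listing.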

| void PLearn::RBMRateLayer::generateSample | ( | ) | [virtual] |

generate a sample, and update the sample field

Implements PLearn::RBMLayer.

Definition at line 59 of file RBMRateLayer.cc.

References PLearn::RBMLayer::expectation, PLearn::RBMLayer::expectation_is_up_to_date, i, n_spikes, PLASSERT_MSG, PLCHECK_MSG, PLearn::RBMLayer::random_gen, PLearn::RBMLayer::sample, and PLearn::RBMLayer::size.

    {
        PLASSERT_MSG(random_gen,
                     "random_gen should be initialized before generating samples");
        PLCHECK_MSG(expectation_is_up_to_date, "Expectation should be computed "
                    "before calling generateSample()");

        real exp_i = 0;
        for( int i=0; i<size; i++)
        {
            exp_i = expectation[i];
            sample[i] = round(random_gen->gaussian_mu_sigma(
                                  exp_i,exp_i*(1-exp_i/n_spikes)) );
        }
    }
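The sampling step above draws from a Gaussian centred on the expectation and rounds to the nearest integer. A rough standalone sketch using the standard library is given below. `sampleRateUnits` is a hypothetical function, `std::normal_distribution` stands in for PLearn's `gaussian_mu_sigma`, and the second argument is passed exactly as in the listing. For reference, `mu * (1 - mu / n_spikes)` equals the variance of a binomial with `n_spikes` trials and mean `mu`, whereas `std::normal_distribution` interprets its second argument as a standard deviation; this page does not say which was intended.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical free function. Requires 0 < expectation[i] < n_spikes so that
// the spread passed to std::normal_distribution is strictly positive.
inline std::vector<double> sampleRateUnits(const std::vector<double>& expectation,
                                           int n_spikes,
                                           std::mt19937& rng) {
    std::vector<double> sample(expectation.size());
    for (std::size_t i = 0; i < expectation.size(); ++i) {
        const double mu = expectation[i];
        const double spread = mu * (1.0 - mu / n_spikes);  // same expression as the listing
        std::normal_distribution<double> gauss(mu, spread);
        sample[i] = std::round(gauss(rng));                // integer-valued spike count
    }
    return sample;
}
```

Because the Gaussian is unbounded, a rounded sample can in principle fall below 0 or above `n_spikes`; the sketch, like the listing, does not clip it.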

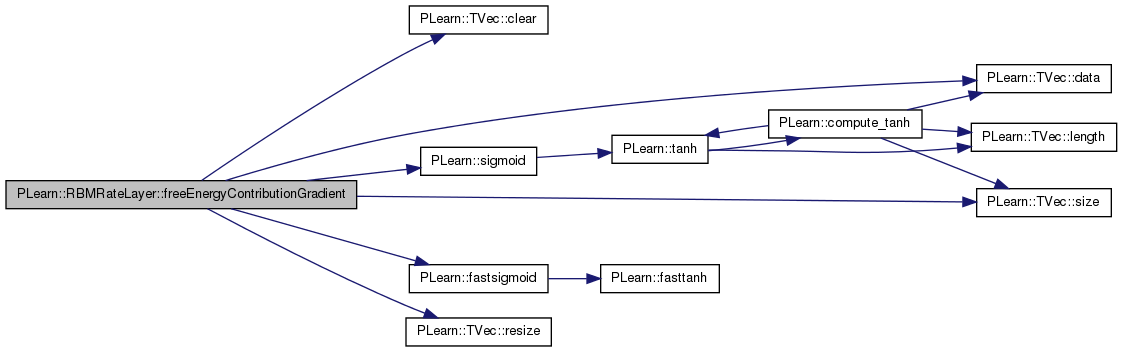

| void PLearn::RBMRateLayer::generateSamples | ( | ) | [virtual] |

batch version

Implements PLearn::RBMLayer.

Definition at line 76 of file RBMRateLayer.cc.

References PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, i, PLearn::TMat< T >::length(), n_spikes, PLASSERT, PLASSERT_MSG, PLCHECK_MSG, PLearn::RBMLayer::random_gen, PLearn::RBMLayer::samples, PLearn::RBMLayer::size, and PLearn::TMat< T >::width().

    {
        PLASSERT_MSG(random_gen,
                     "random_gen should be initialized before generating samples");
        PLCHECK_MSG(expectations_are_up_to_date, "Expectations should be computed "
                    "before calling generateSamples()");
        PLASSERT( samples.width() == size && samples.length() == batch_size );

        real exp_i = 0;
        for (int k = 0; k < batch_size; k++)
        {
            for( int i=0; i<size; i++)
            {
                exp_i = expectations(k,i);
                samples(k,i) = round(random_gen->gaussian_mu_sigma(
                                         exp_i,exp_i*(1-exp_i/n_spikes)) );
            }
        }
    }

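| void PLearn::RBMRateLayer::getConfiguration | ( | int conf_index, Vec & output | ) | [virtual] |

Computes the configuration of the layer with index conf_index.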

Reimplemented from PLearn::RBMLayer.

Definition at line 328 of file RBMRateLayer.cc.

References PLERROR.

    {
        PLERROR("In RBMRateLayer::getConfiguration(): not implemented");
    }

| int PLearn::RBMRateLayer::getConfigurationCount | ( | ) | [virtual] |

Returns the number of different configurations the layer can be in.

Reimplemented from PLearn::RBMLayer.

Definition at line 323 of file RBMRateLayer.cc.

References PLearn::RBMLayer::INFINITE_CONFIGURATIONS.

    {
        return INFINITE_CONFIGURATIONS;
    }

| OptionList & PLearn::RBMRateLayer::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file RBMRateLayer.cc.

| OptionMap & PLearn::RBMRateLayer::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file RBMRateLayer.cc.

| RemoteMethodMap & PLearn::RBMRateLayer::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file RBMRateLayer.cc.

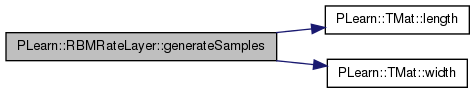

| void PLearn::RBMRateLayer::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMLayer.

Definition at line 273 of file RBMRateLayer.cc.

References PLearn::RBMLayer::makeDeepCopyFromShallowCopy().

    {
        inherited::makeDeepCopyFromShallowCopy(copies);

        //deepCopyField(tmp_softmax, copies);
    }

StaticInitializer PLearn::RBMRateLayer::_static_initializer_ [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 127 of file RBMRateLayer.h.

int PLearn::RBMRateLayer::n_spikes |

Maximum number of spikes for each neuron.

Definition at line 59 of file RBMRateLayer.h.

Referenced by bpropUpdate(), build_(), computeExpectation(), computeExpectations(), declareOptions(), fprop(), freeEnergyContribution(), freeEnergyContributionGradient(), generateSample(), and generateSamples().

Vec PLearn::RBMRateLayer::tmp_softmax [mutable, protected] |

Definition at line 137 of file RBMRateLayer.h.

1.7.4
