|

PLearn 0.1


#include <GaussMix.h>

Public Member Functions | |

| GaussMix () | |

| Default constructor. | |

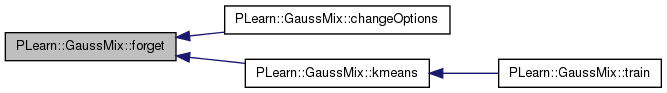

| virtual void | changeOptions (const map< string, string > &name_value) |

| Overridden in order to detect changes that require a call to forget(). | |

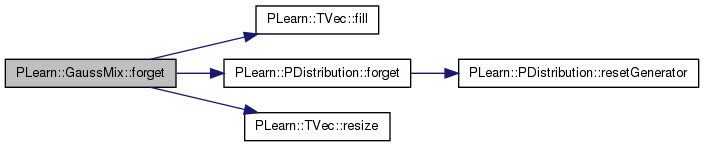

| virtual void | forget () |

| Reset the learner. | |

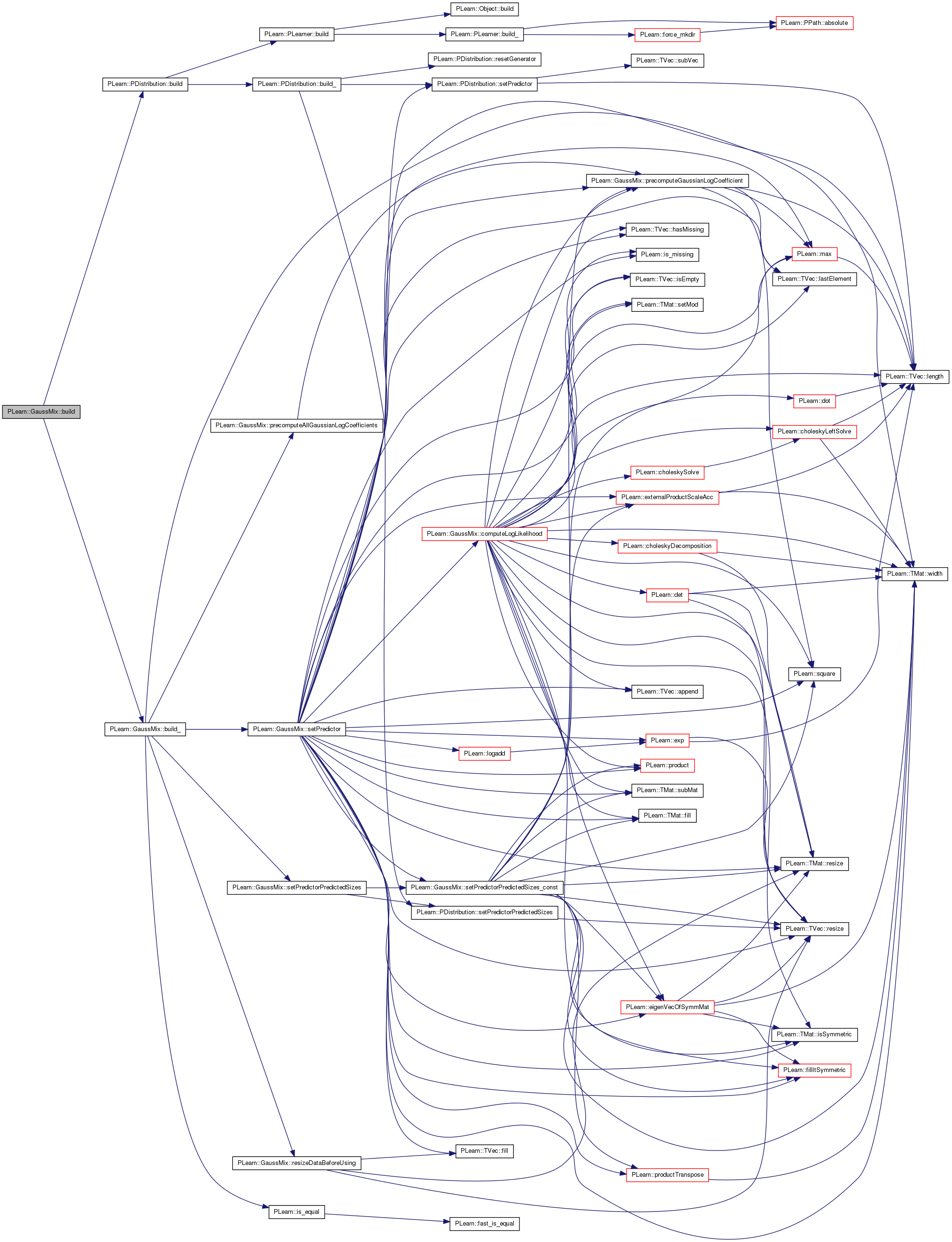

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

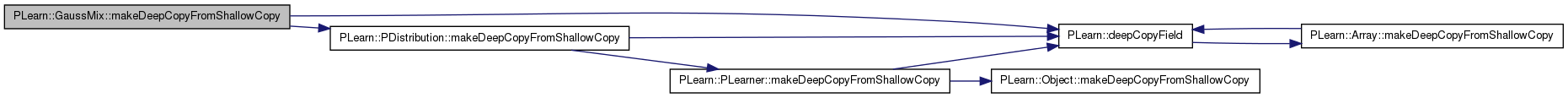

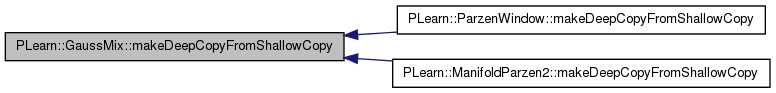

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transform a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual GaussMix * | deepCopy (CopiesMap &copies) const |

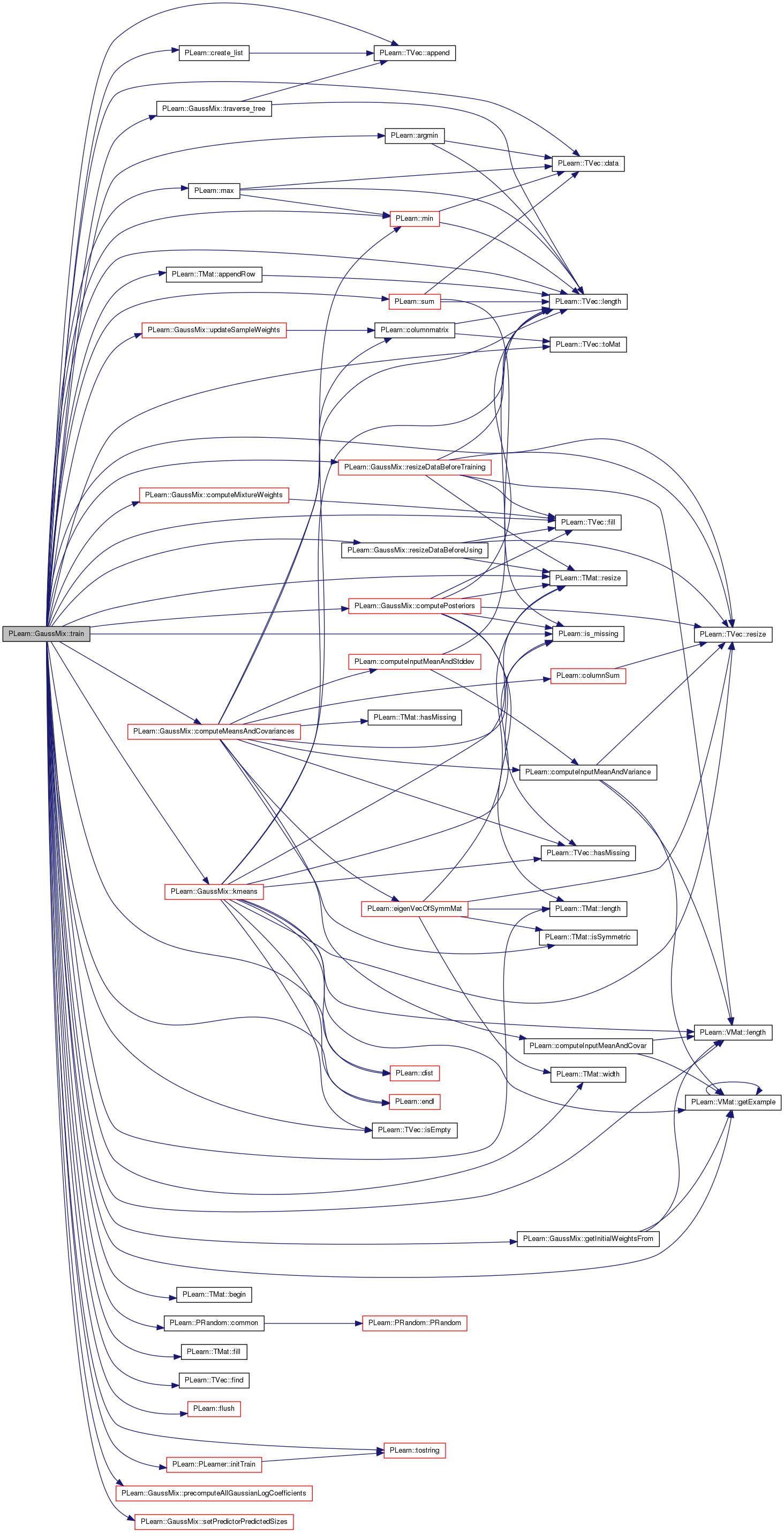

| virtual void | train () |

| Trains the model. | |

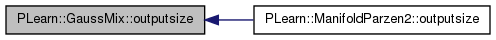

| virtual int | outputsize () const |

| Overridden to take into account new outputs computed. | |

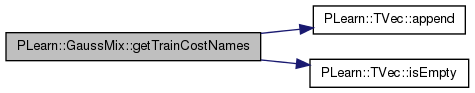

| virtual TVec< string > | getTrainCostNames () const |

| Overridden to compute the training time. | |

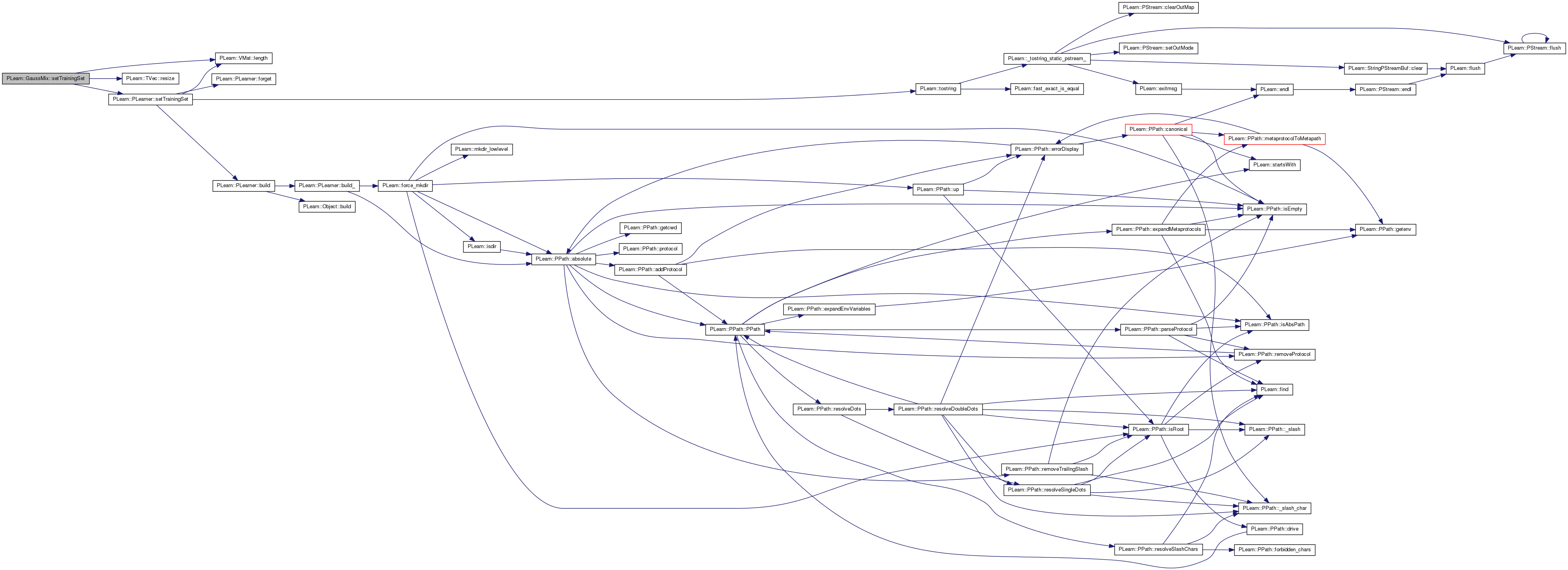

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Overridden to reorder the dataset when efficient_missing == 2. | |

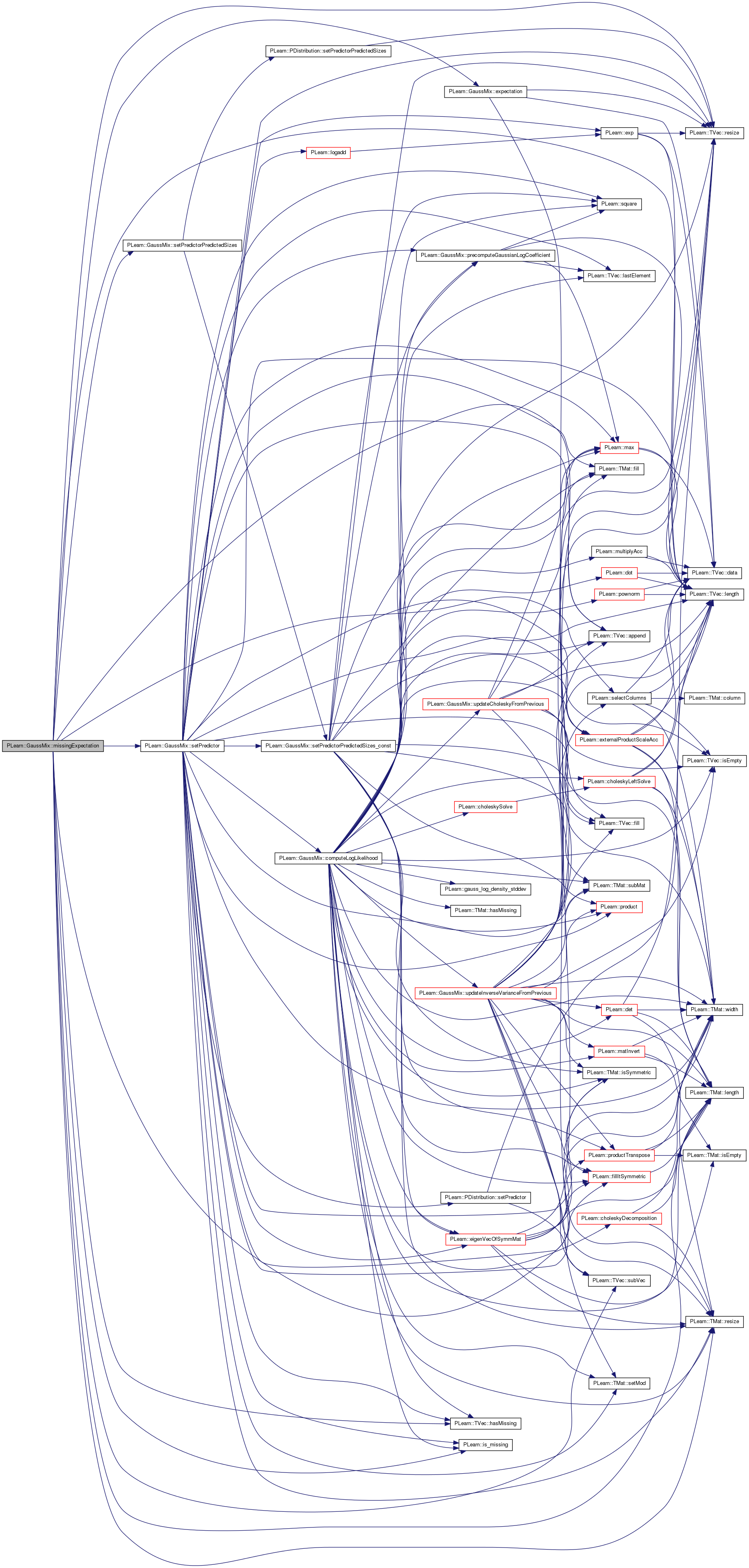

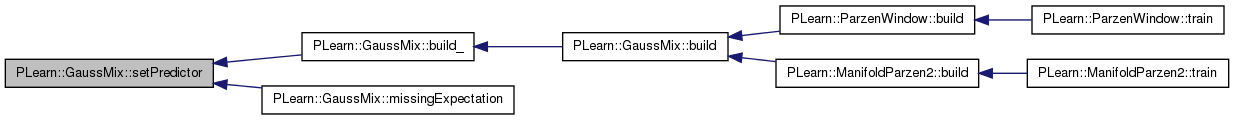

| virtual void | setPredictor (const Vec &predictor, bool call_parent=true) const |

| Set the value for the predictor part of the conditional probability. | |

| virtual real | log_density (const Vec &y) const |

| Return log-density log p(y | x). | |

| virtual real | survival_fn (const Vec &y) const |

| Return survival fn = P(Y>y | x). | |

| virtual real | cdf (const Vec &y) const |

| Return cumulative density fn = P(Y<y | x). | |

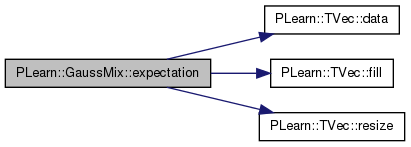

| virtual void | expectation (Vec &mu) const |

| Compute E[Y | x]. | |

| virtual void | missingExpectation (const Vec &input, Vec &mu) |

| Return E[Y | x] where Y is the missing part in the 'input' vector, and x is the observed part. | |

| virtual void | variance (Mat &cov) const |

| Compute Var[Y | x] (currently not implemented). | |

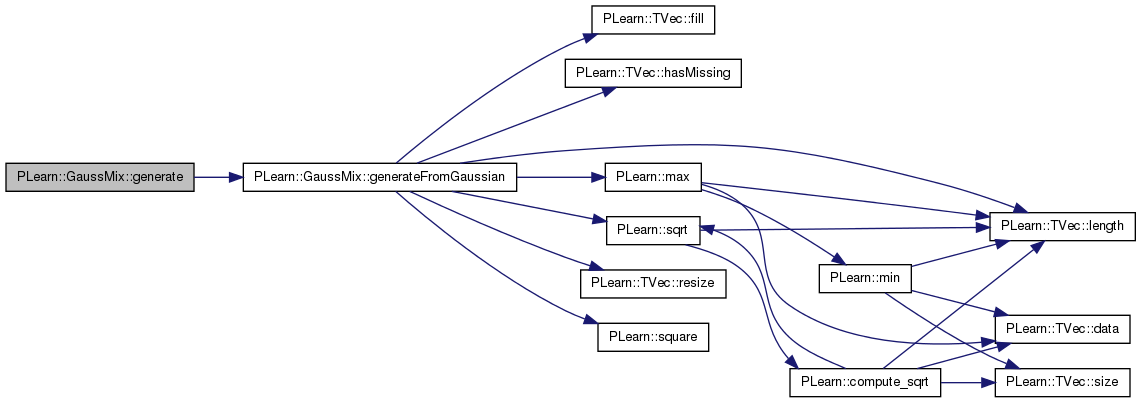

| virtual void | generate (Vec &s) const |

| Generate a sample from this distribution. | |

Static Public Member Functions | |

| static string | _classname_ () |

| Declares name and deepCopy methods. | |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | alpha_min |

| int | efficient_k_median |

| int | efficient_k_median_iter |

| int | efficient_missing |

| real | epsilon |

| real | f_eigen |

| bool | impute_missing |

| int | kmeans_iterations |

| int | L |

| int | max_samples_in_cluster |

| int | min_samples_in_cluster |

| int | n_eigen |

| real | sigma_min |

| string | type |

| Vec | alpha |

| Mat | center |

| Vec | sigma |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

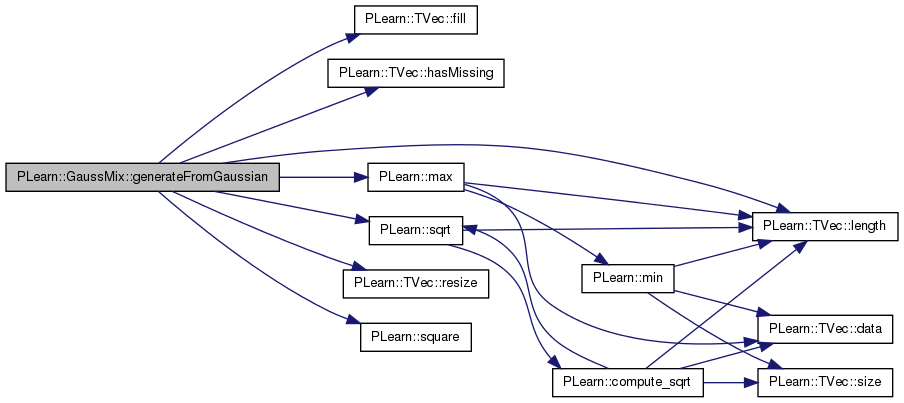

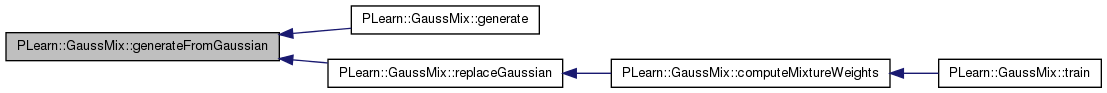

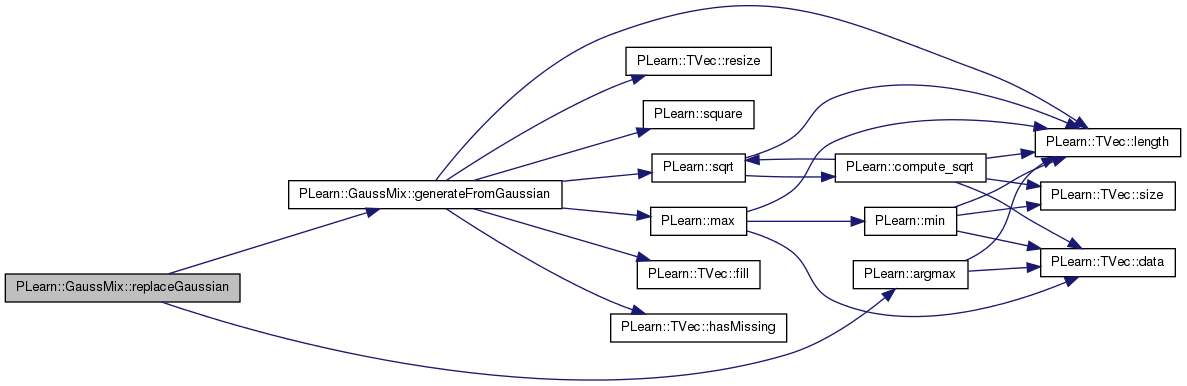

| void | generateFromGaussian (Vec &s, int given_gaussian) const |

| Generate a sample 's' from the given Gaussian. | |

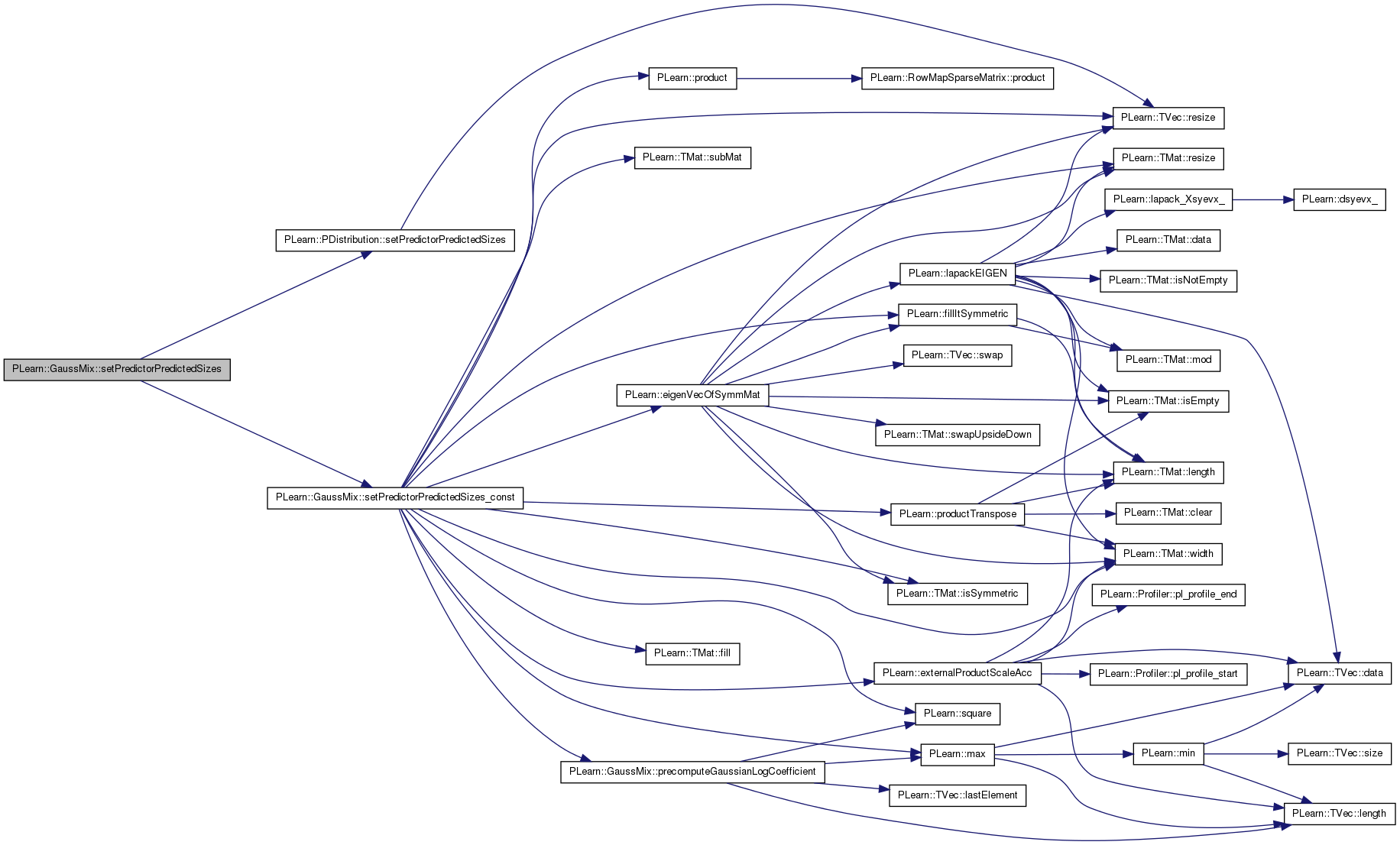

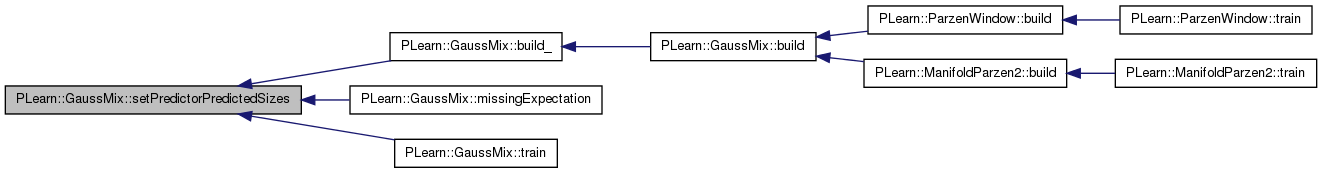

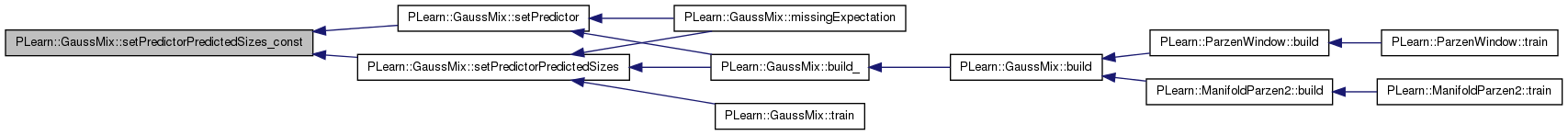

| virtual bool | setPredictorPredictedSizes (int the_predictor_size, int the_predicted_size, bool call_parent=true) |

| In the 'general' conditional type, will precompute the covariance matrix of Y|x. | |

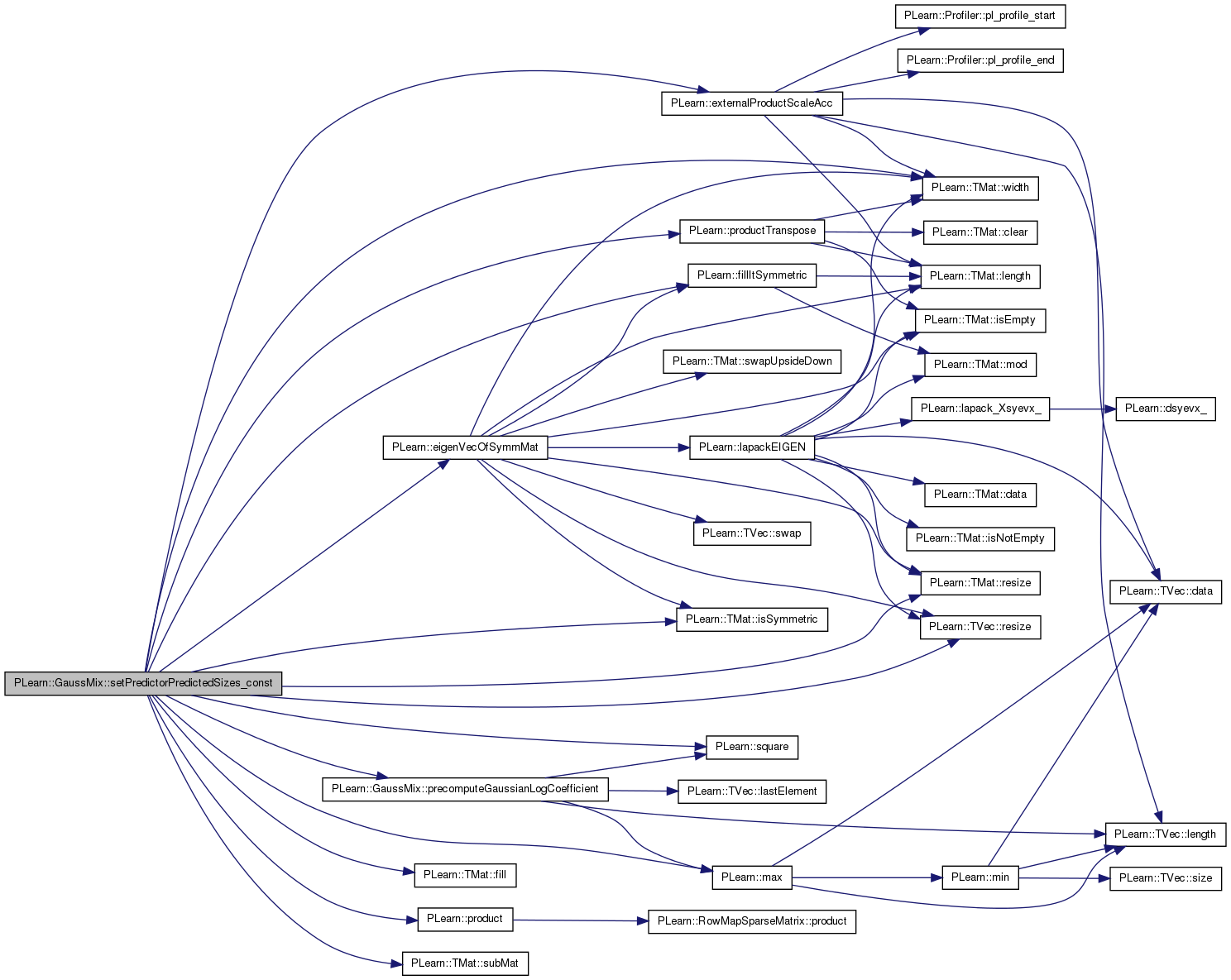

| void | setPredictorPredictedSizes_const () const |

| Main implementation of 'setPredictorPredictedSizes', that needs to be 'const' as it currently needs to be called in setPredictor(..). | |

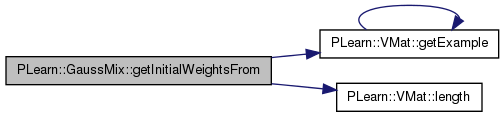

| void | getInitialWeightsFrom (const VMat &vmat) |

| Fill the 'initial_weights' vector with the weights from the given VMatrix (which must have a weights column). | |

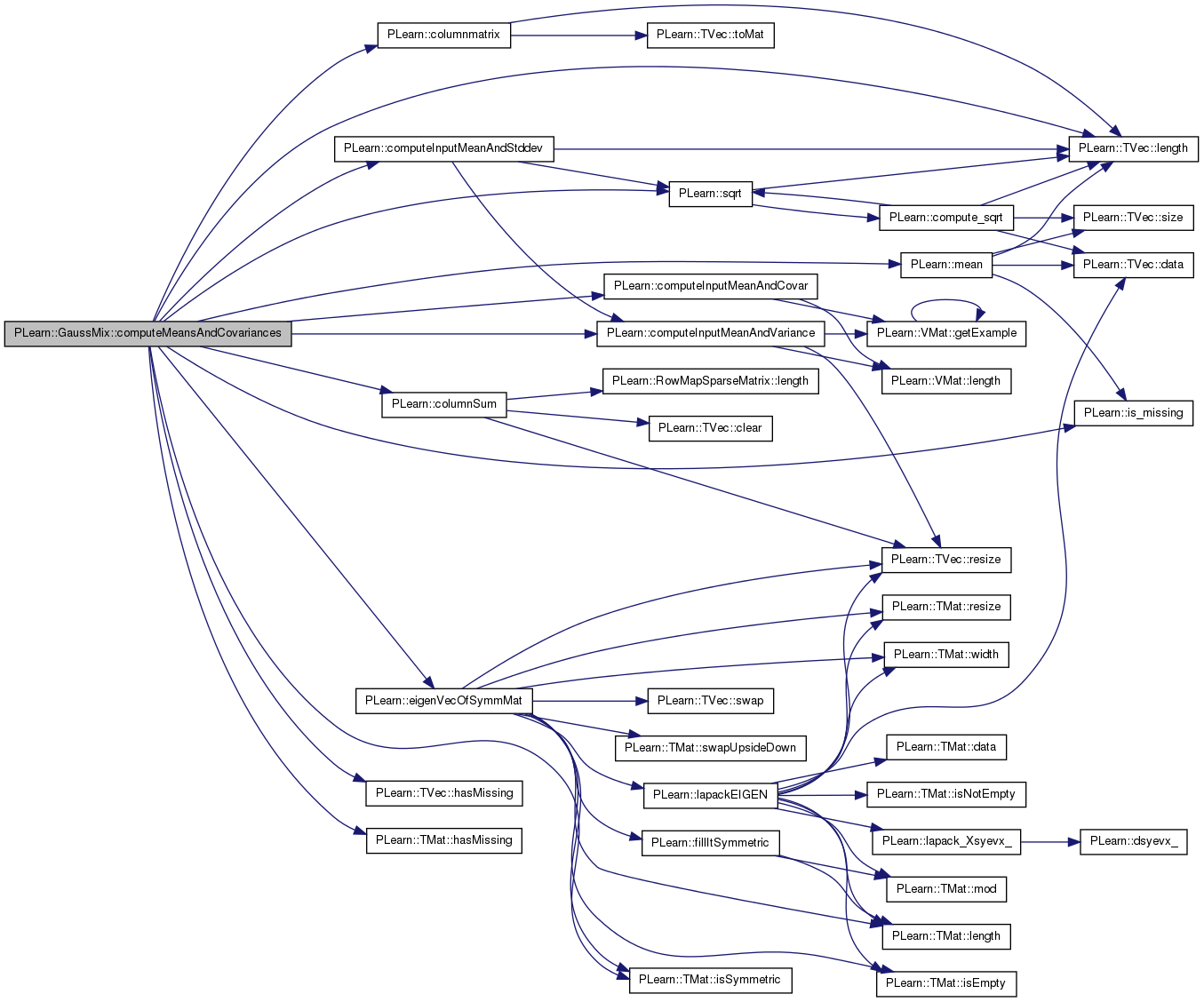

| virtual void | computeMeansAndCovariances () |

| Given the posteriors, fill the centers and covariance of each Gaussian. | |

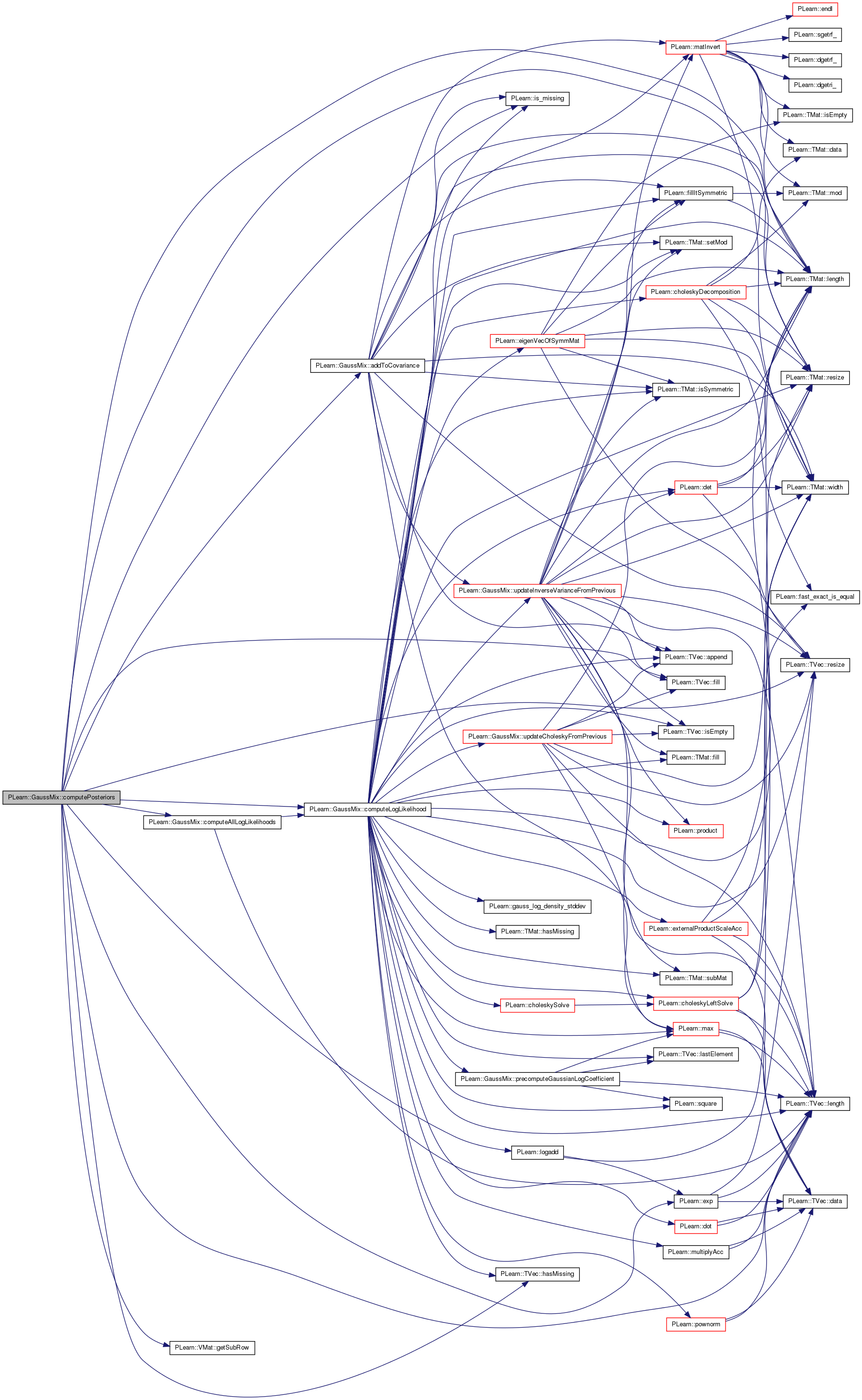

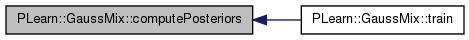

| virtual void | computePosteriors () |

| Compute posteriors P(j | s_i) for each sample point and each Gaussian. | |

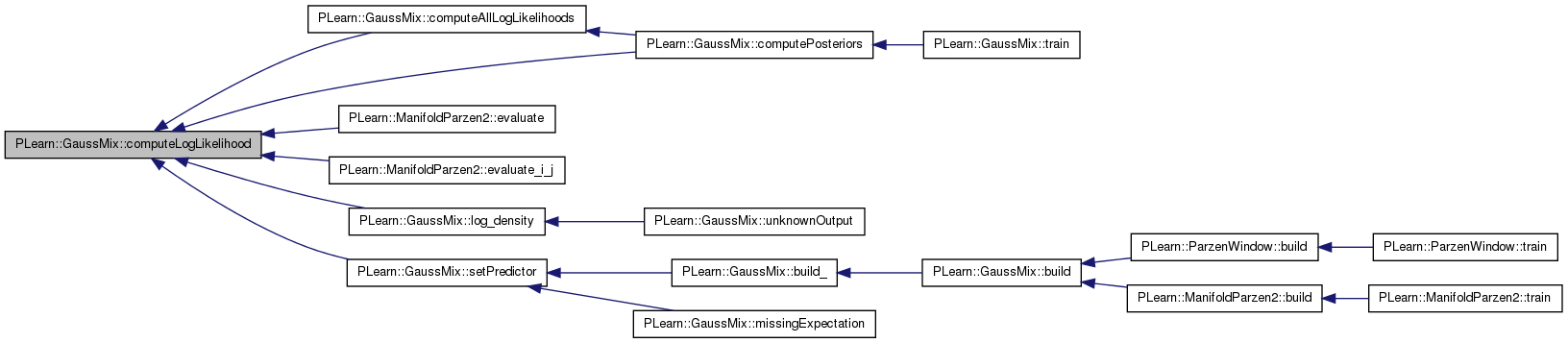

| void | computeAllLogLikelihoods (const Vec &sample, const Vec &log_like) |

| Fill the 'log_like' vector with the log-likelihood of each Gaussian for the given sample. | |

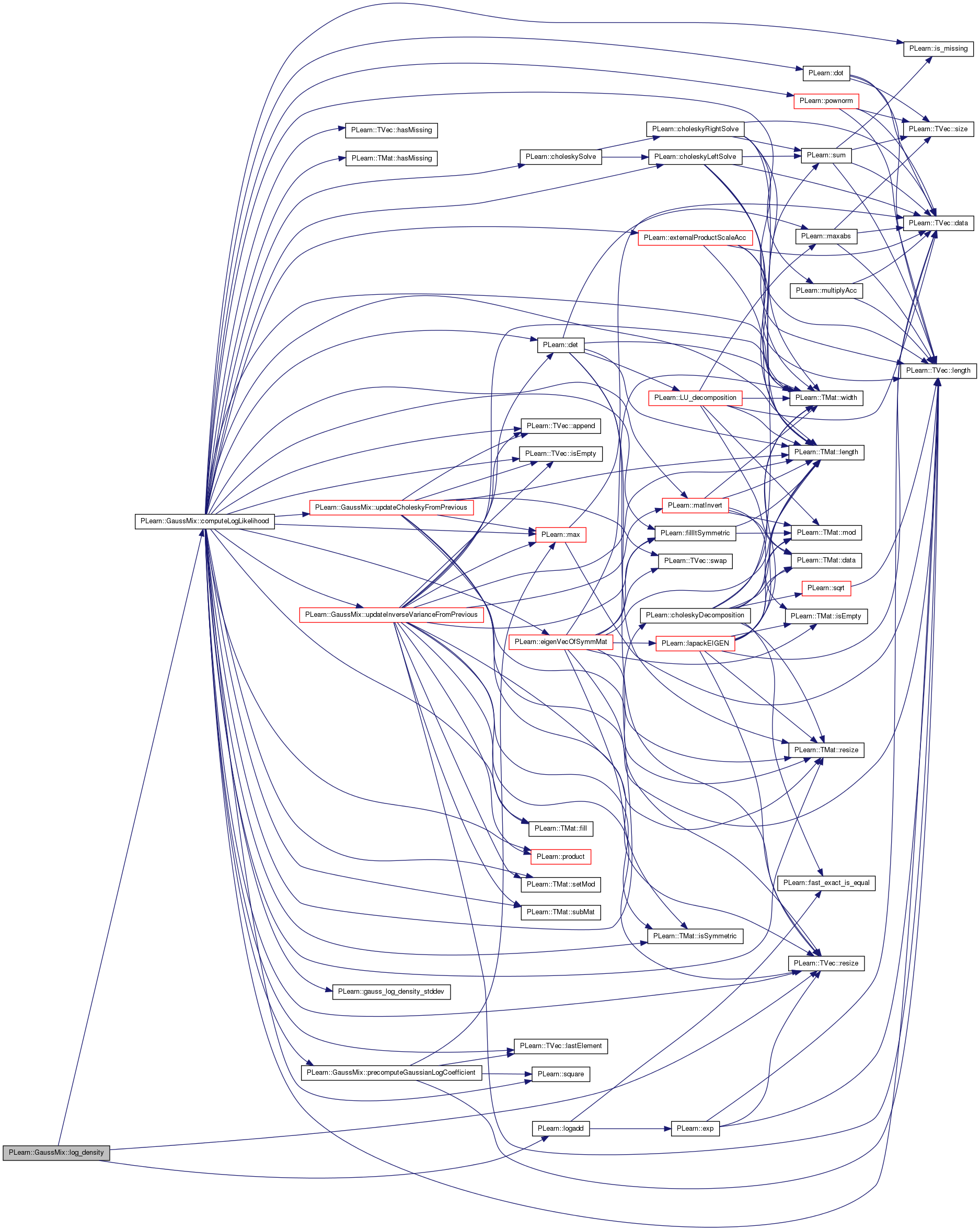

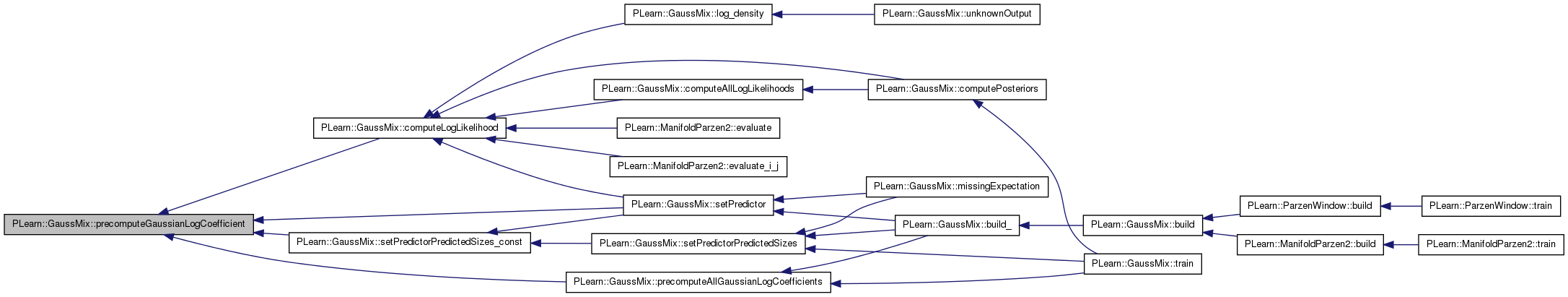

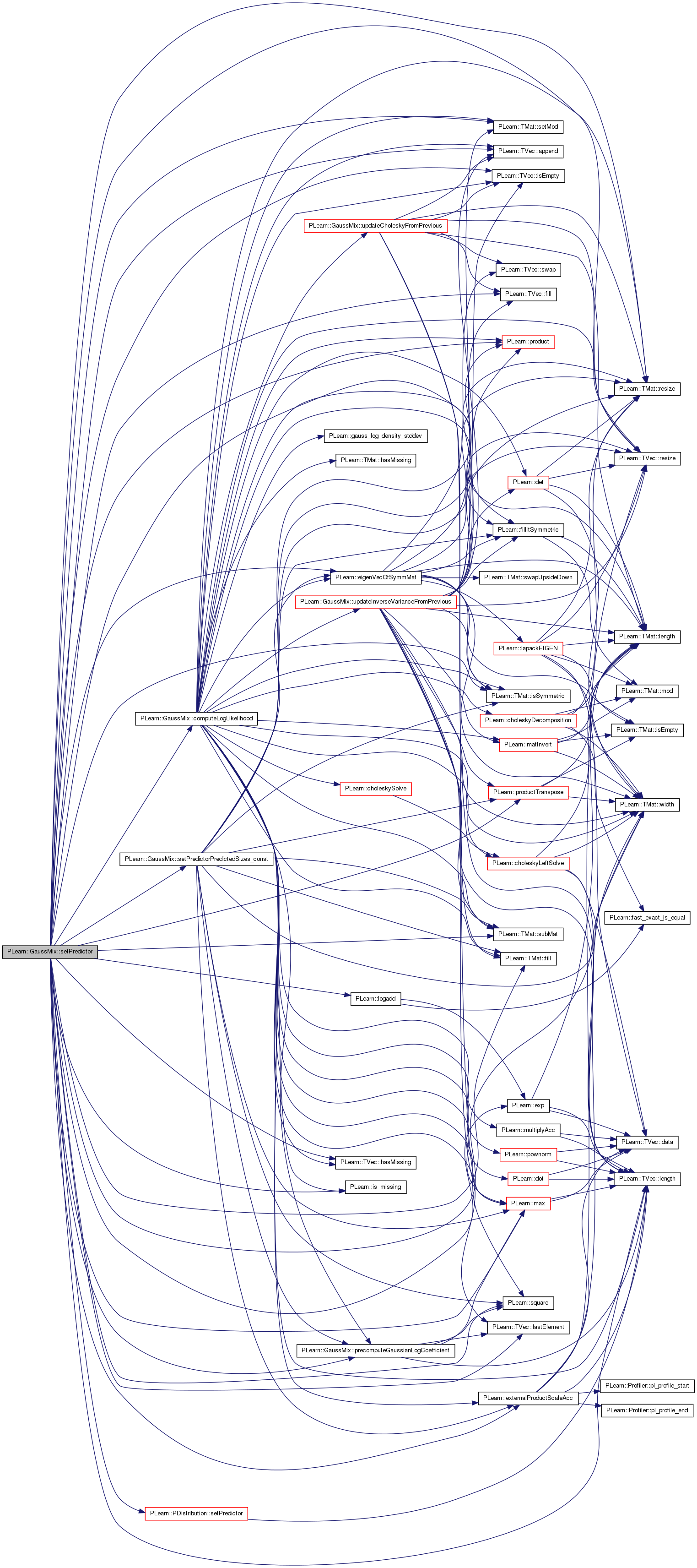

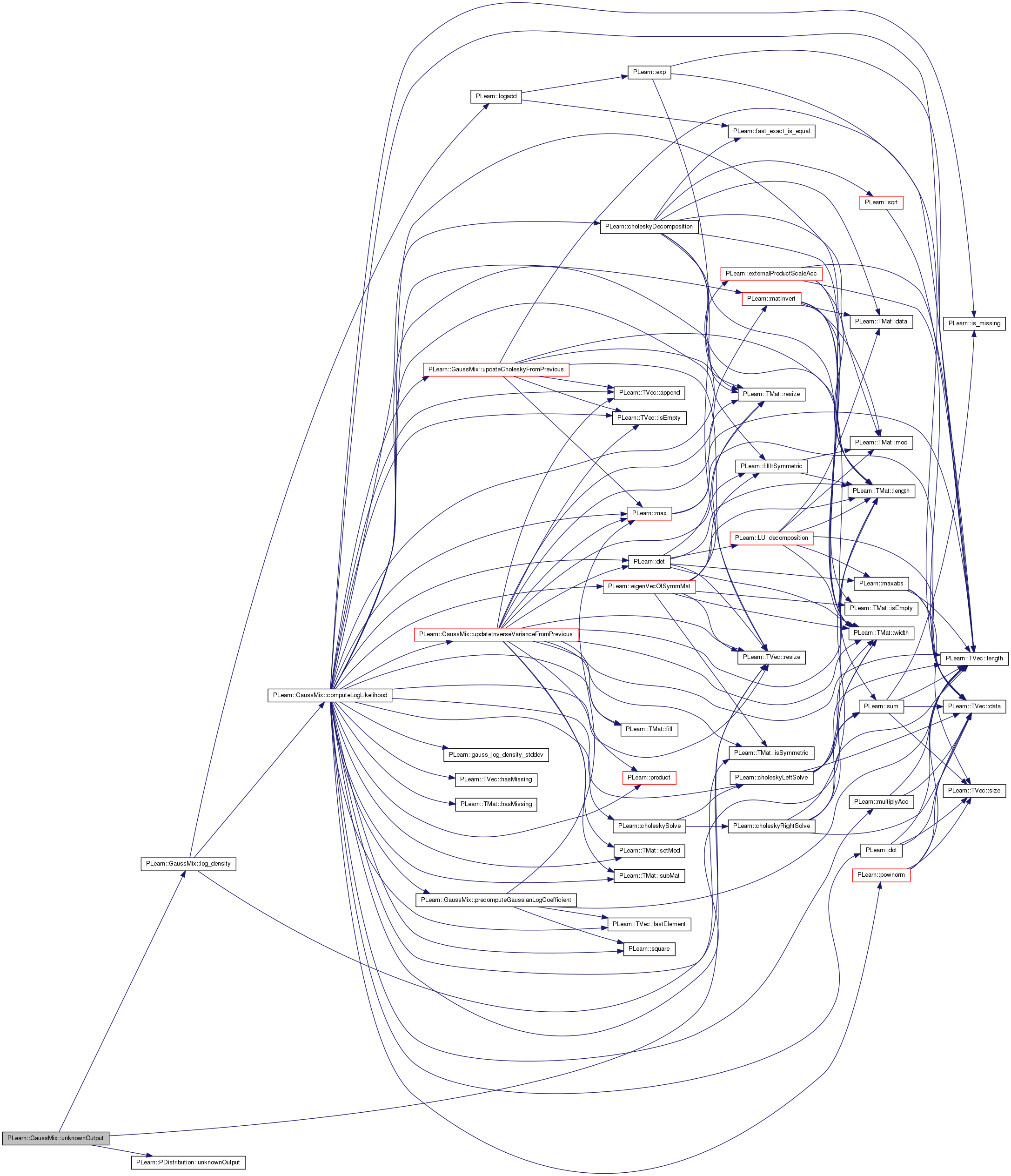

| real | computeLogLikelihood (const Vec &y, int j, bool is_predictor=false) const |

| Compute log p(y | x,j), with j < L the index of a mixture's component, and 'x' the current predictor part. | |

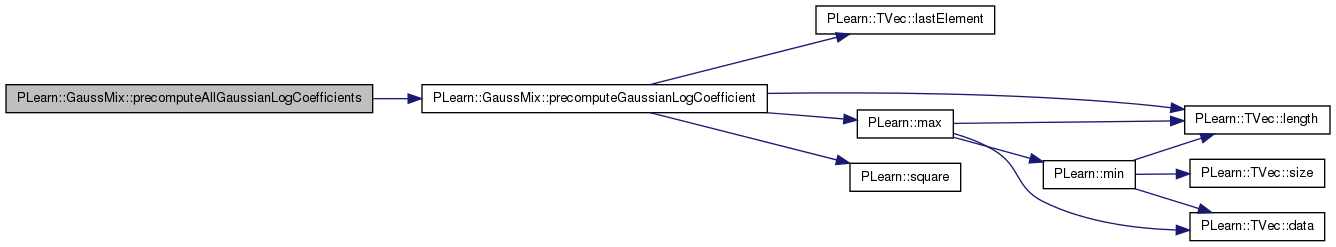

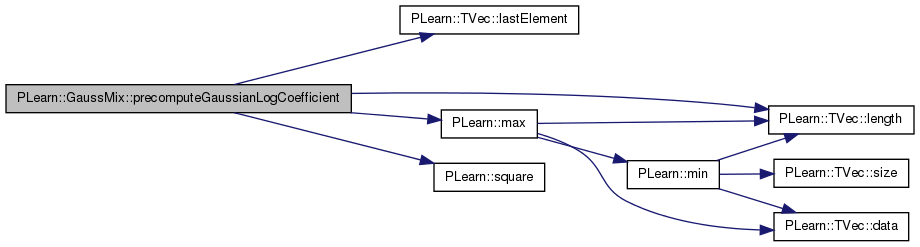

| real | precomputeGaussianLogCoefficient (const Vec &eigenvals, int dimension) const |

| Return log( 1 / sqrt( (2 Pi)^dimension |C| ) ), i.e. | |

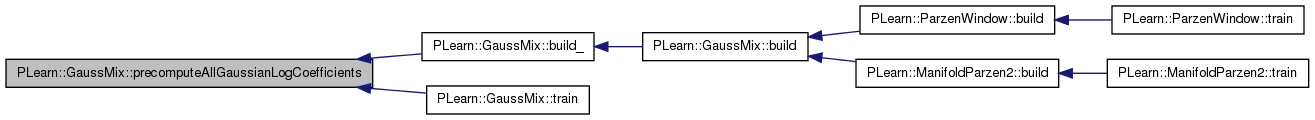

| void | precomputeAllGaussianLogCoefficients () |

| When type is 'general', fill the 'log_coeff' vector with the result of precomputeGaussianLogCoefficient(..) applied to each component of the mixture. | |

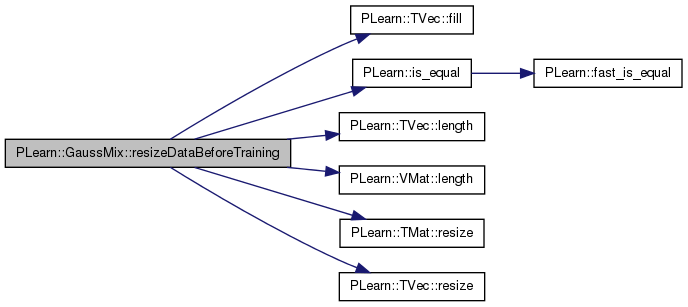

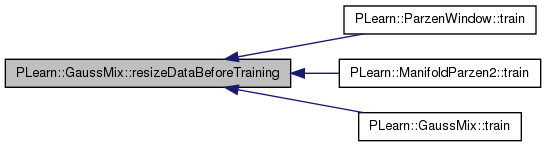

| void | resizeDataBeforeTraining () |

| Make sure everything has the right size when training starts. | |

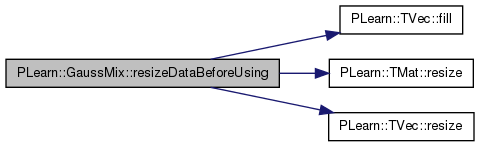

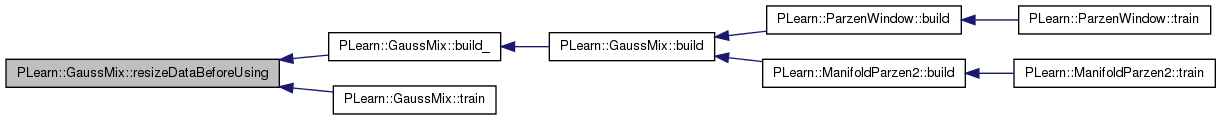

| void | resizeDataBeforeUsing () |

| Make sure everything has the right size when the object is about to be used (e.g. | |

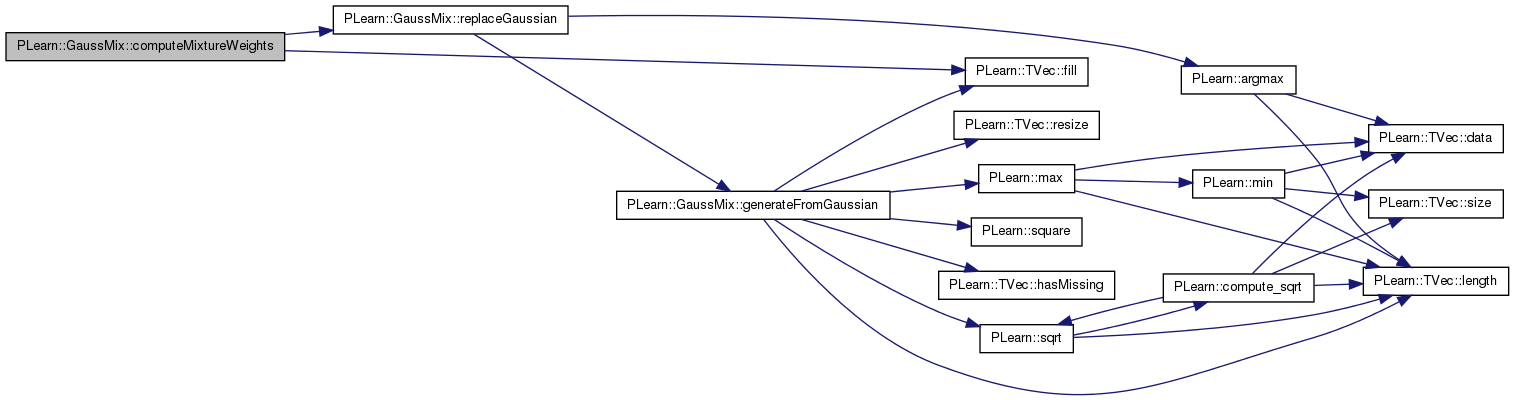

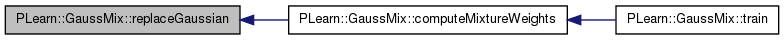

| bool | computeMixtureWeights (bool allow_replace=true) |

| Compute the weight of each Gaussian (the coefficient 'alpha'). | |

| void | replaceGaussian (int j) |

| Replace the j-th Gaussian with another one (probably because that one is not appropriate). | |

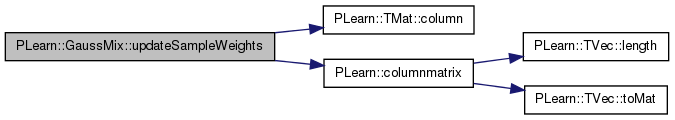

| void | updateSampleWeights () |

| Update the sample weights according to their initial weights and the current posterior probabilities (see documentation of 'updated_weights' for the exact formula). | |

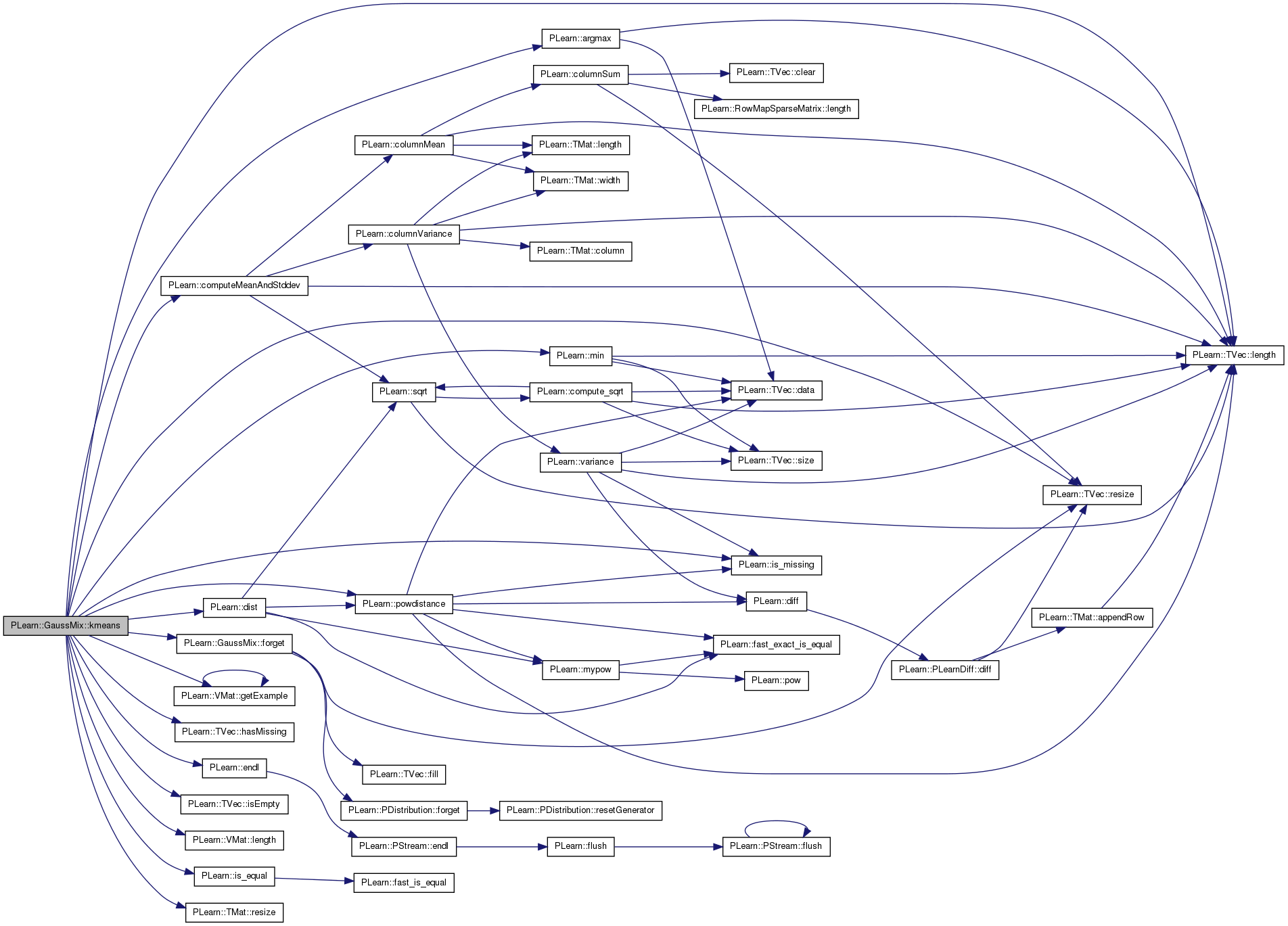

| void | kmeans (const VMat &samples, int nclust, TVec< int > &clust_idx, Mat &clust, int maxit=9999) |

| Perform K-means. | |

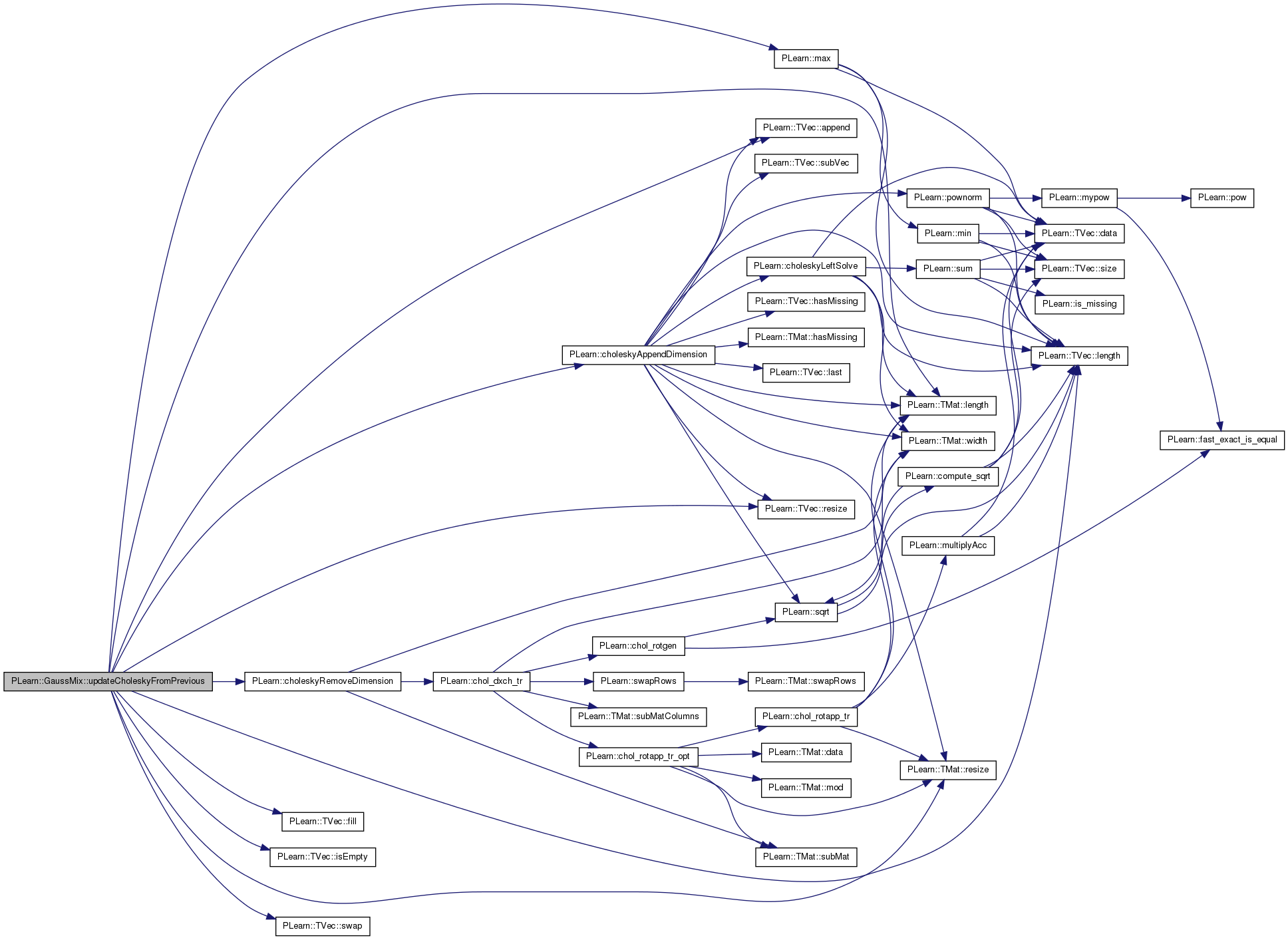

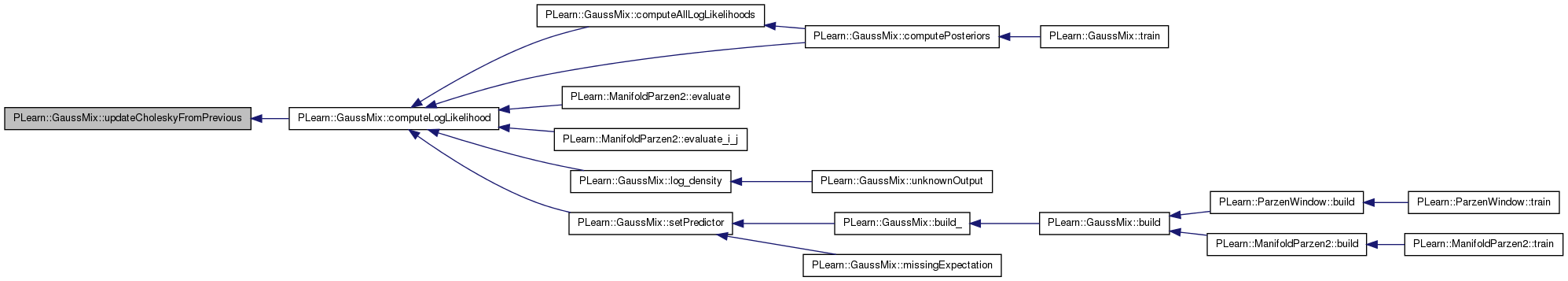

| void | updateCholeskyFromPrevious (const Mat &chol_previous, Mat &chol_updated, const Mat &full_matrix, const TVec< int > &indices_previous, const TVec< int > &indices_updated) const |

| Fill 'chol_updated' with the Cholesky decomposition of the submatrix of 'full_matrix' corresponding to the selection of dimensions given by 'indices_updated'. | |

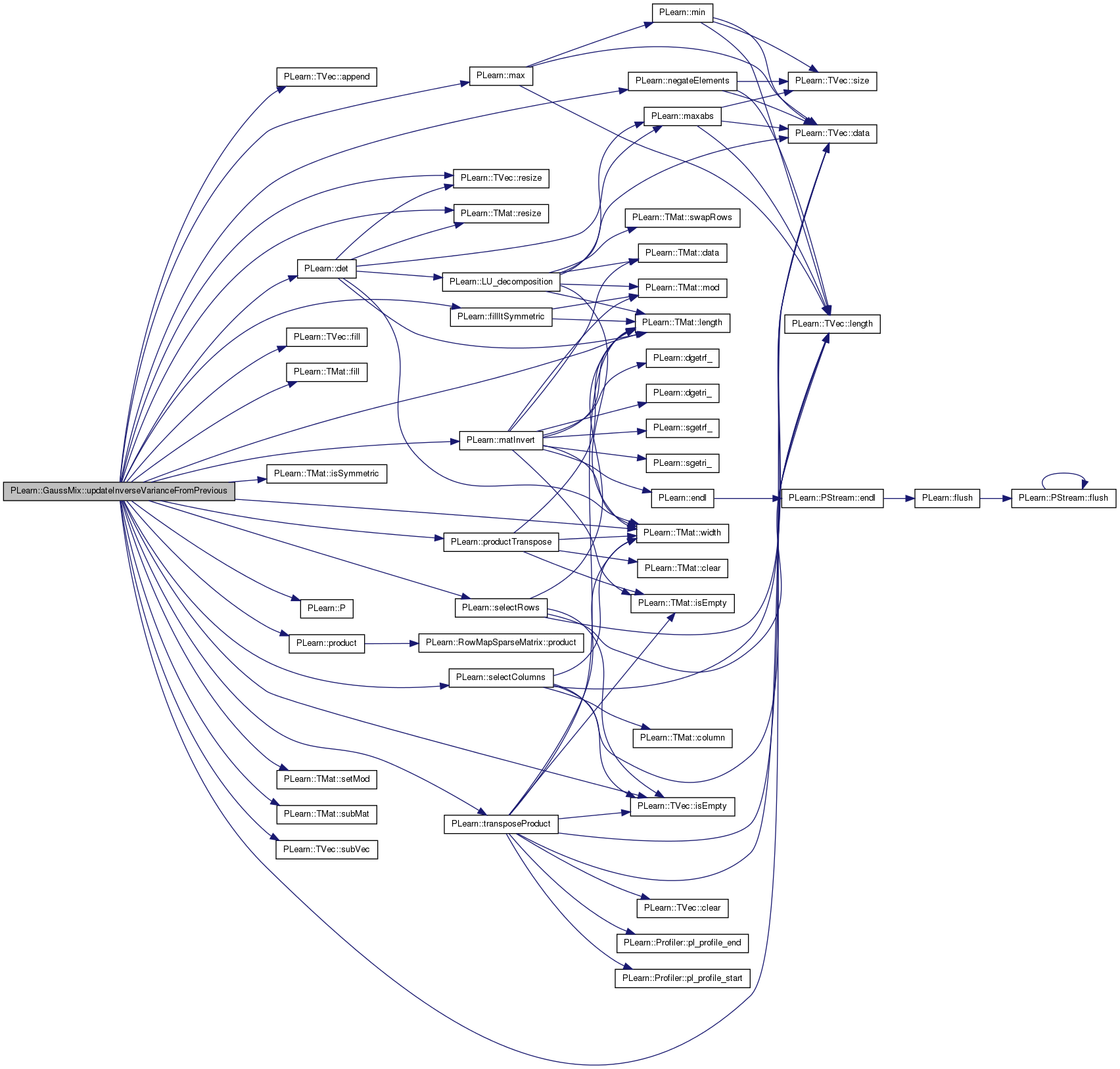

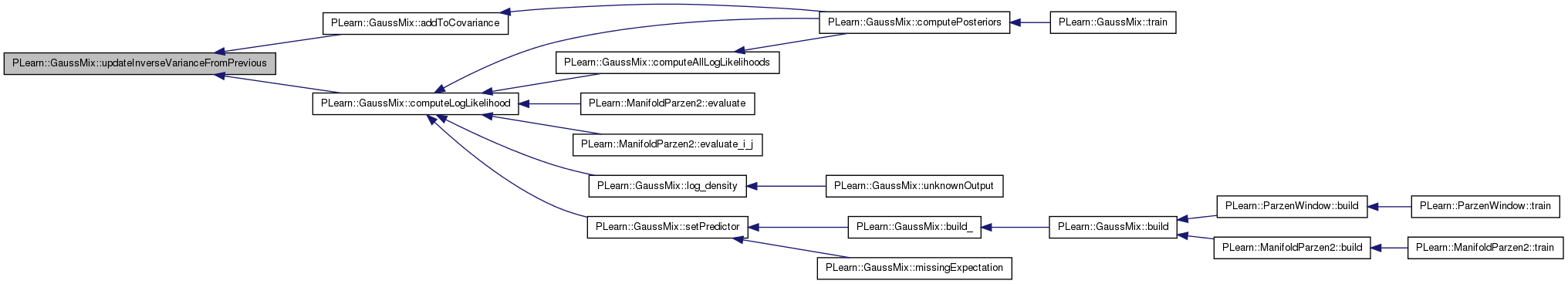

| void | updateInverseVarianceFromPrevious (const Mat &src, Mat &dst, const Mat &full, const TVec< int > &ind_src, const TVec< int > &ind_dst, real *src_log_det=0, real *dst_log_det=0) const |

| void | addToCovariance (const Vec &y, int j, const Mat &cov, real post) |

| virtual void | unknownOutput (char def, const Vec &input, Vec &output, int &k) const |

| Overridden so as to compute specific GaussMix outputs. | |

Static Protected Member Functions | |

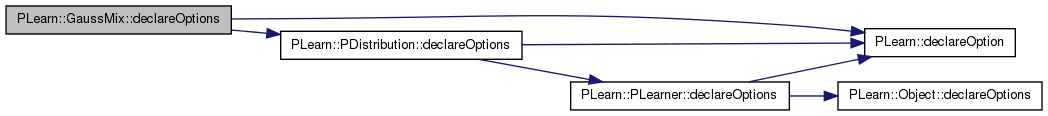

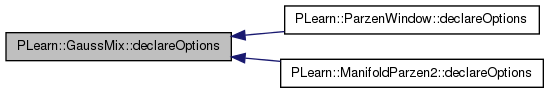

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

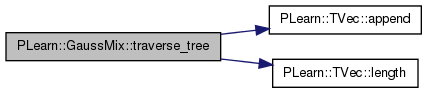

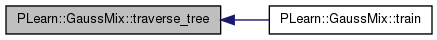

| static void | traverse_tree (TVec< int > &path, TVec< bool > &span_can_free, TVec< bool > &span_use_previous, bool free_previous, bool use_previous, int index_node, int previous_node, const TVec< int > &parent, const TVec< TVec< int > > &children, const TVec< int > &message_up, const TVec< int > &message_down) |

| Recursive function to compute a spanning path from previously computed cost values (given by 'message_up' and 'message_down'). | |

Protected Attributes | |

| TVec< Mat > | H3_inverse |

| PP< PTimer > | ptimer |

| Used to measure the total training time. | |

| TMat< bool > | missing_patterns |

| All missing patterns found in the training set (stored in rows). | |

| TMat< bool > | missing_template |

| Missing patterns used as templates (obtained by k-median). | |

| int | current_cluster |

| Index of the current cluster whose spanning path is being walked on during training. | |

| TVec< int > | sample_to_path_index |

| The i-th element is the index of the i-th training sample in the spanning path that contains it (i.e. | |

| TVec< TVec< int > > | spanning_path |

| The k-th element is the list of ordered samples in the spanning path for the k-th cluster. | |

| TVec< TVec< bool > > | spanning_use_previous |

| The k-th element is a vector that indicates whether at each step in the spanning path of the k-th cluster, a sample should use the previous covariance matrix computed in the path (true), or the one stored one step before it (false). | |

| TVec< TVec< bool > > | spanning_can_free |

| The k-th element is a vector that indicates whether at each step in the spanning path of the k-th cluster, we should free the memory used by the previous covariance matrix (true), or we should instead keep this matrix for further use (false). | |

| Mat | log_likelihood_post_clust |

| Used to store the likelihood given by all Gaussians for each sample in the current cluster. | |

| TVec< TVec< int > > | clusters_samp |

| The k-th element is the list of samples in the k-th cluster. | |

| TVec< Mat > | cholesky_queue |

| The list of all cholesky decompositions (of covariance matrices) that need to be kept in memory during training. | |

| Vec | log_det_queue |

| TODO Document (list of determinants of covariance matrices of the. | |

| TVec< VMat > | imputed_missing |

| TODO Document (the VMats with the imputed missing values). | |

| TVec< Mat > | clust_imputed_missing |

| TODO Document (the mats with the imputed missing values by each. | |

| Vec | sum_of_posteriors |

| TODO Document (sum of all posteriors in computePosteriors()). | |

| TVec< bool > | no_missing_change |

| TODO Document. | |

| TVec< Mat > | cond_var_inv_queue |

| TVec< TVec< int > > | indices_queue |

| The list of all lists of dimension indices (of covariance matrices) that need to be kept in memory during training. | |

| TVec< TVec< int > > | indices_inv_queue |

| int | type_id |

| Set at build time, this integer value depends uniquely on the 'type' option. | |

| Vec | mean_training |

| Mean and standard deviation of the training set. | |

| Vec | stddev_training |

| TVec< Mat > | error_covariance |

| Mat | posteriors |

| The posterior probabilities P(j | s_i), where j is the index of a Gaussian and i is the index of a sample. | |

| Vec | initial_weights |

| The initial weights of the samples s_i in the training set, copied for efficiency concerns. | |

| Mat | updated_weights |

| A matrix whose j-th line is a Vec with the weights of each sample s_i for Gaussian j, i.e. | |

| TVec< Mat > | eigenvectors_x |

| The eigenvectors of the covariance of X. | |

| Mat | eigenvalues_x |

| The eigenvalues of the covariance of X. | |

| TVec< Mat > | y_x_mat |

| The product K2 * K1^-1 to compute E[Y|x]. | |

| TVec< Mat > | eigenvectors_y_x |

| The eigenvectors of the covariance of Y|x. | |

| Mat | eigenvalues_y_x |

| The eigenvalues of the covariance of Y|x. | |

| Mat | center_y_x |

| Used to store the conditional expectation E[Y | X = x]. | |

| Vec | log_p_j_x |

| The logarithm of P(j|x), where x is the predictor part. | |

| Vec | p_j_x |

| The probability P(j|x), where x is the predictor part (it is computed by exp(log_p_j_x)). | |

| Vec | log_coeff |

| The logarithm of the constant part in the joint Gaussian density: log(1/sqrt((2*pi)^D * Det(C))). | |

| Vec | log_coeff_x |

| The logarithm of the constant part in P(X) and P(Y | X = x), similar to what 'log_coeff' is for the joint distribution P(X,Y). | |

| Vec | log_coeff_y_x |

| TVec< Mat > | joint_cov |

| The j-th element is the full covariance matrix for Gaussian j. | |

| TVec< Mat > | joint_inv_cov |

| TVec< Mat > | chol_joint_cov |

| The j-th element is the matrix L in the Cholesky decomposition S = L L' of the covariance matrix S of Gaussian j. | |

| TVec< int > | stage_joint_cov_computed |

| The (i,j)-th element is the matrix L in the Cholesky decomposition of the covariance for the pattern of missing values of the i-th template for the j-th Gaussian. | |

| TVec< int > | stage_replaced |

| Indicates at which stage the j-th Gaussian was replaced (because its coefficient was too low, i.e. alpha[j] < alpha_min). | |

| TVec< int > | sample_to_template |

| The i-th element is the index of the missing template of sample i in the 'missing_template' matrix. | |

| int | current_training_sample |

| Hack to know that we are computing the likelihood on a training sample. | |

| int | previous_training_sample |

| Index of the previous training sample whose likelihood (and associated covariance matrix) has been computed. | |

| bool | previous_predictor_part_had_missing |

| A boolean indicating whether or not the last predictor part set through setPredictor(..) had a missing value. | |

| Vec | y_centered |

| Storage vector to save some memory allocations. | |

| Mat | covariance |

| Storage for the (weighted) covariance matrix of the dataset. | |

| Vec | log_likelihood_dens |

| Temporary storage vector. | |

| TVec< bool > | need_recompute |

| TVec< int > | original_to_reordered |

| int | D |

| Mat | diags |

| Mat | eigenvalues |

| TVec< Mat > | eigenvectors |

| int | n_eigen_computed |

| int | nsamples |

Private Types | |

| typedef PDistribution | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| Vec | log_likelihood_post |

| Temporary storage used when computing posteriors. | |

| Vec | sample_row |

Definition at line 49 of file GaussMix.h.

typedef PDistribution PLearn::GaussMix::inherited [private] |

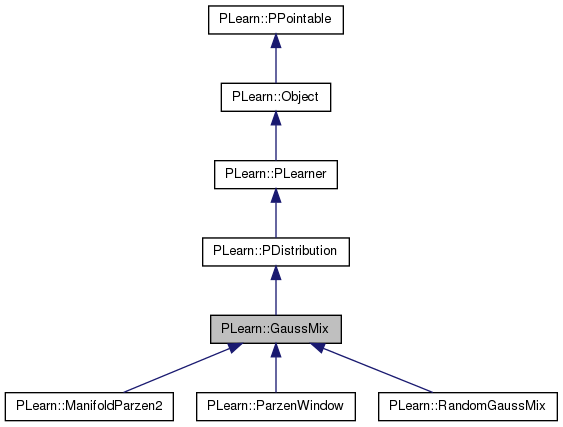

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 54 of file GaussMix.h.

| PLearn::GaussMix::GaussMix | ( | ) |

Default constructor.

Definition at line 74 of file GaussMix.cc.

References current_training_sample, PLearn::PLearner::nstages, previous_training_sample, and ptimer.

:

ptimer(new PTimer()),

type_id(TYPE_UNKNOWN),

previous_predictor_part_had_missing(false),

D(-1),

n_eigen_computed(-1),

nsamples(-1),

alpha_min(1e-6),

efficient_k_median(1),

efficient_k_median_iter(100),

efficient_missing(0),

epsilon(1e-6),

f_eigen(0),

impute_missing(false),

kmeans_iterations(5),

L(1),

max_samples_in_cluster(-1),

min_samples_in_cluster(1),

n_eigen(-1),

sigma_min(1e-6),

type("spherical")

{

// Change the default value of 'nstages' to 10 to make the user aware that

// in general it should be higher than 1.

nstages = 10;

current_training_sample = -1;

previous_training_sample = -2; // Only use efficient_missing in training.

ptimer->newTimer("init_time");

ptimer->newTimer("training_time");

}

| string PLearn::GaussMix::_classname_ | ( | ) | [static] |

Declares name and deepCopy methods.

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

| OptionList & PLearn::GaussMix::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

| RemoteMethodMap & PLearn::GaussMix::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

| bool PLearn::GaussMix::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

| Object * PLearn::GaussMix::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

| void PLearn::GaussMix::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

| void PLearn::GaussMix::addToCovariance | ( | const Vec & | y, |

| int | j, | ||

| const Mat & | cov, | ||

| real | post | ||

| ) | [protected] |

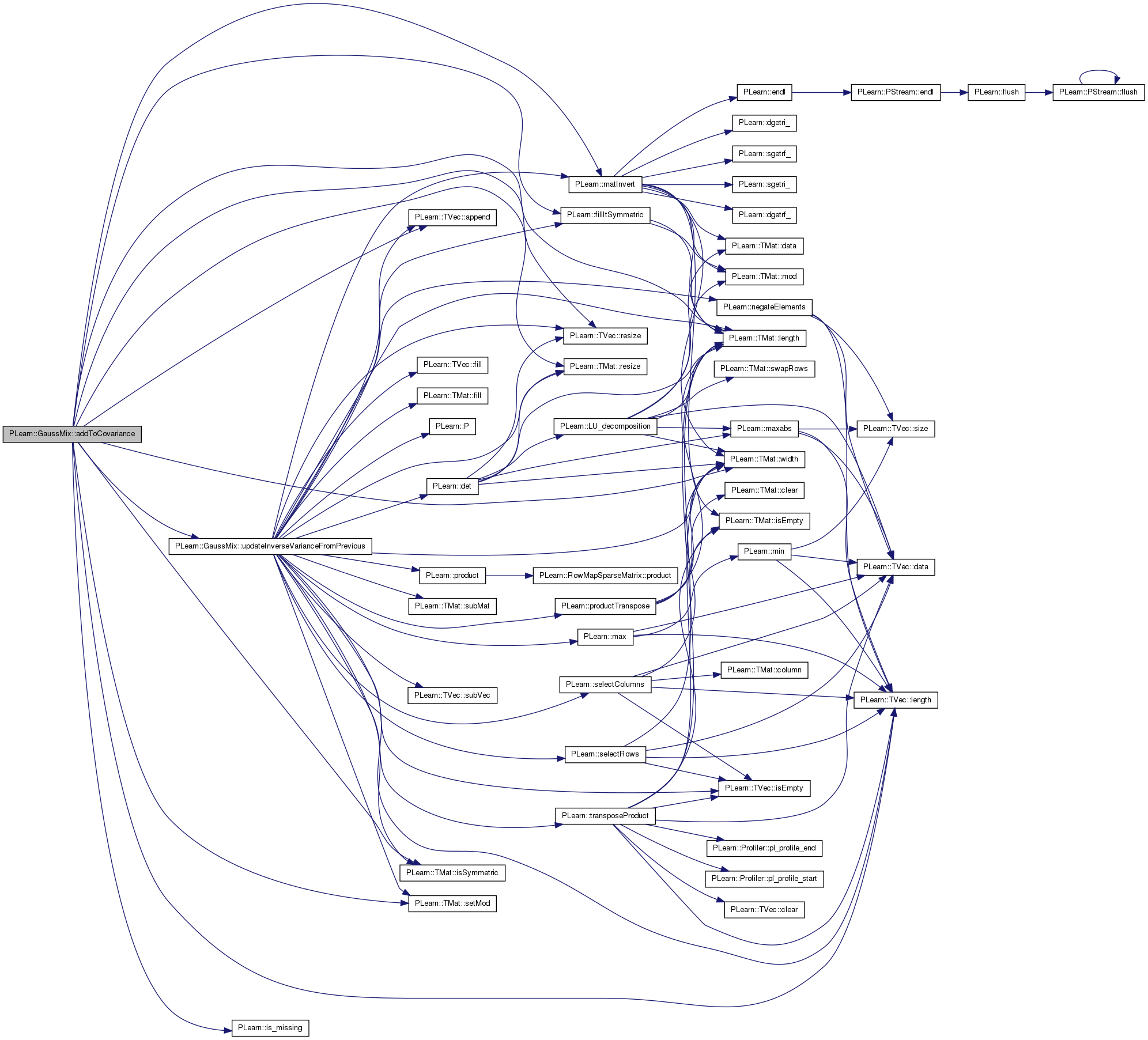

Definition at line 810 of file GaussMix.cc.

References PLearn::TVec< T >::append(), cond_var_inv_queue, current_cluster, current_training_sample, PLearn::fillItSymmetric(), i, impute_missing, indices_inv_queue, PLearn::is_missing(), PLearn::TMat< T >::isSymmetric(), j, joint_inv_cov, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::matInvert(), PLearn::PDistribution::n_predictor, no_missing_change, PLASSERT, previous_training_sample, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sample_to_path_index, PLearn::TMat< T >::setMod(), spanning_can_free, spanning_use_previous, updateInverseVarianceFromPrevious(), and PLearn::TMat< T >::width().

Referenced by computePosteriors().

{

//Profiler::start("addToCovariance");

PLASSERT( y.length() == cov.length() && y.length() == cov.width() );

PLASSERT( n_predictor == 0 );

PLASSERT( impute_missing );

static TVec<int> coord_missing;

static Mat inv_cov_y_missing;

static Mat H_inv_tpl;

static TVec<int> ind_inv_tpl;

static Mat H_inv_tot;

static TVec<int> ind_inv_tot;

coord_missing.resize(0);

for (int k = 0; k < y.length(); k++)

if (is_missing(y[k]))

coord_missing.append(k);

Mat& inv_cov_y = joint_inv_cov[j];

if (previous_training_sample == -1) {

int n_missing = coord_missing.length();

inv_cov_y_missing.setMod(n_missing);

inv_cov_y_missing.resize(n_missing, n_missing);

for (int k = 0; k < n_missing; k++)

for (int q = 0; q < n_missing; q++)

inv_cov_y_missing(k,q) =

inv_cov_y(coord_missing[k], coord_missing[q]);

cond_var_inv_queue.resize(1);

Mat& cond_inv = cond_var_inv_queue[0];

cond_inv.resize(inv_cov_y_missing.length(), inv_cov_y_missing.width());

matInvert(inv_cov_y_missing, cond_inv);

// Take care of numerical imprecisions that may cause the inverse not

// to be exactly symmetric.

PLASSERT( cond_inv.isSymmetric(false, true) );

fillItSymmetric(cond_inv);

indices_inv_queue.resize(1);

TVec<int>& ind = indices_inv_queue[0];

ind.resize(n_missing);

ind << coord_missing;

}

int path_index =

sample_to_path_index[current_training_sample];

int queue_index;

if (spanning_use_previous[current_cluster][path_index])

queue_index = cond_var_inv_queue.length() - 1;

else

queue_index = cond_var_inv_queue.length() - 2;

H_inv_tpl = cond_var_inv_queue[queue_index];

ind_inv_tpl = indices_inv_queue[queue_index];

int n_inv_tpl = H_inv_tpl.length();

H_inv_tot.resize(n_inv_tpl, n_inv_tpl);

ind_inv_tot = coord_missing;

bool same_covariance = no_missing_change[current_training_sample];

if (!same_covariance)

updateInverseVarianceFromPrevious(H_inv_tpl, H_inv_tot,

joint_inv_cov[j], ind_inv_tpl, ind_inv_tot);

Mat* the_H_inv = same_covariance ? &H_inv_tpl : &H_inv_tot;

TVec<int>* the_ind_inv = same_covariance? &ind_inv_tpl : &ind_inv_tot;

// Add this matrix (weighted by the coefficient 'post') to the given 'cov'

// full matrix.

for (int i = 0; i < the_ind_inv->length(); i++) {

int the_ind_inv_i = (*the_ind_inv)[i];

for (int k = 0; k < the_ind_inv->length(); k++)

cov(the_ind_inv_i, (*the_ind_inv)[k]) += post * (*the_H_inv)(i, k);

}

bool cannot_free =

!spanning_can_free[current_cluster][path_index];

if (cannot_free)

queue_index++;

cond_var_inv_queue.resize(queue_index + 1);

indices_inv_queue.resize(queue_index + 1);

static Mat dummy_mat;

H_inv_tpl = dummy_mat;

if (!same_covariance || cannot_free) {

Mat& M = cond_var_inv_queue[queue_index];

M.resize(H_inv_tot.length(), H_inv_tot.width());

M << H_inv_tot;

TVec<int>& ind = indices_inv_queue[queue_index];

ind.resize(the_ind_inv->length());

ind << *the_ind_inv;

}

//Profiler::end("addToCovariance");

}
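The final accumulation step of addToCovariance() adds a small matrix, weighted by the posterior 'post', into the rows and columns of 'cov' selected by a list of indices. The following is a minimal standalone sketch of that scatter-add pattern only. `Matrix` and `scatterAddWeighted` are hypothetical names that stand in for PLearn's `Mat` and the loop in the listing above; they are not part of PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Dense row-major matrix; a hypothetical stand-in for PLearn's Mat.
using Matrix = std::vector<std::vector<double>>;

// Add weight * sub(i, k) to full(idx[i], idx[k]) for every pair (i, k).
// 'idx' lists the coordinates of 'full' that 'sub' covers, in order.
void scatterAddWeighted(Matrix& full, const Matrix& sub,
                        const std::vector<int>& idx, double weight)
{
    assert(sub.size() == idx.size());
    for (std::size_t i = 0; i < idx.size(); ++i) {
        assert(sub[i].size() == idx.size());
        for (std::size_t k = 0; k < idx.size(); ++k)
            full[idx[i]][idx[k]] += weight * sub[i][k];
    }
}
```

Calling `scatterAddWeighted(cov, H, {0, 2}, 0.5)` with a 3x3 `cov` and a 2x2 `H` only touches the entries of `cov` in rows and columns 0 and 2, leaving the observed coordinate 1 unchanged.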

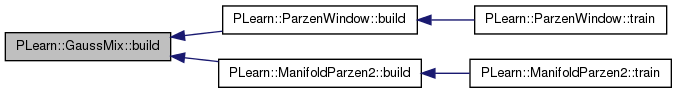

| void PLearn::GaussMix::build | ( | ) | [virtual] |

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 312 of file GaussMix.cc.

References PLearn::PDistribution::build(), and build_().

Referenced by PLearn::ParzenWindow::build(), and PLearn::ManifoldParzen2::build().

{

inherited::build();

build_();

}

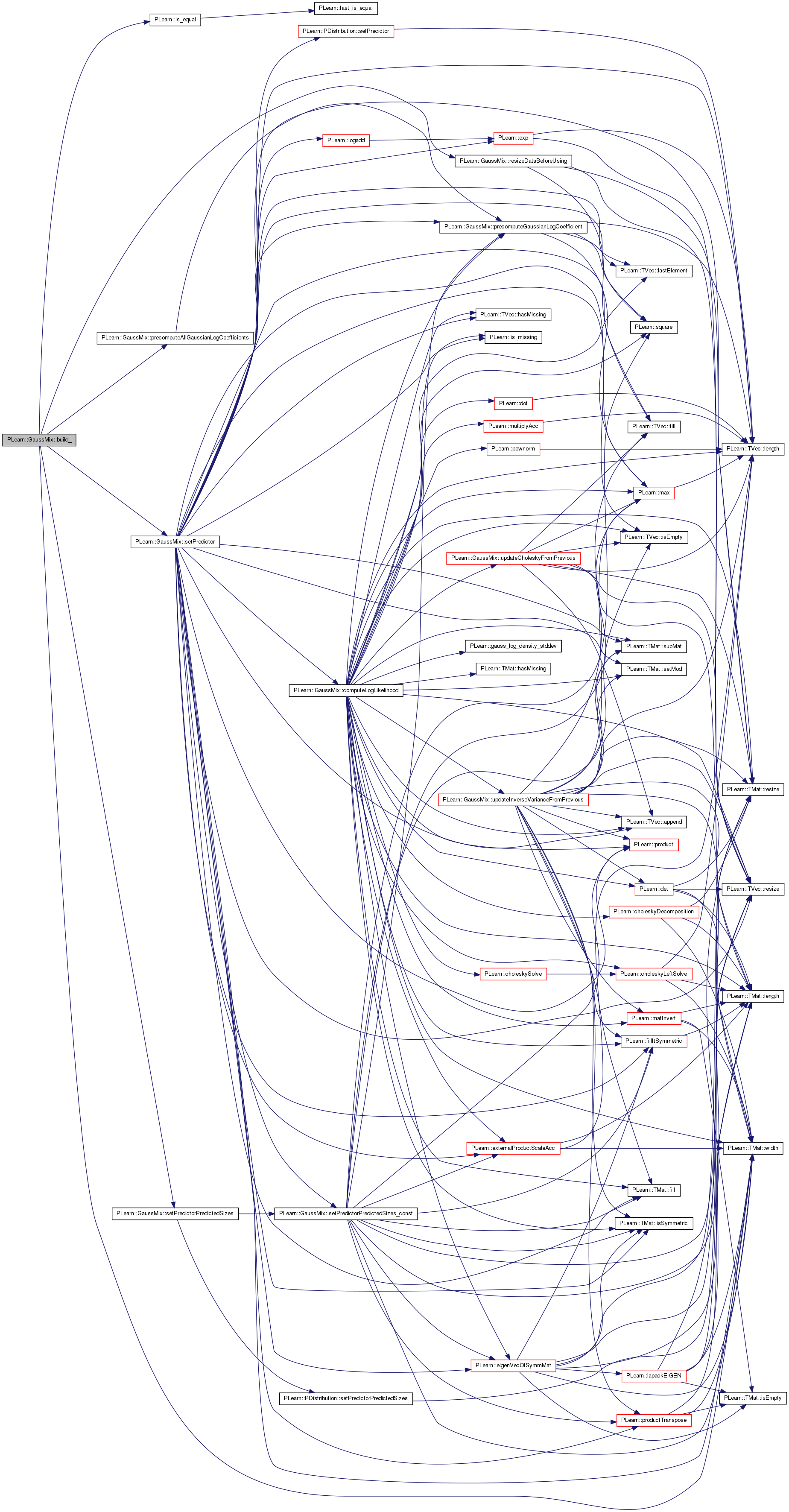

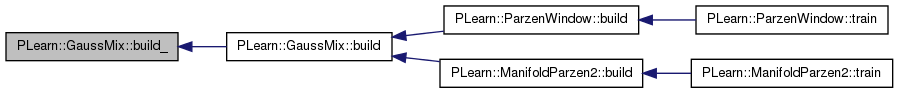

| void PLearn::GaussMix::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 321 of file GaussMix.cc.

References center, D, eigenvalues, f_eigen, PLearn::is_equal(), n_eigen, n_eigen_computed, PLASSERT, PLERROR, precomputeAllGaussianLogCoefficients(), PLearn::PDistribution::predicted_size, PLearn::PDistribution::predictor_part, PLearn::PDistribution::predictor_size, resizeDataBeforeUsing(), setPredictor(), setPredictorPredictedSizes(), PLearn::PLearner::stage, type, TYPE_DIAGONAL, TYPE_GENERAL, type_id, TYPE_SPHERICAL, and PLearn::TMat< T >::width().

Referenced by build().

{

// Check type value.

if (type == "spherical") {

type_id = TYPE_SPHERICAL;

} else if (type == "diagonal") {

type_id = TYPE_DIAGONAL;

} else if (type == "general") {

type_id = TYPE_GENERAL;

} else

PLERROR("In GaussMix::build_ - Type '%s' is unknown", type.c_str());

// Special case for the 'f_eigen' option: 1 means we keep everything.

PLASSERT( f_eigen >= 0 && f_eigen <= 1 );

if (is_equal(f_eigen, 1))

n_eigen = -1;

// Guess values for 'D' and 'n_eigen_computed' if they are not provided

// (this could be the case for instance when specifying 'by hand' the

// parameters of the mixture of Gaussians).

// Also make a few checks to ensure all values are consistent.

if (stage > 0) {

PLASSERT( D == -1 || D == center.width() );

if (D == -1)

D = center.width();

PLASSERT( n_eigen_computed == -1 ||

n_eigen_computed == eigenvalues.width() );

if (n_eigen_computed == -1)

n_eigen_computed = eigenvalues.width();

PLASSERT( n_eigen == -1 || n_eigen_computed <= n_eigen + 1 );

PLASSERT( n_eigen_computed <= D );

}

// Make sure everything is correctly resized before using the object.

resizeDataBeforeUsing();

// If the learner is ready to be used, we need to precompute the logarithm

// of the constant coefficient of each Gaussian.

if (stage > 0)

precomputeAllGaussianLogCoefficients();

// Perform GaussMix-specific operations for conditional distributions.

GaussMix::setPredictorPredictedSizes(predictor_size, predicted_size, false);

GaussMix::setPredictor(predictor_part, false);

}

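| real PLearn::GaussMix::cdf | ( | const Vec & | y | ) | const [virtual] |

Return cumulative density fn = P(Y<y | x). Not implemented: the current body returns MISSING_VALUE.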

Reimplemented from PLearn::PDistribution.

Definition at line 4400 of file GaussMix.cc.

References MISSING_VALUE.

{

//PLERROR("cdf not implemented for GaussMix"); return 0.0;

return MISSING_VALUE;

}

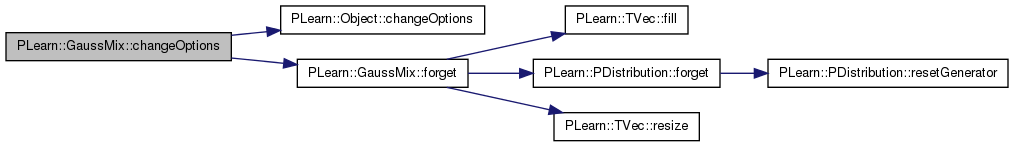

| void PLearn::GaussMix::changeOptions | ( | const map< string, string > & | name_value | ) | [virtual] |

Overridden in order to detect changes that require a call to forget().

Reimplemented from PLearn::Object.

Definition at line 370 of file GaussMix.cc.

References PLearn::Object::changeOptions(), forget(), and PLearn::PLearner::stage.

{

// When one of the options below is changed for a learner that is already

// trained, we need to call forget(), otherwise some asserts may fail

// during a subsequent build.

if (stage > 0 && (name_value.find("n_eigen") != name_value.end() ||

name_value.find("L") != name_value.end() ||

name_value.find("seed") != name_value.end() ||

name_value.find("sigma_min")!=name_value.end() ||

name_value.find("type") != name_value.end() ))

forget();

inherited::changeOptions(name_value);

}
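The check performed by changeOptions() can be isolated as a small predicate. This is a hedged sketch: `requiresForget` is a hypothetical helper, not a PLearn function, and the option names are simply those tested in the listing above.

```cpp
#include <cassert>
#include <map>
#include <string>

// Returns true when a trained learner (stage > 0) is about to have one of
// the options changed that invalidates its learned parameters.
bool requiresForget(const std::map<std::string, std::string>& name_value,
                    int stage)
{
    if (stage <= 0)
        return false; // Nothing has been learned yet, nothing to forget.
    static const char* const keys[] =
        {"n_eigen", "L", "seed", "sigma_min", "type"};
    for (const char* key : keys)
        if (name_value.count(key) > 0)
            return true;
    return false;
}
```

With this predicate, changing only 'epsilon' on a trained learner would not trigger a reset, while changing 'type' would.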

| string PLearn::GaussMix::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 133 of file GaussMix.cc.

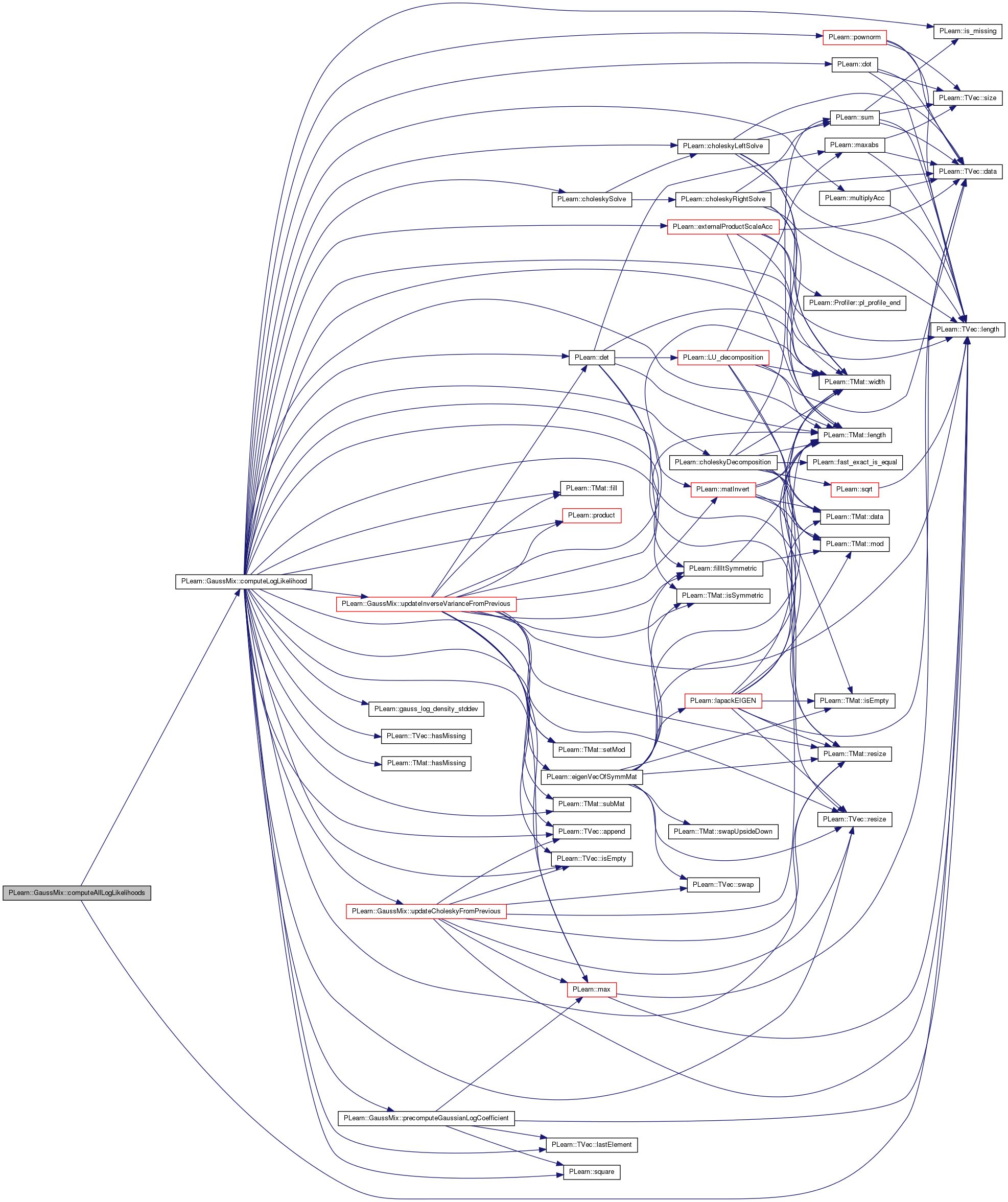

| void PLearn::GaussMix::computeAllLogLikelihoods | ( | const Vec & | sample, |

| const Vec & | log_like | ||

| ) | [protected] |

Fill the 'log_like' vector with the log-likelihood of each Gaussian for the given sample.

Definition at line 1907 of file GaussMix.cc.

References computeLogLikelihood(), D, j, L, PLearn::TVec< T >::length(), and PLASSERT.

Referenced by computePosteriors().

{

PLASSERT( sample.length() == D );

PLASSERT( log_like.length() == L );

for (int j = 0; j < L; j++)

log_like[j] = computeLogLikelihood(sample, j);

}
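A vector of per-component log-likelihoods such as 'log_like' is typically combined with the mixture weights 'alpha' through a log-sum-exp. The sketch below shows that standard technique in isolation; `mixtureLogDensity` is a hypothetical name, and this is not claimed to be how GaussMix itself performs the combination.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Compute log( sum_j alpha[j] * exp(log_like[j]) ) without underflow by
// factoring out the largest term before exponentiating.
double mixtureLogDensity(const std::vector<double>& log_like,
                         const std::vector<double>& alpha)
{
    assert(!log_like.empty() && log_like.size() == alpha.size());
    std::vector<double> terms(log_like.size());
    double max_term = -std::numeric_limits<double>::infinity();
    for (std::size_t j = 0; j < terms.size(); ++j) {
        terms[j] = std::log(alpha[j]) + log_like[j];
        max_term = std::max(max_term, terms[j]);
    }
    if (std::isinf(max_term))
        return max_term; // All components have zero probability.
    double sum = 0.0;
    for (double t : terms)
        sum += std::exp(t - max_term);
    return max_term + std::log(sum);
}
```

Subtracting the maximum keeps the result finite even when every component log-likelihood is very negative, a case where exponentiating directly would underflow to zero.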

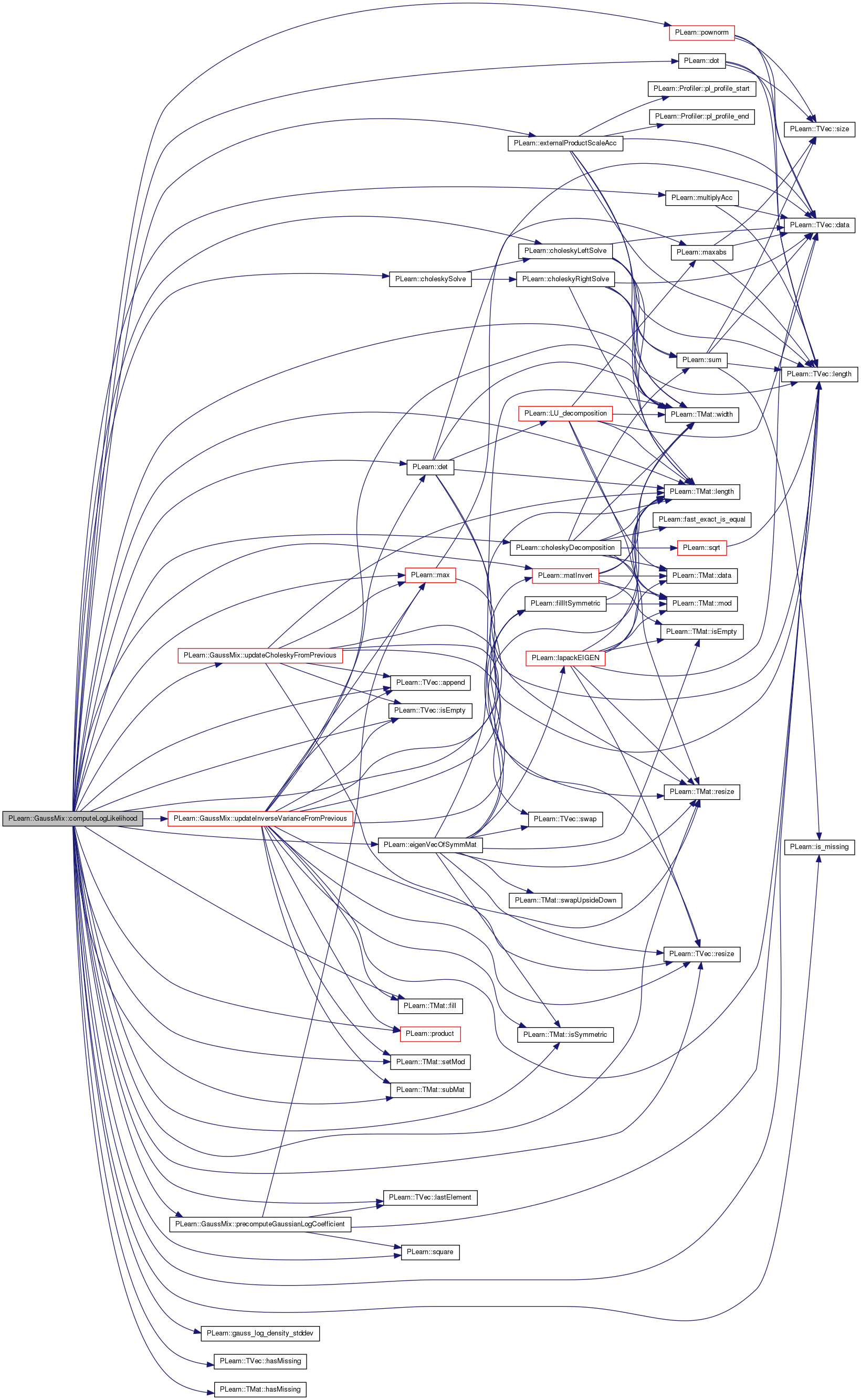

| real PLearn::GaussMix::computeLogLikelihood | ( | const Vec & | y, |

| int | j, | ||

| bool | is_predictor = false | ||

| ) | const [protected] |

Compute log p(y | x,j), with j < L the index of a mixture's component, and 'x' the current predictor part.

If 'is_predictor' is set to true, the likelihood of the predictor part is returned instead, i.e. log p(X = y | j).
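For the 'spherical' and 'diagonal' types the covariance is diagonal, so the log-likelihood is a sum of univariate Gaussian log-densities over the observed coordinates. The sketch below illustrates that case under a few assumptions: missing values are encoded as NaN, `gaussLogDensity` and `diagonalLogLikelihood` are hypothetical names, and plain `std::vector` replaces PLearn's `Vec`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Log-density of the univariate Gaussian N(mu, stddev^2) evaluated at x.
double gaussLogDensity(double x, double mu, double stddev)
{
    const double kLog2Pi = 1.8378770664093453; // log(2 * pi)
    const double z = (x - mu) / stddev;
    return -0.5 * (kLog2Pi + z * z) - std::log(stddev);
}

// Sum the per-coordinate log-densities, skipping missing (NaN) coordinates
// and flooring each standard deviation at sigma_min.
double diagonalLogLikelihood(const std::vector<double>& y,
                             const std::vector<double>& mu,
                             const std::vector<double>& stddev,
                             double sigma_min)
{
    assert(y.size() == mu.size() && y.size() == stddev.size());
    double log_likelihood = 0.0;
    for (std::size_t k = 0; k < y.size(); ++k) {
        if (std::isnan(y[k]))
            continue; // Missing coordinate: it contributes nothing.
        const double s = std::max(sigma_min, stddev[k]);
        log_likelihood += gaussLogDensity(y[k], mu[k], s);
    }
    return log_likelihood;
}
```

For the spherical type, every entry of `stddev` would hold the same value (the component's 'sigma'); for the diagonal type each coordinate has its own.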

Definition at line 908 of file GaussMix.cc.

References PLearn::TVec< T >::append(), center, center_y_x, cholesky_queue, PLearn::choleskyDecomposition(), PLearn::choleskyLeftSolve(), PLearn::choleskySolve(), clust_imputed_missing, current_cluster, current_training_sample, D, PLearn::det(), diags, PLearn::dot(), efficient_missing, eigenvalues, eigenvalues_x, eigenvalues_y_x, PLearn::eigenVecOfSymmMat(), eigenvectors, eigenvectors_x, eigenvectors_y_x, PLearn::externalProductScaleAcc(), PLearn::TMat< T >::fill(), PLearn::fillItSymmetric(), PLearn::gauss_log_density_stddev(), H3_inverse, PLearn::TVec< T >::hasMissing(), PLearn::TMat< T >::hasMissing(), i, impute_missing, imputed_missing, indices_queue, PLearn::is_missing(), PLearn::TVec< T >::isEmpty(), PLearn::TMat< T >::isSymmetric(), j, joint_cov, joint_inv_cov, L, PLearn::TVec< T >::lastElement(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), Log2Pi, log_coeff, log_coeff_x, log_coeff_y_x, log_det_queue, PLearn::matInvert(), PLearn::max(), PLearn::multiplyAcc(), n, n_eigen, n_eigen_computed, PLearn::PDistribution::n_predicted, PLearn::PDistribution::n_predictor, need_recompute, no_missing_change, pl_log, PLASSERT, PLearn::pownorm(), precomputeGaussianLogCoefficient(), previous_training_sample, PLearn::product(), PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sample_to_path_index, PLearn::TMat< T >::setMod(), sigma, sigma_min, spanning_can_free, spanning_use_previous, PLearn::square(), PLearn::PLearner::stage, stage_joint_cov_computed, PLearn::TMat< T >::subMat(), TYPE_DIAGONAL, TYPE_GENERAL, type_id, TYPE_SPHERICAL, updateCholeskyFromPrevious(), updateInverseVarianceFromPrevious(), PLearn::TMat< T >::width(), and y_centered.

Referenced by computeAllLogLikelihoods(), computePosteriors(), PLearn::ManifoldParzen2::evaluate(), PLearn::ManifoldParzen2::evaluate_i_j(), log_density(), and setPredictor().
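For the 'general' type, the listing below rebuilds the full covariance of component j from its stored eigenpairs: all directions that were not kept share the variance lambda0 (the last stored eigenvalue, floored at sigma_min squared), and each kept eigenvector adds a rank-one correction. The following standalone sketch mirrors that construction; `Matrix` and `rebuildCovariance` are hypothetical names, and eigenvectors are assumed to be stored as rows.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Dense row-major matrix; a hypothetical stand-in for PLearn's Mat.
using Matrix = std::vector<std::vector<double>>;

// Build C = lambda0 * I + sum_k (max(var_min, lambda_k) - lambda0) v_k v_k',
// where k ranges over all stored eigenpairs except the last one.
Matrix rebuildCovariance(const Matrix& eigenvectors,
                         const std::vector<double>& eigenvalues,
                         double var_min)
{
    assert(!eigenvalues.empty());
    assert(eigenvectors.size() == eigenvalues.size());
    const std::size_t n = eigenvalues.size();
    const std::size_t D = eigenvectors[0].size();
    const double lambda0 = std::max(var_min, eigenvalues.back());
    Matrix cov(D, std::vector<double>(D, 0.0));
    for (std::size_t k = 0; k + 1 < n; ++k) {
        const double scale = std::max(var_min, eigenvalues[k]) - lambda0;
        const std::vector<double>& v = eigenvectors[k];
        for (std::size_t i = 0; i < D; ++i)
            for (std::size_t j = 0; j < D; ++j)
                cov[i][j] += scale * v[i] * v[j];
    }
    for (std::size_t i = 0; i < D; ++i)
        cov[i][i] += lambda0; // Shared variance of the remaining directions.
    return cov;
}
```

With orthonormal eigenvectors (1, 0) and (0, 1) and eigenvalues 4 and 1, the result is the diagonal matrix with entries 4 and 1, as expected.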

{

//Profiler::start("computeLogLikelihood");

static int size; // Size of the vector whose density is computed.

// Index where we start (usually 0 when 'is_predictor', and 'n_predictor'

// otherwise).

static int start;

// Storage of mean.

static Vec mu_y;

static Vec mu;

static Vec diag_j; // Points to the standard deviations of Gaussian j.

// Used to point to the correct eigenvalues / eigenvectors.

static Vec eigenvals;

static Mat eigenvecs;

// Stuff when there are missing values: we need to do a lot more

// computations (with the current rather dumb implementation).

static Vec mu_y_missing;

static Mat cov_y_missing;

static Mat dummy_storage;

static TVec<Mat> covs_y_missing;

static TVec<Vec> mus_y_missing;

static Vec y_missing;

static Vec eigenvals_missing;

static TVec<Vec> eigenvals_allj_missing;

static Mat* eigenvecs_missing;

static Mat eigenvecs_missing_storage;

static TVec<Mat> eigenvecs_allj_missing;

static TVec<int> non_missing;

static Mat work_mat1, work_mat2;

static Mat eigenvalues_x_miss;

static TVec<Mat> eigenvectors_x_miss;

static Mat full_cov;

static Mat cov_x_j;

static Vec y_non_missing;

static Vec center_non_missing;

static Mat cov_y_x;

// Dummy matrix and vector to release some storage pointers so that some

// matrices can be resized.

static Mat dummy_mat;

static Vec dummy_vec;

eigenvecs_missing = &eigenvecs_missing_storage;

Mat* the_cov_y_missing = &cov_y_missing;

Vec* the_mu_y_missing = &mu_y_missing;

// Will contain the final result (the desired log-likelihood).

real log_likelihood;

if (type_id == TYPE_SPHERICAL || type_id == TYPE_DIAGONAL) {

// Easy case: the covariance matrix is diagonal.

if (is_predictor) {

size = n_predictor;

start = 0;

} else {

size = n_predicted;

start = n_predictor;

}

mu_y = center(j).subVec(start, size);

if (type_id == TYPE_DIAGONAL) {

PLASSERT( diags.length() == L && diags.width() == n_predictor+n_predicted );

diag_j = diags(j).subVec(start, size);

}

log_likelihood = 0;

// x ~= N(mu_x, cov (diagonal))

// y|x ~= N(mu_y, cov (diagonal))

for (int k = 0; k < size; k++)

if (!is_missing(y[k])) {

real stddev =

type_id == TYPE_SPHERICAL ? sigma[j]

: diag_j[k];

stddev = max(sigma_min, stddev);

log_likelihood +=

gauss_log_density_stddev(y[k], mu_y[k], stddev);

}

} else {

PLASSERT( type_id == TYPE_GENERAL );

log_likelihood = 0; // Initialize result to zero.

// TODO Put both cases (n_predictor == 0 and other) in same code (they are

// very similar).

if (n_predictor == 0) {

// Simple case: there is no predictor part.

PLASSERT( !is_predictor );

PLASSERT( y.length() == n_predicted );

// When not in training mode, 'previous_training_sample' is set to

// -2, and 'current_training_sample' is set to -1.

// In such a case, it is not necessary to do all computations.

// TODO It would be good to have one single flag for both lines

// below. Maybe current_training_sample != -1 would be enough?

bool eff_missing = (efficient_missing == 1 ||

efficient_missing == 3 ) &&

(previous_training_sample != -2);

bool imp_missing = impute_missing &&

(current_training_sample != -1);

bool eff_naive_missing = (efficient_missing == 2) &&

(current_training_sample != -1);

if (y.hasMissing() || eff_missing || imp_missing) {

// TODO This will probably make the 'efficient_missing' method

// perform slower on data with no missing value. This should be

// optimized.

// We need to recompute almost everything.

// First the full covariance.

Mat& cov_y = joint_cov[j];

Mat* inv_cov_y = impute_missing ? &joint_inv_cov[j] : 0;

real var_min = square(sigma_min);

if (stage_joint_cov_computed[j] != this->stage) {

stage_joint_cov_computed[j] = this->stage;

cov_y.resize(D, D);

eigenvals = eigenvalues(j);

real lambda0 = max(var_min, eigenvals.lastElement());

cov_y.fill(0);

Mat& eigenvectors_j = eigenvectors[j];

PLASSERT( eigenvectors_j.width() == D );

for (int k = 0; k < n_eigen_computed - 1; k++)

externalProductScaleAcc(

cov_y, eigenvectors_j(k), eigenvectors_j(k),

max(var_min, eigenvals[k]) - lambda0);

for (int i = 0; i < D; i++)

cov_y(i,i) += lambda0;

// By construction, the resulting matrix is symmetric. However,

// it may happen that it is not exactly the case due to numerical

// approximations. Thus we ensure it is perfectly symmetric.

PLASSERT( cov_y.isSymmetric(false) );

fillItSymmetric(cov_y);

if (impute_missing) {

// We also need to compute the inverse covariance

// matrix.

PLASSERT( inv_cov_y );

inv_cov_y->resize(D, D);

inv_cov_y->fill(0);

real l0 = 1 / lambda0;

for (int k = 0; k < n_eigen_computed - 1; k++)

externalProductScaleAcc(

*inv_cov_y, eigenvectors_j(k),

eigenvectors_j(k),

1 / max(var_min, eigenvals[k]) - l0);

for (int i = 0; i < D; i++)

(*inv_cov_y)(i, i) += l0;

// For the same reason as above.

PLASSERT( inv_cov_y->isSymmetric(false) );

fillItSymmetric(*inv_cov_y);

}

/*

if (efficient_missing) {

// Now compute its Cholesky decomposition.

Mat& chol_cov_y = chol_joint_cov[j];

choleskyDecomposition(cov_y, chol_cov_y);

// And do the same for missing templates.

TVec<bool> miss_pattern;

for (int i = 0; i < efficient_k_median; i++) {

miss_pattern = missing_template(i);

int n_non_missing = miss_pattern.length();

non_missing.resize(0);

for (int k = 0; k < miss_pattern.length(); k++)

if (miss_pattern[k])

n_non_missing--;

else

non_missing.append(k);

cov_y_missing.resize(n_non_missing, n_non_missing);

for (int k = 0; k < n_non_missing; k++)

for (int q = 0; q < n_non_missing; q++)

cov_y_missing(k,q) =

cov_y(non_missing[k], non_missing[q]);

Mat& chol_cov_tpl = chol_cov_template(i, j);

choleskyDecomposition(cov_y_missing, chol_cov_tpl);

}

}

*/

}

/*

// Then extract what we want.

int tpl_idx;

TVec<bool> missing_tpl;

if (efficient_missing) {

PLASSERT( current_training_sample != -1 );

tpl_idx =

sample_to_template[current_training_sample];

missing_tpl = missing_template(tpl_idx);

}

*/

/*

static TVec<int> com_non_missing, add_non_missing, add_missing;

com_non_missing.resize(0);

add_non_missing.resize(0);

// 'add_missing' will contain those coordinates in the template

// covariance matrix that need to be deleted (because they are

// missing in the current template).

add_missing.resize(0);

*/

non_missing.resize(0);

static TVec<int> coord_missing;

coord_missing.resize(0);

// int count_tpl_dim = 0;

for (int k = 0; k < n_predicted; k++)

if (!is_missing(y[k]))

non_missing.append(k);

else

coord_missing.append(k);

int n_non_missing = non_missing.length();

if (eff_missing && previous_training_sample == -1) {

// No previous training sample: we need to compute from

// scratch the Cholesky decomposition.

the_cov_y_missing->setMod(n_non_missing);

the_cov_y_missing->resize(n_non_missing, n_non_missing);

for (int k = 0; k < n_non_missing; k++)

for (int q = 0; q < n_non_missing; q++)

(*the_cov_y_missing)(k,q) =

cov_y(non_missing[k], non_missing[q]);

cholesky_queue.resize(1);

// pout << "length = " << cholesky_queue.length() << endl;

Mat& chol = cholesky_queue[0];

if (efficient_missing == 1)

choleskyDecomposition(*the_cov_y_missing, chol);

else {

PLASSERT( efficient_missing == 3 );

log_det_queue.resize(1);

log_det_queue[0] = det(*the_cov_y_missing, true);

chol.resize(the_cov_y_missing->length(),

the_cov_y_missing->length());

PLASSERT( the_cov_y_missing->isSymmetric() );

matInvert(*the_cov_y_missing, chol);

// Commenting-out this assert: it can actually fail due

// to some numerical imprecisions during matrix

// inversion, which is a bit annoying.

// PLASSERT( chol.isSymmetric(false, true) );

fillItSymmetric(chol);

}

indices_queue.resize(1);

TVec<int>& ind = indices_queue[0];

ind.resize(n_non_missing);

ind << non_missing;

}

mu_y = center(j).subVec(0, n_predicted);

the_mu_y_missing->resize(n_non_missing);

y_missing.resize(n_non_missing);

// Fill in first the coordinates which are in the template,

// then the coordinates specific to this data point.

/*

static TVec<int> tot_non_missing;

if (efficient_missing) {

tot_non_missing.resize(com_non_missing.length() +

add_non_missing.length());

tot_non_missing.subVec(0, com_non_missing.length())

<< com_non_missing;

tot_non_missing.subVec(com_non_missing.length(),

add_non_missing.length())

<< add_non_missing;

for (int k = 0; k < tot_non_missing.length(); k++) {

mu_y_missing[k] = mu_y[tot_non_missing[k]];

y_missing[k] = y[tot_non_missing[k]];

}

}

*/

if (!eff_missing) {

if (!eff_naive_missing) {

dummy_storage.setMod(n_non_missing);

dummy_storage.resize(n_non_missing, n_non_missing);

the_cov_y_missing = &dummy_storage;

} else {

PLASSERT( efficient_missing == 2 );

covs_y_missing.resize(L);

Mat& cov_y_missing_j = covs_y_missing[j];

cov_y_missing_j.resize(n_non_missing, n_non_missing);

the_cov_y_missing = &cov_y_missing_j;

mus_y_missing.resize(L);

Vec& mu_y_missing_j = mus_y_missing[j];

mu_y_missing_j.resize(n_non_missing);

the_mu_y_missing = &mu_y_missing_j;

}

for (int k = 0; k < n_non_missing; k++)

y_missing[k] = y[non_missing[k]];

if (!eff_naive_missing ||

need_recompute[current_training_sample]) {

for (int k = 0; k < n_non_missing; k++) {

(*the_mu_y_missing)[k] = mu_y[non_missing[k]];

for (int q = 0; q < n_non_missing; q++) {

(*the_cov_y_missing)(k,q) =

cov_y(non_missing[k], non_missing[q]);

}

}

}

}

/*

if (n_non_missing == 0) {

log_likelihood = 0;

} else {*/

// Perform SVD of cov_y_missing.

if (!eff_missing) {

if (!eff_naive_missing ||

need_recompute[current_training_sample]) {

eigenvals_allj_missing.resize(L);

eigenvecs_allj_missing.resize(L);

// TODO We probably do not need this 'cov_backup', since

// the matrix 'the_cov_y_missing' should not be re-used.

// Once this is tested and verified, it could be removed

// for efficiency reasons.

static Mat cov_backup;

cov_backup.setMod(the_cov_y_missing->width());

cov_backup.resize(the_cov_y_missing->length(),

the_cov_y_missing->width());

cov_backup << *the_cov_y_missing;

eigenVecOfSymmMat(cov_backup, n_non_missing,

eigenvals_allj_missing[j],

eigenvecs_allj_missing[j]);

PLASSERT( eigenvals_allj_missing[j].length()==n_non_missing);

PLASSERT( !cov_backup.hasMissing() );

}

eigenvals_missing = eigenvals_allj_missing[j];

eigenvecs_missing = &eigenvecs_allj_missing[j];

}

real log_det = 0;

static Mat L_tpl;

static TVec<int> ind_tpl;

static Mat L_tot;

static TVec<int> ind_tot;

int n_tpl = -1;

int queue_index = -1;

int path_index = -1;

bool same_covariance = false;

real log_det_tot, log_det_tpl;

if (eff_missing) {

path_index =

sample_to_path_index[current_training_sample];

// pout << "path index = " << path_index << endl;

L_tot.resize(n_non_missing, n_non_missing);

if (spanning_use_previous[current_cluster][path_index])

queue_index = cholesky_queue.length() - 1;

else

queue_index = cholesky_queue.length() - 2;

L_tpl = cholesky_queue[queue_index];

ind_tpl = indices_queue[queue_index];

if (efficient_missing == 3)

log_det_tpl = log_det_queue[queue_index];

n_tpl = L_tpl.length();

L_tot.resize(n_tpl, n_tpl);

/*

ind_tot.resize(n_non_missing);

ind_tot << non_missing;

*/

ind_tot = non_missing;

// Optimization: detect when the same covariance matrix

// can be re-used.

// TODO What about just the dimensions being reordered?

// Are we losing time in such cases?

same_covariance =

ind_tpl.length() == ind_tot.length() &&

previous_training_sample >= 0;

if (same_covariance)

for (int i = 0; i < ind_tpl.length(); i++)

if (ind_tpl[i] != ind_tot[i]) {

same_covariance = false;

break;

}

/*

Mat tmp;

if (add_missing.length() > 0) {

tmp.resize(L_tot.length(), L_tot.width());

productTranspose(tmp, L_tot, L_tot);

VMat tmp_vm(tmp);

tmp_vm->saveAMAT("/u/delallea/tmp/before.amat", false,

true);

}

*/

// Remove some rows / columns.

/*

int p = add_missing.length() - 1;

for (int k = p; k >= 0; k--) {

choleskyRemoveDimension(L_tot, add_missing[k]); //(-k+p);

*/

/*

tmp.resize(L_tot.length(), L_tot.width());

productTranspose(tmp, L_tot, L_tot);

VMat tmp_vm(tmp);

tmp_vm->saveAMAT("/u/delallea/tmp/before_" +

tostring(add_missing[k]) + ".amat", false,

true);

*/

/*

}

*/

}

if ((efficient_missing == 1 || efficient_missing == 3) &&

current_training_sample >= 0)

no_missing_change[current_training_sample] =

same_covariance;

// Now we must perform updates to compute the Cholesky

// decomposition of interest.

static Vec new_vec;

int n = -1;

Mat* the_L = 0;

if (eff_missing) {

//L_tot.resize(n_non_missing, n_non_missing);

/*

for (int k = 0; k < add_non_missing.length(); k++) {

new_vec.resize(L_tot.length() + 1);

for (int q = 0; q < new_vec.length(); q++)

new_vec[q] = cov_y(tot_non_missing[q],

add_non_missing[k]);

choleskyAppendDimension(L_tot, new_vec);

}

*/

if (!same_covariance) {

if (efficient_missing == 1) {

//Profiler::start("updateCholeskyFromPrevious, em1");

updateCholeskyFromPrevious(L_tpl, L_tot,

joint_cov[j], ind_tpl, ind_tot);

//Profiler::end("updateCholeskyFromPrevious, em1");

} else {

PLASSERT( efficient_missing == 3 );

//Profiler::start("updateInverseVarianceFromPrevious, em3");

updateInverseVarianceFromPrevious(L_tpl, L_tot,

joint_cov[j], ind_tpl, ind_tot,

&log_det_tpl, &log_det_tot);

//Profiler::end("updateInverseVarianceFromPrevious, em3");

#if 0

// Check that the inverse is correctly computed.

VMat L_tpl_vm(L_tpl);

VMat L_tot_vm(L_tot);

VMat joint_cov_vm(joint_cov[j]);

Mat data_tpl(1, ind_tpl.length());

for (int q = 0; q < ind_tpl.length(); q++)

data_tpl(0, q) = ind_tpl[q];

Mat data_tot(1, ind_tot.length());

for (int q = 0; q < ind_tot.length(); q++)

data_tot(0, q) = ind_tot[q];

VMat ind_tpl_vm(data_tpl);

VMat ind_tot_vm(data_tot);

L_tpl_vm->saveAMAT("/u/delallea/tmp/L_tpl_vm.amat",

false, true);

L_tot_vm->saveAMAT("/u/delallea/tmp/L_tot_vm.amat",

false, true);

joint_cov_vm->saveAMAT("/u/delallea/tmp/joint_cov_vm.amat",

false, true);

ind_tpl_vm->saveAMAT("/u/delallea/tmp/ind_tpl_vm.amat",

false, true);

ind_tot_vm->saveAMAT("/u/delallea/tmp/ind_tot_vm.amat",

false, true);

#endif

}

}

// Note to myself: indices in ind_tot will be changed.

// Debug check.

/*

static Mat tmp_mat;

tmp_mat.resize(L_tot.length(), L_tot.length());

productTranspose(tmp_mat, L_tot, L_tot);

// pout << "max = " << max(tmp_mat) << endl;

// pout << "min = " << min(tmp_mat) << endl;

*/

the_L = same_covariance ? &L_tpl : &L_tot;

real* the_log_det = same_covariance ? &log_det_tpl

: &log_det_tot;

n = the_L->length();

if (efficient_missing == 1) {

for (int i = 0; i < n; i++)

log_det += pl_log((*the_L)(i, i));

} else {

PLASSERT( efficient_missing == 3 );

#if 0

VMat the_L_vm(*the_L);

the_L_vm->saveAMAT("/u/delallea/tmp/L.amat", false,

true);

#endif

if (is_missing(*the_log_det)) {

// That can happen due to numerical imprecisions.

// In such a case we have to recompute the

// determinant and the inverse.

PLASSERT( !same_covariance );

the_cov_y_missing->setMod(n_non_missing);

the_cov_y_missing->resize(n_non_missing, n_non_missing);

for (int k = 0; k < n_non_missing; k++)

for (int q = 0; q < n_non_missing; q++)

(*the_cov_y_missing)(k,q) =

cov_y(non_missing[k], non_missing[q]);

*the_log_det = det((*the_cov_y_missing), true);

matInvert(*the_cov_y_missing, *the_L);

fillItSymmetric(*the_L);

}

// Note: we need to multiply the log-determinant by 0.5

// compared to 'efficient_missing == 1' because the

// determinant computed from Cholesky is the one for L,

// which is the square root of the one of the full

// matrix.

log_det += 0.5 * *the_log_det;

}

PLASSERT( !(isnan(log_det) || isinf(log_det)) );

log_likelihood = -0.5 * (n * Log2Pi) - log_det;

}

y_centered.resize(n_non_missing);

if (!eff_missing) {

mu_y = *the_mu_y_missing;

eigenvals = eigenvals_missing;

eigenvecs = *eigenvecs_missing;

y_centered << y_missing;

y_centered -= mu_y;

}

real* center_j = center[j];

if (eff_missing) {

for (int k = 0; k < n_non_missing; k++) {

int ind_tot_k = ind_tot[k];

y_centered[k] =

y[ind_tot_k] - center_j[ind_tot_k];

}

static Vec tmp_vec1;

if (impute_missing && current_training_sample >= 0) {

// We need to store the conditional expectation of the

// sample missing values.

static Vec tmp_vec2;

tmp_vec1.resize(the_L->length());

tmp_vec2.resize(the_L->length());

if (efficient_missing == 1)

choleskySolve(*the_L, y_centered, tmp_vec1, tmp_vec2);

else {

PLASSERT( efficient_missing == 3 );

product(tmp_vec1, *the_L, y_centered);

}

static Mat K2;

int ind_tot_length = ind_tot.length();

K2.resize(cov_y.length() - ind_tot_length,

ind_tot.length());

for (int i = 0; i < K2.length(); i++)

for (int k = 0; k < K2.width(); k++)

K2(i,k) = cov_y(coord_missing[i],

non_missing[k]);

static Vec cond_mean;

cond_mean.resize(coord_missing.length());

product(cond_mean, K2, tmp_vec1);

static Vec full_vec;

// TODO Right now, we store the full data vector. It

// may be more efficient to only store the missing

// values.

full_vec.resize(D);

full_vec << y;

for (int i = 0; i < coord_missing.length(); i++)

full_vec[coord_missing[i]] =

cond_mean[i] + center_j[coord_missing[i]];

clust_imputed_missing[j](path_index) << full_vec;

}

if (n > 0) {

if (efficient_missing == 1) {

tmp_vec1.resize(y_centered.length());

choleskyLeftSolve(*the_L, y_centered, tmp_vec1);

log_likelihood -= 0.5 * pownorm(tmp_vec1);

} else {

PLASSERT( efficient_missing == 3 );

log_likelihood -= 0.5 * dot(y_centered, tmp_vec1);

}

}

// Now remember L_tot for the generations to come.

// TODO This could probably be optimized to avoid useless

// copies of the covariance matrix.

bool cannot_free =

!spanning_can_free[current_cluster][path_index];

if (cannot_free)

queue_index++;

cholesky_queue.resize(queue_index + 1);

indices_queue.resize(queue_index + 1);

if (efficient_missing == 3)

log_det_queue.resize(queue_index + 1);

// pout << "length = " << cholesky_queue.length() << endl;

// Free a reference to element in cholesky_queue. This

// is needed because this matrix is going to be resized.

L_tpl = dummy_mat;

if (!same_covariance || cannot_free) {

Mat& chol = cholesky_queue[queue_index];

chol.resize(L_tot.length(), L_tot.width());

chol << L_tot;

TVec<int>& ind = indices_queue[queue_index];

ind.resize(ind_tot.length());

ind << ind_tot;

if (efficient_missing == 3)

log_det_queue[queue_index] = log_det_tot;

}

// pout << "queue_index = " << queue_index << endl;

}

if (!eff_missing) {

// real squared_norm_y_centered = pownorm(y_centered);

int n_eig = n_non_missing;

real lambda0 = var_min;

if (!eigenvals.isEmpty() && eigenvals.lastElement() > lambda0)

lambda0 = eigenvals.lastElement();

PLASSERT( lambda0 > 0 );

real one_over_lambda0 = 1.0 / lambda0;

log_likelihood = precomputeGaussianLogCoefficient(

eigenvals, n_non_missing);

static Vec y_centered_copy;

y_centered_copy.resize(y_centered.length());

y_centered_copy << y_centered; // Backup vector.

for (int k = 0; k < n_eig - 1; k++) {

real lambda = max(var_min, eigenvals[k]);

PLASSERT( lambda > 0 );

Vec eigen_k = eigenvecs(k);

real dot_k = dot(eigen_k, y_centered);

log_likelihood -= 0.5 * square(dot_k) / lambda;

multiplyAcc(y_centered, eigen_k, -dot_k);

}

log_likelihood -=

0.5 * pownorm(y_centered) * one_over_lambda0;

y_centered << y_centered_copy; // Restore original vector.

#if 0

// Old code, that had stability issues when dealing with

// large numbers.

// log_likelihood -= 0.5 * 1/lambda_0 * ||y - mu||^2

log_likelihood -=

0.5 * one_over_lambda0 * squared_norm_y_centered;

for (int k = 0; k < n_eig - 1; k++) {

// log_likelihood -= 0.5 * (1/lambda_k - 1/lambda_0)

// * ((y - mu)'.v_k)^2

real lambda = max(var_min, eigenvals[k]);

PLASSERT( lambda > 0 );

if (lambda > lambda0)

log_likelihood -=

0.5 * (1.0 / lambda - one_over_lambda0)

* square(dot(eigenvecs(k), y_centered));

}

#endif

// Release pointer to 'eigenvecs_missing'.

eigenvecs = dummy_mat;

eigenvecs_missing = &eigenvecs_missing_storage;

if (impute_missing && current_training_sample >= 0) {

// We need to store the conditional expectation of the

// sample missing values.

// For this we compute H3^-1, since this expectation is

// equal to mu_y - H3^-1 H2 (x - mu_x).

static Mat H3;

static Mat H2;

Mat& H3_inv = H3_inverse[j];

int n_missing = coord_missing.length();

if (!eff_naive_missing ||

need_recompute[current_training_sample]) {

H3.setMod(n_missing);

H3.resize(n_missing, n_missing);

H3_inv.resize(n_missing, n_missing);

for (int i = 0; i < n_missing; i++)

for (int k = 0; k < n_missing; k++)

H3(i,k) = (*inv_cov_y)(coord_missing[i],

coord_missing[k]);

PLASSERT( H3.isSymmetric(true, true) );

matInvert(H3, H3_inv);

// PLASSERT( H3_inv.isSymmetric(false, true) );

fillItSymmetric(H3_inv);

}

H2.resize(n_missing, n_non_missing);

for (int i = 0; i < n_missing; i++)

for (int k = 0; k < n_non_missing; k++)

H2(i,k) = (*inv_cov_y)(coord_missing[i],

non_missing[k]);

static Vec H2_y_centered;

H2_y_centered.resize(n_missing);

product(H2_y_centered, H2, y_centered);

static Vec cond_mean;

cond_mean.resize(n_missing);

product(cond_mean, H3_inv, H2_y_centered);

static Vec full_vec;

// TODO Right now, we store the full data vector. It

// may be more efficient to only store the missing

// values.

full_vec.resize(D);

full_vec << y;

for (int i = 0; i < n_missing; i++)

full_vec[coord_missing[i]] =

center_j[coord_missing[i]] - cond_mean[i];

PLASSERT( !full_vec.hasMissing() );

imputed_missing[j]->putRow(current_training_sample,

full_vec);

}

}

//}

} else {

log_likelihood = log_coeff[j];

mu_y = center(j).subVec(0, n_predicted);

eigenvals = eigenvalues(j);

eigenvecs = eigenvectors[j];

y_centered.resize(n_predicted);

y_centered << y;

y_centered -= mu_y;

real squared_norm_y_centered = pownorm(y_centered);

real var_min = square(sigma_min);

int n_eig = n_eigen_computed;

real lambda0 = max(var_min, eigenvals[n_eig - 1]);

PLASSERT( lambda0 > 0 );

real one_over_lambda0 = 1.0 / lambda0;

// log_likelihood -= 0.5 * 1/lambda_0 * ||y - mu||^2

log_likelihood -= 0.5 * one_over_lambda0 * squared_norm_y_centered;

for (int k = 0; k < n_eig - 1; k++) {

// log_likelihood -= 0.5 * (1/lambda_k - 1/lambda_0)

// * ((y - mu)'.v_k)^2

real lambda = max(var_min, eigenvals[k]);

PLASSERT( lambda > 0 );

if (lambda > lambda0)

log_likelihood -= 0.5 * (1.0 / lambda - one_over_lambda0)

* square(dot(eigenvecs(k), y_centered));

}

}

} else {

if (y.hasMissing()) {

// TODO Code duplication is ugly!

if (is_predictor) {

non_missing.resize(0);

for (int k = 0; k < y.length(); k++)

if (!is_missing(y[k]))

non_missing.append(k);

int n_non_missing = non_missing.length();

int n_predicted_ext = n_predicted + (n_predictor - n_non_missing);

work_mat1.resize(n_predicted_ext, n_non_missing);

work_mat2.resize(n_predicted_ext, n_predicted_ext);

real var_min = square(sigma_min);

eigenvalues_x_miss.resize(L, n_non_missing);

eigenvectors_x_miss.resize(L);

// Compute the mean and covariance of x and y|x for the j-th

// Gaussian (we will need them to compute the likelihood).

// TODO Do we really compute the mean of y|x here?

// TODO This is pretty ugly but it seems to work: replace by

// better-looking code.

// First we compute the joint covariance matrix from the

// eigenvectors and eigenvalues:

// full_cov = sum_k (lambda_k - lambda0) v_k v_k' + lambda0.I

PLASSERT( n_predictor + n_predicted == D );

Mat& full_cov_j = full_cov;

full_cov_j.resize(D, D);

eigenvals = eigenvalues(j);

real lambda0 = max(var_min, eigenvals[n_eigen_computed - 1]);

full_cov_j.fill(0);

Mat& eigenvectors_j = eigenvectors[j];

PLASSERT( eigenvectors_j.width() == D );

for (int k = 0; k < n_eigen_computed - 1; k++)

externalProductScaleAcc(

full_cov_j, eigenvectors_j(k),

eigenvectors_j(k),

max(var_min, eigenvals[k]) - lambda0);

for (int i = 0; i < D; i++)

full_cov_j(i,i) += lambda0;

// By construction, the resulting matrix is symmetric. However,

// it may happen that it is not exactly the case due to numerical

// approximations. Thus we ensure it is perfectly symmetric.

PLASSERT( full_cov_j.isSymmetric(false) );

fillItSymmetric(full_cov_j);

// Extract the covariance of the predictor x.

Mat cov_x_j_miss = full_cov.subMat(0, 0, n_predictor, n_predictor);

cov_x_j.resize(n_non_missing, n_non_missing);

for (int k = 0; k < n_non_missing; k++)

for (int p = k; p < n_non_missing; p++)

cov_x_j(k,p) = cov_x_j(p,k) =

cov_x_j_miss(non_missing[k], non_missing[p]);

// Compute its SVD.

eigenvectors_x_miss[j].resize(n_non_missing, n_non_missing);

eigenvals = eigenvalues_x_miss(j);

eigenVecOfSymmMat(cov_x_j, n_non_missing, eigenvals,

eigenvectors_x_miss[j]);

y_non_missing.resize(n_non_missing);

center_non_missing.resize(n_non_missing);

for (int k = 0; k < n_non_missing; k++) {

center_non_missing[k] = center(j, non_missing[k]);

y_non_missing[k] = y[non_missing[k]];

}

log_likelihood =

precomputeGaussianLogCoefficient(eigenvals, n_non_missing);

eigenvecs = eigenvectors_x_miss[j];

y_centered.resize(n_non_missing);

y_centered << y_non_missing;

mu = center_non_missing;

} else {

// We need to re-do everything again, now this sucks!

// First the full covariance (of y|x).

Mat& cov_y = cov_y_x;

real var_min = square(sigma_min);

cov_y.resize(n_predicted, n_predicted);

eigenvals = eigenvalues_y_x(j);

real lambda0 = max(var_min, eigenvals.lastElement());

cov_y.fill(0);

Mat& eigenvectors_j = eigenvectors_y_x[j];

int n_eig = eigenvectors_j.length();

PLASSERT( eigenvectors_j.width() == n_predicted );

for (int k = 0; k < n_eig - 1; k++)

externalProductScaleAcc(

cov_y, eigenvectors_j(k), eigenvectors_j(k),

max(var_min, eigenvals[k]) - lambda0);

for (int i = 0; i < n_predicted; i++)

cov_y(i,i) += lambda0;

// By construction, the resulting matrix is symmetric. However,

// it may happen that it is not exactly the case due to numerical

// approximations. Thus we ensure it is perfectly symmetric.

PLASSERT( cov_y.isSymmetric(false) );

fillItSymmetric(cov_y);

// Then extract what we want.

non_missing.resize(0);

for (int k = 0; k < n_predicted; k++)

if (!is_missing(y[k]))

non_missing.append(k);

mu_y = center_y_x(j);

int n_non_missing = non_missing.length();

the_mu_y_missing->resize(n_non_missing);

y_missing.resize(n_non_missing);

the_cov_y_missing->resize(n_non_missing, n_non_missing);

for (int k = 0; k < n_non_missing; k++) {

(*the_mu_y_missing)[k] = mu_y[non_missing[k]];

y_missing[k] = y[non_missing[k]];

for (int q = 0; q < n_non_missing; q++) {

(*the_cov_y_missing)(k,q) =

cov_y(non_missing[k], non_missing[q]);

}

}

if (n_non_missing == 0) {

log_likelihood = 0;

} else {

// Perform SVD of cov_y_missing.

eigenVecOfSymmMat(*the_cov_y_missing, n_non_missing,

eigenvals_missing, *eigenvecs_missing);

mu_y = *the_mu_y_missing;

eigenvals = eigenvals_missing;

eigenvecs = *eigenvecs_missing;

y_centered.resize(n_non_missing);

y_centered << y_missing;

y_centered -= mu_y;

real squared_norm_y_centered = pownorm(y_centered);

int n_eigen = n_non_missing;

lambda0 = max(var_min, eigenvals.lastElement());

PLASSERT( lambda0 > 0 );

real one_over_lambda0 = 1.0 / lambda0;

log_likelihood = precomputeGaussianLogCoefficient(

eigenvals, n_non_missing);

// log_likelihood -= 0.5 * 1/lambda_0 * ||y - mu||^2

log_likelihood -=

0.5 * one_over_lambda0 * squared_norm_y_centered;

for (int k = 0; k < n_eigen - 1; k++) {

// log_likelihood -= 0.5 * (1/lambda_k - 1/lambda_0)

// * ((y - mu)'.v_k)^2

real lambda = max(var_min, eigenvals[k]);

PLASSERT( lambda > 0 );

if (lambda > lambda0)

log_likelihood -=

0.5 * (1.0 / lambda - one_over_lambda0)

* square(dot(eigenvecs(k), y_centered));

}

// Allow future resize of 'eigenvecs_missing'.

eigenvecs = dummy_mat;

}

//Profiler::end("computeLogLikelihood");

return log_likelihood;

}

if (y_centered.length() > 0) {

y_centered -= mu;

real squared_norm_y_centered = pownorm(y_centered);

real var_min = square(sigma_min);

int n_eig = eigenvals.length();

real lambda0 = max(var_min, eigenvals.lastElement());

PLASSERT( lambda0 > 0 );

real one_over_lambda0 = 1.0 / lambda0;

// log_likelihood -= 0.5 * 1/lambda_0 * ||y - mu||^2

log_likelihood -= 0.5 * one_over_lambda0 * squared_norm_y_centered;

for (int k = 0; k < n_eig - 1; k++) {

// log_likelihood -= 0.5 * (1/lambda_k - 1/lambda_0)

// * ((y - mu)'.v_k)^2

real lambda = max(var_min, eigenvals[k]);

PLASSERT( lambda > 0 );

PLASSERT( lambda >= lambda0 );

if (lambda > lambda0)

log_likelihood -= 0.5 * (1.0 / lambda - one_over_lambda0)

* square(dot(eigenvecs(k), y_centered));

}

}

} else {

if (is_predictor) {

log_likelihood = log_coeff_x[j];

mu = center(j).subVec(0, n_predictor);

eigenvals = eigenvalues_x(j);

eigenvecs = eigenvectors_x[j];

y_centered.resize(n_predictor);

} else {

log_likelihood = log_coeff_y_x[j];

mu = center_y_x(j);

eigenvals = eigenvalues_y_x(j);

eigenvecs = eigenvectors_y_x[j];

y_centered.resize(n_predicted);

}

y_centered << y;

y_centered -= mu;

real squared_norm_y_centered = pownorm(y_centered);

real var_min = square(sigma_min);

int n_eig = eigenvals.length();

real lambda0 = max(var_min, eigenvals[n_eig - 1]);

PLASSERT( lambda0 > 0 );

real one_over_lambda0 = 1.0 / lambda0;

// log_likelihood -= 0.5 * 1/lambda_0 * ||y - mu||^2

log_likelihood -= 0.5 * one_over_lambda0 * squared_norm_y_centered;

for (int k = 0; k < n_eig - 1; k++) {

// log_likelihood -= 0.5 * (1/lambda_k - 1/lambda_0)

// * ((y - mu)'.v_k)^2

real lambda = max(var_min, eigenvals[k]);

PLASSERT( lambda > 0 );

PLASSERT( lambda >= lambda0 );

if (lambda > lambda0)

log_likelihood -= 0.5 * (1.0 / lambda - one_over_lambda0)

* square(dot(eigenvecs(k), y_centered));

}

}

// Free a potential reference to 'eigenvalues_x_miss' and

// 'eigenvectors_x_miss'.

eigenvals = dummy_vec;

eigenvecs = dummy_mat;

}

}

PLASSERT( !isnan(log_likelihood) );

//Profiler::end("computeLogLikelihood");

return log_likelihood;

}
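The recurring comment `log_likelihood -= 0.5 * (1/lambda_k - 1/lambda_0) * ((y - mu)'.v_k)^2` in the listing above can be read as a low-rank-plus-isotropic evaluation of the Gaussian log-density. The following is a minimal, standalone sketch of that idea, not the PLearn implementation: the function name `gaussLogDensityFromEigen` and the use of `std::vector` are assumptions made for illustration, and the normalization term is written out directly here rather than taken from `precomputeGaussianLogCoefficient`, whose exact definition is not shown in this section.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn). Log-density of a D-dimensional
// Gaussian whose covariance is
//   sum_k (lambda_k - lambda0) v_k v_k' + lambda0 * I,
// with eigenvalues given in decreasing order, lambda0 the last one, and
// every eigenvalue floored at var_min.
double gaussLogDensityFromEigen(
    const std::vector<double>& y,
    const std::vector<double>& mu,
    const std::vector<double>& eigenvals,
    const std::vector<std::vector<double>>& eigenvecs,
    double var_min)
{
    const std::size_t D = y.size();
    const std::size_t n_eig = eigenvals.size();
    assert(mu.size() == D && n_eig >= 1 && n_eig <= D);
    assert(eigenvecs.size() >= n_eig - 1);

    const double two_pi = 2.0 * std::acos(-1.0);
    const double lambda0 = std::max(var_min, eigenvals[n_eig - 1]);
    assert(lambda0 > 0);

    // Normalization: -0.5 * (D log(2 pi) + log det), where the D - n_eig
    // directions without a computed eigenvalue are assigned lambda0.
    double log_det = double(D - n_eig) * std::log(lambda0);
    for (std::size_t k = 0; k < n_eig; k++)
        log_det += std::log(std::max(var_min, eigenvals[k]));
    double log_density = -0.5 * (double(D) * std::log(two_pi) + log_det);

    // Isotropic part: -0.5 / lambda0 * ||y - mu||^2.
    std::vector<double> y_centered(D);
    double squared_norm = 0;
    for (std::size_t i = 0; i < D; i++) {
        y_centered[i] = y[i] - mu[i];
        squared_norm += y_centered[i] * y_centered[i];
    }
    log_density -= 0.5 * squared_norm / lambda0;

    // Correction along each principal direction except the last one.
    for (std::size_t k = 0; k + 1 < n_eig; k++) {
        assert(eigenvecs[k].size() == D);
        const double lambda = std::max(var_min, eigenvals[k]);
        double dot_k = 0;
        for (std::size_t i = 0; i < D; i++)
            dot_k += eigenvecs[k][i] * y_centered[i];
        log_density -= 0.5 * (1.0 / lambda - 1.0 / lambda0) * dot_k * dot_k;
    }
    return log_density;
}
```

With a diagonal covariance such as diag(4, 1), the unit axes as eigenvectors, and a zero mean, the result should agree with the textbook formula for a Gaussian with independent coordinates, which makes the sketch easy to check by hand.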

| void PLearn::GaussMix::computeMeansAndCovariances | ( | ) | [protected, virtual] |

Given the posteriors, fill the centers and covariance of each Gaussian.

Definition at line 387 of file GaussMix.cc.

References center, PLearn::columnmatrix(), PLearn::columnSum(), PLearn::computeInputMeanAndCovar(), PLearn::computeInputMeanAndStddev(), PLearn::computeInputMeanAndVariance(), covariance, D, diags, eigenvalues, PLearn::eigenVecOfSymmMat(), eigenvectors, epsilon, error_covariance, PLearn::TVec< T >::hasMissing(), PLearn::TMat< T >::hasMissing(), i, impute_missing, imputed_missing, PLearn::is_missing(), PLearn::TMat< T >::isSymmetric(), j, L, PLearn::TVec< T >::length(), PLearn::mean(), mean_training, n_eigen_computed, nsamples, PLASSERT, PLERROR, PLWARNING, posteriors, PLearn::PLearner::random_gen, sigma, PLearn::sqrt(), PLearn::PLearner::stage, stddev_training, sum_of_posteriors, PLearn::PLearner::train_set, TYPE_DIAGONAL, TYPE_GENERAL, type_id, TYPE_SPHERICAL, and updated_weights.

Referenced by train().

{

//Profiler::start("computeMeansAndCovariances");

VMat weighted_train_set;

Vec sum_columns(L);

Vec storage_D(D);

columnSum(posteriors, sum_columns);

for (int j = 0; j < L; j++) {

// Build the weighted dataset.

if (sum_columns[j] < epsilon)

PLWARNING("In GaussMix::computeMeansAndCovariances - A posterior "

"is almost zero");

PLASSERT( !updated_weights(j).hasMissing() );

VMat weights(columnmatrix(updated_weights(j)));

bool use_impute_missing = impute_missing && stage > 0;

VMat input_data = use_impute_missing ? imputed_missing[j]

: train_set;

/*

input_data->saveAMAT("/u/delallea/tmp/input_data_" +

tostring(this->stage) + ".amat", false, true);

*/

weighted_train_set = new ConcatColumnsVMatrix(

new SubVMatrix(input_data, 0, 0, nsamples, D), weights);

weighted_train_set->defineSizes(D, 0, 1);

Vec center_j = center(j);

if (type_id == TYPE_SPHERICAL) {

computeInputMeanAndVariance(weighted_train_set, center_j,

storage_D);

// TODO Would it be better to use a harmonic mean?

sigma[j] = sqrt(mean(storage_D));

if (isnan(sigma[j]))

PLERROR("In GaussMix::computeMeansAndCovariances - A "

"standard deviation is 'nan'");

} else if (type_id == TYPE_DIAGONAL ) {

computeInputMeanAndStddev(weighted_train_set, center_j,

storage_D);

diags(j) << storage_D;

if (storage_D.hasMissing())

PLERROR("In GaussMix::computeMeansAndCovariances - A "

"standard deviation is 'nan'");

} else {

PLASSERT( type_id == TYPE_GENERAL );

//Profiler::start("computeInputMeanAndCovar");

computeInputMeanAndCovar(weighted_train_set, center_j, covariance);

//Profiler::end("computeInputMeanAndCovar");

if (use_impute_missing) {

// Need to add the extra contributions.

if (sum_of_posteriors[j] > 0) {

error_covariance[j] /= sum_of_posteriors[j];

PLASSERT( covariance.isSymmetric() );

PLASSERT( error_covariance[j].isSymmetric() );

covariance += error_covariance[j];

PLASSERT( covariance.isSymmetric() );

}

}

if (center_j.hasMissing()) {

// There are features missing in all points assigned to this

// Gaussian. We sample a new random value for these features.

for (int i = 0; i < D; i++)

if (is_missing(center_j[i])) {

center_j[i] =

random_gen->gaussian_mu_sigma(mean_training [i],

stddev_training[i]);

#ifdef BOUNDCHECK

// Sanity check: the corresponding row and column in

// the covariance matrix should be missing.

for (int k = 0; k < D; k++) {

if (!is_missing(covariance(i,k)) ||

!is_missing(covariance(k,i)))

PLERROR(

"In GaussMix::computeMeansAndCovariances -"

" Expected a missing value in covariance");

}

#endif

}

}

if (covariance.hasMissing())

// The covariance matrix may have some missing values when not

// enough samples were seen to get simultaneous observations of

// some pairs of features.

// Those missing values are replaced with zero.

for (int i = 0; i < D; i++)

for (int k = i; k < D; k++)

if (is_missing(covariance(i,k))) {

covariance(i,k) = 0;

PLASSERT( is_missing(covariance(k,i)) ||

covariance(k,i) == 0 );

covariance(k,i) = 0;

}

#ifdef BOUNDCHECK

// At this point there should be no more missing values.

if (covariance.hasMissing() || center.hasMissing())

PLERROR("In GaussMix::computeMeansAndCovariances - Found "

"missing values when computing weighted mean and "

"covariance");

#endif

// 'eigenvals' points to the eigenvalues of the j-th Gaussian.

Vec eigenvals = eigenvalues(j);

eigenVecOfSymmMat(covariance, n_eigen_computed, eigenvals,

eigenvectors[j]);

PLASSERT( eigenvals.length() == n_eigen_computed );

// Currently, the returned covariance matrix is not

// guaranteed to be positive semi-definite. Thus we need to ensure

// it is the case, by thresholding the negative eigenvalues to the

// smallest positive one.

for (int i = n_eigen_computed - 1; i >= 0; i--)

if (eigenvals[i] > 0) {

for (int k = i + 1; k < n_eigen_computed; k++)

eigenvals[k] = eigenvals[i];

break;

}

}

}

//Profiler::end("computeMeansAndCovariances");

}
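The final loop of the listing above replaces every non-positive eigenvalue with the smallest positive one. A minimal standalone sketch of that step follows, assuming (as the loop appears to) that the eigenvalues are stored in decreasing order; the function name `clampToSmallestPositive` is hypothetical and `std::vector` stands in for PLearn's `Vec`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn). Given eigenvalues sorted in
// decreasing order, overwrite every entry that follows the last strictly
// positive eigenvalue with that eigenvalue. If no eigenvalue is positive,
// the vector is left unchanged.
void clampToSmallestPositive(std::vector<double>& eigenvals)
{
    // Precondition: non-increasing order.
    assert(std::is_sorted(eigenvals.rbegin(), eigenvals.rend()));

    // Scan from the smallest eigenvalue towards the largest.
    for (std::size_t i = eigenvals.size(); i-- > 0; ) {
        if (eigenvals[i] > 0) {
            for (std::size_t k = i + 1; k < eigenvals.size(); k++)
                eigenvals[k] = eigenvals[i];
            break;
        }
    }
}
```

Note that the comparison is strict, so an eigenvalue equal to zero is also replaced. This keeps later divisions by the eigenvalues well defined.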

Compute the weight of each Gaussian (the coefficient 'alpha').

If a Gaussian's coefficient is too low (i.e. less than 'alpha_min') and 'allow_replace' is set to true, this Gaussian will be removed and replaced by a new one, while this method will return 'true' (otherwise it will return 'false').

Definition at line 2045 of file GaussMix.cc.

References alpha, alpha_min, PLearn::TVec< T >::fill(), i, j, L, nsamples, posteriors, replaceGaussian(), PLearn::PLearner::stage, and stage_replaced.

Referenced by train().

{

bool replaced_gaussian = false;

if (L==1)

alpha[0] = 1;

else {

alpha.fill(0);

for (int i = 0; i < nsamples; i++)

for (int j = 0; j < L; j++)

alpha[j] += posteriors(i,j);

alpha /= real(nsamples);

for (int j = 0; j < L && !replaced_gaussian; j++)

if (alpha[j] < alpha_min && allow_replace

&& stage_replaced[j] != this->stage) {

// alpha[j] is too small! We need to remove this Gaussian from

// the mixture, and find a new (better) one.

replaceGaussian(j);

replaced_gaussian = true;

stage_replaced[j] = this->stage;

}

}

return replaced_gaussian;

}
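The weight update above amounts to averaging each column of the posterior matrix, then looking for the first component whose weight falls below the threshold. A minimal standalone sketch under those assumptions is given below; `mixtureWeights` and `firstUnderweight` are hypothetical names, the nested `std::vector` stands in for PLearn's `Mat`, and the per-stage bookkeeping done with `stage_replaced` is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn). posteriors[i][j] holds
// P(j | s_i) for sample i and Gaussian j. Returns alpha[j], the average
// posterior of Gaussian j over all samples.
std::vector<double> mixtureWeights(
    const std::vector<std::vector<double>>& posteriors, std::size_t L)
{
    assert(L >= 1 && !posteriors.empty());
    if (L == 1)
        return std::vector<double>(1, 1.0);  // A single Gaussian takes all the weight.

    std::vector<double> alpha(L, 0.0);
    for (const std::vector<double>& row : posteriors) {
        assert(row.size() == L);
        for (std::size_t j = 0; j < L; j++)
            alpha[j] += row[j];
    }
    for (std::size_t j = 0; j < L; j++)
        alpha[j] /= double(posteriors.size());
    return alpha;
}

// Hypothetical helper: index of the first weight strictly below alpha_min,
// or -1 if every weight is large enough.
int firstUnderweight(const std::vector<double>& alpha, double alpha_min)
{
    for (std::size_t j = 0; j < alpha.size(); j++)
        if (alpha[j] < alpha_min)
            return int(j);
    return -1;
}
```

In the listing, a component flagged this way is handed to `replaceGaussian`, and at most one component is replaced per call.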

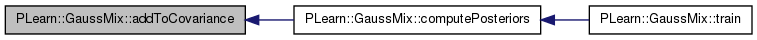

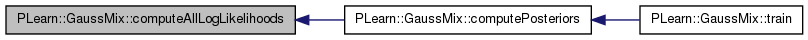

| void PLearn::GaussMix::computePosteriors | ( | ) | [protected, virtual] |

Compute posteriors P(j | s_i) for each sample point and each Gaussian.

Note that actual weights (stored in 'updated_weights') will not be updated until you explicitly call 'updateSampleWeights'.

Definition at line 1918 of file GaussMix.cc.

References addToCovariance(), alpha, clust_imputed_missing, computeAllLogLikelihoods(), computeLogLikelihood(), current_cluster, current_training_sample, D, efficient_missing, error_covariance, PLearn::exp(), PLearn::TVec< T >::fill(), PLearn::VMat::getSubRow(), H3_inverse, PLearn::TVec< T >::hasMissing(), i, impute_missing, imputed_missing, PLearn::is_missing(), j, L, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), log_likelihood_post, log_likelihood_post_clust, PLearn::logadd(), missing_template, nsamples, pl_log, PLASSERT, posteriors, previous_training_sample, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sample_row, spanning_path, sum_of_posteriors, and PLearn::PLearner::train_set.

Referenced by train().

{

//Profiler::start("computePosteriors");

sample_row.resize(D);

if (impute_missing) {

sum_of_posteriors.resize(L); // TODO Do that in resize method.

sum_of_posteriors.fill(0);

}

log_likelihood_post.resize(L);

if (impute_missing)

// Clear the additional 'error_covariance' matrix.

for (int j = 0; j < L; j++)

error_covariance[j].fill(0);

if (efficient_missing == 1 || efficient_missing == 3) {

// Loop over all clusters.

for (int k = 0; k < missing_template.length(); k++) {

const TVec<int>& samples_clust = spanning_path[k];

int n_samp = samples_clust.length();

log_likelihood_post_clust.resize(n_samp, L);

current_cluster = k;

if (impute_missing)

for (int j = 0; j < L; j++)

clust_imputed_missing[j].resize(n_samp, D);

for (int j = 0; j < L; j++) {

// For each Gaussian, go through all samples in the cluster.

previous_training_sample = -1;

for (int i = 0; i < samples_clust.length(); i++) {

int s = samples_clust[i];

current_training_sample = s;

train_set->getSubRow(s, 0, sample_row);

log_likelihood_post_clust(i, j) =

computeLogLikelihood(sample_row, j) + pl_log(alpha[j]);

previous_training_sample = current_training_sample;

current_training_sample = -1;

}

}

previous_training_sample = -2;

// Get the posteriors for all samples in the cluster.

for (int i = 0; i < samples_clust.length(); i++) {

real log_sum_likelihood = logadd(log_likelihood_post_clust(i));

int s = samples_clust[i];

for (int j = 0; j < L; j++) {

real post = exp(log_likelihood_post_clust(i, j) -

log_sum_likelihood);

posteriors(s, j) = post;

if (impute_missing)

sum_of_posteriors[j] += post;

}

}

if (!impute_missing)

continue;

// We should now be ready to impute missing values.

for (int i = 0; i < samples_clust.length(); i++) {

int s = samples_clust[i];

for (int j = 0; j < L; j++) {

PLASSERT( !clust_imputed_missing[j](i).hasMissing() );

// TODO We are most likely wasting memory here.

imputed_missing[j]->putRow(s, clust_imputed_missing[j](i));

}

}

// If the 'impute_missing' method is used, we now need to compute

// the extra contribution to the covariance matrix.

for (int j = 0; j < L; j++) {

// For each Gaussian, go through all samples in the cluster.

previous_training_sample = -1;

for (int i = 0; i < samples_clust.length(); i++) {

int s = samples_clust[i];

current_training_sample = s;

train_set->getSubRow(s, 0, sample_row);

addToCovariance(sample_row, j, error_covariance[j],

posteriors(s, j));

previous_training_sample = current_training_sample;

current_training_sample = -1;

}

}

previous_training_sample = -2;

}

} else {

previous_training_sample = -1;

for (int i = 0; i < nsamples; i++) {

train_set->getSubRow(i, 0, sample_row);

// First we need to compute the likelihood P(s_i | j).

current_training_sample = i;

computeAllLogLikelihoods(sample_row, log_likelihood_post);

PLASSERT( !log_likelihood_post.hasMissing() );

for (int j = 0; j < L; j++)

log_likelihood_post[j] += pl_log(alpha[j]);

real log_sum_likelihood = logadd(log_likelihood_post);

for (int j = 0; j < L; j++) {

// Compute the posterior

// P(j | s_i) = P(s_i | j) * alpha_j / (sum_k P(s_i | k) * alpha_k)

real post = exp(log_likelihood_post[j] - log_sum_likelihood);

posteriors(i,j) = post;

if (impute_missing)

sum_of_posteriors[j] += post;

}

// Add contribution to the covariance matrix if needed.

if (impute_missing) {

for (int j = 0; j < L; j++) {

real post = posteriors(i,j);

int k_count = 0;

for (int k = 0; k < sample_row.length(); k++)

if (is_missing(sample_row[k])) {

int l_count = 0;

for (int l = 0; l < sample_row.length(); l++)

if (is_missing(sample_row[l])) {

error_covariance[j](k, l) +=

post * H3_inverse[j](k_count, l_count);

l_count++;

}

k_count++;

}

}

int dummy_test = 0;

dummy_test++;

}

previous_training_sample = current_training_sample;

current_training_sample = -1;

}

previous_training_sample = -2;

}

//Profiler::end("computePosteriors");

}
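The core of the non-clustered branch above is a normalisation carried out in log space: add log(alpha[j]) to each log-likelihood, subtract the log of the sum, and exponentiate. The following sketch shows that step for a single sample. `logSumExp` stands in for the role `logadd` plays in the listing, and both function names, as well as the use of `std::vector<double>`, are assumptions made for illustration rather than PLearn's API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable log(sum_j exp(v[j])). Assumes 'v' is not empty.
double logSumExp(const std::vector<double>& v)
{
    const double m = *std::max_element(v.begin(), v.end());
    if (std::isinf(m))
        return m;  // avoids computing inf - inf below
    double sum = 0.0;
    for (double x : v)
        sum += std::exp(x - m);
    return m + std::log(sum);
}

// Hypothetical helper: given log P(s_i | j) and the mixture weights alpha,
// returns the posteriors P(j | s_i) for one sample. Assumes both vectors
// have the same length and at least one term is finite.
std::vector<double> posteriorsFromLogLikelihoods(
    const std::vector<double>& log_lik, const std::vector<double>& alpha)
{
    std::vector<double> log_joint(log_lik.size());
    for (std::size_t j = 0; j < log_lik.size(); ++j)
        log_joint[j] = log_lik[j] + std::log(alpha[j]);
    const double log_norm = logSumExp(log_joint);
    std::vector<double> post(log_lik.size());
    for (std::size_t j = 0; j < log_lik.size(); ++j)
        post[j] = std::exp(log_joint[j] - log_norm);
    return post;
}
```

Working in log space matters because the likelihoods themselves can underflow to zero. With log-likelihoods near -1000, exponentiating directly would yield 0/0, whereas the subtraction above still produces well-defined posteriors that sum to one.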

| void PLearn::GaussMix::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PDistribution.

Reimplemented in PLearn::ManifoldParzen2, PLearn::ParzenWindow, and PLearn::RandomGaussMix.

Definition at line 138 of file GaussMix.cc.

References alpha, alpha_min, PLearn::OptionBase::buildoption, center, D, PLearn::declareOption(), PLearn::PDistribution::declareOptions(), diags, efficient_k_median, efficient_k_median_iter, efficient_missing, eigenvalues, eigenvectors, epsilon, f_eigen, impute_missing, kmeans_iterations, L, PLearn::OptionBase::learntoption, max_samples_in_cluster, min_samples_in_cluster, n_eigen, n_eigen_computed, sigma, sigma_min, and type.

Referenced by PLearn::ParzenWindow::declareOptions(), and PLearn::ManifoldParzen2::declareOptions().

{

// Build options.

declareOption(ol, "L", &GaussMix::L, OptionBase::buildoption,

"Number of Gaussians in the mixture.");

declareOption(ol, "type", &GaussMix::type, OptionBase::buildoption,

"This is the type of covariance matrix for each Gaussian:\n"

" - spherical : spherical covariance matrix sigma^2 * I\n"

" - diagonal : diagonal covariance matrix, given by standard\n"

" deviations 'diags'\n"

" - general : unconstrained covariance matrix (defined by its\n"

" eigenvectors)\n");

declareOption(ol, "n_eigen", &GaussMix::n_eigen, OptionBase::buildoption,

"If type is 'general', the number of eigenvectors used to compute\n"

"the covariance matrix. The remaining eigenvectors will be given an\n"

"eigenvalue equal to the next highest eigenvalue. If set to -1, all\n"

"eigenvectors will be kept.");

declareOption(ol, "f_eigen", &GaussMix::f_eigen, OptionBase::buildoption,

"If == 0, is ignored. Otherwise, it must be a fraction representing\n"

"the fraction of eigenvectors that are kept (this value overrides\n"

"any setting of the 'n_eigen' option).");

declareOption(ol, "efficient_missing", &GaussMix::efficient_missing,

OptionBase::buildoption,

"If not 0, computations with missing values will be more efficient:\n"

"- 1: most efficient method\n"

"- 2: less naive method than 0, where we compute the matrices\n"

" only once per missing pattern (not as good as 1)\n"

"- 3: same as 1, but using inverse variance lemma instead of\n"

" Cholesky (could be more efficient after all)");

declareOption(ol, "efficient_k_median", &GaussMix::efficient_k_median,

OptionBase::buildoption,

"Starting number of clusters used.");

declareOption(ol, "max_samples_in_cluster",

&GaussMix::max_samples_in_cluster,

OptionBase::buildoption,

"Maximum number of samples allowed in each cluster (ignored if -1).\n"

"More than 'efficient_k_median' clusters may be used in order to\n"

"comply with this constraint.");

declareOption(ol, "min_samples_in_cluster",

&GaussMix::min_samples_in_cluster,

OptionBase::buildoption,

"Minimum number of samples allowed in each cluster.\n"

"Less than 'efficient_k_median' clusters may be used in order to\n"

"comply with this constraint.");

declareOption(ol, "efficient_k_median_iter",

&GaussMix::efficient_k_median_iter,

OptionBase::buildoption,

"Maximum number of iterations in k-median.");

declareOption(ol, "impute_missing", &GaussMix::impute_missing,

OptionBase::buildoption,

"If true, missing values will be imputed their conditional mean when\n"

"computing the covariance matrix. Note that even if the current\n"