PLearn 0.1

Feedforward Neural Network for symbolic data represented using features.

#include <FeatureSetSequentialCRF.h>

Public Member Functions | |

| FeatureSetSequentialCRF () | |

| virtual | ~FeatureSetSequentialCRF () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual FeatureSetSequentialCRF * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | forget () |

| *** SUBCLASS WRITING: *** | |

| virtual int | outputsize () const |

| SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

| virtual TVec< string > | getTrainCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual TVec< string > | getTestCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual void | train () |

| *** SUBCLASS WRITING: *** | |

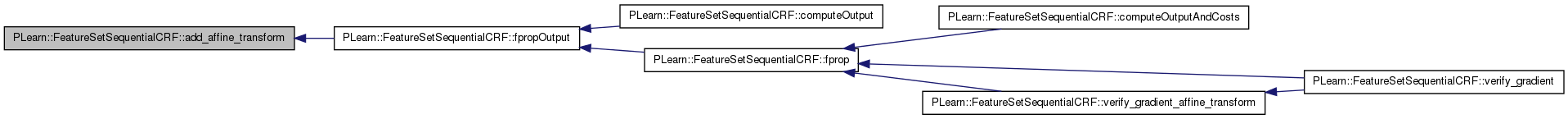

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| *** SUBCLASS WRITING: *** | |

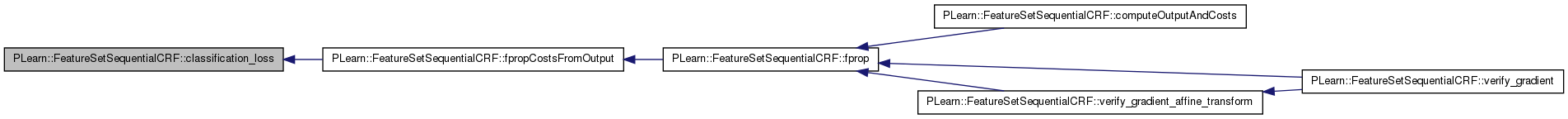

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| *** SUBCLASS WRITING: *** | |

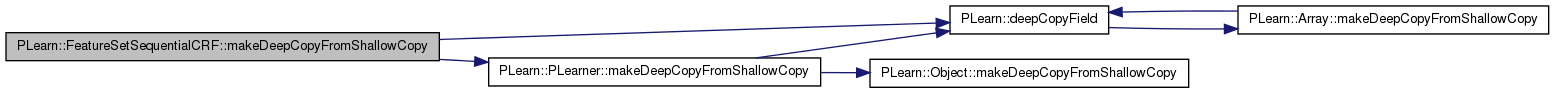

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Mat | w1 |

| Weights of first hidden layer. | |

| Mat | gradient_w1 |

| Gradient on weights of first hidden layer. | |

| Vec | b1 |

| Bias of first hidden layer. | |

| Vec | gradient_b1 |

| Gradient on bias of first hidden layer. | |

| Mat | w2 |

| Weights of second hidden layer. | |

| Mat | gradient_w2 |

| Gradient on weights of second hidden layer. | |

| Vec | b2 |

| Bias of second hidden layer. | |

| Vec | gradient_b2 |

| Gradient on bias of second hidden layer. | |

| Mat | wout |

| Weights of output layer. | |

| Mat | gradient_wout |

| Gradient on weights of output layer. | |

| Vec | bout |

| Bias of output layer. | |

| Vec | gradient_bout |

| Gradient on bias of output layer. | |

| Mat | direct_wout |

| Direct input to output weights. | |

| Mat | gradient_direct_wout |

| Gradient on direct input to output weights. | |

| Vec | direct_bout |

| Direct input to output bias (empty, since no bias is used) | |

| Vec | gradient_direct_bout |

| Gradient on direct input to output bias (empty, since no bias is used) | |

| Mat | wout_dist_rep |

| Weights of output layer for distributed representation predictor. | |

| Mat | gradient_wout_dist_rep |

| Gradient on weights of output layer for distributed representation predictor. | |

| Vec | bout_dist_rep |

| Bias of output layer for distributed representation predictor. | |

| Vec | gradient_bout_dist_rep |

| Gradient on bias of output layer for distributed representation predictor. | |

| int | nhidden |

| Number of hidden units in first hidden layer (default:0) | |

| int | nhidden2 |

| Number of hidden units in second hidden layer (default:0) | |

| real | weight_decay |

| Weight decay (default:0) | |

| real | bias_decay |

| Bias decay (default:0) | |

| real | layer1_weight_decay |

| Weight decay for weights from input layer to first hidden layer (default:0) | |

| real | layer1_bias_decay |

| Bias decay for weights from input layer to first hidden layer (default:0) | |

| real | layer2_weight_decay |

| Weight decay for weights from first hidden layer to second hidden layer (default:0) | |

| real | layer2_bias_decay |

| Bias decay for weights from first hidden layer to second hidden layer (default:0) | |

| real | output_layer_weight_decay |

| Weight decay for weights from last hidden layer to output layer (default:0) | |

| real | output_layer_bias_decay |

| Bias decay for weights from last hidden layer to output layer (default:0) | |

| real | direct_in_to_out_weight_decay |

| Weight decay for weights from input directly to output layer (default:0) | |

| real | output_layer_dist_rep_weight_decay |

| Weight decay for weights from last hidden layer to output layer of distributed representation predictor (default:0) | |

| real | output_layer_dist_rep_bias_decay |

| Bias decay for weights from last hidden layer to output layer of distributed representation predictor (default:0) | |

| real | margin |

| Margin requirement, used only with the margin_perceptron_cost cost function (default:1) | |

| bool | fixed_output_weights |

| If true then the output weights are not learned. | |

| bool | direct_in_to_out |

| If true then direct input to output weights will be added (if nhidden > 0) | |

| string | penalty_type |

| Penalty to use on the weights (for weight and bias decay) (default:"L2_square") | |

| string | output_transfer_func |

| Transfer function to use for output layer (default:"") | |

| string | hidden_transfer_func |

| Transfer function to use for hidden units (default:"tanh") tanh, sigmoid, softplus, softmax, etc... | |

| TVec< string > | cost_funcs |

| Cost functions. | |

| real | start_learning_rate |

| Start learning rate of gradient descent. | |

| real | decrease_constant |

| Decrease constant of gradient descent. | |

| int | batch_size |

| Number of samples to use to estimate gradient before an update. | |

| bool | stochastic_gradient_descent_speedup |

| Indication that a trick to speed up stochastic gradient descent should be used. | |

| string | initialization_method |

| Method of initialization for neural network's weights. | |

| int | dist_rep_dim |

| Dimensionality (number of components) of distributed representations. If <= 0, then distributed representations will not be used. | |

| bool | possible_targets_vary |

| Indication that the set of possible targets varies from one input vector to another. | |

| TVec< PP< FeatureSet > > | feat_sets |

| FeatureSets to apply on input. | |

| bool | use_input_as_feature |

| Indication that the input IDs should be used as the feature ID. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

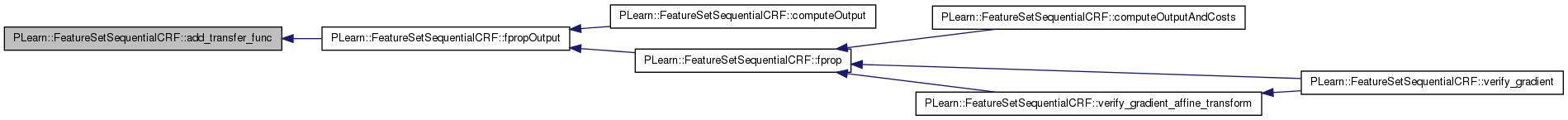

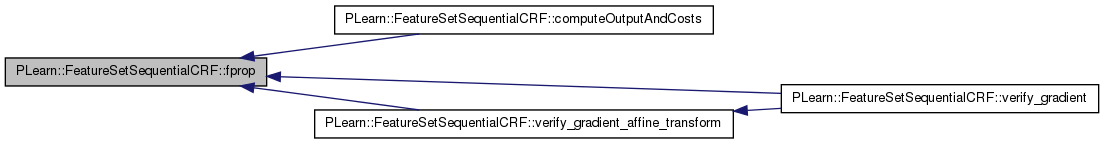

| void | fprop (const Vec &inputv, Vec &outputv, const Vec &targetv, Vec &costsv, real sampleweight=1) const |

| Forward propagation in the network. | |

| void | fpropOutput (const Vec &inputv, Vec &outputv) const |

| Forward propagation to compute the output. | |

| void | fpropCostsFromOutput (const Vec &inputv, const Vec &outputv, const Vec &targetv, Vec &costsv, real sampleweight=1) const |

| Forward propagation to compute the costs from the output. | |

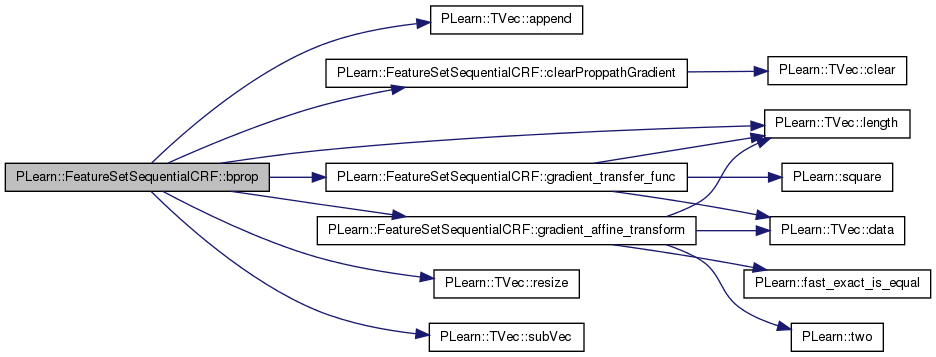

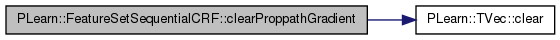

| void | bprop (Vec &inputv, Vec &outputv, Vec &targetv, Vec &costsv, real learning_rate, real sampleweight=1) |

| Backward propagation in the network, which assumes that a forward propagation has been done before. | |

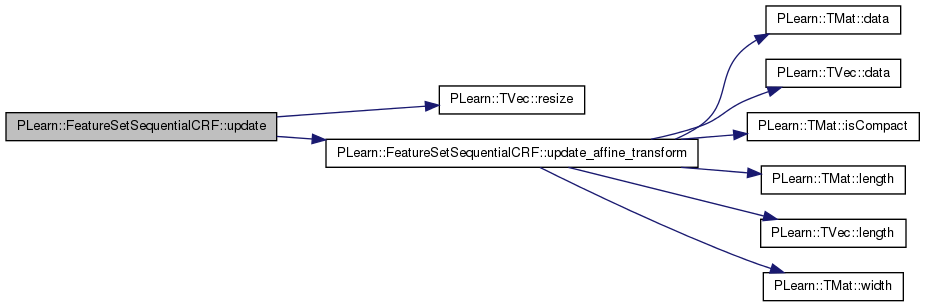

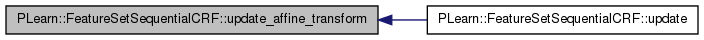

| void | update () |

| Update network's parameters. | |

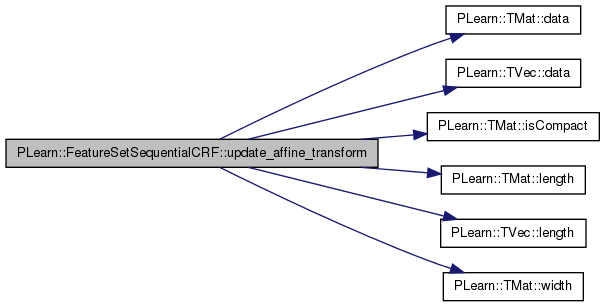

| void | update_affine_transform (Vec input, Mat weights, Vec bias, Mat gweights, Vec gbias, bool input_is_sparse, bool output_is_sparse, Vec output_indices) |

| Update affine transformation's parameters. | |

| void | clearProppathGradient () |

| Clear network's propagation path gradient fields. Assumes fprop and bprop have been called before. | |

| virtual void | initializeParams (bool set_seed=true) |

| Initialize the parameters. | |

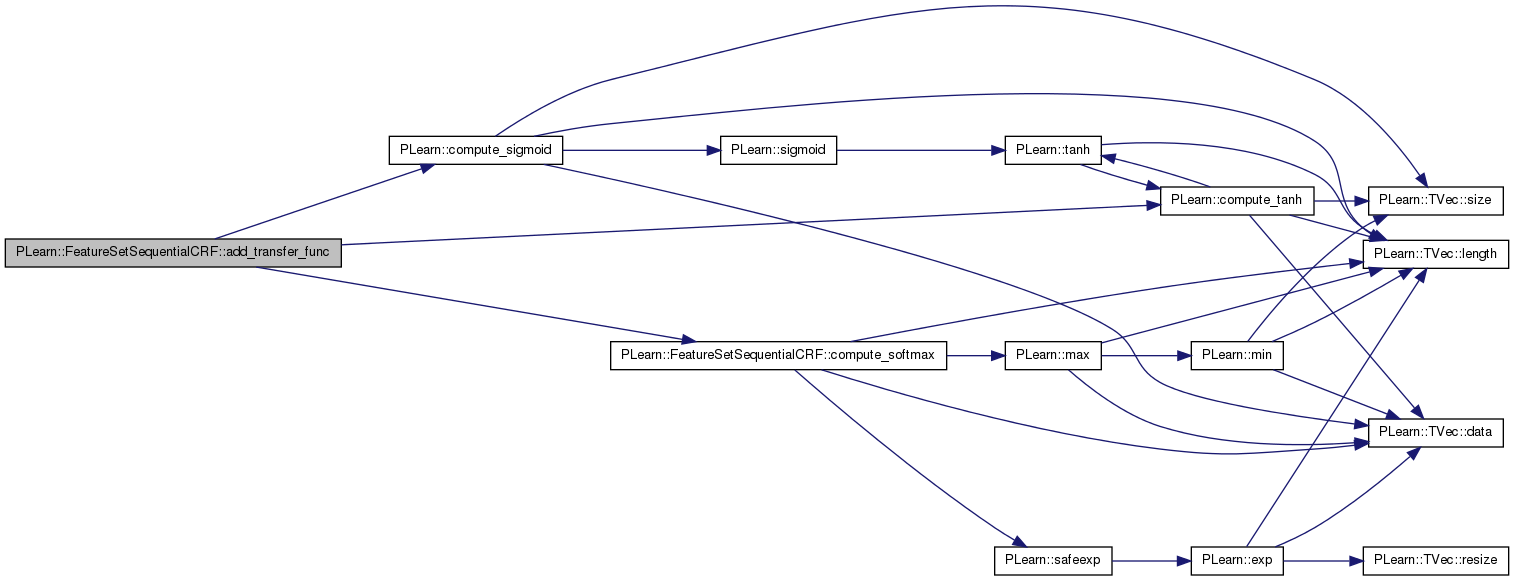

| void | add_transfer_func (const Vec &input, string transfer_func="default") const |

| Computes the result of the application of the given transfer function on the input vector. | |

| void | gradient_transfer_func (Vec &output, Vec &gradient_input, Vec &gradient_output, string transfer_func="default", int nll_softmax_speed_up_target=-1) |

| Computes the gradient through the given activation function, the output value and the initial gradient on that output (i.e. | |

| void | add_affine_transform (Vec input, Mat weights, Vec bias, Vec output, bool input_is_sparse, bool output_is_sparse, Vec output_indices=Vec(0)) const |

| Applies affine transform on input using provided weights and bias. | |

| void | gradient_affine_transform (Vec input, Mat weights, Vec bias, Vec ginput, Mat gweights, Vec gbias, Vec goutput, bool input_is_sparse, bool output_is_sparse, real learning_rate, real weight_decay, real bias_decay, Vec output_indices=Vec(0)) |

| Propagate gradient through affine transform on input using provided weights and bias. | |

| void | gradient_penalty (Vec input, Mat weights, Vec bias, Mat gweights, Vec gbias, bool input_is_sparse, bool output_is_sparse, real learning_rate, real weight_decay, real bias_decay, Vec output_indices=Vec(0)) |

| Propagate penalty gradient through weights and bias, scaled by -learning rate. | |

| void | fillWeights (const Mat &weights) |

| Fill a matrix of weights according to the 'initialization_method' specified. | |

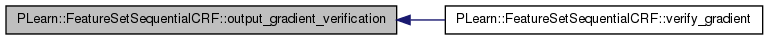

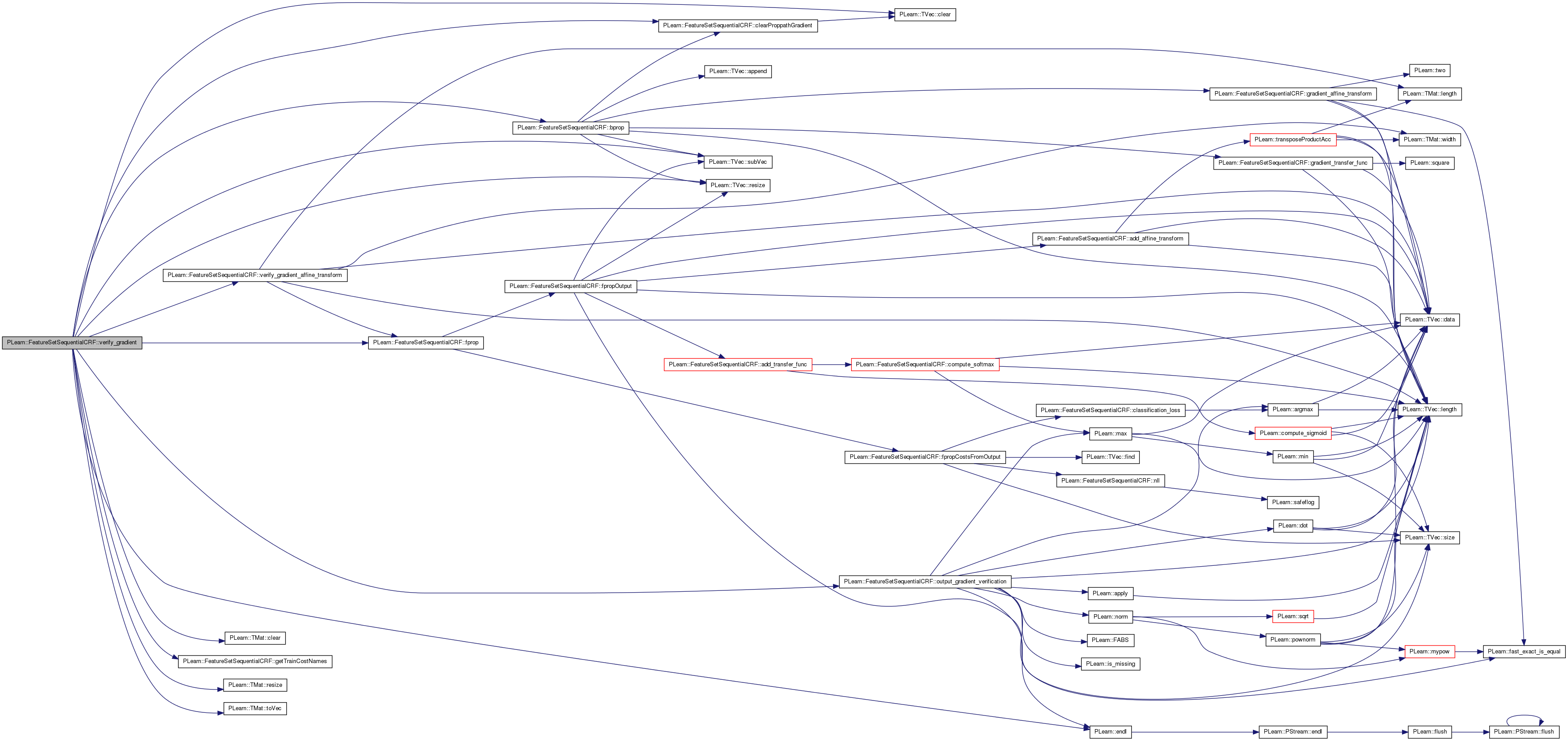

| void | verify_gradient (Vec &input, Vec target, real step) |

| Verify gradient of propagation path. | |

| void | verify_gradient_affine_transform (Vec global_input, Vec &global_output, Vec &global_targetv, Vec &global_costs, real sampleweight, Vec input, Mat weights, Vec bias, Mat est_gweights, Vec est_gbias, bool input_is_sparse, bool output_is_sparse, real step, Vec output_indices=Vec(0)) const |

| Verify gradient of affine_transform parameters. | |

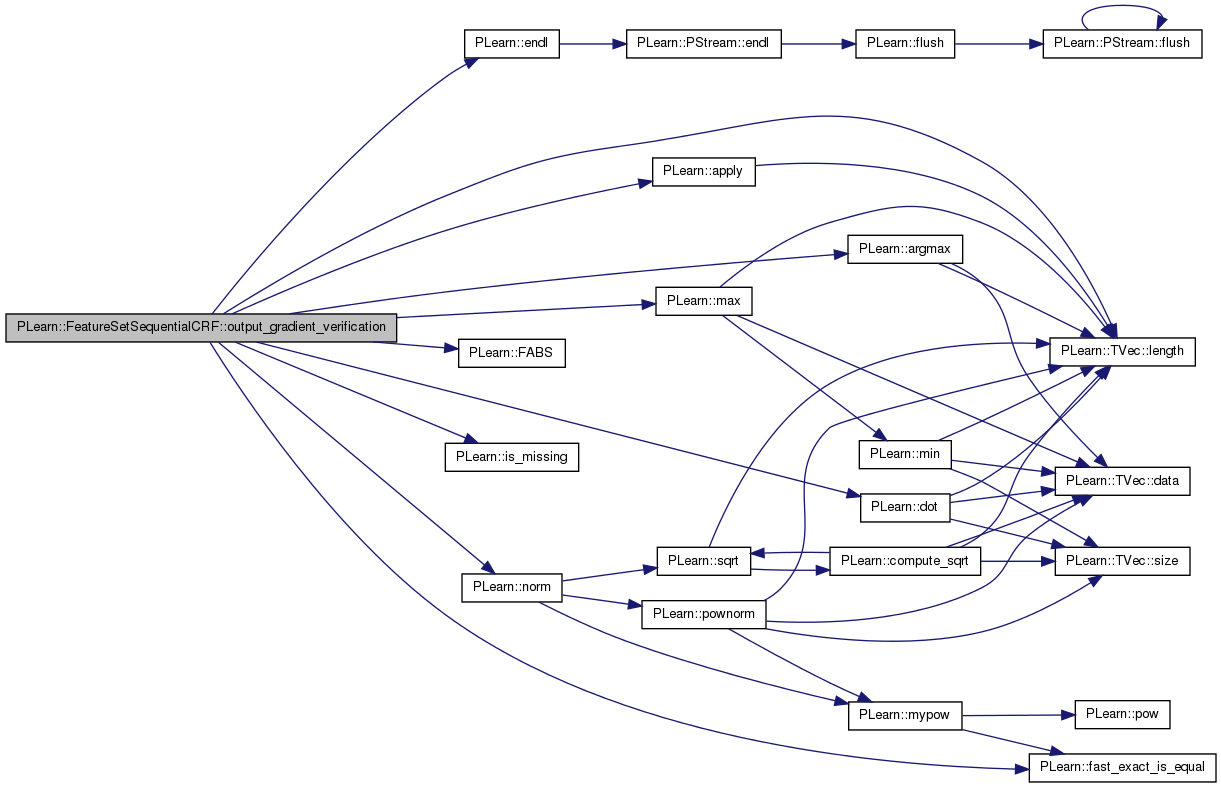

| void | output_gradient_verification (Vec grad, Vec est_grad) |

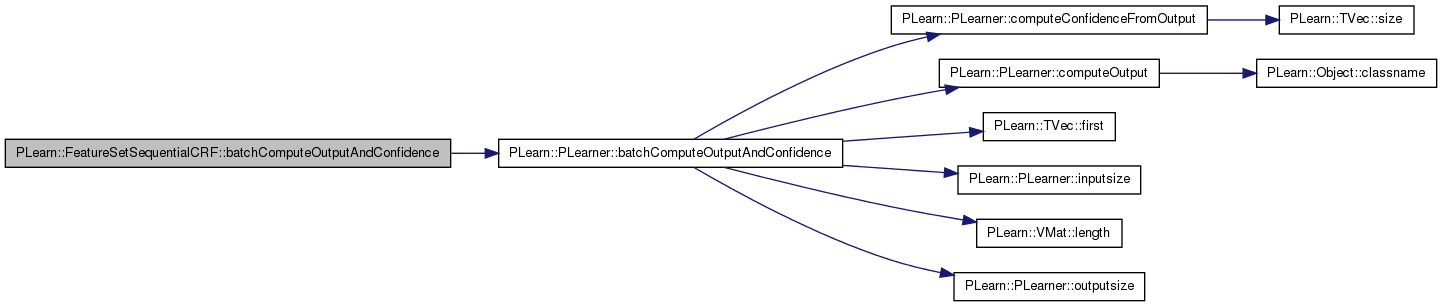

| void | batchComputeOutputAndConfidence (VMat inputs, real probability, VMat outputs_and_confidence) const |

| Changes the reference_set and then calls the parent class's method. | |

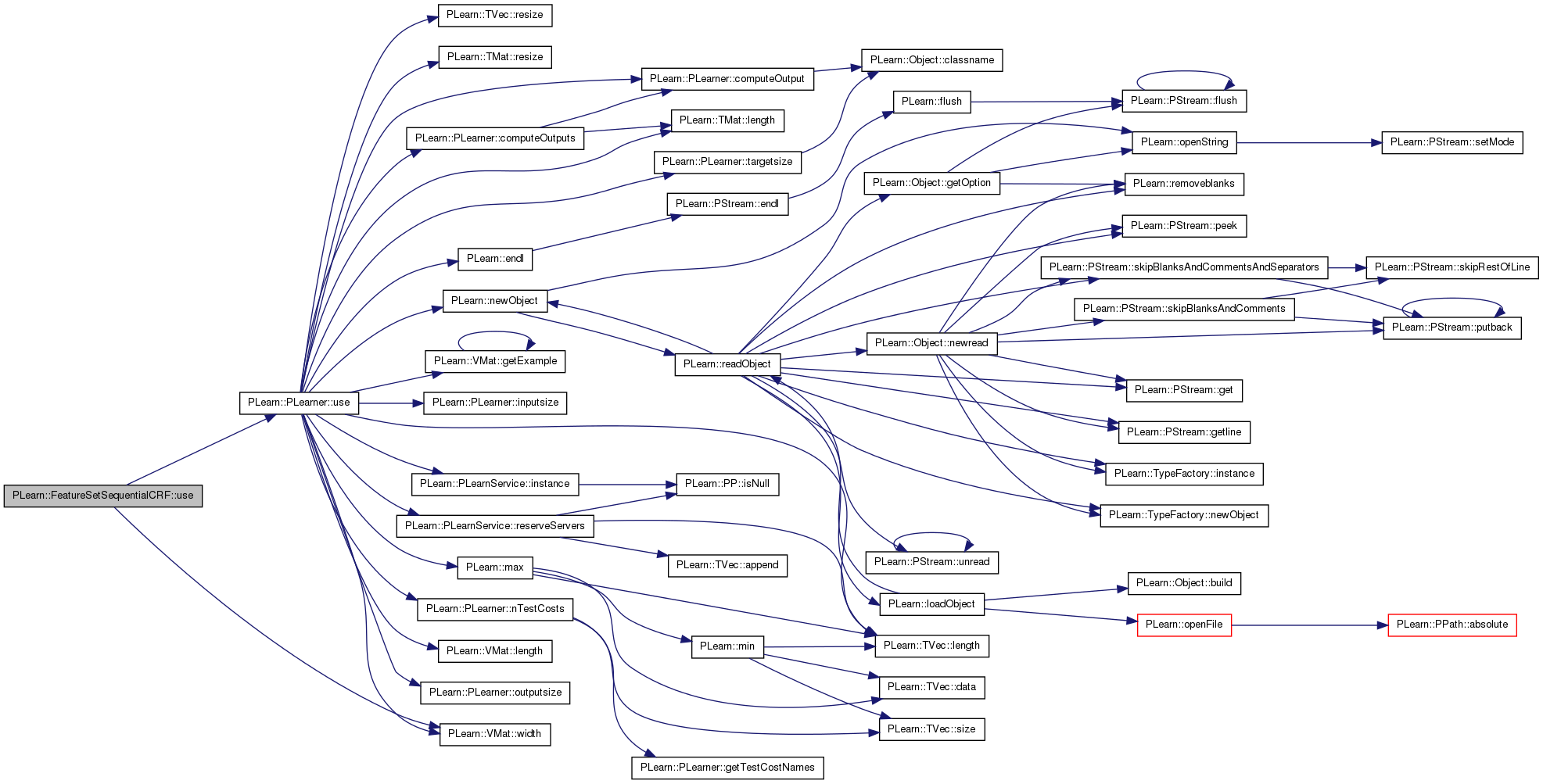

| virtual void | use (VMat testset, VMat outputs) const |

| Changes the reference_set and then calls the parent class's method. | |

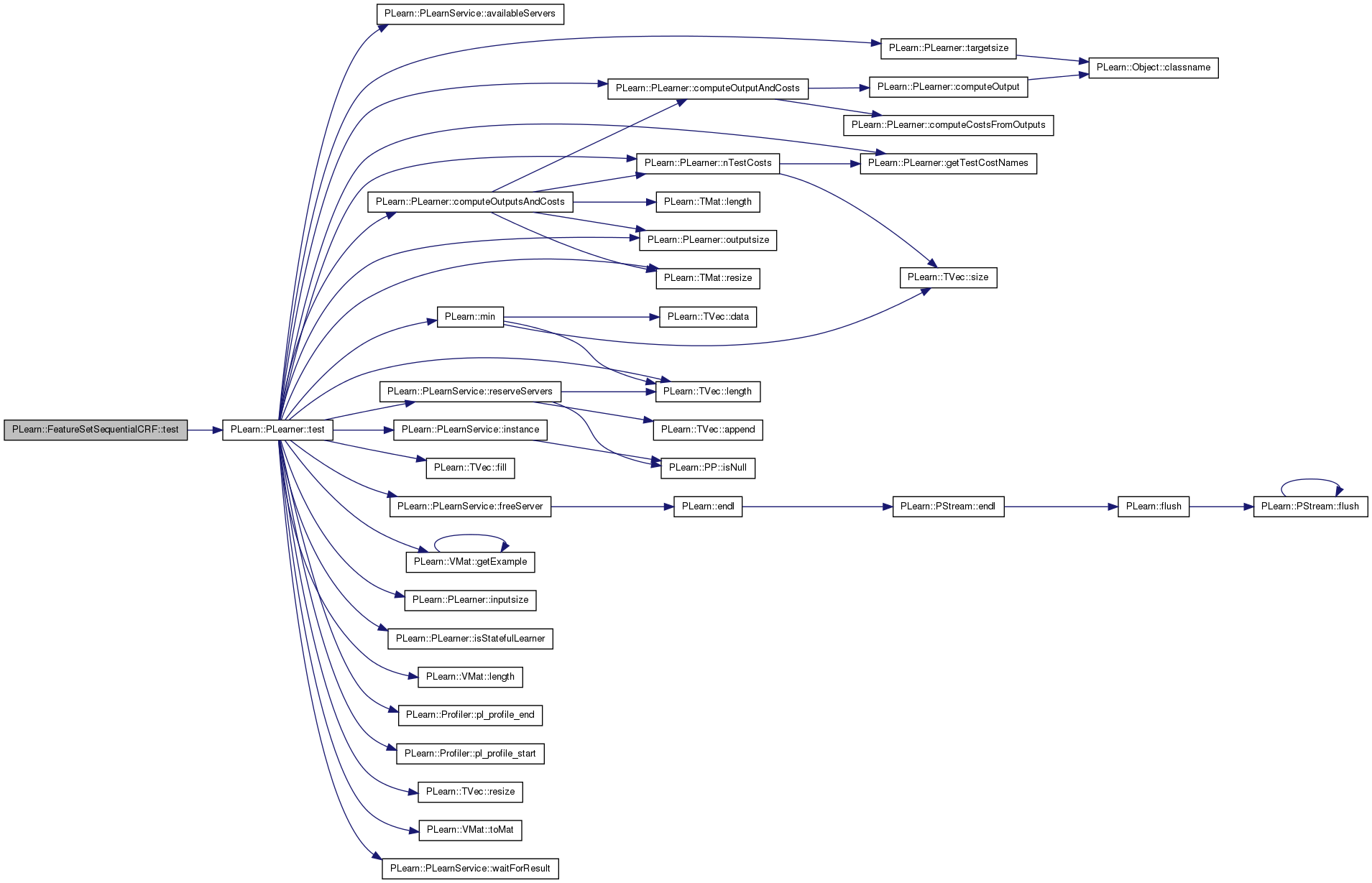

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Changes the reference_set and then calls the parent class's method. | |

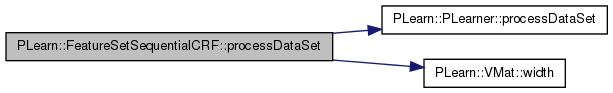

| virtual VMat | processDataSet (VMat dataset) const |

| Changes the reference_set and then calls the parent class's method. | |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| int | total_output_size |

| Total output size. | |

| int | total_updates |

| Total updates so far. | |

| int | n_feat_sets |

| Number of feature sets. | |

| int | total_feats_per_token |

| Number of features per input token for which a distributed representation is computed. | |

| int | reind_target |

| Reindexed target. | |

| Vec | feat_input |

| Feature input. | |

| Vec | gradient_feat_input |

| Gradient on feature input (useless for now) | |

| Vec | nnet_input |

| Input vector to NNet (after mapping into distributed representations) | |

| Vec | gradient_nnet_input |

| Gradient for vector to NNet. | |

| Vec | hiddenv |

| First hidden layer value. | |

| Vec | gradient_hiddenv |

| Gradient of first hidden layer. | |

| Vec | gradient_act_hiddenv |

| Gradient through first hidden layer activation. | |

| Vec | hidden2v |

| Second hidden layer value. | |

| Vec | gradient_hidden2v |

| Gradient of second hidden layer. | |

| Vec | gradient_act_hidden2v |

| Gradient through second hidden layer activation. | |

| Vec | gradient_outputv |

| Gradient on output. | |

| Vec | gradient_act_outputv |

| Gradient through output layer activation. | |

| PP< PRandom > | rgen |

| Random number generator for parameters initialization. | |

| Vec | feats_since_last_update |

| Features seen in input since last update. | |

| Vec | target_values_since_last_update |

| Possible target values seen since last update. | |

| VMat | val_string_reference_set |

| VMatrix used to get values to string mapping for input tokens. | |

| VMat | target_values_reference_set |

| Possible target values mapping. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| **** SUBCLASS WRITING: **** | |

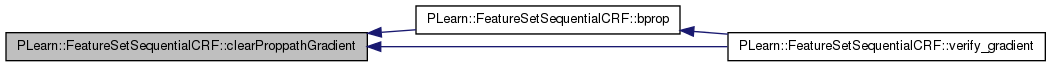

| void | compute_softmax (const Vec &x, const Vec &y) const |

| Computes the softmax of x and stores it in y. The implementation allows aliasing: compute_softmax(x,x) replaces x with its softmax value. | |

| real | nll (const Vec &outputv, int target) const |

| Negative log-likelihood loss. | |

| real | classification_loss (const Vec &outputv, int target) const |

| Classification loss. | |

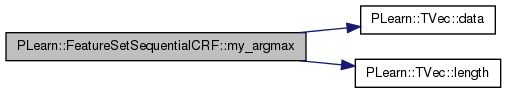

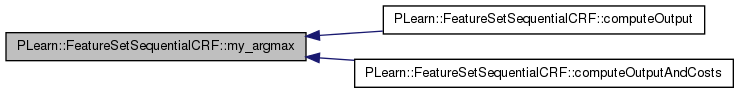

| int | my_argmax (const Vec &vec, int default_compare=0) const |

| Argmax function that lets you define the default (first) component used for comparisons. | |

Private Attributes | |

| Vec | target_values |

| Vector of possible target values. | |

| Vec | output_comp |

| Vector for output computations. | |

| Vec | row |

| Row vector. | |

| Vec | last_layer |

| Last layer of network (pointer to either nnet_input, hiddenv or hidden2v) | |

| Vec | gradient_last_layer |

| Gradient of last layer in back propagation. | |

| TVec< TVec< int > > | feats |

| Features for each token. | |

| Vec | gradient |

| Temporary computation variables, used in fprop() and bprop(). Care must be taken when using these variables, since they are used by many different functions. | |

| string | str |

| real * | pval1 |

| real * | pval2 |

| real * | pval3 |

| real * | pval4 |

| real * | pval5 |

| real | val |

| real | val2 |

| real | grad |

| int | offset |

| int | ni |

| int | nj |

| int | nk |

| int | id |

| int | nfeats |

| int | ifeats |

| int * | f |

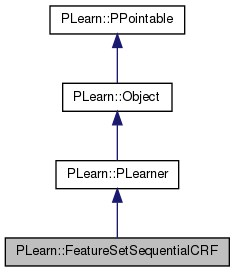

Feedforward Neural Network for symbolic data represented using features.

Inspired by the NNet class, FeatureSetSequentialCRF is an extension that deals with feature representations of symbolic data. It can also learn distributed representations for each symbolic input token. The possible targets are defined by the VMatrix's getValues() function.

Definition at line 55 of file FeatureSetSequentialCRF.h.

typedef PLearner PLearn::FeatureSetSequentialCRF::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 60 of file FeatureSetSequentialCRF.h.

| PLearn::FeatureSetSequentialCRF::FeatureSetSequentialCRF | ( | ) |

Definition at line 53 of file FeatureSetSequentialCRF.cc.

: rgen(new PRandom()), nhidden(0), nhidden2(0), weight_decay(0), bias_decay(0), layer1_weight_decay(0), layer1_bias_decay(0), layer2_weight_decay(0), layer2_bias_decay(0), output_layer_weight_decay(0), output_layer_bias_decay(0), direct_in_to_out_weight_decay(0), output_layer_dist_rep_weight_decay(0), output_layer_dist_rep_bias_decay(0), fixed_output_weights(0), direct_in_to_out(0), penalty_type("L2_square"), output_transfer_func(""), hidden_transfer_func("tanh"), start_learning_rate(0.01), decrease_constant(0), batch_size(1), stochastic_gradient_descent_speedup(true), initialization_method("uniform_linear"), dist_rep_dim(-1), possible_targets_vary(false) {}

| PLearn::FeatureSetSequentialCRF::~FeatureSetSequentialCRF | ( | ) | [virtual] |

Definition at line 83 of file FeatureSetSequentialCRF.cc.

    {
    }

| string PLearn::FeatureSetSequentialCRF::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| OptionList & PLearn::FeatureSetSequentialCRF::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| RemoteMethodMap & PLearn::FeatureSetSequentialCRF::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

Reimplemented from PLearn::PLearner.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| Object * PLearn::FeatureSetSequentialCRF::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| StaticInitializer FeatureSetSequentialCRF::_static_initializer_ & PLearn::FeatureSetSequentialCRF::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

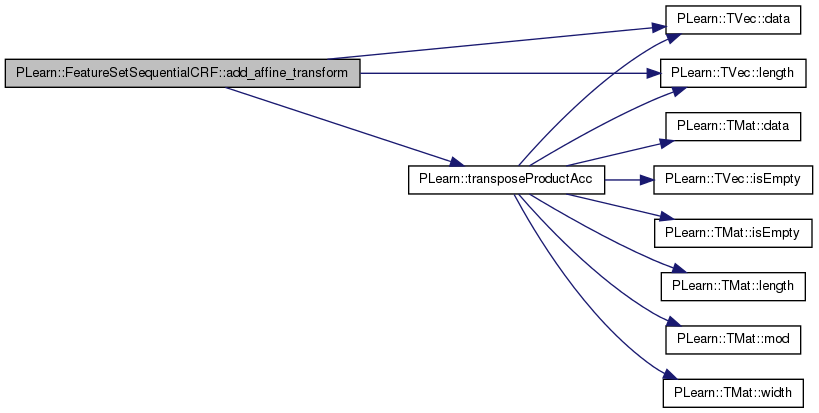

| void PLearn::FeatureSetSequentialCRF::add_affine_transform | ( | Vec | input, |

| Mat | weights, | ||

| Vec | bias, | ||

| Vec | output, | ||

| bool | input_is_sparse, | ||

| bool | output_is_sparse, | ||

| Vec | output_indices = Vec(0) |

||

| ) | const [protected] |

Applies affine transform on input using provided weights and bias.

Whether the input and the output are sparse must be specified through input_is_sparse and output_is_sparse. If bias.length() == 0, then the initial value of output is used as the bias.

Definition at line 1030 of file FeatureSetSequentialCRF.cc.

References PLearn::TVec< T >::data(), i, j, PLearn::TVec< T >::length(), ni, nj, pval1, pval2, pval3, and PLearn::transposeProductAcc().

Referenced by fpropOutput().

    {
        // Bias
        if(bias.length() != 0)
        {
            if(output_is_sparse)
            {
                pval1 = output.data();
                pval2 = bias.data();
                pval3 = output_indices.data();
                ni = output.length();
                for(int i=0; i<ni; i++)
                    *pval1++ = pval2[(int)*pval3++];
            }
            else
            {
                pval1 = output.data();
                pval2 = bias.data();
                ni = output.length();
                for(int i=0; i<ni; i++)
                    *pval1++ = *pval2++;
            }
        }
        // Weights
        if(!input_is_sparse && !output_is_sparse)
        {
            transposeProductAcc(output,weights,input);
        }
        else if(!input_is_sparse && output_is_sparse)
        {
            ni = output.length();
            nj = input.length();
            pval1 = output.data();
            pval3 = output_indices.data();
            for(int i=0; i<ni; i++)
            {
                pval2 = input.data();
                for(int j=0; j<nj; j++)
                    *pval1 += (*pval2++)*weights(j,(int)*pval3);
                pval1++;
                pval3++;
            }
        }
        else if(input_is_sparse && !output_is_sparse)
        {
            ni = input.length();
            nj = output.length();
            if(ni != 0)
            {
                pval3 = input.data();
                for(int i=0; i<ni; i++)
                {
                    pval1 = output.data();
                    pval2 = weights[(int)(*pval3++)];
                    for(int j=0; j<nj;j++)
                        *pval1++ += *pval2++;
                }
            }
        }
        else if(input_is_sparse && output_is_sparse)
        {
            // Weights
            ni = input.length();
            nj = output.length();
            if(ni != 0)
            {
                pval2 = input.data();
                for(int i=0; i<ni; i++)
                {
                    pval1 = output.data();
                    pval3 = output_indices.data();
                    for(int j=0; j<nj; j++)
                        *pval1++ += weights((int)(*pval2),(int)*pval3++);
                    pval2++;
                }
            }
        }
    }
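The sparse-input, dense-output branch of add_affine_transform() can be read in isolation as "sum the weight rows of the active features". The following is a minimal standalone sketch of that case, assuming plain `std::vector` containers in place of PLearn's `Vec` and `Mat`; the names `Vector`, `Matrix` and `sparse_affine` are hypothetical and not part of PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for PLearn's Vec and Mat, for illustration only.
using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // weights[i] is the row for input feature i

// Sparse-input, dense-output case: `active` lists the indices of the features
// that are "on"; the output accumulates the matching weight rows.
// An empty bias leaves the initial contents of `output` in place,
// so that initial value effectively acts as the bias.
void sparse_affine(const std::vector<int>& active, const Matrix& weights,
                   const Vector& bias, Vector& output)
{
    if (!bias.empty()) {
        assert(bias.size() == output.size());
        output = bias;
    }
    for (int idx : active) {
        assert(idx >= 0 && static_cast<std::size_t>(idx) < weights.size());
        const Vector& row = weights[idx];
        assert(row.size() == output.size());
        for (std::size_t j = 0; j < output.size(); ++j)
            output[j] += row[j];
    }
}
```

With sparse input no multiplication is needed, because each listed feature implicitly has value 1; this is what makes the sparse path cheaper than the dense matrix product.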

| void PLearn::FeatureSetSequentialCRF::add_transfer_func | ( | const Vec & | input, |

| string | transfer_func = "default" |

||

| ) | const [protected] |

Computes the result of the application of the given transfer function on the input vector.

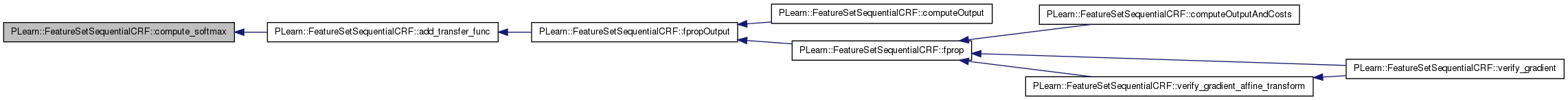

Definition at line 921 of file FeatureSetSequentialCRF.cc.

References PLearn::compute_sigmoid(), compute_softmax(), PLearn::compute_tanh(), hidden_transfer_func, and PLERROR.

Referenced by fpropOutput().

    {
        if (transfer_func == "default")
            transfer_func = hidden_transfer_func;
        if(transfer_func=="linear")
            return;
        else if(transfer_func=="tanh")
        {
            compute_tanh(input,input);
            return;
        }
        else if(transfer_func=="sigmoid")
        {
            compute_sigmoid(input,input);
            return;
        }
        else if(transfer_func=="softmax")
        {
            compute_softmax(input,input);
            return;
        }
        else PLERROR("In FeatureSetSequentialCRF::add_transfer_func(): Unknown value for transfer_func: %s",transfer_func.c_str());
    }
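The string dispatch in add_transfer_func() applies the chosen function in place on the activation vector. A rough standalone sketch of the same idea follows, assuming `std::vector<double>` instead of `Vec` and a thrown exception instead of PLERROR; `apply_transfer` is a hypothetical name, and the softmax case is left out for brevity.

```cpp
#include <cmath>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: applies the named transfer function to `v` in place,
// mirroring the string dispatch of add_transfer_func().
void apply_transfer(std::vector<double>& v, const std::string& name)
{
    if (name == "linear")
        return;  // identity: nothing to do
    if (name == "tanh") {
        for (double& x : v) x = std::tanh(x);
        return;
    }
    if (name == "sigmoid") {
        for (double& x : v) x = 1.0 / (1.0 + std::exp(-x));
        return;
    }
    // std::invalid_argument stands in for PLERROR in this sketch.
    throw std::invalid_argument("unknown transfer function: " + name);
}
```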

| void PLearn::FeatureSetSequentialCRF::batchComputeOutputAndConfidence | ( | VMat | inputs, |

| real | probability, | ||

| VMat | outputs_and_confidence | ||

| ) | const [protected, virtual] |

Changes the reference_set and then calls the parent class's method.

Reimplemented from PLearn::PLearner.

Definition at line 2544 of file FeatureSetSequentialCRF.cc.

References PLearn::PLearner::batchComputeOutputAndConfidence(), PLearn::PLearner::train_set, and val_string_reference_set.

    {
        val_string_reference_set = inputs;
        inherited::batchComputeOutputAndConfidence(inputs,probability,outputs_and_confidence);
        val_string_reference_set = train_set;
    }
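The method swaps the reference set for the duration of the parent call and then resets it to train_set. One way to express a temporary override of this kind in C++ is a small scope guard. The sketch below is an illustration only: `ScopedOverride` is a hypothetical helper that does not exist in PLearn, and unlike the code above it restores the previous value rather than train_set.

```cpp
#include <utility>

// Hypothetical RAII helper: replaces the value in `slot` for the lifetime of
// the guard and puts the previous value back when the guard is destroyed,
// including when the guarded call exits through an exception.
template <typename T>
class ScopedOverride {
public:
    ScopedOverride(T& slot, T temporary)
        : slot_(slot), saved_(std::move(slot))
    {
        slot_ = std::move(temporary);
    }
    ~ScopedOverride() { slot_ = std::move(saved_); }

    ScopedOverride(const ScopedOverride&) = delete;
    ScopedOverride& operator=(const ScopedOverride&) = delete;

private:
    T& slot_;   // the variable being overridden
    T saved_;   // its value before the override
};
```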

| void PLearn::FeatureSetSequentialCRF::bprop | ( | Vec & | inputv, |

| Vec & | outputv, | ||

| Vec & | targetv, | ||

| Vec & | costsv, | ||

| real | learning_rate, | ||

| real | sampleweight = 1 |

||

| ) | [protected] |

Backward propagation in the network, which assumes that a forward propagation has been done before.

A learning rate needs to be provided because it is -learning_rate * gradient that is propagated, not just the gradient.

Definition at line 501 of file FeatureSetSequentialCRF.cc.

References PLearn::TVec< T >::append(), b1, b2, bias_decay, bout, bout_dist_rep, clearProppathGradient(), cost_funcs, direct_bout, direct_in_to_out, direct_in_to_out_weight_decay, direct_wout, dist_rep_dim, feat_input, feats, feats_since_last_update, gradient_act_hidden2v, gradient_act_hiddenv, gradient_act_outputv, gradient_affine_transform(), gradient_b1, gradient_b2, gradient_bout, gradient_bout_dist_rep, gradient_direct_bout, gradient_direct_wout, gradient_feat_input, gradient_hidden2v, gradient_hiddenv, gradient_last_layer, gradient_nnet_input, gradient_outputv, gradient_transfer_func(), gradient_w1, gradient_w2, gradient_wout, gradient_wout_dist_rep, hidden2v, hiddenv, i, ifeats, PLearn::PLearner::inputsize_, j, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::TVec< T >::length(), n_feat_sets, nfeats, nhidden, nhidden2, nnet_input, output_layer_bias_decay, output_layer_dist_rep_bias_decay, output_layer_dist_rep_weight_decay, output_layer_weight_decay, output_transfer_func, PLERROR, possible_targets_vary, reind_target, PLearn::TVec< T >::resize(), stochastic_gradient_descent_speedup, PLearn::TVec< T >::subVec(), target_values, target_values_since_last_update, w1, w2, weight_decay, wout, and wout_dist_rep.

Referenced by verify_gradient().

    {
        if(possible_targets_vary)
        {
            gradient_outputv.resize(target_values.length());
            gradient_act_outputv.resize(target_values.length());
            if(!stochastic_gradient_descent_speedup)
                target_values_since_last_update.append(target_values);
        }
        if(!stochastic_gradient_descent_speedup)
            feats_since_last_update.append(feat_input);
        // Gradient through cost
        if(cost_funcs[0]=="NLL")
        {
            // Permits to avoid numerical precision errors
            if(output_transfer_func == "softmax")
                gradient_outputv[reind_target] = learning_rate*sampleweight;
            else
                gradient_outputv[reind_target] = learning_rate*sampleweight/(outputv[reind_target]);
        }
        else if(cost_funcs[0]=="class_error")
        {
            PLERROR("FeatureSetSequentialCRF::bprop(): gradient cannot be computed for \"class_error\" cost");
        }
        // Gradient through output transfer function
        if(output_transfer_func != "linear")
        {
            if(cost_funcs[0]=="NLL" && output_transfer_func == "softmax")
                gradient_transfer_func(outputv,gradient_act_outputv, gradient_outputv,
                                       output_transfer_func, reind_target);
            else
                gradient_transfer_func(outputv,gradient_act_outputv, gradient_outputv,
                                       output_transfer_func);
            gradient_last_layer = gradient_act_outputv;
        }
        else
            gradient_last_layer = gradient_act_outputv;
        // Gradient through output affine transform
        if(nhidden2 > 0) {
            gradient_affine_transform(hidden2v, wout, bout, gradient_hidden2v,
                                      gradient_wout, gradient_bout, gradient_last_layer,
                                      false, possible_targets_vary, learning_rate,
                                      weight_decay+output_layer_weight_decay,
                                      bias_decay+output_layer_bias_decay,
                                      target_values);
        }
        else if(nhidden > 0)
        {
            gradient_affine_transform(hiddenv, wout, bout, gradient_hiddenv,
                                      gradient_wout, gradient_bout, gradient_last_layer,
                                      false, possible_targets_vary, learning_rate,
                                      weight_decay+output_layer_weight_decay,
                                      bias_decay+output_layer_bias_decay, target_values);
        }
        else
        {
            gradient_affine_transform(nnet_input, wout, bout, gradient_nnet_input,
                                      gradient_wout, gradient_bout, gradient_last_layer,
                                      (dist_rep_dim <= 0), possible_targets_vary, learning_rate,
                                      weight_decay+output_layer_weight_decay,
                                      bias_decay+output_layer_bias_decay, target_values);
        }
        if(nhidden2 > 0)
        {
            gradient_transfer_func(hidden2v,gradient_act_hidden2v,gradient_hidden2v);
            gradient_affine_transform(hiddenv, w2, b2, gradient_hiddenv,
                                      gradient_w2, gradient_b2, gradient_act_hidden2v,
                                      false, false,learning_rate,
                                      weight_decay+layer2_weight_decay,
                                      bias_decay+layer2_bias_decay);
        }
        if(nhidden > 0)
        {
            gradient_transfer_func(hiddenv,gradient_act_hiddenv,gradient_hiddenv);
            gradient_affine_transform(nnet_input, w1, b1, gradient_nnet_input,
                                      gradient_w1, gradient_b1, gradient_act_hiddenv,
                                      dist_rep_dim<=0, false,learning_rate,
                                      weight_decay+layer1_weight_decay,
                                      bias_decay+layer1_bias_decay);
        }
        if(nhidden>0 && direct_in_to_out)
        {
            gradient_affine_transform(nnet_input, direct_wout, direct_bout,
                                      gradient_nnet_input,
                                      gradient_direct_wout, gradient_direct_bout,
                                      gradient_last_layer,
                                      dist_rep_dim<=0, possible_targets_vary,learning_rate,
                                      weight_decay+direct_in_to_out_weight_decay,
                                      0,
                                      target_values);
        }
        if(dist_rep_dim > 0)
        {
            nfeats = 0;
            id = 0;
            for(int i=0; i<inputsize_; )
            {
                ifeats = 0;
                for(int j=0; j<n_feat_sets; j++,i++)
                    ifeats += feats[i].length();
                gradient_affine_transform(feat_input.subVec(nfeats,ifeats),
                                          wout_dist_rep, bout_dist_rep,
                                          //gradient_feat_input.subVec(nfeats,feats[i].length()),
                                          gradient_feat_input,// Useless anyways...
                                          gradient_wout_dist_rep,
                                          gradient_bout_dist_rep,
                                          gradient_nnet_input.subVec(id*dist_rep_dim,dist_rep_dim),
                                          true, false, learning_rate,
                                          weight_decay+output_layer_dist_rep_weight_decay,
                                          bias_decay+output_layer_dist_rep_bias_decay);
                nfeats += ifeats;
                id++;
            }
        }
        clearProppathGradient();
    }
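For the NLL cost with a softmax output, bprop() passes reind_target to gradient_transfer_func(), whose body is not shown here. The sketch below assumes that this path computes the standard closed form for the derivative of the negative log-likelihood with respect to the pre-softmax activations, d(-log p_t)/d a_i = p_i - [i == t], scaled by -learning_rate as described above. The function name `nll_softmax_step` and the use of `std::vector` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns -learning_rate * sample_weight * d(NLL)/d(activation) for a
// softmax output layer. `probs` is the softmax output and `target` is the
// index of the correct class.
// Standard identity used: d(-log p_t)/d a_i = p_i - [i == t].
std::vector<double> nll_softmax_step(const std::vector<double>& probs,
                                     std::size_t target,
                                     double learning_rate,
                                     double sample_weight = 1.0)
{
    assert(target < probs.size());
    std::vector<double> step(probs.size());
    for (std::size_t i = 0; i < probs.size(); ++i) {
        const double indicator = (i == target) ? 1.0 : 0.0;
        step[i] = learning_rate * sample_weight * (indicator - probs[i]);
    }
    return step;
}
```

Because the combined expression never divides by the target probability, it avoids the loss of precision that dividing by a very small outputv[reind_target] would cause.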

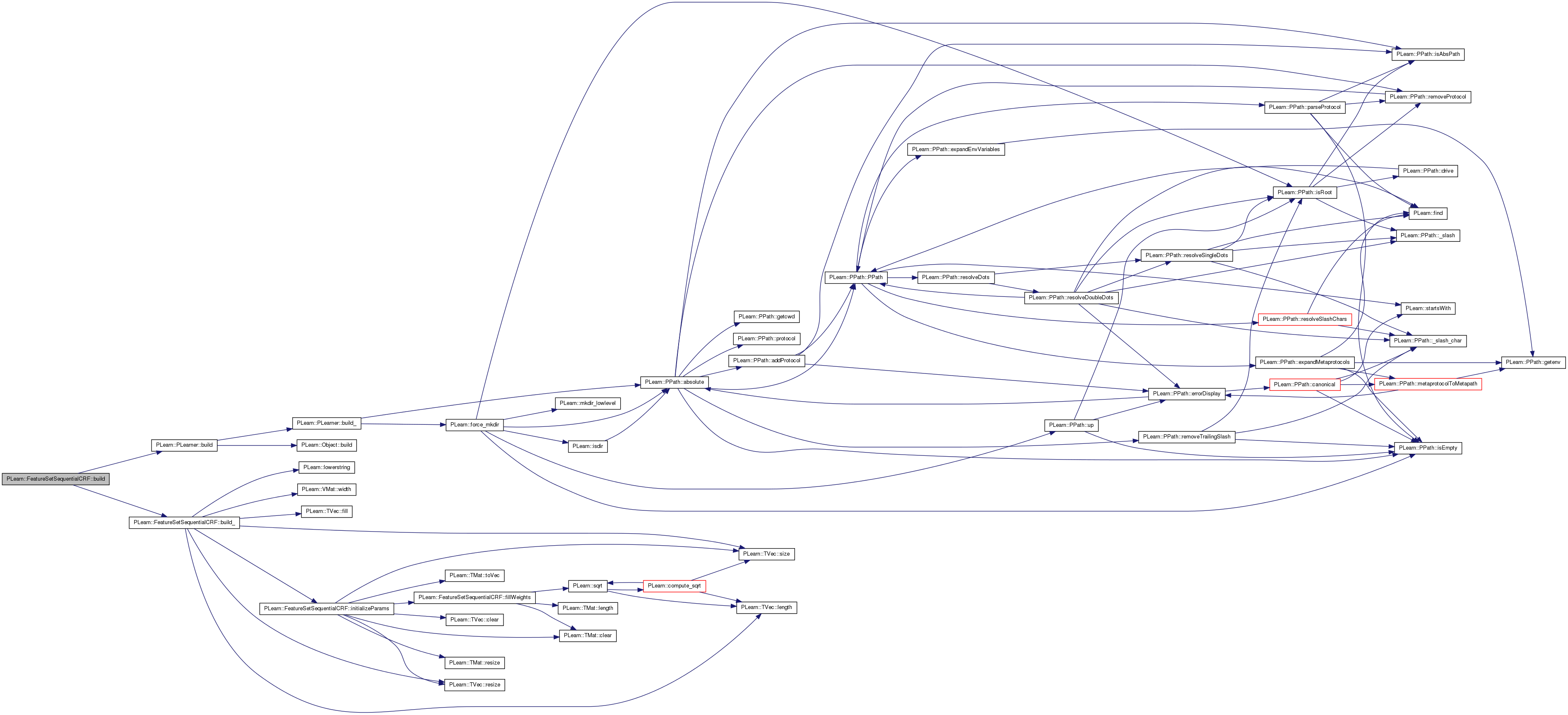

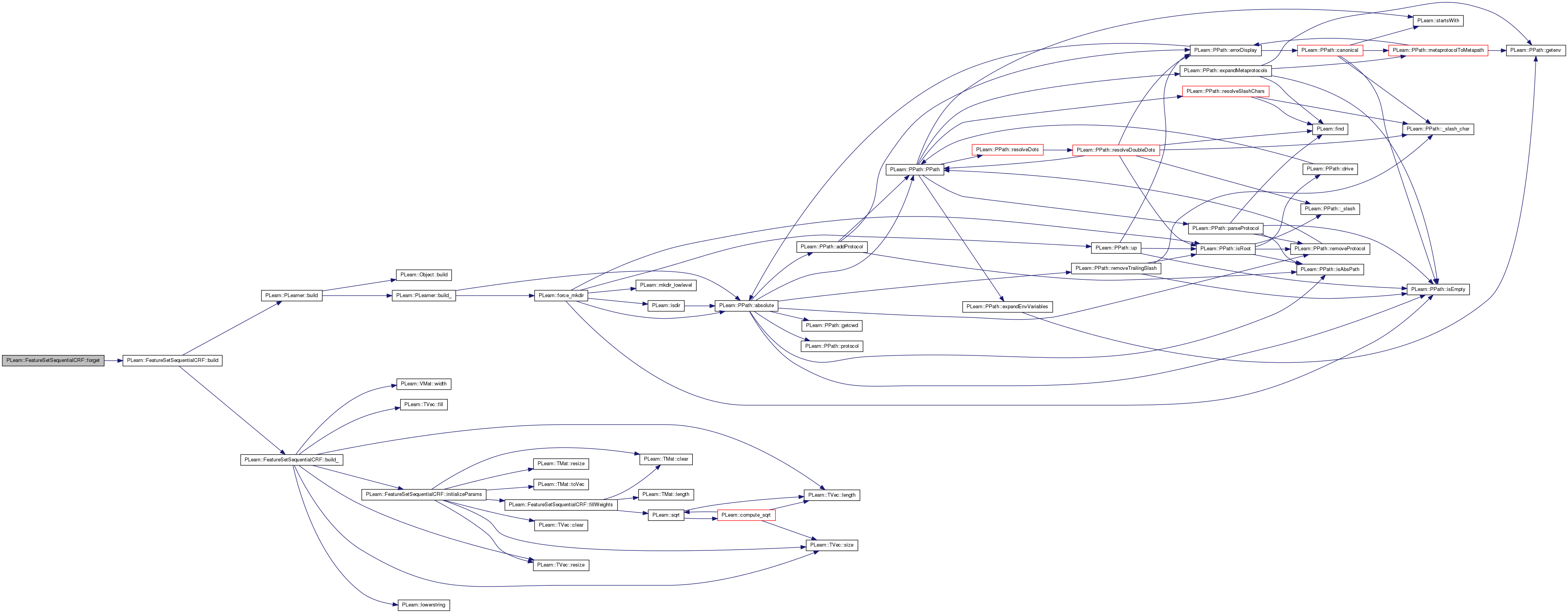

| void PLearn::FeatureSetSequentialCRF::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 277 of file FeatureSetSequentialCRF.cc.

References PLearn::PLearner::build(), and build_().

Referenced by forget().

    {
        inherited::build();
        build_();
    }

| void PLearn::FeatureSetSequentialCRF::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: ****

This method should finish building the object, according to set 'options', in *any* situation.

Typical situations include:

You can assume that the parent class' build_() has already been called.

A typical build method will want to know the inputsize(), targetsize() and outputsize(), and may also want to check whether train_set->hasWeights(). All these methods require a train_set to be set, so the first thing you may want to do is check if(train_set) before doing any heavy building.

Note: build() is always called by setTrainingSet.

Reimplemented from PLearn::PLearner.

Definition at line 287 of file FeatureSetSequentialCRF.cc.

References cost_funcs, feat_sets, feats, PLearn::TVec< T >::fill(), fixed_output_weights, i, initializeParams(), PLearn::PLearner::inputsize_, PLearn::TVec< T >::length(), PLearn::lowerstring(), MISSING_VALUE, n_feat_sets, output_comp, penalty_type, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), row, PLearn::TVec< T >::size(), PLearn::PLearner::stage, stochastic_gradient_descent_speedup, target_values_reference_set, PLearn::PLearner::targetsize_, total_output_size, PLearn::PLearner::train_set, val_string_reference_set, PLearn::PLearner::weightsize_, and PLearn::VMat::width().

Referenced by build().

    {
        // Don't do anything if we don't have a train_set
        // It's the only one who knows the inputsize, targetsize and weightsize
        if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)
        {
            if(targetsize_ != 1)
                PLERROR("In FeatureSetSequentialCRF::build_(): targetsize_ must be 1, not %d",targetsize_);
            n_feat_sets = feat_sets.length();
            if(n_feat_sets == 0)
                PLERROR("In FeatureSetSequentialCRF::build_(): at least one FeatureSet must be provided\n");
            if(inputsize_ % n_feat_sets != 0)
                PLERROR("In FeatureSetSequentialCRF::build_(): feat_sets.length() must be a divisor of inputsize()");
            // Process penalty type option
            string pt = lowerstring( penalty_type );
            if( pt == "l1" )
                penalty_type = "L1";
            else if( pt == "l2_square" || pt == "l2 square" || pt == "l2square" )
                penalty_type = "L2_square";
            else if( pt == "l2" )
            {
                PLWARNING("In FeatureSetSequentialCRF::build_(): L2 penalty not supported, assuming you want L2 square");
                penalty_type = "L2_square";
            }
            else
                PLERROR("In FeatureSetSequentialCRF::build_(): penalty_type \"%s\" not supported", penalty_type.c_str());
            int ncosts = cost_funcs.size();
            if(ncosts<=0)
                PLERROR("In FeatureSetSequentialCRF::build_(): Empty cost_funcs : must at least specify the cost function to optimize!");
            if(stage <= 0 ) // Training hasn't started
            {
                // Initialize parameters
                initializeParams();
            }
            output_comp.resize(total_output_size);
            row.resize(train_set->width());
            row.fill(MISSING_VALUE);
            feats.resize(inputsize_);
            // Making sure that all feats[i] have non null storage...
            for(int i=0; i<feats.length(); i++)
            {
                feats[i].resize(1);
                feats[i].resize(0);
            }
            if(fixed_output_weights && stochastic_gradient_descent_speedup)
                PLERROR("In FeatureSetSequentialCRF::build_(): cannot use stochastic gradient descent speedup with fixed output weights");
            val_string_reference_set = train_set;
            target_values_reference_set = train_set;
        }
    }
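The penalty_type handling in build_() accepts several spellings and maps them onto two canonical names. The following standalone sketch reproduces that mapping, assuming standard-library lowercasing in place of lowerstring() and an exception in place of PLERROR; `normalize_penalty_type` is a hypothetical name, and the PLWARNING issued for plain "L2" is omitted.

```cpp
#include <algorithm>
#include <cctype>
#include <stdexcept>
#include <string>

// Hypothetical helper mirroring the penalty_type handling in build_():
// case-insensitive matching, several spellings for the squared L2 penalty,
// and plain "L2" mapped to "L2_square" (build_() also emits a warning there).
std::string normalize_penalty_type(std::string name)
{
    std::transform(name.begin(), name.end(), name.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    if (name == "l1")
        return "L1";
    if (name == "l2_square" || name == "l2 square" || name == "l2square" || name == "l2")
        return "L2_square";
    // std::invalid_argument stands in for PLERROR in this sketch.
    throw std::invalid_argument("penalty_type \"" + name + "\" not supported");
}
```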

| real PLearn::FeatureSetSequentialCRF::classification_loss | ( | const Vec & | outputv, |

| int | target | ||

| ) | const [private] |

Classification loss.

Definition at line 1761 of file FeatureSetSequentialCRF.cc.

References PLearn::argmax().

Referenced by fpropCostsFromOutput().

    {
        return (argmax(outputv) == target ? 0 : 1);
    }

| string PLearn::FeatureSetSequentialCRF::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| void PLearn::FeatureSetSequentialCRF::clearProppathGradient | ( | ) | [protected] |

Clear network's propagation path gradient fields. Assumes fprop and bprop have been called before.

Clear network's gradient fields.

Definition at line 787 of file FeatureSetSequentialCRF.cc.

References PLearn::TVec< T >::clear(), cost_funcs, dist_rep_dim, gradient_act_hidden2v, gradient_act_hiddenv, gradient_act_outputv, gradient_hidden2v, gradient_hiddenv, gradient_nnet_input, gradient_outputv, nhidden, nhidden2, and reind_target.

Referenced by bprop(), and verify_gradient().

    {
        // Trick to make clearProppathGradient faster...
        if(cost_funcs[0]=="NLL")
            gradient_outputv[reind_target] = 0;
        else
            gradient_outputv.clear();
        gradient_act_outputv.clear();
        if(dist_rep_dim>0)
            gradient_nnet_input.clear();
        if(nhidden>0)
        {
            gradient_hiddenv.clear();
            gradient_act_hiddenv.clear();
            if(nhidden2>0)
            {
                gradient_hidden2v.clear();
                gradient_act_hidden2v.clear();
            }
        }
    }

| void PLearn::FeatureSetSequentialCRF::compute_softmax | ( | const Vec & | x, |

| const Vec & | y | ||

| ) | const [private] |

Computes the softmax of x and stores it in y. The implementation allows aliasing: compute_softmax(x,x) replaces x with its softmax value.

Definition at line 1728 of file FeatureSetSequentialCRF.cc.

References PLearn::TVec< T >::data(), i, PLearn::TVec< T >::length(), PLearn::max(), n, PLERROR, and PLearn::safeexp().

Referenced by add_transfer_func().

{

int n = x.length();

// real* yp = y.data();

// real* xp = x.data();

// for(int i=0; i<n; i++)

// {

// *yp++ = *xp > 1e-5 ? *xp : 1e-5;

// xp++;

// }

if (n>0)

{

real* yp = y.data();

real* xp = x.data();

real maxx = max(x);

real s = 0;

for (int i=0;i<n;i++)

s += (*yp++ = safeexp(*xp++-maxx));

if (s == 0) PLERROR("trying to divide by 0 in softmax");

s = 1.0 / s;

yp = y.data();

for (int i=0;i<n;i++)

*yp++ *= s;

}

}
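The listing above subtracts the maximum before exponentiating, which keeps the exponentials from overflowing. A standalone sketch of the same idea follows; `stable_softmax` is a hypothetical name, `std::vector<double>` replaces `Vec`, and plain `std::exp` replaces `safeexp()` (whose exact clamping behaviour is not shown here).

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Writes softmax(x) into y. x and y may be the same vector (in-place use),
// because each x[i] is read before y[i] is written.
inline void stable_softmax(const std::vector<double>& x, std::vector<double>& y)
{
    const std::size_t n = x.size();
    if (n == 0) return;                       // nothing to do, as in the listing
    const double maxx = *std::max_element(x.begin(), x.end());
    y.resize(n);                              // no-op when y aliases x
    double s = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        y[i] = std::exp(x[i] - maxx);         // largest term is exp(0) = 1
        s += y[i];
    }
    if (s == 0.0)
        throw std::runtime_error("trying to divide by 0 in softmax");
    const double inv = 1.0 / s;
    for (std::size_t i = 0; i < n; ++i)
        y[i] *= inv;                          // normalise so the entries sum to 1
}
```

With inputs such as `{1000.0, 1000.0}`, a naive `exp(x[i])` would overflow, whereas the shifted version yields `{0.5, 0.5}`.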

| void PLearn::FeatureSetSequentialCRF::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some information in the input, which is why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 815 of file FeatureSetSequentialCRF.cc.

References PLERROR.

{

PLERROR("In FeatureSetSequentialCRF::computeCostsFromOutputs(): output is not enough to compute costs");

}

| void PLearn::FeatureSetSequentialCRF::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 842 of file FeatureSetSequentialCRF.cc.

References PLearn::argmax(), fpropOutput(), PLearn::TVec< T >::length(), my_argmax(), output_comp, possible_targets_vary, rgen, and target_values.

{

fpropOutput(inputv, output_comp);

if(possible_targets_vary)

{

//row.subVec(0,inputsize_) << inputv;

//target_values_reference_set->getValues(row,inputsize_,target_values);

outputv[0] = target_values[my_argmax(output_comp,rgen->uniform_multinomial_sample(output_comp.length()))];

}

else

outputv[0] = argmax(output_comp);

}

| void PLearn::FeatureSetSequentialCRF::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

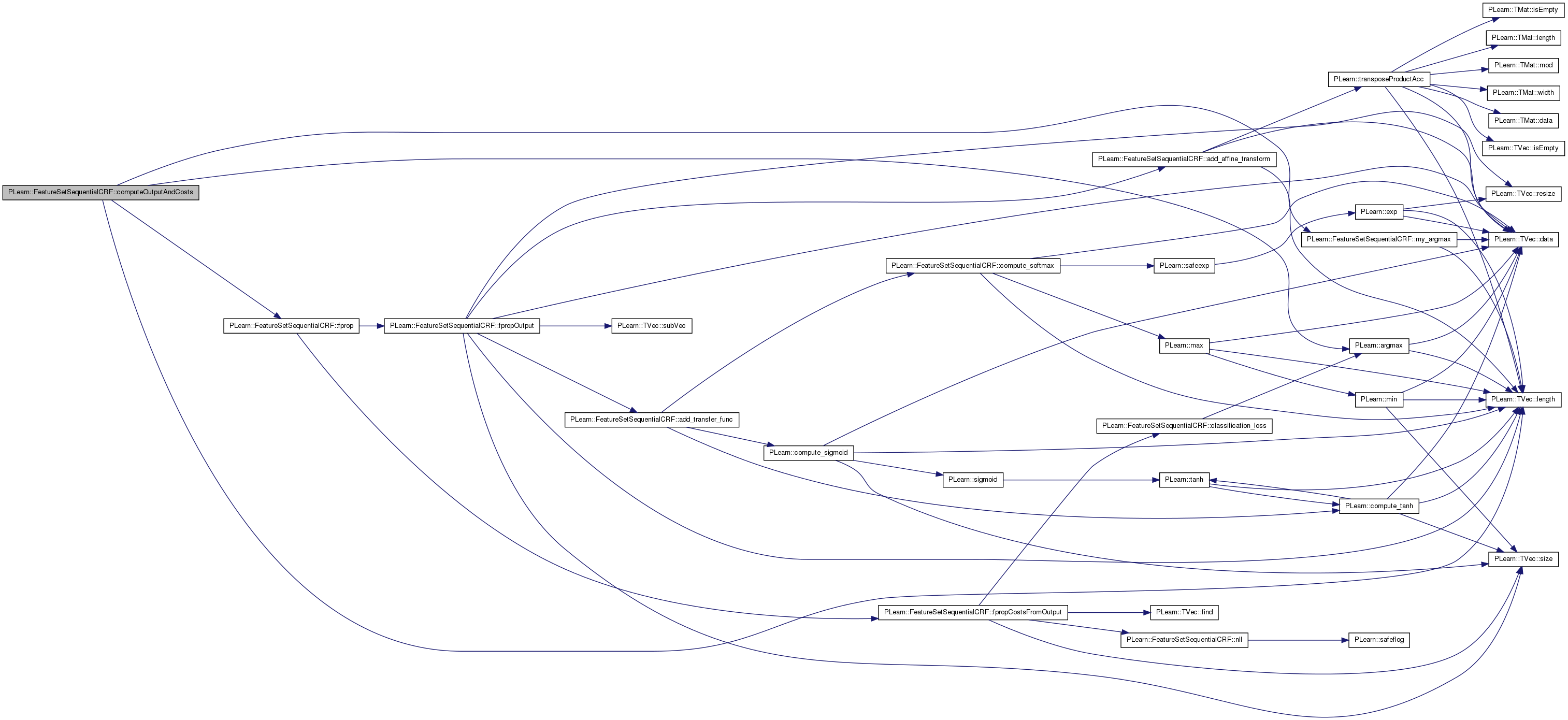

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 858 of file FeatureSetSequentialCRF.cc.

References PLearn::argmax(), fprop(), PLearn::TVec< T >::length(), my_argmax(), output_comp, possible_targets_vary, rgen, and target_values.

{

fprop(inputv,output_comp,targetv,costsv);

if(possible_targets_vary)

{

//row.subVec(0,inputsize_) << inputv;

//target_values_reference_set->getValues(row,inputsize_,target_values);

outputv[0] = target_values[my_argmax(output_comp,rgen->uniform_multinomial_sample(output_comp.length()))];

}

else

outputv[0] = argmax(output_comp);

}

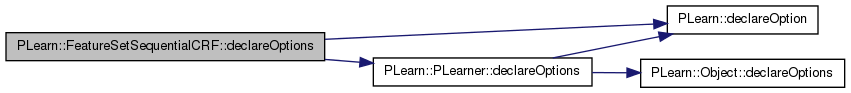

| void PLearn::FeatureSetSequentialCRF::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 87 of file FeatureSetSequentialCRF.cc.

References b1, b2, batch_size, bias_decay, bout, bout_dist_rep, PLearn::OptionBase::buildoption, cost_funcs, PLearn::declareOption(), PLearn::PLearner::declareOptions(), decrease_constant, direct_bout, direct_in_to_out, direct_in_to_out_weight_decay, direct_wout, dist_rep_dim, feat_sets, fixed_output_weights, hidden_transfer_func, initialization_method, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::OptionBase::learntoption, nhidden, nhidden2, output_layer_bias_decay, output_layer_dist_rep_bias_decay, output_layer_dist_rep_weight_decay, output_layer_weight_decay, output_transfer_func, penalty_type, possible_targets_vary, start_learning_rate, stochastic_gradient_descent_speedup, PLearn::PLearner::train_set, w1, w2, weight_decay, wout, and wout_dist_rep.

{

declareOption(ol, "nhidden", &FeatureSetSequentialCRF::nhidden,

OptionBase::buildoption,

"Number of hidden units in first hidden layer (0 means no hidden layer).\n");

declareOption(ol, "nhidden2", &FeatureSetSequentialCRF::nhidden2,

OptionBase::buildoption,

"Number of hidden units in second hidden layer (0 means no hidden layer).\n");

declareOption(ol, "weight_decay", &FeatureSetSequentialCRF::weight_decay,

OptionBase::buildoption,

"Global weight decay for all layers.\n");

declareOption(ol, "bias_decay", &FeatureSetSequentialCRF::bias_decay,

OptionBase::buildoption,

"Global bias decay for all layers.\n");

declareOption(ol, "layer1_weight_decay", &FeatureSetSequentialCRF::layer1_weight_decay,

OptionBase::buildoption,

"Additional weight decay for the first hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer1_bias_decay", &FeatureSetSequentialCRF::layer1_bias_decay,

OptionBase::buildoption,

"Additional bias decay for the first hidden layer. Is added to bias_decay.\n");

declareOption(ol, "layer2_weight_decay", &FeatureSetSequentialCRF::layer2_weight_decay,

OptionBase::buildoption,

"Additional weight decay for the second hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer2_bias_decay", &FeatureSetSequentialCRF::layer2_bias_decay,

OptionBase::buildoption,

"Additional bias decay for the second hidden layer. Is added to bias_decay.\n");

declareOption(ol, "output_layer_weight_decay",

&FeatureSetSequentialCRF::output_layer_weight_decay,

OptionBase::buildoption,

"Additional weight decay for the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_bias_decay",

&FeatureSetSequentialCRF::output_layer_bias_decay,

OptionBase::buildoption,

"Additional bias decay for the output layer. Is added to 'bias_decay'.\n");

declareOption(ol, "direct_in_to_out_weight_decay",

&FeatureSetSequentialCRF::direct_in_to_out_weight_decay,

OptionBase::buildoption,

"Additional weight decay for the weights going from the input directly to the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_dist_rep_weight_decay",

&FeatureSetSequentialCRF::output_layer_dist_rep_weight_decay,

OptionBase::buildoption,

"Additional weight decay for the output layer of distributed representation\n"

"predictor. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_dist_rep_bias_decay",

&FeatureSetSequentialCRF::output_layer_dist_rep_bias_decay,

OptionBase::buildoption,

"Additional bias decay for the output layer of distributed representation\n"

"predictor. Is added to 'bias_decay'.\n");

declareOption(ol, "fixed_output_weights",

&FeatureSetSequentialCRF::fixed_output_weights,

OptionBase::buildoption,

"If true then the output weights are not learned. They are initialized to +1 or -1 randomly.\n");

declareOption(ol, "direct_in_to_out", &FeatureSetSequentialCRF::direct_in_to_out,

OptionBase::buildoption,

"If true then direct input to output weights will be added (if nhidden > 0).\n");

declareOption(ol, "penalty_type", &FeatureSetSequentialCRF::penalty_type,

OptionBase::buildoption,

"Penalty to use on the weights (for weight and bias decay).\n"

"Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");

declareOption(ol, "output_transfer_func",

&FeatureSetSequentialCRF::output_transfer_func,

OptionBase::buildoption,

"What transfer function to use for output layer? One of: \n"

" - \"tanh\" \n"

" - \"sigmoid\" \n"

" - \"softmax\" \n"

"An empty string or \"none\" means no output transfer function \n");

declareOption(ol, "hidden_transfer_func",

&FeatureSetSequentialCRF::hidden_transfer_func,

OptionBase::buildoption,

"What transfer function to use for hidden units? One of \n"

" - \"linear\" \n"

" - \"tanh\" \n"

" - \"sigmoid\" \n"

" - \"softmax\" \n");

declareOption(ol, "cost_funcs", &FeatureSetSequentialCRF::cost_funcs,

OptionBase::buildoption,

"A list of cost functions to use\n"

"in the form \"[ cf1; cf2; cf3; ... ]\" where each function is one of: \n"

" - \"NLL\" (negative log likelihood -log(p[c]) for classification) \n"

" - \"class_error\" (classification error) \n"

"The FIRST function of the list will be used as \n"

"the objective function to optimize \n"

"(possibly with an added weight decay penalty) \n");

declareOption(ol, "start_learning_rate", &FeatureSetSequentialCRF::start_learning_rate,

OptionBase::buildoption,

"Start learning rate of gradient descent.\n");

declareOption(ol, "decrease_constant", &FeatureSetSequentialCRF::decrease_constant,

OptionBase::buildoption,

"Decrease constant of gradient descent.\n");

declareOption(ol, "batch_size", &FeatureSetSequentialCRF::batch_size,

OptionBase::buildoption,

"How many samples to use to estimate the average gradient before updating the weights\n"

"0 is equivalent to specifying training_set->length() \n");

declareOption(ol, "stochastic_gradient_descent_speedup", &FeatureSetSequentialCRF::stochastic_gradient_descent_speedup,

OptionBase::buildoption,

"Indication that a trick to speedup stochastic gradient descent\n"

"should be used.\n");

declareOption(ol, "initialization_method",

&FeatureSetSequentialCRF::initialization_method, OptionBase::buildoption,

"The method used to initialize the weights:\n"

" - \"normal_linear\" = a normal law with variance 1/n_inputs\n"

" - \"normal_sqrt\" = a normal law with variance 1/sqrt(n_inputs)\n"

" - \"uniform_linear\" = a uniform law in [-1/n_inputs, 1/n_inputs]\n"

" - \"uniform_sqrt\" = a uniform law in [-1/sqrt(n_inputs), 1/sqrt(n_inputs)]\n"

" - \"zero\" = all weights are set to 0\n");

declareOption(ol, "dist_rep_dim", &FeatureSetSequentialCRF::dist_rep_dim,

OptionBase::buildoption,

" Dimensionality (number of components) of distributed representations.\n"

"If <= 0, then distributed representations will not be used.\n"

);

declareOption(ol, "possible_targets_vary",

&FeatureSetSequentialCRF::possible_targets_vary, OptionBase::buildoption,

"Indication that the set of possible targets varies from\n"

"one input vector to another.\n"

);

declareOption(ol, "feat_sets", &FeatureSetSequentialCRF::feat_sets,

OptionBase::buildoption,

"FeatureSets to apply on input. The number of feature\n"

"sets should be a divisor of inputsize(). The feature\n"

"sets applied to the ith input field is the feature\n"

"set at position i % feat_sets.length().\n"

);

declareOption(ol, "train_set", &FeatureSetSequentialCRF::train_set,

OptionBase::learntoption,

"VMatrix used for training, that also provides information about the data (e.g. Dictionary objects for the different fields).\n");

// Networks' learnt parameters

declareOption(ol, "w1", &FeatureSetSequentialCRF::w1, OptionBase::learntoption,

"Weights of first hidden layer.\n");

declareOption(ol, "b1", &FeatureSetSequentialCRF::b1, OptionBase::learntoption,

"Bias of first hidden layer.\n");

declareOption(ol, "w2", &FeatureSetSequentialCRF::w2, OptionBase::learntoption,

"Weights of second hidden layer.\n");

declareOption(ol, "b2", &FeatureSetSequentialCRF::b2, OptionBase::learntoption,

"Bias of second hidden layer.\n");

declareOption(ol, "wout", &FeatureSetSequentialCRF::wout, OptionBase::learntoption,

"Weights of output layer.\n");

declareOption(ol, "bout", &FeatureSetSequentialCRF::bout, OptionBase::learntoption,

"Bias of output layer.\n");

declareOption(ol, "direct_wout", &FeatureSetSequentialCRF::direct_wout,

OptionBase::learntoption,

"Direct input to output weights.\n");

declareOption(ol, "direct_bout", &FeatureSetSequentialCRF::direct_bout,

OptionBase::learntoption,

"Direct input to output bias.\n");

declareOption(ol, "wout_dist_rep", &FeatureSetSequentialCRF::wout_dist_rep,

OptionBase::learntoption,

"Weights of output layer for distributed representation predictor.\n");

declareOption(ol, "bout_dist_rep", &FeatureSetSequentialCRF::bout_dist_rep,

OptionBase::learntoption,

"Bias of output layer for distributed representation predictor.\n");

inherited::declareOptions(ol);

}
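The `feat_sets` option states that input field i uses the feature set at position `i % feat_sets.length()` and that the number of feature sets should divide `inputsize()`. A minimal sketch of that rule, assuming plain integers in place of PLearn objects (`feature_set_for_each_field` is a hypothetical helper, not part of the class):

```cpp
#include <stdexcept>
#include <vector>

// For each of the input_size input fields, return the index of the feature
// set applied to it: field i uses feature set i % n_feat_sets.
inline std::vector<int> feature_set_for_each_field(int input_size, int n_feat_sets)
{
    if (input_size < 0 || n_feat_sets <= 0 || input_size % n_feat_sets != 0)
        throw std::invalid_argument(
            "n_feat_sets must be a positive divisor of input_size");
    std::vector<int> assignment(input_size);
    for (int i = 0; i < input_size; ++i)
        assignment[i] = i % n_feat_sets;   // cycle through the feature sets
    return assignment;
}
```

With six input fields and three feature sets the assignment is 0, 1, 2, 0, 1, 2, so each consecutive group of three fields is described by the same three feature sets.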

| static const PPath& PLearn::FeatureSetSequentialCRF::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 294 of file FeatureSetSequentialCRF.h.

:

static void declareOptions(OptionList& ol);

| FeatureSetSequentialCRF * PLearn::FeatureSetSequentialCRF::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

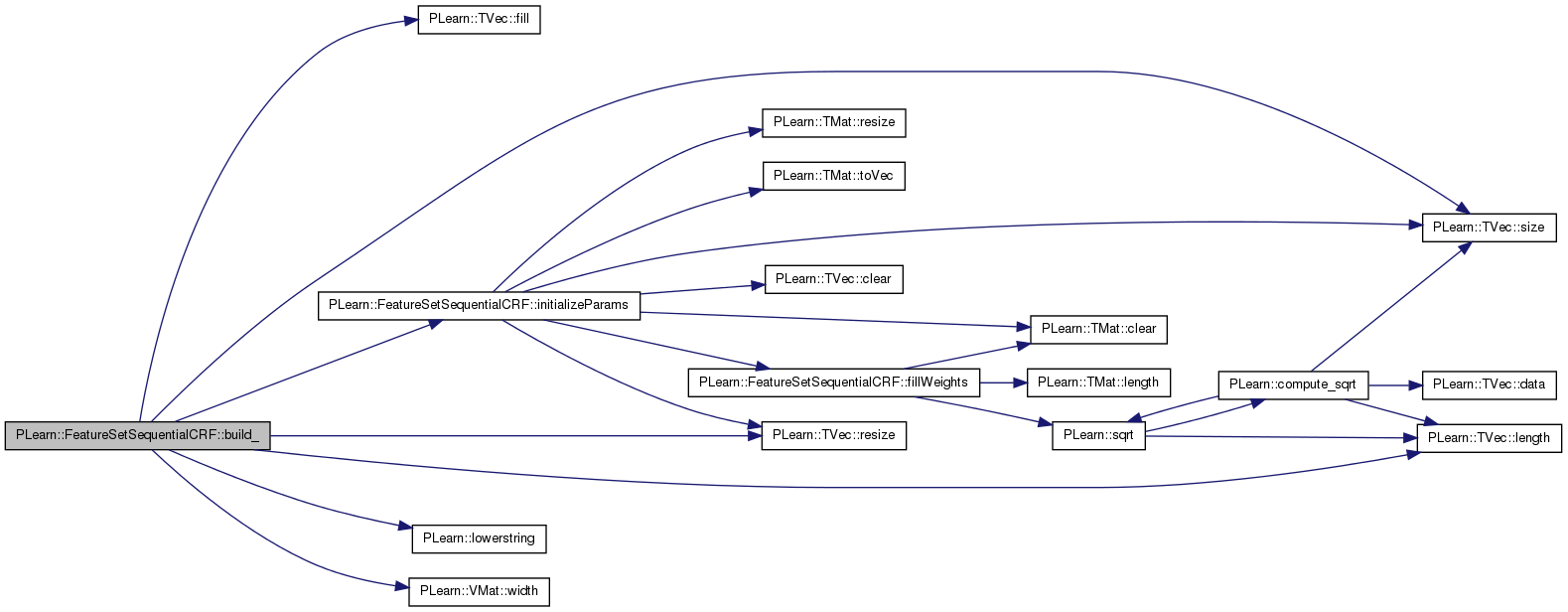

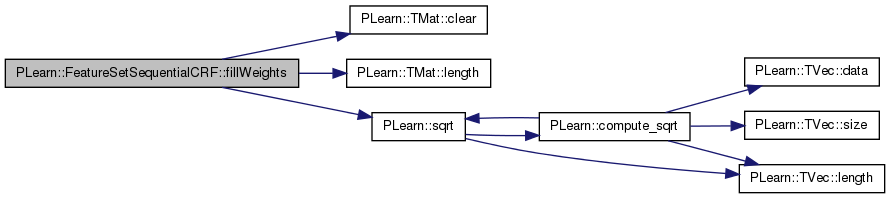

| void PLearn::FeatureSetSequentialCRF::fillWeights | ( | const Mat & | weights | ) | [protected] |

Fill a matrix of weights according to the 'initialization_method' specified.

Definition at line 875 of file FeatureSetSequentialCRF.cc.

References PLearn::TMat< T >::clear(), initialization_method, PLearn::TMat< T >::length(), rgen, and PLearn::sqrt().

Referenced by initializeParams().

{

if (initialization_method == "zero") {

weights.clear();

return;

}

real delta;

int is = weights.length();

if (initialization_method.find("linear") != string::npos)

delta = 1.0 / real(is);

else

delta = 1.0 / sqrt(real(is));

if (initialization_method.find("normal") != string::npos)

rgen->fill_random_normal(weights, 0, delta);

else

rgen->fill_random_uniform(weights, -delta, delta);

}
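The branch structure of fillWeights() reduces to two independent substring tests on `initialization_method`. The sketch below isolates them; `init_scale` and `uses_normal_law` are hypothetical names, and `n_inputs` plays the role of `weights.length()` and is assumed to be positive.

```cpp
#include <cmath>
#include <string>

// Scale used by the non-"zero" methods: 1/n for "*_linear" names,
// 1/sqrt(n) for everything else. Assumes n_inputs > 0.
inline double init_scale(const std::string& method, int n_inputs)
{
    if (method.find("linear") != std::string::npos)
        return 1.0 / static_cast<double>(n_inputs);
    return 1.0 / std::sqrt(static_cast<double>(n_inputs));
}

// True when the name selects a normal law; otherwise the listing falls back
// to a uniform law on [-scale, scale].
inline bool uses_normal_law(const std::string& method)
{
    return method.find("normal") != std::string::npos;
}
```

One consequence of matching on substrings is that, in the listing, any name other than "zero" that contains neither "linear" nor "normal" is treated like "uniform_sqrt" rather than rejected.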

| void PLearn::FeatureSetSequentialCRF::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!)

A typical forget() method should do the following:

This method is typically called by the build_() method, after it has finished setting up the parameters, and if it deemed useful to set or reset the learner in its fresh state. (remember build may be called after modifying options that do not necessarily require the learner to restart from a fresh state...) forget is also called by the setTrainingSet method, after calling build(), so it will generally be called TWICE during setTrainingSet!

Reimplemented from PLearn::PLearner.

Definition at line 895 of file FeatureSetSequentialCRF.cc.

References build(), PLearn::PLearner::stage, total_updates, and PLearn::PLearner::train_set.

{

if (train_set) build();

total_updates=0;

stage = 0;

}

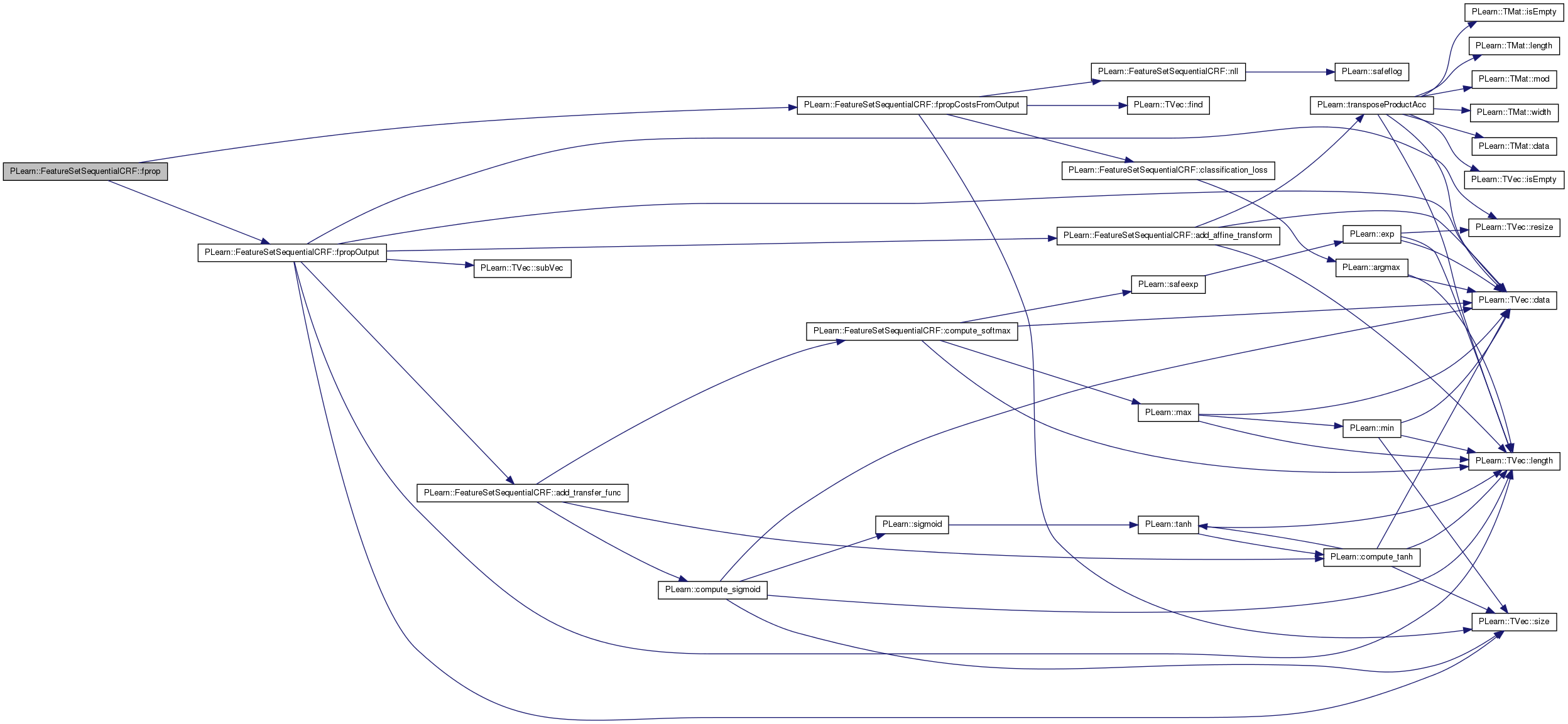

| void PLearn::FeatureSetSequentialCRF::fprop | ( | const Vec & | inputv, |

| Vec & | outputv, | ||

| const Vec & | targetv, | ||

| Vec & | costsv, | ||

| real | sampleweight = 1 |

||

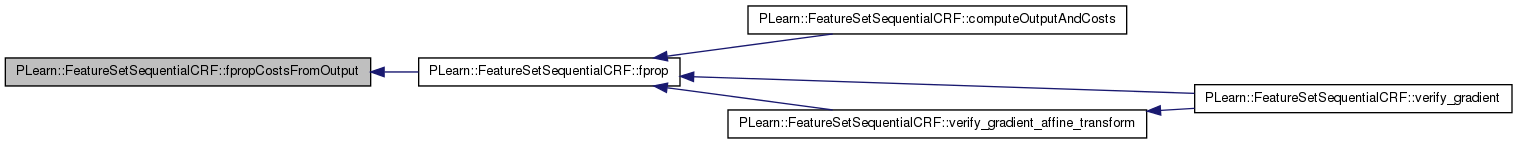

| ) | const [protected] |

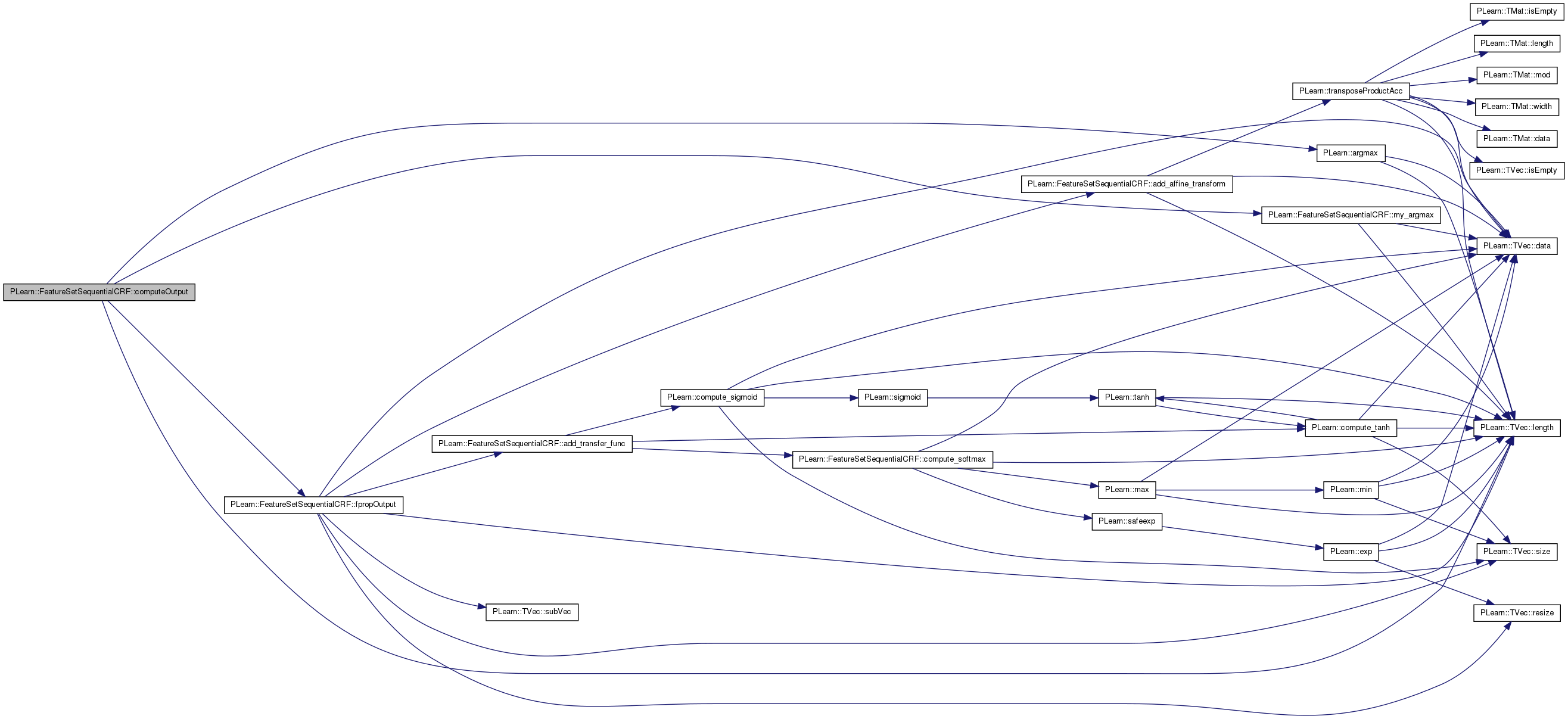

Forward propagation in the network.

Definition at line 346 of file FeatureSetSequentialCRF.cc.

References fpropCostsFromOutput(), and fpropOutput().

Referenced by computeOutputAndCosts(), verify_gradient(), and verify_gradient_affine_transform().

{

fpropOutput(inputv,outputv);

//if(is_missing(outputv[0]))

// cout << "What the fuck" << endl;

fpropCostsFromOutput(inputv, outputv, targetv, costsv, sampleweight);

//if(is_missing(costsv[0]))

// cout << "Re-What the fuck" << endl;

}

| void PLearn::FeatureSetSequentialCRF::fpropCostsFromOutput | ( | const Vec & | inputv, |

| const Vec & | outputv, | ||

| const Vec & | targetv, | ||

| Vec & | costsv, | ||

| real | sampleweight = 1 |

||

| ) | const [protected] |

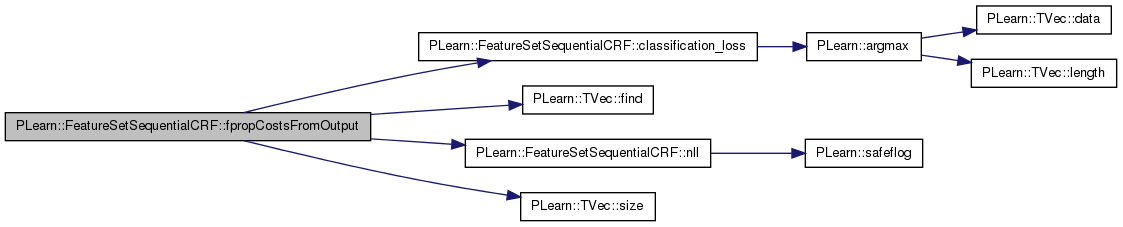

Forward propagation to compute the costs from the output.

Definition at line 472 of file FeatureSetSequentialCRF.cc.

References classification_loss(), cost_funcs, PLearn::TVec< T >::find(), nll(), PLERROR, possible_targets_vary, reind_target, PLearn::TVec< T >::size(), and target_values.

Referenced by fprop().

{

//Compute cost

if(possible_targets_vary)

{

reind_target = target_values.find(targetv[0]);

if(reind_target<0)

PLERROR("In FeatureSetSequentialCRF::fprop(): target %d is not in possible targets", (int)targetv[0]);

}

else

reind_target = (int)targetv[0];

// Build cost function

int ncosts = cost_funcs.size();

for(int k=0; k<ncosts; k++)

{

if(cost_funcs[k]=="NLL")

{

costsv[k] = sampleweight*nll(outputv,reind_target);

}

else if(cost_funcs[k]=="class_error")

costsv[k] = sampleweight*classification_loss(outputv, reind_target);

else

PLERROR("In FeatureSetSequentialCRF::fprop(): unknown cost_func option: %s",cost_funcs[k].c_str());

}

}
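The cost loop above can be mimicked without PLearn types. In this sketch `costs_from_output` is a hypothetical function; it assumes the output vector already holds class probabilities and that NLL is `-log(p[target])`, as the `cost_funcs` option help describes. The body of `nll()` itself is not shown in this section, so that part is an assumption.

```cpp
#include <algorithm>
#include <cmath>
#include <iterator>
#include <stdexcept>
#include <string>
#include <vector>

// One weighted cost per entry of cost_funcs, in the same order.
inline std::vector<double> costs_from_output(
    const std::vector<double>& probs, int target,
    const std::vector<std::string>& cost_funcs, double sample_weight = 1.0)
{
    std::vector<double> costs;
    costs.reserve(cost_funcs.size());
    for (const std::string& name : cost_funcs) {
        if (name == "NLL") {
            // Assumed definition: negative log-probability of the target class.
            costs.push_back(sample_weight * -std::log(probs.at(target)));
        } else if (name == "class_error") {
            const int best = static_cast<int>(std::distance(
                probs.begin(), std::max_element(probs.begin(), probs.end())));
            costs.push_back(sample_weight * (best == target ? 0.0 : 1.0));
        } else {
            throw std::invalid_argument("unknown cost_func option: " + name);
        }
    }
    return costs;
}
```

Because the first entry of `cost_funcs` is the training objective, a list such as `{"NLL", "class_error"}` optimises the likelihood while still reporting the error rate.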

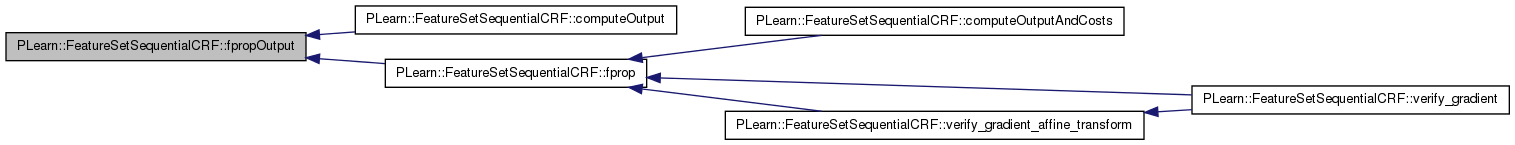

| void PLearn::FeatureSetSequentialCRF::fpropOutput | ( | const Vec & | inputv, |

| Vec & | outputv | ||

| ) | const [protected] |

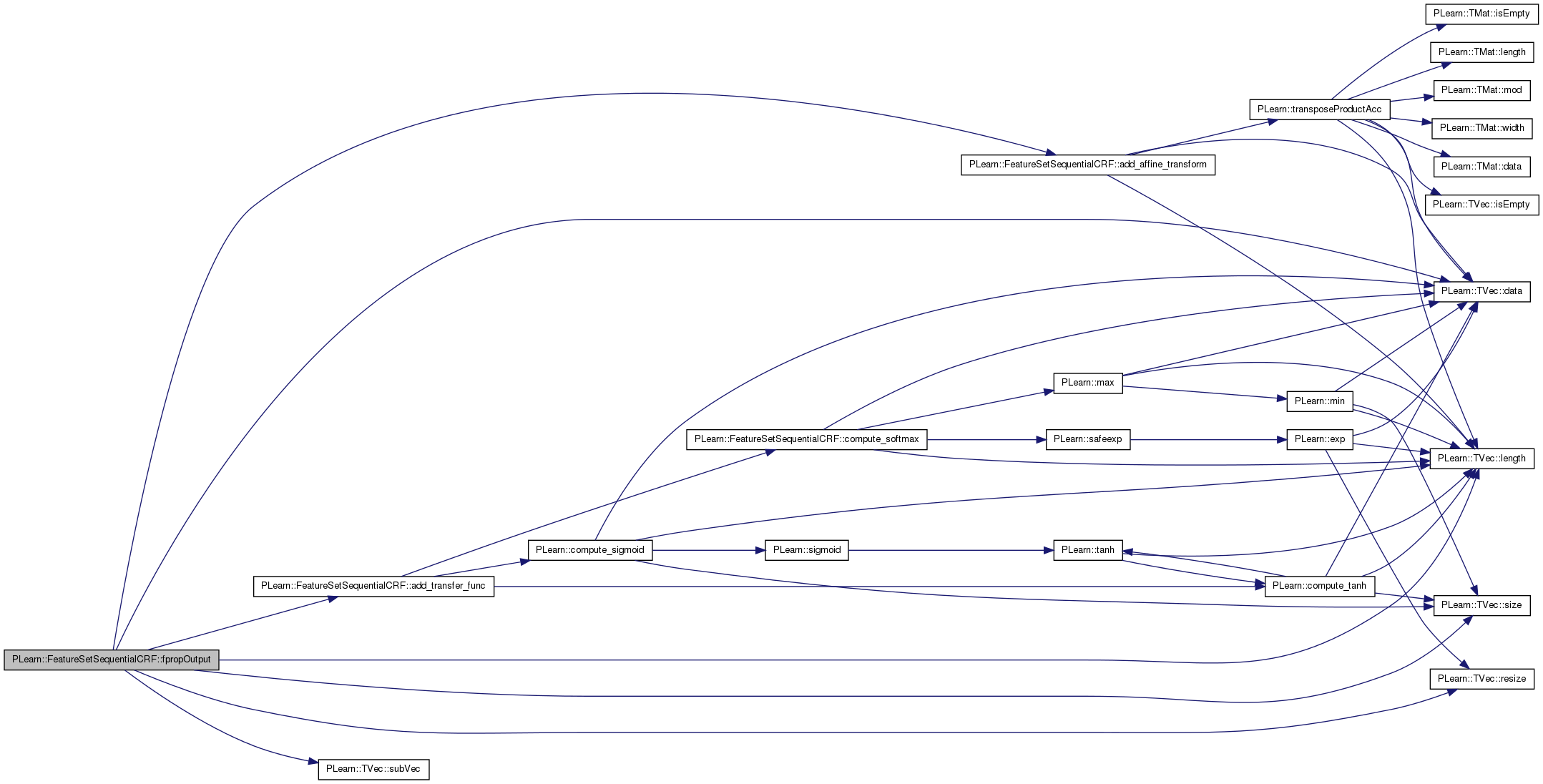

Forward propagation to compute the output.

Definition at line 358 of file FeatureSetSequentialCRF.cc.

References add_affine_transform(), add_transfer_func(), b1, b2, bout, bout_dist_rep, PLearn::TVec< T >::data(), direct_bout, direct_in_to_out, direct_wout, dist_rep_dim, f, feat_input, feat_sets, feats, hidden2v, hiddenv, i, ifeats, PLearn::PLearner::inputsize_, j, last_layer, PLearn::TVec< T >::length(), n_feat_sets, nfeats, nhidden, nhidden2, ni, nj, nnet_input, offset, output_transfer_func, PLERROR, possible_targets_vary, PLearn::TVec< T >::resize(), row, PLearn::TVec< T >::size(), str, PLearn::TVec< T >::subVec(), target_values, target_values_reference_set, val_string_reference_set, w1, w2, wout, and wout_dist_rep.

Referenced by computeOutput(), and fprop().

{

// Get possible target values

if(possible_targets_vary)

{

row.subVec(0,inputsize_) << inputv;

target_values_reference_set->getValues(row,inputsize_,target_values);

outputv.resize(target_values.length());

}

// Get features

ni = inputsize_;

nfeats = 0;

for(int i=0; i<ni; i++)

{

str = val_string_reference_set->getValString(i,inputv[i]);

feat_sets[i%n_feat_sets]->getFeatures(str,feats[i]);

nfeats += feats[i].length();

}

feat_input.resize(nfeats);

offset = 0;

id = 0;

for(int i=0; i<ni; i++)

{

f = feats[i].data();

nj = feats[i].length();

for(int j=0; j<nj; j++)

feat_input[id++] = offset + *f++;

if(dist_rep_dim <= 0 || ((i+1) % n_feat_sets != 0))

offset += feat_sets[i % n_feat_sets]->size();

else

offset = 0;

}

// Fprop to output

if(dist_rep_dim > 0) // x -> d(x)

{

nfeats = 0;

id = 0;

for(int i=0; i<inputsize_;)

{

ifeats = 0;

for(int j=0; j<n_feat_sets; j++,i++)

ifeats += feats[i].length();

add_affine_transform(feat_input.subVec(nfeats,ifeats),

wout_dist_rep, bout_dist_rep,

nnet_input.subVec(id*dist_rep_dim,dist_rep_dim),

true, false);

nfeats += ifeats;

id++;

}

if(nhidden>0) // d(x) -> h1(d(x))

{

add_affine_transform(nnet_input,w1,b1,hiddenv,false,false);

add_transfer_func(hiddenv);

if(nhidden2>0) // h1(d(x)) -> h2(h1(d(x)))

{

add_affine_transform(hiddenv,w2,b2,hidden2v,false,false);

add_transfer_func(hidden2v);

last_layer = hidden2v;

}

else

last_layer = hiddenv;

}

else

last_layer = nnet_input;

// d(x),h1(d(x)),h2(h1(d(x))) -> o(x)

add_affine_transform(last_layer,wout,bout,outputv,false,

possible_targets_vary,target_values);

if(direct_in_to_out && nhidden>0)

add_affine_transform(nnet_input,direct_wout,direct_bout,

outputv,false,possible_targets_vary,target_values);

}

else

{

if(nhidden>0) // x -> h1(x)

{

add_affine_transform(feat_input,w1,b1,hiddenv,true,false);

// Transfer function

add_transfer_func(hiddenv);

if(nhidden2>0) // h1(x) -> h2(h1(x))

{

add_affine_transform(hiddenv,w2,b2,hidden2v,true,false);

add_transfer_func(hidden2v);

last_layer = hidden2v;

}

else

last_layer = hiddenv;

}

else

last_layer = feat_input;

// x, h1(x),h2(h1(x)) -> o(x)

add_affine_transform(last_layer,wout,bout,outputv,nhidden<=0,

possible_targets_vary,target_values);

if(direct_in_to_out && nhidden>0)

add_affine_transform(feat_input,direct_wout,direct_bout,

outputv,true,possible_targets_vary,target_values);

}

if (nhidden2>0 && nhidden<=0)

PLERROR("FeatureSetSequentialCRF::fprop(): can't have nhidden2 (=%d) > 0 while nhidden=0",nhidden2);

if(output_transfer_func!="" && output_transfer_func!="none")

add_transfer_func(outputv, output_transfer_func);

}

| OptionList & PLearn::FeatureSetSequentialCRF::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| OptionMap & PLearn::FeatureSetSequentialCRF::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| RemoteMethodMap & PLearn::FeatureSetSequentialCRF::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file FeatureSetSequentialCRF.cc.

| TVec< string > PLearn::FeatureSetSequentialCRF::getTestCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the costs computed by computeCostsFromOutputs.

Implements PLearn::PLearner.

Definition at line 913 of file FeatureSetSequentialCRF.cc.

References cost_funcs.

{

return cost_funcs;

}

| TVec< string > PLearn::FeatureSetSequentialCRF::getTrainCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 905 of file FeatureSetSequentialCRF.cc.

References cost_funcs.

Referenced by verify_gradient().

{

return cost_funcs;

}

| void PLearn::FeatureSetSequentialCRF::gradient_affine_transform | ( | Vec | input, |

| Mat | weights, | ||

| Vec | bias, | ||

| Vec | ginput, | ||

| Mat | gweights, | ||

| Vec | gbias, | ||

| Vec | goutput, | ||

| bool | input_is_sparse, | ||

| bool | output_is_sparse, | ||

| real | learning_rate, | ||

| real | weight_decay, | ||

| real | bias_decay, | ||

| Vec | output_indices = Vec(0) |

||

| ) | [protected] |

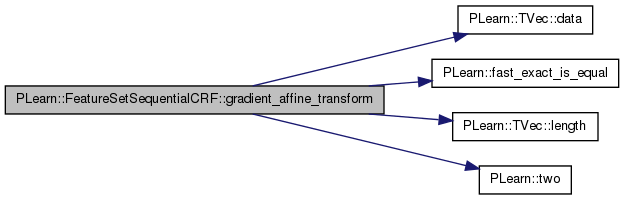

Propagate gradient through affine transform on input using provided weights and bias.

Information about the nature of the input and output needs to be provided. If bias.length() == 0, then no backprop is made to bias.

Definition at line 1112 of file FeatureSetSequentialCRF.cc.

References bias_decay, PLearn::TVec< T >::data(), PLearn::fast_exact_is_equal(), i, j, PLearn::TVec< T >::length(), ni, nj, penalty_type, pval1, pval2, pval3, pval4, pval5, PLearn::two(), val, val2, and weight_decay.

Referenced by bprop().

{

// Bias

if(bias.length() != 0)

{

if(output_is_sparse)

{

pval1 = gbias.data();

pval2 = goutput.data();

pval3 = output_indices.data();

ni = goutput.length();

if(fast_exact_is_equal(bias_decay, 0))

{

// Without bias decay

for(int i=0; i<ni; i++)

pval1[(int)*pval3++] += *pval2++;

}

else

{

// With bias decay

if(penalty_type == "L2_square")

{

pval4 = bias.data();

val = -two(learning_rate)*bias_decay;

for(int i=0; i<ni; i++)

{

pval1[(int)*pval3] += *pval2++ + val*(pval4[(int)*pval3]);

pval3++;

}

}

else if(penalty_type == "L1")

{

pval4 = bias.data();

val = -learning_rate*bias_decay;

for(int i=0; i<ni; i++)

{

val2 = pval4[(int)*pval3];

if(val2 > 0 )

pval1[(int)*pval3] += *pval2 + val;

else if(val2 < 0)

pval1[(int)*pval3] += *pval2 - val;

pval2++;

pval3++;

}

}

}

}

else

{

pval1 = gbias.data();

pval2 = goutput.data();

ni = goutput.length();

if(fast_exact_is_equal(bias_decay, 0))

{

// Without bias decay

for(int i=0; i<ni; i++)

*pval1++ += *pval2++;

}

else

{

// With bias decay

if(penalty_type == "L2_square")

{

pval3 = bias.data();

val = -two(learning_rate)*bias_decay;

for(int i=0; i<ni; i++)

{

*pval1++ += *pval2++ + val * (*pval3++);

}

}

else if(penalty_type == "L1")

{

pval3 = bias.data();

val = -learning_rate*bias_decay;

for(int i=0; i<ni; i++)

{

if(*pval3 > 0)

*pval1 += *pval2 + val;

else if(*pval3 < 0)

*pval1 += *pval2 - val;

pval1++;

pval2++;

pval3++;

}

}

}

}

}

// Weights and input (when appropriate)

if(!input_is_sparse && !output_is_sparse)

{

// Input

//productAcc(ginput, weights, goutput);

// Weights

//externalProductAcc(gweights, input, goutput);

// Faster code to do this, which limits the accesses

// to memory

ni = input.length();

nj = goutput.length();

pval3 = ginput.data();

pval5 = input.data();

if(fast_exact_is_equal(weight_decay, 0))

{

// Without weight decay

for(int i=0; i<ni; i++) {

pval1 = goutput.data();

pval2 = weights[i];

pval4 = gweights[i];

for(int j=0; j<nj; j++) {

*pval3 += *pval2 * (*pval1);

*pval4 += *pval5 * (*pval1);

pval1++;

pval2++;

pval4++;

}

pval3++;

pval5++;

}

}

else

{

//With weight decay

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++) {

pval1 = goutput.data();

pval2 = weights[i];

pval4 = gweights[i];

for(int j=0; j<nj; j++) {

*pval3 += *pval2 * (*pval1);

*pval4 += *pval5 * (*pval1) + val * (*pval2);

pval1++;

pval2++;

pval4++;

}

pval3++;

pval5++;

}

}

else if(penalty_type == "L1")

{

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++) {

pval1 = goutput.data();

pval2 = weights[i];

pval4 = gweights[i];

for(int j=0; j<nj; j++) {

*pval3 += *pval2 * (*pval1);

if(*pval2 > 0)

*pval4 += *pval5 * (*pval1) + val;

else if(*pval2 < 0)

*pval4 += *pval5 * (*pval1) - val;

pval1++;

pval2++;

pval4++;

}

pval3++;

pval5++;

}

}

}

}

else if(!input_is_sparse && output_is_sparse)

{

ni = goutput.length();

nj = input.length();

pval1 = goutput.data();

pval3 = output_indices.data();

if(fast_exact_is_equal(weight_decay, 0))

{

// Without weight decay

for(int i=0; i<ni; i++)

{

pval2 = input.data();

pval4 = ginput.data();

for(int j=0; j<nj; j++)

{

// Input

*pval4++ += weights(j,(int)(*pval3))*(*pval1);

// Weights

gweights(j,(int)(*pval3)) += (*pval2++)*(*pval1);

}

pval1++;

pval3++;

}

}

else

{

// With weight decay

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++)

{

pval2 = input.data();

pval4 = ginput.data();

for(int j=0; j<nj; j++)

{

val2 = weights(j,(int)(*pval3));

// Input

*pval4++ += val2*(*pval1);

// Weights

gweights(j,(int)(*pval3)) += (*pval2++)*(*pval1) + val*val2;

}

pval1++;

pval3++;

}

}

else if(penalty_type == "L1")

{

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++)

{

pval2 = input.data();

pval4 = ginput.data();

for(int j=0; j<nj; j++)

{

val2 = weights(j,(int)(*pval3));

// Input

*pval4++ += val2*(*pval1);

// Weights

if(val2 > 0)

gweights(j,(int)(*pval3)) += (*pval2)*(*pval1) + val;

else if(val2 < 0)

gweights(j,(int)(*pval3)) += (*pval2)*(*pval1) - val;

pval2++;

}

pval1++;

pval3++;

}

}

}

}

else if(input_is_sparse && !output_is_sparse)

{

ni = input.length();

nj = goutput.length();

if(fast_exact_is_equal(weight_decay, 0))

{

// Without weight decay

if(ni != 0)

{

pval3 = input.data();

for(int i=0; i<ni; i++)

{

pval1 = goutput.data();

pval2 = gweights[(int)(*pval3++)];

for(int j=0; j<nj;j++)

*pval2++ += *pval1++;

}

}

}

else

{

// With weight decay

if(penalty_type == "L2_square")

{

if(ni != 0)

{

pval3 = input.data();

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++)

{

pval1 = goutput.data();

pval2 = gweights[(int)(*pval3)];

pval4 = weights[(int)(*pval3++)];

for(int j=0; j<nj;j++)

{

*pval2++ += *pval1++ + val * (*pval4++);

}

}

}

}

else if(penalty_type == "L1")

{

if(ni != 0)

{

pval3 = input.data();

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++)

{

pval1 = goutput.data();

pval2 = gweights[(int)(*pval3)];

pval4 = weights[(int)(*pval3++)];

for(int j=0; j<nj;j++)

{

if(*pval4 > 0)

*pval2 += *pval1 + val;

else if(*pval4 < 0)

*pval2 += *pval1 - val;

pval1++;

pval2++;

pval4++;

}

}

}

}

}

}

else if(input_is_sparse && output_is_sparse)

{

ni = input.length();

nj = goutput.length();

if(fast_exact_is_equal(weight_decay, 0))

{

// Without weight decay

if(ni != 0)

{

pval2 = input.data();

for(int i=0; i<ni; i++)

{

pval1 = goutput.data();

pval3 = output_indices.data();

for(int j=0; j<nj; j++)

gweights((int)(*pval2),(int)*pval3++) += *pval1++;

pval2++;

}

}

}

else

{

// With weight decay

if(penalty_type == "L2_square")

{

if(ni != 0)

{

pval2 = input.data();

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++)

{

pval1 = goutput.data();

pval3 = output_indices.data();

for(int j=0; j<nj; j++)

{

gweights((int)(*pval2),(int)*pval3)

+= *pval1++

+ val * weights((int)(*pval2),(int)*pval3);

pval3++;

}

pval2++;

}

}

}

else if(penalty_type == "L1")

{

if(ni != 0)

{

pval2 = input.data();

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++)

{

pval1 = goutput.data();

pval3 = output_indices.data();

for(int j=0; j<nj; j++)

{

val2 = weights((int)(*pval2),(int)*pval3);

if(val2 > 0)

gweights((int)(*pval2),(int)*pval3)

+= *pval1 + val;

else if(val2 < 0)

gweights((int)(*pval2),(int)*pval3)

+= *pval1 - val;

pval1++;

pval3++;

}

pval2++;

}

}

}

}

}

// gradient_penalty(input,weights,bias,gweights,gbias,input_is_sparse,output_is_sparse,

// learning_rate,weight_decay,bias_decay,output_indices);

}

| void PLearn::FeatureSetSequentialCRF::gradient_penalty | ( | Vec | input, |

| Mat | weights, | ||

| Vec | bias, | ||

| Mat | gweights, | ||

| Vec | gbias, | ||

| bool | input_is_sparse, | ||

| bool | output_is_sparse, | ||

| real | learning_rate, | ||

| real | weight_decay, | ||

| real | bias_decay, | ||

| Vec | output_indices = Vec(0) |

||

| ) | [protected] |

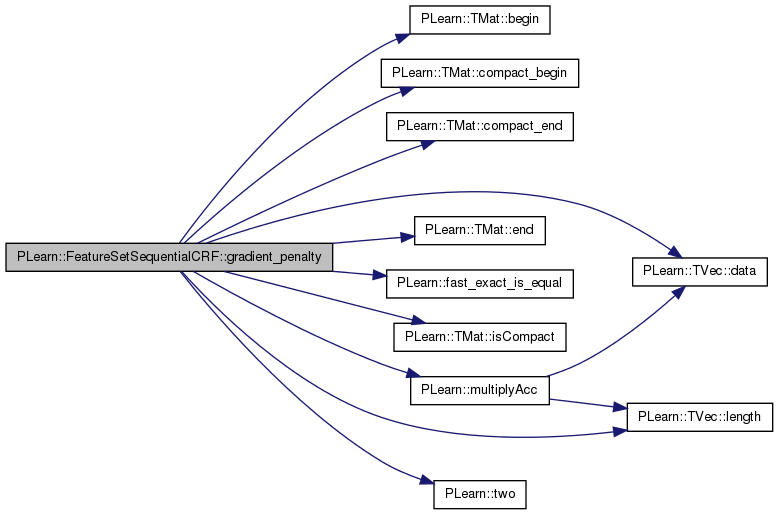

Propagate penalty gradient through weights and bias, scaled by -learning rate.

Definition at line 1505 of file FeatureSetSequentialCRF.cc.

References PLearn::TMat< T >::begin(), bias_decay, PLearn::TMat< T >::compact_begin(), PLearn::TMat< T >::compact_end(), PLearn::TVec< T >::data(), PLearn::TMat< T >::end(), PLearn::fast_exact_is_equal(), i, PLearn::TMat< T >::isCompact(), j, PLearn::TVec< T >::length(), PLearn::multiplyAcc(), ni, nj, penalty_type, pval1, pval2, pval3, PLearn::two(), val, val2, and weight_decay.

{

// Bias

if(!fast_exact_is_equal(bias_decay, 0) && !fast_exact_is_equal(bias.length(), 0) )

{

if(output_is_sparse)

{

pval1 = gbias.data();

pval2 = bias.data();

pval3 = output_indices.data();

ni = output_indices.length();

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*bias_decay;

for(int i=0; i<ni; i++)

{

pval1[(int)*pval3] += val*(pval2[(int)*pval3]);

pval3++;

}

}

else if(penalty_type == "L1")

{

val = -learning_rate*bias_decay;

for(int i=0; i<ni; i++)

{

val2 = pval2[(int)*pval3];

if(val2 > 0 )

pval1[(int)*pval3] += val;

else if(val2 < 0)

pval1[(int)*pval3] -= val;

pval3++;

}

}

}

else

{

pval1 = gbias.data();

pval2 = bias.data();

ni = bias.length();

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*bias_decay;

for(int i=0; i<ni; i++)

*pval1++ += val*(*pval2++);

}

else if(penalty_type == "L1")

{

val = -learning_rate*bias_decay;

for(int i=0; i<ni; i++)

{

if(*pval2 > 0)

*pval1 += val;

else if(*pval2 < 0)

*pval1 -= val;

pval1++;

pval2++;

}

}

}

}

// Weights

if(!fast_exact_is_equal(weight_decay, 0))

{

if(!input_is_sparse && !output_is_sparse)

{

if(penalty_type == "L2_square")

{

multiplyAcc(gweights, weights,-two(learning_rate)*weight_decay);

}

else if(penalty_type == "L1")

{

val = -learning_rate*weight_decay;

if(gweights.isCompact() && weights.isCompact())

{

Mat::compact_iterator itm = gweights.compact_begin();

Mat::compact_iterator itmend = gweights.compact_end();

Mat::compact_iterator itx = weights.compact_begin();

for(; itm!=itmend; ++itm, ++itx)

{

if(*itx > 0)

*itm += val;

else if(*itx < 0)

*itm -= val;

}

}

else // use non-compact iterators

{

Mat::iterator itm = gweights.begin();

Mat::iterator itmend = gweights.end();

Mat::iterator itx = weights.begin();

for(; itm!=itmend; ++itm, ++itx)

{

if(*itx > 0)

*itm += val;

else if(*itx < 0)

*itm -= val;

}

}

}

}

else if(!input_is_sparse && output_is_sparse)

{

ni = output_indices.length();

nj = input.length();

pval1 = output_indices.data();

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++)

{

for(int j=0; j<nj; j++)

{

gweights(j,(int)(*pval1)) += val * weights(j,(int)(*pval1));

}

pval1++;

}

}

else if(penalty_type == "L1")

{

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++)

{

for(int j=0; j<nj; j++)

{

val2 = weights(j,(int)(*pval1));

if(val2 > 0)

gweights(j,(int)(*pval1)) += val;

else if(val2 < 0)

gweights(j,(int)(*pval1)) -= val;

}

pval1++;

}

}

}

else if(input_is_sparse && !output_is_sparse)

{

ni = input.length();

nj = output_indices.length();

if(ni != 0)

{

pval3 = input.data();

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++)

{

pval1 = weights[(int)(*pval3)];

pval2 = gweights[(int)(*pval3++)];

for(int j=0; j<nj;j++)

*pval2++ += val * *pval1++;

}

}

else if(penalty_type == "L1")

{

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++)

{

pval1 = weights[(int)(*pval3)];

pval2 = gweights[(int)(*pval3++)];

for(int j=0; j<nj;j++)

{

if(*pval1 > 0)

*pval2 += val;

else if(*pval1 < 0)

*pval2 -= val;

pval2++;

pval1++;

}

}

}

}

}

else if(input_is_sparse && output_is_sparse)

{

ni = input.length();

nj = output_indices.length();

if(ni != 0)

{

pval1 = input.data();

if(penalty_type == "L2_square")

{

val = -two(learning_rate)*weight_decay;

for(int i=0; i<ni; i++)

{

pval2 = output_indices.data();

for(int j=0; j<nj; j++)

{

gweights((int)(*pval1),(int)*pval2) += val*weights((int)(*pval1),(int)*pval2);

pval2++;

}

pval1++;

}

}

else if(penalty_type == "L1")

{

val = -learning_rate*weight_decay;

for(int i=0; i<ni; i++)

{

pval2 = output_indices.data();

for(int j=0; j<nj; j++)

{

val2 = weights((int)(*pval1),(int)*pval2);

if(val2 > 0)

gweights((int)(*pval1),(int)*pval2) += val;

else if(val2 < 0)

gweights((int)(*pval1),(int)*pval2) -= val;

pval2++;

}

pval1++;

}

}

}

}

}

}
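The dense branches of the listing above reduce to one small accumulation rule per penalty type. The following is a minimal sketch of that rule on plain `std::vector`s, not PLearn code: `add_decay` is a hypothetical helper name, and it assumes that `two(x)` returns `2*x`. As in the listing, a parameter that is exactly zero receives no L1 contribution.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper (not part of PLearn): accumulates the decay term into
// `grad` for every entry of `params`, mirroring the dense branches above.
// Assumes PLearn's two(x) is equivalent to 2*x.
void add_decay(std::vector<double>& grad, const std::vector<double>& params,
               double learning_rate, double decay,
               const std::string& penalty_type)
{
    assert(grad.size() == params.size());
    if (penalty_type == "L2_square") {
        // Derivative of decay * w^2 is 2 * decay * w, scaled by -learning_rate.
        const double val = -2.0 * learning_rate * decay;
        for (std::size_t i = 0; i < params.size(); ++i)
            grad[i] += val * params[i];
    } else if (penalty_type == "L1") {
        // Derivative of decay * |w| is decay * sign(w); nothing is added at w == 0.
        const double val = -learning_rate * decay;
        for (std::size_t i = 0; i < params.size(); ++i) {
            if (params[i] > 0)
                grad[i] += val;
            else if (params[i] < 0)
                grad[i] -= val;
        }
    }
}
```

The sparse branches of the listing apply the same two rules, only restricted to the rows or columns selected by the input values and `output_indices`.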

| void PLearn::FeatureSetSequentialCRF::gradient_transfer_func | ( | Vec & output, Vec & gradient_input, Vec & gradient_output, string transfer_func = "default", int nll_softmax_speed_up_target = -1 | ) | [protected] |

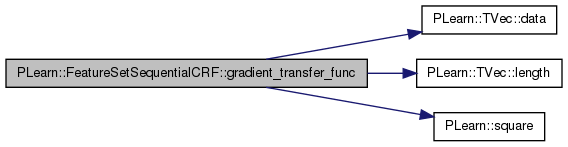

Computes the gradient through the given activation function, given the output value and the initial gradient on that output (i.e. before the activation function). After calling this function, gradient_act_output corresponds to the gradient after the activation function. nll_softmax_speed_up_target speeds up the gradient computation for the output layer when the softmax transfer function is used and the NLL cost function is applied; a negative value (the default, -1) selects the general softmax gradient.
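For reference, the per-element updates performed for each supported transfer function can be written out as follows. The notation is introduced here for illustration and is not part of the original documentation: $y$ stands for `output`, $g^{y}$ for `gradient_output`, and $g^{a}$ for `gradient_input`, which is accumulated into rather than overwritten.

```latex
\begin{aligned}
\text{linear:}\quad  & g^{a}_i \leftarrow g^{a}_i + g^{y}_i \\
\text{tanh:}\quad    & g^{a}_i \leftarrow g^{a}_i + g^{y}_i \,(1 - y_i^{2}) \\
\text{sigmoid:}\quad & g^{a}_i \leftarrow g^{a}_i + g^{y}_i \, y_i \,(1 - y_i) \\
\text{softmax:}\quad & g^{a}_i \leftarrow g^{a}_i + g^{y}_i \, y_i \,(1 - y_i) - \sum_{k \neq i} g^{y}_k \, y_i \, y_k
\end{aligned}
```

The softmax line is the general case, used when `nll_softmax_speed_up_target` is negative.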

Definition at line 948 of file FeatureSetSequentialCRF.cc.

References PLearn::TVec< T >::data(), grad, hidden_transfer_func, i, PLearn::TVec< T >::length(), ni, nk, PLERROR, pval1, pval2, pval3, PLearn::square(), and val.

Referenced by bprop().

{

if (transfer_func == "default")

transfer_func = hidden_transfer_func;

if(transfer_func=="linear")

{

pval1 = gradient_output.data();

pval2 = gradient_input.data();

ni = output.length();

for(int i=0; i<ni; i++)

*pval2++ += *pval1++;

return;

}

else if(transfer_func=="tanh")

{

pval1 = gradient_output.data();

pval2 = output.data();

pval3 = gradient_input.data();

ni = output.length();

for(int i=0; i<ni; i++)

*pval3++ += (*pval1++)*(1.0-square(*pval2++));

return;

}

else if(transfer_func=="sigmoid")

{

pval1 = gradient_output.data();

pval2 = output.data();

pval3 = gradient_input.data();

ni = output.length();

for(int i=0; i<ni; i++)

{

*pval3++ += (*pval1++)*(*pval2)*(1.0-*pval2);

pval2++;

}

return;

}

else if(transfer_func=="softmax")

{

if(nll_softmax_speed_up_target<0)

{

pval3 = gradient_input.data();

ni = nk = output.length();

for(int i=0; i<ni; i++)

{

val = output[i];

pval1 = gradient_output.data();

pval2 = output.data();

for(int k=0; k<nk; k++)

if(k!=i)

*pval3 -= *pval1++ * val * (*pval2++);

else

{

*pval3 += *pval1++ * val * (1.0-val);

pval2++;

}

pval3++;

}

}

else // Permits speedup and avoids numerical precision errors

{

pval2 = output.data();

pval3 = gradient_input.data();

ni = output.length();

grad = gradient_output[nll_softmax_speed_up_target];

val = output[nll_softmax_speed_up_target];

for(int i=0; i<ni; i++)

{

if(nll_softmax_speed_up_target!=i)

//*pval3++ -= grad * val * (*pval2++);

*pval3++ -= grad * (*pval2++);

else

{

//*pval3++ += grad * val * (1.0-val);

*pval3++ += grad * (1.0-val);

pval2++;

}

}

}

return;

}

else PLERROR("In FeatureSetSequentialCRF::gradient_transfer_func(): Unknown value for transfer_func: %s",transfer_func.c_str());

}
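The two softmax branches above can be compared on a small example. The sketch below is not PLearn code: `Vec` is a stand-in alias for `std::vector<double>`, and both function names are hypothetical. It also relies on an assumption that the listing does not confirm: that the caller of the shortcut branch passes a gradient at the target index that is already multiplied by the target's output (for the NLL cost, -1 instead of -1/output[target]), which would explain why the factor `val` is commented out there.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // stand-in for PLearn's Vec

// General softmax backward pass, as in the branch taken when
// nll_softmax_speed_up_target < 0: accumulates into grad_in.
void softmax_bprop_full(const Vec& y, const Vec& grad_out, Vec& grad_in)
{
    for (std::size_t i = 0; i < y.size(); ++i)
        for (std::size_t k = 0; k < y.size(); ++k) {
            if (k != i)
                grad_in[i] -= grad_out[k] * y[i] * y[k];
            else
                grad_in[i] += grad_out[k] * y[i] * (1.0 - y[i]);
        }
}

// Shortcut branch: only the gradient at `target` is used, and the factor
// y[target] is omitted (assumed to be folded into `grad` by the caller).
void softmax_bprop_nll(const Vec& y, double grad, std::size_t target, Vec& grad_in)
{
    for (std::size_t i = 0; i < y.size(); ++i) {
        if (i != target)
            grad_in[i] -= grad * y[i];
        else
            grad_in[i] += grad * (1.0 - y[target]);
    }
}
```

Under that assumption, calling `softmax_bprop_full` with a gradient of -1/y[target] at the target (zero elsewhere) and `softmax_bprop_nll` with `grad = -1` yields the same result, namely y minus the one-hot target vector. The shortcut needs a single pass over the outputs and never divides by y[target].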

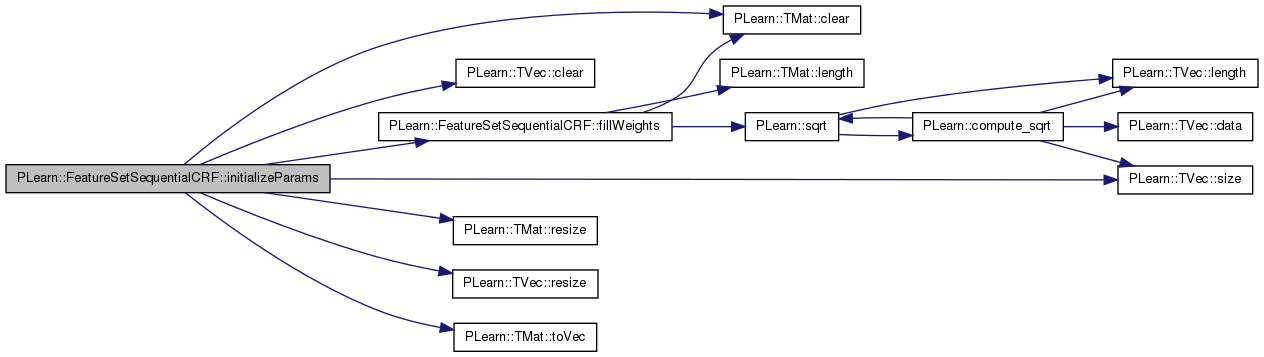

| void PLearn::FeatureSetSequentialCRF::initializeParams | ( | bool | set_seed = true | ) | [protected, virtual] |

Initialize the parameters.

If 'set_seed' is false, the seed is not set in this method (it is assumed to have been initialized already according to the 'seed' option). If 'set_seed' is true, the random generator is seeded only when the seed is non-negative. The index of the extra task (-1 if main task) also needs to be provided.

Definition at line 1766 of file FeatureSetSequentialCRF.cc.

References b1, b2, bout, bout_dist_rep, PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), direct_bout, direct_in_to_out, direct_wout, dist_rep_dim, feat_input, feat_sets, fillWeights(), fixed_output_weights, gradient_act_hidden2v, gradient_act_hiddenv, gradient_act_outputv, gradient_b1, gradient_b2, gradient_bout, gradient_bout_dist_rep, gradient_direct_bout, gradient_direct_wout, gradient_hidden2v, gradient_hiddenv, gradient_nnet_input, gradient_outputv, gradient_w1, gradient_w2, gradient_wout, gradient_wout_dist_rep, hidden2v, hiddenv, i, PLearn::PLearner::inputsize_, n_feat_sets, nhidden, nhidden2, nnet_input, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), rgen, PLearn::PLearner::seed_, PLearn::TVec< T >::size(), total_feats_per_token, total_output_size, PLearn::TMat< T >::toVec(), PLearn::PLearner::train_set, w1, w2, wout, and wout_dist_rep.

Referenced by build_().

{

if (set_seed) {

if (seed_>=0)

rgen->manual_seed(seed_);

}

PP<Dictionary> dict = train_set->getDictionary(inputsize_);

total_output_size = dict->size();

total_feats_per_token = 0;

for(int i=0; i<n_feat_sets; i++)

total_feats_per_token += feat_sets[i]->size();

int nnet_inputsize;

if(dist_rep_dim > 0)

{

wout_dist_rep.resize(total_feats_per_token,dist_rep_dim);

bout_dist_rep.resize(dist_rep_dim);

nnet_inputsize = dist_rep_dim*inputsize_/n_feat_sets;

nnet_input.resize(nnet_inputsize);

fillWeights(wout_dist_rep);

bout_dist_rep.clear();