PLearn 0.1

Multi-layer neural network based on matrix-matrix multiplications.

#include <mNNet.h>

Public Member Functions

| mNNet () | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which may depend on its inputsize(), targetsize() and the options set). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

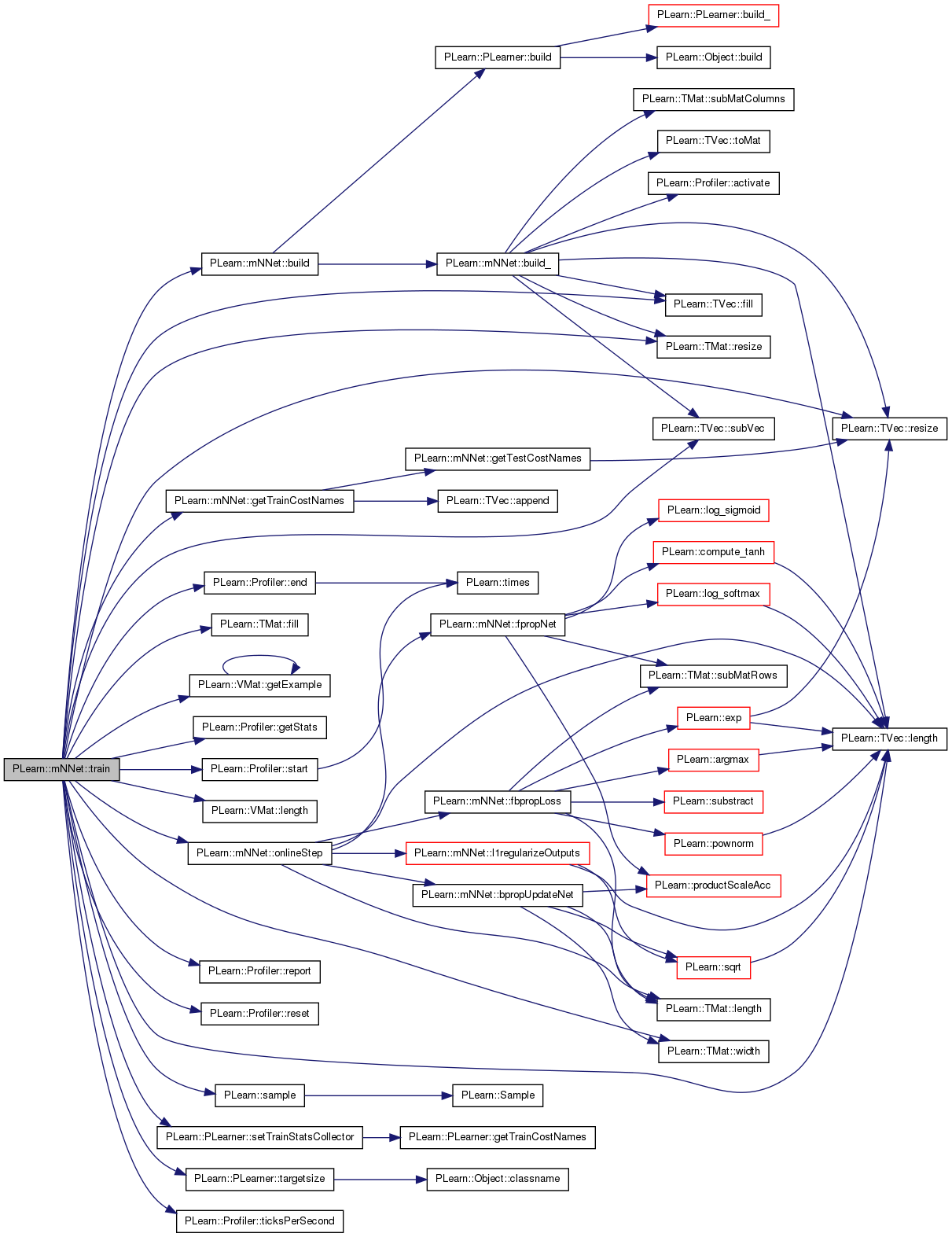

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeOutputs (const Mat &input, Mat &output) const |

| If it is more efficient to compute multiple outputs simultaneously, it can be advantageous to define this method instead of computeOutput; each row of the matrices is associated with one example. | |

| virtual void | computeOutputsAndCosts (const Mat &input, const Mat &target, Mat &output, Mat &costs) const |

| minibatch version of computeOutputAndCosts | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

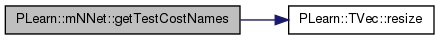

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutpus (and thus the test method). | |

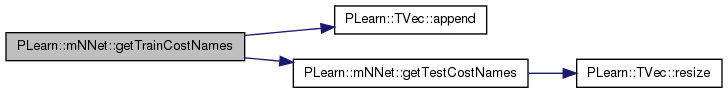

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual mNNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes

| int | noutputs |

| number of outputs of the network | |

| TVec< int > | hidden_layer_sizes |

| sizes of hidden layers | |

| real | init_lrate |

| initial learning rate | |

| real | lrate_decay |

| learning rate decay factor | |

| int | minibatch_size |

| number of examples per minibatch; the parameters are updated once per minibatch | |

| string | output_type |

| type of output cost: "NLL" for classification problems, "cross_entropy" for binary classification, "MSE" for regression | |

| real | output_layer_L1_penalty_factor |

| L1 penalty applied to the output layer's parameters. | |

Static Public Attributes

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

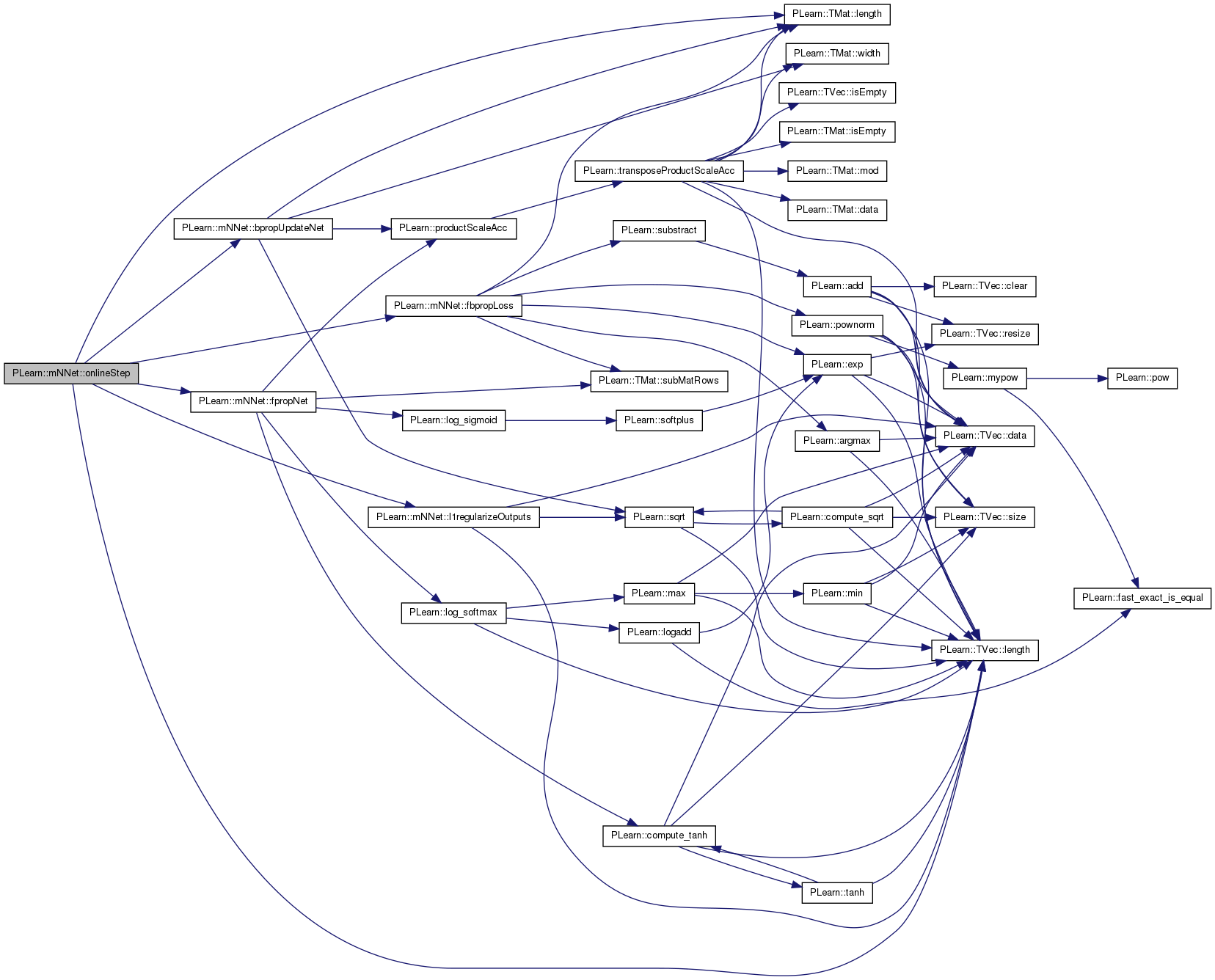

| virtual void | onlineStep (int t, const Mat &targets, Mat &train_costs, Vec example_weights) |

| One minibatch training step. | |

| virtual void | fpropNet (int n_examples) const |

| Computes the network's top-layer output for a minibatch of n_examples, given the layer-0 output (= network input); for output_type=NLL (log_softmax) or cross_entropy (log_sigmoid) the outputs are log-probabilities. | |

| virtual void | fbpropLoss (const Mat &output, const Mat &target, const Vec &example_weights, Mat &train_costs) const |

| Computes the training costs given the network's top-layer output and writes into neuron_gradients_per_layer[n_layers-1] the gradient on the output layer's pre-non-linearity activation. | |

| virtual void | bpropUpdateNet (const int t) |

| Performs the backprop update. | |

| virtual void | bpropNet (const int t) |

| Computes the gradients without doing the update. | |

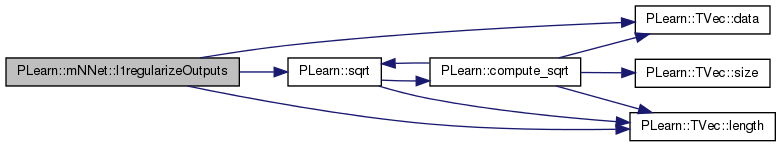

| void | l1regularizeOutputs () |

Static Protected Member Functions

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes

| int | n_layers |

| number of layers of weights plus 1 (3 for a neural net with one hidden layer) | |

| TVec< int > | layer_sizes |

| layer sizes (derived from hidden_layer_sizes, inputsize_ and noutputs) | |

| Vec | all_params |

| All the parameters in one vector. | |

| TVec< Mat > | biases |

| Alternate access to the params - one matrix per layer. | |

| TVec< Mat > | weights |

| TVec< Mat > | layer_params |

| Alternate access to the params - one matrix of dimension layer_sizes[i+1] x (layer_sizes[i]+1) per layer (neuron biases in the first column) | |

| Vec | all_params_gradient |

| Gradient structures - reflect the parameter structures. | |

| TVec< Mat > | layer_params_gradient |

| Mat | neuron_gradients |

| Gradients on the neurons - same structure as for outputs. | |

| TVec< Mat > | neuron_gradients_per_layer |

| Mat | neuron_extended_outputs |

| Outputs of the neurons (each layer preceded by a constant-1 column used for the biases). | |

| TVec< Mat > | neuron_extended_outputs_per_layer |

| TVec< Mat > | neuron_outputs_per_layer |

| Mat | targets |

| Vec | example_weights |

| Mat | train_costs |

| real | cumulative_training_time |

| Cumulative training time in seconds, reported as an additional cost. | |

Private Types

| typedef PLearner | inherited |

Private Member Functions

| void | build_ () |

| This does the actual building. | |

Multi-layer neural network based on matrix-matrix multiplications.

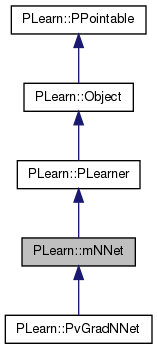

| typedef PLearner PLearn::mNNet::inherited | [private] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

| PLearn::mNNet::mNNet | ( | ) |

Definition at line 51 of file mNNet.cc.

References PLearn::PLearner::random_gen.

: noutputs(-1), init_lrate(0.0), lrate_decay(0.0), minibatch_size(1), output_type("NLL"), output_layer_L1_penalty_factor(0.0), n_layers(-1), cumulative_training_time(0.0) { random_gen = new PRandom(); }

| string PLearn::mNNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

| OptionList & PLearn::mNNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

| RemoteMethodMap & PLearn::mNNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

| bool PLearn::mNNet::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

| Object * PLearn::mNNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PvGradNNet.

| void PLearn::mNNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

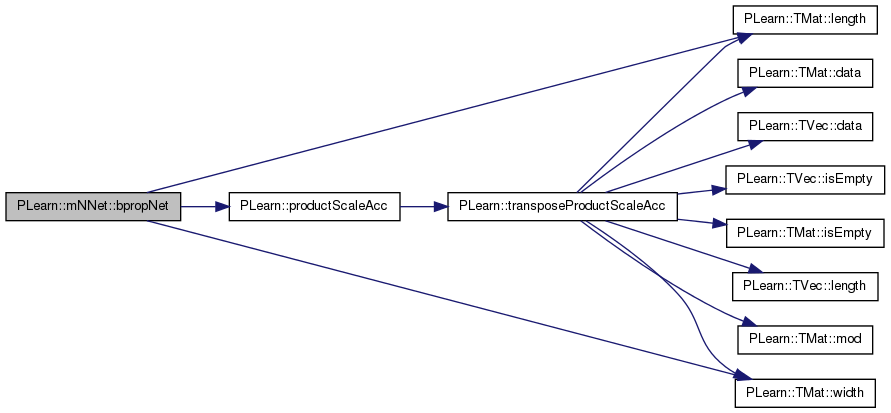

| void PLearn::mNNet::bpropNet | ( | const int | t | ) | [protected, virtual] |

Computes the gradients without doing the update.

Must be called after fpropNet and fbpropLoss.

Definition at line 488 of file mNNet.cc.

References grad, i, j, layer_params_gradient, PLearn::TMat< T >::length(), n_layers, neuron_extended_outputs_per_layer, neuron_gradients_per_layer, neuron_outputs_per_layer, PLearn::productScaleAcc(), weights, and PLearn::TMat< T >::width().

Referenced by PLearn::PvGradNNet::bpropUpdateNet().

{
    for (int i=n_layers-1;i>0;i--) {
        // here neuron_gradients_per_layer[i] contains the gradient on
        // activations (weighted sums)
        // (minibatch_size x layer_sizes[i])
        Mat previous_neurons_gradient = neuron_gradients_per_layer[i-1];
        Mat next_neurons_gradient = neuron_gradients_per_layer[i];
        Mat previous_neurons_output = neuron_outputs_per_layer[i-1];
        if (i>1) // if not first hidden layer then compute gradient on previous layer
        {
            // propagate gradients
            productScaleAcc(previous_neurons_gradient,next_neurons_gradient,false,
                            weights[i-1],false,1,0);
            // propagate through tanh non-linearity
            // TODO IN NEED OF OPTIMIZATION
            for (int j=0;j<previous_neurons_gradient.length();j++) {
                real* grad = previous_neurons_gradient[j];
                real* out = previous_neurons_output[j];
                for (int k=0;k<previous_neurons_gradient.width();k++,out++)
                    grad[k] *= (1 - *out * *out); // gradient through tanh derivative
            }
        }
        // compute gradient on parameters
        productScaleAcc(layer_params_gradient[i-1],next_neurons_gradient,true,
                        neuron_extended_outputs_per_layer[i-1],false,
                        1,0);
    }
}
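The following standalone sketch illustrates what one iteration of the loop in bpropNet computes for a single layer. The gradient is pushed back through the weight matrix and multiplied elementwise by the tanh derivative 1 - y^2. The parameter gradient is then the transposed activation gradient multiplied by the extended (bias-augmented) previous-layer outputs. The Matrix struct and the names backpropThroughTanh and paramGradient are hypothetical illustrations on plain std::vector storage. They are not PLearn's Mat or productScaleAcc.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal row-major matrix used only for this sketch (not PLearn's Mat).
struct Matrix {
    std::size_t rows, cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& operator()(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double operator()(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Propagate the activation gradient of layer i to layer i-1:
//   prev_grad = next_grad * W          (minibatch x n_prev)
//   prev_grad *= (1 - prev_out^2)      (elementwise tanh derivative)
void backpropThroughTanh(const Matrix& next_grad,  // minibatch x n_next
                         const Matrix& W,          // n_next x n_prev (no bias column)
                         const Matrix& prev_out,   // minibatch x n_prev, tanh outputs
                         Matrix& prev_grad) {      // minibatch x n_prev
    for (std::size_t b = 0; b < next_grad.rows; ++b)
        for (std::size_t p = 0; p < W.cols; ++p) {
            double s = 0.0;
            for (std::size_t n = 0; n < W.rows; ++n)
                s += next_grad(b, n) * W(n, p);
            double y = prev_out(b, p);
            prev_grad(b, p) = s * (1.0 - y * y);
        }
}

// Gradient on the layer parameters, summed over the minibatch:
//   param_grad = next_grad^T * prev_ext_out
// prev_ext_out has a leading column of 1s, so column 0 of param_grad
// is the bias gradient.
void paramGradient(const Matrix& next_grad,     // minibatch x n_next
                   const Matrix& prev_ext_out,  // minibatch x (1 + n_prev)
                   Matrix& param_grad) {        // n_next x (1 + n_prev)
    for (std::size_t n = 0; n < param_grad.rows; ++n)
        for (std::size_t c = 0; c < param_grad.cols; ++c) {
            double s = 0.0;
            for (std::size_t b = 0; b < next_grad.rows; ++b)
                s += next_grad(b, n) * prev_ext_out(b, c);
            param_grad(n, c) = s;
        }
}
```

Take a single example with next_grad = [2], W = [0.5, -1] and previous tanh outputs [0, 0.5]. The propagated gradient is [1, -1.5].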

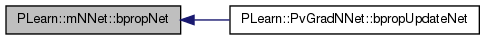

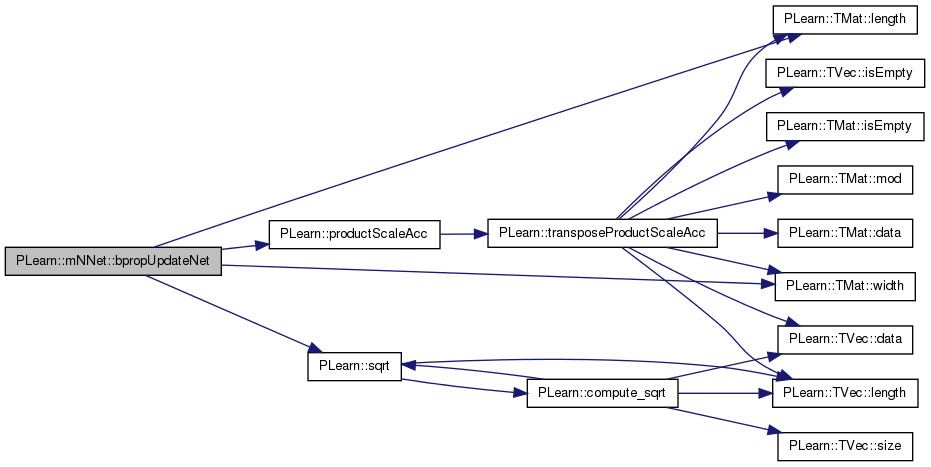

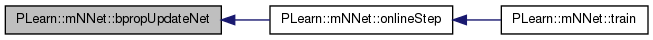

| void PLearn::mNNet::bpropUpdateNet | ( | const int | t | ) | [protected, virtual] |

Performs the backprop update.

Must be called after fpropNet and fbpropLoss.

Reimplemented in PLearn::PvGradNNet.

Definition at line 448 of file mNNet.cc.

References grad, i, init_lrate, j, layer_params, PLearn::TMat< T >::length(), lrate_decay, minibatch_size, n_layers, neuron_extended_outputs_per_layer, neuron_gradients_per_layer, neuron_outputs_per_layer, PLearn::productScaleAcc(), PLearn::sqrt(), weights, and PLearn::TMat< T >::width().

Referenced by onlineStep().

{
    // the mean gradient over minibatch_size examples has less variance,
    // so we can afford a larger learning rate (divide by sqrt(minibatch_size)
    // instead of minibatch_size)
    real lrate = init_lrate/(1 + t*lrate_decay);
    lrate /= sqrt(real(minibatch_size));
    for (int i=n_layers-1;i>0;i--) {
        // here neuron_gradients_per_layer[i] contains the gradient on
        // activations (weighted sums)
        // (minibatch_size x layer_sizes[i])
        Mat previous_neurons_gradient = neuron_gradients_per_layer[i-1];
        Mat next_neurons_gradient = neuron_gradients_per_layer[i];
        Mat previous_neurons_output = neuron_outputs_per_layer[i-1];
        if (i>1) // if not first hidden layer then compute gradient on previous layer
        {
            // propagate gradients
            productScaleAcc(previous_neurons_gradient,next_neurons_gradient,false,
                            weights[i-1],false,1,0);
            // propagate through tanh non-linearity
            // TODO IN NEED OF OPTIMIZATION
            for (int j=0;j<previous_neurons_gradient.length();j++) {
                real* grad = previous_neurons_gradient[j];
                real* out = previous_neurons_output[j];
                for (int k=0;k<previous_neurons_gradient.width();k++,out++)
                    grad[k] *= (1 - *out * *out); // gradient through tanh derivative
            }
        }
        // compute gradient on parameters and update them in one go (more
        // efficient)
        productScaleAcc(layer_params[i-1],next_neurons_gradient,true,
                        neuron_extended_outputs_per_layer[i-1],false,
                        -lrate,1);
    }
}
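The sketch below shows the step size and the fused update performed by bpropUpdateNet, assuming flat row-major std::vector<double> buffers. It reads productScaleAcc(C, A, true, B, false, alpha, beta) as C = beta*C + alpha*A^T*B, which is the interpretation consistent with how the listing uses it. effectiveLearningRate and fusedSgdUpdate are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Step size at step t, following bpropUpdateNet:
//   lrate = init_lrate / (1 + t * lrate_decay) / sqrt(minibatch_size)
double effectiveLearningRate(double init_lrate, double lrate_decay,
                             int t, int minibatch_size) {
    double lrate = init_lrate / (1.0 + t * lrate_decay);
    return lrate / std::sqrt(static_cast<double>(minibatch_size));
}

// Fused update (beta = 1, alpha = -lrate):
//   params += -lrate * next_grad^T * prev_ext
// params: n_next x n_in, next_grad: mb x n_next, prev_ext: mb x n_in.
void fusedSgdUpdate(std::vector<double>& params,
                    const std::vector<double>& next_grad,
                    const std::vector<double>& prev_ext,
                    int mb, int n_next, int n_in, double lrate) {
    for (int n = 0; n < n_next; ++n)
        for (int c = 0; c < n_in; ++c) {
            double g = 0.0;  // gradient entry, summed over the minibatch
            for (int b = 0; b < mb; ++b)
                g += next_grad[b * n_next + n] * prev_ext[b * n_in + c];
            params[n * n_in + c] -= lrate * g;
        }
}
```

bpropNet fills layer_params_gradient with beta = 0 and leaves the update to the caller. This update instead accumulates straight into the parameters, so one pass over memory is saved. That saving is presumably the efficiency the source comment refers to.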

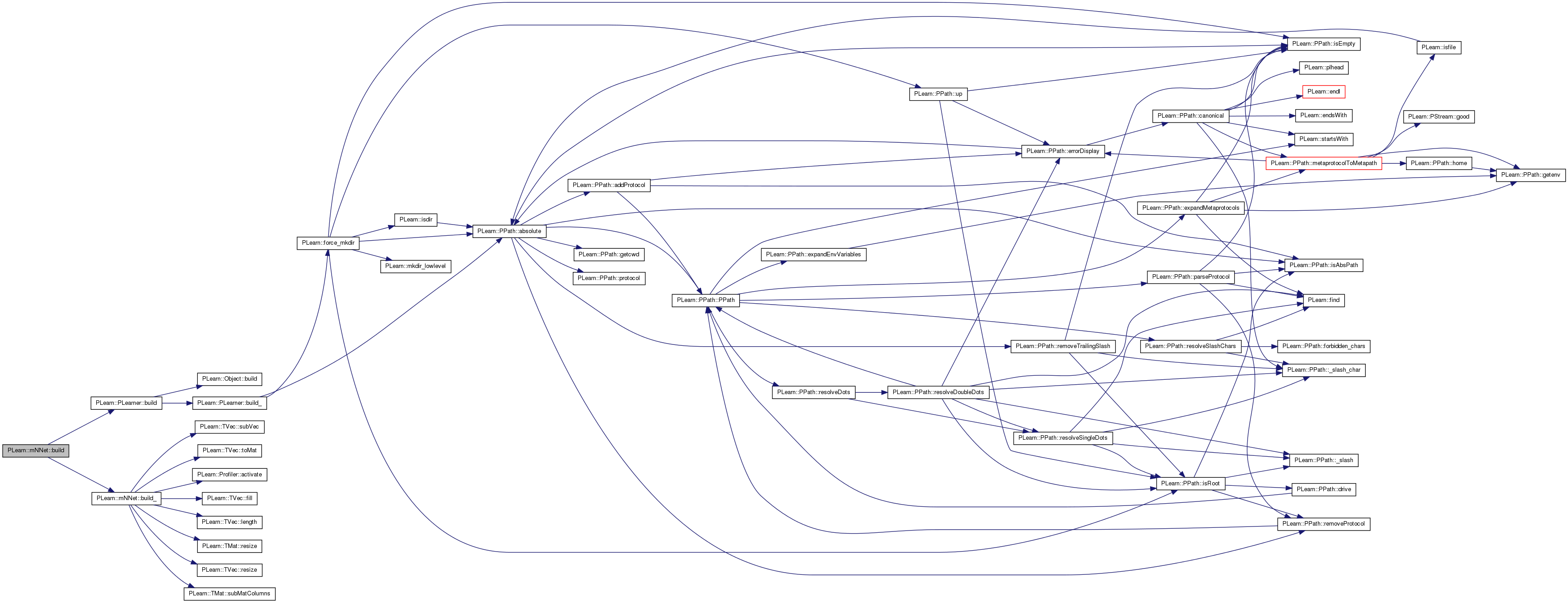

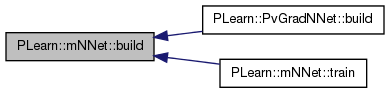

| void PLearn::mNNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

Definition at line 218 of file mNNet.cc.

References PLearn::PLearner::build(), and build_().

Referenced by PLearn::PvGradNNet::build(), and train().

{
    inherited::build();
    build_();
}

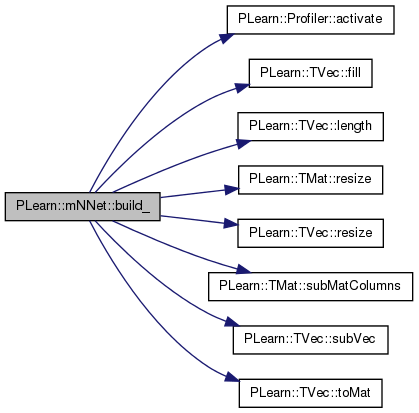

| void PLearn::mNNet::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

Definition at line 126 of file mNNet.cc.

References PLearn::Profiler::activate(), all_params, all_params_gradient, biases, PLearn::TVec< T >::fill(), hidden_layer_sizes, i, PLearn::PLearner::inputsize_, layer_params, layer_params_gradient, layer_sizes, PLearn::TVec< T >::length(), minibatch_size, n_layers, neuron_extended_outputs, neuron_extended_outputs_per_layer, neuron_gradients, neuron_gradients_per_layer, neuron_outputs_per_layer, noutputs, output_layer_L1_penalty_factor, output_type, PLASSERT_MSG, PLERROR, PLWARNING, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::TMat< T >::subMatColumns(), PLearn::TVec< T >::subVec(), PLearn::PLearner::targetsize_, PLearn::TVec< T >::toMat(), PLearn::PLearner::train_set, and weights.

Referenced by build().

{
    // *** Sanity checks ***
    if (!train_set)
        return;
    if (output_type=="MSE")
    {
        if (noutputs<0) noutputs = targetsize_;
        else PLASSERT_MSG(noutputs==targetsize_,"mNNet: noutputs should be -1 or match data's targetsize");
    }
    else if (output_type=="NLL")
    {
        // TODO add a check on noutputs' value
        if (noutputs<0)
            PLERROR("mNNet: if output_type=NLL (classification), one \n"
                    "should provide noutputs = number of classes, or possibly\n"
                    "1 when 2 classes\n");
    }
    else if (output_type=="cross_entropy")
    {
        if(noutputs!=1)
            PLERROR("mNNet: if output_type=cross_entropy, then \n"
                    "noutputs should be 1.\n");
    }
    else PLERROR("mNNet: output_type should be cross_entropy, NLL or MSE\n");
    if( output_layer_L1_penalty_factor < 0. )
        PLWARNING("mNNet::build_ - output_layer_L1_penalty_factor is negative!\n");

    // *** Determine topology ***
    inputsize_ = train_set->inputsize();
    while (hidden_layer_sizes.length()>0 && hidden_layer_sizes[hidden_layer_sizes.length()-1]==0)
        hidden_layer_sizes.resize(hidden_layer_sizes.length()-1);
    n_layers = hidden_layer_sizes.length()+2;
    layer_sizes.resize(n_layers);
    layer_sizes.subVec(1,n_layers-2) << hidden_layer_sizes;
    layer_sizes[0]=inputsize_;
    layer_sizes[n_layers-1]=noutputs;

    // *** Allocate memory for params and gradients ***
    int n_params=0;
    int n_neurons=0;
    for (int i=0;i<n_layers-1;i++) {
        n_neurons+=layer_sizes[i+1];
        n_params+=layer_sizes[i+1]*(1+layer_sizes[i]);
    }
    all_params.resize(n_params);
    all_params_gradient.resize(n_params);

    // *** Set handles ***
    layer_params.resize(n_layers-1);
    layer_params_gradient.resize(n_layers-1);
    biases.resize(n_layers-1);
    weights.resize(n_layers-1);
    for (int i=0,p=0;i<n_layers-1;i++) {
        int np=layer_sizes[i+1]*(1+layer_sizes[i]);
        layer_params[i]=all_params.subVec(p,np).toMat(layer_sizes[i+1],layer_sizes[i]+1);
        biases[i]=layer_params[i].subMatColumns(0,1);
        weights[i]=layer_params[i].subMatColumns(1,layer_sizes[i]); // weights[0] from layer 0 to layer 1
        layer_params_gradient[i]=all_params_gradient.subVec(p,np).toMat(layer_sizes[i+1],layer_sizes[i]+1);
        p+=np;
    }

    // *** Allocate memory for outputs and gradients on neurons ***
    neuron_extended_outputs.resize(minibatch_size,layer_sizes[0]+1+n_neurons+n_layers);
    neuron_gradients.resize(minibatch_size,n_neurons);

    // *** Set handles and biases ***
    neuron_outputs_per_layer.resize(n_layers); // layer 0 = input, layer n_layers-1 = output
    neuron_extended_outputs_per_layer.resize(n_layers); // layer 0 = input, layer n_layers-1 = output
    neuron_gradients_per_layer.resize(n_layers); // layer 0 not used
    int k=0, kk=0;
    for (int i=0;i<n_layers;i++)
    {
        neuron_extended_outputs_per_layer[i] = neuron_extended_outputs.subMatColumns(k,1+layer_sizes[i]);
        neuron_extended_outputs_per_layer[i].column(0).fill(1.0); // for biases
        neuron_outputs_per_layer[i]=neuron_extended_outputs_per_layer[i].subMatColumns(1,layer_sizes[i]);
        k+=1+layer_sizes[i];
        if(i>0) {
            neuron_gradients_per_layer[i] = neuron_gradients.subMatColumns(kk,layer_sizes[i]);
            kk+=layer_sizes[i];
        }
    }
    Profiler::activate();
}
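The sketch below makes the memory layout set up by build_ concrete. It recomputes layer_sizes, dropping trailing zero-sized hidden layers as the while loop does. It then computes the offset of each layer's parameter block inside all_params: layer i occupies layer_sizes[i+1] x (layer_sizes[i]+1) consecutive entries, with the biases in column 0. The names buildLayerSizes, computeParamLayout and ParamLayout are hypothetical. This illustrates the arithmetic only and is not PLearn code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// inputsize, hidden sizes (trailing zeros removed), noutputs
std::vector<int> buildLayerSizes(int inputsize, std::vector<int> hidden, int noutputs) {
    while (!hidden.empty() && hidden.back() == 0)
        hidden.pop_back();  // same effect as the while loop in build_
    std::vector<int> sizes;
    sizes.push_back(inputsize);
    sizes.insert(sizes.end(), hidden.begin(), hidden.end());
    sizes.push_back(noutputs);
    return sizes;
}

struct ParamLayout {
    std::vector<int> offsets;  // start of layer i's block in all_params
    int n_params = 0;          // total length of all_params
};

// Layer i's block is layer_sizes[i+1] x (1 + layer_sizes[i]), stored contiguously.
ParamLayout computeParamLayout(const std::vector<int>& layer_sizes) {
    ParamLayout layout;
    for (std::size_t i = 0; i + 1 < layer_sizes.size(); ++i) {
        layout.offsets.push_back(layout.n_params);
        layout.n_params += layer_sizes[i + 1] * (1 + layer_sizes[i]);
    }
    return layout;
}
```

Take inputsize 4, hidden_layer_sizes = [3, 0] and noutputs = 2. The trailing 0 is dropped, so n_layers is 3. all_params then holds 3*5 + 2*4 = 23 values, and the second layer's block starts at offset 15.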

| string PLearn::mNNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PvGradNNet.

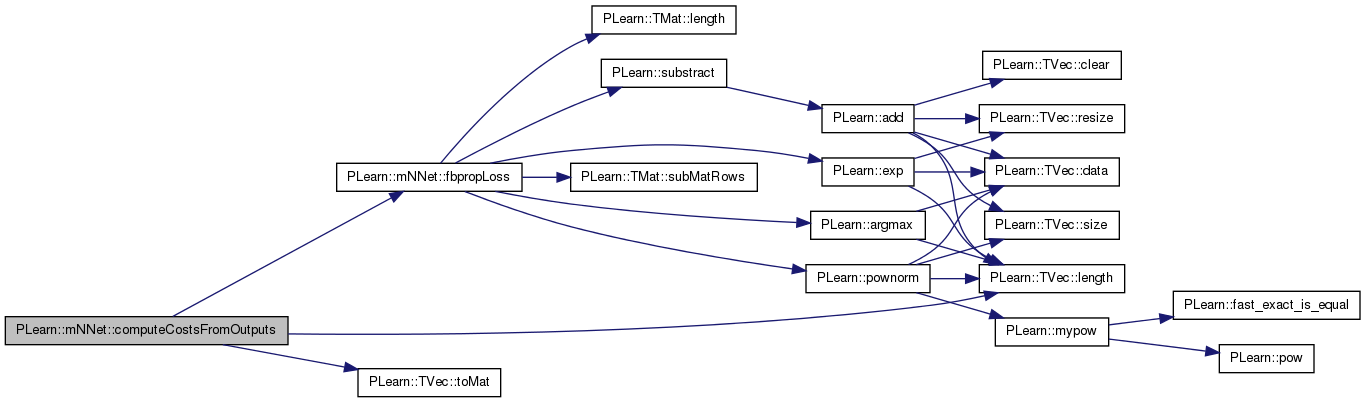

| void PLearn::mNNet::computeCostsFromOutputs | ( | const Vec & input, const Vec & output, const Vec & target, Vec & costs | ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 544 of file mNNet.cc.

References fbpropLoss(), PLearn::TVec< T >::length(), PLearn::TVec< T >::toMat(), and w.

{
    Vec w(1);
    w[0]=1;
    Mat outputM = output.toMat(1,output.length());
    Mat targetM = target.toMat(1,target.length());
    Mat costsM = costs.toMat(1,costs.length());
    fbpropLoss(outputM,targetM,w,costsM);
}

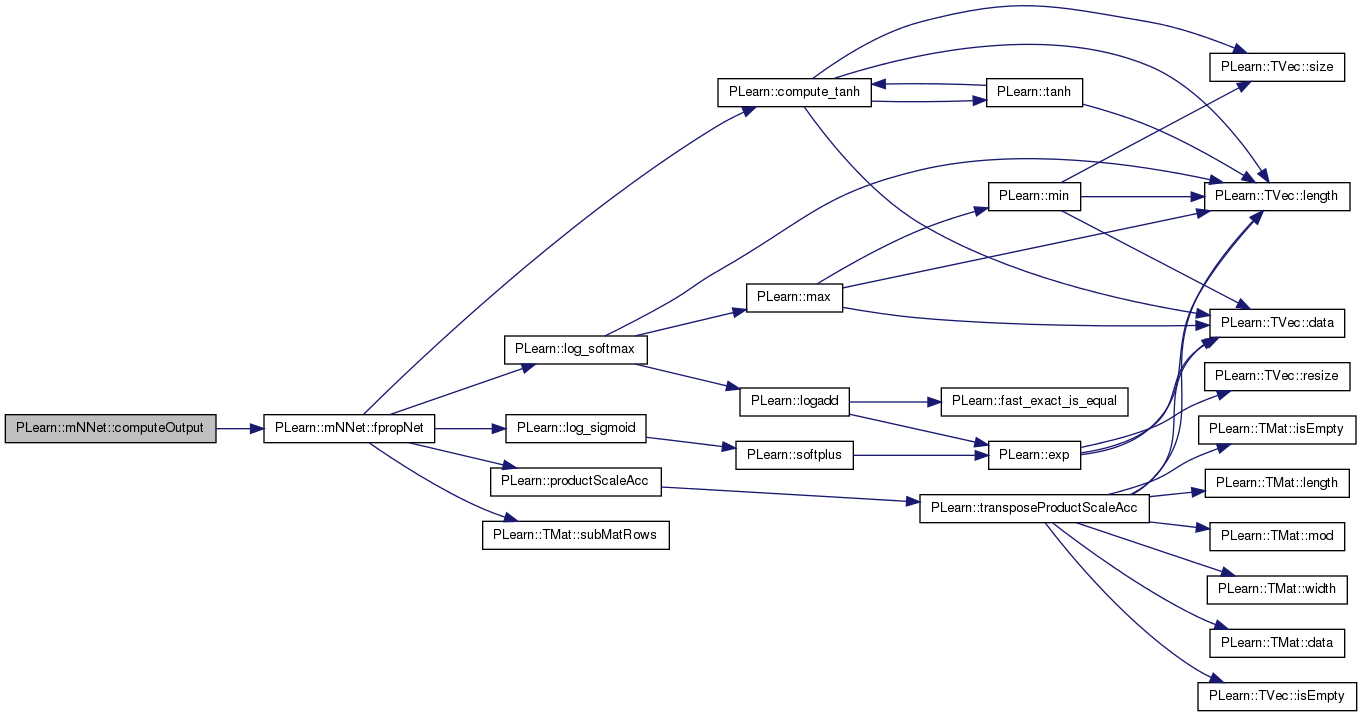

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 345 of file mNNet.cc.

References fpropNet(), n_layers, and neuron_outputs_per_layer.

{
    neuron_outputs_per_layer[0](0) << input;
    fpropNet(1);
    output << neuron_outputs_per_layer[n_layers-1](0);
}

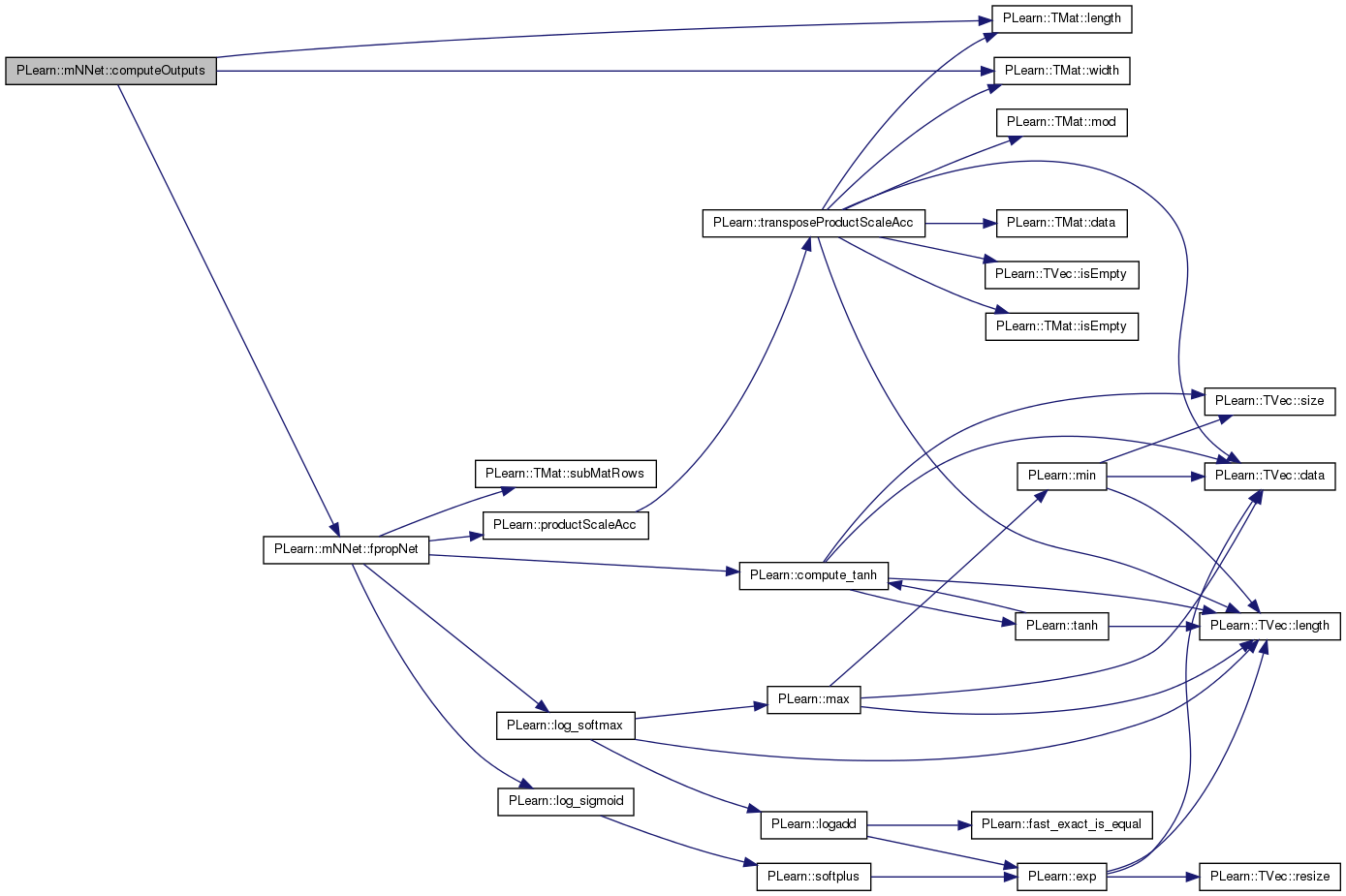

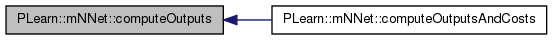

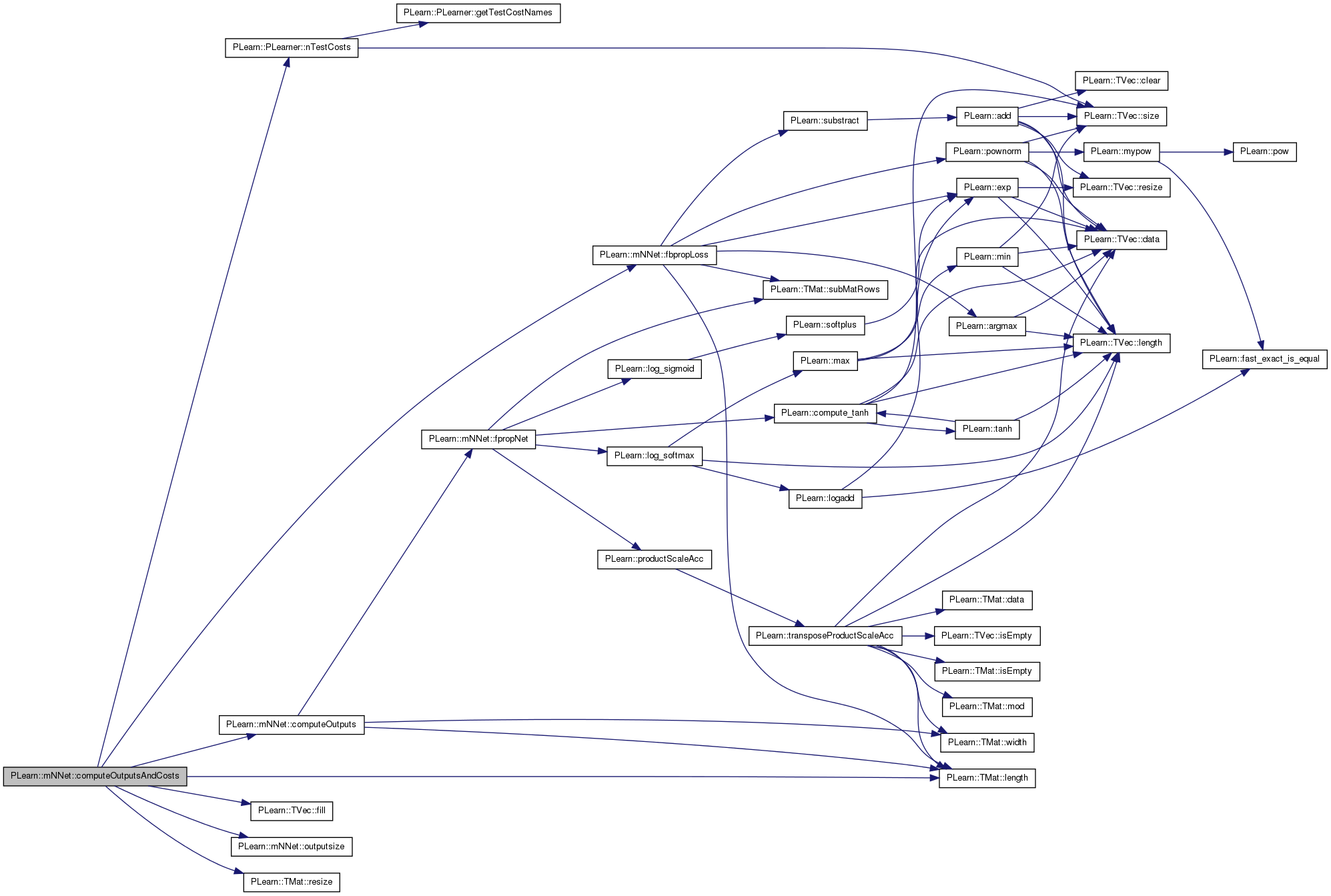

If it is more efficient to compute multiple outputs simultaneously, it can be advantageous to define this method instead of computeOutput; each row of the matrices is associated with one example.

Reimplemented from PLearn::PLearner.

Definition at line 555 of file mNNet.cc.

References fpropNet(), PLearn::TMat< T >::length(), minibatch_size, n_layers, neuron_outputs_per_layer, PLASSERT, PLearn::PLearner::test_minibatch_size, and PLearn::TMat< T >::width().

Referenced by computeOutputsAndCosts().

{
    PLASSERT(test_minibatch_size<=minibatch_size);
    neuron_outputs_per_layer[0].subMat(0,0,input.length(),input.width()) << input;
    fpropNet(input.length());
    output << neuron_outputs_per_layer[n_layers-1].subMat(0,0,output.length(),output.width());
}

| void PLearn::mNNet::computeOutputsAndCosts | ( | const Mat & input, const Mat & target, Mat & output, Mat & costs | ) | const [virtual] |

minibatch version of computeOutputAndCosts

Reimplemented from PLearn::PLearner.

Definition at line 562 of file mNNet.cc.

References computeOutputs(), fbpropLoss(), PLearn::TVec< T >::fill(), PLearn::TMat< T >::length(), n, PLearn::PLearner::nTestCosts(), outputsize(), PLASSERT, PLearn::TMat< T >::resize(), and w.

{//TODO
    int n=input.length();
    PLASSERT(target.length()==n);
    output.resize(n,outputsize());
    costs.resize(n,nTestCosts());
    computeOutputs(input,output);
    Vec w(n);
    w.fill(1);
    fbpropLoss(output,target,w,costs);
}

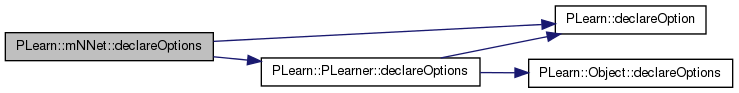

| void PLearn::mNNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

Definition at line 64 of file mNNet.cc.

References PLearn::OptionBase::buildoption, cumulative_training_time, PLearn::declareOption(), PLearn::PLearner::declareOptions(), hidden_layer_sizes, init_lrate, layer_params, layer_sizes, PLearn::OptionBase::learntoption, lrate_decay, minibatch_size, n_layers, noutputs, output_layer_L1_penalty_factor, and output_type.

Referenced by PLearn::PvGradNNet::declareOptions().

{
    declareOption(ol, "noutputs", &mNNet::noutputs,
                  OptionBase::buildoption,
                  "Number of outputs of the neural network, which can be derived from output_type and targetsize_\n");
    declareOption(ol, "hidden_layer_sizes", &mNNet::hidden_layer_sizes,
                  OptionBase::buildoption,
                  "Defines the architecture of the multi-layer neural network by\n"
                  "specifying the number of hidden units in each hidden layer.\n");
    declareOption(ol, "init_lrate", &mNNet::init_lrate,
                  OptionBase::buildoption,
                  "Initial learning rate\n");
    declareOption(ol, "lrate_decay", &mNNet::lrate_decay,
                  OptionBase::buildoption,
                  "Learning rate decay factor\n");
    // TODO Why this dependence on test_minibatch_size?
    declareOption(ol, "minibatch_size", &mNNet::minibatch_size,
                  OptionBase::buildoption,
                  "Number of examples per minibatch; the parameters are updated once per minibatch.\n"
                  "Must be greater than or equal to test_minibatch_size\n");
    declareOption(ol, "output_type",
                  &mNNet::output_type,
                  OptionBase::buildoption,
                  "type of output cost: 'cross_entropy' for binary classification,\n"
                  "'NLL' for classification problems, or 'MSE' for regression.\n");
    declareOption(ol, "output_layer_L1_penalty_factor",
                  &mNNet::output_layer_L1_penalty_factor,
                  OptionBase::buildoption,
                  "Optional (default=0) factor of L1 regularization term, i.e.\n"
                  "minimize L1_penalty_factor * sum_{ij} |weights(i,j)| during training.\n"
                  "Gets multiplied by the learning rate. Applied to the output layer only.");
    declareOption(ol, "n_layers", &mNNet::n_layers,
                  OptionBase::learntoption,
                  "Number of layers of weights plus 1 (i.e. 3 for a neural net with one hidden layer).\n"
                  "Need not be specified explicitly (derived from hidden_layer_sizes).\n");
    declareOption(ol, "layer_sizes", &mNNet::layer_sizes,
                  OptionBase::learntoption,
                  "Derived from hidden_layer_sizes, inputsize_ and noutputs\n");
    declareOption(ol, "layer_params", &mNNet::layer_params,
                  OptionBase::learntoption,
                  "Parameters used while training, for each layer, organized as follows: layer_params[i] \n"
                  "is a matrix of dimension layer_sizes[i+1] x (layer_sizes[i]+1)\n"
                  "containing the neuron biases in its first column.\n");
    declareOption(ol, "cumulative_training_time", &mNNet::cumulative_training_time,
                  OptionBase::learntoption,
                  "Cumulative training time since age=0, in seconds.\n");
    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}

| static const PPath& PLearn::mNNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

Definition at line 132 of file mNNet.h.


| mNNet * PLearn::mNNet::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

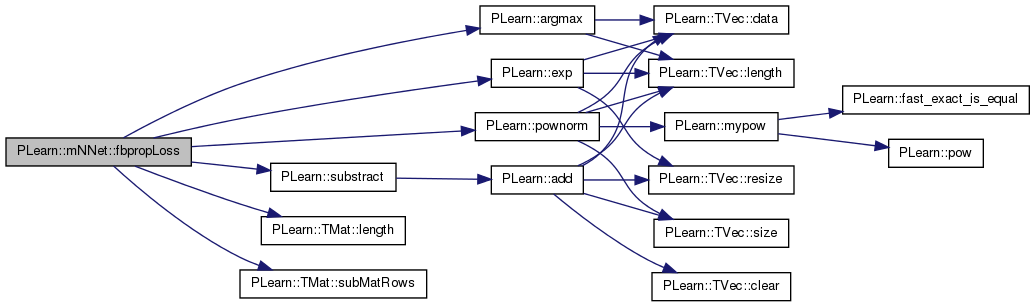

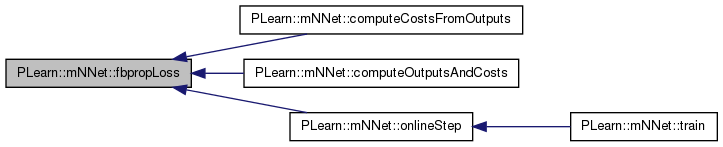

| void PLearn::mNNet::fbpropLoss | ( | const Mat & output, const Mat & target, const Vec & example_weights, Mat & train_costs | ) | const [protected, virtual] |

Computes the training costs given the network's top-layer output and writes into neuron_gradients_per_layer[n_layers-1] the gradient on the output layer's pre-non-linearity activation.

Definition at line 389 of file mNNet.cc.

References PLearn::argmax(), PLearn::exp(), grad, i, PLearn::TMat< T >::length(), minibatch_size, PLearn::PLearner::n_examples, n_layers, neuron_gradients_per_layer, noutputs, output_type, pl_log, PLERROR, PLearn::pownorm(), PLearn::TMat< T >::subMatRows(), and PLearn::substract().

Referenced by computeCostsFromOutputs(), computeOutputsAndCosts(), and onlineStep().

{
    int n_examples = output.length();
    Mat out_grad = neuron_gradients_per_layer[n_layers-1];
    if (n_examples!=minibatch_size)
        out_grad = out_grad.subMatRows(0,n_examples);
    int target_class;
    Vec outp, grad;
    if (output_type=="NLL") {
        for (int i=0;i<n_examples;i++) {
            target_class = int(round(target(i,0)));
#ifdef BOUNDCHECK
            if(target_class>=noutputs)
                PLERROR("In mNNet::fbpropLoss one target value %d is higher than allowed by nout %d",
                        target_class, noutputs);
#endif
            outp = output(i);
            grad = out_grad(i);
            exp(outp,grad); // map log-prob to prob
            costs(i,0) = -outp[target_class];
            costs(i,1) = (target_class == argmax(outp))?0:1;
            grad[target_class]-=1;
            if (example_weight[i]!=1.0)
                costs(i,0) *= example_weight[i];
        }
    }
    else if(output_type=="cross_entropy") {
        for (int i=0;i<n_examples;i++) {
            target_class = int(round(target(i,0)));
            outp = output(i);
            grad = out_grad(i);
            exp(outp,grad); // map log-prob to prob
            if( target_class == 1 ) {
                costs(i,0) = - outp[0];
                costs(i,1) = (grad[0]>0.5)?0:1;
            } else {
                costs(i,0) = - pl_log( 1.0 - grad[0] );
                costs(i,1) = (grad[0]>0.5)?1:0;
            }
            grad[0] -= (real)target_class; // sigmoid(a) - target: gradient w.r.t. the pre-sigmoid activation
            if (example_weight[i]!=1.0)
                costs(i,0) *= example_weight[i];
        }
    }
    else // if (output_type=="MSE")
    {
        substract(output,target,out_grad);
        for (int i=0;i<n_examples;i++) {
            costs(i,0) = pownorm(out_grad(i));
            if (example_weight[i]!=1.0) {
                out_grad(i) *= example_weight[i];
                costs(i,0) *= example_weight[i];
            }
        }
    }
}
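The NLL and cross_entropy branches of fbpropLoss rely on the network output already being a log-probability, produced by log_softmax or log_sigmoid in fpropNet. Exponentiating the output therefore recovers the probability. The gradient on the pre-non-linearity activation is then simply probability minus target. The per-example sketch below shows this on std::vector<double>. nllCostAndGrad and crossEntropyCostAndGrad are hypothetical names, and example weights are omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// NLL branch: logp holds log-probabilities (output of log_softmax).
//   cost = -logp[target], grad = softmax - onehot(target)
double nllCostAndGrad(const std::vector<double>& logp, int target,
                      std::vector<double>& grad) {
    grad.resize(logp.size());
    for (std::size_t k = 0; k < logp.size(); ++k)
        grad[k] = std::exp(logp[k]);  // map log-prob back to prob
    grad[target] -= 1.0;
    return -logp[target];
}

// cross_entropy branch: logp1 = log(sigmoid(a)) for the single output unit.
//   cost = -log p if target == 1, -log(1 - p) otherwise; grad = p - target
double crossEntropyCostAndGrad(double logp1, int target, double& grad) {
    double p = std::exp(logp1);
    grad = p - target;
    return target == 1 ? -logp1 : -std::log(1.0 - p);
}
```

In the listing above, a non-unit example_weight scales only the reported cost in the NLL and cross_entropy branches. The MSE branch scales the gradient stored in out_grad as well.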

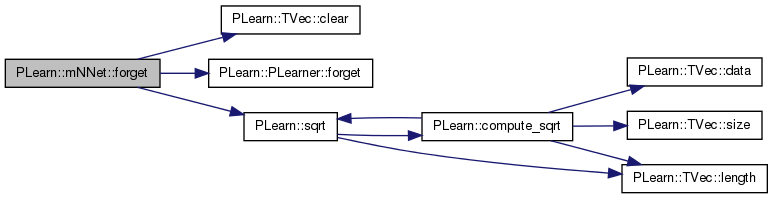

| void PLearn::mNNet::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).


Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

Definition at line 253 of file mNNet.cc.

References biases, PLearn::TVec< T >::clear(), cumulative_training_time, PLearn::PLearner::forget(), i, layer_sizes, n_layers, PLearn::PLearner::random_gen, PLearn::sqrt(), PLearn::PLearner::stage, and weights.

Referenced by PLearn::PvGradNNet::forget().

{
    inherited::forget();
    for (int i=0;i<n_layers-1;i++)
    {
        real delta = 1/sqrt(real(layer_sizes[i]));
        random_gen->fill_random_uniform(weights[i],-delta,delta);
        biases[i].clear();
    }
    stage = 0;
    cumulative_training_time=0.0;
}
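forget() draws each weight uniformly in [-1/sqrt(fan_in), 1/sqrt(fan_in)], with fan_in = layer_sizes[i], and zeroes the biases. The sketch below applies the same rule to one layer. It uses std::mt19937 and std::uniform_real_distribution in place of PLearn's PRandom, which is an assumption for illustration. initLayer is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// params: n_out x (n_in + 1), row-major, bias in column 0.
void initLayer(std::vector<double>& params, int n_out, int n_in, std::mt19937& rng) {
    double delta = 1.0 / std::sqrt(static_cast<double>(n_in));
    std::uniform_real_distribution<double> dist(-delta, delta);
    params.assign(static_cast<std::size_t>(n_out) * (n_in + 1), 0.0);  // biases stay 0
    for (int r = 0; r < n_out; ++r)
        for (int c = 1; c <= n_in; ++c)
            params[r * (n_in + 1) + c] = dist(rng);  // weights only
}
```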

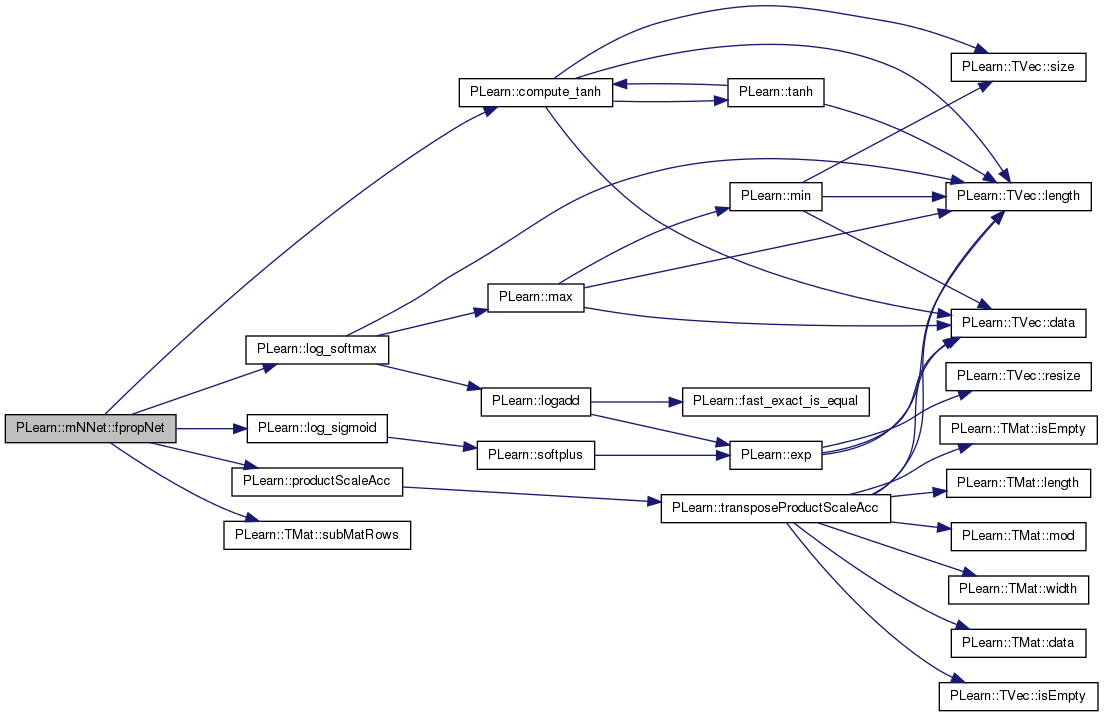

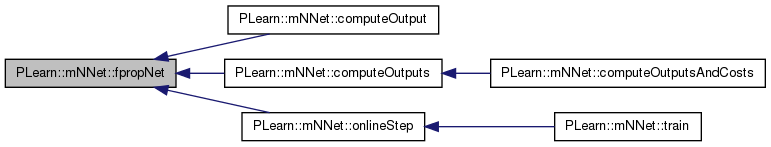

| void PLearn::mNNet::fpropNet | ( | int | n_examples | ) | const [protected, virtual] |

Computes the network's top-layer output for a minibatch of n_examples, given the layer-0 output (= network input); for output_type=NLL (log_softmax) or cross_entropy (log_sigmoid) the outputs are log-probabilities.

Definition at line 353 of file mNNet.cc.

References PLearn::compute_tanh(), i, layer_params, PLearn::log_sigmoid(), PLearn::log_softmax(), minibatch_size, PLearn::PLearner::n_examples, n_layers, neuron_extended_outputs_per_layer, neuron_outputs_per_layer, output_type, PLASSERT_MSG, PLearn::productScaleAcc(), and PLearn::TMat< T >::subMatRows().

Referenced by computeOutput(), computeOutputs(), and onlineStep().

{
    PLASSERT_MSG(n_examples<=minibatch_size,"mNNet::fpropNet: nb input vectors treated should be <= minibatch_size\n");
    for (int i=0;i<n_layers-1;i++)
    {
        Mat prev_layer = neuron_extended_outputs_per_layer[i];
        Mat next_layer = neuron_outputs_per_layer[i+1];
        if (n_examples!=minibatch_size) {
            prev_layer = prev_layer.subMatRows(0,n_examples);
            next_layer = next_layer.subMatRows(0,n_examples);
        }
        // try to use BLAS for the expensive operation
        productScaleAcc(next_layer, prev_layer, false, layer_params[i], true, 1, 0);
        // compute layer's output non-linearity
        if (i+1<n_layers-1) {
            for (int k=0;k<n_examples;k++) {
                Vec L=next_layer(k);
                compute_tanh(L,L);
            }
        } else if (output_type=="NLL") {
            for (int k=0;k<n_examples;k++) {
                Vec L=next_layer(k);
                log_softmax(L,L);
            }
        } else if (output_type=="cross_entropy") {
            for (int k=0;k<n_examples;k++) {
                Vec L=next_layer(k);
                log_sigmoid(L,L);
            }
        }
    }
}
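The sketch below shows the forward pass of fpropNet for a single example with output_type=NLL. Each layer computes an affine map whose bias sits in column 0 of the parameter block, matching the constant-1 column of the extended outputs. Hidden layers apply tanh and the output layer applies log-softmax. The helper names are hypothetical, and std::vector replaces PLearn's Mat. The log-softmax uses the usual max-subtraction for stability, and PLearn's own log_softmax may be implemented differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// a[n] = params[n][0] + sum_c params[n][c+1] * x[c]; params is n_out x (n_in+1).
std::vector<double> affine(const std::vector<double>& x,
                           const std::vector<double>& params, int n_out) {
    int n_in = static_cast<int>(x.size());
    std::vector<double> a(n_out);
    for (int n = 0; n < n_out; ++n) {
        const double* row = &params[n * (n_in + 1)];
        double s = row[0];  // bias column times the constant 1
        for (int c = 0; c < n_in; ++c) s += row[c + 1] * x[c];
        a[n] = s;
    }
    return a;
}

// Numerically stable log-softmax: subtract the max before exponentiating.
void logSoftmaxInPlace(std::vector<double>& v) {
    double m = *std::max_element(v.begin(), v.end());
    double sum = 0.0;
    for (double z : v) sum += std::exp(z - m);
    double lse = m + std::log(sum);
    for (double& z : v) z -= lse;
}

// tanh on hidden layers, log-softmax on the output layer.
std::vector<double> forwardNLL(std::vector<double> x,
                               const std::vector<std::vector<double>>& layer_params,
                               const std::vector<int>& layer_sizes) {
    std::size_t n_weight_layers = layer_params.size();
    for (std::size_t i = 0; i < n_weight_layers; ++i) {
        x = affine(x, layer_params[i], layer_sizes[i + 1]);
        if (i + 1 < n_weight_layers)
            for (double& z : x) z = std::tanh(z);
        else
            logSoftmaxInPlace(x);
    }
    return x;
}
```

Suppose the first layer has zero weights and the output biases are [0, ln 3]. The hidden units are then all 0, and the output log-probabilities correspond to probabilities [0.25, 0.75].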

| OptionList & PLearn::mNNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PvGradNNet.

| OptionMap & PLearn::mNNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PvGradNNet.

| RemoteMethodMap & PLearn::mNNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PvGradNNet.

| TVec< string > PLearn::mNNet::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 576 of file mNNet.cc.

References output_type, and PLearn::TVec< T >::resize().

Referenced by getTrainCostNames().

{
    TVec<string> costs;
    if (output_type=="NLL")
    {
        costs.resize(3);
        costs[0]="NLL";
        costs[1]="class_error";
    }
    else if (output_type=="cross_entropy") {
        costs.resize(3);
        costs[0]="cross_entropy";
        costs[1]="class_error";
    }
    else if (output_type=="MSE")
    {
        costs.resize(1);
        costs[0]="MSE";
    }
    return costs;
}

| TVec< string > PLearn::mNNet::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 598 of file mNNet.cc.

References PLearn::TVec< T >::append(), and getTestCostNames().

Referenced by train().

{
    TVec<string> costs = getTestCostNames();
    costs.append("train_seconds");
    costs.append("cum_train_seconds");
    return costs;
}

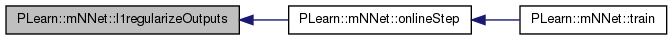

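| void PLearn::mNNet::l1regularizeOutputs | ( | ) | [protected] |

Applies L1 shrinkage to the output layer's parameters: each entry of layer_params[n_layers-2] is moved toward 0 by lrate * output_layer_L1_penalty_factor, and set to 0 if it would cross 0.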

Definition at line 519 of file mNNet.cc.

References PLearn::TVec< T >::data(), i, init_lrate, j, layer_params, PLearn::TVec< T >::length(), lrate_decay, minibatch_size, n_layers, output_layer_L1_penalty_factor, PLearn::sqrt(), and PLearn::PLearner::stage.

Referenced by onlineStep().

{
    // mean gradient over minibatch_size examples has less variance
    // can afford larger learning rate (divide by sqrt(minibatch)
    // instead of minibatch)
    real lrate = init_lrate/(1 + stage*lrate_decay);
    lrate /= sqrt(real(minibatch_size));
    // Output layer L1 regularization
    if( output_layer_L1_penalty_factor != 0. ) {
        real L1_delta = lrate * output_layer_L1_penalty_factor;
        real* m_i = layer_params[n_layers-2].data();
        for(int i=0; i<layer_params[n_layers-2].length();i++,m_i+=layer_params[n_layers-2].mod()) {
            for(int j=0; j<layer_params[n_layers-2].width(); j++) {
                if( m_i[j] > L1_delta )
                    m_i[j] -= L1_delta;
                else if( m_i[j] < -L1_delta )
                    m_i[j] += L1_delta;
                else
                    m_i[j] = 0.;
            }
        }
    }
}
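The update in l1regularizeOutputs is soft-thresholding. Each output-layer parameter moves toward zero by L1_delta = lrate * output_layer_L1_penalty_factor and is clamped to 0 if it would cross it. The loop runs over the full width of layer_params[n_layers-2], so the bias column is shrunk along with the weights. The sketch below works on a flat std::vector, and l1Shrink is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Soft-thresholding: move each value toward 0 by l1_delta, clamping at 0.
void l1Shrink(std::vector<double>& params, double l1_delta) {
    for (double& w : params) {
        if (w > l1_delta) w -= l1_delta;
        else if (w < -l1_delta) w += l1_delta;
        else w = 0.0;
    }
}
```

For instance, with L1_delta = 0.25, the values [1, -1, 0.125, -0.125] become [0.75, -0.75, 0, 0].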

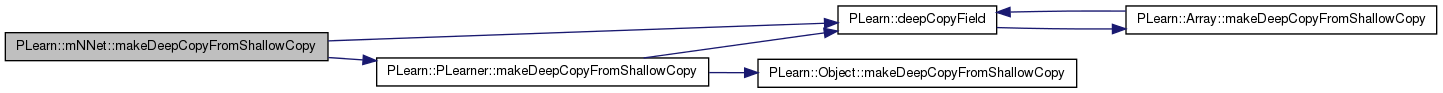

| void PLearn::mNNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.

Definition at line 225 of file mNNet.cc.

References all_params, all_params_gradient, biases, PLearn::deepCopyField(), example_weights, hidden_layer_sizes, layer_params, layer_params_gradient, layer_sizes, PLearn::PLearner::makeDeepCopyFromShallowCopy(), neuron_extended_outputs, neuron_extended_outputs_per_layer, neuron_gradients, neuron_gradients_per_layer, neuron_outputs_per_layer, targets, train_costs, and weights.

Referenced by PLearn::PvGradNNet::makeDeepCopyFromShallowCopy().

{
    inherited::makeDeepCopyFromShallowCopy(copies);
    deepCopyField(hidden_layer_sizes, copies);
    deepCopyField(layer_sizes, copies);
    deepCopyField(all_params, copies);
    deepCopyField(biases, copies);
    deepCopyField(weights, copies);
    deepCopyField(layer_params, copies);
    deepCopyField(all_params_gradient, copies);
    deepCopyField(layer_params_gradient, copies);
    deepCopyField(neuron_gradients, copies);
    deepCopyField(neuron_gradients_per_layer, copies);
    deepCopyField(neuron_extended_outputs, copies);
    deepCopyField(neuron_extended_outputs_per_layer, copies);
    deepCopyField(neuron_outputs_per_layer, copies);
    deepCopyField(targets, copies);
    deepCopyField(example_weights, copies);
    deepCopyField(train_costs, copies);
}

| void PLearn::mNNet::onlineStep | ( | int t, const Mat & targets, Mat & train_costs, Vec example_weights | ) | [protected, virtual] |

One minibatch training step.

Definition at line 333 of file mNNet.cc.

References bpropUpdateNet(), fbpropLoss(), fpropNet(), l1regularizeOutputs(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), minibatch_size, n_layers, neuron_outputs_per_layer, and PLASSERT.

Referenced by train().

{
    PLASSERT(targets.length()==minibatch_size && train_costs.length()==minibatch_size && example_weights.length()==minibatch_size);
    fpropNet(minibatch_size);
    fbpropLoss(neuron_outputs_per_layer[n_layers-1],targets,example_weights,train_costs);
    bpropUpdateNet(t);
    l1regularizeOutputs();
}
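In train(), example stage % nsamples is written into minibatch row stage % minibatch_size. onlineStep runs only when that row is the last one. The sketch below lists the stages that trigger an update; updateStages is a hypothetical helper, not part of mNNet. It makes two properties of the listing visible. First, a final partial minibatch triggers no update, which is the TODO in train(). Second, if training resumes at a stage that is not a multiple of minibatch_size, the first update also uses whatever the earlier rows of the minibatch buffers still contain.

```cpp
#include <cassert>
#include <vector>

// Stages in [stage, nstages) at which onlineStep would be called.
std::vector<int> updateStages(int stage, int nstages, int minibatch_size) {
    std::vector<int> stages;
    for (; stage < nstages; ++stage) {
        int b = stage % minibatch_size;  // row of the minibatch being filled
        if (b + 1 == minibatch_size)     // last row filled: one update
            stages.push_back(stage);
    }
    return stages;
}
```

For stage = 0, nstages = 10 and minibatch_size = 4, updates happen at stages 3 and 7. The examples read at stages 8 and 9 are not used for an update.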

| int PLearn::mNNet::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which may depend on its inputsize(), targetsize() and the options set).

Implements PLearn::PLearner.

Definition at line 248 of file mNNet.cc.

References noutputs.

Referenced by computeOutputsAndCosts().

{
    return noutputs;
}

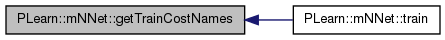

| void PLearn::mNNet::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 269 of file mNNet.cc.

References b, build(), cumulative_training_time, PLearn::Profiler::end(), example_weights, PLearn::TVec< T >::fill(), PLearn::TMat< T >::fill(), PLearn::VMat::getExample(), PLearn::Profiler::getStats(), getTrainCostNames(), i, PLearn::PLearner::inputsize_, PLearn::VMat::length(), PLearn::TVec< T >::length(), minibatch_size, MISSING_VALUE, neuron_outputs_per_layer, PLearn::PLearner::nstages, onlineStep(), PLERROR, PLearn::Profiler::report(), PLearn::Profiler::reset(), PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::sample(), PLearn::PLearner::setTrainStatsCollector(), PLearn::PLearner::stage, PLearn::Profiler::start(), PLearn::TVec< T >::subVec(), PLearn::Profiler::Stats::system_duration, targets, PLearn::PLearner::targetsize(), PLearn::Profiler::ticksPerSecond(), train_costs, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::Profiler::Stats::user_duration, PLearn::PLearner::verbosity, and PLearn::TMat< T >::width().

{
    if (inputsize_<0)
        build();
    if(!train_set)
        PLERROR("In mNNet::train, you did not setTrainingSet");
    if(!train_stats)
        setTrainStatsCollector(new VecStatsCollector());
    targets.resize(minibatch_size,targetsize()); // the train_set's targetsize()
    example_weights.resize(minibatch_size);
    TVec<string> train_cost_names = getTrainCostNames() ;
    train_costs.resize(minibatch_size,train_cost_names.length()-2);
    train_costs.fill(MISSING_VALUE) ;
    Vec costs_plus_time(train_costs.width()+2);
    costs_plus_time[train_costs.width()] = MISSING_VALUE;
    costs_plus_time[train_costs.width()+1] = MISSING_VALUE;
    Vec costs = costs_plus_time.subVec(0,train_costs.width());
    train_stats->forget();
    int b, sample, nsamples;
    nsamples = train_set->length();
    Vec input,target; // TODO discard these variables.
    Profiler::reset("training");
    Profiler::start("training");
    for( ; stage<nstages; stage++)
    {
        sample = stage % nsamples;
        b = stage % minibatch_size;
        input = neuron_outputs_per_layer[0](b);
        target = targets(b);
        train_set->getExample(sample, input, target, example_weights[b]);
        if (b+1==minibatch_size) // TODO do also special end-case || stage+1==nstages)
        {
            onlineStep(stage, targets, train_costs, example_weights );
            for (int i=0;i<minibatch_size;i++) {
                costs << train_costs(i); // row i holds the costs of example i
                // TODO Is the copy necessary? Might be better to waste some
                // memory in train_costs instead
                train_stats->update( costs_plus_time );
            }
        }
    }
    Profiler::end("training");
    if (verbosity>0)
        Profiler::report(cout);
    // Take care of the timing stats.
    const Profiler::Stats& stats = Profiler::getStats("training");
    costs.fill(MISSING_VALUE);
    real ticksPerSec = Profiler::ticksPerSecond();
    real cpu_time = (stats.user_duration+stats.system_duration)/ticksPerSec;
    cumulative_training_time += cpu_time;
    costs_plus_time[train_costs.width()] = cpu_time;
    costs_plus_time[train_costs.width()+1] = cumulative_training_time;
    train_stats->update( costs_plus_time );
    train_stats->finalize(); // finalize statistics for this epoch
}

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PvGradNNet.
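The minibatch bookkeeping in train() can be replayed in isolation. The sketch below keeps only the indexing: at each stage, example `stage % nsamples` is written into row `stage % minibatch_size`, and an update fires only when the last row has been filled. `MinibatchUpdate` and `scheduleMinibatches` are hypothetical names, and no PLearn types are used.

```cpp
#include <cassert>
#include <vector>

// Hypothetical record of one parameter update: the stage that triggered it
// and the training-example index stored in each minibatch row.
struct MinibatchUpdate {
    int stage;
    std::vector<int> example_indices;
};

// Replays the indexing of mNNet::train() without any learning.
// Row b = stage % minibatch_size receives example stage % nsamples; an update
// is recorded only when b is the last row. As in the original loop, a trailing
// partial minibatch is never processed. Unfilled rows keep the value -1.
std::vector<MinibatchUpdate> scheduleMinibatches(int start_stage, int nstages,
                                                 int nsamples, int minibatch_size) {
    assert(nsamples > 0 && minibatch_size > 0);
    std::vector<MinibatchUpdate> updates;
    std::vector<int> rows(minibatch_size, -1);
    for (int stage = start_stage; stage < nstages; ++stage) {
        int sample = stage % nsamples;
        int b = stage % minibatch_size;
        rows[b] = sample;
        if (b + 1 == minibatch_size)
            updates.push_back({stage, rows});
    }
    return updates;
}
```

For example, with 10 stages, 4 examples and minibatches of 3, updates happen at stages 2, 5 and 8, and the example read at stage 9 is left waiting for a minibatch that never completes.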

Vec PLearn::mNNet::all_params [protected]

All the parameters in one vector.

Definition at line 153 of file mNNet.h.

Referenced by build_(), PLearn::PvGradNNet::build_(), PLearn::PvGradNNet::discountGrad(), makeDeepCopyFromShallowCopy(), PLearn::PvGradNNet::neuronDiscountGrad(), and PLearn::PvGradNNet::pvGrad().

Vec PLearn::mNNet::all_params_gradient [protected]

Gradient structures - reflect the parameter structures.

Definition at line 163 of file mNNet.h.

Referenced by build_(), PLearn::PvGradNNet::discountGrad(), makeDeepCopyFromShallowCopy(), PLearn::PvGradNNet::neuronDiscountGrad(), and PLearn::PvGradNNet::pvGrad().

TVec<Mat> PLearn::mNNet::biases [protected]

Alternate access to the params - one matrix per layer.

Definition at line 155 of file mNNet.h.

Referenced by build_(), forget(), and makeDeepCopyFromShallowCopy().

real PLearn::mNNet::cumulative_training_time [protected]

Holds the cumulative training (CPU) time in seconds, reported as an additional cost.

Definition at line 183 of file mNNet.h.

Referenced by declareOptions(), forget(), and train().

Vec PLearn::mNNet::example_weights [protected]

Definition at line 179 of file mNNet.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

sizes of hidden layers

Definition at line 60 of file mNNet.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

initial learning rate

Definition at line 63 of file mNNet.h.

Referenced by bpropUpdateNet(), declareOptions(), and l1regularizeOutputs().

TVec<Mat> PLearn::mNNet::layer_params [protected]

Alternate access to the params - one matrix of dimension layer_sizes[i+1] x (layer_sizes[i]+1) per layer (neuron biases in the first column)

Definition at line 160 of file mNNet.h.

Referenced by bpropUpdateNet(), build_(), declareOptions(), fpropNet(), l1regularizeOutputs(), and makeDeepCopyFromShallowCopy().
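Since all_params holds every parameter in one vector while layer_params gives a per-layer matrix view, it helps to see how the two index spaces line up. This sketch assumes, without confirmation from the source, that layers are stored back to back in row-major order; only the per-layer shape (layer_sizes[i+1] rows, layer_sizes[i]+1 columns, biases in column 0) comes from the description above. `ParamLayout` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical flat layout: layer i is a row-major matrix with
// layer_sizes[i+1] rows and layer_sizes[i]+1 columns (column 0 = biases).
// Layers are ASSUMED to be stored contiguously, one after the other.
struct ParamLayout {
    std::vector<int> layer_sizes;
    std::vector<std::size_t> offsets; // start of each layer in the flat vector
    std::size_t total = 0;            // total number of parameters

    explicit ParamLayout(const std::vector<int>& sizes) : layer_sizes(sizes) {
        assert(sizes.size() >= 2);
        for (std::size_t i = 0; i + 1 < sizes.size(); ++i) {
            offsets.push_back(total);
            total += static_cast<std::size_t>(sizes[i + 1]) * (sizes[i] + 1);
        }
    }

    // Flat index of entry (row, col) of layer i; col 0 is the bias of neuron `row`.
    std::size_t index(std::size_t i, int row, int col) const {
        assert(i < offsets.size());
        assert(row >= 0 && row < layer_sizes[i + 1]);
        assert(col >= 0 && col <= layer_sizes[i]);
        return offsets[i] + static_cast<std::size_t>(row) * (layer_sizes[i] + 1) + col;
    }
};
```

With layer sizes {4, 3, 2}, the first layer holds 3 x 5 = 15 parameters and the second 2 x 4 = 8, for 23 in total.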

TVec<Mat> PLearn::mNNet::layer_params_gradient [protected]

Definition at line 164 of file mNNet.h.

Referenced by bpropNet(), build_(), and makeDeepCopyFromShallowCopy().

TVec<int> PLearn::mNNet::layer_sizes [protected]

layer sizes (derived from hidden_layer_sizes, inputsize_ and outputsize_)

Definition at line 150 of file mNNet.h.

Referenced by build_(), PLearn::PvGradNNet::build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and PLearn::PvGradNNet::neuronDiscountGrad().
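One plausible reading of "derived from hidden_layer_sizes, inputsize_ and outputsize_" is a simple concatenation, input size first and output size last; the sketch assumes exactly that. The number of weight layers is then one less than the number of sizes, which matches the n_layers note below (2 for a net with one hidden layer). `makeLayerSizes` and `numWeightLayers` are hypothetical helpers.

```cpp
#include <cassert>
#include <vector>

// ASSUMED derivation: [inputsize, hidden_1, ..., hidden_k, noutputs].
std::vector<int> makeLayerSizes(int inputsize, const std::vector<int>& hidden, int noutputs) {
    assert(inputsize > 0 && noutputs > 0);
    std::vector<int> sizes;
    sizes.push_back(inputsize);
    sizes.insert(sizes.end(), hidden.begin(), hidden.end());
    sizes.push_back(noutputs);
    return sizes;
}

// One weight layer connects each pair of consecutive layer sizes.
int numWeightLayers(const std::vector<int>& layer_sizes) {
    return static_cast<int>(layer_sizes.size()) - 1;
}
```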

learning rate decay factor

Definition at line 66 of file mNNet.h.

Referenced by bpropUpdateNet(), declareOptions(), and l1regularizeOutputs().
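The initial learning rate and the decay factor are usually combined into a schedule that shrinks the step size as training proceeds. The form below, lr_t = init_lr / (1 + lr_decay * t), is a common choice and only an assumption here; bpropUpdateNet() is where the actual rule would be found. The function and parameter names are hypothetical.

```cpp
#include <cassert>

// ASSUMED schedule: init_lr / (1 + lr_decay * t), with t counting stages.
// A decay factor of 0 keeps the learning rate constant.
double decayedLearningRate(double init_lr, double lr_decay, long t) {
    assert(t >= 0 && lr_decay >= 0.0);
    return init_lr / (1.0 + lr_decay * static_cast<double>(t));
}
```

Under this form, the learning rate is halved once lr_decay * t reaches 1.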

number of examples per minibatch: the parameters are updated only once per minibatch

Definition at line 69 of file mNNet.h.

Referenced by bpropUpdateNet(), build_(), computeOutputs(), declareOptions(), fbpropLoss(), fpropNet(), l1regularizeOutputs(), onlineStep(), and train().

int PLearn::mNNet::n_layers [protected]

number of layers of weights (2 for a neural net with one hidden layer)

Definition at line 147 of file mNNet.h.

Referenced by bpropNet(), bpropUpdateNet(), build_(), PLearn::PvGradNNet::build_(), computeOutput(), computeOutputs(), declareOptions(), fbpropLoss(), forget(), fpropNet(), l1regularizeOutputs(), PLearn::PvGradNNet::neuronDiscountGrad(), and onlineStep().

Mat PLearn::mNNet::neuron_extended_outputs [mutable, protected]

Outputs of the neurons.

Definition at line 173 of file mNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

TVec<Mat> PLearn::mNNet::neuron_extended_outputs_per_layer [mutable, protected]

Definition at line 174 of file mNNet.h.

Referenced by bpropNet(), bpropUpdateNet(), build_(), fpropNet(), and makeDeepCopyFromShallowCopy().

Mat PLearn::mNNet::neuron_gradients [protected]

Gradients on the neurons - same structure as for outputs.

Definition at line 167 of file mNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

TVec<Mat> PLearn::mNNet::neuron_gradients_per_layer [protected]

Definition at line 169 of file mNNet.h.

Referenced by bpropNet(), bpropUpdateNet(), build_(), fbpropLoss(), and makeDeepCopyFromShallowCopy().

TVec<Mat> PLearn::mNNet::neuron_outputs_per_layer [mutable, protected]

Definition at line 176 of file mNNet.h.

Referenced by bpropNet(), bpropUpdateNet(), build_(), computeOutput(), computeOutputs(), fpropNet(), makeDeepCopyFromShallowCopy(), onlineStep(), and train().
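The class is described as a network "based on matrix-matrix multiplications": each layer processes a whole minibatch (one example per row) in a single product with its parameter matrix. The sketch shows one such layer. It keeps the documented parameter shape with biases in column 0 and treats the bias as multiplying an implicit constant 1 (the "extended" outputs may hold such a column, but that is an assumption). The tanh activation, the naive loops in place of an optimized matrix product, and the names `Matrix` and `layerForward` are all illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal row-major dense matrix (hypothetical, not PLearn's Mat).
struct Matrix {
    std::size_t rows, cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& at(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double at(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// One layer over a whole minibatch: out = tanh([1 | in] * W^T), where
// in is (batch x d), W is (h x (d+1)) with biases in column 0,
// and out is (batch x h), one row per example.
Matrix layerForward(const Matrix& in, const Matrix& W) {
    assert(W.cols == in.cols + 1);
    Matrix out(in.rows, W.rows);
    for (std::size_t n = 0; n < in.rows; ++n) {
        for (std::size_t k = 0; k < W.rows; ++k) {
            double s = W.at(k, 0); // bias, i.e. weight on the implicit constant 1
            for (std::size_t j = 0; j < in.cols; ++j)
                s += in.at(n, j) * W.at(k, j + 1);
            out.at(n, k) = std::tanh(s);
        }
    }
    return out;
}
```

Chaining this call over the layers, with each output matrix fed to the next layer, gives the per-layer outputs for the whole minibatch.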

number of outputs of the network

Definition at line 57 of file mNNet.h.

Referenced by build_(), declareOptions(), fbpropLoss(), and outputsize().

L1 penalty applied to the output layer's parameters.

Definition at line 75 of file mNNet.h.

Referenced by build_(), declareOptions(), and l1regularizeOutputs().
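A common way to apply an L1 penalty during stochastic gradient descent is a soft-thresholding step: each weight moves toward zero by the learning rate times the penalty, and is set to zero rather than allowed to change sign. Whether l1regularizeOutputs() does exactly this, and whether it skips the bias column, is an assumption; `l1Shrink` is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Soft-thresholding for an L1 penalty: shrink every weight toward zero by
// lr * l1_penalty, clipping at zero instead of crossing it.
void l1Shrink(std::vector<double>& weights, double lr, double l1_penalty) {
    assert(lr >= 0.0 && l1_penalty >= 0.0);
    const double delta = lr * l1_penalty;
    for (double& w : weights) {
        if (w > delta)
            w -= delta;
        else if (w < -delta)
            w += delta;
        else
            w = 0.0;
    }
}
```

The clipping is what makes L1 produce exact zeros: small output-layer weights are driven to 0 instead of oscillating around it.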

string PLearn::mNNet::output_type

type of output cost: "NLL" for classification problems, "MSE" for regression

Definition at line 72 of file mNNet.h.

Referenced by build_(), declareOptions(), fbpropLoss(), fpropNet(), and getTestCostNames().
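For reference, the two cost types can be written down per example. The sketch assumes that "NLL" means the negative log-likelihood of an integer class target under a softmax of the outputs, and that "MSE" is the squared error summed over output dimensions; neither detail is confirmed by this page. `softmaxNLL` and `squaredError` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// ASSUMED "NLL": -log softmax(scores)[target]. Subtracting the maximum score
// before exponentiating avoids overflow without changing the result.
double softmaxNLL(const std::vector<double>& scores, std::size_t target) {
    assert(target < scores.size());
    double m = scores[0];
    for (double s : scores)
        if (s > m) m = s;
    double sum = 0.0;
    for (double s : scores) sum += std::exp(s - m);
    return -(scores[target] - m - std::log(sum));
}

// ASSUMED "MSE": squared error summed over the output dimensions.
double squaredError(const std::vector<double>& output, const std::vector<double>& target) {
    assert(output.size() == target.size());
    double e = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        double d = output[i] - target[i];
        e += d * d;
    }
    return e;
}
```

With two equal scores, the NLL of either class is log 2, the cost of a uniform guess between two classes.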

Mat PLearn::mNNet::targets [protected]

Definition at line 178 of file mNNet.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

Mat PLearn::mNNet::train_costs [protected]

Definition at line 180 of file mNNet.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

TVec<Mat> PLearn::mNNet::weights [protected]

Definition at line 156 of file mNNet.h.

Referenced by bpropNet(), bpropUpdateNet(), build_(), forget(), and makeDeepCopyFromShallowCopy().

1.7.4
