PLearn 0.1

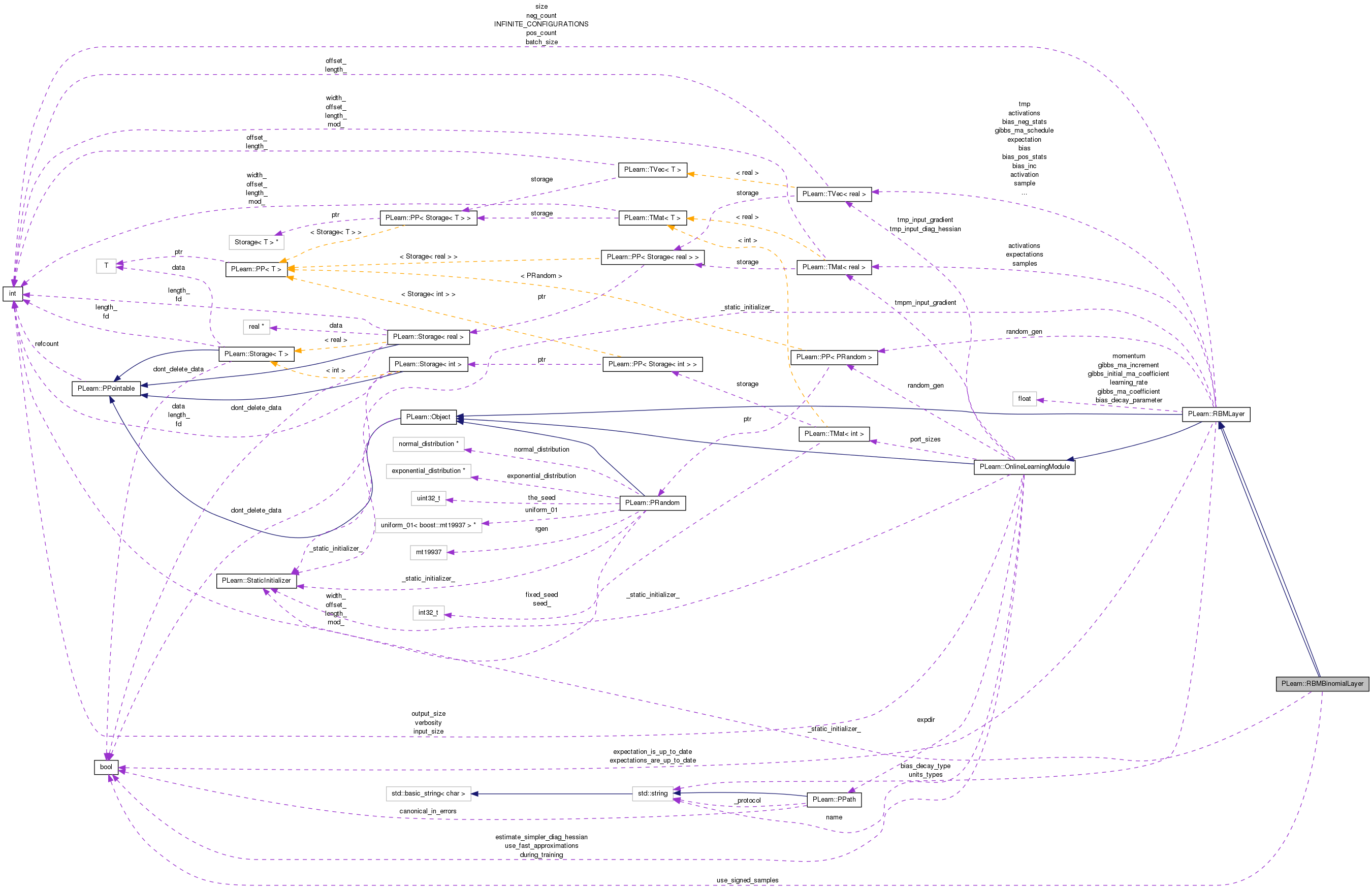

Layer in an RBM formed with binomial units.

#include <RBMBinomialLayer.h>

Public Member Functions | |

| RBMBinomialLayer () | |

| Default constructor. | |

| RBMBinomialLayer (int the_size) | |

| Constructor from the number of units. | |

| virtual void | getUnitActivations (int i, PP< RBMParameters > rbmp, int offset=0) |

| Uses "rbmp" to obtain the activations of unit "i" of this layer. | |

| virtual void | getAllActivations (PP< RBMParameters > rbmp, int offset=0) |

| Uses "rbmp" to obtain the activations of all units in this layer. | |

| virtual void | generateSample () |

| generate a sample, and update the sample field | |

| virtual void | computeExpectation () |

| compute the expectation | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient) |

| back-propagates the output gradient to the input | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMBinomialLayer * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| RBMBinomialLayer (real the_learning_rate=0.) | |

| Default constructor. | |

| RBMBinomialLayer (int the_size, real the_learning_rate=0.) | |

| Constructor from the number of units. | |

| virtual void | generateSamples () |

| Inherited. | |

| virtual void | computeExpectation () |

| Compute expectation. | |

| virtual void | computeExpectations () |

| Compute mini-batch expectations. | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| forward propagation | |

| virtual void | fprop (const Mat &inputs, Mat &outputs) |

| Batch forward propagation. | |

| virtual void | fprop (const Vec &input, const Vec &rbm_bias, Vec &output) const |

| forward propagation with provided bias | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| back-propagates the output gradient to the input | |

| virtual void | bpropUpdate (const Vec &input, const Vec &rbm_bias, const Vec &output, Vec &input_gradient, Vec &rbm_bias_gradient, const Vec &output_gradient) |

| back-propagates the output gradient to the input and the bias | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| Back-propagate the output gradient to the input, and update parameters. | |

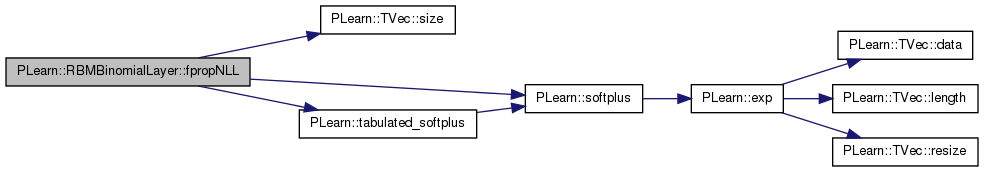

| virtual real | fpropNLL (const Vec &target) |

| Computes the negative log-likelihood of target given the internal activations of the layer. | |

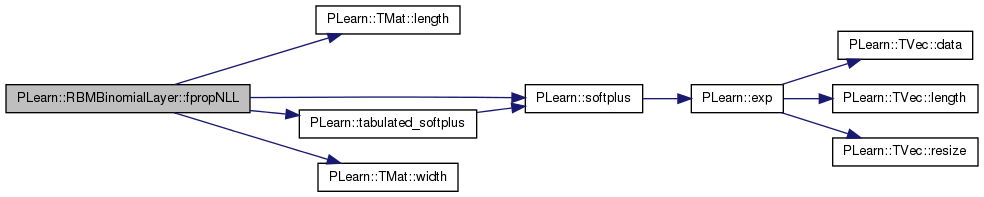

| virtual void | fpropNLL (const Mat &targets, const Mat &costs_column) |

| virtual real | fpropNLL (const Vec &target, const Vec &weights) |

| Computes the weighted negative log-likelihood of target given the internal activations of the layer. | |

| virtual void | bpropNLL (const Vec &target, real nll, Vec &bias_gradient) |

| Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations. | |

| virtual void | bpropNLL (const Mat &targets, const Mat &costs_column, Mat &bias_gradients) |

| virtual real | energy (const Vec &unit_values) const |

| compute -bias' unit_values | |

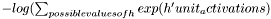

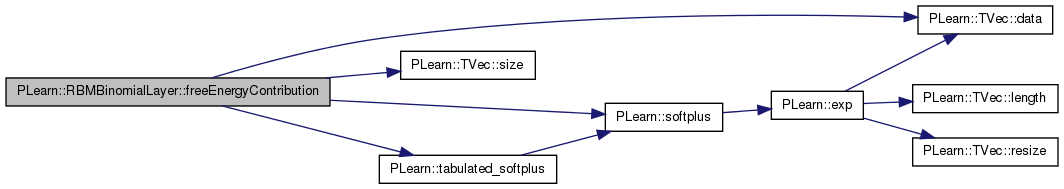

| virtual real | freeEnergyContribution (const Vec &unit_activations) const |

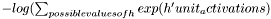

| Computes the layer's free energy contribution, given in the implementation comment as -\sum_{i=0}^{size-1} softplus(a_i). This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed. | |

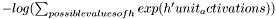

| virtual void | freeEnergyContributionGradient (const Vec &unit_activations, Vec &unit_activations_gradient, real output_gradient=1, bool accumulate=false) const |

| Computes the gradient of the result of freeEnergyContribution with respect to unit_activations. | |

| virtual int | getConfigurationCount () |

| Returns the number of different configurations the layer can be in. | |

| virtual void | getConfiguration (int conf_index, Vec &output) |

| Computes the conf_index-th configuration of the layer. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| bool | use_signed_samples |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef RBMLayer | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

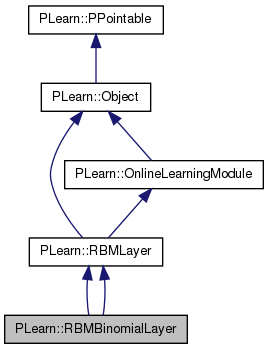

Layer in an RBM formed with binomial units.

Definition at line 53 of file DEPRECATED/RBMBinomialLayer.h.

typedef RBMLayer PLearn::RBMBinomialLayer::inherited [private] |

Reimplemented from PLearn::RBMLayer.

Definition at line 55 of file DEPRECATED/RBMBinomialLayer.h.

typedef RBMLayer PLearn::RBMBinomialLayer::inherited [private] |

Reimplemented from PLearn::RBMLayer.

Definition at line 54 of file RBMBinomialLayer.h.

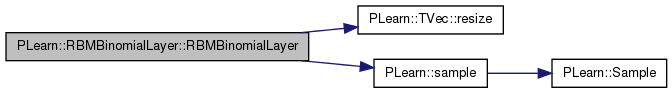

| PLearn::RBMBinomialLayer::RBMBinomialLayer | ( | ) |

| PLearn::RBMBinomialLayer::RBMBinomialLayer | ( | int | the_size | ) |

Constructor from the number of units.

Definition at line 55 of file DEPRECATED/RBMBinomialLayer.cc.

References PLearn::TVec< T >::resize(), and PLearn::sample().

{

size = the_size;

units_types = string( the_size, 'l' );

activations.resize( the_size );

sample.resize( the_size );

expectation.resize( the_size );

expectation_is_up_to_date = false;

}

| PLearn::RBMBinomialLayer::RBMBinomialLayer | ( | real | the_learning_rate = 0. | ) |

Default constructor.

Definition at line 53 of file RBMBinomialLayer.cc.

:

inherited( the_learning_rate ),

use_signed_samples( false )

{

}

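| PLearn::RBMBinomialLayer::RBMBinomialLayer | ( | int | the_size, |

| real | the_learning_rate = 0. | ||

| ) | |

Constructor from the number of units.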

Definition at line 59 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::activation, PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_neg_stats, PLearn::RBMLayer::bias_pos_stats, PLearn::RBMLayer::expectation, PLearn::TVec< T >::resize(), PLearn::RBMLayer::sample, and PLearn::RBMLayer::size.

:

inherited( the_learning_rate ),

use_signed_samples( false )

{

size = the_size;

activation.resize( the_size );

sample.resize( the_size );

expectation.resize( the_size );

bias.resize( the_size );

bias_pos_stats.resize( the_size );

bias_neg_stats.resize( the_size );

}

| string PLearn::RBMBinomialLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| static string PLearn::RBMBinomialLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| OptionList & PLearn::RBMBinomialLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| static OptionList& PLearn::RBMBinomialLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| RemoteMethodMap & PLearn::RBMBinomialLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| static RemoteMethodMap& PLearn::RBMBinomialLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

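| bool PLearn::RBMBinomialLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| static bool PLearn::RBMBinomialLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.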

| static Object* PLearn::RBMBinomialLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

| Object * PLearn::RBMBinomialLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| void PLearn::RBMBinomialLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| static void PLearn::RBMBinomialLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

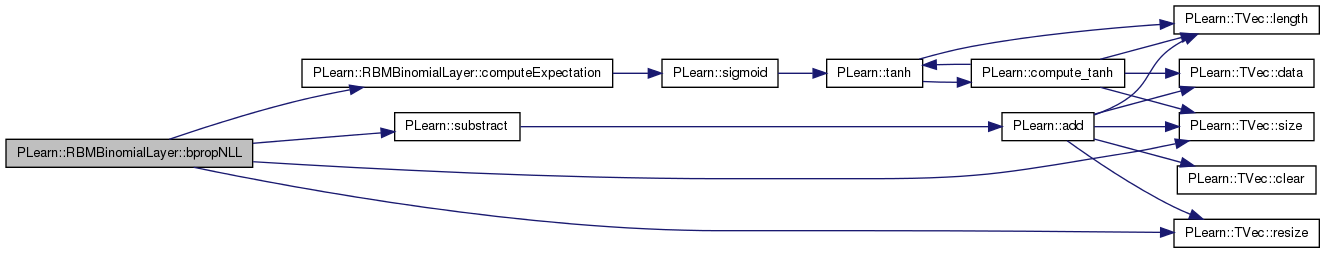

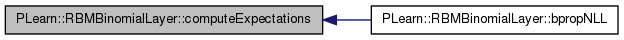

| void PLearn::RBMBinomialLayer::bpropNLL | ( | const Vec & | target, |

| real | nll, | ||

| Vec & | bias_gradient | ||

| ) | [virtual] |

Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations.

Reimplemented from PLearn::RBMLayer.

Definition at line 641 of file RBMBinomialLayer.cc.

References computeExpectation(), PLearn::RBMLayer::expectation, PLearn::OnlineLearningModule::input_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::substract().

{

computeExpectation();

PLASSERT( target.size() == input_size );

bias_gradient.resize( size );

// bias_gradient = expectation - target

substract(expectation, target, bias_gradient);

}
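The comment `bias_gradient = expectation - target` follows from differentiating the per-unit cost used by fpropNLL, softplus(a) - t*a, whose derivative with respect to the activation a is sigmoid(a) - t. The following is a minimal standalone sketch of that relation for unsigned units. It uses `std::vector<double>` in place of PLearn's `Vec`, and the names `sigmoid_ref` and `nll_bias_gradient` are illustrative only, not part of PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Logistic sigmoid; illustrative stand-in for PLearn::sigmoid().
double sigmoid_ref(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Gradient of sum_i [softplus(a_i) - t_i * a_i] with respect to a_i.
// The derivative is sigmoid(a_i) - t_i, i.e. expectation - target.
std::vector<double> nll_bias_gradient(const std::vector<double>& activation,
                                      const std::vector<double>& target)
{
    assert(activation.size() == target.size());
    std::vector<double> grad(activation.size());
    for (std::size_t i = 0; i < activation.size(); ++i)
        grad[i] = sigmoid_ref(activation[i]) - target[i];
    return grad;
}
```

For signed units the same subtraction applies: the per-unit cost softplus(2a) - (t+1)*a has derivative 2*sigmoid(2a) - 1 - t, which equals tanh(a) - t.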

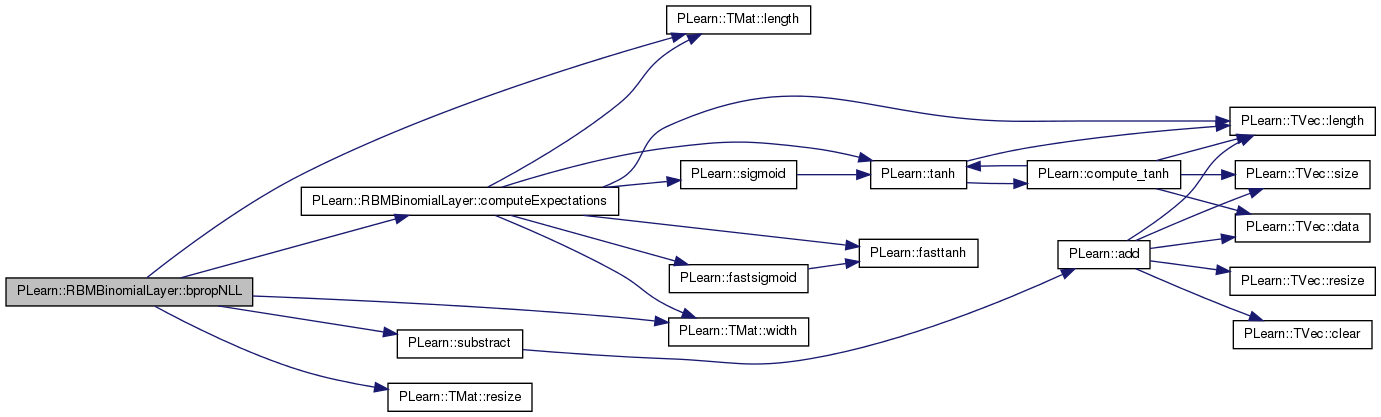

| void PLearn::RBMBinomialLayer::bpropNLL | ( | const Mat & | targets, |

| const Mat & | costs_column, | ||

| Mat & | bias_gradients | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 652 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::batch_size, computeExpectations(), PLearn::RBMLayer::expectations, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), PLearn::substract(), and PLearn::TMat< T >::width().

{

computeExpectations();

PLASSERT( targets.width() == input_size );

PLASSERT( targets.length() == batch_size );

PLASSERT( costs_column.width() == 1 );

PLASSERT( costs_column.length() == batch_size );

bias_gradients.resize( batch_size, size );

// bias_gradients = expectations - targets

substract(expectations, targets, bias_gradients);

}

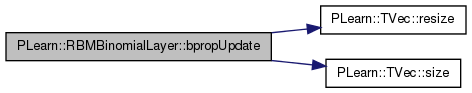

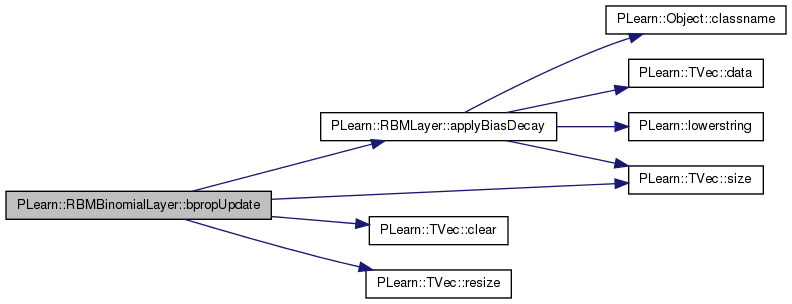

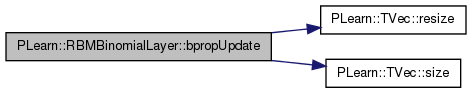

| void PLearn::RBMBinomialLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

back-propagates the output gradient to the input

Implements PLearn::RBMLayer.

Definition at line 102 of file DEPRECATED/RBMBinomialLayer.cc.

References i, PLASSERT, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

{

PLASSERT( input.size() == size );

PLASSERT( output.size() == size );

PLASSERT( output_gradient.size() == size );

input_gradient.resize( size );

for( int i=0 ; i<size ; i++ )

{

real output_i = output[i];

input_gradient[i] = - output_i * (1-output_i) * output_gradient[i];

}

}

| void PLearn::RBMBinomialLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false | ||

| ) | [virtual] |

back-propagates the output gradient to the input

Implements PLearn::RBMLayer.

Definition at line 257 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::applyBiasDecay(), PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_inc, PLearn::TVec< T >::clear(), i, PLearn::RBMLayer::learning_rate, PLearn::RBMLayer::momentum, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::RBMLayer::size, PLearn::TVec< T >::size(), and use_signed_samples.

{

PLASSERT( input.size() == size );

PLASSERT( output.size() == size );

PLASSERT( output_gradient.size() == size );

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == size,

"Cannot resize input_gradient AND accumulate into it" );

}

else

{

input_gradient.resize( size );

input_gradient.clear();

}

if( momentum != 0. )

bias_inc.resize( size );

if( use_signed_samples )

{

for( int i=0 ; i<size ; i++ )

{

real output_i = output[i];

real in_grad_i;

in_grad_i = (1 - output_i * output_i) * output_gradient[i];

input_gradient[i] += in_grad_i;

if( momentum == 0. )

{

// update the bias: bias -= learning_rate * input_gradient

bias[i] -= learning_rate * in_grad_i;

}

else

{

// The update rule becomes:

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;

bias[i] += bias_inc[i];

}

}

}

else

{

for( int i=0 ; i<size ; i++ )

{

real output_i = output[i];

real in_grad_i;

in_grad_i = output_i * (1-output_i) * output_gradient[i];

input_gradient[i] += in_grad_i;

if( momentum == 0. )

{

// update the bias: bias -= learning_rate * input_gradient

bias[i] -= learning_rate * in_grad_i;

}

else

{

// The update rule becomes:

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;

bias[i] += bias_inc[i];

}

}

}

applyBiasDecay();

}
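The two bias update rules described in the comments above (plain gradient step, and gradient step with momentum) can be isolated as in the sketch below. This is an illustration under assumptions, not PLearn code: it uses `std::vector<double>` instead of `Vec`, the function name `update_bias` is hypothetical, and bias decay (`applyBiasDecay()`) is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One bias update step following the rule in the listing's comments.
// bias_inc holds the running increment and is only used when momentum != 0.
void update_bias(std::vector<double>& bias,
                 std::vector<double>& bias_inc,
                 const std::vector<double>& in_grad,
                 double learning_rate,
                 double momentum)
{
    assert(bias.size() == in_grad.size());
    if (momentum != 0.0)
        bias_inc.resize(bias.size(), 0.0); // newly created entries start at zero

    for (std::size_t i = 0; i < bias.size(); ++i)
    {
        if (momentum == 0.0)
        {
            // bias -= learning_rate * input_gradient
            bias[i] -= learning_rate * in_grad[i];
        }
        else
        {
            // bias_inc = momentum * bias_inc - learning_rate * input_gradient
            // bias += bias_inc
            bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad[i];
            bias[i] += bias_inc[i];
        }
    }
}
```

With a constant gradient of 1, a learning rate of 0.1 and a momentum of 0.5, two successive calls move the bias by -0.1 and then by -0.15, since the second increment carries over half of the first.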

| void PLearn::RBMBinomialLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | rbm_bias, | ||

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| Vec & | rbm_bias_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

back-propagates the output gradient to the input and the bias

TODO: add "accumulate" here.

Reimplemented from PLearn::RBMLayer.

Definition at line 427 of file RBMBinomialLayer.cc.

References i, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, and use_signed_samples.

{

PLASSERT( input.size() == size );

PLASSERT( rbm_bias.size() == size );

PLASSERT( output.size() == size );

PLASSERT( output_gradient.size() == size );

input_gradient.resize( size );

rbm_bias_gradient.resize( size );

if( use_signed_samples )

{

for( int i=0 ; i<size ; i++ )

{

real output_i = output[i];

input_gradient[i] = ( 1 - output_i * output_i ) * output_gradient[i];

}

}

else

{

for( int i=0 ; i<size ; i++ )

{

real output_i = output[i];

input_gradient[i] = output_i * (1-output_i) * output_gradient[i];

}

}

rbm_bias_gradient << input_gradient;

}
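Both branches above rely on the fact that the derivative of the unit's nonlinearity can be written in terms of its output y: y*(1-y) for the sigmoid and 1-y*y for tanh. The sketch below shows this in isolation so it can be checked against a finite difference. The names `unit_output` and `backprop_through_unit` are hypothetical, and `std::vector<double>` stands in for `Vec`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Output of one unit: tanh for signed samples, logistic sigmoid otherwise.
double unit_output(double x, bool use_signed_samples)
{
    return use_signed_samples ? std::tanh(x) : 1.0 / (1.0 + std::exp(-x));
}

// Multiplies the output gradient by d(output)/d(input), expressed via the output:
//   sigmoid: y * (1 - y)      tanh: 1 - y * y
std::vector<double> backprop_through_unit(const std::vector<double>& output,
                                          const std::vector<double>& output_gradient,
                                          bool use_signed_samples)
{
    assert(output.size() == output_gradient.size());
    std::vector<double> input_gradient(output.size());
    for (std::size_t i = 0; i < output.size(); ++i)
    {
        const double y = output[i];
        const double dy = use_signed_samples ? (1.0 - y * y) : y * (1.0 - y);
        input_gradient[i] = dy * output_gradient[i];
    }
    return input_gradient;
}
```

Because the input to the unit is the sum of the incoming activation and the bias, the gradient with respect to the bias is the same vector, which is why the listing copies `input_gradient` into `rbm_bias_gradient`.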

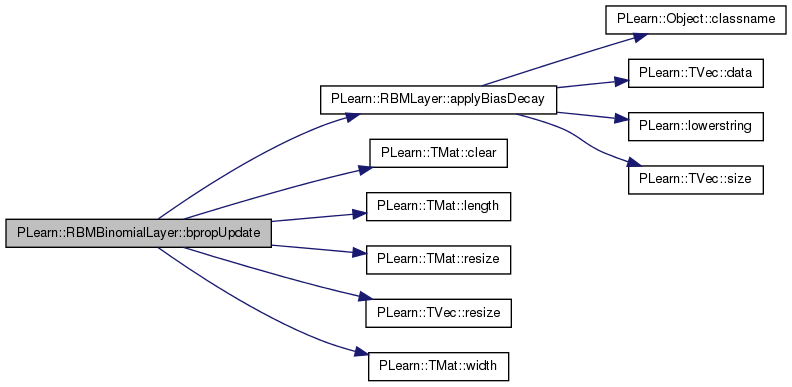

| void PLearn::RBMBinomialLayer::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false | ||

| ) | [virtual] |

Back-propagate the output gradient to the input, and update parameters.

Implements PLearn::RBMLayer.

Definition at line 331 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::applyBiasDecay(), PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_inc, PLearn::TMat< T >::clear(), i, j, PLearn::RBMLayer::learning_rate, PLearn::TMat< T >::length(), PLearn::RBMLayer::momentum, PLASSERT, PLASSERT_MSG, PLERROR, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::RBMLayer::size, use_signed_samples, and PLearn::TMat< T >::width().

{

PLASSERT( inputs.width() == size );

PLASSERT( outputs.width() == size );

PLASSERT( output_gradients.width() == size );

int mbatch_size = inputs.length();

PLASSERT( outputs.length() == mbatch_size );

PLASSERT( output_gradients.length() == mbatch_size );

if( accumulate )

{

PLASSERT_MSG( input_gradients.width() == size &&

input_gradients.length() == mbatch_size,

"Cannot resize input_gradients and accumulate into it" );

}

else

{

input_gradients.resize(mbatch_size, size);

input_gradients.clear();

}

if( momentum != 0. )

bias_inc.resize( size );

// TODO Can we do this more efficiently? (using BLAS)

// We use the average gradient over the mini-batch.

real avg_lr = learning_rate / inputs.length();

if( use_signed_samples )

{

for (int j = 0; j < mbatch_size; j++)

{

for( int i=0 ; i<size ; i++ )

{

real output_i = outputs(j, i);

real in_grad_i;

in_grad_i = (1 - output_i * output_i) * output_gradients(j, i);

input_gradients(j, i) += in_grad_i;

if( momentum == 0. )

{

// update the bias: bias -= learning_rate * input_gradient

bias[i] -= avg_lr * in_grad_i;

}

else

{

PLERROR("In RBMBinomialLayer:bpropUpdate - Not implemented for "

"momentum with mini-batches");

// The update rule becomes:

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;

bias[i] += bias_inc[i];

}

}

}

}

else

{

for (int j = 0; j < mbatch_size; j++)

{

for( int i=0 ; i<size ; i++ )

{

real output_i = outputs(j, i);

real in_grad_i;

in_grad_i = output_i * (1-output_i) * output_gradients(j, i);

input_gradients(j, i) += in_grad_i;

if( momentum == 0. )

{

// update the bias: bias -= learning_rate * input_gradient

bias[i] -= avg_lr * in_grad_i;

}

else

{

PLERROR("In RBMBinomialLayer:bpropUpdate - Not implemented for "

"momentum with mini-batches");

// The update rule becomes:

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;

bias[i] += bias_inc[i];

}

}

}

}

applyBiasDecay();

}
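In the mini-batch case without momentum, each example contributes `avg_lr * in_grad_i` to the bias, where `avg_lr` is the learning rate divided by the number of examples, so the net effect is one step along the average gradient. A minimal sketch of that accumulation follows. It assumes nested `std::vector` rows in place of `Mat`, and the names `Rows` and `minibatch_bias_update` are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef std::vector<std::vector<double> > Rows; // one inner vector per example

// Applies bias[i] -= (learning_rate / batch_size) * in_grads[j][i] for every
// example j, which amounts to a single step along the mean gradient.
void minibatch_bias_update(std::vector<double>& bias,
                           const Rows& in_grads,
                           double learning_rate)
{
    assert(!in_grads.empty());
    const double avg_lr = learning_rate / static_cast<double>(in_grads.size());
    for (std::size_t j = 0; j < in_grads.size(); ++j)
    {
        assert(in_grads[j].size() == bias.size());
        for (std::size_t i = 0; i < bias.size(); ++i)
            bias[i] -= avg_lr * in_grads[j][i];
    }
}
```

The sketch covers only the `momentum == 0` path. In the listing, the momentum branch calls PLERROR with the message that momentum is not implemented for mini-batches.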

| void PLearn::RBMBinomialLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

Definition at line 144 of file DEPRECATED/RBMBinomialLayer.cc.

{

inherited::build();

build_();

}
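The build()/build_() convention described above can be sketched with two toy classes. `BaseLayer` and `DerivedLayer` are hypothetical stand-ins for RBMLayer and RBMBinomialLayer, and the `log` member exists only to make the call order visible. This is an illustration of the pattern, not PLearn's implementation.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical base class standing in for RBMLayer.
class BaseLayer
{
public:
    std::vector<std::string> log; // records the order of build_ calls
    virtual ~BaseLayer() {}
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("BaseLayer::build_"); }
};

// Hypothetical derived class standing in for RBMBinomialLayer.
class DerivedLayer : public BaseLayer
{
    typedef BaseLayer inherited;
public:
    virtual void build()
    {
        inherited::build(); // parent state is built first
        build_();           // then this class's own (re)initialisation
    }
private:
    void build_()
    {
        assert(!log.empty()); // the parent must already have been built
        log.push_back("DerivedLayer::build_");
    }
};
```

Keeping build_() private and non-virtual means each class runs only its own step, while the virtual build() chains the steps from the base class downwards. Calling build() a second time repeats the whole chain, which matches the requirement that it be callable again after options change.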

| virtual void PLearn::RBMBinomialLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

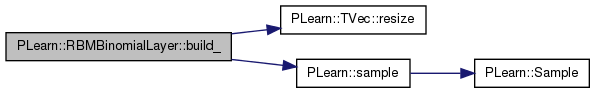

| void PLearn::RBMBinomialLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

Definition at line 131 of file DEPRECATED/RBMBinomialLayer.cc.

References PLearn::TVec< T >::resize(), and PLearn::sample().

{

if( size < 0 )

size = int(units_types.size());

if( size != (int) units_types.size() )

units_types = string( size, 'l' );

activations.resize( size );

sample.resize( size );

expectation.resize( size );

expectation_is_up_to_date = false;

}

| void PLearn::RBMBinomialLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

| virtual string PLearn::RBMBinomialLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| string PLearn::RBMBinomialLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

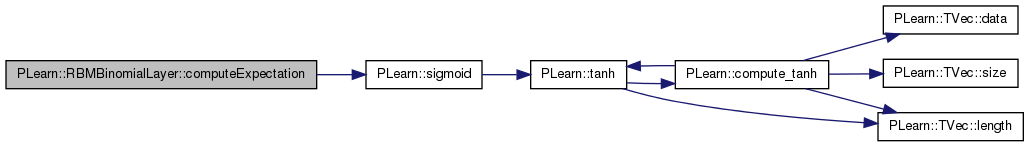

| void PLearn::RBMBinomialLayer::computeExpectation | ( | ) | [virtual] |

compute the expectation

Implements PLearn::RBMLayer.

Definition at line 91 of file DEPRECATED/RBMBinomialLayer.cc.

References i, and PLearn::sigmoid().

Referenced by bpropNLL().

{

if( expectation_is_up_to_date )

return;

for( int i=0 ; i<size ; i++ )

expectation[i] = sigmoid( -activations[i] );

expectation_is_up_to_date = true;

}
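The deprecated listing above caches its result behind the `expectation_is_up_to_date` flag, so repeated calls are free until the activations change. The sketch below reproduces that caching pattern in a standalone struct. The struct name, `setActivations` and `recompute_count` are assumptions made for illustration. The `sigmoid(-activations[i])` sign convention is copied from the deprecated listing, whereas the current implementation shown elsewhere on this page applies the sigmoid to the activation without negation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Illustrative layer that recomputes its expectation only when needed.
struct CachedExpectationLayer
{
    std::vector<double> activations;
    std::vector<double> expectation;
    bool expectation_is_up_to_date;
    int recompute_count; // instrumentation for this sketch only

    explicit CachedExpectationLayer(std::size_t n)
        : activations(n, 0.0), expectation(n, 0.0),
          expectation_is_up_to_date(false), recompute_count(0) {}

    // Changing the activations invalidates the cached expectation.
    void setActivations(const std::vector<double>& a)
    {
        assert(a.size() == activations.size());
        activations = a;
        expectation_is_up_to_date = false;
    }

    void computeExpectation()
    {
        if (expectation_is_up_to_date)
            return;
        for (std::size_t i = 0; i < activations.size(); ++i)
            expectation[i] = 1.0 / (1.0 + std::exp(activations[i])); // sigmoid(-a)
        expectation_is_up_to_date = true;
        ++recompute_count;
    }
};
```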

| virtual void PLearn::RBMBinomialLayer::computeExpectation | ( | ) | [virtual] |

Compute expectation.

Implements PLearn::RBMLayer.

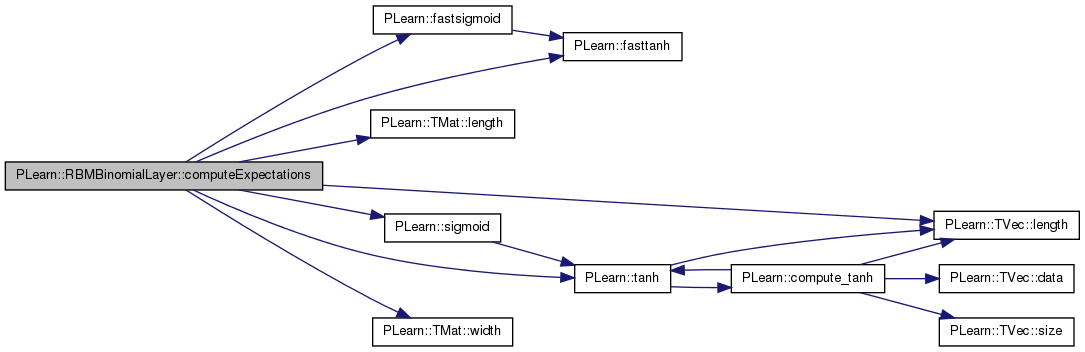

| void PLearn::RBMBinomialLayer::computeExpectations | ( | ) | [virtual] |

Compute mini-batch expectations.

Implements PLearn::RBMLayer.

Definition at line 150 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::activations, PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, PLearn::fastsigmoid(), PLearn::fasttanh(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLASSERT, PLearn::sigmoid(), PLearn::RBMLayer::size, PLearn::tanh(), PLearn::OnlineLearningModule::use_fast_approximations, use_signed_samples, and PLearn::TMat< T >::width().

Referenced by bpropNLL().

{

PLASSERT( activations.length() == batch_size );

if( expectations_are_up_to_date )

return;

PLASSERT( expectations.width() == size

&& expectations.length() == batch_size );

if( use_signed_samples )

if (use_fast_approximations)

for (int k = 0; k < batch_size; k++)

for (int i = 0 ; i < size ; i++)

expectations(k, i) = fasttanh(activations(k, i));

else

for (int k = 0; k < batch_size; k++)

for (int i = 0 ; i < size ; i++)

expectations(k, i) = tanh(activations(k, i));

else

if (use_fast_approximations)

for (int k = 0; k < batch_size; k++)

for (int i = 0 ; i < size ; i++)

expectations(k, i) = fastsigmoid(activations(k, i));

else

for (int k = 0; k < batch_size; k++)

for (int i = 0 ; i < size ; i++)

expectations(k, i) = sigmoid(activations(k, i));

expectations_are_up_to_date = true;

}
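The switch between sigmoid and tanh reflects the value range of the units: with samples in {0, 1} the mean is sigmoid(a), and with signed samples in {-1, +1} it is tanh(a), which equals 2*sigmoid(2a) - 1. A small sketch of the exact (non-approximate) branches follows. The helper names `unit_expectation` and `batch_expectations` are hypothetical, nested `std::vector` rows replace `Mat`, and the `fasttanh`/`fastsigmoid` approximations are not modelled.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

typedef std::vector<std::vector<double> > Rows; // one inner vector per example

// Mean of one unit given its activation a.
double unit_expectation(double a, bool use_signed_samples)
{
    return use_signed_samples ? std::tanh(a) : 1.0 / (1.0 + std::exp(-a));
}

// Element-wise expectations for a whole mini-batch of activations.
Rows batch_expectations(const Rows& activations, bool use_signed_samples)
{
    Rows expectations(activations.size());
    for (std::size_t k = 0; k < activations.size(); ++k)
    {
        expectations[k].resize(activations[k].size());
        for (std::size_t i = 0; i < activations[k].size(); ++i)
            expectations[k][i] = unit_expectation(activations[k][i], use_signed_samples);
    }
    assert(expectations.size() == activations.size());
    return expectations;
}
```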

| void PLearn::RBMBinomialLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

Definition at line 120 of file DEPRECATED/RBMBinomialLayer.cc.

{

/*

declareOption(ol, "size", &RBMBinomialLayer::size,

OptionBase::buildoption,

"Number of units.");

*/

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static void PLearn::RBMBinomialLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

| static const PPath& PLearn::RBMBinomialLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 97 of file DEPRECATED/RBMBinomialLayer.h.

| static const PPath& PLearn::RBMBinomialLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 149 of file RBMBinomialLayer.h.

| virtual RBMBinomialLayer* PLearn::RBMBinomialLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

| RBMBinomialLayer * PLearn::RBMBinomialLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

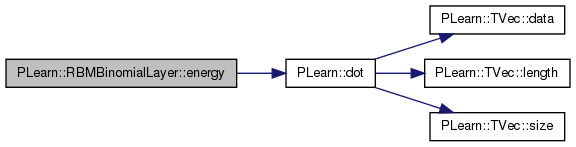

| real PLearn::RBMBinomialLayer::energy | ( | const Vec & | unit_values | ) | const [virtual] |

Computes -bias' unit_values.

Reimplemented from PLearn::RBMLayer.

Definition at line 694 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::bias, and PLearn::dot().

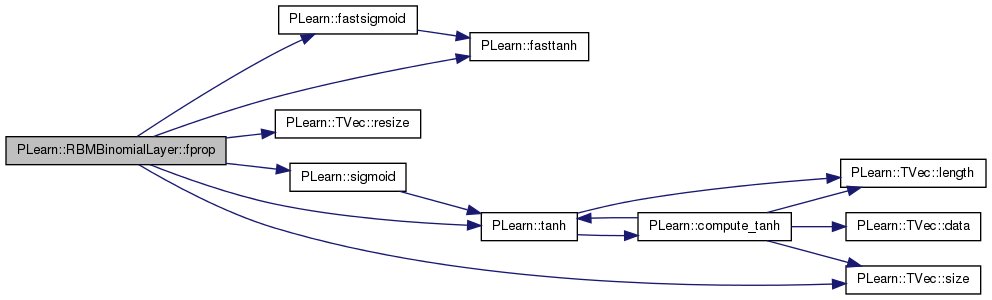

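| void PLearn::RBMBinomialLayer::fprop | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

forward propagation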

Reimplemented from PLearn::RBMLayer.

Definition at line 183 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::bias, PLearn::fastsigmoid(), PLearn::fasttanh(), PLearn::OnlineLearningModule::input_size, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::sigmoid(), PLearn::RBMLayer::size, PLearn::TVec< T >::size(), PLearn::tanh(), PLearn::OnlineLearningModule::use_fast_approximations, and use_signed_samples.

{

PLASSERT( input.size() == input_size );

output.resize( output_size );

if( use_signed_samples )

if (use_fast_approximations)

for( int i=0 ; i<size ; i++ )

output[i] = fasttanh( input[i] + bias[i] );

else

for( int i=0 ; i<size ; i++ )

output[i] = tanh( input[i] + bias[i] );

else

if (use_fast_approximations)

for( int i=0 ; i<size ; i++ )

output[i] = fastsigmoid( input[i] + bias[i] );

else

for( int i=0 ; i<size ; i++ )

output[i] = sigmoid( input[i] + bias[i] );

}

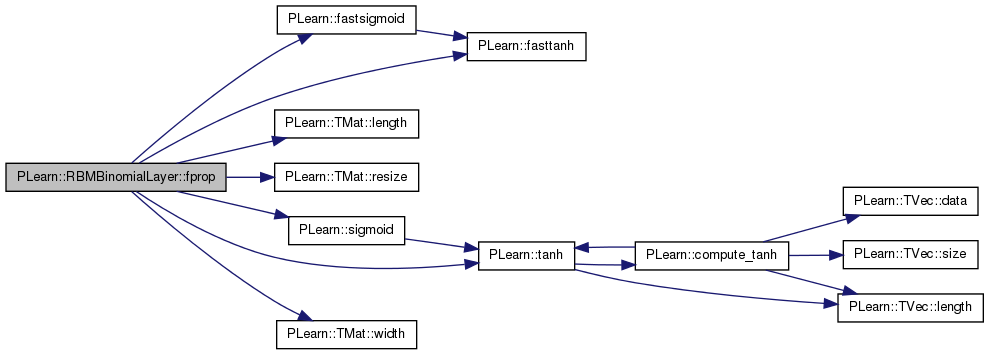

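| void PLearn::RBMBinomialLayer::fprop | ( | const Mat & | inputs, |

| Mat & | outputs | ||

| ) | [virtual] |

Batch forward propagation.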

Reimplemented from PLearn::RBMLayer.

Definition at line 204 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::bias, PLearn::fastsigmoid(), PLearn::fasttanh(), PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), PLearn::sigmoid(), PLearn::RBMLayer::size, PLearn::tanh(), PLearn::OnlineLearningModule::use_fast_approximations, use_signed_samples, and PLearn::TMat< T >::width().

{

int mbatch_size = inputs.length();

PLASSERT( inputs.width() == size );

outputs.resize( mbatch_size, size );

if( use_signed_samples )

if (use_fast_approximations)

for( int k = 0; k < mbatch_size; k++ )

for( int i = 0; i < size; i++ )

outputs(k,i) = fasttanh( inputs(k,i) + bias[i] );

else

for( int k = 0; k < mbatch_size; k++ )

for( int i = 0; i < size; i++ )

outputs(k,i) = tanh( inputs(k,i) + bias[i] );

else

if (use_fast_approximations)

for( int k = 0; k < mbatch_size; k++ )

for( int i = 0; i < size; i++ )

outputs(k,i) = fastsigmoid( inputs(k,i) + bias[i] );

else

for( int k = 0; k < mbatch_size; k++ )

for( int i = 0; i < size; i++ )

outputs(k,i) = sigmoid( inputs(k,i) + bias[i] );

}

| void PLearn::RBMBinomialLayer::fprop | ( | const Vec & | input, |

| const Vec & | rbm_bias, | ||

| Vec & | output | ||

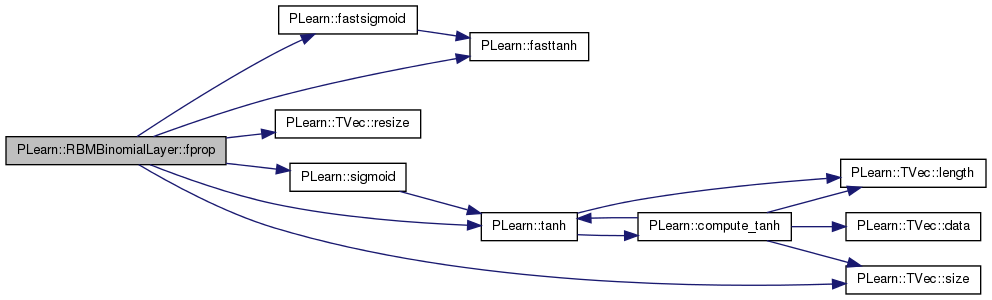

| ) | const [virtual] |

forward propagation with provided bias

Reimplemented from PLearn::RBMLayer.

Definition at line 231 of file RBMBinomialLayer.cc.

References PLearn::fastsigmoid(), PLearn::fasttanh(), PLearn::OnlineLearningModule::input_size, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::tanh(), PLearn::OnlineLearningModule::use_fast_approximations, and use_signed_samples.

{

PLASSERT( input.size() == input_size );

PLASSERT( rbm_bias.size() == input_size );

output.resize( output_size );

if( use_signed_samples )

if (use_fast_approximations)

for( int i=0 ; i<size ; i++ )

output[i] = fasttanh( input[i] + rbm_bias[i]);

else

for( int i=0 ; i<size ; i++ )

output[i] =tanh( input[i] + rbm_bias[i]);

else

if (use_fast_approximations)

for( int i=0 ; i<size ; i++ )

output[i] = fastsigmoid( input[i] + rbm_bias[i]);

else

for( int i=0 ; i<size ; i++ )

output[i] = sigmoid( input[i] + rbm_bias[i]);

}

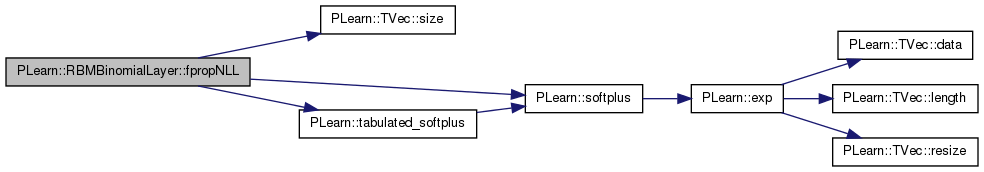

| real PLearn::RBMBinomialLayer::fpropNLL | ( | const Vec & | target | ) | [virtual] |

Computes the negative log-likelihood of target given the internal activations of the layer.

Reimplemented from PLearn::RBMLayer.

Definition at line 460 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::activation, i, PLearn::OnlineLearningModule::input_size, PLASSERT, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::softplus(), PLearn::tabulated_softplus(), PLearn::OnlineLearningModule::use_fast_approximations, and use_signed_samples.

{

PLASSERT( target.size() == input_size );

real ret = 0;

real target_i, activation_i;

if( use_signed_samples )

{

if(use_fast_approximations){

for( int i=0 ; i<size ; i++ )

{

target_i = (target[i]+1)/2;

activation_i = 2*activation[i];

ret += tabulated_softplus(activation_i) - target_i * activation_i;

// nll = - target*log(sigmoid(act)) -(1-target)*log(1-sigmoid(act))

// but it is numerically unstable, so use instead the following identity:

// = target*softplus(-act) +(1-target)*(act+softplus(-act))

// = act + softplus(-act) - target*act

// = softplus(act) - target*act

}

} else {

for( int i=0 ; i<size ; i++ )

{

target_i = (target[i]+1)/2;

activation_i = 2*activation[i];

ret += softplus(activation_i) - target_i * activation_i;

}

}

}

else

{

if(use_fast_approximations){

for( int i=0 ; i<size ; i++ )

{

target_i = target[i];

activation_i = activation[i];

ret += tabulated_softplus(activation_i) - target_i * activation_i;

// nll = - target*log(sigmoid(act)) -(1-target)*log(1-sigmoid(act))

// but it is numerically unstable, so use instead the following identity:

// = target*softplus(-act) +(1-target)*(act+softplus(-act))

// = act + softplus(-act) - target*act

// = softplus(act) - target*act

}

} else {

for( int i=0 ; i<size ; i++ )

{

target_i = target[i];

activation_i = activation[i];

ret += softplus(activation_i) - target_i * activation_i;

}

}

}

return ret;

}
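The comments in the listing explain why the cost is computed as softplus(act) - target*act rather than from the logarithms of the sigmoid. The sketch below places the two forms side by side for unsigned units so the identity can be checked numerically. It is an illustration only: `softplus_ref`, `binomial_nll` and `naive_binomial_nll` are hypothetical names, `std::vector<double>` replaces `Vec`, and `softplus_ref` is a simple stand-in whose details may differ from PLearn's `softplus()`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable log(1 + exp(x)).
double softplus_ref(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Stable form used in the listing: sum_i softplus(a_i) - t_i * a_i.
double binomial_nll(const std::vector<double>& activation,
                    const std::vector<double>& target)
{
    assert(activation.size() == target.size());
    double nll = 0;
    for (std::size_t i = 0; i < activation.size(); ++i)
        nll += softplus_ref(activation[i]) - target[i] * activation[i];
    return nll;
}

// Direct form, for comparison only:
// -t*log(sigmoid(a)) - (1-t)*log(1 - sigmoid(a)).
double naive_binomial_nll(const std::vector<double>& activation,
                          const std::vector<double>& target)
{
    assert(activation.size() == target.size());
    double nll = 0;
    for (std::size_t i = 0; i < activation.size(); ++i)
    {
        const double p = 1.0 / (1.0 + std::exp(-activation[i]));
        nll += -target[i] * std::log(p) - (1.0 - target[i]) * std::log(1.0 - p);
    }
    return nll;
}
```

For moderate activations the two functions agree to rounding error. For activations of large magnitude the direct form has to take the logarithm of a value that rounds to zero, which the softplus form avoids.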

Computes the weighted negative log-likelihood of target given the internal activations of the layer.

Reimplemented from PLearn::RBMLayer.

Definition at line 516 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::activation, i, PLearn::OnlineLearningModule::input_size, PLASSERT, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::softplus(), PLearn::tabulated_softplus(), PLearn::OnlineLearningModule::use_fast_approximations, and use_signed_samples.

{

PLASSERT( target.size() == input_size );

PLASSERT( target.size() == cost_weights.size() );

PLASSERT (cost_weights.size() == size );

real ret = 0;

real target_i, activation_i;

if( use_signed_samples )

{

if(use_fast_approximations){

for( int i=0 ; i<size ; i++ )

{

if(cost_weights[i] != 0)

{

target_i = (target[i]+1)/2;

activation_i = 2*activation[i];

ret += cost_weights[i]*(tabulated_softplus(activation_i) - target_i * activation_i);

}

// nll = - target*log(sigmoid(act)) -(1-target)*log(1-sigmoid(act))

// but it is numerically unstable, so use instead the following identity:

// = target*softplus(-act) +(1-target)*(act+softplus(-act))

// = act + softplus(-act) - target*act

// = softplus(act) - target*act

}

} else {

for( int i=0 ; i<size ; i++ )

{

if(cost_weights[i] != 0)

{

target_i = (target[i]+1)/2;

activation_i = 2*activation[i];

ret += cost_weights[i]*(softplus(activation_i) - target_i * activation_i);

}

}

}

}

else

{

if(use_fast_approximations){

for( int i=0 ; i<size ; i++ )

{

if(cost_weights[i] != 0)

{

target_i = target[i];

activation_i = activation[i];

ret += cost_weights[i]*(tabulated_softplus(activation_i) - target_i * activation_i);

}

// nll = - target*log(sigmoid(act)) -(1-target)*log(1-sigmoid(act))

// but it is numerically unstable, so use instead the following identity:

// = target*softplus(-act) +(1-target)*(act+softplus(-act))

// = act + softplus(-act) - target*act

// = softplus(act) - target*act

}

} else {

for( int i=0 ; i<size ; i++ )

{

if(cost_weights[i] != 0)

{

target_i = target[i];

activation_i = activation[i];

ret += cost_weights[i]*(softplus(activation_i) - target_i * activation_i);

}

}

}

}

return ret;

}
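The weighted variant scales each unit's cost by `cost_weights[i]` and skips units whose weight is exactly zero, so the weights can also serve as a mask. A minimal sketch of the unsigned, non-approximate branch follows. The names `softplus_ref` and `weighted_binomial_nll` are hypothetical and `std::vector<double>` stands in for `Vec`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable log(1 + exp(x)); stand-in for PLearn's softplus().
double softplus_ref(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// sum_i w_i * (softplus(a_i) - t_i * a_i), skipping units with w_i == 0.
double weighted_binomial_nll(const std::vector<double>& activation,
                             const std::vector<double>& target,
                             const std::vector<double>& cost_weights)
{
    assert(activation.size() == target.size());
    assert(cost_weights.size() == target.size());
    double nll = 0;
    for (std::size_t i = 0; i < activation.size(); ++i)
    {
        if (cost_weights[i] != 0)
            nll += cost_weights[i]
                   * (softplus_ref(activation[i]) - target[i] * activation[i]);
    }
    return nll;
}
```

With all weights equal to 1 this reduces to the unweighted cost.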

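| void PLearn::RBMBinomialLayer::fpropNLL | ( | const Mat & | targets, |

| const Mat & | costs_column | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMLayer.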

Definition at line 588 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::activation, PLearn::RBMLayer::activations, PLearn::RBMLayer::batch_size, i, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLASSERT, PLearn::RBMLayer::size, PLearn::softplus(), PLearn::tabulated_softplus(), PLearn::OnlineLearningModule::use_fast_approximations, use_signed_samples, and PLearn::TMat< T >::width().

{

PLASSERT( targets.width() == input_size );

PLASSERT( targets.length() == batch_size );

PLASSERT( costs_column.width() == 1 );

PLASSERT( costs_column.length() == batch_size );

if( use_signed_samples )

{

for (int k=0;k<batch_size;k++) // loop over minibatch

{

real nll = 0;

real* activation = activations[k];

real* target = targets[k];

if(use_fast_approximations){

for( int i=0 ; i<size ; i++ ) // loop over outputs

{

nll += tabulated_softplus(2*activation[i])

- (target[i]+1) * activation[i] ;

}

} else {

for( int i=0 ; i<size ; i++ ) // loop over outputs

{

nll += softplus(2*activation[i]) - (target[i]+1)*activation[i] ;

}

}

costs_column(k,0) = nll;

}

}

else

{

for (int k=0;k<batch_size;k++) // loop over minibatch

{

real nll = 0;

real* activation = activations[k];

real* target = targets[k];

if(use_fast_approximations){

for( int i=0 ; i<size ; i++ ) // loop over outputs

{

nll += tabulated_softplus(activation[i])

-target[i] * activation[i] ;

}

} else {

for( int i=0 ; i<size ; i++ ) // loop over outputs

{

nll += softplus(activation[i]) - target[i] * activation[i] ;

}

}

costs_column(k,0) = nll;

}

}

}
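The signed branch of the mini-batch code above uses softplus(2a) - (t+1)a. This is the unsigned expression softplus(a') - t'a' after substituting a' = 2a and t' = (t+1)/2, which is the mapping used in the single-example version. A minimal sketch of the per-row computation, using plain `std::vector` rows instead of PLearn's `Mat` (the names `Row`, `softplus_sketch` and `batch_nll` are hypothetical):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Row = std::vector<double>;

// Stable softplus; hypothetical stand-in for PLearn::softplus.
double softplus_sketch(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// One NLL value per mini-batch row.
// Signed targets are in {-1,+1}; unsigned targets are in {0,1}.
std::vector<double> batch_nll(const std::vector<Row>& activations,
                              const std::vector<Row>& targets,
                              bool use_signed_samples)
{
    assert(activations.size() == targets.size());
    std::vector<double> costs(activations.size(), 0.0);
    for (std::size_t k = 0; k < activations.size(); ++k)   // loop over minibatch
    {
        assert(activations[k].size() == targets[k].size());
        for (std::size_t i = 0; i < activations[k].size(); ++i) // loop over units
        {
            const double a = activations[k][i];
            const double t = targets[k][i];
            costs[k] += use_signed_samples
                      ? softplus_sketch(2 * a) - (t + 1) * a
                      : softplus_sketch(a) - t * a;
        }
    }
    return costs;
}
```

Calling `batch_nll` in signed mode on activations a and targets t gives the same costs as calling it in unsigned mode on 2a and (t+1)/2.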

| real PLearn::RBMBinomialLayer::freeEnergyContribution | ( | const Vec & | unit_activations | ) | const [virtual] |

Computes $-\sum_{i=0}^{\mathrm{size}-1} \operatorname{softplus}(a_i)$, where $a_i$ is the $i$-th entry of unit_activations (with use_signed_samples, each term is $\operatorname{softplus}(2a_i) - a_i$ instead). This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed.

Reimplemented from PLearn::RBMLayer.

Definition at line 699 of file RBMBinomialLayer.cc.

References a, PLearn::TVec< T >::data(), i, PLASSERT, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::softplus(), PLearn::tabulated_softplus(), PLearn::OnlineLearningModule::use_fast_approximations, and use_signed_samples.

{

PLASSERT( unit_activations.size() == size );

// result = -\sum_{i=0}^{size-1} softplus(a_i)

real result = 0;

real* a = unit_activations.data();

if( use_signed_samples )

{

for (int i=0; i<size; i++)

{

if (use_fast_approximations)

result -= tabulated_softplus(2*a[i]) - a[i];

else

result -= softplus(2*a[i]) - a[i];

}

}

else

{

for (int i=0; i<size; i++)

{

if (use_fast_approximations)

result -= tabulated_softplus(a[i]);

else

result -= softplus(a[i]);

}

}

return result;

}
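The closed form in the code above can be compared with a brute-force sum over all unit configurations: summing exp(h·a) over h in {0,1}^n factorizes into a product of (1 + exp(a_i)), so its negative log is -Σ softplus(a_i); over h in {-1,+1}^n the same argument gives -Σ (softplus(2a_i) - a_i). The sketch below checks this numerically for small layers. All function names are hypothetical and not part of PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Stable softplus; hypothetical stand-in for PLearn::softplus.
double softplus_sketch(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Closed form, mirroring the loop in freeEnergyContribution.
double free_energy_contribution(const std::vector<double>& a,
                                bool use_signed_samples)
{
    double result = 0.0;
    for (double ai : a)
        result -= use_signed_samples ? softplus_sketch(2 * ai) - ai
                                     : softplus_sketch(ai);
    return result;
}

// Brute force: -log( sum over all configurations h of exp(h . a) ).
// Exponential in a.size(), so restricted to small layers.
double brute_force_free_energy(const std::vector<double>& a,
                               bool use_signed_samples)
{
    assert(a.size() < 20);
    const std::size_t n = a.size();
    double total = 0.0;
    for (unsigned long conf = 0; conf < (1UL << n); ++conf)
    {
        double dot = 0.0;
        for (std::size_t i = 0; i < n; ++i)
        {
            const double bit = static_cast<double>((conf >> i) & 1UL);
            const double h = use_signed_samples ? 2 * bit - 1 : bit;
            dot += h * a[i];
        }
        total += std::exp(dot);
    }
    return -std::log(total);
}
```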

| void PLearn::RBMBinomialLayer::freeEnergyContributionGradient | ( | const Vec & | unit_activations, |

| Vec & | unit_activations_gradient, | ||

| real | output_gradient = 1, |

||

| bool | accumulate = false |

||

| ) | const [virtual] |

Computes the gradient of the result of freeEnergyContribution with respect to unit_activations.

Optionally, a gradient with respect to freeEnergyContribution can be given (output_gradient, which defaults to 1).

Reimplemented from PLearn::RBMLayer.

Definition at line 730 of file RBMBinomialLayer.cc.

References a, PLearn::TVec< T >::clear(), PLearn::TVec< T >::data(), PLearn::fastsigmoid(), PLearn::fasttanh(), i, PLASSERT, PLearn::TVec< T >::resize(), PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, PLearn::tanh(), PLearn::OnlineLearningModule::use_fast_approximations, and use_signed_samples.

{

PLASSERT( unit_activations.size() == size );

unit_activations_gradient.resize( size );

if( !accumulate ) unit_activations_gradient.clear();

real* a = unit_activations.data();

real* ga = unit_activations_gradient.data();

if( use_signed_samples )

{

for (int i=0; i<size; i++)

{

if (use_fast_approximations)

ga[i] -= output_gradient *

( fasttanh( a[i] ) );

else

ga[i] -= output_gradient *

( tanh( a[i] ) );

}

}

else

{

for (int i=0; i<size; i++)

{

if (use_fast_approximations)

ga[i] -= output_gradient *

fastsigmoid( a[i] );

else

ga[i] -= output_gradient *

sigmoid( a[i] );

}

}

}
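The gradient code above follows from differentiating the free energy term by term: the derivative of -softplus(a) is -sigmoid(a), and the derivative of -(softplus(2a) - a) is -(2·sigmoid(2a) - 1) = -tanh(a). A minimal sketch that reproduces the update and checks it against central finite differences (names are hypothetical, and `std::vector` replaces PLearn's `Vec`):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Stable softplus; hypothetical stand-in for PLearn::softplus.
double softplus_sketch(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Free energy contribution, as in freeEnergyContribution.
double free_energy(const std::vector<double>& a, bool use_signed_samples)
{
    double result = 0.0;
    for (double ai : a)
        result -= use_signed_samples ? softplus_sketch(2 * ai) - ai
                                     : softplus_sketch(ai);
    return result;
}

// Analytic gradient; ga is cleared first unless accumulate is true.
void free_energy_gradient(const std::vector<double>& a,
                          std::vector<double>& ga,
                          double output_gradient = 1.0,
                          bool accumulate = false,
                          bool use_signed_samples = false)
{
    ga.resize(a.size());
    if (!accumulate) std::fill(ga.begin(), ga.end(), 0.0);
    for (std::size_t i = 0; i < a.size(); ++i)
    {
        const double d = use_signed_samples
                       ? std::tanh(a[i])
                       : 1.0 / (1.0 + std::exp(-a[i]));
        ga[i] -= output_gradient * d;
    }
}

// Central finite difference of free_energy along coordinate i.
double numeric_gradient(std::vector<double> a, std::size_t i,
                        bool use_signed_samples, double eps = 1e-6)
{
    assert(i < a.size());
    const double original = a[i];
    a[i] = original + eps;
    const double up = free_energy(a, use_signed_samples);
    a[i] = original - eps;
    const double down = free_energy(a, use_signed_samples);
    return (up - down) / (2 * eps);
}
```

With `accumulate` set, repeated calls add into `ga`, which matches the `if( !accumulate ) ... clear()` guard in the code above.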

| virtual void PLearn::RBMBinomialLayer::generateSample | ( | ) | [virtual] |

generate a sample, and update the sample field

Implements PLearn::RBMLayer.

| void PLearn::RBMBinomialLayer::generateSample | ( | ) | [virtual] |

generate a sample, and update the sample field

Implements PLearn::RBMLayer.

Definition at line 83 of file DEPRECATED/RBMBinomialLayer.cc.

References i, and PLearn::sample().

{

computeExpectation();

for( int i=0 ; i<size ; i++ )

sample[i] = random_gen->binomial_sample( expectation[i] );

}

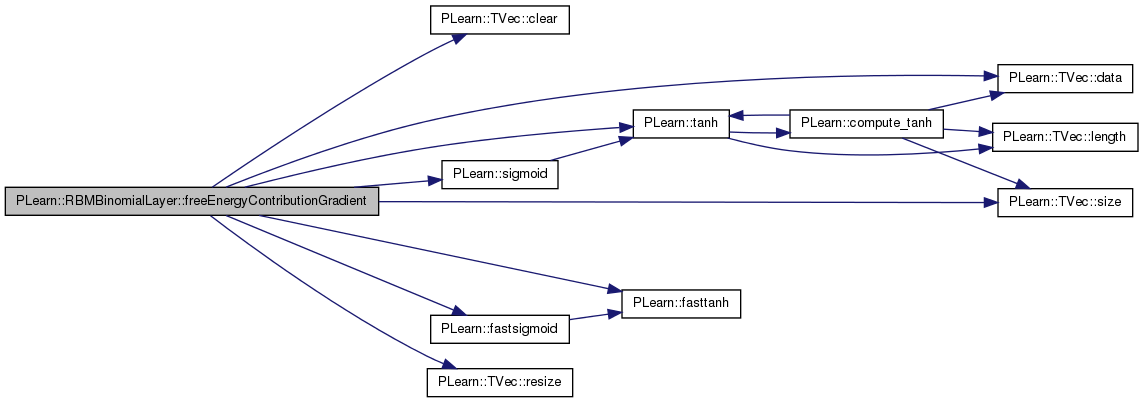

| void PLearn::RBMBinomialLayer::generateSamples | ( | ) | [virtual] |

Inherited. Generates a mini-batch of samples from the current expectations, which must be up to date.

Implements PLearn::RBMLayer.

Definition at line 96 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, i, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLCHECK_MSG, PLearn::RBMLayer::random_gen, PLearn::RBMLayer::samples, PLearn::RBMLayer::size, use_signed_samples, and PLearn::TMat< T >::width().

{

PLASSERT_MSG(random_gen,

"random_gen should be initialized before generating samples");

PLCHECK_MSG(expectations_are_up_to_date, "Expectations should be computed "

"before calling generateSamples()");

PLASSERT( samples.width() == size && samples.length() == batch_size );

//random_gen->manual_seed(1827);

if( use_signed_samples )

for (int k = 0; k < batch_size; k++) {

for (int i=0 ; i<size ; i++)

samples(k, i) = 2*random_gen->binomial_sample( (expectations(k, i)+1)/2 )-1;

}

else

for (int k = 0; k < batch_size; k++) {

for (int i=0 ; i<size ; i++)

samples(k, i) = random_gen->binomial_sample( expectations(k, i) );

}

}
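In the signed branch above, the expectation lies in [-1, 1], so it is first mapped to a probability (e+1)/2, a {0,1} draw is taken, and the draw is mapped back to {-1,+1} with 2b-1. The sketch below shows the same logic for a single row, using `std::bernoulli_distribution` in place of PLearn's `random_gen->binomial_sample`, which is assumed here to return a single 0/1 draw. `sample_units` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Draws one sample per unit from its expectation.
// Unsigned: expectation in [0,1], sample in {0,1}.
// Signed:   expectation in [-1,1], sample in {-1,+1}.
std::vector<double> sample_units(const std::vector<double>& expectation,
                                 bool use_signed_samples,
                                 std::mt19937& rng)
{
    std::vector<double> sample(expectation.size());
    for (std::size_t i = 0; i < expectation.size(); ++i)
    {
        // Probability that the underlying {0,1} draw is 1.
        const double p = use_signed_samples ? (expectation[i] + 1) / 2
                                            : expectation[i];
        assert(p >= 0.0 && p <= 1.0);
        std::bernoulli_distribution coin(p);
        const double b = coin(rng) ? 1.0 : 0.0;
        sample[i] = use_signed_samples ? 2 * b - 1 : b;
    }
    return sample;
}
```

Seeding the generator (for example `std::mt19937 rng(1827);`) makes runs reproducible, which is presumably what the commented-out `manual_seed` call above was used for.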

| void PLearn::RBMBinomialLayer::getAllActivations | ( | PP< RBMParameters > | rbmp, |

| int | offset = 0 |

||

| ) | [virtual] |

Uses "rbmp" to obtain the activations of all units in this layer.

Unit 0 of this layer corresponds to unit "offset" of "rbmp".

Implements PLearn::RBMLayer.

Definition at line 77 of file DEPRECATED/RBMBinomialLayer.cc.

{

rbmp->computeUnitActivations( offset, size, activations );

expectation_is_up_to_date = false;

}

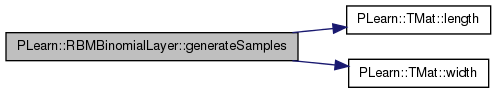

Computes the configuration of the layer with index conf_index and writes it to output (getConfiguration()).

Reimplemented from PLearn::RBMLayer.

Definition at line 771 of file RBMBinomialLayer.cc.

References getConfigurationCount(), i, PLearn::TVec< T >::length(), PLASSERT, PLearn::RBMLayer::size, and use_signed_samples.

{

PLASSERT( output.length() == size );

PLASSERT( conf_index >= 0 && conf_index < getConfigurationCount() );

if( use_signed_samples )

{

for ( int i = 0; i < size; ++i ) {

output[i] = 2 * (conf_index & 1) - 1;

conf_index >>= 1;

}

}

else

{

for ( int i = 0; i < size; ++i ) {

output[i] = conf_index & 1;

conf_index >>= 1;

}

}

}
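The loop above reads conf_index as a binary number whose least significant bit is unit 0: each iteration takes the low bit and shifts right. A minimal standalone sketch of this decoding follows; `get_configuration` is a hypothetical free function that returns the result instead of filling a PLearn `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Decodes conf_index into one value per unit, least significant bit first.
// Unsigned units take values in {0,1}; signed units take values in {-1,+1}.
std::vector<double> get_configuration(int conf_index, int size,
                                      bool use_signed_samples)
{
    assert(size >= 0 && size < 31);                   // 1 << size must fit in int
    assert(conf_index >= 0 && conf_index < (1 << size));
    std::vector<double> output(static_cast<std::size_t>(size));
    for (int i = 0; i < size; ++i)
    {
        const int bit = conf_index & 1;
        output[static_cast<std::size_t>(i)] = use_signed_samples ? 2 * bit - 1 : bit;
        conf_index >>= 1;
    }
    return output;
}
```

For example, with three units, index 6 (binary 110) decodes to (0, 1, 1) in the unsigned case and to (-1, 1, 1) in the signed case.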

| int PLearn::RBMBinomialLayer::getConfigurationCount | ( | ) | [virtual] |

Returns the number of different configurations the layer can be in.

Reimplemented from PLearn::RBMLayer.

Definition at line 766 of file RBMBinomialLayer.cc.

References PLearn::RBMLayer::INFINITE_CONFIGURATIONS.

Referenced by getConfiguration().

{

return size < 31 ? 1<<size : INFINITE_CONFIGURATIONS;

}

| virtual OptionList& PLearn::RBMBinomialLayer::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| OptionList & PLearn::RBMBinomialLayer::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| OptionMap & PLearn::RBMBinomialLayer::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| virtual OptionMap& PLearn::RBMBinomialLayer::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| RemoteMethodMap & PLearn::RBMBinomialLayer::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMBinomialLayer.cc.

| virtual RemoteMethodMap& PLearn::RBMBinomialLayer::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| void PLearn::RBMBinomialLayer::getUnitActivations | ( | int | i, |

| PP< RBMParameters > | rbmp, | ||

| int | offset = 0 |

||

| ) | [virtual] |

Uses "rbmp" to obtain the activations of unit "i" of this layer.

This activation is computed by the "i+offset"-th unit of "rbmp".

Implements PLearn::RBMLayer.

Definition at line 67 of file DEPRECATED/RBMBinomialLayer.cc.

References PLearn::TVec< T >::subVec().

{

Vec activation = activations.subVec( i, 1 );

rbmp->computeUnitActivations( i+offset, 1, activation );

expectation_is_up_to_date = false;

}

| virtual void PLearn::RBMBinomialLayer::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMLayer.

| void PLearn::RBMBinomialLayer::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMLayer.

Definition at line 151 of file DEPRECATED/RBMBinomialLayer.cc.

{

inherited::makeDeepCopyFromShallowCopy(copies);

}

static StaticInitializer PLearn::RBMBinomialLayer::_static_initializer_ [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 97 of file DEPRECATED/RBMBinomialLayer.h.

Definition at line 60 of file RBMBinomialLayer.h.

Referenced by bpropUpdate(), computeExpectations(), fprop(), fpropNLL(), freeEnergyContribution(), freeEnergyContributionGradient(), generateSamples(), and getConfiguration().

1.7.4
