PLearn 0.1

#include <SmoothedProbSparseMatrix.h>

Public Member Functions | |

| bool | checkCondProbIntegrity () |

| SmoothedProbSparseMatrix (int n_rows=0, int n_cols=0, string name="pXY", int mode=ROW_WISE, bool double_access=false) | |

| void | normalizeCondLaplace (ProbSparseMatrix &nXY, bool clear_nXY=false) |

| void | normalizeCondBackoff (ProbSparseMatrix &nXY, real disc, Vec &bDist, bool clear_nXY, bool shadow) |

| string | getClassName () const |

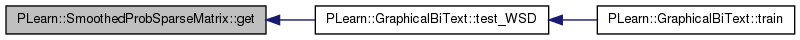

| real | get (int i, int j) |

| void | write (PStream &out) const |

| void | read (PStream &in) |

Protected Attributes | |

| int | smoothingMethod |

| Vec | normalizationSum |

| Vec | backoffDist |

| Vec | backoffNormalization |

| Vec | discountedMass |

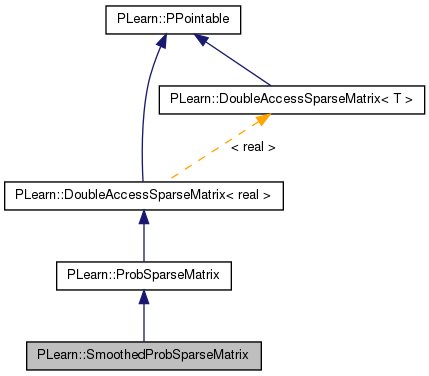

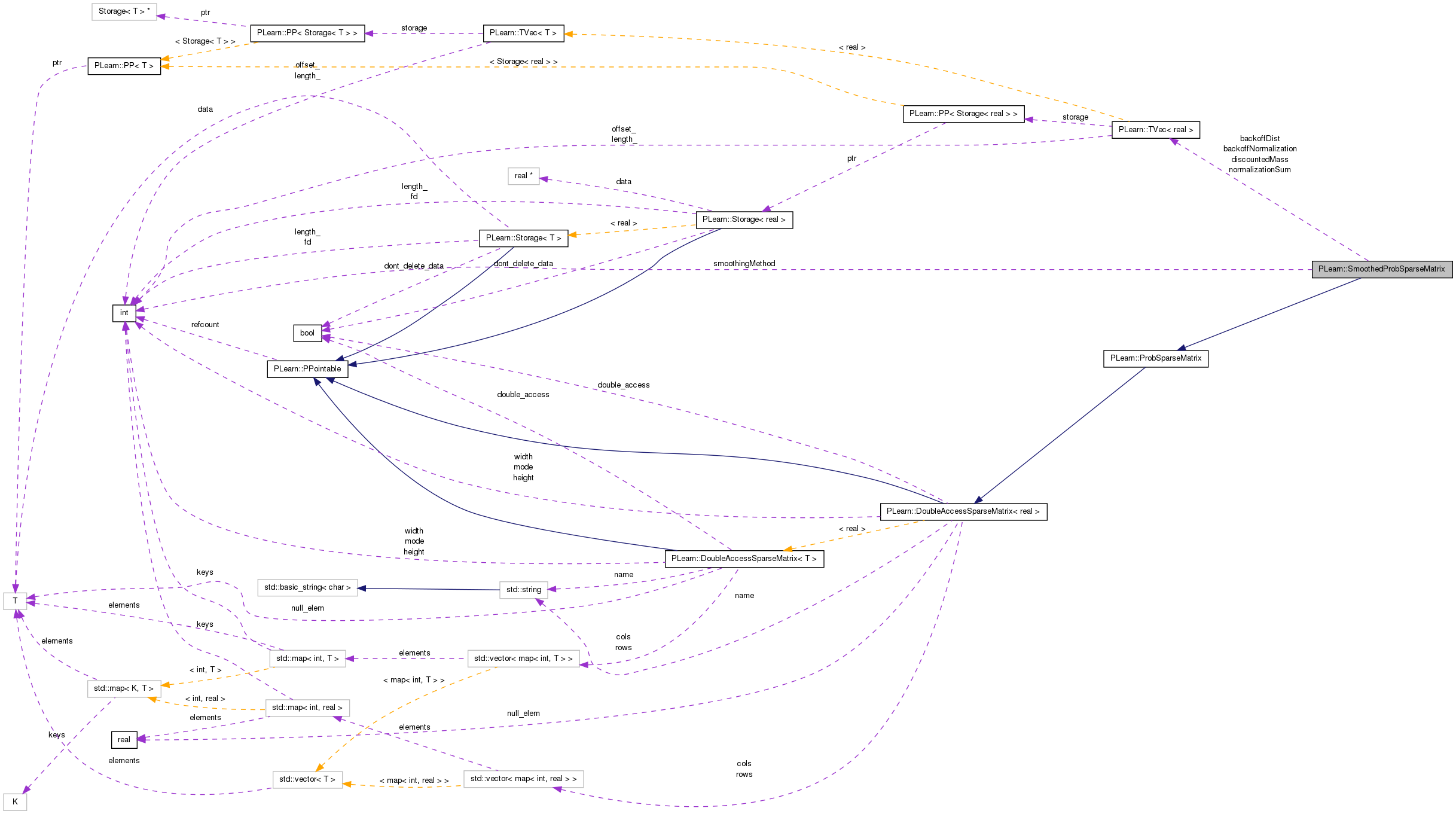

Definition at line 46 of file SmoothedProbSparseMatrix.h.

| PLearn::SmoothedProbSparseMatrix::SmoothedProbSparseMatrix | ( | int n_rows = 0, int n_cols = 0, string name = "pXY", int mode = ROW_WISE, bool double_access = false | ) |

Definition at line 41 of file SmoothedProbSparseMatrix.cc.

References smoothingMethod.

:ProbSparseMatrix(n_rows, n_cols, name, mode, double_access) { smoothingMethod = 0; }

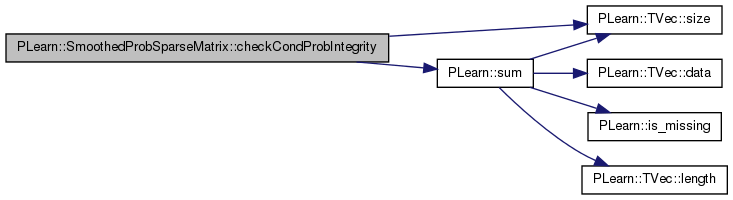

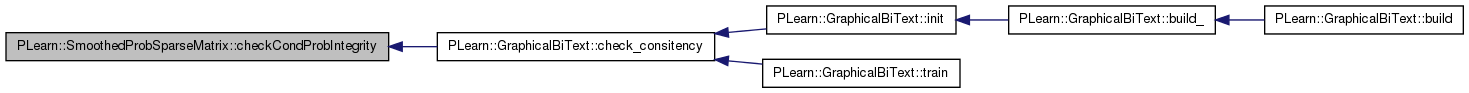

| bool PLearn::SmoothedProbSparseMatrix::checkCondProbIntegrity | ( | ) |

Reimplemented from PLearn::ProbSparseMatrix.

Definition at line 276 of file SmoothedProbSparseMatrix.cc.

References backoffDist, backoffNormalization, PLearn::DoubleAccessSparseMatrix< real >::cols, discountedMass, PLearn::DoubleAccessSparseMatrix< real >::height, i, j, PLearn::DoubleAccessSparseMatrix< real >::mode, normalizationSum, ROW_WISE, PLearn::DoubleAccessSparseMatrix< real >::rows, PLearn::TVec< T >::size(), smoothingMethod, PLearn::sum(), and PLearn::DoubleAccessSparseMatrix< real >::width.

Referenced by PLearn::GraphicalBiText::check_consitency().

{

real sum = 0.0;

real backsum;

int null_size;

if (normalizationSum.size()==0)return false;

//cout << " CheckCondIntegrity : mode " <<smoothingMethod;

if (mode == ROW_WISE){

for (int i = 0; i < height; i++){

map<int, real>& row_i = rows[i];

if(smoothingMethod==1)sum = (width-row_i.size())/normalizationSum[i];

else if(smoothingMethod==2||smoothingMethod==3)sum = discountedMass[i]/normalizationSum[i];

backsum=0;

for (map<int, real>::iterator it = row_i.begin(); it != row_i.end(); ++it){

sum += it->second;

if (smoothingMethod==2)backsum +=backoffDist[it->first];

}

if (smoothingMethod==2){

if (fabs(1.0 - backsum - backoffNormalization[i]) > 1e-4 ){

cout << " Inconsistent backoff normalization " << i << " : "<<backoffNormalization[i]<< " "<< backsum;

return false;

}

}

if (fabs(sum - 1.0) > 1e-4 && (sum > 0.0)){

cout << " Inconsistent line " << i << " sum = "<< sum;

return false;

}

}

return true;

}else{

for (int j = 0; j < width; j++){

map<int, real>& col_j = cols[j];

if(smoothingMethod==1)sum = (height-col_j.size())/normalizationSum[j];

else if(smoothingMethod==2 || smoothingMethod==3)sum = discountedMass[j]/normalizationSum[j];

backsum=0;

for (map<int, real>::iterator it = col_j.begin(); it != col_j.end(); ++it){

sum += it->second;

if (smoothingMethod==2)backsum +=backoffDist[it->first];

}

if (smoothingMethod==2){

if (fabs(1.0 - backsum - backoffNormalization[j]) > 1e-4 ){

cout << " Inconsistent backoff normalization " << j << " : "<<backoffNormalization[j]<< " "<< backsum;

return false;

}

}

if(fabs(sum - 1.0) > 1e-4 && (sum > 0.0)){

cout << " Inconsistent column " << j << " sum = "<< sum;

return false;

}

}

return true;

}

}
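
For the Laplace case (`smoothingMethod==1`), the row-wise check above adds the mass of the unseen cells, `(width - row_i.size())/normalizationSum[i]`, to the stored probabilities and compares the total with 1. The following is a minimal standalone sketch of that test for a single row. It uses `std::map` instead of the PLearn containers, and the function name `laplaceRowIsConsistent` is hypothetical, not part of PLearn. Unlike the method above, it does not skip rows whose sum is not positive.

```cpp
#include <cmath>
#include <map>

// Hypothetical helper, not a PLearn function.
// storedProbs: p(j | i) for the observed columns of one row.
// width: total number of columns; normalizationSum: row total plus width.
bool laplaceRowIsConsistent(const std::map<int, double>& storedProbs,
                            int width, double normalizationSum,
                            double tol = 1e-4) {
    // Each unseen column carries probability 1 / normalizationSum.
    double sum = (width - static_cast<double>(storedProbs.size())) / normalizationSum;
    for (const auto& kv : storedProbs)
        sum += kv.second;
    return std::fabs(sum - 1.0) <= tol;
}
```

With counts 3 and 1 in a row of width 4, the stored values are 4/8 and 2/8 and the two unseen cells contribute 2/8, so the row passes.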

| real PLearn::SmoothedProbSparseMatrix::get | ( | int i, int j | ) |

Definition at line 226 of file SmoothedProbSparseMatrix.cc.

References backoffDist, backoffNormalization, PLearn::DoubleAccessSparseMatrix< real >::cols, discountedMass, PLearn::DoubleAccessSparseMatrix< real >::height, i, j, PLearn::DoubleAccessSparseMatrix< real >::mode, normalizationSum, PLERROR, ROW_WISE, PLearn::DoubleAccessSparseMatrix< real >::rows, smoothingMethod, and PLearn::DoubleAccessSparseMatrix< real >::width.

Referenced by PLearn::GraphicalBiText::test_WSD().

{

#ifdef BOUNDCHECK

if (i < 0 || i >= height || j < 0 || j >= width)

PLERROR("SmoothedProbSparseMatrix.get : out-of-bound access to (%d, %d), dims = (%d, %d)", i, j, height, width);

#endif

// If the matrix is not yet smoothed

if(smoothingMethod==0){

return ProbSparseMatrix::get(i,j);

}

if (mode == ROW_WISE){

map<int, real>& row_i = rows[i];

map<int, real>::iterator it = row_i.find(j);

if (it == row_i.end()){

// if no data in this row : uniform distribution

if (discountedMass[i]==0)return 1/normalizationSum[i];

// Laplace smoothing

if(smoothingMethod==1)return 1/normalizationSum[i];

// Backoff smoothing

if(smoothingMethod==2)return discountedMass[i]*backoffDist[j]/(normalizationSum[i]* backoffNormalization[i]);

// Non-shadowing backoff

if(smoothingMethod==3)return discountedMass[i]*backoffDist[j]/(normalizationSum[i]);

}else{

// Non-shadowing backoff

if(smoothingMethod==3)return it->second+discountedMass[i]*backoffDist[j]/(normalizationSum[i]);

return it->second;

}

} else{

map<int, real>& col_j = cols[j];

map<int, real>::iterator it = col_j.find(i);

if (it == col_j.end()){

// if no data in this column : uniform distribution

if (discountedMass[j]==0)return 1/normalizationSum[j];

// Laplace smoothing

if(smoothingMethod==1)return 1/normalizationSum[j];

// Backoff smoothing

if(smoothingMethod==2)return discountedMass[j]*backoffDist[i]/(normalizationSum[j]*backoffNormalization[j]);

// Non-shadowing backoff

if(smoothingMethod==3)return discountedMass[j]*backoffDist[i]/(normalizationSum[j]);

}else{

// Non-shadowing backoff

if(smoothingMethod==3)return it->second+discountedMass[j]*backoffDist[i]/(normalizationSum[j]);

return it->second;

}

}

return 0.0; // not reached when smoothingMethod is 0, 1, 2 or 3

}

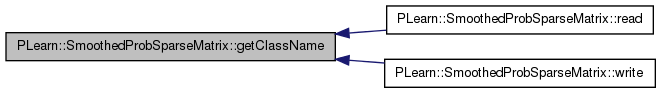

| string PLearn::SmoothedProbSparseMatrix::getClassName | ( | ) | const [inline, virtual] |

Reimplemented from PLearn::ProbSparseMatrix.

Definition at line 69 of file SmoothedProbSparseMatrix.h.

Referenced by read(), and write().

{ return "SmoothedProbSparseMatrix"; }

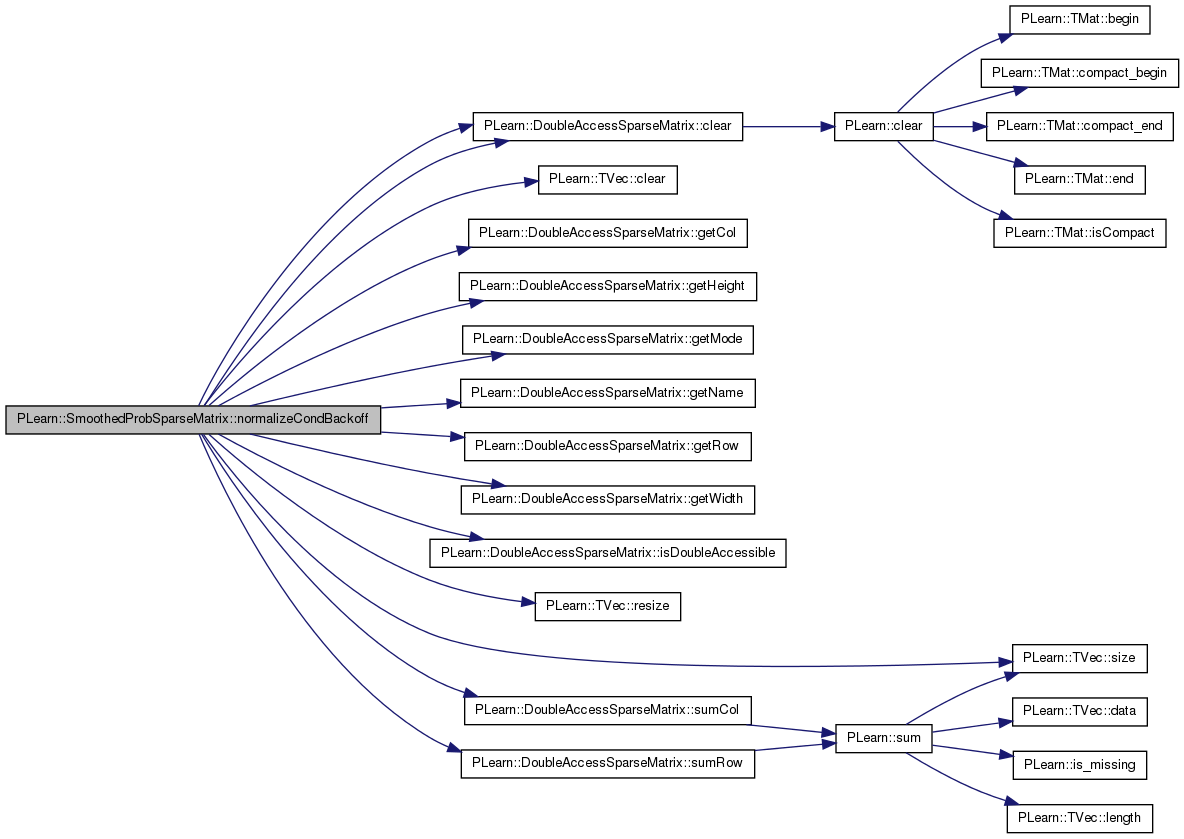

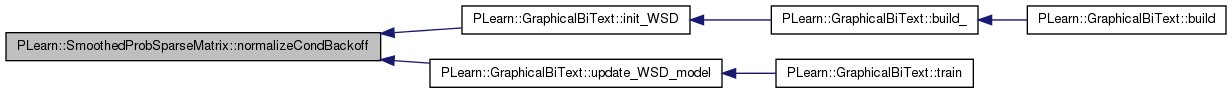

| void PLearn::SmoothedProbSparseMatrix::normalizeCondBackoff | ( | ProbSparseMatrix & nXY, real disc, Vec & bDist, bool clear_nXY, bool shadow | ) |

Definition at line 106 of file SmoothedProbSparseMatrix.cc.

References backoffDist, backoffNormalization, PLearn::DoubleAccessSparseMatrix< T >::clear(), PLearn::TVec< T >::clear(), PLearn::DoubleAccessSparseMatrix< real >::clear(), COLUMN_WISE, discountedMass, PLearn::DoubleAccessSparseMatrix< T >::getCol(), PLearn::DoubleAccessSparseMatrix< T >::getHeight(), PLearn::DoubleAccessSparseMatrix< T >::getMode(), PLearn::DoubleAccessSparseMatrix< T >::getName(), PLearn::DoubleAccessSparseMatrix< T >::getRow(), PLearn::DoubleAccessSparseMatrix< T >::getWidth(), i, PLearn::DoubleAccessSparseMatrix< T >::isDoubleAccessible(), j, PLearn::DoubleAccessSparseMatrix< real >::mode, normalizationSum, PLERROR, PLearn::TVec< T >::resize(), ROW_WISE, PLearn::TVec< T >::size(), smoothingMethod, PLearn::DoubleAccessSparseMatrix< T >::sumCol(), and PLearn::DoubleAccessSparseMatrix< T >::sumRow().

Referenced by PLearn::GraphicalBiText::init_WSD(), and PLearn::GraphicalBiText::update_WSD_model().

{

// disc is the fraction of the minimal value to discount : discval = minvalue*disc

// In case of integer counts, the discounted value is 1*disc = disc

int i,j;

real nij,pij;

real minval,discval;

int nXY_height = nXY.getHeight();

int nXY_width = nXY.getWidth();

// Shadowing or non shadowing smoothing

if(shadow){

smoothingMethod = 2;

}else{

smoothingMethod = 3;

}

// Copy Backoff Distribution

backoffDist.resize(bDist.size());

backoffDist << bDist;

if (mode == ROW_WISE && (nXY.getMode() == ROW_WISE || nXY.isDoubleAccessible())){

clear();

if (backoffDist.size()!=nXY_width)PLERROR("Wrong dimension for backoffDistribution");

normalizationSum.resize(nXY_height);normalizationSum.clear();

backoffNormalization.resize(nXY_height);backoffNormalization.clear();

discountedMass.resize(nXY_height);discountedMass.clear();

for (int i = 0; i < nXY_height; i++){

// normalization

real sum_row_i = nXY.sumRow(i);

if (sum_row_i==0){

// if there is no count in this row : uniform distribution

normalizationSum[i]=nXY_width;

continue;

}else{

// Store normalization sum

normalizationSum[i] = sum_row_i;

}

backoffNormalization[i]= 1.0;

map<int, real>& row_i = nXY.getRow(i);

// compute minimal value

minval=FLT_MAX;

for (map<int, real>::iterator it = row_i.begin(); it != row_i.end(); ++it){

minval= it->second<minval?it->second:minval;

}

discval = minval*disc;

for (map<int, real>::iterator it = row_i.begin(); it != row_i.end(); ++it){

j = it->first;

nij = it->second;

if(nij>discval){

discountedMass[i]+=discval;

// Discount

pij = (nij -discval)/ sum_row_i;

if (pij<0) PLERROR("modified count < 0 in Backoff Smoothing SmoothedProbSparseMatrix %s",nXY.getName().c_str());

// update backoff normalization factor

backoffNormalization[i]-= backoffDist[j];

}else{

pij = nij/ sum_row_i;

}

// Set modified count

set(i, j,pij);

}

if(discountedMass[i]==0)PLERROR("Discounted mass is null but count are not null in %s line %d",nXY.getName().c_str(),i);

}

if (clear_nXY)nXY.clear();

} else if (mode == COLUMN_WISE && (nXY.getMode() == COLUMN_WISE || nXY.isDoubleAccessible())){

clear();

normalizationSum.resize(nXY_width);normalizationSum.clear();

backoffNormalization.resize(nXY_width);backoffNormalization.clear();

discountedMass.resize(nXY_width);discountedMass.clear();

for ( j = 0; j < nXY_width; j++){

// normalization

real sum_col_j = nXY.sumCol(j);

if (sum_col_j==0){

// if there is no count in this column : uniform distribution

normalizationSum[j]=nXY_height;

continue;

}else{

// Store normalization sum

normalizationSum[j] = sum_col_j;

}

backoffNormalization[j]= 1.0;

map<int, real>& col_j = nXY.getCol(j);

// compute minimal value

minval=FLT_MAX;

for (map<int, real>::iterator it = col_j.begin(); it != col_j.end(); ++it){

minval= (it->second<minval && it->second!=0) ?it->second:minval;

}

discval = minval*disc;

for (map<int, real>::iterator it = col_j.begin(); it != col_j.end(); ++it){

i = it->first;

nij = it->second;

if(nij>discval){

discountedMass[j]+=discval;

// Discount

pij = (nij -discval)/ sum_col_j;

if (pij<0) PLERROR("modified count < 0 in Backoff Smoothing SmoothedProbSparseMatrix %s : i=%d j=%d p=%f",nXY.getName().c_str(),i,j,pij);

// update backoff normalization factor

backoffNormalization[j]-=backoffDist[i];

}else{

pij = nij / sum_col_j;

}

if(pij<=0 || pij>1) PLERROR("Invalid smoothed probability %f in %s",pij,nXY.getName().c_str());

set(i, j, pij);

}

if(discountedMass[j]==0){

PLERROR("Discounted mass is null but count are not null in %s col %d",nXY.getName().c_str(),j);

}

}

if (clear_nXY)nXY.clear();

}else{

PLERROR("pXY and nXY accessibility modes must match");

}

}

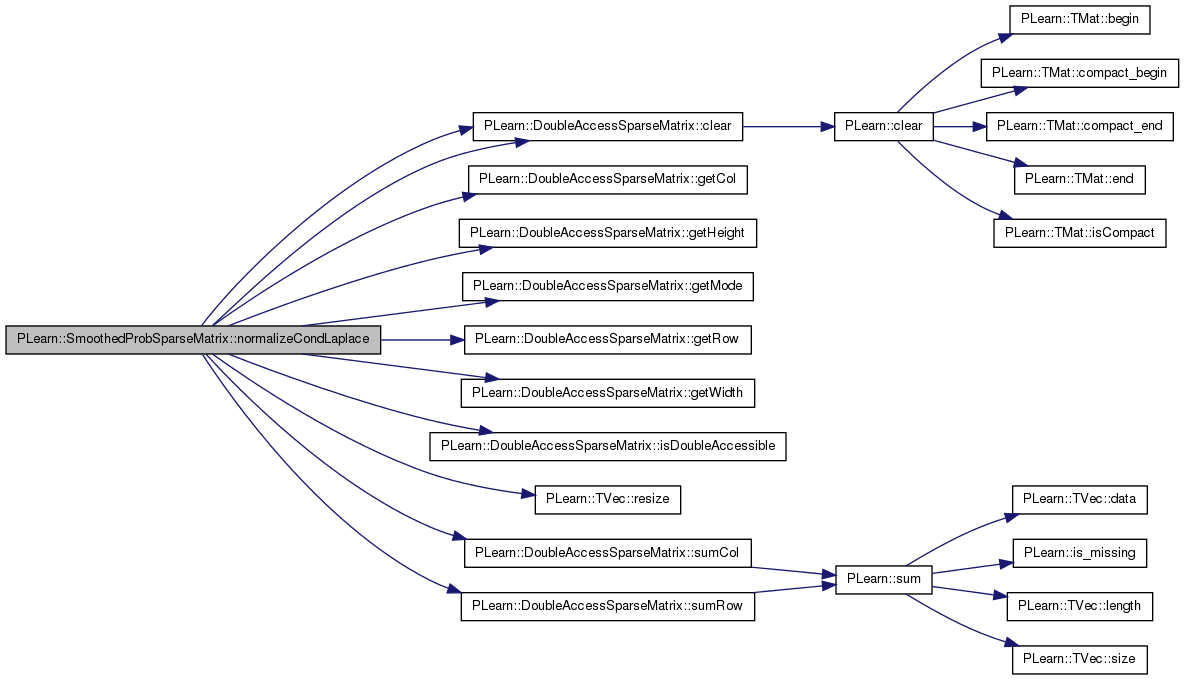

| void PLearn::SmoothedProbSparseMatrix::normalizeCondLaplace | ( | ProbSparseMatrix & nXY, bool clear_nXY = false | ) |

Definition at line 52 of file SmoothedProbSparseMatrix.cc.

References PLearn::DoubleAccessSparseMatrix< T >::clear(), PLearn::DoubleAccessSparseMatrix< real >::clear(), COLUMN_WISE, PLearn::DoubleAccessSparseMatrix< T >::getCol(), PLearn::DoubleAccessSparseMatrix< T >::getHeight(), PLearn::DoubleAccessSparseMatrix< T >::getMode(), PLearn::DoubleAccessSparseMatrix< T >::getRow(), PLearn::DoubleAccessSparseMatrix< T >::getWidth(), i, PLearn::DoubleAccessSparseMatrix< T >::isDoubleAccessible(), j, PLearn::DoubleAccessSparseMatrix< real >::mode, normalizationSum, PLERROR, PLearn::TVec< T >::resize(), ROW_WISE, smoothingMethod, PLearn::DoubleAccessSparseMatrix< T >::sumCol(), and PLearn::DoubleAccessSparseMatrix< T >::sumRow().

{

int nXY_height = nXY.getHeight();

int nXY_width = nXY.getWidth();

smoothingMethod = 1;

if (mode == ROW_WISE && (nXY.getMode() == ROW_WISE || nXY.isDoubleAccessible()))

{

clear();

normalizationSum.resize(nXY_height);

for (int i = 0; i < nXY_height; i++)

{

// Laplace smoothing normalization

real sum_row_i = nXY.sumRow(i)+nXY_width;

// Store normalization sum

normalizationSum[i] = sum_row_i;

map<int, real>& row_i = nXY.getRow(i);

for (map<int, real>::iterator it = row_i.begin(); it != row_i.end(); ++it)

{

int j = it->first;

real nij = it->second;

// Laplace smoothing

if (nij > 0.0){

real pij = (nij +1)/ sum_row_i;

set(i, j, pij);

}

}

}

if (clear_nXY)

nXY.clear();

} else if (mode == COLUMN_WISE && (nXY.getMode() == COLUMN_WISE || nXY.isDoubleAccessible())){

clear();

normalizationSum.resize(nXY_width);

for (int j = 0; j < nXY_width; j++){

// Laplace smoothing normalization

real sum_col_j = nXY.sumCol(j)+nXY_height;

// Store normalization sum

normalizationSum[j] = sum_col_j;

map<int, real>& col_j = nXY.getCol(j);

for (map<int, real>::iterator it = col_j.begin(); it != col_j.end(); ++it){

int i = it->first;

real nij = it->second;

// Laplace smoothing

if (nij > 0.0){

set(i, j, (nij+1) / sum_col_j);

}

}

}

if (clear_nXY)

nXY.clear();

} else{

PLERROR("pXY and nXY accessibility modes must match");

}

}

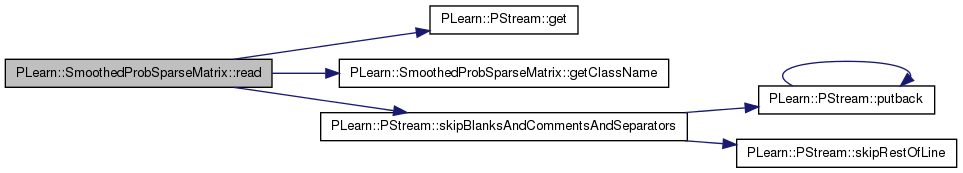

| void PLearn::SmoothedProbSparseMatrix::read | ( | PStream & | in | ) | [virtual] |

Reimplemented from PLearn::DoubleAccessSparseMatrix< real >.

Definition at line 363 of file SmoothedProbSparseMatrix.cc.

References backoffDist, backoffNormalization, c, discountedMass, PLearn::PStream::get(), getClassName(), i, PLearn::PStream::inmode, normalizationSum, PLearn::PStream::plearn_ascii, PLearn::PStream::plearn_binary, PLERROR, PLearn::PStream::raw_ascii, PLearn::PStream::raw_binary, PLearn::PStream::skipBlanksAndCommentsAndSeparators(), and smoothingMethod.

{

ProbSparseMatrix::read(in);

string class_name = getClassName();

switch (in.inmode)

{

case PStream::raw_ascii :

PLERROR("raw_ascii read not implemented in %s", class_name.c_str());

break;

case PStream::raw_binary :

PLERROR("raw_binary read not implemented in %s", class_name.c_str());

break;

case PStream::plearn_ascii :

case PStream::plearn_binary :

{

in.skipBlanksAndCommentsAndSeparators();

string word(class_name.size() + 1, ' ');

for (unsigned int i = 0; i < class_name.size() + 1; i++)

in.get(word[i]);

if (word != class_name + "(")

PLERROR("in %s::read(PStream& in), '%s' is not a proper header", class_name.c_str(), word.c_str());

in.skipBlanksAndCommentsAndSeparators();

in >> smoothingMethod;

in.skipBlanksAndCommentsAndSeparators();

in >> normalizationSum;

in.skipBlanksAndCommentsAndSeparators();

in >> backoffDist;

in.skipBlanksAndCommentsAndSeparators();

in >> backoffNormalization;

in.skipBlanksAndCommentsAndSeparators();

in >> discountedMass;

in.skipBlanksAndCommentsAndSeparators();

int c = in.get();

if(c != ')')

PLERROR("in %s::read(PStream& in), expected a closing parenthesis, found '%c'", class_name.c_str(), c);

}

break;

default:

PLERROR("unknown inmode in %s::read(PStream& in)", class_name.c_str());

break;

}

}

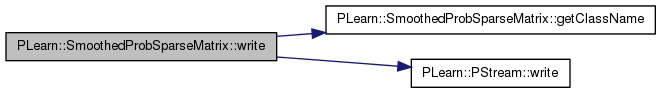

| void PLearn::SmoothedProbSparseMatrix::write | ( | PStream & | out | ) | const [virtual] |

Reimplemented from PLearn::DoubleAccessSparseMatrix< real >.

Definition at line 334 of file SmoothedProbSparseMatrix.cc.

References backoffDist, backoffNormalization, discountedMass, getClassName(), normalizationSum, PLearn::PStream::outmode, PLearn::PStream::plearn_ascii, PLearn::PStream::plearn_binary, PLERROR, PLearn::PStream::pretty_ascii, PLearn::PStream::raw_ascii, PLearn::PStream::raw_binary, smoothingMethod, and PLearn::PStream::write().

{

ProbSparseMatrix::write(out);

string class_name = getClassName();

switch(out.outmode)

{

case PStream::raw_ascii :

case PStream::pretty_ascii :

PLERROR("raw/pretty_ascii write not implemented in %s", class_name.c_str());

break;

case PStream::raw_binary :

PLERROR("raw_binary write not implemented in %s", class_name.c_str());

break;

case PStream::plearn_binary :

case PStream::plearn_ascii :

out.write(class_name + "(");

out << smoothingMethod;

out << normalizationSum;

out << backoffDist;

out << backoffNormalization;

out << discountedMass;

out.write(")\n");

break;

default:

PLERROR("unknown outmode in %s::write(PStream& out)", class_name.c_str());

break;

}

}

Vec PLearn::SmoothedProbSparseMatrix::backoffDist [protected] |

Definition at line 59 of file SmoothedProbSparseMatrix.h.

Referenced by checkCondProbIntegrity(), get(), normalizeCondBackoff(), read(), and write().

Vec PLearn::SmoothedProbSparseMatrix::backoffNormalization [protected] |

Definition at line 60 of file SmoothedProbSparseMatrix.h.

Referenced by checkCondProbIntegrity(), get(), normalizeCondBackoff(), read(), and write().

Vec PLearn::SmoothedProbSparseMatrix::discountedMass [protected] |

Definition at line 62 of file SmoothedProbSparseMatrix.h.

Referenced by checkCondProbIntegrity(), get(), normalizeCondBackoff(), read(), and write().

Vec PLearn::SmoothedProbSparseMatrix::normalizationSum [protected] |

Definition at line 57 of file SmoothedProbSparseMatrix.h.

Referenced by checkCondProbIntegrity(), get(), normalizeCondBackoff(), normalizeCondLaplace(), read(), and write().

int PLearn::SmoothedProbSparseMatrix::smoothingMethod [protected] |

Definition at line 54 of file SmoothedProbSparseMatrix.h.

Referenced by checkCondProbIntegrity(), get(), normalizeCondBackoff(), normalizeCondLaplace(), read(), SmoothedProbSparseMatrix(), and write().

1.7.4
