|

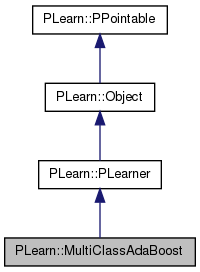

PLearn 0.1


Three-class classifier built from two binary AdaBoost sub-learners: learner1 separates class 0 from classes 1 and 2, and learner2 separates class 2 from classes 0 and 1.

#include <MultiClassAdaBoost.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `MultiClassAdaBoost ()` | Default constructor. |
| `virtual int` | `outputsize () const` | Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). |
| `virtual void` | `finalize ()` | Tells the learner it will never be trained again, so it can free training-only resources. |
| `virtual void` | `forget ()` | (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). |
| `virtual void` | `train ()` | Brings the learner up to stage == nstages, updating the train_stats collector with training costs measured online in the process. |
| `virtual void` | `computeOutput (const Vec &input, Vec &output) const` | Computes the output from the input. |
| `virtual void` | `computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const` | Computes the costs from already computed output. |
| `void` | `computeCostsFromOutputs_ (const Vec &input, const Vec &output, const Vec &target, Vec &sub_costs1, Vec &sub_costs2, Vec &costs) const` | |
| `virtual TVec< string >` | `getOutputNames () const` | Returns a vector of length outputsize() containing the outputs' names. |
| `virtual TVec< std::string >` | `getTestCostNames () const` | Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). |
| `virtual TVec< std::string >` | `getTrainCostNames () const` | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |
| `virtual void` | `computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const` | The default version calls computeOutput and computeCostsFromOutputs. |
| `virtual void` | `test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const` | Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts. |
| `virtual string` | `classname () const` | |
| `virtual OptionList &` | `getOptionList () const` | |
| `virtual OptionMap &` | `getOptionMap () const` | |
| `virtual RemoteMethodMap &` | `getRemoteMethodMap () const` | |
| `virtual MultiClassAdaBoost *` | `deepCopy (CopiesMap &copies) const` | |
| `virtual void` | `build ()` | Finishes building the object; just calls inherited::build followed by build_(). |
| `virtual void` | `makeDeepCopyFromShallowCopy (CopiesMap &copies)` | Transforms a shallow copy into a deep copy. |
| `virtual void` | `setTrainingSet (VMat training_set, bool call_forget=true)` | Declares the training set. |

Static Public Member Functions

| Type | Member |
|---|---|
| `static string` | `_classname_ ()` |
| `static OptionList &` | `_getOptionList_ ()` |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_ ()` |
| `static Object *` | `_new_instance_for_typemap_ ()` |
| `static bool` | `_isa_ (const Object *o)` |
| `static void` | `_static_initialize_ ()` |
| `static const PPath &` | `declaringFile ()` |

Public Attributes

| Type | Member | Description |
|---|---|---|
| `bool` | `forward_sub_learner_test_costs` | Whether to add the learner1 and learner2 costs to our costs. |
| `uint16_t` | `forward_test` | Test strategy: 0 uses the default test, 1 forwards test() to the sub-learners, 2 decides at each stage which is faster based on past test times. |
| `PP< AdaBoost >` | `learner1` | The learner1 and learner2 must be trained! |
| `PP< AdaBoost >` | `learner2` | |
| `PP< AdaBoost >` | `learner_template` | The template to use for learner1 and learner2. |

Static Public Attributes

| Type | Member |
|---|---|
| `static StaticInitializer` | `_static_initializer_` |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `static void` | `declareOptions (OptionList &ol)` | Declares the class options. |

Private Types

| Type | Member |
|---|---|
| `typedef PLearner` | `inherited` |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| `void` | `build_ ()` | This does the actual building. |
| `void` | `getSubLearnerTarget (const Vec target, TVec< Vec > sub_target) const` | |

Private Attributes

| Type | Member | Description |
|---|---|---|
| `Vec` | `tmp_input` | |
| `Vec` | `tmp_target` | |
| `Vec` | `tmp_output` | Global storage to save memory allocations. |
| `Vec` | `tmp_costs` | |
| `Vec` | `output1` | |
| `Vec` | `output2` | |
| `Vec` | `subcosts1` | |
| `Vec` | `subcosts2` | |
| `TVec< VMat >` | `saved_testset` | |
| `TVec< VMat >` | `saved_testset1` | |
| `TVec< VMat >` | `saved_testset2` | |
| `real` | `train_time` | The time it took for the last execution of the train() function. |
| `real` | `total_train_time` | The total time spent in training. |
| `real` | `test_time` | The time it took for the last execution of the test() function. |
| `real` | `total_test_time` | The total time spent in test(). |
| `bool` | `time_costs` | |
| `bool` | `warn_once_target_gt_2` | |
| `bool` | `done_warn_once_target_gt_2` | |
| `PP< PTimer >` | `timer` | |
| `real` | `time_sum` | |
| `real` | `time_sum_ft` | |
| `real` | `time_last_stage` | |
| `real` | `time_last_stage_ft` | |
| `int` | `last_stage` | |
| `int` | `nb_sequential_ft` | |
| `TVec< Vec >` | `sub_target_tmp` | |
| `string` | `targetname` | |

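Three-class classifier built from two binary AdaBoost sub-learners: learner1 separates class 0 from classes 1 and 2, and learner2 separates class 2 from classes 0 and 1.

Targets are expected to be 0, 1 or 2 (larger values are treated as 2, with a warning). The pair of sub-learner votes is decoded as (0, 0) → 0, (1, 0) → 1 and (1, 1) → 2; the conflicting pair (0, 1) is currently mapped to class 1 and counted by the "conflict" test cost.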

Definition at line 59 of file MultiClassAdaBoost.h.

`typedef PLearner PLearn::MultiClassAdaBoost::inherited` [private]

Reimplemented from PLearn::PLearner.

Definition at line 61 of file MultiClassAdaBoost.h.

`PLearn::MultiClassAdaBoost::MultiClassAdaBoost ()`

Default constructor.

Definition at line 60 of file MultiClassAdaBoost.cc.

```cpp
MultiClassAdaBoost::MultiClassAdaBoost()
    : train_time(0),
      total_train_time(0),
      test_time(0),
      total_test_time(0),
      time_costs(true),
      warn_once_target_gt_2(false),
      done_warn_once_target_gt_2(false),
      timer(new PTimer(true)),
      time_sum(0),
      time_sum_ft(0),
      time_last_stage(0),
      time_last_stage_ft(0),
      last_stage(0),
      nb_sequential_ft(0),
      forward_sub_learner_test_costs(false),
      forward_test(0)
/* ### Initialize all fields to their default value here */
{
    // ...

    // ### You may (or not) want to call build_() to finish building the object
    // ### (doing so assumes the parent classes' build_() have been called too
    // ### in the parent classes' constructors, something that you must ensure)

    // ### If this learner needs to generate random numbers, uncomment the
    // ### line below to enable the use of the inherited PRandom object.
    // random_gen = new PRandom();
}
```

`string PLearn::MultiClassAdaBoost::_classname_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file MultiClassAdaBoost.cc.

`OptionList & PLearn::MultiClassAdaBoost::_getOptionList_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file MultiClassAdaBoost.cc.

`RemoteMethodMap & PLearn::MultiClassAdaBoost::_getRemoteMethodMap_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file MultiClassAdaBoost.cc.

`bool PLearn::MultiClassAdaBoost::_isa_ (const Object *o)` [static]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file MultiClassAdaBoost.cc.

`Object * PLearn::MultiClassAdaBoost::_new_instance_for_typemap_ ()` [static]

Reimplemented from PLearn::Object.

Definition at line 58 of file MultiClassAdaBoost.cc.

`void PLearn::MultiClassAdaBoost::_static_initialize_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file MultiClassAdaBoost.cc.

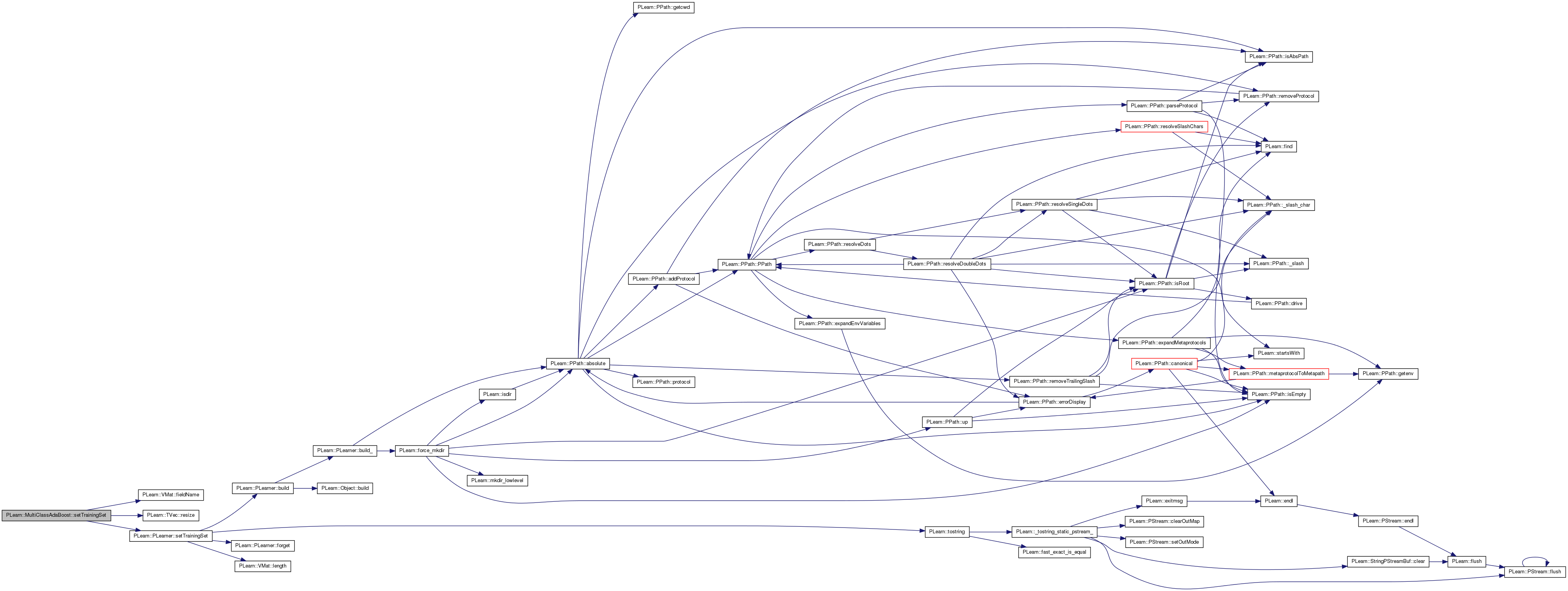

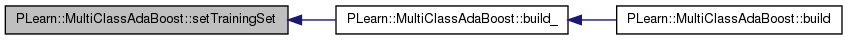

`void PLearn::MultiClassAdaBoost::build ()` [virtual]

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 237 of file MultiClassAdaBoost.cc.

References PLearn::PLearner::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

`void PLearn::MultiClassAdaBoost::build_ ()` [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 201 of file MultiClassAdaBoost.cc.

References PLearn::deepCopy(), i, learner1, learner2, learner_template, output1, output2, outputsize(), PLearn::TVec< T >::resize(), setTrainingSet(), PLearn::TVec< T >::size(), sub_target_tmp, targetname, timer, tmp_output, tmp_target, PLearn::PLearner::train_set, and PLearn::PLearner::train_stats.

Referenced by build().

```cpp
{
    // One single-element target vector per sub-learner.
    sub_target_tmp.resize(2);
    for(int i=0;i<sub_target_tmp.size();i++)
        sub_target_tmp[i].resize(1);

    // Clone the template only into sub-learner slots that are still empty.
    if(learner_template){
        if(!learner1)
            learner1 = ::PLearn::deepCopy(learner_template);
        if(!learner2)
            learner2 = ::PLearn::deepCopy(learner_template);
    }

    tmp_target.resize(1);
    tmp_output.resize(outputsize());
    if(learner1)
        output1.resize(learner1->outputsize());
    if(learner2)
        output2.resize(learner2->outputsize());
    if(!train_stats)
        train_stats=new VecStatsCollector();

    // Hand the training set to the sub-learners if they do not have one
    // yet (or if targetname has not been set).
    if(train_set){
        if(learner1 && learner2)
            if(! learner1->getTrainingSet()
               || ! learner2->getTrainingSet()
               || targetname.empty()
                )
                setTrainingSet(train_set);
    }
    timer->newTimer("MultiClassAdaBoost::test()", true);
    timer->newTimer("MultiClassAdaBoost::test() current",true);
}
```
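build_() clones learner_template into learner1 and learner2 only when those slots are empty, so sub-learners given explicitly in a script are kept. The sketch below isolates that fill-if-empty pattern. It assumes a hypothetical `Learner` type whose `clone()` method stands in for `PLearn::deepCopy`; it is an illustration, not the PLearn code.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical stand-in for a sub-learner; not the PLearn AdaBoost class.
struct Learner {
    std::string name;
    std::unique_ptr<Learner> clone() const {
        return std::make_unique<Learner>(*this);  // independent copy
    }
};

// Same shape as build_(): each slot is filled from the template
// only if it is still empty.
void fillFromTemplate(const std::unique_ptr<Learner>& tmpl,
                      std::unique_ptr<Learner>& learner1,
                      std::unique_ptr<Learner>& learner2) {
    if (!tmpl) return;  // no template: leave both slots untouched
    if (!learner1) learner1 = tmpl->clone();
    if (!learner2) learner2 = tmpl->clone();
    assert(learner1 && learner2);
}
```

Because each slot gets its own clone, training learner1 never modifies learner2 or the template.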

`string PLearn::MultiClassAdaBoost::classname () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 58 of file MultiClassAdaBoost.cc.

`void PLearn::MultiClassAdaBoost::computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const` [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

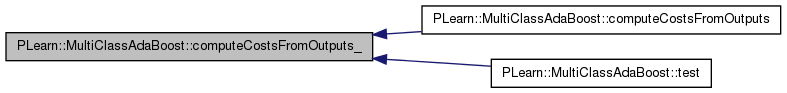

Definition at line 467 of file MultiClassAdaBoost.cc.

References computeCostsFromOutputs_(), PLearn::TVec< T >::resize(), subcosts1, and subcosts2.

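```cpp
{
    // Empty sub-cost vectors tell computeCostsFromOutputs_() to compute
    // the sub-learners' costs itself when it needs them.
    subcosts1.resize(0);
    subcosts2.resize(0);
    computeCostsFromOutputs_(input, output, target, subcosts1, subcosts2, costs);
}
```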

`void PLearn::MultiClassAdaBoost::computeCostsFromOutputs_ (const Vec &input, const Vec &output, const Vec &target, Vec &sub_costs1, Vec &sub_costs2, Vec &costs) const`

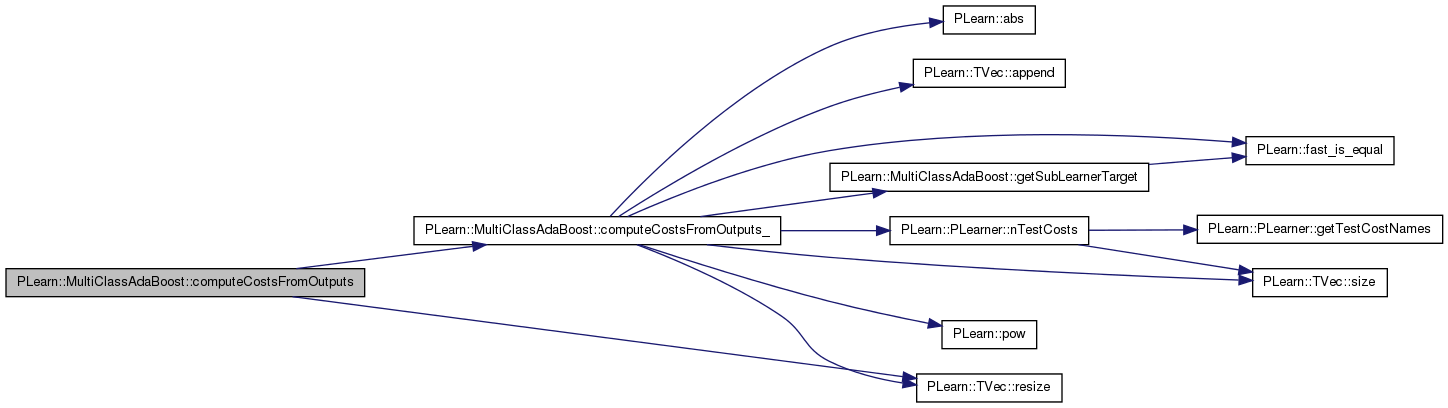

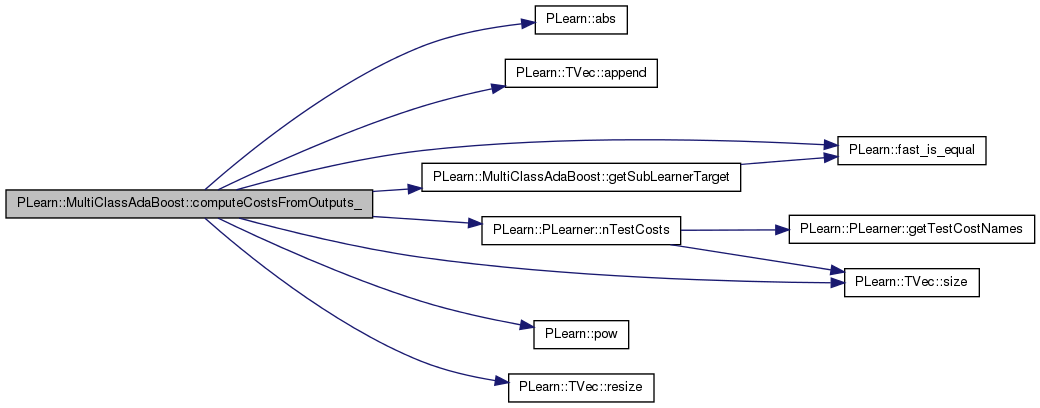

Definition at line 475 of file MultiClassAdaBoost.cc.

References PLearn::abs(), PLearn::TVec< T >::append(), PLearn::fast_is_equal(), forward_sub_learner_test_costs, getSubLearnerTarget(), last_stage, learner1, learner2, PLearn::PLearner::nTestCosts(), PLASSERT, PLearn::pow(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_target_tmp, test_time, time_last_stage, time_last_stage_ft, time_sum, time_sum_ft, total_test_time, total_train_time, and train_time.

Referenced by computeCostsFromOutputs(), and test().

```cpp
{
    PLASSERT(costs.size()==nTestCosts());

    int out = int(round(output[0]));   // predicted class
    int pred = int(round(target[0]));  // despite its name, the true class
    costs[0]=int(out != pred);//class_error
    costs[1]=abs(out-pred);//linear_class_error
    costs[2]=pow(real(abs(out-pred)),2);//square_class_error

    //append conflict cost
    if(fast_is_equal(round(output[1]),0)
       && fast_is_equal(round(output[2]),1))
        costs[3]=1;
    else
        costs[3]=0;

    // One-hot indicator of the predicted class (costs 4..6).
    costs[4]=costs[5]=costs[6]=0;
    costs[out+4]=1;

    // Unlike computeOutputAndCosts(), this path ignores time_costs.
    costs[7]=train_time;
    costs[8]=total_train_time;
    costs[9]=test_time;
    costs[10]=total_test_time;
    costs[11]=time_sum;
    costs[12]=time_sum_ft;
    costs[13]=time_last_stage;
    costs[14]=time_last_stage_ft;
    costs[15]=last_stage;

    if(forward_sub_learner_test_costs){
        // Keep the 16 fixed costs (7 class costs + 9 time costs), then
        // append the element-wise sum of the two sub-learners' costs.
        costs.resize(7+4+5);
        PLASSERT(sub_costs1.size()==learner1->nTestCosts() || sub_costs1.size()==0);
        PLASSERT(sub_costs2.size()==learner2->nTestCosts() || sub_costs2.size()==0);
        getSubLearnerTarget(target, sub_target_tmp);
        if(sub_costs1.size()==0){
            PLASSERT(input.size()>0);
            sub_costs1.resize(learner1->nTestCosts());
            learner1->computeCostsOnly(input,sub_target_tmp[0],sub_costs1);
        }
        if(sub_costs2.size()==0){
            PLASSERT(input.size()>0);
            sub_costs2.resize(learner2->nTestCosts());
            learner2->computeCostsOnly(input,sub_target_tmp[1],sub_costs2);
        }
        sub_costs1+=sub_costs2;
        costs.append(sub_costs1);
    }
    PLASSERT(costs.size()==nTestCosts());
}
```
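The first seven test costs depend only on the predicted class, the true class and the two sub-learner votes. The standalone sketch below reproduces that arithmetic with plain `std::array` in place of PLearn's `Vec`, and exact rounding (`std::lround`) in place of `fast_is_equal`; both substitutions are assumptions made to keep it self-contained.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdlib>

// Sketch of costs [0..6]: class_error, linear_class_error,
// square_class_error, conflict, then a one-hot of the predicted class.
// output = {prediction, learner1 vote, learner2 vote}; target in {0,1,2}.
std::array<double, 7> classCosts(const std::array<double, 3>& output,
                                 double target) {
    int out = static_cast<int>(std::lround(output[0]));  // predicted class
    int tgt = static_cast<int>(std::lround(target));     // true class
    assert(out >= 0 && out <= 2);
    std::array<double, 7> costs{};                        // all zeros
    int diff = std::abs(out - tgt);
    costs[0] = (out != tgt) ? 1.0 : 0.0;
    costs[1] = diff;
    costs[2] = static_cast<double>(diff) * diff;
    // Conflict: learner1 votes "class 0" while learner2 votes "class 2".
    costs[3] = (std::lround(output[1]) == 0 && std::lround(output[2]) == 1) ? 1.0 : 0.0;
    costs[4 + out] = 1.0;
    return costs;
}
```

For example, predicting class 2 for a true class 0 gives a linear error of 2 and a square error of 4.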

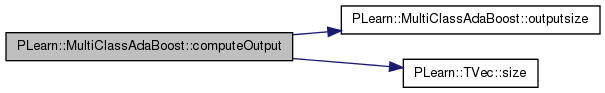

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 350 of file MultiClassAdaBoost.cc.

References learner1, learner2, output1, output2, outputsize(), PLASSERT, and PLearn::TVec< T >::size().

```cpp
{
    PLASSERT(output.size()==outputsize());
    PLASSERT(output1.size()==learner1->outputsize());
    PLASSERT(output2.size()==learner2->outputsize());

    // Evaluate the two sub-learners, in parallel when OpenMP is enabled.
#ifdef _OPENMP
#pragma omp parallel sections default(none)
    {
#pragma omp section
        learner1->computeOutput(input, output1);
#pragma omp section
        learner2->computeOutput(input, output2);
    }
#else
    learner1->computeOutput(input, output1);
    learner2->computeOutput(input, output2);
#endif

    // Decode the two binary votes into a class in {0, 1, 2}.
    int ind1=int(round(output1[0]));
    int ind2=int(round(output2[0]));

    int ind=-1;
    if(ind1==0 && ind2==0)
        ind=0;
    else if(ind1==1 && ind2==0)
        ind=1;
    else if(ind1==1 && ind2==1)
        ind=2;
    else
        ind=1;//TODOself.confusion_target;
    output[0]=ind;
    output[1]=output1[0];
    output[2]=output2[0];
}
```
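The decoding step above can be isolated as a pure function. In this sketch, ind1 is learner1's vote (1 means "not class 0") and ind2 is learner2's vote (1 means "class 2"); that reading is inferred from getSubLearnerTarget(). It assumes each vote is already rounded to 0 or 1, which the original does not check.

```cpp
#include <cassert>

// Combine the two binary votes into a class in {0, 1, 2}.
// The conflicting pair (0, 1) falls back to class 1, matching the
// original code and its TODO about a confusion target.
int combineVotes(int ind1, int ind2) {
    assert((ind1 == 0 || ind1 == 1) && (ind2 == 0 || ind2 == 1));
    if (ind1 == 0 && ind2 == 0) return 0;
    if (ind1 == 1 && ind2 == 0) return 1;
    if (ind1 == 1 && ind2 == 1) return 2;
    return 1;  // conflict: learner1 says class 0, learner2 says class 2
}
```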

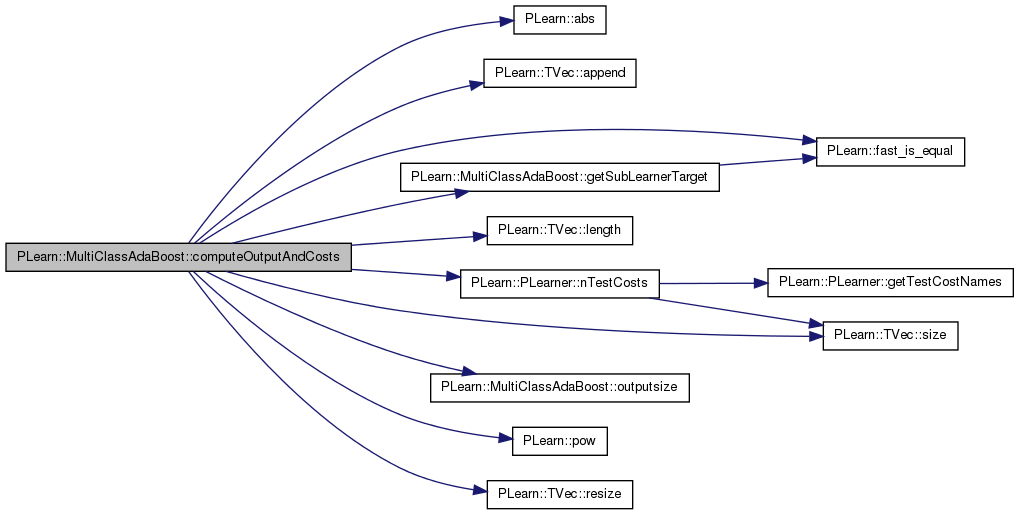

`void PLearn::MultiClassAdaBoost::computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const` [virtual]

The default version calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 385 of file MultiClassAdaBoost.cc.

References PLearn::abs(), PLearn::TVec< T >::append(), PLearn::fast_is_equal(), forward_sub_learner_test_costs, getSubLearnerTarget(), last_stage, learner1, learner2, PLearn::TVec< T >::length(), MISSING_VALUE, PLearn::PLearner::nTestCosts(), output1, output2, outputsize(), PLASSERT, PLASSERT_MSG, PLearn::pow(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_target_tmp, subcosts1, subcosts2, test_time, time_costs, time_last_stage, time_last_stage_ft, time_sum, time_sum_ft, total_test_time, total_train_time, and train_time.

```cpp
{
    PLASSERT(costs.size()==nTestCosts());
    PLASSERT_MSG(output.length()==outputsize(),
                 "In MultiClassAdaBoost::computeOutputAndCosts -"
                 " output doesn't have the right length!");

    getSubLearnerTarget(target, sub_target_tmp);

    // Evaluate the two sub-learners, in parallel when OpenMP is enabled.
#ifdef _OPENMP
#pragma omp parallel sections default(none)
    {
#pragma omp section
        learner1->computeOutputAndCosts(input, sub_target_tmp[0],
                                        output1, subcosts1);
#pragma omp section
        learner2->computeOutputAndCosts(input, sub_target_tmp[1],
                                        output2, subcosts2);
    }
#else
    learner1->computeOutputAndCosts(input, sub_target_tmp[0],
                                    output1, subcosts1);
    learner2->computeOutputAndCosts(input, sub_target_tmp[1],
                                    output2, subcosts2);
#endif

    // Decode the two binary votes (same rule as computeOutput()).
    int ind1=int(round(output1[0]));
    int ind2=int(round(output2[0]));

    int ind=-1;
    if(ind1==0 && ind2==0)
        ind=0;
    else if(ind1==1 && ind2==0)
        ind=1;
    else if(ind1==1 && ind2==1)
        ind=2;
    else
        ind=1;//TODOself.confusion_target;
    output[0]=ind;
    output[1]=output1[0];
    output[2]=output2[0];

    int out = ind;
    int pred = int(round(target[0]));  // despite its name, the true class
    costs[0]=int(out != pred);//class_error
    costs[1]=abs(out-pred);//linear_class_error
    costs[2]=pow(real(abs(out-pred)),2);//square_class_error

    //append conflict cost
    if(fast_is_equal(round(output[1]),0)
       && fast_is_equal(round(output[2]),1))
        costs[3]=1;
    else
        costs[3]=0;

    costs[4]=costs[5]=costs[6]=0;
    costs[out+4]=1;

    // Time costs, or MISSING_VALUE when time_costs is false.
    if(time_costs){
        costs[7]=train_time;
        costs[8]=total_train_time;
        costs[9]=test_time;
        costs[10]=total_test_time;
        costs[11]=time_sum;
        costs[12]=time_sum_ft;
        costs[13]=time_last_stage;
        costs[14]=time_last_stage_ft;
        costs[15]=last_stage;
    }else{
        costs[7]=costs[8]=costs[9]=costs[10]=MISSING_VALUE;
        costs[11]=costs[12]=costs[13]=costs[14]=costs[15]=MISSING_VALUE;
    }

    if(forward_sub_learner_test_costs){
        costs.resize(7+4+5);
        subcosts1+=subcosts2;
        costs.append(subcosts1);
    }
    PLASSERT(costs.size()==nTestCosts());
}
```
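After the seven class costs, computeOutputAndCosts() writes nine timing entries (indices 7 to 15), which are NaN when time_costs is false. When forward_sub_learner_test_costs is set, it then appends the element-wise sum of the two sub-learners' costs. A minimal sketch with `std::vector<double>` standing in for `Vec`; the helper name `appendTail` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Extend the 7 class costs with the 9 timing entries (or NaN), then
// optionally with the element-wise sum of the sub-learners' costs.
std::vector<double> appendTail(std::vector<double> costs,          // 7 class costs
                               const std::vector<double>& timings, // 9 values
                               bool time_costs,
                               bool forward_sub_costs,
                               const std::vector<double>& sub1,
                               const std::vector<double>& sub2) {
    assert(costs.size() == 7 && timings.size() == 9);
    const double missing = std::numeric_limits<double>::quiet_NaN();
    for (double t : timings)
        costs.push_back(time_costs ? t : missing);
    if (forward_sub_costs) {
        assert(sub1.size() == sub2.size());
        for (std::size_t i = 0; i < sub1.size(); ++i)
            costs.push_back(sub1[i] + sub2[i]);
    }
    return costs;
}
```

Summing rather than concatenating keeps the cost vector the same length as one sub-learner's, which is why getTestCostNames() prefixes those names with "sum_sublearner.".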

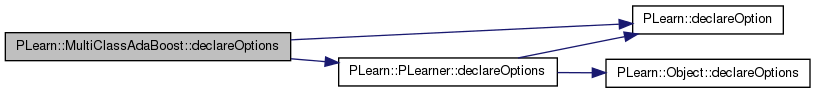

`void PLearn::MultiClassAdaBoost::declareOptions (OptionList &ol)` [static, protected]

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 90 of file MultiClassAdaBoost.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), done_warn_once_target_gt_2, forward_sub_learner_test_costs, forward_test, last_stage, learner1, learner2, learner_template, PLearn::OptionBase::learntoption, nb_sequential_ft, PLearn::OptionBase::nosave, test_time, time_costs, time_last_stage, time_last_stage_ft, time_sum, time_sum_ft, total_test_time, total_train_time, train_time, and warn_once_target_gt_2.

```cpp
{
    // ### Declare all of this object's options here.
    // ### For the "flags" of each option, you should typically specify
    // ### one of OptionBase::buildoption, OptionBase::learntoption or
    // ### OptionBase::tuningoption. If you don't provide one of these three,
    // ### this option will be ignored when loading values from a script.
    // ### You can also combine flags, for example with OptionBase::nosave:
    // ### (OptionBase::buildoption | OptionBase::nosave)

    // ### ex:
    // declareOption(ol, "myoption", &MultiClassAdaBoost::myoption,
    //               OptionBase::buildoption,
    //               "Help text describing this option");
    // ...

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);

    declareOption(ol, "learner1", &MultiClassAdaBoost::learner1,
                  OptionBase::learntoption,
                  "The sub learner to use.");

    declareOption(ol, "learner2", &MultiClassAdaBoost::learner2,
                  OptionBase::learntoption,
                  "The sub learner to use.");

    declareOption(ol, "forward_sub_learner_test_costs",
                  &MultiClassAdaBoost::forward_sub_learner_test_costs,
                  OptionBase::buildoption,
                  "Whether to add the learner1 and learner2 costs to our costs.\n");

    declareOption(ol, "learner_template",
                  &MultiClassAdaBoost::learner_template,
                  OptionBase::buildoption,
                  "The template to use for learner1 and learner2.\n");

    declareOption(ol, "forward_test",
                  &MultiClassAdaBoost::forward_test,
                  OptionBase::buildoption,
                  "If 0, use the default test. If 1, forward the test function"
                  " to the sub-learners. If 2, determine at each stage which is"
                  " faster based on past test times.\n");

    declareOption(ol, "train_time",
                  &MultiClassAdaBoost::train_time,
                  OptionBase::learntoption|OptionBase::nosave,
                  "The time spent in the last call to train(), in seconds.");

    declareOption(ol, "total_train_time",
                  &MultiClassAdaBoost::total_train_time,
                  OptionBase::learntoption|OptionBase::nosave,
                  "The total time spent in the train() function, in seconds.");

    declareOption(ol, "test_time",
                  &MultiClassAdaBoost::test_time,
                  OptionBase::learntoption|OptionBase::nosave,
                  "The time spent in the last call to test(), in seconds.");

    declareOption(ol, "total_test_time",
                  &MultiClassAdaBoost::total_test_time,
                  OptionBase::learntoption|OptionBase::nosave,
                  "The total time spent in the test() function, in seconds.");

    declareOption(ol, "time_costs",
                  &MultiClassAdaBoost::time_costs, OptionBase::buildoption,
                  "If true, generate the time costs. Otherwise they are NaN.");

    declareOption(ol, "warn_once_target_gt_2",
                  &MultiClassAdaBoost::warn_once_target_gt_2,
                  OptionBase::buildoption,
                  "If true, generate only one warning if we find target > 2.");

    declareOption(ol, "done_warn_once_target_gt_2",
                  &MultiClassAdaBoost::done_warn_once_target_gt_2,
                  OptionBase::learntoption|OptionBase::nosave,
                  "Used to keep track of whether the warning has been issued.");

    declareOption(ol, "time_sum",
                  &MultiClassAdaBoost::time_sum,
                  OptionBase::learntoption|OptionBase::nosave,
                  "The time spent in test() during the last stage if"
                  " forward_test==2 and we use the inherited::test function."
                  " If test() is called multiple times for the same stage,"
                  " this is the sum of the times.");

    declareOption(ol, "time_sum_ft",
                  &MultiClassAdaBoost::time_sum_ft,
                  OptionBase::learntoption|OptionBase::nosave,
                  "The time spent in test() during the last stage if"
                  " forward_test is 1 or 2 and we forward the test to the"
                  " sub-learners. If test() is called multiple times for the"
                  " same stage, this is the sum of the times.");

    declareOption(ol, "time_last_stage",
                  &MultiClassAdaBoost::time_last_stage,
                  OptionBase::learntoption|OptionBase::nosave,
                  "This is the last value of time_sum in the last stage.");

    declareOption(ol, "time_last_stage_ft",
                  &MultiClassAdaBoost::time_last_stage_ft,
                  OptionBase::learntoption|OptionBase::nosave,
                  "This is the last value of time_sum_ft in the last stage.");

    declareOption(ol, "last_stage",
                  &MultiClassAdaBoost::last_stage,
                  OptionBase::learntoption |OptionBase::nosave,
                  "The stage at which time_sum or time_sum_ft was used.");

    declareOption(ol, "nb_sequential_ft",
                  &MultiClassAdaBoost::nb_sequential_ft,
                  OptionBase::learntoption |OptionBase::nosave,
                  "The number of consecutive times that we forwarded the test()"
                  " function. We must track this because the first forwarded"
                  " call takes longer than the following ones.");
}
```
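The forward_test, time_sum, time_sum_ft, time_last_stage, time_last_stage_ft and nb_sequential_ft options work together in test(). The sketch below models the decision logic visible in the test() listing: at each new stage the accumulated times roll over, the first forwarded run is skipped because it is slower, and with forward_test == 2 the inherited test is used when it was faster last stage. The `TestTiming` struct and `chooseForward` method are hypothetical, and the sketch does not model timing the test run itself or updating last_stage.

```cpp
#include <cassert>

// Hypothetical, simplified model of the forward_test option.
struct TestTiming {
    int forward_test = 2;  // 0: inherited test, 1: forward, 2: adaptive
    int last_stage = 0;
    double time_sum = 0, time_sum_ft = 0;
    double time_last_stage = 0, time_last_stage_ft = 0;
    int nb_sequential_ft = 0;

    // Returns true when test() should be forwarded to the sub-learners.
    bool chooseForward(int stage) {
        assert(forward_test >= 0 && forward_test <= 2);
        if (forward_test == 0) return false;
        if (last_stage < stage && time_sum > 0) {
            time_last_stage = time_sum;      // roll over to the new stage
            time_sum = 0;
        }
        if (last_stage < stage && time_sum_ft > 0) {
            if (nb_sequential_ft > 0)        // skip the slower first forwarded run
                time_last_stage_ft = time_sum_ft;
            ++nb_sequential_ft;
            time_sum_ft = 0;
        }
        return !(forward_test == 2 && time_last_stage < time_last_stage_ft);
    }
};
```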

`static const PPath& PLearn::MultiClassAdaBoost::declaringFile ()` [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 183 of file MultiClassAdaBoost.h.


`MultiClassAdaBoost * PLearn::MultiClassAdaBoost::deepCopy (CopiesMap &copies) const` [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file MultiClassAdaBoost.cc.

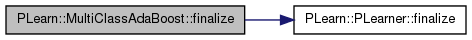

`void PLearn::MultiClassAdaBoost::finalize ()` [virtual]

When this method is called, the learner knows it will never be trained again, so it can free resources that are needed only during training. The functions test(), computeOutput(), ... should continue to work.

Reimplemented from PLearn::PLearner.

Definition at line 273 of file MultiClassAdaBoost.cc.

References PLearn::PLearner::finalize(), learner1, and learner2.

```cpp
{
    inherited::finalize();
    learner1->finalize();
    learner2->finalize();
}
```

`void PLearn::MultiClassAdaBoost::forget ()` [virtual]

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 280 of file MultiClassAdaBoost.cc.

References PLearn::PLearner::forget(), learner1, learner2, PLearn::PLearner::stage, and PLearn::PLearner::train_stats.

```cpp
{
    inherited::forget();
    stage = 0;
    train_stats->forget();
    learner1->forget();
    learner2->forget();
}
```

`OptionList & PLearn::MultiClassAdaBoost::getOptionList () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 58 of file MultiClassAdaBoost.cc.

`OptionMap & PLearn::MultiClassAdaBoost::getOptionMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 58 of file MultiClassAdaBoost.cc.

`TVec< string > PLearn::MultiClassAdaBoost::getOutputNames () const` [virtual]

Returns a vector of length outputsize() containing the outputs' names.

The default version returns ["out0", "out1", ...]. Names should not contain spaces, or they will cause trouble when saved in the file {metadatadir}/fieldnames.

Reimplemented from PLearn::PLearner.

Definition at line 529 of file MultiClassAdaBoost.cc.

```cpp
{
    TVec<string> names(3);
    names[0]="prediction";
    names[1]="prediction_learner_1";
    names[2]="prediction_learner_2";
    return names;
}
```

`RemoteMethodMap & PLearn::MultiClassAdaBoost::getRemoteMethodMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 58 of file MultiClassAdaBoost.cc.

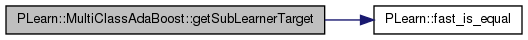

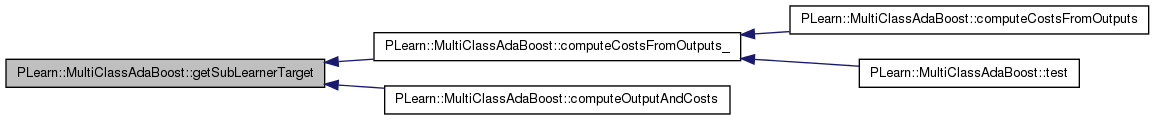

`void PLearn::MultiClassAdaBoost::getSubLearnerTarget (const Vec target, TVec< Vec > sub_target) const` [private]

Definition at line 584 of file MultiClassAdaBoost.cc.

References done_warn_once_target_gt_2, PLearn::fast_is_equal(), PLERROR, PLWARNING, and warn_once_target_gt_2.

Referenced by computeCostsFromOutputs_(), and computeOutputAndCosts().

```cpp
{
    // Target 0 -> (0, 0), 1 -> (1, 0), 2 -> (1, 1):
    // learner1 learns "class != 0" and learner2 learns "class == 2".
    if(fast_is_equal(target[0],0.)){
        sub_target[0][0]=0;
        sub_target[1][0]=0;
    }else if(fast_is_equal(target[0],1.)){
        sub_target[0][0]=1;
        sub_target[1][0]=0;
    }else if(fast_is_equal(target[0],2.)){
        sub_target[0][0]=1;
        sub_target[1][0]=1;
    }else if(target[0]>2){
        // Targets above 2 are treated as class 2, with a warning.
        if(!warn_once_target_gt_2 || ! done_warn_once_target_gt_2){
            PLWARNING("In MultiClassAdaBoost::getSubLearnerTarget - "
                      "We only support target 0/1/2. We got %f. We transform "
                      "it to a target of 2.", target[0]);
            done_warn_once_target_gt_2=true;
            if(warn_once_target_gt_2)
                PLWARNING("We will show this warning only once.");
        }
        sub_target[0][0]=1;
        sub_target[1][0]=1;
    }else{
        PLERROR("In MultiClassAdaBoost::getSubLearnerTarget - "
                "We only support target 0/1/2. We got %f.", target[0]);
        sub_target[0][0]=0;
        sub_target[1][0]=0;
    }
}
```
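The target mapping can be written as a small pure function. This sketch returns the pair (target for learner1, target for learner2). It uses a fixed tolerance of 1e-9 as an assumed stand-in for `fast_is_equal`, a `warned` flag instead of PLWARNING, and throws `std::invalid_argument` where the original calls PLERROR; those three choices belong to the sketch, not to PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <utility>

// Map a 3-class target to (learner1 target, learner2 target).
// Targets above 2 are clamped to class 2; `warned` records that the
// original would have emitted a warning.
std::pair<int, int> subTargets(double target, bool& warned) {
    auto near = [target](double v) { return std::fabs(target - v) < 1e-9; };
    if (near(0.0)) return {0, 0};
    if (near(1.0)) return {1, 0};
    if (near(2.0)) return {1, 1};
    if (target > 2.0) { warned = true; return {1, 1}; }
    throw std::invalid_argument("only targets 0/1/2 are supported");
}
```

Non-integer targets such as 1.5, negative targets and NaN all fall through to the error branch, as in the original.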

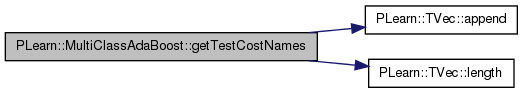

`TVec< string > PLearn::MultiClassAdaBoost::getTestCostNames () const` [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 538 of file MultiClassAdaBoost.cc.

References PLearn::TVec< T >::append(), forward_sub_learner_test_costs, i, learner1, and PLearn::TVec< T >::length().

```cpp
{
    // Return the names of the costs computed by computeCostsFromOutputs
    // (these may or may not be exactly the same as what's returned by
    // getTrainCostNames).

    // ...

    TVec<string> names;
    names.append("class_error");
    names.append("linear_class_error");
    names.append("square_class_error");
    names.append("conflict");
    names.append("class0");
    names.append("class1");
    names.append("class2");
    names.append("train_time");
    names.append("total_train_time");
    names.append("test_time");
    names.append("total_test_time");
    names.append("time_sum");
    names.append("time_sum_ft");
    names.append("time_last_stage");
    names.append("time_last_stage_ft");
    names.append("last_stage");
    if(forward_sub_learner_test_costs){
        TVec<string> subcosts=learner1->getTestCostNames();
        for(int i=0;i<subcosts.length();i++){
            subcosts[i]="sum_sublearner."+subcosts[i];
        }
        names.append(subcosts);
    }
    return names;
}
```
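The cost-name layout is 16 fixed names followed, when forward_sub_learner_test_costs is set, by learner1's cost names with a "sum_sublearner." prefix (those slots hold learner1 + learner2 costs). A sketch with `std::vector<std::string>` in place of `TVec<string>`; the function name `testCostNames` is hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Build the test-cost names in the same order as getTestCostNames().
std::vector<std::string> testCostNames(bool forward_sub_costs,
                                       const std::vector<std::string>& sub_names) {
    std::vector<std::string> names = {
        "class_error", "linear_class_error", "square_class_error", "conflict",
        "class0", "class1", "class2",
        "train_time", "total_train_time", "test_time", "total_test_time",
        "time_sum", "time_sum_ft", "time_last_stage", "time_last_stage_ft",
        "last_stage"};
    if (forward_sub_costs)
        for (const std::string& s : sub_names)
            names.push_back("sum_sublearner." + s);
    return names;
}
```

Index i of this list names costs[i] as filled by computeOutputAndCosts(), so "conflict" is cost 3 and "last_stage" is cost 15.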

`TVec< string > PLearn::MultiClassAdaBoost::getTrainCostNames () const` [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 572 of file MultiClassAdaBoost.cc.

```cpp
{
    // Return the names of the objective costs that the train method computes
    // and for which it updates the VecStatsCollector train_stats
    // (these may or may not be exactly the same as what's returned by
    // getTestCostNames).

    // ...

    TVec<string> names;
    return names;
}
```

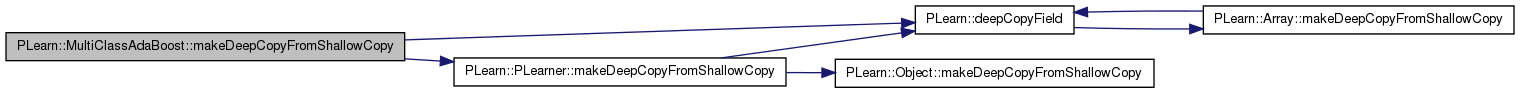

`void PLearn::MultiClassAdaBoost::makeDeepCopyFromShallowCopy (CopiesMap &copies)` [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 244 of file MultiClassAdaBoost.cc.

References PLearn::deepCopyField(), learner1, learner2, PLearn::PLearner::makeDeepCopyFromShallowCopy(), output1, output2, subcosts1, subcosts2, timer, tmp_costs, tmp_input, tmp_output, and tmp_target.

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);

    deepCopyField(tmp_input, copies);
    deepCopyField(tmp_target, copies);
    deepCopyField(tmp_output, copies);
    deepCopyField(tmp_costs, copies);
    deepCopyField(output1, copies);
    deepCopyField(output2, copies);
    deepCopyField(subcosts1, copies);
    deepCopyField(subcosts2, copies);
    deepCopyField(timer, copies);
    deepCopyField(learner1, copies);
    deepCopyField(learner2, copies);
    //not needed as we only read it.
    //deepCopyField(learner_template, copies);
}
```
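The `copies` map threaded through deepCopyField() acts as a memo table: an object reachable through several pointers is copied once, so sharing inside the copied object graph is preserved. The generic sketch below shows that technique with `std::shared_ptr` and a hypothetical `Node` type; it illustrates the idea and is not PLearn's CopiesMap implementation.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical object with one owned sub-object.
struct Node {
    int value = 0;
    std::shared_ptr<Node> child;
};

// Memo table: original object -> its copy.
using Copies = std::map<const Node*, std::shared_ptr<Node>>;

std::shared_ptr<Node> deepCopy(const std::shared_ptr<Node>& src, Copies& copies) {
    if (!src) return nullptr;
    auto it = copies.find(src.get());
    if (it != copies.end()) return it->second;  // already copied: reuse it
    auto dst = std::make_shared<Node>();
    copies[src.get()] = dst;                    // register before recursing (handles cycles)
    dst->value = src->value;
    dst->child = deepCopy(src->child, copies);
    return dst;
}
```

Fields that are only read, such as learner_template here, can be left shared instead of copied.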

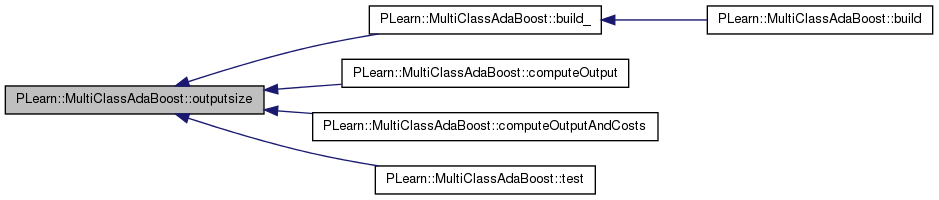

`int PLearn::MultiClassAdaBoost::outputsize () const` [virtual]

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 265 of file MultiClassAdaBoost.cc.

Referenced by build_(), computeOutput(), computeOutputAndCosts(), and test().

```cpp
{
    // Compute and return the size of this learner's output (which typically
    // may depend on its inputsize(), targetsize() and set options).
    return 3;
}
```

`void PLearn::MultiClassAdaBoost::setTrainingSet (VMat training_set, bool call_forget = true)` [virtual]

Declares the training set.

Then calls build() and forget() if necessary. Also sets this learner's inputsize_, targetsize_ and weightsize_ from those of the training_set. Note: you shouldn't have to override this in subclasses, except maybe to forward the call to an underlying learner.

Reimplemented from PLearn::PLearner.

Definition at line 615 of file MultiClassAdaBoost.cc.

References PLearn::VMat::fieldName(), learner1, learner2, PLCHECK, PLearn::TVec< T >::resize(), PLearn::PLearner::setTrainingSet(), subcosts1, subcosts2, targetname, and PLearn::PLearner::train_set.

Referenced by build_().

```cpp
{
    PLCHECK(learner1 && learner2);

    bool training_set_has_changed = !train_set || !(train_set->looksTheSameAs(training_set));
    targetname = training_set->fieldName(training_set->inputsize());

    // We don't set the sub-learners' training sets if the script gave them
    // one explicitly. This can be useful for optimization.
    if(training_set_has_changed || !learner1->getTrainingSet()){
        VMat vmat1 = new OneVsAllVMatrix(training_set,0,true);
        if(training_set->hasMetaDataDir())
            vmat1->setMetaDataDir(training_set->getMetaDataDir()/"0vsOther");
        learner1->setTrainingSet(vmat1, call_forget);
    }

    if(training_set_has_changed || !learner2->getTrainingSet()){
        VMat vmat2 = new OneVsAllVMatrix(training_set,2);
        PP<RegressionTreeRegisters> t1 =
            (PP<RegressionTreeRegisters>)learner1->getTrainingSet();
        // Reuse learner1's RegressionTreeRegisters data for learner2's view;
        // guard against learner1's training set being of another type.
        if(t1 && t1->classname()=="RegressionTreeRegisters"){
            vmat2 = new RegressionTreeRegisters(vmat2,
                                                t1->getTSortedRow(),
                                                t1->getTSource(),
                                                learner1->report_progress,
                                                learner1->verbosity,false,false);
        }
        learner2->setTrainingSet(vmat2, call_forget);
    }

    // We do it here as RegressionTree needs a training set to know
    // the number of test costs.
    subcosts2.resize(learner2->nTestCosts());
    subcosts1.resize(learner1->nTestCosts());

    inherited::setTrainingSet(training_set, call_forget);
}
```

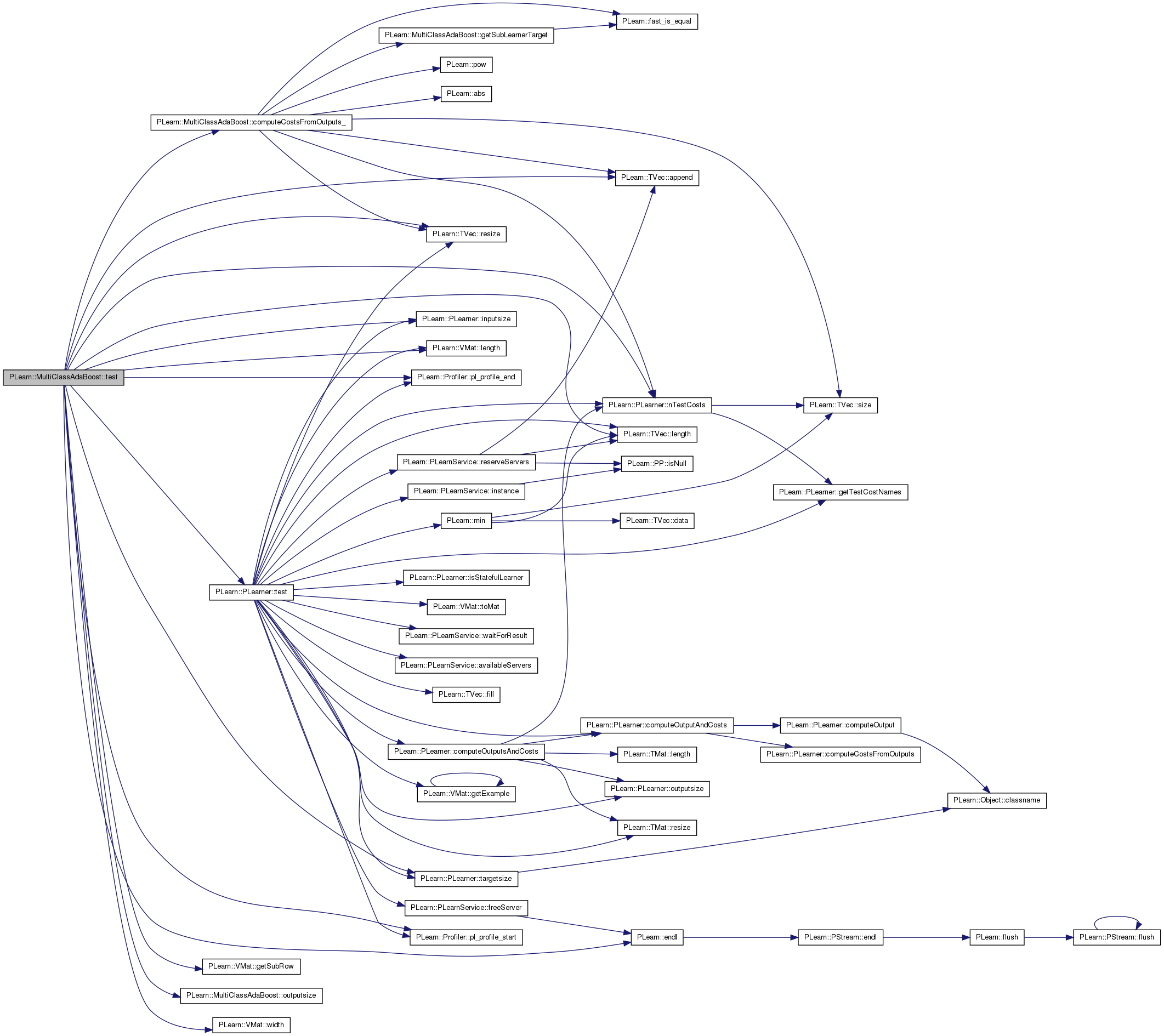

`void PLearn::MultiClassAdaBoost::test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs = 0, VMat testcosts = 0) const` [virtual]

Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts.

The default version repeatedly calls computeOutputAndCosts or computeCostsOnly. Note that neither test_stats->forget() nor test_stats->finalize() is called, so that you should call them yourself (respectively before and after calling this method) if you don't plan to accumulate statistics.

Reimplemented from PLearn::PLearner.

Definition at line 653 of file MultiClassAdaBoost.cc.

References PLearn::TVec< T >::append(), computeCostsFromOutputs_(), PLearn::endl(), forward_sub_learner_test_costs, forward_test, PLearn::VMat::getSubRow(), i, PLearn::PLearner::inputsize(), last_stage, learner1, learner2, PLearn::VMat::length(), PLearn::TVec< T >::length(), nb_sequential_ft, PLearn::PLearner::nTestCosts(), outputsize(), PLearn::Profiler::pl_profile_end(), PLearn::Profiler::pl_profile_start(), PLASSERT, PLCHECK, PLearn::TVec< T >::resize(), saved_testset, saved_testset1, saved_testset2, PLearn::PLearner::stage, PLearn::PLearner::targetsize(), PLearn::PLearner::test(), time_last_stage, time_last_stage_ft, time_sum, time_sum_ft, timer, tmp_costs, tmp_input, tmp_output, tmp_target, and PLearn::VMat::width().

```cpp
{
    Profiler::pl_profile_start("MultiClassAdaBoost::test()");
    Profiler::pl_profile_start("MultiClassAdaBoost::train+test");
    timer->startTimer("MultiClassAdaBoost::test()");
    if(!forward_test){
        inherited::test(testset,test_stats,testoutputs,testcosts);
        Profiler::pl_profile_end("MultiClassAdaBoost::test()");
        Profiler::pl_profile_end("MultiClassAdaBoost::train+test");
        timer->stopTimer("MultiClassAdaBoost::test()");
        return;
    }
    if(last_stage<stage && time_sum>0){
        time_last_stage=time_sum;
        time_sum=0;
    }
    if(last_stage<stage && time_sum_ft>0){
        if(nb_sequential_ft>0)
            time_last_stage_ft=time_sum_ft;
        nb_sequential_ft++;
        time_sum_ft=0;
    }
    if(forward_test==2 && time_last_stage<time_last_stage_ft){
        EXTREME_MODULE_LOG<<"inherited start time_sum="<<time_sum<<" time_sum_ft="<<time_sum_ft<<" last_stage="<<last_stage <<" stage=" <<stage <<" time_last_stage=" <<time_last_stage<<" time_last_stage_ft=" <<time_last_stage_ft<<endl;
        timer->resetTimer("MultiClassAdaBoost::test() current");
        timer->startTimer("MultiClassAdaBoost::test() current");
        PLCHECK(last_stage<=stage);
        inherited::test(testset,test_stats,testoutputs,testcosts);
        timer->stopTimer("MultiClassAdaBoost::test() current");
        timer->stopTimer("MultiClassAdaBoost::test()");
        Profiler::pl_profile_end("MultiClassAdaBoost::test()");
        Profiler::pl_profile_end("MultiClassAdaBoost::train+test");
        time_sum += timer->getTimer("MultiClassAdaBoost::test() current");
        last_stage=stage;
        nb_sequential_ft = 0;
        EXTREME_MODULE_LOG<<"inherited end time_sum="<<time_sum<<" time_sum_ft="<<time_sum_ft<<" last_stage="<<last_stage <<" stage=" <<stage <<" time_last_stage=" <<time_last_stage<<" time_last_stage_ft=" <<time_last_stage_ft<<endl;
        return;
    }
    EXTREME_MODULE_LOG<<"start time_sum="<<time_sum<<" time_sum_ft="<<time_sum_ft<<" last_stage="<<last_stage <<" stage=" <<stage <<" time_last_stage=" <<time_last_stage<<" time_last_stage_ft=" <<time_last_stage_ft<<endl;
    timer->resetTimer("MultiClassAdaBoost::test() current");
    timer->startTimer("MultiClassAdaBoost::test() current");
    //Profiler::pl_profile_start("MultiClassAdaBoost::test() part1");//cheap
    int index=-1;
    for(int i=0;i<saved_testset.length();i++){
        if(saved_testset[i]==testset){
            index=i;break;
        }
    }
    PP<VecStatsCollector> test_stats1 = 0;
    PP<VecStatsCollector> test_stats2 = 0;
    VMat testoutputs1 = VMat(new MemoryVMatrix(testset->length(),
                                               learner1->outputsize()));
    VMat testoutputs2 = VMat(new MemoryVMatrix(testset->length(),
                                               learner2->outputsize()));
    VMat testcosts1 = 0;
    VMat testcosts2 = 0;
    VMat testset1 = 0;
    VMat testset2 = 0;
    if ((testcosts || test_stats )&& forward_sub_learner_test_costs){
        //comment
        testcosts1 = VMat(new MemoryVMatrix(testset->length(),
                                            learner1->nTestCosts()));
        testcosts2 = VMat(new MemoryVMatrix(testset->length(),
                                            learner2->nTestCosts()));
    }
    if(index<0){
        testset1 = new OneVsAllVMatrix(testset,0,true);
        testset2 = new OneVsAllVMatrix(testset,2);
        saved_testset.append(testset);
        saved_testset1.append(testset1);
        saved_testset2.append(testset2);
    }else{
        //we need to do that as AdaBoost needs
        //the same dataset to reuse its test results
        testset1=saved_testset1[index];
        testset2=saved_testset2[index];
        PLCHECK(((PP<OneVsAllVMatrix>)testset1)->source==testset);
        PLCHECK(((PP<OneVsAllVMatrix>)testset2)->source==testset);
    }
    //Profiler::pl_profile_end("MultiClassAdaBoost::test() part1");//cheap
    Profiler::pl_profile_start("MultiClassAdaBoost::test() subtest");
#ifdef _OPENMP
#pragma omp parallel sections if(false)//false as this is not thread safe right now.
    {
#pragma omp section
        learner1->test(testset1,test_stats1,testoutputs1,testcosts1);
#pragma omp section
        learner2->test(testset2,test_stats2,testoutputs2,testcosts2);
    }
#else
    learner1->test(testset1,test_stats1,testoutputs1,testcosts1);
    learner2->test(testset2,test_stats2,testoutputs2,testcosts2);
#endif
    Profiler::pl_profile_end("MultiClassAdaBoost::test() subtest");
    VMat my_outputs = 0;
    VMat my_costs = 0;
    if(testoutputs){
        my_outputs=testoutputs;
    }else if(bool(testcosts) | bool(test_stats)){
        my_outputs=VMat(new MemoryVMatrix(testset->length(),
                                          outputsize()));
    }
    if(testcosts){
        my_costs=testcosts;
    }else if(test_stats){
        my_costs=VMat(new MemoryVMatrix(testset->length(),
                                        nTestCosts()));
    }
    // Profiler::pl_profile_start("MultiClassAdaBoost::test() my_outputs");//cheap
    if(my_outputs){
        for(int row=0;row<testset.length();row++){
            real out1=testoutputs1->get(row,0);
            real out2=testoutputs2->get(row,0);
            int ind1=int(round(out1));
            int ind2=int(round(out2));
            int ind=-1;
            if(ind1==0 && ind2==0)
                ind=0;
            else if(ind1==1 && ind2==0)
                ind=1;
            else if(ind1==1 && ind2==1)
                ind=2;
            else
                ind=1;//TODOself.confusion_target;
            tmp_output[0]=ind;
            tmp_output[1]=out1;
            tmp_output[2]=out2;
            my_outputs->putOrAppendRow(row,tmp_output);
        }
    }
    // Profiler::pl_profile_end("MultiClassAdaBoost::test() my_outputs");
    // Profiler::pl_profile_start("MultiClassAdaBoost::test() my_costs");//cheap
    if (my_costs){
        tmp_costs.resize(nTestCosts());
        // if (forward_sub_learner_test_costs)
        //TODO optimize by reusing testoutputs1 and testoutputs2
        // PLWARNING("will be long");
        int target_index = testset->inputsize();
        PLASSERT(testset->targetsize()==1);
        Vec costs1,costs2;
        if(forward_sub_learner_test_costs){
            costs1.resize(learner1->nTestCosts());
            costs2.resize(learner2->nTestCosts());
        }
        for(int row=0;row<testset.length();row++){
            //default version
            //testset.getExample(row, input, target, weight);
            //computeCostsFromOutputs(input,my_outputs(row),target,costs);
            //the input is not needed for the cost of this class if the sub-costs are known.
            testset->getSubRow(row,target_index,tmp_target);
            // Vec costs1=testcosts1(row);
            // Vec costs2=testcosts2(row);
            if(forward_sub_learner_test_costs){
                testcosts1->getRow(row,costs1);
                testcosts2->getRow(row,costs2);
            }
            //TODO??? tmp_input is empty!!!
            computeCostsFromOutputs_(tmp_input, my_outputs(row), tmp_target, costs1,
                                     costs2, tmp_costs);
            my_costs->putOrAppendRow(row,tmp_costs);
        }
    }
    // Profiler::pl_profile_end("MultiClassAdaBoost::test() my_costs");
    // Profiler::pl_profile_start("MultiClassAdaBoost::test() test_stats");//cheap
    if (test_stats){
        if(testset->weightsize()==0){
            for(int row=0;row<testset.length();row++){
                Vec costs = my_costs(row);
                test_stats->update(costs, 1);
            }
        }else{
            int weight_index=inputsize()+targetsize();
            Vec costs(my_costs.width());
            for(int row=0;row<testset.length();row++){
                // Vec costs = my_costs(row);
                my_costs->getRow(row, costs);
                test_stats->update(costs, testset->get(row, weight_index));
            }
        }
    }
    // Profiler::pl_profile_end("MultiClassAdaBoost::test() test_stats");
    timer->stopTimer("MultiClassAdaBoost::test() current");
    timer->stopTimer("MultiClassAdaBoost::test()");
    Profiler::pl_profile_end("MultiClassAdaBoost::test()");
    Profiler::pl_profile_end("MultiClassAdaBoost::train+test");
    time_sum_ft +=timer->getTimer("MultiClassAdaBoost::test() current");
    last_stage=stage;
    EXTREME_MODULE_LOG<<"end time_sum="<<time_sum<<" time_sum_ft="<<time_sum_ft<<" last_stage="<<last_stage <<" stage=" <<stage <<" time_last_stage=" <<time_last_stage<<" time_last_stage_ft=" <<time_last_stage_ft<<endl;
}
```
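The per-row decoding in the `my_outputs` loop of test() turns the two binary sub-learner outputs into a 3-class prediction. The sketch below restates that table as standalone functions. `encodeTarget` is an assumption: it is inferred from the decoding table (learner1 answering "target != 0", learner2 answering "target == 2") and from the wrapping `OneVsAllVMatrix(testset,0,true)` / `OneVsAllVMatrix(testset,2)`, not taken from `OneVsAllVMatrix`'s documentation. All function names here are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Decoding rule restated from MultiClassAdaBoost::test(): round each binary
// sub-learner output to an int and map the pair to a class index.
int decodeClass(double out1, double out2) {
    const int ind1 = static_cast<int>(std::round(out1));
    const int ind2 = static_cast<int>(std::round(out2));
    if (ind1 == 0 && ind2 == 0) return 0;
    if (ind1 == 1 && ind2 == 0) return 1;
    if (ind1 == 1 && ind2 == 1) return 2;
    return 1;  // inconsistent pair such as (0,1): the source falls back to class 1 (see its TODO)
}

// ASSUMED encoding of a 3-class target {0,1,2} into the two binary targets,
// inferred from the decoding table: learner1 learns "target != 0",
// learner2 learns "target == 2". Hypothetical helper, not a PLearn function.
std::array<int, 2> encodeTarget(int target) {
    assert(target >= 0 && target <= 2);
    return {{target != 0 ? 1 : 0, target == 2 ? 1 : 0}};
}

// Builds the 3-element output row [class, out1, out2] in the same layout
// that test() writes into tmp_output.
std::array<double, 3> outputRow(double out1, double out2) {
    return {{static_cast<double>(decodeClass(out1, out2)), out1, out2}};
}
```

Under that assumed encoding, a consistent pair of sub-predictions always decodes back to the original class; inconsistent pairs such as (0, 1) fall back to class 1.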

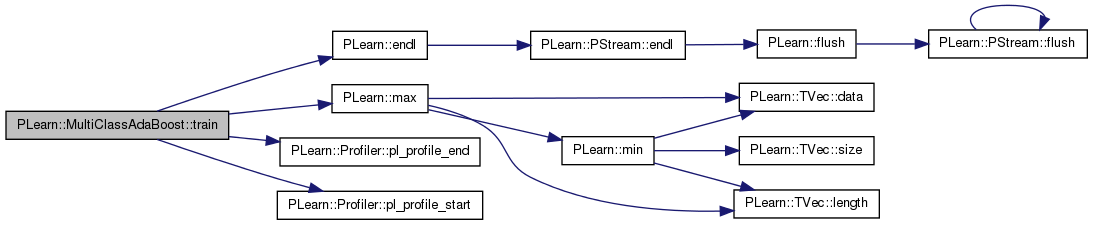

void PLearn::MultiClassAdaBoost::train () [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 290 of file MultiClassAdaBoost.cc.

References PLearn::endl(), learner1, learner2, PLearn::max(), PLearn::PLearner::nstages, PLearn::Profiler::pl_profile_end(), PLearn::Profiler::pl_profile_start(), PLearn::PLearner::stage, test_time, timer, total_test_time, total_train_time, PLearn::PLearner::train_stats, and train_time.

```cpp
{
    EXTREME_MODULE_LOG<<"train() start"<<endl;
    timer->startTimer("MultiClassAdaBoost::train");
    Profiler::pl_profile_start("MultiClassAdaBoost::train");
    Profiler::pl_profile_start("MultiClassAdaBoost::train+test");
    learner1->nstages = nstages;
    learner2->nstages = nstages;
    //if you use the parallel version, you must disable all verbose, verbosity and report progress in learner1 and learner2.
    //Otherwise this will cause a crash due to parallel printing to stdout/stderr.
#ifdef _OPENMP
    //AdaBoost and the weak learner should not print anything as this would cause a race condition on the output
    //TODO find a way to have thread-safe output?
    if(omp_get_max_threads()>1){
        learner1->verbosity=0;
        learner2->verbosity=0;
        learner1->weak_learner_template->verbosity=0;
        learner2->weak_learner_template->verbosity=0;
    }
    EXTREME_MODULE_LOG<<"train() // start"<<endl;
#pragma omp parallel sections default(none)
    {
#pragma omp section
        learner1->train();
#pragma omp section
        learner2->train();
    }
    EXTREME_MODULE_LOG<<"train() // end"<<endl;
#else
    learner1->train();
    learner2->train();
#endif
    stage=max(learner1->stage,learner2->stage);
    train_stats->stats.resize(0);
    PP<VecStatsCollector> v;
    //we do it this way in case a learner doesn't have train_stats
    if(v=learner1->getTrainStatsCollector())
        train_stats->append(*(v),"sublearner1.");
    if(v=learner2->getTrainStatsCollector())
        train_stats->append(*(v),"sublearner2.");
    timer->stopTimer("MultiClassAdaBoost::train");
    Profiler::pl_profile_end("MultiClassAdaBoost::train");
    Profiler::pl_profile_end("MultiClassAdaBoost::train+test");
    real tmp = timer->getTimer("MultiClassAdaBoost::train");
    train_time=tmp - total_train_time;
    total_train_time=tmp;
    //we get the test_time here as we want the test time for all datasets.
    //if we put it in the test function, we would have it for one dataset.
    tmp = timer->getTimer("MultiClassAdaBoost::test()");
    test_time=tmp-total_test_time;
    total_test_time=tmp;
    EXTREME_MODULE_LOG<<"train() end"<<endl;
}
```
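The control flow of train() can be sketched without PLearn: propagate nstages to both sub-learners, mute them when several OpenMP threads may print concurrently, train them in two `omp parallel sections` (or sequentially when compiled without OpenMP), and report the larger of the two stages. `SubLearner` and `trainPair` are hypothetical stand-ins, not PLearn classes; compile with `-fopenmp` to exercise the parallel path.

```cpp
#include <algorithm>
#include <cassert>
#ifdef _OPENMP
#include <omp.h>
#endif

// Hypothetical stand-in for a boosted sub-learner; only the fields that the
// train() logic touches are modelled.
struct SubLearner {
    int nstages = 0;
    int stage = 0;
    int verbosity = 1;
    void train() {
        // A real learner would run one boosting round per stage.
        while (stage < nstages) ++stage;
    }
};

// Mirrors MultiClassAdaBoost::train(): set nstages on both learners, silence
// them if several threads may print, train both, return the max stage.
int trainPair(SubLearner& learner1, SubLearner& learner2, int nstages) {
    assert(nstages >= 0);
    learner1.nstages = nstages;
    learner2.nstages = nstages;
#ifdef _OPENMP
    if (omp_get_max_threads() > 1) {
        // Concurrent printing from both learners is unsafe, so mute them.
        learner1.verbosity = 0;
        learner2.verbosity = 0;
    }
#pragma omp parallel sections
    {
#pragma omp section
        learner1.train();
#pragma omp section
        learner2.train();
    }
#else
    learner1.train();
    learner2.train();
#endif
    return std::max(learner1.stage, learner2.stage);
}
```

Taking the maximum means `stage` reflects the sub-learner that got furthest, for example one that was already beyond nstages before the call.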

Reimplemented from PLearn::PLearner.

Definition at line 183 of file MultiClassAdaBoost.h.

bool PLearn::MultiClassAdaBoost::done_warn_once_target_gt_2 [mutable, private]

Definition at line 89 of file MultiClassAdaBoost.h.

Referenced by declareOptions(), and getSubLearnerTarget().
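`getSubLearnerTarget()` is not shown in this section, but this member's name and its `mutable` qualifier suggest a warn-once idiom: a const method flips a mutable flag so that an out-of-range target is reported only once. The sketch below is a hypothetical illustration of that idiom, not the actual `getSubLearnerTarget()` logic; `TargetChecker` and `inRange` are invented names, and the valid range {0, 1, 2} is assumed from the 3-class decoding in test().

```cpp
#include <cassert>
#include <iostream>

// Hypothetical class: a const check that warns only the first time it sees
// a target greater than 2, using a mutable flag like done_warn_once_target_gt_2.
class TargetChecker {
public:
    bool inRange(int target) const {
        assert(target >= 0);  // class indices are assumed non-negative
        if (target <= 2) return true;
        if (!done_warn_once_target_gt_2) {
            std::cerr << "WARNING: target " << target
                      << " is greater than 2; further warnings suppressed\n";
            done_warn_once_target_gt_2 = true;
            ++warnings_emitted;
        }
        return false;
    }
    int warningsEmitted() const { return warnings_emitted; }

private:
    mutable bool done_warn_once_target_gt_2 = false;  // may change inside const methods
    mutable int warnings_emitted = 0;
};
```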


Did we add the learner1 and learner2 costs to our costs?

Definition at line 109 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), getTestCostNames(), and test().
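The member above controls whether learner1's and learner2's test costs are appended to this learner's own costs. How `getTestCostNames()` actually names them is not shown in this section; the sketch below assumes the same `sublearner1.` / `sublearner2.` prefixes that train() uses when it merges the sub-learners' train statistics. `CostRow`, `appendSubCosts`, `buildCostRow`, and the cost name `class_error` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical pairing of cost names with the values of one test row.
struct CostRow {
    std::vector<std::string> names;
    std::vector<double> values;
};

// Appends one sub-learner's costs to `row`, prefixing each name.
void appendSubCosts(CostRow& row, const std::string& prefix,
                    const std::vector<std::string>& sub_names,
                    const std::vector<double>& sub_values) {
    assert(sub_names.size() == sub_values.size());
    for (std::size_t i = 0; i < sub_names.size(); ++i) {
        row.names.push_back(prefix + sub_names[i]);
        row.values.push_back(sub_values[i]);
    }
}

// Own costs first, then (optionally) learner1's and learner2's costs.
CostRow buildCostRow(const CostRow& own, const CostRow& sub1, const CostRow& sub2,
                     bool forward_sub_learner_test_costs) {
    CostRow row = own;
    if (forward_sub_learner_test_costs) {
        appendSubCosts(row, "sublearner1.", sub1.names, sub1.values);  // assumed prefix
        appendSubCosts(row, "sublearner2.", sub2.names, sub2.values);  // assumed prefix
    }
    return row;
}
```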

Did we forward the test function to the sub learner?

Definition at line 112 of file MultiClassAdaBoost.h.

Referenced by declareOptions(), and test().
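From the body of test(), `forward_test` behaves as a three-way switch: 0 always uses the inherited PLearner::test(), any nonzero value forwards the test to the sub-learners, and 2 additionally switches back to the inherited path when that path was faster over the last completed stage. The hypothetical `TestPathChooser` below restates only that timing bookkeeping, reusing the private members' names; the real method also drives `timer` and the profiler, and the initial values of the time fields are assumed to be 0 here.

```cpp
#include <cassert>

enum class TestPath { Inherited, Forwarded };

// Hypothetical restatement of the path-selection bookkeeping in test().
struct TestPathChooser {
    int forward_test = 2;  // 0: never forward, nonzero: forward, 2: adaptive
    int last_stage = 0;
    int nb_sequential_ft = 0;
    double time_sum = 0, time_sum_ft = 0;
    double time_last_stage = 0, time_last_stage_ft = 0;

    // Called at the start of test() with the learner's current stage.
    TestPath choose(int stage) {
        if (!forward_test) return TestPath::Inherited;
        if (last_stage < stage && time_sum > 0) {
            time_last_stage = time_sum;  // inherited-path time of the finished stage
            time_sum = 0;
        }
        if (last_stage < stage && time_sum_ft > 0) {
            if (nb_sequential_ft > 0)    // only after consecutive forwarded stages
                time_last_stage_ft = time_sum_ft;
            ++nb_sequential_ft;
            time_sum_ft = 0;
        }
        if (forward_test == 2 && time_last_stage < time_last_stage_ft) {
            assert(last_stage <= stage);  // mirrors PLCHECK(last_stage<=stage)
            return TestPath::Inherited;
        }
        return TestPath::Forwarded;
    }

    // Called at the end of test() with the elapsed time of the chosen path.
    void record(TestPath path, double elapsed, int stage) {
        if (path == TestPath::Inherited) {
            if (!forward_test) return;  // the source keeps no timings in this case
            time_sum += elapsed;
            nb_sequential_ft = 0;
        } else {
            time_sum_ft += elapsed;
        }
        last_stage = stage;
    }
};
```

A forwarded stage's time is promoted to time_last_stage_ft only when nb_sequential_ft is already positive, so the first forwarded stage after an inherited one is not used for the comparison; the source does not say why.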

int PLearn::MultiClassAdaBoost::last_stage [mutable, private]

Definition at line 99 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and test().

The learner1 and learner2 must be trained!

Definition at line 115 of file MultiClassAdaBoost.h.

Referenced by build_(), computeCostsFromOutputs_(), computeOutput(), computeOutputAndCosts(), declareOptions(), finalize(), forget(), getTestCostNames(), makeDeepCopyFromShallowCopy(), setTrainingSet(), test(), and train().

Definition at line 116 of file MultiClassAdaBoost.h.

Referenced by build_(), computeCostsFromOutputs_(), computeOutput(), computeOutputAndCosts(), declareOptions(), finalize(), forget(), makeDeepCopyFromShallowCopy(), setTrainingSet(), test(), and train().

Definition at line 117 of file MultiClassAdaBoost.h.

Referenced by build_(), and declareOptions().

int PLearn::MultiClassAdaBoost::nb_sequential_ft [mutable, private]

Definition at line 100 of file MultiClassAdaBoost.h.

Referenced by declareOptions(), and test().

Vec PLearn::MultiClassAdaBoost::output1 [mutable, private]

Definition at line 68 of file MultiClassAdaBoost.h.

Referenced by build_(), computeOutput(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Vec PLearn::MultiClassAdaBoost::output2 [mutable, private]

Definition at line 69 of file MultiClassAdaBoost.h.

Referenced by build_(), computeOutput(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

TVec<VMat> PLearn::MultiClassAdaBoost::saved_testset [mutable, private]

Definition at line 73 of file MultiClassAdaBoost.h.

Referenced by test().

TVec<VMat> PLearn::MultiClassAdaBoost::saved_testset1 [mutable, private]

Definition at line 74 of file MultiClassAdaBoost.h.

Referenced by test().

TVec<VMat> PLearn::MultiClassAdaBoost::saved_testset2 [mutable, private]

Definition at line 75 of file MultiClassAdaBoost.h.

Referenced by test().
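The three saved_testset vectors form an identity-keyed cache: test() searches `saved_testset` for the incoming VMat and, on a hit, reuses the same `OneVsAllVMatrix` wrappers so that the AdaBoost sub-learners see the same dataset object and can reuse their earlier test results. The sketch assumes that comparing two VMat handles compares the underlying pointers and models them with `std::shared_ptr`; `Dataset`, `BinaryView`, and `WrapperCache` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical dataset type; only its identity matters here.
struct Dataset { int id; };
// Hypothetical wrapper playing the role of OneVsAllVMatrix.
struct BinaryView { std::shared_ptr<Dataset> source; int target_class; };

// Linear, identity-keyed cache mirroring saved_testset / saved_testset1 /
// saved_testset2: the same test set always gets back the same wrappers.
class WrapperCache {
public:
    std::pair<std::shared_ptr<BinaryView>, std::shared_ptr<BinaryView>>
    get(const std::shared_ptr<Dataset>& testset) {
        for (std::size_t i = 0; i < saved_.size(); ++i) {
            if (saved_[i] == testset) {  // pointer identity, not content equality
                assert(saved1_[i]->source == testset);
                return {saved1_[i], saved2_[i]};
            }
        }
        auto v1 = std::make_shared<BinaryView>(BinaryView{testset, 0});
        auto v2 = std::make_shared<BinaryView>(BinaryView{testset, 2});
        saved_.push_back(testset);
        saved1_.push_back(v1);
        saved2_.push_back(v2);
        return {v1, v2};
    }
    std::size_t size() const { return saved_.size(); }

private:
    std::vector<std::shared_ptr<Dataset>> saved_;
    std::vector<std::shared_ptr<BinaryView>> saved1_, saved2_;
};
```

As in the code shown above, entries are never removed, so every test set passed to test() stays referenced for the learner's lifetime.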

TVec<Vec> PLearn::MultiClassAdaBoost::sub_target_tmp [mutable, private]

Definition at line 214 of file MultiClassAdaBoost.h.

Referenced by build_(), computeCostsFromOutputs_(), and computeOutputAndCosts().

Vec PLearn::MultiClassAdaBoost::subcosts1 [mutable, private]

Definition at line 70 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutputAndCosts(), makeDeepCopyFromShallowCopy(), and setTrainingSet().

Vec PLearn::MultiClassAdaBoost::subcosts2 [mutable, private]

Definition at line 71 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutputAndCosts(), makeDeepCopyFromShallowCopy(), and setTrainingSet().

string PLearn::MultiClassAdaBoost::targetname [private]

Definition at line 216 of file MultiClassAdaBoost.h.

Referenced by build_(), and setTrainingSet().

real PLearn::MultiClassAdaBoost::test_time [private]

The time spent in test() since the end of the previous train() call, summed over all test sets (it is updated at the end of train()).

Definition at line 83 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and train().

bool PLearn::MultiClassAdaBoost::time_costs [private]

Definition at line 87 of file MultiClassAdaBoost.h.

Referenced by computeOutputAndCosts(), and declareOptions().

real PLearn::MultiClassAdaBoost::time_last_stage [mutable, private]

Definition at line 97 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and test().

real PLearn::MultiClassAdaBoost::time_last_stage_ft [mutable, private]

Definition at line 98 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and test().

real PLearn::MultiClassAdaBoost::time_sum [mutable, private]

Definition at line 95 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and test().

real PLearn::MultiClassAdaBoost::time_sum_ft [mutable, private]

Definition at line 96 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and test().

PP<PTimer> PLearn::MultiClassAdaBoost::timer [private]

Definition at line 91 of file MultiClassAdaBoost.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), test(), and train().

Vec PLearn::MultiClassAdaBoost::tmp_costs [mutable, private]

Definition at line 66 of file MultiClassAdaBoost.h.

Referenced by makeDeepCopyFromShallowCopy(), and test().

Vec PLearn::MultiClassAdaBoost::tmp_input [mutable, private]

Definition at line 63 of file MultiClassAdaBoost.h.

Referenced by makeDeepCopyFromShallowCopy(), and test().

Vec PLearn::MultiClassAdaBoost::tmp_output [mutable, private]

Global storage to save memory allocations.

Reimplemented from PLearn::PLearner.

Definition at line 65 of file MultiClassAdaBoost.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and test().

Vec PLearn::MultiClassAdaBoost::tmp_target [mutable, private]

Definition at line 64 of file MultiClassAdaBoost.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and test().
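tmp_input, tmp_target, tmp_output, and tmp_costs are `mutable` scratch vectors ("global storage to save memory allocations") that const methods such as test() fill in place. A minimal sketch of the idiom, using a hypothetical `RowFormatter` class: resizing a `std::vector` to its current size never reallocates, so repeated calls reuse one buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical class showing the mutable scratch-buffer idiom.
class RowFormatter {
public:
    explicit RowFormatter(std::size_t outputsize) : outputsize_(outputsize) {}

    // Fills the reused buffer with [class, out1, out2] and returns it.
    // After the first call, resize() to the same size keeps the allocation.
    const std::vector<double>& format(double cls, double out1, double out2) const {
        assert(outputsize_ >= 3);
        tmp_output.resize(outputsize_);
        tmp_output[0] = cls;
        tmp_output[1] = out1;
        tmp_output[2] = out2;
        return tmp_output;
    }

private:
    std::size_t outputsize_;
    mutable std::vector<double> tmp_output;  // scratch space, not logical state
};
```

The trade-off is that two threads calling the same const method would share the buffer, which is consistent with the source disabling its parallel test sections as "not thread safe right now"; it is presumably also why these members appear in makeDeepCopyFromShallowCopy().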

The total time spent in test().

Definition at line 85 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and train().

The total time spent in training.

Definition at line 80 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and train().

real PLearn::MultiClassAdaBoost::train_time [private]

The time it took for the last execution of the train() function.

Definition at line 78 of file MultiClassAdaBoost.h.

Referenced by computeCostsFromOutputs_(), computeOutputAndCosts(), declareOptions(), and train().
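train_time and test_time are per-interval values derived from cumulative timers: at the end of train(), the new total is read from `timer`, the previously stored total is subtracted, and the new total is stored. The hypothetical `DeltaClock` below restates that bookkeeping. Because test_time is sampled in train(), it covers every test() call since the previous train(), across all test sets.

```cpp
#include <cassert>

// Hypothetical helper: turns a cumulative timer reading into the time
// elapsed since the previous reading.
struct DeltaClock {
    double total = 0;  // plays the role of total_train_time / total_test_time
    double last = 0;   // plays the role of train_time / test_time

    void update(double cumulative) {
        assert(cumulative >= total);  // a cumulative timer never goes backwards
        last = cumulative - total;
        total = cumulative;
    }
};
```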

Definition at line 88 of file MultiClassAdaBoost.h.

Referenced by declareOptions(), and getSubLearnerTarget().

1.7.4
