PLearn 0.1

#include <VarArray.h>

Public Types | |

| typedef Var * | iterator |

Public Member Functions | |

| VarArray () | |

| VarArray (int n, int n_extra=10) | |

| VarArray (int n, int initial_length, int n_extra) | |

| VarArray (int n, int initial_length, int initial_width, int n_extra) | |

| VarArray (const Array< Var > &va) | |

| VarArray (Array< Var > &va) | |

| VarArray (const VarArray &va) | |

| VarArray (const Var &v) | |

| VarArray (const Var &v1, const Var &v2) | |

| VarArray (Variable *v) | |

| VarArray (Variable *v1, Variable *v2) | |

| VarArray (Variable *v1, Variable *v2, Variable *v3) | |

| void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Deep copy of an array is not the same as for a TVec, because the shallow copy automatically creates a new storage. | |

| operator Var () | |

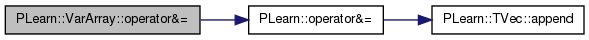

| VarArray & | operator&= (const Var &v) |

| VarArray & | operator&= (const VarArray &va) |

| VarArray | operator& (const Var &v) const |

| VarArray | operator& (const VarArray &va) const |

| int | nelems () const |

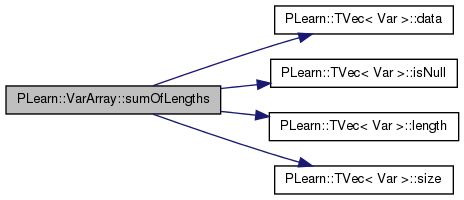

| int | sumOfLengths () const |

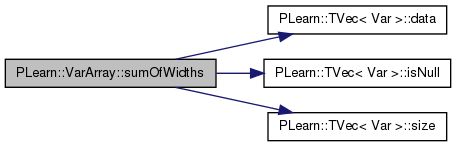

| int | sumOfWidths () const |

| int | maxWidth () const |

| int | maxLength () const |

| VarArray | nonNull () const |

| Returns a VarArray containing all the Vars that are non-null. | |

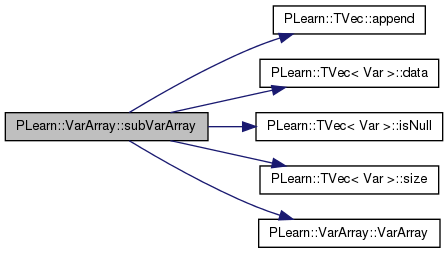

| VarArray & | subVarArray (int start, int len) const |

| void | copyFrom (int start, int len, const VarArray &from) |

| void | copyFrom (int start, const VarArray &from) |

| void | copyFrom (const Vec &datavec) |

| void | copyValuesFrom (const VarArray &from) |

| void | copyTo (const Vec &datavec) const |

| void | accumulateTo (const Vec &datavec) const |

| void | copyGradientFrom (const Vec &datavec) |

| void | copyGradientFrom (const Array< Vec > &datavec) |

| void | accumulateGradientFrom (const Vec &datavec) |

| void | copyGradientTo (const Vec &datavec) |

| void | copyGradientTo (const Array< Vec > &datavec) |

| void | accumulateGradientTo (const Vec &datavec) |

| void | copyTo (real *x, int n) const |

| UNSAFE: x must point to a buffer of at least n real values! | |

| void | accumulateTo (real *x, int n) const |

| void | copyFrom (const real *x, int n) |

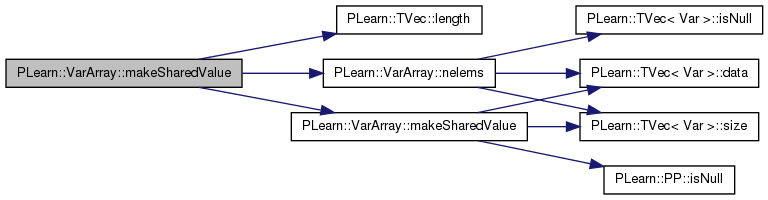

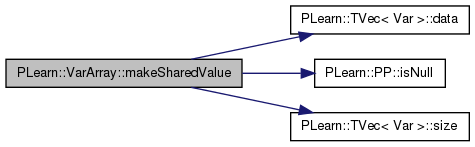

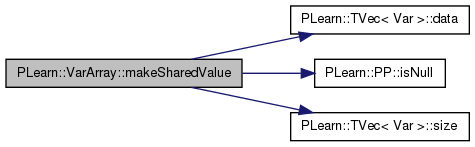

| void | makeSharedValue (real *x, int n) |

| Like copyTo, but also makes each Var's value point into x. | |

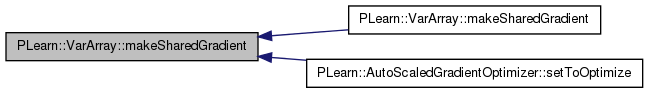

| void | makeSharedGradient (real *x, int n) |

| Like copyGradientTo, but also makes each Var's gradient point into x. | |

| void | makeSharedValue (PP< Storage< real > > storage, int offset_=0) |

| make value and matValue point into this storage | |

| void | makeSharedValue (Vec &v, int offset_=0) |

| void | makeSharedGradient (Vec &v, int offset_=0) |

| void | makeSharedGradient (PP< Storage< real > > storage, int offset_=0) |

| void | copyGradientTo (real *x, int n) |

| void | copyGradientFrom (const real *x, int n) |

| void | accumulateGradientFrom (const real *x, int n) |

| void | accumulateGradientTo (real *x, int n) |

| void | copyMinValueTo (const Vec &minv) |

| void | copyMaxValueTo (const Vec &maxv) |

| void | copyMinValueTo (real *x, int n) |

| void | copyMaxValueTo (real *x, int n) |

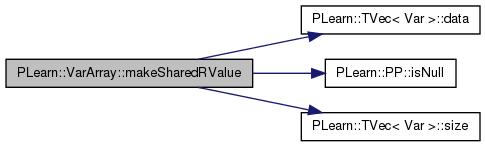

| void | makeSharedRValue (Vec &v, int offset_=0) |

| void | makeSharedRValue (PP< Storage< real > > storage, int offset_=0) |

| void | copyRValueTo (const Vec &datavec) |

| void | copyRValueFrom (const Vec &datavec) |

| void | copyRValueTo (real *x, int n) |

| void | copyRValueFrom (const real *x, int n) |

| void | resizeRValue () |

| void | setMark () const |

| void | clearMark () const |

| void | markPath () const |

| void | buildPath (VarArray &fpath) const |

| void | setDontBpropHere (bool val) |

| void | fprop () |

| void | sizeprop () |

| void | sizefprop () |

| void | bprop () |

| void | bbprop () |

| void | rfprop () |

| void | fbprop () |

| void | sizefbprop () |

| void | fbbprop () |

| void | fillGradient (real value) |

| void | clearGradient () |

| void | clearDiagHessian () |

| VarArray | sources () const |

| VarArray | ancestors () const |

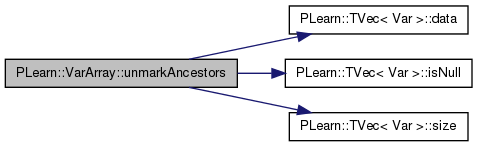

| void | unmarkAncestors () const |

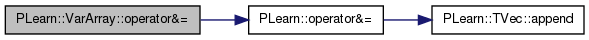

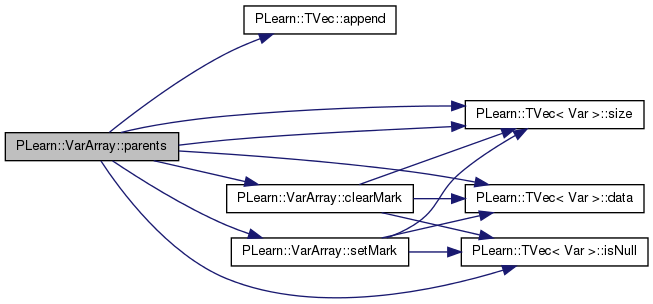

| VarArray | parents () const |

| returns the set of all the direct parents of the Var in this array (previously marked parents are NOT included) | |

| void | symbolicBprop () |

| computes symbolicBprop on a propagation path | |

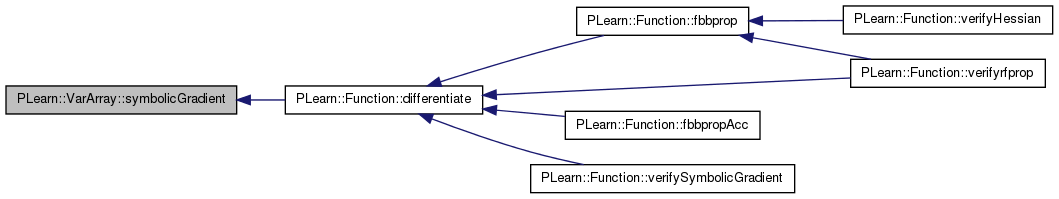

| VarArray | symbolicGradient () |

| returns a VarArray of all the ->g members of the Vars in this array. | |

| void | clearSymbolicGradient () |

| clears the symbolic gradient in all vars of this array | |

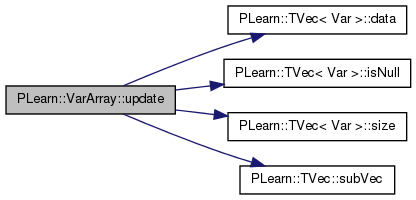

| bool | update (real step_size, Vec direction, real coeff=1.0, real b=0.0) |

| bool | update (Vec step_sizes, Vec direction, real coeff=1.0, real b=0.0) |

| Updates the variables in the VarArray with a separate step size for each, plus an optional scaling coefficient and an optional constant coefficient. step_sizes and direction must have the same length. As with the fixed-step-size update, the step may be scaled down, and the return value indicates whether constraints have been hit. | |

| bool | update (Vec step_sizes, Vec direction, Vec coeff) |

| Same as update(Vec step_sizes, Vec direction, real coeff), except that each variable can have its own coeff. | |

| real | maxUpdate (Vec direction) |

| bool | update (real step_size, bool clear=false) |

| void | updateWithWeightDecay (real step_size, real weight_decay, bool L1=false, bool clear=true) |

| For each element of the array: if L1 is set, value += learning_rate*gradient, then the magnitude of value is decreased by learning_rate*weight_decay, provided this does not make value change sign; otherwise (L2), value += learning_rate*(gradient - weight_decay*value). Finally, if clear is set, gradient is reset to 0. | |

| void | updateAndClear () |

| value += step_size*gradient; gradient.clear(); | |

| bool | update (Vec new_value) |

| void | read (istream &in) |

| void | write (ostream &out) const |

| void | printNames () const |

| void | printInfo (bool print_gradient=false) |

| Var | operator[] (Var index) |

| Var & | operator[] (int i) |

| const Var & | operator[] (int i) const |
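The per-element rule listed above for updateWithWeightDecay can be sketched on plain vectors. This is only an illustration under stated assumptions, not PLearn's implementation: `update_with_weight_decay`, `values`, and `grads` are hypothetical names, `learning_rate` in the description is assumed to be the `step_size` argument, and using a magnitude for the L1 shrink amount is a choice made for this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the documented per-element rule; plain vectors
// replace PLearn's Var storage, and step_size plays the role of learning_rate.
void update_with_weight_decay(std::vector<double>& values,
                              std::vector<double>& grads,
                              double step_size, double weight_decay,
                              bool L1 = false, bool clear = true)
{
    assert(values.size() == grads.size());
    for (std::size_t i = 0; i < values.size(); ++i) {
        if (L1) {
            values[i] += step_size * grads[i];
            // Assumption of this sketch: the shrink amount is a magnitude.
            const double shrink = std::fabs(step_size * weight_decay);
            // Shrink |value| only when that cannot flip its sign.
            if (values[i] > shrink)
                values[i] -= shrink;
            else if (values[i] < -shrink)
                values[i] += shrink;
        } else {
            // L2: the decay term is proportional to the value itself.
            values[i] += step_size * (grads[i] - weight_decay * values[i]);
        }
        if (clear)
            grads[i] = 0.0;
    }
}
```

With L1 decay, small values are left untouched rather than pushed across zero, whereas L2 decay always pulls a value toward zero in proportion to its size.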

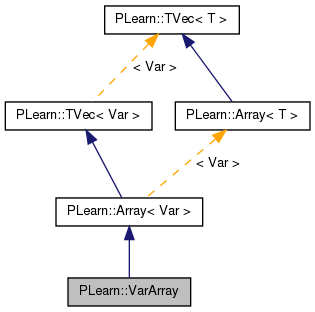

Definition at line 56 of file VarArray.h.

| typedef Var* PLearn::VarArray::iterator |

Reimplemented from PLearn::Array< Var >.

Definition at line 60 of file VarArray.h.

| PLearn::VarArray::VarArray | ( | ) |

Definition at line 52 of file VarArray.cc.

Referenced by subVarArray().

: Array<Var>(0,10)

{}

Definition at line 56 of file VarArray.cc.

: Array<Var>(n,n_extra) {}

Definition at line 66 of file VarArray.h.

: Array<Var>(va) {}

Definition at line 67 of file VarArray.h.

: Array<Var>(va) {}

| PLearn::VarArray::VarArray | ( | const VarArray & | va | ) | [inline] |

Definition at line 68 of file VarArray.h.

: Array<Var>(va) {}

| PLearn::VarArray::VarArray | ( | const Var & | v | ) |

Definition at line 76 of file VarArray.cc.

: Array<Var>(1,10)

{ (*this)[0] = v; }

Definition at line 80 of file VarArray.cc.

: Array<Var>(2,10)

{

(*this)[0] = v1;

(*this)[1] = v2;

}

| PLearn::VarArray::VarArray | ( | Variable * | v | ) |

Definition at line 87 of file VarArray.cc.

: Array<Var>(1,10)

{ (*this)[0] = Var(v); }

Definition at line 91 of file VarArray.cc.

: Array<Var>(2,10)

{

(*this)[0] = Var(v1);

(*this)[1] = Var(v2);

}

Definition at line 98 of file VarArray.cc.

:

Array<Var>(3)

{

(*this)[0] = Var(v1);

(*this)[1] = Var(v2);

(*this)[2] = Var(v3);

}

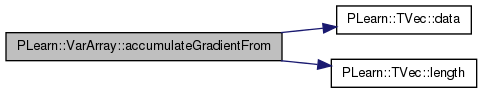

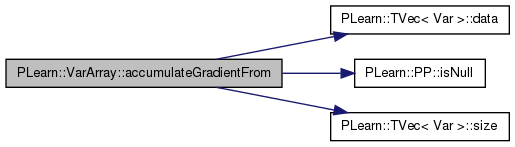

| void PLearn::VarArray::accumulateGradientFrom | ( | const Vec & | datavec | ) |

Definition at line 359 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

{

accumulateGradientFrom(datavec.data(),datavec.length());

}

Definition at line 386 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->gradientdata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::accumulateGradientFrom total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

value[j] += *data++;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::accumulateGradientFrom total length of all Vars in the array differs from length of argument");

}
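The flat-buffer convention used by the listing above (consume the buffer block by block, fail if the total length does not match) can be sketched without PLearn. Everything here is a hypothetical stand-in: each inner vector plays the role of one Var's gradient, and `std::length_error` replaces PLERROR.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in: each inner vector models one Var's gradient buffer.
// Adds consecutive slices of `data` to the blocks, in order.
void accumulate_gradient_from(std::vector<std::vector<double>>& grads,
                              const double* data, int n)
{
    assert(data != nullptr || n == 0);
    int ncopied = 0;  // number of elements consumed so far
    for (std::vector<double>& g : grads) {
        const int vlength = static_cast<int>(g.size());
        if (ncopied + vlength > n)
            throw std::length_error("total length of all blocks exceeds n");
        for (int j = 0; j < vlength; ++j)
            g[j] += *data++;
        ncopied += vlength;
    }
    if (ncopied != n)
        throw std::length_error("total length of all blocks differs from n");
}
```

As in the listing, the length check happens while the buffer is being consumed, so blocks visited before a mismatch is detected have already been modified when the error is raised.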

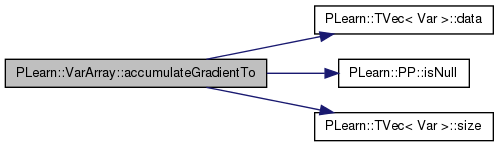

Definition at line 440 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->gradientdata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::accumulateGradientTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ += value[j];

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::accumulateGradientTo total length of all Vars in the array differs from length of argument");

}

| void PLearn::VarArray::accumulateGradientTo | ( | const Vec & | datavec | ) |

Definition at line 435 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

{

accumulateGradientTo(datavec.data(), datavec.length());

}

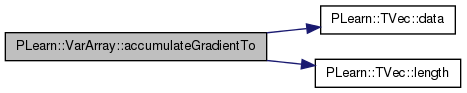

| void PLearn::VarArray::accumulateTo | ( | const Vec & | datavec | ) | const |

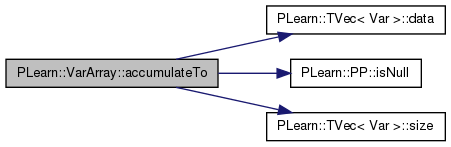

Definition at line 263 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

Referenced by PLearn::Function::fbbpropAcc().

{

accumulateTo(datavec.data(), datavec.length());

}

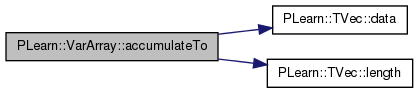

Definition at line 290 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->valuedata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::accumulateTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ += value[j];

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::accumulateTo total length of all Vars in the array differs from length of argument");

}

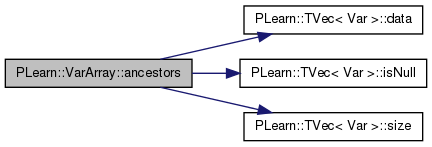

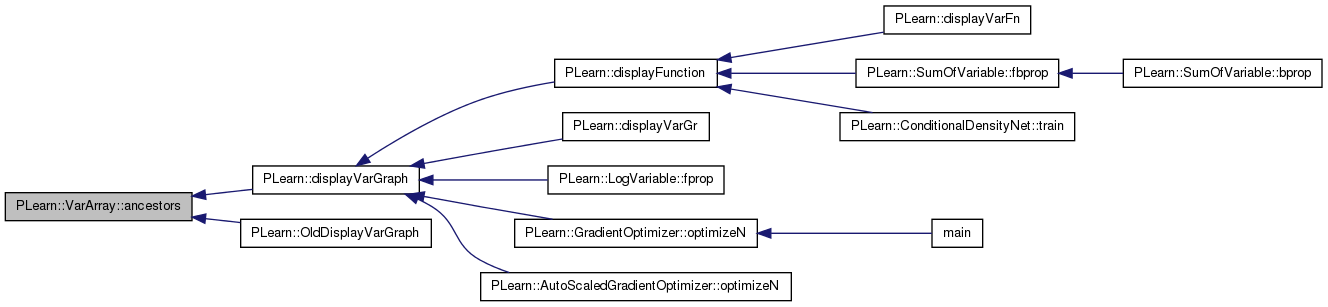

| VarArray PLearn::VarArray::ancestors | ( | ) | const |

Definition at line 1031 of file VarArray.cc.

References a, PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::displayVarGraph(), and PLearn::OldDisplayVarGraph().

{

VarArray a;

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

a &= array[i]->ancestors();

return a;

}

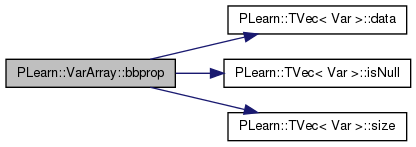

| void PLearn::VarArray::bbprop | ( | ) |

Definition at line 759 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=size()-1; i>=0; i--)

if (!array[i].isNull())

array[i]->bbprop();

}

| void PLearn::VarArray::bprop | ( | ) |

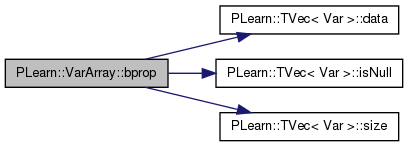

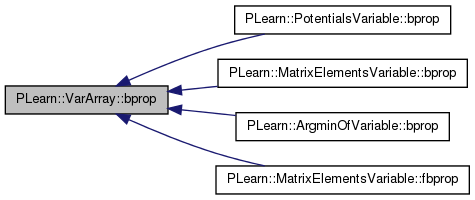

Definition at line 751 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::PotentialsVariable::bprop(), PLearn::MatrixElementsVariable::bprop(), PLearn::ArgminOfVariable::bprop(), and PLearn::MatrixElementsVariable::fbprop().

{

iterator array = data();

for(int i=size()-1; i>=0; i--)

if (!array[i].isNull())

array[i]->bprop();

}

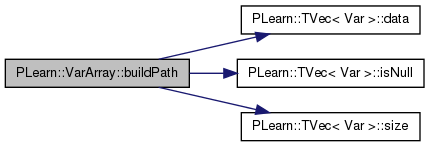

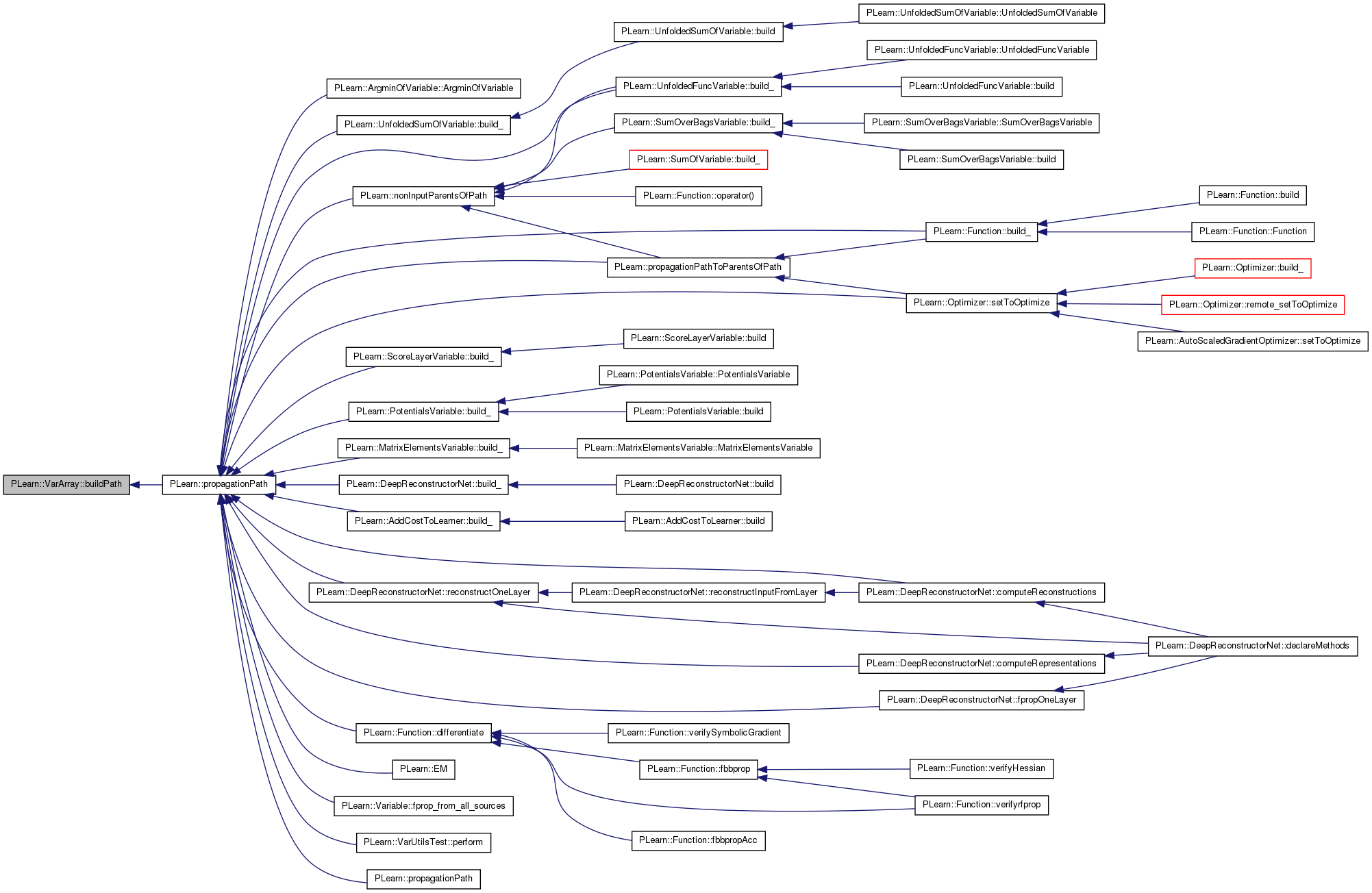

| void PLearn::VarArray::buildPath | ( | VarArray & | fpath | ) | const |

Definition at line 717 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

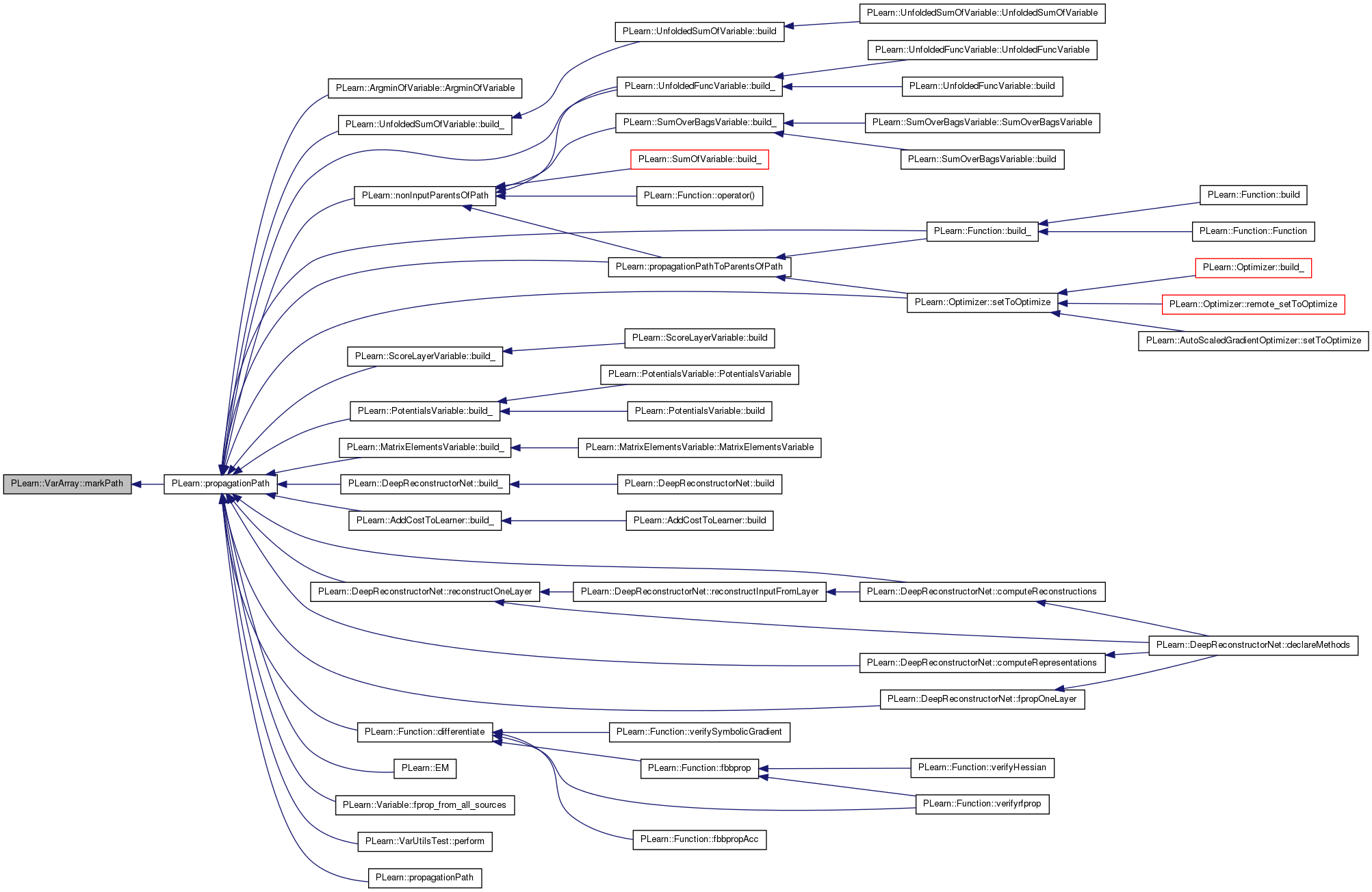

Referenced by PLearn::propagationPath().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->buildPath(path);

}

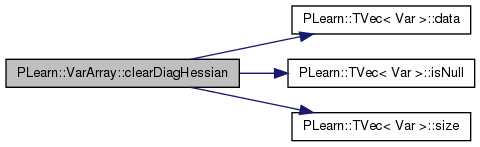

| void PLearn::VarArray::clearDiagHessian | ( | ) |

Definition at line 894 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->clearDiagHessian();

}

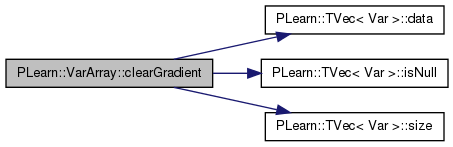

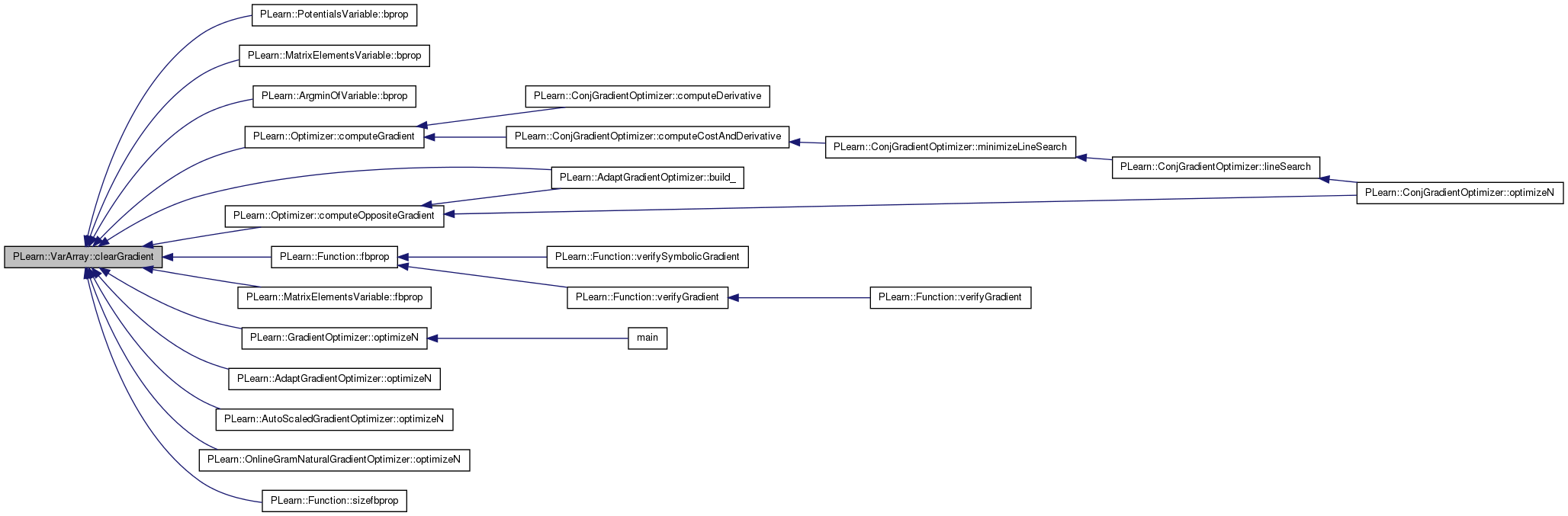

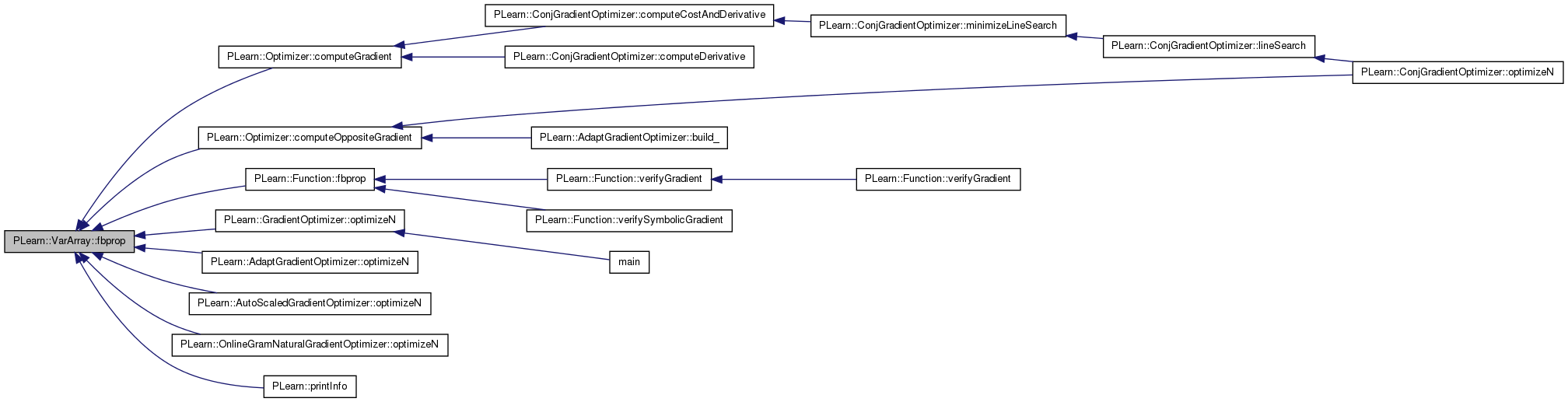

| void PLearn::VarArray::clearGradient | ( | ) |

Definition at line 886 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::PotentialsVariable::bprop(), PLearn::MatrixElementsVariable::bprop(), PLearn::ArgminOfVariable::bprop(), PLearn::AdaptGradientOptimizer::build_(), PLearn::Optimizer::computeGradient(), PLearn::Optimizer::computeOppositeGradient(), PLearn::Function::fbprop(), PLearn::MatrixElementsVariable::fbprop(), PLearn::GradientOptimizer::optimizeN(), PLearn::AdaptGradientOptimizer::optimizeN(), PLearn::AutoScaledGradientOptimizer::optimizeN(), PLearn::OnlineGramNaturalGradientOptimizer::optimizeN(), and PLearn::Function::sizefbprop().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->clearGradient();

}

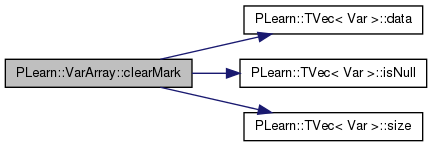

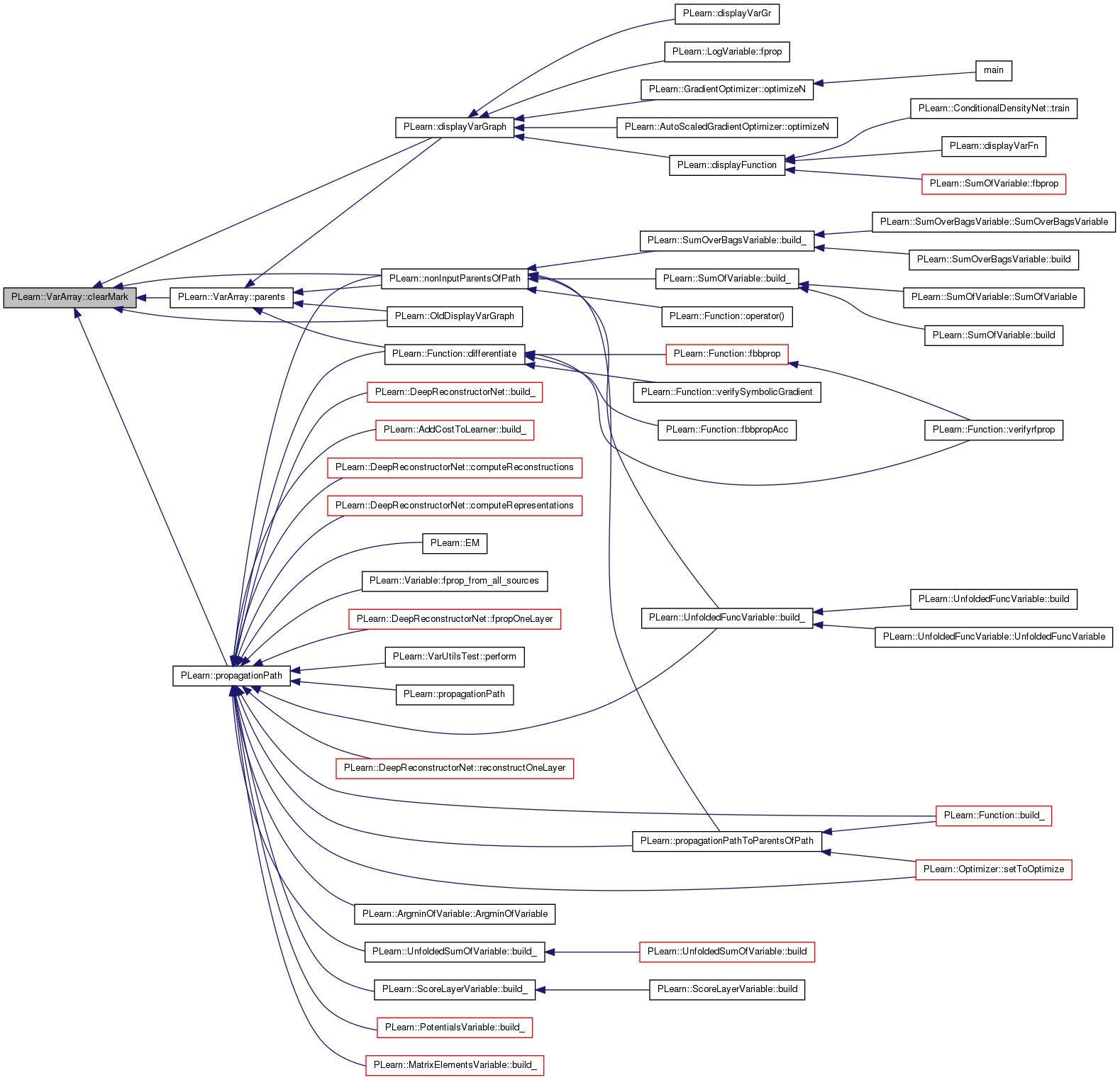

| void PLearn::VarArray::clearMark | ( | ) | const |

Definition at line 699 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::displayVarGraph(), PLearn::nonInputParentsOfPath(), PLearn::OldDisplayVarGraph(), parents(), and PLearn::propagationPath().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->clearMark();

}

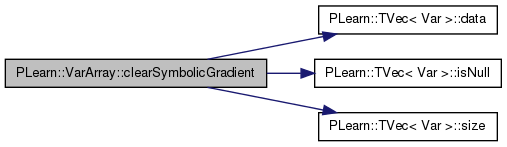

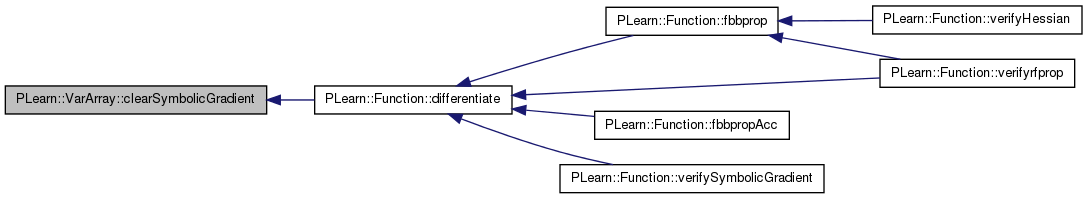

| void PLearn::VarArray::clearSymbolicGradient | ( | ) |

clears the symbolic gradient in all vars of this array

Definition at line 870 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::Function::differentiate().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->clearSymbolicGradient();

}

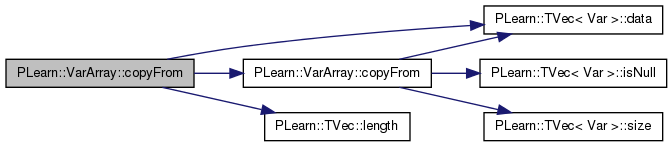

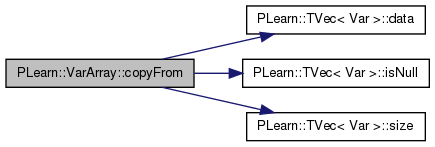

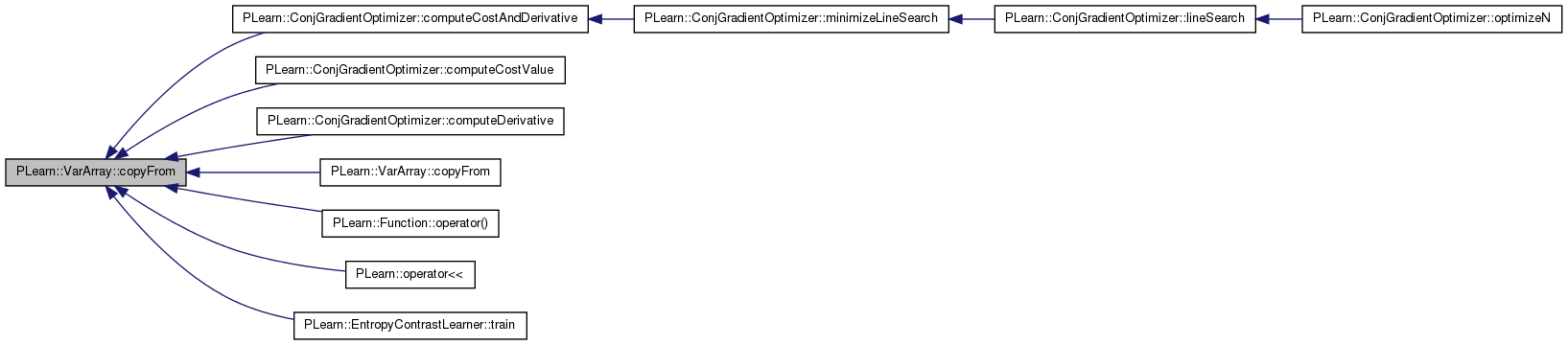

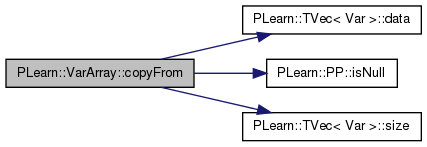

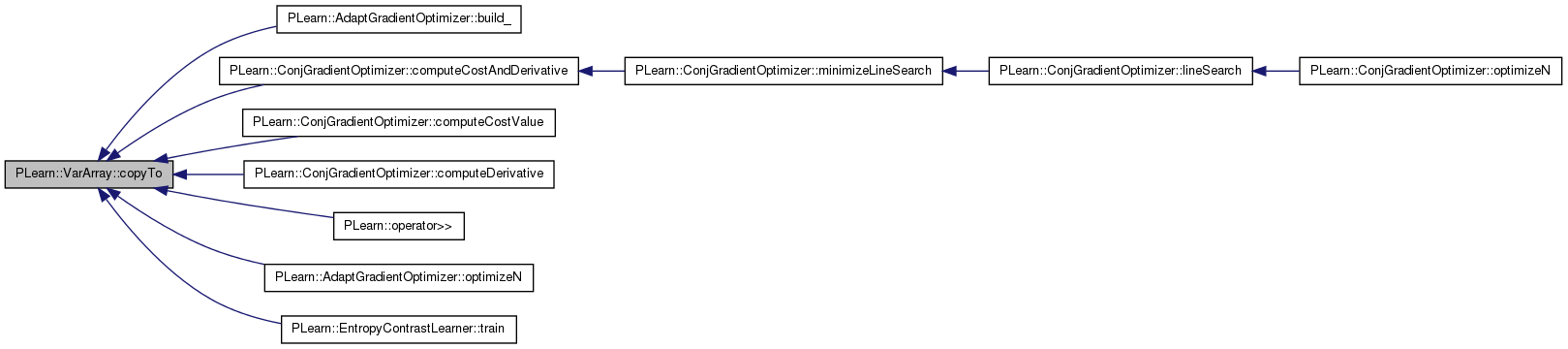

| void PLearn::VarArray::copyFrom | ( | const Vec & | datavec | ) |

Definition at line 140 of file VarArray.cc.

References copyFrom(), PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

{

copyFrom(datavec.data(),datavec.length());

}

Definition at line 681 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLERROR, and PLearn::TVec< Var >::size().

Referenced by PLearn::ConjGradientOptimizer::computeCostAndDerivative(), PLearn::ConjGradientOptimizer::computeCostValue(), PLearn::ConjGradientOptimizer::computeDerivative(), copyFrom(), PLearn::Function::operator()(), PLearn::operator<<(), and PLearn::EntropyContrastLearner::train().

{

int max_position=start+len-1;

if (max_position>=size())

PLERROR("VarArray is too short");

iterator array = data();

for(int i=0; i<len; i++)

if (!from[i].isNull())

array[i+start]=from[i];

}

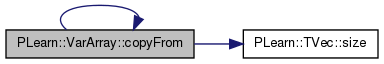

Definition at line 99 of file VarArray.h.

References copyFrom(), and PLearn::TVec< T >::size().

Referenced by copyFrom().

{copyFrom(start,from.size(),from);}

Definition at line 145 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->valuedata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyFrom total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

value[j] = *data++;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyFrom total length of all Vars in the array (%d) differs from length of argument (%d)",

ncopied,n);

}

Definition at line 364 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->gradientdata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyGradientFrom total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

value[j] = *data++;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyGradientFrom total length of all Vars in the array differs from length of argument");

}

Definition at line 317 of file VarArray.cc.

References PLearn::TVec< T >::data(), PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLearn::TVec< T >::length(), n, PLERROR, PLearn::TVec< T >::size(), and PLearn::TVec< Var >::size().

{

iterator array = this->data();

if (size()!=datavec.size())

PLERROR("VarArray::copyGradientFrom has argument of size %d, expected %d",datavec.size(),size());

for(int i=0; i<size(); i++)

{

Var& v = array[i];

real* data = datavec[i].data();

int n = datavec[i].length();

if (!v.isNull())

{

real* value = v->gradientdata;

int vlength = v->nelems();

if(vlength!=n)

PLERROR("IN VarArray::copyGradientFrom length of %d-th Var in the array differs from length of the corresponding argument",i);

for(int j=0; j<vlength; j++)

value[j] = *data++;

}

}

}

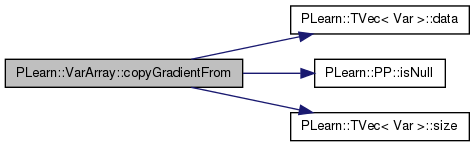

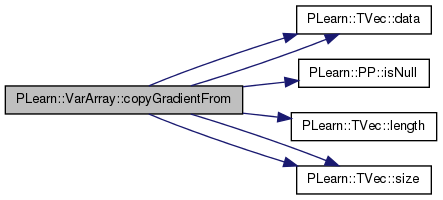

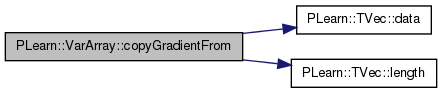

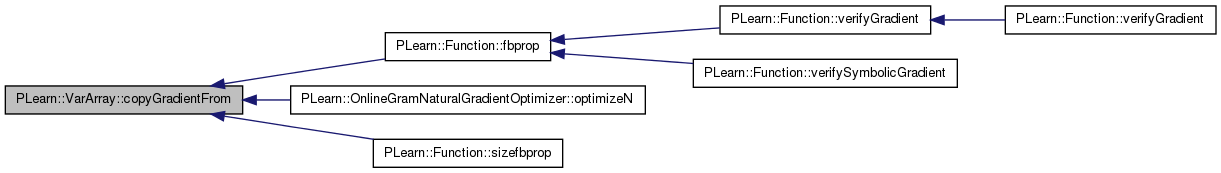

| void PLearn::VarArray::copyGradientFrom | ( | const Vec & | datavec | ) |

Definition at line 312 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

Referenced by PLearn::Function::fbprop(), PLearn::OnlineGramNaturalGradientOptimizer::optimizeN(), and PLearn::Function::sizefbprop().

{

copyGradientFrom(datavec.data(),datavec.length());

}

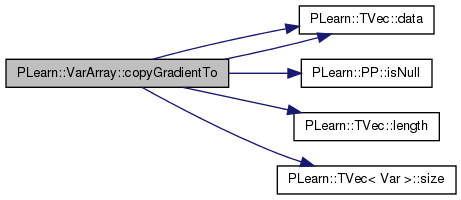

Definition at line 413 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->gradientdata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyGradientTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ = value[j];

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyGradientTo total length of all Vars in the array differs from length of argument");

}

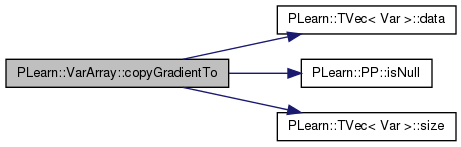

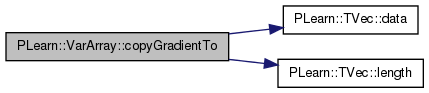

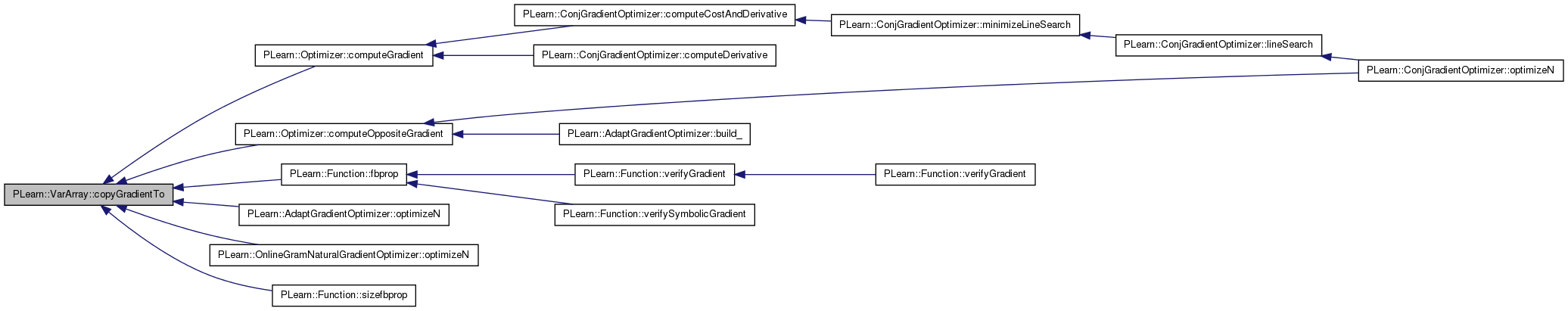

| void PLearn::VarArray::copyGradientTo | ( | const Vec & | datavec | ) |

Definition at line 408 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

Referenced by PLearn::Optimizer::computeGradient(), PLearn::Optimizer::computeOppositeGradient(), PLearn::Function::fbprop(), PLearn::AdaptGradientOptimizer::optimizeN(), PLearn::OnlineGramNaturalGradientOptimizer::optimizeN(), and PLearn::Function::sizefbprop().

{

copyGradientTo(datavec.data(), datavec.length());

}

Definition at line 339 of file VarArray.cc.

References PLearn::TVec< T >::data(), PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLearn::TVec< T >::length(), n, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

for(int i=0; i<size(); i++)

{

Var& v = array[i];

real* data = datavec[i].data();

int n = datavec[i].length();

if (!v.isNull())

{

real* value = v->gradientdata;

int vlength = v->nelems();

if(vlength!=n)

PLERROR("IN VarArray::copyGradientTo length of %d-th Var in the array differs from length of the corresponding argument",i);

for(int j=0; j<vlength; j++)

*data++ = value[j];

}

}

}

| void PLearn::VarArray::copyMaxValueTo | ( | const Vec & | maxv | ) |

Definition at line 489 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

{

copyMaxValueTo(datavec.data(), datavec.length());

}

Definition at line 494 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real value = v->max_value;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyMaxValueTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ = value;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyMaxValueTo total length of all Vars in the array differs from length of argument");

}

| void PLearn::VarArray::copyMinValueTo | ( | const Vec & | minv | ) |

Definition at line 462 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

{

copyMinValueTo(datavec.data(), datavec.length());

}

Definition at line 467 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real value = v->min_value;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyMinValueTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ = value;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyMinValueTo total length of all Vars in the array differs from length of argument");

}
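In the listing above, each Var contributes a single scalar bound that is repeated once per element. A minimal sketch of that broadcast follows. `BoundedBlock` and `flatten_min_values` are hypothetical names, and unlike the listing, which fills a caller-supplied buffer and checks its length, the sketch returns a freshly built vector.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a Var: only its element count and scalar bound.
struct BoundedBlock {
    std::size_t nelems;  // number of elements in the block
    double min_value;    // one lower bound shared by every element
};

// Expand the per-block scalar bounds into one per-element vector.
std::vector<double> flatten_min_values(const std::vector<BoundedBlock>& blocks)
{
    std::vector<double> out;
    for (const BoundedBlock& b : blocks)
        out.insert(out.end(), b.nelems, b.min_value);  // repeat the bound nelems times
    return out;
}
```

The resulting vector has the same layout as the flat value vector produced by copyTo, so bounds and values can be compared index by index.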

| void PLearn::VarArray::copyRValueFrom | ( | const Vec & | datavec | ) |

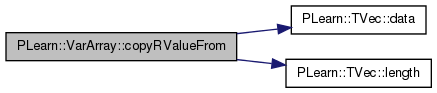

Definition at line 585 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

Referenced by PLearn::Function::rfprop().

{

copyRValueFrom(datavec.data(),datavec.length());

}

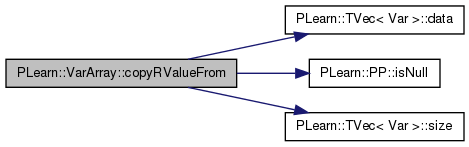

Definition at line 557 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

v->resizeRValue();

real* value = v->rvaluedata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyRValueFrom total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

value[j] = *data++;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyRValueFrom total length of all Vars in the array differs from length of argument");

}

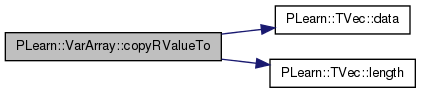

| void PLearn::VarArray::copyRValueTo | ( | const Vec & | datavec | ) |

Definition at line 580 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

Referenced by PLearn::Function::rfprop().

{

copyRValueTo(datavec.data(), datavec.length());

}

Definition at line 533 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->rvaluedata;

if (!value)

PLERROR("VarArray::copyRValueTo: empty Var in the array (number %d)",i);

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyRValueTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ = value[j];

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyRValueTo total length of all Vars in the array differs from length of argument");

}

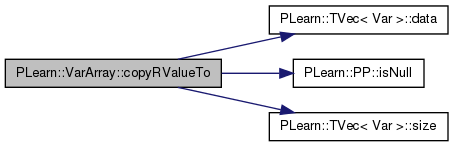

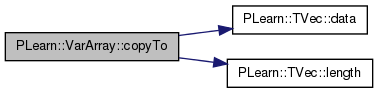

| void PLearn::VarArray::copyTo | ( | const Vec & | datavec | ) | const |

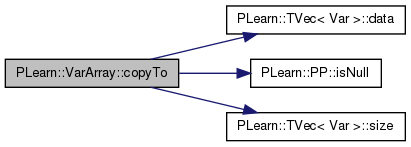

Definition at line 258 of file VarArray.cc.

References PLearn::TVec< T >::data(), and PLearn::TVec< T >::length().

Referenced by PLearn::AdaptGradientOptimizer::build_(), PLearn::ConjGradientOptimizer::computeCostAndDerivative(), PLearn::ConjGradientOptimizer::computeCostValue(), PLearn::ConjGradientOptimizer::computeDerivative(), PLearn::operator>>(), PLearn::AdaptGradientOptimizer::optimizeN(), and PLearn::EntropyContrastLearner::train().

{

copyTo(datavec.data(), datavec.length());

}

UNSAFE: x must point to a buffer of at least n real values!

Definition at line 268 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), j, PLERROR, and PLearn::TVec< Var >::size().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

real* value = v->valuedata;

int vlength = v->nelems();

if(ncopied+vlength>n)

PLERROR("IN VarArray::copyTo total length of all Vars in the array exceeds length of argument");

for(int j=0; j<vlength; j++)

*data++ = value[j];

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::copyTo total length of all Vars in the array differs from length of argument");

}
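The gather performed by the listing above, including the way null entries are skipped, can be sketched as follows. The names are hypothetical: a pointer to a vector stands in for a Var, a null pointer stands in for a null Var, and `std::length_error` replaces PLERROR.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in: each pointer models a Var; nullptr models a null Var.
// Writes the values of all non-null entries into x, back to back.
void copy_to(const std::vector<const std::vector<double>*>& vars,
             double* x, int n)
{
    assert(x != nullptr || n == 0);
    int ncopied = 0;  // number of elements written so far
    for (const std::vector<double>* v : vars) {
        if (v == nullptr)
            continue;  // null entries contribute nothing
        const int vlength = static_cast<int>(v->size());
        if (ncopied + vlength > n)
            throw std::length_error("total length of all entries exceeds n");
        for (int j = 0; j < vlength; ++j)
            *x++ = (*v)[j];
        ncopied += vlength;
    }
    if (ncopied != n)
        throw std::length_error("total length of all entries differs from n");
}
```

Because the final check requires an exact match, the caller is expected to size the buffer to the total element count of the non-null entries, which is what nelems() appears to report for a VarArray.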

| void PLearn::VarArray::copyValuesFrom | ( | const VarArray & | from | ) |

Definition at line 128 of file VarArray.cc.

References i, PLERROR, PLearn::TVec< T >::size(), and PLearn::TVec< Var >::size().

{

if (size()!=from.size())

PLERROR("VarArray::copyValuesFrom(a): a does not have the same size (%d) as this (%d)\n",

from.size(),size());

for (int i=0;i<size();i++)

(*this)[i]->value << from[i]->value;

}

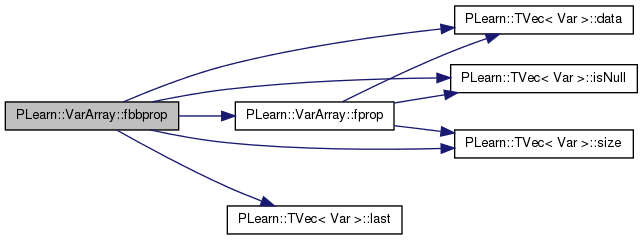

| void PLearn::VarArray::fbbprop | ( | ) |

Definition at line 832 of file VarArray.cc.

References PLearn::TVec< Var >::data(), fprop(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::last(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size()-1; i++)

if (!array[i].isNull())

array[i]->fprop();

if (size() > 0) {

last()->fbbprop();

for(int i=size()-2; i>=0; i--)

if (!array[i].isNull())

{

array[i]->bprop();

array[i]->bbprop();

}

}

}

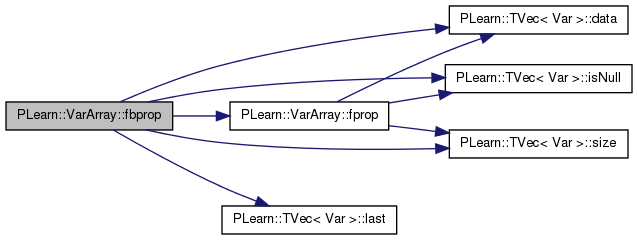

| void PLearn::VarArray::fbprop | ( | ) |

Definition at line 777 of file VarArray.cc.

References PLearn::TVec< Var >::data(), fprop(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::last(), PLERROR, and PLearn::TVec< Var >::size().

Referenced by PLearn::Optimizer::computeGradient(), PLearn::Optimizer::computeOppositeGradient(), PLearn::Function::fbprop(), PLearn::GradientOptimizer::optimizeN(), PLearn::AdaptGradientOptimizer::optimizeN(), PLearn::AutoScaledGradientOptimizer::optimizeN(), PLearn::OnlineGramNaturalGradientOptimizer::optimizeN(), and PLearn::printInfo().

{

iterator array = data();

for(int i=0; i<size()-1; i++)

if (!array[i].isNull())

array[i]->fprop();

if (size() > 0) {

last()->fbprop();

#ifdef BOUNDCHECK

if (last()->gradient.hasMissing())

PLERROR("VarArray::fbprop has NaN gradient");

#endif

for(int i=size()-2; i>=0; i--)

if (!array[i].isNull())

{

#ifdef BOUNDCHECK

if (array[i]->gradient.hasMissing())

PLERROR("VarArray::fbprop has NaN gradient");

#endif

array[i]->bprop();

}

}

}
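The visiting order in the fbprop listing above (forward over all but the last element, a combined step on the last, then backward over the rest in reverse) can be made visible with a logging stand-in. `Node` and `fbprop_path` are hypothetical, null checks and the BOUNDCHECK tests are omitted, and the assumption that a single element's fbprop amounts to fprop followed by bprop is not confirmed by this page.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a Var: it only logs which pass visited it.
struct Node {
    std::string name;
    std::vector<std::string>* log;
    void fprop()  { log->push_back("f:" + name); }
    void bprop()  { log->push_back("b:" + name); }
    void fbprop() { fprop(); bprop(); }  // assumed default behaviour
};

// Mirrors the traversal order of the listing on a propagation path.
void fbprop_path(std::vector<Node>& path)
{
    if (path.empty())
        return;
    const std::size_t last = path.size() - 1;
    for (std::size_t i = 0; i < last; ++i)
        path[i].fprop();                 // forward pass, first to last-1
    path[last].fbprop();                 // combined step on the last element
    for (std::size_t i = last; i-- > 0; )
        path[i].bprop();                 // backward pass, last-1 down to first
}
```

For a path a, b, c the log reads f:a, f:b, f:c, b:c, b:b, b:a, which is the order needed for gradients to flow from the output back to the inputs.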

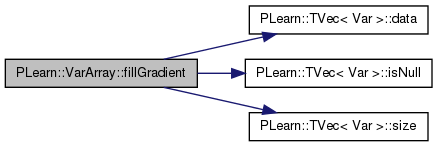

| void PLearn::VarArray::fillGradient | ( | real | value | ) |

Definition at line 878 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->fillGradient(value);

}

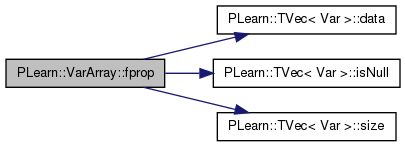

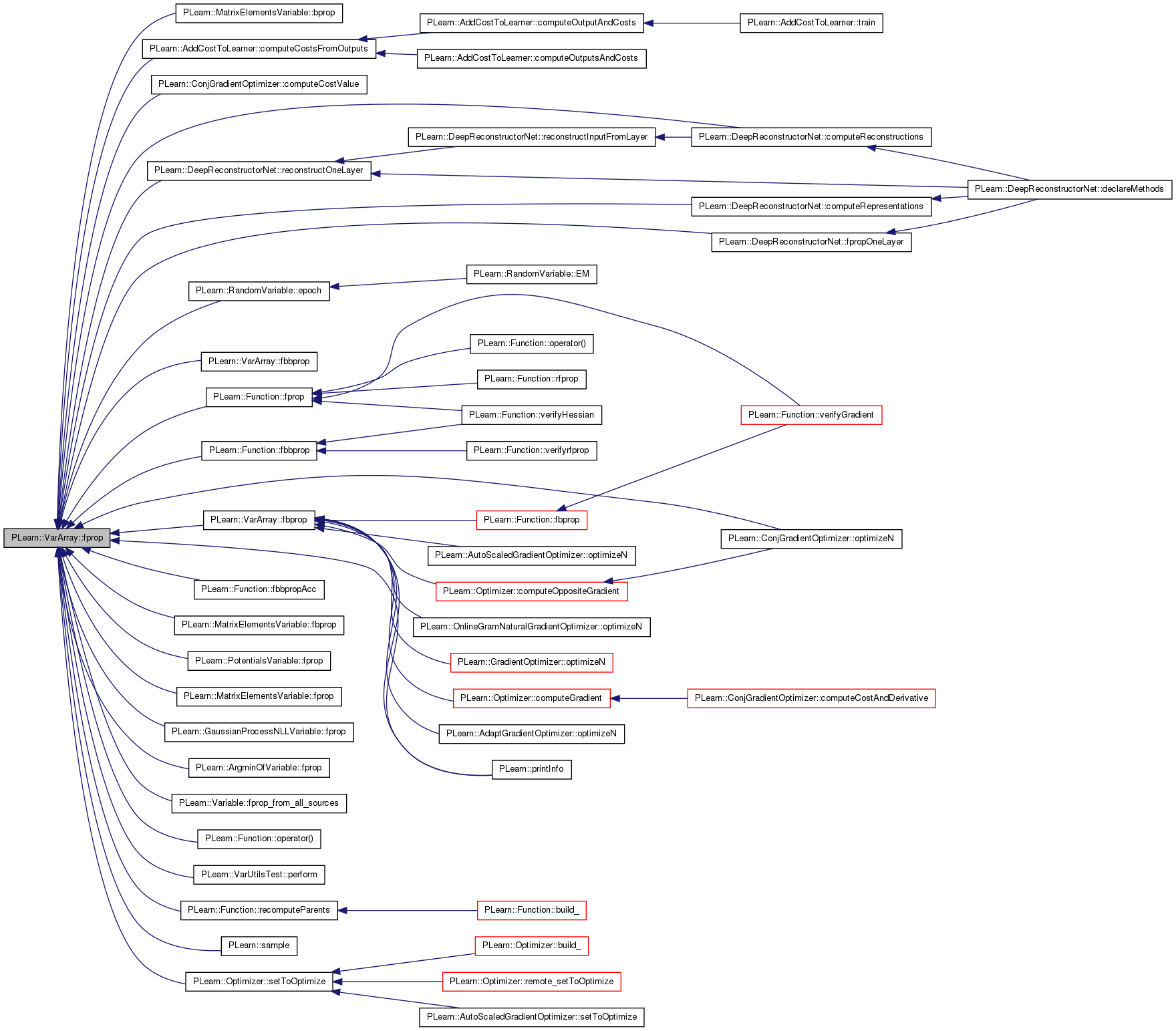

| void PLearn::VarArray::fprop | ( | ) |

Definition at line 725 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::MatrixElementsVariable::bprop(), PLearn::AddCostToLearner::computeCostsFromOutputs(), PLearn::ConjGradientOptimizer::computeCostValue(), PLearn::DeepReconstructorNet::computeReconstructions(), PLearn::DeepReconstructorNet::computeRepresentations(), PLearn::RandomVariable::epoch(), fbbprop(), PLearn::Function::fbbprop(), PLearn::Function::fbbpropAcc(), fbprop(), PLearn::MatrixElementsVariable::fbprop(), PLearn::PotentialsVariable::fprop(), PLearn::MatrixElementsVariable::fprop(), PLearn::GaussianProcessNLLVariable::fprop(), PLearn::Function::fprop(), PLearn::ArgminOfVariable::fprop(), PLearn::Variable::fprop_from_all_sources(), PLearn::DeepReconstructorNet::fpropOneLayer(), PLearn::Function::operator()(), PLearn::ConjGradientOptimizer::optimizeN(), PLearn::VarUtilsTest::perform(), PLearn::printInfo(), PLearn::Function::recomputeParents(), PLearn::DeepReconstructorNet::reconstructOneLayer(), PLearn::sample(), and PLearn::Optimizer::setToOptimize().

{

iterator array = data();

for(int i=0; i<size(); i++)

{

if (!array[i].isNull())

array[i]->fprop();

}

}

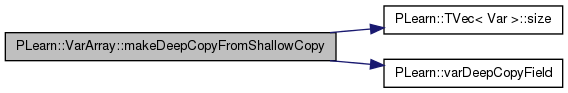

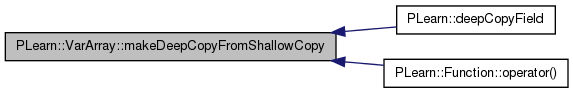

| void PLearn::VarArray::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) |

Deep copy of an array is not the same as for a TVec, because the shallow copy automatically creates a new storage.

Reimplemented from PLearn::Array< Var >.

Definition at line 119 of file VarArray.cc.

References i, PLearn::TVec< Var >::size(), and PLearn::varDeepCopyField().

Referenced by PLearn::deepCopyField(), and PLearn::Function::operator()().

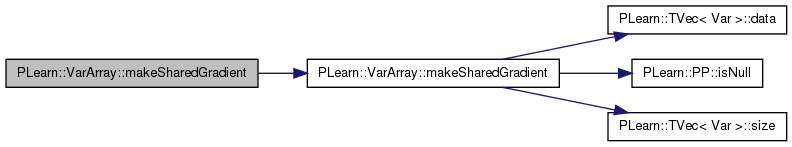

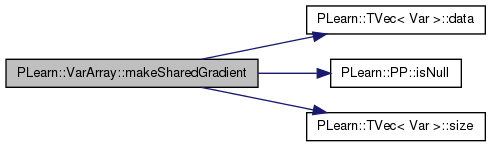

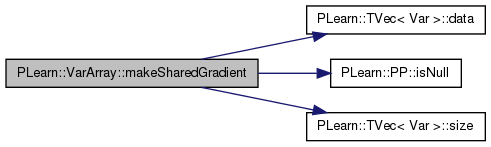

Definition at line 204 of file VarArray.cc.

References makeSharedGradient(), PLearn::TVec< T >::offset_, and PLearn::TVec< T >::storage.

{

makeSharedGradient( v.storage, v.offset_+offset_);

}

Definition at line 214 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), PLearn::TVec< Var >::size(), and PLearn::TVec< Var >::storage.

{

iterator array = data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

int vlength = v->nelems();

v->makeSharedGradient(storage,offset_+ncopied);

ncopied += vlength;

}

}

}
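The offset bookkeeping in the listing above, where each Var is pointed at its own slice of one shared storage, can be sketched with plain pointers. `View` and `bind_views_to_storage` are hypothetical names. The bounds check is an addition of this sketch, and the sketch only rebinds pointers; whether PLearn also copies existing contents into the storage is not shown on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a Var's gradient: a pointer plus a length.
struct View {
    double* data;
    std::size_t nelems;
};

// Point each view at consecutive slices of `storage`, starting at `offset`.
// Returns the total number of elements assigned.
std::size_t bind_views_to_storage(std::vector<View>& views,
                                  std::vector<double>& storage,
                                  std::size_t offset = 0)
{
    std::size_t ncopied = 0;  // elements assigned so far
    for (View& v : views) {
        // Added safety check (not in the listing): stay inside the storage.
        if (offset + ncopied + v.nelems > storage.size())
            throw std::length_error("storage too small for all views");
        v.data = storage.data() + offset + ncopied;
        ncopied += v.nelems;
    }
    return ncopied;
}
```

Once the views are bound, an optimizer can treat all parameters or gradients as one contiguous vector while each variable keeps reading and writing through its own slice.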

Like copyGradientTo, but also makes each Var's gradient point into x.

Definition at line 238 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), PLERROR, and PLearn::TVec< Var >::size().

Referenced by makeSharedGradient(), and PLearn::AutoScaledGradientOptimizer::setToOptimize().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

int vlength = v->nelems();

v->makeSharedGradient(data,vlength);

data += vlength;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::makeSharedGradient total length of all Vars in the array (%d) differs from length of argument (%d)",

ncopied,n);

}

Definition at line 209 of file VarArray.cc.

References PLearn::TVec< T >::offset_, and PLearn::TVec< T >::storage.

{

makeSharedRValue( v.storage, v.offset_+offset_);

}

Definition at line 516 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), PLearn::TVec< Var >::size(), and PLearn::TVec< Var >::storage.

{

iterator array = data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

v->resizeRValue();

int vlength = v->nelems();

v->makeSharedRValue(storage,offset_+ncopied);

ncopied += vlength;

}

}

}

Definition at line 230 of file VarArray.cc.

References PLearn::TVec< T >::length(), makeSharedValue(), nelems(), PLearn::TVec< Var >::offset_, PLERROR, and PLearn::TVec< T >::storage.

{

if (v.length() < nelems()+offset_)

PLERROR("VarArray::makeSharedValue(Vec,int): vector too short (%d < %d + %d)",

v.length(),nelems(),offset_);

makeSharedValue(v.storage,offset_);

}

Like copyTo, but also makes each Var's value point into x.

Definition at line 168 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), PLERROR, and PLearn::TVec< Var >::size().

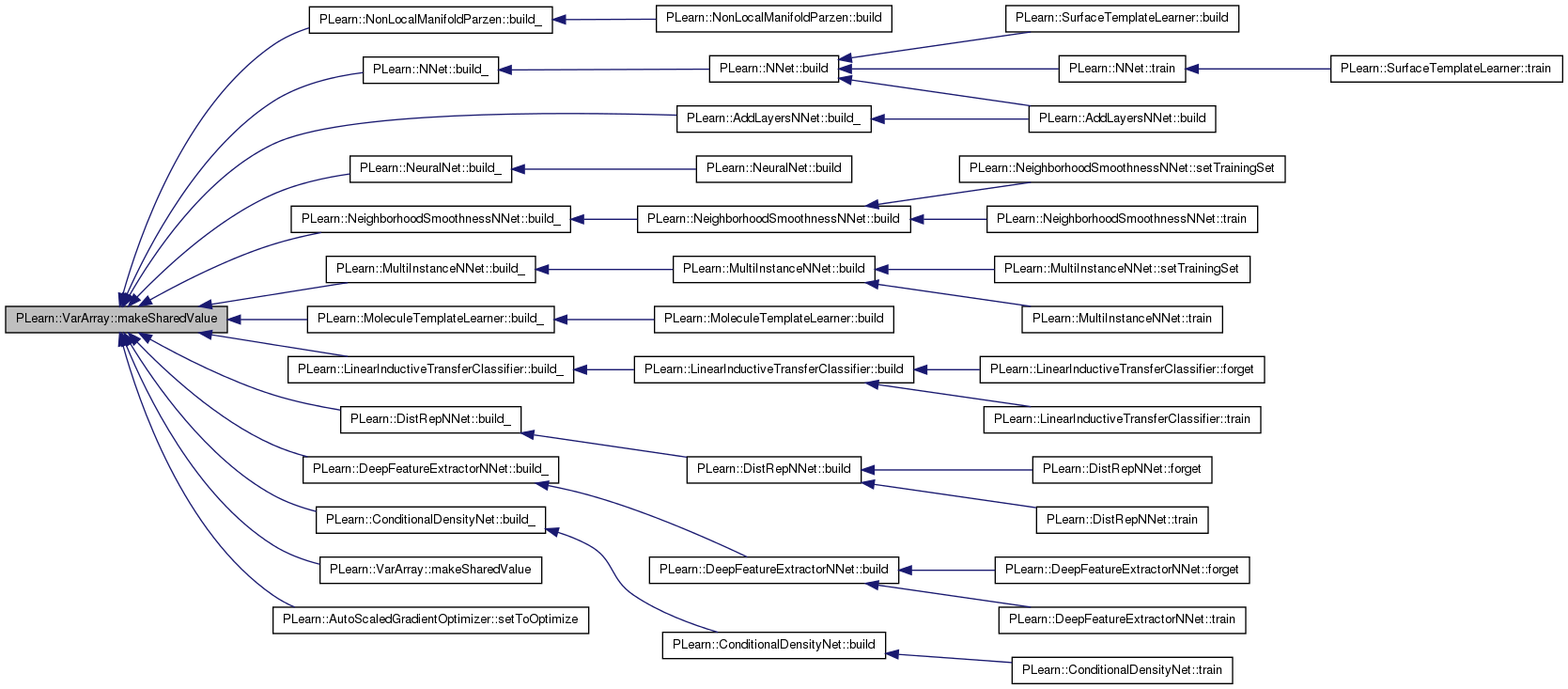

Referenced by PLearn::NonLocalManifoldParzen::build_(), PLearn::NNet::build_(), PLearn::NeuralNet::build_(), PLearn::NeighborhoodSmoothnessNNet::build_(), PLearn::MultiInstanceNNet::build_(), PLearn::MoleculeTemplateLearner::build_(), PLearn::LinearInductiveTransferClassifier::build_(), PLearn::DistRepNNet::build_(), PLearn::DeepFeatureExtractorNNet::build_(), PLearn::ConditionalDensityNet::build_(), PLearn::AddLayersNNet::build_(), makeSharedValue(), and PLearn::AutoScaledGradientOptimizer::setToOptimize().

{

iterator array = this->data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

int vlength = v->nelems();

v->makeSharedValue(data,vlength);

data += vlength;

ncopied += vlength;

}

}

if(ncopied!=n)

PLERROR("IN VarArray::makeSharedValue total length of all Vars in the array (%d) differs from length of argument (%d)",

ncopied,n);

}
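The pointer-based makeSharedGradient() and makeSharedValue() listings above follow the same pattern: walk the array and hand each variable a consecutive slice of one buffer. A minimal self-contained sketch of that idea is given here. ToyVar and packIntoBuffer are hypothetical names, not part of PLearn, and the sketch checks the total length before touching any pointer, whereas the listing checks it after the loop.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a Variable: it only records where its values live.
struct ToyVar {
    std::size_t nelems;  // number of scalar elements in this variable
    double* value;       // points into the shared buffer once packed
};

// Make each variable's value pointer refer to a consecutive slice of `data`.
// Throws if the slices would not cover exactly `n` elements.
inline void packIntoBuffer(std::vector<ToyVar>& vars, double* data, std::size_t n) {
    assert(data != nullptr || n == 0);
    std::size_t total = 0;
    for (const ToyVar& v : vars) total += v.nelems;
    if (total != n)
        throw std::length_error("total length of variables differs from buffer length");
    std::size_t offset = 0;
    for (ToyVar& v : vars) {
        v.value = data + offset;  // the variable now aliases the shared buffer
        offset += v.nelems;
    }
}
```

After packing, writing through any variable's pointer changes the shared buffer, which is what lets an optimizer treat all parameters as one flat vector.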

Makes value and matValue point into this storage.

Definition at line 188 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::PP< T >::isNull(), PLearn::TVec< Var >::size(), and PLearn::TVec< Var >::storage.

{

iterator array = data();

int ncopied=0; // number of elements copied so far

for(int i=0; i<size(); i++)

{

Var& v = array[i];

if (!v.isNull())

{

int vlength = v->nelems();

v->makeSharedValue(storage,offset_+ncopied);

ncopied += vlength;

}

}

}

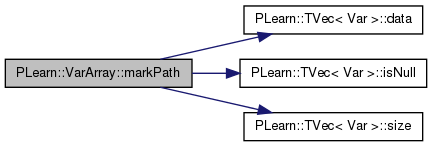

| void PLearn::VarArray::markPath | ( | ) | const |

Definition at line 707 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::propagationPath().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull()){

array[i]->markPath();

//cout<<"mark :"<<i<<" "<<array[i]->getName()<<endl;

}

}

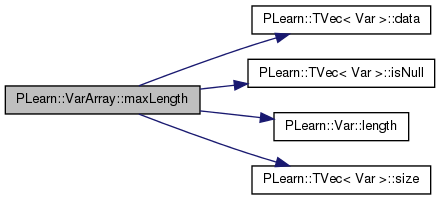

| int PLearn::VarArray::maxLength | ( | ) | const |

Definition at line 642 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::Var::length(), and PLearn::TVec< Var >::size().

Referenced by PLearn::ConcatColumnsVariable::recomputeSize().

{

iterator array = data();

int maxlength = 0;

for(int i=0; i<size(); i++)

if (!array[i].isNull())

{

int l = array[i]->length();

if (l>maxlength)

maxlength=l;

}

return maxlength;

}

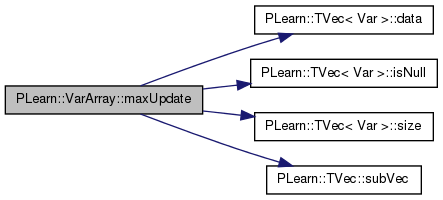

Using the box constraints on the values, returns the maximum allowable step_size in the given direction, i.e. argmax_{step_size} {new = value + step_size * direction, new in box}.

Definition at line 957 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), MIN, PLearn::TVec< Var >::size(), and PLearn::TVec< T >::subVec().

{

real max_step_size = FLT_MAX;

int pos=0;

iterator array = data();

for(int i=0; i<size(); pos+=array[i++]->nelems())

if (!array[i].isNull())

max_step_size = MIN(max_step_size,

array[i]->maxUpdate(direction.subVec(pos,array[i]->nelems())));

return max_step_size;

}
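As a worked illustration of the argmax in the description above, the sketch below computes the largest admissible step for a single flat vector. The name maxStepInBox and the scalar bounds lo and hi are assumptions of this sketch; how PLearn's per-variable maxUpdate() stores its bounds is not shown in this section.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Largest step t such that lo <= value[k] + t * direction[k] <= hi for all k.
// Assumes every value[k] already lies inside [lo, hi].
inline double maxStepInBox(const std::vector<double>& value,
                           const std::vector<double>& direction,
                           double lo, double hi) {
    assert(value.size() == direction.size());
    double max_step = std::numeric_limits<double>::max();
    for (std::size_t k = 0; k < value.size(); ++k) {
        const double d = direction[k];
        if (d > 0.0)
            max_step = std::min(max_step, (hi - value[k]) / d);  // upper bound limits
        else if (d < 0.0)
            max_step = std::min(max_step, (lo - value[k]) / d);  // lower bound limits
        // d == 0: this coordinate never leaves the box, so it imposes no limit.
    }
    return max_step;
}
```

Taking the minimum over coordinates mirrors the MIN over variables in the listing: the tightest constraint decides the step.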

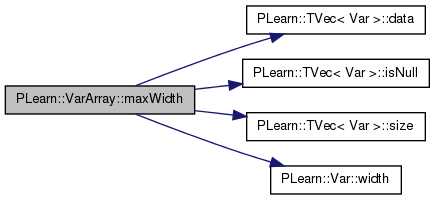

| int PLearn::VarArray::maxWidth | ( | ) | const |

Definition at line 628 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::size(), w, and PLearn::Var::width().

Referenced by PLearn::ConcatRowsVariable::recomputeSize().

{

iterator array = data();

int maxwidth = 0;

for(int i=0; i<size(); i++)

if (!array[i].isNull())

{

int w = array[i]->width();

if (w>maxwidth)

maxwidth=w;

}

return maxwidth;

}

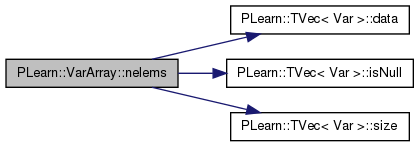

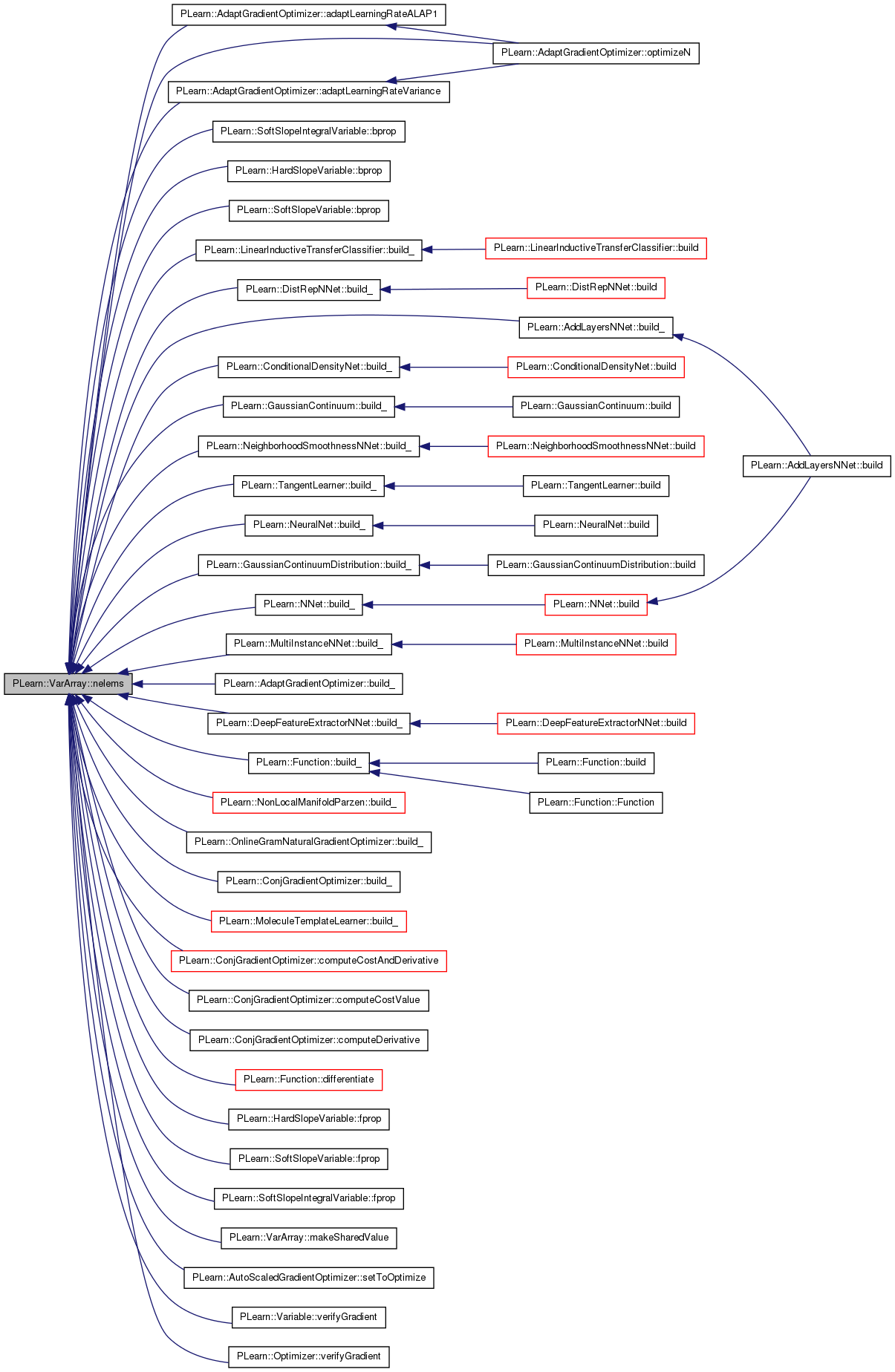

| int PLearn::VarArray::nelems | ( | ) | const |

Definition at line 590 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::AdaptGradientOptimizer::adaptLearningRateALAP1(), PLearn::AdaptGradientOptimizer::adaptLearningRateVariance(), PLearn::SoftSlopeIntegralVariable::bprop(), PLearn::HardSlopeVariable::bprop(), PLearn::SoftSlopeVariable::bprop(), PLearn::LinearInductiveTransferClassifier::build_(), PLearn::DistRepNNet::build_(), PLearn::AddLayersNNet::build_(), PLearn::ConditionalDensityNet::build_(), PLearn::GaussianContinuum::build_(), PLearn::NeighborhoodSmoothnessNNet::build_(), PLearn::TangentLearner::build_(), PLearn::NeuralNet::build_(), PLearn::GaussianContinuumDistribution::build_(), PLearn::NNet::build_(), PLearn::MultiInstanceNNet::build_(), PLearn::AdaptGradientOptimizer::build_(), PLearn::DeepFeatureExtractorNNet::build_(), PLearn::Function::build_(), PLearn::NonLocalManifoldParzen::build_(), PLearn::OnlineGramNaturalGradientOptimizer::build_(), PLearn::ConjGradientOptimizer::build_(), PLearn::MoleculeTemplateLearner::build_(), PLearn::ConjGradientOptimizer::computeCostAndDerivative(), PLearn::ConjGradientOptimizer::computeCostValue(), PLearn::ConjGradientOptimizer::computeDerivative(), PLearn::Function::differentiate(), PLearn::HardSlopeVariable::fprop(), PLearn::SoftSlopeVariable::fprop(), PLearn::SoftSlopeIntegralVariable::fprop(), makeSharedValue(), PLearn::AdaptGradientOptimizer::optimizeN(), PLearn::AutoScaledGradientOptimizer::setToOptimize(), PLearn::Variable::verifyGradient(), and PLearn::Optimizer::verifyGradient().

{

iterator array = data();

int totallength = 0;

for(int i=0; i<size(); i++)

if (!array[i].isNull())

totallength += array[i]->nelems();

return totallength;

}

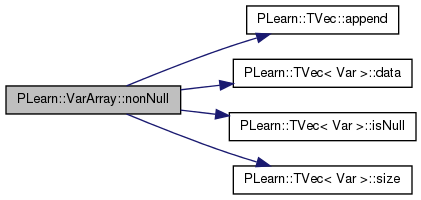

| VarArray PLearn::VarArray::nonNull | ( | ) | const |

Returns a VarArray containing all the non-null Vars.

Definition at line 656 of file VarArray.cc.

References PLearn::TVec< T >::append(), PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

VarArray results(0, size());

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

results.append(array[i]);

return results;

}

| PLearn::VarArray::operator Var | ( | ) | [inline] |

Definition at line 77 of file VarArray.h.

References PLERROR.

{

if(size()!=1)

PLERROR("Cannot cast VarArray containing more than one variable to Var");

return operator[](0);

}

Definition at line 86 of file VarArray.h.

References PLearn::operator&().

{ return PLearn::operator&(*this,v); }

Definition at line 87 of file VarArray.h.

References PLearn::operator&().

{ return PLearn::operator&(*this,va); }

Definition at line 85 of file VarArray.h.

References PLearn::operator&=().

{ PLearn::operator&=(*this,va); return *this; }

Definition at line 84 of file VarArray.h.

References PLearn::operator&=().

{ PLearn::operator&=(*this,v); return *this;}

Reimplemented from PLearn::TVec< Var >.

Definition at line 234 of file VarArray.h.

{ return Array<Var>::operator[](i); }

Definition at line 233 of file VarArray.h.

{ return Array<Var>::operator[](i); }

Definition at line 1016 of file VarArray.cc.

{

return new VarArrayElementVariable(*this, index);

}

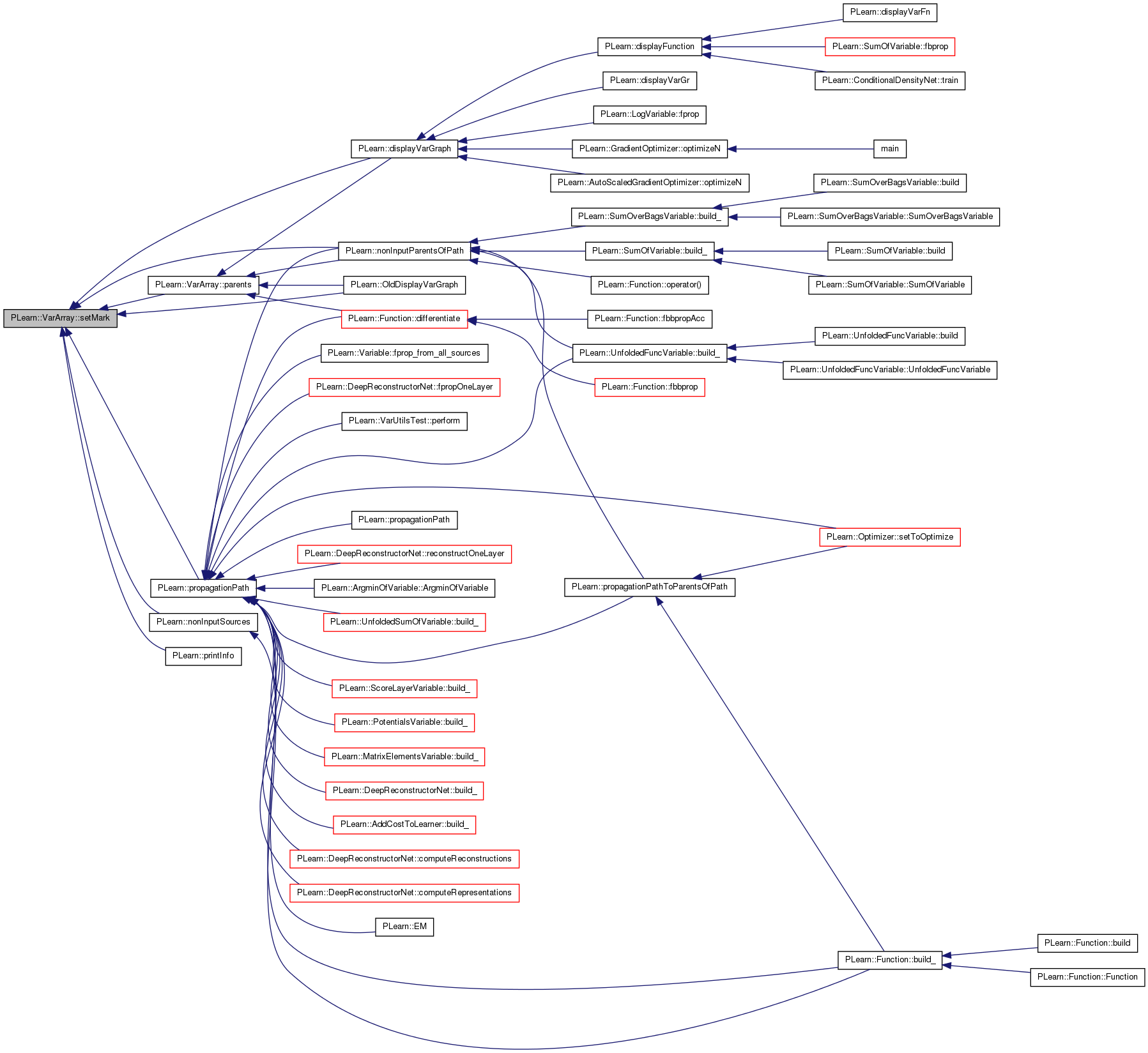

| VarArray PLearn::VarArray::parents | ( | ) | const |

Returns the set of all direct parents of the Vars in this array (previously marked parents are NOT included).

Definition at line 1049 of file VarArray.cc.

References PLearn::TVec< T >::append(), clearMark(), PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), setMark(), PLearn::TVec< T >::size(), and PLearn::TVec< Var >::size().

Referenced by PLearn::Function::differentiate(), PLearn::displayVarGraph(), PLearn::nonInputParentsOfPath(), and PLearn::OldDisplayVarGraph().

{

setMark();

VarArray all_parents;

VarArray parents_i;

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

{

parents_i = array[i]->parents();

if(parents_i.size()>0)

{

parents_i.setMark();

all_parents.append(parents_i);

}

}

clearMark();

all_parents.clearMark();

return all_parents;

}
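The listing above uses marks to avoid returning a parent twice and to exclude the array's own members. The sketch below reproduces that marking idea on a toy graph. ToyNode and directParents are hypothetical names, and the sketch assumes that a variable's own parents() skips already-marked variables, as the description suggests; here that filtering is done inline.

```cpp
#include <cassert>
#include <vector>

// Hypothetical graph node with a mark flag, standing in for a Variable.
struct ToyNode {
    std::vector<ToyNode*> parents;
    bool marked = false;
};

// Collect the direct parents of `nodes`, without duplicates and without
// any node that is itself a member of `nodes`.
inline std::vector<ToyNode*> directParents(const std::vector<ToyNode*>& nodes) {
    for (ToyNode* n : nodes) {
        assert(n != nullptr);
        n->marked = true;              // members must not be reported as parents
    }
    std::vector<ToyNode*> result;
    for (ToyNode* n : nodes)
        for (ToyNode* p : n->parents)
            if (!p->marked) {
                p->marked = true;      // remember that p was already collected
                result.push_back(p);
            }
    // Leave the graph unmarked, as the listing does with clearMark().
    for (ToyNode* n : nodes) n->marked = false;
    for (ToyNode* p : result) p->marked = false;
    return result;
}
```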

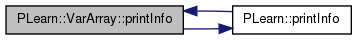

| void PLearn::VarArray::printInfo | ( | bool | print_gradient = false | ) | [inline] |

Definition at line 224 of file VarArray.h.

References i, and PLearn::printInfo().

Referenced by PLearn::RandomVariable::epoch(), PLearn::printInfo(), and PLearn::Variable::printInfos().

{

iterator array = data();

for (int i=0;i<size();i++)

if (!array[i].isNull())

array[i]->printInfo(print_gradient);

}

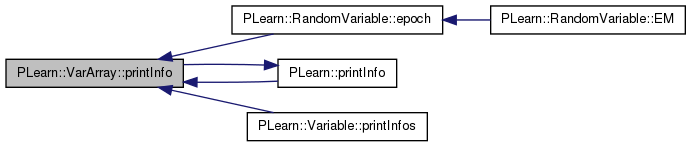

| void PLearn::VarArray::printNames | ( | ) | const |

Definition at line 1069 of file VarArray.cc.

References PLearn::endl(), i, and PLearn::TVec< Var >::size().

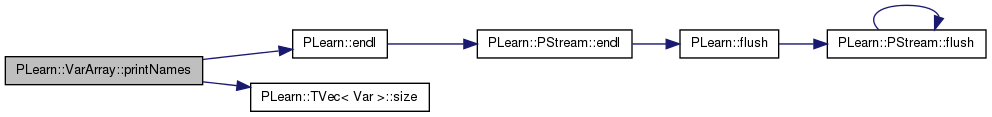

| void PLearn::VarArray::read | ( | istream & | in | ) |

Reimplemented from PLearn::Array< Var >.

Definition at line 1000 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->read(in);

}

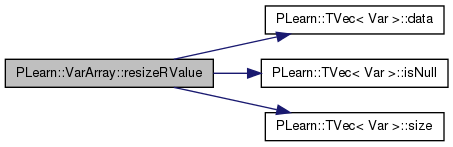

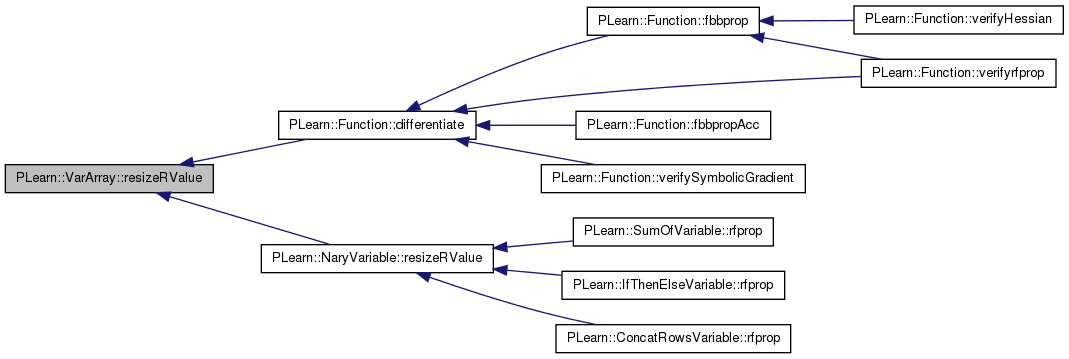

| void PLearn::VarArray::resizeRValue | ( | ) |

Definition at line 600 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::Function::differentiate(), and PLearn::NaryVariable::resizeRValue().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->resizeRValue();

}

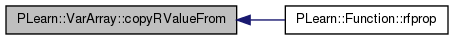

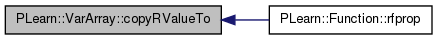

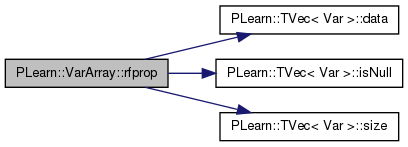

| void PLearn::VarArray::rfprop | ( | ) |

Definition at line 767 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::Function::rfprop().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->rfprop();

}

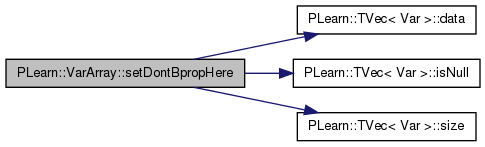

| void PLearn::VarArray::setDontBpropHere | ( | bool | val | ) |

Definition at line 903 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->setDontBpropHere(val);

}

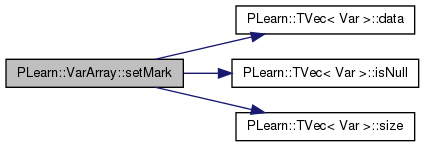

| void PLearn::VarArray::setMark | ( | ) | const |

Definition at line 691 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::displayVarGraph(), PLearn::nonInputParentsOfPath(), PLearn::nonInputSources(), PLearn::OldDisplayVarGraph(), parents(), PLearn::printInfo(), and PLearn::propagationPath().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->setMark();

}

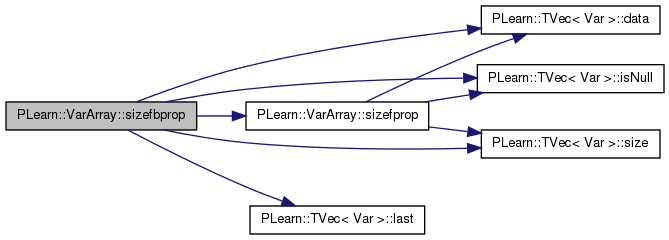

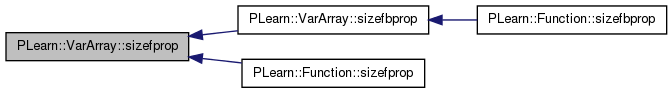

| void PLearn::VarArray::sizefbprop | ( | ) |

Definition at line 803 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::last(), PLERROR, PLearn::TVec< Var >::size(), and sizefprop().

Referenced by PLearn::Function::sizefbprop().

{

iterator array = data();

for(int i=0; i<size()-1; i++)

if (!array[i].isNull())

array[i]->sizefprop();

if (size() > 0) {

{

last()->sizeprop();

last()->fbprop();

}

#ifdef BOUNDCHECK

if (last()->gradient.hasMissing())

PLERROR("VarArray::fbprop has NaN gradient");

#endif

for(int i=size()-2; i>=0; i--)

if (!array[i].isNull())

{

#ifdef BOUNDCHECK

if (array[i]->gradient.hasMissing())

PLERROR("VarArray::fbprop has NaN gradient");

#endif

array[i]->bprop();

}

}

}
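The order of calls in the listing above can be easy to lose among the BOUNDCHECK blocks: every element but the last is sized and forward-propagated in order, the last element does a combined fbprop, and the remaining elements are back-propagated in reverse. The sketch below only records that call order as strings. fbpropCallOrder is a hypothetical helper, and null elements are left out for brevity.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Returns the sequence of calls that a sizefbprop-style pass would make
// over a propagation path whose elements are named by `path`.
inline std::vector<std::string> fbpropCallOrder(const std::vector<std::string>& path) {
    std::vector<std::string> calls;
    if (path.empty()) return calls;
    // Forward pass over all elements except the last.
    for (std::size_t i = 0; i + 1 < path.size(); ++i)
        calls.push_back(path[i] + ".sizefprop");
    // The last element is sized, then does forward and backward in one call.
    calls.push_back(path.back() + ".sizeprop");
    calls.push_back(path.back() + ".fbprop");
    // Backward pass in reverse order, starting from the second-to-last element.
    for (std::size_t i = path.size() - 1; i-- > 0; )
        calls.push_back(path[i] + ".bprop");
    assert(calls.size() == 2 * path.size());
    return calls;
}
```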

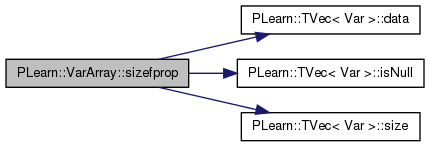

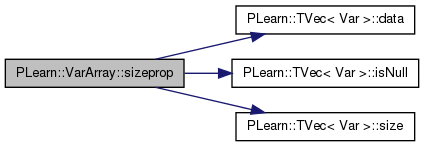

| void PLearn::VarArray::sizefprop | ( | ) |

Definition at line 743 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by sizefbprop(), and PLearn::Function::sizefprop().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->sizefprop();

}

| void PLearn::VarArray::sizeprop | ( | ) |

Definition at line 735 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->sizeprop();

}

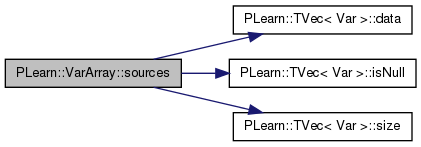

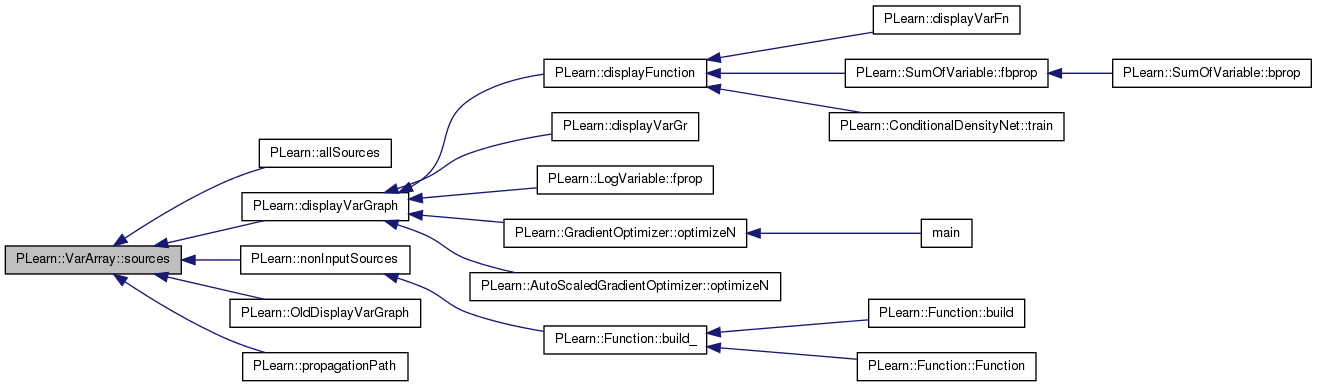

| VarArray PLearn::VarArray::sources | ( | ) | const |

Definition at line 1021 of file VarArray.cc.

References a, PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::allSources(), PLearn::displayVarGraph(), PLearn::nonInputSources(), PLearn::OldDisplayVarGraph(), and PLearn::propagationPath().

{

VarArray a;

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

a &= array[i]->sources();

return a;

}

Definition at line 667 of file VarArray.cc.

References PLearn::TVec< T >::append(), PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLERROR, PLearn::TVec< Var >::size(), and VarArray().

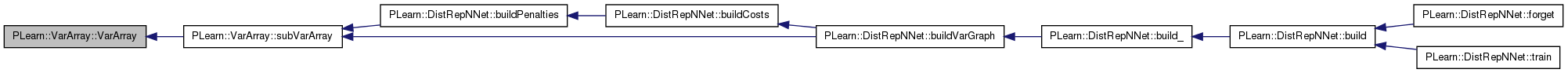

Referenced by PLearn::DistRepNNet::buildPenalties(), and PLearn::DistRepNNet::buildVarGraph().

{

int max_position=start+len-1;

if (max_position>size())

PLERROR("Error in :subVarArray(int start,int len), start+len>=nelems");

VarArray* results=new VarArray(0, len);

iterator array = data();

for(int i=start; i<start+len; i++)

if (!array[i].isNull())

results->append(array[i]);

return *results;

}
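Two details of the listing above are worth noting when reading it: the check `max_position>size()` still accepts start+len == size()+1, and the result is allocated with new and returned by reference. The sketch below shows one conventional alternative on a plain std::vector, with a strict range check and a by-value return. subRange is a hypothetical helper, not a PLearn function, and unlike the listing it does not skip null elements.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Copy the elements xs[start], ..., xs[start + len - 1] into a new vector.
// Throws std::out_of_range unless start + len <= xs.size().
template <typename T>
std::vector<T> subRange(const std::vector<T>& xs, std::size_t start, std::size_t len) {
    // Written to avoid overflow in start + len.
    if (start > xs.size() || len > xs.size() - start)
        throw std::out_of_range("subRange: start + len exceeds size");
    const auto first = xs.begin() + static_cast<std::ptrdiff_t>(start);
    const auto last = first + static_cast<std::ptrdiff_t>(len);
    assert(first <= last);
    return std::vector<T>(first, last);
}
```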

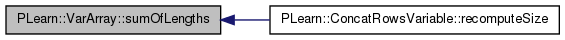

| int PLearn::VarArray::sumOfLengths | ( | ) | const |

Definition at line 608 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::length(), and PLearn::TVec< Var >::size().

Referenced by PLearn::ConcatRowsVariable::recomputeSize().

{

iterator array = data();

int totallength = 0;

for(int i=0; i<size(); i++)

if (!array[i].isNull())

totallength += array[i]->length();

return totallength;

}

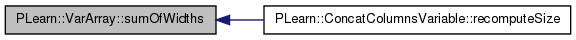

| int PLearn::VarArray::sumOfWidths | ( | ) | const |

Definition at line 618 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::ConcatColumnsVariable::recomputeSize().

{

iterator array = data();

int totalwidth = 0;

for(int i=0; i<size(); i++)

if (!array[i].isNull())

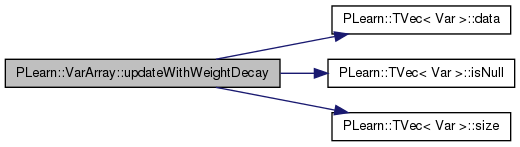

totalwidth += array[i]->width();

return totalwidth;

}
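The cross-references above say that sumOfLengths() and maxWidth() are used by ConcatRowsVariable::recomputeSize(), while sumOfWidths() and maxLength() are used by ConcatColumnsVariable::recomputeSize(). One plausible reading, sketched below, is that these four reductions give the shape of a vertical or horizontal concatenation. Shape, concatRowsShape and concatColumnsShape are hypothetical names, and the exact use inside PLearn is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical matrix shape: length = number of rows, width = number of columns.
struct Shape {
    int length;
    int width;
};

// Stacking parts on top of each other: rows add up, columns take the maximum.
inline Shape concatRowsShape(const std::vector<Shape>& parts) {
    Shape out{0, 0};
    for (const Shape& s : parts) {
        assert(s.length >= 0 && s.width >= 0);
        out.length += s.length;                   // like sumOfLengths()
        out.width = std::max(out.width, s.width); // like maxWidth()
    }
    return out;
}

// Placing parts side by side: columns add up, rows take the maximum.
inline Shape concatColumnsShape(const std::vector<Shape>& parts) {
    Shape out{0, 0};
    for (const Shape& s : parts) {
        assert(s.length >= 0 && s.width >= 0);
        out.length = std::max(out.length, s.length); // like maxLength()
        out.width += s.width;                        // like sumOfWidths()
    }
    return out;
}
```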

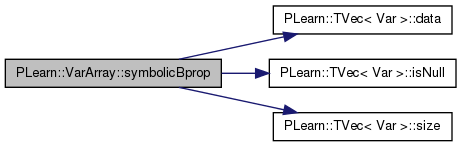

| void PLearn::VarArray::symbolicBprop | ( | ) |

computes symbolicBprop on a propagation path

Definition at line 852 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::Function::differentiate().

{

iterator array = data();

for(int i=size()-1; i>=0; i--)

if (!array[i].isNull())

array[i]->symbolicBprop();

}

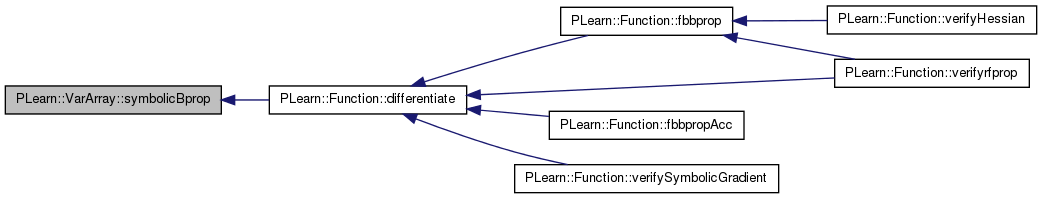

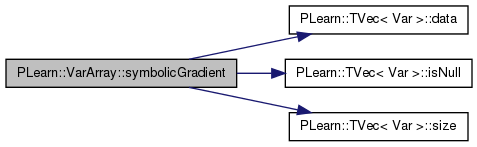

| VarArray PLearn::VarArray::symbolicGradient | ( | ) |

returns a VarArray of all the ->g members of the Vars in this array.

Definition at line 860 of file VarArray.cc.

References PLearn::TVec< Var >::data(), g, i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::Function::differentiate().

{

iterator array = data();

VarArray symbolic_gradients(size());

for(int i=0; i<size(); i++)

if (!array[i].isNull())

symbolic_gradients[i] = array[i]->g;

return symbolic_gradients;

}

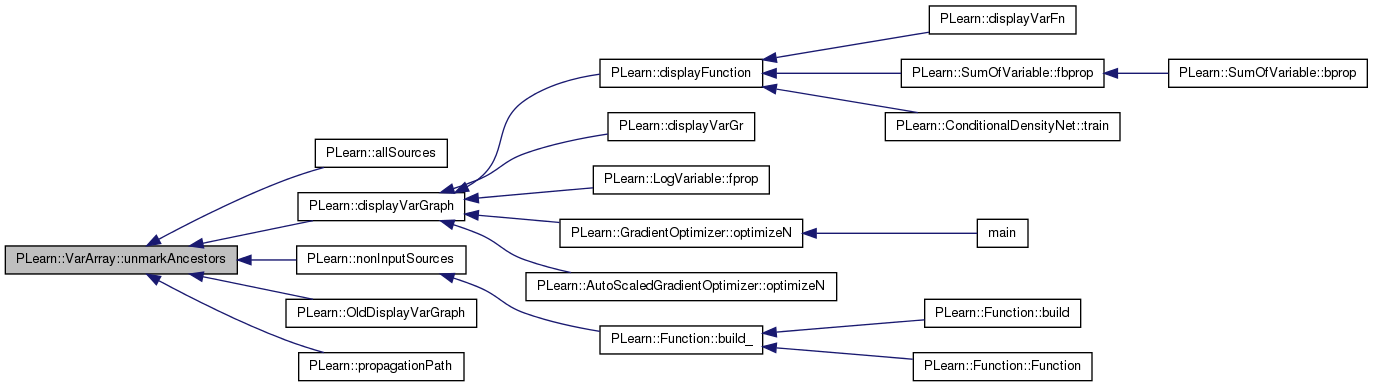

| void PLearn::VarArray::unmarkAncestors | ( | ) | const |

Definition at line 1041 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

Referenced by PLearn::allSources(), PLearn::displayVarGraph(), PLearn::nonInputSources(), PLearn::OldDisplayVarGraph(), and PLearn::propagationPath().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->unmarkAncestors();

}

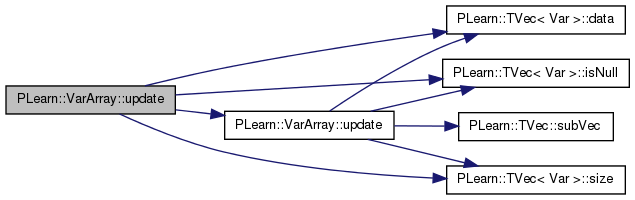

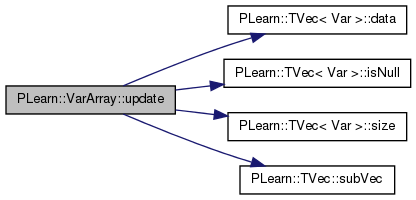

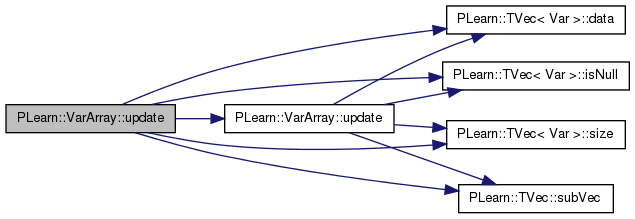

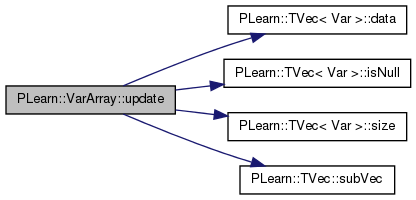

Updates the variables in the VarArray with a different step size per element, an optional scaling coefficient (coeff) and a constant term (b). step_sizes and direction must have the same length. As with the fixed-step-size update, the step may be scaled down, and the return value indicates whether constraints have been hit.

Definition at line 940 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::size(), and PLearn::TVec< T >::subVec().

{

bool hit = false;

int pos = 0;

iterator array = data();

for (int i=0; i<size(); pos+=array[i++]->nelems()) {

if (!array[i].isNull()) {

hit = hit ||

array[i]->update(

step_sizes.subVec(pos, array[i]->nelems()),

direction.subVec(pos, array[i]->nelems()),

coeff, b);

}

}

return hit;

}

Sets value = value + step_size * gradient, with step_size possibly scaled down so that the box constraints are satisfied. Returns true if the box constraints have been hit by the update.

Definition at line 969 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::size(), and update().

{

bool hit = false;

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

hit = hit || array[i]->update(step_size,clear);

return hit;

}
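One C++ detail is worth keeping in mind when reading the update() listings: `||` short-circuits, so in `hit = hit || f()` the call f() is not evaluated once hit is already true. Whether that is intended here is not stated in the source. The sketch below contrasts the two orderings on a hypothetical clamped scalar; ToyParam, updateShortCircuit and updateAll are illustrative names only.

```cpp
#include <cassert>
#include <vector>

// Hypothetical element: a scalar clamped to [lo, hi] on update.
struct ToyParam {
    double value, lo, hi;
    // Adds delta, clamps to the box, and reports whether clamping occurred.
    bool update(double delta) {
        assert(lo <= hi);
        double next = value + delta;
        bool hit = false;
        if (next > hi) { next = hi; hit = true; }
        if (next < lo) { next = lo; hit = true; }
        value = next;
        return hit;
    }
};

// Same operand order as the listing: once hit is true, update() is skipped.
inline bool updateShortCircuit(std::vector<ToyParam>& ps, double delta) {
    bool hit = false;
    for (ToyParam& p : ps) hit = hit || p.update(delta);
    return hit;
}

// Swapped operand order: update() runs for every element.
inline bool updateAll(std::vector<ToyParam>& ps, double delta) {
    bool hit = false;
    for (ToyParam& p : ps) hit = p.update(delta) || hit;
    return hit;
}
```

With two parameters where the first one hits its bound, updateShortCircuit leaves the second untouched, while updateAll moves both.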

Same as update(Vec step_sizes, Vec direction, real coeff), except that each variable can have its own coeff.

Definition at line 923 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::size(), and PLearn::TVec< T >::subVec().

{

bool hit = false;

int pos = 0;

iterator array = data();

for (int i=0; i<size(); pos+=array[i++]->nelems()) {

if (!array[i].isNull()) {

hit = hit ||

array[i]->update(

step_sizes.subVec(pos, array[i]->nelems()),

direction.subVec(pos, array[i]->nelems()),

coeffs[i]);

}

}

return hit;

}

Sets value = new_value, projected down in each direction independently onto the subspace in which the box constraints are satisfied. Returns true if the box constraints have been hit by the update.

Definition at line 988 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::size(), PLearn::TVec< T >::subVec(), and update().

{

bool hit = false;

int pos=0;

iterator array = data();

for(int i=0; i<size(); pos+=array[i++]->nelems())

if (!array[i].isNull())

hit = hit ||

array[i]->update(new_value.subVec(pos,array[i]->nelems()));

return hit;

}

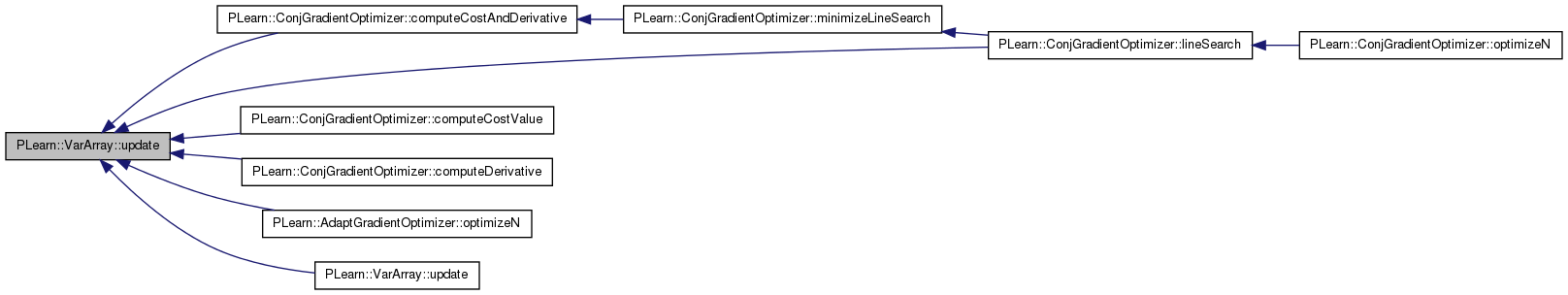

Sets value = value + (step_size * coeff + b) * direction, with step_size possibly scaled down so that the box constraints are satisfied. Returns true if the box constraints have been hit by the update.

Definition at line 911 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), PLearn::TVec< Var >::size(), and PLearn::TVec< T >::subVec().

Referenced by PLearn::ConjGradientOptimizer::computeCostAndDerivative(), PLearn::ConjGradientOptimizer::computeCostValue(), PLearn::ConjGradientOptimizer::computeDerivative(), PLearn::ConjGradientOptimizer::lineSearch(), PLearn::AdaptGradientOptimizer::optimizeN(), and update().

{

bool hit = false;

int pos=0;

iterator array = data();

for(int i=0; i<size(); pos+=array[i++]->nelems())

if (!array[i].isNull())

hit = hit ||

array[i]->update(step_size,direction.subVec(pos,array[i]->nelems()), coeff, b);

return hit;

}

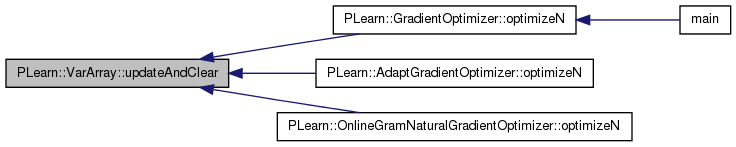

| void PLearn::VarArray::updateAndClear | ( | ) | [inline] |

value += step_size*gradient; gradient.clear();

Definition at line 238 of file VarArray.h.

References i.

Referenced by PLearn::GradientOptimizer::optimizeN(), PLearn::AdaptGradientOptimizer::optimizeN(), and PLearn::OnlineGramNaturalGradientOptimizer::optimizeN().

| void PLearn::VarArray::updateWithWeightDecay | ( | real | step_size, | real | weight_decay, | bool | L1 = false, | bool | clear = true | ) |

For each element of the array: if L1 is set, value += learning_rate*gradient, and |value| is then decreased by learning_rate*weight_decay if that does not make value change sign; otherwise (L2), value += learning_rate*(gradient - weight_decay*value). In both cases, if clear is set, gradient is reset to 0.

Definition at line 979 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->updateWithWeightDecay(step_size,weight_decay,L1,clear);

return;

}
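The per-element rule described above can be sketched on a flat vector as follows. ToyWeights and updateWithWeightDecaySketch are hypothetical names, not PLearn's implementation. The description does not say what happens in the L1 case when the shrink would flip the sign; this sketch sets the value to zero there, which is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical flat parameter block: values and their gradients.
struct ToyWeights {
    std::vector<double> value;
    std::vector<double> gradient;
};

inline void updateWithWeightDecaySketch(ToyWeights& w, double learning_rate,
                                        double weight_decay,
                                        bool L1 = false, bool clear = true) {
    assert(w.value.size() == w.gradient.size());
    const double shrink = learning_rate * weight_decay;
    for (std::size_t k = 0; k < w.value.size(); ++k) {
        if (L1) {
            // Gradient step first, then shrink |value| toward zero.
            double v = w.value[k] + learning_rate * w.gradient[k];
            if (v > shrink)       v -= shrink;
            else if (v < -shrink) v += shrink;
            else                  v = 0.0;  // assumption: clamp instead of flipping sign
            w.value[k] = v;
        } else {
            // L2: the decay term is proportional to the current value.
            w.value[k] += learning_rate * (w.gradient[k] - weight_decay * w.value[k]);
        }
    }
    if (clear) std::fill(w.gradient.begin(), w.gradient.end(), 0.0);
}
```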

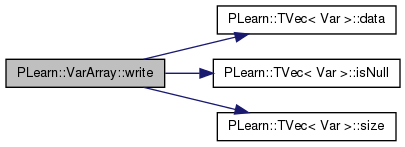

| void PLearn::VarArray::write | ( | ostream & | out | ) | const |

Reimplemented from PLearn::Array< Var >.

Definition at line 1008 of file VarArray.cc.

References PLearn::TVec< Var >::data(), i, PLearn::TVec< Var >::isNull(), and PLearn::TVec< Var >::size().

{

iterator array = data();

for(int i=0; i<size(); i++)

if (!array[i].isNull())

array[i]->write(out);

}

1.7.4
