
PLearn 0.1


Carries out a hyper-parameter optimization according to an Oracle.

#include <HyperOptimize.h>

Public Member Functions | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual HyperOptimize * | deepCopy (CopiesMap &copies) const |

| HyperOptimize () | |

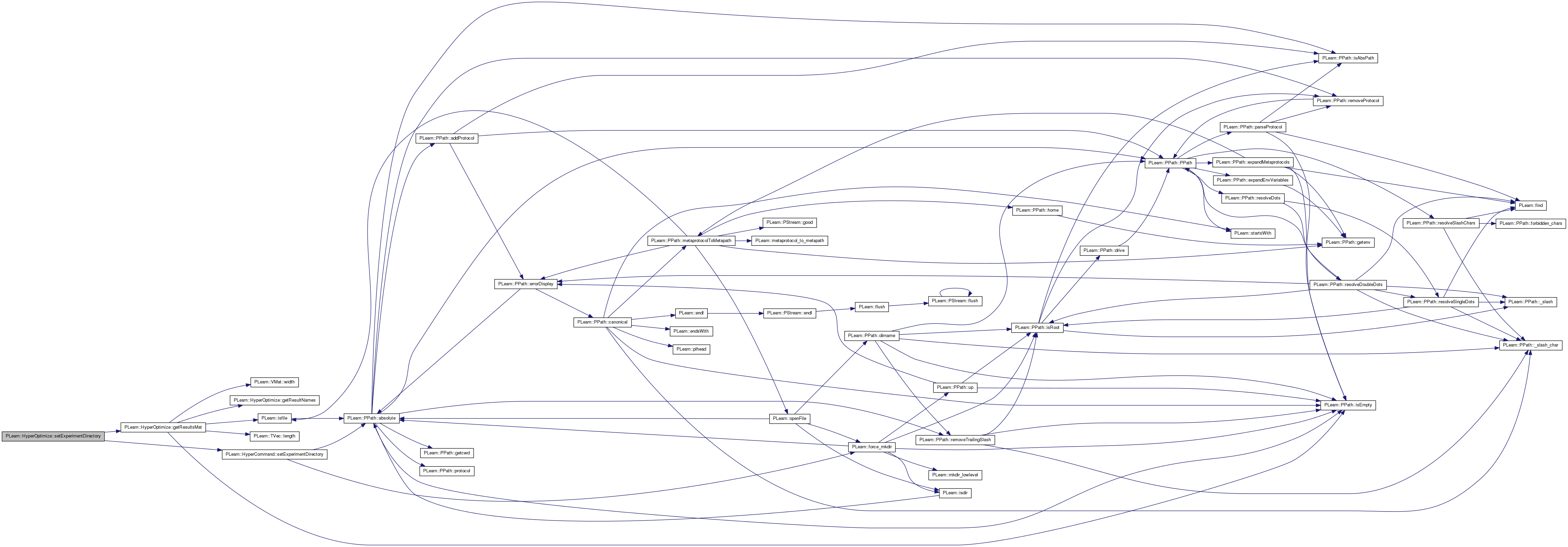

| virtual void | setExperimentDirectory (const PPath &the_expdir) |

| Sets the expdir and calls createResultsMat. | |

| virtual TVec< string > | getResultNames () const |

| Returns the names of the results returned by the optimize() method. | |

| virtual void | forget () |

| Resets the command's internal state as if freshly constructed (default does nothing) | |

| virtual Vec | optimize () |

| Executes the command, returning the resulting costvec of its optimization (or an empty vec if it didn't do any testng). | |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions

| Type | Member |
|---|---|
| static string | _classname_() |
| static OptionList & | _getOptionList_() |
| static RemoteMethodMap & | _getRemoteMethodMap_() |
| static Object * | _new_instance_for_typemap_() |
| static bool | _isa_(const Object *o) |
| static void | _static_initialize_() |
| static const PPath & | declaringFile() |

Public Attributes

| Type | Member | Description |
|---|---|---|
| int | which_cost_pos | |
| string | which_cost | |
| int | min_n_trials | |
| PP< OptionsOracle > | oracle | |
| bool | provide_tester_expdir | |
| TVec< PP< HyperCommand > > | sub_strategy | A possible sub-strategy to optimize other hyper-parameters. |
| bool | rerun_after_sub | |
| bool | provide_sub_expdir | |
| bool | save_best_learner | |
| int | auto_save | |
| int | auto_save_test | |
| int | auto_save_diff_time | |
| PP< Splitter > | splitter | |

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | getResultsMat () |

| void | reportResult (int trialnum, const Vec &results) |

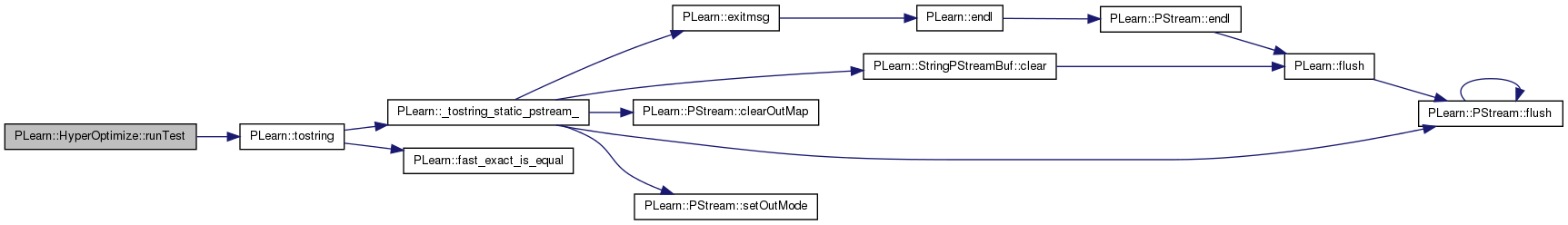

| Vec | runTest (int trialnum) |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions(OptionList &ol) | Declares this class' options. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| VMat | resultsmat | Store the results computed for each trial. |
| real | best_objective | |
| Vec | best_results | |
| PP< PLearner > | best_learner | |
| int | trialnum | |
| TVec< string > | option_vals | |
| PP< PTimer > | auto_save_timer | |

Private Types

| Type | Member |
|---|---|
| typedef HyperCommand | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

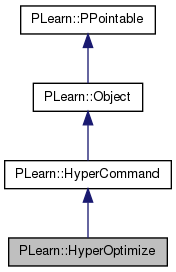

Carries out a hyper-parameter optimization according to an Oracle.

HyperOptimize is part of a sequence of HyperCommands (specified within a HyperLearner) to optimize a validation cost over settings of hyper-parameters provided by an Oracle. [NOTE: The "underlying learner" is the PLearner object (specified within the enclosing HyperLearner) whose hyper-parameters we are trying to optimize.]

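The sequence of steps followed by HyperOptimize is as follows:

1. Obtain a first setting of hyper-parameter values from the Oracle.
2. Set these values on the underlying learner through the enclosing HyperLearner.
3. Train and test the underlying learner with the HyperLearner's tester (using this command's splitter, if one is specified) and record the results.
4. Give the objective cost selected by 'which_cost' back to the Oracle to obtain the next setting, keeping a copy of the best learner found so far.
5. Repeat steps 2 to 4 until the Oracle returns no further setting.
6. Put the best learner found back into the HyperLearner and return its results.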

Optionally, instead of a plain Train/Test in Step 3, a SUB-STRATEGY may be invoked. This can be viewed as a "sub-routine" for hyperoptimization and can be used to implement a form of conditioning: given the current setting for hyper-parameters X,Y,Z, find the best setting of hyper-parameters T,U,V. The most common example is for doing early-stopping when training a neural network: a first-level HyperOptimize command can use an ExplicitListOracle to jointly optimize over weight-decays and the number of hidden units. A sub-strategy can then be used with an EarlyStoppingOracle to find the optimal number of training stages (epochs) for each combination of weight-decay/hidden units.
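To make the conditioning idea concrete, here is a minimal, self-contained C++ sketch of a two-level search in the spirit of a HyperOptimize with a sub_strategy. It does not use PLearn: `ListOracle`, `optimizeLevel`, `nestedSearch` and `toyValidationError` are hypothetical names, the inner oracle simply enumerates a fixed list of epoch counts (a real EarlyStoppingOracle would stop once the validation cost stops improving), and the toy cost function stands in for an actual train/test run.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <limits>
#include <utility>
#include <vector>

// Hypothetical stand-in for an explicit-list oracle: it proposes each
// candidate value in turn and ignores the objectives it is told about.
class ListOracle {
public:
    explicit ListOracle(std::vector<double> values) : values_(std::move(values)) {}
    bool hasNext() const { return next_ < values_.size(); }
    double nextTrial() { return values_[next_++]; }
private:
    std::vector<double> values_;
    std::size_t next_ = 0;
};

struct Best {
    double value = 0.0;
    double objective = std::numeric_limits<double>::max();
};

// One optimization level: score every value proposed by the oracle and keep
// the one with the smallest objective (ties keep the earliest value).
Best optimizeLevel(ListOracle oracle, const std::function<double(double)>& evaluate) {
    Best best;
    while (oracle.hasNext()) {
        double v = oracle.nextTrial();
        double objective = evaluate(v);
        if (objective < best.objective) {
            best.value = v;
            best.objective = objective;
        }
    }
    return best;
}

// Hypothetical validation cost, minimal at decay = 0.01 and 20 epochs.
double toyValidationError(double decay, double epochs) {
    double d = decay - 0.01;
    double e = (epochs - 20.0) / 10.0;
    return d * d + e * e;
}

struct NestedResult { double decay; double epochs; double objective; };

// Outer level over weight decays; for each decay the inner level (the
// "sub-strategy") picks the best number of epochs, and that inner best
// cost is what the outer level sees as its objective.
NestedResult nestedSearch(const std::vector<double>& decays,
                          const std::vector<double>& epochs) {
    auto innerBest = [&epochs](double decay) {
        return optimizeLevel(ListOracle(epochs), [decay](double e) {
            return toyValidationError(decay, e);
        });
    };
    Best outer = optimizeLevel(ListOracle(decays), [&innerBest](double decay) {
        return innerBest(decay).objective;
    });
    Best inner = innerBest(outer.value); // recover the epochs for the winner
    return {outer.value, inner.value, outer.objective};
}
```

For example, `nestedSearch({0.0, 0.01, 0.1}, {10.0, 20.0, 40.0})` selects a decay of 0.01 with 20 epochs, since that pair minimizes the toy cost.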

Note that after optimization, the matrix of all trials is available through the option 'resultsmat' (which is declared as nosave). This is available even if no expdir has been declared.

Definition at line 106 of file HyperOptimize.h.

typedef HyperCommand PLearn::HyperOptimize::inherited [private]

Reimplemented from PLearn::HyperCommand.

Definition at line 108 of file HyperOptimize.h.

PLearn::HyperOptimize::HyperOptimize()

Definition at line 110 of file HyperOptimize.cc.

: best_objective(REAL_MAX), trialnum(0), auto_save_timer(new PTimer()), which_cost_pos(-1), which_cost(), min_n_trials(0), provide_tester_expdir(false), rerun_after_sub(false), provide_sub_expdir(true), save_best_learner(false), auto_save(0), auto_save_test(0), auto_save_diff_time(3*60*60) { }

string PLearn::HyperOptimize::_classname_() [static]

Reimplemented from PLearn::HyperCommand.

Definition at line 107 of file HyperOptimize.cc.

OptionList & PLearn::HyperOptimize::_getOptionList_() [static]

Reimplemented from PLearn::HyperCommand.

Definition at line 107 of file HyperOptimize.cc.

RemoteMethodMap & PLearn::HyperOptimize::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::HyperCommand.

Definition at line 107 of file HyperOptimize.cc.

bool PLearn::HyperOptimize::_isa_(const Object *o) [static]

Reimplemented from PLearn::HyperCommand.

Definition at line 107 of file HyperOptimize.cc.

Object * PLearn::HyperOptimize::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::Object.

Definition at line 107 of file HyperOptimize.cc.

void PLearn::HyperOptimize::_static_initialize_() [static]

Reimplemented from PLearn::HyperCommand.

Definition at line 107 of file HyperOptimize.cc.

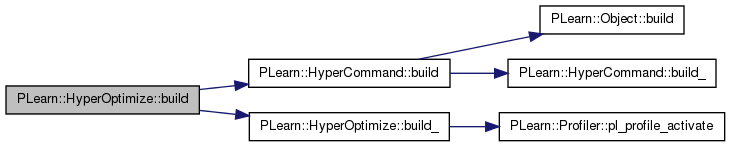

void PLearn::HyperOptimize::build() [virtual]

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::HyperCommand.

Definition at line 234 of file HyperOptimize.cc.

References PLearn::HyperCommand::build(), and build_().

    {
        inherited::build();
        build_();
    }
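The two-level build()/build_() convention described above can be illustrated in plain C++. This is a sketch under assumptions: `Command` and `Optimize` are hypothetical stand-ins for HyperCommand and HyperOptimize, and the `log` member exists only to make the call order observable.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for HyperCommand.
class Command {
public:
    virtual ~Command() = default;
    // Public post-constructor; may be called again after options change.
    virtual void build() { build_(); }
    std::vector<std::string> log; // records the order of build_() calls
private:
    // Non-virtual worker that only builds this class's own fields.
    void build_() { log.push_back("Command::build_"); }
};

// Hypothetical stand-in for HyperOptimize.
class Optimize : public Command {
    typedef Command inherited;
public:
    void build() override {
        inherited::build(); // the parent builds its own part first
        build_();           // then this class builds its own part
    }
private:
    void build_() { log.push_back("Optimize::build_"); }
};
```

Keeping build_() private and non-virtual means each level of the hierarchy builds only its own fields, once per call to the virtual build(), and calling build() again simply repeats the same sequence.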

void PLearn::HyperOptimize::build_() [private]

This does the actual building.

Reimplemented from PLearn::HyperCommand.

Definition at line 226 of file HyperOptimize.cc.

References PLearn::Profiler::pl_profile_activate().

Referenced by build().

string PLearn::HyperOptimize::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 107 of file HyperOptimize.cc.

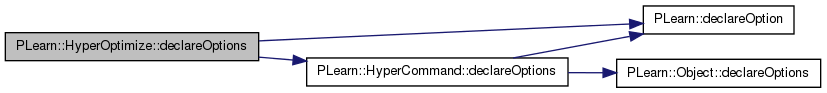

void PLearn::HyperOptimize::declareOptions(OptionList &ol) [static, protected]

Declares this class' options.

Reimplemented from PLearn::HyperCommand.

Definition at line 129 of file HyperOptimize.cc.

References auto_save, auto_save_diff_time, auto_save_test, best_learner, best_objective, best_results, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::HyperCommand::declareOptions(), PLearn::OptionBase::learntoption, min_n_trials, PLearn::OptionBase::nosave, option_vals, oracle, provide_sub_expdir, provide_tester_expdir, rerun_after_sub, resultsmat, save_best_learner, splitter, sub_strategy, trialnum, and which_cost.

    {
        declareOption(
            ol, "which_cost", &HyperOptimize::which_cost, OptionBase::buildoption,
            "An index or a name in the tester's statnames to be used as the"
            " objective cost to minimize. If the index <0, we will take the last"
            " learner as the best.");

        declareOption(
            ol, "min_n_trials", &HyperOptimize::min_n_trials, OptionBase::buildoption,
            "Minimum number of trials before saving the best model");

        declareOption(
            ol, "oracle", &HyperOptimize::oracle, OptionBase::buildoption,
            "Oracle to interrogate to get hyper-parameter values to try.");

        declareOption(
            ol, "provide_tester_expdir", &HyperOptimize::provide_tester_expdir, OptionBase::buildoption,
            "Should the tester be provided with an expdir for each option combination to test");

        declareOption(
            ol, "sub_strategy", &HyperOptimize::sub_strategy, OptionBase::buildoption,
            "Optional sub-strategy to optimize other hyper-params (for each combination given by the oracle)");

        declareOption(
            ol, "rerun_after_sub", &HyperOptimize::rerun_after_sub, OptionBase::buildoption,
            "If this is true, a new evaluation will be performed after executing the sub-strategy, \n"
            "using this HyperOptimize's splitter and which_cost. \n"
            "This is useful if the sub_strategy optimizes a different cost, or uses different splitting.\n");

        declareOption(
            ol, "provide_sub_expdir", &HyperOptimize::provide_sub_expdir, OptionBase::buildoption,
            "Should sub_strategy commands be provided an expdir");

        declareOption(
            ol, "save_best_learner", &HyperOptimize::save_best_learner,
            OptionBase::buildoption,
            "If true, the best learner at any step will be saved in the\n"
            "strategy expdir, as 'current_best_learner.psave'.");

        declareOption(
            ol, "splitter", &HyperOptimize::splitter, OptionBase::buildoption,
            "If not specified, we'll use the default splitter specified in the hyper-learner's tester option");

        declareOption(
            ol, "auto_save", &HyperOptimize::auto_save, OptionBase::buildoption,
            "Save the hlearner and reload it if necessary.\n"
            "0 means never, 1 means always, and >0 means save iff trialnum%auto_save == 0.\n"
            "In the last case, it also saves after the last trial.\n"
            "See auto_save_diff_time, as both conditions must be true to save.\n");

        declareOption(
            ol, "auto_save_diff_time", &HyperOptimize::auto_save_diff_time,
            OptionBase::buildoption,
            "HyperOptimize::auto_save_diff_time is the minimum amount of time\n"
            "(in seconds) before the first save point, then between two\n"
            "consecutive save points.");

        declareOption(
            ol, "auto_save_test", &HyperOptimize::auto_save_test, OptionBase::buildoption,
            "Exit after each auto_save. This is useful to test auto_save.\n"
            "0 means never, 1 means always, and >0 means exit iff trialnum%auto_save_test == 0");

        declareOption(
            ol, "resultsmat", &HyperOptimize::resultsmat,
            OptionBase::learntoption | OptionBase::nosave,
            "Gives access to the results of all trials during the last training.\n"
            "The last row lists the best results found and kept. Note that this\n"
            "is declared 'nosave' and is intended for programmatic access by other\n"
            "functions through the getOption() mechanism. If an expdir is declared\n"
            "this matrix is available under the name 'results.pmat' in the expdir.");

        declareOption(ol, "best_objective", &HyperOptimize::best_objective,
                      OptionBase::learntoption,
                      "The best objective seen so far.");

        declareOption(ol, "best_results", &HyperOptimize::best_results,
                      OptionBase::learntoption,
                      "The best results seen so far." );

        declareOption(ol, "best_learner", &HyperOptimize::best_learner,
                      OptionBase::learntoption,
                      "A copy of the best learner seen so far." );

        declareOption(ol, "trialnum", &HyperOptimize::trialnum,
                      OptionBase::learntoption, "The number of trials done." );

        declareOption(ol, "option_vals", &HyperOptimize::option_vals,
                      OptionBase::learntoption, "The option values to try." );

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }
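declareOption() registers each data member together with flags and a help string. A minimal sketch of such a registry, assuming a simplified design that is not PLearn's actual implementation: `OptionEntry`, `OptionTable`, the numeric flag values and `ToyOptimize` are hypothetical, and values are parsed from text with `operator>>` through a pointer to data member.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <sstream>
#include <string>

// Hypothetical flags modelled on OptionBase::buildoption / learntoption /
// nosave; the numeric values are an assumption.
enum OptionFlags { buildoption = 1, learntoption = 2, nosave = 4 };

template <class T>
struct OptionEntry {
    int flags;
    std::string help;
    std::function<void(T&, const std::string&)> setFromString;
};

template <class T>
using OptionTable = std::map<std::string, OptionEntry<T>>;

// Registers the data member `member` under `name`; setting it later parses
// the text with operator>> into that member of a given object.
template <class T, class M>
void declareOption(OptionTable<T>& ol, const std::string& name, M T::*member,
                   int flags, const std::string& help) {
    ol[name] = OptionEntry<T>{flags, help,
        [member](T& obj, const std::string& text) {
            std::istringstream in(text);
            in >> (obj.*member);
        }};
}

// Hypothetical class with a couple of HyperOptimize-like fields.
struct ToyOptimize {
    int min_n_trials = 0;
    double best_objective = 0.0;
};
```

Storing a setter closure per name lets generic code (a script loader, for instance) assign any registered field from text, while the flags record whether an option is a build option or a learned, non-saved one.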

static const PPath& PLearn::HyperOptimize::declaringFile() [inline, static]

HyperOptimize * PLearn::HyperOptimize::deepCopy(CopiesMap &copies) const [virtual]

Reimplemented from PLearn::HyperCommand.

Definition at line 107 of file HyperOptimize.cc.

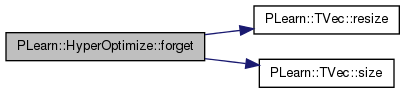

void PLearn::HyperOptimize::forget() [virtual]

Resets the command's internal state as if freshly constructed (the HyperCommand default does nothing).

Reimplemented from PLearn::HyperCommand.

Definition at line 346 of file HyperOptimize.cc.

References best_learner, best_objective, best_results, i, n, option_vals, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_strategy, and trialnum.

    {
        trialnum = 0;
        option_vals.resize(0);
        best_objective = REAL_MAX;
        best_results = Vec();
        best_learner = 0;
        for (int i=0, n=sub_strategy.size() ; i<n ; ++i)
            sub_strategy[i]->forget();
    }
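forget() resets the learned state and then forwards the call to every sub-strategy command. The same recursive reset can be sketched with standard containers, assuming `real` is `double` and that REAL_MAX corresponds to `std::numeric_limits<double>::max()`; `Cmd` and `ToyHyperOptimize` are hypothetical names, and the best_learner reset is omitted for brevity.

```cpp
#include <cassert>
#include <limits>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for HyperCommand's interface.
struct Cmd {
    virtual ~Cmd() = default;
    virtual void forget() = 0;
};

// Hypothetical stand-in holding HyperOptimize-like learned state.
struct ToyHyperOptimize : Cmd {
    int trialnum = 0;
    std::vector<std::string> option_vals;
    double best_objective = std::numeric_limits<double>::max();
    std::vector<double> best_results;
    std::vector<std::shared_ptr<Cmd>> sub_strategy;

    void forget() override {
        trialnum = 0;
        option_vals.clear();
        best_objective = std::numeric_limits<double>::max();
        best_results.clear();
        for (auto& sub : sub_strategy)
            sub->forget(); // nested commands are reset as well
    }
};
```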

OptionList & PLearn::HyperOptimize::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 107 of file HyperOptimize.cc.

OptionMap & PLearn::HyperOptimize::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 107 of file HyperOptimize.cc.

RemoteMethodMap & PLearn::HyperOptimize::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 107 of file HyperOptimize.cc.

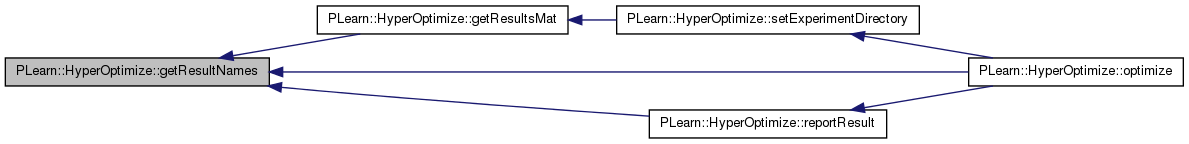

TVec< string > PLearn::HyperOptimize::getResultNames() const [virtual]

Returns the names of the results returned by the optimize() method.

Implements PLearn::HyperCommand.

Definition at line 341 of file HyperOptimize.cc.

References PLearn::HyperCommand::hlearner, and PLearn::HyperLearner::tester.

Referenced by getResultsMat(), optimize(), and reportResult().

    {
        return hlearner->tester->getStatNames();
    }

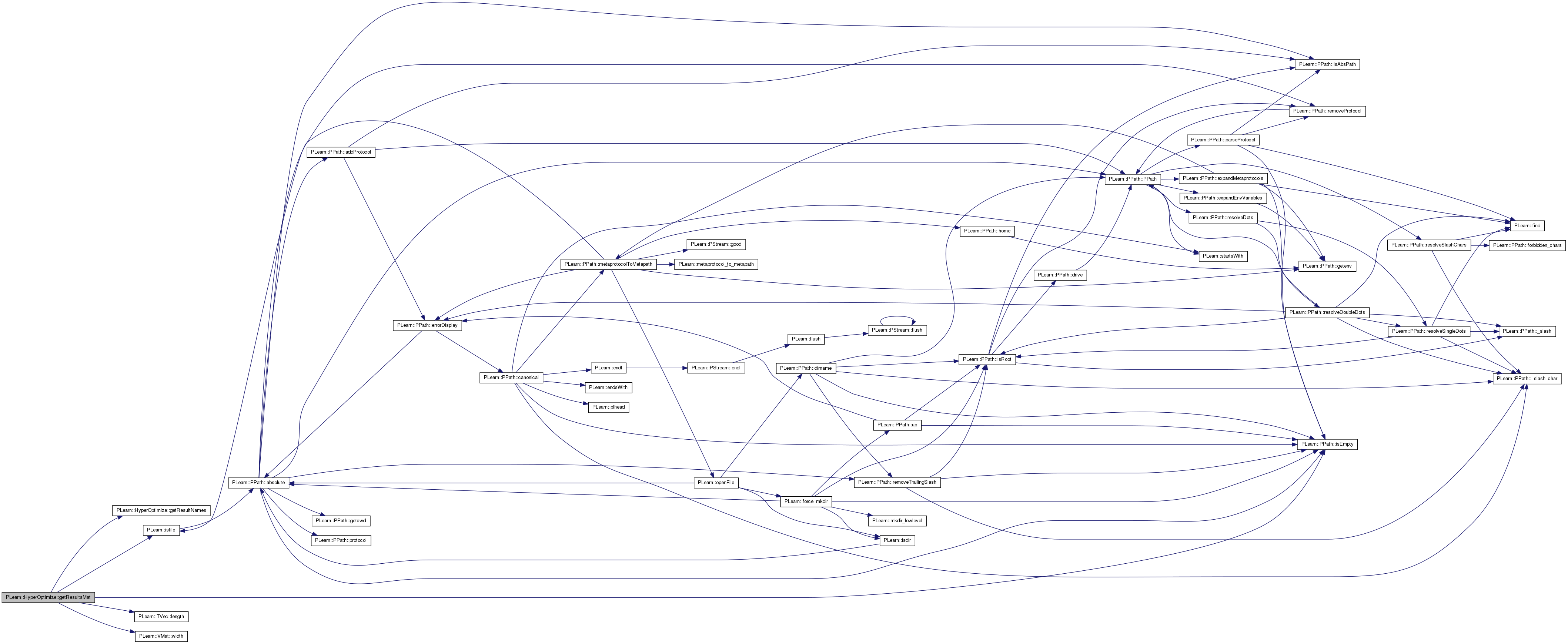

void PLearn::HyperOptimize::getResultsMat() [protected]

Definition at line 246 of file HyperOptimize.cc.

References PLearn::HyperCommand::expdir, getResultNames(), PLearn::HyperCommand::hlearner, PLearn::PPath::isEmpty(), PLearn::isfile(), j, PLearn::TVec< T >::length(), PLearn::HyperLearner::option_fields, PLERROR, resultsmat, w, and PLearn::VMat::width().

Referenced by setExperimentDirectory().

    {
        TVec<string> cost_fields = getResultNames();
        TVec<string> option_fields = hlearner->option_fields;
        int w = 2 + option_fields.length() + cost_fields.length();

        // If we have an expdir, create a FileVMatrix to save the results.
        // Otherwise, just a MemoryVMatrix to make the results available as a
        // getOption after training.
        if (! expdir.isEmpty())
        {
            string fname = expdir+"results.pmat";
            if(isfile(fname)){
                // We reload the old version if it exists.
                resultsmat = new FileVMatrix(fname, true);
                if(resultsmat.width()!=w)
                    PLERROR("In HyperOptimize::getResultsMat() - The existing "
                            "results mat (%s) that we should reload does not have the "
                            "width that we need. Did you add some statnames?",
                            fname.c_str());
                return;
            }else
                resultsmat = new FileVMatrix(fname,0,w);
        }
        else
            resultsmat = new MemoryVMatrix(0,w);

        int j=0;
        resultsmat->declareField(j++, "_trial_");
        resultsmat->declareField(j++, "_objective_");
        for(int k=0; k<option_fields.length(); k++)
            resultsmat->declareField(j++, option_fields[k]);
        for(int k=0; k<cost_fields.length(); k++)
            resultsmat->declareField(j++, cost_fields[k]);

        if (! expdir.isEmpty())
            resultsmat->saveFieldInfos();
    }
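The column layout created by getResultsMat() (two bookkeeping columns, then one column per option field, then one per cost field) and the width check applied when an existing results.pmat is reloaded can be sketched with standard containers. `resultsFieldNames` and `widthMatches` are hypothetical helper names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Builds the column names in the same order as getResultsMat():
// _trial_, _objective_, then one column per option, then one per cost.
std::vector<std::string> resultsFieldNames(const std::vector<std::string>& option_fields,
                                           const std::vector<std::string>& cost_fields) {
    std::vector<std::string> names;
    names.reserve(2 + option_fields.size() + cost_fields.size());
    names.push_back("_trial_");
    names.push_back("_objective_");
    names.insert(names.end(), option_fields.begin(), option_fields.end());
    names.insert(names.end(), cost_fields.begin(), cost_fields.end());
    return names;
}

// Mirrors the check on a reloaded matrix: its width must equal
// 2 + number of option fields + number of cost fields.
bool widthMatches(std::size_t existing_width,
                  const std::vector<std::string>& option_fields,
                  const std::vector<std::string>& cost_fields) {
    return existing_width == 2 + option_fields.size() + cost_fields.size();
}
```

This is why adding a statname to the tester between runs makes a reload fail: the stored matrix is one column narrower than the layout now expected.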

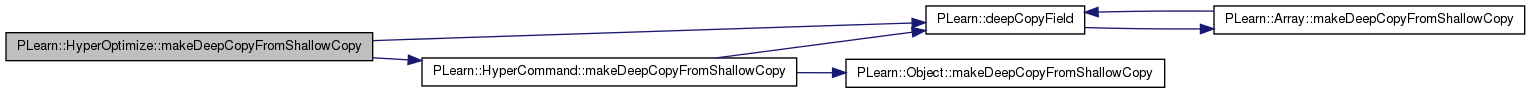

void PLearn::HyperOptimize::makeDeepCopyFromShallowCopy(CopiesMap &copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::HyperCommand.

Definition at line 620 of file HyperOptimize.cc.

References auto_save_timer, best_learner, best_results, PLearn::deepCopyField(), PLearn::HyperCommand::makeDeepCopyFromShallowCopy(), option_vals, oracle, resultsmat, splitter, and sub_strategy.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(resultsmat, copies);
        deepCopyField(best_results, copies);
        deepCopyField(best_learner, copies);
        deepCopyField(option_vals, copies);
        deepCopyField(auto_save_timer, copies);
        deepCopyField(oracle, copies);
        deepCopyField(sub_strategy, copies);
        deepCopyField(splitter, copies);
    }
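makeDeepCopyFromShallowCopy() relies on the CopiesMap so that an object referenced twice in the original is copied only once. The sketch below shows that memoization with `std::shared_ptr` on a hypothetical `Node` type; it illustrates the general technique, not PLearn's actual deepCopy machinery.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <vector>

struct Node;
// Maps each original object to its copy (hypothetical analogue of CopiesMap).
using CopiesMap = std::map<const Node*, std::shared_ptr<Node>>;

// Hypothetical object whose children may be shared between several owners.
struct Node {
    int value = 0;
    std::vector<std::shared_ptr<Node>> children;

    // Returns the existing copy if this object was already copied; otherwise
    // makes a shallow copy, records it, then deepens it.
    std::shared_ptr<Node> deepCopy(CopiesMap& copies) const {
        auto found = copies.find(this);
        if (found != copies.end())
            return found->second;
        auto copy = std::make_shared<Node>(*this); // shallow: shares children
        copies[this] = copy;                       // record before recursing
        copy->makeDeepCopyFromShallowCopy(copies);
        return copy;
    }

    // Replaces each pointer field that still refers to an original object
    // by that object's deep copy.
    void makeDeepCopyFromShallowCopy(CopiesMap& copies) {
        for (auto& child : children)
            if (child)
                child = child->deepCopy(copies);
    }
};
```

Because the copy is recorded before its fields are deepened, two fields that pointed to the same original end up pointing to the same copy, preserving the sharing structure of the original object graph.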

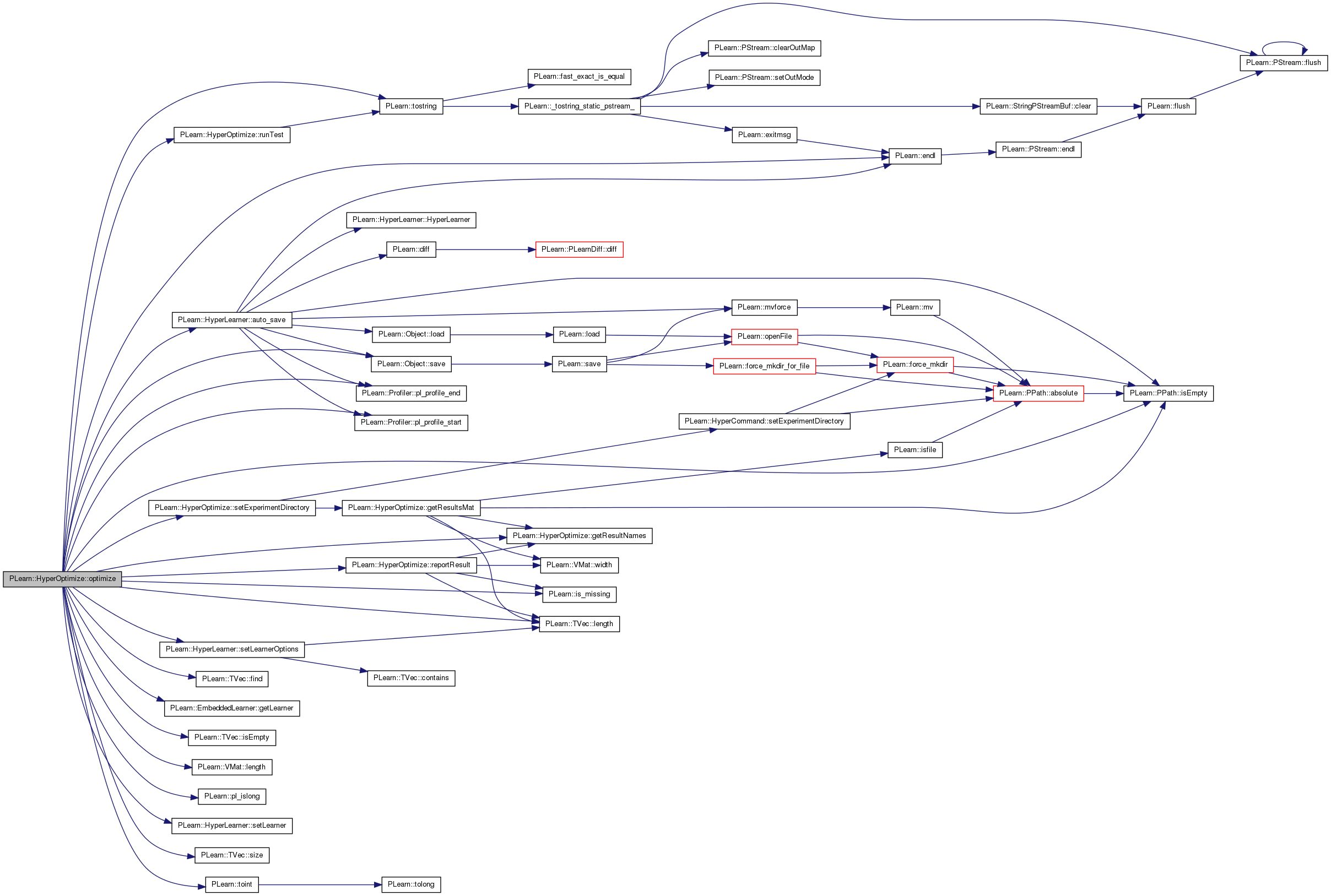

Vec PLearn::HyperOptimize::optimize() [virtual]

Executes the command, returning the resulting cost vector of its optimization (or an empty Vec if it did not do any testing).

Implements PLearn::HyperCommand.

Definition at line 358 of file HyperOptimize.cc.

References PLearn::HyperLearner::auto_save(), auto_save, auto_save_diff_time, auto_save_test, auto_save_timer, best_learner, best_objective, best_results, PLearn::endl(), PLearn::HyperCommand::expdir, PLearn::TVec< T >::find(), PLearn::EmbeddedLearner::getLearner(), getResultNames(), PLearn::HyperCommand::hlearner, i, PLearn::is_missing(), PLearn::TVec< T >::isEmpty(), PLearn::PPath::isEmpty(), PLearn::TVec< T >::length(), PLearn::VMat::length(), min_n_trials, MISSING_VALUE, n, option_vals, oracle, PLearn::perr, PLearn::pl_islong(), PLearn::Profiler::pl_profile_end(), PLearn::Profiler::pl_profile_start(), PLERROR, PLWARNING, provide_sub_expdir, reportResult(), rerun_after_sub, resultsmat, runTest(), PLearn::Object::save(), save_best_learner, setExperimentDirectory(), PLearn::HyperLearner::setLearner(), PLearn::HyperLearner::setLearnerOptions(), PLearn::TVec< T >::size(), sub_strategy, PLearn::toint(), PLearn::tostring(), trialnum, PLearn::HyperCommand::verbosity, which_cost, and which_cost_pos.

    {
        // When auto_save is true, this function can be called even if the
        // optimization is already finished. We must not redo it in that case.
        if(trialnum>0&&!option_vals&&resultsmat.length()==trialnum+1){
            hlearner->setLearner(best_learner);
            if (!best_results.isEmpty() && resultsmat->get(resultsmat.length()-1,0)!=-1)
                reportResult(-1,best_results);
            return best_results;
        }

        TVec<string> option_names;
        option_names = oracle->getOptionNames();

        if(trialnum==0){
            if(option_vals.size()==0)
                option_vals = oracle->generateFirstTrial();
            if (option_vals.size() != option_names.size())
                PLERROR("HyperOptimize::optimize: the number (%d) of option values (%s) "
                        "does not match the number (%d) of option names (%s) ",
                        option_vals.size(), tostring(option_vals).c_str(),
                        option_names.size(), tostring(option_names).c_str());
        }

        which_cost_pos= getResultNames().find(which_cost);
        if(which_cost_pos < 0){
            if(!pl_islong(which_cost))
                PLERROR("In HyperOptimize::optimize() - option 'which_cost' with "
                        "value '%s' is not a number and is not a valid result test name",
                        which_cost.c_str());
            which_cost_pos= toint(which_cost);
        }

        Vec results;
        while(option_vals)
        {
            auto_save_timer->startTimer("auto_save");

            if(verbosity>0) {
                // Print current option-value pairs in slightly comprehensible form
                string kv;
                for (int i=0, n=option_names.size() ; i<n ; ++i) {
                    kv += option_names[i] + '=' + option_vals[i];
                    if (i < n-1)
                        kv += ", ";
                }
                perr << "In HyperOptimize::optimize() - We optimize with "
                        "parameters " << kv << "\n";
            }

            // This will also call build and forget on the learner unless unnecessary
            // because the modified options don't require it.
            hlearner->setLearnerOptions(option_names, option_vals);

            if(sub_strategy)
            {
                Vec best_sub_results;
                for(int commandnum=0; commandnum<sub_strategy.length(); commandnum++)
                {
                    sub_strategy[commandnum]->setHyperLearner(hlearner);
                    sub_strategy[commandnum]->forget();
                    if(!expdir.isEmpty() && provide_sub_expdir)
                        sub_strategy[commandnum]->setExperimentDirectory(
                            expdir / ("Trials"+tostring(trialnum)) / ("Step"+tostring(commandnum))
                            );
                    best_sub_results = sub_strategy[commandnum]->optimize();
                }
                if(rerun_after_sub)
                    results = runTest(trialnum);
                else
                    results = best_sub_results;
            }
            else
                results = runTest(trialnum);

            reportResult(trialnum,results);

            real objective = MISSING_VALUE;
            if (which_cost_pos>=0)
                objective = results[which_cost_pos];
            else
            { // The best is always the last one
                best_objective = objective;
                best_results = results;
                best_learner = hlearner->getLearner();
            }

            option_vals = oracle->generateNextTrial(option_vals,objective);

            ++trialnum;

            if(!is_missing(objective) &&
               (objective < best_objective || best_results.length()==0) && (trialnum>=min_n_trials || !option_vals))
            {
                best_objective = objective;
                best_results = results;
                CopiesMap copies;
                best_learner = NULL;
                Profiler::pl_profile_start("HyperOptimizer::optimize::deepCopy");
                best_learner = hlearner->getLearner()->deepCopy(copies);
                Profiler::pl_profile_end("HyperOptimizer::optimize::deepCopy");
                if (save_best_learner && !expdir.isEmpty()) {
                    PLearn::save(expdir / "current_best_learner.psave",
                                 best_learner);
                }
            }

            if(verbosity>1) {
                perr << "In HyperOptimize::optimize() - cost=" << which_cost
                     << " nb of trials="<<trialnum
                     << " Current value=" << objective << " Best value= "
                     << best_objective << endl;
            }

            auto_save_timer->stopTimer("auto_save");

            if (auto_save > 0 &&
                (trialnum % auto_save == 0 || option_vals.isEmpty()))
            {
                int s = int(auto_save_timer->getTimer("auto_save"));
                if(s > auto_save_diff_time || option_vals.isEmpty()) {
                    hlearner->auto_save();
                    auto_save_timer->resetTimer("auto_save");
                    if(auto_save_test>0 && trialnum%auto_save_test==0)
                        PLERROR("In HyperOptimize::optimize() - auto_save_test is true,"
                                " exiting");
                }
            }
        }

        // Detect the case where no trials at all were performed!
        if (trialnum == 0)
            PLWARNING("In HyperOptimize::optimize - No trials at all were completed;\n"
                      "perhaps the oracle settings are wrong?");

        // Revert to best_learner if one was found.
        hlearner->setLearner(best_learner);

        if (best_results.isEmpty())
            // This could happen for instance if all results are NaN.
            PLWARNING("In HyperOptimize::optimize - Could not find a best result,"
                      " something must be wrong");
        else
            // Report the best result again, if not empty.
            reportResult(-1,best_results);

        return best_results;
    }
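The two decisions taken at the end of each iteration of the loop above (whether the new result replaces the best one, and whether to auto-save) can be isolated as pure predicates. This is a sketch, not PLearn code: the function names are hypothetical, a missing objective is modelled as NaN (an assumption about MISSING_VALUE), `trialnum` is the already-incremented count of completed trials, and `last_trial` stands for the oracle having returned no further setting.

```cpp
#include <cassert>
#include <cmath>

// Mirrors the best-result test in optimize(): the objective must not be
// missing, must improve on the best so far (or no best exists yet), and
// either enough trials have run or this was the last one.
bool shouldKeepAsBest(double objective, double best_objective, bool have_best,
                      int trialnum, int min_n_trials, bool last_trial) {
    return !std::isnan(objective) &&
           (objective < best_objective || !have_best) &&
           (trialnum >= min_n_trials || last_trial);
}

// Mirrors the auto-save test: only when auto_save > 0, on every
// auto_save-th trial or after the last one, and only if more than
// auto_save_diff_time seconds have accumulated since the last save
// (the time condition is waived after the last trial).
bool shouldAutoSave(int auto_save, int trialnum, bool last_trial,
                    int elapsed_seconds, int auto_save_diff_time) {
    if (auto_save <= 0)
        return false;
    if (trialnum % auto_save != 0 && !last_trial)
        return false;
    return elapsed_seconds > auto_save_diff_time || last_trial;
}
```

For instance, with the default auto_save_diff_time of 3*60*60 seconds and auto_save = 2, a save after trial 4 only happens once more than three hours of trial time have accumulated, whereas the final trial always triggers a save.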

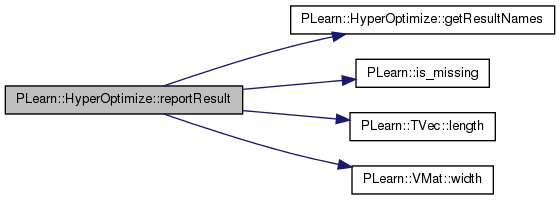

Definition at line 285 of file HyperOptimize.cc.

References PLearn::HyperCommand::expdir, getResultNames(), PLearn::HyperCommand::hlearner, PLearn::is_missing(), j, PLearn::EmbeddedLearner::learner_, PLearn::TVec< T >::length(), PLearn::HyperLearner::option_fields, PLERROR, resultsmat, trialnum, which_cost_pos, and PLearn::VMat::width().

Referenced by optimize().

    {
        if(expdir!="")
        {
            TVec<string> cost_fields = getResultNames();
            TVec<string> option_fields = hlearner->option_fields;

            if(results.length() != cost_fields.length())
                PLERROR("In HyperOptimize::reportResult - Length of results vector (%d) "
                        "differs from number of cost fields (%d)",
                        results.length(), cost_fields.length());

            // ex: _trial_ _objective_ nepochs nhidden ... train_error
            Vec newres(resultsmat.width());
            int j=0;
            newres[j++] = trialnum;
            newres[j++] = which_cost_pos;
            for(int k=0; k<option_fields.length(); k++)
            {
                string optstr = hlearner->learner_->getOption(option_fields[k]);
                real optreal = toreal(optstr);
                if(is_missing(optreal)) // it's not directly a real: get a mapping for it
                    optreal = resultsmat->addStringMapping(k, optstr);
                newres[j++] = optreal;
            }
            for(int k=0; k<cost_fields.length(); k++)
                newres[j++] = results[k];

            resultsmat->appendRow(newres);
            resultsmat->flush();
        }
    }
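reportResult() turns each option value into a real number, falling back on a string mapping when the value is not numeric (e.g. a transfer-function name). A minimal sketch of that row assembly, with hypothetical names throughout: `StringMapper` assigns codes -1, -2, ... in order of first appearance, which is an assumption for illustration and not necessarily what VMatrix::addStringMapping() returns, and `second_column` stands for whatever the second bookkeeping column holds (the listing above stores which_cost_pos there).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <map>
#include <string>
#include <vector>

// Hypothetical per-column mapping from non-numeric option values to codes.
class StringMapper {
public:
    double code(const std::string& s) {
        auto it = codes_.find(s);
        if (it != codes_.end())
            return it->second;
        double c = -1.0 - static_cast<double>(codes_.size());
        codes_[s] = c;
        return c;
    }
private:
    std::map<std::string, double> codes_;
};

// True (and sets `out`) only if the whole string is a number.
bool parseReal(const std::string& text, double& out) {
    if (text.empty())
        return false;
    char* end = nullptr;
    out = std::strtod(text.c_str(), &end);
    return *end == '\0';
}

// Assembles one row: trial number, the second bookkeeping column, one value
// per option (mapped to a code when not numeric), then the cost values.
std::vector<double> buildRow(int trialnum, double second_column,
                             const std::vector<std::string>& option_values,
                             const std::vector<double>& results,
                             std::vector<StringMapper>& mappers) {
    std::vector<double> row;
    row.push_back(trialnum);
    row.push_back(second_column);
    for (std::size_t k = 0; k < option_values.size(); ++k) {
        double v = 0.0;
        if (!parseReal(option_values[k], v))
            v = mappers[k].code(option_values[k]);
        row.push_back(v);
    }
    row.insert(row.end(), results.begin(), results.end());
    return row;
}
```

Keeping one mapper per option column means the same string always maps to the same code within a column, so rows stay comparable across trials.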

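Vec PLearn::HyperOptimize::runTest(int trialnum) [protected]

Runs the HyperLearner's tester on the current learner, temporarily substituting this command's splitter if one is set, then restores the tester's default splitter.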

Definition at line 321 of file HyperOptimize.cc.

References PLearn::HyperCommand::expdir, PLearn::HyperCommand::hlearner, provide_tester_expdir, splitter, PLearn::HyperLearner::tester, and PLearn::tostring().

Referenced by optimize().

    {
        PP<PTester> tester = hlearner->tester;
        string testerexpdir = "";
        if(expdir!="" && provide_tester_expdir)
            testerexpdir = expdir / ("Trials"+tostring(trialnum)) / "";
        tester->setExperimentDirectory(testerexpdir);

        PP<Splitter> default_splitter = tester->splitter;
        if(splitter) // set our own splitter
            tester->splitter = splitter;

        Vec results = tester->perform(false);

        tester->splitter = default_splitter; // restore default splitter
        return results;
    }
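runTest() swaps in its own splitter, runs the tester, and restores the default splitter by hand afterwards. One way to express the same save/override/restore step in modern C++ is a scope guard, an RAII technique swapped in here (it is not what the source does) that also restores the splitter if the test throws. `Splitter`, `Tester`, `SplitterOverride` and this `runTest` are hypothetical stand-ins.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical stand-ins for Splitter and PTester.
struct Splitter { std::string name; };

struct Tester {
    std::shared_ptr<Splitter> splitter;
    std::string last_used;   // which splitter the last perform() saw
    bool fail = false;       // lets the sketch simulate a failing test
    void perform() {
        last_used = splitter ? splitter->name : "none";
        if (fail)
            throw std::runtime_error("test failed");
    }
};

// Installs `replacement` (if non-null) and restores the previous splitter
// when the guard goes out of scope, including during stack unwinding.
class SplitterOverride {
public:
    SplitterOverride(Tester& tester, std::shared_ptr<Splitter> replacement)
        : tester_(tester), saved_(tester.splitter) {
        if (replacement)
            tester_.splitter = std::move(replacement);
    }
    ~SplitterOverride() { tester_.splitter = saved_; }
    SplitterOverride(const SplitterOverride&) = delete;
    SplitterOverride& operator=(const SplitterOverride&) = delete;
private:
    Tester& tester_;
    std::shared_ptr<Splitter> saved_;
};

void runTest(Tester& tester, std::shared_ptr<Splitter> own_splitter) {
    SplitterOverride guard(tester, std::move(own_splitter));
    tester.perform();
}
```

With the guard, the tester shared through the HyperLearner is never left holding this command's splitter, even when a trial aborts with an exception.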

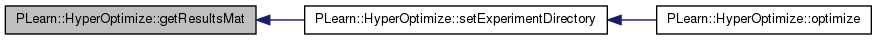

void PLearn::HyperOptimize::setExperimentDirectory(const PPath &the_expdir) [virtual]

Sets the expdir and calls getResultsMat().

Reimplemented from PLearn::HyperCommand.

Definition at line 240 of file HyperOptimize.cc.

References getResultsMat(), and PLearn::HyperCommand::setExperimentDirectory().

Referenced by optimize().

    {
        inherited::setExperimentDirectory(the_expdir);
        getResultsMat();
    }

StaticInitializer PLearn::HyperOptimize::_static_initializer_ [static]

Reimplemented from PLearn::HyperCommand.

Definition at line 122 of file HyperOptimize.h.

int PLearn::HyperOptimize::auto_save

Definition at line 137 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

int PLearn::HyperOptimize::auto_save_diff_time

Definition at line 139 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

int PLearn::HyperOptimize::auto_save_test

Definition at line 138 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

PP<PTimer> PLearn::HyperOptimize::auto_save_timer [protected]

Definition at line 118 of file HyperOptimize.h.

Referenced by makeDeepCopyFromShallowCopy(), and optimize().

PP<PLearner> PLearn::HyperOptimize::best_learner [protected]

Definition at line 115 of file HyperOptimize.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and optimize().

real PLearn::HyperOptimize::best_objective [protected]

Definition at line 113 of file HyperOptimize.h.

Referenced by declareOptions(), forget(), and optimize().

Vec PLearn::HyperOptimize::best_results [protected]

Definition at line 114 of file HyperOptimize.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and optimize().

int PLearn::HyperOptimize::min_n_trials

Definition at line 130 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

TVec<string> PLearn::HyperOptimize::option_vals [protected]

Definition at line 117 of file HyperOptimize.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and optimize().

PP<OptionsOracle> PLearn::HyperOptimize::oracle

Definition at line 131 of file HyperOptimize.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and optimize().

bool PLearn::HyperOptimize::provide_sub_expdir

Definition at line 135 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

bool PLearn::HyperOptimize::provide_tester_expdir

Definition at line 132 of file HyperOptimize.h.

Referenced by declareOptions(), and runTest().

bool PLearn::HyperOptimize::rerun_after_sub

Definition at line 134 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

VMat PLearn::HyperOptimize::resultsmat [protected]

Store the results computed for each trial.

Definition at line 112 of file HyperOptimize.h.

Referenced by declareOptions(), getResultsMat(), makeDeepCopyFromShallowCopy(), optimize(), and reportResult().

bool PLearn::HyperOptimize::save_best_learner

Definition at line 136 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

PP<Splitter> PLearn::HyperOptimize::splitter

Definition at line 140 of file HyperOptimize.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and runTest().

TVec<PP<HyperCommand> > PLearn::HyperOptimize::sub_strategy

A possible sub-strategy to optimize other hyper-parameters.

Definition at line 133 of file HyperOptimize.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and optimize().

int PLearn::HyperOptimize::trialnum [protected]

Definition at line 116 of file HyperOptimize.h.

Referenced by declareOptions(), forget(), optimize(), and reportResult().

string PLearn::HyperOptimize::which_cost

Definition at line 129 of file HyperOptimize.h.

Referenced by declareOptions(), and optimize().

int PLearn::HyperOptimize::which_cost_pos

Definition at line 122 of file HyperOptimize.h.

Referenced by optimize(), and reportResult().

1.7.4
