
PLearn 0.1


#include <TangentLearner.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
|  | TangentLearner () | Default constructor. |
| virtual void | build () | Simply calls inherited::build() then build_(). |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | Transforms a shallow copy into a deep copy. |
| virtual string | classname () const |  |
| virtual OptionList & | getOptionList () const |  |
| virtual OptionMap & | getOptionMap () const |  |
| virtual RemoteMethodMap & | getRemoteMethodMap () const |  |
| virtual TangentLearner * | deepCopy (CopiesMap &copies) const |  |
| virtual int | outputsize () const | Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and the options that are set). |
| virtual void | forget () | (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). |
| virtual void | initializeParams () |  |
| virtual void | train () | The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. |
| virtual void | computeOutput (const Vec &input, Vec &output) const | Computes the output from the input. |
| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const | Computes the costs from an already computed output. |
| virtual TVec< string > | getTestCostNames () const | Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). |
| virtual TVec< string > | getTrainCostNames () const | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |

Static Public Member Functions

| Type | Member |
|---|---|
| static string | _classname_ () |
| static OptionList & | _getOptionList_ () |
| static RemoteMethodMap & | _getRemoteMethodMap_ () |
| static Object * | _new_instance_for_typemap_ () |
| static bool | _isa_ (const Object *o) |
| static void | _static_initialize_ () |
| static const PPath & | declaringFile () |

Public Attributes

| Type | Member |
|---|---|
| string | training_targets |
| bool | use_subspace_distance |
| bool | normalize_by_neighbor_distance |
| bool | ordered_vectors |
| real | smart_initialization |
| real | initialization_regularization |
| int | n_neighbors |
| int | n_dim |
| PP< Optimizer > | optimizer |
| Var | embedding |
| Func | output_f |
| Func | tangent_predictor |
| Func | projection_error_f |
| string | architecture_type |
| string | output_type |
| int | n_hidden_units |
| int | batch_size |
| real | norm_penalization |
| real | svd_threshold |
| real | projection_error_regularization |
| real | V_slack |

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares this class' options. |

Protected Attributes

| Type | Member |
|---|---|
| Func | cost_of_one_example |
| Var | b |
| Var | W |
| Var | c |
| Var | V |
| Var | tangent_targets |
| VarArray | parameters |

Private Types

| Type | Member |
|---|---|
| typedef PLearner | inherited |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |

Definition at line 54 of file TangentLearner.h.

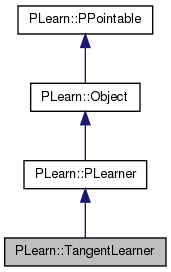

typedef PLearner PLearn::TangentLearner::inherited [private]

Reimplemented from PLearn::PLearner.

Definition at line 59 of file TangentLearner.h.

PLearn::TangentLearner::TangentLearner ( )

Default constructor.

Definition at line 97 of file TangentLearner.cc.

: training_targets("local_neighbors"), use_subspace_distance(false), normalize_by_neighbor_distance(true), ordered_vectors(false), smart_initialization(0),initialization_regularization(1e-3), n_neighbors(5), n_dim(1), architecture_type("single_neural_network"), output_type("tangent_plane"), n_hidden_units(-1), batch_size(1), norm_penalization(0), svd_threshold(1e-5), projection_error_regularization(0), V_slack(0) { }

string PLearn::TangentLearner::_classname_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 152 of file TangentLearner.cc.

OptionList & PLearn::TangentLearner::_getOptionList_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 152 of file TangentLearner.cc.

RemoteMethodMap & PLearn::TangentLearner::_getRemoteMethodMap_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 152 of file TangentLearner.cc.

bool PLearn::TangentLearner::_isa_ ( const Object * o ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 152 of file TangentLearner.cc.

Object * PLearn::TangentLearner::_new_instance_for_typemap_ ( ) [static]

Reimplemented from PLearn::Object.

Definition at line 152 of file TangentLearner.cc.

void PLearn::TangentLearner::_static_initialize_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 152 of file TangentLearner.cc.

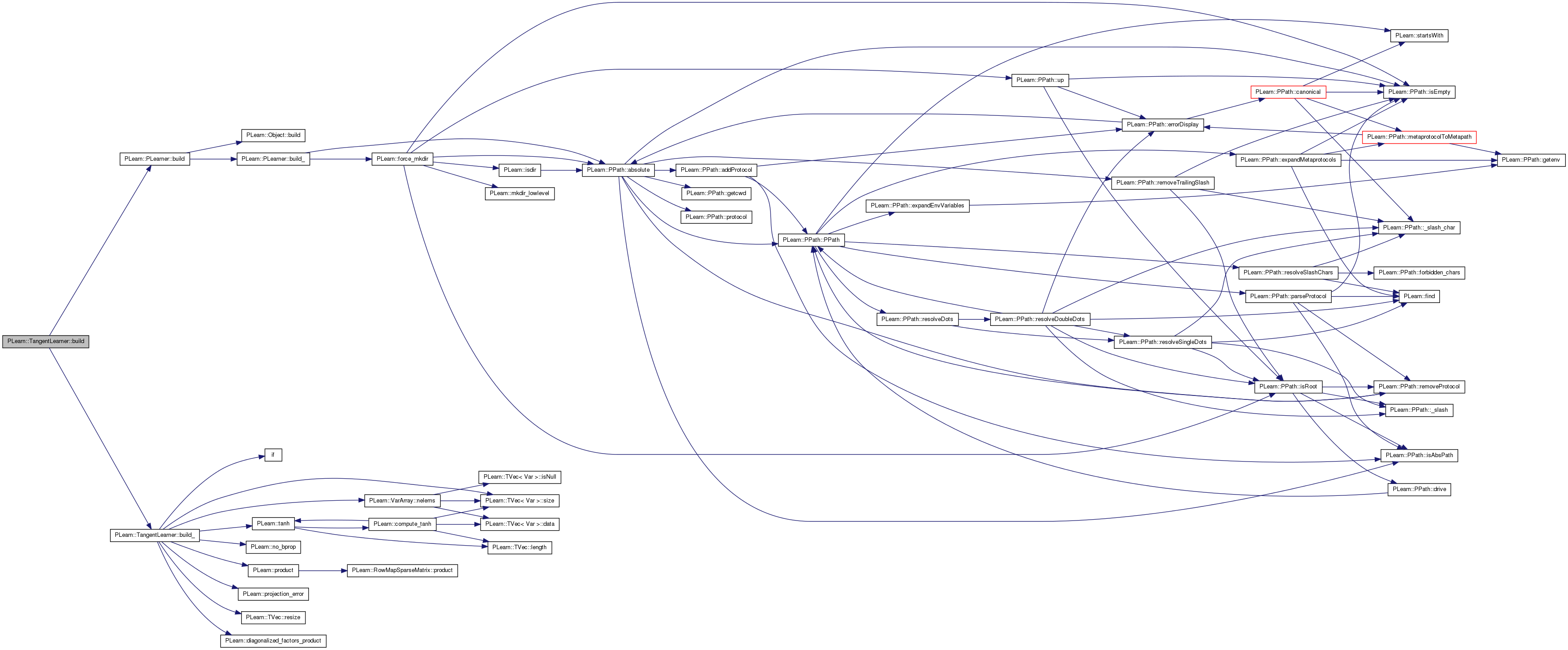

void PLearn::TangentLearner::build ( ) [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 375 of file TangentLearner.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

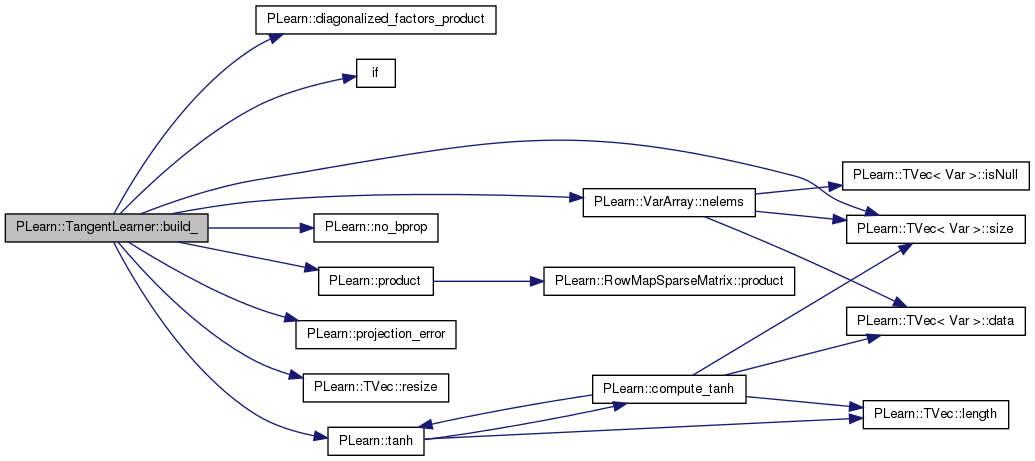

void PLearn::TangentLearner::build_ ( ) [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 260 of file TangentLearner.cc.

References architecture_type, b, cost_of_one_example, PLearn::diagonalized_factors_product(), embedding, PLearn::PLearner::inputsize_, n_dim, n_hidden_units, n_neighbors, PLearn::VarArray::nelems(), PLearn::no_bprop(), norm_penalization, normalize_by_neighbor_distance, ordered_vectors, output_f, output_type, parameters, PLERROR, PLearn::product(), PLearn::projection_error(), projection_error_f, projection_error_regularization, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), svd_threshold, tangent_predictor, tangent_targets, PLearn::tanh(), training_targets, use_subspace_distance, V, V_slack, and W.

Referenced by build().

{

int n = PLearner::inputsize_;

if (n>0)

{

if (architecture_type == "multi_neural_network")

{

if (n_hidden_units <= 0)

PLERROR("TangentLearner::Number of hidden units should be positive, now %d\n",n_hidden_units);

}

if (architecture_type == "single_neural_network")

{

if (n_hidden_units <= 0)

PLERROR("TangentLearner::Number of hidden units should be positive, now %d\n",n_hidden_units);

Var x(n);

b = Var(n_dim*n,1,"b");

W = Var(n_dim*n,n_hidden_units,"W");

c = Var(n_hidden_units,1,"c");

V = Var(n_hidden_units,n,"V");

tangent_predictor = Func(x, b & W & c & V, b + product(W,tanh(c + product(V,x))));

output_f = tangent_predictor;

}

else if (architecture_type == "linear")

{

Var x(n);

b = Var(n_dim*n,1,"b");

W = Var(n_dim*n,n,"W");

tangent_predictor = Func(x, b & W, b + product(W,x));

output_f = tangent_predictor;

}

else if (architecture_type == "embedding_neural_network")

{

if (n_hidden_units <= 0)

PLERROR("TangentLearner::Number of hidden units should be positive, now %d\n",n_hidden_units);

Var x(n);

W = Var(n_dim,n_hidden_units,"W");

c = Var(n_hidden_units,1,"c");

V = Var(n_hidden_units,n,"V");

b = Var(n_dim,n,"b");

Var a = tanh(c + product(V,x));

Var tangent_plane = diagonalized_factors_product(W,1-a*a,V);

tangent_predictor = Func(x, W & c & V, tangent_plane);

embedding = product(W,a);

if (output_type=="tangent_plane")

output_f = tangent_predictor;

else if (output_type=="embedding")

output_f = Func(x, embedding);

else if (output_type=="tangent_plane+embedding")

output_f = Func(x, tangent_plane & embedding);

}

else if (architecture_type == "slack_embedding_neural_network")

{

if (n_hidden_units <= 0)

PLERROR("TangentLearner::Number of hidden units should be positive, now %d\n",n_hidden_units);

Var x(n);

W = Var(n_dim,n_hidden_units,"W");

c = Var(n_hidden_units,1,"c");

V = Var(n_hidden_units,n,"V");

b = Var(n_dim,n,"b");

Var a = tanh(c + product(V,x));

Var tangent_plane = diagonalized_factors_product(W,1-a*a,no_bprop(V,V_slack));

tangent_predictor = Func(x, W & c & V, tangent_plane);

embedding = product(W,a);

if (output_type=="tangent_plane")

output_f = tangent_predictor;

else if (output_type=="embedding")

output_f = Func(x, embedding);

else if (output_type=="tangent_plane+embedding")

output_f = Func(x, tangent_plane & embedding);

}

else if (architecture_type == "embedding_quadratic")

{

Var x(n);

b = Var(n_dim,n,"b");

W = Var(n_dim*n,n,"W");

Var Wx = product(W,x);

Var tangent_plane = Wx + b;

tangent_predictor = Func(x, W & b, tangent_plane);

embedding = product(new PlusVariable(b,Wx),x);

if (output_type=="tangent_plane")

output_f = tangent_predictor;

else if (output_type=="embedding")

output_f = Func(x, embedding);

else if (output_type=="tangent_plane+embedding")

output_f = Func(x, tangent_plane & embedding);

}

else if (architecture_type != "")

PLERROR("TangentLearner::build, unknown architecture_type option %s (should be 'single_neural_network', 'linear', 'embedding_neural_network', 'slack_embedding_neural_network', 'embedding_quadratic', or empty string '')\n",

architecture_type.c_str());

if (parameters.size()>0 && parameters.nelems() == tangent_predictor->parameters.nelems())

tangent_predictor->parameters.copyValuesFrom(parameters);

parameters.resize(tangent_predictor->parameters.size());

for (int i=0;i<parameters.size();i++)

parameters[i] = tangent_predictor->parameters[i];

if (training_targets=="local_evectors")

tangent_targets = Var(n_dim,n);

else if (training_targets=="local_neighbors")

tangent_targets = Var(n_neighbors,n);

else PLERROR("TangentLearner::build, option training_targets is %s, should be 'local_evectors' or 'local_neighbors'.",

training_targets.c_str());

Var proj_err = projection_error(tangent_predictor->outputs[0], tangent_targets, norm_penalization, n,

normalize_by_neighbor_distance, use_subspace_distance, svd_threshold,

projection_error_regularization, ordered_vectors);

projection_error_f = Func(tangent_predictor->outputs[0] & tangent_targets, proj_err);

cost_of_one_example = Func(tangent_predictor->inputs & tangent_targets, tangent_predictor->parameters, proj_err);

}

}
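For the single_neural_network architecture, build_() wires the tangent predictor as b + W*tanh(c + V*x), with b holding n_dim*n values and W having n_dim*n rows and n_hidden_units columns; the flat output is then read as n_dim tangent vectors of length n. The following is a minimal standalone sketch of that forward computation, assuming plain std::vector storage in row-major order instead of PLearn Var objects (the function name predictTangentPlane is hypothetical, not part of PLearn):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical standalone version of the "single_neural_network" predictor:
//   prediction = b + W * tanh(c + V * x)
// Shapes (row-major), following build_():
//   x: n, V: n_hidden x n, c: n_hidden, W: (n_dim*n) x n_hidden, b: n_dim*n
// The result holds n_dim tangent vectors of length n, stored one after another.
std::vector<double> predictTangentPlane(const std::vector<double>& x,
                                        const std::vector<double>& V,
                                        const std::vector<double>& c,
                                        const std::vector<double>& W,
                                        const std::vector<double>& b,
                                        std::size_t n_dim) {
    const std::size_t n = x.size();
    const std::size_t n_hidden = c.size();
    assert(V.size() == n_hidden * n);
    assert(W.size() == n_dim * n * n_hidden);
    assert(b.size() == n_dim * n);

    // Hidden layer: h = tanh(c + V x)
    std::vector<double> h(n_hidden);
    for (std::size_t j = 0; j < n_hidden; ++j) {
        double s = c[j];
        for (std::size_t i = 0; i < n; ++i) s += V[j * n + i] * x[i];
        h[j] = std::tanh(s);
    }

    // Output layer: out = b + W h, a flat vector of n_dim * n values
    std::vector<double> out(b);
    for (std::size_t r = 0; r < n_dim * n; ++r)
        for (std::size_t j = 0; j < n_hidden; ++j)
            out[r] += W[r * n_hidden + j] * h[j];
    return out;
}
```

Writing the computation out by hand makes the shape bookkeeping visible: the only nonlinearity is the tanh hidden layer, and the n_dim tangent vectors are consecutive slices of length n in the output.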

string PLearn::TangentLearner::classname ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 152 of file TangentLearner.cc.

void PLearn::TangentLearner::computeCostsFromOutputs ( const Vec & input, const Vec & output, const Vec & target, Vec & costs ) const [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 531 of file TangentLearner.cc.

References PLERROR.

{

PLERROR("TangentLearner::computeCostsFromOutputs not defined for this learner");

}

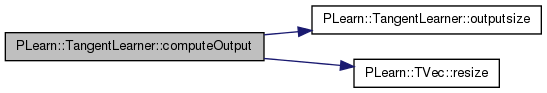

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 524 of file TangentLearner.cc.

References output_f, outputsize(), and PLearn::TVec< T >::resize().

{

int nout = outputsize();

output.resize(nout);

output << output_f(input);

}

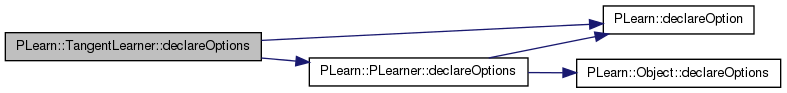

void PLearn::TangentLearner::declareOptions ( OptionList & ol ) [static, protected]

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 154 of file TangentLearner.cc.

References architecture_type, batch_size, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), initialization_regularization, PLearn::OptionBase::learntoption, n_dim, n_hidden_units, n_neighbors, norm_penalization, normalize_by_neighbor_distance, optimizer, ordered_vectors, output_type, parameters, projection_error_regularization, smart_initialization, svd_threshold, training_targets, use_subspace_distance, and V_slack.

{

// ### Declare all of this object's options here

// ### For the "flags" of each option, you should typically specify

// ### one of OptionBase::buildoption, OptionBase::learntoption or

// ### OptionBase::tuningoption. Another possible flag to be combined with

// ### is OptionBase::nosave

declareOption(ol, "training_targets", &TangentLearner::training_targets, OptionBase::buildoption,

"Specifies a strategy for training the tangent plane predictor. Possible values are the strings\n"

" local_evectors : local principal components (based on n_neighbors of x)\n"

" local_neighbors : difference between x and its n_neighbors.\n"

);

declareOption(ol, "smart_initialization",&TangentLearner::smart_initialization,OptionBase::buildoption,

"If nonzero, initialize V with smartInitialization() (single_neural_network only); this value is passed to it.\n");

declareOption(ol, "initialization_regularization",&TangentLearner::initialization_regularization,OptionBase::buildoption,

"Regularization coefficient passed to smartInitialization() when smart_initialization is nonzero.\n");

declareOption(ol, "use_subspace_distance", &TangentLearner::use_subspace_distance, OptionBase::buildoption,

"Minimize distance between subspace spanned by f_i and by (x-neighbors), instead of between\n"

"the individual targets t_j and the subspace spanned by the f_i.\n");

declareOption(ol, "normalize_by_neighbor_distance", &TangentLearner::normalize_by_neighbor_distance,

OptionBase::buildoption, "Whether to normalize the cost by the distance to the neighbor.\n");

declareOption(ol, "ordered_vectors", &TangentLearner::ordered_vectors,

OptionBase::buildoption, "Whether to apply a differential cost to each f_i so as to\n"

"obtain an ordering similar to the one obtained with principal component analysis.\n");

declareOption(ol, "n_neighbors", &TangentLearner::n_neighbors, OptionBase::buildoption,

"Number of nearest neighbors to consider.\n"

);

declareOption(ol, "n_dim", &TangentLearner::n_dim, OptionBase::buildoption,

"Number of tangent vectors to predict.\n"

);

declareOption(ol, "optimizer", &TangentLearner::optimizer, OptionBase::buildoption,

"Optimizer that optimizes the cost function.\n"

);

//declareOption(ol, "tangent_predictor", &TangentLearner::tangent_predictor, OptionBase::buildoption,

// "Func that specifies the parametrized mapping from inputs to predicted tangent planes\n"

// );

declareOption(ol, "architecture_type", &TangentLearner::architecture_type, OptionBase::buildoption,

"For pre-defined tangent_predictor types: \n"

" multi_neural_network : prediction[j] = b[j] + W[j]*tanh(c[j] + V[j]*x), where W[j] has n_hidden_units columns\n"

" and there is a separate set of parameters for each of the n_dim tangent vectors to predict.\n"

" single_neural_network : prediction = b + W*tanh(c + V*x), where W has n_hidden_units columns\n"

" and the resulting vector is viewed as an n_dim by n matrix\n"

" linear : prediction = b + W*x\n"

" embedding_neural_network: prediction[k,i] = d(e[k])/d(x[i]), where e(x) is an ordinary neural\n"

" network representing the embedding function (see output_type option)\n"

" slack_embedding_neural_network: like embedding_neural_network but outside V is replaced by\n"

" a call to no_bprop(V,V_slack), i.e. the gradient to it can\n"

" be reduced (0<V_slack<1) or eliminated (V_slack=1).\n"

" embedding_quadratic: prediction[k,i] = d(e_k)/d(x_i) = A_k x + b_k, where e_k(x) is a quadratic\n"

" form in x, i.e. e_k = x' A_k x + b_k' x\n"

" (empty string): specify explicitly the function with tangent_predictor option\n"

"where (b,W,c,V) are parameters to be optimized.\n"

);

declareOption(ol, "V_slack", &TangentLearner::V_slack, OptionBase::buildoption,

"Coefficient that multiplies gradient on outside V when architecture_type=='slack_embedding_neural_network'\n"

);

declareOption(ol, "n_hidden_units", &TangentLearner::n_hidden_units, OptionBase::buildoption,

"Number of hidden units (if architecture_type is some kind of neural network)\n"

);

declareOption(ol, "output_type", &TangentLearner::output_type, OptionBase::buildoption,

"Default value (the only one considered if architecture_type != embedding_*) is\n"

" tangent_plane: output the predicted tangent plane.\n"

" embedding: output the embedding vector (only if architecture_type == embedding_*).\n"

" tangent_plane+embedding: output both (in this order).\n"

);

declareOption(ol, "batch_size", &TangentLearner::batch_size, OptionBase::buildoption,

"How many samples to use to estimate the average gradient before updating the weights;\n"

" 0 is equivalent to specifying training_set->length() \n");

declareOption(ol, "norm_penalization", &TangentLearner::norm_penalization, OptionBase::buildoption,

"Factor that multiplies an extra penalization of the norm of f_i so that ||f_i|| is close to 1.\n"

"The penalty is norm_penalization*sum_i (1 - ||f_i||^2)^2.\n"

);

declareOption(ol, "svd_threshold", &TangentLearner::svd_threshold, OptionBase::buildoption,

"Threshold to accept singular values of F in solving for linear combination weights on tangent subspace.\n"

);

declareOption(ol, "projection_error_regularization", &TangentLearner::projection_error_regularization, OptionBase::buildoption,

"Term added to the linear system matrix involved in fitting subspaces in the projection error computation.\n"

);

declareOption(ol, "parameters", &TangentLearner::parameters, OptionBase::learntoption,

"Parameters of the tangent_predictor function.\n"

);

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
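The embedding_neural_network option describes the predicted tangent plane as the Jacobian of an embedding e(x) = W*tanh(c + V*x), and build_() obtains it with diagonalized_factors_product(W, 1-a*a, V). Assuming that call computes W*diag(1-a*a)*V, which is what the chain rule requires, the sketch below computes both the embedding and its Jacobian with plain std::vector storage. The EmbeddingNet struct is hypothetical; it omits the slack variant and the b matrix, which build_() allocates but does not include in tangent_predictor's parameters for this architecture.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the "embedding_neural_network" architecture.
// Embedding:      e(x) = W * tanh(c + V x)           (n_dim values)
// Tangent plane:  T[k][i] = d e_k / d x_i
//                         = sum_j W[k][j] * (1 - a_j^2) * V[j][i],  a = tanh(c + V x)
// i.e. T = W * diag(1 - a*a) * V. Matrices are row-major std::vectors.
struct EmbeddingNet {
    std::size_t n, n_hidden, n_dim;
    std::vector<double> V;  // n_hidden x n
    std::vector<double> c;  // n_hidden
    std::vector<double> W;  // n_dim x n_hidden

    // Hidden activations a = tanh(c + V x)
    std::vector<double> hidden(const std::vector<double>& x) const {
        assert(x.size() == n);
        std::vector<double> a(n_hidden);
        for (std::size_t j = 0; j < n_hidden; ++j) {
            double s = c[j];
            for (std::size_t i = 0; i < n; ++i) s += V[j * n + i] * x[i];
            a[j] = std::tanh(s);
        }
        return a;
    }

    // e(x) = W a
    std::vector<double> embedding(const std::vector<double>& x) const {
        const std::vector<double> a = hidden(x);
        std::vector<double> e(n_dim, 0.0);
        for (std::size_t k = 0; k < n_dim; ++k)
            for (std::size_t j = 0; j < n_hidden; ++j)
                e[k] += W[k * n_hidden + j] * a[j];
        return e;
    }

    // n_dim x n Jacobian of the embedding, row-major
    std::vector<double> tangentPlane(const std::vector<double>& x) const {
        const std::vector<double> a = hidden(x);
        std::vector<double> T(n_dim * n, 0.0);
        for (std::size_t k = 0; k < n_dim; ++k)
            for (std::size_t j = 0; j < n_hidden; ++j) {
                const double wd = W[k * n_hidden + j] * (1.0 - a[j] * a[j]);
                for (std::size_t i = 0; i < n; ++i)
                    T[k * n + i] += wd * V[j * n + i];
            }
        return T;
    }
};
```

A central finite difference on embedding() should agree with each entry of tangentPlane(), which is a convenient sanity check when modifying the architecture.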

static const PPath& PLearn::TangentLearner::declaringFile ( ) [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 151 of file TangentLearner.h.

TangentLearner * PLearn::TangentLearner::deepCopy ( CopiesMap & copies ) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 152 of file TangentLearner.cc.

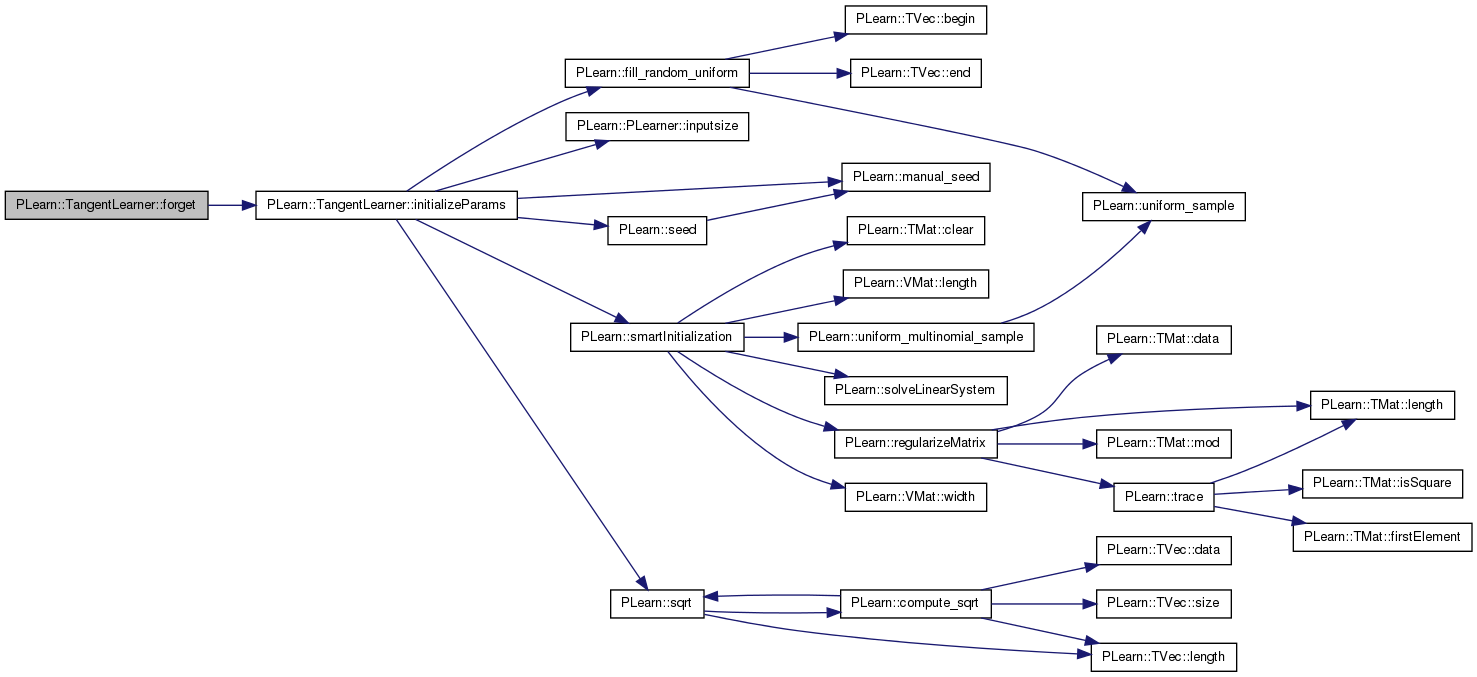

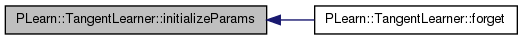

void PLearn::TangentLearner::forget ( ) [virtual]

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 403 of file TangentLearner.cc.

References initializeParams(), PLearn::PLearner::stage, and PLearn::PLearner::train_set.

{

if (train_set) initializeParams();

stage = 0;

}

OptionList & PLearn::TangentLearner::getOptionList ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 152 of file TangentLearner.cc.

OptionMap & PLearn::TangentLearner::getOptionMap ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 152 of file TangentLearner.cc.

RemoteMethodMap & PLearn::TangentLearner::getRemoteMethodMap ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 152 of file TangentLearner.cc.

TVec< string > PLearn::TangentLearner::getTestCostNames ( ) const [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 537 of file TangentLearner.cc.

References getTrainCostNames().

{

return getTrainCostNames();

}

TVec< string > PLearn::TangentLearner::getTrainCostNames ( ) const [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 542 of file TangentLearner.cc.

Referenced by getTestCostNames().

{

TVec<string> cost(1); cost[0] = "projection_error";

return cost;

}
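getTrainCostNames() reports a single cost, projection_error, which build_() constructs by applying projection_error() to the predicted tangent vectors and the tangent targets. The exact PLearn formula is not shown in this listing, so the following is only a simplified sketch: it assumes the cost for each target t_j is its squared distance to the span of the predicted vectors f_i, found from the normal equations (F F' + lambda I) w = F t_j, where lambda plays the role of projection_error_regularization ("term added to the linear system matrix"), optionally divided by ||t_j||^2 as normalize_by_neighbor_distance suggests. It ignores use_subspace_distance, svd_threshold, norm_penalization and ordered_vectors, and the names solve and projectionError are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Mat = std::vector<std::vector<double>>;  // Mat[row][col]

// Solve A w = r for a small dense system (Gaussian elimination, partial pivoting).
std::vector<double> solve(Mat A, std::vector<double> r) {
    const std::size_t m = r.size();
    for (std::size_t p = 0; p < m; ++p) {
        std::size_t best = p;
        for (std::size_t q = p + 1; q < m; ++q)
            if (std::fabs(A[q][p]) > std::fabs(A[best][p])) best = q;
        std::swap(A[p], A[best]);
        std::swap(r[p], r[best]);
        assert(std::fabs(A[p][p]) > 1e-15 && "singular system");
        for (std::size_t q = p + 1; q < m; ++q) {
            const double f = A[q][p] / A[p][p];
            for (std::size_t k = p; k < m; ++k) A[q][k] -= f * A[p][k];
            r[q] -= f * r[p];
        }
    }
    std::vector<double> w(m);
    for (std::size_t p = m; p-- > 0;) {  // back substitution
        double s = r[p];
        for (std::size_t k = p + 1; k < m; ++k) s -= A[p][k] * w[k];
        w[p] = s / A[p][p];
    }
    return w;
}

// Simplified projection error. F holds the n_dim predicted tangent vectors (rows);
// each target t contributes ||t - F'w||^2, optionally divided by ||t||^2.
double projectionError(const Mat& F, const Mat& targets, double lambda, bool normalize) {
    const std::size_t d = F.size();
    // Regularized Gram matrix G = F F' + lambda I
    Mat G(d, std::vector<double>(d, 0.0));
    for (std::size_t a = 0; a < d; ++a) {
        for (std::size_t b = 0; b < d; ++b)
            for (std::size_t i = 0; i < F[a].size(); ++i) G[a][b] += F[a][i] * F[b][i];
        G[a][a] += lambda;
    }
    double total = 0.0;
    for (const auto& t : targets) {
        std::vector<double> Ft(d, 0.0);  // F t
        for (std::size_t a = 0; a < d; ++a)
            for (std::size_t i = 0; i < t.size(); ++i) Ft[a] += F[a][i] * t[i];
        const std::vector<double> w = solve(G, Ft);
        double err = 0.0, norm2 = 0.0;
        for (std::size_t i = 0; i < t.size(); ++i) {
            double proj = 0.0;
            for (std::size_t a = 0; a < d; ++a) proj += w[a] * F[a][i];
            err += (t[i] - proj) * (t[i] - proj);
            norm2 += t[i] * t[i];
        }
        total += (normalize && norm2 > 0.0) ? err / norm2 : err;
    }
    return total;
}
```

With normalization enabled, a target is scored by the fraction of its squared length that the predicted tangent plane fails to explain, so near and far neighbors weigh equally.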

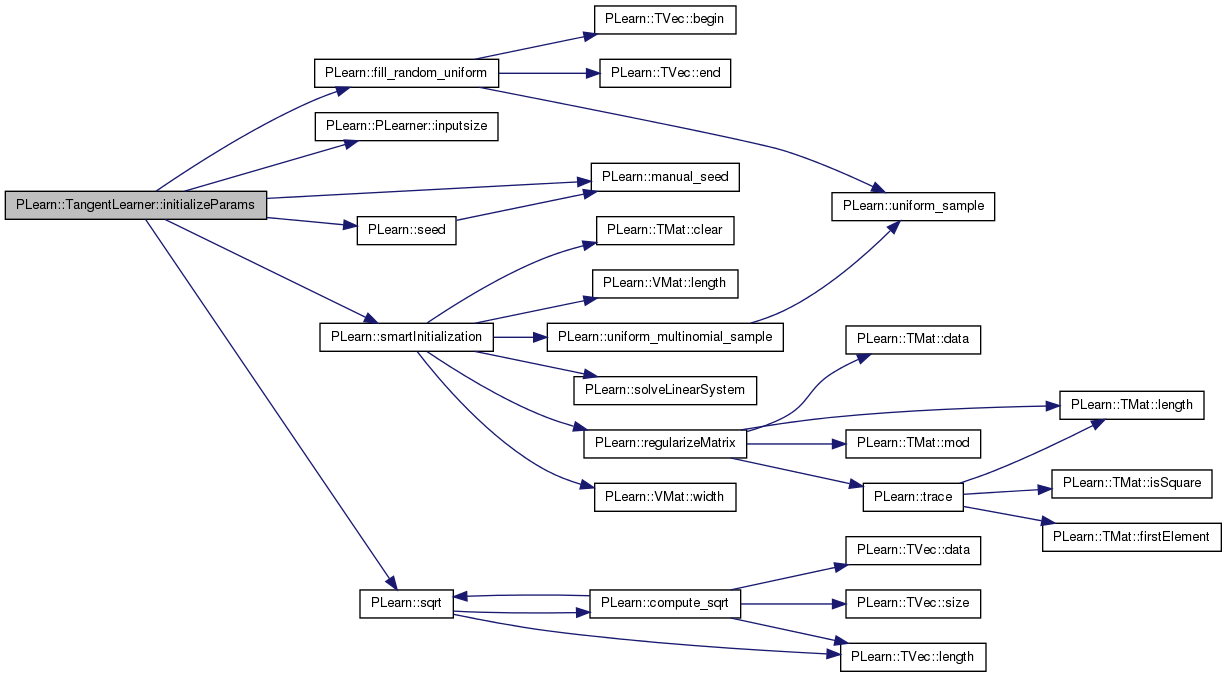

void PLearn::TangentLearner::initializeParams ( ) [virtual]

Definition at line 469 of file TangentLearner.cc.

References architecture_type, b, PLearn::fill_random_uniform(), initialization_regularization, PLearn::PLearner::inputsize(), PLearn::manual_seed(), n_hidden_units, optimizer, PLERROR, PLearn::seed(), PLearn::PLearner::seed_, smart_initialization, PLearn::smartInitialization(), PLearn::sqrt(), PLearn::PLearner::train_set, V, and W.

Referenced by forget().

{

if (seed_>=0)

manual_seed(seed_);

else

PLearn::seed();

if (architecture_type=="single_neural_network")

{

if (smart_initialization)

{

V->matValue<<smartInitialization(train_set,n_hidden_units,smart_initialization,initialization_regularization);

W->value<<(1/real(n_hidden_units));

b->matValue.clear();

c->matValue.clear();

}

else

{

real delta = 1.0 / sqrt(real(inputsize()));

fill_random_uniform(V->value, -delta, delta);

delta = 1.0 / real(n_hidden_units);

fill_random_uniform(W->matValue, -delta, delta);

c->matValue.clear();

//fill_random_uniform(c->matValue,-3,3);

//b->matValue.clear();

}

}

else if (architecture_type=="linear")

{

real delta = 1.0 / sqrt(real(inputsize()));

b->matValue.clear();

fill_random_uniform(W->matValue, -delta, delta);

}

else if (architecture_type=="embedding_neural_network")

{

real delta = 1.0 / sqrt(real(inputsize()));

fill_random_uniform(V->value, -delta, delta);

delta = 1.0 / real(n_hidden_units);

fill_random_uniform(W->matValue, -delta, delta);

c->value.clear();

b->value.clear();

}

else if (architecture_type=="embedding_quadratic")

{

real delta = 1.0 / sqrt(real(inputsize()));

fill_random_uniform(W->matValue, -delta, delta);

b->value.clear();

}

else PLERROR("other types not handled yet!");

// Reset optimizer

if(optimizer)

optimizer->reset();

}
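In the non-smart branch for single_neural_network, initializeParams() draws V uniformly in [-1/sqrt(inputsize()), 1/sqrt(inputsize())] and W uniformly in [-1/n_hidden_units, 1/n_hidden_units], and clears c. The sketch below reproduces that scheme with the C++ standard library, assuming std::mt19937 as a stand-in for PLearn's manual_seed() and fill_random_uniform(). The struct and function names are hypothetical, and zeroing b is an added assumption, since that line is commented out in the listing.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical parameter container for the single_neural_network architecture.
struct SingleNetParams {
    std::vector<double> V, c, W, b;
};

// Sketch of the non-smart branch of initializeParams() for single_neural_network:
//   V ~ U(-1/sqrt(n), 1/sqrt(n)),  W ~ U(-1/n_hidden, 1/n_hidden),  c = 0.
// b is zeroed as well (an assumption; the listing leaves b alone in this branch).
SingleNetParams initSingleNet(std::size_t n, std::size_t n_hidden, std::size_t n_dim,
                              unsigned seed) {
    assert(n > 0 && n_hidden > 0);
    std::mt19937 rng(seed);  // stands in for manual_seed(seed_)
    SingleNetParams p;
    p.V.resize(n_hidden * n);
    p.c.assign(n_hidden, 0.0);
    p.W.resize(n_dim * n * n_hidden);
    p.b.assign(n_dim * n, 0.0);

    double delta = 1.0 / std::sqrt(static_cast<double>(n));
    std::uniform_real_distribution<double> dv(-delta, delta);
    for (double& v : p.V) v = dv(rng);

    delta = 1.0 / static_cast<double>(n_hidden);
    std::uniform_real_distribution<double> dw(-delta, delta);
    for (double& w : p.W) w = dw(rng);
    return p;
}
```

Seeding the engine explicitly mirrors the seed_>=0 branch: the same seed reproduces the same initial weights, which makes experiments repeatable.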

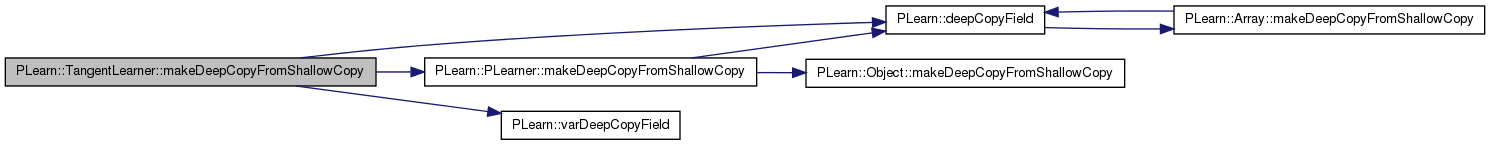

void PLearn::TangentLearner::makeDeepCopyFromShallowCopy ( CopiesMap & copies ) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 383 of file TangentLearner.cc.

References b, cost_of_one_example, PLearn::deepCopyField(), PLearn::PLearner::makeDeepCopyFromShallowCopy(), optimizer, parameters, tangent_predictor, tangent_targets, V, PLearn::varDeepCopyField(), and W.

{ inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(cost_of_one_example, copies);

varDeepCopyField(b, copies);

varDeepCopyField(W, copies);

varDeepCopyField(c, copies);

varDeepCopyField(V, copies);

varDeepCopyField(tangent_targets, copies);

deepCopyField(parameters, copies);

deepCopyField(optimizer, copies);

deepCopyField(tangent_predictor, copies);

}

int PLearn::TangentLearner::outputsize ( ) const [virtual]

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and the options that are set).

Implements PLearn::PLearner.

Definition at line 398 of file TangentLearner.cc.

References output_f.

Referenced by computeOutput().

{

return output_f->outputsize;

}

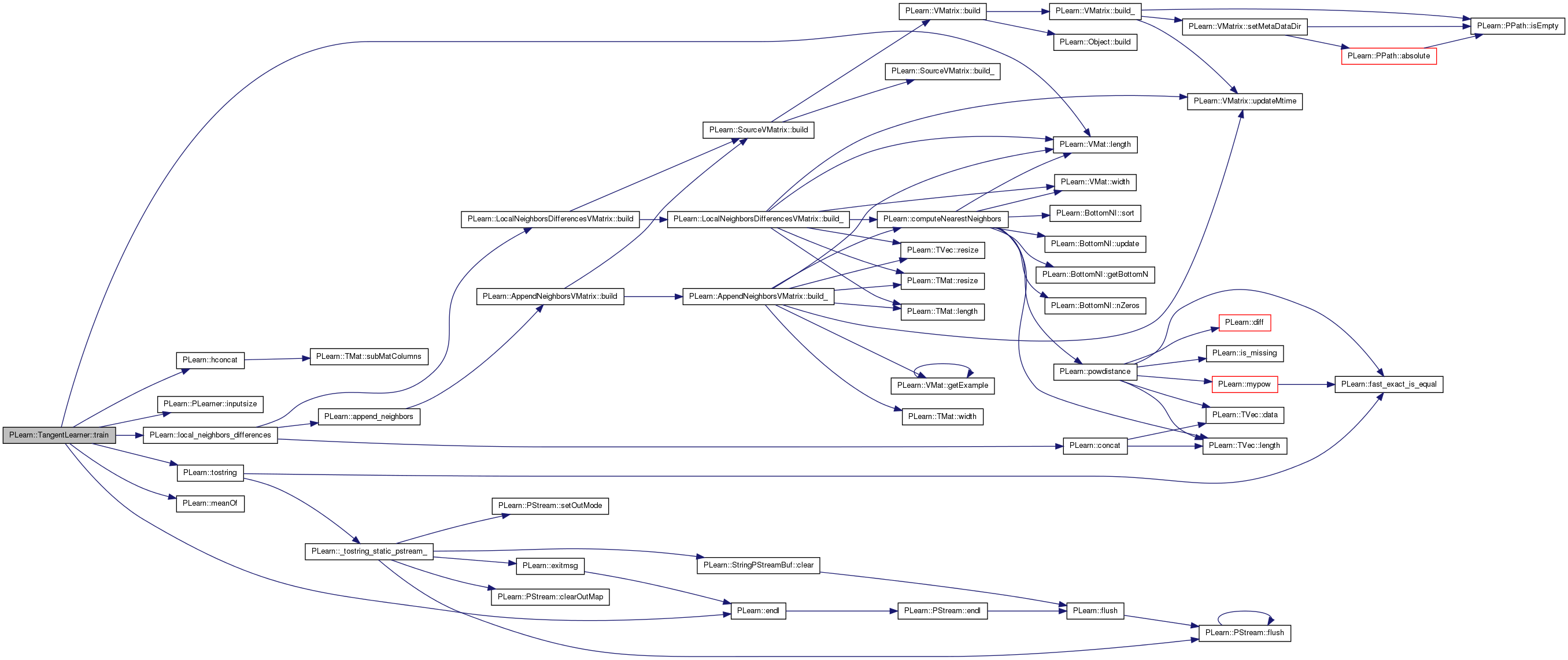

void PLearn::TangentLearner::train ( ) [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 409 of file TangentLearner.cc.

References batch_size, cost_of_one_example, PLearn::endl(), PLearn::hconcat(), PLearn::PLearner::inputsize(), PLearn::VMat::length(), PLearn::local_neighbors_differences(), PLearn::meanOf(), n_neighbors, PLearn::PLearner::nstages, optimizer, parameters, PLERROR, PLearn::PLearner::report_progress, PLearn::PLearner::stage, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_targets, and PLearn::PLearner::verbosity.

{

VMat train_set_with_targets;

VMat targets_vmat;

if (!cost_of_one_example)

PLERROR("TangentLearner::train: build has not been run after setTrainingSet!");

if (training_targets == "local_evectors")

{

//targets_vmat = new LocalPCAVMatrix(train_set, n_neighbors, n_dim);

PLERROR("local_evectors not yet implemented");

}

else if (training_targets == "local_neighbors")

{

targets_vmat = local_neighbors_differences(train_set, n_neighbors);

//cout << targets_vmat;

}

else PLERROR("TangentLearner::train, unknown training_targets option %s (should be 'local_evectors' or 'local_neighbors')\n",

training_targets.c_str());

train_set_with_targets = hconcat(train_set, targets_vmat);

train_set_with_targets->defineSizes(inputsize(),inputsize()*n_neighbors,0);

int l = train_set->length();

int nsamples = batch_size>0 ? batch_size : l;

Var totalcost = meanOf(train_set_with_targets, cost_of_one_example, nsamples);

if(optimizer)

{

optimizer->setToOptimize(parameters, totalcost);

optimizer->build();

}

else PLERROR("TangentLearner::train can't train without setting an optimizer first!");

// number of optimizer stages corresponding to one learner stage (one epoch)

int optstage_per_lstage = l/nsamples;

PP<ProgressBar> pb;

if(report_progress>0)

pb = new ProgressBar("Training TangentLearner from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

int initial_stage = stage;

bool early_stop=false;

while(stage<nstages && !early_stop)

{

optimizer->nstages = optstage_per_lstage;

train_stats->forget();

optimizer->early_stop = false;

optimizer->optimizeN(*train_stats);

train_stats->finalize();

if(verbosity>2)

cout << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;

++stage;

if(pb)

pb->update(stage-initial_stage);

}

if(verbosity>1)

cout << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;

}
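train() builds its training matrix by appending, for each example, the differences to its n_neighbors nearest neighbors (local_neighbors_differences() followed by hconcat()), and then declares an input size of inputsize() and a target size of inputsize()*n_neighbors. It also sets the number of optimizer stages per epoch to l/nsamples, where nsamples is batch_size, or the whole training set length when batch_size is 0. Below is a brute-force sketch of both steps. It assumes Euclidean distance, that a point is not its own neighbor, and that differences are taken as neighbor minus x; PLearn's own local_neighbors_differences() may differ on these points. The names withNeighborDifferences and optimizerStagesPerEpoch are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

using Row = std::vector<double>;

// Hypothetical stand-in for local_neighbors_differences() + hconcat():
// each output row is [x, t_1, ..., t_k], where t_j = neighbor_j - x for the k
// nearest neighbors of x (Euclidean, excluding x itself), so the row width is
// n + n*k, matching defineSizes(inputsize(), inputsize()*n_neighbors, 0).
std::vector<Row> withNeighborDifferences(const std::vector<Row>& data, std::size_t k) {
    const std::size_t l = data.size();
    assert(k < l);
    std::vector<Row> out;
    for (std::size_t r = 0; r < l; ++r) {
        const Row& x = data[r];
        auto dist2 = [&](std::size_t s) {  // squared distance from data[s] to x
            double d = 0.0;
            for (std::size_t i = 0; i < x.size(); ++i)
                d += (data[s][i] - x[i]) * (data[s][i] - x[i]);
            return d;
        };
        std::vector<std::size_t> idx(l);
        std::iota(idx.begin(), idx.end(), std::size_t{0});
        idx.erase(idx.begin() + static_cast<std::ptrdiff_t>(r));  // exclude x itself
        std::partial_sort(idx.begin(), idx.begin() + static_cast<std::ptrdiff_t>(k), idx.end(),
                          [&](std::size_t a, std::size_t b) { return dist2(a) < dist2(b); });
        Row row(x);
        for (std::size_t j = 0; j < k; ++j)
            for (std::size_t i = 0; i < x.size(); ++i) row.push_back(data[idx[j]][i] - x[i]);
        out.push_back(row);
    }
    return out;
}

// Optimizer stages per learner stage (epoch), as computed in train():
// batch_size == 0 means "use the whole training set" for each gradient estimate.
int optimizerStagesPerEpoch(int l, int batch_size) {
    const int nsamples = batch_size > 0 ? batch_size : l;
    return l / nsamples;
}
```

Because l/nsamples uses integer division, when batch_size does not divide the training set length an epoch covers slightly fewer than l examples (assuming each optimizer stage consumes one batch of nsamples examples).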

StaticInitializer PLearn::TangentLearner::_static_initializer_ [static]

Reimplemented from PLearn::PLearner.

Definition at line 151 of file TangentLearner.h.

string PLearn::TangentLearner::architecture_type

Definition at line 99 of file TangentLearner.h.

Referenced by build_(), declareOptions(), and initializeParams().

Var PLearn::TangentLearner::b [protected]

Definition at line 65 of file TangentLearner.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

int PLearn::TangentLearner::batch_size

Definition at line 103 of file TangentLearner.h.

Referenced by declareOptions(), and train().

Var PLearn::TangentLearner::c [protected]

Definition at line 65 of file TangentLearner.h.

Func PLearn::TangentLearner::cost_of_one_example [protected]

Definition at line 64 of file TangentLearner.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::TangentLearner::embedding

Definition at line 93 of file TangentLearner.h.

Referenced by build_().

real PLearn::TangentLearner::initialization_regularization

Definition at line 88 of file TangentLearner.h.

Referenced by declareOptions(), and initializeParams().

int PLearn::TangentLearner::n_dim

Definition at line 90 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

int PLearn::TangentLearner::n_hidden_units

Definition at line 101 of file TangentLearner.h.

Referenced by build_(), declareOptions(), and initializeParams().

int PLearn::TangentLearner::n_neighbors

Definition at line 89 of file TangentLearner.h.

Referenced by build_(), declareOptions(), and train().

real PLearn::TangentLearner::norm_penalization

Definition at line 105 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

bool PLearn::TangentLearner::normalize_by_neighbor_distance

Definition at line 85 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

PP< Optimizer > PLearn::TangentLearner::optimizer

Definition at line 92 of file TangentLearner.h.

Referenced by declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

bool PLearn::TangentLearner::ordered_vectors

Definition at line 86 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

Func PLearn::TangentLearner::output_f

Definition at line 94 of file TangentLearner.h.

Referenced by build_(), computeOutput(), and outputsize().

string PLearn::TangentLearner::output_type

Definition at line 100 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

VarArray PLearn::TangentLearner::parameters [protected]

Definition at line 73 of file TangentLearner.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Func PLearn::TangentLearner::projection_error_f

Definition at line 96 of file TangentLearner.h.

Referenced by build_().

real PLearn::TangentLearner::projection_error_regularization

Definition at line 107 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

real PLearn::TangentLearner::smart_initialization

Definition at line 87 of file TangentLearner.h.

Referenced by declareOptions(), and initializeParams().

real PLearn::TangentLearner::svd_threshold

Definition at line 106 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

Func PLearn::TangentLearner::tangent_predictor

Definition at line 95 of file TangentLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::TangentLearner::tangent_targets [protected]

Definition at line 66 of file TangentLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

string PLearn::TangentLearner::training_targets

Definition at line 83 of file TangentLearner.h.

Referenced by build_(), declareOptions(), and train().

bool PLearn::TangentLearner::use_subspace_distance

Definition at line 84 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::TangentLearner::V [protected]

Definition at line 65 of file TangentLearner.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

real PLearn::TangentLearner::V_slack

Definition at line 108 of file TangentLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::TangentLearner::W [protected]

Definition at line 65 of file TangentLearner.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

1.7.4
