PLearn 0.1

A deep network whose hidden layers are trained layer by layer to reconstruct the layer below them, and which can also be trained on a supervised cost and fine-tuned as a whole.

#include <DeepReconstructorNet.h>

Public Member Functions | |

| DeepReconstructorNet () | |

| Default constructor. | |

| virtual int | outputsize () const |

| Returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutpus (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

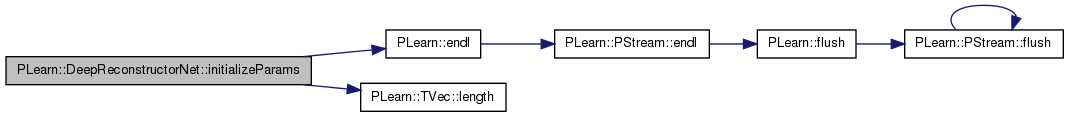

| virtual void | initializeParams (bool set_seed=true) |

| Mat | getParameterValue (const string &varname) |

| Returns the matValue of the parameter variable with the given name. | |

| Vec | getParameterRow (const string &varname, int n) |

| Returns the nth row of the matValue of the parameter variable with the given name. | |

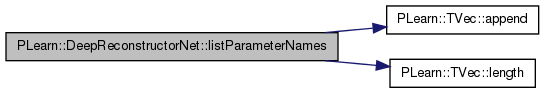

| TVec< string > | listParameterNames () |

| Returns a list of the names of the parameters (in the same order as in listParameter) | |

| TVec< Mat > | listParameter () |

| Returns a list of the parameters. | |

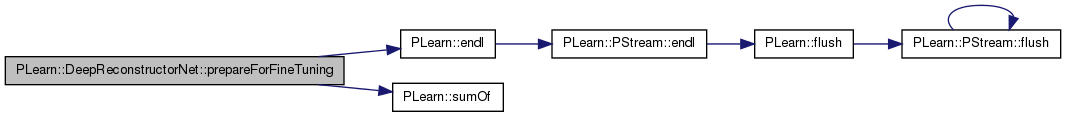

| void | prepareForFineTuning () |

| void | fineTuningFor1Epoch () |

| void | trainSupervisedLayer (VMat inputs, VMat targets) |

| TVec< Mat > | computeRepresentations (Mat input) |

| void | reconstructInputFromLayer (int layer) |

| TVec< Mat > | computeReconstructions (Mat input) |

| Mat | getMatValue (int layer) |

| void | setMatValue (int layer, Mat values) |

| Mat | fpropOneLayer (int layer) |

| Mat | reconstructOneLayer (int layer) |

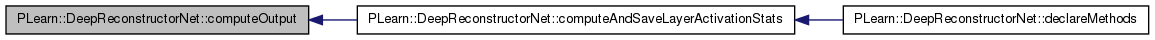

| void | computeAndSaveLayerActivationStats (VMat dataset, int which_layer, const string &filebasename, int nfirstunits=10, int notherunits=10) |

| computeAndSaveLayerActivationStats will compute statistics (univariate and bivariate) of the post-nonlinearity activations of a hidden layer on a given dataset: | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual DeepReconstructorNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

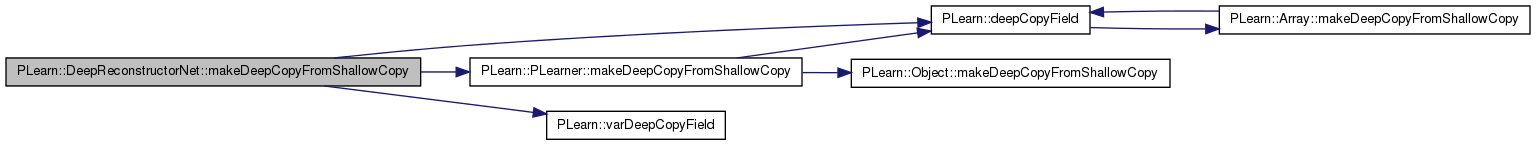

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions

| Type | Member |
|---|---|
| static string | _classname_ () |
| static OptionList & | _getOptionList_ () |
| static RemoteMethodMap & | _getRemoteMethodMap_ () |
| static Object * | _new_instance_for_typemap_ () |
| static bool | _isa_ (const Object *o) |
| static void | _static_initialize_ () |
| static const PPath & | declaringFile () |

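Public Attributes

| Type | Attribute | Description |
|---|---|---|
| TVec< pair< int, int > > | unsupervised_nepochs | unsupervised_nepochs[k] gives the minimum and maximum number of epochs for training layer k+1 (taking layer k as input). |
| Vec | unsupervised_min_improvement_rate | unsupervised_min_improvement_rate[k] is the minimum required relative improvement rate for training layer k+1. |
| pair< int, int > | supervised_nepochs | |
| real | supervised_min_improvement_rate | Minimum required relative improvement rate for training the supervised layer. |
| VarArray | layers | layers[0] is the input variable; the last layer is the final output layer. |
| VarArray | reconstruction_costs | reconstruction_costs[k] is the reconstruction cost for layers[k]. |
| TVec< string > | reconstruction_costs_names | Names given to each element of a vector cost. |
| VarArray | reconstructed_layers | reconstructed_layers[k] is the reconstruction of layers[k] from hidden_for_reconstruction[k]. |
| VarArray | hidden_for_reconstruction | hidden_for_reconstruction[k] is a version of layers[k+1] (e.g. computed from a corrupted input) from which reconstructed_layers[k] is computed. |
| TVec< PP< Optimizer > > | reconstruction_optimizers | |
| PP< Optimizer > | reconstruction_optimizer | |
| Var | target | |
| VarArray | supervised_costs | |
| Var | supervised_costvec | |
| TVec< string > | supervised_costs_names | |
| Var | fullcost | |
| VarArray | parameters | |
| int | minibatch_size | |
| PP< Optimizer > | supervised_optimizer | |
| PP< Optimizer > | fine_tuning_optimizer | |
| TVec< int > | group_sizes | |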

Static Public Attributes

| Type | Attribute |
|---|---|
| static StaticInitializer | _static_initializer_ |

Protected Member Functions

| Type | Member |
|---|---|
| void | trainHiddenLayer (int which_input_layer, VMat inputs) |
| void | buildHiddenLayerOutputs (int which_input_layer, VMat inputs, VMat outputs) |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares the class options. |
| static void | declareMethods (RemoteMethodMap &rmm) | Declares the methods that are remote-callable. |

Protected Attributes

| Type | Attribute |
|---|---|
| TVec< Func > | compute_layer |
| Func | compute_output |
| Func | output_and_target_to_cost |
| TVec< VMat > | outmat |

Private Types

| Type | Name |
|---|---|
| typedef PLearner | inherited |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |

Private Attributes

| Type | Attribute |
|---|---|
| int | nout |

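A deep network whose hidden layers are trained layer by layer to reconstruct the layer below them, and which can also be trained on a supervised cost and fine-tuned as a whole.

The network is given as a graph of variables: layers[0] is the input and the last element of layers is the output. Hidden layer k+1 is trained, taking layer k as input, to minimize reconstruction_costs[k], where reconstructed_layers[k] is computed from hidden_for_reconstruction[k]. The class also supports training on supervised_costs (with supervised_optimizer) and fine-tuning the whole network (with fine_tuning_optimizer).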

Definition at line 62 of file DeepReconstructorNet.h.

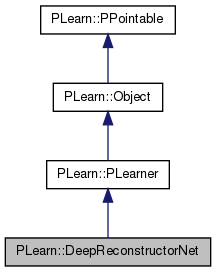

typedef PLearner PLearn::DeepReconstructorNet::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file DeepReconstructorNet.h.

PLearn::DeepReconstructorNet::DeepReconstructorNet()

Default constructor.

Definition at line 58 of file DeepReconstructorNet.cc.

```cpp
    : supervised_nepochs(pair<int,int>(0,0)),
      supervised_min_improvement_rate(-10000),
      minibatch_size(1)
{ }
```

string PLearn::DeepReconstructorNet::_classname_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepReconstructorNet.cc.

OptionList & PLearn::DeepReconstructorNet::_getOptionList_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepReconstructorNet.cc.

RemoteMethodMap & PLearn::DeepReconstructorNet::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepReconstructorNet.cc.

bool PLearn::DeepReconstructorNet::_isa_(const Object *o) [static]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepReconstructorNet.cc.

Object * PLearn::DeepReconstructorNet::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::Object.

Definition at line 56 of file DeepReconstructorNet.cc.

void PLearn::DeepReconstructorNet::_static_initialize_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepReconstructorNet.cc.

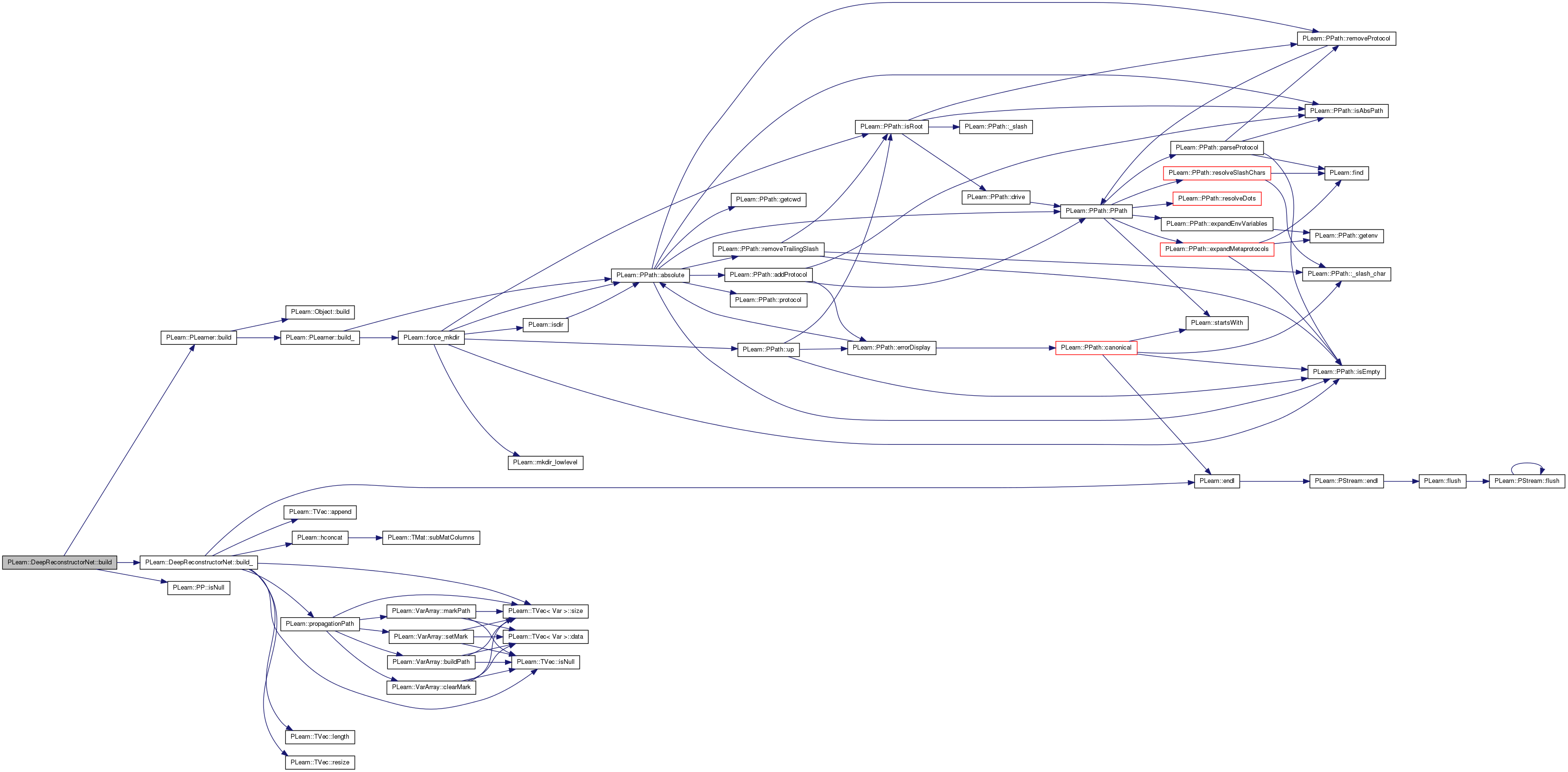

| void PLearn::DeepReconstructorNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 354 of file DeepReconstructorNet.cc.

References PLearn::PLearner::build(), build_(), PLearn::PP< T >::isNull(), and PLearn::PLearner::random_gen.

```cpp
{
    if(random_gen.isNull())
        random_gen = new PRandom();
    inherited::build();
    build_();
}
```

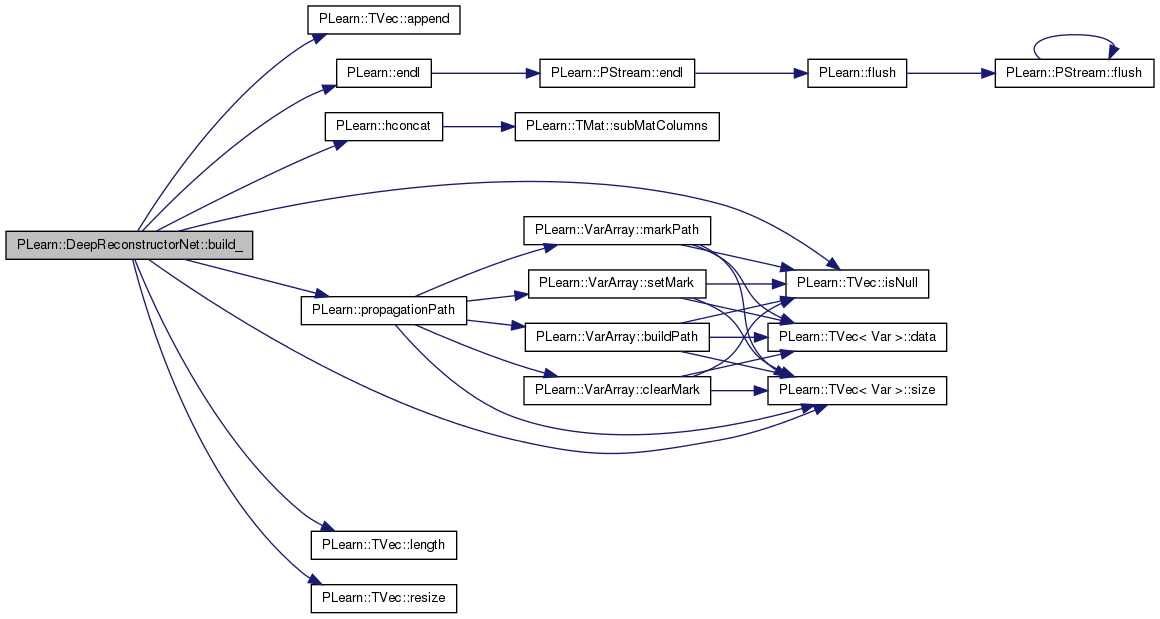

| void PLearn::DeepReconstructorNet::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 268 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::append(), compute_layer, compute_output, PLearn::endl(), fullcost, PLearn::hconcat(), hidden_for_reconstruction, i, PLearn::TVec< T >::isNull(), layers, PLearn::TVec< T >::length(), n, nout, outmat, output_and_target_to_cost, parameters, PLearn::perr, PLERROR, PLearn::propagationPath(), reconstructed_layers, reconstruction_costs, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), supervised_costs, supervised_costvec, and target.

Referenced by build().

```cpp
{
    // ### This method should do the real building of the object,
    // ### according to set 'options', in *any* situation.
    // ### Typical situations include:
    // ###  - Initial building of an object from a few user-specified options
    // ###  - Building of a "reloaded" object: i.e. from the complete set of
    // ###    all serialised options.
    // ###  - Updating or "re-building" of an object after a few "tuning"
    // ###    options have been modified.
    // ### You should assume that the parent class' build_() has already been
    // ### called.

    int nlayers = layers.length();
    compute_layer.resize(nlayers-1);
    for(int k=0; k<nlayers-1; k++)
        compute_layer[k] = Func(layers[k], layers[k+1]);
    compute_output = Func(layers[0], layers[nlayers-1]);
    nout = layers[nlayers-1]->size();

    // supervised_costvec must be built before output_and_target_to_cost uses it.
    if(supervised_costs.isNull())
        PLERROR("You must provide a supervised_cost");
    supervised_costvec = hconcat(supervised_costs);
    output_and_target_to_cost = Func(layers[nlayers-1]&target, supervised_costvec);

    // fullcost is the sum of all supervised costs and all reconstruction costs.
    // Reset it first so that a second call to build() does not add them twice.
    fullcost = Var();
    for(int i=0; i<supervised_costs.length(); i++)
        fullcost += supervised_costs[i];
    int n_rec_costs = reconstruction_costs.length();
    for(int k=0; k<n_rec_costs; k++)
        fullcost += reconstruction_costs[k];
    //displayVarGraph(fullcost);

    Var input = layers[0];
    Func f(input&target, fullcost);
    parameters = f->parameters;
    outmat.resize(n_rec_costs);

    // older versions did not specify hidden_for_reconstruction
    // if it's not there, let's try to infer it
    if( (reconstructed_layers.length()!=0) && (hidden_for_reconstruction.length()==0) )
    {
        int n = reconstructed_layers.length();
        for(int k=0; k<n; k++)
        {
            VarArray proppath = propagationPath(layers[k+1],reconstructed_layers[k]);
            if(proppath.length()>0) // great, we found a path from layers[k+1] !
                hidden_for_reconstruction.append(layers[k+1]);
            else // ok this is getting much more difficult, let's try to guess
            {
                // let's consider the full path from sources to reconstructed_layers[k]
                VarArray fullproppath = propagationPath(reconstructed_layers[k]);
                // look for a variable with same type and dimension as layers[k+1]
                int pos;
                for(pos = fullproppath.length()-2; pos>=0; pos--)
                {
                    if( fullproppath[pos]->length() == layers[k+1]->length() &&
                        fullproppath[pos]->width() == layers[k+1]->width() &&
                        fullproppath[pos]->classname() == layers[k+1]->classname() )
                        break; // found a matching one!
                }
                if(pos>=0) // found a match at pos, let's use it
                {
                    hidden_for_reconstruction.append(fullproppath[pos]);
                    perr << "Found match for hidden_for_reconstruction " << k << endl;
                    //displayVarGraph(propagationPath(hidden_for_reconstruction[k],reconstructed_layers[k])
                    //                ,true, 333, "reconstr");
                }
                else
                {
                    PLERROR("Unable to guess hidden_for_reconstruction variable. Unable to find match.");
                }
            }
        }
    }

    if( reconstructed_layers.length() != hidden_for_reconstruction.length() )
        PLERROR("reconstructed_layers and hidden_for_reconstruction should have the same number of elements.");
}
```
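When hidden_for_reconstruction is not given and no propagation path links layers[k+1] to reconstructed_layers[k], build_() falls back to a search. It scans the full propagation path of reconstructed_layers[k] backwards, skipping the reconstruction itself, for the nearest variable whose length, width and class name match layers[k+1]. The search can be isolated as a minimal sketch. Note that VarInfo and findHiddenForReconstruction are hypothetical names: VarInfo stands in for the three Variable properties being compared, and the class names used in the usage check are illustrative only.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for the Variable properties compared by build_().
struct VarInfo {
    int length;
    int width;
    std::string classname;
};

// Walks the propagation path ending at the reconstruction backwards,
// starting at the element just before the reconstruction (size()-2), and
// returns the index of the first variable matching `hidden` in length,
// width and class name. Returns -1 if none matches (build_() raises
// PLERROR in that case).
int findHiddenForReconstruction(const std::vector<VarInfo>& fullproppath,
                                const VarInfo& hidden)
{
    for (int pos = static_cast<int>(fullproppath.size()) - 2; pos >= 0; --pos) {
        const VarInfo& v = fullproppath[pos];
        if (v.length == hidden.length && v.width == hidden.width &&
            v.classname == hidden.classname)
            return pos;
    }
    return -1;
}
```

Because the scan runs backwards, the match closest to the reconstruction wins. This is what picks a corrupted-input hidden layer over an earlier variable with the same shape.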

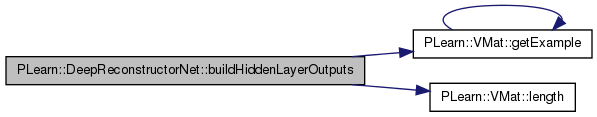

| void PLearn::DeepReconstructorNet::buildHiddenLayerOutputs | ( | int | which_input_layer, |

| VMat | inputs, | ||

| VMat | outputs | ||

| ) | [protected] |

Definition at line 516 of file DeepReconstructorNet.cc.

References compute_layer, PLearn::VMat::getExample(), i, in, PLearn::VMat::length(), and target.

Referenced by train().

```cpp
{
    int l = inputs.length();
    Vec in;
    Vec target;
    real weight;
    Func f = compute_layer[which_input_layer];
    Vec out(f->outputsize);
    for(int i=0; i<l; i++)
    {
        inputs->getExample(i,in,target,weight);
        f->fprop(in, out);
        /*
        if(i==0)
        {
            perr << "Function used for building hidden layer " << which_input_layer << endl;
            displayFunction(f, true);
        }
        */
        outputs->putOrAppendRow(i,out);
    }
    outputs->flush();
}
```

string PLearn::DeepReconstructorNet::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file DeepReconstructorNet.cc.

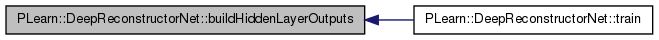

| void PLearn::DeepReconstructorNet::computeAndSaveLayerActivationStats | ( | VMat | dataset, |

| int | which_layer, | ||

| const string & | filebasename, | ||

| int | nfirstunits = 10, |

||

| int | notherunits = 10 |

||

| ) |

computeAndSaveLayerActivationStats computes univariate and bivariate statistics of the post-nonlinearity activations of a hidden layer on a given dataset and saves them to files (the file layout is described in the remote-method documentation in declareMethods()).

which_layer: 1 means the first hidden layer, 2 the second hidden layer, and so on.

Definition at line 876 of file DeepReconstructorNet.cc.

References PLearn::VecStatsCollector::build(), PLearn::columnMean(), PLearn::VecStatsCollector::compute_covariance, computeOutput(), PLearn::concat(), PLearn::TMat< T >::fill(), PLearn::VecStatsCollector::finalize(), PLearn::VMat::getExample(), i, j, layers, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::VecStatsCollector::maxnvalues, PLearn::Object::save(), PLearn::savePMat(), PLearn::savePMatFieldnames(), PLearn::VecStatsCollector::setFieldNames(), PLearn::PRandom::shuffleElements(), PLearn::TMat< T >::subMat(), PLearn::TVec< T >::subVec(), target, PLearn::tostring(), and PLearn::VecStatsCollector::update().

Referenced by declareMethods().

```cpp
{
    int len = dataset.length();
    Var layer = layers[which_layer];
    int layersize = layer->size();
    Mat actstats(1+layersize,6);
    actstats.fill(0.);
    TVec<string> actstatsfields(6);
    actstatsfields[0] = "E[act]";
    actstatsfields[1] = "E[act^2]";
    actstatsfields[2] = "[0,.25)";
    actstatsfields[3] = "[.25,.50)";
    actstatsfields[4] = "[.50,.75)";
    actstatsfields[5] = "[.75,1.00]";

    // build the list of indexes of the units for which we want to keep bivariate statistics
    // we will take the nfirstunits first units, and notherunits at random from the rest.
    // resulting list of indices will be put in unitindexes.
    TVec<int> unitindexes(0,nfirstunits-1,1);
    if(notherunits>0)
    {
        // candidates are the remaining units nfirstunits..layersize-1 (range bounds are inclusive)
        TVec<int> randomindexes(nfirstunits, layersize-1, 1);
        PRandom rnd;
        rnd.shuffleElements(randomindexes);
        randomindexes = randomindexes.subVec(0,notherunits);
        unitindexes = concat(unitindexes, randomindexes);
    }
    int nselectunits = unitindexes.length();
    Vec selectedactivations(nselectunits); // will hold the activations of the selected units
    TVec<string> fieldnames(nselectunits);
    for(int k=0; k<nselectunits; k++)
        fieldnames[k] = tostring(unitindexes[k]);

    VecStatsCollector stcol;
    stcol.maxnvalues = 20;
    stcol.compute_covariance = true;
    stcol.setFieldNames(fieldnames);
    stcol.build();

    const int nbins = 5;
    // bivariate nbins*nbins histograms will be computed for each of the nselectunits*nselectunits pairs of units
    Mat bihist(nselectunits*nselectunits, nbins*nbins);
    bihist.fill(0.);
    TVec<string> bihistfields(nbins*nbins);
    for(int k=0; k<nbins*nbins; k++)
        bihistfields[k] = tostring(1+k/nbins)+","+tostring(1+k%nbins);

    Vec input;
    Vec target;
    real weight;
    Vec output;
    for(int t=0; t<len; t++)
    {
        dataset.getExample(t, input, target, weight);
        computeOutput(input, output);
        Vec activations = layer->value;

        // collect simple univariate stats for all units
        for(int k=0; k<layersize; k++)
        {
            real act = activations[k];
            actstats(k+1,0) += act;
            actstats(k+1,1) += act*act;
            if(act<0.25)
                actstats(k+1,2)++;
            else if(act<0.50)
                actstats(k+1,3)++;
            else if(act<0.75)
                actstats(k+1,4)++;
            else
                actstats(k+1,5)++;
        }

        // collect more extensive stats for selected units
        for(int k=0; k<nselectunits; k++)
            selectedactivations[k] = activations[unitindexes[k]];
        stcol.update(selectedactivations);

        // collect bivariate histograms for selected units
        for(int i=0; i<nselectunits; i++)
        {
            real act_i = selectedactivations[i];
            int binpos_i = int(act_i*nbins);
            if(binpos_i<0)
                binpos_i = 0;
            else if(binpos_i>=nbins)
                binpos_i = nbins-1;
            for(int j=0; j<nselectunits; j++)
            {
                real act_j = selectedactivations[j];
                int binpos_j = int(act_j*nbins);
                if(binpos_j<0)
                    binpos_j = 0;
                else if(binpos_j>=nbins)
                    binpos_j = nbins-1;
                bihist(i*nselectunits+j, nbins*binpos_i+binpos_j)++;
            }
        }
    }

    stcol.finalize();
    PLearn::save(filebasename+"_selected_statscol.psave", stcol);

    bihist *= 1./len;
    string pmatfilename = filebasename+"_selected_bihist.pmat";
    savePMat(pmatfilename, bihist);
    savePMatFieldnames(pmatfilename, bihistfields);

    actstats *= 1./len;
    // row 0 holds the mean of each statistic over all units
    Vec meanvec = actstats(0);
    columnMean(actstats.subMat(1,0,layersize,6), meanvec);
    pmatfilename = filebasename+"_all_simplestats.pmat";
    savePMat(pmatfilename, actstats);
    savePMatFieldnames(pmatfilename, actstatsfields);
}
```
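The unit selection described for computeAndSaveLayerActivationStats keeps the first nfirstunits units and adds notherunits units drawn at random from the remaining ones. This can be sketched with the standard library, with the following substitutions and assumptions:

- std::mt19937 and std::shuffle stand in for PLearn's PRandom::shuffleElements.
- selectUnits is a hypothetical helper.
- nfirstunits + notherunits is assumed not to exceed the layer size.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <random>
#include <vector>

// Returns units 0..nfirstunits-1 followed by notherunits distinct indices
// drawn without replacement from nfirstunits..layersize-1.
std::vector<int> selectUnits(int layersize, int nfirstunits, int notherunits,
                             unsigned seed)
{
    assert(nfirstunits >= 0 && notherunits >= 0);
    assert(nfirstunits + notherunits <= layersize);
    std::vector<int> units(nfirstunits);
    std::iota(units.begin(), units.end(), 0);          // 0, 1, ..., nfirstunits-1
    std::vector<int> rest(layersize - nfirstunits);
    std::iota(rest.begin(), rest.end(), nfirstunits);   // nfirstunits, ..., layersize-1
    std::mt19937 rng(seed);
    std::shuffle(rest.begin(), rest.end(), rng);        // random order of the remaining units
    units.insert(units.end(), rest.begin(), rest.begin() + notherunits);
    return units;
}
```

Shuffling the candidate list and taking a prefix gives a uniform sample without replacement, so no selected unit appears twice and none overlaps the first nfirstunits.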

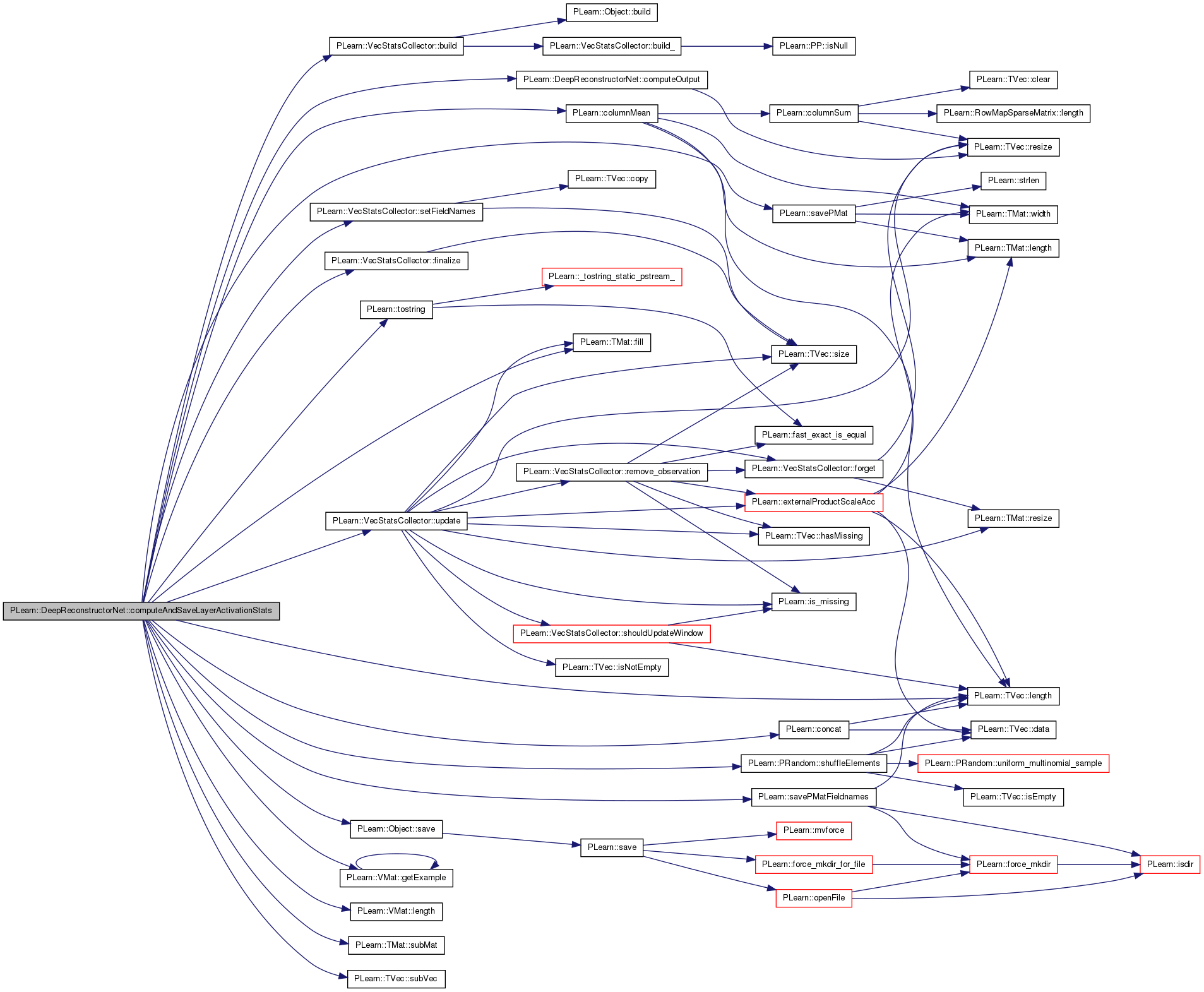

| void PLearn::DeepReconstructorNet::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 1003 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::length(), output_and_target_to_cost, PLearn::TVec< T >::resize(), and supervised_costs_names.

```cpp
{
    costs.resize(supervised_costs_names.length());
    output_and_target_to_cost->fprop(output&target, costs);
}
```

void PLearn::DeepReconstructorNet::computeOutput(const Vec &input, Vec &output) const [virtual]

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 997 of file DeepReconstructorNet.cc.

References compute_output, nout, and PLearn::TVec< T >::resize().

Referenced by computeAndSaveLayerActivationStats().

```cpp
{
    output.resize(nout);
    compute_output->fprop(input, output);
}
```

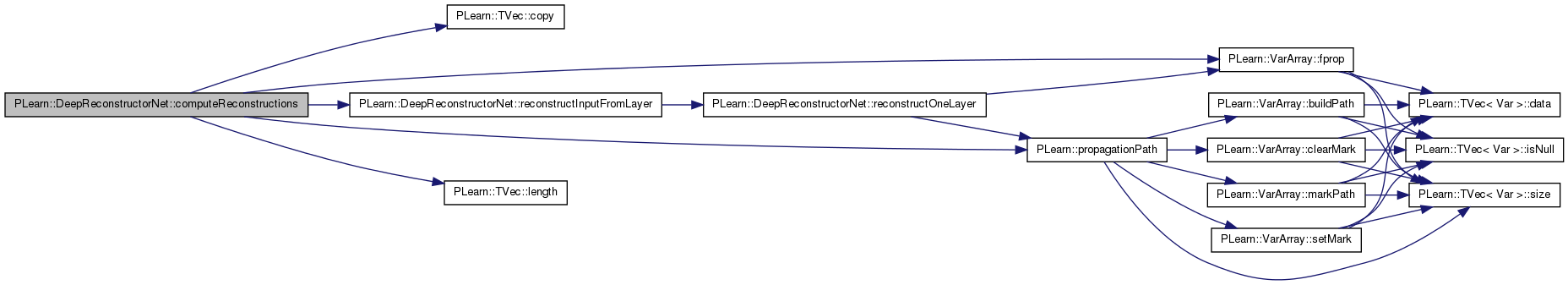

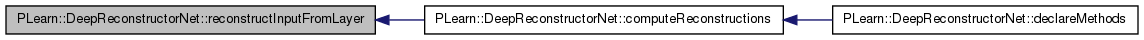

Definition at line 612 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::copy(), PLearn::VarArray::fprop(), layers, PLearn::TVec< T >::length(), PLearn::propagationPath(), and reconstructInputFromLayer().

Referenced by declareMethods().

```cpp
{
    int nlayers = layers.length();
    VarArray proppath = propagationPath(layers[0],layers[nlayers-1]);
    layers[0]->matValue << input;
    proppath.fprop();
    TVec<Mat> reconstructions(nlayers-2);
    for(int k=1; k<nlayers-1; k++)
    {
        reconstructInputFromLayer(k);
        reconstructions[k-1] = layers[0]->matValue.copy();
    }
    return reconstructions;
}
```

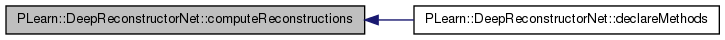

Definition at line 554 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::copy(), PLearn::VarArray::fprop(), layers, PLearn::TVec< T >::length(), and PLearn::propagationPath().

Referenced by declareMethods().

```cpp
{
    int nlayers = layers.length();
    TVec<Mat> representations(nlayers);
    VarArray proppath = propagationPath(layers[0],layers[nlayers-1]);
    layers[0]->matValue << input;
    proppath.fprop();
    // perr << "Graph for computing representations" << endl;
    // displayVarGraph(proppath,true, 333, "repr");
    for(int k=0; k<nlayers; k++)
        representations[k] = layers[k]->matValue.copy();
    return representations;
}
```

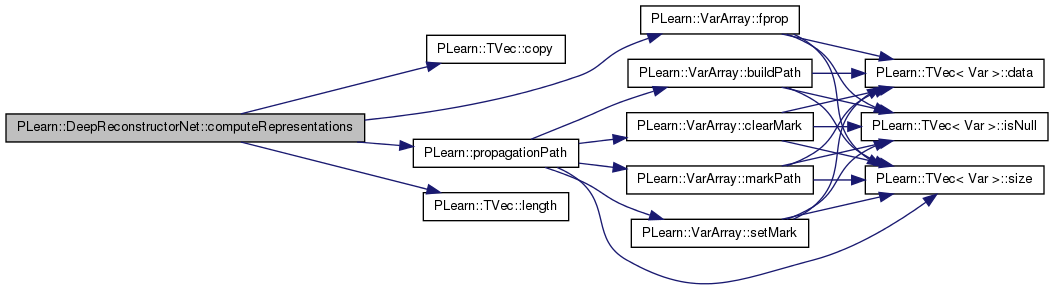

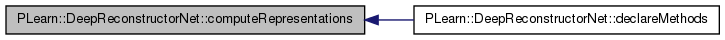

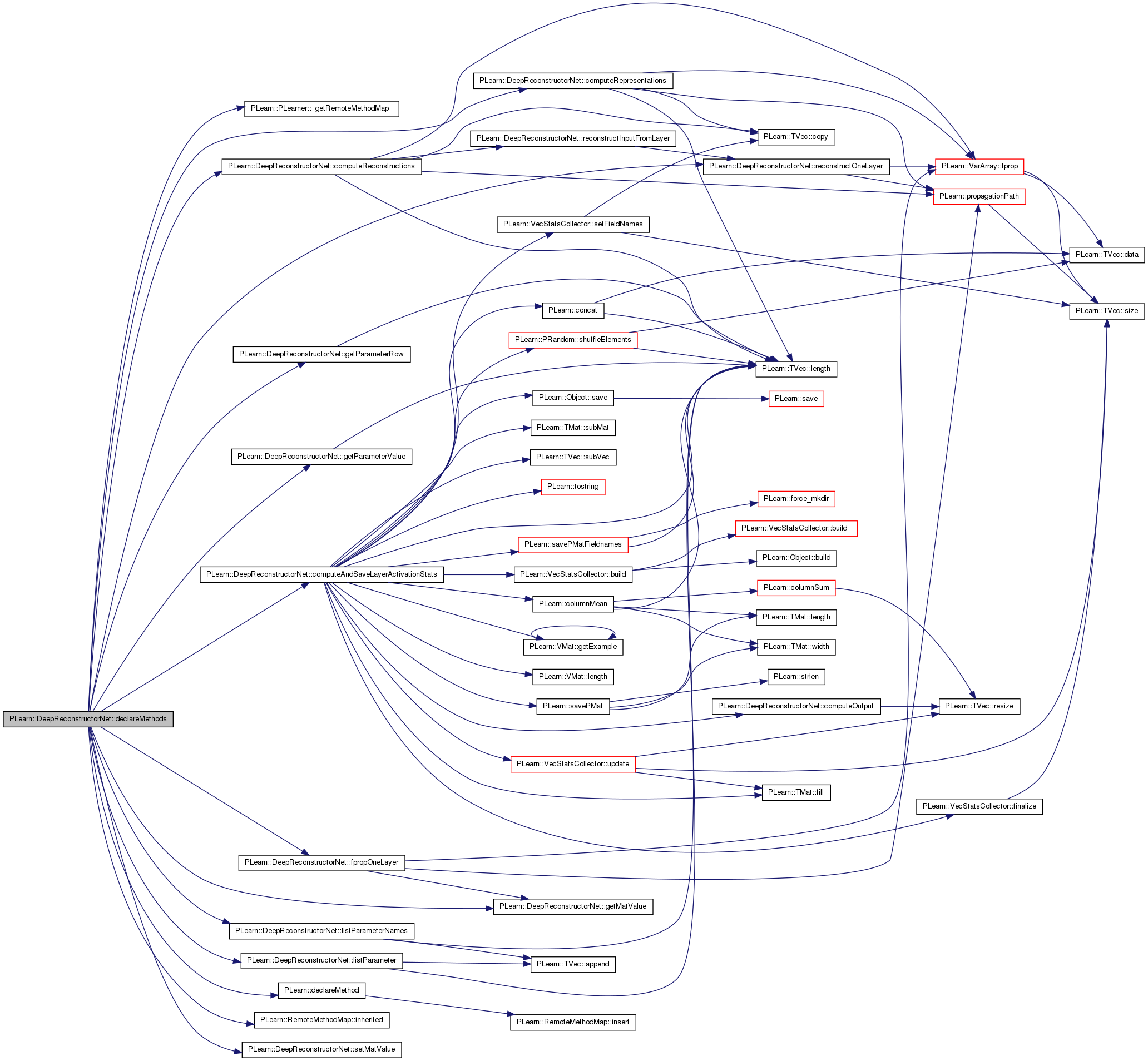

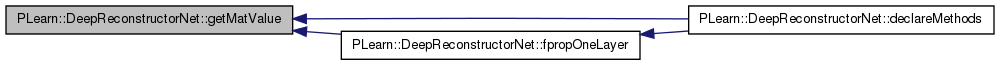

| void PLearn::DeepReconstructorNet::declareMethods | ( | RemoteMethodMap & | rmm | ) | [static, protected] |

Declare the methods that are remote-callable.

Reimplemented from PLearn::PLearner.

Definition at line 164 of file DeepReconstructorNet.cc.

References PLearn::PLearner::_getRemoteMethodMap_(), computeAndSaveLayerActivationStats(), computeReconstructions(), computeRepresentations(), PLearn::declareMethod(), fpropOneLayer(), getMatValue(), getParameterRow(), getParameterValue(), PLearn::RemoteMethodMap::inherited(), listParameter(), listParameterNames(), reconstructOneLayer(), and setMatValue().

```cpp
{
    rmm.inherited(inherited::_getRemoteMethodMap_());

    declareMethod(rmm,
                  "getParameterValue",
                  &DeepReconstructorNet::getParameterValue,
                  (BodyDoc("Returns the matValue of the parameter variable with the given name"),
                   ArgDoc("varname", "name of the variable searched for"),
                   RetDoc("Returns the value of the parameter as a Mat")));

    declareMethod(rmm,
                  "getParameterRow",
                  &DeepReconstructorNet::getParameterRow,
                  (BodyDoc("Returns the nth row of the matValue of the parameter variable with the given name"),
                   ArgDoc("varname", "name of the variable searched for"),
                   ArgDoc("n", "row number"),
                   RetDoc("Returns the nth row of the value of the parameter as a Vec")));

    declareMethod(rmm,
                  "listParameterNames",
                  &DeepReconstructorNet::listParameterNames,
                  (BodyDoc("Returns a list of the names of the parameters"),
                   RetDoc("Returns a list of the names of the parameters")));

    declareMethod(rmm,
                  "listParameter",
                  &DeepReconstructorNet::listParameter,
                  (BodyDoc("Returns a list of the parameters"),
                   RetDoc("Returns a list of the parameter values (the matValue of each parameter)")));

    declareMethod(rmm,
                  "computeRepresentations",
                  &DeepReconstructorNet::computeRepresentations,
                  (BodyDoc("Compute the representation of each hidden layer"),
                   ArgDoc("input", "the input"),
                   RetDoc("The representations")));

    declareMethod(rmm,
                  "computeReconstructions",
                  &DeepReconstructorNet::computeReconstructions,
                  (BodyDoc("Compute the reconstructions of the input of each hidden layer"),
                   ArgDoc("input", "the input"),
                   RetDoc("The reconstructions")));

    declareMethod(rmm,
                  "getMatValue",
                  &DeepReconstructorNet::getMatValue,
                  (BodyDoc("Returns the matValue of the given layer"),
                   ArgDoc("layer", "index of the layer"),
                   RetDoc("the matValue")));

    declareMethod(rmm,
                  "setMatValue",
                  &DeepReconstructorNet::setMatValue,
                  (BodyDoc(""),
                   ArgDoc("layer", "index of the layer"),
                   ArgDoc("values", "the values")));

    declareMethod(rmm,
                  "fpropOneLayer",
                  &DeepReconstructorNet::fpropOneLayer,
                  (BodyDoc("Propagates the given layer to the next one"),
                   ArgDoc("layer", "index of the layer"),
                   RetDoc("the matValue of layer+1")));

    declareMethod(rmm,
                  "reconstructOneLayer",
                  &DeepReconstructorNet::reconstructOneLayer,
                  (BodyDoc(""),
                   ArgDoc("layer", "index of the layer"),
                   RetDoc("")));

    declareMethod(rmm,
                  "computeAndSaveLayerActivationStats",
                  &DeepReconstructorNet::computeAndSaveLayerActivationStats,
                  (BodyDoc("computeAndSaveLayerActivationStats will compute statistics (univariate and bivariate)\n"
                           "of the post-nonlinearity activations of a hidden layer on a given dataset:\n"
                           "\n"
                           " - It will compute a matrix of simple statistics for all units of that layer and \n"
                           "   save it in filebasename_all_simplestats.pmat \n"
                           " - It will also select a subset of the units made of the first nfirstunits units \n"
                           "   and of notherunits randomly selected units among the others.\n"
                           "   For this selected subset more extensive statistics are computed and saved:\n"
                           "   + a VecStatsCollector collecting univariate histograms and bivariate\n"
                           "     covariance will be saved in filebasename_selected_statscol.psave\n"
                           "   + a matrix of bivariate histograms will be saved as \n"
                           "     filebasename_selected_bihist.pmat \n"
                           "     Row i*nselectedunits+j of that matrix will contain the 5*5 bivariate\n"
                           "     histogram for the activations of selected_unit_i vs selected_unit_j.\n"
                           "\n"
                           "which_layer: 1 means first hidden layer, 2, second hidden layer, etc... \n"),
                   ArgDoc("dataset", "the data vmatrix to compute activations on"),
                   ArgDoc("which_layer", "the layer (1 for first hidden layer)"),
                   ArgDoc("filebasename", "basename for generated files"),
                   ArgDoc("nfirstunits", "number of first units to select for extensive stats."),
                   ArgDoc("notherunits", "number of other units to select for extensive stats.")
                      ));
}
```
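The bivariate histogram layout documented above (row i*nselectedunits+j, 5*5 bins) follows from the binning in computeAndSaveLayerActivationStats. An activation act falls in bin int(act*nbins), clamped to [0, nbins-1], and the cell for the bin pair (bin_i, bin_j) is column nbins*bin_i+bin_j. The following is a standalone sketch over a flat row-major std::vector, with these differences from the original:

- activationBin and updateBivariateHistogram are hypothetical helper names.
- The sketch only accumulates counts; the original additionally divides the whole matrix by the number of examples at the end.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Maps an activation to one of nbins equal-width bins over [0,1], clamping
// values outside that range into the first or last bin.
int activationBin(double act, int nbins)
{
    int b = static_cast<int>(act * nbins);
    if (b < 0) b = 0;
    else if (b >= nbins) b = nbins - 1;
    return b;
}

// Adds one example to a row-major matrix of n*n rows and nbins*nbins columns:
// row i*n+j holds the joint histogram of units i and j,
// column nbins*bin_i+bin_j the cell for that pair of bins.
void updateBivariateHistogram(std::vector<double>& bihist,
                              const std::vector<double>& acts, int nbins)
{
    const int n = static_cast<int>(acts.size());
    const int ncols = nbins * nbins;
    assert(bihist.size() == static_cast<std::size_t>(n * n * ncols));
    for (int i = 0; i < n; ++i) {
        const int bi = activationBin(acts[i], nbins);
        for (int j = 0; j < n; ++j) {
            const int bj = activationBin(acts[j], nbins);
            bihist[(i * n + j) * ncols + nbins * bi + bj] += 1.0;
        }
    }
}
```

Each example adds exactly one count to every row, so after dividing by the number of examples each row sums to 1.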

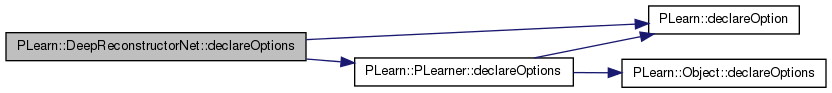

| void PLearn::DeepReconstructorNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 65 of file DeepReconstructorNet.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), fine_tuning_optimizer, group_sizes, hidden_for_reconstruction, layers, PLearn::OptionBase::learntoption, minibatch_size, reconstructed_layers, reconstruction_costs, reconstruction_costs_names, reconstruction_optimizer, reconstruction_optimizers, supervised_costs, supervised_costs_names, supervised_costvec, supervised_min_improvement_rate, supervised_nepochs, supervised_optimizer, target, unsupervised_min_improvement_rate, and unsupervised_nepochs.

```cpp
{
    // ### Declare all of this object's options here.
    // ### For the "flags" of each option, you should typically specify
    // ### one of OptionBase::buildoption, OptionBase::learntoption or
    // ### OptionBase::tuningoption. If you don't provide one of these three,
    // ### this option will be ignored when loading values from a script.
    // ### You can also combine flags, for example with OptionBase::nosave:
    // ### (OptionBase::buildoption | OptionBase::nosave)

    declareOption(ol, "unsupervised_nepochs", &DeepReconstructorNet::unsupervised_nepochs,
                  OptionBase::buildoption,
                  "unsupervised_nepochs[k] contains a pair of integers giving the minimum and\n"
                  "maximum number of epochs for the training of layer k+1 (taking layer k\n"
                  "as input). Thus k=0 corresponds to the training of the first hidden layer.");

    declareOption(ol, "unsupervised_min_improvement_rate", &DeepReconstructorNet::unsupervised_min_improvement_rate,
                  OptionBase::buildoption,
                  "unsupervised_min_improvement_rate[k] should contain the minimum required relative improvement rate\n"
                  "for the training of layer k+1 (taking input from layer k).");

    declareOption(ol, "supervised_nepochs", &DeepReconstructorNet::supervised_nepochs,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "supervised_min_improvement_rate", &DeepReconstructorNet::supervised_min_improvement_rate,
                  OptionBase::buildoption,
                  "supervised_min_improvement_rate contains the minimum required relative improvement rate\n"
                  "for the training of the supervised layer.");

    declareOption(ol, "layers", &DeepReconstructorNet::layers,
                  OptionBase::buildoption,
                  "layers[0] is the input variable; the last layer is the final output layer");

    declareOption(ol, "reconstruction_costs", &DeepReconstructorNet::reconstruction_costs,
                  OptionBase::buildoption,
                  "reconstruction_costs[k] is the reconstruction cost for layers[k]");

    declareOption(ol, "reconstruction_costs_names", &DeepReconstructorNet::reconstruction_costs_names,
                  OptionBase::buildoption,
                  "The names to be given to each of the elements of a vector cost");

    declareOption(ol, "reconstructed_layers", &DeepReconstructorNet::reconstructed_layers,
                  OptionBase::buildoption,
                  "reconstructed_layers[k] is the reconstruction of layer k from hidden_for_reconstruction[k]\n"
                  "(which corresponds to a version of layer k+1). See further explanation of hidden_for_reconstruction.\n");

    declareOption(ol, "hidden_for_reconstruction", &DeepReconstructorNet::hidden_for_reconstruction,
                  OptionBase::buildoption,
                  "reconstructed_layers[k] is reconstructed from hidden_for_reconstruction[k]\n"
                  "which corresponds to a version of layer k+1.\n"
                  "hidden_for_reconstruction[k] can however be different from layers[k+1] \n"
                  "since e.g. layers[k+1] may be obtained by transforming a clean input, while \n"
                  "hidden_for_reconstruction[k] may be obtained by transforming a corrupted input.\n");

    declareOption(ol, "reconstruction_optimizers", &DeepReconstructorNet::reconstruction_optimizers,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "reconstruction_optimizer", &DeepReconstructorNet::reconstruction_optimizer,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "target", &DeepReconstructorNet::target,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "supervised_costs", &DeepReconstructorNet::supervised_costs,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "supervised_costvec", &DeepReconstructorNet::supervised_costvec,
                  OptionBase::learntoption,
                  "");

    declareOption(ol, "supervised_costs_names", &DeepReconstructorNet::supervised_costs_names,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "minibatch_size", &DeepReconstructorNet::minibatch_size,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "supervised_optimizer", &DeepReconstructorNet::supervised_optimizer,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "fine_tuning_optimizer", &DeepReconstructorNet::fine_tuning_optimizer,
                  OptionBase::buildoption,
                  "");

    declareOption(ol, "group_sizes", &DeepReconstructorNet::group_sizes,
                  OptionBase::buildoption,
                  "");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```
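The unsupervised_nepochs, unsupervised_min_improvement_rate and supervised_min_improvement_rate options combine a (minimum, maximum) epoch budget with a minimum relative improvement rate. The training loop that consumes them (train() and trainHiddenLayer()) is not shown on this page, so the following is only a sketch of how such a stopping rule could work. It rests on two labelled assumptions:

- The rate is assumed to be (previous cost - current cost) / |previous cost| between consecutive epochs.
- trainWithEarlyStopping and its epoch callback are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <utility>

// Hypothetical sketch: runs between nepochs.first and nepochs.second epochs.
// ASSUMPTION: improvement rate = (prev - cur) / |prev| on per-epoch costs.
// Returns the number of epochs actually run.
int trainWithEarlyStopping(const std::function<double()>& train_one_epoch,
                           std::pair<int, int> nepochs,
                           double min_improvement_rate)
{
    assert(nepochs.first <= nepochs.second);
    double prev_cost = 0.0;
    int epoch = 0;
    while (epoch < nepochs.second) {
        const double cost = train_one_epoch();  // average cost of this epoch
        ++epoch;
        // Test the rule only once the minimum budget is reached and a
        // previous, non-zero cost exists to compare against.
        if (epoch > 1 && epoch >= nepochs.first && prev_cost != 0.0) {
            const double rate = (prev_cost - cost) / std::fabs(prev_cost);
            if (rate < min_improvement_rate)
                break;
        }
        prev_cost = cost;
    }
    return epoch;
}
```

Under this assumed rule, the constructor's default supervised_min_improvement_rate of -10000 would practically never trigger, so supervised training would simply run to its maximum epoch count.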

static const PPath& PLearn::DeepReconstructorNet::declaringFile() [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 232 of file DeepReconstructorNet.h.


DeepReconstructorNet * PLearn::DeepReconstructorNet::deepCopy(CopiesMap &copies) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepReconstructorNet.cc.

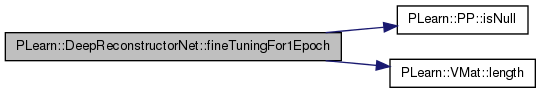

| void PLearn::DeepReconstructorNet::fineTuningFor1Epoch | ( | ) |

Definition at line 629 of file DeepReconstructorNet.cc.

References fine_tuning_optimizer, PLearn::PP< T >::isNull(), PLearn::VMat::length(), minibatch_size, PLearn::PLearner::train_set, and PLearn::PLearner::train_stats.

Referenced by train().

```cpp
{
    if(train_stats.isNull())
        train_stats = new VecStatsCollector();
    int l = train_set->length();
    fine_tuning_optimizer->reset();
    fine_tuning_optimizer->nstages = l/minibatch_size;
    fine_tuning_optimizer->optimizeN(*train_stats);
}
```
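fineTuningFor1Epoch() sets the optimizer's nstages to train_set length / minibatch_size using integer division, so one epoch is the number of complete minibatches. For example, assuming each stage consumes one minibatch, 1000 examples with minibatch_size 32 give 31 stages covering 992 examples. Whether the remaining 8 are ever visited depends on the optimizer, which is not shown here. The following sketches the arithmetic; stagesPerEpoch and leftoverExamples are hypothetical helper names.

```cpp
#include <cassert>

// Mirrors nstages = train_set length / minibatch_size (integer division).
int stagesPerEpoch(int train_length, int minibatch_size)
{
    assert(train_length >= 0 && minibatch_size > 0);
    return train_length / minibatch_size;
}

// Examples not covered by a complete minibatch, assuming each optimizer
// stage consumes exactly one minibatch.
int leftoverExamples(int train_length, int minibatch_size)
{
    return train_length - stagesPerEpoch(train_length, minibatch_size) * minibatch_size;
}
```

One consequence of the integer division: if train_set is smaller than minibatch_size, nstages is 0 and the call performs no optimization stage at all.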

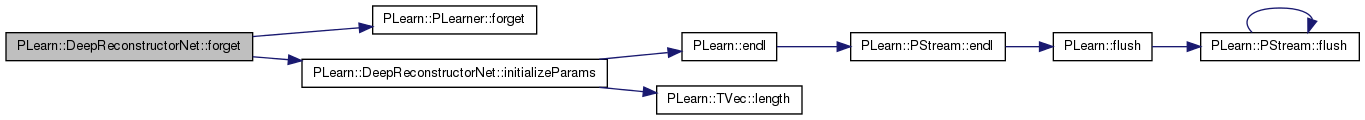

| void PLearn::DeepReconstructorNet::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).


Reimplemented from PLearn::PLearner.

Definition at line 413 of file DeepReconstructorNet.cc.

References PLearn::PLearner::forget(), and initializeParams().

```cpp
{
    inherited::forget();
    initializeParams();
}
```

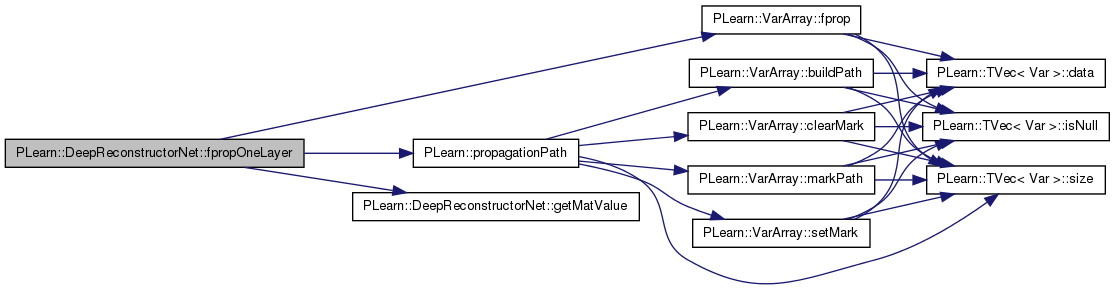

Definition at line 1067 of file DeepReconstructorNet.cc.

References PLearn::VarArray::fprop(), getMatValue(), layers, and PLearn::propagationPath().

Referenced by declareMethods().

```cpp
{
    VarArray proppath = propagationPath( layers[layer], layers[layer+1] );
    proppath.fprop();
    return getMatValue(layer+1);
}
```

Mat PLearn::DeepReconstructorNet::getMatValue(int layer)

Definition at line 1057 of file DeepReconstructorNet.cc.

References layers.

Referenced by declareMethods(), and fpropOneLayer().

```cpp
{
    return layers[layer]->matValue;
}
```

OptionList & PLearn::DeepReconstructorNet::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file DeepReconstructorNet.cc.

OptionMap & PLearn::DeepReconstructorNet::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file DeepReconstructorNet.cc.

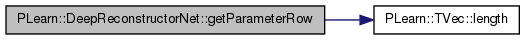

Returns the nth row of the matValue of the parameter variable with the given name.

Definition at line 1030 of file DeepReconstructorNet.cc.

References i, PLearn::TVec< T >::length(), parameters, and PLERROR.

Referenced by declareMethods().

```cpp
{
    for(int i=0; i<parameters.length(); i++)
        if(parameters[i]->getName() == varname)
            return parameters[i]->matValue(n);
    PLERROR("There is no parameter named %s", varname.c_str());
    return Vec(0);
}
```

Mat PLearn::DeepReconstructorNet::getParameterValue(const string &varname)

Returns the matValue of the parameter variable with the given name.

Definition at line 1020 of file DeepReconstructorNet.cc.

References i, PLearn::TVec< T >::length(), parameters, and PLERROR.

Referenced by declareMethods().

```cpp
{
    for(int i=0; i<parameters.length(); i++)
        if(parameters[i]->getName() == varname)
            return parameters[i]->matValue;
    PLERROR("There is no parameter named %s", varname.c_str());
    return Mat(0,0);
}
```

| RemoteMethodMap & PLearn::DeepReconstructorNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 56 of file DeepReconstructorNet.cc.

| TVec< string > PLearn::DeepReconstructorNet::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method).

Implements PLearn::PLearner.

Definition at line 1010 of file DeepReconstructorNet.cc.

References supervised_costs_names.

```cpp
{
    return supervised_costs_names;
}
```

| TVec< string > PLearn::DeepReconstructorNet::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1015 of file DeepReconstructorNet.cc.

References supervised_costs_names.

```cpp
{
    return supervised_costs_names;
}
```

| void PLearn::DeepReconstructorNet::initializeParams | ( | bool | set_seed = true | ) | [virtual] |

Definition at line 362 of file DeepReconstructorNet.cc.

References PLearn::endl(), i, PLearn::TVec< T >::length(), parameters, PLearn::perr, PLearn::PLearner::random_gen, and PLearn::PLearner::seed_.

Referenced by forget().

```cpp
{
    perr << "Initializing parameters..." << endl;
    if (set_seed && seed_ != 0)
        random_gen->manual_seed(seed_);
    for(int i=0; i<parameters.length(); i++)
        dynamic_cast<SourceVariable*>((Variable*)parameters[i])->randomInitialize(random_gen);
}
```
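initializeParams() reseeds random_gen from seed_ only when set_seed is true and seed_ is nonzero, then initializes every parameter, in order, from that single generator. Two learners with the same architecture and the same nonzero seed should therefore start from identical weights, while seed_ == 0 leaves the generator where it was. The sketch below reproduces only that control flow, assuming std::mt19937 in place of PLearn's random generator; Params and initializeParamsSketch are hypothetical names, and the uniform range is an arbitrary illustrative choice, not necessarily what randomInitialize() draws from.

```cpp
#include <cassert>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical stand-in for one parameter matrix, stored as a flat vector.
using Params = std::vector<double>;

// Mirrors initializeParams(): reseed only when asked to and only for a
// nonzero seed, then fill every parameter block, in order, from the same
// generator. The range [-0.1, 0.1] is an assumption made for illustration.
void initializeParamsSketch(std::vector<Params>& params, std::mt19937& gen,
                            std::uint32_t seed, bool set_seed = true) {
    if (set_seed && seed != 0)
        gen.seed(seed);
    std::uniform_real_distribution<double> dist(-0.1, 0.1);
    for (Params& p : params)
        for (double& x : p)
            x = dist(gen);
}
```

Because every block is drawn from one shared generator, changing the number or order of parameters changes the initial values of all later blocks, even with the same seed.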

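| TVec< Mat > PLearn::DeepReconstructorNet::listParameter | ( | ) |

Returns a list of the parameters' values: the matValue of every variable in parameters, in the order in which they are stored.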

Definition at line 1048 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::append(), i, PLearn::TVec< T >::length(), and parameters.

Referenced by declareMethods().

```cpp
{
    TVec<Mat> matList(0);
    for (int i=0; i<parameters.length(); i++)
        matList.append(parameters[i]->matValue);
    return matList;
}
```

| TVec< string > PLearn::DeepReconstructorNet::listParameterNames | ( | ) |

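Returns a list of the names of the parameters (in the same order as in listParameter()). Parameters with an empty name are skipped, so the two lists line up index by index only when every parameter is named.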

Definition at line 1039 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::append(), i, PLearn::TVec< T >::length(), and parameters.

Referenced by declareMethods().

```cpp
{
    TVec<string> nameListe(0);
    for (int i=0; i<parameters.length(); i++)
        if (parameters[i]->getName() != "")
            nameListe.append(parameters[i]->getName());
    return nameListe;
}
```
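Since listParameterNames() drops unnamed parameters while listParameter() does not, pairing names[i] with values[i] from the two separate lists can attach a name to the wrong matrix. A safer pattern is to walk the parameter array once and keep each name with its own value. The sketch below illustrates this with standard containers; NamedParam, listNames and namedValues are hypothetical names standing in for PLearn's Var-based parameters.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a parameter variable: a name plus its values.
struct NamedParam {
    std::string name;
    std::vector<double> value;
};

// Same filtering rule as listParameterNames(): unnamed entries are skipped.
std::vector<std::string> listNames(const std::vector<NamedParam>& params) {
    std::vector<std::string> names;
    for (const NamedParam& p : params)
        if (!p.name.empty())
            names.push_back(p.name);
    return names;
}

// Keeps each named parameter together with its own value in a single pass,
// so the result stays aligned even when some parameters have no name.
std::vector<std::pair<std::string, std::vector<double>>>
namedValues(const std::vector<NamedParam>& params) {
    std::vector<std::pair<std::string, std::vector<double>>> out;
    for (const NamedParam& p : params)
        if (!p.name.empty())
            out.emplace_back(p.name, p.value);
    return out;
}
```

With parameters {"W1", "", "b1"}, listNames() returns two names, so position 1 holds "b1" while position 1 of the full value list belongs to the unnamed parameter.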

| void PLearn::DeepReconstructorNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 373 of file DeepReconstructorNet.cc.

References compute_layer, compute_output, PLearn::deepCopyField(), fine_tuning_optimizer, fullcost, group_sizes, hidden_for_reconstruction, layers, PLearn::PLearner::makeDeepCopyFromShallowCopy(), output_and_target_to_cost, parameters, reconstructed_layers, reconstruction_costs, reconstruction_optimizer, reconstruction_optimizers, supervised_costs, supervised_costs_names, supervised_costvec, supervised_min_improvement_rate, supervised_nepochs, supervised_optimizer, target, unsupervised_min_improvement_rate, unsupervised_nepochs, and PLearn::varDeepCopyField().

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);
    deepCopyField(unsupervised_nepochs, copies);
    deepCopyField(unsupervised_min_improvement_rate, copies);
    deepCopyField(supervised_nepochs, copies);
    deepCopyField(supervised_min_improvement_rate, copies);
    deepCopyField(layers, copies);
    deepCopyField(reconstruction_costs, copies);
    deepCopyField(reconstructed_layers, copies);
    deepCopyField(hidden_for_reconstruction, copies);
    deepCopyField(reconstruction_optimizers, copies);
    deepCopyField(reconstruction_optimizer, copies);
    varDeepCopyField(target, copies);
    deepCopyField(supervised_costs, copies);
    varDeepCopyField(supervised_costvec, copies);
    deepCopyField(supervised_costs_names, copies);
    varDeepCopyField(fullcost, copies);
    deepCopyField(parameters,copies);
    deepCopyField(supervised_optimizer, copies);
    deepCopyField(fine_tuning_optimizer, copies);
    deepCopyField(compute_layer, copies);
    deepCopyField(compute_output, copies);
    deepCopyField(output_and_target_to_cost, copies);
    // deepCopyField(outmat, copies); // deep copying vmatrices, especially if opened in write mode, is probably a bad idea
    deepCopyField(group_sizes, copies);
}
```
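makeDeepCopyFromShallowCopy() completes PLearn's two-step copy: after a member-by-member (shallow) copy, pointer-like members still refer to the original's objects, and deepCopyField()/varDeepCopyField() replace them with copies. The CopiesMap records what has already been copied, so an object reachable through several members is copied once and remains shared inside the clone. The sketch below shows that memoization with std::shared_ptr and std::map; Node, NetSketch, CopiesMapSketch and deepCopyFieldSketch are hypothetical names, not PLearn's implementation.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical shared object (stands in for a Var, Func or optimizer).
struct Node {
    double value = 0.0;
};

// Maps each already-copied original to its copy, in the spirit of CopiesMap.
using CopiesMapSketch = std::map<const Node*, std::shared_ptr<Node>>;

// Deep-copies one smart-pointer field, reusing an earlier copy when the same
// original was already reached through another field.
void deepCopyFieldSketch(std::shared_ptr<Node>& field, CopiesMapSketch& copies) {
    if (!field)
        return;
    auto it = copies.find(field.get());
    if (it != copies.end()) {
        field = it->second;                      // already copied: share it
        return;
    }
    auto copy = std::make_shared<Node>(*field);  // first visit: clone
    copies[field.get()] = copy;
    field = copy;
}

// Hypothetical learner with two fields that may point to the same object.
struct NetSketch {
    std::shared_ptr<Node> a, b;

    // Called on a fresh shallow copy: replace every field by a deep copy.
    void makeDeepCopyFromShallowCopy(CopiesMapSketch& copies) {
        deepCopyFieldSketch(a, copies);
        deepCopyFieldSketch(b, copies);
    }
};
```

The original code deliberately skips outmat, so a copy keeps referring to the same output VMatrices; its comment notes that deep-copying VMatrices, especially ones opened in write mode, is probably a bad idea.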

| int PLearn::DeepReconstructorNet::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). The current implementation is an unfinished stub that always returns 0.

Implements PLearn::PLearner.

Definition at line 404 of file DeepReconstructorNet.cc.

```cpp
{
    // Compute and return the size of this learner's output (which typically
    // may depend on its inputsize(), targetsize() and set options).
    // TODO: return the correct value here
    return 0;
}
```

| void PLearn::DeepReconstructorNet::prepareForFineTuning | ( | ) |

Definition at line 540 of file DeepReconstructorNet.cc.

References PLearn::endl(), fine_tuning_optimizer, layers, minibatch_size, PLearn::perr, PLearn::sumOf(), supervised_costvec, target, and PLearn::PLearner::train_set.

Referenced by train().

```cpp
{
    Func f(layers[0]&target, supervised_costvec);
    Var totalcost = sumOf(train_set, f, minibatch_size);
    perr << "Function used for fine tuning" << endl;
    // displayFunction(f, true);
    // displayVarGraph(supervised_costvec);
    // displayVarGraph(totalcost);
    VarArray params = totalcost->parents();
    fine_tuning_optimizer->setToOptimize(params, totalcost);
}
```

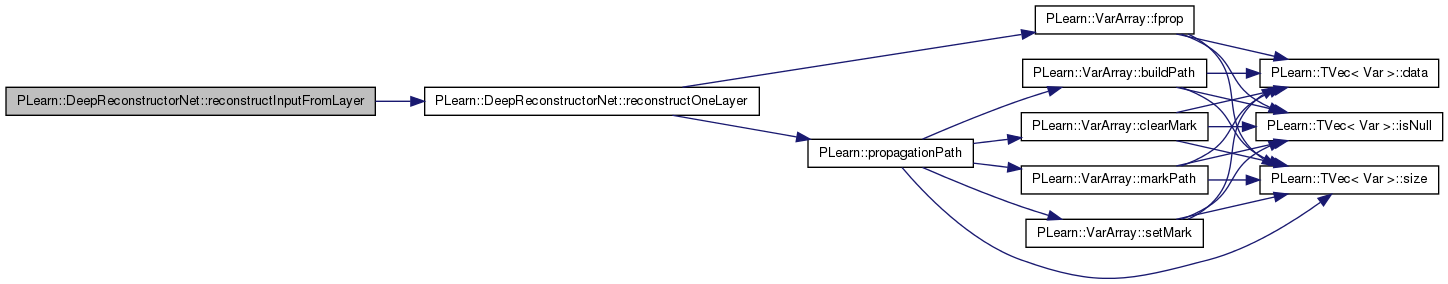

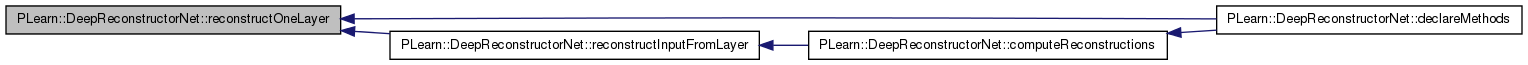

| void PLearn::DeepReconstructorNet::reconstructInputFromLayer | ( | int | layer | ) |

Definition at line 569 of file DeepReconstructorNet.cc.

References layers, and reconstructOneLayer().

Referenced by computeReconstructions().

```cpp
{
    for(int k=layer; k>0; k--)
        layers[k-1]->matValue << reconstructOneLayer(k);
    /*
    for(int k=layer; k>0; k--)
    {
        VarArray proppath = propagationPath(hidden_for_reconstruction[k-1],reconstructed_layers[k-1]);
        perr << "RECONSTRUCTING reconstructed_layers["<<k-1
             << "] from layers["<< k
             << "] " << endl;
        perr << "proppath:" << endl;
        perr << proppath << endl;
        perr << "proppath length: " << proppath.length() << endl;
        //perr << ">>>> reconstructed layers before fprop:" << endl;
        //perr << reconstructed_layers[k-1]->matValue << endl;
        proppath.fprop();
        //perr << ">>>> reconstructed layers after fprop:" << endl;
        //perr << reconstructed_layers[k-1]->matValue << endl;
        perr << "Graph for reconstructing layer " << k-1 << " from layer " << k << endl;
        displayVarGraph(proppath,true, 333, "reconstr");
        //WARNING MEGA-HACK
        if (reconstructed_layers[k-1].width() == 2*layers[k-1].width())
        {
            Mat temp(layers[k-1].length(), layers[k-1].width());
            for (int n=0; n < layers[k-1].length(); n++)
                for (int i=0; i < layers[k-1].width(); i++)
                    temp(n,i) = reconstructed_layers[k-1]->matValue(n,i*2);
            temp >> layers[k-1]->matValue;
        }
        //END OF MEGA-HACK
        else
            reconstructed_layers[k-1]->matValue >> layers[k-1]->matValue;
    }
    */
}
```

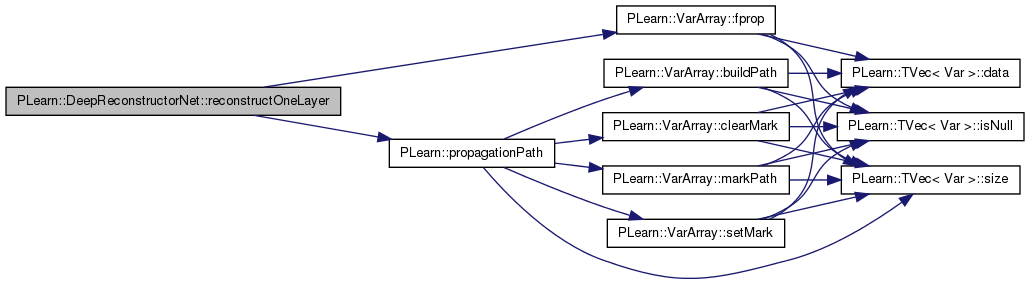

Definition at line 1074 of file DeepReconstructorNet.cc.

References PLearn::VarArray::fprop(), hidden_for_reconstruction, layers, PLearn::propagationPath(), and reconstructed_layers.

Referenced by declareMethods(), and reconstructInputFromLayer().

```cpp
{
    layers[layer]->matValue >> hidden_for_reconstruction[layer-1]->matValue;
    VarArray proppath = propagationPath(hidden_for_reconstruction[layer-1],reconstructed_layers[layer-1]);
    proppath.fprop();
    return reconstructed_layers[layer-1]->matValue;
}
```
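reconstructInputFromLayer(layer) walks back down the stack: for k = layer, ..., 1 it calls reconstructOneLayer(k), which loads layers[k] into hidden_for_reconstruction[k-1] and forward-propagates to reconstructed_layers[k-1], and then copies that result into layers[k-1], which feeds the next step down. The sketch below shows the same top-down chaining with plain std::function decoders acting on single vectors rather than Var graphs acting on minibatch matrices; Decoder and reconstructInputFromLayerSketch are hypothetical names, and decoders[k-1] plays the role of the path from hidden_for_reconstruction[k-1] to reconstructed_layers[k-1].

```cpp
#include <cassert>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
// Hypothetical per-layer decoder: maps layer k's values to layer k-1's.
using Decoder = std::function<Vec(const Vec&)>;

// layers[0] is the input layer; decoders[k-1] reconstructs layers[k-1] from
// layers[k]. Each reconstruction overwrites the layer below, which is then
// decoded in turn, until the input layer has been rebuilt.
void reconstructInputFromLayerSketch(std::vector<Vec>& layers,
                                     const std::vector<Decoder>& decoders,
                                     int layer) {
    assert(layer >= 0 && layer < static_cast<int>(layers.size()));
    assert(static_cast<int>(decoders.size()) >= layer);
    for (int k = layer; k > 0; --k)
        layers[k - 1] = decoders[k - 1](layers[k]);
}
```

As in the original, the activations stored below the starting layer are overwritten; a caller that still needs them should save them before reconstructing.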

Definition at line 1062 of file DeepReconstructorNet.cc.

References layers.

Referenced by declareMethods().

```cpp
{
    layers[layer]->matValue << values;
}
```

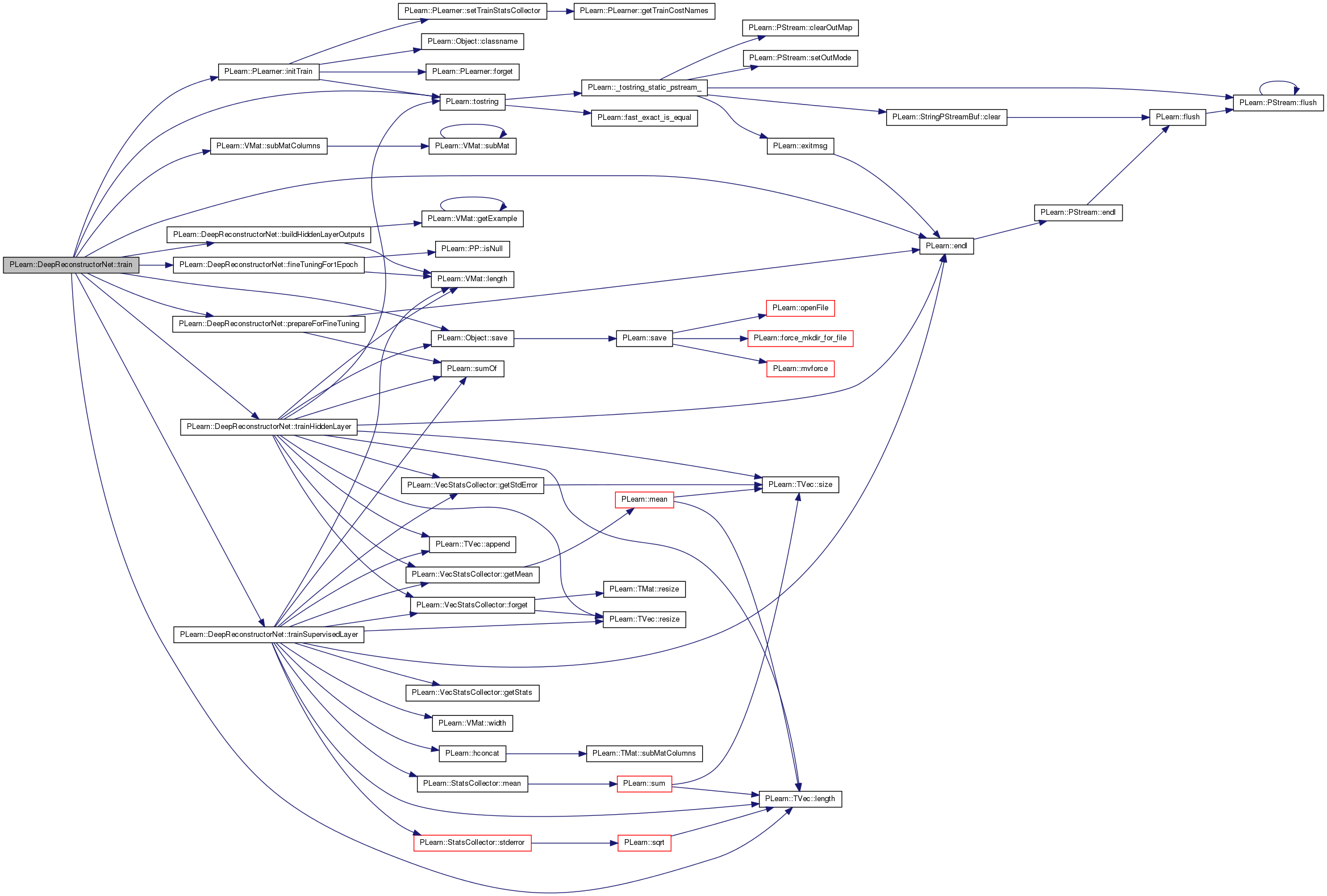

| void PLearn::DeepReconstructorNet::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 429 of file DeepReconstructorNet.cc.

References buildHiddenLayerOutputs(), PLearn::endl(), PLearn::PLearner::expdir, fineTuningFor1Epoch(), PLearn::PLearner::initTrain(), layers, PLearn::TVec< T >::length(), PLearn::PLearner::nstages, outmat, PLearn::perr, PLearn::PStream::plearn_binary, prepareForFineTuning(), reconstruction_costs, PLearn::Object::save(), PLearn::PLearner::stage, PLearn::VMat::subMatColumns(), supervised_nepochs, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, trainHiddenLayer(), and trainSupervisedLayer().

```cpp
{
    // The role of the train method is to bring the learner up to
    // stage==nstages, updating train_stats with training costs measured
    // on-line in the process.
    // This generic PLearner method does a number of standard things useful for
    // (almost) any learner, and returns 'false' if no training should take
    // place. See PLearner.h for more details.
    if (!initTrain())
        return;

    while(stage<nstages)
    {
        if(stage<1)
        {
            PPath outmatfname = expdir/"outmat";
            int nreconstructions = reconstruction_costs.length();
            int insize = train_set->inputsize();
            VMat inputs = train_set.subMatColumns(0,insize);
            VMat targets = train_set.subMatColumns(insize, train_set->targetsize());
            VMat dset = inputs;
            bool must_train_supervised_layer = supervised_nepochs.second>0;
            PLearn::save(expdir/"learner.psave", *this);
            for(int k=0; k<nreconstructions; k++)
            {
                trainHiddenLayer(k, dset);
                PLearn::save(expdir/"learner.psave", *this, PStream::plearn_binary, false);
                // 'if' is a hack to avoid precomputing last hidden layer if not needed
                if(k<nreconstructions-1 || must_train_supervised_layer)
                {
                    int width = layers[k+1].width();
                    outmat[k] = new FileVMatrix(outmatfname+tostring(k+1)+".pmat",0,width);
                    outmat[k]->defineSizes(width,0);
                    buildHiddenLayerOutputs(k, dset, outmat[k]);
                    dset = outmat[k];
                }
            }
            if(must_train_supervised_layer)
            {
                trainSupervisedLayer(dset, targets);
                PLearn::save(expdir/"learner.psave", *this);
            }
            for(int k=0; k<reconstruction_costs.length(); k++)
            {
                if(outmat[k].isNotNull())
                {
                    perr << "Closing outmat " << k+1 << endl;
                    outmat[k] = 0;
                }
            }
            perr << "\n\n*********************************************" << endl;
            perr << "**** Now performing fine tuning ****" << endl;
            perr << "********************************************* \n" << endl;
        }
        else
        {
            perr << "+++ Fine tuning stage " << stage+1 << " **" << endl;
            prepareForFineTuning();
            fineTuningFor1Epoch();
        }
        ++stage;
        train_stats->finalize(); // finalize statistics for this epoch
    }
    /*
    while(stage<nstages)
    {
        // clear statistics of previous epoch
        train_stats->forget();
        //... train for 1 stage, and update train_stats,
        // using train_set->getExample(input, target, weight)
        // and train_stats->update(train_costs)
        ++stage;
        train_stats->finalize(); // finalize statistics for this epoch
    }
    */
}
```
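train() does all of its greedy work in stage 0: one trainHiddenLayer() call per reconstruction cost, then trainSupervisedLayer() if supervised_nepochs.second > 0. Every later stage runs prepareForFineTuning() and one epoch of fine-tuning. As a result, nstages counts one pretraining stage plus the number of fine-tuning epochs, and calling train() on a learner whose stage is already at least 1 skips pretraining entirely. The sketch below only enumerates these steps as text labels so the stage arithmetic can be checked without building a network; trainSchedule is a hypothetical helper, not part of PLearn.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Lists, in order, the work train() would do from `stage` up to `nstages`.
// supervised_max_epochs plays the role of supervised_nepochs.second.
// The strings are descriptive labels only, not PLearn calls.
std::vector<std::string> trainSchedule(int stage, int nstages,
                                       int nreconstructions,
                                       int supervised_max_epochs) {
    assert(nreconstructions >= 0);
    std::vector<std::string> steps;
    for (; stage < nstages; ++stage) {
        if (stage < 1) {
            // Stage 0: greedy layer-wise pretraining, then the output layer.
            for (int k = 0; k < nreconstructions; ++k)
                steps.push_back("pretrain hidden layer " + std::to_string(k + 1));
            if (supervised_max_epochs > 0)
                steps.push_back("train supervised layer");
        } else {
            // Any later stage: one fine-tuning epoch.
            steps.push_back("fine-tune epoch " + std::to_string(stage));
        }
    }
    return steps;
}
```

For example, with two reconstruction costs and a supervised layer, nstages = 3 yields two pretraining steps, the supervised step and two fine-tuning epochs.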

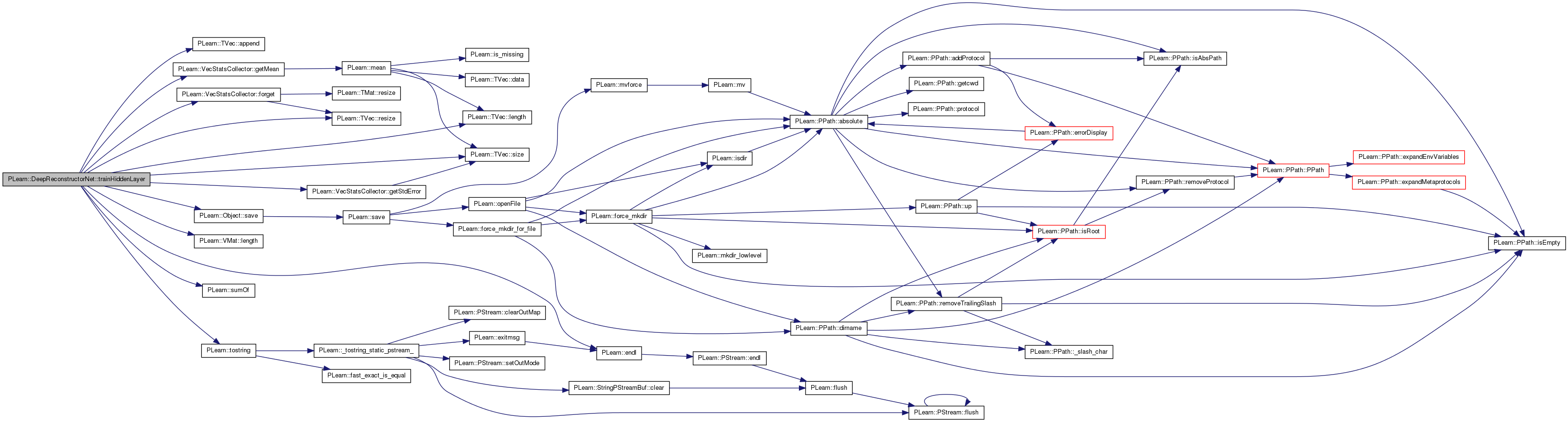

| void PLearn::DeepReconstructorNet::trainHiddenLayer | ( | int | which_input_layer, | VMat | inputs | ) | [protected] |

Definition at line 746 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::append(), PLearn::endl(), PLearn::PLearner::expdir, PLearn::VecStatsCollector::forget(), PLearn::VecStatsCollector::getMean(), PLearn::VecStatsCollector::getStdError(), layers, PLearn::TVec< T >::length(), PLearn::VMat::length(), m, minibatch_size, n, PLearn::perr, PLearn::PStream::plearn_binary, reconstruction_costs, reconstruction_costs_names, reconstruction_optimizer, reconstruction_optimizers, PLearn::TVec< T >::resize(), PLearn::Object::save(), PLearn::TVec< T >::size(), PLearn::sumOf(), PLearn::tostring(), unsupervised_min_improvement_rate, and unsupervised_nepochs.

Referenced by train().

```cpp
{
    int l = inputs->length();
    pair<int,int> nepochs = unsupervised_nepochs[which_input_layer];
    real min_improvement = -10000;
    if(unsupervised_min_improvement_rate.length()!=0)
        min_improvement = unsupervised_min_improvement_rate[which_input_layer];
    perr << "\n\n*********************************************" << endl;
    perr << "*** Training (unsupervised) layer " << which_input_layer+1 << " for max. " << nepochs.second << " epochs " << endl;
    perr << "*** each epoch has " << l << " examples and " << l/minibatch_size << " optimizer stages (updates)" << endl;
    Func f(layers[which_input_layer], reconstruction_costs[which_input_layer]);
    Var totalcost = sumOf(inputs, f, minibatch_size);
    VarArray params = totalcost->parents();
    //displayVarGraph(reconstruction_costs[which_input_layer]);
    //displayFunction(f,false,false, 333, "train_func");
    //displayVarGraph(totalcost,true);
    if ( reconstruction_optimizers.size() !=0 )
    {
        reconstruction_optimizers[which_input_layer]->setToOptimize(params, totalcost);
        reconstruction_optimizers[which_input_layer]->reset();
    }
    else
    {
        reconstruction_optimizer->setToOptimize(params, totalcost);
        reconstruction_optimizer->reset();
    }
    Vec costrow;
    TVec<string> colnames;
    VMat training_curve;
    VecStatsCollector st;
    real prev_mean = -1;
    real relative_improvement = 1000;
    for(int n=0; n<nepochs.first || (n<nepochs.second && relative_improvement >= min_improvement); n++)
    {
        st.forget();
        if ( reconstruction_optimizers.size() !=0 )
        {
            reconstruction_optimizers[which_input_layer]->nstages = l/minibatch_size;
            reconstruction_optimizers[which_input_layer]->optimizeN(st);
        }
        else
        {
            reconstruction_optimizer->nstages = l/minibatch_size;
            reconstruction_optimizer->optimizeN(st);
        }
        int reconstr_cost_pos = 0;
        Vec means = st.getMean();
        Vec stderrs = st.getStdError();
        perr << "Epoch " << n+1 << ": " << means << " +- " << stderrs;
        real m = means[reconstr_cost_pos];
        // real er = stderrs[reconstr_cost_pos];
        if(n>0)
        {
            relative_improvement = (prev_mean-m)/fabs(prev_mean);
            perr << " improvement: " << relative_improvement*100 << " %";
        }
        perr << endl;
        int ncosts = means.length();
        if(reconstruction_costs_names.length()!=ncosts)
        {
            reconstruction_costs_names.resize(ncosts);
            for(int k=0; k<ncosts; k++)
                reconstruction_costs_names[k] = "cost"+tostring(k);
        }
        if(colnames.length()==0)
        {
            colnames.append("nepochs");
            colnames.append("relative_improvement");
            for(int k=0; k<ncosts; k++)
            {
                colnames.append(reconstruction_costs_names[k]+"_mean");
                colnames.append(reconstruction_costs_names[k]+"_stderr");
            }
            training_curve = new FileVMatrix(expdir/"training_costs_layer_"+tostring(which_input_layer+1)+".pmat",0,colnames);
        }
        costrow.resize(colnames.length());
        // int k=0;
        costrow[0] = (real)n+1;
        costrow[1] = relative_improvement*100;
        for(int k=0; k<ncosts; k++)
        {
            costrow[2+k*2] = means[k];
            costrow[2+k*2+1] = stderrs[k];
        }
        training_curve->appendRow(costrow);
        training_curve->flush();
        prev_mean = m;
        // save_learner_after_each_pretraining_epoch
        PLearn::save(expdir/"learner.psave", *this, PStream::plearn_binary, false);
        /*
        if(n==0)
        {
            perr << "Displaying reconstruction_cost" << endl;
            displayVarGraph(reconstruction_costs[which_input_layer],true);
            perr << "Displaying optimized function f" << endl;
            displayFunction(f,true,false, 333, "train_func");
        }
        */
    }
}
```
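The epoch loop in trainHiddenLayer() always runs nepochs.first epochs; after that it continues, up to nepochs.second epochs, as long as the most recent relative improvement (prev_mean - m) / |prev_mean| of the first reconstruction cost is at least min_improvement. The check happens before each new epoch, so training stops right after the first epoch whose improvement falls below the threshold. When unsupervised_min_improvement_rate is empty, min_improvement is -10000, which effectively means running for the maximum number of epochs. The sketch below replays that loop on a list of per-epoch mean costs; epochsRun and its arguments are hypothetical names, and the costs in the usage are made-up inputs, not real training output.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Returns how many epochs the loop in trainHiddenLayer() would run, given
// the mean cost each successive epoch would produce. min_epochs/max_epochs
// play the roles of nepochs.first/nepochs.second.
int epochsRun(const std::vector<double>& epoch_costs, int min_epochs,
              int max_epochs, double min_improvement) {
    double prev_mean = -1;
    double relative_improvement = 1000;  // same "keep going" initial value
    int n = 0;
    for (; n < min_epochs ||
           (n < max_epochs && relative_improvement >= min_improvement);
         ++n) {
        // The caller must supply a cost for every epoch that actually runs.
        assert(n < static_cast<int>(epoch_costs.size()));
        double m = epoch_costs[n];
        if (n > 0)
            relative_improvement = (prev_mean - m) / std::fabs(prev_mean);
        prev_mean = m;
    }
    return n;
}
```

With costs 1.0, 0.9, 0.89, ... and a 5% threshold, the second epoch improves by 10% and the third by about 1.1%, so the loop stops after three epochs unless nepochs.first asks for more.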

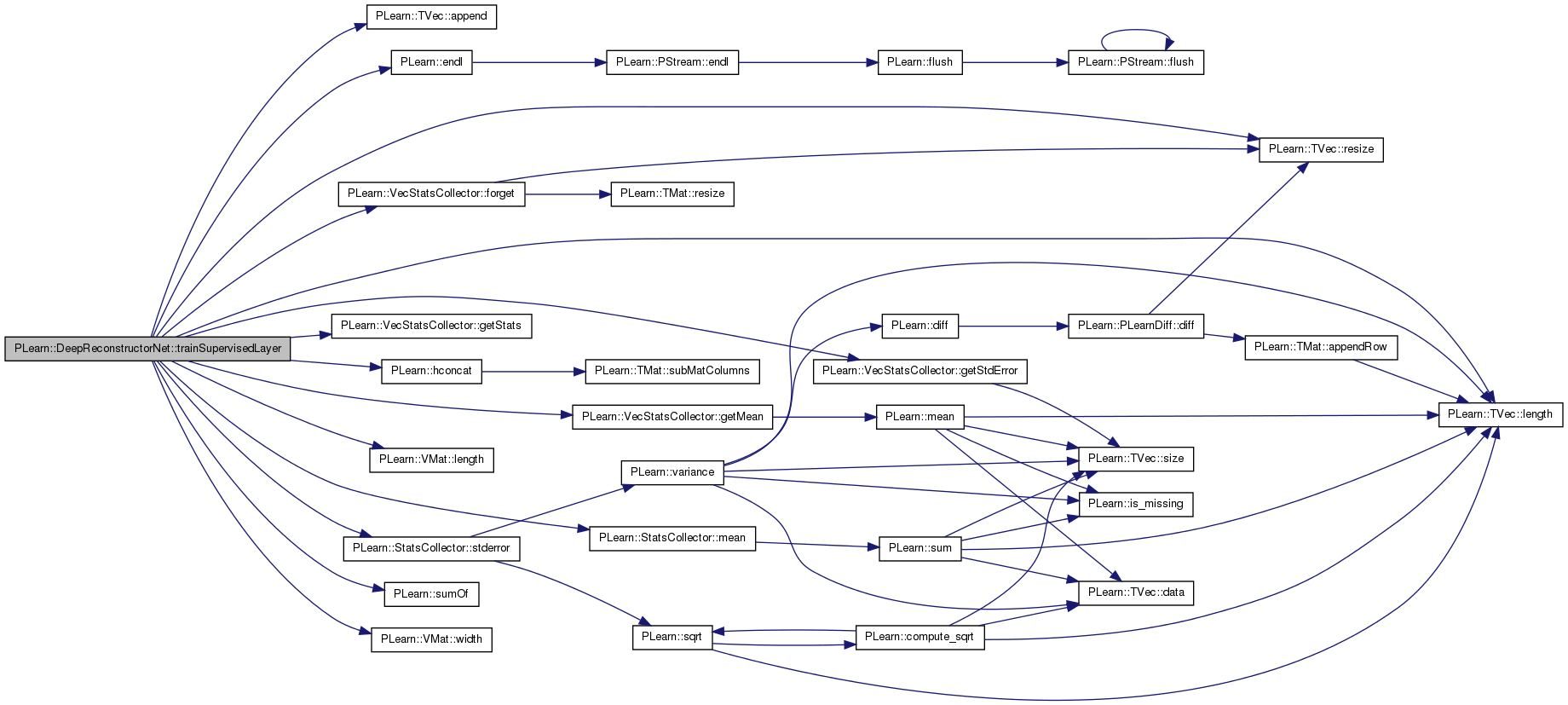

Definition at line 672 of file DeepReconstructorNet.cc.

References PLearn::TVec< T >::append(), PLearn::endl(), PLearn::PLearner::expdir, PLearn::VecStatsCollector::forget(), PLearn::VecStatsCollector::getMean(), PLearn::VecStatsCollector::getStats(), PLearn::VecStatsCollector::getStdError(), PLearn::hconcat(), layers, PLearn::TVec< T >::length(), PLearn::VMat::length(), m, PLearn::StatsCollector::mean(), minibatch_size, n, PLearn::perr, PLearn::TVec< T >::resize(), PLearn::StatsCollector::stderror(), PLearn::sumOf(), supervised_costs_names, supervised_costvec, supervised_min_improvement_rate, supervised_nepochs, supervised_optimizer, target, and PLearn::VMat::width().

Referenced by train().

```cpp
{
    int l = inputs->length();
    pair<int,int> nepochs = supervised_nepochs;
    real min_improvement = supervised_min_improvement_rate;
    int last_hidden_layer = layers.length()-2;
    perr << "\n\n*********************************************" << endl;
    perr << "*** Training only supervised layer for max. " << nepochs.second << " epochs " << endl;
    perr << "*** each epoch has " << l << " examples and " << l/minibatch_size << " optimizer stages (updates)" << endl;
    Func f(layers[last_hidden_layer]&target, supervised_costvec);
    // displayVarGraph(supervised_costvec);
    VMat inputs_targets = hconcat(inputs, targets);
    inputs_targets->defineSizes(inputs.width(),targets.width());
    Var totalcost = sumOf(inputs_targets, f, minibatch_size);
    // displayVarGraph(totalcost);
    VarArray params = totalcost->parents();
    supervised_optimizer->setToOptimize(params, totalcost);
    supervised_optimizer->reset();
    TVec<string> colnames;
    VMat training_curve;
    Vec costrow;
    colnames.append("nepochs");
    colnames.append("relative_improvement");
    int ncosts=supervised_costs_names.length();
    for(int k=0; k<ncosts; k++)
    {
        colnames.append(supervised_costs_names[k]+"_mean");
        colnames.append(supervised_costs_names[k]+"_stderr");
    }
    training_curve = new FileVMatrix(expdir/"training_costs_output.pmat",0,colnames);
    costrow.resize(colnames.length());
    VecStatsCollector st;
    real prev_mean = -1;
    real relative_improvement = 1000;
    for(int n=0; n<nepochs.first || (n<nepochs.second && relative_improvement >= min_improvement); n++)
    {
        st.forget();
        supervised_optimizer->nstages = l/minibatch_size;
        supervised_optimizer->optimizeN(st);
        const StatsCollector& s = st.getStats(0);
        real m = s.mean();
        Vec means = st.getMean();
        Vec stderrs = st.getStdError();
        perr << "Epoch " << n+1 << " Mean costs: " << means << " stderr " << stderrs << endl;
        perr << "mean error: " << m << " +- " << s.stderror() << endl;
        if(prev_mean>0)
        {
            relative_improvement = (prev_mean-m)/prev_mean;
            perr << "Relative improvement: " << relative_improvement*100 << " %"<< endl;
        }
        prev_mean = m;
        //displayVarGraph(supervised_costvec, true);
        // save to a file
        costrow[0] = (real)n+1;
        costrow[1] = relative_improvement*100;
        for(int k=0; k<ncosts; k++) {
            costrow[2+k*2] = means[k];
            costrow[2+k*2+1] = stderrs[k];
        }
        training_curve->appendRow(costrow);
        training_curve->flush();
    }
}
```
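Both trainHiddenLayer() and trainSupervisedLayer() append one row per epoch to a training-curve file in expdir (training_costs_layer_<k>.pmat for hidden layer k, training_costs_output.pmat for the supervised layer). Each row holds the 1-based epoch number, the relative improvement as a percentage, and then a mean and a standard-error column for every cost. Before any improvement has been computed, relative_improvement still holds its initial value 1000, so the first row records 100000. trainHiddenLayer() falls back to names cost0, cost1, ... when reconstruction_costs_names does not match the number of costs, and trainSupervisedLayer() computes the improvement as (prev_mean - m) / prev_mean, only when prev_mean > 0. The sketch below rebuilds the column names and one row with standard containers; curveColumns and curveRow are hypothetical helpers, and the cost names in the usage are examples only.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Column names: two bookkeeping columns, then <cost>_mean and <cost>_stderr
// for every cost, in order.
std::vector<std::string> curveColumns(const std::vector<std::string>& cost_names) {
    std::vector<std::string> cols{"nepochs", "relative_improvement"};
    for (const std::string& c : cost_names) {
        cols.push_back(c + "_mean");
        cols.push_back(c + "_stderr");
    }
    return cols;
}

// One row for 0-based epoch index n; relative_improvement is a fraction and
// is stored as a percentage, as in the original code.
std::vector<double> curveRow(int n, double relative_improvement,
                             const std::vector<double>& means,
                             const std::vector<double>& stderrs) {
    assert(means.size() == stderrs.size());
    std::vector<double> row(2 + 2 * means.size());
    row[0] = n + 1;
    row[1] = relative_improvement * 100;
    for (std::size_t k = 0; k < means.size(); ++k) {
        row[2 + 2 * k] = means[k];
        row[2 + 2 * k + 1] = stderrs[k];
    }
    return row;
}
```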

Reimplemented from PLearn::PLearner.

Definition at line 232 of file DeepReconstructorNet.h.

| TVec<Func> PLearn::DeepReconstructorNet::compute_layer | [protected] |

Definition at line 126 of file DeepReconstructorNet.h.

Referenced by build_(), buildHiddenLayerOutputs(), and makeDeepCopyFromShallowCopy().

| Func PLearn::DeepReconstructorNet::compute_output | [protected] |

Definition at line 127 of file DeepReconstructorNet.h.

Referenced by build_(), computeOutput(), and makeDeepCopyFromShallowCopy().

Definition at line 118 of file DeepReconstructorNet.h.

Referenced by declareOptions(), fineTuningFor1Epoch(), makeDeepCopyFromShallowCopy(), and prepareForFineTuning().

Definition at line 109 of file DeepReconstructorNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 121 of file DeepReconstructorNet.h.

Referenced by declareOptions(), and makeDeepCopyFromShallowCopy().

Definition at line 93 of file DeepReconstructorNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and reconstructOneLayer().

Definition at line 80 of file DeepReconstructorNet.h.

Referenced by build_(), computeAndSaveLayerActivationStats(), computeReconstructions(), computeRepresentations(), declareOptions(), fpropOneLayer(), getMatValue(), makeDeepCopyFromShallowCopy(), prepareForFineTuning(), reconstructInputFromLayer(), reconstructOneLayer(), setMatValue(), train(), trainHiddenLayer(), and trainSupervisedLayer().

Definition at line 113 of file DeepReconstructorNet.h.

Referenced by declareOptions(), fineTuningFor1Epoch(), prepareForFineTuning(), trainHiddenLayer(), and trainSupervisedLayer().

| int PLearn::DeepReconstructorNet::nout | [private] |

Definition at line 269 of file DeepReconstructorNet.h.

Referenced by build_(), and computeOutput().

| TVec<VMat> PLearn::DeepReconstructorNet::outmat | [protected] |

Definition at line 129 of file DeepReconstructorNet.h.

Definition at line 128 of file DeepReconstructorNet.h.

Referenced by build_(), computeCostsFromOutputs(), and makeDeepCopyFromShallowCopy().

Definition at line 111 of file DeepReconstructorNet.h.

Referenced by build_(), getParameterRow(), getParameterValue(), initializeParams(), listParameter(), listParameterNames(), and makeDeepCopyFromShallowCopy().

Definition at line 89 of file DeepReconstructorNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and reconstructOneLayer().

Definition at line 83 of file DeepReconstructorNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), train(), and trainHiddenLayer().

Definition at line 86 of file DeepReconstructorNet.h.

Referenced by declareOptions(), and trainHiddenLayer().

Definition at line 99 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and trainHiddenLayer().

Definition at line 96 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and trainHiddenLayer().

Definition at line 104 of file DeepReconstructorNet.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

Definition at line 107 of file DeepReconstructorNet.h.

Referenced by computeCostsFromOutputs(), declareOptions(), getTestCostNames(), getTrainCostNames(), makeDeepCopyFromShallowCopy(), and trainSupervisedLayer().

Definition at line 105 of file DeepReconstructorNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), prepareForFineTuning(), and trainSupervisedLayer().

Definition at line 76 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and trainSupervisedLayer().

Definition at line 75 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), train(), and trainSupervisedLayer().

Definition at line 116 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and trainSupervisedLayer().

Definition at line 102 of file DeepReconstructorNet.h.

Referenced by build_(), buildHiddenLayerOutputs(), computeAndSaveLayerActivationStats(), declareOptions(), makeDeepCopyFromShallowCopy(), prepareForFineTuning(), and trainSupervisedLayer().

Definition at line 73 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and trainHiddenLayer().


Definition at line 72 of file DeepReconstructorNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and trainHiddenLayer().

1.7.4
