
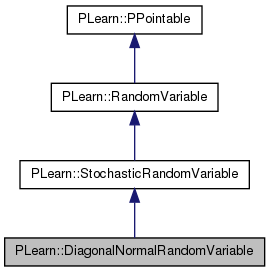

PLearn 0.1


#include <RandomVar.h>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | DiagonalNormalRandomVariable (const RandomVar &mean, const RandomVar &log_variance, real minimum_standard_deviation=1e-10) | Constructor. |
| virtual char * | classname () | |
| Var | logP (const Var &obs, const RVInstanceArray &RHS, RVInstanceArray *parameters_to_learn) | |
| void | setValueFromParentsValue () | |
| void | EMUpdate () | |
| void | EMBprop (const Vec obs, real posterior) | |
| void | EMEpochInitialize () | Initialization of an individual EMEpoch. |
| const RandomVar & | mean () | Convenience inlines. |
| const RandomVar & | log_variance () | |
| bool & | learn_the_mean () | |
| bool & | learn_the_variance () | |

Protected Attributes

| Type | Attribute | Description |
| --- | --- | --- |
| real | minimum_variance | |
| real | normfactor | Normalization constant = dimension * log(2 PI). |
| bool | shared_variance | True iff log_variance->length()==1. |
| Vec | mu_num | Temporaries used during EM learning; numerator for mean update in EM algorithm. |
| Vec | sigma_num | Numerator for variance update in EM algorithm. |
| real | denom | Denominator for mean and variance update in EM algorithm. |

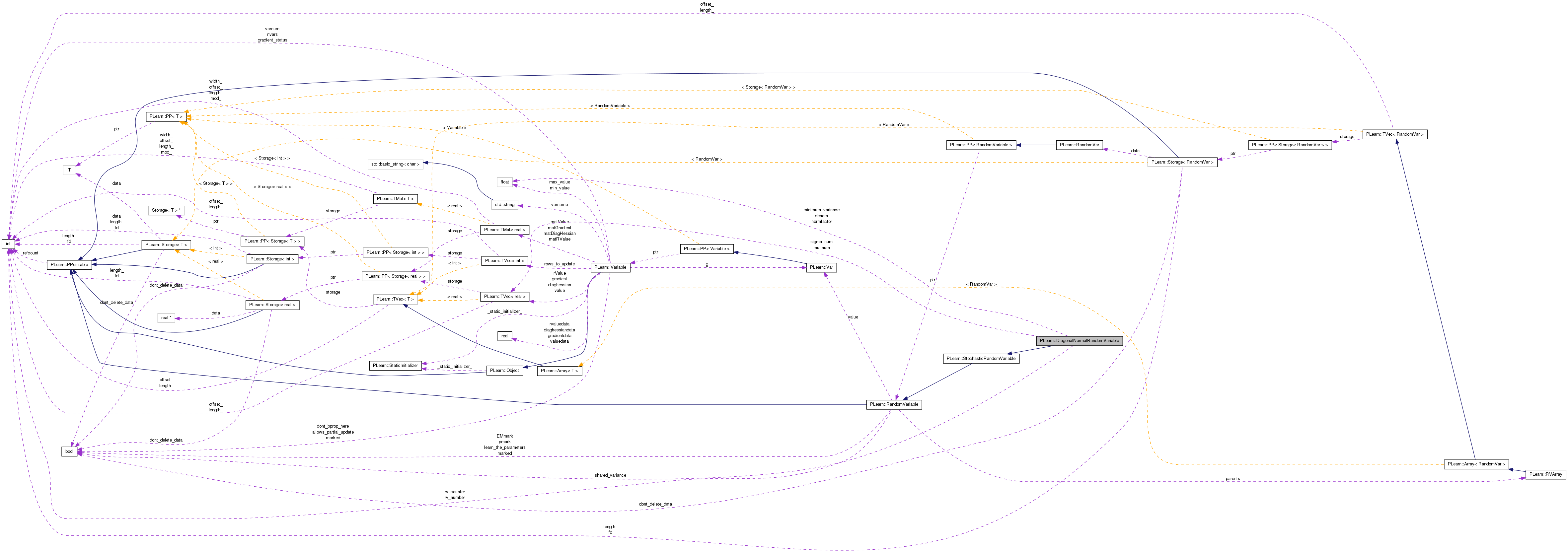

Definition at line 1205 of file RandomVar.h.

| PLearn::DiagonalNormalRandomVariable::DiagonalNormalRandomVariable | ( | const RandomVar & mean, const RandomVar & log_variance, real minimum_standard_deviation = 1e-10 | ) |

Definition at line 1855 of file RandomVar.cc.

    : StochasticRandomVariable(mean & log_var, mean->length()),
      minimum_variance(minimum_standard_deviation*minimum_standard_deviation),
      normfactor(mean->length()*Log2Pi),
      shared_variance(log_var->length()==1),
      mu_num(mean->length()),
      sigma_num(log_var->length())
    { }

| virtual char* PLearn::DiagonalNormalRandomVariable::classname | ( | ) | [inline, virtual] |

Implements PLearn::RandomVariable.

Definition at line 1221 of file RandomVar.h.

{ return "DiagonalNormalRandomVariable"; }

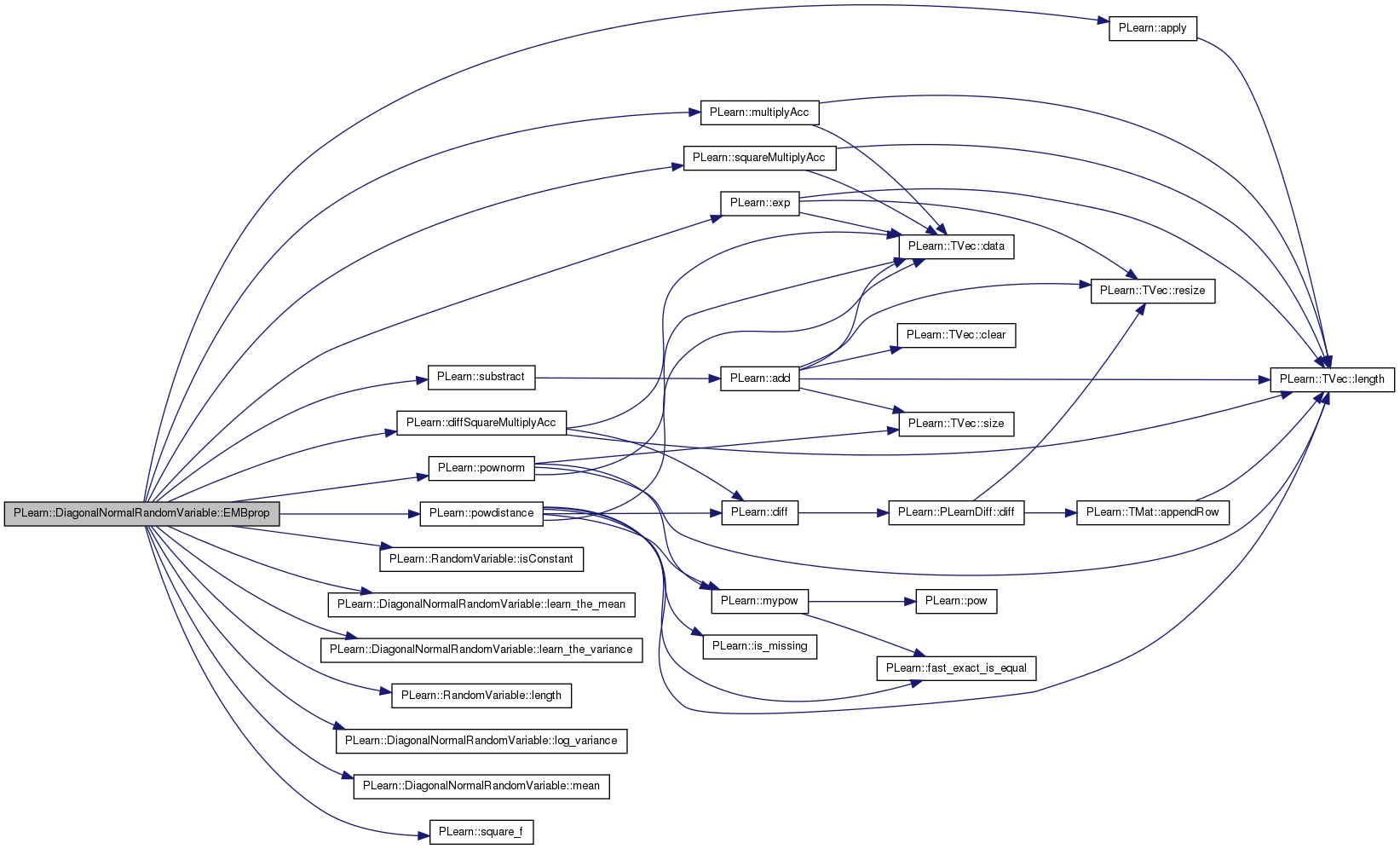

| void PLearn::DiagonalNormalRandomVariable::EMBprop | ( | const Vec obs, real posterior | ) | [virtual] |

Propagates posterior information to the parents in order to perform an EMUpdate at the end of an EMEpoch. In the case of mixture-like RVs and their components, the posterior is the probability of the component "this" given the observation "obs".

Implements PLearn::RandomVariable.

Definition at line 1913 of file RandomVar.cc.

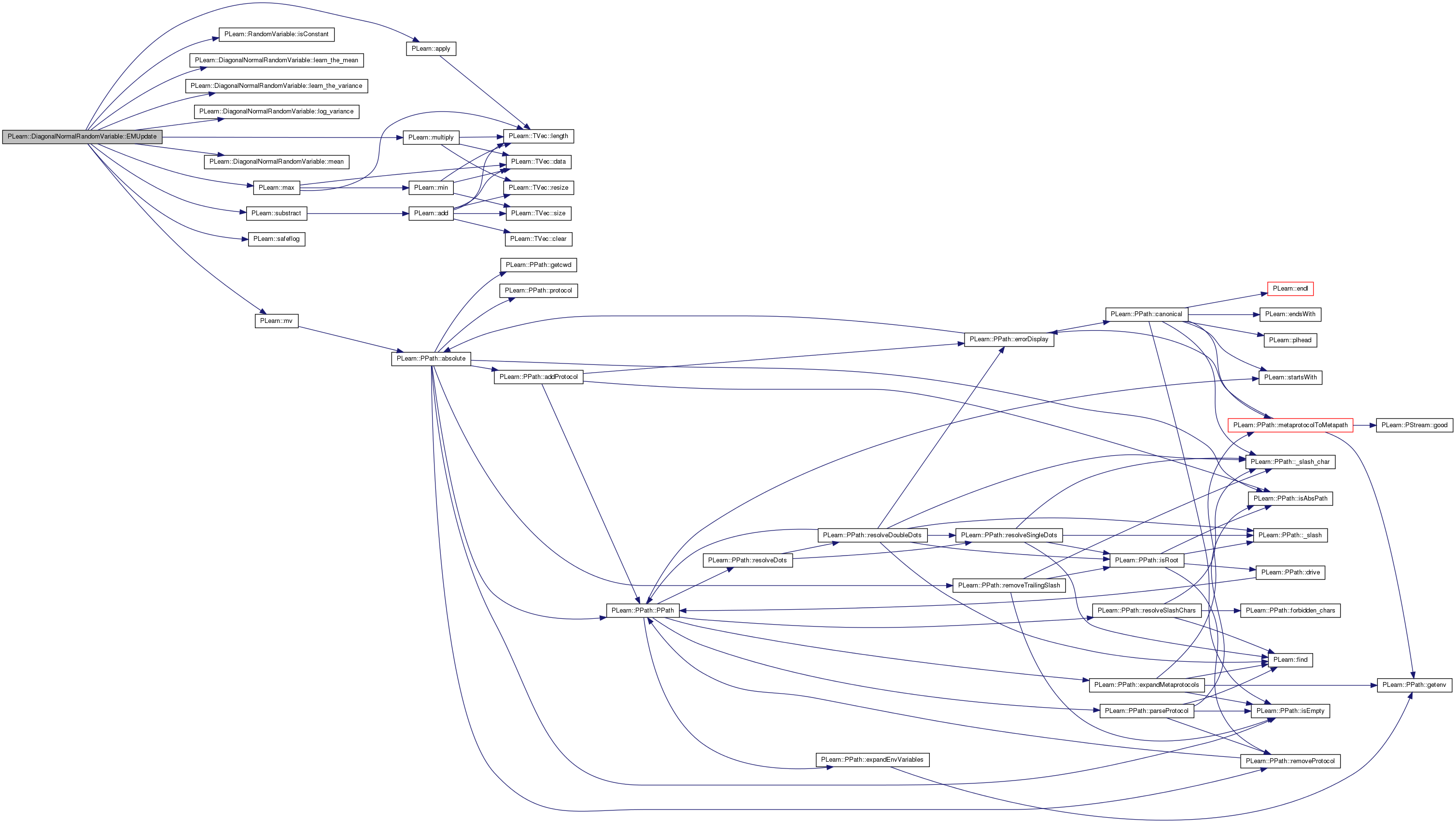

References PLearn::apply(), denom, PLearn::diffSquareMultiplyAcc(), PLearn::exp(), PLearn::RandomVariable::isConstant(), learn_the_mean(), learn_the_variance(), PLearn::RandomVariable::length(), log_variance(), mean(), minimum_variance, mu_num, PLearn::multiplyAcc(), PLERROR, PLearn::powdistance(), PLearn::pownorm(), shared_variance, sigma_num, PLearn::square_f(), PLearn::squareMultiplyAcc(), PLearn::substract(), and PLearn::RandomVariable::value.

    {
        if (learn_the_mean())
            multiplyAcc(mu_num, obs, posterior);
        else if (!mean()->isConstant())
        {
            if (!shared_variance)
                PLERROR("DiagonalNormalRandomVariable: don't know how to EMBprop "
                        "into mean if variance is not shared");
            mean()->EMBprop(obs, posterior/
                            (minimum_variance+exp(log_variance()->value->value[0])));
        }
        if (learn_the_variance())
        {
            if (learn_the_mean())
            {
                // sigma_num[i] += obs[i]*obs[i]*posterior
                if (shared_variance)
                    sigma_num[0] += posterior*pownorm(obs)/mean()->length();
                else
                    squareMultiplyAcc(sigma_num, obs, posterior);
            }
            else
            {
                // sigma_num[i] += (obs[i]-mean[i])^2*posterior
                if (shared_variance)
                    sigma_num[0] += posterior*powdistance(obs,mean()->value->value)
                        /mean()->length();
                else
                    diffSquareMultiplyAcc(sigma_num, obs,
                                          mean()->value->value,
                                          posterior);
            }
        }
        else if (!log_variance()->isConstant())
        {
            // use sigma_num as a temporary for log_var's observation
            if (shared_variance)
                log_variance()->EMBprop(Vec(1,powdistance(obs,mean()->value->value)
                                            /mean()->length()),
                                        posterior);
            else
            {
                substract(obs,mean()->value->value,sigma_num);
                apply(sigma_num,sigma_num,square_f);
                log_variance()->EMBprop(sigma_num,posterior);
            }
        }
        if (learn_the_mean() || learn_the_variance()) denom += posterior;
    }
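The accumulation step can be illustrated outside PLearn. The following is a minimal sketch, assuming the simplest case where both the mean and a per-dimension variance are learned; the struct `DiagNormalEMStats` and its `accumulate` method are hypothetical names, and `std::vector<double>` stands in for `Vec`.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical accumulator (not the PLearn class): sufficient statistics
// gathered during one EM epoch when both the mean and a per-dimension
// variance are learned.
struct DiagNormalEMStats {
    std::vector<double> mu_num;     // sum over observations of posterior * obs[i]
    std::vector<double> sigma_num;  // sum over observations of posterior * obs[i]^2
    double denom = 0.0;             // sum of posteriors

    explicit DiagNormalEMStats(std::size_t dim)
        : mu_num(dim, 0.0), sigma_num(dim, 0.0) {}

    // Rough counterpart of EMBprop(obs, posterior) for this one case.
    void accumulate(const std::vector<double>& obs, double posterior) {
        for (std::size_t i = 0; i < obs.size(); ++i) {
            mu_num[i] += posterior * obs[i];
            sigma_num[i] += posterior * obs[i] * obs[i];
        }
        denom += posterior;
    }
};
```

Calling `accumulate` once per observation, weighted by its posterior, leaves the three sums that the end-of-epoch update divides by `denom`.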

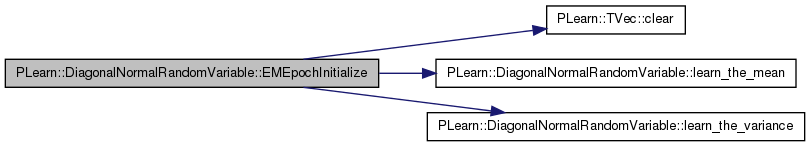

| void PLearn::DiagonalNormalRandomVariable::EMEpochInitialize | ( | ) | [virtual] |

Initialization of an individual EMEpoch.

The default implementation just propagates to the unmarked parents.

Reimplemented from PLearn::RandomVariable.

Definition at line 1902 of file RandomVar.cc.

References PLearn::TVec< T >::clear(), denom, PLearn::RandomVariable::EMmark, learn_the_mean(), learn_the_variance(), mu_num, and sigma_num.

    {
        if (EMmark) return;
        RandomVariable::EMEpochInitialize();
        if (learn_the_mean())
            mu_num.clear();
        if (learn_the_variance())
            sigma_num.clear();
        denom = 0.0;
    }

| void PLearn::DiagonalNormalRandomVariable::EMUpdate | ( | ) | [virtual] |

Updates the fixed (non-random) parameters using the internal learning mechanism, at the end of an EMEpoch. The default implementation just propagates to the unmarked parents.

Reimplemented from PLearn::RandomVariable.

Definition at line 1964 of file RandomVar.cc.

References PLearn::apply(), denom, PLearn::RandomVariable::EMmark, PLearn::RandomVariable::isConstant(), learn_the_mean(), learn_the_variance(), log_variance(), PLearn::max(), mean(), minimum_variance, mu_num, PLearn::multiply(), PLearn::mv(), PLearn::safeflog(), shared_variance, sigma_num, and PLearn::substract().

    {
        if (EMmark) return;
        EMmark=true;
        // maybe we should issue a warning if
        // (learn_the_mean || learn_the_variance) && denom==0
        // (it means that all posteriors reaching EMBprop were 0)
        //
        if (denom>0 && (learn_the_mean() || learn_the_variance()))
        {
            Vec lv = log_variance()->value->value;
            Vec mv = mean()->value->value;
            if (learn_the_mean())
                multiply(mu_num,real(1.0/denom),mv);
            if (learn_the_variance())
            {
                if (learn_the_mean())
                {
                    // variance = sigma_num/denom - squared(mean)
                    sigma_num /= denom;
                    multiply(mv,mv,mu_num); // use mu_num as a temporary vec
                    if (shared_variance)
                        lv[0] = sigma_num[0] - PLearn::mean(mu_num);
                    else
                        substract(sigma_num,mu_num,lv);
                    // now lv really holds variance
                    // log_variance = log(max(0,variance-minimum_variance))
                    substract(lv,minimum_variance,lv);
                    max(lv,real(0.),lv);
                    apply(lv,lv,safeflog);
                }
                else
                {
                    multiply(sigma_num,1/denom,lv);
                    // now log_variance really holds variance
                    // log_variance = log(max(0,variance-minimum_variance))
                    substract(lv,minimum_variance,lv);
                    max(lv,real(0.),lv);
                    apply(lv,lv,safeflog);
                }
            }
        }
        if (!learn_the_mean() && !mean()->isConstant())
            mean()->EMUpdate();
        if (!learn_the_variance() && !log_variance()->isConstant())
            log_variance()->EMUpdate();
    }
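The update for the case where both the mean and a per-dimension variance are learned can be sketched in isolation. This is an illustrative version only: `DiagNormalParams` and `em_update` are hypothetical names, `std::vector<double>` replaces `Vec`, and the `1e-25` floor before the logarithm is an assumption standing in for whatever `safeflog` does with a zero argument.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical parameter holder (not a PLearn type).
struct DiagNormalParams {
    std::vector<double> mean;
    std::vector<double> log_variance;
};

// Hypothetical M-step for learned mean and per-dimension variance.
// Returns false and leaves params untouched when denom is not positive,
// mirroring the denom>0 guard in EMUpdate().
bool em_update(const std::vector<double>& mu_num,
               const std::vector<double>& sigma_num,
               double denom, double minimum_variance,
               DiagNormalParams& params)
{
    if (denom <= 0.0) return false;
    const std::size_t dim = mu_num.size();
    params.mean.resize(dim);
    params.log_variance.resize(dim);
    for (std::size_t i = 0; i < dim; ++i) {
        const double m = mu_num[i] / denom;
        // variance = E[x^2] - E[x]^2
        const double variance = sigma_num[i] / denom - m * m;
        // log_variance = log(max(0, variance - minimum_variance))
        const double excess = std::max(0.0, variance - minimum_variance);
        params.mean[i] = m;
        // Assumed floor so that log() never receives zero.
        params.log_variance[i] = std::log(std::max(excess, 1e-25));
    }
    return true;
}
```

Subtracting `minimum_variance` before taking the logarithm means that `minimum_variance + exp(log_variance)` recovers the estimated variance whenever that estimate is at least `minimum_variance`.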

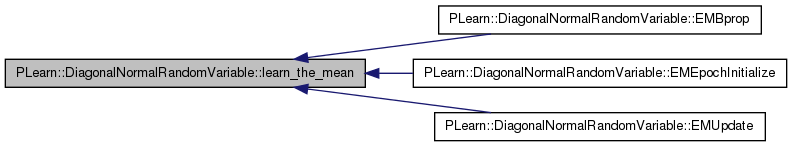

| bool& PLearn::DiagonalNormalRandomVariable::learn_the_mean | ( | ) | [inline] |

Definition at line 1233 of file RandomVar.h.

Referenced by EMBprop(), EMEpochInitialize(), and EMUpdate().

{ return learn_the_parameters[0]; }

| bool& PLearn::DiagonalNormalRandomVariable::learn_the_variance | ( | ) | [inline] |

Definition at line 1234 of file RandomVar.h.

Referenced by EMBprop(), EMEpochInitialize(), and EMUpdate().

{ return learn_the_parameters[1]; }

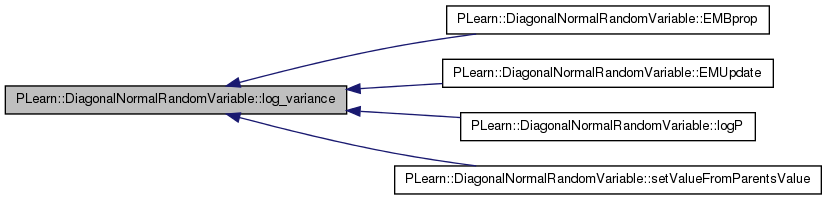

| const RandomVar& PLearn::DiagonalNormalRandomVariable::log_variance | ( | ) | [inline] |

Definition at line 1232 of file RandomVar.h.

Referenced by EMBprop(), EMUpdate(), logP(), and setValueFromParentsValue().

{ return parents[1]; }

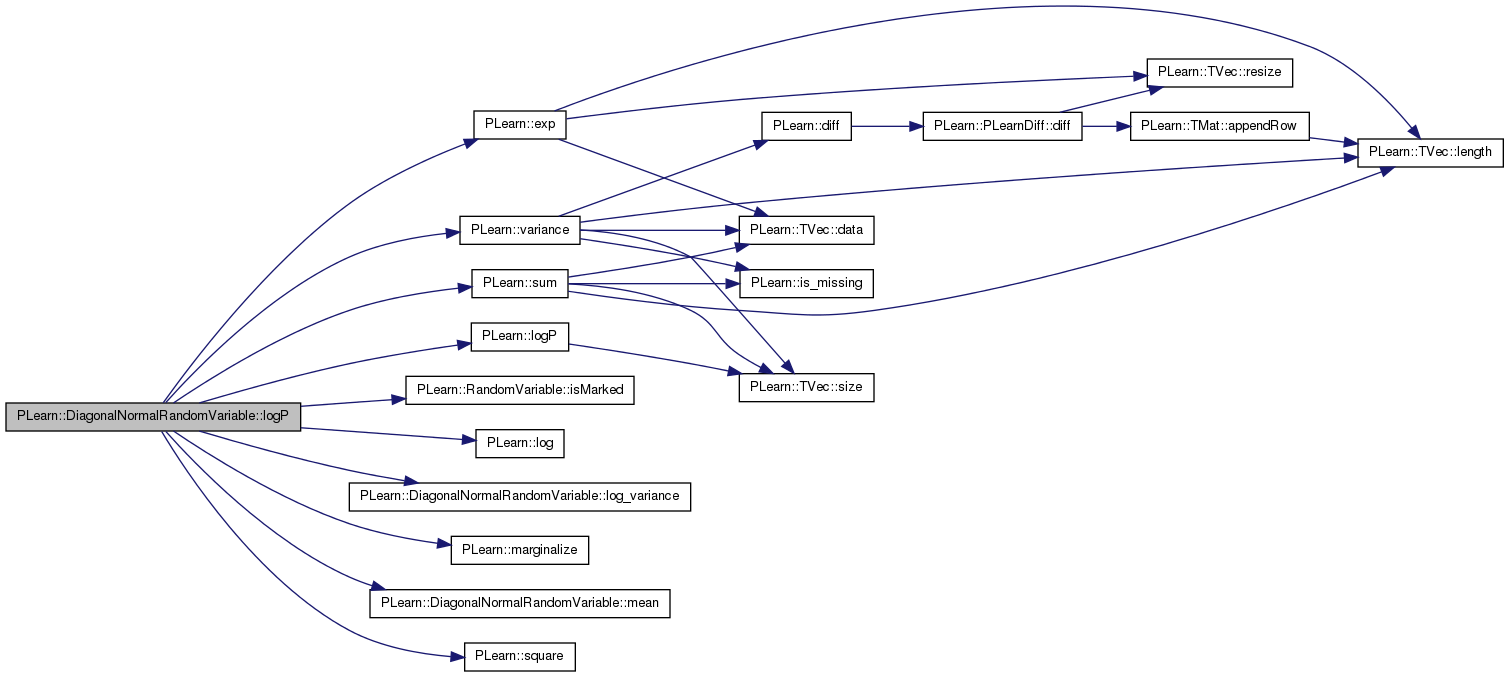

| Var PLearn::DiagonalNormalRandomVariable::logP | ( | const Var & obs, const RVInstanceArray & RHS, RVInstanceArray * parameters_to_learn | ) | [virtual] |

Constructs a Var that computes logP(This = obs | RHS). This function SHOULD NOT be used directly; it is called by the global function logP (same arguments), which does proper massaging of the network before and after this call.

Implements PLearn::RandomVariable.

Definition at line 1864 of file RandomVar.cc.

References PLearn::exp(), PLearn::RandomVariable::isMarked(), PLearn::log(), log_variance(), PLearn::logP(), PLearn::marginalize(), mean(), minimum_variance, normfactor, shared_variance, PLearn::square(), PLearn::sum(), PLearn::RandomVariable::value, and PLearn::variance().

    {
        if (mean()->isMarked() && log_variance()->isMarked())
        {
            if (log_variance()->value->getName()[0]=='#')
                log_variance()->value->setName("log_variance");
            if (mean()->value->getName()[0]=='#')
                mean()->value->setName("mean");
            Var variance = minimum_variance+exp(log_variance()->value);
            variance->setName("variance");
            if (shared_variance)
                return (-0.5)*(sum(square(obs-mean()->value))/variance+
                               (mean()->length())*log(variance) + normfactor);
            else
                return (-0.5)*(sum(square(obs-mean()->value)/variance)+
                               sum(log(variance))+ normfactor);
        }
        // else
        // probably not feasible..., but try in case we know a trick
        if (mean()->isMarked())
            return PLearn::logP(
                ConditionalExpression(
                    RVInstance(marginalize(this, log_variance()), obs), RHS),
                true, parameters_to_learn);
        else
            return PLearn::logP(
                ConditionalExpression(
                    RVInstance(marginalize(this, mean()), obs), RHS),
                true, parameters_to_learn);
    }
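When both parents are marked, the expression built above is the log-density of a Gaussian with diagonal covariance, where variance = minimum_variance + exp(log_variance). A minimal numeric sketch follows, assuming plain `std::vector<double>` inputs instead of `Var`; the function name `diag_normal_logp` is hypothetical and not part of PLearn.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical standalone helper (not part of PLearn): log-density of a
// diagonal Gaussian, following the formula used in logP().
// log_variance holds either one entry (shared variance) or one per dimension.
double diag_normal_logp(const std::vector<double>& obs,
                        const std::vector<double>& mean,
                        const std::vector<double>& log_variance,
                        double minimum_variance)
{
    const double log_2pi = std::log(2.0 * std::acos(-1.0));
    const bool shared = (log_variance.size() == 1);
    double quad = 0.0;     // sum_i (obs_i - mean_i)^2 / variance_i
    double log_det = 0.0;  // sum_i log(variance_i)
    for (std::size_t i = 0; i < obs.size(); ++i) {
        const double variance =
            minimum_variance + std::exp(log_variance[shared ? 0 : i]);
        const double diff = obs[i] - mean[i];
        quad += diff * diff / variance;
        log_det += std::log(variance);
    }
    const double normfactor = obs.size() * log_2pi;  // dimension * log(2 pi)
    return -0.5 * (quad + log_det + normfactor);
}
```

In the shared-variance case the loop adds the same log(variance) once per dimension, which matches the `mean()->length()*log(variance)` term of the original expression.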

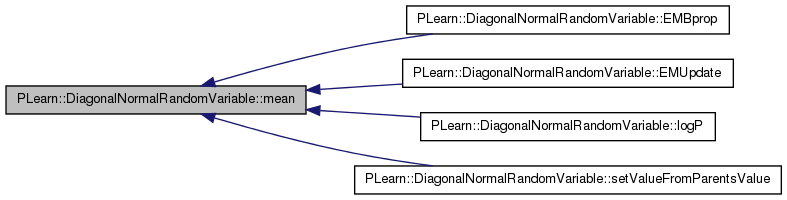

| const RandomVar& PLearn::DiagonalNormalRandomVariable::mean | ( | ) | [inline] |

convenience inlines

Definition at line 1231 of file RandomVar.h.

Referenced by EMBprop(), EMUpdate(), logP(), and setValueFromParentsValue().

{ return parents[0]; }

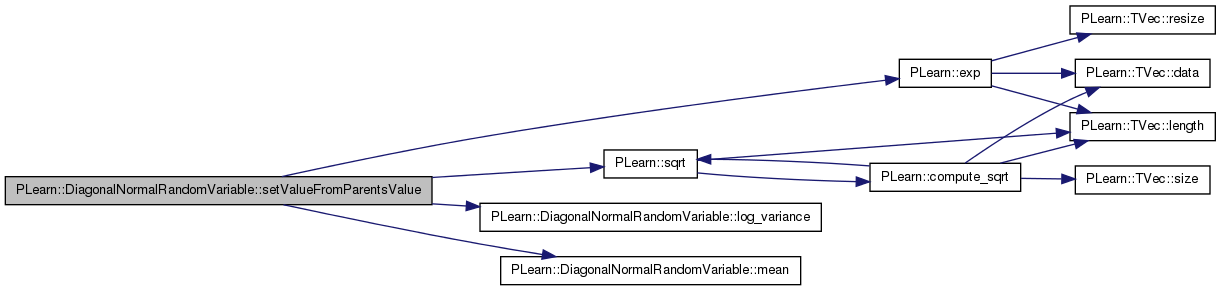

| void PLearn::DiagonalNormalRandomVariable::setValueFromParentsValue | ( | ) | [virtual] |

Everything below this point is not necessary for ordinary users, but may be necessary when writing subclasses. Note, however, that subclasses should normally not be direct subclasses of RandomVariable but rather subclasses of StochasticRandomVariable and of FunctionalRandomVariable.

Defines the formula that gives a value to this RV given its parents' values (sets the value field). If the RV is stochastic, the formula may also be "stochastic" (using SampleVariable's to define the Var).

Implements PLearn::RandomVariable.

Definition at line 1894 of file RandomVar.cc.

References PLearn::exp(), log_variance(), mean(), minimum_variance, PLearn::sqrt(), and PLearn::RandomVariable::value.

    {
        value =
            Var(new DiagonalNormalSampleVariable(mean()->value,
                                                 sqrt(minimum_variance+
                                                      exp(log_variance()->value))));
    }
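The value defined above is a sample from a diagonal Gaussian whose standard deviation is sqrt(minimum_variance + exp(log_variance)). As a rough illustration using only the C++ standard library, and not the actual `DiagonalNormalSampleVariable`, a draw could look like this; `sample_diag_normal` is a hypothetical name.

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical sampler (not DiagonalNormalSampleVariable): draws one vector
// from a diagonal Gaussian parameterized like this class.
// log_variance holds either one entry (shared variance) or one per dimension.
std::vector<double> sample_diag_normal(const std::vector<double>& mean,
                                       const std::vector<double>& log_variance,
                                       double minimum_variance,
                                       std::mt19937& rng)
{
    const bool shared = (log_variance.size() == 1);
    std::normal_distribution<double> standard_normal(0.0, 1.0);
    std::vector<double> sample(mean.size());
    for (std::size_t i = 0; i < mean.size(); ++i) {
        const double stddev = std::sqrt(
            minimum_variance + std::exp(log_variance[shared ? 0 : i]));
        sample[i] = mean[i] + stddev * standard_normal(rng);
    }
    return sample;
}
```

Because `minimum_variance` is added before the square root, the standard deviation never drops below the `minimum_standard_deviation` passed to the constructor.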

real PLearn::DiagonalNormalRandomVariable::denom [protected]

denominator for mean and variance update in EM algorithm

Definition at line 1240 of file RandomVar.h.

Referenced by EMBprop(), EMEpochInitialize(), and EMUpdate().

real PLearn::DiagonalNormalRandomVariable::minimum_variance [protected]

mean = parents[0]; log_variance = parents[1]; variance = minimum_variance + exp(log_variance).

Definition at line 1212 of file RandomVar.h.

Referenced by EMBprop(), EMUpdate(), logP(), and setValueFromParentsValue().

Vec PLearn::DiagonalNormalRandomVariable::mu_num [protected]

temporaries used during EM learning

numerator for mean update in EM algorithm

Definition at line 1238 of file RandomVar.h.

Referenced by EMBprop(), EMEpochInitialize(), and EMUpdate().

real PLearn::DiagonalNormalRandomVariable::normfactor [protected]

normalization constant = dimension * log(2 PI)

Definition at line 1213 of file RandomVar.h.

Referenced by logP().

bool PLearn::DiagonalNormalRandomVariable::shared_variance [protected]

True iff log_variance->length()==1.

Definition at line 1214 of file RandomVar.h.

Referenced by EMBprop(), EMUpdate(), and logP().

Vec PLearn::DiagonalNormalRandomVariable::sigma_num [protected]

numerator for variance update in EM algorithm

Definition at line 1239 of file RandomVar.h.

Referenced by EMBprop(), EMEpochInitialize(), and EMUpdate().

1.7.4
