PLearn 0.1

#include <GaussianContinuumDistribution.h>

Definition at line 58 of file GaussianContinuumDistribution.h.

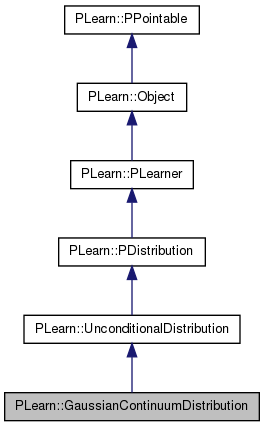

typedef UnconditionalDistribution PLearn::GaussianContinuumDistribution::inherited [private]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 63 of file GaussianContinuumDistribution.h.

PLearn::GaussianContinuumDistribution::GaussianContinuumDistribution()

Default constructor.

Definition at line 88 of file GaussianContinuumDistribution.cc.

: weight_mu_and_tangent(0), include_current_point(false), random_walk_step_prop(1), use_noise(false),use_noise_direction(false), noise(-1), noise_type("uniform"), n_random_walk_step(0), n_random_walk_per_point(0),walk_on_noise(true),min_sigma(0.00001), min_diff(0.01),fixed_min_sigma(0.00001), fixed_min_diff(0.01), min_p_x(0.001),sm_bigger_than_sn(true), n_neighbors(5), n_neighbors_density(-1), mu_n_neighbors(2), n_dim(1), sigma_grad_scale_factor(1), update_parameters_every_n_epochs(5), variances_transfer_function("softplus"), architecture_type("single_neural_network"), n_hidden_units(-1), batch_size(1), norm_penalization(0), svd_threshold(1e-5) { }

string PLearn::GaussianContinuumDistribution::_classname_() [static]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

OptionList & PLearn::GaussianContinuumDistribution::_getOptionList_() [static]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

RemoteMethodMap & PLearn::GaussianContinuumDistribution::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

Object * PLearn::GaussianContinuumDistribution::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

StaticInitializer GaussianContinuumDistribution::_static_initializer_ & PLearn::GaussianContinuumDistribution::_static_initialize_() [static]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

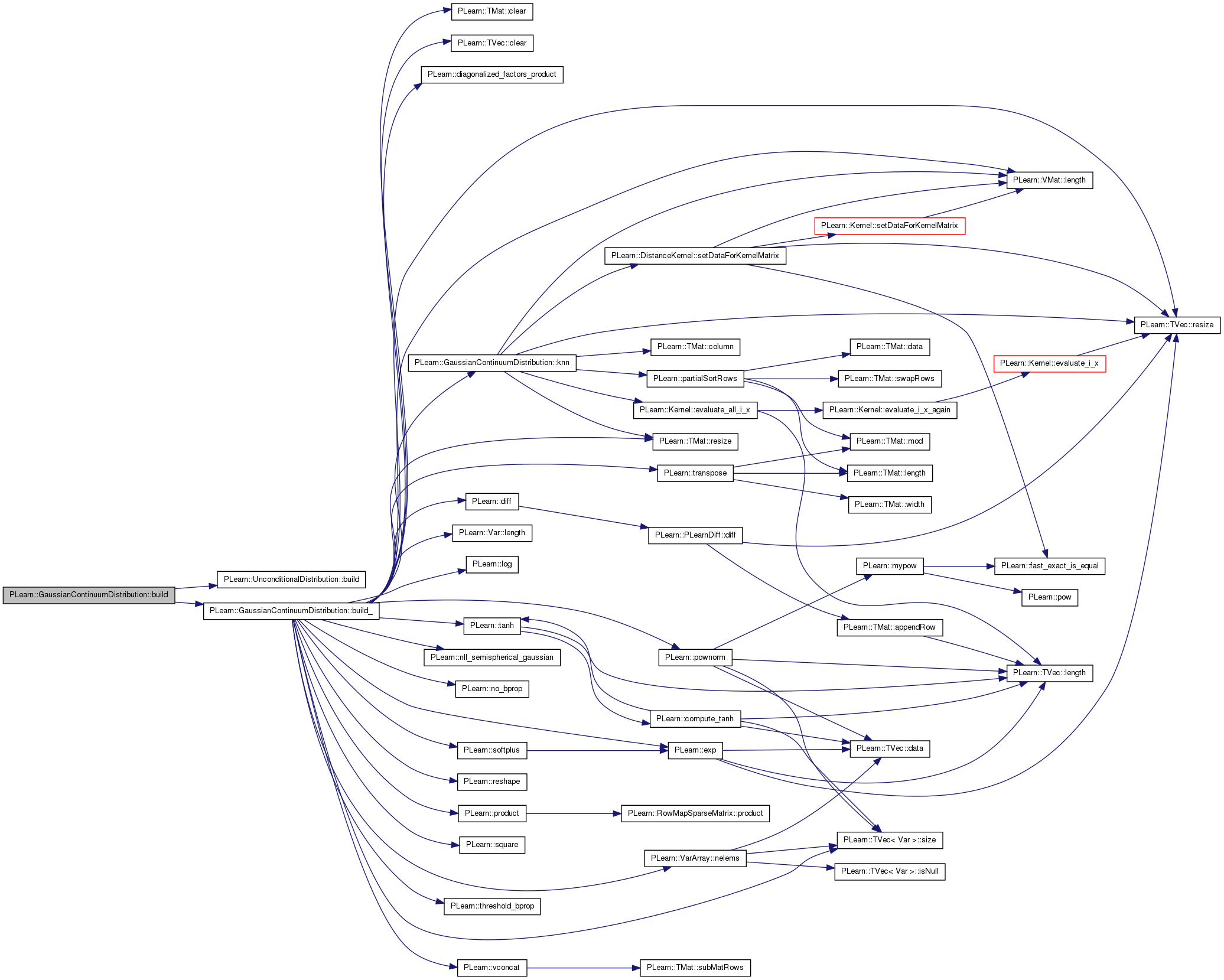

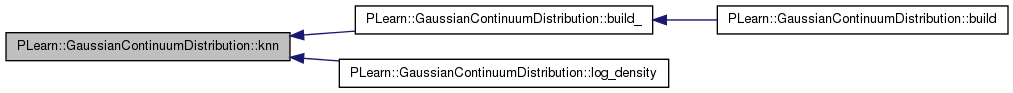

void PLearn::GaussianContinuumDistribution::build() [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 1103 of file GaussianContinuumDistribution.cc.

References PLearn::UnconditionalDistribution::build(), and build_().

{

inherited::build();

build_();

}

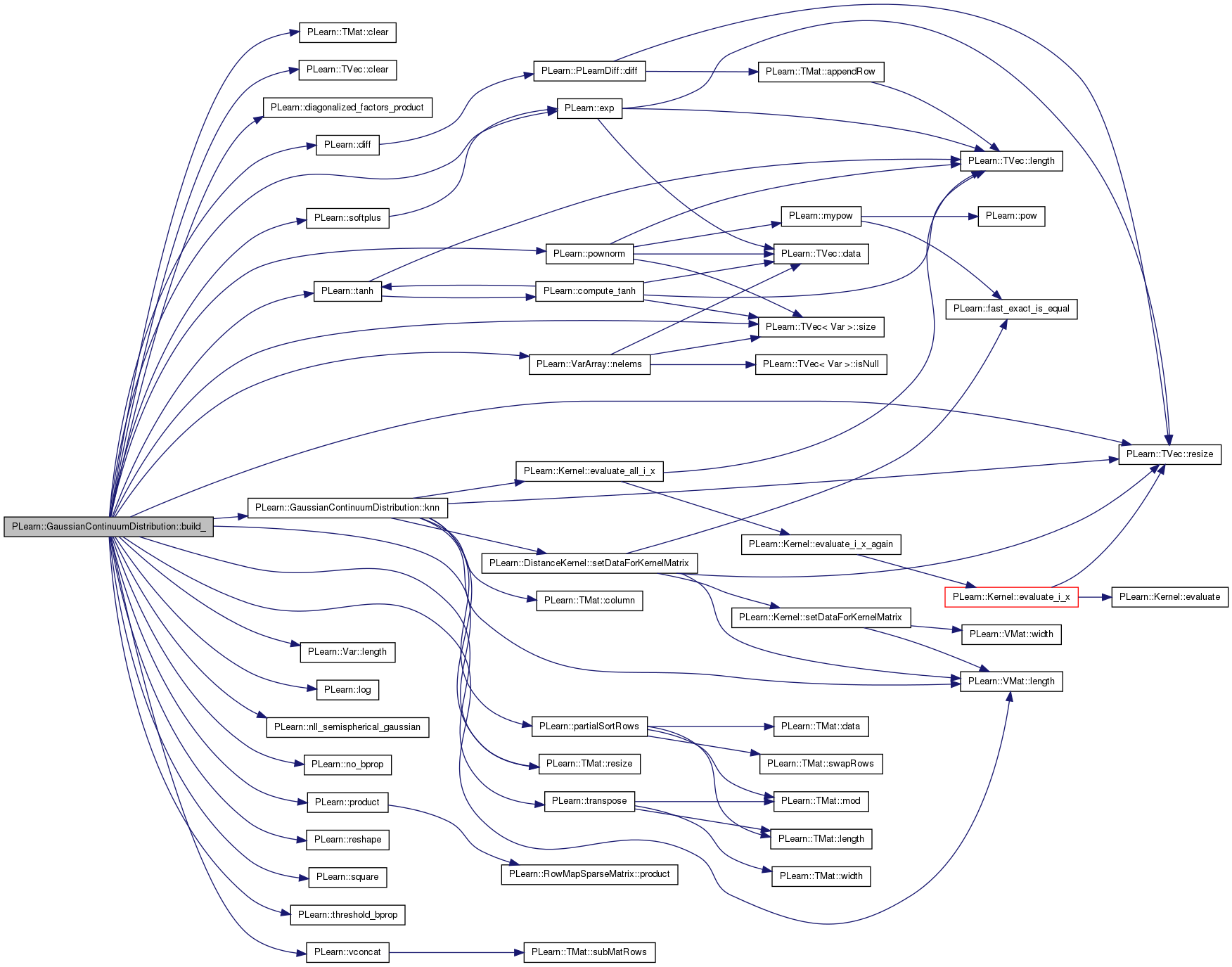

void PLearn::GaussianContinuumDistribution::build_() [private]

This does the actual building.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 527 of file GaussianContinuumDistribution.cc.

References a, architecture_type, b, Bs, c, PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), cost_of_one_example, PLearn::diagonalized_factors_product(), PLearn::diff(), dist, embedding, PLearn::exp(), fixed_min_d, fixed_min_diff, fixed_min_sig, fixed_min_sigma, Fs, i, include_current_point, PLearn::PLearner::inputsize_, knn(), PLearn::Var::length(), PLearn::VMat::length(), PLearn::log(), min_d, min_p_x, min_sig, MISSING_VALUE, mu, mu_n_neighbors, mu_noisy, mus, muV, n, n_dim, n_hidden_units, n_neighbors, n_neighbors_density, neighbor_row, PLearn::VarArray::nelems(), PLearn::nll_semispherical_gaussian(), PLearn::no_bprop(), noise, noise_type, noise_var, noisy_data, output_embedding, output_f_all, p_neighbors, p_neighbors_and_point, p_target, p_x, parameters, PLERROR, PLearn::pownorm(), predictor, PLearn::product(), reference_set, PLearn::reshape(), PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sigma_grad_scale_factor, PLearn::TVec< T >::size(), sm, sm_bigger_than_sn, smb, sms, smV, sn, snb, sns, snV, PLearn::softplus(), PLearn::square(), sum_nll, svd_threshold, t_row, tangent_plane, tangent_targets, tangent_targets_and_point, PLearn::tanh(), target_index, PLearn::threshold_bprop(), train_nearest_neighbors, PLearn::PLearner::train_set, PLearn::transpose(), use_noise, use_noise_direction, Ut_svd, V, V_svd, variances_transfer_function, PLearn::vconcat(), w, W, weight_mu_and_tangent, x, x_minus_neighbor, z, zm, and zn.

Referenced by build().

{

n = PLearner::inputsize_;

if (n>0)

{

Var log_n_examples(1,1,"log(n_examples)");

if(train_set)

reference_set = train_set;

{

if (n_hidden_units <= 0)

PLERROR("GaussianContinuumDistribution::Number of hidden units should be positive, now %d\n",n_hidden_units);

x = Var(n);

c = Var(n_hidden_units,1,"c ");

V = Var(n_hidden_units,n,"V ");

Var a = tanh(c + product(V,x));

muV = Var(n,n_hidden_units,"muV ");

smV = Var(1,n_hidden_units,"smV ");

smb = Var(1,1,"smB ");

snV = Var(1,n_hidden_units,"snV ");

snb = Var(1,1,"snB ");

if(architecture_type == "embedding_neural_network")

{

W = Var(n_dim,n_hidden_units,"W ");

tangent_plane = diagonalized_factors_product(W,1-a*a,V);

embedding = product(W,a);

output_embedding = Func(x,embedding);

}

else if(architecture_type == "single_neural_network")

{

b = Var(n_dim*n,1,"b");

W = Var(n_dim*n,n_hidden_units,"W ");

tangent_plane = reshape(b + product(W,a),n_dim,n);

}

else

PLERROR("GaussianContinuumDistribution::build_, unknown architecture_type option %s",

architecture_type.c_str());

mu = product(muV,a);

fixed_min_sig = new SourceVariable(1,1);

fixed_min_sig->value[0] = fixed_min_sigma;

min_sig = Var(1,1);

min_sig->setName("min_sig");

fixed_min_d = new SourceVariable(1,1);

fixed_min_d->value[0] = fixed_min_diff;

min_d = Var(1,1);

min_d->setName("min_d");

if(noise > 0)

{

if(noise_type == "uniform")

{

PP<UniformDistribution> temp = new UniformDistribution();

Vec lower_noise(n);

Vec upper_noise(n);

for(int i=0; i<n; i++)

{

lower_noise[i] = -1*noise;

upper_noise[i] = noise;

}

temp->min = lower_noise;

temp->max = upper_noise;

dist = temp;

}

else if(noise_type == "gaussian")

{

PP<GaussianDistribution> temp = new GaussianDistribution();

Vec mu(n); mu.clear();

Vec eig_values(n);

Mat eig_vectors(n,n); eig_vectors.clear();

for(int i=0; i<n; i++)

{

eig_values[i] = noise; // maybe should be adjusted to the sigma noise at the input

eig_vectors(i,i) = 1.0;

}

temp->mu = mu;

temp->eigenvalues = eig_values;

temp->eigenvectors = eig_vectors;

dist = temp;

}

else PLERROR("In GaussianContinuumDistribution::build_() : noise_type %s not defined",noise_type.c_str());

noise_var = new PDistributionVariable(x,dist);

if(use_noise_direction)

{

for(int k=0; k<n_dim; k++)

{

Var index_var = new SourceVariable(1,1);

index_var->value[0] = k;

Var f_k = new VarRowVariable(tangent_plane,index_var);

noise_var = noise_var - product(f_k,noise_var)* transpose(f_k)/pownorm(f_k,2);

}

}

noise_var = no_bprop(noise_var);

noise_var->setName(noise_type);

}

else

{

noise_var = new SourceVariable(n,1);

noise_var->setName("no noise");

for(int i=0; i<n; i++)

noise_var->value[i] = 0;

}

// Path for noisy mu

Var a_noisy = tanh(c + product(V,x+noise_var));

mu_noisy = product(muV,a_noisy);

if(sm_bigger_than_sn)

{

if(variances_transfer_function == "softplus") sn = softplus(snb + product(snV,a)) + min_sig + fixed_min_sig;

else if(variances_transfer_function == "square") sn = square(snb + product(snV,a)) + min_sig + fixed_min_sig;

else if(variances_transfer_function == "exp") sn = exp(snb + product(snV,a)) + min_sig + fixed_min_sig;

else PLERROR("In GaussianContinuumDistribution::build_ : unknown variances_transfer_function option %s ", variances_transfer_function.c_str());

Var diff;

if(variances_transfer_function == "softplus") diff = softplus(smb + product(smV,a)) + min_d + fixed_min_d;

else if(variances_transfer_function == "square") diff = square(smb + product(smV,a)) + min_d + fixed_min_d;

else if(variances_transfer_function == "exp") diff = exp(smb + product(smV,a)) + min_d + fixed_min_d;

sm = sn + diff;

}

else

{

if(variances_transfer_function == "softplus"){

sm = softplus(smb + product(smV,a)) + min_sig + fixed_min_sig;

sn = softplus(snb + product(snV,a)) + min_sig + fixed_min_sig;

}

else if(variances_transfer_function == "square"){

sm = square(smb + product(smV,a)) + min_sig + fixed_min_sig;

sn = square(snb + product(snV,a)) + min_sig + fixed_min_sig;

}

else if(variances_transfer_function == "exp"){

sm = exp(smb + product(smV,a)) + min_sig + fixed_min_sig;

sn = exp(snb + product(snV,a)) + min_sig + fixed_min_sig;

}

else PLERROR("In GaussianContinuumDistribution::build_ : unknown variances_transfer_function option %s ", variances_transfer_function.c_str());

}

if(sigma_grad_scale_factor > 0)

{

//sm = no_bprop(sm,sigma_grad_scale_factor);

//sn = no_bprop(sn,sigma_grad_scale_factor);

sn = threshold_bprop(sn,sigma_grad_scale_factor);

}

mu_noisy->setName("mu_noisy ");

tangent_plane->setName("tangent_plane ");

mu->setName("mu ");

sm->setName("sm ");

sn->setName("sn ");

a_noisy->setName("a_noisy ");

a->setName("a ");

if(architecture_type == "embedding_neural_network")

embedding->setName("embedding ");

x->setName("x ");

if(architecture_type == "embedding_neural_network")

predictor = Func(x, W & c & V & muV & smV & smb & snV & snb & min_sig & min_d, tangent_plane & mu & sm & sn );

if(architecture_type == "single_neural_network")

predictor = Func(x, b & W & c & V & muV & smV & smb & snV & snb & min_sig & min_d, tangent_plane & mu & sm & sn );

output_f_all = Func(x,tangent_plane & mu & sm & sn);

}

if (parameters.size()>0 && parameters.nelems() == predictor->parameters.nelems())

predictor->parameters.copyValuesFrom(parameters);

parameters.resize(predictor->parameters.size());

for (int i=0;i<parameters.size();i++)

parameters[i] = predictor->parameters[i];

Var target_index = Var(1,1);

target_index->setName("target_index");

Var neighbor_indexes = Var(n_neighbors,1);

neighbor_indexes->setName("neighbor_indexes");

p_x = Var(reference_set->length(),1);

p_x->setName("p_x");

for(int i=0; i<p_x.length(); i++)

p_x->value[i] = MISSING_VALUE;

//p_target = new VarRowsVariable(p_x,target_index);

p_target = new SourceVariable(1,1);

p_target->value[0] = log(1.0/reference_set->length());

p_target->setName("p_target");

p_neighbors =new VarRowsVariable(p_x,neighbor_indexes);

p_neighbors->setName("p_neighbors");

tangent_targets = Var(n_neighbors,n);

if(include_current_point)

{

Var temp = new SourceVariable(1,n);

temp->value.fill(0);

tangent_targets_and_point = vconcat(temp & tangent_targets);

p_neighbors_and_point = vconcat(p_target & p_neighbors);

}

else

{

tangent_targets_and_point = tangent_targets;

p_neighbors_and_point = p_neighbors;

}

if(mu_n_neighbors < 0 ) mu_n_neighbors = n_neighbors;

// compute - log ( sum_{neighbors of x} P(neighbor|x) ) according to semi-spherical model

Var nll = nll_semispherical_gaussian(tangent_plane, mu, sm, sn, tangent_targets_and_point, p_target, p_neighbors_and_point, noise_var, mu_noisy,

use_noise, svd_threshold, min_p_x, mu_n_neighbors); // + log_n_examples;

//nll_f = Func(tangent_plane & mu & sm & sn & tangent_targets, nll);

Var knn = new SourceVariable(1,1);

knn->setName("knn");

knn->value[0] = n_neighbors + (include_current_point ? 1 : 0);

if(weight_mu_and_tangent != 0)

{

sum_nll = new ColumnSumVariable(nll) / knn + weight_mu_and_tangent * ((Var) new RowSumVariable(square(product(no_bprop(tangent_plane),mu_noisy))));

}

else

sum_nll = new ColumnSumVariable(nll) / knn;

cost_of_one_example = Func(x & tangent_targets & target_index & neighbor_indexes, predictor->parameters, sum_nll);

noisy_data = Func(x,x + noise_var); // Func to inspect what the noisy data looks like (does not work yet; still under investigation)

//verify_gradient_func = Func(predictor->inputs & tangent_targets & target_index & neighbor_indexes, predictor->parameters & mu_noisy, sum_nll);

if(n_neighbors_density > reference_set.length()-!include_current_point || n_neighbors_density < 0) n_neighbors_density = reference_set.length() - !include_current_point;

train_nearest_neighbors.resize(reference_set.length(), n_neighbors_density-1);

t_row.resize(n);

Ut_svd.resize(n,n);

V_svd.resize(n_dim,n_dim);

z.resize(n);

zm.resize(n);

zn.resize(n);

x_minus_neighbor.resize(n);

neighbor_row.resize(n);

w.resize(n_dim);

Bs.resize(reference_set.length());

Fs.resize(reference_set.length());

mus.resize(reference_set.length(), n);

sms.resize(reference_set.length());

sns.resize(reference_set.length());

}

}
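The variance heads in build_() pass an affine function of the hidden layer a through a positive transfer function and then add floors (min_sig + fixed_min_sig). When sm_bigger_than_sn is true, sm is built as sn plus a second positive term floored by min_d + fixed_min_d, so sm > sn holds by construction rather than by a penalty. The standalone sketch below reproduces that scalar arithmetic outside PLearn's Var graph; the function and struct names are hypothetical, and the products snb + snV*a and smb + smV*a are assumed to be precomputed into the single pre-activations sn_pre and sm_pre.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <string>

// Positive transfer functions named by the variances_transfer_function option.
double positive_transfer(const std::string& kind, double t) {
    if (kind == "softplus")
        // log(1 + exp(t)), written to avoid overflow for large t.
        return t > 0 ? t + std::log1p(std::exp(-t)) : std::log1p(std::exp(t));
    if (kind == "square")
        return t * t;
    if (kind == "exp")
        return std::exp(t);
    throw std::invalid_argument("unknown variances_transfer_function: " + kind);
}

struct Variances {
    double sm;  // variance along the predicted tangent (manifold) directions
    double sn;  // variance in the remaining (noise) directions
};

// Hypothetical scalar version of the sm/sn heads built in build_().
Variances compute_variances(const std::string& kind, double sn_pre, double sm_pre,
                            bool sm_bigger_than_sn,
                            double min_sig, double fixed_min_sig,
                            double min_d, double fixed_min_d) {
    Variances v;
    v.sn = positive_transfer(kind, sn_pre) + min_sig + fixed_min_sig;
    if (sm_bigger_than_sn) {
        // sm = sn + a positive difference, so sm > sn always holds.
        v.sm = v.sn + positive_transfer(kind, sm_pre) + min_d + fixed_min_d;
    } else {
        // Independent head with the same floor as sn.
        v.sm = positive_transfer(kind, sm_pre) + min_sig + fixed_min_sig;
    }
    return v;
}
```

Building the ordering into the parameterization keeps every gradient step feasible, which is presumably why the listing adds diff to sn instead of constraining sm separately.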

string PLearn::GaussianContinuumDistribution::classname() const [virtual]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

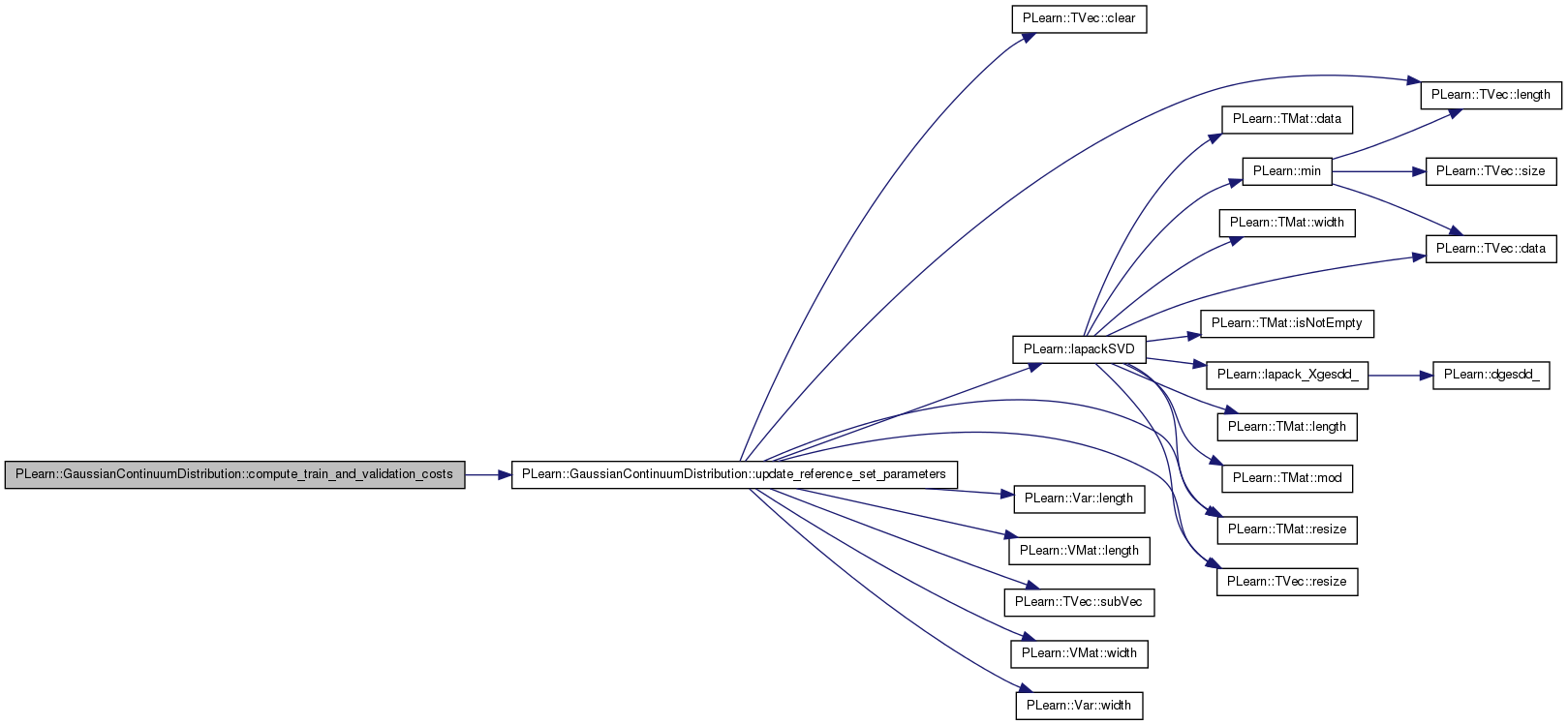

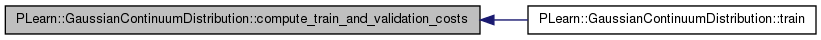

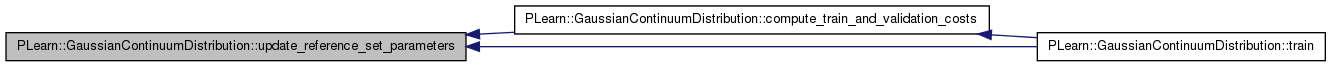

void PLearn::GaussianContinuumDistribution::compute_train_and_validation_costs() [private]

Definition at line 1036 of file GaussianContinuumDistribution.cc.

References update_reference_set_parameters().

Referenced by train().

{

update_reference_set_parameters();

// estimate p(x) for the training set

/*

real nll_train = 0;

for(int t=0; t<train_set.length(); t++)

{

train_set->getRow(t,t_row);

p_x->value[t] = 0;

// fetching nearest neighbors for density estimation

for(int neighbor=0; neighbor<train_nearest_neighbors.width(); neighbor++)

{

train_set->getRow(train_nearest_neighbors(t,neighbor),neighbor_row);

substract(t_row,neighbor_row,x_minus_neighbor);

substract(x_minus_neighbor,mus(train_nearest_neighbors(t,neighbor)),z);

product(w, Bs[train_nearest_neighbors(t,neighbor)], z);

transposeProduct(zm, Fs[train_nearest_neighbors(t,neighbor)], w);

substract(z,zm,zn);

p_x->value[t] += exp(-0.5*(pownorm(zm,2)/sms[train_nearest_neighbors(t,neighbor)] + pownorm(zn,2)/sns[train_nearest_neighbors(t,neighbor)]

+ n_dim*log(sms[train_nearest_neighbors(t,neighbor)]) + (n-n_dim)*log(sns[train_nearest_neighbors(t,neighbor)])) - n/2.0 * Log2Pi);

}

p_x->value[t] /= train_set.length();

nll_train -= log(p_x->value[t]);

}

nll_train /= train_set.length();

if(verbosity > 2) cout << "NLL train = " << nll_train << endl;

// estimate p(x) for the validation set

real nll_validation = 0;

for(int t=0; t<valid_set.length(); t++)

{

valid_set->getRow(t,t_row);

real this_p_x = 0;

// fetching nearest neighbors for density estimation

for(int neighbor=0; neighbor<n_neighbors_density; neighbor++)

{

train_set->getRow(validation_nearest_neighbors(t,neighbor), neighbor_row);

substract(t_row,neighbor_row,x_minus_neighbor);

substract(x_minus_neighbor,mus(validation_nearest_neighbors(t,neighbor)),z);

product(w, Bs[validation_nearest_neighbors(t,neighbor)], z);

transposeProduct(zm, Fs[validation_nearest_neighbors(t,neighbor)], w);

substract(z,zm,zn);

this_p_x += exp(-0.5*(pownorm(zm,2)/sms[validation_nearest_neighbors(t,neighbor)] + pownorm(zn,2)/sns[validation_nearest_neighbors(t,neighbor)]

+ n_dim*log(sms[validation_nearest_neighbors(t,neighbor)]) + (n-n_dim)*log(sns[validation_nearest_neighbors(t,neighbor)])) - n/2.0 * Log2Pi);

}

this_p_x /= train_set.length(); // When points will be added using a random walk, this will need to be changed (among other things...)

nll_validation -= log(this_p_x);

}

nll_validation /= valid_set.length();

if(verbosity > 2) cout << "NLL validation = " << nll_validation << endl;

*/

}

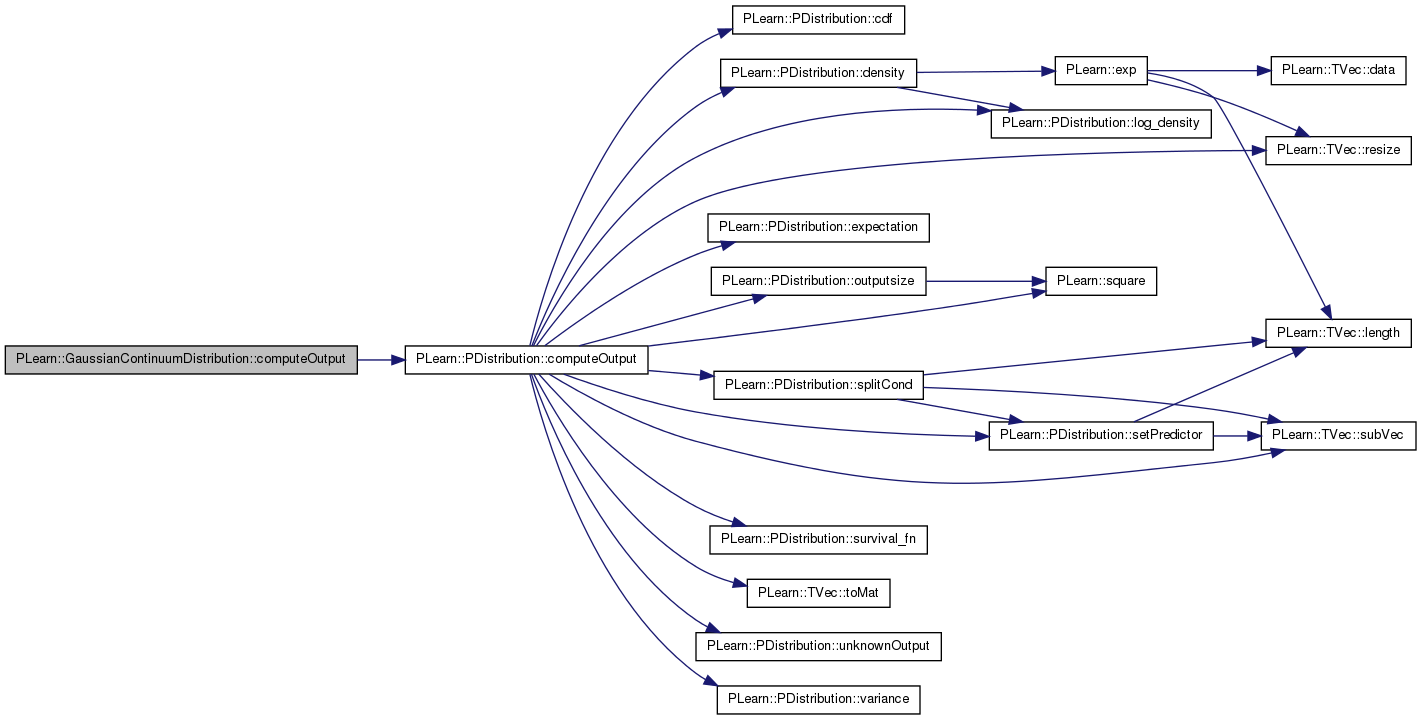

void PLearn::GaussianContinuumDistribution::computeOutput(const Vec & input, Vec & output) const [virtual]

Produce outputs according to what is specified in outputs_def.

Reimplemented from PLearn::PDistribution.

Definition at line 1392 of file GaussianContinuumDistribution.cc.

References PLearn::PDistribution::computeOutput(), embedding, output_embedding, and PLearn::PDistribution::outputs_def.

{

switch(outputs_def[0])

{

case 'm':

output_embedding(input);

output << embedding->value;

break;

default:

inherited::computeOutput(input,output);

}

}

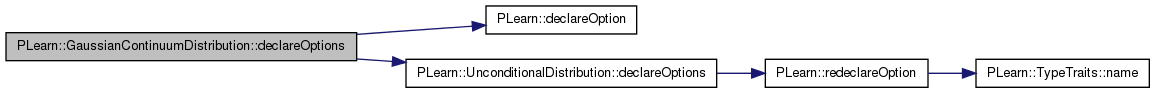

void PLearn::GaussianContinuumDistribution::declareOptions(OptionList & ol) [static, protected]

Declares this class' options.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 350 of file GaussianContinuumDistribution.cc.

References architecture_type, batch_size, Bs, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::UnconditionalDistribution::declareOptions(), fixed_min_diff, fixed_min_sigma, Fs, include_current_point, PLearn::OptionBase::learntoption, min_diff, min_p_x, min_sigma, mu_n_neighbors, mus, n_dim, n_hidden_units, n_neighbors, n_neighbors_density, n_random_walk_per_point, n_random_walk_step, noise, noise_type, optimizer, parameters, random_walk_step_prop, reference_set, sigma_grad_scale_factor, sm_bigger_than_sn, sms, sns, svd_threshold, update_parameters_every_n_epochs, use_noise, use_noise_direction, variances_transfer_function, walk_on_noise, and weight_mu_and_tangent.

{

// ### Declare all of this object's options here

// ### For the "flags" of each option, you should typically specify

// ### one of OptionBase::buildoption, OptionBase::learntoption or

// ### OptionBase::tuningoption. Another possible flag to be combined with

// ### is OptionBase::nosave

declareOption(ol, "weight_mu_and_tangent", &GaussianContinuumDistribution::weight_mu_and_tangent, OptionBase::buildoption,

"Weight of the cost on the scalar product between the manifold directions and mu.\n"

);

declareOption(ol, "include_current_point", &GaussianContinuumDistribution::include_current_point, OptionBase::buildoption,

"Indication that the current point should be included in the nearest neighbors.\n"

);

declareOption(ol, "n_neighbors", &GaussianContinuumDistribution::n_neighbors, OptionBase::buildoption,

"Number of nearest neighbors to consider for gradient descent.\n"

);

declareOption(ol, "n_neighbors_density", &GaussianContinuumDistribution::n_neighbors_density, OptionBase::buildoption,

"Number of nearest neighbors to consider for p(x) density estimation.\n"

);

declareOption(ol, "mu_n_neighbors", &GaussianContinuumDistribution::mu_n_neighbors, OptionBase::buildoption,

"Number of nearest neighbors to learn the mus (if < 0, mu_n_neighbors = n_neighbors).\n"

);

declareOption(ol, "n_dim", &GaussianContinuumDistribution::n_dim, OptionBase::buildoption,

"Number of tangent vectors to predict.\n"

);

declareOption(ol, "update_parameters_every_n_epochs", &GaussianContinuumDistribution::update_parameters_every_n_epochs, OptionBase::buildoption,

"Frequency of the update of the stored parameters of the reference set. \n"

);

declareOption(ol, "sigma_grad_scale_factor", &GaussianContinuumDistribution::sigma_grad_scale_factor, OptionBase::buildoption,

"Scaling factor of the gradient on the sigmas. \n"

);

declareOption(ol, "optimizer", &GaussianContinuumDistribution::optimizer, OptionBase::buildoption,

"Optimizer that optimizes the cost function.\n"

);

declareOption(ol, "variances_transfer_function", &GaussianContinuumDistribution::variances_transfer_function,

OptionBase::buildoption,

"Type of output transfer function for predicted variances, to force them to be >0:\n"

" square : take the square\n"

" exp : apply the exponential\n"

" softplus : apply the function log(1+exp(.))\n"

);

declareOption(ol, "architecture_type", &GaussianContinuumDistribution::architecture_type, OptionBase::buildoption,

"For pre-defined tangent_predictor types: \n"

" single_neural_network : prediction = b + W*tanh(c + V*x), where W has n_hidden_units columns\n"

" where the resulting vector is viewed as an n_dim by n matrix\n"

" embedding_neural_network: prediction[k,i] = d(e[k])/d(x[i]), where e(x) is an ordinary neural\n"

" network representing the embedding function (see output_type option)\n"

"where (b,W,c,V) are parameters to be optimized.\n"

);

declareOption(ol, "n_hidden_units", &GaussianContinuumDistribution::n_hidden_units, OptionBase::buildoption,

"Number of hidden units (if architecture_type is some kind of neural network)\n"

);

/*

declareOption(ol, "output_type", &GaussianContinuumDistribution::output_type, OptionBase::buildoption,

"Default value (the only one considered if architecture_type != embedding_*) is\n"

" tangent_plane: output the predicted tangent plane.\n"

" embedding: output the embedding vector (only if architecture_type == embedding_*).\n"

" tangent_plane+embedding: output both (in this order).\n"

);

*/

declareOption(ol, "batch_size", &GaussianContinuumDistribution::batch_size, OptionBase::buildoption,

" how many samples to use to estimate the average gradient before updating the weights\n"

" 0 is equivalent to specifying training_set->length() \n");

declareOption(ol, "svd_threshold", &GaussianContinuumDistribution::svd_threshold, OptionBase::buildoption,

"Threshold to accept singular values of F in solving for linear combination weights on tangent subspace.\n"

);

declareOption(ol, "sm_bigger_than_sn", &GaussianContinuumDistribution::sm_bigger_than_sn, OptionBase::buildoption,

"Indication that sm should always be bigger than sn.\n"

);

declareOption(ol, "walk_on_noise", &GaussianContinuumDistribution::walk_on_noise, OptionBase::buildoption,

"Indication that the random walk should also consider the noise variation.\n"

);

declareOption(ol, "parameters", &GaussianContinuumDistribution::parameters, OptionBase::learntoption,

"Parameters of the tangent_predictor function.\n"

);

declareOption(ol, "Bs", &GaussianContinuumDistribution::Bs, OptionBase::learntoption,

"The B matrices for the training set.\n"

);

declareOption(ol, "Fs", &GaussianContinuumDistribution::Fs, OptionBase::learntoption,

"The F (tangent planes) matrices for the training set.\n"

);

declareOption(ol, "mus", &GaussianContinuumDistribution::mus, OptionBase::learntoption,

"The mu vectors for the training set.\n"

);

declareOption(ol, "sms", &GaussianContinuumDistribution::sms, OptionBase::learntoption,

"The sm values for the training set.\n"

);

declareOption(ol, "sns", &GaussianContinuumDistribution::sns, OptionBase::learntoption,

"The sn values for the training set.\n"

);

declareOption(ol, "min_sigma", &GaussianContinuumDistribution::min_sigma, OptionBase::buildoption,

"The minimum value for sigma noise and manifold.\n"

);

declareOption(ol, "min_diff", &GaussianContinuumDistribution::min_diff, OptionBase::buildoption,

"The minimum value for the difference between sigma manifold and noise.\n"

);

declareOption(ol, "fixed_min_sigma", &GaussianContinuumDistribution::fixed_min_sigma, OptionBase::buildoption,

"The fixed minimum value for sigma noise and manifold.\n"

);

declareOption(ol, "fixed_min_diff", &GaussianContinuumDistribution::fixed_min_diff, OptionBase::buildoption,

"The fixed minimum value for the difference between sigma manifold and noise.\n"

);

declareOption(ol, "min_p_x", &GaussianContinuumDistribution::min_p_x, OptionBase::buildoption,

"The minimum value for p_x, for stability concerns when doing gradient descent.\n"

);

declareOption(ol, "n_random_walk_step", &GaussianContinuumDistribution::n_random_walk_step, OptionBase::buildoption,

"The number of random walk steps.\n"

);

declareOption(ol, "n_random_walk_per_point", &GaussianContinuumDistribution::n_random_walk_per_point, OptionBase::buildoption,

"The number of random walks per training set point.\n"

);

declareOption(ol, "noise", &GaussianContinuumDistribution::noise, OptionBase::buildoption,

"Noise parameter for the training data. For uniform noise, this gives half the length \n" "of the uniform window (centered around the origin), and for gaussian noise, this gives the variance of the noise in all directions.\n"

);

declareOption(ol, "noise_type", &GaussianContinuumDistribution::noise_type, OptionBase::buildoption,

"Type of the noise (\"uniform\" or \"gaussian\").\n"

);

declareOption(ol, "use_noise", &GaussianContinuumDistribution::use_noise, OptionBase::buildoption,

"Indication that the training should be done using noise on training data.\n"

);

declareOption(ol, "use_noise_direction", &GaussianContinuumDistribution::use_noise_direction, OptionBase::buildoption,

"Indication that the noise should be directed in the noise directions.\n"

);

declareOption(ol, "random_walk_step_prop", &GaussianContinuumDistribution::random_walk_step_prop, OptionBase::buildoption,

"Proportion or confidence of the random walk steps.\n"

);

declareOption(ol, "reference_set", &GaussianContinuumDistribution::reference_set, OptionBase::learntoption,

"Reference points for density computation.\n"

);

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
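For architecture_type = embedding_neural_network, build_() forms the tangent plane as diagonalized_factors_product(W, 1-a*a, V), which matches the help text's prediction[k,i] = d(e[k])/d(x[i]) for e(x) = W tanh(c + V x): by the chain rule, the Jacobian is W diag(1 - a²) V with a = tanh(c + V x). The sketch below evaluates that product with plain std::vector matrices, assuming diagonalized_factors_product(A, d, B) computes A diag(d) B; the helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;
using Vector = std::vector<double>;

// Hidden layer a = tanh(c + V x); V is n_hidden x n, c has n_hidden entries.
Vector hidden(const Matrix& V, const Vector& c, const Vector& x) {
    Vector a(c.size());
    for (std::size_t h = 0; h < c.size(); ++h) {
        double s = c[h];
        for (std::size_t i = 0; i < x.size(); ++i) s += V[h][i] * x[i];
        a[h] = std::tanh(s);
    }
    return a;
}

// Embedding e(x) = W a; W is n_dim x n_hidden.
Vector embed(const Matrix& W, const Matrix& V, const Vector& c, const Vector& x) {
    Vector a = hidden(V, c, x);
    Vector e(W.size(), 0.0);
    for (std::size_t k = 0; k < W.size(); ++k)
        for (std::size_t h = 0; h < a.size(); ++h) e[k] += W[k][h] * a[h];
    return e;
}

// Tangent plane F = W diag(1 - a^2) V, an n_dim x n matrix whose entry
// F[k][i] is d e[k] / d x[i].
Matrix tangent_plane(const Matrix& W, const Matrix& V, const Vector& c, const Vector& x) {
    Vector a = hidden(V, c, x);
    Matrix F(W.size(), Vector(x.size(), 0.0));
    for (std::size_t k = 0; k < W.size(); ++k)
        for (std::size_t i = 0; i < x.size(); ++i)
            for (std::size_t h = 0; h < a.size(); ++h)
                F[k][i] += W[k][h] * (1.0 - a[h] * a[h]) * V[h][i];
    return F;
}
```

A central finite difference of embed() along each input coordinate should agree closely with the corresponding column of tangent_plane(), which is a cheap sanity check when experimenting with this architecture.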

static const PPath& PLearn::GaussianContinuumDistribution::declaringFile() [inline, static]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 219 of file GaussianContinuumDistribution.h.

GaussianContinuumDistribution * PLearn::GaussianContinuumDistribution::deepCopy(CopiesMap & copies) const [virtual]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

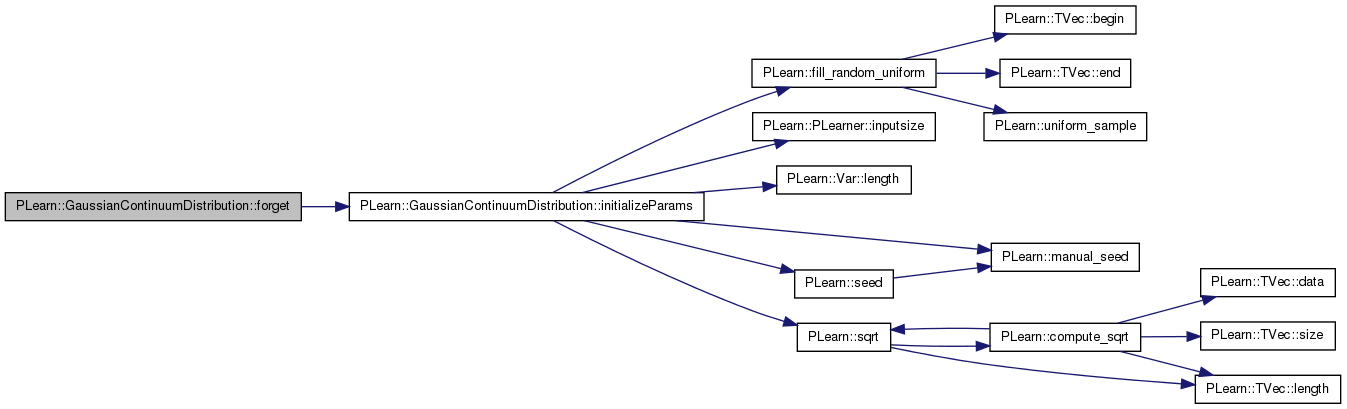

void PLearn::GaussianContinuumDistribution::forget() [protected, virtual]

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 1174 of file GaussianContinuumDistribution.cc.

References initializeParams(), PLearn::PLearner::stage, and PLearn::PLearner::train_set.

{

if (train_set) initializeParams();

stage = 0;

}

OptionList & PLearn::GaussianContinuumDistribution::getOptionList() const [virtual]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

OptionMap & PLearn::GaussianContinuumDistribution::getOptionMap() const [virtual]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

RemoteMethodMap & PLearn::GaussianContinuumDistribution::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 126 of file GaussianContinuumDistribution.cc.

Definition at line 1383 of file GaussianContinuumDistribution.cc.

References reference_set, and PLearn::VMat::width().

{

Vec ret(reference_set->width());

reference_set->getRow(j,ret);

return ret;

}

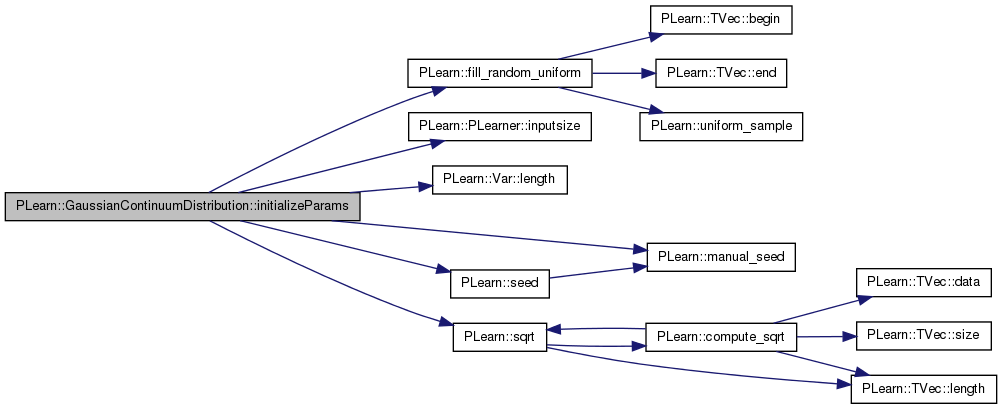

void PLearn::GaussianContinuumDistribution::initializeParams() [protected, virtual]

Definition at line 1268 of file GaussianContinuumDistribution.cc.

References architecture_type, b, c, PLearn::fill_random_uniform(), i, PLearn::PLearner::inputsize(), PLearn::Var::length(), PLearn::manual_seed(), min_d, min_diff, min_sig, min_sigma, MISSING_VALUE, muV, n_hidden_units, optimizer, p_x, PLERROR, PLearn::seed(), PLearn::PLearner::seed_, smb, smV, snb, snV, PLearn::sqrt(), V, and W.

Referenced by forget().

{

if (seed_>=0)

manual_seed(seed_);

else

PLearn::seed();

if (architecture_type=="embedding_neural_network")

{

real delta = 1.0 / sqrt(real(inputsize()));

fill_random_uniform(V->value, -delta, delta);

delta = 1.0 / real(n_hidden_units);

fill_random_uniform(W->matValue, -delta, delta);

c->value.clear();

fill_random_uniform(smV->matValue, -delta, delta);

smb->value.clear();

fill_random_uniform(smV->matValue, -delta, delta);

snb->value.clear();

fill_random_uniform(snV->matValue, -delta, delta);

fill_random_uniform(muV->matValue, -delta, delta);

min_sig->value[0] = min_sigma;

min_d->value[0] = min_diff;

}

else if (architecture_type=="single_neural_network")

{

real delta = 1.0 / sqrt(real(inputsize()));

fill_random_uniform(V->value, -delta, delta);

delta = 1.0 / real(n_hidden_units);

fill_random_uniform(W->matValue, -delta, delta);

c->value.clear();

fill_random_uniform(smV->matValue, -delta, delta);

smb->value.clear();

fill_random_uniform(smV->matValue, -delta, delta);

snb->value.clear();

fill_random_uniform(snV->matValue, -delta, delta);

fill_random_uniform(muV->matValue, -delta, delta);

b->value.clear();

min_sig->value[0] = min_sigma;

min_d->value[0] = min_diff;

}

else PLERROR("other types not handled yet!");

for(int i=0; i<p_x.length(); i++)

//p_x->value[i] = log(1.0/p_x.length());

p_x->value[i] = MISSING_VALUE;

if(optimizer)

optimizer->reset();

}
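initializeParams() uses a fan-in-based scaling: V is drawn uniformly from [-1/sqrt(inputsize), 1/sqrt(inputsize)], the output-side weights (W, smV, snV, muV) from [-1/n_hidden_units, 1/n_hidden_units], and the biases (c, smb, snb, and b for single_neural_network) are zeroed. The sketch below reproduces that scheme for V, c and muV only. It uses std::mt19937 as a stand-in for PLearn's own generator and manual_seed(), so the actual numbers will differ from PLearn's; the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Fill a flat parameter buffer with values drawn uniformly from [-delta, delta).
// std::mt19937 stands in for PLearn's random generator.
void fill_uniform(std::vector<double>& params, double delta, std::mt19937& rng) {
    std::uniform_real_distribution<double> dist(-delta, delta);
    for (double& p : params) p = dist(rng);
}

struct HiddenLayerParams {
    std::vector<double> V;    // n_hidden_units x inputsize, row-major
    std::vector<double> c;    // n_hidden_units biases
    std::vector<double> muV;  // inputsize x n_hidden_units, row-major
};

// Input layer and mu head, initialized as in initializeParams().
HiddenLayerParams init_params(std::size_t inputsize, std::size_t n_hidden_units,
                              unsigned seed) {
    std::mt19937 rng(seed);  // fixed seed, analogous to seed_ >= 0
    HiddenLayerParams p;
    p.V.resize(n_hidden_units * inputsize);
    p.c.assign(n_hidden_units, 0.0);  // biases start at zero
    p.muV.resize(inputsize * n_hidden_units);
    fill_uniform(p.V, 1.0 / std::sqrt(static_cast<double>(inputsize)), rng);
    fill_uniform(p.muV, 1.0 / static_cast<double>(n_hidden_units), rng);
    return p;
}
```

With a fixed seed the draws are reproducible, which mirrors the listing's manual_seed(seed_) branch; the listing falls back to PLearn::seed() when seed_ is negative.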

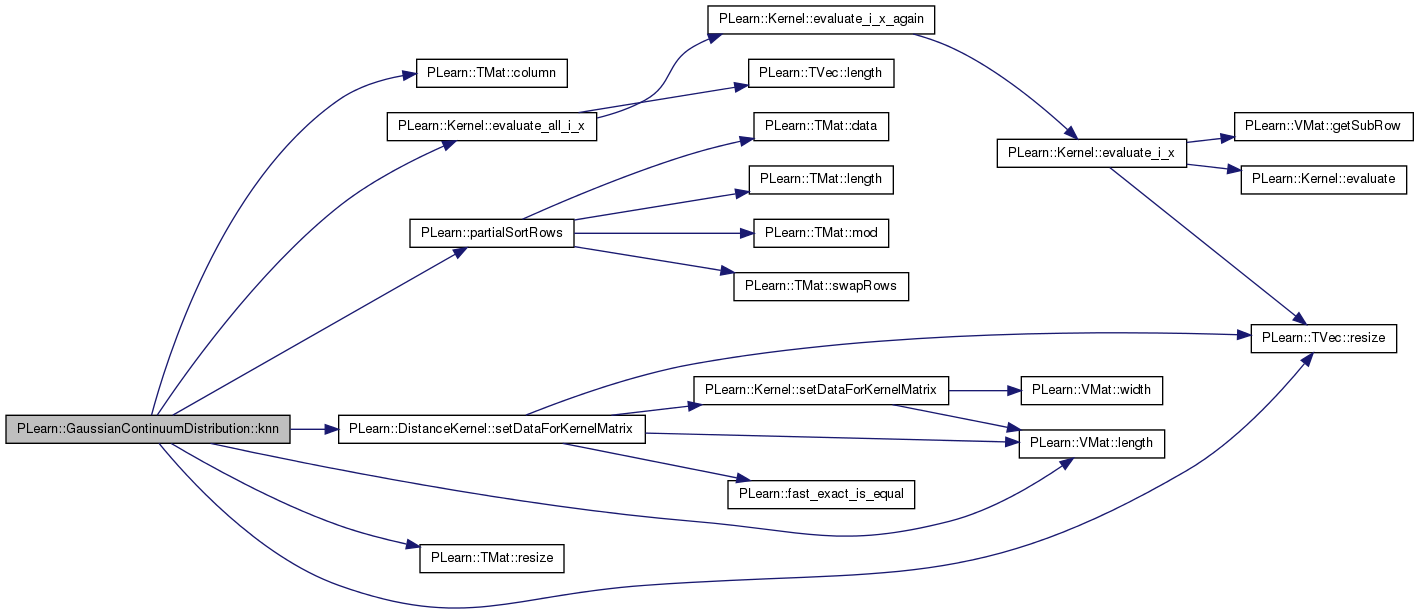

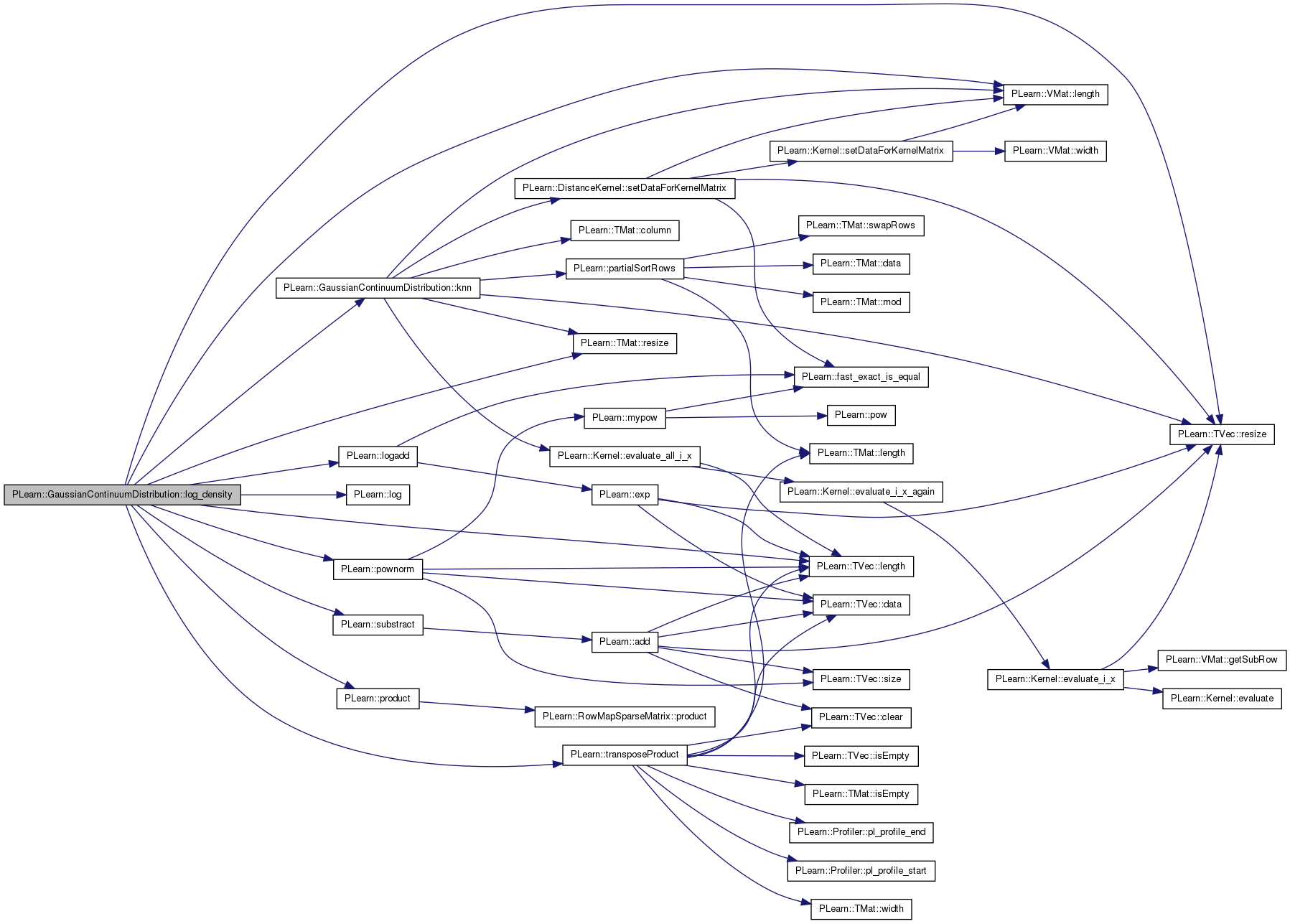

void PLearn::GaussianContinuumDistribution::knn(const VMat & vm, const Vec & x, const int & k, TVec< int > & neighbors, bool sortk) const [private]

Definition at line 828 of file GaussianContinuumDistribution.cc.

References PLearn::TMat< T >::column(), d, distances, dk, PLearn::Kernel::evaluate_all_i_x(), i, include_current_point, j, PLearn::VMat::length(), n, PLearn::partialSortRows(), PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::DistanceKernel::setDataForKernelMatrix(), and t_dist.

Referenced by build_(), and log_density().

{

int n = vm->length();

distances.resize(n,2);

distances.column(1) << Vec(0, n-1, 1);

dk.setDataForKernelMatrix(vm);

t_dist.resize(n);

dk.evaluate_all_i_x(x, t_dist);

distances.column(0) << t_dist;

partialSortRows(distances, k, sortk);

neighbors.resize(k);

for (int i = 0, j=0; i < k && j<n; j++)

{

real d = distances(j,0);

if (include_current_point || d>0) // Ouch, yuck!!! (hack: zero-distance rows are taken to be the query point itself)

{

neighbors[i] = int(distances(j,1));

i++;

}

}

}
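knn() computes the distance from x to every row of vm, partially sorts so the k closest come first, and copies their indices, skipping rows at distance zero unless include_current_point is set. The sketch below follows the same idea with std::partial_sort on (distance, index) pairs. The DistanceKernel dk used by the listing is not shown here, so squared Euclidean distance is an assumption (it yields the same ordering and the same zero test as the Euclidean distance); knn_sketch and sq_dist are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Row = std::vector<double>;

// Squared Euclidean distance (an assumed metric for this sketch).
double sq_dist(const Row& a, const Row& b) {
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        double d = a[i] - b[i];
        s += d * d;
    }
    return s;
}

// Indices of the k rows of `data` closest to x, nearest first. Rows at
// distance zero are skipped unless include_current_point is true.
std::vector<int> knn_sketch(const std::vector<Row>& data, const Row& x,
                            std::size_t k, bool include_current_point) {
    std::vector<std::pair<double, int>> candidates;
    for (std::size_t j = 0; j < data.size(); ++j) {
        double d = sq_dist(data[j], x);
        if (include_current_point || d > 0)
            candidates.emplace_back(d, static_cast<int>(j));
    }
    k = std::min(k, candidates.size());
    // Only the first k candidates need to be put in order.
    std::partial_sort(candidates.begin(),
                      candidates.begin() + static_cast<std::ptrdiff_t>(k),
                      candidates.end());
    std::vector<int> neighbors;
    for (std::size_t i = 0; i < k; ++i) neighbors.push_back(candidates[i].second);
    return neighbors;
}
```

One deliberate difference: the sketch filters out zero-distance rows before sorting. In the listing, partialSortRows orders the first k rows and the loop then skips zero-distance ones; if partialSortRows only guarantees order among those first k rows, a skipped row is replaced by row k, which need not be the next-nearest neighbor.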

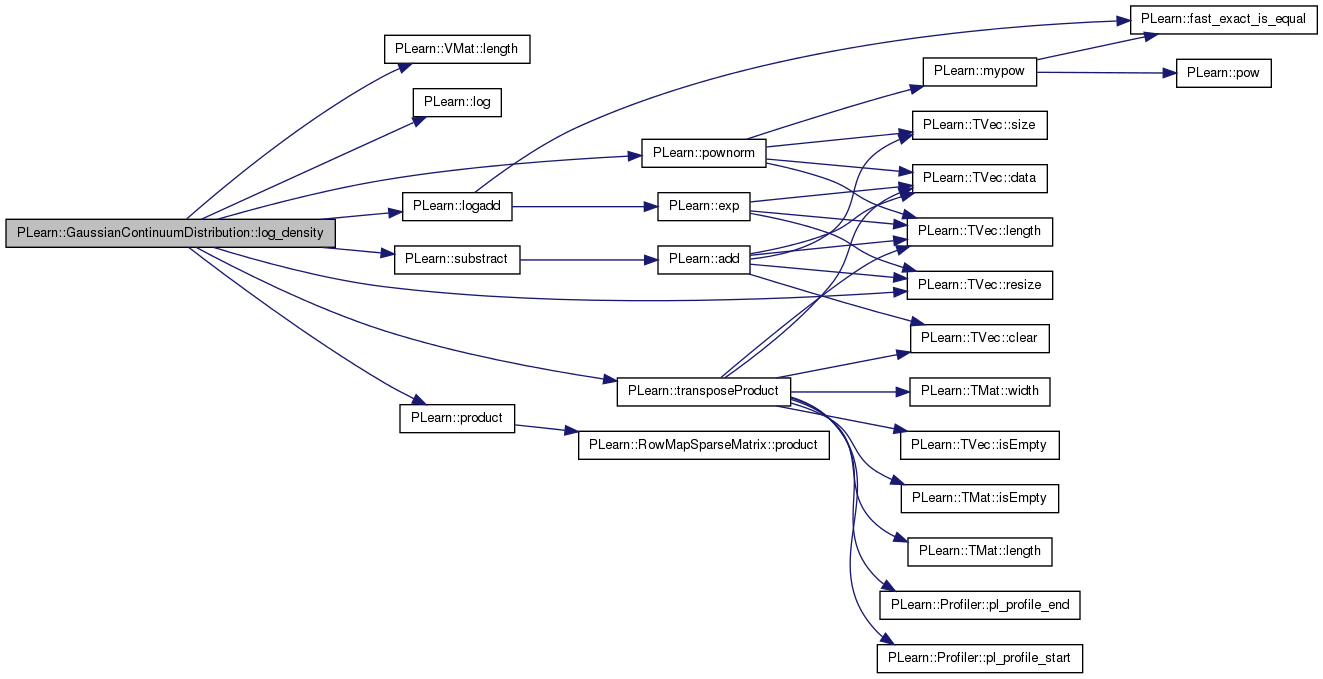

Return log of probability density log(p(y)).

Reimplemented from PLearn::PDistribution.

Definition at line 1320 of file GaussianContinuumDistribution.cc.

References Bs, Fs, knn(), PLearn::VMat::length(), PLearn::TVec< T >::length(), PLearn::log(), Log2Pi, log_gauss, PLearn::logadd(), mus, n, n_dim, n_neighbors_density, neighbor_row, PLearn::pownorm(), PLearn::product(), reference_set, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), sms, sns, PLearn::substract(), t_nn, t_row, PLearn::transposeProduct(), w, w_mat, x, x_minus_neighbor, z, zm, and zn.

{

// Compute log-density.

// Fetching nearest neighbors for density estimation.

knn(reference_set,x,n_neighbors_density,t_nn,bool(0));

w_mat.resize(t_nn.length(), w.length());

Vec w_vec;

t_row << x;

log_gauss.resize(t_nn.length());

real log_ref_set = log((real)reference_set.length());

for(int neighbor=0; neighbor<t_nn.length(); neighbor++)

{

w_vec = w_mat(neighbor);

reference_set->getRow(t_nn[neighbor],neighbor_row);

substract(t_row,neighbor_row,x_minus_neighbor);

substract(x_minus_neighbor,mus(t_nn[neighbor]),z);

product(w_vec, Bs[t_nn[neighbor]], z);

transposeProduct(zm, Fs[t_nn[neighbor]], w_vec);

substract(z,zm,zn);

log_gauss[neighbor] = -0.5*(pownorm(zm,2)/sms[t_nn[neighbor]] + pownorm(zn,2)/sns[t_nn[neighbor]]

+ n_dim*log(sms[t_nn[neighbor]]) + (n-n_dim)*log(sns[t_nn[neighbor]])) - n/2.0 * Log2Pi - log_ref_set;

}

return logadd(log_gauss);

}
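Each term accumulated in log_density() is the log of a Gaussian centered at neighbor + mu, with variance sm inside the n_dim-dimensional tangent plane and variance sn in the n - n_dim normal directions: log N = -1/2 (|zm|²/sm + |zn|²/sn + n_dim log sm + (n - n_dim) log sn) - (n/2) log 2π, where z = x - neighbor - mu, zm is the tangent component of z and zn = z - zm. The listing obtains the tangent coordinates as w = B z from the stored per-point matrices Bs; the sketch below assumes instead that the rows of F are orthonormal, so that w = F z and F^T w is the orthogonal projection of z onto the tangent plane. The function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Log of one semi-spherical Gaussian component. ASSUMES the rows of F
// (n_dim x n) are orthonormal and n_dim <= n.
double semispherical_log_gauss(const Vec& x, const Vec& neighbor, const Vec& mu,
                               const std::vector<Vec>& F, double sm, double sn) {
    const std::size_t n = x.size();
    const std::size_t n_dim = F.size();
    const double log_2pi = std::log(2.0 * std::acos(-1.0));
    // z = x - neighbor - mu
    Vec z(n);
    for (std::size_t i = 0; i < n; ++i) z[i] = x[i] - neighbor[i] - mu[i];
    // w = F z: coordinates of z along each tangent direction.
    Vec w(n_dim, 0.0);
    for (std::size_t k = 0; k < n_dim; ++k)
        for (std::size_t i = 0; i < n; ++i) w[k] += F[k][i] * z[i];
    // zm = F^T w (tangent part), zn = z - zm (normal part).
    double zm2 = 0.0, zn2 = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        double zm_i = 0.0;
        for (std::size_t k = 0; k < n_dim; ++k) zm_i += F[k][i] * w[k];
        zm2 += zm_i * zm_i;
        zn2 += (z[i] - zm_i) * (z[i] - zm_i);
    }
    return -0.5 * (zm2 / sm + zn2 / sn
                   + static_cast<double>(n_dim) * std::log(sm)
                   + static_cast<double>(n - n_dim) * std::log(sn))
           - 0.5 * static_cast<double>(n) * log_2pi;
}
```

With sm == sn the component reduces to an ordinary spherical Gaussian, which is a convenient special case for checking an implementation.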

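Return the log density of the i-th point in reference_set, estimated from all the other points of reference_set (the point itself is excluded from the mixture).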

Definition at line 1346 of file GaussianContinuumDistribution.cc.

References Bs, Fs, PLearn::VMat::length(), PLearn::log(), Log2Pi, log_gauss, PLearn::logadd(), mus, n, n_dim, neighbor_row, PLearn::pownorm(), PLearn::product(), reference_set, PLearn::TVec< T >::resize(), sms, sns, PLearn::substract(), t_row, PLearn::transposeProduct(), w, x_minus_neighbor, z, zm, and zn.

    {
        // compute log-density
        // fetching nearest neighbors for density estimation
        //knn(reference_set,x,n_neighbors_density,t_nn,bool(0));
        //t_row << x;
        reference_set->getRow(i,t_row);
        int bla = 0;
        log_gauss.resize(reference_set.length()-1);
        real log_ref_set = log((real)reference_set.length());
        for(int neighbor=0; neighbor<reference_set.length(); neighbor++)
        {
            if(neighbor == i)
            {
                bla = 1;
                continue;
            }
            reference_set->getRow(neighbor,neighbor_row);
            substract(t_row,neighbor_row,x_minus_neighbor);
            substract(x_minus_neighbor,mus(neighbor),z);
            product(w, Bs[neighbor], z);
            transposeProduct(zm, Fs[neighbor], w);
            substract(z,zm,zn);
            log_gauss[neighbor-bla] = -0.5*(pownorm(zm,2)/sms[neighbor] + pownorm(zn,2)/sns[neighbor]
                + n_dim*log(sms[neighbor]) + (n-n_dim)*log(sns[neighbor])) - n/2.0 * Log2Pi - log_ref_set;
        }
        return logadd(log_gauss);
    }
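
The loop above skips the term for point i (the bla offset shifts the remaining terms down one slot), so the result is a leave-one-out estimate. It still subtracts log(reference_set.length()) rather than log(reference_set.length() - 1), so the N - 1 remaining weights sum to (N - 1)/N rather than 1. A minimal standalone sketch of the same computation, with the normalizer exposed as a parameter so either convention can be chosen; leave_one_out_log_density is a hypothetical name, and log_comp stands for the per-neighbor log terms before the - log N shift is applied.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Leave-one-out log-density:
//   log( (1/normalizer) * sum_{k != i} exp(log_comp[k]) )
// where log_comp[k] is the unweighted log-density of mixture term k at point i.
double leave_one_out_log_density(const std::vector<double>& log_comp,
                                 std::size_t i, std::size_t normalizer) {
    assert(i < log_comp.size() && normalizer > 0);
    double m = -std::numeric_limits<double>::infinity();
    for (std::size_t k = 0; k < log_comp.size(); ++k)
        if (k != i) m = std::max(m, log_comp[k]);  // skip the point's own term
    if (std::isinf(m)) return m;                    // nothing left to sum
    double s = 0.0;
    for (std::size_t k = 0; k < log_comp.size(); ++k)
        if (k != i) s += std::exp(log_comp[k] - m);
    return m + std::log(s) - std::log(static_cast<double>(normalizer));
}
```

Passing normalizer = N reproduces the listing; passing N - 1 makes the remaining weights sum to one.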

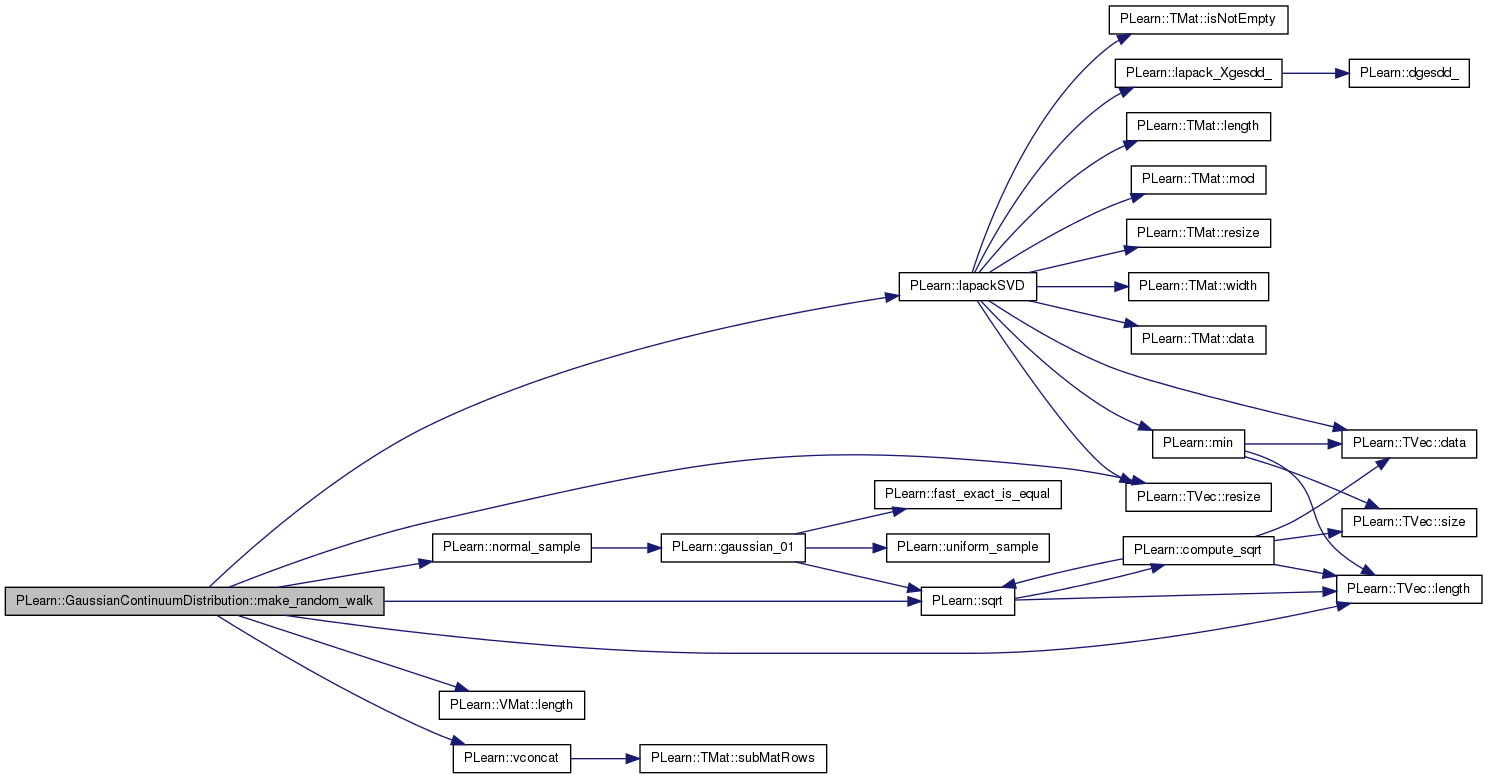

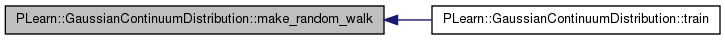

| void PLearn::GaussianContinuumDistribution::make_random_walk | ( | ) | [private] |

Definition at line 850 of file GaussianContinuumDistribution.cc.

References i, ith_step_generated_set, j, PLearn::lapackSVD(), PLearn::TVec< T >::length(), PLearn::VMat::length(), mu, n, n_dim, n_random_walk_per_point, n_random_walk_step, PLearn::normal_sample(), output_f_all, PLERROR, random_walk_step_prop, reference_set, PLearn::TVec< T >::resize(), S_svd, sm, sn, PLearn::sqrt(), svd_threshold, t_row, tangent_plane, PLearn::PLearner::train_set, Ut_svd, V_svd, PLearn::vconcat(), walk_on_noise, and z.

Referenced by train().

    {
        if(n_random_walk_step < 1) PLERROR("Number of step in random walk should be at least one");
        if(n_random_walk_per_point < 1) PLERROR("Number of random walk per training set point should be at least one");
        ith_step_generated_set.resize(n_random_walk_step);
        Mat generated_set(train_set.length()*n_random_walk_per_point,n);
        for(int t=0; t<train_set.length(); t++)
        {
            train_set->getRow(t,t_row);
            output_f_all(t_row);
            real this_sm = sm->value[0];
            real this_sn = sn->value[0];
            Vec this_mu(n); this_mu << mu->value;
            static Mat this_F(n_dim,n); this_F << tangent_plane->matValue;
            // N.B. this is the SVD of F'
            lapackSVD(this_F, Ut_svd, S_svd, V_svd,'A',1.5);
            for(int rwp=0; rwp<n_random_walk_per_point; rwp++)
            {
                TVec<real> z_m(n_dim);
                TVec<real> z(n);
                for(int i=0; i<n_dim; i++)
                    z_m[i] = normal_sample();
                for(int i=0; i<n; i++)
                    z[i] = normal_sample();
                Vec new_point = generated_set(t*n_random_walk_per_point+rwp);
                for(int j=0; j<n; j++)
                {
                    new_point[j] = 0;
                    for(int k=0; k<n_dim; k++)
                        new_point[j] += Ut_svd(k,j)*z_m[k];
                    new_point[j] *= sqrt(this_sm-this_sn);
                    if(walk_on_noise)
                        new_point[j] += z[j]*sqrt(this_sn);
                }
                new_point *= random_walk_step_prop;
                new_point += this_mu + t_row;
            }
        }
        // Test of generation of random points
        /*
        int n_test_gen_points = 3;
        int n_test_gen_generated = 30;
        Mat test_gen(n_test_gen_points*n_test_gen_generated,n);
        for(int p=0; p<n_test_gen_points; p++)
        {
            for(int t=0; t<n_test_gen_generated; t++)
            {
                valid_set->getRow(p,t_row);
                output_f_all(t_row);
                real this_sm = sm->value[0];
                real this_sn = sn->value[0];
                Vec this_mu(n); this_mu << mu->value;
                static Mat this_F(n_dim,n); this_F << tangent_plane->matValue;
                // N.B. this is the SVD of F'
                lapackSVD(this_F, Ut_svd, S_svd, V_svd);
                TVec<real> z_m(n_dim);
                TVec<real> z(n);
                for(int i=0; i<n_dim; i++)
                    z_m[i] = normal_sample();
                for(int i=0; i<n; i++)
                    z[i] = normal_sample();
                Vec new_point = test_gen(p*n_test_gen_generated+t);
                for(int j=0; j<n; j++)
                {
                    new_point[j] = 0;
                    for(int k=0; k<n_dim; k++)
                        new_point[j] += Ut_svd(k,j)*z_m[k];
                    new_point[j] *= sqrt(this_sm-this_sn);
                    if(walk_on_noise)
                        new_point[j] += z[j]*sqrt(this_sn);
                }
                new_point += this_mu + t_row;
            }
        }
        PLearn::save("test_gen.psave",test_gen);
        */
        //PLearn::save("gen_points_0.psave",generated_set);
        ith_step_generated_set[0] = VMat(generated_set);
        for(int step=1; step<n_random_walk_step; step++)
        {
            Mat generated_set(ith_step_generated_set[step-1].length(),n);
            for(int t=0; t<ith_step_generated_set[step-1].length(); t++)
            {
                ith_step_generated_set[step-1]->getRow(t,t_row);
                output_f_all(t_row);
                real this_sm = sm->value[0];
                real this_sn = sn->value[0];
                Vec this_mu(n); this_mu << mu->value;
                static Mat this_F(n_dim,n); this_F << tangent_plane->matValue;
                // N.B. this is the SVD of F'
                lapackSVD(this_F, Ut_svd, S_svd, V_svd,'A',1.5);
                TVec<real> z_m(n_dim);
                TVec<real> z(n);
                for(int i=0; i<n_dim; i++)
                    z_m[i] = normal_sample();
                for(int i=0; i<n; i++)
                    z[i] = normal_sample();
                Vec new_point = generated_set(t);
                for(int j=0; j<n; j++)
                {
                    new_point[j] = 0;
                    for(int k=0; k<n_dim; k++)
                        if(S_svd[k] > svd_threshold)
                            new_point[j] += Ut_svd(k,j)*z_m[k];
                    new_point[j] *= sqrt(this_sm-this_sn);
                    if(walk_on_noise)
                        new_point[j] += z[j]*sqrt(this_sn);
                }
                new_point *= random_walk_step_prop;
                new_point += this_mu + t_row;
            }
            /*
            string path = " ";
            if(step == n_random_walk_step-1)
                path = "gen_points_last.psave";
            else
                path = "gen_points_" + tostring(step) + ".psave";
            PLearn::save(path,generated_set);
            */
            ith_step_generated_set[step] = VMat(generated_set);
        }
        reference_set = vconcat(train_set & ith_step_generated_set);
        // Single random walk
        /*
        Mat single_walk_set(100,n);
        train_set->getRow(train_set.length()-1,single_walk_set(0));
        for(int step=1; step<100; step++)
        {
            t_row << single_walk_set(step-1);
            output_f_all(t_row);
            real this_sm = sm->value[0];
            real this_sn = sn->value[0];
            Vec this_mu(n); this_mu << mu->value;
            static Mat this_F(n_dim,n); this_F << tangent_plane->matValue;
            // N.B. this is the SVD of F'
            lapackSVD(this_F, Ut_svd, S_svd, V_svd);
            TVec<real> z_m(n_dim);
            TVec<real> z(n);
            for(int i=0; i<n_dim; i++)
                z_m[i] = normal_sample();
            for(int i=0; i<n; i++)
                z[i] = normal_sample();
            Vec new_point = single_walk_set(step);
            for(int j=0; j<n; j++)
            {
                new_point[j] = 0;
                for(int k=0; k<n_dim; k++)
                    if(S_svd[k] > svd_threshold)
                        new_point[j] += Ut_svd(k,j)*z_m[k];
                new_point[j] *= sqrt(this_sm-this_sn);
                if(walk_on_noise)
                    new_point[j] += z[j]*sqrt(this_sn);
            }
            new_point *= random_walk_step_prop;
            new_point += this_mu + t_row;
        }
        PLearn::save("image_single_rw.psave",single_walk_set);
        */
    }
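
Each generated point in the loops above is x + mu plus a Gaussian step scaled by random_walk_step_prop: variance sm - sn along each of the n_dim tangent directions (the first rows of Ut_svd), plus, when walk_on_noise is set, isotropic variance sn in every direction. With walk_on_noise on, that gives variance sm inside the tangent plane and sn orthogonal to it, the same split used in log_density(). The standalone sketch below restates one step. random_walk_step is a hypothetical name; std::mt19937 and std::normal_distribution stand in for PLearn's normal_sample(); and the svd_threshold filter is applied uniformly, whereas the listing only applies it in the later steps (the first pass over train_set sums all n_dim directions). It requires sm >= sn, which the sm_bigger_than_sn option presumably ensures.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: Ut[k][j]

// One random-walk step from x, following the loops above:
//   new = step_prop * ( sqrt(sm - sn) * sum_k Ut[k] * z_m[k]
//                       + [walk_on_noise] * sqrt(sn) * z ) + mu + x
// Ut holds the n_dim tangent directions (rows of length n), S the matching
// singular values; directions with S[k] <= svd_threshold are skipped.
Vec random_walk_step(const Vec& x, const Vec& mu, const Mat& Ut, const Vec& S,
                     double sm, double sn, double step_prop, bool walk_on_noise,
                     double svd_threshold, std::mt19937& rng) {
    const std::size_t n = x.size();
    const std::size_t d = Ut.size();
    assert(mu.size() == n && S.size() == d && sm >= sn && sn >= 0.0);
    std::normal_distribution<double> normal(0.0, 1.0);
    Vec z_m(d), z(n);
    for (double& v : z_m) v = normal(rng);  // coordinates in the tangent plane
    for (double& v : z) v = normal(rng);    // isotropic noise
    Vec out(n);
    for (std::size_t j = 0; j < n; ++j) {
        double t = 0.0;
        for (std::size_t k = 0; k < d; ++k) {
            assert(Ut[k].size() == n);
            if (S[k] > svd_threshold) t += Ut[k][j] * z_m[k];
        }
        t *= std::sqrt(sm - sn);
        if (walk_on_noise) t += z[j] * std::sqrt(sn);
        out[j] = step_prop * t + mu[j] + x[j];
    }
    return out;
}
```

Setting sm == sn with walk_on_noise off collapses the step to the predicted offset, returning exactly mu + x, which makes a convenient sanity check.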

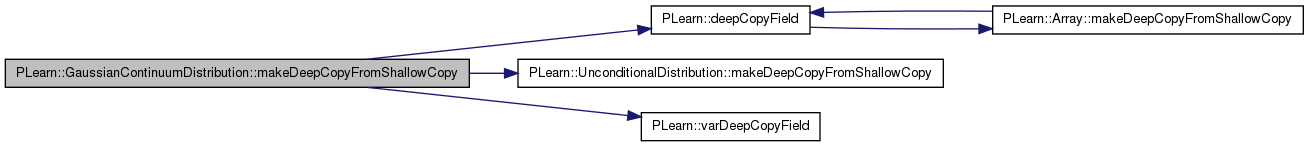

| void PLearn::GaussianContinuumDistribution::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 1117 of file GaussianContinuumDistribution.cc.

References b, Bs, c, cost_of_one_example, PLearn::deepCopyField(), dist, embedding, Fs, ith_step_generated_set, log_gauss, PLearn::UnconditionalDistribution::makeDeepCopyFromShallowCopy(), mu, mu_noisy, mus, muV, noise_var, noisy_data, optimizer, output_embedding, output_f, output_f_all, parameters, predictor, projection_error_f, reference_set, S_svd, sm, smb, sms, smV, sn, snb, sns, snV, sum_nll, tangent_plane, tangent_targets, tangent_targets_and_point, train_nearest_neighbors, Ut_svd, V, V_svd, PLearn::varDeepCopyField(), W, w_mat, and x.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(cost_of_one_example, copies);
        deepCopyField(reference_set,copies);
        varDeepCopyField(x, copies);
        varDeepCopyField(noise_var, copies);
        varDeepCopyField(b, copies);
        varDeepCopyField(W, copies);
        varDeepCopyField(c, copies);
        varDeepCopyField(V, copies);
        varDeepCopyField(tangent_targets, copies);
        varDeepCopyField(muV, copies);
        varDeepCopyField(smV, copies);
        varDeepCopyField(smb, copies);
        varDeepCopyField(snV, copies);
        varDeepCopyField(snb, copies);
        varDeepCopyField(mu, copies);
        varDeepCopyField(sm, copies);
        varDeepCopyField(sn, copies);
        varDeepCopyField(mu_noisy, copies);
        varDeepCopyField(tangent_plane, copies);
        varDeepCopyField(tangent_targets_and_point, copies);
        varDeepCopyField(sum_nll, copies);
        // varDeepCopyField(min_sig, copies);
        // varDeepCopyField(min_d, copies);
        varDeepCopyField(embedding, copies);
        deepCopyField(dist, copies);
        deepCopyField(ith_step_generated_set, copies);
        deepCopyField(train_nearest_neighbors, copies);
        deepCopyField(Bs, copies);
        deepCopyField(Fs, copies);
        deepCopyField(mus, copies);
        deepCopyField(sms, copies);
        deepCopyField(sns, copies);
        deepCopyField(Ut_svd, copies);
        deepCopyField(V_svd, copies);
        deepCopyField(S_svd, copies);
        //deepCopyField(dk, copies);
        deepCopyField(parameters, copies);
        deepCopyField(optimizer, copies);
        deepCopyField(predictor, copies);
        deepCopyField(output_f, copies);
        deepCopyField(output_f_all, copies);
        deepCopyField(projection_error_f, copies);
        deepCopyField(noisy_data, copies);
        deepCopyField(output_embedding, copies);
        // TODO : NB: It is not complete !
        deepCopyField(log_gauss, copies);
        deepCopyField(w_mat, copies);
    }
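
Each deepCopyField()/varDeepCopyField() call above replaces a field that the shallow copy still shares with the original by a private copy, and the copies map threaded through the calls lets two fields that referenced the same object keep referencing one common copy. The toy illustration below shows that pattern only. CopiesMap here is a hypothetical std::map from original address to copy (PLearn's own CopiesMap may be declared differently), and ToyLearner and deep_copy_field are illustrative names, not PLearn's. As the TODO in the listing says, the list is not complete: fields never passed to a deepCopyField variant (distances, t_nn and t_row, for instance) presumably stay shared with the original.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for a copies map: original address -> its copy.
using CopiesMap = std::map<const void*, std::shared_ptr<void>>;

// Replace a shared field by a deep copy, reusing an earlier copy when the
// same object was already copied through another field.
template <class T>
std::shared_ptr<T> deep_copy_field(const std::shared_ptr<T>& field, CopiesMap& copies) {
    if (!field) return nullptr;
    auto it = copies.find(field.get());
    if (it != copies.end()) return std::static_pointer_cast<T>(it->second);
    auto copy = std::make_shared<T>(*field);
    copies[field.get()] = copy;
    return copy;
}

// Toy learner whose two fields may point to the same buffer.
struct ToyLearner {
    std::shared_ptr<std::vector<double>> a, b;
    void makeDeepCopyFromShallowCopy(CopiesMap& copies) {
        a = deep_copy_field(a, copies);
        b = deep_copy_field(b, copies);
    }
};
```

Usage: copy the object member-wise (a shallow copy that still shares buffers), then call makeDeepCopyFromShallowCopy() on the copy with a fresh map.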

| int PLearn::GaussianContinuumDistribution::outputsize | ( | ) | const [virtual] |

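The returned value depends on outputs_def: when its first character is 'm', the output size is n_dim; otherwise the inherited outputsize() is used.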

Reimplemented from PLearn::PDistribution.

Definition at line 1408 of file GaussianContinuumDistribution.cc.

References n_dim, PLearn::PDistribution::outputs_def, and PLearn::PDistribution::outputsize().

    {
        switch(outputs_def[0])
        {
        case 'm':
            return n_dim;
            break;
        default:
            return inherited::outputsize();
        }
    }

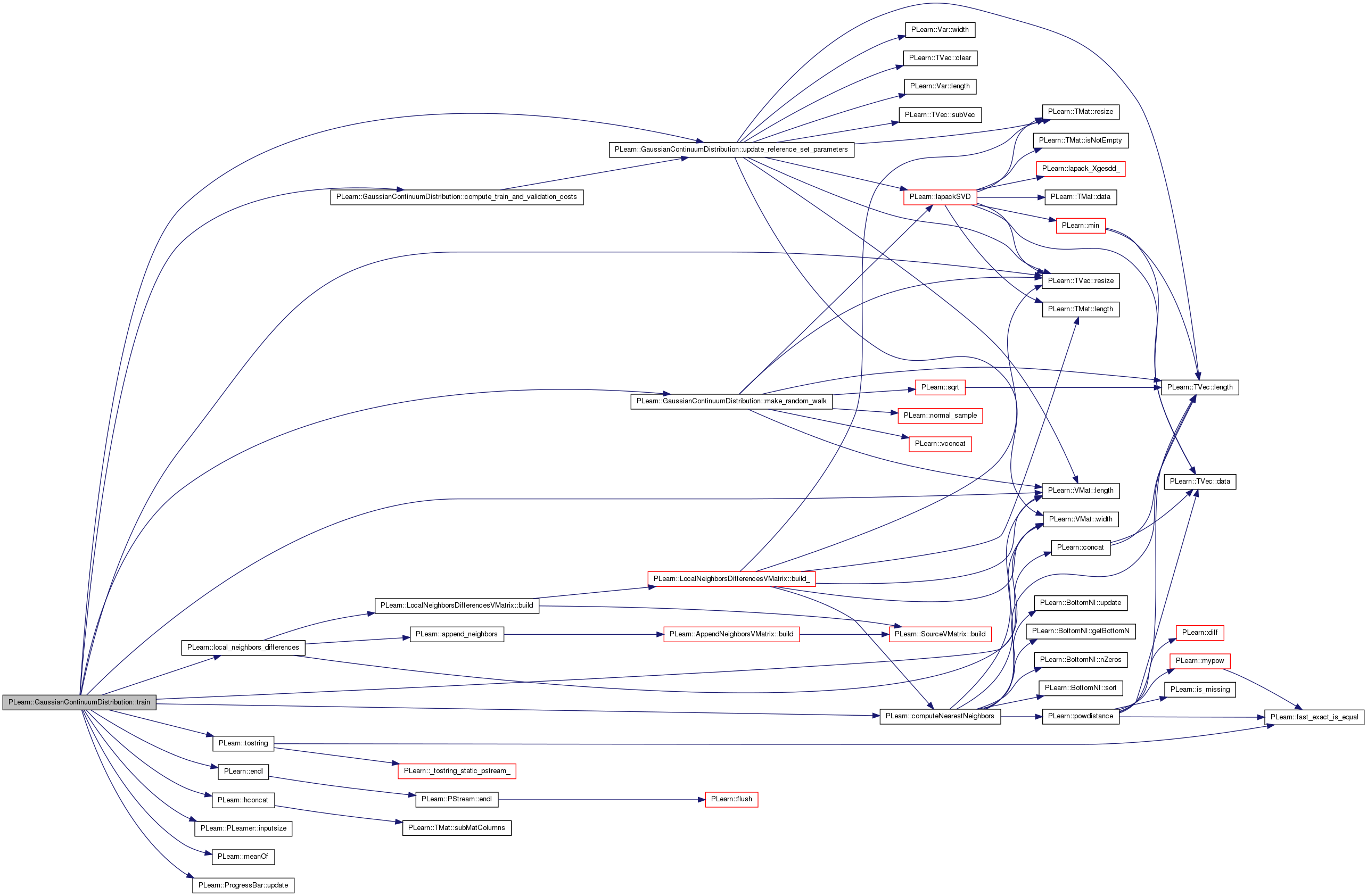

| void PLearn::GaussianContinuumDistribution::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Reimplemented from PLearn::PDistribution.

Definition at line 1180 of file GaussianContinuumDistribution.cc.

References batch_size, compute_train_and_validation_costs(), PLearn::computeNearestNeighbors(), cost_of_one_example, PLearn::endl(), PLearn::hconcat(), PLearn::PLearner::inputsize(), PLearn::VMat::length(), PLearn::local_neighbors_differences(), make_random_walk(), PLearn::meanOf(), n_neighbors, n_random_walk_step, PLearn::PLearner::nstages, optimizer, parameters, PLERROR, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), PLearn::PLearner::stage, t_row, PLearn::tostring(), train_nearest_neighbors, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::ProgressBar::update(), update_parameters_every_n_epochs, update_reference_set_parameters(), PLearn::PLearner::verbosity, and PLearn::VMat::width().

    {
        // Set train_stats if not already done.
        if (!train_stats)
            train_stats = new VecStatsCollector();
        // find nearest neighbors...
        // ... on the training set
        if(stage == 0)
            for(int t=0; t<train_set.length(); t++)
            {
                train_set->getRow(t,t_row);
                TVec<int> nn = train_nearest_neighbors(t);
                computeNearestNeighbors(train_set, t_row, nn, t);
            }
        VMat train_set_with_targets;
        VMat targets_vmat;
        if (!cost_of_one_example)
            PLERROR("GaussianContinuumDistribution::train: build has not been run after setTrainingSet!");
        targets_vmat = local_neighbors_differences(train_set, n_neighbors, false, true);
        train_set_with_targets = hconcat(train_set, targets_vmat);
        train_set_with_targets->defineSizes(inputsize()+inputsize()*n_neighbors+1+n_neighbors,0);
        int l = train_set->length();
        //log_n_examples->value[0] = log(real(l));
        int nsamples = batch_size>0 ? batch_size : l;
        Var totalcost = meanOf(train_set_with_targets, cost_of_one_example, nsamples);
        if(optimizer)
        {
            optimizer->setToOptimize(parameters, totalcost);
            optimizer->build();
        }
        else PLERROR("GaussianContinuumDistribution::train can't train without setting an optimizer first!");
        // number of optimizer stages corresponding to one learner stage (one epoch)
        int optstage_per_lstage = l/nsamples;
        ProgressBar* pb = 0;
        if(report_progress>0)
            pb = new ProgressBar("Training GaussianContinuumDistribution from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);
        t_row.resize(train_set.width());
        int initial_stage = stage;
        bool early_stop=false;
        while(stage<nstages && !early_stop)
        {
            optimizer->nstages = optstage_per_lstage;
            train_stats->forget();
            optimizer->early_stop = false;
            optimizer->optimizeN(*train_stats);
            train_stats->finalize();
            if(verbosity>2)
                cout << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;
            ++stage;
            if(pb)
                pb->update(stage-initial_stage);
            if(stage != 0 && stage%update_parameters_every_n_epochs == 0)
            {
                compute_train_and_validation_costs();
            }
        }
        if(verbosity>1)
            cout << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;
        if(pb)
            delete pb;
        update_reference_set_parameters();
        if(n_random_walk_step > 0)
        {
            make_random_walk();
            update_reference_set_parameters();
        }
    }
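
In train(), one learner stage is one epoch: each optimizer step averages the cost over nsamples examples (the whole training set when batch_size is not positive), the optimizer runs l/nsamples steps per epoch, and compute_train_and_validation_costs() runs every update_parameters_every_n_epochs epochs. The local early_stop flag is never set inside the loop, so training always runs until stage reaches nstages. A small sketch of that bookkeeping; Schedule, make_schedule and refresh_after are hypothetical names. Integer division drops the remainder, so with l = 103 and batch_size = 10 an epoch is 10 optimizer steps of 10 examples each, and a batch_size larger than l would give 0 steps per epoch.

```cpp
#include <cassert>

struct Schedule {
    int nsamples;             // examples averaged per optimizer step
    int optstage_per_lstage;  // optimizer steps per learner stage (epoch)
};

// Mirrors nsamples = batch_size>0 ? batch_size : l and
// optstage_per_lstage = l/nsamples from train().
Schedule make_schedule(int l, int batch_size) {
    assert(l > 0);
    const int nsamples = batch_size > 0 ? batch_size : l;
    return Schedule{nsamples, l / nsamples};
}

// True when the freshly incremented stage triggers
// compute_train_and_validation_costs().
bool refresh_after(int stage, int every_n_epochs) {
    assert(every_n_epochs > 0);  // the original computes stage % every_n_epochs
    return stage != 0 && stage % every_n_epochs == 0;
}
```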

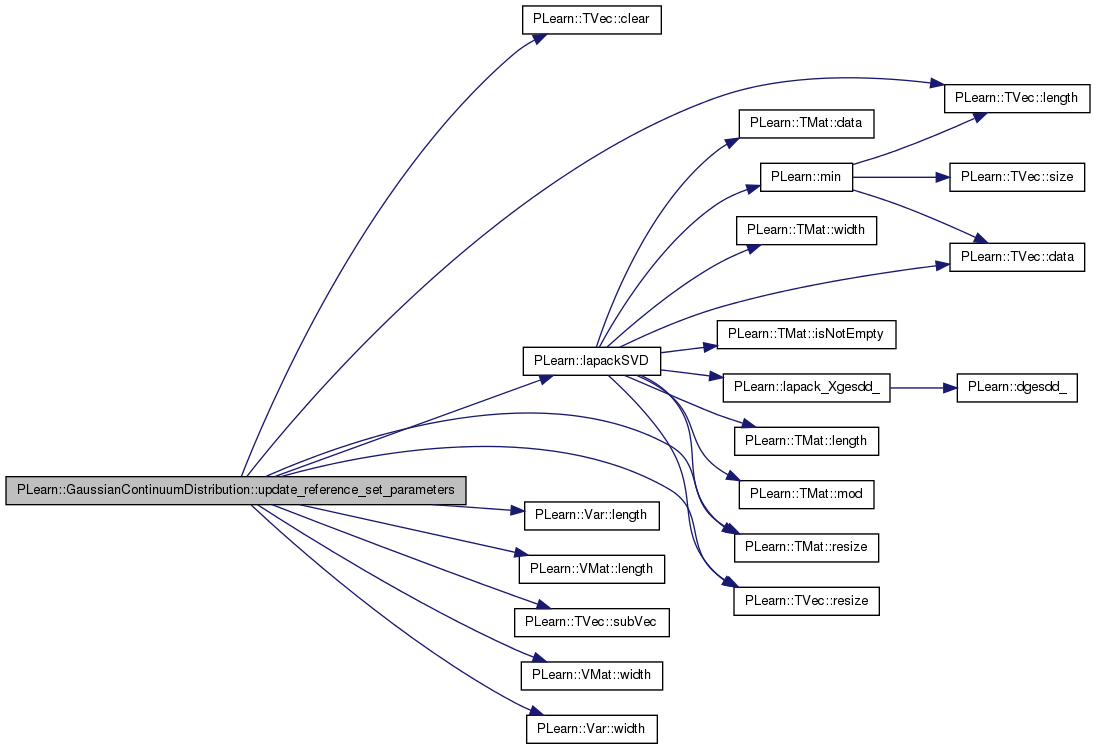

| void PLearn::GaussianContinuumDistribution::update_reference_set_parameters | ( | ) | [private] |

Definition at line 778 of file GaussianContinuumDistribution.cc.

References Bs, PLearn::TVec< T >::clear(), Fs, i, j, PLearn::lapackSVD(), PLearn::Var::length(), PLearn::TVec< T >::length(), PLearn::VMat::length(), mus, n, n_dim, predictor, reference_set, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), S_svd, sms, sns, PLearn::TVec< T >::subVec(), svd_threshold, t_row, tangent_plane, Ut_svd, V_svd, PLearn::VMat::width(), and PLearn::Var::width().

Referenced by compute_train_and_validation_costs(), and train().

    {
        // Compute Fs, Bs, mus, sms, sns
        Bs.resize(reference_set.length());
        Fs.resize(reference_set.length());
        mus.resize(reference_set.length(), n);
        sms.resize(reference_set.length());
        sns.resize(reference_set.length());
        for(int t=0; t<reference_set.length(); t++)
        {
            Fs[t].resize(tangent_plane.length(), tangent_plane.width());
            reference_set->getRow(t,t_row);
            predictor->fprop(t_row, Fs[t].toVec() & mus(t) & sms.subVec(t,1) & sns.subVec(t,1));
            // computing B
            static Mat F_copy;
            F_copy.resize(Fs[t].length(),Fs[t].width());
            F_copy << Fs[t];
            // N.B. this is the SVD of F'
            lapackSVD(F_copy, Ut_svd, S_svd, V_svd,'A',1.5);
            Bs[t].resize(n_dim,reference_set.width());
            Bs[t].clear();
            for (int k=0;k<S_svd.length();k++)
            {
                real s_k = S_svd[k];
                if (s_k>svd_threshold) // ignore the components that have too small singular value (more robust solution)
                {
                    real coef = 1/s_k;
                    for (int i=0;i<n_dim;i++)
                    {
                        real* Bi = Bs[t][i];
                        for (int j=0;j<n;j++)
                            Bi[j] += V_svd(i,k)*Ut_svd(k,j)*coef;
                    }
                }
            }
        }
        /*
        for(int t=0; t<train_set.length(); t++)
        {
            //train_set->getRow(t,t_row);
            p_x->value[t] = log_density(t);
            //p_x->value[t] = exp(log_density(t));
        }
        */
    }
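
The inner loop builds Bs[t] as a truncated pseudo-inverse of F' (the transpose of the tangent-plane matrix Fs[t]): reading the indexing, the factors satisfy F' = Ut_svd' diag(S_svd) V_svd', and the loop accumulates B(i,j) = sum over k of V_svd(i,k) Ut_svd(k,j) / s_k, keeping only singular values s_k > svd_threshold. log_density() then uses w = B z as local coordinates and zm = F' w as the part of z lying in the tangent plane. A standalone sketch of the accumulation that takes the SVD factors as given (no LAPACK call); truncated_pinv_from_svd is a hypothetical name, and the factor layout (V with n_dim rows, Ut with rows of length n) is an assumption matching the indexing above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Mat = std::vector<std::vector<double>>;  // row-major

// B(i,j) = sum over k with S[k] > threshold of V(i,k) * Ut(k,j) / S[k].
// V: n_dim rows (V_svd); S: singular values (S_svd);
// Ut: at least S.size() rows of length n (Ut_svd).
Mat truncated_pinv_from_svd(const Mat& V, const std::vector<double>& S,
                            const Mat& Ut, double threshold) {
    assert(Ut.size() >= S.size());
    const std::size_t n_dim = V.size();
    const std::size_t n = Ut.empty() ? 0 : Ut[0].size();
    Mat B(n_dim, std::vector<double>(n, 0.0));
    for (std::size_t k = 0; k < S.size(); ++k) {
        if (S[k] <= threshold) continue;  // drop near-singular directions
        const double coef = 1.0 / S[k];
        for (std::size_t i = 0; i < n_dim; ++i) {
            assert(V[i].size() > k && Ut[k].size() == n);
            for (std::size_t j = 0; j < n; ++j)
                B[i][j] += V[i][k] * Ut[k][j] * coef;
        }
    }
    return B;
}
```

For example, F = [[2, 0, 0], [0, 0.25, 0]] has V_svd = I2, Ut_svd = I3 and singular values {2, 0.25}, so B = [[0.5, 0, 0], [0, 4, 0]]; raising the threshold above 0.25 zeroes the second row.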

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 219 of file GaussianContinuumDistribution.h.

Definition at line 160 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), and initializeParams().

Var PLearn::GaussianContinuumDistribution::b [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Definition at line 164 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and train().

Definition at line 104 of file GaussianContinuumDistribution.h.

TVec< Mat > PLearn::GaussianContinuumDistribution::Bs [protected] |

Definition at line 90 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), getEigenvectors(), log_density(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Var PLearn::GaussianContinuumDistribution::c [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Definition at line 68 of file GaussianContinuumDistribution.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

PP<PDistribution> PLearn::GaussianContinuumDistribution::dist [protected] |

Definition at line 82 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Mat PLearn::GaussianContinuumDistribution::distances [mutable, protected] |

Definition at line 100 of file GaussianContinuumDistribution.h.

Referenced by knn().

DistanceKernel PLearn::GaussianContinuumDistribution::dk [mutable, protected] |

Definition at line 102 of file GaussianContinuumDistribution.h.

Referenced by knn().

Definition at line 151 of file GaussianContinuumDistribution.h.

Referenced by build_(), computeOutput(), and makeDeepCopyFromShallowCopy().

Definition at line 80 of file GaussianContinuumDistribution.h.

Referenced by build_().

Definition at line 140 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Definition at line 80 of file GaussianContinuumDistribution.h.

Referenced by build_().

Definition at line 139 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

TVec< Mat > PLearn::GaussianContinuumDistribution::Fs [protected] |

Definition at line 90 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Definition at line 127 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), and knn().

Definition at line 85 of file GaussianContinuumDistribution.h.

Referenced by make_random_walk(), and makeDeepCopyFromShallowCopy().

To store log(P(x|k)).

Definition at line 116 of file GaussianContinuumDistribution.h.

Referenced by log_density(), and makeDeepCopyFromShallowCopy().

Var PLearn::GaussianContinuumDistribution::min_d [protected] |

Definition at line 79 of file GaussianContinuumDistribution.h.

Referenced by build_(), and initializeParams().

Definition at line 138 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and initializeParams().

Definition at line 141 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::min_sig [protected] |

Definition at line 79 of file GaussianContinuumDistribution.h.

Referenced by build_(), and initializeParams().

Definition at line 137 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and initializeParams().

Var PLearn::GaussianContinuumDistribution::mu [protected] |

Definition at line 76 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), and makeDeepCopyFromShallowCopy().

Definition at line 145 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::mu_noisy [protected] |

Definition at line 76 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Mat PLearn::GaussianContinuumDistribution::mus [protected] |

Definition at line 91 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Var PLearn::GaussianContinuumDistribution::muV [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

int PLearn::GaussianContinuumDistribution::n [protected] |

Definition at line 67 of file GaussianContinuumDistribution.h.

Referenced by build_(), knn(), log_density(), make_random_walk(), and update_reference_set_parameters().

Definition at line 146 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), log_density(), make_random_walk(), outputsize(), and update_reference_set_parameters().

Definition at line 162 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 143 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), and train().

Definition at line 144 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), and log_density().

Definition at line 134 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and make_random_walk().

Definition at line 133 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), make_random_walk(), and train().

Definition at line 77 of file GaussianContinuumDistribution.h.

Vec PLearn::GaussianContinuumDistribution::neighbor_row [mutable, protected] |

Definition at line 98 of file GaussianContinuumDistribution.h.

Referenced by build_(), and log_density().

Definition at line 131 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Definition at line 132 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::noise_var [protected] |

Definition at line 70 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 157 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 166 of file GaussianContinuumDistribution.h.

Definition at line 150 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 152 of file GaussianContinuumDistribution.h.

Referenced by build_(), computeOutput(), and makeDeepCopyFromShallowCopy().

Definition at line 153 of file GaussianContinuumDistribution.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 154 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), and makeDeepCopyFromShallowCopy().

Definition at line 161 of file GaussianContinuumDistribution.h.

Definition at line 77 of file GaussianContinuumDistribution.h.

Referenced by build_().

Definition at line 77 of file GaussianContinuumDistribution.h.

Referenced by build_().

Var PLearn::GaussianContinuumDistribution::p_target [protected] |

Definition at line 77 of file GaussianContinuumDistribution.h.

Referenced by build_().

Var PLearn::GaussianContinuumDistribution::p_x [protected] |

Definition at line 77 of file GaussianContinuumDistribution.h.

Referenced by build_(), and initializeParams().

Definition at line 111 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 155 of file GaussianContinuumDistribution.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Definition at line 156 of file GaussianContinuumDistribution.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 128 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and make_random_walk().

Definition at line 118 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), getTrainPoint(), log_density(), make_random_walk(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Vec PLearn::GaussianContinuumDistribution::S_svd [protected] |

Definition at line 96 of file GaussianContinuumDistribution.h.

Referenced by make_random_walk(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Definition at line 135 of file GaussianContinuumDistribution.h.

Definition at line 147 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::sm [protected] |

Definition at line 76 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), and makeDeepCopyFromShallowCopy().

Definition at line 142 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::smb [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Vec PLearn::GaussianContinuumDistribution::sms [protected] |

Definition at line 92 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Var PLearn::GaussianContinuumDistribution::smV [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Var PLearn::GaussianContinuumDistribution::sn [protected] |

Definition at line 76 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), and makeDeepCopyFromShallowCopy().

Var PLearn::GaussianContinuumDistribution::snb [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Vec PLearn::GaussianContinuumDistribution::sns [protected] |

Definition at line 93 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Var PLearn::GaussianContinuumDistribution::snV [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Var PLearn::GaussianContinuumDistribution::sum_nll [protected] |

Definition at line 78 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 167 of file GaussianContinuumDistribution.h.

Referenced by build_(), declareOptions(), make_random_walk(), and update_reference_set_parameters().

Vec PLearn::GaussianContinuumDistribution::t_dist [mutable, protected] |

Definition at line 99 of file GaussianContinuumDistribution.h.

Referenced by knn().

TVec<int> PLearn::GaussianContinuumDistribution::t_nn [mutable] |

Definition at line 115 of file GaussianContinuumDistribution.h.

Referenced by log_density().

Vec PLearn::GaussianContinuumDistribution::t_row [mutable, protected] |

Definition at line 98 of file GaussianContinuumDistribution.h.

Referenced by build_(), log_density(), make_random_walk(), train(), and update_reference_set_parameters().

Definition at line 75 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Definition at line 74 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 74 of file GaussianContinuumDistribution.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 77 of file GaussianContinuumDistribution.h.

Referenced by build_().

Definition at line 88 of file GaussianContinuumDistribution.h.

Definition at line 89 of file GaussianContinuumDistribution.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 148 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and train().

Definition at line 129 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Definition at line 130 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Mat PLearn::GaussianContinuumDistribution::Ut_svd [protected] |

Definition at line 95 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Var PLearn::GaussianContinuumDistribution::V [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Mat PLearn::GaussianContinuumDistribution::V_svd [protected] |

Definition at line 95 of file GaussianContinuumDistribution.h.

Referenced by build_(), make_random_walk(), makeDeepCopyFromShallowCopy(), and update_reference_set_parameters().

Definition at line 149 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::W [protected] |

Definition at line 71 of file GaussianContinuumDistribution.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Vec PLearn::GaussianContinuumDistribution::w [mutable, protected] |

Definition at line 97 of file GaussianContinuumDistribution.h.

Referenced by build_(), and log_density().

To store local coordinates.

Definition at line 117 of file GaussianContinuumDistribution.h.

Referenced by log_density(), and makeDeepCopyFromShallowCopy().

Definition at line 136 of file GaussianContinuumDistribution.h.

Referenced by declareOptions(), and make_random_walk().

Definition at line 126 of file GaussianContinuumDistribution.h.

Referenced by build_(), and declareOptions().

Var PLearn::GaussianContinuumDistribution::x [protected] |

Definition at line 70 of file GaussianContinuumDistribution.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Vec PLearn::GaussianContinuumDistribution::x_minus_neighbor [mutable, protected] |

Definition at line 97 of file GaussianContinuumDistribution.h.

Referenced by build_(), and log_density().

Vec PLearn::GaussianContinuumDistribution::z [mutable, protected] |

Definition at line 97 of file GaussianContinuumDistribution.h.

Referenced by build_(), log_density(), and make_random_walk().

Vec PLearn::GaussianContinuumDistribution::zm [mutable, protected] |

Definition at line 97 of file GaussianContinuumDistribution.h.

Referenced by build_(), and log_density().

Vec PLearn::GaussianContinuumDistribution::zn [mutable, protected] |

Definition at line 97 of file GaussianContinuumDistribution.h.

Referenced by build_(), and log_density().

1.7.4