PLearn 0.1

#include <ConditionalDensityNet.h>

Definition at line 53 of file ConditionalDensityNet.h.

typedef PDistribution PLearn::ConditionalDensityNet::inherited [private] |

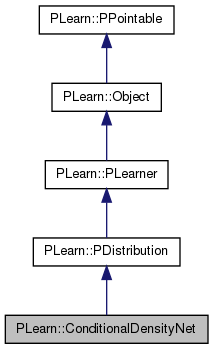

Reimplemented from PLearn::PDistribution.

Definition at line 58 of file ConditionalDensityNet.h.

| PLearn::ConditionalDensityNet::ConditionalDensityNet | ( | ) |

Definition at line 72 of file ConditionalDensityNet.cc.

: nhidden(0), nhidden2(0),

weight_decay(0), bias_decay(1e-6),

layer1_weight_decay(0), layer1_bias_decay(0),

layer2_weight_decay(0), layer2_bias_decay(0),

output_layer_weight_decay(0), output_layer_bias_decay(0),

direct_in_to_out_weight_decay(0),

penalty_type("L2_square"), L1_penalty(false),

direct_in_to_out(false),

batch_size(1),

c_penalization(0),

maxY(1), // if Y is normalized to be in interval [0,1], that would be OK

thresholdY(0.1),

log_likelihood_vs_squared_error_balance(1),

separate_mass_point(1),

n_output_density_terms(0),

generate_precision(1e-3),

steps_type("sloped_steps"),

centers_initialization("data"),

curve_positions("uniform"),

scale(5.0),

unconditional_p0(0.01),

mu_is_fixed(true),

initial_hardness(1)

{}

| string PLearn::ConditionalDensityNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

| OptionList & PLearn::ConditionalDensityNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

| RemoteMethodMap & PLearn::ConditionalDensityNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

| Object * PLearn::ConditionalDensityNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

| StaticInitializer ConditionalDensityNet::_static_initializer_ & PLearn::ConditionalDensityNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

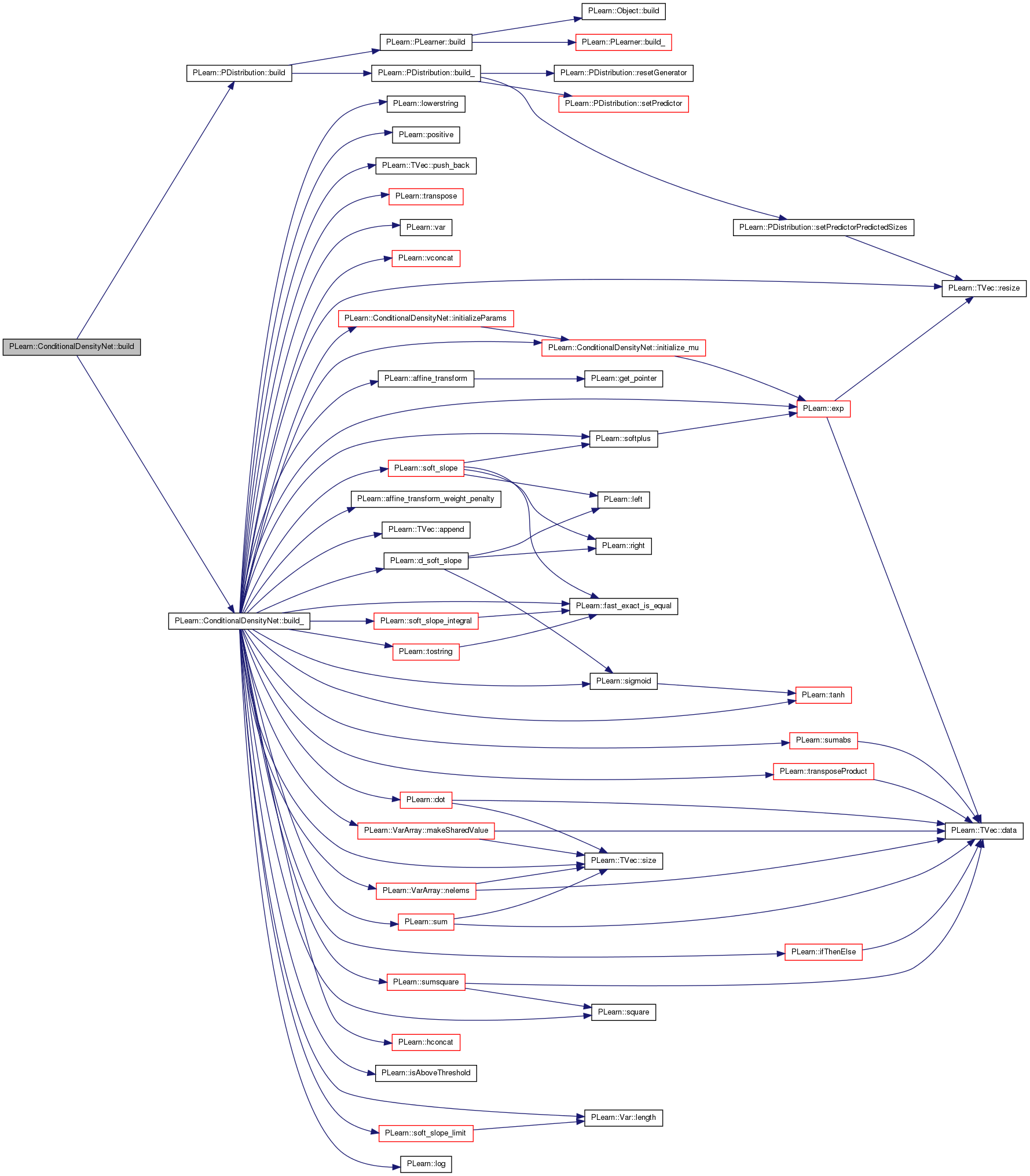

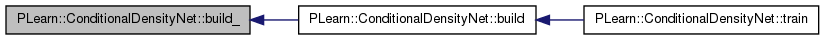

| void PLearn::ConditionalDensityNet::build | ( | ) | [virtual] |

Simply calls inherited::build(), then build_().

Reimplemented from PLearn::PDistribution.

Definition at line 774 of file ConditionalDensityNet.cc.

References PLearn::PDistribution::build(), and build_().

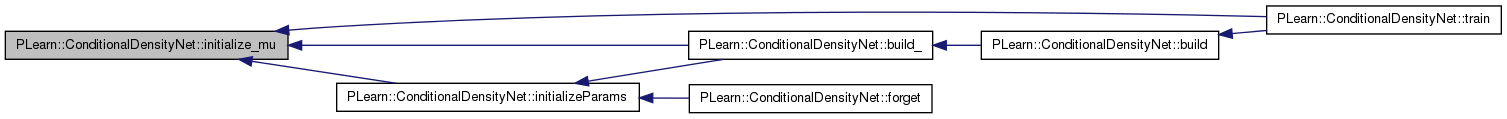

Referenced by train().

{

inherited::build();

build_();

}
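The two-step build convention above can be illustrated with a minimal, self-contained sketch. `BaseSketch` and `DerivedSketch` are hypothetical stand-ins, not PLearn classes; only the call order (parent build first, then the class's own private `build_()`) is taken from the listing.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for a parent class such as PDistribution.
struct BaseSketch {
    std::vector<std::string> log;  // records the order of build steps
    virtual ~BaseSketch() = default;
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("Base::build_"); }
};

// Hypothetical stand-in for a derived learner.
struct DerivedSketch : BaseSketch {
    typedef BaseSketch inherited;
    void build() override {
        inherited::build();  // let the parent finish its own construction
        build_();            // then do the work specific to this class
    }
private:
    // Non-virtual on purpose: each level of the hierarchy runs its own build_().
    void build_() { log.push_back("Derived::build_"); }
};
```

Calling `build()` on a `DerivedSketch` records `Base::build_` followed by `Derived::build_`, which is the ordering the listing relies on.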

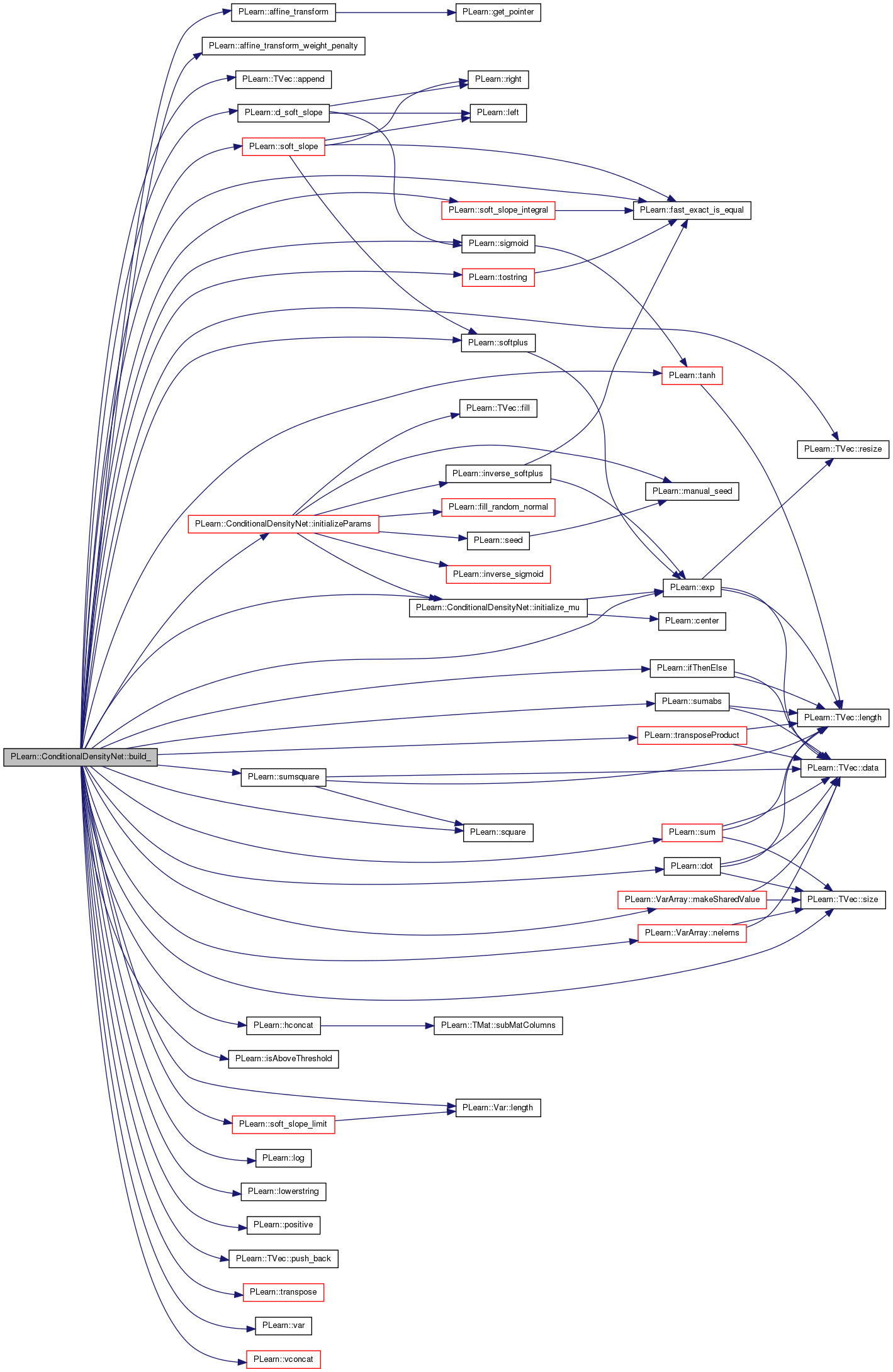

| void PLearn::ConditionalDensityNet::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PDistribution.

Definition at line 306 of file ConditionalDensityNet.cc.

References a, PLearn::affine_transform(), PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), b, bias_decay, c, c_penalization, cdf_f, centers, centers_M, costs, cum_denominator, cum_numerator, cumulative, curve_positions, PLearn::d_soft_slope(), delta_steps, density, density_f, direct_in_to_out, direct_in_to_out_weight_decay, PLearn::dot(), PLearn::exp(), expected_value, f, PLearn::fast_exact_is_equal(), PLearn::hconcat(), i, PLearn::ifThenElse(), in2distr_f, initial_hardness, initial_hardnesses, initialize_mu(), initializeParams(), input, PLearn::PLearner::inputsize_, invars, PLearn::isAboveThreshold(), j, L1_penalty, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::Var::length(), PLearn::log(), log_likelihood_vs_squared_error_balance, PLearn::PDistribution::lower_bound, PLearn::lowerstring(), PLearn::VarArray::makeSharedValue(), mass_cost, maxY, mean_f, mu, mu_is_fixed, PLearn::PDistribution::n_curve_points, n_output_density_terms, PLearn::VarArray::nelems(), nhidden, nhidden2, output, output_and_target, output_and_target_values, output_layer_bias_decay, output_layer_weight_decay, outputs, PLearn::PDistribution::outputs_def, params, paramsvalues, penalties, penalty_type, PLDEPRECATED, PLERROR, PLWARNING, pos_a, pos_b, pos_c, pos_y_cost, PLearn::positive(), PLearn::TVec< T >::push_back(), PLearn::TVec< T >::resize(), sampleweight, scale, separate_mass_point, PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::soft_slope(), PLearn::soft_slope_integral(), PLearn::soft_slope_limit(), PLearn::softplus(), PLearn::square(), steps, steps_0, steps_gradient, steps_integral, steps_M, steps_type, PLearn::sum(), PLearn::sumabs(), PLearn::sumsquare(), PLearn::tanh(), target, PLearn::PLearner::targetsize_, test_costf, test_costs, thresholdY, PLearn::tostring(), PLearn::PLearner::train_set, training_cost, PLearn::transpose(), PLearn::transposeProduct(), unconditional_cdf, unconditional_delta_cdf, 
PLearn::PDistribution::upper_bound, PLearn::var(), PLearn::vconcat(), w1, w2, wdirect, weight_decay, PLearn::PLearner::weightsize_, wout, and y_values.

Referenced by build().

{

if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)

{

lower_bound = 0;

upper_bound = maxY;

int n_output_parameters = mu_is_fixed?(1+n_output_density_terms*2):(1+n_output_density_terms*3);

if (n_curve_points<0)

n_curve_points = n_output_density_terms+1;

// init. basic vars

input = Var(n_input, "input");

output = input;

params.resize(0);

// first hidden layer

if(nhidden>0)

{

w1 = Var(1+n_input, nhidden, "w1");

output = tanh(affine_transform(output,w1));

params.append(w1);

}

// second hidden layer

if(nhidden2>0)

{

w2 = Var(1+nhidden, nhidden2, "w2");

output = tanh(affine_transform(output,w2));

params.append(w2);

}

if (nhidden2>0 && nhidden==0)

PLERROR("ConditionalDensityNet:: can't have nhidden2 (=%d) > 0 while nhidden=0",nhidden2);

if (nhidden==-1)

// special code meaning that the inputs should be ignored, only use biases

{

wout = Var(1, n_output_parameters, "wout");

output = transpose(wout);

}

// output layer before transfer function

else

{

wout = Var(1+output->size(), n_output_parameters, "wout");

output = affine_transform(output,wout);

}

params.append(wout);

// direct in-to-out layer

if(direct_in_to_out)

{

wdirect = Var(n_input, n_output_parameters, "wdirect");

//wdirect = Var(1+inputsize(), n_output_parameters, "wdirect");

output += transposeProduct(wdirect, input);// affine_transform(input,wdirect);

params.append(wdirect);

}

/*

* target and weights

*/

target = Var(n_target, "target");

if(weightsize_>0)

{

if (weightsize_!=1)

PLERROR("ConditionalDensityNet: expected weightsize to be 1 or 0 (or unspecified = -1, meaning 0), got %d",weightsize_);

sampleweight = Var(1, "weight");

}

// output = parameters of the Y distribution

int i=0;

a = output[i++]; a->setName("a");

//b = new SubMatVariable(output,0,i,1,n_output_density_terms);

b = new SubMatVariable(output,i,0,n_output_density_terms,1);

b->setName("b");

i+=n_output_density_terms;

//c = new SubMatVariable(output,0,i,1,n_output_density_terms);

c = new SubMatVariable(output,i,0,n_output_density_terms,1);

c->setName("c");

// we don't want to clear mu if this build is called

// just after a load(), because mu is a learnt option

if (!mu || (mu->length()!=n_output_density_terms && train_set))

{

if (mu_is_fixed)

//mu = Var(1,n_output_density_terms);

mu = Var(n_output_density_terms,1);

else

{

i+=n_output_density_terms;

//mu = new SubMatVariable(output,0,i,1,n_output_density_terms);

mu = new SubMatVariable(output,i,0,n_output_density_terms,1);

}

}

mu->setName("mu");

/*

* output density

*/

Var nll; // negative log likelihood

Var max_y = var(maxY);

Var left_side = vconcat(var(0.0) & (new SubMatVariable(mu,0,0,n_output_density_terms-1,1)));

centers = target-mu;

centers_M = max_y-mu;

unconditional_cdf.resize(n_output_density_terms);

if (unconditional_delta_cdf)

{

// don't clear it if this build is called just after a load

if (unconditional_delta_cdf.length()!=n_output_density_terms)

unconditional_delta_cdf->resize(n_output_density_terms,1);

}

else

unconditional_delta_cdf = Var(n_output_density_terms,1);

initial_hardnesses = var(initial_hardness) / (mu - left_side);

pos_b = softplus(b)*unconditional_delta_cdf;

pos_c = softplus(c)*initial_hardnesses;

Var scaled_centers = pos_c*centers;

// scaled centers evaluated at target = M

Var scaled_centers_M = pos_c*centers_M;

// scaled centers evaluated at target = 0

Var scaled_centers_0 = -pos_c*mu;

Var lhopital, inverse_denominator, density_numerator;

if (separate_mass_point)

{

pos_a = sigmoid(a);

if (steps_type=="sigmoid_steps")

{

steps = sigmoid(scaled_centers);

// steps evaluated at target = M

steps_M = sigmoid(scaled_centers_M);

steps_0 = sigmoid(scaled_centers_0);

// derivative of steps wrt target

steps_gradient = pos_c*steps*(1-steps);

steps_integral = (softplus(scaled_centers_M) - softplus(scaled_centers_0))/pos_c;

delta_steps = centers_M*steps_M + mu*sigmoid(scaled_centers_0);

}

else if (steps_type=="sloped_steps")

{

steps = soft_slope(target, pos_c, left_side, mu);

steps_M = soft_slope(max_y, pos_c, left_side, mu);

steps_0 = soft_slope(var(0.0), pos_c, left_side, mu);

steps_gradient = d_soft_slope(target, pos_c, left_side, mu);

steps_integral = soft_slope_integral(pos_c,left_side,mu,0.0,maxY);

delta_steps = soft_slope_limit(target, pos_c, left_side, mu);

}

else PLERROR("ConditionalDensityNet::build, steps_type option value unknown: %s",steps_type.c_str());

density_numerator = dot(pos_b,steps_gradient);

cum_denominator = dot(pos_b,positive(steps_M-steps_0));

inverse_denominator = 1.0/cum_denominator;

cum_numerator = dot(pos_b,(steps-steps_0));

cumulative = pos_a + (1-pos_a) * cum_numerator * inverse_denominator;

density = density_numerator * inverse_denominator; // this is the conditional density for Y>0

// apply l'hopital rule if pos_c --> 0 to avoid blow-up (N.B. lim_{pos_c->0} pos_b/pos_c*steps_integral = pos_b*delta_steps)

lhopital = ifThenElse(isAboveThreshold(pos_c,1e-20),steps_integral,delta_steps);

expected_value = max_y - ((pos_a-(1-pos_a)*inverse_denominator*dot(pos_b,steps_0))*max_y +

(1-pos_a)*dot(pos_b,lhopital)*inverse_denominator);

mass_cost = -log(ifThenElse(isAboveThreshold(target,0.0,1,0,true),(1-pos_a),pos_a));

pos_y_cost = ifThenElse(isAboveThreshold(target,0.0,1,0,true),-log(density),var(0.0));

nll = -log(ifThenElse(isAboveThreshold(target,0.0,1,0,true),density*(1-pos_a),pos_a));

}

else

{

pos_a = var(0.0);

if (steps_type=="sigmoid_steps")

{

steps = sigmoid(scaled_centers);

// steps evaluated at target = M

steps_M = sigmoid(scaled_centers_M);

steps_0 = sigmoid(scaled_centers_0);

// derivative of steps wrt target

steps_gradient = pos_c*steps*(1-steps);

steps_integral = (softplus(scaled_centers_M) - softplus(scaled_centers_0))/pos_c;

delta_steps = centers_M*steps_M + mu*sigmoid(scaled_centers_0);

}

else if (steps_type=="sloped_steps")

{

steps = soft_slope(target, pos_c, left_side, mu);

steps_M = soft_slope(max_y, pos_c, left_side, mu);

steps_0 = soft_slope(var(0.0), pos_c, left_side, mu);

steps_gradient = d_soft_slope(target, pos_c, left_side, mu);

steps_integral = soft_slope_integral(pos_c,left_side,mu,0.0,maxY);

delta_steps = soft_slope_limit(target, pos_c, left_side, mu);

}

else PLERROR("ConditionalDensityNet::build, steps_type option value unknown: %s",steps_type.c_str());

density_numerator = dot(pos_b,steps_gradient);

cum_denominator = dot(pos_b,steps_M - steps_0);

inverse_denominator = 1.0/cum_denominator;

cum_numerator = dot(pos_b,steps - steps_0);

cumulative = cum_numerator * inverse_denominator;

density = density_numerator * inverse_denominator;

// apply l'hopital rule if pos_c --> 0 to avoid blow-up (N.B. lim_{pos_c->0} pos_b/pos_c*steps_integral = pos_b*delta_steps)

lhopital = ifThenElse(isAboveThreshold(pos_c,1e-20),steps_integral,delta_steps);

expected_value = dot(pos_b,lhopital)*inverse_denominator;

nll = -log(ifThenElse(isAboveThreshold(target,0.0,1,0,true),density,cumulative));

}

max_y->setName("maxY");

left_side->setName("left_side");

pos_a->setName("pos_a");

pos_b->setName("pos_b");

pos_c->setName("pos_c");

steps->setName("steps");

steps_M->setName("steps_M");

steps_integral->setName("steps_integral");

expected_value->setName("expected_value");

density_numerator->setName("density_numerator");

cum_denominator->setName("cum_denominator");

inverse_denominator->setName("inverse_denominator");

cum_numerator->setName("cum_numerator");

cumulative->setName("cumulative");

density->setName("density");

lhopital->setName("lhopital");

/*

* cost functions:

* training_criterion = log_likelihood_vs_squared_error_balance*neg_log_lik

* +(1-log_likelihood_vs_squared_error_balance)*squared_err

* +penalties

* neg_log_lik = -log(1_{target=0} cumulative + 1_{target>0} density)

* squared_err = square(target - expected_value)

*/

costs.resize(3);

costs[1] = nll;

costs[2] = square(target-expected_value);

// for debugging gradient computation error

if (fast_exact_is_equal(log_likelihood_vs_squared_error_balance, 1))

costs[0] = costs[1];

else if (fast_exact_is_equal(log_likelihood_vs_squared_error_balance, 0))

costs[0] = costs[2];

else costs[0] = log_likelihood_vs_squared_error_balance*costs[1]+

(1-log_likelihood_vs_squared_error_balance)*costs[2];

if (c_penalization > 0) {

costs[0] = costs[0] + c_penalization * sumsquare(c);

}

// for debugging

//costs[0] = mass_cost + pos_y_cost;

//costs[1] = mass_cost;

//costs[2] = pos_y_cost;

/*

* weight and bias decay penalty

*/

if( L1_penalty )

{

PLDEPRECATED("Option \"L1_penalty\" deprecated. Please use \"penalty_type = L1\" instead.");

L1_penalty = 0;

penalty_type = "L1";

}

string pt = lowerstring( penalty_type );

if( pt == "l1" )

penalty_type = "L1";

else if( pt == "l1_square" || pt == "l1 square" || pt == "l1square" )

penalty_type = "L1_square";

else if( pt == "l2_square" || pt == "l2 square" || pt == "l2square" )

penalty_type = "L2_square";

else if( pt == "l2" )

{

PLWARNING("L2 penalty not supported, assuming you want L2 square");

penalty_type = "L2_square";

}

else

PLERROR("penalty_type \"%s\" not supported", penalty_type.c_str());

// create penalties

penalties.resize(0); // prevents penalties from being added twice by consecutive builds

if(w1 && (!fast_exact_is_equal(layer1_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(layer1_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(w1, (layer1_weight_decay + weight_decay), (layer1_bias_decay + bias_decay), penalty_type));

if(w2 && (!fast_exact_is_equal(layer2_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(layer2_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(w2, (layer2_weight_decay + weight_decay), (layer2_bias_decay + bias_decay), penalty_type));

if(wout && (!fast_exact_is_equal(output_layer_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(output_layer_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(wout, (output_layer_weight_decay + weight_decay),

(output_layer_bias_decay + bias_decay), penalty_type));

if(wdirect && !fast_exact_is_equal(direct_in_to_out_weight_decay + weight_decay, 0))

{

if (penalty_type == "L1_square")

penalties.append(square(sumabs(wdirect))*(direct_in_to_out_weight_decay + weight_decay));

else if (penalty_type == "L1")

penalties.append(sumabs(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

else if (penalty_type == "L2_square")

penalties.append(sumsquare(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

}

test_costs = hconcat(costs);

// apply penalty to cost

if(penalties.size() != 0) {

// only multiply by sampleweight if there are weights

if (weightsize_>0)

training_cost = hconcat(sampleweight*sum(hconcat(costs[0] & penalties))

& (test_costs*sampleweight));

else {

training_cost = hconcat(sum(hconcat(costs[0] & penalties)) & test_costs);

}

}

else {

// only multiply by sampleweight if there are weights

if(weightsize_>0) {

training_cost = test_costs*sampleweight;

} else {

training_cost = test_costs;

}

}

training_cost->setName("training_cost");

test_costs->setName("test_costs");

output->setName("output");

// Shared values hack...

bool use_paramsvalues=(bool)paramsvalues && (paramsvalues.size() == params.nelems());

if(use_paramsvalues)

{

params << paramsvalues;

initialize_mu(mu->value);

}

else

{

paramsvalues.resize(params.nelems());

initializeParams();

}

params.makeSharedValue(paramsvalues);

VarArray output_and_target = output & target;

output_and_target_values.resize(output.length()+target.length());

output_and_target.makeSharedValue(output_and_target_values);

cdf_f = Func(output_and_target,cumulative);

mean_f = Func(output,expected_value);

density_f = Func(output_and_target,density);

// Funcs

VarArray outvars;

VarArray testinvars;

invars.resize(0);

if(input)

{

invars.push_back(input);

testinvars.push_back(input);

}

if(expected_value)

{

outvars.push_back(expected_value);

}

if(target)

{

invars.push_back(target);

testinvars.push_back(target);

outvars.push_back(target);

}

if(sampleweight)

{

invars.push_back(sampleweight);

}

VarArray outputs_array;

for (unsigned int k=0;k<outputs_def.length();k++)

{

if (outputs_def[k]=='e')

outputs_array &= expected_value;

else if (outputs_def[k]=='t')

{

Func survival_f(target&output,var(1.0)-cumulative);

Var threshold_y(1,1);

threshold_y->valuedata[0]=thresholdY;

outputs_array &= survival_f(threshold_y & output);

}

else if (outputs_def[k]=='S' || outputs_def[k]=='C' ||

outputs_def[k]=='L' || outputs_def[k]=='D')

{

Func prob_f(target&output,outputs_def[k]=='S'?(var(1.0)-cumulative):

(outputs_def[k]=='C'?cumulative:

(outputs_def[k]=='D'?density:log(density))));

y_values.resize(n_curve_points);

if (curve_positions=="uniform")

{

real delta = maxY/(n_curve_points-1);

for (int j=0;j<n_curve_points;j++)

{

y_values[j] = var(j*delta);

y_values[j]->setName("y"+tostring(j));

outputs_array &= prob_f(y_values[j] & output);

}

} else // log-scale

{

real denom = 1.0/(1-exp(-scale));

for (int j=0;j<n_curve_points;j++)

{

y_values[j] = var((exp(scale*(j-n_output_density_terms)/n_output_density_terms)-exp(-scale))*denom);

y_values[j]->setName("y"+tostring(j));

outputs_array &= prob_f(y_values[j] & output);

}

}

} else

outputs_array &= expected_value;

// PLERROR("ConditionalDensityNet::build: can't handle outputs_def with option value = %c",outputs_def[k]);

}

outputs = hconcat(outputs_array);

if (mu_is_fixed)

f = Func(input, params&mu, outputs);

else

f = Func(input, params, outputs);

f->recomputeParents();

in2distr_f = Func(input,pos_a);

in2distr_f->recomputeParents();

if (mu_is_fixed)

test_costf = Func(testinvars, params&mu, outputs&test_costs);

else

test_costf = Func(testinvars, params, outputs&test_costs);

if (use_paramsvalues)

test_costf->recomputeParents();

}

// PDistribution::finishConditionalBuild();

}
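As a reading aid for the `sigmoid_steps` branch with `separate_mass_point` enabled, the following standalone sketch evaluates the same cumulative and density expressions on plain doubles instead of PLearn `Var` graphs. The `StepParams` struct and the free functions are hypothetical; `pos_a`, `pos_b`, `pos_c`, `mu` and `maxY` are assumed to be already computed (that is, after the sigmoid/softplus transforms in the listing).

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical container for the already-transformed network outputs.
struct StepParams {
    double pos_a;               // probability mass at Y = 0, in [0,1]
    std::vector<double> pos_b;  // positive step weights
    std::vector<double> pos_c;  // positive step hardnesses
    std::vector<double> mu;     // step centers
    double maxY;                // upper bound of the support
};

inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// dot(pos_b, steps(y) - steps_0), with steps_i(y) = sigmoid(pos_c[i] * (y - mu[i])).
double weighted_steps(const StepParams& p, double y) {
    double s = 0.0;
    for (std::size_t i = 0; i < p.mu.size(); ++i)
        s += p.pos_b[i] * (sigmoid(p.pos_c[i] * (y - p.mu[i]))
                           - sigmoid(-p.pos_c[i] * p.mu[i]));
    return s;
}

// cumulative = pos_a + (1 - pos_a) * cum_numerator / cum_denominator
double cumulative(const StepParams& p, double y) {
    return p.pos_a
         + (1.0 - p.pos_a) * weighted_steps(p, y) / weighted_steps(p, p.maxY);
}

// Conditional density for Y > 0: dot(pos_b, steps_gradient) / cum_denominator,
// with steps_gradient_i = pos_c[i] * steps_i * (1 - steps_i).
double density(const StepParams& p, double y) {
    double num = 0.0;
    for (std::size_t i = 0; i < p.mu.size(); ++i) {
        const double g = sigmoid(p.pos_c[i] * (y - p.mu[i]));
        num += p.pos_b[i] * p.pos_c[i] * g * (1.0 - g);
    }
    return num / weighted_steps(p, p.maxY);
}
```

With these definitions `cumulative(p, 0)` equals `pos_a` and `cumulative(p, maxY)` equals 1, and the derivative of `cumulative` with respect to `y` is `(1 - pos_a) * density(p, y)`. The sketch omits the `positive(...)` guard on the denominator and the `sloped_steps` variant.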

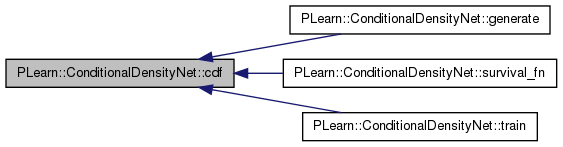

Return the cumulative distribution function (cdf), P(X<=x).

Reimplemented from PLearn::PDistribution.

Definition at line 877 of file ConditionalDensityNet.cc.

References cdf_f, output_and_target_values, PLERROR, and target.

Referenced by generate(), survival_fn(), and train().

{

Vec cum(1);

target->value << y;

cdf_f->fprop(output_and_target_values,cum);

#ifdef BOUNDCHECK

if (cum[0] < -1e-3)

PLERROR("In ConditionalDensityNet::cdf - The cdf is < 0");

#endif

return cum[0];

}

| string PLearn::ConditionalDensityNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

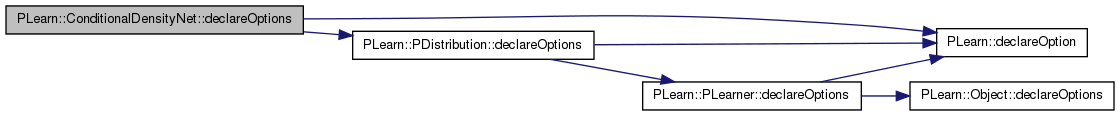

| void PLearn::ConditionalDensityNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PDistribution.

Definition at line 155 of file ConditionalDensityNet.cc.

References batch_size, bias_decay, PLearn::OptionBase::buildoption, c_penalization, centers_initialization, curve_positions, PLearn::declareOption(), PLearn::PDistribution::declareOptions(), direct_in_to_out, direct_in_to_out_weight_decay, generate_precision, initial_hardness, L1_penalty, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::OptionBase::learntoption, log_likelihood_vs_squared_error_balance, maxY, mu, mu_is_fixed, n_output_density_terms, nhidden, nhidden2, optimizer, output_layer_bias_decay, output_layer_weight_decay, paramsvalues, penalty_type, scale, separate_mass_point, steps_type, thresholdY, unconditional_cdf, unconditional_delta_cdf, unconditional_p0, weight_decay, and y_values.

{

declareOption(ol, "nhidden", &ConditionalDensityNet::nhidden, OptionBase::buildoption,

" number of hidden units in first hidden layer (0 means no hidden layer)\n");

declareOption(ol, "nhidden2", &ConditionalDensityNet::nhidden2, OptionBase::buildoption,

" number of hidden units in second hidden layer (0 means no hidden layer)\n");

declareOption(ol, "weight_decay", &ConditionalDensityNet::weight_decay, OptionBase::buildoption,

" global weight decay for all layers\n");

declareOption(ol, "bias_decay", &ConditionalDensityNet::bias_decay, OptionBase::buildoption,

" global bias decay for all layers\n");

declareOption(ol, "layer1_weight_decay", &ConditionalDensityNet::layer1_weight_decay, OptionBase::buildoption,

" Additional weight decay for the first hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer1_bias_decay", &ConditionalDensityNet::layer1_bias_decay, OptionBase::buildoption,

" Additional bias decay for the first hidden layer. Is added to bias_decay.\n");

declareOption(ol, "layer2_weight_decay", &ConditionalDensityNet::layer2_weight_decay, OptionBase::buildoption,

" Additional weight decay for the second hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer2_bias_decay", &ConditionalDensityNet::layer2_bias_decay, OptionBase::buildoption,

" Additional bias decay for the second hidden layer. Is added to bias_decay.\n");

declareOption(ol, "output_layer_weight_decay", &ConditionalDensityNet::output_layer_weight_decay, OptionBase::buildoption,

" Additional weight decay for the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_bias_decay", &ConditionalDensityNet::output_layer_bias_decay, OptionBase::buildoption,

" Additional bias decay for the output layer. Is added to 'bias_decay'.\n");

declareOption(ol, "direct_in_to_out_weight_decay", &ConditionalDensityNet::direct_in_to_out_weight_decay, OptionBase::buildoption,

" Additional weight decay for the direct in-to-out layer. Is added to 'weight_decay'.\n");

declareOption(ol, "penalty_type", &ConditionalDensityNet::penalty_type,

OptionBase::buildoption,

"Penalty to use on the weights (for weight and bias decay).\n"

"Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L1_square\": square of the L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");

declareOption(ol, "L1_penalty", &ConditionalDensityNet::L1_penalty, OptionBase::buildoption,

"Deprecated - You should use \"penalty_type\" instead\n"

"should we use L1 penalty instead of the default L2 penalty on the weights?\n");

declareOption(ol, "direct_in_to_out", &ConditionalDensityNet::direct_in_to_out, OptionBase::buildoption,

" should we include direct input to output connections? (default=0)\n");

declareOption(ol, "optimizer", &ConditionalDensityNet::optimizer, OptionBase::buildoption,

" specify the optimizer to use\n");

declareOption(ol, "batch_size", &ConditionalDensityNet::batch_size, OptionBase::buildoption,

" how many samples to use to estimate the average gradient before updating the weights\n"

" 0 is equivalent to specifying training_set->length(); default=1 (stochastic gradient)\n");

declareOption(ol, "maxY", &ConditionalDensityNet::maxY, OptionBase::buildoption,

" maximum allowed value for Y. Default = 1.0 (data normalized in [0,1])\n");

declareOption(ol, "thresholdY", &ConditionalDensityNet::thresholdY, OptionBase::buildoption,

" threshold value of Y for which we might want to compute P(Y>thresholdY), with outputs_def='t'\n");

declareOption(ol, "log_likelihood_vs_squared_error_balance", &ConditionalDensityNet::log_likelihood_vs_squared_error_balance,

OptionBase::buildoption,

" Relative weight given to negative log-likelihood (1- this weight given squared error). Default=1\n");

declareOption(ol, "n_output_density_terms", &ConditionalDensityNet::n_output_density_terms,

OptionBase::buildoption,

" Number of terms (steps) in the output density function.\n");

declareOption(ol, "steps_type", &ConditionalDensityNet::steps_type,

OptionBase::buildoption,

" The type of steps used to build the cumulative distribution.\n"

" Allowed values are:\n"

" - sigmoid_steps: g(y,theta,i) = sigmoid(s(c_i)*(y-mu_i))\n"

" - sloped_steps: g(y,theta,i) = s(s(c_i)*(mu_i-y))-s(s(c_i)*(mu_i-y))\nDefault=sloped_steps\n");

declareOption(ol, "centers_initialization", &ConditionalDensityNet::centers_initialization,

OptionBase::buildoption,

" How to initialize the step centers (mu_i). Allowed values are:\n"

" - data: from the data at regular quantiles, with last one at maxY (default)\n"

" - uniform: at regular intervals in [0,maxY]\n"

" - log-scale: as the exponential of values at regular intervals in log-scale, using formula:\n"

" i-th position = (exp(scale*(i+1-n_output_density_terms)/n_output_density_terms)-exp(-scale))/(1-exp(-scale))\n");

declareOption(ol, "curve_positions", &ConditionalDensityNet::curve_positions,

OptionBase::buildoption,

" How to choose the y-values for the probability curve (upper case output_def):\n"

" - uniform: at regular intervals in [0,maxY]\n"

" - log-scale: as the exponential of values at regular intervals in log-scale, using formula:\n"

" i-th position = (exp(scale*(i+1-n_output_density_terms)/n_output_density_terms)-exp(-scale))/(1-exp(-scale))\n");

declareOption(ol, "scale", &ConditionalDensityNet::scale,

OptionBase::buildoption,

" scale used in the log-scale formula for centers_initialization and curve_positions");

declareOption(ol, "unconditional_p0", &ConditionalDensityNet::unconditional_p0, OptionBase::buildoption,

" approximate unconditional probability of Y=0 (mass point), used\n"

" to initialize the parameters.\n");

declareOption(ol, "mu_is_fixed", &ConditionalDensityNet::mu_is_fixed, OptionBase::buildoption,

" whether to keep the step centers (mu[i]) fixed or to learn them.\n");

declareOption(ol, "separate_mass_point", &ConditionalDensityNet::separate_mass_point, OptionBase::buildoption,

" whether to model separately the mass point at the origin.\n");

declareOption(ol, "initial_hardness", &ConditionalDensityNet::initial_hardness, OptionBase::buildoption,

" value that scales softplus(c).\n");

declareOption(ol, "c_penalization", &ConditionalDensityNet::c_penalization, OptionBase::buildoption,

" the penalization coefficient for the 'c' output of the neural network");

declareOption(ol, "generate_precision", &ConditionalDensityNet::generate_precision, OptionBase::buildoption,

" precision when generating a new sample\n");

declareOption(ol, "paramsvalues", &ConditionalDensityNet::paramsvalues, OptionBase::learntoption,

" The learned neural network parameter vector\n");

declareOption(ol, "unconditional_cdf", &ConditionalDensityNet::unconditional_cdf, OptionBase::learntoption,

" Unconditional cumulative distribution function.\n");

declareOption(ol, "unconditional_delta_cdf", &ConditionalDensityNet::unconditional_delta_cdf, OptionBase::learntoption,

" Variations of the cdf from one step center to the next (this is u_i in above eqns).\n");

declareOption(ol, "mu", &ConditionalDensityNet::mu, OptionBase::learntoption,

" Step centers.\n");

declareOption(ol, "y_values", &ConditionalDensityNet::y_values, OptionBase::learntoption,

" Values of Y at which the cumulative (or density or survival) curves are computed if required.\n");

inherited::declareOptions(ol);

}
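To make the three `penalty_type` values concrete, here is a small sketch of the corresponding penalty terms on a flat weight vector. The function `weight_penalty` is a hypothetical helper, not part of PLearn; it mirrors the formulas that build_() applies to the direct in-to-out weights (`sumabs`, `square(sumabs)`, `sumsquare`, each multiplied by the decay coefficient).

```cpp
#include <cmath>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: penalty term for one weight vector.
//   "L1"        -> decay * sum |w_i|
//   "L1_square" -> decay * (sum |w_i|)^2
//   "L2_square" -> decay * sum w_i^2
double weight_penalty(const std::vector<double>& w, double decay,
                      const std::string& penalty_type) {
    double sum_abs = 0.0, sum_sq = 0.0;
    for (double v : w) {
        sum_abs += std::fabs(v);
        sum_sq += v * v;
    }
    if (penalty_type == "L1") return decay * sum_abs;
    if (penalty_type == "L1_square") return decay * sum_abs * sum_abs;
    if (penalty_type == "L2_square") return decay * sum_sq;
    throw std::invalid_argument("unsupported penalty_type: " + penalty_type);
}
```

For weights `{1, -2, 3}` and a decay of 0.5, this gives 3 for `L1`, 18 for `L1_square`, and 7 for `L2_square`. The sketch expects the canonical spelling; the case-insensitive aliases accepted by build_() are not handled here.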

| static const PPath& PLearn::ConditionalDensityNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PDistribution.

Definition at line 245 of file ConditionalDensityNet.h.

| ConditionalDensityNet * PLearn::ConditionalDensityNet::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

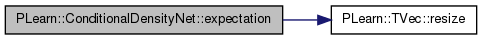

| void PLearn::ConditionalDensityNet::expectation | ( | Vec & | mu | ) | const [virtual] |

return E[X]

Reimplemented from PLearn::PDistribution.

Definition at line 889 of file ConditionalDensityNet.cc.

References mean_f, output, and PLearn::TVec< T >::resize().

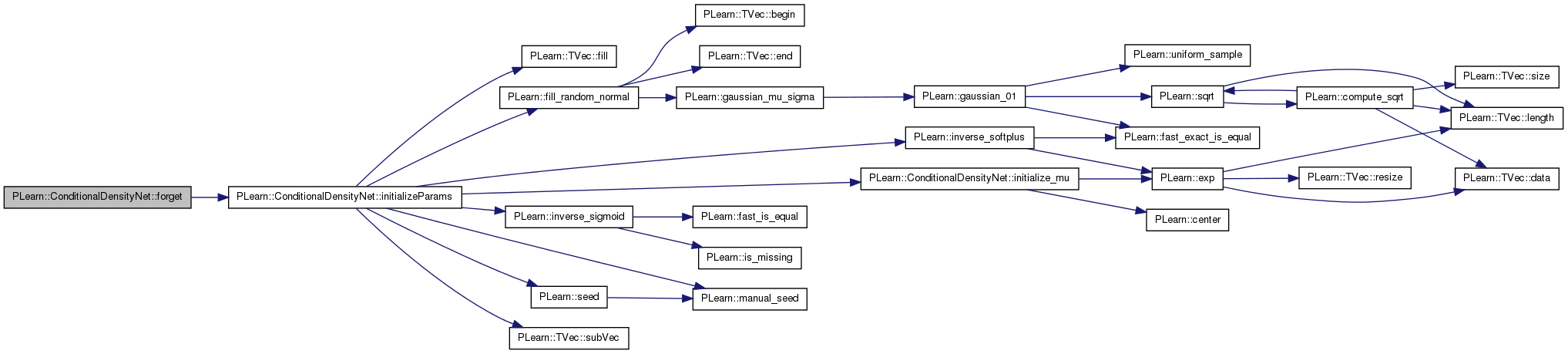

| void PLearn::ConditionalDensityNet::forget | ( | ) | [virtual] |

(Re-)initializes the PDistribution to its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0 (the stage of a fresh learner). You may remove this method if your distribution does not implement it.

Reimplemented from PLearn::PDistribution.

Definition at line 1029 of file ConditionalDensityNet.cc.

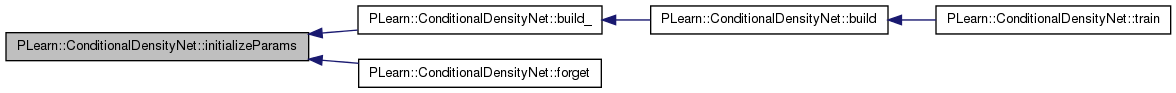

References initializeParams(), PLearn::PLearner::stage, and PLearn::PLearner::train_set.

{

if (train_set) initializeParams();

stage = 0;

}

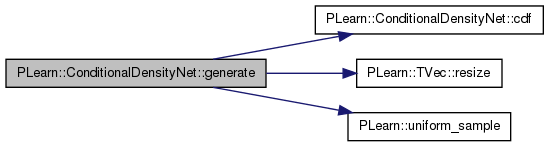

| void PLearn::ConditionalDensityNet::generate | ( | Vec & | x | ) | const [virtual] |

return a pseudo-random sample generated from the distribution.

Reimplemented from PLearn::PDistribution.

Definition at line 905 of file ConditionalDensityNet.cc.

References cdf(), generate_precision, maxY, PLearn::TVec< T >::resize(), u, and PLearn::uniform_sample().

{

real u = uniform_sample();

y.resize(1);

if (u<pos_a->value[0]) // mass point

{

y[0]=0;

return;

}

// then find y s.t. P(Y<y|x) = u by binary search

real y0=0;

real y2=maxY;

real delta;

real p;

do

{

delta = y2 - y0;

y[0] = y0 + delta*0.5;

p = cdf(y);

if (p<u)

// increase y

y0 = y[0];

else

// decrease y

y2 = y[0];

}

while (delta > generate_precision * maxY);

}

| OptionList & PLearn::ConditionalDensityNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

| OptionMap & PLearn::ConditionalDensityNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

| RemoteMethodMap & PLearn::ConditionalDensityNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Definition at line 153 of file ConditionalDensityNet.cc.

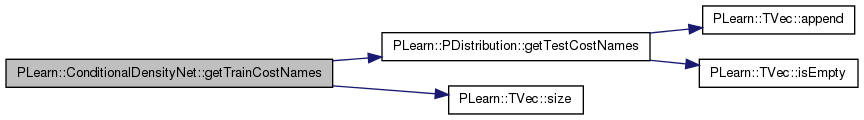

| TVec< string > PLearn::ConditionalDensityNet::getTrainCostNames | ( | ) | const [virtual] |

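Returns the names of the training costs: four names when penalties are present, otherwise the names returned by getTestCostNames().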

Reimplemented from PLearn::PDistribution.

Definition at line 748 of file ConditionalDensityNet.cc.

References PLearn::PDistribution::getTestCostNames(), penalties, and PLearn::TVec< T >::size().

{

if (penalties.size() > 0)

{

TVec<string> cost_funcs(4);

cost_funcs[0]="training_criterion+penalty";

cost_funcs[1]="training_criterion";

cost_funcs[2]="NLL";

cost_funcs[3]="mse";

return cost_funcs;

}

else return getTestCostNames();

}

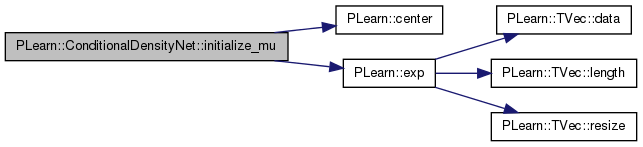

| void PLearn::ConditionalDensityNet::initialize_mu | ( | Vec & | mu_ | ) |

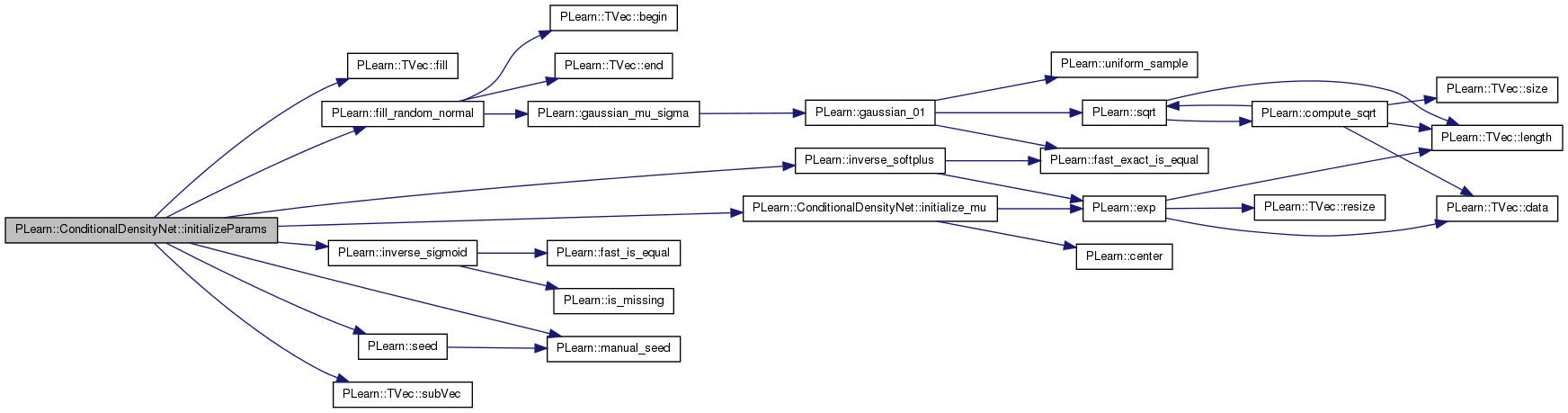

Definition at line 1010 of file ConditionalDensityNet.cc.

References PLearn::center(), centers_initialization, PLearn::exp(), i, maxY, n_output_density_terms, PLERROR, and scale.

Referenced by build_(), initializeParams(), and train().

{

if (centers_initialization=="uniform")

{

real delta=maxY/n_output_density_terms;

real center=delta;

for (int i=0;i<n_output_density_terms;i++,center+=delta)

mu_[i]=center;

} else if (centers_initialization=="log-scale")

{

real denom = 1.0/(1-exp(-scale));

for (int i=0;i<n_output_density_terms;i++)

mu_[i]=(exp(scale*(i+1-n_output_density_terms)/n_output_density_terms)-exp(-scale))*denom;

} else if (centers_initialization!="data")

PLERROR("ConditionalDensityNet::initialize_mu: unknown value %s for centers_initialization option",

centers_initialization.c_str());

}
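The two closed-form initializations above can be tried in isolation. This is a sketch under stated assumptions: `init_centers` is a hypothetical stand-alone function, `std::vector<double>` replaces PLearn's `Vec`, and the `"data"` mode (handled in `train()`) is deliberately left out.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-alone version of the "uniform" and "log-scale" branches.
std::vector<double> init_centers(const std::string& mode, int n,
                                 double max_y, double scale)
{
    assert(n > 0);
    std::vector<double> mu(n);
    if (mode == "uniform") {
        // Evenly spaced centers: max_y/n, 2*max_y/n, ..., max_y.
        const double delta = max_y / n;
        for (int i = 0; i < n; ++i)
            mu[i] = (i + 1) * delta;
    } else if (mode == "log-scale") {
        // Exponentially spaced centers, rescaled so that the last one is 1.
        const double denom = 1.0 / (1.0 - std::exp(-scale));
        for (int i = 0; i < n; ++i)
            mu[i] = (std::exp(scale * (i + 1 - n) / n) - std::exp(-scale)) * denom;
    } else {
        throw std::invalid_argument("unknown centers initialization: " + mode);
    }
    return mu;
}
```

Note that, as written in the listing, the log-scale formula does not involve `maxY`: its last center is always 1, while the uniform branch ends at `maxY`.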

| void PLearn::ConditionalDensityNet::initializeParams | ( | ) |

Definition at line 940 of file ConditionalDensityNet.cc.

References centers_initialization, direct_in_to_out, PLearn::TVec< T >::fill(), PLearn::fill_random_normal(), i, initialize_mu(), PLearn::inverse_sigmoid(), PLearn::inverse_softplus(), PLearn::manual_seed(), mu, mu_is_fixed, n_output_density_terms, nhidden, nhidden2, optimizer, PLearn::seed(), PLearn::PLearner::seed_, separate_mass_point, PLearn::TVec< T >::subVec(), unconditional_delta_cdf, unconditional_p0, w1, w2, wdirect, and wout.

Referenced by build_(), and forget().

{

if (seed_>=0)

manual_seed(seed_);

else

PLearn::seed();

//real delta = 1./sqrt(inputsize());

real delta = 1.0 / n_input;

/*

if(direct_in_to_out)

{

//fill_random_uniform(wdirect->value, -delta, +delta);

fill_random_normal(wdirect->value, 0, delta);

//wdirect->matValue(0).clear();

}

*/

if(nhidden>0)

{

//fill_random_uniform(w1->value, -delta, +delta);

//delta = 1./sqrt(nhidden);

fill_random_normal(w1->value, 0, delta);

if(direct_in_to_out)

{

//fill_random_uniform(wdirect->value, -delta, +delta);

fill_random_normal(wdirect->value, 0, 0.01*delta);

wdirect->matValue(0).clear();

}

delta = 1.0/nhidden;

w1->matValue(0).clear();

}

if(nhidden2>0)

{

//fill_random_uniform(w2->value, -delta, +delta);

//delta = 1./sqrt(nhidden2);

delta = 0.1/nhidden2;

fill_random_normal(w2->value, 0, delta);

w2->matValue(0).clear();

}

//fill_random_uniform(wout->value, -delta, +delta);

fill_random_normal(wout->value, 0, delta);

// Mat a_weights = wout->matValue.column(0); // Does not seem to be used anymore.

// a_weights *= 3.0; // to get more dynamic range

if (centers_initialization!="data")

{

Vec output_biases = wout->matValue(0);

Vec mu_;

int i=0;

Vec a_ = output_biases.subVec(i++,1);

Vec b_ = output_biases.subVec(i,n_output_density_terms); i+=n_output_density_terms;

Vec c_ = output_biases.subVec(i,n_output_density_terms); i+=n_output_density_terms;

if (mu_is_fixed)

mu_ = mu->value;

else

mu_ = output_biases.subVec(i,n_output_density_terms); i+=n_output_density_terms;

initialize_mu(mu_);

b_.fill(inverse_softplus(1.0));

c_.fill(inverse_softplus(1.0));

if (separate_mass_point)

a_[0] = unconditional_p0>0?inverse_sigmoid(unconditional_p0):-50;

else a_[0] = -50;

unconditional_delta_cdf->value.fill((1.0-unconditional_p0)/n_output_density_terms);

}

// Reset optimizer

if(optimizer)

optimizer->reset();

}
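The bias initialization above relies on inverse link functions. The sketch below assumes the usual definitions softplus(x) = log(1 + exp(x)) and sigmoid(x) = 1/(1 + exp(-x)); the functions live in a hypothetical `sketch` namespace and are not PLearn's own implementations. It also assumes, without confirmation from this listing, that the positive quantities `pos_b` and `pos_c` are obtained by applying softplus to `b` and `c`.

```cpp
#include <cassert>
#include <cmath>

namespace sketch {

// softplus and its inverse on (0, +inf)
double softplus(double x) { return std::log1p(std::exp(x)); }
double inverse_softplus(double y) { assert(y > 0); return std::log(std::expm1(y)); }

// logistic sigmoid and its inverse (the logit) on (0, 1)
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }
double inverse_sigmoid(double p) { assert(p > 0 && p < 1); return std::log(p / (1.0 - p)); }

} // namespace sketch
```

Under these assumptions, filling a bias with `inverse_softplus(1.0)` makes the corresponding positive quantity start at 1, and setting `a_[0]` to `inverse_sigmoid(unconditional_p0)` makes the initial mass at zero equal to `unconditional_p0`. The constant -50 plays the role of "effectively zero", since both sigmoid(-50) and softplus(-50) are far below double-precision noise for this purpose.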

Returns the log of the probability density, log(p(y)), at the given y.

Reimplemented from PLearn::PDistribution.

Definition at line 862 of file ConditionalDensityNet.cc.

References d, density_f, output_and_target_values, pl_log, PLearn::TVec< T >::resize(), and target.

{

static Vec d;

d.resize(1);

target->value << y;

density_f->fprop(output_and_target_values, d);

return pl_log(d[0]);

}

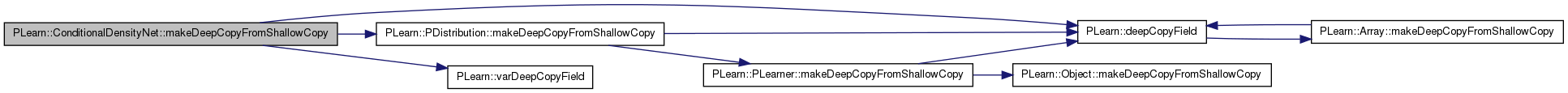

| void PLearn::ConditionalDensityNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PDistribution.

Definition at line 789 of file ConditionalDensityNet.cc.

References a, b, c, cdf_f, centers, centers_M, costs, cum_denominator, cum_numerator, cumulative, PLearn::deepCopyField(), delta_steps, density, density_f, expected_value, f, in2distr_f, initial_hardnesses, input, invars, PLearn::PDistribution::makeDeepCopyFromShallowCopy(), mass_cost, mean_f, minus_prev_centers_0, minus_scaled_prev_centers_0, mu, optimizer, output, output_and_target, output_and_target_to_cost, output_and_target_values, outputs, params, paramsvalues, penalties, pos_a, pos_b, pos_c, pos_y_cost, prev_centers, prev_centers_M, sampleweight, scaled_prev_centers, scaled_prev_centers_M, steps, steps_0, steps_gradient, steps_integral, steps_M, target, test_costf, test_costs, totalcost, training_cost, unconditional_cdf, unconditional_delta_cdf, PLearn::varDeepCopyField(), w1, w2, wdirect, wout, and y_values.

{

inherited::makeDeepCopyFromShallowCopy(copies);

varDeepCopyField(input, copies);

varDeepCopyField(target, copies);

varDeepCopyField(sampleweight, copies);

varDeepCopyField(w1, copies);

varDeepCopyField(w2, copies);

varDeepCopyField(wout, copies);

varDeepCopyField(wdirect, copies);

varDeepCopyField(output, copies);

varDeepCopyField(outputs, copies);

varDeepCopyField(a, copies);

varDeepCopyField(pos_a, copies);

varDeepCopyField(b, copies);

varDeepCopyField(pos_b, copies);

varDeepCopyField(c, copies);

varDeepCopyField(pos_c, copies);

varDeepCopyField(density, copies);

varDeepCopyField(cumulative, copies);

varDeepCopyField(expected_value, copies);

deepCopyField(costs, copies);

deepCopyField(penalties, copies);

varDeepCopyField(training_cost, copies);

varDeepCopyField(test_costs, copies);

deepCopyField(invars, copies);

deepCopyField(params, copies);

deepCopyField(paramsvalues, copies);

varDeepCopyField(centers, copies);

varDeepCopyField(centers_M, copies);

varDeepCopyField(steps, copies);

varDeepCopyField(steps_M, copies);

varDeepCopyField(steps_0, copies);

varDeepCopyField(steps_gradient, copies);

varDeepCopyField(steps_integral, copies);

varDeepCopyField(delta_steps, copies);

varDeepCopyField(cum_numerator, copies);

varDeepCopyField(cum_denominator, copies);

deepCopyField(unconditional_cdf, copies);

varDeepCopyField(unconditional_delta_cdf, copies);

varDeepCopyField(initial_hardnesses, copies);

varDeepCopyField(prev_centers, copies);

varDeepCopyField(prev_centers_M, copies);

varDeepCopyField(scaled_prev_centers, copies);

varDeepCopyField(scaled_prev_centers_M, copies);

varDeepCopyField(minus_prev_centers_0, copies);

varDeepCopyField(minus_scaled_prev_centers_0, copies);

deepCopyField(y_values, copies);

varDeepCopyField(mu, copies);

deepCopyField(f, copies);

deepCopyField(test_costf, copies);

deepCopyField(output_and_target_to_cost, copies);

deepCopyField(cdf_f, copies);

deepCopyField(mean_f, copies);

deepCopyField(density_f, copies);

deepCopyField(in2distr_f, copies);

deepCopyField(output_and_target, copies);

deepCopyField(output_and_target_values, copies);

varDeepCopyField(totalcost, copies);

varDeepCopyField(mass_cost, copies);

varDeepCopyField(pos_y_cost, copies);

deepCopyField(optimizer, copies);

}

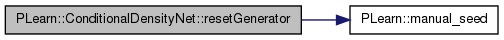

| void PLearn::ConditionalDensityNet::resetGenerator | ( | long | g_seed | ) | [virtual] |

Resets the random number generator used by generate using the given seed.

Reimplemented from PLearn::PDistribution.

Definition at line 900 of file ConditionalDensityNet.cc.

References PLearn::manual_seed().

{

manual_seed(g_seed);

}

| void PLearn::ConditionalDensityNet::setInput | ( | const Vec & | input | ) | const [virtual] |

Set the value for the input part of a conditional probability.

Definition at line 853 of file ConditionalDensityNet.cc.

References f, in2distr_f, PLERROR, and pos_a.

{

#ifdef BOUNDCHECK

if (!f)

PLERROR("ConditionalDensityNet:setInput: build was not completed (maybe because training set was not provided)!");

#endif

in2distr_f->fprop(in,pos_a->value);

}

Returns the survival function, P(Y>y) = 1 - cdf(y).

Reimplemented from PLearn::PDistribution.

Definition at line 871 of file ConditionalDensityNet.cc.

References cdf().

{

return 1 - cdf(y);

}

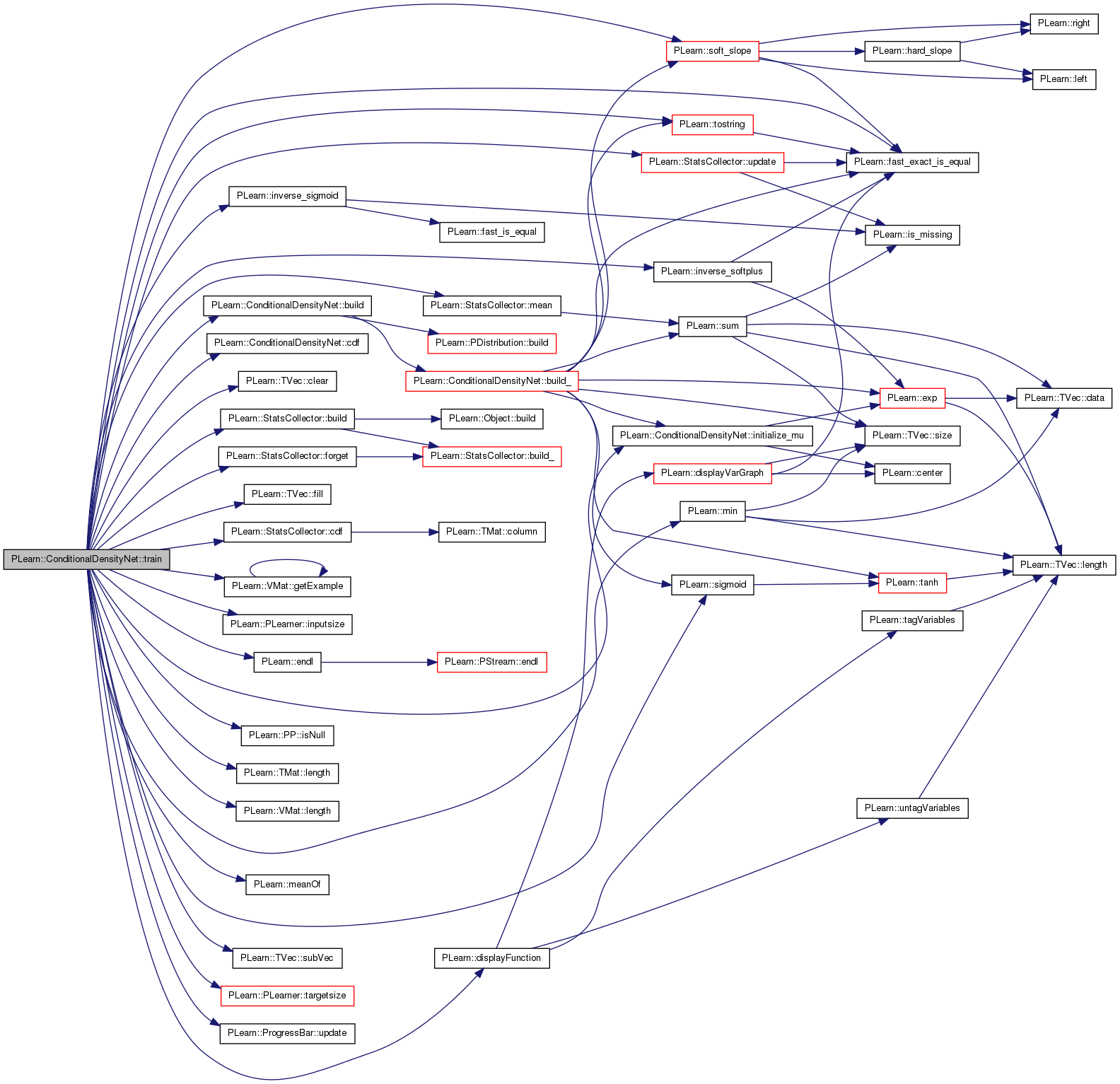

| void PLearn::ConditionalDensityNet::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

This method may be removed if the distribution does not implement it.

Reimplemented from PLearn::PDistribution.

Definition at line 1036 of file ConditionalDensityNet.cc.

References batch_size, PLearn::StatsCollector::build(), build(), PLearn::StatsCollector::cdf(), cdf(), centers_initialization, PLearn::TVec< T >::clear(), PLearn::displayFunction(), PLearn::endl(), f, PLearn::fast_exact_is_equal(), PLearn::TVec< T >::fill(), PLearn::StatsCollector::forget(), PLearn::VMat::getExample(), i, initial_hardness, initialize_mu(), input, PLearn::PLearner::inputsize(), invars, PLearn::inverse_sigmoid(), PLearn::inverse_softplus(), PLearn::PP< T >::isNull(), j, PLearn::TMat< T >::length(), PLearn::VMat::length(), PLearn::StatsCollector::maxnvalues, maxY, PLearn::StatsCollector::mean(), PLearn::meanOf(), PLearn::min(), mu, mu_is_fixed, n_output_density_terms, PLearn::PLearner::nstages, optimizer, outputs, params, PLERROR, PLearn::PLearner::report_progress, separate_mass_point, PLearn::sigmoid(), PLearn::soft_slope(), PLearn::PLearner::stage, steps_type, PLearn::TVec< T >::subVec(), target, PLearn::PLearner::targetsize(), test_costf, PLearn::tostring(), totalcost, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_cost, unconditional_cdf, unconditional_delta_cdf, unconditional_p0, PLearn::ProgressBar::update(), PLearn::StatsCollector::update(), PLearn::PLearner::verbosity, and wout.

{

int i=0, j=0;

if(!train_set)

PLERROR("In ConditionalDensityNet::train, you did not setTrainingSet");

if(!train_stats)

PLERROR("In ConditionalDensityNet::train, you did not setTrainStatsCollector");

/*

if (!already_sorted || n_margin > 0)

PLERROR("In ConditionalDensityNet::train - Currently, can only be trained if the data is given as input, target");

*/

if(f.isNull()) // Net has not been properly built yet (because build was called before the learner had a proper training set)

build();

int l = train_set->length();

int nsamples = batch_size>0 ? batch_size : l;

Func paramf = Func(invars, training_cost); // parameterized function to optimize

Var totalcost = meanOf(train_set, paramf, nsamples);

if(optimizer)

{

optimizer->setToOptimize(params, totalcost);

optimizer->build();

}

// number of optimiser stages corresponding to one learner stage (one epoch)

int optstage_per_lstage = l/nsamples;

ProgressBar* pb = 0;

if(report_progress)

pb = new ProgressBar("Training ConditionalDensityNet from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

// estimate the unconditional cdf

static real weight;

if (stage==0)

{

Vec mu_values = mu->value;

unconditional_cdf.clear();

real sum_w=0;

unconditional_p0 = 0;

static StatsCollector sc;

bool init_mu_from_data=centers_initialization=="data";

if (init_mu_from_data)

{

sc.maxnvalues = min(l,100*n_output_density_terms);

sc.build();

sc.forget();

}

Vec tmp1(inputsize());

Vec tmp2(targetsize());

for (i=0;i<l;i++)

{

train_set->getExample(i, tmp1, tmp2, weight);

input->value << tmp1.subVec(0, n_input);

target->value << tmp1.subVec(n_input, n_target);

real y = target->valuedata[0];

if (y < 0)

PLERROR("In ConditionalDensityNet::train - Found a negative target");

if (y > maxY)

PLERROR("In ConditionalDensityNet::train - Found a target > maxY");

if (fast_exact_is_equal(y, 0))

unconditional_p0 += weight;

if (init_mu_from_data)

sc.update(y,weight);

else

for (int k=0;k<n_output_density_terms;k++)

if (y<=mu_values[k])

unconditional_cdf[k] += weight;

sum_w += weight;

}

static Mat cdf;

unconditional_p0 *= 1.0/sum_w;

if (init_mu_from_data)

{

cdf = sc.cdf();

int k=3;

real mean_y = sc.mean();

real current_mean_fraction = 0;

real prev_cdf = unconditional_p0;

real prev_y = 0;

for (int q=0;q<n_output_density_terms;q++)

{

real target_fraction = mean_y*(q+1.0)/n_output_density_terms;

for (;k<cdf.length() && current_mean_fraction < target_fraction;k++)

{

current_mean_fraction += (cdf(k,0)+prev_y)*0.5*(cdf(k,1)-prev_cdf);

prev_cdf = cdf(k,1);

prev_y = cdf(k,0);

}

if (q==n_output_density_terms-1)

{

mu_values[q]=maxY;

unconditional_cdf[q]=1.0;

}

else

{

mu_values[q]=cdf(k,0);

unconditional_cdf[q]=cdf(k,1);

}

}

}

else

for (j=0;j<n_output_density_terms;j++)

unconditional_cdf[j] *= 1.0/sum_w;

unconditional_delta_cdf->valuedata[0]=unconditional_cdf[0]-unconditional_p0;

for (i=1;i<n_output_density_terms;i++)

unconditional_delta_cdf->valuedata[i]=unconditional_cdf[i]-unconditional_cdf[i-1];

// initialize biases based on unconditional distribution

Vec output_biases = wout->matValue(0);

i=0;

Vec a_ = output_biases.subVec(i++,1);

Vec b_ = output_biases.subVec(i,n_output_density_terms); i+=n_output_density_terms;

Vec c_ = output_biases.subVec(i,n_output_density_terms); i+=n_output_density_terms;

Vec mu_;

Vec s_c(n_output_density_terms);

if (mu_is_fixed)

mu_ = mu->value;

else

mu_ = output_biases.subVec(i,n_output_density_terms); i+=n_output_density_terms;

b_.fill(inverse_softplus(1.0));

initialize_mu(mu_);

for (i=0;i<n_output_density_terms;i++)

{

real prev_mu = i==0?0:mu_[i-1];

real delta = mu_[i]-prev_mu;

s_c[i] = delta>0?initial_hardness/delta:-50;

c_[i] = inverse_softplus(1.0);

}

if (centers_initialization!="data")

unconditional_delta_cdf->value.fill(1.0/n_output_density_terms);

real *dcdf = unconditional_delta_cdf->valuedata;

if (separate_mass_point)

a_[0] = unconditional_p0>0?inverse_sigmoid(unconditional_p0):-50;

else if (fast_exact_is_equal(dcdf[0], 0))

a_[0]=unconditional_p0>0?inverse_softplus(unconditional_p0):-50;

else

{

real s=0;

if (steps_type=="sigmoid_steps")

for (i=0;i<n_output_density_terms;i++)

s+=dcdf[i]*(unconditional_p0*sigmoid(s_c[i]*(maxY-mu_[i]))-sigmoid(-s_c[i]*mu_[i]));

else

for (i=0;i<n_output_density_terms;i++)

{

real prev_mu = i==0?0:mu_[i-1];

real ss1 = soft_slope(maxY,s_c[i],prev_mu,mu_[i]);

real ss2 = soft_slope(0,s_c[i],prev_mu,mu_[i]);

s+=dcdf[i]*(unconditional_p0*ss1 - ss2);

}

real sa=s/(1-unconditional_p0);

a_[0]=sa>0?inverse_softplus(sa):-50;

/*

Mat At(n_output_density_terms,n_output_density_terms); // transpose of the linear system matrix

Mat rhs(1,n_output_density_terms); // right hand side of the linear system

// solve the system to find b's that make the unconditional fit the observed data

// sum_j sb_j dcdf_j (cdf_j step_j(maxY) - step_j(mu_i)) = sa (1 - cdf_i)

//

for (int i=0;i<n_output_density_terms;i++)

{

real* Ati = At[i];

real prev_mu = i==0?0:mu_[i-1];

for (int j=0;j<n_output_density_terms;j++)

{

if (steps_type=="sigmoid_steps")

Ati[j] = dcdf[i]*(unconditional_cdf[j]*sigmoid(initial_hardness*(maxY-mu_[i]))-

sigmoid(initial_hardness*(mu_[j]-mu_[i])));

else

Ati[j] = dcdf[i]*(unconditional_cdf[j]*soft_slope(maxY,initial_hardness,prev_mu,mu_[i])-

soft_slope(mu_[j],initial_hardness,prev_mu,mu_[i]));

}

rhs[0][i] = sa*(1-unconditional_cdf[i]);

}

TVec<int> pivots(n_output_density_terms);

int status = lapackSolveLinearSystem(At,rhs,pivots);

if (status==0)

for (int i=0;i<n_output_density_terms;i++)

b_[i] = inverse_softplus(rhs[0][i]);

else

PLWARNING("ConditionalDensityNet::initializeParams() Could not invert matrix to obtain exact init. of b");

*/

}

test_costf->recomputeParents();

// debugging

static bool display_graph = false;

if (display_graph) f->fprop(input->value,outputs->value);

//displayVarGraph(outputs,true);

if (display_graph)

displayFunction(f,true);

if (display_graph)

displayFunction(test_costf,true);

}

int initial_stage = stage;

bool early_stop=false;

while(stage<nstages && !early_stop)

{

optimizer->nstages = optstage_per_lstage;

train_stats->forget();

optimizer->early_stop = false;

early_stop = optimizer->optimizeN(*train_stats);

//if (verify_gradient)

// training_cost->verifyGradient(verify_gradient);

//if (stage==nstages-1 && verify_gradient)

static bool verify_gradient = false;

if (verify_gradient)

{

if (batch_size == 0)

{

cout << "OPTIMIZER" << endl;

optimizer->verifyGradient(0.001);

}

}

static bool display_graph = false;

if (display_graph)

displayFunction(f,true);

if (display_graph)

displayFunction(test_costf,true);

train_stats->finalize();

if(verbosity>2)

cerr << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;

++stage;

if(pb)

pb->update(stage-initial_stage);

}

if(verbosity>1)

cerr << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;

if(pb)

delete pb;

test_costf->recomputeParents();

}
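The stage-0 pass in `train()` estimates an unconditional distribution of the targets before any optimization. The sketch below covers only the fixed-centers branch (the one taken when `centers_initialization` is not `"data"`). `UnconditionalStats` and `estimate_unconditional` are hypothetical names, and plain `std::vector<double>` stands in for the PLearn containers.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type for the sketch.
struct UnconditionalStats {
    double p0 = 0.0;                // weighted fraction of targets equal to 0
    std::vector<double> cdf;        // cdf[k]: weighted fraction of targets <= mu[k]
    std::vector<double> delta_cdf;  // mass between consecutive centers
};

UnconditionalStats estimate_unconditional(const std::vector<double>& y,
                                          const std::vector<double>& w,
                                          const std::vector<double>& mu)
{
    assert(y.size() == w.size() && !y.empty() && !mu.empty());
    UnconditionalStats s;
    s.cdf.assign(mu.size(), 0.0);
    s.delta_cdf.assign(mu.size(), 0.0);
    double sum_w = 0.0;
    for (std::size_t i = 0; i < y.size(); ++i) {
        if (y[i] == 0.0)
            s.p0 += w[i];                      // mass point at zero
        for (std::size_t k = 0; k < mu.size(); ++k)
            if (y[i] <= mu[k])
                s.cdf[k] += w[i];              // weighted count below center k
        sum_w += w[i];
    }
    assert(sum_w > 0);
    s.p0 /= sum_w;
    for (double& c : s.cdf)
        c /= sum_w;
    // The first increment excludes the mass at zero; the others are differences.
    s.delta_cdf[0] = s.cdf[0] - s.p0;
    for (std::size_t k = 1; k < mu.size(); ++k)
        s.delta_cdf[k] = s.cdf[k] - s.cdf[k - 1];
    return s;
}
```

For example, four equally weighted targets 0, 0.2, 0.6 and 1.0 with centers 0.5 and 1.0 give a mass at zero of 0.25, a CDF of 0.5 and 1.0 at the two centers, and increments of 0.25 and 0.5.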

| void PLearn::ConditionalDensityNet::variance | ( | Mat & | cov | ) | const [virtual] |

Reimplemented from PLearn::PDistribution.

Definition at line 895 of file ConditionalDensityNet.cc.

References PLERROR.

{

PLERROR("variance not implemented for ConditionalDensityNet");

}

Reimplemented from PLearn::PDistribution.

Definition at line 245 of file ConditionalDensityNet.h.

Var PLearn::ConditionalDensityNet::a [protected] |

Definition at line 76 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::b [protected] |

Definition at line 77 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 157 of file ConditionalDensityNet.h.

Referenced by declareOptions(), and train().

Definition at line 141 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::ConditionalDensityNet::c [protected] |

Definition at line 78 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 162 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Func PLearn::ConditionalDensityNet::cdf_f [mutable] |

Definition at line 121 of file ConditionalDensityNet.h.

Referenced by build_(), cdf(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::centers [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 193 of file ConditionalDensityNet.h.

Referenced by declareOptions(), initialize_mu(), initializeParams(), and train().

Var PLearn::ConditionalDensityNet::centers_M [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

VarArray PLearn::ConditionalDensityNet::costs [protected] |

Definition at line 83 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::cum_denominator [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::cum_numerator [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::cumulative [protected] |

Definition at line 80 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 194 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::ConditionalDensityNet::delta_steps [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::density [protected] |

Definition at line 79 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Func PLearn::ConditionalDensityNet::density_f [mutable] |

Definition at line 123 of file ConditionalDensityNet.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Definition at line 152 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 148 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::ConditionalDensityNet::expected_value [protected] |

Definition at line 81 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Func PLearn::ConditionalDensityNet::f [mutable] |

Definition at line 117 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), setInput(), and train().

Definition at line 181 of file ConditionalDensityNet.h.

Referenced by declareOptions(), and generate().

Definition at line 124 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and setInput().

Definition at line 205 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and train().

Var PLearn::ConditionalDensityNet::initial_hardnesses [protected] |

Definition at line 107 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::input [protected] |

Definition at line 66 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

VarArray PLearn::ConditionalDensityNet::invars [protected] |

Definition at line 88 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 151 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 143 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 142 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 145 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 144 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 173 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 128 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 165 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), generate(), initialize_mu(), and train().

Func PLearn::ConditionalDensityNet::mean_f [mutable] |

Definition at line 122 of file ConditionalDensityNet.h.

Referenced by build_(), expectation(), and makeDeepCopyFromShallowCopy().

Definition at line 110 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 110 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 116 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 202 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), initializeParams(), and train().

Definition at line 179 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), initialize_mu(), initializeParams(), and train().

Definition at line 137 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 138 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 155 of file ConditionalDensityNet.h.

Referenced by declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::ConditionalDensityNet::output [protected] |

Definition at line 74 of file ConditionalDensityNet.h.

Referenced by build_(), expectation(), and makeDeepCopyFromShallowCopy().

Definition at line 125 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 119 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 126 of file ConditionalDensityNet.h.

Referenced by build_(), cdf(), log_density(), and makeDeepCopyFromShallowCopy().

Definition at line 147 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 146 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::ConditionalDensityNet::outputs [protected] |

Definition at line 75 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

VarArray PLearn::ConditionalDensityNet::params [protected] |

Definition at line 89 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 93 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

VarArray PLearn::ConditionalDensityNet::penalties [protected] |

Definition at line 84 of file ConditionalDensityNet.h.

Referenced by build_(), getTrainCostNames(), and makeDeepCopyFromShallowCopy().

Definition at line 150 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::ConditionalDensityNet::pos_a [protected] |

Definition at line 76 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and setInput().

Var PLearn::ConditionalDensityNet::pos_b [protected] |

Definition at line 77 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::pos_c [protected] |

Definition at line 78 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 129 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::prev_centers [protected] |

Definition at line 110 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::prev_centers_M [protected] |

Definition at line 110 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::sampleweight [protected] |

Definition at line 68 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 195 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and initialize_mu().

Definition at line 110 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 110 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 176 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), initializeParams(), and train().

Var PLearn::ConditionalDensityNet::steps [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::steps_0 [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::steps_gradient [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::steps_integral [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::steps_M [protected] |

Definition at line 97 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 187 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and train().

Var PLearn::ConditionalDensityNet::target [protected] |

Definition at line 67 of file ConditionalDensityNet.h.

Referenced by build_(), cdf(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 118 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::ConditionalDensityNet::test_costs [protected] |

Definition at line 86 of file ConditionalDensityNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 168 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Definition at line 127 of file ConditionalDensityNet.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

Var PLearn::ConditionalDensityNet::training_cost [protected] |

Definition at line 85 of file ConditionalDensityNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::ConditionalDensityNet::unconditional_cdf [protected] |

Definition at line 102 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 104 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 199 of file ConditionalDensityNet.h.

Referenced by declareOptions(), initializeParams(), and train().

Var PLearn::ConditionalDensityNet::w1 [protected] |

Definition at line 69 of file ConditionalDensityNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::w2 [protected] |

Definition at line 70 of file ConditionalDensityNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Var PLearn::ConditionalDensityNet::wdirect [protected] |

Definition at line 72 of file ConditionalDensityNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Definition at line 140 of file ConditionalDensityNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::ConditionalDensityNet::wout [protected] |

Definition at line 71 of file ConditionalDensityNet.h.

Referenced by build_(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 115 of file ConditionalDensityNet.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

1.7.4
