
PLearn 0.1


#include <SoftmaxLossVariable.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
|  | `SoftmaxLossVariable ()` | Default constructor for persistence. |
|  | `SoftmaxLossVariable (Variable *input1, Variable *input2)` |  |
| virtual string | `classname () const` |  |
| virtual OptionList & | `getOptionList () const` |  |
| virtual OptionMap & | `getOptionMap () const` |  |
| virtual RemoteMethodMap & | `getRemoteMethodMap () const` |  |
| virtual SoftmaxLossVariable * | `deepCopy (CopiesMap &copies) const` |  |
| virtual void | `build ()` | Post-constructor. |
| virtual void | `recomputeSize (int &l, int &w) const` | Recomputes the length l and width w that this variable should have, according to its parent variables. |
| virtual void | `fprop ()` | Computes the output given the input. |
| virtual void | `bprop ()` |  |
| virtual void | `bbprop ()` | Computes an approximation to diag(d^2/dinput^2) given diag(d^2/doutput^2), with diag(d^2/dinput^2) ~=~ (doutput/dinput)' diag(d^2/doutput^2) (doutput/dinput). In particular: if 'C' depends on 'y' and 'y' depends on x ... |
| virtual void | `symbolicBprop ()` | Computes a piece of new Var graph that represents the symbolic derivative of this Var. |
| virtual void | `rfprop ()` |  |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| static string | `_classname_ ()` | SoftmaxLossVariable. |
| static OptionList & | `_getOptionList_ ()` |  |
| static RemoteMethodMap & | `_getRemoteMethodMap_ ()` |  |
| static Object * | `_new_instance_for_typemap_ ()` |  |
| static bool | `_isa_ (const Object *o)` |  |
| static void | `_static_initialize_ ()` |  |
| static const PPath & | `declaringFile ()` |  |

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | `_static_initializer_` |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| void | `build_ ()` | This does the actual building. |

Private Types

| Type | Member |
|---|---|
| typedef BinaryVariable | `inherited` |

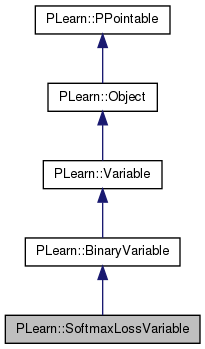

Definition at line 53 of file SoftmaxLossVariable.h.

`typedef BinaryVariable PLearn::SoftmaxLossVariable::inherited` [private]

Reimplemented from PLearn::BinaryVariable.

Definition at line 55 of file SoftmaxLossVariable.h.

`PLearn::SoftmaxLossVariable::SoftmaxLossVariable ()` [inline]

`string PLearn::SoftmaxLossVariable::_classname_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file SoftmaxLossVariable.cc.

`OptionList & PLearn::SoftmaxLossVariable::_getOptionList_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file SoftmaxLossVariable.cc.

`RemoteMethodMap & PLearn::SoftmaxLossVariable::_getRemoteMethodMap_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file SoftmaxLossVariable.cc.

`bool PLearn::SoftmaxLossVariable::_isa_ (const Object *o)` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file SoftmaxLossVariable.cc.

`Object * PLearn::SoftmaxLossVariable::_new_instance_for_typemap_ ()` [static]

Reimplemented from PLearn::Object.

Definition at line 56 of file SoftmaxLossVariable.cc.

`void PLearn::SoftmaxLossVariable::_static_initialize_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file SoftmaxLossVariable.cc.

`void PLearn::SoftmaxLossVariable::bbprop ()` [virtual]

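Computes an approximation to diag(d^2/dinput^2) given diag(d^2/doutput^2), with

$$\operatorname{diag}\!\left(\frac{\partial^2}{\partial\,\mathrm{input}^2}\right) \approx \left(\frac{\partial\,\mathrm{output}}{\partial\,\mathrm{input}}\right)^{\!\top} \operatorname{diag}\!\left(\frac{\partial^2}{\partial\,\mathrm{output}^2}\right) \frac{\partial\,\mathrm{output}}{\partial\,\mathrm{input}}$$

In particular, if $C$ depends on $y$ and $y$ depends on $x$ ...

$$\frac{\partial^2 C}{\partial x^2} = \underbrace{\frac{\partial^2 C}{\partial y^2}}_{\text{diaghessian}} \left(\frac{\partial y}{\partial x}\right)^2 + \underbrace{\frac{\partial C}{\partial y}}_{\text{gradient}} \frac{\partial^2 y}{\partial x^2}$$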
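Since bbprop() is not implemented for this class, the following is only a sketch of what the diagonal approximation above would reduce to here. It assumes the notation of fprop(): $x$ is the value of input1, $c$ the class index held by input2, and $v = p_c$ the scalar output. Because the output is a scalar, the Jacobian is a single row, and the approximation keeps only the diaghessian term of the exact formula:

$$\frac{\partial^2 C}{\partial x_i^2} \approx \frac{\partial^2 C}{\partial v^2}\left(\frac{\partial v}{\partial x_i}\right)^{2}, \qquad \frac{\partial v}{\partial x_i} = \begin{cases} v\,(1-v), & i = c,\\ -v^{2}\,e^{x_i - x_c}, & i \neq c. \end{cases}$$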

Reimplemented from PLearn::Variable.

Definition at line 108 of file SoftmaxLossVariable.cc.

References PLERROR.

```cpp
{
    PLERROR("SoftmaxLossVariable::bbprop() not implemented");
}
```

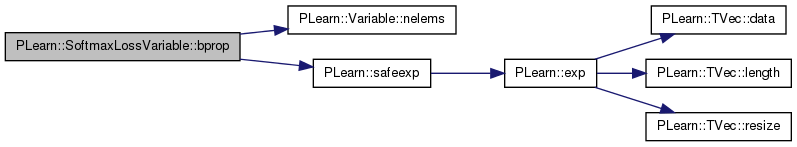

`void PLearn::SoftmaxLossVariable::bprop ()` [virtual]

Implements PLearn::Variable.

Definition at line 92 of file SoftmaxLossVariable.cc.

References PLearn::Variable::gradientdata, i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Variable::nelems(), PLearn::safeexp(), and PLearn::Variable::valuedata.

```cpp
{
    // input2 holds the target class index c; the output is the scalar p_c.
    int classnum = (int)input2->valuedata[0];
    real input_index = input1->valuedata[classnum];
    real vali = valuedata[0];
    for(int i=0; i<input1->nelems(); i++)
    {
        // The output is 1x1, so its only gradient entry is gradientdata[0].
        // Gradients are accumulated (+=) into input1.
        if (i!=classnum)
            // d p_c / d x_i = -p_c^2 * exp(x_i - x_c) = -p_c * p_i
            input1->gradientdata[i] += -gradientdata[0]*vali*vali*safeexp(input1->valuedata[i]-input_index);
        else
            // d p_c / d x_c = p_c * (1 - p_c)
            input1->gradientdata[i] += gradientdata[0]*vali*(1.-vali);
    }
}
```
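To check the derivatives that bprop() relies on, here is a minimal standalone C++ sketch. It assumes `std::vector<double>` and `double` in place of PLearn's Variable and `real`, uses `std::exp` instead of `safeexp()`, and the helper names (`softmax_loss_fprop`, `softmax_loss_bprop`, `softmax_loss_grad`, `softmax_loss_fd`) are hypothetical. The scalar output p_c has a single gradient entry g; g times dp_c/dx_i is accumulated into the input gradient and compared against a central finite difference.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Forward value, as in fprop(): p_c = 1 / sum_i exp(x_i - x_c).
double softmax_loss_fprop(const std::vector<double>& x, std::size_t c) {
    double sum = 0.0;
    for (double xi : x)
        sum += std::exp(xi - x[c]);
    return 1.0 / sum;
}

// Accumulates g * dp_c/dx_i into grad, using the same case split as bprop():
//   i != c: dp_c/dx_i = -p_c^2 * exp(x_i - x_c)   (= -p_c * p_i)
//   i == c: dp_c/dx_c =  p_c * (1 - p_c)
void softmax_loss_bprop(const std::vector<double>& x, std::size_t c,
                        double g, std::vector<double>& grad) {
    const double v = softmax_loss_fprop(x, c);
    for (std::size_t i = 0; i < x.size(); ++i) {
        if (i != c)
            grad[i] += -g * v * v * std::exp(x[i] - x[c]);
        else
            grad[i] += g * v * (1.0 - v);
    }
}

// Analytic gradient of p_c for an output gradient g = 1.
std::vector<double> softmax_loss_grad(const std::vector<double>& x, std::size_t c) {
    std::vector<double> grad(x.size(), 0.0);
    softmax_loss_bprop(x, c, 1.0, grad);
    return grad;
}

// Central finite-difference estimate of dp_c/dx_i, used as a reference.
double softmax_loss_fd(std::vector<double> x, std::size_t c, std::size_t i) {
    const double h = 1e-6;
    x[i] += h;
    const double up = softmax_loss_fprop(x, c);
    x[i] -= 2.0 * h;
    const double down = softmax_loss_fprop(x, c);
    return (up - down) / (2.0 * h);
}
```

The gradient components sum to zero, because adding the same constant to every x_i leaves p_c unchanged.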

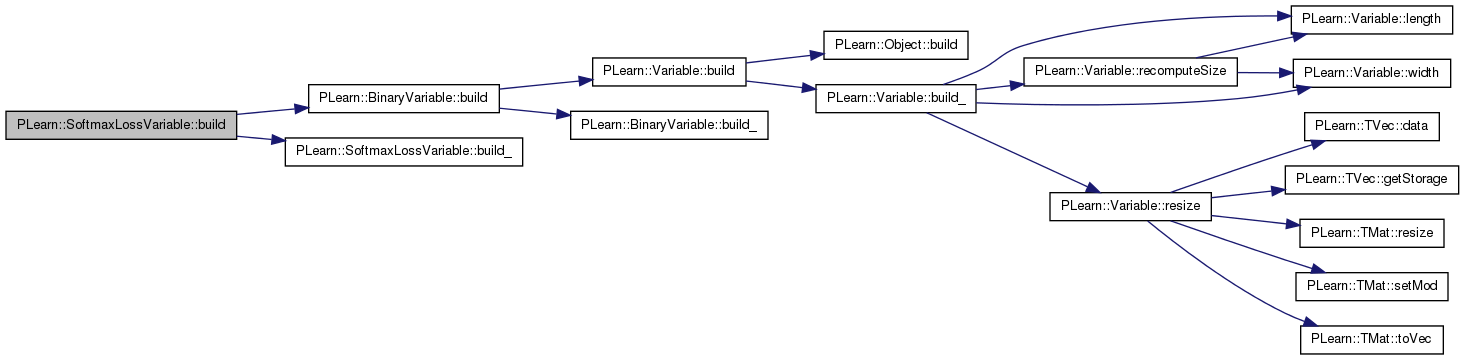

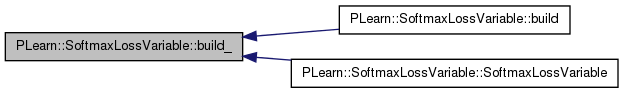

`void PLearn::SoftmaxLossVariable::build ()` [virtual]

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::BinaryVariable.

Definition at line 65 of file SoftmaxLossVariable.cc.

References PLearn::BinaryVariable::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```
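The build()/build_() convention can be illustrated outside PLearn with two hypothetical classes, `BaseObject` and `DerivedObject` (not PLearn types). In this sketch each class keeps its own non-virtual build_(), and the virtual build() first calls inherited::build() and then the class's own build_(), so each level of the hierarchy does its part once, from the base down.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for a PLearn base class (not a PLearn type).
// It records which build_() ran, so the call order can be inspected.
struct BaseObject {
    std::vector<std::string> log;
    virtual ~BaseObject() = default;
    // Post-constructor: runs this class's own building step.
    virtual void build() { build_(); }
protected:
    // Non-virtual: only does the building specific to BaseObject.
    void build_() { log.push_back("BaseObject::build_"); }
};

// Hypothetical derived class following the same pattern as
// SoftmaxLossVariable::build(): parent's build() first, then its own build_().
struct DerivedObject : BaseObject {
    typedef BaseObject inherited;
    void build() override {
        inherited::build();
        build_();
    }
protected:
    // Hides BaseObject::build_(); does only DerivedObject's part.
    void build_() { log.push_back("DerivedObject::build_"); }
};

// Builds a DerivedObject and returns the order in which build_() steps ran.
std::vector<std::string> derived_build_order() {
    DerivedObject obj;
    obj.build();
    return obj.log;
}
```

Because build_() is non-virtual, BaseObject::build() always runs BaseObject::build_(), even on a DerivedObject; the derived part runs only through DerivedObject::build().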

`void PLearn::SoftmaxLossVariable::build_ ()` [protected]

This does the actual building.

Reimplemented from PLearn::BinaryVariable.

Definition at line 72 of file SoftmaxLossVariable.cc.

References PLearn::BinaryVariable::input2, and PLERROR.

Referenced by build(), and SoftmaxLossVariable().

```cpp
{
    // input2 must be a single value: the index of the target class.
    if(input2 && !input2->isScalar())
        PLERROR("In SoftmaxLossVariable: input2 (the class index) must be a scalar");
}
```

`string PLearn::SoftmaxLossVariable::classname () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file SoftmaxLossVariable.cc.

`static const PPath& PLearn::SoftmaxLossVariable::declaringFile ()` [inline, static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 62 of file SoftmaxLossVariable.h.


`SoftmaxLossVariable * PLearn::SoftmaxLossVariable::deepCopy (CopiesMap &copies) const` [virtual]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file SoftmaxLossVariable.cc.

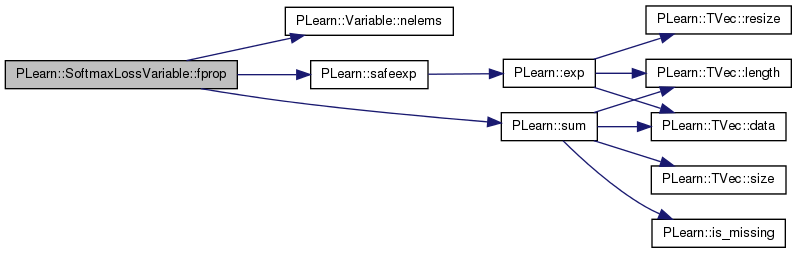

`void PLearn::SoftmaxLossVariable::fprop ()` [virtual]

compute output given input

Implements PLearn::Variable.

Definition at line 81 of file SoftmaxLossVariable.cc.

References i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Variable::nelems(), PLearn::safeexp(), PLearn::sum(), and PLearn::Variable::valuedata.

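```cpp
{
    // Target class index c, read from the scalar input2.
    int classnum = (int)input2->valuedata[0];
    real input_index = input1->valuedata[classnum];
    // Shift every exponent by x_c: the output is 1 / sum_i exp(x_i - x_c) = p_c.
    real sum=0;
    for(int i=0; i<input1->nelems(); i++)
        sum += safeexp(input1->valuedata[i]-input_index);
    valuedata[0] = 1.0/sum;
}
```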
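fprop() computes p_c = 1 / sum_i exp(x_i - x_c), which equals the softmax component exp(x_c) / sum_i exp(x_i); shifting every exponent by x_c keeps the i = c term at exactly 1. The sketch below compares the two forms in standalone C++. It assumes `std::vector<double>` in place of input1's values, uses `std::exp` rather than PLearn's `safeexp()`, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Mirrors fprop(): p_c = 1 / sum_i exp(x_i - x_c).
// The i == c term is exp(0) = 1, so the sum is at least 1.
double softmax_loss_fprop(const std::vector<double>& x, std::size_t c) {
    double sum = 0.0;
    for (double xi : x)
        sum += std::exp(xi - x[c]);
    return 1.0 / sum;
}

// Textbook softmax component exp(x_c) / sum_i exp(x_i), for comparison only.
double naive_softmax(const std::vector<double>& x, std::size_t c) {
    double sum = 0.0;
    for (double xi : x)
        sum += std::exp(xi);
    return std::exp(x[c]) / sum;
}
```

For ordinary inputs the two agree to rounding error. With x = {1000, 1000} and c = 0, the shifted form returns 0.5, while the naive form overflows to inf / inf and yields NaN.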

`OptionList & PLearn::SoftmaxLossVariable::getOptionList () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file SoftmaxLossVariable.cc.

`OptionMap & PLearn::SoftmaxLossVariable::getOptionMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file SoftmaxLossVariable.cc.

`RemoteMethodMap & PLearn::SoftmaxLossVariable::getRemoteMethodMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file SoftmaxLossVariable.cc.

`void PLearn::SoftmaxLossVariable::recomputeSize (int &l, int &w) const` [virtual]

Recomputes the length l and width w that this variable should have, according to its parent variables.

This is used, for example, by sizeprop(). The default version simply returns the current dimensions, so subclasses should override it when that is not appropriate.

Reimplemented from PLearn::Variable.

Definition at line 78 of file SoftmaxLossVariable.cc.

```cpp
{ l=1; w=1; }
```

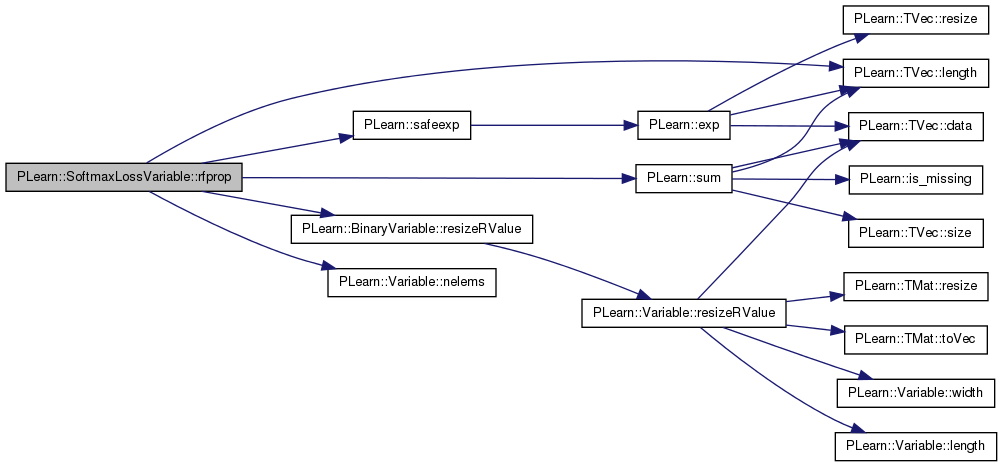

`void PLearn::SoftmaxLossVariable::rfprop ()` [virtual]

Reimplemented from PLearn::Variable.

Definition at line 123 of file SoftmaxLossVariable.cc.

References i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::TVec< T >::length(), PLearn::Variable::nelems(), PLearn::BinaryVariable::resizeRValue(), PLearn::Variable::rValue, PLearn::Variable::rvaluedata, PLearn::safeexp(), PLearn::sum(), and PLearn::Variable::valuedata.

```cpp
{
    if (rValue.length()==0) resizeRValue();
    int classnum = (int)input2->valuedata[0];
    real input_index = input1->valuedata[classnum];
    real vali = valuedata[0];
    real sum = 0;
    for(int i=0; i<input1->nelems(); i++)
    {
        real res = vali * input1->rvaluedata[i];
        if (i != classnum)
            sum -= res * vali * safeexp(input1->valuedata[i]-input_index);
        else
            sum += res * (1 - vali);
    }
    rvaluedata[0] = sum;
}
```
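rfprop() computes the directional derivative of the output along input1's rvaluedata, that is, sum_i (dp_c/dx_i) * r_i, with the same case split as bprop(). A standalone C++ sketch of that computation, checked against a central finite difference along r, might look as follows. It assumes `std::vector<double>` for x and r, uses `std::exp` instead of `safeexp()`, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Forward value, as in fprop(): p_c = 1 / sum_i exp(x_i - x_c).
double softmax_loss_fprop(const std::vector<double>& x, std::size_t c) {
    double sum = 0.0;
    for (double xi : x)
        sum += std::exp(xi - x[c]);
    return 1.0 / sum;
}

// Mirrors rfprop(): given a direction r (the role of input1->rvaluedata),
// returns the directional derivative sum_i (dp_c/dx_i) * r_i.
double softmax_loss_rfprop(const std::vector<double>& x, std::size_t c,
                           const std::vector<double>& r) {
    const double v = softmax_loss_fprop(x, c);
    double sum = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double res = v * r[i];
        if (i != c)
            sum -= res * v * std::exp(x[i] - x[c]);
        else
            sum += res * (1.0 - v);
    }
    return sum;
}

// Central finite difference of p_c along the direction r, as a reference.
double softmax_loss_directional_fd(const std::vector<double>& x, std::size_t c,
                                   const std::vector<double>& r) {
    const double h = 1e-6;
    std::vector<double> up(x), down(x);
    for (std::size_t i = 0; i < x.size(); ++i) {
        up[i] += h * r[i];
        down[i] -= h * r[i];
    }
    return (softmax_loss_fprop(up, c) - softmax_loss_fprop(down, c)) / (2.0 * h);
}
```

Along the all-ones direction the result is zero, since shifting every input by the same amount does not change p_c.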

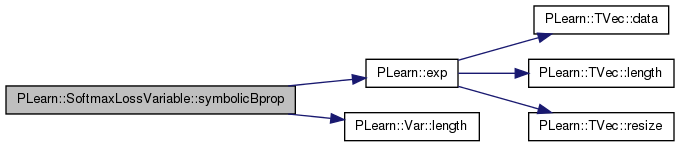

`void PLearn::SoftmaxLossVariable::symbolicBprop ()` [virtual]

compute a piece of new Var graph that represents the symbolic derivative of this Var

Reimplemented from PLearn::Variable.

Definition at line 114 of file SoftmaxLossVariable.cc.

References PLearn::exp(), PLearn::Variable::g, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Var::length(), and PLearn::Variable::Var.

```cpp
{
    Var gi = -g * Var(this) * Var(this) * exp(input1-input1(input2));
    Var gindex = new RowAtPositionVariable(g * Var(this), input2, input1->length());
    input1->accg(gi+gindex);
}
```
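symbolicBprop() builds the gradient in two pieces: gi applies -g * v^2 * exp(x_i - x_c) at every index, including c, and gindex places g * v at row c through RowAtPositionVariable. At i = c the exponential is exp(0) = 1, so the two pieces add up to g * v - g * v^2 = g * v * (1 - v), the same case split that bprop() uses. A numeric C++ sketch of that identity, assuming `std::vector<double>` in place of Var and using hypothetical function names:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Forward value, as in fprop(): p_c = 1 / sum_i exp(x_i - x_c).
double softmax_loss_fprop(const std::vector<double>& x, std::size_t c) {
    double sum = 0.0;
    for (double xi : x)
        sum += std::exp(xi - x[c]);
    return 1.0 / sum;
}

// Mirrors symbolicBprop(): gi = -g * v * v * exp(x_i - x_c) at every index
// (including c), then gindex adds g * v at index c only.
std::vector<double> symbolic_style_grad(const std::vector<double>& x,
                                        std::size_t c, double g) {
    const double v = softmax_loss_fprop(x, c);
    std::vector<double> grad(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        grad[i] = -g * v * v * std::exp(x[i] - x[c]);  // gi
    grad[c] += g * v;                                   // gindex
    return grad;
}

// Case-split formula used by bprop(), for comparison.
std::vector<double> case_split_grad(const std::vector<double>& x,
                                    std::size_t c, double g) {
    const double v = softmax_loss_fprop(x, c);
    std::vector<double> grad(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        grad[i] = (i == c) ? g * v * (1.0 - v)
                           : -g * v * v * std::exp(x[i] - x[c]);
    return grad;
}
```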

`StaticInitializer PLearn::SoftmaxLossVariable::_static_initializer_` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 62 of file SoftmaxLossVariable.h.

1.7.4
