PLearn 0.1

Cost for a weak learner in the MarginBoost version of AdaBoost: the cost of a weak learner used in the functional gradient descent view of boosting on a margin-based loss function.

#include <GradientAdaboostCostVariable.h>

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | GradientAdaboostCostVariable () | Default constructor for persistence. |
| | GradientAdaboostCostVariable (Variable *output, Variable *target) | |
| virtual string | classname () const | |
| virtual OptionList & | getOptionList () const | |
| virtual OptionMap & | getOptionMap () const | |
| virtual RemoteMethodMap & | getRemoteMethodMap () const | |
| virtual GradientAdaboostCostVariable * | deepCopy (CopiesMap &copies) const | |
| virtual void | build () | Post-constructor. |
| virtual void | recomputeSize (int &l, int &w) const | Recomputes the length l and width w that this variable should have, according to its parent variables. |
| virtual void | fprop () | Computes the output given the input. |
| virtual void | bprop () | |

Static Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| static string | _classname_ () | GradientAdaboostCostVariable. |
| static OptionList & | _getOptionList_ () | |
| static RemoteMethodMap & | _getRemoteMethodMap_ () | |
| static Object * | _new_instance_for_typemap_ () | |
| static bool | _isa_ (const Object *o) | |
| static void | _static_initialize_ () | |
| static const PPath & | declaringFile () | |
| static void | declareOptions (OptionList &ol) | Declare options (data fields) for the class. |

Static Public Attributes

| Type | Member | Description |
| --- | --- | --- |
| static StaticInitializer | _static_initializer_ | |

Protected Member Functions

| Type | Member | Description |
| --- | --- | --- |
| void | build_ () | This does the actual building. |

Protected Attributes

| Type | Member | Description |
| --- | --- | --- |
| real | margin | |

Private Types

| Type | Member | Description |
| --- | --- | --- |
| typedef BinaryVariable | inherited | |

Cost for a weak learner in the MarginBoost version of AdaBoost: the cost of a weak learner used in the functional gradient descent view of boosting on a margin-based loss function.

See "Functional Gradient Techniques for Combining Hypotheses" by Mason et al.

Definition at line 55 of file GradientAdaboostCostVariable.h.

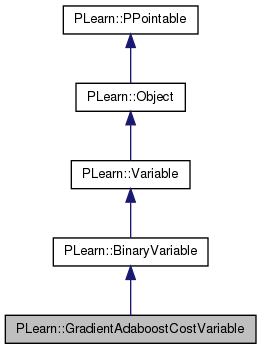
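Read together, the fprop() and bprop() bodies documented below compute the per-element cost and gradient summarized here. The symbols are introduced for this summary only: $o_i$ is input1->valuedata[i] (the weak learner's output), $t_i$ is input2->valuedata[i] (the target), and $g_i$ is the incoming gradientdata[i]. The $\pm 1$ rewriting through $y_i$ and $h_i$ is an interpretation that assumes outputs and targets encode labels as 0/1.

$$
\begin{aligned}
y_i &= 2t_i - 1, \qquad h_i = 2o_i - 1,\\
c_i &= -(2o_i - 1)(2t_i - 1) = -\,y_i h_i,\\
\frac{\partial c_i}{\partial o_i} &= -2\,(2t_i - 1) = -2\,y_i .
\end{aligned}
$$

bprop() adds $g_i \, \partial c_i / \partial o_i = -2\,g_i\,y_i$ to input1->gradientdata[i] and propagates nothing to the target. Under the $\pm 1$ reading, $c_i$ is the negated margin $y_i h_i$ of the weak learner on element $i$.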

typedef BinaryVariable PLearn::GradientAdaboostCostVariable::inherited [private]

Reimplemented from PLearn::BinaryVariable.

Definition at line 57 of file GradientAdaboostCostVariable.h.

PLearn::GradientAdaboostCostVariable::GradientAdaboostCostVariable() [inline]

Default constructor for persistence.

Definition at line 64 of file GradientAdaboostCostVariable.h.

{}

PLearn::GradientAdaboostCostVariable::GradientAdaboostCostVariable(Variable *output, Variable *target)

Definition at line 61 of file GradientAdaboostCostVariable.cc.

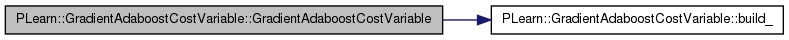

References build_().

string PLearn::GradientAdaboostCostVariable::_classname_() [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

OptionList & PLearn::GradientAdaboostCostVariable::_getOptionList_() [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

RemoteMethodMap & PLearn::GradientAdaboostCostVariable::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

bool PLearn::GradientAdaboostCostVariable::_isa_(const Object *o) [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

Object * PLearn::GradientAdaboostCostVariable::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::Object.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

void PLearn::GradientAdaboostCostVariable::_static_initialize_() [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

void PLearn::GradientAdaboostCostVariable::bprop() [virtual]

Implements PLearn::Variable.

Definition at line 105 of file GradientAdaboostCostVariable.cc.

References PLearn::Variable::gradientdata, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, and PLearn::Variable::length().

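```cpp
{
    // d(cost_i)/d(output_i) = -2 * (2*target_i - 1); the target (input2) receives no gradient.
    for(int i=0; i<length(); i++)
        input1->gradientdata[i] += (gradientdata[i])*-2*(2*input2->valuedata[i]-1);
}
```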

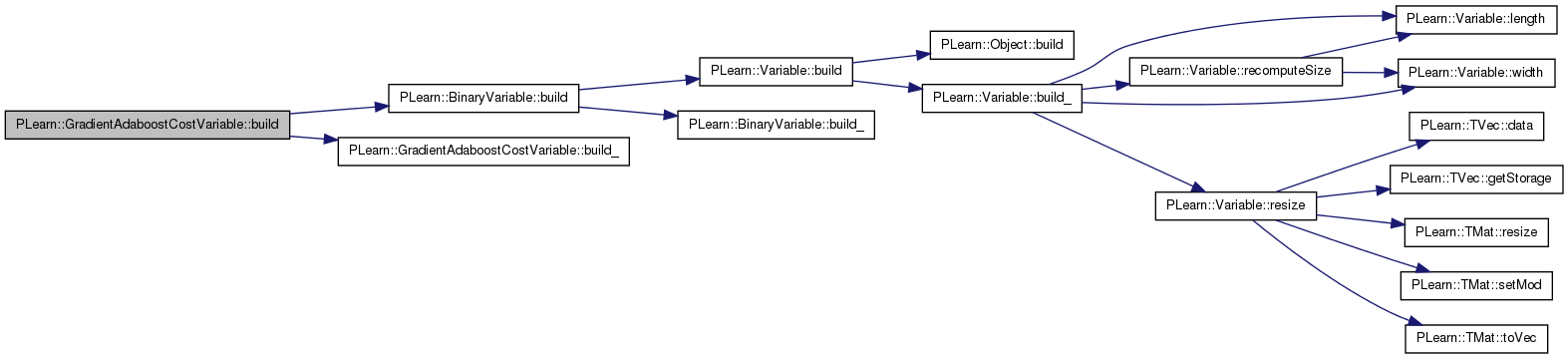
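Because the cost is linear in the output, the bprop() formula can be checked exactly against a finite difference. The sketch below reimplements the fprop() and bprop() loops on plain std::vector<double> buffers; the function names and the use of std::vector in place of PLearn Variables are assumptions made for illustration, not PLearn API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for fprop(): cost[i] = -(2*output[i]-1)*(2*target[i]-1).
std::vector<double> adaboostCost(const std::vector<double>& output,
                                 const std::vector<double>& target)
{
    assert(output.size() == target.size());
    std::vector<double> cost(output.size());
    for (std::size_t i = 0; i < output.size(); ++i)
        cost[i] = -1 * (2 * output[i] - 1) * (2 * target[i] - 1);
    return cost;
}

// Hypothetical stand-in for bprop(): adds d(cost)/d(output), scaled by the
// incoming gradient costGrad, into outputGrad. The target gets no gradient.
void adaboostCostBprop(const std::vector<double>& target,
                       const std::vector<double>& costGrad,
                       std::vector<double>& outputGrad)
{
    assert(target.size() == costGrad.size() && target.size() == outputGrad.size());
    for (std::size_t i = 0; i < target.size(); ++i)
        outputGrad[i] += costGrad[i] * -2 * (2 * target[i] - 1);
}

// Central finite difference of L(output) = sum_i costGrad[i] * cost[i]
// with respect to output[k]; it should match the analytic gradient.
double numericalGradient(std::vector<double> output,
                         const std::vector<double>& target,
                         const std::vector<double>& costGrad,
                         std::size_t k, double eps = 1e-6)
{
    auto loss = [&](const std::vector<double>& o) {
        std::vector<double> c = adaboostCost(o, target);
        double s = 0.0;
        for (std::size_t i = 0; i < c.size(); ++i)
            s += costGrad[i] * c[i];
        return s;
    };
    output[k] += eps;
    double up = loss(output);
    output[k] -= 2 * eps;
    double down = loss(output);
    return (up - down) / (2 * eps);
}
```

With output {0.9, 0.2, 0.5}, target {1, 0, 1} and incoming gradient {1, 0.5, -2}, adaboostCostBprop() accumulates {-2, 1, 4} into a zeroed gradient buffer, and numericalGradient() agrees to within rounding error.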

void PLearn::GradientAdaboostCostVariable::build() [virtual]

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::BinaryVariable.

Definition at line 68 of file GradientAdaboostCostVariable.cc.

References PLearn::BinaryVariable::build(), and build_().

```cpp
{
    inherited::build();  // build the BinaryVariable part first
    build_();            // then run this class's own checks
}
```

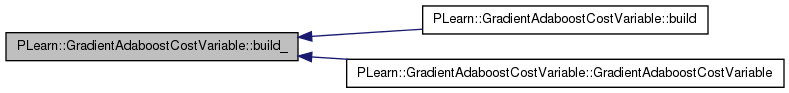
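The build()/build_() split described above can be illustrated with a toy two-class hierarchy that does not depend on PLearn; ToyBase, ToyDerived and the log member are hypothetical. As with GradientAdaboostCostVariable, the constructor calls only this class's build_(), while build() first rebuilds the parent and then runs build_(), so the whole sequence can be repeated after options change.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical toy base class, standing in for a parent such as BinaryVariable.
class ToyBase {
public:
    std::vector<std::string> log;  // records the order in which build steps run
    virtual ~ToyBase() {}
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("ToyBase::build_"); }
};

// Hypothetical derived class following the same pattern as GradientAdaboostCostVariable.
class ToyDerived : public ToyBase {
    typedef ToyBase inherited;  // same idiom as the 'inherited' typedef
public:
    ToyDerived() { build_(); }  // constructor runs only this class's build_()
    void build() override {
        inherited::build();     // first rebuild the parent part
        build_();               // then this class's own part
    }
private:
    void build_() { log.push_back("ToyDerived::build_"); }
};
```

Constructing a ToyDerived logs one step; each later call to build() logs "ToyBase::build_" followed by "ToyDerived::build_". Keeping build_() non-virtual ensures each class runs exactly its own part.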

void PLearn::GradientAdaboostCostVariable::build_() [protected]

This does the actual building.

Reimplemented from PLearn::BinaryVariable.

Definition at line 75 of file GradientAdaboostCostVariable.cc.

References PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, and PLERROR.

Referenced by build(), and GradientAdaboostCostVariable().

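```cpp
{
    // The target (input2) may be absent; if present, it must match the output (input1) in size.
    if (input2 && input2->size() != input1->size())
        PLERROR("In GradientAdaboostCostVariable: target and output should have same size");
}
```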
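Outside PLearn, the consistency check performed by build_() could be sketched as below. PLERROR is replaced by throwing std::runtime_error, and a null pointer plays the role of the missing input2 accepted by the `if (input2 && ...)` guard; the function name and the use of std::vector are assumptions for illustration.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for build_(): a missing target (null pointer) is
// accepted, but a target whose size differs from the output's is rejected.
void checkTargetSize(const std::vector<double>& output,
                     const std::vector<double>* target)
{
    if (target && target->size() != output.size())
        throw std::runtime_error(
            "In GradientAdaboostCostVariable: target and output should have same size");
}
```

A three-element output passes with no target or with a three-element target, and throws with a two-element target.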

string PLearn::GradientAdaboostCostVariable::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

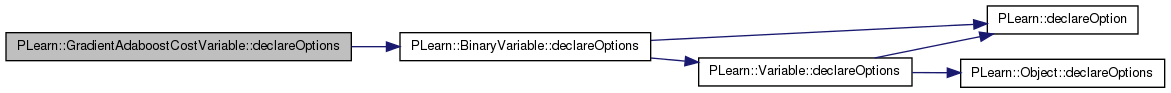

void PLearn::GradientAdaboostCostVariable::declareOptions(OptionList &ol) [static]

Declare options (data fields) for the class.

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(ol). Please call the inherited method AT THE END so that the options are listed in a consistent order (from most recently defined to least recently defined).

For example:

```cpp
void MyDerivedClass::declareOptions(OptionList& ol)
{
    declareOption(ol, "inputsize", &MyDerivedClass::inputsize_,
                  OptionBase::buildoption,
                  "The size of the input; it must be provided");
    declareOption(ol, "weights", &MyDerivedClass::weights,
                  OptionBase::learntoption,
                  "The learned model weights");
    // Declare the parent's options last, so the most recently defined ones come first.
    inherited::declareOptions(ol);
}
```

| Parameter | Description |
| --- | --- |
| ol | List of options that is progressively being constructed for the current class. |

Reimplemented from PLearn::BinaryVariable.

Definition at line 82 of file GradientAdaboostCostVariable.cc.

References PLearn::BinaryVariable::declareOptions().

```cpp
{
    // No options of its own: only forwards to BinaryVariable::declareOptions().
    inherited::declareOptions(ol);
}
```
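Why the inherited call goes at the end can be seen with a toy model in which an option list is just a vector of names. ToyOptionList, the Toy* classes and the option names are hypothetical and are not PLearn's OptionList API: each class appends its own options first and then delegates to its parent, so the most recently defined options come first.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for an option list: just the option names, in order.
typedef std::vector<std::string> ToyOptionList;

struct ToyObject {
    static void declareOptions(ToyOptionList& ol) { ol.push_back("object_option"); }
};

struct ToyVariable : ToyObject {
    typedef ToyObject inherited;
    static void declareOptions(ToyOptionList& ol) {
        ol.push_back("variable_option");  // this class's options first...
        inherited::declareOptions(ol);    // ...then the parent's, AT THE END
    }
};

struct ToyCostVariable : ToyVariable {
    typedef ToyVariable inherited;
    static void declareOptions(ToyOptionList& ol) {
        ol.push_back("cost_option");
        inherited::declareOptions(ol);
    }
};
```

Calling ToyCostVariable::declareOptions() on an empty list yields cost_option, variable_option, object_option, most recently defined first. GradientAdaboostCostVariable::declareOptions() itself declares no option and only forwards to BinaryVariable.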

static const PPath& PLearn::GradientAdaboostCostVariable::declaringFile() [inline, static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 67 of file GradientAdaboostCostVariable.h.


GradientAdaboostCostVariable * PLearn::GradientAdaboostCostVariable::deepCopy(CopiesMap &copies) const [virtual]

Reimplemented from PLearn::BinaryVariable.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

void PLearn::GradientAdaboostCostVariable::fprop() [virtual]

Computes the output given the input.

Implements PLearn::Variable.

Definition at line 96 of file GradientAdaboostCostVariable.cc.

References PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Variable::length(), and PLearn::Variable::valuedata.

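```cpp
{
    // cost_i = -(2*output_i - 1) * (2*target_i - 1), with output = input1 and target = input2
    for(int i=0; i<length(); i++)
        valuedata[i] = -1*(2*input1->valuedata[i]-1)*(2*input2->valuedata[i]-1);
}
```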
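When outputs and targets are hard 0/1 values, each fprop() cost is -1 for a correctly classified element and +1 for a misclassified one, i.e. $c_i = 2\cdot\mathbf{1}[o_i \ne t_i] - 1$. For per-element weights $w_i$ summing to 1, the weighted cost is therefore $2\varepsilon - 1$, where $\varepsilon$ is the weighted error, so minimizing one minimizes the other. The sketch below checks this numerically; the weights and helper names are hypothetical, since the class itself takes no weights.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Same formula as the fprop() loop, for a single element.
double elementCost(double output, double target)
{
    return -1 * (2 * output - 1) * (2 * target - 1);
}

// Hypothetical helper: sum_i w[i] * cost_i, with weights supplied by the caller.
double weightedCost(const std::vector<double>& output,
                    const std::vector<double>& target,
                    const std::vector<double>& w)
{
    assert(output.size() == target.size() && target.size() == w.size());
    double s = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i)
        s += w[i] * elementCost(output[i], target[i]);
    return s;
}

// Hypothetical helper: total weight of the elements where output != target.
double weightedError(const std::vector<double>& output,
                     const std::vector<double>& target,
                     const std::vector<double>& w)
{
    assert(output.size() == target.size() && target.size() == w.size());
    double e = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i)
        if (output[i] != target[i])
            e += w[i];
    return e;
}
```

With outputs {1, 0, 1, 0}, targets {1, 1, 0, 0} and weights {0.4, 0.1, 0.2, 0.3}, the weighted error is 0.3 and the weighted cost is -0.4, up to floating-point rounding.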

OptionList & PLearn::GradientAdaboostCostVariable::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

OptionMap & PLearn::GradientAdaboostCostVariable::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

RemoteMethodMap & PLearn::GradientAdaboostCostVariable::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 56 of file GradientAdaboostCostVariable.cc.

void PLearn::GradientAdaboostCostVariable::recomputeSize(int &l, int &w) const [virtual]

Recomputes the length l and width w that this variable should have, according to its parent variables.

This is used, for example, by sizeprop(). The default version simply returns the current dimensions, so make sure to override it in subclasses if this is not appropriate.

Reimplemented from PLearn::Variable.

Definition at line 90 of file GradientAdaboostCostVariable.cc.

References PLearn::BinaryVariable::input1.

```cpp
{
    l = input1->size();  // one row per element of the output (input1)
    w = 1;               // a single column
}
```
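recomputeSize() makes the cost a single column with one entry per element of input1 (the weak learner's output), which is also the number of iterations of the fprop() and bprop() loops. The sketch below mirrors that rule with a hypothetical Shape struct standing in for a variable's dimensions; it assumes, as the struct does, that size() counts all length * width elements.

```cpp
#include <cassert>

// Hypothetical stand-in for a variable's dimensions.
struct Shape {
    int length;
    int width;
    int size() const { return length * width; }  // total number of elements
};

// Mirrors recomputeSize(): l = input1->size(), w = 1.
void recomputeCostSize(const Shape& input1, int& l, int& w)
{
    l = input1.size();
    w = 1;
}
```

A 4 x 3 input therefore yields a 12 x 1 cost.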

StaticInitializer PLearn::GradientAdaboostCostVariable::_static_initializer_ [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 67 of file GradientAdaboostCostVariable.h.

real PLearn::GradientAdaboostCostVariable::margin [protected]

Definition at line 60 of file GradientAdaboostCostVariable.h.

1.7.4
