|

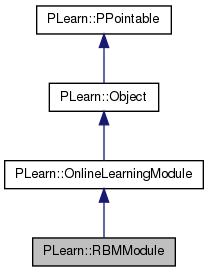

PLearn 0.1


An OnlineLearningModule implementing a Restricted Boltzmann Machine (RBM), accessed through named ports.

`#include <RBMModule.h>`

Public Member Functions | |

| RBMModule () | |

| Default constructor. | |

| virtual void | CDUpdate (const Mat &v_0, const Mat &h_0, const Mat &v_k, const Mat &h_k) |

| Perform one CD_k update, given the Markov chain statistics. | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| given the input, compute the output (possibly resize it appropriately) | |

| virtual void | forget () |

| Reset the parameters to the state they would be BEFORE starting training. | |

| virtual void | setLearningRate (real dynamic_learning_rate) |

| Throws an error (please use explicitely the two different kinds of learning rates available here). | |

| virtual void | fprop (const TVec< Mat * > &ports_value) |

| Overridden. | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| Overridden. | |

| virtual const TVec< string > & | getPorts () |

| Returns all ports in a RBMModule. | |

| virtual const TMat< int > & | getPortSizes () |

| The ports' sizes are given by the corresponding RBM layers. | |

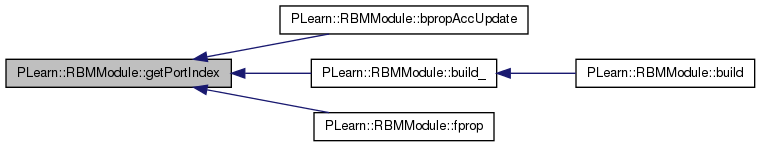

| virtual int | getPortIndex (const string &port) |

| Return the index (as in the list of ports returned by getPorts()) of a given port. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

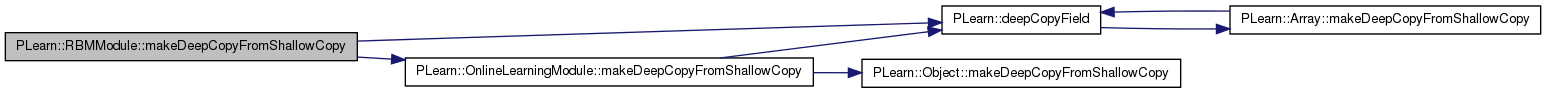

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| void | setAllLearningRates (real lr) |

| Forward the given learning rate to all elements of this module. | |

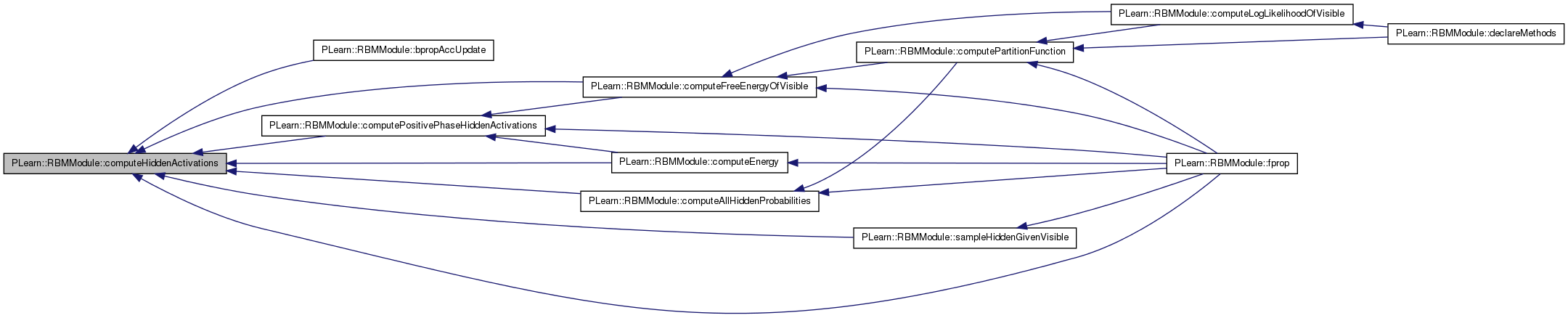

| void | computeHiddenActivations (const Mat &visible) |

| Compute activations on the hidden layer based on the provided visible input. | |

| void | computeVisibleActivations (const Mat &hidden, bool using_reconstruction_connection=false) |

| Compute activations on the visible layer. | |

| void | computePositivePhaseHiddenActivations (const Mat &visible) |

| Compute activations on the hidden layer based on the provided visible input during positive phase. | |

| void | sampleHiddenGivenVisible (const Mat &visible) |

| Sample hidden layer data based on the provided 'visible' inputs. | |

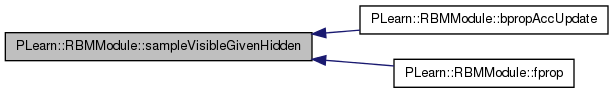

| void | sampleVisibleGivenHidden (const Mat &hidden) |

| Sample visible layer data based on the provided 'hidden' inputs. | |

| void | computeFreeEnergyOfVisible (const Mat &visible, Mat &energy, bool positive_phase=true) |

| Compute free energy on the visible layer and store it in the 'energy' matrix. | |

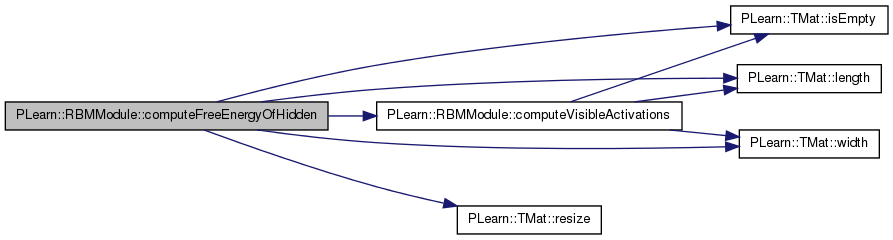

| void | computeFreeEnergyOfHidden (const Mat &hidden, Mat &energy) |

| Compute free energy on the hidden layer and store it in the 'energy' matrix. | |

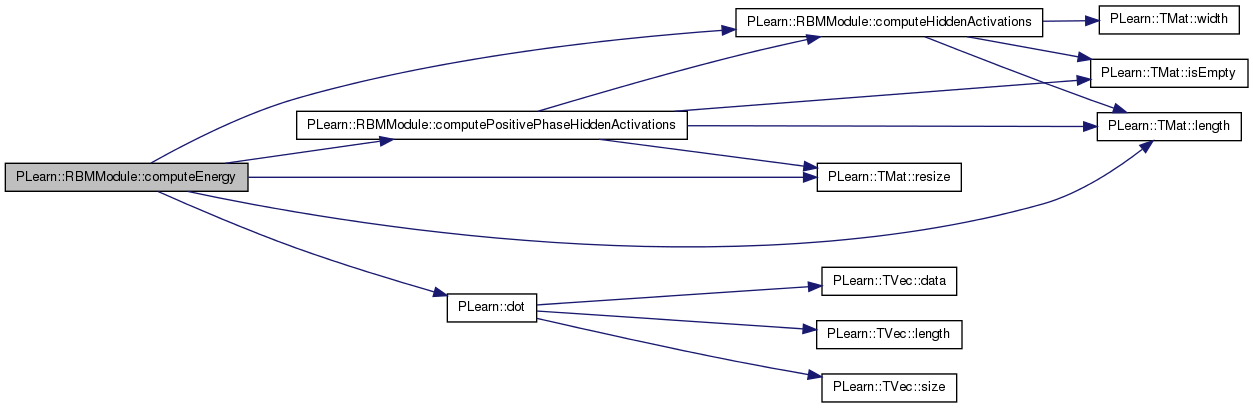

| void | computeEnergy (const Mat &visible, const Mat &hidden, Mat &energy, bool positive_phase=true) |

| Compute energy of the joint (visible, hidden) configuration and store it in the 'energy' matrix. | |

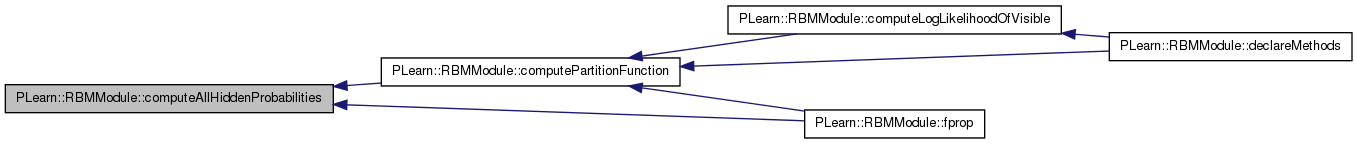

| void | computePartitionFunction () |

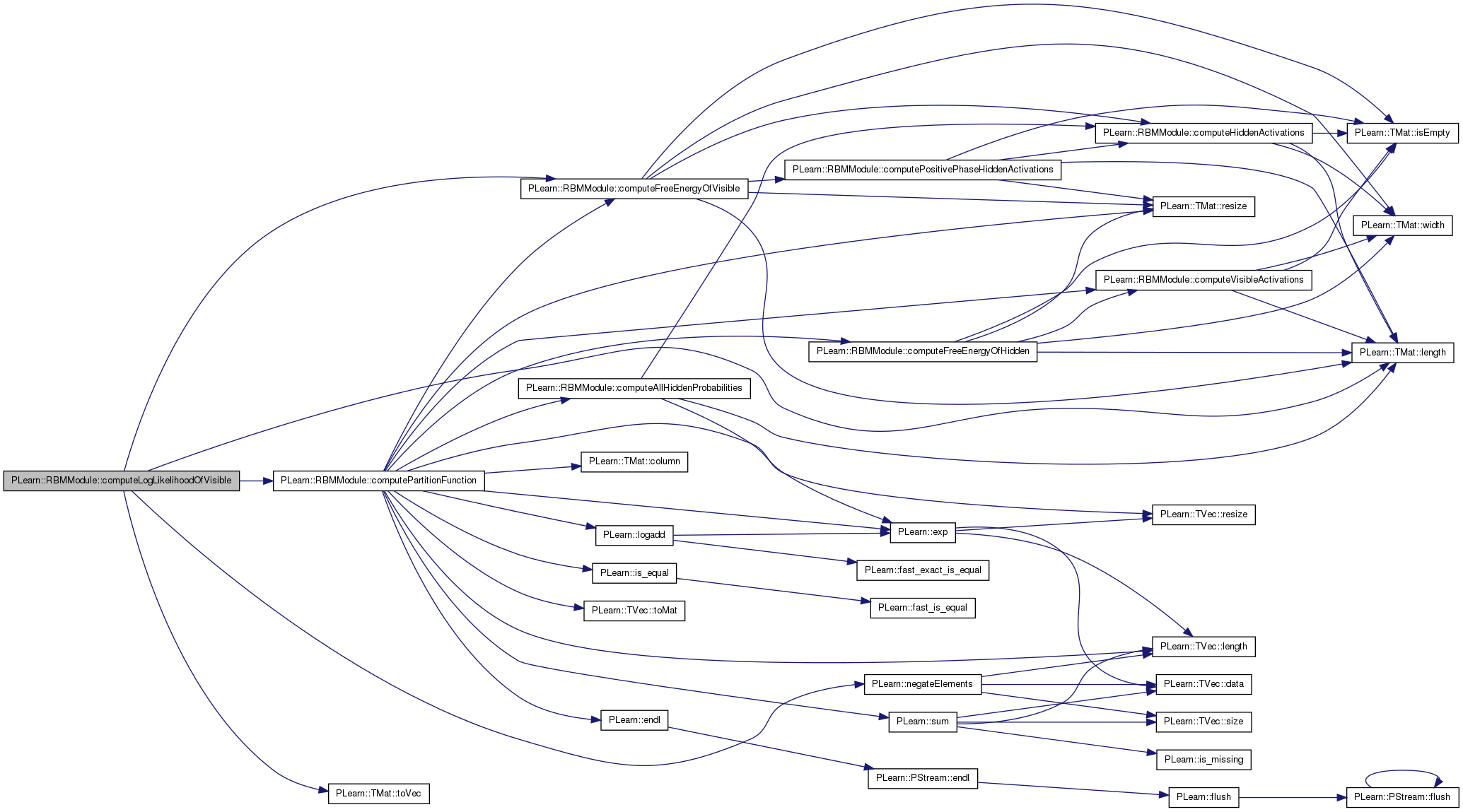

| Vec | computeLogLikelihoodOfVisible (const Mat &visible) |

| See remote documentation. | |

| void | computeAllHiddenProbabilities (const Mat &visible, const Mat &p_hidden) |

| Compute probabilities of all hidden configurations given some visible inputs. | |

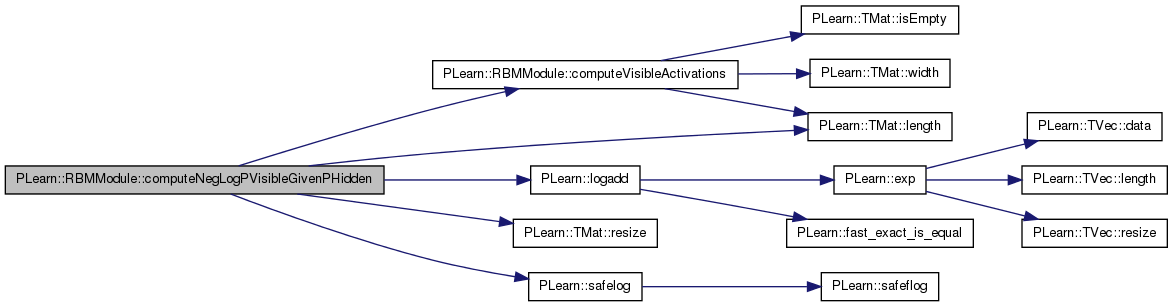

| void | computeNegLogPVisibleGivenPHidden (Mat visible, Mat hidden, Mat *neg_log_phidden, Mat &neg_log_pvisible_given_phidden) |
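The summary does not say how computePartitionFunction() or computeLogLikelihoodOfVisible() are implemented. The exact-likelihood path in bpropAccUpdate() enumerates every configuration of the smaller binomial layer, which is only feasible below 32 units. The following is a standalone sketch of that idea, not PLearn code. It assumes a binomial-binomial RBM with energy E(v,h) = -b'v - c'h - h'Wv, uses plain `std::vector` in place of PLearn's Vec/Mat, sums over visible configurations only, and its function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden unit i, visible unit j

// Numerically stable log(1 + exp(x)).
double softplus(double x) {
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// F(v) = -b'v - sum_i softplus(c_i + W_i v) for a binomial-binomial RBM.
double freeEnergy(const Mat& W, const Vec& b, const Vec& c, const Vec& v) {
    double fe = 0.0;
    for (std::size_t j = 0; j < v.size(); ++j) fe -= b[j] * v[j];
    for (std::size_t i = 0; i < c.size(); ++i) {
        double a = c[i];
        for (std::size_t j = 0; j < v.size(); ++j) a += W[i][j] * v[j];
        fe -= softplus(a);
    }
    return fe;
}

// log Z = log sum_v exp(-F(v)) over all 2^n binary visible vectors,
// accumulated with a running (online) log-sum-exp.
double logPartitionFunction(const Mat& W, const Vec& b, const Vec& c) {
    const int n = static_cast<int>(b.size());
    assert(n < 32);  // same size limit as the exact-likelihood code path
    double m = -std::numeric_limits<double>::infinity();  // running max
    double s = 0.0;  // running sum of exp(-F(v) - m)
    Vec v(n);
    for (unsigned long conf = 0; conf < (1UL << n); ++conf) {
        unsigned long x = conf;
        for (int j = 0; j < n; ++j) {  // decode the bits of 'conf' into v
            v[j] = static_cast<double>(x & 1UL);
            x >>= 1;
        }
        double a = -freeEnergy(W, b, c, v);
        if (a > m) { s = s * std::exp(m - a) + 1.0; m = a; }
        else       { s += std::exp(a - m); }
    }
    return m + std::log(s);
}

// log P(v) = -F(v) - log Z
double logLikelihood(const Mat& W, const Vec& b, const Vec& c, const Vec& v) {
    return -freeEnergy(W, b, c, v) - logPartitionFunction(W, b, c);
}
```

Summing over visible configurations works because the hidden units can be marginalized in closed form: each hidden unit contributes one softplus term to F(v). When the hidden layer is the smaller one, the symmetric formula over hidden configurations would be the cheaper choice, which is what the `else` branch of the exact-likelihood code in bpropAccUpdate() does.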

Static Public Member Functions

| Return type | Member | Description |
|---|---|---|
| `static string` | `_classname_ ()` | |
| `static OptionList &` | `_getOptionList_ ()` | |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_ ()` | |
| `static Object *` | `_new_instance_for_typemap_ ()` | |
| `static bool` | `_isa_ (const Object *o)` | |
| `static void` | `_static_initialize_ ()` | |
| `static const PPath &` | `declaringFile ()` | |

Public Attributes

| Type | Member | Description |
|---|---|---|
| `PP< RBMLayer >` | `hidden_layer` | |
| `PP< RBMLayer >` | `visible_layer` | |
| `PP< RBMConnection >` | `connection` | |
| `PP< RBMConnection >` | `reconstruction_connection` | |
| `real` | `cd_learning_rate` | |
| `real` | `grad_learning_rate` | |
| `bool` | `tied_connection_weights` | |
| `bool` | `compute_contrastive_divergence` | |
| `bool` | `compare_true_gradient_with_cd` | |
| `int` | `n_steps_compare` | |
| `int` | `n_Gibbs_steps_CD` | Number of Gibbs sampling steps in the negative phase of contrastive divergence. |
| `int` | `min_n_Gibbs_steps` | Used to generate samples from the RBM. |
| `int` | `n_Gibbs_steps_per_generated_sample` | |
| `bool` | `compute_log_likelihood` | |
| `bool` | `minimize_log_likelihood` | |
| `int` | `Gibbs_step` | Used to generate samples from the RBM. |
| `real` | `log_partition_function` | |
| `bool` | `partition_function_is_stale` | |
| `bool` | `deterministic_reconstruction_in_cd` | |
| `bool` | `stochastic_reconstruction` | |
| `bool` | `standard_cd_grad` | |
| `bool` | `standard_cd_bias_grad` | |
| `bool` | `standard_cd_weights_grad` | |
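The options n_Gibbs_steps_CD, deterministic_reconstruction_in_cd and cd_learning_rate drive the contrastive divergence step in bpropAccUpdate(): starting from the positive-phase hidden expectations, hidden units are sampled, the visible layer is reconstructed (sampled, or set to its expectations when deterministic_reconstruction_in_cd is true), and the hidden expectations are recomputed, n_Gibbs_steps_CD times. As an illustration only, here is a standalone sketch of one CD-k step for a single example in a binomial-binomial RBM. It uses `std::vector` instead of PLearn's Vec/Mat and the textbook update (positive statistics minus negative statistics); the function names are hypothetical, and PLearn's mini-batch averaging and per-layer learning rates are not reproduced.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden unit i, visible unit j

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// p(h_i = 1 | v) = sigmoid(c_i + W_i v)
Vec hiddenExpectations(const Mat& W, const Vec& c, const Vec& v) {
    Vec p(c.size());
    for (std::size_t i = 0; i < c.size(); ++i) {
        double a = c[i];
        for (std::size_t j = 0; j < v.size(); ++j) a += W[i][j] * v[j];
        p[i] = sigmoid(a);
    }
    return p;
}

// p(v_j = 1 | h) = sigmoid(b_j + sum_i h_i W_ij)
Vec visibleExpectations(const Mat& W, const Vec& b, const Vec& h) {
    Vec p(b.size());
    for (std::size_t j = 0; j < b.size(); ++j) {
        double a = b[j];
        for (std::size_t i = 0; i < h.size(); ++i) a += h[i] * W[i][j];
        p[j] = sigmoid(a);
    }
    return p;
}

// Draw one binary sample per unit from its Bernoulli probability.
Vec sample(const Vec& p, std::mt19937& rng) {
    Vec s(p.size());
    for (std::size_t k = 0; k < p.size(); ++k)
        s[k] = std::bernoulli_distribution(p[k])(rng) ? 1.0 : 0.0;
    return s;
}

// Negative phase: starting from the positive hidden expectations h0, run k
// Gibbs steps. If 'deterministic' is set, the visible layer keeps its
// expectations instead of being sampled.
void negativePhase(const Mat& W, const Vec& b, const Vec& c, const Vec& h0,
                   int k, bool deterministic, std::mt19937& rng,
                   Vec& vk, Vec& hk) {
    assert(k >= 1);
    hk = h0;
    for (int step = 0; step < k; ++step) {
        Vec h_sample = sample(hk, rng);
        Vec pv = visibleExpectations(W, b, h_sample);
        vk = deterministic ? pv : sample(pv, rng);
        hk = hiddenExpectations(W, c, vk);
    }
}

// CD update: W += lr (h0 v0' - hk vk'), b += lr (v0 - vk), c += lr (h0 - hk).
void cdUpdate(Mat& W, Vec& b, Vec& c, const Vec& v0, const Vec& h0,
              const Vec& vk, const Vec& hk, double lr) {
    for (std::size_t i = 0; i < c.size(); ++i) {
        for (std::size_t j = 0; j < b.size(); ++j)
            W[i][j] += lr * (h0[i] * v0[j] - hk[i] * vk[j]);
        c[i] += lr * (h0[i] - hk[i]);
    }
    for (std::size_t j = 0; j < b.size(); ++j) b[j] += lr * (v0[j] - vk[j]);
}
```

A typical call computes `h0 = hiddenExpectations(W, c, v0)`, runs `negativePhase(W, b, c, h0, k, false, rng, vk, hk)`, then applies `cdUpdate(W, b, c, v0, h0, vk, hk, lr)`. Keeping the chain and the update separate mirrors the split between generating negative-phase statistics and the `update()` calls on the layers and connection.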

Static Public Attributes

| Type | Member | Description |
|---|---|---|
| `static StaticInitializer` | `_static_initializer_` | |

Protected Member Functions

| Return type | Member | Description |
|---|---|---|
| `void` | `addPortName (const string &name)` | Add a new port to the 'portname_to_index' map and 'ports' vector. |
| `void` | `setLearningRatesOnlyForLayers (real lr)` | Forward the given learning rate to all elements of the layers and to the reconstruction connections (NOT to the connection weights). |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

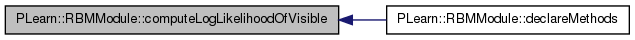

| static void | declareMethods (RemoteMethodMap &rmm) |

| Declare the methods that are remote-callable. | |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| `Mat *` | `hidden_bias` | |
| `Mat *` | `weights` | |
| `Mat` | `hidden_exp_grad` | Used to store the gradient w.r.t. expectations of the hidden layer. |
| `Mat` | `hidden_act_grad` | Used to store the gradient w.r.t. activations of the hidden layer. |
| `Mat` | `visible_exp_grad` | Used to store the gradient w.r.t. expectations of the visible layer. |
| `Mat` | `visible_act_grad` | Used to store the gradient w.r.t. activations of the visible layer. |
| `Vec` | `visible_bias_grad` | Used to store the gradient w.r.t. the bias of the visible layer. |
| `Mat` | `hidden_exp_store` | Used to cache the hidden layer expectations and activations. |
| `Mat` | `hidden_act_store` | |
| `Mat *` | `hidden_act` | |
| `bool` | `hidden_activations_are_computed` | |
| `bool` | `hidden_is_output` | |
| `Mat` | `store_weights_grad` | Used to store the contrastive divergence gradient w.r.t. weights. |
| `Mat` | `store_hidden_bias_grad` | Used to store the contrastive divergence gradient w.r.t. the hidden bias. |
| `TVec< string >` | `ports` | List of port names. |
| `map< string, int >` | `portname_to_index` | Map from a port name to its index in the 'ports' vector. |
| `Mat` | `energy_inputs` | Used to store inputs generated to compute the free energy. |
| `Vec` | `all_p_visible` | P(x) for all possible configurations x of the visible layer. |
| `Mat` | `all_hidden_cond_prob` | Used to store P(h\|x) for all values of h and all values of x. |
| `Mat` | `all_visible_cond_prob` | Used to store P(x\|h) for all values of h and all values of x. |
| `Mat` | `p_ht_given_x` | Used to store P(h_t\|x) for all values of h_t and some values of x. |
| `Mat` | `p_xt_given_x` | Used to store P(x_t\|x) for all values of x_t and some values of x. |
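The table says that all_hidden_cond_prob holds P(h|x) for every hidden configuration h, but not how it is filled. For a binomial hidden layer, the hidden units are conditionally independent given x, so P(h|x) is a product of per-unit Bernoulli probabilities. The following standalone sketch assumes binomial hidden units and takes the per-unit probabilities p_i = P(h_i = 1 | x) as input; the function name is hypothetical and `std::vector` stands in for PLearn's Vec. Configurations are indexed by an integer whose bit i gives h_i, as in the enumeration loops of bpropAccUpdate().

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Given p_i = P(h_i = 1 | x) for a binomial hidden layer, return P(h | x)
// for all 2^n configurations h. Since the units are conditionally
// independent, P(h | x) = prod_i p_i^h_i (1 - p_i)^(1 - h_i).
// Configuration 'conf' is decoded bit by bit: bit i gives h_i.
Vec allHiddenConfigurationProbabilities(const Vec& p) {
    const std::size_t n = p.size();
    assert(n < 32);  // 2^n configurations must stay enumerable
    Vec probs(std::size_t(1) << n);
    for (std::size_t conf = 0; conf < probs.size(); ++conf) {
        double prob = 1.0;
        for (std::size_t i = 0; i < n; ++i)
            prob *= ((conf >> i) & 1) ? p[i] : 1.0 - p[i];
        probs[conf] = prob;
    }
    return probs;
}
```

Applying this to each row of a matrix of hidden expectations would give one row of P(h|x) per input x.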

Private Types

| Type | Member | Description |
|---|---|---|
| `typedef OnlineLearningModule` | `inherited` | |

Private Member Functions

| Return type | Member | Description |
|---|---|---|
| `void` | `build_ ()` | This does the actual building. |

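RBMModule is an OnlineLearningModule built from a visible RBMLayer, a hidden RBMLayer and an RBMConnection between them, plus an optional reconstruction_connection. Values and gradients are exchanged through named ports such as "visible", "hidden.state", "weights" and "energy".

The module can be trained by contrastive divergence (step size cd_learning_rate), by back-propagating the gradients received on its ports (step size grad_learning_rate), or, when minimize_log_likelihood is set and one of the two binomial layers has fewer than 32 units, by exact log-likelihood minimization.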

Definition at line 60 of file RBMModule.h.

`typedef OnlineLearningModule PLearn::RBMModule::inherited` [private]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 62 of file RBMModule.h.

`PLearn::RBMModule::RBMModule ()`

Default constructor.

Definition at line 136 of file RBMModule.cc.

```cpp
  : cd_learning_rate(0),
    grad_learning_rate(0),
    tied_connection_weights(false),
    compute_contrastive_divergence(false),
    compare_true_gradient_with_cd(false),
    n_steps_compare(1),
    n_Gibbs_steps_CD(1),
    min_n_Gibbs_steps(1),
    n_Gibbs_steps_per_generated_sample(-1),
    compute_log_likelihood(false),
    minimize_log_likelihood(false),
    Gibbs_step(0),
    log_partition_function(0),
    partition_function_is_stale(true),
    deterministic_reconstruction_in_cd(false),
    stochastic_reconstruction(false),
    standard_cd_grad(true),
    standard_cd_bias_grad(true),
    standard_cd_weights_grad(true),
    hidden_bias(NULL),
    weights(NULL),
    hidden_act(NULL),
    hidden_activations_are_computed(false)
{
}
```

`string PLearn::RBMModule::_classname_ ()` [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 131 of file RBMModule.cc.

`OptionList & PLearn::RBMModule::_getOptionList_ ()` [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 131 of file RBMModule.cc.

`RemoteMethodMap & PLearn::RBMModule::_getRemoteMethodMap_ ()` [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 131 of file RBMModule.cc.

`bool PLearn::RBMModule::_isa_ (const Object *o)` [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 131 of file RBMModule.cc.

`Object * PLearn::RBMModule::_new_instance_for_typemap_ ()` [static]

Reimplemented from PLearn::Object.

Definition at line 131 of file RBMModule.cc.

`void PLearn::RBMModule::_static_initialize_ ()` [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 131 of file RBMModule.cc.

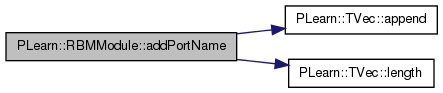

`void PLearn::RBMModule::addPortName (const string &name)` [protected]

Add a new port to the 'portname_to_index' map and 'ports' vector.

Definition at line 507 of file RBMModule.cc.

References PLearn::TVec< T >::append(), PLearn::TVec< T >::length(), PLearn::OnlineLearningModule::name, PLASSERT, portname_to_index, and ports.

Referenced by build_().

```cpp
{
    PLASSERT( portname_to_index.find(name) == portname_to_index.end() );
    portname_to_index[name] = ports.length();
    ports.append(name);
}
```
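addPortName() keeps two structures in sync: the ordered `ports` list, which defines port indices, and the `portname_to_index` map, which makes getPortIndex() a lookup instead of a linear search. A minimal standalone sketch of that bookkeeping with standard containers follows; the class name `PortRegistry` is hypothetical, and returning -1 for an unknown port is an assumption of this sketch, not documented behavior of getPortIndex().

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for RBMModule's port bookkeeping: an ordered list
// of port names plus a name -> index map.
class PortRegistry {
public:
    // Mirrors addPortName(): a name may be registered only once.
    void addPortName(const std::string& name) {
        assert(portname_to_index.find(name) == portname_to_index.end());
        portname_to_index[name] = static_cast<int>(ports.size());
        ports.push_back(name);
    }

    // Index of 'port' in getPorts(); -1 if unknown (assumption of this sketch).
    int getPortIndex(const std::string& port) const {
        auto it = portname_to_index.find(port);
        return it == portname_to_index.end() ? -1 : it->second;
    }

    const std::vector<std::string>& getPorts() const { return ports; }

private:
    std::vector<std::string> ports;
    std::map<std::string, int> portname_to_index;
};
```

Registering "visible" and then "hidden.state" gives them indices 0 and 1, which is how bpropAccUpdate() locates entries in `ports_value` and `ports_gradient`.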

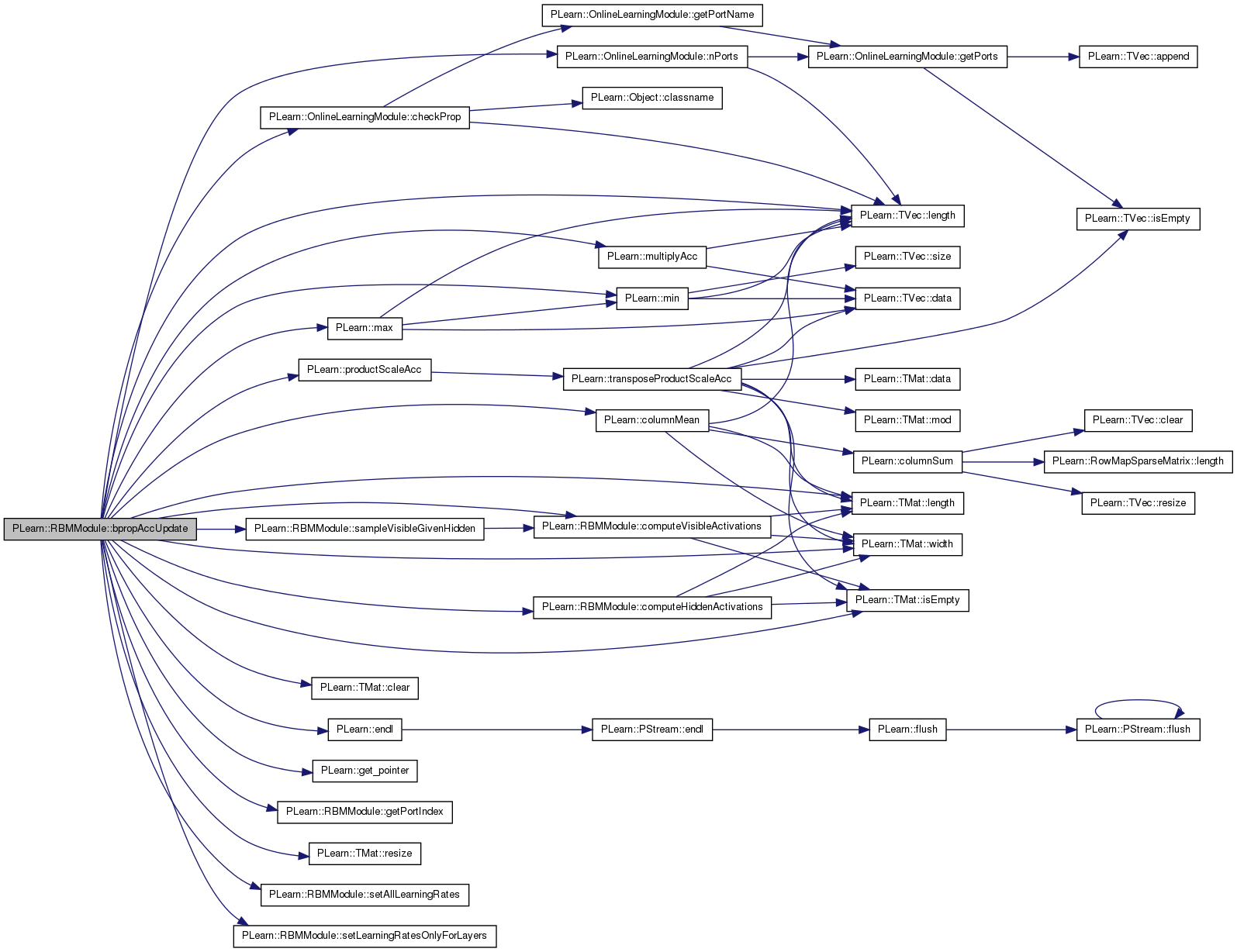

`void PLearn::RBMModule::bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient)` [virtual]

Overridden.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 1892 of file RBMModule.cc.

References c, cd_learning_rate, PLearn::OnlineLearningModule::checkProp(), PLearn::TMat< T >::clear(), PLearn::columnMean(), compute_contrastive_divergence, computeHiddenActivations(), computeVisibleActivations(), connection, deterministic_reconstruction_in_cd, PLearn::endl(), energy_inputs, PLearn::get_pointer(), getPortIndex(), grad_learning_rate, hidden_act, hidden_act_grad, hidden_bias, hidden_exp_grad, hidden_is_output, hidden_layer, i, PLearn::TMat< T >::isEmpty(), j, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::max(), PLearn::min(), minimize_log_likelihood, PLearn::multiplyAcc(), n_Gibbs_steps_CD, PLearn::OnlineLearningModule::name, PLearn::OnlineLearningModule::nPorts(), partition_function_is_stale, PLASSERT, PLASSERT_MSG, PLCHECK_MSG, PLERROR, PLearn::productScaleAcc(), reconstruction_connection, PLearn::TMat< T >::resize(), sampleVisibleGivenHidden(), setAllLearningRates(), setLearningRatesOnlyForLayers(), standard_cd_bias_grad, standard_cd_grad, standard_cd_weights_grad, store_hidden_bias_grad, store_weights_grad, tied_connection_weights, visible_act_grad, visible_bias_grad, visible_exp_grad, visible_layer, w, weights, PLearn::TMat< T >::width(), and x.

{

PLASSERT( ports_value.length() == nPorts() );

PLASSERT( ports_gradient.length() == nPorts() );

Mat* visible = ports_value[getPortIndex("visible")];

Mat* visible_grad = ports_gradient[getPortIndex("visible")];

Mat* hidden_grad = ports_gradient[getPortIndex("hidden.state")];

Mat* hidden_activations_grad =

ports_gradient[getPortIndex("hidden_activations.state")];

Mat* hidden = ports_value[getPortIndex("hidden.state")];

hidden_act = ports_value[getPortIndex("hidden_activations.state")];

Mat* visible_activations = ports_value[getPortIndex("visible_activations.state")];

Mat* reconstruction_error_grad = 0;

Mat* hidden_bias_grad = ports_gradient[getPortIndex("hidden_bias")];

weights = ports_value[getPortIndex("weights")];

Mat* weights_grad = ports_gradient[getPortIndex("weights")];

hidden_bias = ports_value[getPortIndex("hidden_bias")];

Mat* energy_grad = ports_gradient[getPortIndex("energy")];

Mat* contrastive_divergence_grad = NULL;

Mat* contrastive_divergence = NULL;

if (compute_contrastive_divergence)

contrastive_divergence = ports_value[getPortIndex("contrastive_divergence")];

bool computed_contrastive_divergence = compute_contrastive_divergence &&

contrastive_divergence && !contrastive_divergence->isEmpty();

// Ensure the gradient w.r.t. contrastive divergence is 1 (if provided).

if (computed_contrastive_divergence) {

contrastive_divergence_grad =

ports_gradient[getPortIndex("contrastive_divergence")];

if (contrastive_divergence_grad) {

PLASSERT( !contrastive_divergence_grad->isEmpty() );

PLASSERT( min(*contrastive_divergence_grad) >= 1 );

PLASSERT( max(*contrastive_divergence_grad) <= 1 );

}

}

if(reconstruction_connection)

reconstruction_error_grad =

ports_gradient[getPortIndex("reconstruction_error.state")];

// Ensure the visible gradient is not provided as input. This is because we

// accumulate more than once in 'visible_grad'.

// PLASSERT_MSG( !visible_grad || visible_grad->isEmpty(), "If visible gradient is desired "

// " the corresponding matrix should have 0 length" );

bool compute_visible_grad = visible_grad && visible_grad->isEmpty();

bool compute_hidden_grad = hidden_grad && hidden_grad->isEmpty();

bool compute_weights_grad = weights_grad && weights_grad->isEmpty();

bool provided_hidden_grad = hidden_grad && !hidden_grad->isEmpty();

bool provided_hidden_act_grad = hidden_activations_grad &&

!hidden_activations_grad->isEmpty();

int mbs = (visible && !visible->isEmpty()) ? visible->length() : -1;

// BPROP of UPWARD FPROP

if (provided_hidden_grad || provided_hidden_act_grad)

{

// Note: the assert below is for behavior compatibility with previous

// code. It might not be necessary, or might need to be modified.

PLASSERT( visible && !visible->isEmpty() );

// Note: we need to perform the following steps even if the gradient

// learning rate is equal to 0. This is because we must propagate the

// gradient to the visible layer, even though no update is required.

if (tied_connection_weights)

setLearningRatesOnlyForLayers(grad_learning_rate);

else

setAllLearningRates(grad_learning_rate);

PLASSERT_MSG( hidden && hidden_act ,

"To compute gradients in bprop, the "

"hidden_activations.state port must have been filled "

"during fprop" );

// Compute gradient w.r.t. activations of the hidden layer.

if (provided_hidden_grad)

hidden_layer->bpropUpdate(

*hidden_act, *hidden, hidden_act_grad, *hidden_grad,

false);

if (provided_hidden_act_grad) {

if (!provided_hidden_grad) {

// 'hidden_act_grad' will not have been resized nor filled yet,

// so we need to do it now.

hidden_act_grad.resize(hidden_activations_grad->length(),

hidden_activations_grad->width());

hidden_act_grad.clear();

}

hidden_act_grad += *hidden_activations_grad;

}

if (hidden_bias_grad)

{

PLASSERT( hidden_bias_grad->isEmpty() &&

hidden_bias_grad->width() == hidden_layer->size );

hidden_bias_grad->resize(mbs,hidden_layer->size);

*hidden_bias_grad += hidden_act_grad;

}

// Compute gradient w.r.t. expectations of the visible layer (=

// inputs).

Mat* store_visible_grad = NULL;

if (compute_visible_grad) {

PLASSERT( visible_grad->width() == visible_layer->size );

store_visible_grad = visible_grad;

} else {

// We do not actually need to store the gradient, but since it

// is required in bpropUpdate, we provide a dummy matrix to

// store it.

store_visible_grad = &visible_exp_grad;

}

store_visible_grad->resize(mbs,visible_layer->size);

if (weights)

{

int up = connection->up_size;

int down = connection->down_size;

PLASSERT( !weights->isEmpty() &&

weights_grad && weights_grad->isEmpty() &&

weights_grad->width() == up * down );

weights_grad->resize(mbs, up * down);

Mat w, wg;

Vec v,h,vg,hg;

for(int i=0; i<mbs; i++)

{

w = Mat(up, down,(*weights)(i));

wg = Mat(up, down,(*weights_grad)(i));

v = (*visible)(i);

h = (*hidden_act)(i);

vg = (*store_visible_grad)(i);

hg = hidden_act_grad(i);

connection->petiteCulotteOlivierUpdate(

v,

w,

h,

vg,

wg,

hg,true);

}

}

else

{

connection->bpropUpdate(

*visible, *hidden_act, *store_visible_grad,

hidden_act_grad, true);

}

partition_function_is_stale = true;

}

// BPROP of DOWNWARD FPROP

if (compute_hidden_grad && visible_grad && !compute_visible_grad)

{

PLASSERT(visible && !visible->isEmpty());

PLASSERT(visible_activations && !visible_activations->isEmpty());

PLASSERT(hidden && !hidden->isEmpty());

setAllLearningRates(grad_learning_rate);

visible_layer->bpropUpdate(*visible_activations,

*visible, visible_act_grad, *visible_grad,

false);

// PLASSERT_MSG(!visible_bias_grad,"back-prop into visible bias not implemented for downward fprop");

// PLASSERT_MSG(!weights_grad,"back-prop into weights not implemented for downward fprop");

// hidden_grad->resize(mbs,hidden_layer->size);

TVec<Mat*> ports_value(2);

TVec<Mat*> ports_gradient(2);

ports_value[0] = visible_activations;

ports_value[1] = hidden;

ports_gradient[0] = &visible_act_grad;

ports_gradient[1] = hidden_grad;

connection->bpropAccUpdate(ports_value,ports_gradient);

}

if (cd_learning_rate > 0 && minimize_log_likelihood) {

PLASSERT( visible && !visible->isEmpty() );

PLASSERT( hidden && !hidden->isEmpty() );

if (tied_connection_weights)

setLearningRatesOnlyForLayers(cd_learning_rate);

else

setAllLearningRates(cd_learning_rate);

// positive phase

visible_layer->accumulatePosStats(*visible);

hidden_layer->accumulatePosStats(*hidden);

connection->accumulatePosStats(*visible,*hidden);

// negative phase

PLCHECK_MSG(hidden_layer->size<32 || visible_layer->size<32,

"To minimize exact log-likelihood of an RBM, hidden_layer->size "

"or visible_layer->size must be <32");

// gradient of partition function

if (hidden_layer->size > visible_layer->size)

// do it by summing over visible configurations

{

PLASSERT(visible_layer->classname()=="RBMBinomialLayer");

// assuming a binary input we sum over all bit configurations

int n_configurations = 1 << visible_layer->size; // = 2^{visible_layer->size}

energy_inputs.resize(1, visible_layer->size);

Vec input = energy_inputs(0);

// COULD BE DONE MORE EFFICIENTLY BY DOING MANY CONFIGURATIONS

// AT ONCE IN A 'MINIBATCH'

for (int c=0;c<n_configurations;c++)

{

// convert integer c into a bit-wise visible representation

int x=c;

for (int i=0;i<visible_layer->size;i++)

{

input[i]= x & 1; // take least significant bit

x >>= 1; // and shift right (divide by 2)

}

connection->setAsDownInput(input);

hidden_layer->getAllActivations(connection,0,false);

hidden_layer->computeExpectation();

visible_layer->accumulateNegStats(input);

hidden_layer->accumulateNegStats(hidden_layer->expectation);

connection->accumulateNegStats(input,hidden_layer->expectation);

}

}

else

{

PLASSERT(hidden_layer->classname()=="RBMBinomialLayer");

// assuming a binary hidden we sum over all bit configurations

int n_configurations = 1 << hidden_layer->size; // = 2^{hidden_layer->size}

energy_inputs.resize(1, hidden_layer->size);

Vec h = energy_inputs(0);

for (int c=0;c<n_configurations;c++)

{

// convert integer c into a bit-wise hidden representation

int x=c;

for (int i=0;i<hidden_layer->size;i++)

{

h[i]= x & 1; // take least significant bit

x >>= 1; // and shift right (divide by 2)

}

connection->setAsUpInput(h);

visible_layer->getAllActivations(connection,0,false);

visible_layer->computeExpectation();

visible_layer->accumulateNegStats(visible_layer->expectation);

hidden_layer->accumulateNegStats(h);

connection->accumulateNegStats(visible_layer->expectation,h);

}

}

// update

visible_layer->update();

hidden_layer->update();

connection->update();

}

if (cd_learning_rate > 0 && !minimize_log_likelihood) {

EXTREME_MODULE_LOG << "Performing contrastive divergence step in RBM '"

<< name << "'" << endl;

// Perform a step of contrastive divergence.

PLASSERT( visible && !visible->isEmpty() );

if (tied_connection_weights)

setLearningRatesOnlyForLayers(cd_learning_rate);

else

setAllLearningRates(cd_learning_rate);

Mat* negative_phase_visible_samples =

computed_contrastive_divergence?ports_value[getPortIndex("negative_phase_visible_samples.state")]:0;

const Mat* negative_phase_hidden_expectations =

computed_contrastive_divergence ?

ports_value[getPortIndex("negative_phase_hidden_expectations.state")]

: NULL;

Mat* negative_phase_hidden_activations =

computed_contrastive_divergence ?

ports_value[getPortIndex("negative_phase_hidden_activations.state")]

: NULL;

PLASSERT( visible && hidden );

PLASSERT( !negative_phase_visible_samples ||

!negative_phase_visible_samples->isEmpty() );

Mat vis_expect_ptr;

if (!negative_phase_visible_samples)

{

// Generate hidden samples.

hidden_layer->setExpectations(*hidden);

for( int i=0; i<n_Gibbs_steps_CD; i++)

{

hidden_layer->generateSamples();

if (deterministic_reconstruction_in_cd)

{

// (Negative phase) compute visible expectations

computeVisibleActivations(hidden_layer->samples);

visible_layer->computeExpectations();

// compute corresponding hidden expectations.

computeHiddenActivations(visible_layer->getExpectations());

}

else // classical CD learning

{

// (Negative phase) Generate visible samples.

sampleVisibleGivenHidden(hidden_layer->samples);

// compute corresponding hidden expectations.

computeHiddenActivations(visible_layer->samples);

}

hidden_layer->computeExpectations();

}

PLASSERT( !computed_contrastive_divergence );

PLASSERT( !negative_phase_hidden_expectations );

PLASSERT( !negative_phase_hidden_activations );

if (deterministic_reconstruction_in_cd) {

vis_expect_ptr = visible_layer->getExpectations();

negative_phase_visible_samples = &vis_expect_ptr;

}

else // classical CD learning

negative_phase_visible_samples = &(visible_layer->samples);

negative_phase_hidden_activations = &(hidden_layer->activations);

negative_phase_hidden_expectations = &(hidden_layer->getExpectations());

}

PLASSERT( negative_phase_hidden_expectations &&

!negative_phase_hidden_expectations->isEmpty() );

PLASSERT( negative_phase_hidden_activations &&

!negative_phase_hidden_activations->isEmpty() );

// Perform update.

visible_layer->update(*visible, *negative_phase_visible_samples);

bool connection_update_is_done = false;

if (compute_weights_grad) {

// First resize the 'weights_grad' matrix.

int up = connection->up_size;

int down = connection->down_size;

PLASSERT( weights && !weights->isEmpty() &&

weights_grad->width() == up * down );

weights_grad->resize(mbs, up * down);

if (standard_cd_weights_grad)

{

// Perform both computation of weights gradient and do update

// at the same time.

Mat wg;

Vec vp, hp, vn, hn;

for(int i=0; i<mbs; i++)

{

vp = (*visible)(i);

hp = (*hidden)(i);

vn = (*negative_phase_visible_samples)(i);

hn = (*negative_phase_hidden_expectations)(i);

wg = Mat(up, down,(*weights_grad)(i));

connection->petiteCulotteOlivierCD(

vp, hp,

vn,

hn,

wg,

true);

connection_update_is_done = true;

}

}

}

if (!standard_cd_weights_grad || !standard_cd_grad) {

// Compute 'true' gradient of contrastive divergence w.r.t.

// the weights matrix.

int up = connection->up_size;

int down = connection->down_size;

Mat* weights_g = weights_grad;

if (!weights_g) {

// We need to store the gradient in another matrix.

store_weights_grad.resize(mbs, up * down);

store_weights_grad.clear();

weights_g = & store_weights_grad;

}

PLASSERT( connection->classname() == "RBMMatrixConnection" &&

visible_layer->classname() == "RBMBinomialLayer" &&

hidden_layer->classname() == "RBMBinomialLayer" );

for (int k = 0; k < mbs; k++) {

int idx = 0;

for (int i = 0; i < up; i++) {

real p_i_p = (*hidden)(k, i);

real a_i_p = (*hidden_act)(k, i);

real p_i_n =

(*negative_phase_hidden_expectations)(k, i);

real a_i_n =

(*negative_phase_hidden_activations)(k, i);

real scale_p = 1 + (1 - p_i_p) * a_i_p;

real scale_n = 1 + (1 - p_i_n) * a_i_n;

for (int j = 0; j < down; j++, idx++) {

// Weight 'idx' is the (i,j)-th element in the

// 'weights' matrix.

real v_j_p = (*visible)(k, j);

real v_j_n =

(*negative_phase_visible_samples)(k, j);

(*weights_g)(k, idx) +=

p_i_n * v_j_n * scale_n // Negative phase.

-(p_i_p * v_j_p * scale_p); // Positive phase.

}

}

}

if (!standard_cd_grad && !tied_connection_weights) {

// Update connection manually.

Mat& weights = ((RBMMatrixConnection*)

get_pointer(connection))->weights;

real lr = cd_learning_rate / mbs;

for (int k = 0; k < mbs; k++) {

int idx = 0;

for (int i = 0; i < up; i++)

for (int j = 0; j < down; j++, idx++)

weights(i, j) -= lr * (*weights_g)(k, idx);

}

connection_update_is_done = true;

}

}

if (!connection_update_is_done)

connection->update(*visible, *hidden,

*negative_phase_visible_samples,

*negative_phase_hidden_expectations);

Mat* hidden_bias_g = hidden_bias_grad;

if (!standard_cd_grad && !hidden_bias_grad) {

// We need to compute the CD gradient w.r.t. bias of hidden layer,

// but there is no bias coming from the outside. Thus we need

// another matrix to store this gradient.

store_hidden_bias_grad.resize(mbs, hidden_layer->size);

store_hidden_bias_grad.clear();

hidden_bias_g = & store_hidden_bias_grad;

}

if (hidden_bias_g)

{

if (hidden_bias_g->isEmpty()) {

PLASSERT(hidden_bias_g->width() == hidden_layer->size);

hidden_bias_g->resize(mbs,hidden_layer->size);

}

PLASSERT_MSG( hidden_layer->classname() == "RBMBinomialLayer" &&

visible_layer->classname() == "RBMBinomialLayer",

"Only implemented for binomial layers" );

// d(contrastive_divergence)/dhidden_bias

for (int k = 0; k < hidden_bias_g->length(); k++) {

for (int i = 0; i < hidden_bias_g->width(); i++) {

real p_i_p = (*hidden)(k, i);

real a_i_p = (*hidden_act)(k, i);

real p_i_n = (*negative_phase_hidden_expectations)(k, i);

real a_i_n = (*negative_phase_hidden_activations)(k, i);

(*hidden_bias_g)(k, i) +=

standard_cd_bias_grad ? p_i_n - p_i_p :

p_i_n * (1 - p_i_n) * a_i_n + p_i_n // Neg. phase

-( p_i_p * (1 - p_i_p) * a_i_p + p_i_p ); // Pos. phase

}

}

}

if (standard_cd_grad) {

hidden_layer->update(*hidden, *negative_phase_hidden_expectations);

} else {

PLASSERT( hidden_layer->classname() == "RBMBinomialLayer" );

// Update hidden layer by hand.

Vec& bias = hidden_layer->bias;

real lr = cd_learning_rate / mbs;

for (int i = 0; i < mbs; i++)

bias -= lr * (*hidden_bias_g)(i);

}

partition_function_is_stale = true;

} else {

PLCHECK_MSG( !contrastive_divergence_grad ||

(!hidden_bias_grad && !weights_grad),

"You currently cannot compute the "

"gradient of contrastive divergence w.r.t. external ports "

"when 'cd_learning_rate' is set to 0" );

}

if (reconstruction_error_grad && !reconstruction_error_grad->isEmpty()) {

if (tied_connection_weights)

setLearningRatesOnlyForLayers(grad_learning_rate);

else

setAllLearningRates(grad_learning_rate);

PLASSERT( reconstruction_connection != 0 );

// Perform gradient descent on Autoassociator reconstruction cost

Mat* visible_reconstruction = ports_value[getPortIndex("visible_reconstruction.state")];

Mat* visible_reconstruction_activations = ports_value[getPortIndex("visible_reconstruction_activations.state")];

Mat* reconstruction_error = ports_value[getPortIndex("reconstruction_error.state")];

PLASSERT( hidden != 0 );

PLASSERT( visible && hidden_act &&

visible_reconstruction && visible_reconstruction_activations &&

reconstruction_error);

//int mbs = reconstruction_error_grad->length();

PLCHECK_MSG( !weights, "In RBMModule::bpropAccUpdate(): reconstruction cost "

"for conditional weights is not implemented");

// Backprop reconstruction gradient

// Must change visible_layer's expectation

visible_layer->getExpectations() << *visible_reconstruction;

visible_layer->bpropNLL(*visible,*reconstruction_error,

visible_act_grad);

// Combine with incoming gradient

PLASSERT( (*reconstruction_error_grad).width() == 1 );

for (int t=0;t<mbs;t++)

visible_act_grad(t) *= (*reconstruction_error_grad)(t,0);

// Visible bias update

columnMean(visible_act_grad, visible_bias_grad);

visible_layer->update(visible_bias_grad);

// Reconstruction connection update

hidden_exp_grad.resize(mbs, hidden_layer->size);

hidden_exp_grad.clear();

hidden_exp_grad.resize(0, hidden_layer->size);

TVec<Mat*> rec_ports_value(2);

rec_ports_value[0] = visible_reconstruction_activations;

rec_ports_value[1] = hidden;

TVec<Mat*> rec_ports_gradient(2);

rec_ports_gradient[0] = &visible_act_grad;

rec_ports_gradient[1] = &hidden_exp_grad;

reconstruction_connection->bpropAccUpdate( rec_ports_value,

rec_ports_gradient );

// UGLY HACK WHICH BREAKS THE RULE THAT RBMMODULE CAN BE CALLED IN DIFFERENT CONTEXTS AND fprop/bprop ORDERS

// BUT NECESSARY WHEN hidden WAS AN INPUT

if (hidden_is_output)

{

// Hidden layer bias update

hidden_layer->bpropUpdate(*hidden_act,

*hidden, hidden_act_grad,

hidden_exp_grad, false);

if (hidden_bias_grad)

{

if (hidden_bias_grad->isEmpty()) {

PLASSERT( hidden_bias_grad->width() == hidden_layer->size );

hidden_bias_grad->resize(mbs,hidden_layer->size);

}

*hidden_bias_grad += hidden_act_grad;

}

// Connection update

if(compute_visible_grad)

{

// The length of 'visible_grad' must be either 0 (if not computed

// previously) or the size of the mini-batches (otherwise).

PLASSERT( visible_grad->width() == visible_layer->size &&

(visible_grad->length() == 0 ||

visible_grad->length() == mbs) );

visible_grad->resize(mbs, visible_grad->width());

connection->bpropUpdate(

*visible, *hidden_act,

*visible_grad, hidden_act_grad, true);

}

else

{

visible_exp_grad.resize(mbs,visible_layer->size);

connection->bpropUpdate(

*visible, *hidden_act,

visible_exp_grad, hidden_act_grad, true);

}

}

else if (hidden_grad && hidden_grad->isEmpty()) // copy the hidden gradient

{

hidden_grad->resize(mbs,hidden_layer->size);

*hidden_grad << hidden_exp_grad;

}

partition_function_is_stale = true;

}

if (energy_grad && !energy_grad->isEmpty() &&

visible_grad && visible_grad->isEmpty())

// compute the gradient of the free-energy wrt input

{

// very cheap shot, specializing to the common case...

PLASSERT(hidden_layer->classname()=="RBMBinomialLayer");

PLASSERT(visible_layer->classname()=="RBMBinomialLayer" ||

visible_layer->classname()=="RBMGaussianlLayer");

PLASSERT(connection->classname()=="RBMMatrixConnection");

PLASSERT(hidden && !hidden->isEmpty());

// FE(x) = -b'x - sum_i softplus(hidden_layer->activation[i])

// dFE(x)/dx = -b - sum_i sigmoid(hidden_layer->activation[i]) W_i

// dC/dxt = -b dC/dFE - dC/dFE sum_i p_ti W_i

int mbs=energy_grad->length();

visible_grad->resize(mbs,visible_layer->size);

Mat& weights = ((RBMMatrixConnection*)

get_pointer(connection))->weights;

bool same_dC_dFE=true;

real dC_dFE=(*energy_grad)(0,0);

const Mat& p = *hidden;

for (int t=0;t<mbs;t++)

{

real new_dC_dFE=(*energy_grad)(t,0);

if (new_dC_dFE!=dC_dFE)

same_dC_dFE=false;

dC_dFE = new_dC_dFE;

multiplyAcc((*visible_grad)(t),visible_layer->bias,-dC_dFE);

}

if (same_dC_dFE)

productScaleAcc(*visible_grad, p, false, weights, false, -dC_dFE,

real(1));

else

for (int t=0;t<mbs;t++)

productScaleAcc((*visible_grad)(t), weights, true, p(t),

-(*energy_grad)(t, 0), real(1));

}

// Explicit error message in the case of the 'visible' port.

if (compute_visible_grad && visible_grad->isEmpty())

PLERROR("In RBMModule::bpropAccUpdate - The gradient with respect "

"to the 'visible' port was asked, but not computed");

checkProp(ports_gradient);

// Reset pointers to ensure we do not reuse them by mistake.

hidden_act = NULL;

weights = NULL;

hidden_bias = NULL;

}
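The free-energy input gradient at the end of `bpropAccUpdate()` follows the identities given in its comments. The sketch below is a minimal standalone check of those identities, not PLearn code. It assumes binary hidden and visible units, uses plain `std::vector` stand-ins for PLearn's `Vec`/`Mat`, and stores `W` with hidden rows and visible columns (the layout of `RBMMatrixConnection::weights`). It compares dFE/dx = -b - sum_i sigmoid(a_i) W_i against central finite differences. In the module, row t of this gradient is additionally scaled by the incoming dC/dFE for that example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Plain stand-ins for PLearn's Vec/Mat (assumption for this sketch).
using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: weight between hidden unit i and visible unit j

double softplus(double a) { return std::log1p(std::exp(a)); }
double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Hidden pre-activation a_i(x) = c_i + sum_j W[i][j] * x[j].
double hiddenActivation(const Mat& W, const Vec& c, const Vec& x, std::size_t i) {
    double a = c[i];
    for (std::size_t j = 0; j < x.size(); ++j) a += W[i][j] * x[j];
    return a;
}

// FE(x) = -b'x - sum_i softplus(a_i(x))
double freeEnergy(const Mat& W, const Vec& b, const Vec& c, const Vec& x) {
    double fe = 0.0;
    for (std::size_t j = 0; j < x.size(); ++j) fe -= b[j] * x[j];
    for (std::size_t i = 0; i < c.size(); ++i) fe -= softplus(hiddenActivation(W, c, x, i));
    return fe;
}

// dFE/dx = -b - sum_i sigmoid(a_i(x)) * W_i, with W_i the i-th row of W.
Vec freeEnergyGrad(const Mat& W, const Vec& b, const Vec& c, const Vec& x) {
    Vec g(x.size());
    for (std::size_t j = 0; j < x.size(); ++j) g[j] = -b[j];
    for (std::size_t i = 0; i < c.size(); ++i) {
        const double p = sigmoid(hiddenActivation(W, c, x, i));  // P(h_i = 1 | x)
        for (std::size_t j = 0; j < x.size(); ++j) g[j] -= p * W[i][j];
    }
    return g;
}
```

Because softplus'(a) = sigmoid(a), the hidden expectations `p` that the module already holds in `hidden` are exactly the factors needed, which is why the source code reuses them instead of recomputing sigmoids.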

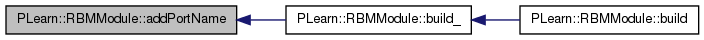

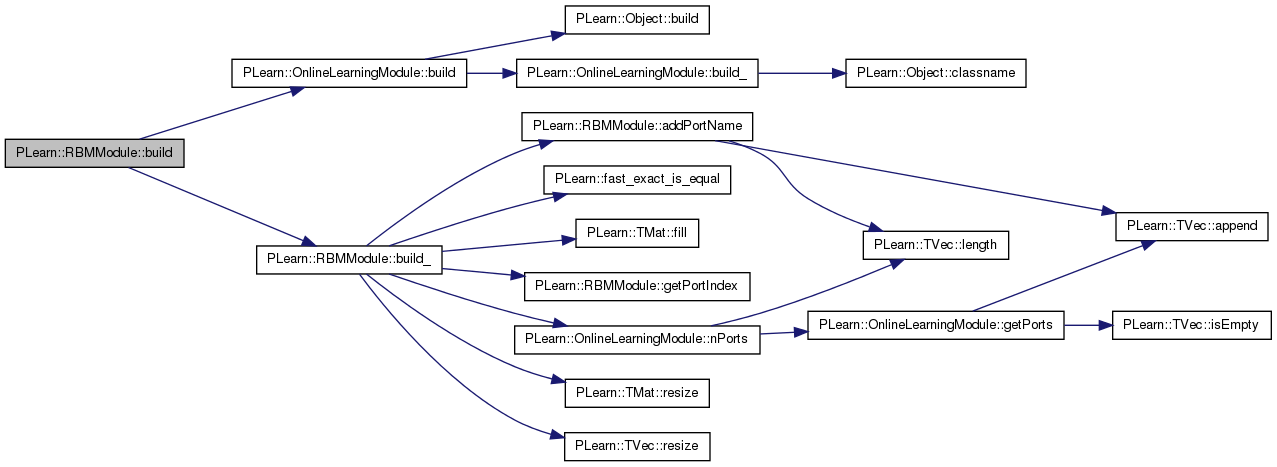

void PLearn::RBMModule::build() [virtual]

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 498 of file RBMModule.cc.

References PLearn::OnlineLearningModule::build(), and build_().

{

inherited::build();

build_();

}
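The build()/build_() split described above can be illustrated with a hypothetical two-class hierarchy. This is a sketch of the idiom, not PLearn's `Object` classes: the public virtual `build()` first delegates to the parent's `build()`, then runs the class's own private, non-virtual `build_()`. As a result, each level's setup runs exactly once, in base-to-derived order, and calling `build()` again simply repeats the sequence.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical classes mimicking the build()/build_() idiom.
class Base {
public:
    std::vector<std::string> log;  // records which build step ran, for illustration
    virtual ~Base() = default;
    virtual void build() { build_(); }  // public, virtual entry point
private:
    void build_() { log.push_back("Base::build_"); }  // this class's own setup only
};

class Derived : public Base {
    typedef Base inherited;
public:
    void build() override {
        inherited::build();  // parent's setup first
        build_();            // then this class's setup
    }
private:
    void build_() { log.push_back("Derived::build_"); }  // not virtual: never overridden
};
```

Keeping `build_()` non-virtual ensures that `Base::build()` always runs `Base::build_()`, even when it is invoked on a `Derived` object.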

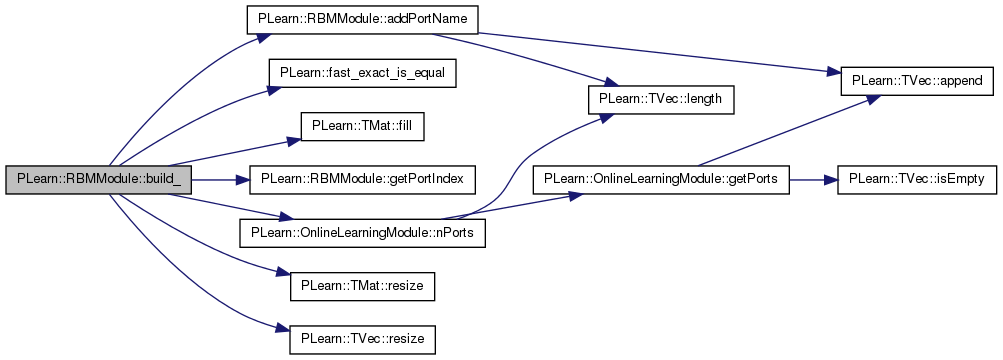

void PLearn::RBMModule::build_() [private]

This does the actual building.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 366 of file RBMModule.cc.

References addPortName(), cd_learning_rate, compute_contrastive_divergence, connection, PLearn::fast_exact_is_equal(), PLearn::TMat< T >::fill(), getPortIndex(), grad_learning_rate, hidden_layer, min_n_Gibbs_steps, n_Gibbs_steps_per_generated_sample, PLearn::OnlineLearningModule::nPorts(), PLASSERT, PLCHECK_MSG, PLWARNING, PLearn::OnlineLearningModule::port_sizes, portname_to_index, ports, PLearn::OnlineLearningModule::random_gen, reconstruction_connection, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), standard_cd_bias_grad, standard_cd_grad, visible_bias_grad, and visible_layer.

Referenced by build().

{

PLASSERT( cd_learning_rate >= 0 && grad_learning_rate >= 0 );

if(visible_layer)

visible_bias_grad.resize(visible_layer->size);

// Forward random generator to underlying modules.

if (random_gen) {

if (hidden_layer && !hidden_layer->random_gen) {

hidden_layer->random_gen = random_gen;

hidden_layer->build();

hidden_layer->forget();

}

if (visible_layer && !visible_layer->random_gen) {

visible_layer->random_gen = random_gen;

visible_layer->build();

visible_layer->forget();

}

if (connection && !connection->random_gen) {

connection->random_gen = random_gen;

connection->build();

connection->forget();

}

if (reconstruction_connection &&

!reconstruction_connection->random_gen) {

reconstruction_connection->random_gen = random_gen;

reconstruction_connection->build();

reconstruction_connection->forget();

}

}

// build ports and port_sizes

ports.resize(0);

portname_to_index.clear();

addPortName("visible");

addPortName("hidden.state");

addPortName("hidden_activations.state");

addPortName("visible_sample");

addPortName("visible_expectation");

addPortName("visible_activations.state");

addPortName("hidden_sample");

addPortName("energy");

addPortName("hidden_bias");

addPortName("weights");

addPortName("neg_log_likelihood");

// a column matrix with one element -log P(h) for each row h of "hidden",

// used as an input port, with neg_log_pvisible_given_phidden as output

addPortName("neg_log_phidden");

// compute column matrix with one entry -log P(x) = -log( sum_h P(x|h) P(h) ) for

// each row x of "visible", and where {P(h)}_h is provided

// in "neg_log_phidden" for the set of h's in "hidden".

addPortName("neg_log_pvisible_given_phidden");

addPortName("median_reldiff_cd_nll");

addPortName("mean_diff_cd_nll");

addPortName("agreement_cd_nll");

addPortName("agreement_stoch");

addPortName("bound_cd_nll");

addPortName("weights_stats");

addPortName("ratio_cd_leftout");

addPortName("abs_cd");

addPortName("nll_grad");

if(reconstruction_connection)

{

addPortName("visible_reconstruction.state");

addPortName("visible_reconstruction_activations.state");

addPortName("reconstruction_error.state");

}

if (compute_contrastive_divergence)

{

addPortName("contrastive_divergence");

addPortName("negative_phase_visible_samples.state");

addPortName("negative_phase_hidden_expectations.state");

addPortName("negative_phase_hidden_activations.state");

}

port_sizes.resize(nPorts(), 2);

port_sizes.fill(-1);

if (visible_layer) {

port_sizes(getPortIndex("visible"), 1) = visible_layer->size;

port_sizes(getPortIndex("visible_sample"), 1) = visible_layer->size;

port_sizes(getPortIndex("visible_expectation"), 1) = visible_layer->size;

port_sizes(getPortIndex("visible_activations.state"), 1) = visible_layer->size;

}

if (hidden_layer) {

port_sizes(getPortIndex("hidden.state"), 1) = hidden_layer->size;

port_sizes(getPortIndex("hidden_activations.state"), 1) = hidden_layer->size;

port_sizes(getPortIndex("hidden_sample"), 1) = hidden_layer->size;

port_sizes(getPortIndex("hidden_bias"),1) = hidden_layer->size;

if(visible_layer)

port_sizes(getPortIndex("weights"),1) = hidden_layer->size * visible_layer->size;

}

port_sizes(getPortIndex("energy"),1) = 1;

port_sizes(getPortIndex("neg_log_likelihood"),1) = 1;

port_sizes(getPortIndex("neg_log_phidden"),1) = 1;

port_sizes(getPortIndex("neg_log_pvisible_given_phidden"),1) = 1;

if(reconstruction_connection)

{

if (visible_layer) {

port_sizes(getPortIndex("visible_reconstruction.state"),1) =

visible_layer->size;

port_sizes(getPortIndex("visible_reconstruction_activations.state"),1) =

visible_layer->size;

}

port_sizes(getPortIndex("reconstruction_error.state"),1) = 1;

}

if (compute_contrastive_divergence)

{

port_sizes(getPortIndex("contrastive_divergence"),1) = 1;

if (visible_layer)

port_sizes(getPortIndex("negative_phase_visible_samples.state"),1) = visible_layer->size;

if (hidden_layer)

port_sizes(getPortIndex("negative_phase_hidden_expectations.state"),1) = hidden_layer->size;

if (fast_exact_is_equal(cd_learning_rate, 0))

PLWARNING("In RBMModule::build_ - Contrastive divergence is "

"computed but 'cd_learning_rate' is set to 0: no internal "

"update will be performed AND no contrastive divergence "

"gradient will be propagated.");

}

PLCHECK_MSG(!(!standard_cd_grad && standard_cd_bias_grad), "You cannot "

"compute the standard CD gradient w.r.t. external hidden bias and "

"use the 'true' CD gradient w.r.t. internal hidden bias");

if (n_Gibbs_steps_per_generated_sample<0)

n_Gibbs_steps_per_generated_sample = min_n_Gibbs_steps;

}
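`build_()` registers every port name, resizes `port_sizes` to `nPorts() x 2`, fills it with -1, and then sets only column 1 (apparently the port width) from the layer sizes. Column 0 stays -1, presumably because the length depends on the mini-batch. The `PortTable` struct below is a hypothetical stand-in for that bookkeeping. Returning -1 for an unknown port name is an assumption of this sketch, not necessarily what `RBMModule::getPortIndex()` does.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for the port bookkeeping performed in build_().
struct PortTable {
    std::vector<std::string> ports;              // ports in registration order
    std::map<std::string, int> portname_to_index;
    std::vector<std::vector<int>> port_sizes;    // one (length, width) row per port, -1 = unknown

    void addPortName(const std::string& name) {
        portname_to_index[name] = static_cast<int>(ports.size());
        ports.push_back(name);
    }

    // Assumed convention for this sketch: -1 when the port does not exist.
    int getPortIndex(const std::string& name) const {
        auto it = portname_to_index.find(name);
        return it == portname_to_index.end() ? -1 : it->second;
    }

    // Equivalent of port_sizes.resize(nPorts(), 2); port_sizes.fill(-1);
    void resetSizes() { port_sizes.assign(ports.size(), std::vector<int>(2, -1)); }
};
```

Example: after registering "visible", "hidden.state" and "energy", calling `resetSizes()` and setting the widths of "visible" and "energy" leaves every length, and the width of "hidden.state", at -1.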

void PLearn::RBMModule::CDUpdate(const Mat &v_0, const Mat &h_0, const Mat &v_k, const Mat &h_k) [virtual]

Perform one CD_k update, given the Markov chain statistics.

Definition at line 354 of file RBMModule.cc.

References connection, hidden_layer, partition_function_is_stale, and visible_layer.

Referenced by declareMethods().

{

visible_layer->update(v_0, v_k);

hidden_layer->update(h_0, h_k);

connection->update(v_0, h_0, v_k, h_k);

partition_function_is_stale = true;

}
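`CDUpdate()` delegates the actual parameter changes to `visible_layer->update(v_0, v_k)`, `hidden_layer->update(h_0, h_k)` and `connection->update(v_0, h_0, v_k, h_k)`. The sketch below shows the textbook CD-k rule those calls correspond to for binary units. It assumes a single learning rate `lr` and averages over the mini-batch. The real PLearn layer and connection classes may scale the step differently or add momentum, so treat this as an illustration rather than their implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // rows are examples of the mini-batch (W: hidden x visible)

// bias += lr * mean_t (pos[t] - neg[t])
void updateBias(Vec& bias, const Mat& pos, const Mat& neg, double lr) {
    const double scale = lr / pos.size();
    for (std::size_t t = 0; t < pos.size(); ++t)
        for (std::size_t j = 0; j < bias.size(); ++j)
            bias[j] += scale * (pos[t][j] - neg[t][j]);
}

// W += lr * mean_t (h0[t] v0[t]' - hk[t] vk[t]')
void updateWeights(Mat& W, const Mat& v0, const Mat& h0,
                   const Mat& vk, const Mat& hk, double lr) {
    const double scale = lr / v0.size();
    for (std::size_t t = 0; t < v0.size(); ++t)
        for (std::size_t i = 0; i < W.size(); ++i)
            for (std::size_t j = 0; j < W[i].size(); ++j)
                W[i][j] += scale * (h0[t][i] * v0[t][j] - hk[t][i] * vk[t][j]);
}

// One CD_k step, in the same order as CDUpdate(): visible, hidden, connection.
void cdUpdate(Vec& b, Vec& c, Mat& W, const Mat& v0, const Mat& h0,
              const Mat& vk, const Mat& hk, double lr) {
    updateBias(b, v0, vk, lr);
    updateBias(c, h0, hk, lr);
    updateWeights(W, v0, h0, vk, hk, lr);
}
```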

string PLearn::RBMModule::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 131 of file RBMModule.cc.

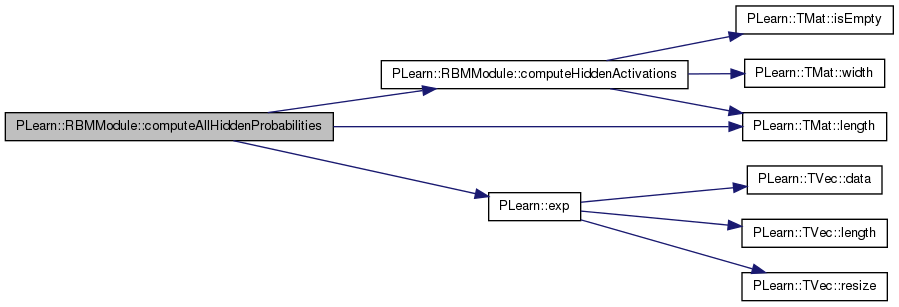

Compute probabilities of all hidden configurations given some visible inputs.

The 'p_hidden' matrix is filled such that the (i,j)-th element is P(hidden_configuration_i | visible_j).

Definition at line 660 of file RBMModule.cc.

References computeHiddenActivations(), PLearn::exp(), hidden_layer, i, j, and PLearn::TMat< T >::length().

Referenced by computePartitionFunction(), and fprop().

{

Vec hidden(hidden_layer->size);

computeHiddenActivations(visible);

int n_conf = hidden_layer->getConfigurationCount();

for (int i = 0; i < n_conf; i++) {

hidden_layer->getConfiguration(i, hidden);

for (int j = 0; j < visible.length(); j++) {

hidden_layer->activation = hidden_layer->activations(j);

real neg_log_p_h_given_v = hidden_layer->fpropNLL(hidden);

p_hidden(i, j) = exp(-neg_log_p_h_given_v);

}

}

}
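For binary hidden units, the quantity `exp(-hidden_layer->fpropNLL(hidden))` computed above is a product of independent Bernoulli terms: P(h | v) = prod_i q_i^h_i (1 - q_i)^(1 - h_i), with q_i = sigmoid(a_i(v)). The sketch below enumerates all 2^n configurations for a single visible input, which corresponds to one column of `p_hidden`. It assumes binary units, and the ordering "bit i of index k gives h_i" is chosen for illustration; `getConfiguration()` may order configurations differently.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Configuration k of n binary units: bit i of k gives h_i (assumed ordering).
Vec configuration(unsigned k, std::size_t n) {
    Vec h(n);
    for (std::size_t i = 0; i < n; ++i) h[i] = (k >> i) & 1u;
    return h;
}

// P(h | v) for every configuration h, given hidden pre-activations act = c + W v.
Vec allHiddenProbabilities(const Vec& act) {
    const unsigned n_conf = 1u << act.size();
    Vec p(n_conf);
    for (unsigned k = 0; k < n_conf; ++k) {
        const Vec h = configuration(k, act.size());
        double nll = 0.0;  // -log P(h | v), the role played by fpropNLL()
        for (std::size_t i = 0; i < act.size(); ++i) {
            const double q = sigmoid(act[i]);
            nll -= h[i] * std::log(q) + (1.0 - h[i]) * std::log(1.0 - q);
        }
        p[k] = std::exp(-nll);
    }
    return p;
}
```

Because the units are conditionally independent given v, these probabilities sum to 1 over all configurations, which gives a convenient sanity check.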

void PLearn::RBMModule::computeEnergy(const Mat &visible, const Mat &hidden, Mat &energy, bool positive_phase = true)

Compute energy of the joint (visible, hidden) configuration and store it in the 'energy' matrix.

The 'positive_phase' boolean is used to save computations when we know we are in the positive phase of fprop.

Definition at line 523 of file RBMModule.cc.

References computeHiddenActivations(), computePositivePhaseHiddenActivations(), PLearn::dot(), hidden_act, hidden_layer, i, PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), and visible_layer.

Referenced by fprop().

{

int mbs=hidden.length();

energy.resize(mbs, 1);

Mat* hidden_activations = NULL;

if (positive_phase) {

computePositivePhaseHiddenActivations(visible);

hidden_activations = hidden_act;

} else {

computeHiddenActivations(visible);

hidden_activations = & hidden_layer->activations;

}

PLASSERT( hidden_activations );

for (int i=0;i<mbs;i++)

energy(i,0) = visible_layer->energy(visible(i))

- dot(hidden(i), (*hidden_activations)(i));

// Why not: + hidden_layer->energy(hidden(i)) ?

}
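For binary layers, the loop above evaluates E(v, h) = -b'v - h'(c + W v). The visible term comes from `visible_layer->energy()`, and the hidden bias c enters through the activations, which (assuming `getAllActivations()` adds the layer bias, as RBM layers typically do) already contain it. That appears to answer the "Why not" comment in the code: for such layers, adding `hidden_layer->energy(hidden(i))` = -c'h would count the hidden bias twice. The standalone sketch below uses plain `std::vector` stand-ins and is not PLearn code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden i, visible j

// E(v, h) = -b'v - h'(c + W v); the hidden bias is part of the activation.
double jointEnergy(const Mat& W, const Vec& b, const Vec& c,
                   const Vec& v, const Vec& h) {
    double e = 0.0;
    for (std::size_t j = 0; j < v.size(); ++j) e -= b[j] * v[j];  // visible_layer->energy(v)
    for (std::size_t i = 0; i < h.size(); ++i) {
        double a = c[i];  // hidden activation a_i = c_i + W_i v
        for (std::size_t j = 0; j < v.size(); ++j) a += W[i][j] * v[j];
        e -= h[i] * a;    // - dot(hidden, activations)
    }
    return e;
}
```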

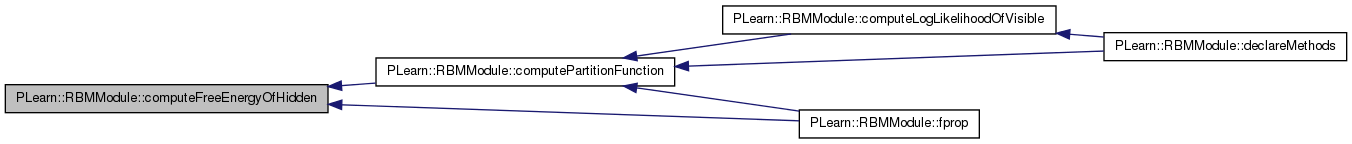

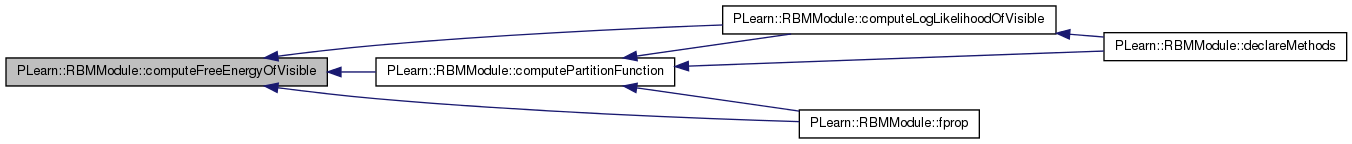

Compute free energy on the hidden layer and store it in the 'energy' matrix.

Definition at line 552 of file RBMModule.cc.

References computeVisibleActivations(), hidden_layer, i, PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), visible_layer, and PLearn::TMat< T >::width().

Referenced by computePartitionFunction(), and fprop().

{

int mbs=hidden.length();

if (energy.isEmpty())

energy.resize(mbs,1);

else {

PLASSERT( energy.length() == mbs && energy.width() == 1 );

}

computeVisibleActivations(hidden, false);

for (int i=0;i<mbs;i++)

{

energy(i,0) = hidden_layer->energy(hidden(i))

+ visible_layer->freeEnergyContribution(

visible_layer->activations(i));

}

}
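With binary visible units, `hidden_layer->energy(h)` contributes -c'h and each visible unit contributes -softplus(b_j + sum_i W[i][j] h_i) through `freeEnergyContribution()`. The sketch below spells out that closed form. It assumes binary units on both layers and uses plain `std::vector` stand-ins rather than PLearn types. The accompanying check confirms, by summing exp(-E(v, h)) over all visible vectors, that exp(-FE(h)) is the hidden marginal's unnormalized weight.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden i, visible j

double softplus(double a) { return std::log1p(std::exp(a)); }

// FE(h) = -c'h - sum_j softplus(b_j + sum_i W[i][j] h_i), for binary visible units.
double freeEnergyOfHidden(const Mat& W, const Vec& b, const Vec& c, const Vec& h) {
    double fe = 0.0;
    for (std::size_t i = 0; i < h.size(); ++i) fe -= c[i] * h[i];  // hidden energy
    for (std::size_t j = 0; j < b.size(); ++j) {
        double a = b[j];  // top-down visible activation
        for (std::size_t i = 0; i < h.size(); ++i) a += W[i][j] * h[i];
        fe -= softplus(a);  // free-energy contribution of visible unit j
    }
    return fe;
}
```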

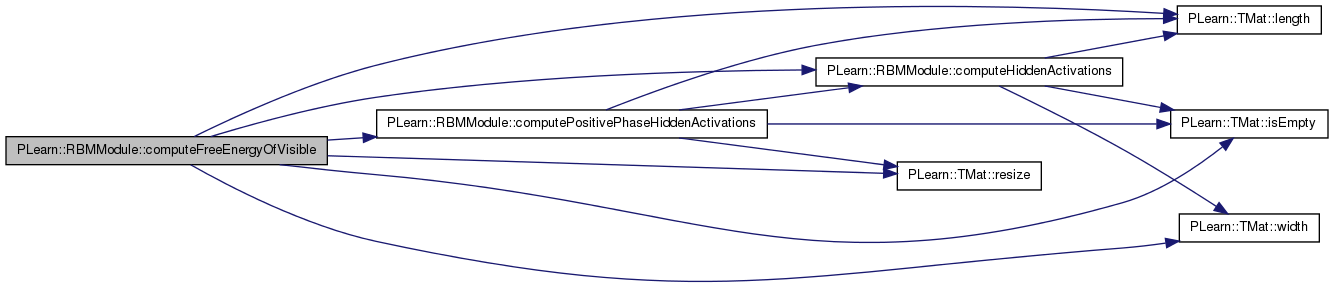

void PLearn::RBMModule::computeFreeEnergyOfVisible(const Mat &visible, Mat &energy, bool positive_phase = true)

Compute free energy on the visible layer and store it in the 'energy' matrix.

The 'positive_phase' boolean is used to save computations when we know we are in the positive phase of fprop.

Definition at line 579 of file RBMModule.cc.

References computeHiddenActivations(), computePositivePhaseHiddenActivations(), hidden_act, hidden_layer, i, PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), visible_layer, and PLearn::TMat< T >::width().

Referenced by computeLogLikelihoodOfVisible(), computePartitionFunction(), and fprop().

{

int mbs=visible.length();

if (energy.isEmpty())

energy.resize(mbs,1);

else {

PLASSERT( energy.length() == mbs && energy.width() == 1 );

}

Mat* hidden_activations = NULL;

if (positive_phase && hidden_act) {

computePositivePhaseHiddenActivations(visible);

hidden_activations = hidden_act;

}

else {

computeHiddenActivations(visible);

hidden_activations = & hidden_layer->activations;

}

PLASSERT( hidden_activations && hidden_activations->length() == mbs

&& hidden_activations->width() == hidden_layer->size );

for (int i=0;i<mbs;i++)

{

energy(i,0) = visible_layer->energy(visible(i))

+ hidden_layer->freeEnergyContribution((*hidden_activations)(i));

}

}
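The closed form used here, `visible_layer->energy(v) + hidden_layer->freeEnergyContribution(a(v))`, is what marginalizing the hidden layer out of exp(-E(v, h)) gives. The sketch below checks this for binary units by comparing FE(v) = -b'v - sum_i softplus(c_i + W_i v) against the definition FE(v) = -log sum_h exp(-E(v, h)), evaluated by brute force over all 2^n_h hidden vectors. It is an illustration with `std::vector` stand-ins, not PLearn code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden i, visible j

double softplus(double a) { return std::log1p(std::exp(a)); }

// a_i(v) = c_i + sum_j W[i][j] * v[j]
double hiddenActivation(const Mat& W, const Vec& c, const Vec& v, std::size_t i) {
    double a = c[i];
    for (std::size_t j = 0; j < v.size(); ++j) a += W[i][j] * v[j];
    return a;
}

// Closed form for binary hidden units: FE(v) = -b'v - sum_i softplus(a_i(v)).
double freeEnergyOfVisible(const Mat& W, const Vec& b, const Vec& c, const Vec& v) {
    double fe = 0.0;
    for (std::size_t j = 0; j < v.size(); ++j) fe -= b[j] * v[j];
    for (std::size_t i = 0; i < c.size(); ++i) fe -= softplus(hiddenActivation(W, c, v, i));
    return fe;
}

// Definition: FE(v) = -log sum_h exp(-E(v, h)), with -E(v, h) = b'v + sum_i h_i a_i(v).
double bruteForceFreeEnergy(const Mat& W, const Vec& b, const Vec& c, const Vec& v) {
    double bv = 0.0;
    for (std::size_t j = 0; j < v.size(); ++j) bv += b[j] * v[j];
    double sum = 0.0;
    const unsigned n_conf = 1u << c.size();
    for (unsigned k = 0; k < n_conf; ++k) {  // bit i of k gives h_i
        double minus_energy = bv;
        for (std::size_t i = 0; i < c.size(); ++i)
            if ((k >> i) & 1u) minus_energy += hiddenActivation(W, c, v, i);
        sum += std::exp(minus_energy);
    }
    return -std::log(sum);
}
```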

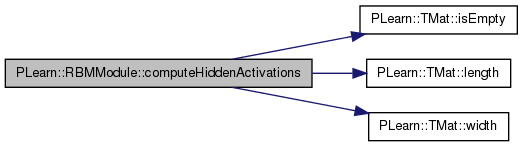

void PLearn::RBMModule::computeHiddenActivations(const Mat &visible)

Compute activations on the hidden layer based on the provided visible input.

If 'hidden_bias' is neither null nor empty, it is used as an additional bias for the hidden activations.

Definition at line 610 of file RBMModule.cc.

References connection, hidden_bias, hidden_layer, i, PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLASSERT, weights, and PLearn::TMat< T >::width().

Referenced by bpropAccUpdate(), computeAllHiddenProbabilities(), computeEnergy(), computeFreeEnergyOfVisible(), computePositivePhaseHiddenActivations(), fprop(), and sampleHiddenGivenVisible().

{

if(weights && !weights->isEmpty())

{

Mat old_weights;

Vec old_activation;

connection->getAllWeights(old_weights);

old_activation = hidden_layer->activation;

int up = connection->up_size;

int down = connection->down_size;

PLASSERT( weights->width() == up * down );

hidden_layer->setBatchSize( visible.length() );

for(int i=0; i<visible.length(); i++)

{

connection->setAllWeights(Mat(up, down, (*weights)(i)));

connection->setAsDownInput(visible(i));

hidden_layer->activation = hidden_layer->activations(i);

hidden_layer->getAllActivations(connection, 0, false);

if (hidden_bias && !hidden_bias->isEmpty())

hidden_layer->activation += (*hidden_bias)(i);

}

connection->setAllWeights(old_weights);

hidden_layer->activation = old_activation;

}

else

{

connection->setAsDownInputs(visible);

hidden_layer->getAllActivations(connection, 0, true);

if (hidden_bias && !hidden_bias->isEmpty())

hidden_layer->activations += *hidden_bias;

}

}
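In its per-example branch, `computeHiddenActivations()` reinterprets row i of the 'weights' port as an `up x down` matrix (hidden by visible), installs it in the connection, computes that example's activations, and restores the original weights afterwards. The sketch below shows the arithmetic of one such step. It assumes row-major flattening, W[i][j] = flat_w[i * down + j], as the `Mat(up, down, (*weights)(i))` view suggests. The function name and signature are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hidden activations for one example whose weights arrive as a flattened row of
// the 'weights' port (assumed row-major: W[i][j] = flat_w[i * down + j]).
Vec activationFromFlatWeights(const Vec& flat_w, std::size_t up, std::size_t down,
                              const Vec& c, const Vec& v, const Vec* extra_bias) {
    assert(flat_w.size() == up * down && c.size() == up && v.size() == down);
    Vec a(c);  // start from the hidden layer's own bias
    for (std::size_t i = 0; i < up; ++i)
        for (std::size_t j = 0; j < down; ++j)
            a[i] += flat_w[i * down + j] * v[j];
    if (extra_bias && !extra_bias->empty())  // optional row of the 'hidden_bias' port
        for (std::size_t i = 0; i < up; ++i) a[i] += (*extra_bias)[i];
    return a;
}
```

The shared-weight branch computes the same thing for the whole mini-batch at once, which is why it is preferred whenever no per-example weights are supplied.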

See remote documentation.

Note that this is really a convenience method only, to avoid having to obtain this likelihood through the ports.

Definition at line 646 of file RBMModule.cc.

References computeFreeEnergyOfVisible(), computePartitionFunction(), i, PLearn::TMat< T >::length(), log_partition_function, PLearn::negateElements(), and PLearn::TMat< T >::toVec().

Referenced by declareMethods().

{

Mat energy;

computePartitionFunction();

computeFreeEnergyOfVisible(visible, energy, false);

negateElements(energy);

for (int i = 0; i < energy.length(); i++)

energy(i, 0) -= log_partition_function;

return energy.toVec();

}
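The method returns log P(v) = -FE(v) - log Z for each row of `visible`. For a tiny binary RBM, the sketch below computes log Z by enumerating every visible vector, using a max-shifted log-sum-exp. It then checks that the resulting probabilities sum to 1. The binary units, the configuration ordering, and the helper names are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden i, visible j

double softplus(double a) { return std::log1p(std::exp(a)); }

// FE(v) = -b'v - sum_i softplus(c_i + W_i v), binary hidden units.
double freeEnergyOfVisible(const Mat& W, const Vec& b, const Vec& c, const Vec& v) {
    double fe = 0.0;
    for (std::size_t j = 0; j < v.size(); ++j) fe -= b[j] * v[j];
    for (std::size_t i = 0; i < c.size(); ++i) {
        double a = c[i];
        for (std::size_t j = 0; j < v.size(); ++j) a += W[i][j] * v[j];
        fe -= softplus(a);
    }
    return fe;
}

// Visible configuration k: bit j of k gives v_j (assumed ordering).
Vec visibleConfiguration(unsigned k, std::size_t n) {
    Vec v(n);
    for (std::size_t j = 0; j < n; ++j) v[j] = (k >> j) & 1u;
    return v;
}

// log Z = log sum_v exp(-FE(v)), enumerating every binary visible vector.
double logPartitionFunction(const Mat& W, const Vec& b, const Vec& c) {
    const unsigned n_conf = 1u << b.size();
    Vec neg_fe(n_conf);
    for (unsigned k = 0; k < n_conf; ++k)
        neg_fe[k] = -freeEnergyOfVisible(W, b, c, visibleConfiguration(k, b.size()));
    const double m = *std::max_element(neg_fe.begin(), neg_fe.end());
    double s = 0.0;
    for (double x : neg_fe) s += std::exp(x - m);  // shifted by the max for stability
    return m + std::log(s);
}

// log P(v) = -FE(v) - log Z
double logLikelihood(const Mat& W, const Vec& b, const Vec& c, const Vec& v) {
    return -freeEnergyOfVisible(W, b, c, v) - logPartitionFunction(W, b, c);
}
```

The module avoids recomputing log Z for every row by calling `computePartitionFunction()` once and storing the result in `log_partition_function`; this sketch recomputes it for simplicity.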

void PLearn::RBMModule::computeNegLogPVisibleGivenPHidden(Mat visible, Mat hidden, Mat *neg_log_phidden, Mat &neg_log_pvisible_given_phidden)

Definition at line 1862 of file RBMModule.cc.

References computeVisibleActivations(), i, PLearn::TMat< T >::length(), PLearn::logadd(), PLearn::TMat< T >::resize(), PLearn::safelog(), and visible_layer.

Referenced by fprop().

{

computeVisibleActivations(hidden,true);

int n_h = hidden.length();

int T = visible.length();

real default_neg_log_ph = safelog(real(n_h)); // default P(h)=1/Nh: -log(1/Nh) = log(Nh)

Vec old_act = visible_layer->activation;

neg_log_pvisible_given_phidden.resize(T,1);

for (int t=0;t<T;t++)

{

Vec x_t = visible(t);

real log_p_xt=0;

for (int i=0;i<n_h;i++)

{

visible_layer->activation = visible_layer->activations(i);

real neg_log_p_xt_given_hi = visible_layer->fpropNLL(x_t);

real neg_log_p_hi = neg_log_phidden?(*neg_log_phidden)(i,0):default_neg_log_ph;

if (i==0)

log_p_xt = -(neg_log_p_xt_given_hi + neg_log_p_hi);

else

log_p_xt = logadd(log_p_xt, -(neg_log_p_xt_given_hi + neg_log_p_hi));

}

neg_log_pvisible_given_phidden(t,0) = -log_p_xt;

}

visible_layer->activation = old_act;

}
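For each input x_t, the method above accumulates -log sum_i P(x_t | h_i) P(h_i) in log space, falling back to a uniform prior P(h_i) = 1/n_h when `neg_log_phidden` is null. The sketch below reproduces that accumulation for a single x from precomputed per-configuration terms. The `logadd` here is a local helper computing log(e^a + e^b); it is assumed to match the semantics of PLearn's function of the same name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Vec = std::vector<double>;

// log(exp(a) + exp(b)), computed without overflow.
double logadd(double a, double b) {
    if (a < b) std::swap(a, b);
    return a + std::log1p(std::exp(b - a));
}

// -log P(x) = -log sum_i P(x | h_i) P(h_i).
// neg_log_p_x_given_h[i] holds -log P(x | h_i); a null neg_log_ph means P(h_i) = 1/n_h.
double negLogPVisible(const Vec& neg_log_p_x_given_h, const Vec* neg_log_ph) {
    const std::size_t n_h = neg_log_p_x_given_h.size();
    const double default_neg_log_ph = std::log(static_cast<double>(n_h));
    double log_p = 0.0;
    for (std::size_t i = 0; i < n_h; ++i) {
        const double prior = neg_log_ph ? (*neg_log_ph)[i] : default_neg_log_ph;
        const double term = -(neg_log_p_x_given_h[i] + prior);  // log P(x|h_i) + log P(h_i)
        log_p = (i == 0) ? term : logadd(log_p, term);
    }
    return -log_p;
}
```

Example: with P(x | h_1) = 0.5, P(x | h_2) = 0.1 and prior (0.2, 0.8), the mixture gives P(x) = 0.18, so the result is -log 0.18.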

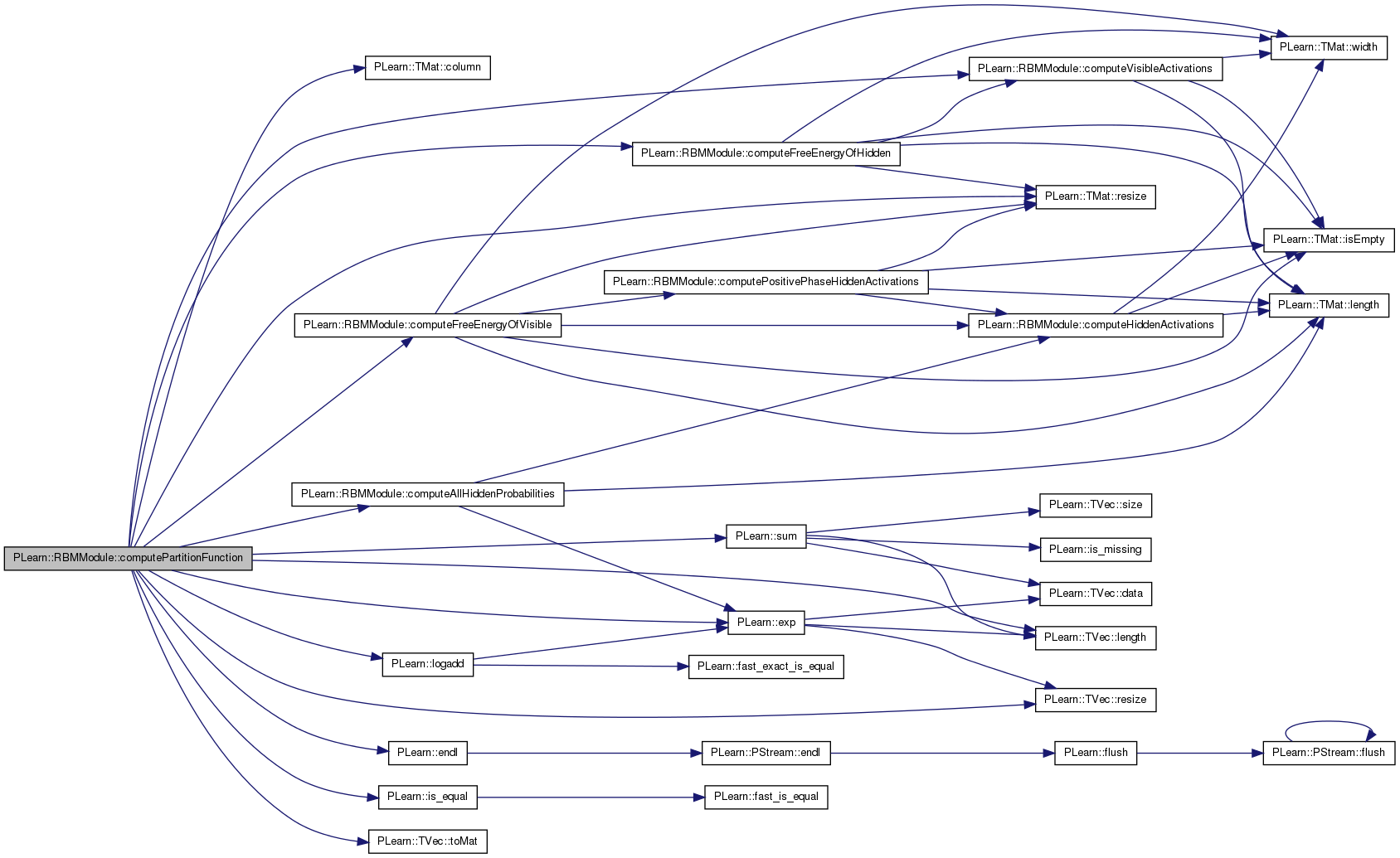

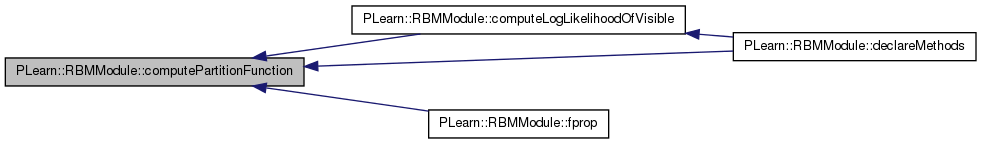

void PLearn::RBMModule::computePartitionFunction()

Definition at line 781 of file RBMModule.cc.

References all_hidden_cond_prob, all_p_visible, all_visible_cond_prob, c, PLearn::TMat< T >::column(), compare_true_gradient_with_cd, computeAllHiddenProbabilities(), computeFreeEnergyOfHidden(), computeFreeEnergyOfVisible(), computeVisibleActivations(), d, PLearn::endl(), energy_inputs, PLearn::exp(), hidden_activations_are_computed, hidden_layer, i, PLearn::RBMLayer::INFINITE_CONFIGURATIONS, PLearn::is_equal(), PLearn::TVec< T >::length(), log_partition_function, PLearn::logadd(), PLearn::OnlineLearningModule::name, PLASSERT, PLASSERT_MSG, PLCHECK, PLWARNING, PLearn::pout, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::sum(), PLearn::TVec< T >::toMat(), PLearn::OnlineLearningModule::verbosity, and visible_layer.

Referenced by computeLogLikelihoodOfVisible(), declareMethods(), and fprop().

{

int hidden_configurations = hidden_layer->getConfigurationCount();

int visible_configurations = visible_layer->getConfigurationCount();

PLASSERT_MSG(hidden_configurations != RBMLayer::INFINITE_CONFIGURATIONS ||

visible_configurations != RBMLayer::INFINITE_CONFIGURATIONS,

"To compute the exact log-likelihood of an RBM, the number of configurations of "

"the hidden or visible layer must be less than 2^31.");

// Compute partition function

if (hidden_configurations > visible_configurations ||

compare_true_gradient_with_cd)

// do it by log-summing minus-free-energy of visible configurations

{

if (compare_true_gradient_with_cd) {

all_p_visible.resize(visible_configurations);

all_visible_cond_prob.resize(visible_configurations,

hidden_configurations);

all_hidden_cond_prob.resize(hidden_configurations,

visible_configurations);

}

energy_inputs.resize(1, visible_layer->size);

Vec input = energy_inputs(0);

// COULD BE DONE MORE EFFICIENTLY BY DOING MANY CONFIGURATIONS

// AT ONCE IN A 'MINIBATCH'

Mat free_energy(1, 1);

log_partition_function = 0;

PP<ProgressBar> pb;

if (verbosity >= 2)

pb = new ProgressBar("Computing partition function",\

visible_configurations);

for (int c = 0; c < visible_configurations; c++)

{

visible_layer->getConfiguration(c, input);

computeFreeEnergyOfVisible(energy_inputs, free_energy, false);

real fe = free_energy(0,0);

if (c==0)

log_partition_function = -fe;

else

log_partition_function = logadd(log_partition_function, -fe);

if (compare_true_gradient_with_cd) {

all_p_visible[c] = -fe;

// Compute P(visible | hidden) and P(hidden | visible) for all

// values of hidden.

computeAllHiddenProbabilities(input.toMat(1, input.length()),

all_hidden_cond_prob.column(c));

Vec hidden(hidden_layer->size);

for (int d = 0; d < hidden_configurations; d++) {

hidden_layer->getConfiguration(d, hidden);

computeVisibleActivations(hidden.toMat(1, hidden.length()),

false);

visible_layer->activation = visible_layer->activations(0);

real neg_log_p_v_given_h = visible_layer->fpropNLL(input);

all_visible_cond_prob(c, d) = exp(-neg_log_p_v_given_h);

}

}

if (pb)

pb->update(c + 1);

}

pb = NULL;

hidden_activations_are_computed = false;

if (compare_true_gradient_with_cd) {

// Normalize probabilities.

for (int i = 0; i < all_p_visible.length(); i++)

all_p_visible[i] =

exp(all_p_visible[i] - log_partition_function);

//pout << "All P(x): " << all_p_visible << endl;

//pout << "Sum_x P(x) = " << sum(all_p_visible) << endl;

if (!is_equal(sum(all_p_visible), 1)) {

PLWARNING("The sum of all probabilities is not 1: %f",

sum(all_p_visible));

// Renormalize.

all_p_visible /= sum(all_p_visible);

}

PLCHECK( is_equal(sum(all_p_visible), 1) );

}

}

else

// do it by summing free-energy of hidden configurations

{

PLASSERT( !compare_true_gradient_with_cd );

energy_inputs.resize(1, hidden_layer->size);

Vec input = energy_inputs(0);

// COULD BE DONE MORE EFFICIENTLY BY DOING MANY CONFIGURATIONS

// AT ONCE IN A 'MINIBATCH'

Mat free_energy(1, 1);

log_partition_function = 0;

for (int c = 0; c < hidden_configurations; c++)

{

hidden_layer->getConfiguration(c, input);

//pout << "Input = " << input << endl;

computeFreeEnergyOfHidden(energy_inputs, free_energy);

//pout << "FE = " << free_energy(0, 0) << endl;

real fe = free_energy(0,0);

if (c==0)

log_partition_function = -fe;

else

log_partition_function = logadd(log_partition_function, -fe);

}

}

if (false)

pout << "Log Z(" << name << ") = " << log_partition_function << endl;

}
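Both branches of `computePartitionFunction()` enumerate whichever layer has fewer configurations, unless `compare_true_gradient_with_cd` forces the visible side. Both accumulate log Z one configuration at a time with `logadd`, so exp(-fe) is never formed directly. The sketch below isolates that accumulation. It assumes `logadd(a, b)` = log(e^a + e^b), which is what its use here implies. It also shows that two configurations with free energy -1000 give log Z = 1000 + log 2, while the naive `exp(1000.0)` already overflows.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// log(exp(a) + exp(b)) without overflow: factor out the larger term.
double logadd(double a, double b) {
    if (a < b) std::swap(a, b);  // now a >= b, so exp(b - a) <= 1
    return a + std::log1p(std::exp(b - a));
}

// log Z = log sum_k exp(-fe[k]), accumulated configuration by configuration
// in the same way as the loops of computePartitionFunction().
double logPartitionFromFreeEnergies(const std::vector<double>& fe) {
    double log_z = 0.0;
    for (std::size_t k = 0; k < fe.size(); ++k)
        log_z = (k == 0) ? -fe[k] : logadd(log_z, -fe[k]);
    return log_z;
}
```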

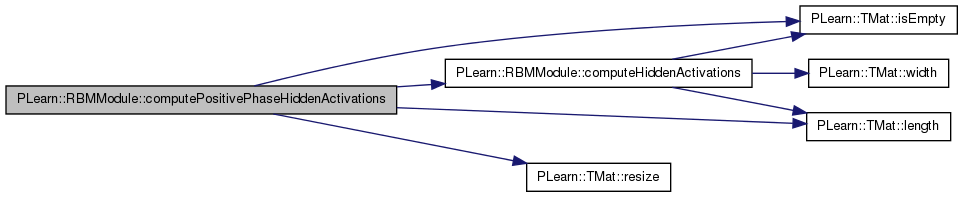

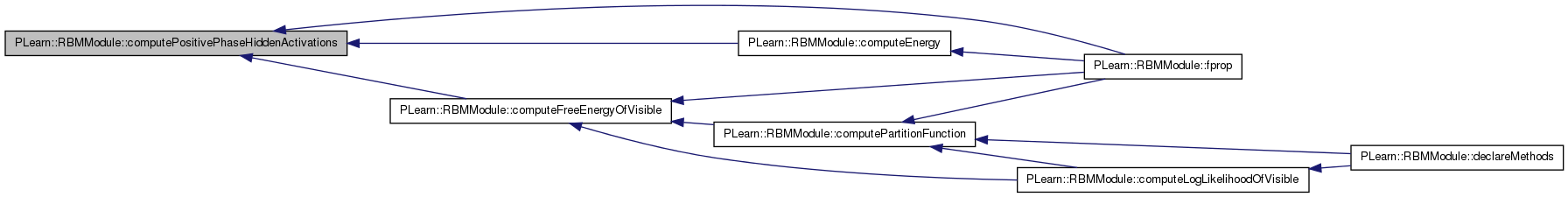

void PLearn::RBMModule::computePositivePhaseHiddenActivations(const Mat &visible)

Compute activations on the hidden layer based on the provided visible input during positive phase.

This method ensures that the hidden activations are computed only once; during a given fprop it should always be called with the same 'visible' input. If 'hidden_act' is not null (and still empty), it is filled with the computed hidden activations.

Definition at line 679 of file RBMModule.cc.

References computeHiddenActivations(), hidden_act, hidden_activations_are_computed, hidden_layer, PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLASSERT, and PLearn::TMat< T >::resize().

Referenced by computeEnergy(), computeFreeEnergyOfVisible(), and fprop().

{

if (hidden_activations_are_computed) {

// Nothing to do.

PLASSERT( !hidden_act || !hidden_act->isEmpty() );

return;

}

computeHiddenActivations(visible);

if (hidden_act && hidden_act->isEmpty())

{

hidden_act->resize(visible.length(),hidden_layer->size);

*hidden_act << hidden_layer->activations;

}

hidden_activations_are_computed = true;

}
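The `hidden_activations_are_computed` flag implements a compute-once cache. The hypothetical class below sketches that pattern: the expensive computation runs on the first request and later requests reuse the stored result. The flag must be cleared whenever `hidden_layer->activations` may have been overwritten, which is why `computePartitionFunction()` resets it after its enumeration loop. Names and the `std::function` interface are illustrative assumptions.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical cache mirroring the 'hidden_activations_are_computed' flag.
class PositivePhaseCache {
public:
    using Vec = std::vector<double>;

    // Returns the cached activations, calling 'compute' only on the first request.
    const Vec& get(const std::function<Vec()>& compute) {
        if (!computed_) {
            activations_ = compute();
            computed_ = true;
        }
        return activations_;
    }

    // Call when the input changes or the underlying buffer may have been overwritten.
    void invalidate() { computed_ = false; }

private:
    bool computed_ = false;
    Vec activations_;
};
```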

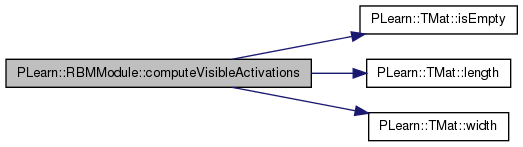

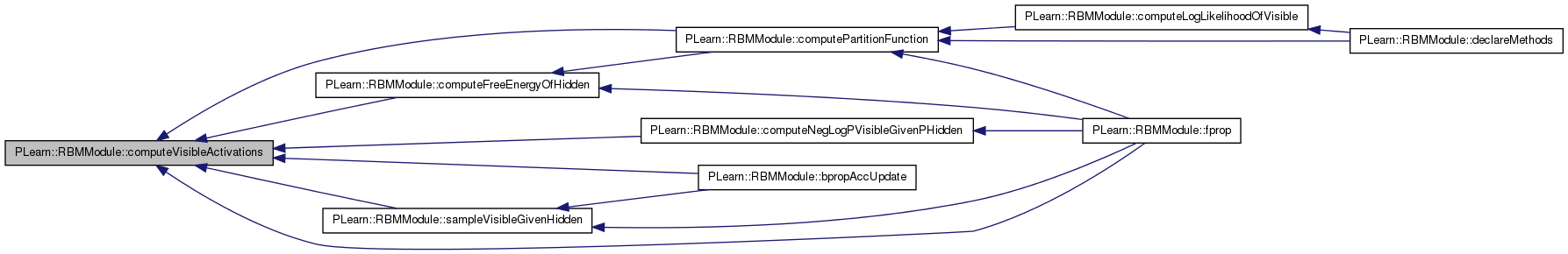

void PLearn::RBMModule::computeVisibleActivations(const Mat &hidden, bool using_reconstruction_connection = false)

Compute activations on the visible layer.

If 'using_reconstruction_connection' is true, then we use the reconstruction connection to compute these activations. Otherwise, we use the normal connection, in a 'top->down' fashion.

Definition at line 698 of file RBMModule.cc.

References connection, i, PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLASSERT, reconstruction_connection, visible_layer, weights, and PLearn::TMat< T >::width().

Referenced by bpropAccUpdate(), computeFreeEnergyOfHidden(), computeNegLogPVisibleGivenPHidden(), computePartitionFunction(), fprop(), and sampleVisibleGivenHidden().

{

if (using_reconstruction_connection)

{

PLASSERT( reconstruction_connection );

reconstruction_connection->setAsUpInputs(hidden);

visible_layer->getAllActivations(reconstruction_connection, 0, true);

}

else

{

if(weights && !weights->isEmpty())

{

PLASSERT( connection->classname() == "RBMMatrixConnection" );

Mat old_weights;

Vec old_activation;

connection->getAllWeights(old_weights);

old_activation = visible_layer->activation;

int up = connection->up_size;

int down = connection->down_size;

PLASSERT( weights->width() == up * down );

visible_layer->setBatchSize( hidden.length() );

for(int i=0; i<hidden.length(); i++)

{

connection->setAllWeights(Mat(up,down,(*weights)(i)));

connection->setAsUpInput(hidden(i));

visible_layer->activation = visible_layer->activations(i);

visible_layer->getAllActivations(connection, 0, false);

}

connection->setAllWeights(old_weights);

visible_layer->activation = old_activation;

}

else

{

connection->setAsUpInputs(hidden);

visible_layer->getAllActivations(connection, 0, true);

}

}

}
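When the normal connection is used "top->down", the visible activations reuse the same hidden-by-visible weight matrix transposed: a_j = b_j + sum_i W[i][j] h_i, that is, b + W'h. A separate `reconstruction_connection` would instead carry its own weights. The sketch below shows the top-down product with `std::vector` stand-ins, assuming an `RBMMatrixConnection`-style layout. It is not the library's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden i (up), visible j (down)

// Top-down pass through the shared weights: a = b + W' h.
Vec visibleActivations(const Mat& W, const Vec& b, const Vec& h) {
    assert(W.size() == h.size());
    Vec a(b);  // start from the visible bias
    for (std::size_t i = 0; i < h.size(); ++i)
        for (std::size_t j = 0; j < b.size(); ++j)
            a[j] += W[i][j] * h[i];
    return a;
}
```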

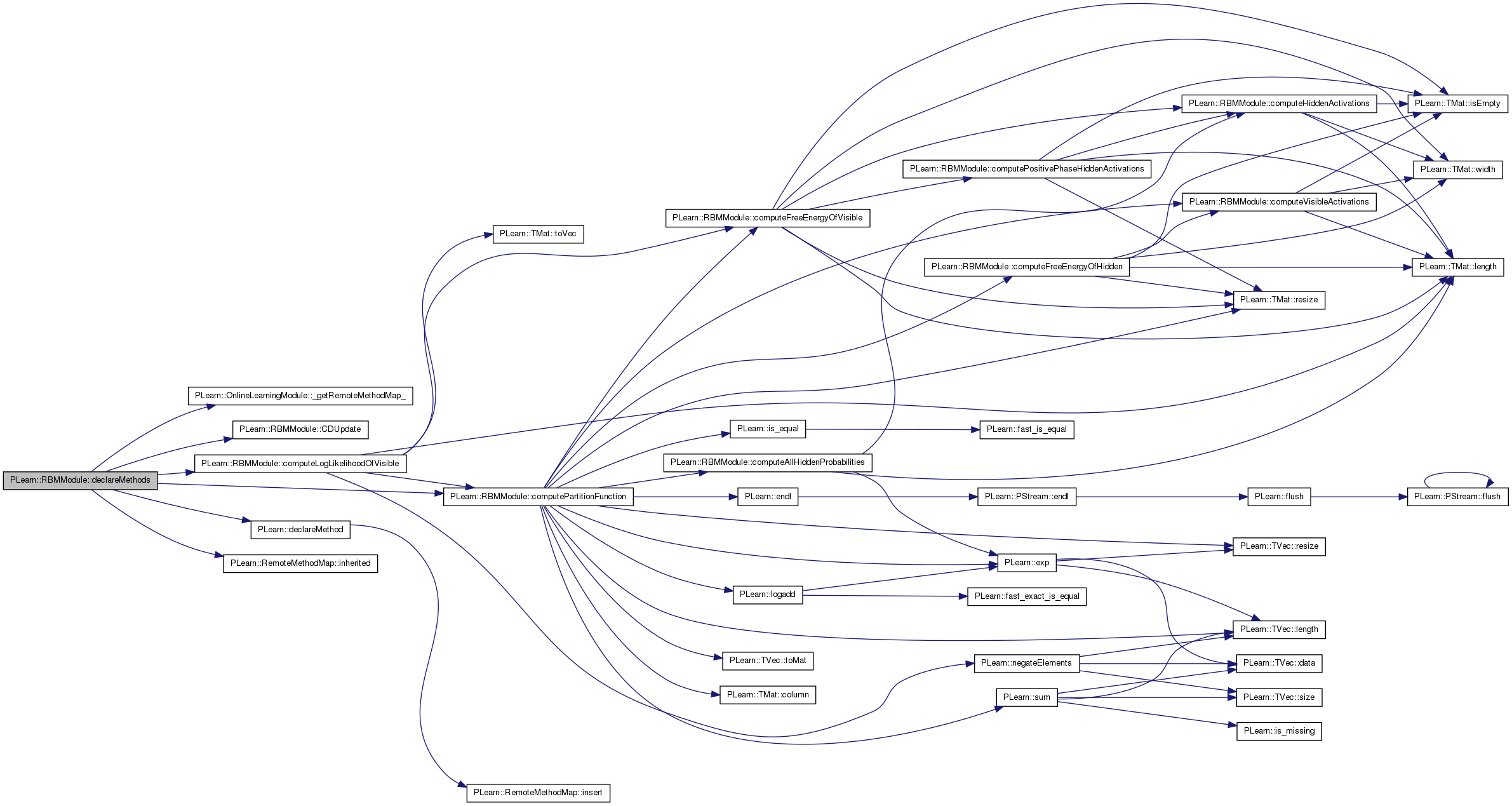

void PLearn::RBMModule::declareMethods(RemoteMethodMap &rmm) [static, protected]

Declare the methods that are remote-callable.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 329 of file RBMModule.cc.

References PLearn::OnlineLearningModule::_getRemoteMethodMap_(), CDUpdate(), computeLogLikelihoodOfVisible(), computePartitionFunction(), PLearn::declareMethod(), and PLearn::RemoteMethodMap::inherited().

{

// Make sure that inherited methods are declared

rmm.inherited(inherited::_getRemoteMethodMap_());

declareMethod(rmm, "CDUpdate", &RBMModule::CDUpdate,

(BodyDoc("Perform one CD_k update"),

ArgDoc ("v_0", "Positive phase statistics on visible layer"),

ArgDoc ("h_0", "Positive phase statistics on hidden layer"),

ArgDoc ("v_k", "Negative phase statistics on visible layer"),

ArgDoc ("h_k", "Negative phase statistics on hidden layer")

));

declareMethod(rmm, "computePartitionFunction",

&RBMModule::computePartitionFunction,

(BodyDoc("Compute the log partition function (will be stored within "

"the 'log_partition_function' field)")));

declareMethod(rmm, "computeLogLikelihoodOfVisible",

&RBMModule::computeLogLikelihoodOfVisible,

(BodyDoc("Compute log-likelihood"),

ArgDoc("visible", "Matrix of visible inputs"),

RetDoc("A vector with the log-likelihood of each input")));

}

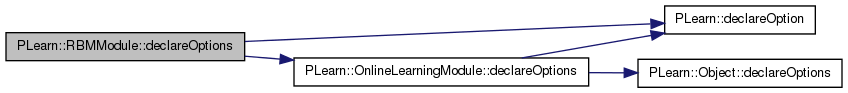

void PLearn::RBMModule::declareOptions(OptionList &ol) [static, protected]

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 166 of file RBMModule.cc.

References PLearn::OptionBase::advanced_level, PLearn::OptionBase::buildoption, cd_learning_rate, compare_true_gradient_with_cd, compute_contrastive_divergence, compute_log_likelihood, connection, PLearn::declareOption(), PLearn::OnlineLearningModule::declareOptions(), deterministic_reconstruction_in_cd, Gibbs_step, grad_learning_rate, hidden_layer, PLearn::OptionBase::learntoption, log_partition_function, min_n_Gibbs_steps, minimize_log_likelihood, n_Gibbs_steps_CD, n_Gibbs_steps_per_generated_sample, n_steps_compare, partition_function_is_stale, reconstruction_connection, standard_cd_bias_grad, standard_cd_grad, standard_cd_weights_grad, stochastic_reconstruction, tied_connection_weights, and visible_layer.

{

// Build options.

declareOption(ol, "visible_layer", &RBMModule::visible_layer,

OptionBase::buildoption,

"Visible layer of the RBM.");

declareOption(ol, "hidden_layer", &RBMModule::hidden_layer,

OptionBase::buildoption,

"Hidden layer of the RBM.");

declareOption(ol, "connection", &RBMModule::connection,

OptionBase::buildoption,

"Connection between the visible and hidden layers.");

declareOption(ol, "reconstruction_connection",

&RBMModule::reconstruction_connection,

OptionBase::buildoption,

"Reconstruction connection between the hidden and visible layers.");

declareOption(ol, "stochastic_reconstruction",

&RBMModule::stochastic_reconstruction,

OptionBase::buildoption,

"If set to true, then reconstruction is not deterministic. Instead,\n"

"we sample a hidden vector given the visible input, then use the\n"

"visible layer's expectation given this sample as reconstruction.",

OptionBase::advanced_level);

declareOption(ol, "grad_learning_rate", &RBMModule::grad_learning_rate,

OptionBase::buildoption,

"Learning rate for the gradient descent step.");

declareOption(ol, "cd_learning_rate", &RBMModule::cd_learning_rate,

OptionBase::buildoption,

"Learning rate for the contrastive divergence step. Note that when\n"

"set to 0, the gradient of the contrastive divergence will not be\n"

"computed at all.");

declareOption(ol, "tied_connection_weights", &RBMModule::tied_connection_weights,

OptionBase::buildoption,

"Whether to keep the connection weights fixed during learning.");

declareOption(ol, "compute_contrastive_divergence", &RBMModule::compute_contrastive_divergence,

OptionBase::buildoption,

"Compute the contrastive divergence in an output port.");

declareOption(ol, "deterministic_reconstruction_in_cd",

&RBMModule::deterministic_reconstruction_in_cd,

OptionBase::buildoption,

"Whether to use the expectation of the visible (given a hidden sample)\n"

"or a sample of the visible in the contrastive divergence learning.\n"

"In other words, instead of the classical Gibbs sampling\n"

" v_0 --> h_0 ~ p(h|v_0) --> v_1 ~ p(v|h_0) --> p(h|v_1)\n"

"we will have by setting 'deterministic_reconstruction_in_cd=1'\n"

" v_0 --> h_0 ~ p(h|v_0) --> v_1 = E(v|h_0) --> p(h|E(v|h_0)).");

declareOption(ol, "standard_cd_grad",

&RBMModule::standard_cd_grad,

OptionBase::buildoption,

"Whether to use the standard contrastive divergence gradient for\n"

"updates, or the true gradient of the contrastive divergence. This\n"

"affects only the gradient w.r.t. internal parameters of the layers\n"

"and connections. Currently, this option works only with layers of\n"

"the type 'RBMBinomialLayer', connected by a 'RBMMatrixConnection'.");

declareOption(ol, "standard_cd_bias_grad",

&RBMModule::standard_cd_bias_grad,

OptionBase::buildoption,

"This option is only used when biases of the hidden layer are given\n"

"through the 'hidden_bias' port. When this is the case, the gradient\n"

"of contrastive divergence w.r.t. these biases is either computed:\n"

"- by the usual formula if 'standard_cd_bias_grad' is true\n"

"- by the true gradient if 'standard_cd_bias_grad' is false.");

declareOption(ol, "standard_cd_weights_grad",

&RBMModule::standard_cd_weights_grad,

OptionBase::buildoption,

"This option is only used when weights of the connection are given\n"

"through the 'weights' port. When this is the case, the gradient of\n"

"contrastive divergence w.r.t. weights is either computed:\n"

"- by the usual formula if 'standard_cd_weights_grad' is true\n"

"- by the true gradient if 'standard_cd_weights_grad' is false.");

declareOption(ol, "n_Gibbs_steps_CD",

&RBMModule::n_Gibbs_steps_CD,

OptionBase::buildoption,

"Number of Gibbs sampling steps in negative phase of "

"contrastive divergence.");

declareOption(ol, "min_n_Gibbs_steps", &RBMModule::min_n_Gibbs_steps,

OptionBase::buildoption,

"Used in generative mode (when visible_sample or hidden_sample is requested)\n"

"when one has to sample from the joint or a marginal of visible and hidden,\n"

"and thus a Gibbs chain has to be run. This option gives the minimum number\n"

"of Gibbs steps to perform in the chain before outputting a sample.\n");

declareOption(ol, "n_Gibbs_steps_per_generated_sample",

&RBMModule::n_Gibbs_steps_per_generated_sample,

OptionBase::buildoption,

"Used in generative mode (when visible_sample or hidden_sample is requested)\n"

"when one has to sample from the joint or a marginal of visible and hidden.\n"

"This option gives the number of steps to run in the Gibbs chain between\n"

"consecutive generated samples that are produced in output of the fprop method.\n"

"By default this is equal to min_n_Gibbs_steps.\n");

declareOption(ol, "compute_log_likelihood",

&RBMModule::compute_log_likelihood,

OptionBase::buildoption,

"Whether to compute the exact RBM generative model's log-likelihood\n"

"(on the neg_log_likelihood port). If false then the neg_log_likelihood\n"

"port just computes the input visible's free energy.\n");

declareOption(ol, "minimize_log_likelihood",

&RBMModule::minimize_log_likelihood,

OptionBase::buildoption,

"Whether to minimize the exact RBM generative model's log-likelihood\n"

"i.e. take stochastic gradient steps w.r.t. the log-likelihood instead\n"

"of w.r.t. the contrastive divergence.\n");

declareOption(ol, "compare_true_gradient_with_cd",

&RBMModule::compare_true_gradient_with_cd,

OptionBase::buildoption,

"If true, then will compute the true gradient (of the NLL) as well\n"

"as the exact non-stochastic CD update, and compare them.",

OptionBase::advanced_level);

declareOption(ol, "n_steps_compare",

&RBMModule::n_steps_compare,

OptionBase::buildoption,

"Number of steps for which we want to compare CD with the true\n"

"gradient (when 'compare_true_gradient_with_cd' is true). This will\n"

"compute P(x_t|x) for t from 1 to 'n_steps_compare'.",

OptionBase::advanced_level);

// Learnt options.

declareOption(ol, "Gibbs_step",

&RBMModule::Gibbs_step,

OptionBase::learntoption,

"Used in generative mode (when visible_sample or hidden_sample is requested)\n"

"when one has to sample from the joint or a marginal of visible and hidden.\n"

"Keeps track of the number of steps that have been run since the beginning\n"

"of the chain.\n");

declareOption(ol, "log_partition_function",

&RBMModule::log_partition_function,

OptionBase::learntoption,

"log(Z) = log(sum_{h,x} exp(-energy(h,x)))\n"

"only computed if compute_log_likelihood is true and\n"

"the neg_log_likelihood port is requested.\n");

declareOption(ol, "partition_function_is_stale",

&RBMModule::partition_function_is_stale,

OptionBase::learntoption,

"Whether parameters have changed since the last computation\n"

"of the log_partition_function (to know if it should be recomputed\n"

"when the neg_log_likelihood port is requested).\n");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
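The two chains contrasted in the `deterministic_reconstruction_in_cd` option can be sketched for binary units as follows. The first hidden vector is always sampled. The visible reconstruction is then either sampled, v_1 ~ p(v|h_0), or set to its expectation, v_1 = E(v|h_0). The chain ends with the hidden expectation p(h|v_1). The struct, the function name and the use of `std::mt19937` are assumptions for this illustration; PLearn's layers draw their samples through their own `random_gen`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[i][j]: hidden i, visible j

double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

struct NegativePhase {
    Vec v1;  // visible reconstruction (sample or expectation)
    Vec h1;  // p(h = 1 | v1), the negative-phase hidden expectation
};

// v_0 -> h_0 ~ p(h|v_0) -> v_1 (sampled, or E(v|h_0) if deterministic) -> p(h|v_1)
NegativePhase negativePhase(const Mat& W, const Vec& b, const Vec& c, const Vec& v0,
                            bool deterministic_reconstruction, std::mt19937& rng) {
    std::uniform_real_distribution<double> unif(0.0, 1.0);
    Vec h0(c.size());
    for (std::size_t i = 0; i < c.size(); ++i) {
        double a = c[i];
        for (std::size_t j = 0; j < v0.size(); ++j) a += W[i][j] * v0[j];
        h0[i] = unif(rng) < sigmoid(a) ? 1.0 : 0.0;  // h_0 ~ p(h | v_0)
    }
    NegativePhase out{Vec(b.size()), Vec(c.size())};
    for (std::size_t j = 0; j < b.size(); ++j) {
        double a = b[j];
        for (std::size_t i = 0; i < c.size(); ++i) a += W[i][j] * h0[i];
        const double p = sigmoid(a);  // E(v_j | h_0)
        out.v1[j] = deterministic_reconstruction ? p : (unif(rng) < p ? 1.0 : 0.0);
    }
    for (std::size_t i = 0; i < c.size(); ++i) {
        double a = c[i];
        for (std::size_t j = 0; j < b.size(); ++j) a += W[i][j] * out.v1[j];
        out.h1[i] = sigmoid(a);  // p(h | v_1)
    }
    return out;
}
```

With the deterministic setting, v_1 holds probabilities in (0, 1) rather than binary values. This removes one source of sampling noise from the negative phase, at the cost of leaving the classical Gibbs chain.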

static const PPath& PLearn::RBMModule::declaringFile() [inline, static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 131 of file RBMModule.cc.

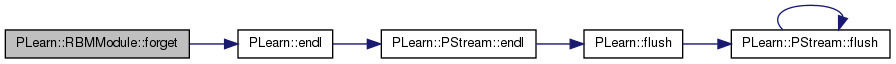

void PLearn::RBMModule::forget() [virtual]

Reset the parameters to the state they would be BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 2501 of file RBMModule.cc.

References connection, PLearn::endl(), Gibbs_step, hidden_layer, PLearn::OnlineLearningModule::name, partition_function_is_stale, PLASSERT, reconstruction_connection, and visible_layer.

{

DBG_MODULE_LOG << "Forgetting RBMModule '" << name << "'" << endl;

PLASSERT( hidden_layer && visible_layer && connection );

hidden_layer->forget();

visible_layer->forget();

connection->forget();

if (reconstruction_connection && reconstruction_connection != connection)

// Avoid calling forget() twice when both connections are the same object.

reconstruction_connection->forget();

Gibbs_step = 0;

partition_function_is_stale = true;

}
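forget() resets Gibbs_step to 0 and sets partition_function_is_stale to true instead of recomputing log(Z) eagerly; according to the option documentation, the value is only recomputed when the neg_log_likelihood port is requested. A minimal sketch of that lazy-caching pattern, using a hypothetical LogPartitionCache class rather than the module's actual members:

```cpp
#include <cstddef>
#include <functional>
#include <utility>

// Hypothetical illustration (not PLearn code) of a cached value guarded by a
// staleness flag, in the spirit of partition_function_is_stale.
class LogPartitionCache {
public:
    explicit LogPartitionCache(std::function<double()> compute)
        : compute_(std::move(compute)) {}

    // Call after forget() or after any parameter update.
    void markStale() { stale_ = true; }

    // Returns the cached value, recomputing it first only if it is stale.
    double get() {
        if (stale_) {
            value_ = compute_();
            ++n_recomputations_;
            stale_ = false;
        }
        return value_;
    }

    std::size_t recomputations() const { return n_recomputations_; }

private:
    std::function<double()> compute_;
    double value_ = 0.0;
    bool stale_ = true;  // starts stale, as right after forget()
    std::size_t n_recomputations_ = 0;
};
```

The expensive computation (for instance an exact enumeration of configurations) then runs at most once between two parameter changes, no matter how many times the value is read.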

void PLearn::RBMModule::fprop(const TVec< Mat * >& ports_value) [virtual]

Overridden.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 889 of file RBMModule.cc.

References PLearn::abs(), all_hidden_cond_prob, all_p_visible, all_visible_cond_prob, PLearn::TVec< T >::append(), PLearn::argmax(), PLearn::OnlineLearningModule::checkProp(), PLearn::TMat< T >::clear(), PLearn::TMat< T >::column(), PLearn::columnSum(), compare_true_gradient_with_cd, compute_contrastive_divergence, compute_log_likelihood, computeAllHiddenProbabilities(), computeEnergy(), computeFreeEnergyOfHidden(), computeFreeEnergyOfVisible(), computeHiddenActivations(), computeNegLogPVisibleGivenPHidden(), computePartitionFunction(), computePositivePhaseHiddenActivations(), computeVisibleActivations(), connection, PLearn::TMat< T >::copy(), deterministic_reconstruction_in_cd, PLearn::diff(), PLearn::OnlineLearningModule::during_training, PLearn::fast_exact_is_equal(), PLearn::TMat< T >::fill(), PLearn::get_pointer(), getPortIndex(), Gibbs_step, hidden_act, hidden_act_store, hidden_activations_are_computed, hidden_bias, hidden_exp_store, hidden_is_output, hidden_layer, i, PLearn::ipow(), PLearn::is_equal(), PLearn::TVec< T >::isEmpty(), PLearn::TMat< T >::isEmpty(), j, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), log_partition_function, PLearn::max(), PLearn::mean(), PLearn::median(), min_n_Gibbs_steps, MISSING_VALUE, n_Gibbs_steps_CD, n_Gibbs_steps_per_generated_sample, n_steps_compare, PLearn::OnlineLearningModule::name, PLearn::negateElements(), PLearn::OnlineLearningModule::nPorts(), p_ht_given_x, p_xt_given_x, partition_function_is_stale, PLASSERT, PLASSERT_MSG, PLCHECK, PLCHECK_MSG, PLERROR, PLearn::product(), PLearn::OnlineLearningModule::random_gen, reconstruction_connection, PLearn::TMat< T >::resize(), sampleHiddenGivenVisible(), sampleVisibleGivenHidden(), PLearn::sigmoid(), PLearn::TMat< T >::size(), stochastic_reconstruction, PLearn::TVec< T >::toMat(), PLearn::TMat< T >::toVecCopy(), PLearn::transposeProduct(), PLearn::transposeProductScaleAcc(), PLearn::OnlineLearningModule::verbosity, visible_layer, weights, and PLearn::TMat< T >::width().
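The fprop listing that follows applies the same two tests to every Mat* it reads from ports_value: a null pointer means the port is not connected, an empty matrix means the caller requests that port as an output, and a non-empty matrix is an input supplied by the caller. A minimal sketch of that convention, assuming a std::vector-based stand-in for PLearn's Mat (only the row count is checked here, whereas the listing calls Mat::isEmpty()); Matrix, PortRole and portRole are hypothetical names:

```cpp
#include <vector>

// Stand-in for PLearn's Mat (assumption): only emptiness matters here.
using Matrix = std::vector<std::vector<double>>;

enum class PortRole { Unused, Input, Output };

// Mirrors tests such as `visible && visible->isEmpty()` in the listing.
PortRole portRole(const Matrix* port) {
    if (!port) return PortRole::Unused;             // port not connected
    return port->empty() ? PortRole::Output          // caller wants it filled
                         : PortRole::Input;          // caller provided data
}
```

For example, `visible_is_output` in the listing corresponds to `portRole(visible) == PortRole::Output`.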

{

PLASSERT( ports_value.length() == nPorts() );

PLASSERT( visible_layer );

PLASSERT( hidden_layer );

PLASSERT( connection );

Mat* visible = ports_value[getPortIndex("visible")];

bool visible_is_output = visible && visible->isEmpty();

Mat* hidden = ports_value[getPortIndex("hidden.state")];

// hidden_is_output is needed in BPROP, which is VERY BAD, VIOLATING OUR DESIGN ASSUMPTIONS

hidden_is_output = hidden && hidden->isEmpty();

hidden_act = ports_value[getPortIndex("hidden_activations.state")];

bool hidden_act_is_output = hidden_act && hidden_act->isEmpty();

Mat* visible_sample = ports_value[getPortIndex("visible_sample")];

bool visible_sample_is_output = visible_sample && visible_sample->isEmpty();

Mat* visible_expectation = ports_value[getPortIndex("visible_expectation")];

bool visible_expectation_is_output = visible_expectation && visible_expectation->isEmpty();

Mat* visible_activation = ports_value[getPortIndex("visible_activations.state")];

bool visible_activation_is_output = visible_activation && visible_activation->isEmpty();

Mat* hidden_sample = ports_value[getPortIndex("hidden_sample")];

bool hidden_sample_is_output = hidden_sample && hidden_sample->isEmpty();

Mat* energy = ports_value[getPortIndex("energy")];

bool energy_is_output = energy && energy->isEmpty();

Mat* neg_log_likelihood = ports_value[getPortIndex("neg_log_likelihood")];

bool neg_log_likelihood_is_output = neg_log_likelihood && neg_log_likelihood->isEmpty();

Mat* neg_log_phidden = ports_value[getPortIndex("neg_log_phidden")];

bool neg_log_phidden_is_output = neg_log_phidden && neg_log_phidden->isEmpty();

Mat* neg_log_pvisible_given_phidden = ports_value[getPortIndex("neg_log_pvisible_given_phidden")];

bool neg_log_pvisible_given_phidden_is_output = neg_log_pvisible_given_phidden && neg_log_pvisible_given_phidden->isEmpty();

Mat* median_reldiff_cd_nll = ports_value[getPortIndex("median_reldiff_cd_nll")];

bool median_reldiff_cd_nll_is_output = median_reldiff_cd_nll && median_reldiff_cd_nll->isEmpty();

Mat* mean_diff_cd_nll = ports_value[getPortIndex("mean_diff_cd_nll")];

bool mean_diff_cd_nll_is_output = mean_diff_cd_nll && mean_diff_cd_nll->isEmpty();

Mat* agreement_cd_nll = ports_value[getPortIndex("agreement_cd_nll")];

bool agreement_cd_nll_is_output = agreement_cd_nll && agreement_cd_nll->isEmpty();

Mat* agreement_stoch = ports_value[getPortIndex("agreement_stoch")];

bool agreement_stoch_is_output = agreement_stoch && agreement_stoch->isEmpty();

Mat* bound_cd_nll = ports_value[getPortIndex("bound_cd_nll")];

bool bound_cd_nll_is_output = bound_cd_nll && bound_cd_nll->isEmpty();

Mat* weights_stats = ports_value[getPortIndex("weights_stats")];

bool weights_stats_is_output = weights_stats && weights_stats->isEmpty();

Mat* ratio_cd_leftout = ports_value[getPortIndex("ratio_cd_leftout")];

bool ratio_cd_leftout_is_output = ratio_cd_leftout && ratio_cd_leftout->isEmpty();

Mat* abs_cd = ports_value[getPortIndex("abs_cd")];

bool abs_cd_is_output = abs_cd && abs_cd->isEmpty();

Mat* nll_grad = ports_value[getPortIndex("nll_grad")];

bool nll_grad_is_output = nll_grad && nll_grad->isEmpty();

hidden_bias = ports_value[getPortIndex("hidden_bias")];

//bool hidden_bias_is_output = hidden_bias && hidden_bias->isEmpty();

weights = ports_value[getPortIndex("weights")];

//bool weights_is_output = weights && weights->isEmpty();

Mat* visible_reconstruction = 0;

Mat* visible_reconstruction_activations = 0;

Mat* reconstruction_error = 0;

if(reconstruction_connection)

{

visible_reconstruction =

ports_value[getPortIndex("visible_reconstruction.state")];

visible_reconstruction_activations =

ports_value[getPortIndex("visible_reconstruction_activations.state")];

reconstruction_error =

ports_value[getPortIndex("reconstruction_error.state")];

}

bool visible_reconstruction_is_output = visible_reconstruction && visible_reconstruction->isEmpty();

bool visible_reconstruction_activations_is_output = visible_reconstruction_activations && visible_reconstruction_activations->isEmpty();

bool reconstruction_error_is_output = reconstruction_error && reconstruction_error->isEmpty();

Mat* contrastive_divergence = 0;

Mat* negative_phase_visible_samples = 0;

Mat* negative_phase_hidden_expectations = 0;

Mat* negative_phase_hidden_activations = NULL;

if (compute_contrastive_divergence)

{

contrastive_divergence = ports_value[getPortIndex("contrastive_divergence")];

/* YB: I don't agree with this error message: the behavior should be adapted to the provided ports.

if (!contrastive_divergence || !contrastive_divergence->isEmpty())

PLERROR("In RBMModule::fprop - When option "

"'compute_contrastive_divergence' is 'true', the "

"'contrastive_divergence' port should be provided, as an "

"output.");*/

negative_phase_visible_samples =

ports_value[getPortIndex("negative_phase_visible_samples.state")];

negative_phase_hidden_expectations =

ports_value[getPortIndex("negative_phase_hidden_expectations.state")];

negative_phase_hidden_activations =

ports_value[getPortIndex("negative_phase_hidden_activations.state")];

}

bool contrastive_divergence_is_output = contrastive_divergence && contrastive_divergence->isEmpty();

//bool negative_phase_visible_samples_is_output = negative_phase_visible_samples && negative_phase_visible_samples->isEmpty();