
PLearn 0.1


#include <EntropyContrastLearner.h>

Public Member Functions | |

| EntropyContrastLearner () | |

| Default constructor. | |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual EntropyContrastLearner * | deepCopy (CopiesMap &copies) const |

| virtual void | initializeParams () |

| virtual int | outputsize () const |

| Returns the size of this learner's output, which typically depends on its inputsize(), targetsize() and option settings. | |

| virtual void | forget () |

| (Re-)initializes the PLearner to its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner. | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

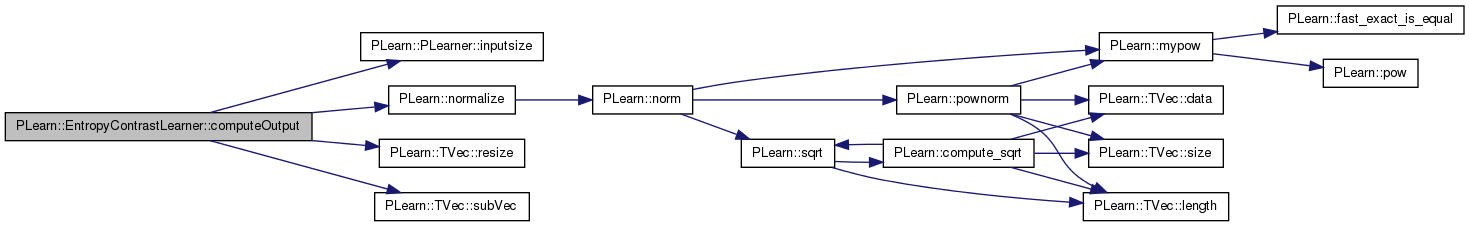

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method). | |

| virtual TVec< string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| string | distribution |

| int | nconstraints |

| The number of constraints to create (also the output size). | |

| int | nhidden |

| The number of hidden units. | |

| PP< Optimizer > | optimizer |

| The optimizer used for training. | |

| real | weight_real |

| real | weight_generated |

| real | weight_extra |

| real | weight_decay_hidden |

| real | weight_decay_output |

| bool | normalize_constraints |

| bool | save_best_params |

| real | sigma_generated |

| real | sigma_min_threshold |

| real | eps |

| Vec | gradient_scaling |

| bool | save_x_hat |

| string | gen_method |

| bool | use_sigma_min_threshold |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| Var | x |

| Var | x_hat |

| VarArray | V |

| VarArray | W |

| VarArray | V_b |

| VarArray | W_b |

| Vec | V_save |

| Vec | V_b_save |

| Vec | W_save |

| Vec | W_b_save |

| VarArray | g |

| Var | mu |

| Var | sigma |

| Var | mu_hat |

| Var | sigma_hat |

| Var | training_cost |

| VarArray | costs |

| Var | f |

| Var | f_hat |

| VarArray | params |

| Func | f_output |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Definition at line 54 of file EntropyContrastLearner.h.

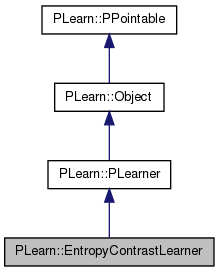

typedef PLearner PLearn::EntropyContrastLearner::inherited [private]

Reimplemented from PLearn::PLearner.

Definition at line 58 of file EntropyContrastLearner.h.

PLearn::EntropyContrastLearner::EntropyContrastLearner()

Default constructor.

Definition at line 71 of file EntropyContrastLearner.cc.

: distribution("normal"),
  weight_real(1),
  weight_generated(1),
  weight_extra(1),
  weight_decay_hidden(0),
  weight_decay_output(0),
  normalize_constraints(true),
  save_best_params(true),
  sigma_generated(0.1),
  sigma_min_threshold(0.1),
  eps(0.0001),
  save_x_hat(false),
  gen_method("N(0,I)"),
  use_sigma_min_threshold(true)
{
    // ### You may or may not want to call build_() to finish building the object
    // build_();
}

string PLearn::EntropyContrastLearner::_classname_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file EntropyContrastLearner.cc.

OptionList & PLearn::EntropyContrastLearner::_getOptionList_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file EntropyContrastLearner.cc.

RemoteMethodMap & PLearn::EntropyContrastLearner::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file EntropyContrastLearner.cc.

Reimplemented from PLearn::PLearner.

Definition at line 92 of file EntropyContrastLearner.cc.

Object * PLearn::EntropyContrastLearner::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::Object.

Definition at line 92 of file EntropyContrastLearner.cc.

void PLearn::EntropyContrastLearner::_static_initialize_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file EntropyContrastLearner.cc.

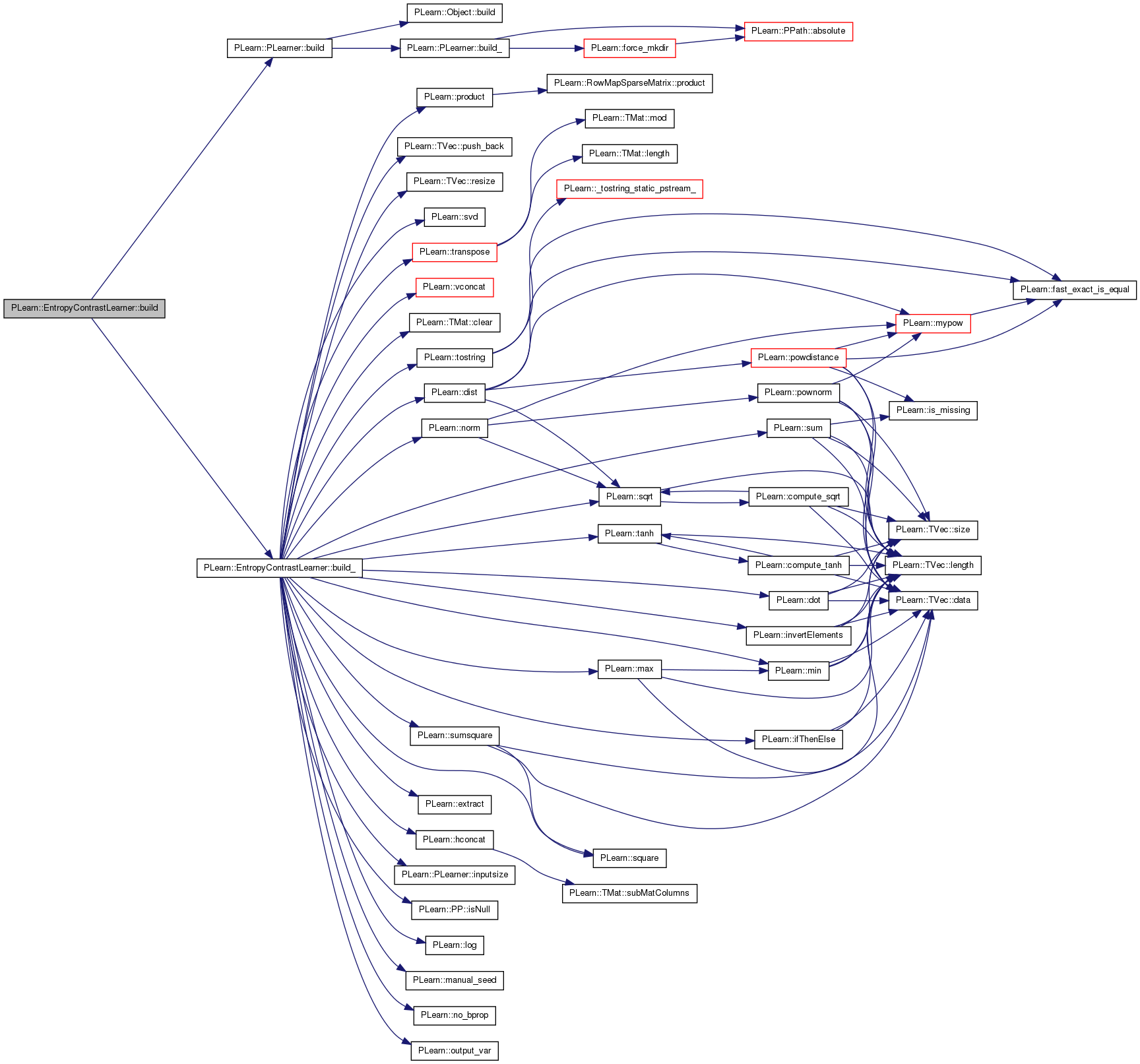

void PLearn::EntropyContrastLearner::build() [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 385 of file EntropyContrastLearner.cc.

References PLearn::PLearner::build(), and build_().

{
    inherited::build();
    build_();
}
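In the PLearn convention suggested by the two doc strings above, the public virtual build() chains up the hierarchy through the `inherited` typedef, while a private, non-virtual build_() finishes only the fields the class itself adds. The sketch below reproduces that call order in plain C++; `Base`, `Derived`, the call log and `run_build_demo()` are hypothetical stand-ins for PLearner and EntropyContrastLearner, not PLearn code.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for PLearner.
class Base {
public:
    explicit Base(std::vector<std::string>& calls) : calls_(calls) {}
    virtual ~Base() = default;
    virtual void build() { build_(); }  // public and virtual
protected:
    std::vector<std::string>& calls_;
private:
    void build_() { calls_.push_back("Base::build_"); }  // private, non-virtual
};

// Hypothetical stand-in for EntropyContrastLearner.
class Derived : public Base {
    typedef Base inherited;
public:
    explicit Derived(std::vector<std::string>& calls) : Base(calls) {}
    // Same shape as EntropyContrastLearner::build().
    void build() override {
        inherited::build();  // finish the parent's part first
        build_();            // then this class's own part
    }
private:
    void build_() { calls_.push_back("Derived::build_"); }
};

// Calls build() through a base reference and returns the recorded call order.
inline std::vector<std::string> run_build_demo() {
    std::vector<std::string> calls;
    Derived d(calls);
    Base& b = d;
    b.build();  // virtual dispatch reaches Derived::build()
    assert(!calls.empty());
    return calls;
}
```

Because each build_() is non-virtual, Base::build() always reaches Base::build_() even when it is invoked from Derived, so each class's own setup runs exactly once per build() call.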

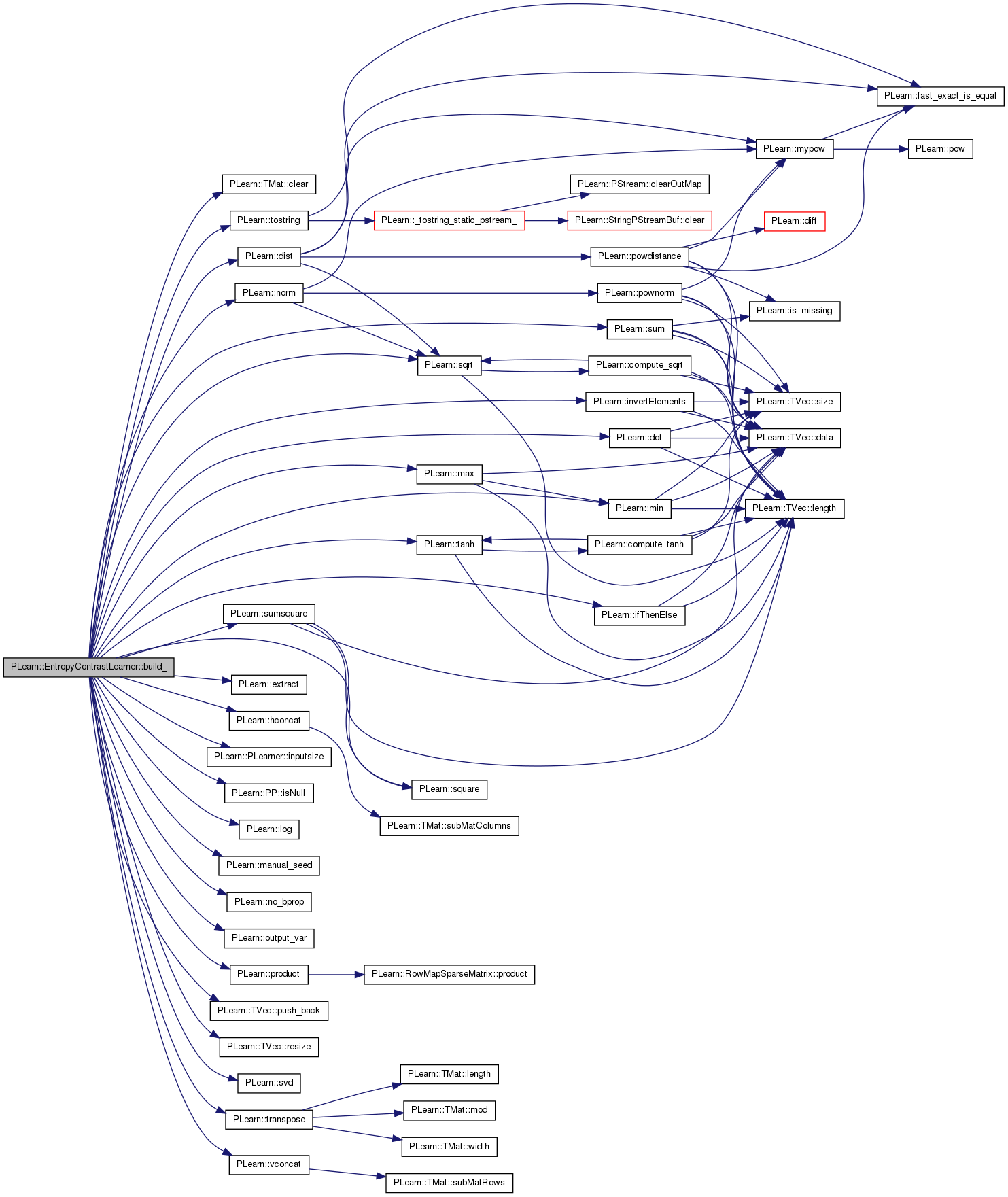

void PLearn::EntropyContrastLearner::build_() [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 139 of file EntropyContrastLearner.cc.

References PLearn::TMat< T >::clear(), costs, PLearn::dist(), distribution, PLearn::dot(), eps, PLearn::extract(), f, f_hat, f_output, g, gen_method, grad, gradient_scaling, PLearn::hconcat(), i, PLearn::ifThenElse(), INDEX, PLearn::PLearner::inputsize(), PLearn::invertElements(), PLearn::PP< T >::isNull(), j, PLearn::log(), PLearn::manual_seed(), PLearn::max(), PLearn::min(), mu, mu_hat, N, n, nconstraints, nhidden, PLearn::no_bprop(), PLearn::norm(), PLearn::output_var(), params, PLearn::product(), PLearn::TVec< T >::push_back(), PLearn::TVec< T >::resize(), save_x_hat, sigma, sigma_generated, sigma_hat, sigma_min_threshold, PLearn::sqrt(), PLearn::square(), PLearn::sum(), PLearn::sumsquare(), PLearn::svd(), PLearn::tanh(), PLearn::tostring(), PLearn::PLearner::train_set, training_cost, PLearn::transpose(), use_sigma_min_threshold, V, V_b, V_b_save, V_save, PLearn::vconcat(), W, W_b, W_b_save, W_save, weight_decay_hidden, weight_decay_output, weight_extra, weight_generated, weight_real, x, x_hat, and zero.

Referenced by build().

{
    manual_seed(time(NULL));
    if (train_set) {
        // input data
        int n = inputsize();
        x = Var(n, "input");

        V_save.resize(nconstraints*nhidden*inputsize());
        V_b_save.resize(nconstraints*nhidden);
        V.resize(nconstraints);
        V_b.resize(nconstraints);
        for(int k=0 ; k<nconstraints ; ++k) {
            V[k] = Var(nhidden,inputsize(),("V_"+tostring(k)).c_str());
            V_b[k] = Var(nhidden,1,("V_b_"+tostring(k)).c_str());
            params.push_back(V[k]);
            params.push_back(V_b[k]);
        }

        int W_size = (nconstraints*(nconstraints+1))/2;
        W.resize(W_size);
        W_b.resize(nconstraints);
        W_save.resize(W_size*nhidden);
        W_b_save.resize(nconstraints);
        for(int i=0 ; i<nconstraints ; ++i) {
            for(int j=0 ; j<=i ; ++j) {
                W[INDEX(i,j)] = Var(1,nhidden,("W_"+tostring(i)+tostring(j)).c_str());
                params.push_back(W[INDEX(i,j)]);
            }
            W_b[i] = Var(1,1,("W_b_"+tostring(i)).c_str());
            params.push_back(W_b[i]);
        }

        // hidden layer
        VarArray hf(nconstraints);
        for(int k=0 ; k<nconstraints ; ++k) {
            hf[k] = tanh(product(V[k],x)+V_b[k]);
        }

        // network output
        VarArray f(nconstraints);
        for(int i=0 ; i<nconstraints ; ++i) {
            for(int j=i ; j>=0 ; --j) {
                if (j==i) {
                    f[i] = product(W[INDEX(i,j)],hf[j]) + W_b[i];
                } else {
                    f[i] = f[i] + product(W[INDEX(i,j)],no_bprop(hf[j]));
                }
            }
        }

        VarArray hg(nconstraints);
        for(int k=0 ; k<nconstraints ; ++k) {
            hg[k] = (1-square(tanh(product(V[k],x)+V_b[k] )))*V[k];
        }

        g.resize(nconstraints);
        for(int i=0 ; i<nconstraints ; ++i) {
            for(int j=i ; j>=0 ; --j) {
                if (j==i) {
                    g[i] = product(W[INDEX(i,j)],hg[j]);
                } else {
                    g[i] = g[i] + product(W[INDEX(i,j)],no_bprop(hg[j]));
                }
            }
        }

        // generated data
        PP<GaussianDistribution> dist = new GaussianDistribution();
        Vec eig_values(n);
        Mat eig_vectors(n,n); eig_vectors.clear();
        for(int i=0; i<n; i++)
        {
            eig_values[i] = 0.1;
            eig_vectors(i,i) = 1.0;
        }
        dist->mu = Vec(n);
        dist->eigenvalues = eig_values;
        dist->eigenvectors = eig_vectors;
        PP<PDistribution> temp;
        temp = dist;
        x_hat = new PDistributionVariable(x,temp);

        if (gen_method=="local_gaussian") {
            Var grad = transpose(vconcat(g));
            Var gs = Var(1,nconstraints);
            gs->value << gradient_scaling;
            grad = grad*invertElements(gs);
            Var svd_vec = svd(grad);
            int M = inputsize();
            int N = nconstraints;
            Var U = extract(svd_vec,0,M,M);
            Var D = extract(svd_vec,M*M+N*N,M,1);
            Var sigma_1 = Var(M,1,"sigma_1");
            sigma_1->matValue.fill(sigma_generated);
            Var eps_var = Var(M,1,"epsilon");
            eps_var->matValue.fill(1/eps);
            Var zero = Var(M);
            zero->matValue.fill(0);
            Var sigma1;
            sigma1 = 5*square(invertElements(min(ifThenElse(D>zero,D,eps_var))));
            Var one = Var(M);
            one->matValue.fill(1);
            D = ifThenElse(D>zero,invertElements(square(D)+1e-10),sigma1*one);
            x_hat = no_bprop(product(U,(sqrt(D)*x_hat))+x);
        }

        if (save_x_hat) {
            x_hat = output_var(x_hat,"x_hat.dat");
        }

        VarArray hf_hat(nconstraints);
        for(int k=0 ; k<nconstraints ; ++k) {
            hf_hat[k] = tanh(product(V[k],x_hat)+V_b[k]);
        }

        VarArray f_hat(nconstraints);
        for(int i=0 ; i<nconstraints ; ++i) {
            for(int j=i ; j>=0 ; --j) {
                if (j==i) {
                    f_hat[i] = product(W[INDEX(i,j)],hf_hat[j]) + W_b[i];
                } else {
                    f_hat[i] = f_hat[i] + product(W[INDEX(i,j)],no_bprop(hf_hat[j]));
                }
            }
        }

        // extra cost - to keep constraints perpendicular
        Var extra_cost;
        for(int i=0 ; i<nconstraints ; ++i) {
            for(int j=i+1 ; j<nconstraints ; ++j) {
                Var tmp = no_bprop(g[i]);
                if (extra_cost.isNull()) {
                    extra_cost = square(dot(tmp,g[j])/product(norm(tmp),norm(g[j])));
                } else {
                    extra_cost = extra_cost + square(dot(tmp,g[j])/product(norm(tmp),norm(g[j])));
                }
            }
        }

        Var f_var = hconcat(f);
        Var f_hat_var = hconcat(f_hat);
        Var c_entropy;
        if (distribution=="normal") {
            mu = Var(1,nconstraints,"mu");
            params.push_back(mu);
            sigma = Var(1,nconstraints,"sigma");
            params.push_back(sigma);
            mu_hat = Var(1,nconstraints,"mu_hat");
            params.push_back(mu_hat);
            sigma_hat = Var(1,nconstraints,"sigma_hat");
            params.push_back(sigma_hat);

            Var c_mu = square(no_bprop(f_var)-mu);
            c_mu->setName("mu cost");
            Var c_sigma = square(sigma-square(no_bprop(c_mu)));
            c_sigma->setName("sigma cost");
            if (use_sigma_min_threshold) {
                Var sigma_min = Var(1,nconstraints);
                sigma_min->matValue.fill(sigma_min_threshold);
                sigma = max(sigma,no_bprop(sigma_min));
            }
            Var c_mu_hat = square(no_bprop(f_hat_var)-mu_hat);
            c_mu_hat->setName("generated mu cost");
            Var c_sigma_hat = square(sigma_hat-square(no_bprop(c_mu_hat)));
            c_sigma_hat->setName("generated sigma cost");

            c_entropy = weight_real*square(f_var-no_bprop(mu))/no_bprop(sigma) -
                        weight_generated*square(f_hat_var-no_bprop(mu_hat))/no_bprop(sigma_hat);
            c_entropy->setName("entropy cost");

            costs = c_entropy & c_mu & c_sigma & c_mu_hat & c_sigma_hat;
            if (nconstraints>1) {
                costs &= weight_extra*extra_cost;
            }
            if (weight_decay_hidden>0) {
                costs &= weight_decay_hidden*sumsquare(hconcat(V));
            }
            if (weight_decay_output>0) {
                costs &= weight_decay_output*sumsquare(hconcat(W));
            }
        }
        else if (distribution=="student") {
            c_entropy = weight_real*log(real(1)+square(f_var)) - weight_generated*log(real(1)+square(f_hat_var));
            costs.push_back(c_entropy);
            if (nconstraints>1) {
                costs &= weight_extra*extra_cost;
            }
            if (weight_decay_hidden>0) {
                costs &= weight_decay_hidden*sumsquare(hconcat(V));
            }
            if (weight_decay_output>0) {
                costs &= weight_decay_output*sumsquare(hconcat(W));
            }
        }

        training_cost = sum(hconcat(costs));
        training_cost->setName("cost");
        f_output = Func(x, hconcat(g));
    }
}
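For the "normal" distribution, build_() forms, for each constraint, the entropy term weight_real * (f - mu)^2 / sigma - weight_generated * (f_hat - mu_hat)^2 / sigma_hat, and training_cost sums it over the constraints together with the mu/sigma tracking costs, the orthogonality penalty and any weight decay. Minimizing it draws f toward mu on real inputs and pushes f_hat away from mu_hat on generated ones. The "student" branch uses weight_real * log(1 + f^2) - weight_generated * log(1 + f_hat^2) instead. The sketch below evaluates only these entropy terms on plain vectors; it assumes that `max(sigma, sigma_min)` acts element-wise, and `ContrastWeights`, `normal_entropy_cost()` and `student_entropy_cost()` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Relative weights, mirroring the weight_real / weight_generated options (default 1).
struct ContrastWeights {
    double real = 1.0;
    double generated = 1.0;
};

// "normal" branch: sum_k w_r (f_k - mu_k)^2 / s_k - w_g (fh_k - muh_k)^2 / sh_k,
// where s_k = max(sigma_k, sigma_min) (assumed element-wise floor, as with
// use_sigma_min_threshold). sigma_hat is not floored, matching build_().
double normal_entropy_cost(const std::vector<double>& f, const std::vector<double>& f_hat,
                           const std::vector<double>& mu, const std::vector<double>& sigma,
                           const std::vector<double>& mu_hat,
                           const std::vector<double>& sigma_hat,
                           ContrastWeights w, double sigma_min) {
    assert(f.size() == f_hat.size() && f.size() == mu.size() && f.size() == sigma.size());
    assert(f.size() == mu_hat.size() && f.size() == sigma_hat.size());
    double cost = 0.0;
    for (std::size_t k = 0; k < f.size(); ++k) {
        const double s = std::max(sigma[k], sigma_min);
        const double dr = f[k] - mu[k];          // real-data deviation
        const double dg = f_hat[k] - mu_hat[k];  // generated-data deviation
        cost += w.real * dr * dr / s - w.generated * dg * dg / sigma_hat[k];
    }
    return cost;
}

// "student" branch: sum_k w_r log(1 + f_k^2) - w_g log(1 + fh_k^2).
double student_entropy_cost(const std::vector<double>& f, const std::vector<double>& f_hat,
                            ContrastWeights w) {
    assert(f.size() == f_hat.size());
    double cost = 0.0;
    for (std::size_t k = 0; k < f.size(); ++k)
        cost += w.real * std::log1p(f[k] * f[k]) - w.generated * std::log1p(f_hat[k] * f_hat[k]);
    return cost;
}
```

For example, with one constraint at f = 1, f_hat = 2, mu = mu_hat = 0 and unit sigmas, the normal term is 1 - 4 = -3.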

string PLearn::EntropyContrastLearner::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file EntropyContrastLearner.cc.

void PLearn::EntropyContrastLearner::computeCostsFromOutputs(const Vec & input, const Vec & output, const Vec & target, Vec & costs) const [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 548 of file EntropyContrastLearner.cc.

{
    // Compute the costs from *already* computed output.
    // ...
}

void PLearn::EntropyContrastLearner::computeOutput(const Vec & input, Vec & output) const [virtual]

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 528 of file EntropyContrastLearner.cc.

References f_output, PLearn::PLearner::inputsize(), nconstraints, PLearn::normalize(), normalize_constraints, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::subVec().

{
    int nout = inputsize()*nconstraints;
    output.resize(nout);
    f_output->fprop(input,output);
    if (normalize_constraints) {
        int is = inputsize();
        for(int k=0 ; k<nconstraints ; ++k) {
            Vec tmp(is);
            tmp = output.subVec(k*is,is);
            normalize(tmp,2);
            tmp /= 15;
        }
    }
}
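computeOutput() evaluates f_output, which build_() defines as the concatenation of the vectors g_k = df_k/dx. With h_j = tanh(V_j x + V_b_j) and f_i = sum over j <= i of W_ij . h_j + W_b_i, this gives g_i = sum over j <= i of W_ij . diag(1 - h_j^2) V_j, i.e. inputsize()*nconstraints values in total; the no_bprop() wrappers on the cross terms only block training gradients and do not change these forward values. The standalone sketch below recomputes this with plain vectors. Assumptions: INDEX (whose definition is not shown here) is taken to be the packing `tri_index(i, j) = i(i+1)/2 + j`, consistent with W_size = nconstraints(nconstraints+1)/2, and `normalize(tmp, 2)` is taken to rescale to unit Euclidean norm; `ConstraintNet` and its members are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: m[row][col]

// Assumed packing of the lower triangle (j <= i) into a flat array.
inline std::size_t tri_index(std::size_t i, std::size_t j) { return i * (i + 1) / 2 + j; }

struct ConstraintNet {
    std::vector<Mat> V;   // nconstraints matrices, nhidden x inputsize
    std::vector<Vec> Vb;  // nconstraints hidden biases, length nhidden
    std::vector<Vec> W;   // packed lower triangle of output rows, length nhidden
    Vec Wb;               // one output bias per constraint

    std::size_t nc() const { return V.size(); }

    // h_k = tanh(V_k x + b_k)
    Vec hidden(std::size_t k, const Vec& x) const {
        Vec h(V[k].size());
        for (std::size_t r = 0; r < h.size(); ++r) {
            double a = Vb[k][r];
            for (std::size_t d = 0; d < x.size(); ++d) a += V[k][r][d] * x[d];
            h[r] = std::tanh(a);
        }
        return h;
    }

    // f_i = sum_{j<=i} W_ij . h_j + Wb_i
    double f(std::size_t i, const Vec& x) const {
        double out = Wb[i];
        for (std::size_t j = 0; j <= i; ++j) {
            const Vec h = hidden(j, x);
            const Vec& w = W[tri_index(i, j)];
            for (std::size_t r = 0; r < h.size(); ++r) out += w[r] * h[r];
        }
        return out;
    }

    // g_i = df_i/dx = sum_{j<=i} W_ij . diag(1 - h_j^2) V_j   (length inputsize)
    Vec g(std::size_t i, const Vec& x) const {
        Vec out(x.size(), 0.0);
        for (std::size_t j = 0; j <= i; ++j) {
            const Vec h = hidden(j, x);
            const Vec& w = W[tri_index(i, j)];
            for (std::size_t r = 0; r < h.size(); ++r) {
                const double s = w[r] * (1.0 - h[r] * h[r]);
                for (std::size_t d = 0; d < x.size(); ++d) out[d] += s * V[j][r][d];
            }
        }
        return out;
    }

    // Like computeOutput(): concatenate g_0..g_{nc-1}; if requested, rescale each
    // block to unit L2 norm and then divide it by 15.
    Vec output(const Vec& x, bool normalize_constraints) const {
        Vec out;
        for (std::size_t i = 0; i < nc(); ++i) {
            Vec gi = g(i, x);
            if (normalize_constraints) {
                double norm = 0.0;
                for (double v : gi) norm += v * v;
                norm = std::sqrt(norm);
                assert(norm > 0.0);
                for (double& v : gi) v = v / norm / 15.0;
            }
            out.insert(out.end(), gi.begin(), gi.end());
        }
        return out;
    }
};
```

A central finite difference of f(i, x) along each input coordinate should agree with g(i, x), which is a cheap way to check the derivation.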

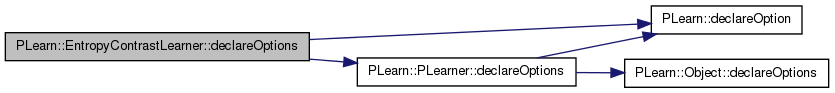

void PLearn::EntropyContrastLearner::declareOptions(OptionList & ol) [static, protected]

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 94 of file EntropyContrastLearner.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), distribution, eps, gen_method, gradient_scaling, nconstraints, nhidden, normalize_constraints, optimizer, save_best_params, save_x_hat, sigma_generated, sigma_min_threshold, use_sigma_min_threshold, weight_decay_hidden, weight_decay_output, weight_extra, weight_generated, and weight_real.

{
    declareOption(ol, "nconstraints", &EntropyContrastLearner::nconstraints, OptionBase::buildoption,
                  "The number of constraints to create (that's also the outputsize)");

    declareOption(ol, "nhidden", &EntropyContrastLearner::nhidden, OptionBase::buildoption,
                  "the number of hidden units");

    declareOption(ol, "optimizer", &EntropyContrastLearner::optimizer, OptionBase::buildoption,
                  "specify the optimizer to use\n");

    declareOption(ol, "distribution", &EntropyContrastLearner::distribution, OptionBase::buildoption,
                  "the distribution to use\n");

    declareOption(ol, "weight_real", &EntropyContrastLearner::weight_real, OptionBase::buildoption,
                  "the relative weight for the cost of the real data, for default is 1\n");

    declareOption(ol, "weight_generated", &EntropyContrastLearner::weight_generated, OptionBase::buildoption,
                  "the relative weight for the cost of the generated data, for default is 1\n");

    declareOption(ol, "weight_extra", &EntropyContrastLearner::weight_extra, OptionBase::buildoption,
                  "the relative weight for the extra cost, for default is 1\n");

    declareOption(ol, "weight_decay_hidden", &EntropyContrastLearner::weight_decay_hidden, OptionBase::buildoption,
                  "decay factor for the hidden units\n");

    declareOption(ol, "weight_decay_output", &EntropyContrastLearner::weight_decay_output, OptionBase::buildoption,
                  "decay factor for the output units\n");

    declareOption(ol, "normalize_constraints", &EntropyContrastLearner::normalize_constraints, OptionBase::buildoption,
                  "normalize the output constraints\n");

    declareOption(ol, "save_best_params", &EntropyContrastLearner::save_best_params, OptionBase::buildoption,
                  "specify if the best params are saved on each stage\n");

    declareOption(ol, "sigma_generated", &EntropyContrastLearner::sigma_generated, OptionBase::buildoption,
                  "the sigma for the gaussian from which we get the generated data\n");

    declareOption(ol, "sigma_min_threshold", &EntropyContrastLearner::sigma_min_threshold, OptionBase::buildoption,
                  "the minimum value for each element of sigma of the computed features\n");

    declareOption(ol, "eps", &EntropyContrastLearner::eps, OptionBase::buildoption,
                  "we ignore singular values smaller than this.\n");

    declareOption(ol, "gradient_scaling", &EntropyContrastLearner::gradient_scaling, OptionBase::buildoption,
                  "");

    declareOption(ol, "save_x_hat", &EntropyContrastLearner::save_x_hat, OptionBase::buildoption,
                  "Save generated data to a file(for debug purposes).");

    declareOption(ol, "gen_method", &EntropyContrastLearner::gen_method, OptionBase::buildoption,
                  "The method used to generate new points.");

    declareOption(ol, "use_sigma_min_threshold", &EntropyContrastLearner::use_sigma_min_threshold, OptionBase::buildoption,
                  "Specify if the sigma of the features should be limited.");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
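Each declareOption() call above binds an option name and a help string to a data member through a pointer to member, which is presumably what lets the framework set options by name. The following is a deliberately simplified, hypothetical analogue of that mechanism (it is not PLearn's OptionList) covering a few of this learner's build options. `OptionTable`, `LearnerOptions` and `make_option_table()` are invented for illustration, and the zero defaults for nconstraints and nhidden are assumptions, because the constructor does not initialize those two members.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <sstream>
#include <string>

// Hypothetical option table: maps a name to a setter for one data member of T.
template <class T>
class OptionTable {
public:
    // Bind `name` to the data member `member` of T, together with a help string.
    template <class M>
    void declare(const std::string& name, M T::*member, const std::string& help) {
        help_[name] = help;
        setters_[name] = [member](T& obj, const std::string& text) {
            std::istringstream in(text);
            in >> std::boolalpha >> (obj.*member);  // bools are read as "true"/"false"
            return !in.fail();
        };
    }

    // Parse `text` into the member registered as `name`; false if unknown or unparsable.
    bool set(T& obj, const std::string& name, const std::string& text) const {
        auto it = setters_.find(name);
        return it != setters_.end() && it->second(obj, text);
    }

    std::string help(const std::string& name) const {
        auto it = help_.find(name);
        return it == help_.end() ? std::string() : it->second;
    }

private:
    std::map<std::string, std::function<bool(T&, const std::string&)>> setters_;
    std::map<std::string, std::string> help_;
};

// Hypothetical subset of the learner's build options.
struct LearnerOptions {
    int nconstraints = 0;                 // assumed default (not set by the constructor)
    int nhidden = 0;                      // assumed default (not set by the constructor)
    std::string distribution = "normal";  // constructor default
    double weight_real = 1.0;             // constructor default
    bool normalize_constraints = true;    // constructor default
};

inline OptionTable<LearnerOptions> make_option_table() {
    OptionTable<LearnerOptions> ol;
    ol.declare("nconstraints", &LearnerOptions::nconstraints,
               "The number of constraints to create (that's also the outputsize)");
    ol.declare("nhidden", &LearnerOptions::nhidden, "the number of hidden units");
    ol.declare("distribution", &LearnerOptions::distribution, "the distribution to use");
    ol.declare("weight_real", &LearnerOptions::weight_real,
               "the relative weight for the cost of the real data");
    ol.declare("normalize_constraints", &LearnerOptions::normalize_constraints,
               "normalize the output constraints");
    return ol;
}
```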

static const PPath& PLearn::EntropyContrastLearner::declaringFile() [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 156 of file EntropyContrastLearner.h.

EntropyContrastLearner * PLearn::EntropyContrastLearner::deepCopy(CopiesMap & copies) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file EntropyContrastLearner.cc.

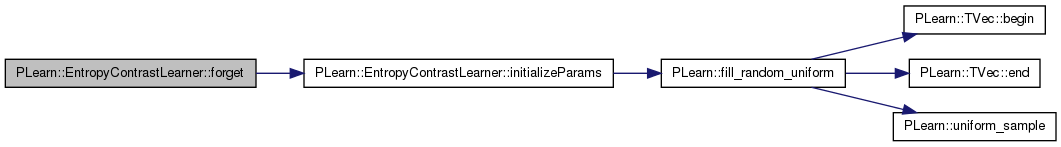

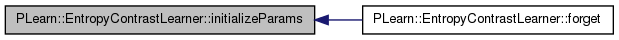

void PLearn::EntropyContrastLearner::forget() [virtual]

(Re-)initializes the PLearner to its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner.

Reimplemented from PLearn::PLearner.

Definition at line 412 of file EntropyContrastLearner.cc.

References initializeParams(), PLearn::PLearner::stage, and PLearn::PLearner::train_set.

{
    if (train_set) initializeParams();
    stage = 0;
}

OptionList & PLearn::EntropyContrastLearner::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file EntropyContrastLearner.cc.

OptionMap & PLearn::EntropyContrastLearner::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file EntropyContrastLearner.cc.

RemoteMethodMap & PLearn::EntropyContrastLearner::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file EntropyContrastLearner.cc.

TVec< string > PLearn::EntropyContrastLearner::getTestCostNames() const [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method).

Implements PLearn::PLearner.

Definition at line 555 of file EntropyContrastLearner.cc.

{
    // Return the names of the costs computed by computeCostsFromOutputs
    // (these may or may not be exactly the same as what's returned by getTrainCostNames).
    TVec<string> ret;
    return ret;
}

TVec< string > PLearn::EntropyContrastLearner::getTrainCostNames() const [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 563 of file EntropyContrastLearner.cc.

{
    // Return the names of the objective costs that the train method computes and
    // for which it updates the VecStatsCollector train_stats
    // (these may or may not be exactly the same as what's returned by getTestCostNames).
    TVec<string> ret;
    return ret;
}

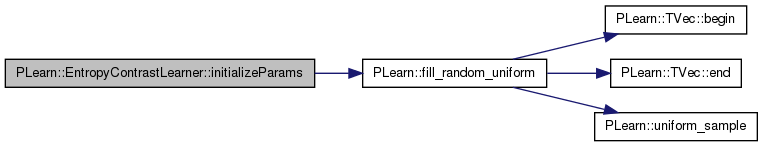

void PLearn::EntropyContrastLearner::initializeParams() [virtual]

Definition at line 572 of file EntropyContrastLearner.cc.

References distribution, PLearn::fill_random_uniform(), mu, mu_hat, nconstraints, sigma, sigma_hat, V, V_b, W, and W_b.

Referenced by forget().

{
    real delta = 1; //1.0 / sqrt(real(inputsize()));
    for(int k=0 ; k<nconstraints ; ++k) {
        fill_random_uniform(V[k]->matValue, -delta, delta);
        fill_random_uniform(V_b[k]->matValue, -delta, delta);
        fill_random_uniform(W_b[k]->matValue, -delta, delta);
        // V_b[k]->matValue.fill(0);
        // W_b[k]->matValue.fill(0);
    }
    delta = 1;//1.0 / real(nhidden);
    for(int k=0 ; k<((nconstraints*(nconstraints+1))/2) ; ++k) {
        fill_random_uniform(W[k]->matValue, -delta, delta);
    }
    if (distribution=="normal") {
        mu->matValue.fill(0);
        sigma->matValue.fill(1);
        mu_hat->matValue.fill(0);
        sigma_hat->matValue.fill(1);
    }
}
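initializeParams() draws every weight and bias uniformly from [-delta, delta] with delta = 1 (the commented-out 1/sqrt(inputsize) and 1/nhidden scalings are disabled), fills nconstraints(nconstraints+1)/2 output rows W, and, for the "normal" distribution, resets mu and mu_hat to 0 and sigma and sigma_hat to 1. A standalone sketch using `<random>` follows; `InitializedParams` and `initialize_params()` are hypothetical, and the explicit seed is an assumption added for reproducibility, since build_() seeds PLearn's generator with time(NULL).

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

struct InitializedParams {
    std::vector<std::vector<double>> V, Vb, W, Wb;  // V[k] is nhidden*inputsize values
    std::vector<double> mu, sigma, mu_hat, sigma_hat;
};

InitializedParams initialize_params(int nc, int nhidden, int inputsize,
                                    bool normal_distribution, unsigned seed) {
    assert(nc > 0 && nhidden > 0 && inputsize > 0);
    std::mt19937 rng(seed);
    const double delta = 1.0;  // same constant as initializeParams()
    std::uniform_real_distribution<double> uniform(-delta, delta);
    // Draw n independent values in [-delta, delta).
    auto draw = [&](std::size_t n) {
        std::vector<double> v(n);
        for (double& e : v) e = uniform(rng);
        return v;
    };

    InitializedParams p;
    for (int k = 0; k < nc; ++k) {
        p.V.push_back(draw(nhidden * inputsize));
        p.Vb.push_back(draw(nhidden));
        p.Wb.push_back(draw(1));
    }
    for (int k = 0; k < nc * (nc + 1) / 2; ++k) p.W.push_back(draw(nhidden));

    if (normal_distribution) {
        p.mu.assign(nc, 0.0);
        p.sigma.assign(nc, 1.0);
        p.mu_hat.assign(nc, 0.0);
        p.sigma_hat.assign(nc, 1.0);
    }
    return p;
}
```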

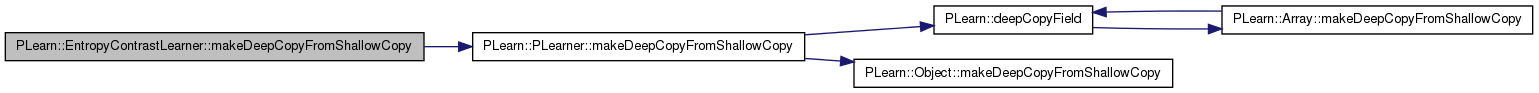

void PLearn::EntropyContrastLearner::makeDeepCopyFromShallowCopy(CopiesMap & copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 392 of file EntropyContrastLearner.cc.

References PLearn::PLearner::makeDeepCopyFromShallowCopy(), and PLERROR.

{
    inherited::makeDeepCopyFromShallowCopy(copies);

    // ### Call deepCopyField on all "pointer-like" fields
    // ### that you wish to be deepCopied rather than
    // ### shallow-copied.
    // ### ex:
    // deepCopyField(trainvec, copies);

    // ### Remove this line when you have fully implemented this method.
    PLERROR("EntropyContrastLearner::makeDeepCopyFromShallowCopy not fully (correctly) implemented yet!");
}
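makeDeepCopyFromShallowCopy() is still a stub that raises PLERROR. Its template comments describe the usual recipe: start from a shallow copy, then call deepCopyField() on each pointer-like field with the shared copies map, which records copies already made so that an object reachable through several fields is duplicated only once. The sketch illustrates that recipe with `std::shared_ptr`; `Node`, `Holder` and this `CopiesMap` alias are hypothetical and much simpler than PLearn's types.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <vector>

struct Node;
// Hypothetical copies map: original object -> its copy.
using CopiesMap = std::map<const Node*, std::shared_ptr<Node>>;

struct Node {
    std::vector<double> value;

    // Return the existing copy if this node was already copied, else make one.
    std::shared_ptr<Node> deep_copy(CopiesMap& copies) const {
        auto it = copies.find(this);
        if (it != copies.end()) return it->second;
        auto c = std::make_shared<Node>(*this);
        copies[this] = c;
        return c;
    }
};

struct Holder {
    std::shared_ptr<Node> a, b;  // may alias the same Node

    // Analogue of makeDeepCopyFromShallowCopy(): replace each pointer-like field by
    // its copy, in the spirit of deepCopyField(field, copies).
    void make_deep_copy_from_shallow_copy(CopiesMap& copies) {
        if (a) a = a->deep_copy(copies);
        if (b) b = b->deep_copy(copies);
    }

    Holder deep_copy() const {
        Holder h = *this;  // shallow copy: h.a and h.b still point at our Nodes
        CopiesMap copies;
        h.make_deep_copy_from_shallow_copy(copies);
        return h;
    }
};
```

If a and b alias one Node in the original, they alias one (new) Node in the copy, and changes to the copy do not reach the original.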

int PLearn::EntropyContrastLearner::outputsize() const [virtual]

Returns the size of this learner's output, which typically depends on its inputsize(), targetsize() and option settings.

Implements PLearn::PLearner.

Definition at line 407 of file EntropyContrastLearner.cc.

References nconstraints.

{
    return nconstraints;
}

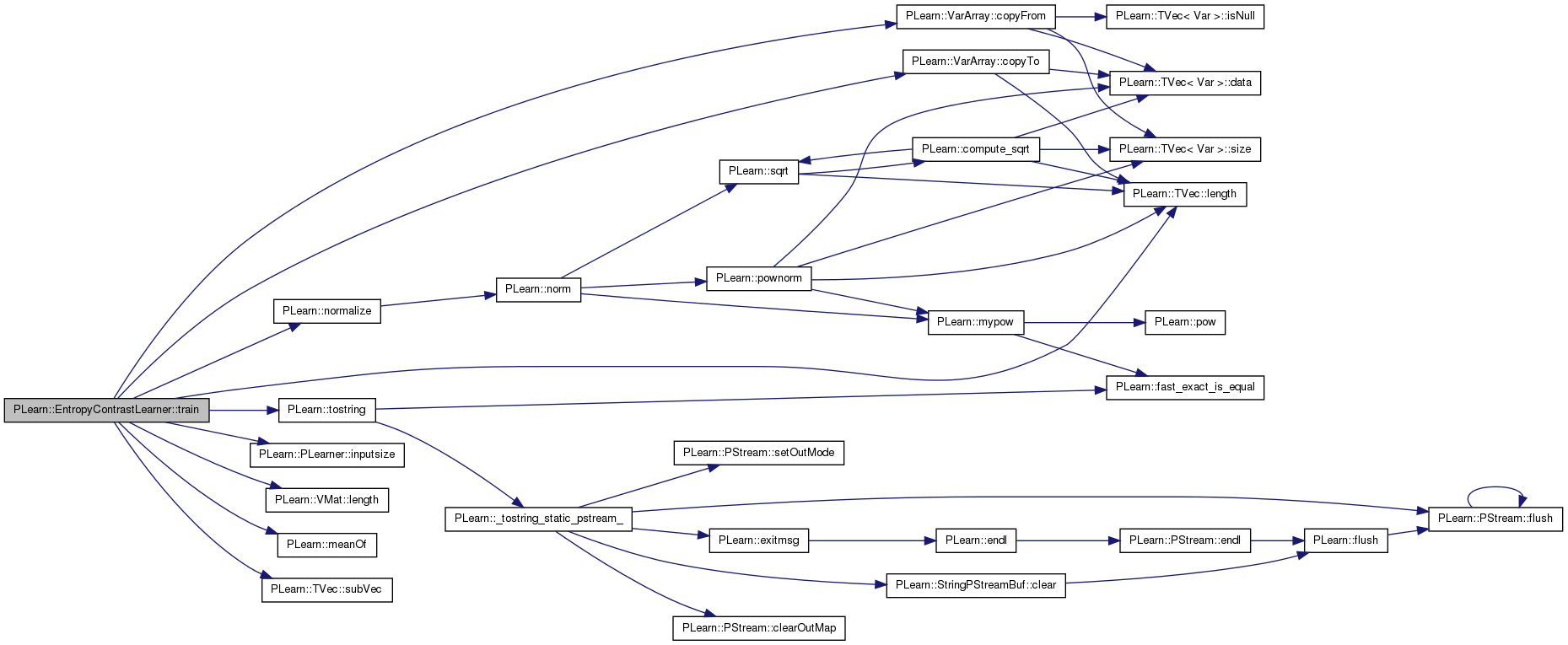

void PLearn::EntropyContrastLearner::train() [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 419 of file EntropyContrastLearner.cc.

References PLearn::VarArray::copyFrom(), PLearn::VarArray::copyTo(), costs, f_output, i, PLearn::PLearner::inputsize(), PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::meanOf(), nconstraints, PLearn::normalize(), PLearn::PLearner::nstages, optimizer, params, PLERROR, PLearn::PLearner::report_progress, save_best_params, sigma, PLearn::PLearner::stage, PLearn::TVec< T >::subVec(), PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_cost, V, V_save, PLearn::PLearner::verbosity, W, W_save, and x.

{
    if(!train_stats)  // make a default stats collector, in case there's none
        train_stats = new VecStatsCollector();

    int l = train_set->length();
    int nsamples = 1;
    Func paramf = Func(x, training_cost); // parameterized function to optimize
    //displayFunction(paramf);
    Var totalcost = meanOf(train_set, paramf, nsamples);

    if(optimizer)
    {
        optimizer->setToOptimize(params, totalcost);
        optimizer->build();
        optimizer->reset();
    }
    else PLERROR("EntropyContrastLearner::train can't train without setting an optimizer first!");

    PP<ProgressBar> pb;
    if(report_progress>0) {
        pb = new ProgressBar("Training EntropyContrastLearner from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);
    }

    real min_cost = 1e10;
    int optstage_per_lstage = l/nsamples;
    while(stage<nstages)
    {
        optimizer->nstages = optstage_per_lstage;
        // clear statistics of previous epoch
        train_stats->forget();
        optimizer->optimizeN(*train_stats);
        train_stats->finalize(); // finalize statistics for this epoch

        if (save_best_params) {
            if (fabs(training_cost->valuedata[0])<min_cost) {
                min_cost = fabs(training_cost->valuedata[0]);
                V.copyTo(V_save);
                // V_b.copyTo(V_b_save);
                W.copyTo(W_save);
                // W_b.copyTo(W_b_save);
            }
        }

        if (verbosity>0) {
            cout << "Stage: " << stage << ", training cost: " << training_cost->matValue;
            // for(int i=0 ; i<W.length() ; ++i) {
            //     cout << W[i] << "\n";
            // }
            // cout << "---------------------------------------\n";
            cout << sigma << "\n";
            for(int i=0 ; i<costs.length() ; ++i) {
                cout << costs[i] << "\n";
            }
            cout << "---------------------------------------\n";
        }

        ++stage;
        if(pb) {
            pb->update(stage);
        }
    }

    if (save_best_params) {
        V.copyFrom(V_save);
        // V_b.copyFrom(V_b_save);
        W.copyFrom(W_save);
        // W_b.copyFrom(W_b_save);
    }

    Vec x_(inputsize());
    Vec g_(inputsize()*nconstraints);
    ofstream file1("gen.dat");
    for(int t=0 ; t<200 ; ++t) {
        train_set->getRow(t,x_);
        f_output->fprop(x_,g_);
        file1 << x_ << " ";
        for(int k=0 ; k<nconstraints ; ++k) {
            int is = inputsize();
            Vec tmp(is);
            tmp = g_.subVec(k*is,is);
            normalize(tmp,2);
            tmp /= 15;
            file1 << tmp << " ";
        }
        file1 << "\n";
    }
    file1.close();
}
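With save_best_params enabled, train() snapshots V and W whenever the absolute training cost after a stage drops below the best value seen so far (starting from 1e10), and copies that snapshot back after the last stage; V_b and W_b are not saved because those lines are commented out. The sketch reduces this bookkeeping to a single parameter vector. `train_keep_best()` and the `run_stage` callback, which stands in for one optimizer->optimizeN() stage, are hypothetical, and seeding the snapshot with the initial parameters is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

// Runs nstages stages; run_stage updates params in place and returns the cost
// observed after that stage. Returns the params with the smallest |cost| when
// save_best_params is true, otherwise the params after the last stage.
std::vector<double> train_keep_best(
        std::vector<double> params, int nstages,
        const std::function<double(std::vector<double>&)>& run_stage,
        bool save_best_params = true) {
    assert(nstages >= 0);
    double min_cost = 1e10;              // same starting value as train()
    std::vector<double> saved = params;  // assumption: start from the initial params
    for (int stage = 0; stage < nstages; ++stage) {
        const double cost = run_stage(params);
        if (save_best_params && std::fabs(cost) < min_cost) {
            min_cost = std::fabs(cost);
            saved = params;              // like V.copyTo(V_save); W.copyTo(W_save);
        }
    }
    if (save_best_params) params = saved;  // like V.copyFrom(V_save); W.copyFrom(W_save);
    return params;
}
```

Comparing absolute values, as train() does, treats a large negative cost as bad, which matters here because the "normal" entropy term can be negative.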

Reimplemented from PLearn::PLearner.

Definition at line 156 of file EntropyContrastLearner.h.

VarArray PLearn::EntropyContrastLearner::costs [protected]

Definition at line 82 of file EntropyContrastLearner.h.

Definition at line 96 of file EntropyContrastLearner.h.

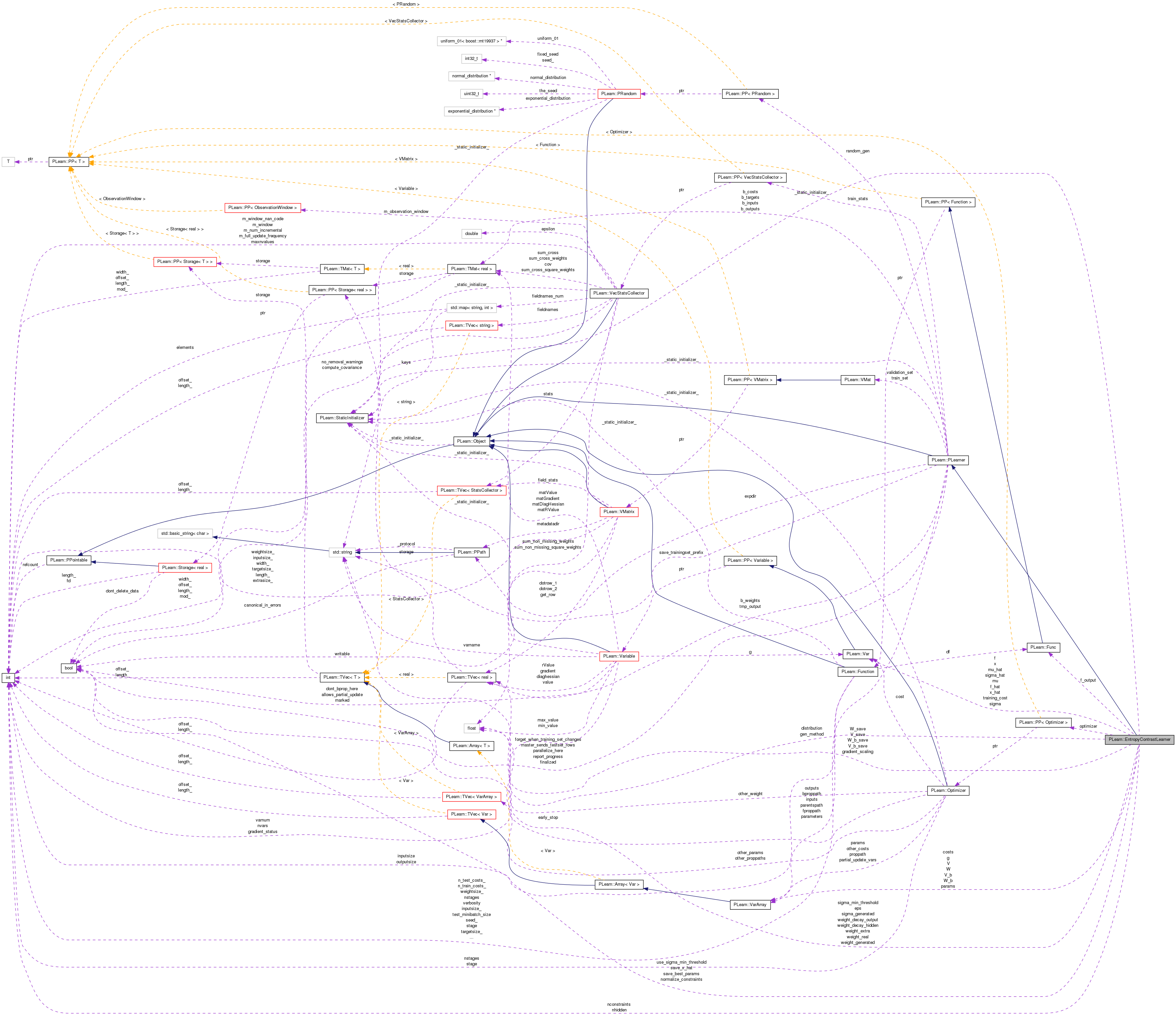

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 109 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::EntropyContrastLearner::f [protected]

Definition at line 83 of file EntropyContrastLearner.h.

Referenced by build_().

Var PLearn::EntropyContrastLearner::f_hat [protected]

Definition at line 84 of file EntropyContrastLearner.h.

Referenced by build_().

Func PLearn::EntropyContrastLearner::f_output [protected]

Definition at line 88 of file EntropyContrastLearner.h.

Referenced by build_(), computeOutput(), and train().

VarArray PLearn::EntropyContrastLearner::g [protected]

Definition at line 78 of file EntropyContrastLearner.h.

Referenced by build_().

Definition at line 112 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Definition at line 110 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::EntropyContrastLearner::mu [protected]

Definition at line 79 of file EntropyContrastLearner.h.

Referenced by build_(), and initializeParams().

Var PLearn::EntropyContrastLearner::mu_hat [protected]

Definition at line 80 of file EntropyContrastLearner.h.

Referenced by build_(), and initializeParams().

Definition at line 97 of file EntropyContrastLearner.h.

Referenced by build_(), computeOutput(), declareOptions(), initializeParams(), outputsize(), and train().

The number of hidden units.

Definition at line 98 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Definition at line 105 of file EntropyContrastLearner.h.

Referenced by computeOutput(), and declareOptions().

The optimizer used for training.

Definition at line 99 of file EntropyContrastLearner.h.

Referenced by declareOptions(), and train().

VarArray PLearn::EntropyContrastLearner::params [protected]

Definition at line 86 of file EntropyContrastLearner.h.

Definition at line 106 of file EntropyContrastLearner.h.

Referenced by declareOptions(), and train().

Definition at line 111 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::EntropyContrastLearner::sigma [protected]

Definition at line 79 of file EntropyContrastLearner.h.

Referenced by build_(), initializeParams(), and train().

Definition at line 107 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::EntropyContrastLearner::sigma_hat [protected]

Definition at line 80 of file EntropyContrastLearner.h.

Referenced by build_(), and initializeParams().

Definition at line 108 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::EntropyContrastLearner::training_cost [protected]

Definition at line 81 of file EntropyContrastLearner.h.

Definition at line 113 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

VarArray PLearn::EntropyContrastLearner::V [protected]

Definition at line 68 of file EntropyContrastLearner.h.

Referenced by build_(), initializeParams(), and train().

VarArray PLearn::EntropyContrastLearner::V_b [protected]

Definition at line 70 of file EntropyContrastLearner.h.

Referenced by build_(), and initializeParams().

Vec PLearn::EntropyContrastLearner::V_b_save [protected]

Definition at line 74 of file EntropyContrastLearner.h.

Referenced by build_().

Vec PLearn::EntropyContrastLearner::V_save [protected]

Definition at line 73 of file EntropyContrastLearner.h.

VarArray PLearn::EntropyContrastLearner::W [protected]

Definition at line 69 of file EntropyContrastLearner.h.

Referenced by build_(), initializeParams(), and train().

VarArray PLearn::EntropyContrastLearner::W_b [protected]

Definition at line 71 of file EntropyContrastLearner.h.

Referenced by build_(), and initializeParams().

Vec PLearn::EntropyContrastLearner::W_b_save [protected]

Definition at line 76 of file EntropyContrastLearner.h.

Referenced by build_().

Vec PLearn::EntropyContrastLearner::W_save [protected]

Definition at line 75 of file EntropyContrastLearner.h.

Definition at line 103 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Definition at line 104 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Definition at line 102 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Definition at line 101 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Definition at line 100 of file EntropyContrastLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::EntropyContrastLearner::x [protected]

Definition at line 66 of file EntropyContrastLearner.h.

Var PLearn::EntropyContrastLearner::x_hat [protected]

Definition at line 67 of file EntropyContrastLearner.h.

Referenced by build_().

1.7.4
