|

PLearn 0.1

Neural net, initialized with SVDs of logistic auto-regressions.

#include <StackedSVDNet.h>

Public Member Functions | |

| StackedSVDNet () | |

| Default constructor. | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| void | greedyStep (const Mat &inputs, const Mat &targets, int index, Vec train_costs) |

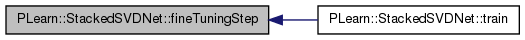

| void | fineTuningStep (const Mat &inputs, const Mat &targets, Vec &train_costs) |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual StackedSVDNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | greedy_learning_rate |

| The learning rate used during the logistic auto-regression gradient descent training. | |

| real | greedy_decrease_ct |

| The decrease constant of the learning rate used during the logistic auto-regression gradient descent training. | |

| real | fine_tuning_learning_rate |

| The learning rate used during the fine tuning gradient descent. | |

| real | fine_tuning_decrease_ct |

| The decrease constant of the learning rate used during fine tuning gradient descent. | |

| int | minibatch_size |

| Size of mini-batch for gradient descent. | |

| bool | global_output_layer |

| Indication that the output layer (given by the final module) should have as input all units of the network (including the input units) | |

| bool | fill_in_null_diagonal |

| Indication that the zero diagonal of the weight matrix after logistic auto-regression should be filled with the maximum absolute value of each corresponding row. | |

| TVec< int > | training_schedule |

| Number of examples to use during each phase of learning: first the greedy phases, and then the fine-tuning phase. | |

| TVec< PP< RBMLayer > > | layers |

| The layers of units in the network. | |

| PP< OnlineLearningModule > | final_module |

| Module that takes as input the output of the last layer (layers[n_layers-1]), and feeds its output to final_cost which defines the fine-tuning criteria. | |

| PP< CostModule > | final_cost |

| The cost function to be applied on top of the neural network (i.e. at the output of final_module). | |

| TVec< PP< RBMMatrixConnection > > | connections |

| The weights of the connections between the layers. | |

| TVec< PP< RBMConnection > > | rbm_connections |

| View of connections as RBMConnection pointers (for compatibility with RBM function calls) | |

| int | n_layers |

| Number of layers. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| TVec< Mat > | activation_gradients |

| Stores the gradient of the cost wrt the activations of the input and hidden layers (at the input of the layers) | |

| TVec< Mat > | expectation_gradients |

| Stores the gradient of the cost wrt the expectations of the input and hidden layers (at the output of the layers) | |

| PP< RBMLayer > | reconstruction_layer |

| Reconstruction layer. | |

| Mat | reconstruction_targets |

| Reconstruction target. | |

| Mat | reconstruction_costs |

| Reconstruction costs. | |

| Vec | reconstruction_test_costs |

| Reconstruction costs (when using computeOutput and computeCostsFromOutputs) | |

| Vec | reconstruction_activation_gradient |

| Reconstruction activation gradient. | |

| Mat | reconstruction_activation_gradients |

| Reconstruction activation gradients. | |

| Mat | reconstruction_input_gradients |

| Reconstruction input gradients. | |

| Vec | global_output_layer_input |

| Global output layer input. | |

| Mat | global_output_layer_inputs |

| Global output layer inputs. | |

| Mat | global_output_layer_input_gradients |

| Global output layer input gradients. | |

| Mat | final_cost_inputs |

| Inputs of the final_cost. | |

| Vec | final_cost_value |

| Cost value of final_cost. | |

| Mat | final_cost_values |

| Cost values of final_cost. | |

| Mat | final_cost_gradients |

| Stores the gradients of the cost at the inputs of final_cost. | |

| TVec< int > | cumulative_schedule |

| Cumulative training schedule. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | build_layers_and_connections () |

| void | build_classification_cost () |

| void | build_costs () |

| void | setLearningRate (real the_learning_rate) |

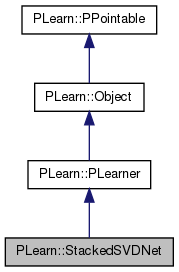

Neural net, initialized with SVDs of logistic auto-regressions.

Definition at line 56 of file StackedSVDNet.h.

typedef PLearner PLearn::StackedSVDNet::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 58 of file StackedSVDNet.h.

| PLearn::StackedSVDNet::StackedSVDNet | ( | ) |

Default constructor.

Definition at line 55 of file StackedSVDNet.cc.

References PLearn::PLearner::nstages, and PLearn::PLearner::random_gen.

:

greedy_learning_rate( 0. ),

greedy_decrease_ct( 0. ),

fine_tuning_learning_rate( 0. ),

fine_tuning_decrease_ct( 0. ),

minibatch_size(50),

global_output_layer(false),

fill_in_null_diagonal(false),

n_layers( 0 )

{

// random_gen will be initialized in PLearner::build_()

random_gen = new PRandom();

nstages = 0;

}

| string PLearn::StackedSVDNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file StackedSVDNet.cc.

| OptionList & PLearn::StackedSVDNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file StackedSVDNet.cc.

| RemoteMethodMap & PLearn::StackedSVDNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file StackedSVDNet.cc.

| bool PLearn::StackedSVDNet::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file StackedSVDNet.cc.

| Object * PLearn::StackedSVDNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file StackedSVDNet.cc.

| void PLearn::StackedSVDNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file StackedSVDNet.cc.

| void PLearn::StackedSVDNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 285 of file StackedSVDNet.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

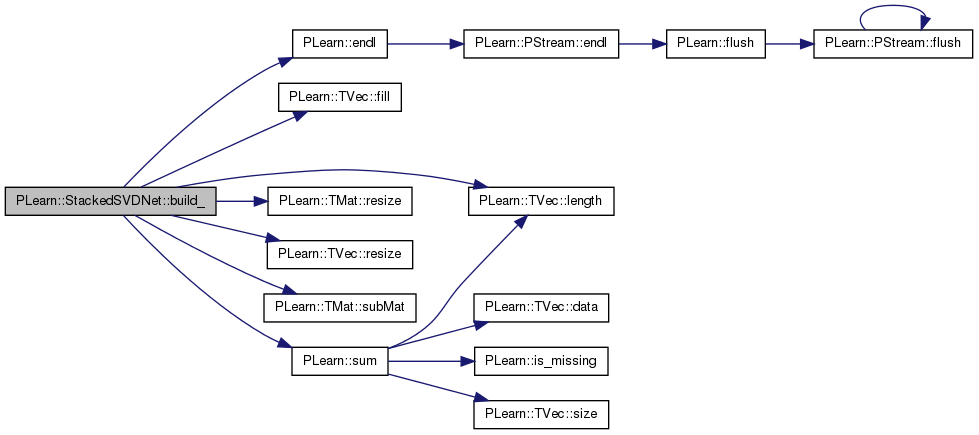

| void PLearn::StackedSVDNet::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 161 of file StackedSVDNet.cc.

References activation_gradients, cumulative_schedule, PLearn::endl(), expectation_gradients, PLearn::TVec< T >::fill(), final_cost, final_cost_gradients, final_cost_inputs, final_cost_value, final_cost_values, final_module, fine_tuning_learning_rate, global_output_layer, global_output_layer_input, global_output_layer_input_gradients, global_output_layer_inputs, i, PLearn::PLearner::inputsize_, layers, PLearn::TVec< T >::length(), minibatch_size, MISSING_VALUE, n_layers, PLERROR, PLearn::PLearner::random_gen, reconstruction_costs, reconstruction_test_costs, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::TMat< T >::subMat(), PLearn::sum(), PLearn::PLearner::targetsize_, training_schedule, and PLearn::PLearner::weightsize_.

Referenced by build().

{

MODULE_LOG << "build_() called" << endl;

if(inputsize_ > 0 && targetsize_ > 0)

{

// Initialize some learnt variables

n_layers = layers.length();

cumulative_schedule.resize( n_layers+1 );

cumulative_schedule[0] = 0;

for( int i=0 ; i<n_layers ; i++ )

{

cumulative_schedule[i+1] = cumulative_schedule[i] +

training_schedule[i];

}

reconstruction_test_costs.resize( n_layers-1 );

reconstruction_test_costs.fill( MISSING_VALUE );

if( training_schedule.length() != n_layers )

PLERROR("StackedSVDNet::build_() - \n"

"training_schedule should have %d elements.\n",

n_layers);

if( weightsize_ > 0 )

PLERROR("StackedSVDNet::build_() - \n"

"usage of weighted samples (weight size > 0) is not\n"

"implemented yet.\n");

if(layers[0]->size != inputsize_)

PLERROR("StackedSVDNet::build_layers_and_connections() - \n"

"layers[0] should have a size of %d.\n",

inputsize_);

reconstruction_costs.resize(minibatch_size,1);

activation_gradients.resize( n_layers );

expectation_gradients.resize( n_layers );

for( int i=0 ; i<n_layers ; i++ )

{

if( !(layers[i]->random_gen) )

{

layers[i]->random_gen = random_gen;

layers[i]->forget();

}

if(i>0 && layers[i]->size > layers[i-1]->size)

PLERROR("In StackedSVDNet::build_(): "

"layers must have decreasing sizes from bottom to top.");

activation_gradients[i].resize( minibatch_size, layers[i]->size );

expectation_gradients[i].resize( minibatch_size, layers[i]->size );

}

if( !final_cost )

PLERROR("StackedSVDNet::build_costs() - \n"

"final_cost should be provided.\n");

final_cost_inputs.resize( minibatch_size, final_cost->input_size );

final_cost_value.resize( final_cost->output_size );

final_cost_values.resize( minibatch_size, final_cost->output_size );

final_cost_gradients.resize( minibatch_size, final_cost->input_size );

final_cost->setLearningRate( fine_tuning_learning_rate );

if( !(final_cost->random_gen) )

{

final_cost->random_gen = random_gen;

final_cost->forget();

}

if( !final_module )

PLERROR("StackedSVDNet::build_costs() - \n"

"final_module should be provided.\n");

if(global_output_layer)

{

int sum = 0;

for(int i=0; i<layers.length(); i++)

sum += layers[i]->size;

if( sum != final_module->input_size )

PLERROR("StackedSVDNet::build_costs() - \n"

"final_module should have an input_size of %d.\n",

sum);

global_output_layer_input.resize(sum);

global_output_layer_inputs.resize(minibatch_size,sum);

global_output_layer_input_gradients.resize(minibatch_size,sum);

expectation_gradients[n_layers-1] =

global_output_layer_input_gradients.subMat(

0, sum-layers[n_layers-1]->size,

minibatch_size, layers[n_layers-1]->size);

}

else

{

if( layers[n_layers-1]->size != final_module->input_size )

PLERROR("StackedSVDNet::build_costs() - \n"

"final_module should have an input_size of %d.\n",

layers[n_layers-1]->size);

}

if( final_module->output_size != final_cost->input_size )

PLERROR("StackedSVDNet::build_costs() - \n"

"final_module should have an output_size of %d.\n",

final_cost->input_size);

final_module->setLearningRate( fine_tuning_learning_rate );

if( !(final_module->random_gen) )

{

final_module->random_gen = random_gen;

final_module->forget();

}

if(targetsize_ != 1)

PLERROR("StackedSVDNet::build_costs() - \n"

"target size of %d is not supported.\n", targetsize_);

}

}
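
The first loop of build_() turns the per-phase counts in training_schedule into running totals. As a minimal sketch outside PLearn, using std::vector instead of TVec and a hypothetical helper name, the computation looks roughly like this:

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): prefix sums of the per-phase
// example counts, mirroring the loop at the top of build_().
std::vector<int> cumulativeSchedule(const std::vector<int>& training_schedule)
{
    std::vector<int> cumulative(training_schedule.size() + 1, 0);
    for (std::size_t i = 0; i < training_schedule.size(); ++i)
        cumulative[i + 1] = cumulative[i] + training_schedule[i];
    return cumulative;
}
```

With the schedule [1000 1000 500] used in the option documentation, this yields the boundaries 0, 1000, 2000 and 2500, which are the stage values the other methods compare against.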

| void PLearn::StackedSVDNet::build_classification_cost | ( | ) | [private] |

| void PLearn::StackedSVDNet::build_costs | ( | ) | [private] |

| void PLearn::StackedSVDNet::build_layers_and_connections | ( | ) | [private] |

| string PLearn::StackedSVDNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file StackedSVDNet.cc.

Referenced by train().

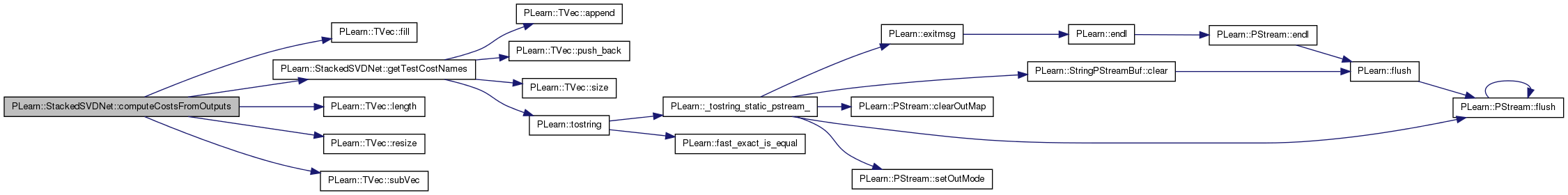

| void PLearn::StackedSVDNet::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 765 of file StackedSVDNet.cc.

References cumulative_schedule, PLearn::TVec< T >::fill(), final_cost, final_cost_value, getTestCostNames(), i, PLearn::TVec< T >::length(), MISSING_VALUE, n_layers, reconstruction_test_costs, PLearn::TVec< T >::resize(), PLearn::PLearner::stage, and PLearn::TVec< T >::subVec().

{

//Assumes that computeOutput has been called

costs.resize( getTestCostNames().length() );

costs.fill( MISSING_VALUE );

if( stage == 0 )

return;

for( int i=0 ; i<n_layers-1 ; i++ )

{

if( stage <= cumulative_schedule[i+1] )

{

costs[i] = reconstruction_test_costs[i];

return;

}

}

final_cost->fprop( output, target, final_cost_value );

costs.subVec(0, reconstruction_test_costs.length()) << reconstruction_test_costs;

costs.subVec(costs.length()-final_cost_value.length(),

final_cost_value.length()) <<

final_cost_value;

}
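
The stage tests above decide which cost slot is filled. A small sketch of that decision, assuming plain std::vector storage and a hypothetical function name, with -1 standing for a fresh learner:

```cpp
#include <vector>

// Hypothetical helper (not part of PLearn). Returns:
//   -1            if stage == 0 (fresh learner, all costs stay missing),
//   i             if the learner is still in greedy phase i,
//   n_layers - 1  once every greedy phase is over (fine-tuning).
int activePhase(int stage, const std::vector<int>& cumulative_schedule)
{
    if (stage == 0)
        return -1;
    const int n_layers = static_cast<int>(cumulative_schedule.size()) - 1;
    for (int i = 0; i < n_layers - 1; ++i)
        if (stage <= cumulative_schedule[i + 1])
            return i;
    return n_layers - 1;
}
```

For the boundaries 0, 1000, 2000, 2500, a stage of 1000 still belongs to greedy phase 0, while 1001 belongs to greedy phase 1 and 2001 to fine-tuning.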

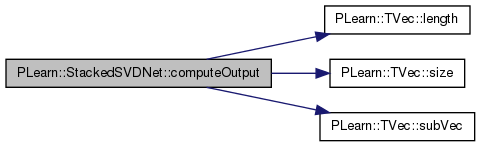

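| void PLearn::StackedSVDNet::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.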

Reimplemented from PLearn::PLearner.

Definition at line 719 of file StackedSVDNet.cc.

References connections, cumulative_schedule, final_module, global_output_layer, global_output_layer_input, i, layers, PLearn::TVec< T >::length(), n_layers, rbm_connections, reconstruction_layer, reconstruction_test_costs, PLearn::TVec< T >::size(), PLearn::PLearner::stage, and PLearn::TVec< T >::subVec().

{

// fprop

layers[ 0 ]->expectation << input ;

layers[ 0 ]->expectation_is_up_to_date = true;

if( stage == 0 )

{

output << input;

return;

}

for( int i=0 ; i<n_layers-1 ; i++ )

{

connections[ i ]->setAsDownInput( layers[i]->expectation );

if( stage <= cumulative_schedule[i+1] )

{

reconstruction_layer->getAllActivations( rbm_connections[i], 0, false );

reconstruction_layer->computeExpectation();

reconstruction_test_costs[i] =

reconstruction_layer->fpropNLL( layers[i]->expectation );

output << reconstruction_layer->expectation;

return;

}

layers[ i+1 ]->getAllActivations( rbm_connections[i], 0, false );

layers[ i+1 ]->computeExpectation();

}

if(global_output_layer)

{

int offset = 0;

for(int i=0; i<layers.length(); i++)

{

global_output_layer_input.subVec(offset, layers[i]->size)

<< layers[i]->expectation;

offset += layers[i]->size;

}

final_module->fprop( global_output_layer_input, output );

}

else

{

final_module->fprop( layers[ n_layers-1 ]->expectation,

output );

}

}
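
When global_output_layer is set, the listing above copies every layer's expectation into consecutive slices of global_output_layer_input. The following sketch illustrates that offset bookkeeping with std::vector in place of Vec; the function name is an assumption made for the example:

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): concatenates the expectation
// vectors of all layers, bottom to top, into one input vector.
std::vector<double> concatLayers(
    const std::vector<std::vector<double>>& expectations)
{
    std::size_t total = 0;
    for (const auto& e : expectations)
        total += e.size();

    std::vector<double> out(total);
    std::size_t offset = 0;
    for (const auto& e : expectations)
    {
        // Slice [offset, offset + e.size()) receives this layer's values.
        std::copy(e.begin(), e.end(),
                  out.begin() + static_cast<std::ptrdiff_t>(offset));
        offset += e.size();
    }
    return out;
}
```

The total length equals the sum of the layer sizes, which is the value build_() requires for final_module->input_size in this mode.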

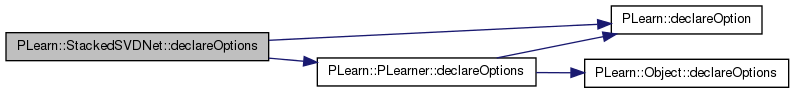

| void PLearn::StackedSVDNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 70 of file StackedSVDNet.cc.

References PLearn::OptionBase::buildoption, connections, PLearn::declareOption(), PLearn::PLearner::declareOptions(), fill_in_null_diagonal, final_cost, final_module, fine_tuning_decrease_ct, fine_tuning_learning_rate, global_output_layer, greedy_decrease_ct, greedy_learning_rate, layers, PLearn::OptionBase::learntoption, minibatch_size, n_layers, and training_schedule.

{

declareOption(ol, "greedy_learning_rate",

&StackedSVDNet::greedy_learning_rate,

OptionBase::buildoption,

"The learning rate used during the logistic auto-regression "

"gradient descent training"

);

declareOption(ol, "greedy_decrease_ct",

&StackedSVDNet::greedy_decrease_ct,

OptionBase::buildoption,

"The decrease constant of the learning rate used during the "

"logistic auto-regression gradient descent training. "

);

declareOption(ol, "fine_tuning_learning_rate",

&StackedSVDNet::fine_tuning_learning_rate,

OptionBase::buildoption,

"The learning rate used during the fine tuning gradient descent");

declareOption(ol, "fine_tuning_decrease_ct",

&StackedSVDNet::fine_tuning_decrease_ct,

OptionBase::buildoption,

"The decrease constant of the learning rate used during "

"fine tuning\n"

"gradient descent.\n");

declareOption(ol, "minibatch_size",

&StackedSVDNet::minibatch_size,

OptionBase::buildoption,

"Size of mini-batch for gradient descent");

declareOption(ol, "training_schedule", &StackedSVDNet::training_schedule,

OptionBase::buildoption,

"Number of examples to use during each phase of learning:\n"

"first the greedy phases, and then the fine-tuning phase.\n"

"However, the learning will stop as soon as we reach nstages.\n"

"For example for 2 hidden layers, with 1000 examples in each\n"

"greedy phase, and 500 in the fine-tuning phase, this option\n"

"should be [1000 1000 500], and nstages should be at least 2500.\n"

);

declareOption(ol, "global_output_layer",

&StackedSVDNet::global_output_layer,

OptionBase::buildoption,

"Indication that the output layer (given by the final module)\n"

"should have as input all units of the network (including the\n"

"input units).\n");

declareOption(ol, "fill_in_null_diagonal",

&StackedSVDNet::fill_in_null_diagonal,

OptionBase::buildoption,

"Indication that the zero diagonal of the weight matrix after\n"

"logistic auto-regression should be filled with the\n"

"maximum absolute value of each corresponding row.\n");

declareOption(ol, "layers", &StackedSVDNet::layers,

OptionBase::buildoption,

"The layers of units in the network. The first element\n"

"of this vector should be the input layer and the\n"

"subsequent elements should be the hidden layers. The output layer\n"

"should not be included in this vector.\n");

declareOption(ol, "final_module", &StackedSVDNet::final_module,

OptionBase::buildoption,

"Module that takes as input the output of the last layer\n"

"(layers[n_layers-1]), and feeds its output to final_cost\n"

"which defines the fine-tuning criteria.\n"

);

declareOption(ol, "final_cost", &StackedSVDNet::final_cost,

OptionBase::buildoption,

"The cost function to be applied on top of the neural network\n"

"(i.e. at the output of final_module). Its gradients will be \n"

"backpropagated to final_module and then backpropagated to\n"

"the layers.\n"

);

declareOption(ol, "connections", &StackedSVDNet::connections,

OptionBase::learntoption,

"The weights of the connections between the layers");

declareOption(ol, "n_layers", &StackedSVDNet::n_layers,

OptionBase::learntoption,

"Number of layers");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
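
The training_schedule help text notes that learning stops as soon as nstages is reached. The sketch below shows how many examples each phase would then receive, assuming every phase end is clipped with min(boundary, nstages) as the greedy loop in train() does; the helper name is hypothetical, and applying the same clipping to the fine-tuning phase is an assumption:

```cpp
#include <algorithm>
#include <vector>

// Hypothetical helper (not part of PLearn): examples actually consumed by
// each phase when training is capped at nstages.
std::vector<int> examplesPerPhase(const std::vector<int>& training_schedule,
                                  int nstages)
{
    std::vector<int> used;
    int start = 0; // cumulative boundary at which the phase begins
    for (int n : training_schedule)
    {
        const int end = std::min(start + n, nstages);
        used.push_back(std::max(0, end - start));
        start += n;
    }
    return used;
}
```

With the schedule [1000 1000 500], an nstages of 2500 runs every phase in full, whereas an nstages of 1800 cuts the second greedy phase short and leaves nothing for fine-tuning.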

| static const PPath& PLearn::StackedSVDNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 175 of file StackedSVDNet.h.


| StackedSVDNet * PLearn::StackedSVDNet::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file StackedSVDNet.cc.

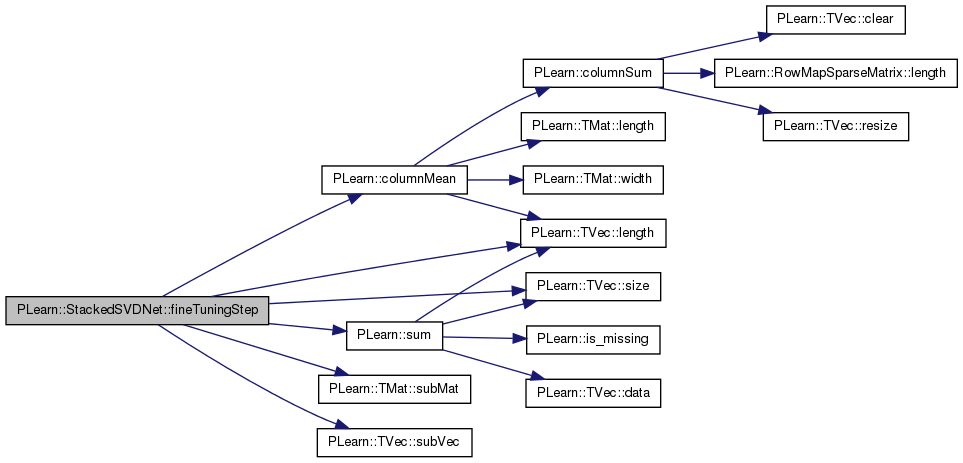

| void PLearn::StackedSVDNet::fineTuningStep | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| Vec & | train_costs | ||

| ) |

Definition at line 638 of file StackedSVDNet.cc.

References activation_gradients, PLearn::columnMean(), connections, expectation_gradients, final_cost, final_cost_gradients, final_cost_inputs, final_cost_value, final_cost_values, final_module, global_output_layer, global_output_layer_input_gradients, global_output_layer_inputs, i, layers, PLearn::TVec< T >::length(), minibatch_size, n_layers, rbm_connections, PLearn::TVec< T >::size(), PLearn::TMat< T >::subMat(), PLearn::TVec< T >::subVec(), and PLearn::sum().

Referenced by train().

{

// fprop

layers[ 0 ]->setExpectations( inputs );

for( int i=0 ; i<n_layers-1 ; i++ )

{

connections[ i ]->setAsDownInputs( layers[i]->getExpectations() );

layers[ i+1 ]->getAllActivations( rbm_connections[i], 0, true );

layers[ i+1 ]->computeExpectations();

}

if( global_output_layer )

{

int offset = 0;

for(int i=0; i<layers.length(); i++)

{

global_output_layer_inputs.subMat(0, offset,

minibatch_size, layers[i]->size)

<< layers[i]->getExpectations();

offset += layers[i]->size;

}

final_module->fprop( global_output_layer_inputs, final_cost_inputs );

}

else

{

final_module->fprop( layers[ n_layers-1 ]->getExpectations(),

final_cost_inputs );

}

final_cost->fprop( final_cost_inputs, targets, final_cost_values );

columnMean( final_cost_values,

final_cost_value );

train_costs.subVec(train_costs.length()-final_cost_value.length(),

final_cost_value.length()) << final_cost_value;

final_cost->bpropUpdate( final_cost_inputs, targets,

final_cost_value,

final_cost_gradients );

if( global_output_layer )

{

final_module->bpropUpdate( global_output_layer_inputs,

final_cost_inputs,

global_output_layer_input_gradients,

final_cost_gradients );

}

else

{

final_module->bpropUpdate( layers[ n_layers-1 ]->getExpectations(),

final_cost_inputs,

expectation_gradients[ n_layers-1 ],

final_cost_gradients );

}

int sum = final_module->input_size - layers[ n_layers-1 ]->size;

for( int i=n_layers-1 ; i>0 ; i-- )

{

if( global_output_layer && i != n_layers-1 )

{

expectation_gradients[ i ] +=

global_output_layer_input_gradients.subMat(

0, sum - layers[i]->size,

minibatch_size, layers[i]->size);

sum -= layers[i]->size;

}

layers[ i ]->bpropUpdate( layers[ i ]->activations,

layers[ i ]->getExpectations(),

activation_gradients[ i ],

expectation_gradients[ i ] );

connections[ i-1 ]->bpropUpdate( layers[ i-1 ]->getExpectations(),

layers[ i ]->activations,

expectation_gradients[ i-1 ],

activation_gradients[ i ] );

}

}
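
fineTuningStep() reduces the per-example costs in final_cost_values to a single row with columnMean before storing them in train_costs. As an illustration only, assuming a matrix stored as a vector of equal-width rows, that reduction can be sketched as:

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a column-wise mean (not PLearn's columnMean):
// averages each column over all rows of the mini-batch.
// Assumes every row has the same width as the first one.
std::vector<double> columnMeans(const std::vector<std::vector<double>>& rows)
{
    if (rows.empty())
        return {};
    std::vector<double> mean(rows.front().size(), 0.0);
    for (const auto& r : rows)
        for (std::size_t j = 0; j < mean.size(); ++j)
            mean[j] += r[j];
    for (double& m : mean)
        m /= static_cast<double>(rows.size());
    return mean;
}
```

Each row plays the role of one example in the mini-batch and each column the role of one cost reported by final_cost.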

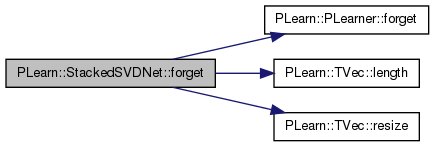

| void PLearn::StackedSVDNet::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 337 of file StackedSVDNet.cc.

References connections, final_cost, final_module, PLearn::PLearner::forget(), i, layers, PLearn::TVec< T >::length(), rbm_connections, PLearn::TVec< T >::resize(), and PLearn::PLearner::stage.

{

inherited::forget();

connections.resize(0);

rbm_connections.resize(0);

for(int i=0; i<layers.length(); i++)

layers[i]->forget();

final_module->forget();

final_cost->forget();

stage = 0;

}

| OptionList & PLearn::StackedSVDNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file StackedSVDNet.cc.

| OptionMap & PLearn::StackedSVDNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file StackedSVDNet.cc.

| RemoteMethodMap & PLearn::StackedSVDNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file StackedSVDNet.cc.

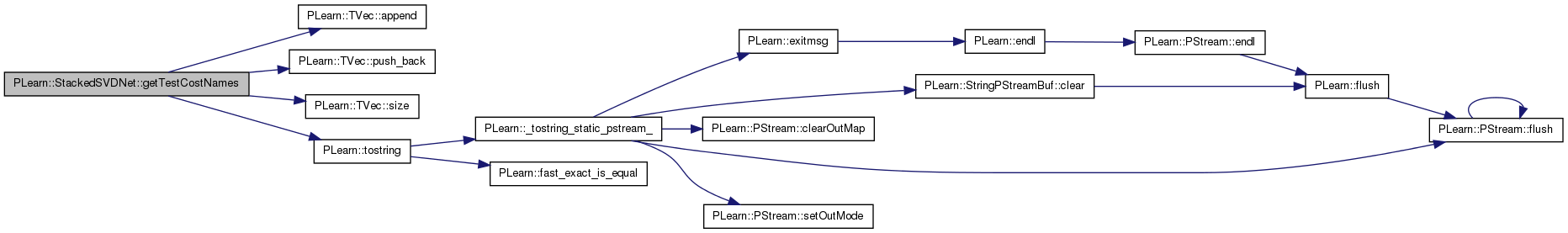

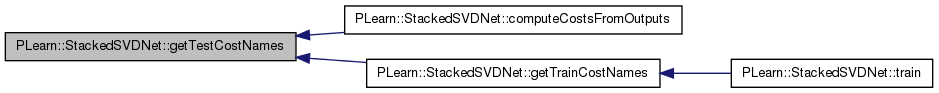

| TVec< string > PLearn::StackedSVDNet::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 792 of file StackedSVDNet.cc.

References PLearn::TVec< T >::append(), final_cost, i, layers, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::size(), and PLearn::tostring().

Referenced by computeCostsFromOutputs(), and getTrainCostNames().

{

// Return the names of the costs computed by computeCostsFromOutputs

// (these may or may not be exactly the same as what's returned by

// getTrainCostNames).

TVec<string> cost_names(0);

for( int i=0; i<layers.size()-1; i++)

cost_names.push_back("layer"+tostring(i)+".reconstruction_error");

cost_names.append( final_cost->name() );

return cost_names;

}
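
The resulting cost vector has one reconstruction entry per connection followed by whatever names final_cost reports. A standalone sketch of that layout, with std::string and std::vector replacing the PLearn types and "NLL" in the usage note serving only as a made-up example name:

```cpp
#include <string>
#include <vector>

// Hypothetical helper (not part of PLearn): reproduces the naming scheme of
// getTestCostNames() for a network with n_layers layers.
std::vector<std::string> testCostNames(
    int n_layers, const std::vector<std::string>& final_cost_names)
{
    std::vector<std::string> names;
    for (int i = 0; i < n_layers - 1; ++i)
        names.push_back("layer" + std::to_string(i) + ".reconstruction_error");
    names.insert(names.end(), final_cost_names.begin(), final_cost_names.end());
    return names;
}
```

For three layers and a single final cost named "NLL", the names would be layer0.reconstruction_error, layer1.reconstruction_error and NLL.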

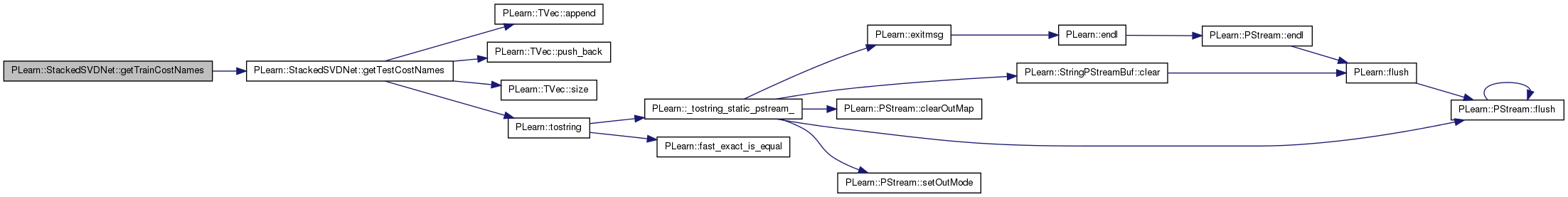

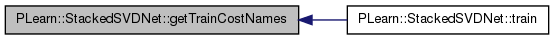

| TVec< string > PLearn::StackedSVDNet::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 808 of file StackedSVDNet.cc.

References getTestCostNames().

Referenced by train().

{

return getTestCostNames() ;

}

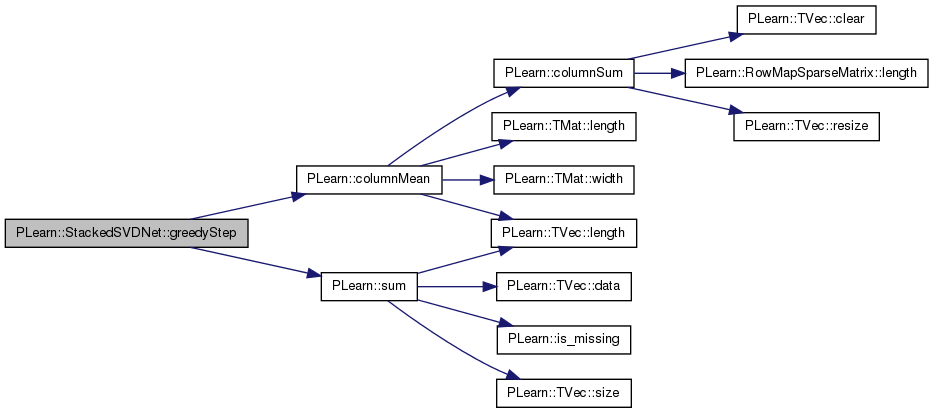

| void PLearn::StackedSVDNet::greedyStep | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| int | index, | ||

| Vec | train_costs | ||

| ) |

Definition at line 597 of file StackedSVDNet.cc.

References PLearn::columnMean(), connections, i, layers, minibatch_size, n_layers, PLASSERT, rbm_connections, reconstruction_activation_gradient, reconstruction_activation_gradients, reconstruction_costs, reconstruction_input_gradients, reconstruction_layer, reconstruction_targets, and PLearn::sum().

Referenced by train().

{

PLASSERT( index < n_layers );

layers[ 0 ]->setExpectations( inputs );

for( int i=0 ; i<index ; i++ )

{

connections[ i ]->setAsDownInputs( layers[i]->getExpectations() );

layers[ i+1 ]->getAllActivations( rbm_connections[i], 0, true );

layers[ i+1 ]->computeExpectations();

}

reconstruction_targets << layers[ index ]->getExpectations();

connections[ index ]->setAsDownInputs( layers[ index ]->getExpectations() );

reconstruction_layer->getAllActivations( rbm_connections[ index ], 0, true );

reconstruction_layer->computeExpectations();

reconstruction_layer->fpropNLL( layers[ index ]->getExpectations(),

reconstruction_costs);

train_costs[index] = sum( reconstruction_costs )/minibatch_size;

reconstruction_layer->bpropNLL(

layers[ index ]->getExpectations(), reconstruction_costs,

reconstruction_activation_gradients );

columnMean( reconstruction_activation_gradients,

reconstruction_activation_gradient );

reconstruction_layer->update( reconstruction_activation_gradient );

connections[ index ]->bpropUpdate(

layers[ index ]->getExpectations(),

layers[ index ]->activations,

reconstruction_input_gradients,

reconstruction_activation_gradients);

// Set diagonal to zero

for(int i=0; i<connections[ index ]->up_size; i++)

connections[ index ]->weights(i,i) = 0;

}
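
After each update, greedyStep() resets the diagonal of the weight matrix so that no unit is used to reconstruct itself, and the fill_in_null_diagonal option later replaces those zeros with the largest absolute value of each row. A minimal sketch of both operations on a plain nested std::vector, with hypothetical function names:

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical helper: forces w(i,i) = 0, as done at the end of greedyStep().
void zeroDiagonal(Matrix& w)
{
    for (std::size_t i = 0; i < w.size() && i < w[i].size(); ++i)
        w[i][i] = 0.0;
}

// Hypothetical helper: sets w(i,i) to the maximum absolute value of row i,
// which is what the fill_in_null_diagonal option describes.
void fillDiagonalWithRowMaxAbs(Matrix& w)
{
    for (std::size_t i = 0; i < w.size() && i < w[i].size(); ++i)
    {
        double max_abs = 0.0;
        for (double v : w[i])
            max_abs = std::max(max_abs, std::fabs(v));
        w[i][i] = max_abs;
    }
}
```

Because the diagonal is zero when the fill is applied, the maximum is effectively taken over the off-diagonal weights of each row.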

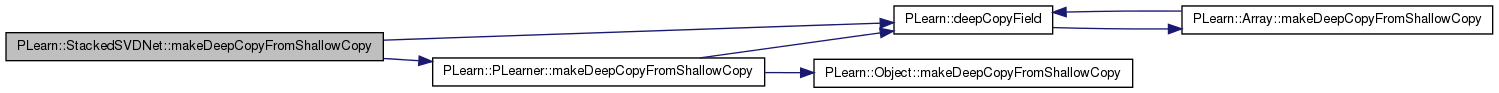

| void PLearn::StackedSVDNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 292 of file StackedSVDNet.cc.

References activation_gradients, connections, cumulative_schedule, PLearn::deepCopyField(), expectation_gradients, final_cost, final_cost_gradients, final_cost_inputs, final_cost_value, final_cost_values, final_module, global_output_layer_input, global_output_layer_input_gradients, global_output_layer_inputs, layers, PLearn::PLearner::makeDeepCopyFromShallowCopy(), rbm_connections, reconstruction_activation_gradient, reconstruction_activation_gradients, reconstruction_costs, reconstruction_input_gradients, reconstruction_layer, reconstruction_targets, reconstruction_test_costs, and training_schedule.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// deepCopyField(, copies);

deepCopyField(training_schedule, copies);

deepCopyField(layers, copies);

deepCopyField(final_module, copies);

deepCopyField(final_cost, copies);

deepCopyField(connections, copies);

deepCopyField(rbm_connections, copies);

deepCopyField(activation_gradients, copies);

deepCopyField(expectation_gradients, copies);

deepCopyField(reconstruction_layer, copies);

deepCopyField(reconstruction_targets, copies);

deepCopyField(reconstruction_costs, copies);

deepCopyField(reconstruction_test_costs, copies);

deepCopyField(reconstruction_activation_gradient, copies);

deepCopyField(reconstruction_activation_gradients, copies);

deepCopyField(reconstruction_input_gradients, copies);

deepCopyField(global_output_layer_input, copies);

deepCopyField(global_output_layer_inputs, copies);

deepCopyField(global_output_layer_input_gradients, copies);

deepCopyField(final_cost_inputs, copies);

deepCopyField(final_cost_value, copies);

deepCopyField(final_cost_values, copies);

deepCopyField(final_cost_gradients, copies);

deepCopyField(cumulative_schedule, copies);

//PLERROR("In StackedSVDNet::makeDeepCopyFromShallowCopy(): "

// "not implemented yet.");

}

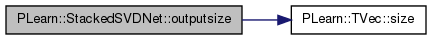

| int PLearn::StackedSVDNet::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 327 of file StackedSVDNet.cc.

References cumulative_schedule, final_module, i, layers, n_layers, PLearn::TVec< T >::size(), and PLearn::PLearner::stage.

{

if( stage == 0 )

return layers[0]->size;

for( int i=1; i<n_layers; i++ )

if( stage <= cumulative_schedule[i] )

return layers[i-1]->size;

return final_module->output_size;

}
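
The output size therefore changes as training moves through the schedule: during a greedy phase the learner outputs a reconstruction of the layer being modelled, and only after the last greedy phase does it output what final_module produces. A sketch of the same rule with plain vectors, where the function name and parameters are illustrative assumptions:

```cpp
#include <vector>

// Hypothetical helper (not part of PLearn): mirrors outputsize() using
// explicit arguments instead of member variables.
int outputSizeAt(int stage,
                 const std::vector<int>& layer_sizes,
                 const std::vector<int>& cumulative_schedule,
                 int final_output_size)
{
    if (stage == 0)
        return layer_sizes[0]; // fresh learner: output is a copy of the input
    const int n_layers = static_cast<int>(layer_sizes.size());
    for (int i = 1; i < n_layers; ++i)
        if (stage <= cumulative_schedule[i])
            return layer_sizes[i - 1]; // reconstruction of layer i-1
    return final_output_size;          // all greedy phases finished
}
```

For example, with layer sizes 784, 500 and 250 and boundaries 0, 1000, 2000, 2500, the output has 784 values up to stage 1000, 500 values up to stage 2000, and the size of final_module's output afterwards.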

| void PLearn::StackedSVDNet::setLearningRate | ( | real | the_learning_rate | ) | [private] |

Definition at line 816 of file StackedSVDNet.cc.

References connections, final_cost, final_module, fine_tuning_learning_rate, i, layers, and n_layers.

Referenced by train().

{

for( int i=0 ; i<n_layers-1 ; i++ )

{

layers[i]->setLearningRate( the_learning_rate );

connections[i]->setLearningRate( the_learning_rate );

}

layers[n_layers-1]->setLearningRate( the_learning_rate );

final_cost->setLearningRate( fine_tuning_learning_rate );

final_module->setLearningRate( fine_tuning_learning_rate );

}

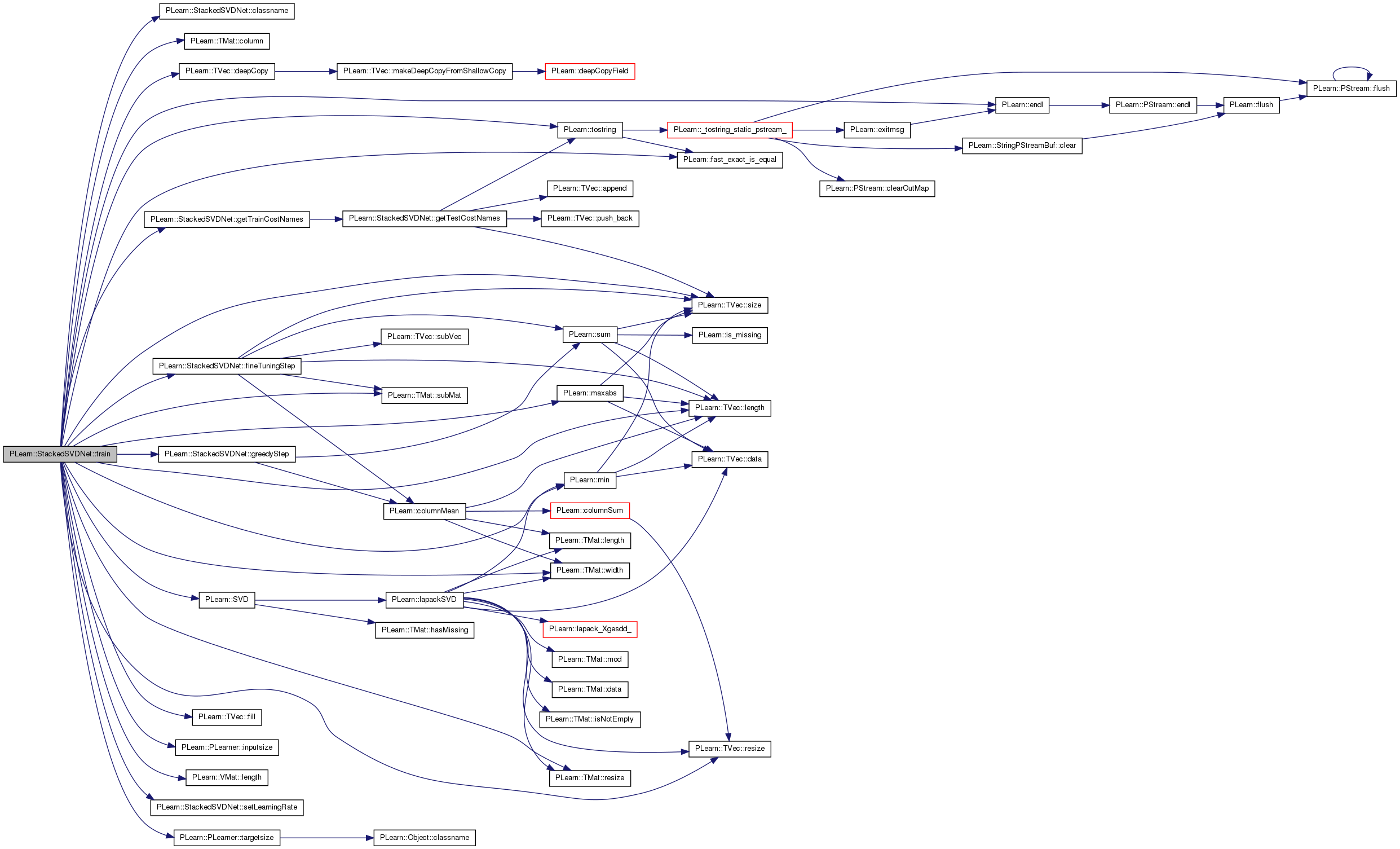

| void PLearn::StackedSVDNet::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 353 of file StackedSVDNet.cc.

References classname(), PLearn::TMat< T >::column(), connections, cumulative_schedule, PLearn::TVec< T >::deepCopy(), PLearn::endl(), PLearn::fast_exact_is_equal(), PLearn::TVec< T >::fill(), fill_in_null_diagonal, fine_tuning_decrease_ct, fine_tuning_learning_rate, fineTuningStep(), getTrainCostNames(), greedy_decrease_ct, greedy_learning_rate, greedyStep(), i, PLearn::PLearner::inputsize(), j, layers, PLearn::VMat::length(), PLearn::TVec< T >::length(), PLearn::maxabs(), PLearn::min(), minibatch_size, MISSING_VALUE, n_layers, PLearn::PLearner::nstages, PLearn::PLearner::random_gen, rbm_connections, reconstruction_activation_gradient, reconstruction_activation_gradients, reconstruction_input_gradients, reconstruction_layer, reconstruction_targets, PLearn::PLearner::report_progress, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), setLearningRate(), PLearn::TVec< T >::size(), PLearn::PLearner::stage, PLearn::TMat< T >::subMat(), PLearn::SVD(), PLearn::PLearner::targetsize(), PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_schedule, PLearn::PLearner::verbosity, and PLearn::TMat< T >::width().

{

MODULE_LOG << "train() called " << endl;

// Enforce value of cumulative_schedule because build_() might

// not be called if we change training_schedule inside a HyperLearner

for( int i=0 ; i<n_layers ; i++ )

cumulative_schedule[i+1] = cumulative_schedule[i] +

training_schedule[i];

Vec input( inputsize() );

Vec target( targetsize() );

Mat inputs( minibatch_size, inputsize() );

Mat targets( minibatch_size, targetsize() );

Vec weights( minibatch_size );

TVec<string> train_cost_names = getTrainCostNames() ;

Vec train_costs( train_cost_names.length() );

train_costs.fill(MISSING_VALUE) ;

PP<ProgressBar> pb;

// clear stats of previous epoch

train_stats->forget();

real lr = 0;

int init_stage;

int end_stage;

/***** initial greedy training *****/

connections.resize(n_layers-1);

rbm_connections.resize(n_layers-1);

TVec< Vec > biases(n_layers-1);

for( int i=0 ; i<n_layers-1 ; i++ )

{

end_stage = min(cumulative_schedule[i+1], nstages);

if( stage >= end_stage )

continue;

MODULE_LOG << "Training connection weights between layers " << i

<< " and " << i+1 << endl;

MODULE_LOG << " stage = " << stage << endl;

MODULE_LOG << " end_stage = " << end_stage << endl;

MODULE_LOG << " greedy_learning_rate = "

<< greedy_learning_rate << endl;

if( report_progress )

pb = new ProgressBar( "Training layer "+tostring(i)

+" of "+classname(),

end_stage - stage );

// Finalize training of last layer (if any)

if( i>0 && stage < end_stage && stage == cumulative_schedule[i] )

{

if(fill_in_null_diagonal)

{

// Fill in the empty diagonal

for(int j=0; j<layers[i]->size; j++)

{

connections[i-1]->weights(j,j) =

maxabs(connections[i-1]->weights(j));

}

}

if(layers[i-1]->size != layers[i]->size)

{

Mat A,U,Vt;

Vec S;

A.resize( reconstruction_layer->size,

reconstruction_layer->size+1);

A.column( 0 ) << reconstruction_layer->bias;

A.subMat( 0, 1, reconstruction_layer->size,

reconstruction_layer->size ) <<

connections[i-1]->weights;

SVD( A, U, S, Vt );

connections[ i-1 ]->up_size = layers[ i ]->size;

connections[ i-1 ]->down_size = layers[ i-1 ]->size;

connections[ i-1 ]->build();

connections[ i-1 ]->weights << Vt.subMat(

0, 1, layers[ i ]->size, Vt.width()-1 );

biases[ i-1 ].resize( layers[i]->size );

for(int j=0; j<biases[ i-1 ].length(); j++)

biases[ i-1 ][ j ] = Vt(j,0);

for(int j=0; j<connections[ i-1 ]->up_size; j++)

{

connections[ i-1 ]->weights( j ) *= S[ j ];

biases[ i-1 ][ j ] *= S[ j ];

}

}

else

{

biases[ i-1 ].resize( layers[ i ]->size );

biases[ i-1 ] << reconstruction_layer->bias;

}

layers[ i ]->bias << biases[ i-1 ];

}

// Create connections

if(stage == cumulative_schedule[i])

{

connections[i] = new RBMMatrixConnection();

connections[i]->up_size = layers[i]->size;

connections[i]->down_size = layers[i]->size;

connections[i]->random_gen = random_gen;

connections[i]->build();

for(int j=0; j < layers[i]->size; j++)

connections[i]->weights(j,j) = 0;

rbm_connections[i] = (RBMMatrixConnection *) connections[i];

CopiesMap map;

reconstruction_layer = layers[ i ]->deepCopy( map );

reconstruction_targets.resize( minibatch_size, layers[ i ]->size );

reconstruction_activation_gradient.resize( layers[ i ]->size );

reconstruction_activation_gradients.resize(

minibatch_size, layers[ i ]->size );

reconstruction_input_gradients.resize(

minibatch_size, layers[ i ]->size );

lr = greedy_learning_rate;

connections[i]->setLearningRate( lr );

reconstruction_layer->setLearningRate( lr );

}

for( ; stage<end_stage ; stage++)

{

train_stats->forget();

if( !fast_exact_is_equal( greedy_decrease_ct , 0 ) )

{

lr = greedy_learning_rate/(1 + greedy_decrease_ct

* (stage - cumulative_schedule[i]) );

connections[i]->setLearningRate( lr );

reconstruction_layer->setLearningRate( lr );

}

train_set->getExamples((stage*minibatch_size)%train_set->length(),

minibatch_size, inputs, targets, weights,

NULL, true);

greedyStep( inputs, targets, i, train_costs );

train_stats->update( train_costs );

if( pb )

pb->update( stage - cumulative_schedule[i] + 1 );

}

train_stats->finalize();

}

/***** fine-tuning by gradient descent *****/

end_stage = min(cumulative_schedule[n_layers], nstages);

if( stage >= end_stage )

return;

// Finalize training of last layer (if any)

if( n_layers>1 && stage < end_stage && stage == cumulative_schedule[n_layers-1] )

{

if(fill_in_null_diagonal)

{

// Fill in the empty diagonal

for(int j=0; j<layers[n_layers-1]->size; j++)

{

connections[n_layers-2]->weights(j,j) =

maxabs(connections[n_layers-2]->weights(j));

}

}

if(layers[n_layers-2]->size != layers[n_layers-1]->size)

{

Mat A,U,Vt;

Vec S;

A.resize( reconstruction_layer->size,

reconstruction_layer->size+1);

A.column( 0 ) << reconstruction_layer->bias;

A.subMat( 0, 1, reconstruction_layer->size,

reconstruction_layer->size ) <<

connections[n_layers-2]->weights;

SVD( A, U, S, Vt );

connections[ n_layers-2 ]->up_size = layers[ n_layers-1 ]->size;

connections[ n_layers-2 ]->down_size = layers[ n_layers-2 ]->size;

connections[ n_layers-2 ]->build();

connections[ n_layers-2 ]->weights << Vt.subMat(

0, 1, layers[ n_layers-1 ]->size, Vt.width()-1 );

biases[ n_layers-2 ].resize( layers[n_layers-1]->size );

for(int j=0; j<biases[ n_layers-2 ].length(); j++)

biases[ n_layers-2 ][ j ] = Vt(j,0);

for(int j=0; j<connections[ n_layers-2 ]->up_size; j++)

{

connections[ n_layers-2 ]->weights( j ) *= S[ j ];

biases[ n_layers-2 ][ j ] *= S[ j ];

}

}

else

{

biases[ n_layers-2 ].resize( layers[ n_layers-1 ]->size );

biases[ n_layers-2 ] << reconstruction_layer->bias;

}

layers[ n_layers-1 ]->bias << biases[ n_layers-2 ];

}

MODULE_LOG << "Fine-tuning all parameters, by gradient descent" << endl;

MODULE_LOG << " stage = " << stage << endl;

MODULE_LOG << " end_stage = " << end_stage << endl;

MODULE_LOG << " fine_tuning_learning_rate = "

<< fine_tuning_learning_rate << endl;

init_stage = stage;

if( report_progress && stage < end_stage )

pb = new ProgressBar( "Fine-tuning parameters of all layers of "

+ classname(),

end_stage - init_stage );

setLearningRate( fine_tuning_learning_rate );

train_costs.fill(MISSING_VALUE);

for( ; stage<end_stage ; stage++ )

{

if( !fast_exact_is_equal( fine_tuning_decrease_ct, 0. ) )

setLearningRate( fine_tuning_learning_rate

/ (1. + fine_tuning_decrease_ct *

(stage - cumulative_schedule[n_layers]) ) );

train_set->getExamples((stage*minibatch_size)%train_set->length(),

minibatch_size, inputs, targets, weights,

NULL, true);

fineTuningStep( inputs, targets, train_costs );

train_stats->update( train_costs );

if( pb )

pb->update( stage - init_stage + 1 );

}

if(verbosity > 2)

cout << "error at stage " << stage << ": " <<

train_stats->getMean() << endl;

train_stats->finalize();

}
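Both training loops in the listing above fetch their minibatch starting at row `(stage*minibatch_size) % train_set->length()`. The sketch below shows which rows such a minibatch would cover, assuming the final `true` argument passed to `getExamples` lets the read wrap around to the start of the training set. `minibatchIndices` is a hypothetical helper written for illustration and is not part of PLearn.

```cpp
#include <vector>

// Hypothetical helper (not a PLearn function): lists the training-set rows
// covered by the minibatch of a given stage, assuming reads that run past
// the last row wrap around to row 0.
std::vector<int> minibatchIndices(int stage, int minibatch_size,
                                  int train_set_length)
{
    std::vector<int> indices(minibatch_size);
    // Same start index as in the train() listing.
    const int start = (stage * minibatch_size) % train_set_length;
    for (int k = 0; k < minibatch_size; ++k)
        indices[k] = (start + k) % train_set_length;
    return indices;
}
```

With 25 training rows and a minibatch size of 10, stage 2 starts at row 20 and, under the wrap-around assumption, finishes on rows 0 to 4.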

Reimplemented from PLearn::PLearner.

Definition at line 175 of file StackedSVDNet.h.

TVec<Mat> PLearn::StackedSVDNet::activation_gradients [mutable, protected] |

Stores the gradient of the cost with respect to the activations of the input and hidden layers (at the input of the layers).

Definition at line 190 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

The weights of the connections between the layers.

Definition at line 116 of file StackedSVDNet.h.

Referenced by computeOutput(), declareOptions(), fineTuningStep(), forget(), greedyStep(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().

TVec<int> PLearn::StackedSVDNet::cumulative_schedule [protected] |

Cumulative training schedule.

Definition at line 240 of file StackedSVDNet.h.

Referenced by build_(), computeCostsFromOutputs(), computeOutput(), makeDeepCopyFromShallowCopy(), outputsize(), and train().
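As a rough illustration of how `train()` rebuilds this vector from `training_schedule`, the following standalone sketch computes the same running totals with standard containers. `cumulativeSchedule` is a hypothetical name, and `std::vector<int>` stands in for `TVec<int>`.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): entry 0 is 0 and entry i+1 is
// the stage at which training phase i ends, mirroring the loop at the top
// of train().
std::vector<int> cumulativeSchedule(const std::vector<int>& training_schedule)
{
    std::vector<int> cumulative(training_schedule.size() + 1, 0);
    for (std::size_t i = 0; i < training_schedule.size(); ++i)
        cumulative[i + 1] = cumulative[i] + training_schedule[i];
    return cumulative;
}
```

For a schedule of `[1000 1000 500]` this gives `[0 1000 2000 2500]`, so the last entry is the stage at which fine-tuning ends.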

TVec<Mat> PLearn::StackedSVDNet::expectation_gradients [mutable, protected] |

Stores the gradient of the cost with respect to the expectations of the input and hidden layers (at the output of the layers).

Definition at line 195 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

Indication that the zero diagonal of the weight matrix after logistic auto-regression should be filled with the maximum absolute value of each corresponding row.

Definition at line 88 of file StackedSVDNet.h.

Referenced by declareOptions(), and train().
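A minimal sketch of the diagonal fill this option enables, assuming a plain square matrix stored as nested `std::vector`s in place of the PLearn `Mat` type. `fillNullDiagonal` and the `Matrix` alias are illustrative names, not PLearn identifiers.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // stand-in for PLearn's Mat

// Hypothetical helper: sets each diagonal entry to the largest absolute
// value found in its row, as train() does when fill_in_null_diagonal is set.
void fillNullDiagonal(Matrix& weights)
{
    for (std::size_t j = 0; j < weights.size() && j < weights[j].size(); ++j)
    {
        double max_abs = 0.0;
        for (double w : weights[j])
            max_abs = std::max(max_abs, std::fabs(w));
        weights[j][j] = max_abs;
    }
}
```

Because the diagonal is zero before the fill, including it in the row scan does not change the maximum.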

The cost function to be applied on top of the neural network (i.e. at the output of final_module). Its gradients will be backpropagated to final_module and then backpropagated to the layers.

Definition at line 111 of file StackedSVDNet.h.

Referenced by build_(), computeCostsFromOutputs(), declareOptions(), fineTuningStep(), forget(), getTestCostNames(), makeDeepCopyFromShallowCopy(), and setLearningRate().

Mat PLearn::StackedSVDNet::final_cost_gradients [mutable, protected] |

Stores the gradients of the cost at the inputs of final_cost.

Definition at line 237 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

Mat PLearn::StackedSVDNet::final_cost_inputs [mutable, protected] |

Inputs of the final_cost.

Definition at line 228 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

Vec PLearn::StackedSVDNet::final_cost_value [mutable, protected] |

Cost value of final_cost.

Definition at line 231 of file StackedSVDNet.h.

Referenced by build_(), computeCostsFromOutputs(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

Mat PLearn::StackedSVDNet::final_cost_values [mutable, protected] |

Cost values of final_cost.

Definition at line 234 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

Module that takes as input the output of the last layer (layers[n_layers-1]), and feeds its output to final_cost, which defines the fine-tuning criterion.

Definition at line 105 of file StackedSVDNet.h.

Referenced by build_(), computeOutput(), declareOptions(), fineTuningStep(), forget(), makeDeepCopyFromShallowCopy(), outputsize(), and setLearningRate().

The decrease constant of the learning rate used during fine tuning gradient descent.

Definition at line 76 of file StackedSVDNet.h.

Referenced by declareOptions(), and train().

The learning rate used during the fine tuning gradient descent.

Definition at line 72 of file StackedSVDNet.h.

Referenced by build_(), declareOptions(), setLearningRate(), and train().

Indication that the output layer (given by the final module) should have as input all units of the network (including the input units)

Definition at line 83 of file StackedSVDNet.h.

Referenced by build_(), computeOutput(), declareOptions(), and fineTuningStep().

Vec PLearn::StackedSVDNet::global_output_layer_input [mutable, protected] |

Global output layer input.

Definition at line 219 of file StackedSVDNet.h.

Referenced by build_(), computeOutput(), and makeDeepCopyFromShallowCopy().

Mat PLearn::StackedSVDNet::global_output_layer_input_gradients [mutable, protected] |

Global output layer input gradients.

Definition at line 225 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

Mat PLearn::StackedSVDNet::global_output_layer_inputs [mutable, protected] |

Global output layer inputs.

Definition at line 222 of file StackedSVDNet.h.

Referenced by build_(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

The decrease constant of the learning rate used during the logistic auto-regression gradient descent training.

Definition at line 69 of file StackedSVDNet.h.

Referenced by declareOptions(), and train().

The learning rate used during the logistic auto-regression gradient descent training.

Definition at line 65 of file StackedSVDNet.h.

Referenced by declareOptions(), and train().

The layers of units in the network.

Definition at line 100 of file StackedSVDNet.h.

Referenced by build_(), computeOutput(), declareOptions(), fineTuningStep(), forget(), getTestCostNames(), greedyStep(), makeDeepCopyFromShallowCopy(), outputsize(), setLearningRate(), and train().

Size of mini-batch for gradient descent.

Definition at line 79 of file StackedSVDNet.h.

Referenced by build_(), declareOptions(), fineTuningStep(), greedyStep(), and train().

Number of layers.

Definition at line 123 of file StackedSVDNet.h.

Referenced by build_(), computeCostsFromOutputs(), computeOutput(), declareOptions(), fineTuningStep(), greedyStep(), outputsize(), setLearningRate(), and train().

View of connections as RBMConnection pointers (for compatibility with RBM function calls)

Definition at line 120 of file StackedSVDNet.h.

Referenced by computeOutput(), fineTuningStep(), forget(), greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::StackedSVDNet::reconstruction_activation_gradient [mutable, protected] |

Reconstruction activation gradient.

Definition at line 210 of file StackedSVDNet.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Mat PLearn::StackedSVDNet::reconstruction_activation_gradients [mutable, protected] |

Reconstruction activation gradients.

Definition at line 213 of file StackedSVDNet.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Mat PLearn::StackedSVDNet::reconstruction_costs [mutable, protected] |

Reconstruction costs.

Definition at line 204 of file StackedSVDNet.h.

Referenced by build_(), greedyStep(), and makeDeepCopyFromShallowCopy().

Mat PLearn::StackedSVDNet::reconstruction_input_gradients [mutable, protected] |

Reconstruction input gradients.

Definition at line 216 of file StackedSVDNet.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

PP<RBMLayer> PLearn::StackedSVDNet::reconstruction_layer [mutable, protected] |

Reconstruction layer.

Definition at line 198 of file StackedSVDNet.h.

Referenced by computeOutput(), greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Mat PLearn::StackedSVDNet::reconstruction_targets [mutable, protected] |

Reconstruction target.

Definition at line 201 of file StackedSVDNet.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::StackedSVDNet::reconstruction_test_costs [mutable, protected] |

Reconstruction costs (when using computeOutput and computeCostsFromOutputs).

Definition at line 207 of file StackedSVDNet.h.

Referenced by build_(), computeCostsFromOutputs(), computeOutput(), and makeDeepCopyFromShallowCopy().

Number of examples to use during each phase of learning: first the greedy phases, and then the fine-tuning phase. However, the learning will stop as soon as we reach nstages. For example, for 2 hidden layers, with 1000 examples in each greedy phase and 500 in the fine-tuning phase, this option should be [1000 1000 500], and nstages should be at least 2500. When online = true, this vector is ignored and should be empty.

Definition at line 97 of file StackedSVDNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().
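To make the interaction between this schedule and nstages concrete, here is a small sketch that reports how many stages each phase would actually run when training starts from stage 0. It mirrors the `min(cumulative_schedule[i+1], nstages)` clipping seen in `train()`. `stagesPerPhase` is a hypothetical helper, not a PLearn function.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical helper: stages run in each phase when training goes from
// stage 0 up to nstages. Each phase ends at the smaller of its scheduled
// end and nstages, so later phases may be shortened or skipped entirely.
std::vector<int> stagesPerPhase(const std::vector<int>& training_schedule,
                                int nstages)
{
    std::vector<int> run;
    int stage = 0;      // current training stage
    int phase_end = 0;  // scheduled end of the current phase
    for (int phase_length : training_schedule)
    {
        phase_end += phase_length;
        const int end_stage = std::min(phase_end, nstages);
        const int n = std::max(0, end_stage - stage);
        run.push_back(n);
        stage += n;
    }
    return run;
}
```

With the schedule `[1000 1000 500]`, an nstages of 2500 runs every phase in full, whereas an nstages of 1200 stops partway through the second greedy phase and never reaches fine-tuning.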

1.7.4
