
PLearn 0.1


#include <MoleculeTemplateLearner.h>

Public Member Functions

| MoleculeTemplateLearner () | |

| Default constructor. | |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

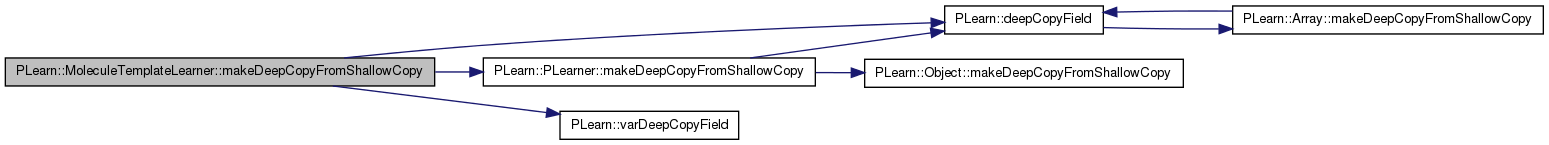

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual MoleculeTemplateLearner * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner). | |

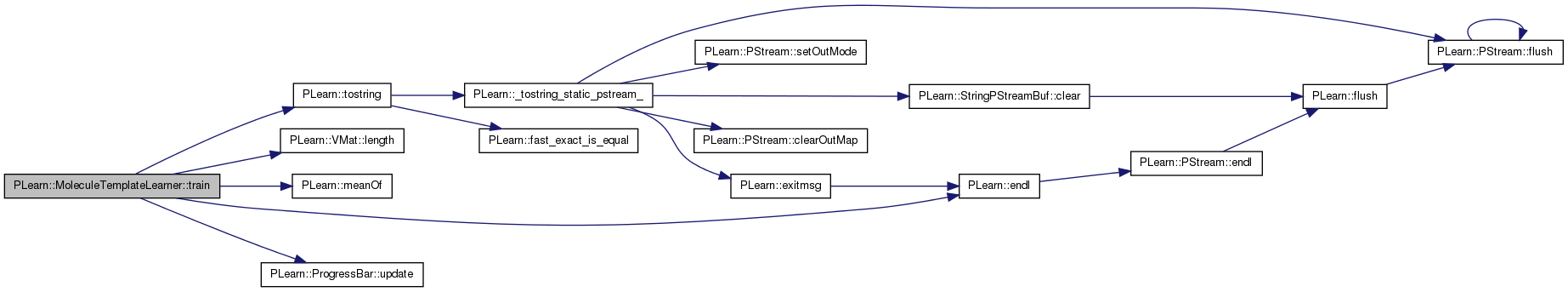

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| The default implementation calls computeOutput and computeCostsFromOutputs. | |

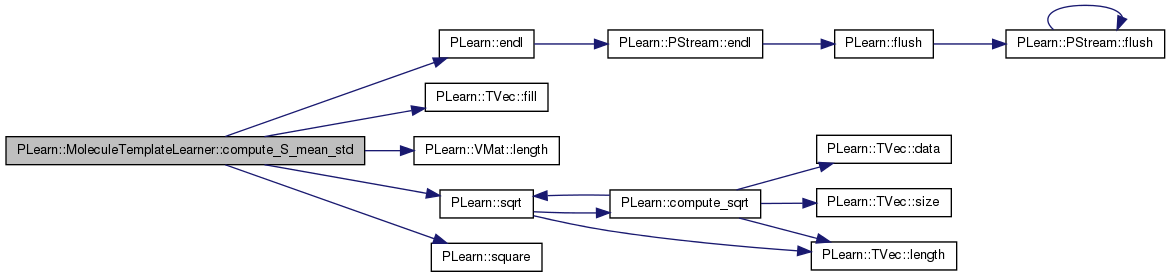

| void | compute_S_mean_std (Vec &mean, Vec &std) |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

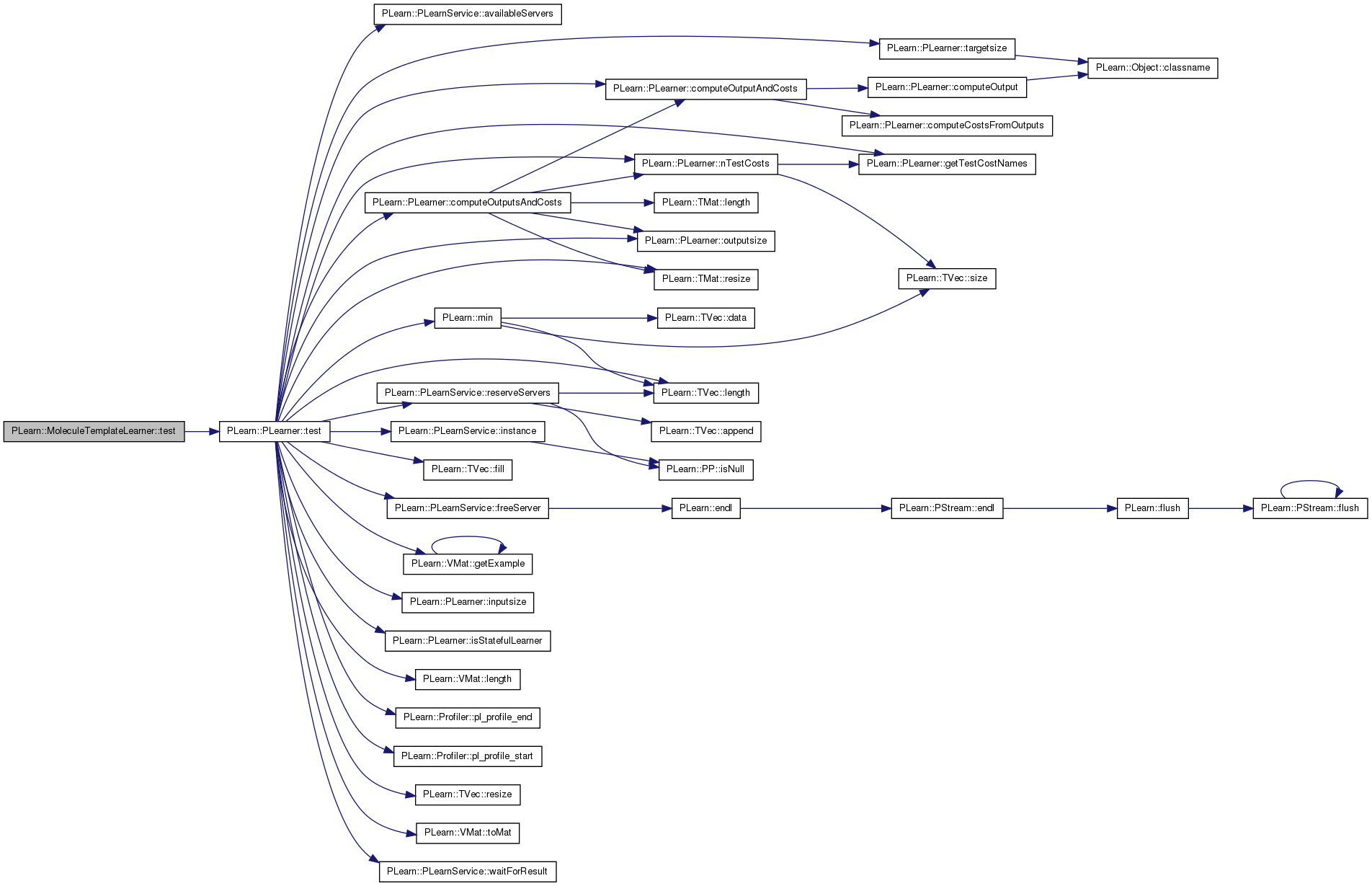

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts. | |

| void | initializeParams () |

Static Public Member Functions

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes

| int | nhidden |

| real | weight_decay |

| int | noutputs |

| int | batch_size |

| int | n_active_templates |

| int | n_inactive_templates |

| int | n_templates |

| real | scaling_factor |

| real | lrate2 |

| bool | training_mode |

| bool | builded |

| PP< Optimizer > | optimizer |

| TVec< MoleculeTemplate > | templates |

| Vec | paramsvalues |

Static Public Attributes

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes

| Var | input_index |

| VarArray | mu |

| VarArray | sigma |

| VarArray | mu_S |

| VarArray | sigma_S |

| VarArray | sigma_square_S |

| VarArray | sigma_square |

| VarArray | S |

| VarArray | S_after_scaling |

| VarArray | params |

| VarArray | penalties |

| Var | V |

| Var | W |

| Var | V_b |

| Var | W_b |

| Var | V_direct |

| Var | hl |

| Var | y |

| Var | y_before_transfer |

| Var | training_cost |

| Var | test_costs |

| Var | target |

| Var | temp_S |

| VarArray | costs |

| VarArray | temp_output |

| int | n_actives |

| int | n_inactives |

| Vec | S_std |

| Vec | sigma_s_vec |

| Func | f_output |

| Func | output_target_to_costs |

| Func | test_costf |

| vector< PMolecule > | Molecules |

Private Types

| typedef PLearner | inherited |

Private Member Functions

| void | build_ () |

| This does the actual building. | |

Definition at line 55 of file MoleculeTemplateLearner.h.

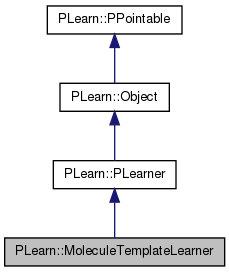

typedef PLearner PLearn::MoleculeTemplateLearner::inherited [private]

Reimplemented from PLearn::PLearner.

Definition at line 60 of file MoleculeTemplateLearner.h.

PLearn::MoleculeTemplateLearner::MoleculeTemplateLearner()

Default constructor.

Definition at line 75 of file MoleculeTemplateLearner.cc.

:

nhidden(10) ,

weight_decay(0),

noutputs(1),

batch_size(1),

scaling_factor(1),

lrate2(1),

training_mode(true),

builded(false)

/* ### Initialize all fields to their default value here */

{

// load the molecules in a vector

// ### You may or may not want to call build_() to finish building the object

// build_();

}

string PLearn::MoleculeTemplateLearner::_classname_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file MoleculeTemplateLearner.cc.

OptionList & PLearn::MoleculeTemplateLearner::_getOptionList_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file MoleculeTemplateLearner.cc.

RemoteMethodMap & PLearn::MoleculeTemplateLearner::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file MoleculeTemplateLearner.cc.

bool PLearn::MoleculeTemplateLearner::_isa_(const Object *o) [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file MoleculeTemplateLearner.cc.

Object * PLearn::MoleculeTemplateLearner::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::Object.

Definition at line 92 of file MoleculeTemplateLearner.cc.

void PLearn::MoleculeTemplateLearner::_static_initialize_() [static]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file MoleculeTemplateLearner.cc.

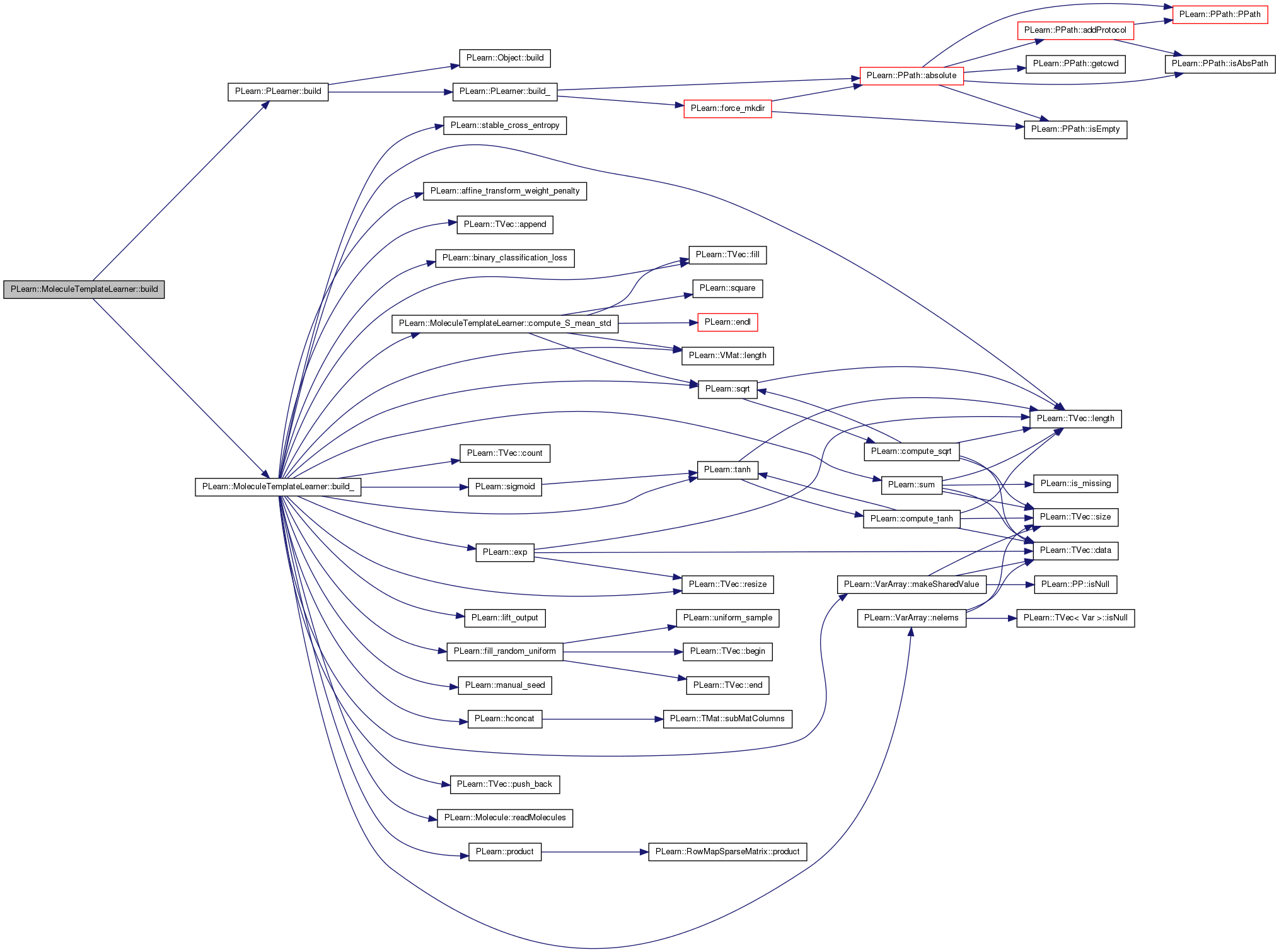

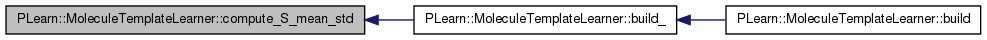

void PLearn::MoleculeTemplateLearner::build() [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 403 of file MoleculeTemplateLearner.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

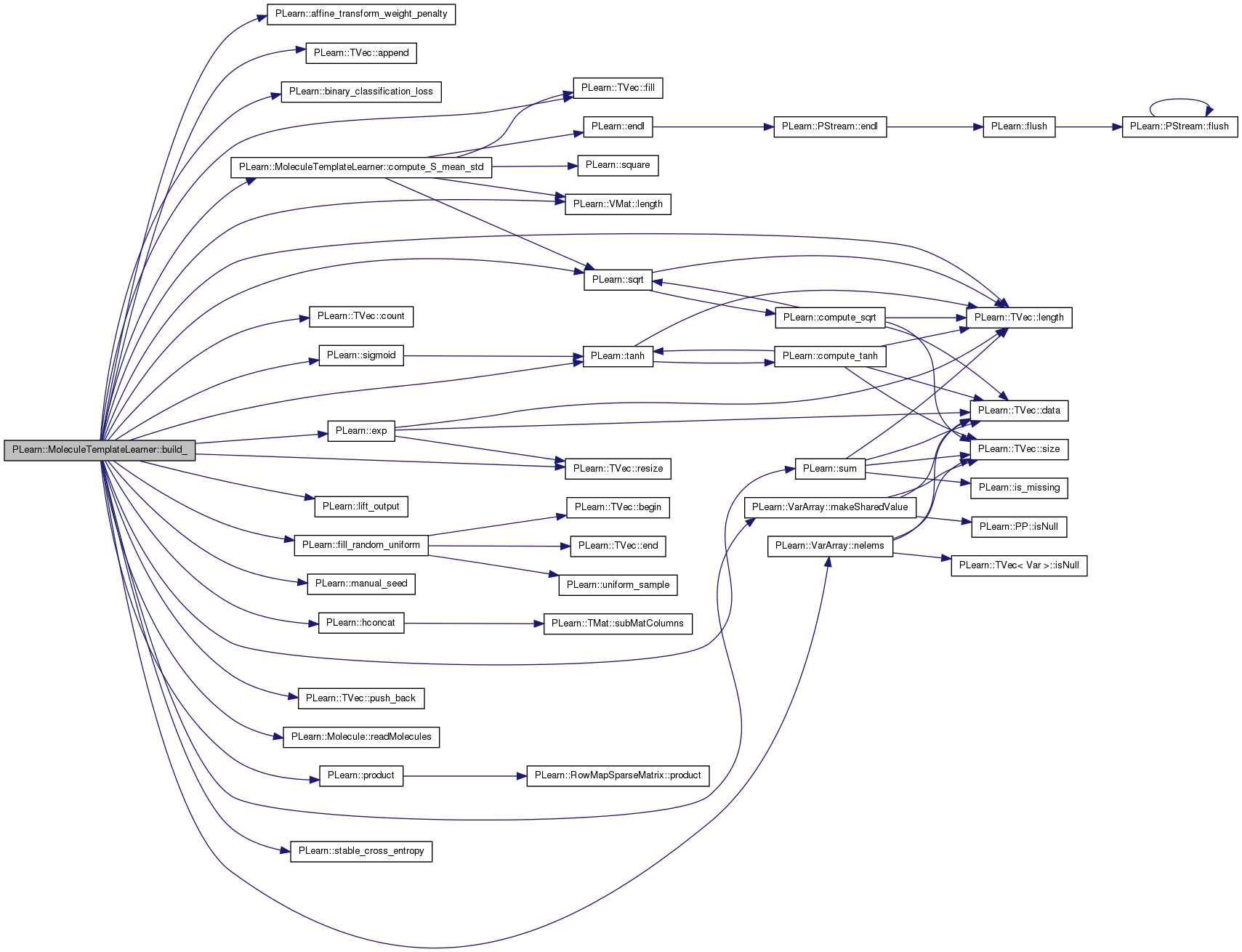

void PLearn::MoleculeTemplateLearner::build_() [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 144 of file MoleculeTemplateLearner.cc.

References PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), PLearn::binary_classification_loss(), builded, compute_S_mean_std(), costs, PLearn::TVec< T >::count(), PLearn::exp(), f_output, PLearn::TVec< T >::fill(), PLearn::fill_random_uniform(), PLearn::hconcat(), hl, i, input_index, j, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::lift_output(), lrate2, PLearn::VarArray::makeSharedValue(), PLearn::manual_seed(), Molecules, mu, mu_S, n_active_templates, n_actives, n_inactive_templates, n_templates, PLearn::VarArray::nelems(), nhidden, output_target_to_costs, params, paramsvalues, penalties, PLERROR, PLearn::product(), PLearn::TVec< T >::push_back(), PLearn::Molecule::readMolecules(), PLearn::TVec< T >::resize(), S, S_after_scaling, S_std, PLearn::PLearner::seed_, sigma, sigma_S, sigma_s_vec, sigma_square, sigma_square_S, PLearn::sigmoid(), PLearn::sqrt(), PLearn::stable_cross_entropy(), PLearn::sum(), PLearn::tanh(), target, temp_S, templates, test_costf, test_costs, PLearn::PLearner::train_set, training_cost, training_mode, V, V_b, W, W_b, weight_decay, y, and y_before_transfer.

Referenced by build().

{

if ((train_set || !training_mode) && !builded){

builded = true ;

n_templates = n_active_templates + n_inactive_templates ;

vector<int> id_templates ;

if (training_mode) {

Molecules.clear() ;

Molecule::readMolecules("g1active.txt",Molecules) ; //TODO : make filelist1 an option

n_actives = Molecules.size() ;

Molecule::readMolecules("g1inactive.txt",Molecules) ;

// n_inactives = Molecules.size() - n_actives ; // TODO : is needed ??

set<int> found ;

Vec t(2) ;

// find the ids for the active templates

int nr_find_active = n_active_templates ;

for(int i=0 ; i<train_set.length() ; ++i) {

train_set -> getRow( i , t);

if (nr_find_active > 0 && t[1] == 1 && found.count((int)t[0])==0 ){

nr_find_active -- ;

id_templates.push_back((int)t[0]);

found.insert((int)t[0]);

}

if (nr_find_active == 0) break ;

}

if (nr_find_active > 0){

PLERROR("There are not enough actives in the dataset") ;

}

int nr_find_inactive = n_inactive_templates ;

for(int i=0 ; i<train_set.length() ; ++i) {

train_set -> getRow( i , t);

if (nr_find_inactive > 0 && t[1] == 0 && found.count((int)t[0])==0 ){

nr_find_inactive -- ;

id_templates.push_back((int)t[0]);

found.insert((int)t[0]);

}

if (nr_find_inactive == 0) break ;

}

if (nr_find_inactive > 0){

PLERROR("There are not enough inactives in the dataset") ;

}

}

input_index = Var(1,"input_index") ;

mu.resize(n_templates) ;

sigma.resize(n_templates) ;

sigma_square.resize(n_templates) ;

S.resize(n_templates) ;

templates.resize(n_templates) ;

for(int i=0 ; i<n_templates ; ++i) {

if (training_mode) {

mu[i] = Var(Molecules[id_templates[i]]->chem.length() , Molecules[id_templates[i]]->chem.width() , "Mu") ;

mu[i]->matValue << Molecules[id_templates[i]]->chem ;

sigma[i] = Var(Molecules[id_templates[i]]->chem.length() , Molecules[id_templates[i]]->chem.width() , "Sigma") ;

sigma[i]->value.fill(0) ;

}

else {

mu[i] = Var(templates[i]->chem.length() , templates[i]->chem.width() ,"Mu" ) ;

sigma[i] = Var(templates[i]->dev.length() , templates[i]->dev.width() , "Sigma") ;

}

params.push_back(mu[i]) ;

params.push_back(sigma[i]) ;

if (training_mode)

sigma_square[i] = new ExpVariable(sigma[i]) ;

else

sigma_square[i] = sigma[i] ;

// if (!training_mode) {

// sigma_square[i]->value.fill(1) ;

// }

if (training_mode) {

templates[i] = new Template() ;

templates[i]->chem.resize(mu[i]->matValue.length() , mu[i]->matValue.width()) ;

templates[i]->chem << mu[i]->matValue ;

templates[i]->geom.resize(Molecules[id_templates[i]]->geom.length() , Molecules[id_templates[i]]->geom.width()) ;

templates[i]->geom << Molecules[id_templates[i]]->geom ;

templates[i]->vrml_file = Molecules[id_templates[i]]->vrml_file ;

templates[i]->dev.resize (sigma_square[i]->matValue.length() , sigma_square[i]->matValue.width() ) ;

templates[i]->dev << sigma_square[i]->matValue ; // TODO: sigma_square may not hold its correct value yet at this point

}

}

for(int i=0 ; i<n_templates ; ++i)

S[i] = new WeightedLogGaussian(training_mode , i, input_index, mu[i], sigma_square[i] , templates[i]) ;

V = Var(nhidden , n_templates , "V") ;

V_b = Var(nhidden , 1 , "V_b") ;

// V_direct = Var(1 , 2 , "V_direct") ;

mu_S.resize(n_templates) ;

sigma_S.resize(n_templates) ;

sigma_square_S.resize(n_templates) ;

S_after_scaling.resize(n_templates) ;

sigma_s_vec.resize(n_templates) ;

for(int i=0 ; i<n_templates ; ++i) {

mu_S[i] = Var(1 , 1) ;

sigma_S[i] = Var(1 , 1) ;

if (training_mode)

sigma_square_S[i] = new SquareVariable(sigma_S[i]) ;

else

sigma_square_S[i] = sigma_S[i] ;

params.push_back(mu_S[i]);

params.push_back(sigma_S[i]);

S_after_scaling[i] = new DivVariable(S[i] - mu_S[i] , sigma_square_S[i] ) ;

}

S_std.resize(n_templates) ;

for(int i=0 ; i<n_templates ; ++i) {

S_after_scaling[i] = new NoBpropVariable (S_after_scaling[i] , &S_std[i] ) ;

}

temp_S = new ConcatRowsVariable(S_after_scaling) ;

hl = tanh(product(V,temp_S) + V_b) ;

params.push_back(V);

params.push_back(V_b);

// params.push_back(V_direct);

W = Var(1, nhidden) ;

W_b = Var(1 , 1) ;

y_before_transfer = (product(W,hl) + W_b); //+product(V_direct , temp_S)) ;

y = sigmoid(y_before_transfer) ;

params.push_back(W);

penalties.append(affine_transform_weight_penalty(V, (weight_decay), 0, "L1"));

params.push_back(W_b);

// initialize all the parameters

if (training_mode) {

paramsvalues.resize(params.nelems());

for(int i=0 ; i<n_templates ; ++i) {

mu_S[i]->value.fill(0) ;

sigma_S[i]->value.fill(1) ;

}

Vec t_mean(n_templates) , t_std(n_templates) ;

compute_S_mean_std(t_mean,t_std) ;

for(int i=0 ; i<n_templates ; ++i) {

mu_S[i]->value[0] = t_mean[i] ;

sigma_S[i]->value[0] = sqrt(t_std[i]) ;

}

for(int i=0 ; i<n_templates ; ++i) {

S_std[i] = lrate2 ;

}

manual_seed(seed_) ;

fill_random_uniform(V->matValue,-1,1) ;

fill_random_uniform(V_b->matValue,-1,1) ;

// fill_random_uniform(V_direct->matValue,-0.0001,0.0001) ;

fill_random_uniform(W->matValue,-1,1) ;

fill_random_uniform(W_b->matValue,-1,1) ;

}

else {

params << paramsvalues;

}

params.makeSharedValue(paramsvalues);

if (!training_mode) {

for(int i=0 ; i<n_templates ; ++i) {

sigma_S[i]->value[0] *= sigma_S[i]->value[0] ;

}

for(int i=0 ; i<n_templates ; ++i) {

for(int j=0 ; j<sigma_square[i]->matValue.length() ; ++j) {

for(int k=0 ; k<sigma_square[i]->matValue.width() ; ++k) {

sigma_square[i]->matValue[j][k] = exp(sigma[i]->matValue[j][k]) ;

}

}

}

}

/*

for(int i=0 ; i<n_templates ; ++i) {

sigma_s_vec[i] = sigma_S[i]->value[0] ;

}

*/

target = Var(1 , "the target") ;

costs.resize(3) ;

costs[0] = stable_cross_entropy(y_before_transfer , target) ;

costs[1] = binary_classification_loss(y, target);

costs[2] = lift_output(y , target);

f_output = Func(input_index, y) ;

// displayVarFn(f_output , 0) ;

training_cost = hconcat(sum(hconcat(costs[0] & penalties)));

training_cost->setName("training cost");

test_costs = hconcat(costs);

test_costs->setName("testing cost");

output_target_to_costs = Func(y & target , test_costs) ;

test_costf = Func(input_index & target , y & test_costs);

}

}
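The template-selection step at the top of build_() walks train_set, whose rows are assumed (as in the code) to hold a molecule id and a 0/1 label. It keeps the first n_active_templates distinct ids labelled 1, then the first n_inactive_templates distinct ids labelled 0 that were not already chosen, and stops with an error if either class runs short. A minimal standalone sketch of that logic, using a std::vector of (id, label) pairs instead of a VMat and a C++ exception instead of PLERROR; `selectTemplateIds` is a hypothetical helper, not part of PLearn:

```cpp
#include <cassert>
#include <set>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper mirroring the two selection loops in build_().
// Each row is (molecule id, label), with label 1 = active, 0 = inactive.
std::vector<int> selectTemplateIds(const std::vector<std::pair<int, int>>& rows,
                                   int n_active, int n_inactive)
{
    assert(n_active >= 0 && n_inactive >= 0);
    std::vector<int> ids;
    std::set<int> found;  // ids already chosen, shared by both passes

    // One pass over the rows per class, taking the first distinct ids.
    auto pick = [&](int wanted_label, int how_many, const char* what) {
        for (const auto& row : rows) {
            if (how_many == 0) break;
            if (row.second == wanted_label && found.count(row.first) == 0) {
                ids.push_back(row.first);
                found.insert(row.first);
                --how_many;
            }
        }
        if (how_many > 0)
            throw std::runtime_error(std::string("not enough ") + what + " in the dataset");
    };

    pick(1, n_active, "actives");      // active templates come first
    pick(0, n_inactive, "inactives");  // then the inactive ones
    return ids;
}
```

Because the `found` set is shared, an id can never be selected twice, which matches the single `found` set used by both loops in build_().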

string PLearn::MoleculeTemplateLearner::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file MoleculeTemplateLearner.cc.

void PLearn::MoleculeTemplateLearner::compute_S_mean_std(Vec &t_mean, Vec &t_std)

Definition at line 481 of file MoleculeTemplateLearner.cc.

References PLearn::endl(), PLearn::TVec< T >::fill(), i, input_index, j, PLearn::VMat::length(), Molecules, n_templates, S, PLearn::sqrt(), PLearn::square(), temp_S, and PLearn::PLearner::train_set.

Referenced by build_().

{

int l = train_set->length() ;

Vec current_S(n_templates) ;

Func computeS(input_index , temp_S ) ;

Mat valueS(l,n_templates) ;

Vec training_row(2) ;

Vec current_index(1) ;

t_mean.fill(0) ;

t_std.fill(0) ;

computeS->recomputeParents();

FILE * f = fopen("nicolas.txt","wt") ;

for(int i=0 ; i<l ; ++i) {

train_set->getRow(i , training_row) ;

current_index[0] = training_row[0] ;

for(int j=0 ; j<n_templates ; ++j) {

PP<WeightedLogGaussian> ppp = dynamic_cast<WeightedLogGaussian*>( (Variable*) S[j]); //->molecule = Molecules[(int)training_row[0]] ;

ppp->molecule = Molecules[(int)training_row[0]] ;

}

computeS->fprop(current_index , current_S ) ;

for(int j=0 ; j<n_templates ; ++j) {

valueS[i][j] = current_S[j] ;

t_mean[j] += current_S[j] ;

cout << i << " " << current_S[j] << endl ;

}

fprintf( f , "%f %f %d\n" , current_S[0] , current_S[1] , training_row[1] > 0 ? 1 : -1 ) ;

}

fclose(f) ;

for(int i=0 ; i<n_templates ; ++i) {

t_mean[i] /= l ; // divide the accumulated sum by l exactly once

}

for(int i=0 ; i<l ; ++i) {

for(int j=0 ; j<n_templates ; ++j) {

t_std[j] += square(valueS[i][j] - t_mean[j]) ;

}

}

for(int i=0 ; i<n_templates ; ++i) {

t_std[i] /= l ;

t_std[i] = sqrt(t_std[i]) ;

}

}
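compute_S_mean_std() gathers, for every training example, the score of each template and is meant to produce per-template means and standard deviations. build_() then stores the mean in mu_S and the square root of the deviation in sigma_S, so that sigma_square_S equals the deviation and S_after_scaling = (S - mu_S) / sigma_square_S is a standardized score. A plain C++ sketch of that computation, dividing the column sums by l once and using std::vector in place of PLearn's Mat and Vec; `columnMeanStd` and `standardize` are illustrative names:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Per-column mean and population standard deviation of an l x n table:
// one row per training example, one column per template score.
void columnMeanStd(const std::vector<std::vector<double>>& values,
                   std::vector<double>& mean, std::vector<double>& stddev)
{
    assert(!values.empty());
    const std::size_t l = values.size();
    const std::size_t n = values[0].size();
    mean.assign(n, 0.0);
    stddev.assign(n, 0.0);
    for (const auto& row : values) {
        assert(row.size() == n);
        for (std::size_t j = 0; j < n; ++j)
            mean[j] += row[j];
    }
    for (std::size_t j = 0; j < n; ++j)
        mean[j] /= static_cast<double>(l);  // divide the sum once by l
    for (const auto& row : values)
        for (std::size_t j = 0; j < n; ++j)
            stddev[j] += (row[j] - mean[j]) * (row[j] - mean[j]);
    for (std::size_t j = 0; j < n; ++j)
        stddev[j] = std::sqrt(stddev[j] / static_cast<double>(l));
}

// Standardized score, the quantity S_after_scaling is built to compute.
double standardize(double s, double mean, double stddev)
{
    assert(stddev > 0.0);
    return (s - mean) / stddev;
}
```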

void PLearn::MoleculeTemplateLearner::computeCostsFromOutputs(const Vec &input, const Vec &output, const Vec &target, Vec &costs) const [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 655 of file MoleculeTemplateLearner.cc.

References output_target_to_costs, and PLERROR.

{

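PLERROR("MoleculeTemplateLearner::computeCostsFromOutputs is not implemented; use computeOutputAndCosts instead") ;

// Compute the costs from *already* computed output (unreachable after PLERROR, kept for reference).

output_target_to_costs->fprop(output & target , costs) ;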

}

void PLearn::MoleculeTemplateLearner::computeOutput(const Vec &input, Vec &output) const [virtual]

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 648 of file MoleculeTemplateLearner.cc.

References f_output, and PLearn::TVec< T >::resize().

{

output.resize(1);

f_output->fprop(input,output) ;

}
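computeOutput() simply evaluates f_output, the function graph built in build_(): the standardized template scores s (temp_S) feed a tanh hidden layer, y_before_transfer = W * tanh(V * s + V_b) + W_b, and y = sigmoid(y_before_transfer). The sketch below reproduces only that forward pass in plain C++, taking s as given (the WeightedLogGaussian scoring that produces S is not reproduced); `forward`, `RealVec` and `RealMat` are illustrative names:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using RealVec = std::vector<double>;
using RealMat = std::vector<RealVec>;  // row-major: M[row][col]

// y = sigmoid(W * tanh(V * s + V_b) + W_b) for the single output unit.
// V is nhidden x n_templates, V_b and W have nhidden entries, W_b is a scalar.
double forward(const RealMat& V, const RealVec& V_b,
               const RealVec& W, double W_b, const RealVec& s)
{
    assert(V.size() == V_b.size() && V.size() == W.size());
    double y_before_transfer = W_b;
    for (std::size_t h = 0; h < V.size(); ++h) {
        assert(V[h].size() == s.size());
        double a = V_b[h];
        for (std::size_t t = 0; t < s.size(); ++t)
            a += V[h][t] * s[t];
        y_before_transfer += W[h] * std::tanh(a);  // hidden unit hl[h]
    }
    return 1.0 / (1.0 + std::exp(-y_before_transfer));  // y in (0, 1)
}
```

Note that with nhidden = 0 the sum is empty and y reduces to sigmoid(W_b); there is no direct template-to-output connection, since V_direct is commented out in build_().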

void PLearn::MoleculeTemplateLearner::computeOutputAndCosts(const Vec &input, const Vec &target, Vec &output, Vec &costs) const [virtual]

The default implementation calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 663 of file MoleculeTemplateLearner.cc.

References test_costf.

{

test_costf->fprop(input & target, output & costs);

}

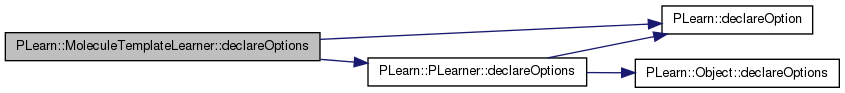

void PLearn::MoleculeTemplateLearner::declareOptions(OptionList &ol) [static, protected]

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 94 of file MoleculeTemplateLearner.cc.

References batch_size, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), PLearn::OptionBase::learntoption, lrate2, n_active_templates, n_inactive_templates, nhidden, optimizer, paramsvalues, templates, training_mode, and weight_decay.

{

// ### Declare all of this object's options here

// ### For the "flags" of each option, you should typically specify

// ### one of OptionBase::buildoption, OptionBase::learntoption or

// ### OptionBase::tuningoption. Another possible flag to be combined with

// ### is OptionBase::nosave

// ### ex:

declareOption(ol, "nhidden", &MoleculeTemplateLearner::nhidden, OptionBase::buildoption,

"Number of hidden units in the hidden layer\n");

declareOption(ol, "weight_decay", &MoleculeTemplateLearner::weight_decay, OptionBase::buildoption,

"L1 weight decay coefficient applied to the hidden-layer weights V\n");

declareOption(ol, "batch_size", &MoleculeTemplateLearner::batch_size, OptionBase::buildoption,

"How many samples to use to estimate the average gradient before updating the weights\n"

"0 is equivalent to specifying training_set->length() \n");

declareOption(ol, "optimizer", &MoleculeTemplateLearner::optimizer, OptionBase::buildoption,

"Specify the optimizer to use\n");

declareOption(ol, "n_active_templates", &MoleculeTemplateLearner::n_active_templates, OptionBase::buildoption,

"Number of distinct active molecules from the training set used as initial templates\n");

declareOption(ol, "n_inactive_templates", &MoleculeTemplateLearner::n_inactive_templates, OptionBase::buildoption,

"Number of distinct inactive molecules from the training set used as initial templates\n");

declareOption(ol, "lrate2", &MoleculeTemplateLearner::lrate2, OptionBase::buildoption,

"The lrate2\n");

declareOption(ol, "training_mode", &MoleculeTemplateLearner::training_mode, OptionBase::buildoption,

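"If true, templates are initialized from the training set and learned; if false, the network is rebuilt from the saved templates and paramsvalues\n");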

declareOption(ol, "templates", &MoleculeTemplateLearner::templates, OptionBase::learntoption,

"The learned molecule templates (chemical features, deviations, geometry and VRML file)\n");

declareOption(ol, "paramsvalues", &MoleculeTemplateLearner::paramsvalues, OptionBase::learntoption,

"Values of all the learned parameters (shared storage for params)\n");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
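build_() appends affine_transform_weight_penalty(V, weight_decay, 0, "L1") to penalties, and the per-example training cost is the cross-entropy costs[0] plus those penalties. Assuming the penalty amounts to weight_decay times the sum of absolute entries of V (whether PLearn's affine_transform_weight_penalty treats one row of V as biases and excludes it should be checked against its documentation), the objective can be sketched as follows; both function names are hypothetical:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Assumed L1 penalty: weight_decay * sum_ij |V_ij| (see the caveat above).
double l1Penalty(const std::vector<std::vector<double>>& V, double weight_decay)
{
    assert(weight_decay >= 0.0);
    double total = 0.0;
    for (const auto& row : V)
        for (double v : row)
            total += std::fabs(v);
    return weight_decay * total;
}

// Per-example objective: cross-entropy term plus the penalty, mirroring
// training_cost = sum(costs[0] & penalties) in build_().
double trainingCost(double cross_entropy,
                    const std::vector<std::vector<double>>& V,
                    double weight_decay)
{
    return cross_entropy + l1Penalty(V, weight_decay);
}
```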

static const PPath& PLearn::MoleculeTemplateLearner::declaringFile() [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 168 of file MoleculeTemplateLearner.h.

MoleculeTemplateLearner * PLearn::MoleculeTemplateLearner::deepCopy(CopiesMap &copies) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 92 of file MoleculeTemplateLearner.cc.

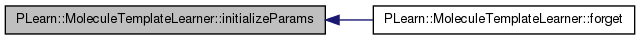

void PLearn::MoleculeTemplateLearner::forget() [virtual]

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner). In this class, forget() only calls initializeParams(), which is currently empty.

A typical forget() method should do the following:

Reimplemented from PLearn::PLearner.

Definition at line 468 of file MoleculeTemplateLearner.cc.

References initializeParams().

{

initializeParams() ;

}

OptionList & PLearn::MoleculeTemplateLearner::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file MoleculeTemplateLearner.cc.

OptionMap & PLearn::MoleculeTemplateLearner::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file MoleculeTemplateLearner.cc.

RemoteMethodMap & PLearn::MoleculeTemplateLearner::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 92 of file MoleculeTemplateLearner.cc.

TVec< string > PLearn::MoleculeTemplateLearner::getTestCostNames() const [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method).

Implements PLearn::PLearner.

Definition at line 669 of file MoleculeTemplateLearner.cc.

{

// Return the names of the costs computed by computeCostsFromOutputs

// (these may or may not be exactly the same as what's returned by getTrainCostNames).

// ...

//TODO : put some code here

TVec<string> t(3) ;

t[0] = "NLL" ;

t[1] = "binary_class_error" ;

t[2] = "lift_output" ;

return t ;

}
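The first two cost names correspond to costs[0] = stable_cross_entropy(y_before_transfer, target) and costs[1] = binary_classification_loss(y, target) in build_(). A sketch of both for a single example, assuming a 0/1 target and a decision threshold of 0.5 on y (PLearn's exact conventions for binary_classification_loss may differ; lift_output is not reproduced). The cross-entropy is written in terms of the pre-sigmoid activation a, using the identity -[t log sigmoid(a) + (1 - t) log(1 - sigmoid(a))] = softplus(a) - t * a, so that a large |a| does not overflow; the function names are illustrative:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Cross-entropy of a sigmoid output, computed from the pre-sigmoid
// activation a (y_before_transfer) for a target t in {0, 1}.
double stableCrossEntropy(double a, double t)
{
    assert(t == 0.0 || t == 1.0);
    // softplus(a) = log(1 + exp(a)), evaluated without overflow
    double softplus = std::max(a, 0.0) + std::log1p(std::exp(-std::fabs(a)));
    return softplus - t * a;
}

// 0/1 classification error, assuming a decision threshold of 0.5 on y.
double binaryClassError(double y, double t)
{
    assert(t == 0.0 || t == 1.0);
    double predicted = (y > 0.5) ? 1.0 : 0.0;
    return predicted == t ? 0.0 : 1.0;
}
```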

TVec< string > PLearn::MoleculeTemplateLearner::getTrainCostNames() const [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 683 of file MoleculeTemplateLearner.cc.

{

TVec<string> t(3) ;

t[0] = "NLL" ;

t[1] = "binary_class_error" ;

t[2] = "lift_output" ;

return t ;

}

void PLearn::MoleculeTemplateLearner::initializeParams()

Definition at line 692 of file MoleculeTemplateLearner.cc.

Referenced by forget().

{

}

void PLearn::MoleculeTemplateLearner::makeDeepCopyFromShallowCopy(CopiesMap &copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 410 of file MoleculeTemplateLearner.cc.

References costs, PLearn::deepCopyField(), f_output, hl, input_index, PLearn::PLearner::makeDeepCopyFromShallowCopy(), mu, mu_S, optimizer, output_target_to_costs, params, paramsvalues, penalties, S, S_after_scaling, S_std, sigma, sigma_S, sigma_s_vec, sigma_square, sigma_square_S, target, temp_output, temp_S, templates, test_costf, test_costs, training_cost, V, V_b, V_direct, PLearn::varDeepCopyField(), W, W_b, y, and y_before_transfer.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// ### Call deepCopyField on all "pointer-like" fields

// ### that you wish to be deepCopied rather than

// ### shallow-copied.

// ### ex:

// deepCopyField(trainvec, copies);

varDeepCopyField(input_index,copies) ;

deepCopyField(mu , copies) ;

deepCopyField(sigma , copies) ;

deepCopyField(mu_S , copies) ;

deepCopyField(sigma_S , copies) ;

deepCopyField(sigma_square_S , copies) ;

deepCopyField(sigma_square , copies) ;

deepCopyField(S , copies) ;

deepCopyField(S_after_scaling, copies) ;

deepCopyField(params , copies) ;

deepCopyField(penalties , copies) ;

varDeepCopyField(V,copies) ;

varDeepCopyField(W,copies) ;

varDeepCopyField(V_b,copies) ;

varDeepCopyField(W_b,copies) ;

varDeepCopyField(V_direct,copies) ;

varDeepCopyField(hl,copies) ;

varDeepCopyField(y,copies) ;

varDeepCopyField(y_before_transfer,copies) ;

varDeepCopyField(training_cost,copies) ;

varDeepCopyField(test_costs,copies) ;

varDeepCopyField(target,copies) ;

varDeepCopyField(temp_S,copies) ;

deepCopyField(costs , copies) ;

deepCopyField(temp_output , copies) ;

deepCopyField(S_std , copies) ;

deepCopyField(sigma_s_vec , copies) ;

deepCopyField(f_output , copies) ;

deepCopyField(output_target_to_costs , copies) ;

deepCopyField(test_costf , copies) ;

deepCopyField(optimizer , copies) ;

deepCopyField(templates , copies) ;

deepCopyField(paramsvalues , copies) ;

}

int PLearn::MoleculeTemplateLearner::outputsize() const [virtual]

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 460 of file MoleculeTemplateLearner.cc.

References noutputs.

{

// Compute and return the size of this learner's output (which typically

// may depend on its inputsize(), targetsize() and set options).

return noutputs ;

}

void PLearn::MoleculeTemplateLearner::test(VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs = 0, VMat testcosts = 0) const [virtual]

Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts.

The default version repeatedly calls computeOutputAndCosts or computeCostsOnly. Note that neither test_stats->forget() nor test_stats->finalize() is called, so that you should call them yourself (respectively before and after calling this method) if you don't plan to accumulate statistics.

Reimplemented from PLearn::PLearner.

Definition at line 696 of file MoleculeTemplateLearner.cc.

References i, n_templates, S, and PLearn::PLearner::test().

{

for(int i=0 ; i<n_templates ; ++i) {

PP<WeightedLogGaussian> ppp = dynamic_cast<WeightedLogGaussian*>( (Variable*) S[i]); //->molecule = Molecules[(int)training_row[0]] ;

ppp->test_set = testset ;

}

inherited::test(testset , test_stats , testoutputs , testcosts) ;

}

void PLearn::MoleculeTemplateLearner::train() [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 538 of file MoleculeTemplateLearner.cc.

References PLearn::endl(), i, input_index, PLearn::VMat::length(), PLearn::meanOf(), Molecules, mu, n_templates, PLearn::PLearner::nstages, optimizer, output_target_to_costs, params, PLERROR, PLearn::PLearner::report_progress, S, sigma_square, PLearn::PLearner::stage, target, templates, test_costf, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_cost, and PLearn::ProgressBar::update().

{

if(!train_stats) // make a default stats collector, in case there's none

train_stats = new VecStatsCollector();

int l = train_set->length();

int nsamples = 1;

Func paramf = Func(input_index & target, training_cost); // parameterized function to optimize

Var totalcost = meanOf(train_set, paramf, nsamples);

if(optimizer)

{

optimizer->setToOptimize(params, totalcost);

optimizer->build();

optimizer->reset();

}

else PLERROR("MoleculeTemplateLearner::train can't train without setting an optimizer first!");

ProgressBar* pb = 0;

if(report_progress>0) {

pb = new ProgressBar("Training MoleculeTemplateLearner stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

}

// int optstage_per_lstage = l/nsamples;

while(stage<nstages)

{

optimizer->nstages = 1 ; // optstage_per_lstage;

double mean_error = 0.0 ;

for(int k=0 ; k<train_set->length() ; ++k) {

//update the template

for(int i=0 ; i<n_templates ; ++i)

{

templates[i]->chem << mu[i]->matValue ;

templates[i]->dev << sigma_square[i]->matValue ;

}

//align only the next training example

Mat temp_mat ;

Vec training_row(2) ;

train_set->getRow(k , training_row) ;

for(int i=0 ; i<n_templates ; ++i) {

// string s = train_set->getString(k,0) ;

// performLP(Molecules[(int)training_row[0]],templates[i], temp_mat , false) ;

// W_lp[i][(int)training_row[0]]->matValue << temp_mat ;

PP<WeightedLogGaussian> ppp = dynamic_cast<WeightedLogGaussian*>( (Variable*) S[i]); //->molecule = Molecules[(int)training_row[0]] ;

ppp->molecule = Molecules[(int)training_row[0]] ;

// S[i]->molecule = Molecules[(int)training_row[0]] ;

}

// clear statistics of previous epoch

train_stats->forget();

// displayVarFn(f_output , true) ;

optimizer->optimizeN(*train_stats);

// temp_S->verifyGradient(1e-4) ;

train_stats->finalize(); // finalize statistics for this epoch

cout << "Example " << k << " train objective: " << train_stats->getMean() << endl;

mean_error += train_stats->getMean()[0] ;

if(pb)

pb->update(stage);

}

cout << endl << endl <<"Epoch " << stage << " mean error " << mean_error/l << endl << endl;

++stage;

}

/*

Mat temp_mat ;

for(int i=0 ; i<n_templates ; ++i) {

W_lp[i].resize(Molecules.size()) ;

for(unsigned int j=0 ; j<Molecules.size() ; ++j) {

performLP(Molecules[j],templates[i], temp_mat , false) ;

W_lp[i][j]->matValue << temp_mat ;

}

}

*/

for(int i=0 ; i<n_templates ; ++i) {

cout << "mu[" << i << "] " << mu[i]->matValue << endl ;

cout << "sigma_square[" << i << "] " << sigma_square[i]->matValue << endl ;

}

output_target_to_costs->recomputeParents();

test_costf->recomputeParents();

delete pb; // release the progress bar allocated above (deleting a null pointer is a no-op)

// molecule = NULL ;

}
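For every stage, train() makes one pass over train_set in which each example's molecule is attached to the WeightedLogGaussian score variables and the optimizer runs a single step (optimizer->nstages = 1); the mean of the recorded costs is printed as the epoch error. The sketch below shows only that loop structure, with plain stochastic gradient descent on a logistic-regression model standing in for the PLearn Optimizer and the template graph. `Example` and `trainPerExample` are hypothetical, and the model is deliberately simpler than the learner's:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Example { std::vector<double> x; double t; };  // features, target in {0, 1}

// One gradient step per example, repeated for nstages epochs.
// Returns the mean per-example cross-entropy of the last epoch,
// each cost being measured just before that example's update.
double trainPerExample(const std::vector<Example>& data, std::vector<double>& w,
                       double& b, int nstages, double lrate)
{
    assert(!data.empty() && nstages > 0);
    double mean_error = 0.0;
    for (int stage = 0; stage < nstages; ++stage) {
        mean_error = 0.0;
        for (const Example& ex : data) {
            assert(ex.x.size() == w.size());
            double a = b;
            for (std::size_t i = 0; i < w.size(); ++i) a += w[i] * ex.x[i];
            double y = 1.0 / (1.0 + std::exp(-a));
            // stable cross-entropy: softplus(a) - t * a
            mean_error += std::fmax(a, 0.0) + std::log1p(std::exp(-std::fabs(a))) - ex.t * a;
            double grad = y - ex.t;  // derivative of the cross-entropy w.r.t. a
            for (std::size_t i = 0; i < w.size(); ++i) w[i] -= lrate * grad * ex.x[i];
            b -= lrate * grad;
        }
        mean_error /= static_cast<double>(data.size());
    }
    return mean_error;
}
```

Updating after every example, as train() does, means the epoch error mixes costs measured under slightly different parameters; it is a progress indicator rather than the exact training cost at the end of the epoch.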

StaticInitializer PLearn::MoleculeTemplateLearner::_static_initializer_ [static]

Reimplemented from PLearn::PLearner.

Definition at line 168 of file MoleculeTemplateLearner.h.

int PLearn::MoleculeTemplateLearner::batch_size

Definition at line 110 of file MoleculeTemplateLearner.h.

Referenced by declareOptions().

bool PLearn::MoleculeTemplateLearner::builded

Definition at line 118 of file MoleculeTemplateLearner.h.

Referenced by build_().

VarArray PLearn::MoleculeTemplateLearner::costs [protected]

Definition at line 86 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Func PLearn::MoleculeTemplateLearner::f_output [protected]

Definition at line 93 of file MoleculeTemplateLearner.h.

Referenced by build_(), computeOutput(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::hl [protected]

Definition at line 79 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::input_index [protected]

Definition at line 69 of file MoleculeTemplateLearner.h.

Referenced by build_(), compute_S_mean_std(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::MoleculeTemplateLearner::lrate2

Definition at line 115 of file MoleculeTemplateLearner.h.

Referenced by build_(), and declareOptions().

vector<PMolecule> PLearn::MoleculeTemplateLearner::Molecules [protected]

Definition at line 97 of file MoleculeTemplateLearner.h.

Referenced by build_(), compute_S_mean_std(), and train().

VarArray PLearn::MoleculeTemplateLearner::mu [protected]

Definition at line 70 of file MoleculeTemplateLearner.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

VarArray PLearn::MoleculeTemplateLearner::mu_S [protected]

Definition at line 71 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

int PLearn::MoleculeTemplateLearner::n_active_templates

Definition at line 111 of file MoleculeTemplateLearner.h.

Referenced by build_(), and declareOptions().

int PLearn::MoleculeTemplateLearner::n_actives [protected]

Definition at line 88 of file MoleculeTemplateLearner.h.

Referenced by build_().

int PLearn::MoleculeTemplateLearner::n_inactive_templates

Definition at line 112 of file MoleculeTemplateLearner.h.

Referenced by build_(), and declareOptions().

int PLearn::MoleculeTemplateLearner::n_inactives [protected]

Definition at line 89 of file MoleculeTemplateLearner.h.

int PLearn::MoleculeTemplateLearner::n_templates

Definition at line 113 of file MoleculeTemplateLearner.h.

Referenced by build_(), compute_S_mean_std(), test(), and train().

int PLearn::MoleculeTemplateLearner::nhidden

Definition at line 108 of file MoleculeTemplateLearner.h.

Referenced by build_(), and declareOptions().

int PLearn::MoleculeTemplateLearner::noutputs

Definition at line 110 of file MoleculeTemplateLearner.h.

Referenced by outputsize().

PP<Optimizer> PLearn::MoleculeTemplateLearner::optimizer

Definition at line 121 of file MoleculeTemplateLearner.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 94 of file MoleculeTemplateLearner.h.

Referenced by build_(), computeCostsFromOutputs(), makeDeepCopyFromShallowCopy(), and train().

VarArray PLearn::MoleculeTemplateLearner::params [protected]

Definition at line 76 of file MoleculeTemplateLearner.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 124 of file MoleculeTemplateLearner.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

VarArray PLearn::MoleculeTemplateLearner::penalties [protected]

Definition at line 77 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

VarArray PLearn::MoleculeTemplateLearner::S [mutable, protected]

Definition at line 74 of file MoleculeTemplateLearner.h.

Referenced by build_(), compute_S_mean_std(), makeDeepCopyFromShallowCopy(), test(), and train().

Definition at line 75 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Vec PLearn::MoleculeTemplateLearner::S_std [protected]

Definition at line 90 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 114 of file MoleculeTemplateLearner.h.

VarArray PLearn::MoleculeTemplateLearner::sigma [protected]

Definition at line 70 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

VarArray PLearn::MoleculeTemplateLearner::sigma_S [protected]

Definition at line 71 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Vec PLearn::MoleculeTemplateLearner::sigma_s_vec [protected]

Definition at line 91 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 73 of file MoleculeTemplateLearner.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 72 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::target [protected]

Definition at line 84 of file MoleculeTemplateLearner.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

VarArray PLearn::MoleculeTemplateLearner::temp_output [protected]

Definition at line 87 of file MoleculeTemplateLearner.h.

Referenced by makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::temp_S [protected]

Definition at line 85 of file MoleculeTemplateLearner.h.

Referenced by build_(), compute_S_mean_std(), and makeDeepCopyFromShallowCopy().

Definition at line 123 of file MoleculeTemplateLearner.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Func PLearn::MoleculeTemplateLearner::test_costf [protected]

Definition at line 95 of file MoleculeTemplateLearner.h.

Referenced by build_(), computeOutputAndCosts(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::MoleculeTemplateLearner::test_costs [protected]

Definition at line 83 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::training_cost [protected]

Definition at line 82 of file MoleculeTemplateLearner.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 117 of file MoleculeTemplateLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::MoleculeTemplateLearner::V [protected]

Definition at line 78 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::V_b [protected]

Definition at line 78 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::V_direct [protected]

Definition at line 78 of file MoleculeTemplateLearner.h.

Referenced by makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::W [protected]

Definition at line 78 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MoleculeTemplateLearner::W_b [protected]

Definition at line 78 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 109 of file MoleculeTemplateLearner.h.

Referenced by build_(), and declareOptions().

Var PLearn::MoleculeTemplateLearner::y [protected]

Definition at line 80 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 81 of file MoleculeTemplateLearner.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().
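Most of the members above are referenced by makeDeepCopyFromShallowCopy(), which turns a shallow copy into a deep copy using a CopiesMap. The standalone sketch below shows that general idea only and is not PLearn's implementation. Node, the typed CopiesMap alias, deepCopyField() and the two-field Learner are simplified, hypothetical stand-ins. The point it shows: copies are recorded per original object, so two fields that shared one object before copying still share a single (new) copy afterwards.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for a graph node such as a Var.
struct Node {
    std::vector<double> value;
};

// Hypothetical, simplified copies map: original object -> its deep copy.
using CopiesMap = std::map<const Node*, std::shared_ptr<Node>>;

// Replace 'field' by a deep copy, creating at most one copy per original.
void deepCopyField(std::shared_ptr<Node>& field, CopiesMap& copies) {
    if (!field) return;
    auto it = copies.find(field.get());
    if (it == copies.end()) {
        auto copy = std::make_shared<Node>(*field);  // copy the pointee
        it = copies.emplace(field.get(), copy).first;
    }
    field = it->second;
}

struct Learner {
    std::shared_ptr<Node> mu;     // illustrative fields only
    std::shared_ptr<Node> sigma;

    // After a member-wise (shallow) copy, both learners point at the same
    // Nodes; this gives the copy its own Nodes while keeping any sharing
    // that existed between its fields.
    void makeDeepCopyFromShallowCopy(CopiesMap& copies) {
        deepCopyField(mu, copies);
        deepCopyField(sigma, copies);
    }

    Learner deepCopy() const {
        Learner copy = *this;  // shallow copy first
        CopiesMap copies;
        copy.makeDeepCopyFromShallowCopy(copies);
        return copy;
    }
};
```

Keying the map on the original object, rather than copying each field independently, is what keeps shared substructure shared. This matters when several members refer to parts of the same computation graph.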

1.7.4