PLearn 0.1

Computes the undirected softmax used in deep belief nets. More...

#include <RBMClassificationModule.h>

Public Member Functions | |

| RBMClassificationModule () | |

| Default constructor. | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| given the input, compute the output (possibly resize it appropriately) | |

| virtual void | fprop (const Mat &inputs, Mat &outputs) |

| Mini-batch fprop. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| Adapt based on the output gradient: this method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then). | |

| virtual void | forget () |

| Reset the parameters to the state they would be in before starting training. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMClassificationModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| PP< RBMConnection > | previous_to_last |

| Connection between the previous layer, and last_layer. | |

| PP< RBMBinomialLayer > | last_layer |

| Top-level layer (the one in the middle if we unfold) | |

| PP< RBMMatrixConnection > | last_to_target |

| Connection between last_layer and target_layer. | |

| PP< RBMMultinomialLayer > | target_layer |

| Layer containing the one-hot vector containing the target (or its prediction) | |

| PP< RBMMixedConnection > | joint_connection |

| Connection grouping previous_to_last and last_to_target. | |

| int | last_size |

| Size of last_layer. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| Vec | out_act |

| stores output activations | |

Private Types | |

| typedef OnlineLearningModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| Vec | d_target_act |

| Stores the gradient of the cost at input of target_layer. | |

| Vec | d_last_act |

| Stores the gradient of the cost at input of last_layer. | |

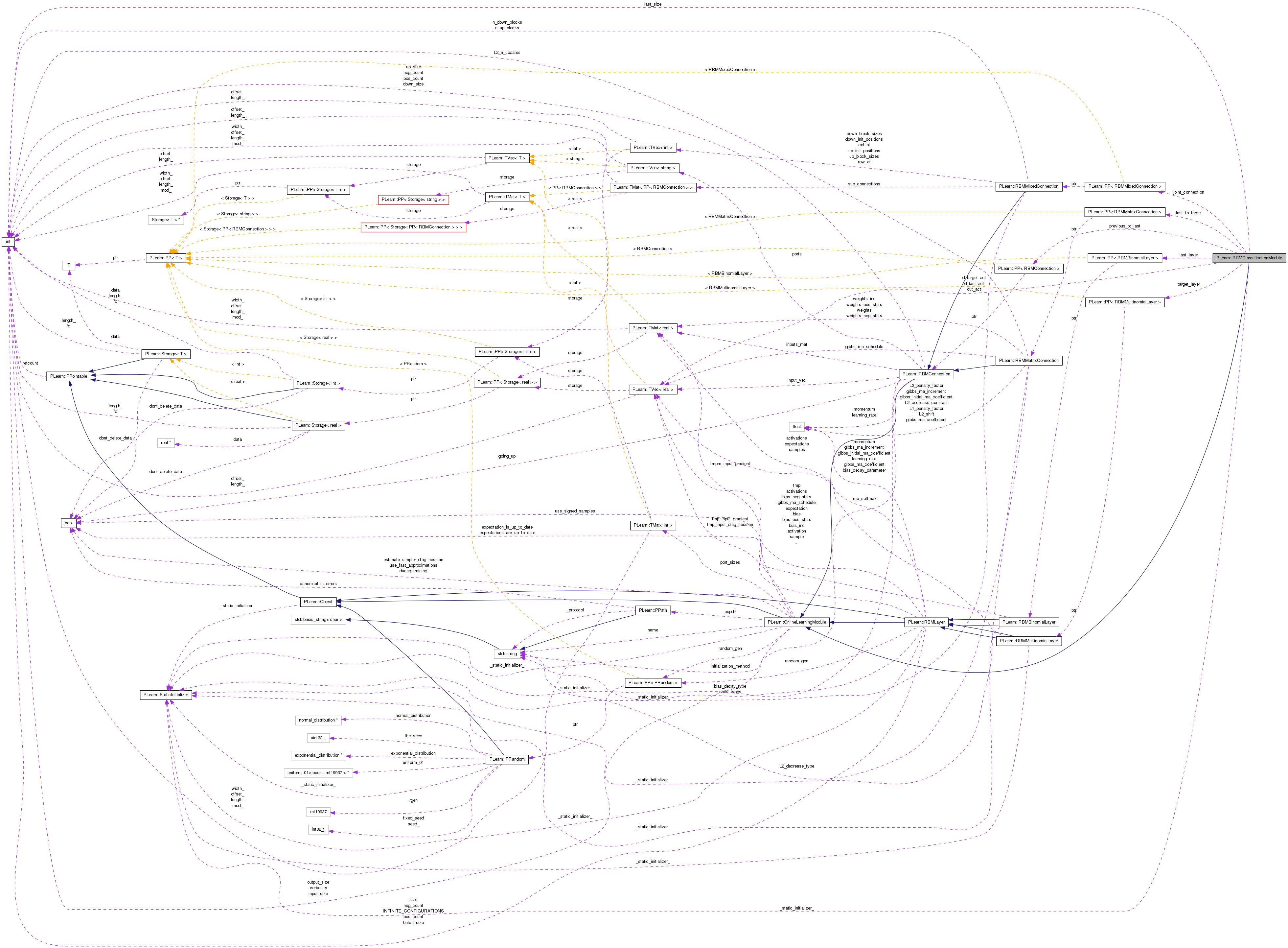

Computes the undirected softmax used in deep belief nets.

This module contains an RBMConnection, an RBMBinomialLayer, an RBMMatrixConnection (transposed) and an RBMMultinomialLayer (target). The two RBMConnections are combined in joint_connection.

Definition at line 59 of file RBMClassificationModule.h.

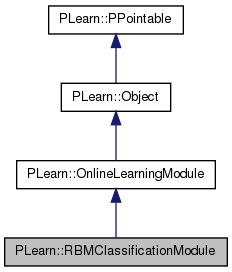

typedef OnlineLearningModule PLearn::RBMClassificationModule::inherited [private] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 61 of file RBMClassificationModule.h.

| PLearn::RBMClassificationModule::RBMClassificationModule | ( | ) |

| string PLearn::RBMClassificationModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file RBMClassificationModule.cc.

| OptionList & PLearn::RBMClassificationModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file RBMClassificationModule.cc.

| RemoteMethodMap & PLearn::RBMClassificationModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file RBMClassificationModule.cc.

| bool PLearn::RBMClassificationModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file RBMClassificationModule.cc.

| Object * PLearn::RBMClassificationModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RBMClassificationModule.cc.

| void PLearn::RBMClassificationModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file RBMClassificationModule.cc.

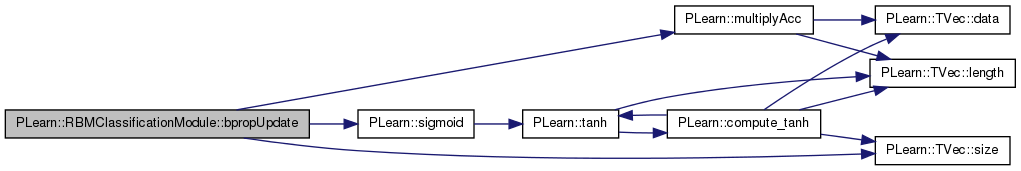

| void PLearn::RBMClassificationModule::bpropUpdate | ( | const Vec & input, const Vec & output, Vec & input_gradient, const Vec & output_gradient, bool accumulate = false | ) | [virtual] |

Adapt based on the output gradient: this method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then).

Since sub-classes are supposed to learn online, the object is 'ready-to-be-used' just after any bpropUpdate. N.B. A default implementation is provided in the super-class, which just calls bpropUpdate(input, output, input_gradient, output_gradient) and ignores the input gradient. This version also allows obtaining the input gradient.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 247 of file RBMClassificationModule.cc.

References i, PLearn::multiplyAcc(), PLASSERT, PLASSERT_MSG, PLearn::sigmoid(), PLearn::TVec< T >::size(), and w.

    {
        // size checks
        PLASSERT( input.size() == input_size );
        PLASSERT( output.size() == output_size );
        PLASSERT( output_gradient.size() == output_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradient.size() == input_size,
                          "Cannot resize input_gradient AND accumulate into it" );
        }

        // bpropUpdate in target_layer,
        // assuming target_layer->activation is up-to-date, but it should be the
        // case if fprop() has been called just before.
        target_layer->bpropUpdate( target_layer->activation, output,
                                   d_target_act, output_gradient );

        // the tricky part is the backpropagation through last_to_target
        Vec last_act = last_layer->activation;
        for( int i=0 ; i<last_size ; i++ )
        {
            real* w = last_to_target->weights[i];
            d_last_act[i] = 0;
            for( int k=0 ; k<output_size ; k++ )
            {
                // dC/d( w_ik + last_act_i )
                real d_z = d_target_act[k]*(sigmoid(w[k] + last_act[i]));
                w[k] -= last_to_target->learning_rate * d_z;

                d_last_act[i] += d_z;
            }
        }

        // don't use bpropUpdate(), because the function is different here
        // last_layer->bias -= learning_rate * d_last_act;
        multiplyAcc( last_layer->bias, d_last_act, -(last_layer->learning_rate) );

        // at this point, the gradient can be backpropagated through
        // previous_to_last the usual way (even if output is wrong)
        previous_to_last->bpropUpdate( input, last_act,
                                       input_gradient, d_last_act, accumulate );
    }
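
The inner loop over last_to_target can be read in isolation. The following is a minimal, self-contained sketch that uses std::vector in place of PLearn's Vec and Mat; the function name backprop_last_to_target and the flat row-major weight layout are assumptions made for illustration and are not part of PLearn. It relies on the derivative of softplus being the sigmoid, which is why sigmoid appears when differentiating an activation built from softplus terms.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Logistic sigmoid; this is the derivative of softplus.
inline double sigmoid(double x)
{
    return 1.0 / (1.0 + std::exp(-x));
}

// softplus(x) = log(1 + exp(x)), written to avoid overflow for large |x|.
inline double softplus(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Hypothetical stand-alone version of the loop over last_to_target.
// weights is row-major: weights[i * output_size + k] stands for w_ik,
// with i indexing last_layer units and k indexing target units.
// On return, d_last_act[i] = sum_k d_target_act[k] * sigmoid(w_ik + last_act[i]),
// where each term uses the value of w_ik before its own update.
void backprop_last_to_target(const std::vector<double>& last_act,
                             const std::vector<double>& d_target_act,
                             double learning_rate,
                             std::vector<double>& weights,
                             std::vector<double>& d_last_act)
{
    const std::size_t last_size = last_act.size();
    const std::size_t output_size = d_target_act.size();
    assert(weights.size() == last_size * output_size);

    d_last_act.assign(last_size, 0.0);
    for (std::size_t i = 0; i < last_size; ++i)
    {
        double* w = weights.data() + i * output_size;
        for (std::size_t k = 0; k < output_size; ++k)
        {
            // Gradient with respect to the pre-softplus sum (w_ik + last_act_i).
            const double d_z = d_target_act[k] * sigmoid(w[k] + last_act[i]);
            w[k] -= learning_rate * d_z;   // plain gradient step on the weight
            d_last_act[i] += d_z;          // same gradient flows to last_act_i
        }
    }
}
```

Because w_ik and last_act_i enter the softplus only through their sum, the same d_z serves both as the weight gradient and as a contribution to the gradient on last_act_i.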

| void PLearn::RBMClassificationModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should call simply inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 161 of file RBMClassificationModule.cc.

    {
        inherited::build();
        build_();
    }
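
The build()/build_() convention described above can be illustrated outside PLearn. The following is a minimal sketch with hypothetical classes (BaseModule, DerivedModule, hidden_size and buffer are invented for this example); it only shows the calling pattern, in which each class's public build() first delegates to its parent and then runs its own private build_(), so that build() can safely be called again after an option changes.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical base class: build() runs this level's private build_().
class BaseModule
{
public:
    virtual ~BaseModule() {}
    virtual void build() { build_(); }

    std::vector<std::string> log;   // records the order of build_ calls

private:
    void build_() { log.push_back("BaseModule::build_"); }
};

// Hypothetical derived class following the same convention.
class DerivedModule : public BaseModule
{
    typedef BaseModule inherited;

public:
    std::size_t hidden_size = 0;    // an "option" that affects the architecture
    std::vector<double> buffer;     // internal storage sized from the option

    virtual void build()
    {
        inherited::build();         // parent levels are built first
        build_();                   // then this class's own part
    }

private:
    void build_()
    {
        // (Re)size internal storage from the current option values.
        buffer.resize(hidden_size);
        assert(buffer.size() == hidden_size);
        log.push_back("DerivedModule::build_");
    }
};
```

Calling build() a second time after changing hidden_size simply resizes buffer again, which is the "callable again at later times" property mentioned in the description.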

| void PLearn::RBMClassificationModule::build_ | ( | ) | [private] |

This does the actual building.

Check (and set) sizes

build joint_connection

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 102 of file RBMClassificationModule.cc.

References PLearn::endl(), and PLASSERT.

    {
        MODULE_LOG << "build_() called" << endl;

        if( !previous_to_last || !last_layer || !last_to_target || !target_layer )
        {
            MODULE_LOG << "build_() aborted because layers and connections were"
                          " not set" << endl;
            return;
        }

        input_size = previous_to_last->down_size;
        last_size = last_layer->size;
        output_size = target_layer->size;

        PLASSERT( previous_to_last->up_size == last_size );
        PLASSERT( last_to_target->up_size == last_size );
        PLASSERT( last_to_target->down_size == output_size );

        d_last_act.resize( last_size );
        d_target_act.resize( output_size );

        if( !joint_connection )
            joint_connection = new RBMMixedConnection();

        joint_connection->sub_connections.resize(1,2);
        joint_connection->sub_connections(0,0) = previous_to_last;
        joint_connection->sub_connections(0,1) = last_to_target;
        joint_connection->build();

        // If we have a random_gen, share it with the ones who do not
        if( random_gen )
        {
            if( !(previous_to_last->random_gen) )
            {
                previous_to_last->random_gen = random_gen;
                previous_to_last->forget();
            }
            if( !(last_layer->random_gen) )
            {
                last_layer->random_gen = random_gen;
                last_layer->forget();
            }
            if( !(last_to_target->random_gen) )
            {
                last_to_target->random_gen = random_gen;
                last_to_target->forget();
            }
            if( !(target_layer->random_gen) )
            {
                target_layer->random_gen = random_gen;
                target_layer->forget();
            }
            if( !(joint_connection->random_gen) )
                joint_connection->random_gen = previous_to_last->random_gen;
        }
    }

| string PLearn::RBMClassificationModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RBMClassificationModule.cc.

| void PLearn::RBMClassificationModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 63 of file RBMClassificationModule.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), joint_connection, last_layer, last_size, last_to_target, PLearn::OptionBase::learntoption, previous_to_last, and target_layer.

    {
        declareOption(ol, "previous_to_last",
                      &RBMClassificationModule::previous_to_last,
                      OptionBase::buildoption,
                      "Connection between the previous layer, and last_layer");

        declareOption(ol, "last_layer", &RBMClassificationModule::last_layer,
                      OptionBase::buildoption,
                      "Top-level layer (the one in the middle if we unfold)");

        declareOption(ol, "last_to_target",
                      &RBMClassificationModule::last_to_target,
                      OptionBase::buildoption,
                      "Connection between last_layer and target_layer");

        declareOption(ol, "target_layer", &RBMClassificationModule::target_layer,
                      OptionBase::buildoption,
                      "Layer containing the one-hot vector containing the target\n"
                      "(or its prediction).\n");

        declareOption(ol, "joint_connection",
                      &RBMClassificationModule::joint_connection,
                      OptionBase::learntoption,
                      "Connection grouping previous_to_last and last_to_target");

        declareOption(ol, "last_size", &RBMClassificationModule::last_size,
                      OptionBase::learntoption,
                      "Size of last_layer");

        /*
        declareOption(ol, "", &RBMClassificationModule::,
                      OptionBase::buildoption,
                      "");
        */

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::RBMClassificationModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 151 of file RBMClassificationModule.h.

| RBMClassificationModule * PLearn::RBMClassificationModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file RBMClassificationModule.cc.

| void PLearn::RBMClassificationModule::forget | ( | ) | [virtual] |

Reset the parameters to the state they would be in BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 298 of file RBMClassificationModule.cc.

References PLWARNING.

    {
        if( !random_gen )
        {
            PLWARNING("RBMClassificationModule: cannot forget() without"
                      " random_gen");
            return;
        }

        if( !(previous_to_last->random_gen) )
            previous_to_last->random_gen = random_gen;
        previous_to_last->forget();

        if( !(last_to_target->random_gen) )
            last_to_target->random_gen = random_gen;
        last_to_target->forget();

        if( !(joint_connection->random_gen) )
            joint_connection->random_gen = random_gen;
        joint_connection->forget();

        if( !(target_layer->random_gen) )
            target_layer->random_gen = random_gen;
        target_layer->forget();
    }

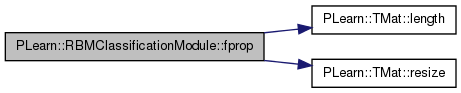

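| void PLearn::RBMClassificationModule::fprop | ( | const Mat & inputs, Mat & outputs | ) | [virtual] |

Mini-batch fprop.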

The default implementation in the super-class raises an error. Soon to be deprecated; use fprop(const TVec<Mat*>& ports_value) instead.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 217 of file RBMClassificationModule.cc.

References PLearn::TMat< T >::length(), and PLearn::TMat< T >::resize().

    {
        int batch_size = inputs.length();
        outputs.resize(batch_size, output_size);

        for (int k=0; k<batch_size; k++)
        {
            Vec tmp_out = outputs(k);
            fprop(inputs(k), tmp_out);
        }
    }

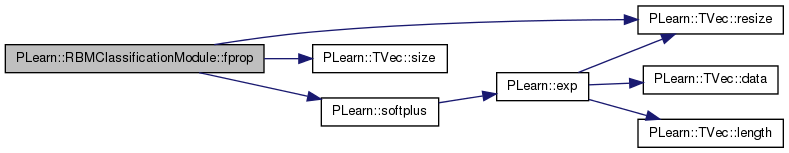

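| void PLearn::RBMClassificationModule::fprop | ( | const Vec & input, Vec & output | ) | const [virtual] |

Given the input, compute the output (possibly resize it appropriately).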

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 183 of file RBMClassificationModule.cc.

References i, j, m, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::softplus(), and w.

    {
        PLASSERT( input.size() == input_size );
        output.resize( output_size );

        // input is supposed to be an expectation or sample from the previous layer
        previous_to_last->setAsDownInput( input );

        // last_layer->activation = bias + previous_to_last_weights * input
        last_layer->getAllActivations( previous_to_last );

        // target_layer->activation =
        //      bias + sum_j softplus(W_ji + last_layer->activation[j])
        Vec target_act = target_layer->activation;
        for( int i=0 ; i<output_size ; i++ )
        {
            target_act[i] = target_layer->bias[i];
            real *w = &(last_to_target->weights(0,i));
            // step from one row to the next in weights matrix
            int m = last_to_target->weights.mod();

            Vec last_act = last_layer->activation;
            for( int j=0 ; j<last_size ; j++, w+=m )
            {
                // *w = weights(j,i)
                target_act[i] += softplus(*w + last_act[j]);
            }
        }

        target_layer->expectation_is_up_to_date = false;
        target_layer->computeExpectation();
        output << target_layer->expectation;
    }
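
The forward computation can be summarized by a small stand-alone sketch. It uses std::vector rather than PLearn's Vec and Mat, and the function name undirected_softmax and the flat row-major weight layout are assumptions made for illustration. It also assumes that the multinomial target layer's expectation is a plain softmax of its activation, which this page does not state explicitly.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// softplus(x) = log(1 + exp(x)), written to avoid overflow for large |x|.
inline double softplus(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Hypothetical stand-alone version of the forward pass.
// weights is row-major with last_size rows and output_size columns, so
// weights[j * output_size + i] plays the role of weights(j,i).
std::vector<double> undirected_softmax(const std::vector<double>& last_act,
                                       const std::vector<double>& weights,
                                       const std::vector<double>& target_bias)
{
    const std::size_t last_size = last_act.size();
    const std::size_t output_size = target_bias.size();
    assert(output_size > 0);
    assert(weights.size() == last_size * output_size);

    // target_act[i] = bias[i] + sum_j softplus(W_ji + last_act[j])
    std::vector<double> target_act(target_bias);
    for (std::size_t i = 0; i < output_size; ++i)
        for (std::size_t j = 0; j < last_size; ++j)
            target_act[i] += softplus(weights[j * output_size + i] + last_act[j]);

    // Assumed expectation: softmax of the activations, shifted by the
    // maximum so that the exponentials cannot overflow.
    const double max_act = *std::max_element(target_act.begin(), target_act.end());
    double norm = 0.0;
    std::vector<double> prob(output_size);
    for (std::size_t i = 0; i < output_size; ++i)
    {
        prob[i] = std::exp(target_act[i] - max_act);
        norm += prob[i];
    }
    for (std::size_t i = 0; i < output_size; ++i)
        prob[i] /= norm;
    return prob;
}
```

The loop indexes the weight matrix directly instead of stepping a pointer by the row stride as the listing does; both visit the same column i of the matrix, one row j at a time.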

| OptionList & PLearn::RBMClassificationModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RBMClassificationModule.cc.

| OptionMap & PLearn::RBMClassificationModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RBMClassificationModule.cc.

| RemoteMethodMap & PLearn::RBMClassificationModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RBMClassificationModule.cc.

| void PLearn::RBMClassificationModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 168 of file RBMClassificationModule.cc.

References PLearn::deepCopyField().

    {
        inherited::makeDeepCopyFromShallowCopy(copies);

        deepCopyField(previous_to_last, copies);
        deepCopyField(last_layer, copies);
        deepCopyField(last_to_target, copies);
        deepCopyField(target_layer, copies);
        deepCopyField(joint_connection, copies);
        deepCopyField(out_act, copies);
        deepCopyField(d_target_act, copies);
        deepCopyField(d_last_act, copies);
    }
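
Several fields of this class refer to the same objects (joint_connection groups previous_to_last and last_to_target), so a deep copy has to preserve that sharing. The sketch below illustrates the general idea of threading a map of already-made copies through each field copy. It does not reproduce PLearn's actual CopiesMap or deepCopyField; Node, CopiesMapSketch and deep_copy_field are hypothetical names, and std::shared_ptr stands in for PP.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical object that may be referenced from several fields.
struct Node
{
    int value = 0;
};

// Hypothetical stand-in for a copies map: original address -> its copy.
typedef std::map<const void*, std::shared_ptr<void> > CopiesMapSketch;

// Returns the copy of *field, creating it only on the first request, so
// fields that shared one original end up sharing one copy.
std::shared_ptr<Node> deep_copy_field(const std::shared_ptr<Node>& field,
                                      CopiesMapSketch& copies)
{
    if (!field)
        return std::shared_ptr<Node>();

    CopiesMapSketch::iterator it = copies.find(field.get());
    if (it != copies.end())
        return std::static_pointer_cast<Node>(it->second);  // already copied

    std::shared_ptr<Node> copy = std::make_shared<Node>(*field);
    assert(copy.get() != field.get());                      // a distinct object
    copies[field.get()] = copy;
    return copy;
}
```

Copying two fields that point to the same Node through the same map yields a single new Node referenced twice, rather than two unrelated copies.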

StaticInitializer PLearn::RBMClassificationModule::_static_initializer_ [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 151 of file RBMClassificationModule.h.

Vec PLearn::RBMClassificationModule::d_last_act [mutable, private] |

Stores the gradient of the cost at input of last_layer.

Definition at line 184 of file RBMClassificationModule.h.

Vec PLearn::RBMClassificationModule::d_target_act [mutable, private] |

Stores the gradient of the cost at input of target_layer.

Definition at line 181 of file RBMClassificationModule.h.

PP< RBMMixedConnection > PLearn::RBMClassificationModule::joint_connection |

Connection grouping previous_to_last and last_to_target.

Definition at line 80 of file RBMClassificationModule.h.

Referenced by declareOptions().

PP< RBMBinomialLayer > PLearn::RBMClassificationModule::last_layer |

Top-level layer (the one in the middle if we unfold)

Definition at line 69 of file RBMClassificationModule.h.

Referenced by declareOptions().

int PLearn::RBMClassificationModule::last_size |

Size of last_layer.

Definition at line 83 of file RBMClassificationModule.h.

Referenced by declareOptions().

PP< RBMMatrixConnection > PLearn::RBMClassificationModule::last_to_target |

Connection between last_layer and target_layer.

Definition at line 72 of file RBMClassificationModule.h.

Referenced by declareOptions().

Vec PLearn::RBMClassificationModule::out_act [mutable, protected] |

stores output activations

Definition at line 162 of file RBMClassificationModule.h.

PP< RBMConnection > PLearn::RBMClassificationModule::previous_to_last |

Connection between the previous layer, and last_layer.

Definition at line 66 of file RBMClassificationModule.h.

Referenced by declareOptions().

PP< RBMMultinomialLayer > PLearn::RBMClassificationModule::target_layer |

Layer containing the one-hot vector containing the target (or its prediction)

Definition at line 76 of file RBMClassificationModule.h.

Referenced by declareOptions().

1.7.4
