PLearn 0.1

#include <MultiInstanceNNet.h>

Public Member Functions | |

| MultiInstanceNNet () | |

| virtual | ~MultiInstanceNNet () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual MultiInstanceNNet * | deepCopy (CopiesMap &copies) const |

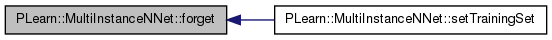

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Declares the training set. | |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | forget () |

| *** SUBCLASS WRITING: *** | |

| virtual int | outputsize () const |

| SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

| virtual TVec< string > | getTrainCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual TVec< string > | getTestCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual void | train () |

| *** SUBCLASS WRITING: *** | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| *** SUBCLASS WRITING: *** | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| *** SUBCLASS WRITING: *** | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Func | f |

| Func | test_costf |

| Func | output_and_target_to_cost |

| int | max_n_instances |

| int | nhidden |

| int | nhidden2 |

| real | weight_decay |

| real | bias_decay |

| real | layer1_weight_decay |

| real | layer1_bias_decay |

| real | layer2_weight_decay |

| real | layer2_bias_decay |

| real | output_layer_weight_decay |

| real | output_layer_bias_decay |

| real | direct_in_to_out_weight_decay |

| real | classification_regularizer |

| string | penalty_type |

| bool | L1_penalty |

| bool | direct_in_to_out |

| real | interval_minval |

| real | interval_maxval |

| int | test_bag_size |

| PP< Optimizer > | optimizer |

| int | batch_size |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | initializeParams () |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| Var | input |

| Var | target |

| Var | sampleweight |

| Var | w1 |

| Var | w2 |

| Var | wout |

| Var | wdirect |

| Var | output |

| Var | bag_size |

| Var | bag_inputs |

| Var | bag_output |

| Func | inputs_and_targets_to_test_costs |

| Func | inputs_and_targets_to_training_costs |

| Func | input_to_logP0 |

| Var | nll |

| VarArray | costs |

| VarArray | penalties |

| Var | training_cost |

| Var | test_costs |

| VarArray | invars |

| VarArray | params |

| Vec | paramsvalues |

| int | optstage_per_lstage |

| bool | training_set_has_changed |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| **** SUBCLASS WRITING: **** | |

Private Attributes | |

| Vec | instance_logP0 |

| Used to store data between calls to computeCostsFromOutput. | |

Definition at line 52 of file MultiInstanceNNet.h.

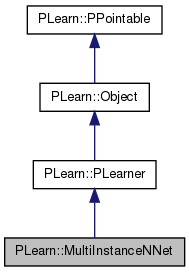

typedef PLearner PLearn::MultiInstanceNNet::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file MultiInstanceNNet.h.

| PLearn::MultiInstanceNNet::MultiInstanceNNet | ( | ) |

Definition at line 102 of file MultiInstanceNNet.cc.

: training_set_has_changed(false), max_n_instances(1), nhidden(0), nhidden2(0), weight_decay(0), bias_decay(0), layer1_weight_decay(0), layer1_bias_decay(0), layer2_weight_decay(0), layer2_bias_decay(0), output_layer_weight_decay(0), output_layer_bias_decay(0), direct_in_to_out_weight_decay(0), penalty_type("L2_square"), L1_penalty(false), direct_in_to_out(false), interval_minval(0), interval_maxval(1), test_bag_size(0), batch_size(1) {}

| PLearn::MultiInstanceNNet::~MultiInstanceNNet | ( | ) | [virtual] |

Definition at line 124 of file MultiInstanceNNet.cc.

{

}

| string PLearn::MultiInstanceNNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 100 of file MultiInstanceNNet.cc.

| OptionList & PLearn::MultiInstanceNNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 100 of file MultiInstanceNNet.cc.

| RemoteMethodMap & PLearn::MultiInstanceNNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 100 of file MultiInstanceNNet.cc.

| bool PLearn::MultiInstanceNNet::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 100 of file MultiInstanceNNet.cc.

| Object * PLearn::MultiInstanceNNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 100 of file MultiInstanceNNet.cc.

| void PLearn::MultiInstanceNNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 100 of file MultiInstanceNNet.cc.

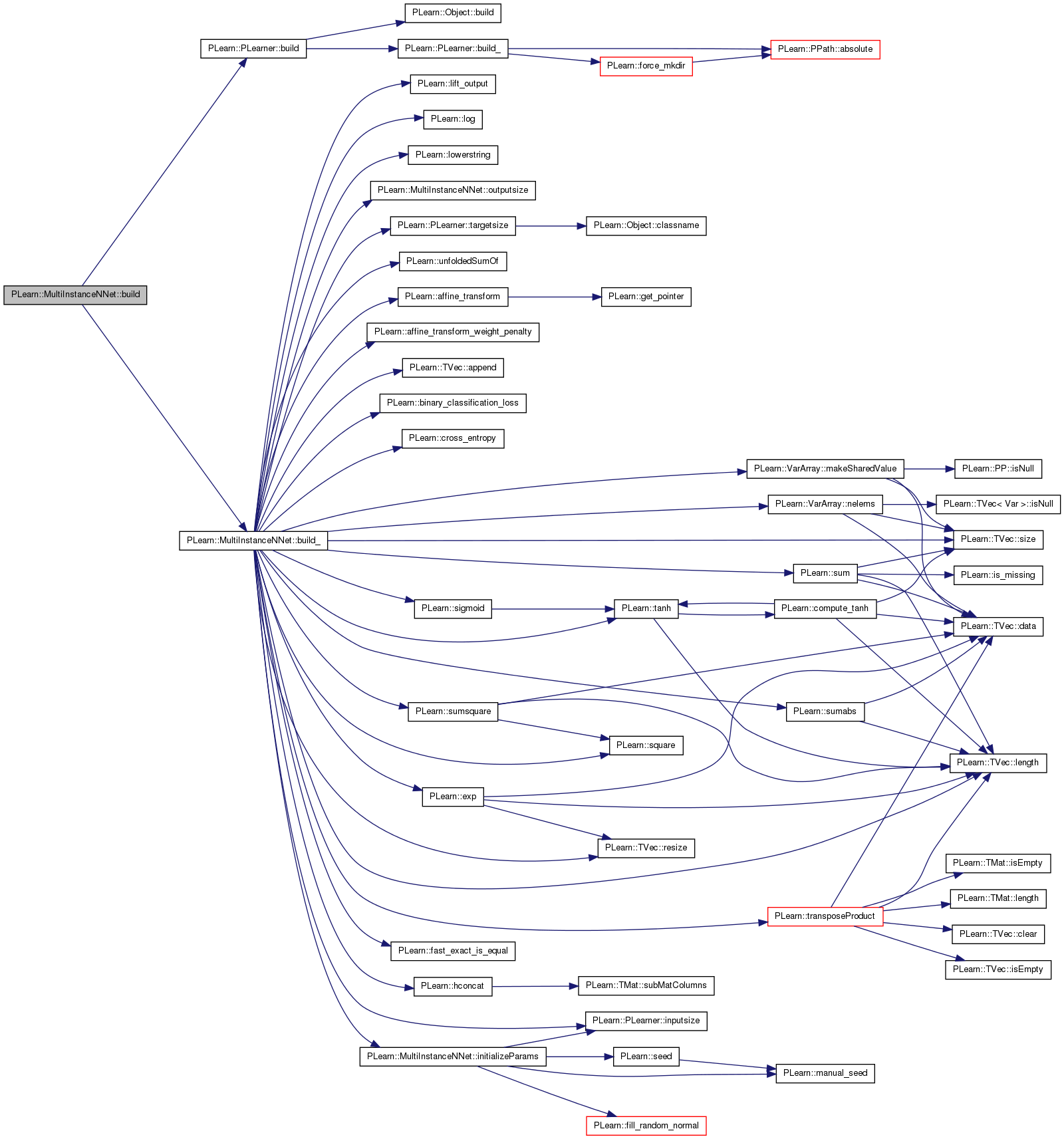

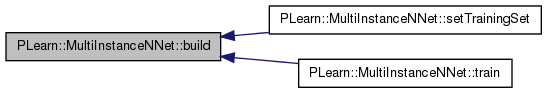

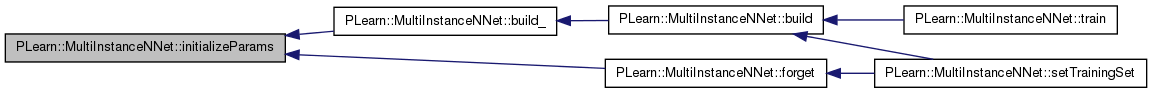

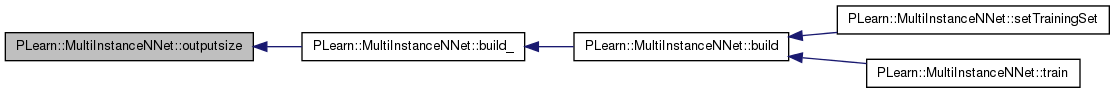

| void PLearn::MultiInstanceNNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 193 of file MultiInstanceNNet.cc.

References PLearn::PLearner::build(), and build_().

Referenced by setTrainingSet(), and train().

{

inherited::build();

build_();

}
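The two-step pattern above (parent build() first, then this class's own build_()) can be sketched in isolation. This is a minimal illustration, not PLearn code: `BaseLearner`, `BagLearner` and the `log` member are hypothetical names introduced here; only the call order is taken from the listing.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for PLearner: build() finishes this level's
// construction by calling its own private, non-virtual build_().
class BaseLearner {
public:
    virtual ~BaseLearner() {}
    virtual void build() { build_(); }
    std::vector<std::string> log;   // records which build_() ran, in order
private:
    void build_() { log.push_back("BaseLearner::build_"); }
};

// Hypothetical stand-in for MultiInstanceNNet.
class BagLearner : public BaseLearner {
    typedef BaseLearner inherited;
public:
    virtual void build() {
        inherited::build();   // the parent completes its part first
        build_();             // then this class adds its own part
    }
private:
    void build_() { log.push_back("BagLearner::build_"); }
};
```

Calling `build()` on a `BagLearner` in this sketch records the parent's `build_()` before the subclass's, which is the guarantee that the build_() documentation relies on.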

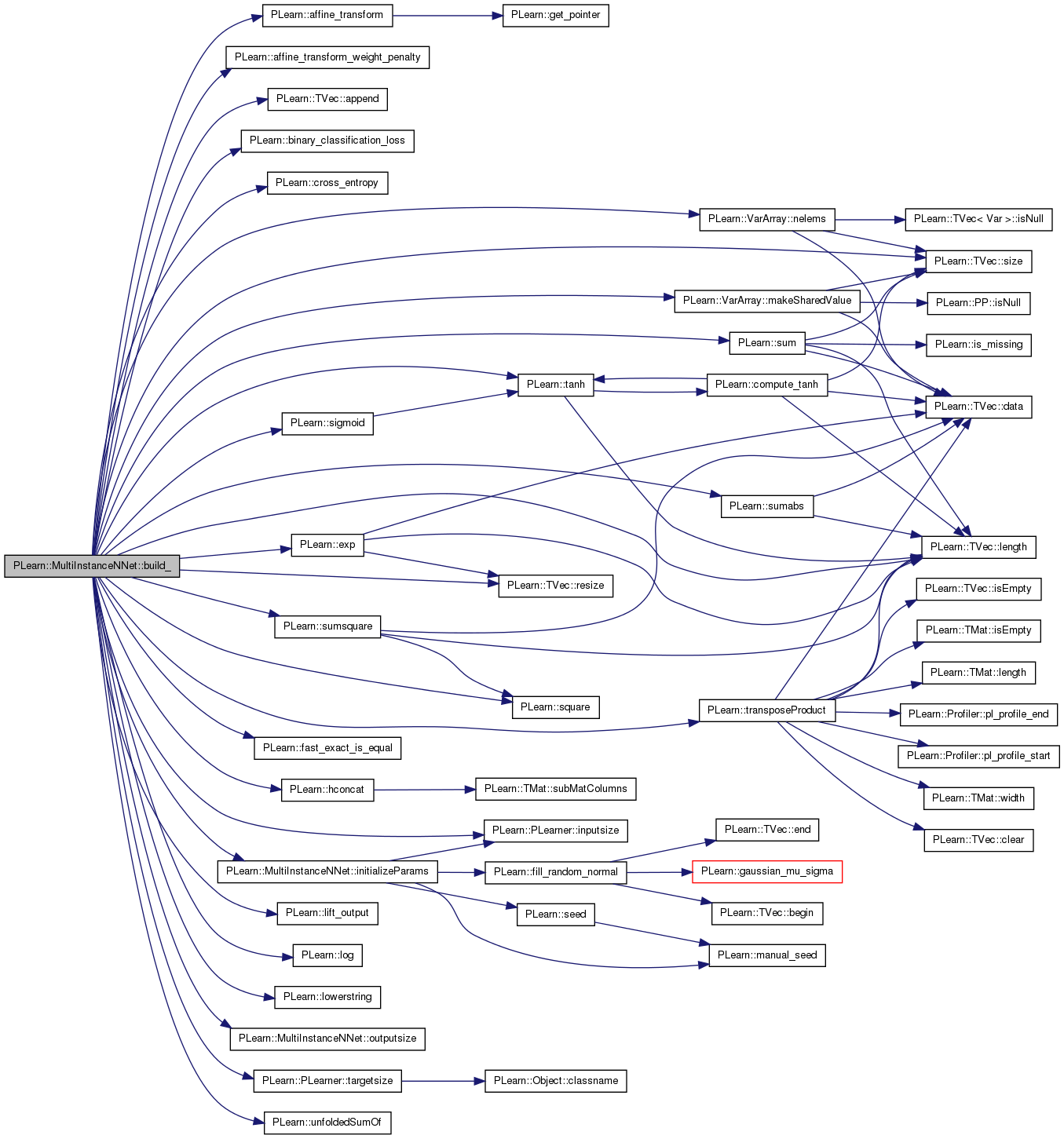

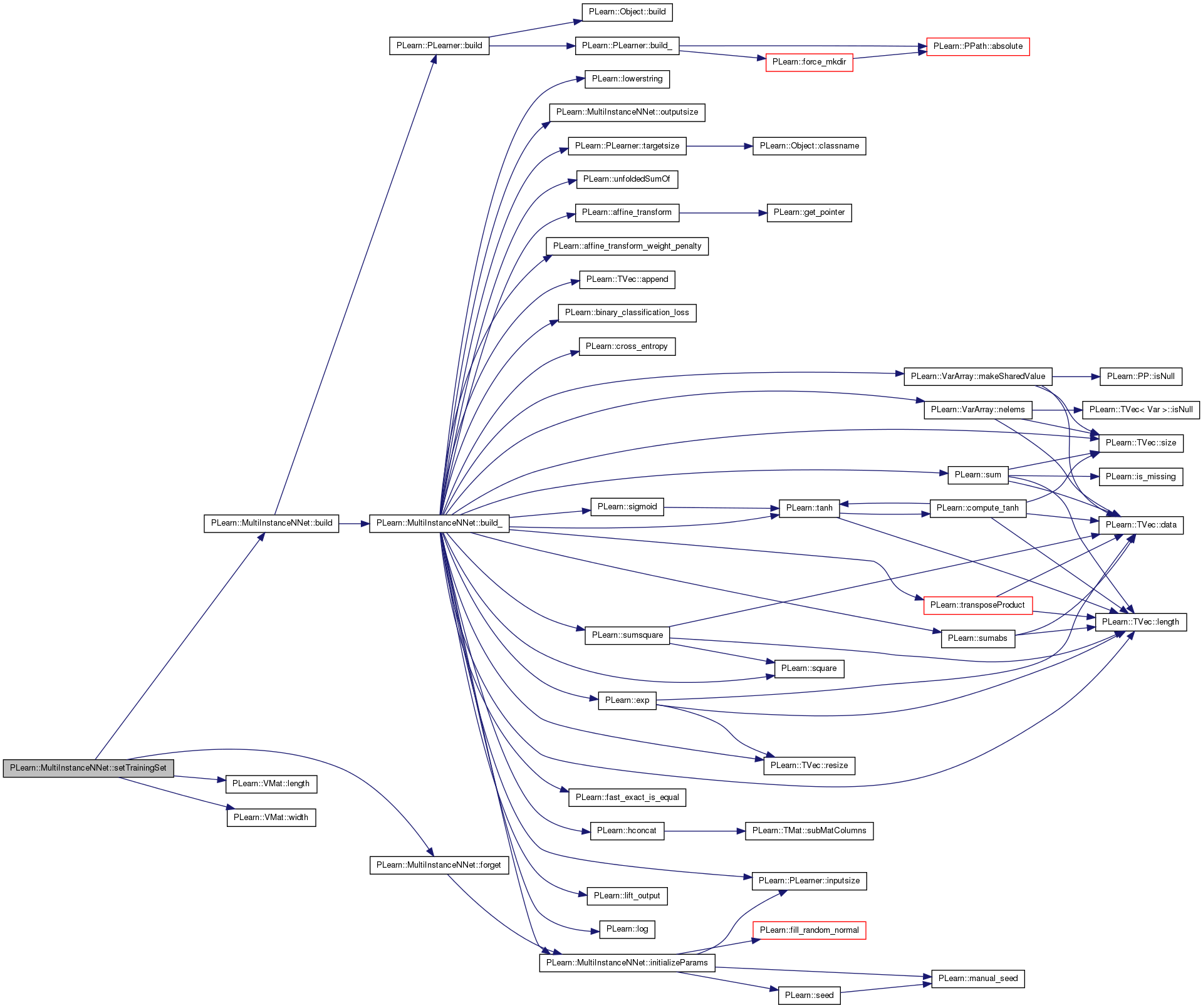

| void PLearn::MultiInstanceNNet::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: ****

This method should finish building the object, according to the set 'options', in *any* situation.

Typical situations include:

You can assume that the parent class' build_() has already been called.

A typical build method will want to know the inputsize(), targetsize() and outputsize(), and may also want to check whether train_set->hasWeights(). All these methods require a train_set to be set, so the first thing you may want to do is check if(train_set) before doing any heavy building.

Note: build() is always called by setTrainingSet.

Reimplemented from PLearn::PLearner.

Definition at line 222 of file MultiInstanceNNet.cc.

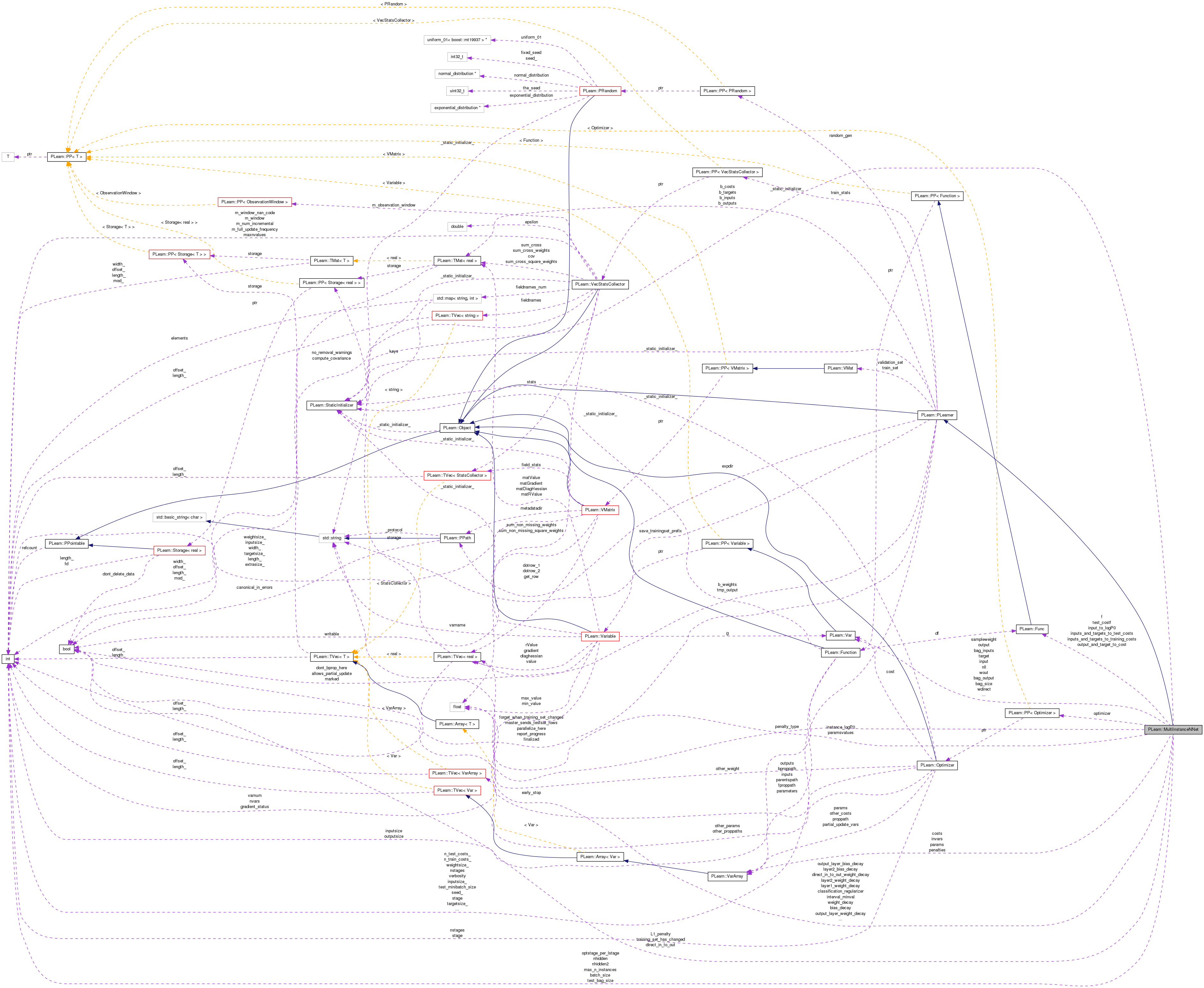

References PLearn::affine_transform(), PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), bag_inputs, bag_output, bag_size, bias_decay, PLearn::binary_classification_loss(), costs, PLearn::cross_entropy(), direct_in_to_out, direct_in_to_out_weight_decay, PLearn::exp(), f, PLearn::fast_exact_is_equal(), PLearn::hconcat(), initializeParams(), input, input_to_logP0, inputs_and_targets_to_test_costs, inputs_and_targets_to_training_costs, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, invars, L1_penalty, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::TVec< T >::length(), PLearn::lift_output(), PLearn::log(), PLearn::lowerstring(), PLearn::VarArray::makeSharedValue(), max_n_instances, PLearn::VarArray::nelems(), nhidden, nhidden2, output, output_layer_bias_decay, output_layer_weight_decay, outputsize(), params, paramsvalues, penalties, penalty_type, PLDEPRECATED, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), sampleweight, PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::square(), PLearn::sum(), PLearn::sumabs(), PLearn::sumsquare(), PLearn::tanh(), target, PLearn::PLearner::targetsize(), PLearn::PLearner::targetsize_, test_costs, training_cost, PLearn::transposeProduct(), PLearn::unfoldedSumOf(), w1, w2, wdirect, weight_decay, PLearn::PLearner::weightsize_, and wout.

Referenced by build().

{

/*

* Create Topology Var Graph

*/

// Don't do anything if we don't have a train_set

// It's the only one who knows the inputsize and targetsize anyway...

if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)

{

// init. basic vars

input = Var(inputsize(), "input");

output = input;

params.resize(0);

if (targetsize()!=2)

PLERROR("MultiInstanceNNet:: expected the data to have 2 target columns, got %d",

targetsize());

// first hidden layer

if(nhidden>0)

{

w1 = Var(1+inputsize(), nhidden, "w1");

output = tanh(affine_transform(output,w1));

params.append(w1);

}

// second hidden layer

if(nhidden2>0)

{

w2 = Var(1+nhidden, nhidden2, "w2");

output = tanh(affine_transform(output,w2));

params.append(w2);

}

if (nhidden2>0 && nhidden==0)

PLERROR("MultiInstanceNNet:: can't have nhidden2 (=%d) > 0 while nhidden=0",nhidden2);

// output layer before transfer function

wout = Var(1+output->size(), outputsize(), "wout");

output = affine_transform(output,wout);

params.append(wout);

// direct in-to-out layer

if(direct_in_to_out)

{

wdirect = Var(inputsize(), outputsize(), "wdirect");// Var(1+inputsize(), outputsize(), "wdirect");

output += transposeProduct(wdirect, input);// affine_transform(input,wdirect);

params.append(wdirect);

}

// the output transfer function is FIXED: it must be a sigmoid (0/1 probabilistic classification)

output = sigmoid(output);

/*

* target and weights

*/

target = Var(1, "target");

if(weightsize_>0)

{

if (weightsize_!=1)

PLERROR("MultiInstanceNNet: expected weightsize to be 1 or 0 (or unspecified = -1, meaning 0), got %d",weightsize_);

sampleweight = Var(1, "weight");

}

// build costs

if( L1_penalty )

{

PLDEPRECATED("Option \"L1_penalty\" deprecated. Please use \"penalty_type = L1\" instead.");

L1_penalty = 0;

penalty_type = "L1";

}

string pt = lowerstring( penalty_type );

if( pt == "l1" )

penalty_type = "L1";

else if( pt == "l1_square" || pt == "l1 square" || pt == "l1square" )

penalty_type = "L1_square";

else if( pt == "l2_square" || pt == "l2 square" || pt == "l2square" )

penalty_type = "L2_square";

else if( pt == "l2" )

{

PLWARNING("L2 penalty not supported, assuming you want L2 square"); penalty_type = "L2_square";

}

else

PLERROR("penalty_type \"%s\" not supported", penalty_type.c_str());

// create penalties

penalties.resize(0); // prevents penalties from being added twice by consecutive builds

if(w1 && (!fast_exact_is_equal(layer1_weight_decay + weight_decay,0) ||

!fast_exact_is_equal(layer1_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(w1, (layer1_weight_decay + weight_decay), (layer1_bias_decay + bias_decay), penalty_type));

if(w2 && (!fast_exact_is_equal(layer2_weight_decay + weight_decay,0) ||

!fast_exact_is_equal(layer2_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(w2, (layer2_weight_decay + weight_decay), (layer2_bias_decay + bias_decay), penalty_type));

if(wout && (!fast_exact_is_equal(output_layer_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(output_layer_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(wout, (output_layer_weight_decay + weight_decay),

(output_layer_bias_decay + bias_decay), penalty_type));

if(wdirect && !fast_exact_is_equal(direct_in_to_out_weight_decay + weight_decay, 0))

{

if (penalty_type=="L1_square")

penalties.append(square(sumabs(wdirect))*(direct_in_to_out_weight_decay + weight_decay));

else if (penalty_type=="L1")

penalties.append(sumabs(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

else if (penalty_type=="L2_square")

penalties.append(sumsquare(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

}

// Shared values hack...

if(paramsvalues.length() == params.nelems())

params << paramsvalues;

else

{

paramsvalues.resize(params.nelems());

initializeParams();

}

params.makeSharedValue(paramsvalues);

output->setName("element output");

f = Func(input, output);

input_to_logP0 = Func(input, log(1 - output));

bag_size = Var(1,1);

bag_inputs = Var(max_n_instances,inputsize());

bag_output = 1-exp(unfoldedSumOf(bag_inputs,bag_size,input_to_logP0,max_n_instances));

costs.resize(3); // (negative log-likelihood, classification error, lift output) for the bag

costs[0] = cross_entropy(bag_output, target);

costs[1] = binary_classification_loss(bag_output,target);

costs[2] = lift_output(bag_output, target);

test_costs = hconcat(costs);

// Apply penalty to cost.

// If there is no penalty, we still add costs[0] as the first cost, in

// order to keep the same number of costs as if there was a penalty.

if(penalties.size() != 0) {

if (weightsize_>0)

// only multiply by sampleweight if there are weights

training_cost = hconcat(sampleweight*sum(hconcat(costs[0] & penalties)) // don't weight the lift output

& (costs[0]*sampleweight) & (costs[1]*sampleweight) & costs[2]);

else {

training_cost = hconcat(sum(hconcat(costs[0] & penalties)) & test_costs);

}

}

else {

if(weightsize_>0) {

// only multiply by sampleweight if there are weights (but don't weight the lift output)

training_cost = hconcat(costs[0]*sampleweight & costs[0]*sampleweight & costs[1]*sampleweight & costs[2]);

} else {

training_cost = hconcat(costs[0] & test_costs);

}

}

training_cost->setName("training_cost");

test_costs->setName("test_costs");

if (weightsize_>0)

invars = bag_inputs & bag_size & target & sampleweight;

else

invars = bag_inputs & bag_size & target;

inputs_and_targets_to_test_costs = Func(invars,test_costs);

inputs_and_targets_to_training_costs = Func(invars,training_cost);

inputs_and_targets_to_test_costs->recomputeParents();

inputs_and_targets_to_training_costs->recomputeParents();

// AT SOME POINT target NO LONGER POINTS TO THE SAME PLACE!!!

}

}
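The bag output built above combines the per-instance sigmoid outputs as `1 - exp(sum of log(1 - p_i))`, that is, one minus the product of the per-instance probabilities of class 0. The following standalone sketch computes the same quantity on plain vectors. The function name `bagProbability` is an assumption made for illustration and does not exist in PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Probability that a bag is positive given per-instance probabilities p_i,
// mirroring bag_output = 1 - exp(sum_i log(1 - p_i)) from build_().
// Assumes every p_i lies in [0, 1); p_i == 1 would require log(0).
double bagProbability(const std::vector<double>& instance_probs)
{
    double sum_log_p0 = 0.0;
    for (std::size_t i = 0; i < instance_probs.size(); ++i) {
        assert(instance_probs[i] >= 0.0 && instance_probs[i] < 1.0);
        sum_log_p0 += std::log(1.0 - instance_probs[i]);
    }
    return 1.0 - std::exp(sum_log_p0);
}
```

For example, two instances that each have probability 0.5 give a bag probability of 0.75, since the bag is negative only when both instances are negative.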

| string PLearn::MultiInstanceNNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 100 of file MultiInstanceNNet.cc.

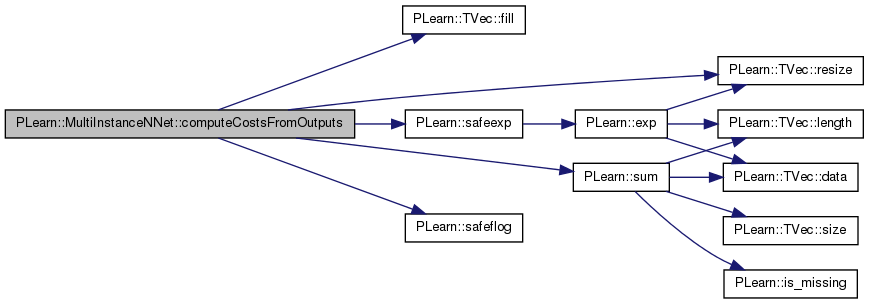

| void PLearn::MultiInstanceNNet::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some info in the input, which is why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 548 of file MultiInstanceNNet.cc.

References PLearn::TVec< T >::fill(), instance_logP0, max_n_instances, MISSING_VALUE, nll, PLERROR, PLearn::TVec< T >::resize(), PLearn::safeexp(), PLearn::safeflog(), PLearn::sum(), and test_bag_size.

{

instance_logP0.resize(max_n_instances);

int bag_signal = int(targetv[1]);

if (bag_signal & 1) // first instance, start counting

test_bag_size=0;

instance_logP0[test_bag_size++] = safeflog(1-outputv[0]);

if (!(bag_signal & 2)) // not reached the last instance

costsv.fill(MISSING_VALUE);

else // end of bag, we have a target and we can compute a cost

{

instance_logP0.resize(test_bag_size);

real bag_P0 = safeexp(sum(instance_logP0));

int classe = int(targetv[0]);

int predicted_classe = (bag_P0>0.5)?0:1;

real nll = (classe==0)?-safeflog(bag_P0):-safeflog(1-bag_P0);

int classification_error = (classe != predicted_classe);

costsv[0] = nll;

costsv[1] = classification_error;

// Add the lift output.

// Probably not working: it looks like it only takes into account the

// output for the last instance in the bag.

PLERROR("In MultiInstanceNNet::computeCostsFromOutputs - Probably "

"bugged, please check code");

if (targetv[0] > 0) {

costsv[2] = outputv[0];

} else {

costsv[2] = -outputv[0];

}

}

}
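The listing above reads the second target column as a bit field: bit 1 marks the first instance of a bag and bit 2 marks the last, and costs are only produced once the bag is complete. A minimal sketch of that accumulation follows. `BagCostAccumulator` and `addInstance` are hypothetical names, the clamp with `eps` is an assumption standing in for `safeflog`, and the lift output is left out because the original code itself flags it as probably bugged.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of PLearn.
struct BagCostAccumulator {
    std::vector<double> instance_logP0;   // log P(class 0) per instance

    // Returns true and fills nll / class_error once the bag is complete;
    // returns false (costs undefined) for all earlier instances.
    bool addInstance(double instance_output, int true_class, int bag_signal,
                     double& nll, int& class_error)
    {
        const double eps = 1e-12;          // assumed guard against log(0)
        if (bag_signal & 1)                // first instance: start a new bag
            instance_logP0.clear();
        instance_logP0.push_back(std::log(std::max(1.0 - instance_output, eps)));
        if (!(bag_signal & 2))             // not yet the last instance
            return false;

        double sum = 0.0;
        for (std::size_t i = 0; i < instance_logP0.size(); ++i)
            sum += instance_logP0[i];
        const double bag_P0 = std::exp(sum);           // P(bag is negative)
        const int predicted = (bag_P0 > 0.5) ? 0 : 1;
        nll = (true_class == 0) ? -std::log(std::max(bag_P0, eps))
                                : -std::log(std::max(1.0 - bag_P0, eps));
        class_error = (true_class != predicted) ? 1 : 0;
        return true;
    }
};
```

A bag with a single instance carries both flags at once, so its tag value is 3.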

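| void PLearn::MultiInstanceNNet::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***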

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 514 of file MultiInstanceNNet.cc.

References f.

{

f->fprop(inputv,outputv);

}

| void PLearn::MultiInstanceNNet::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 522 of file MultiInstanceNNet.cc.

References bag_inputs, bag_size, f, PLearn::TVec< T >::fill(), inputs_and_targets_to_test_costs, MISSING_VALUE, sampleweight, target, test_bag_size, and PLearn::PLearner::weightsize_.

{

f->fprop(inputv,outputv); // this is the individual P(y_i|x_i), MAYBE UNNECESSARY CALCULATION

// since the outputs will be re-computed when doing the fprop below at the end of the bag

// (but if we want to provide them after each call...). The solution would

// be to do like in computeCostsFromOutputs, keeping track of the outputs.

int bag_signal = int(targetv[1]);

if (bag_signal & 1) // first instance, start counting

test_bag_size=0;

bag_inputs->matValue(test_bag_size++) << inputv;

if (!(bag_signal & 2)) // not reached the last instance

costsv.fill(MISSING_VALUE);

else // end of bag, we have a target and we can compute a cost

{

bag_size->valuedata[0]=test_bag_size;

target->valuedata[0] = targetv[0];

if (weightsize_>0) sampleweight->valuedata[0]=1; // the test weights are known and used higher up

inputs_and_targets_to_test_costs->fproppath.fprop();

inputs_and_targets_to_test_costs->outputs.copyTo(costsv);

}

}

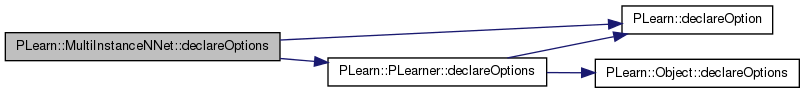

| void PLearn::MultiInstanceNNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 128 of file MultiInstanceNNet.cc.

References batch_size, bias_decay, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), direct_in_to_out, direct_in_to_out_weight_decay, L1_penalty, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::OptionBase::learntoption, max_n_instances, nhidden, nhidden2, optimizer, output_layer_bias_decay, output_layer_weight_decay, paramsvalues, penalty_type, and weight_decay.

{

declareOption(ol, "max_n_instances", &MultiInstanceNNet::max_n_instances, OptionBase::buildoption,

" maximum number of instances (input vectors x_i) allowed\n");

declareOption(ol, "nhidden", &MultiInstanceNNet::nhidden, OptionBase::buildoption,

" number of hidden units in first hidden layer (0 means no hidden layer)\n");

declareOption(ol, "nhidden2", &MultiInstanceNNet::nhidden2, OptionBase::buildoption,

" number of hidden units in second hidden layer (0 means no hidden layer)\n");

declareOption(ol, "weight_decay", &MultiInstanceNNet::weight_decay, OptionBase::buildoption,

" global weight decay for all layers\n");

declareOption(ol, "bias_decay", &MultiInstanceNNet::bias_decay, OptionBase::buildoption,

" global bias decay for all layers\n");

declareOption(ol, "layer1_weight_decay", &MultiInstanceNNet::layer1_weight_decay, OptionBase::buildoption,

" Additional weight decay for the first hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer1_bias_decay", &MultiInstanceNNet::layer1_bias_decay, OptionBase::buildoption,

" Additional bias decay for the first hidden layer. Is added to bias_decay.\n");

declareOption(ol, "layer2_weight_decay", &MultiInstanceNNet::layer2_weight_decay, OptionBase::buildoption,

" Additional weight decay for the second hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer2_bias_decay", &MultiInstanceNNet::layer2_bias_decay, OptionBase::buildoption,

" Additional bias decay for the second hidden layer. Is added to bias_decay.\n");

declareOption(ol, "output_layer_weight_decay", &MultiInstanceNNet::output_layer_weight_decay, OptionBase::buildoption,

" Additional weight decay for the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_bias_decay", &MultiInstanceNNet::output_layer_bias_decay, OptionBase::buildoption,

" Additional bias decay for the output layer. Is added to 'bias_decay'.\n");

declareOption(ol, "direct_in_to_out_weight_decay", &MultiInstanceNNet::direct_in_to_out_weight_decay, OptionBase::buildoption,

" Additional weight decay for the direct in-to-out layer. Is added to 'weight_decay'.\n");

declareOption(ol, "penalty_type", &MultiInstanceNNet::penalty_type, OptionBase::buildoption,

" Penalty to use on the weights (for weight and bias decay).\n"

" Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L1_square\": square of the L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");

declareOption(ol, "L1_penalty", &MultiInstanceNNet::L1_penalty, OptionBase::buildoption,

" Deprecated - You should use \"penalty_type\" instead\n"

" should we use L1 penalty instead of the default L2 penalty on the weights?\n");

declareOption(ol, "direct_in_to_out", &MultiInstanceNNet::direct_in_to_out, OptionBase::buildoption,

" should we include direct input to output connections?\n");

declareOption(ol, "optimizer", &MultiInstanceNNet::optimizer, OptionBase::buildoption,

" specify the optimizer to use\n");

declareOption(ol, "batch_size", &MultiInstanceNNet::batch_size, OptionBase::buildoption,

" how many samples to use to estimate the average gradient before updating the weights\n"

" 0 is equivalent to specifying training_set->n_non_missing_rows() \n");

declareOption(ol, "paramsvalues", &MultiInstanceNNet::paramsvalues, OptionBase::learntoption,

" The learned parameter vector\n");

inherited::declareOptions(ol);

}
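The three `penalty_type` values declared above differ only in how the weights are aggregated before being scaled by the decay coefficient. The sketch below follows the formulas that build_() applies to `wdirect` (`sumabs`, `square(sumabs)` and `sumsquare`, each multiplied by the summed decay). `weightPenalty` is a hypothetical helper working on a plain vector, not a PLearn function.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// Penalty value for one weight vector. `decay` plays the role of the layer
// decay plus the global weight_decay. Only the three canonical penalty_type
// strings are accepted here.
double weightPenalty(const std::vector<double>& w, double decay,
                     const std::string& penalty_type)
{
    double sum_abs = 0.0;
    double sum_sq = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) {
        sum_abs += std::fabs(w[i]);
        sum_sq += w[i] * w[i];
    }
    if (penalty_type == "L1")
        return decay * sum_abs;             // L1 norm
    if (penalty_type == "L1_square")
        return decay * sum_abs * sum_abs;   // square of the L1 norm
    assert(penalty_type == "L2_square");
    return decay * sum_sq;                  // square of the L2 norm
}
```

With weights (1, -2) and a decay of 0.5, the three penalties are 1.5, 4.5 and 2.5 respectively.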

| static const PPath& PLearn::MultiInstanceNNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 140 of file MultiInstanceNNet.h.

:

static void declareOptions(OptionList& ol);

| MultiInstanceNNet * PLearn::MultiInstanceNNet::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 100 of file MultiInstanceNNet.cc.

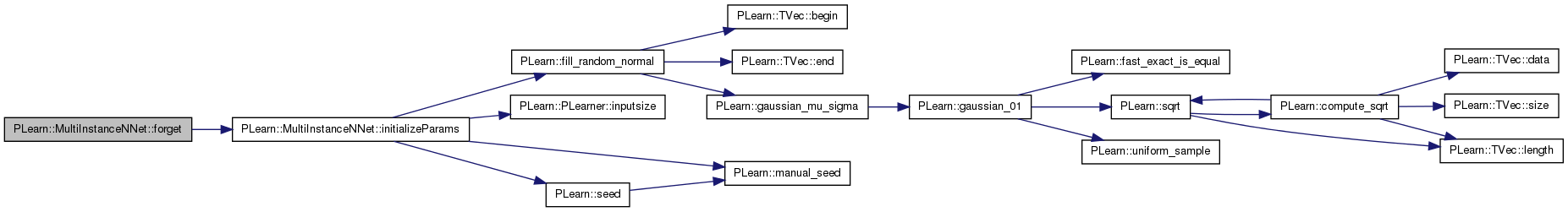

| void PLearn::MultiInstanceNNet::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!)

A typical forget() method should do the following:

This method is typically called by the build_() method, after it has finished setting up the parameters, and if it deemed useful to set or reset the learner in its fresh state. (remember build may be called after modifying options that do not necessarily require the learner to restart from a fresh state...) forget is also called by the setTrainingSet method, after calling build(), so it will generally be called TWICE during setTrainingSet!

Reimplemented from PLearn::PLearner.

Definition at line 632 of file MultiInstanceNNet.cc.

References initializeParams(), PLearn::PLearner::stage, and PLearn::PLearner::train_set.

Referenced by setTrainingSet().

{

if (train_set) initializeParams();

stage = 0;

}

| OptionList & PLearn::MultiInstanceNNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 100 of file MultiInstanceNNet.cc.

| OptionMap & PLearn::MultiInstanceNNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 100 of file MultiInstanceNNet.cc.

| RemoteMethodMap & PLearn::MultiInstanceNNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 100 of file MultiInstanceNNet.cc.

| TVec< string > PLearn::MultiInstanceNNet::getTestCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the costs computed by computeCostsFromOutputs.

Implements PLearn::PLearner.

Definition at line 416 of file MultiInstanceNNet.cc.

{

TVec<string> names(3);

names[0] = "NLL";

names[1] = "class_error";

names[2] = "lift_output";

return names;

}

| TVec< string > PLearn::MultiInstanceNNet::getTrainCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 406 of file MultiInstanceNNet.cc.

{

TVec<string> names(4);

names[0] = "NLL+penalty";

names[1] = "NLL";

names[2] = "class_error";

names[3] = "lift_output";

return names;

}

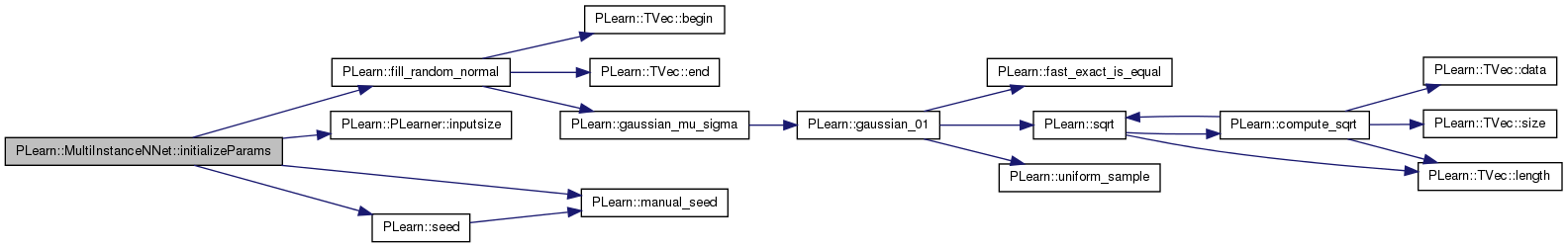

| void PLearn::MultiInstanceNNet::initializeParams | ( | ) | [protected] |

Definition at line 584 of file MultiInstanceNNet.cc.

References direct_in_to_out, PLearn::fill_random_normal(), PLearn::PLearner::inputsize(), PLearn::manual_seed(), nhidden, nhidden2, optimizer, PLearn::seed(), PLearn::PLearner::seed_, w1, w2, wdirect, and wout.

Referenced by build_(), and forget().

{

if (seed_>=0)

manual_seed(seed_);

else

PLearn::seed();

//real delta = 1./sqrt(inputsize());

real delta = 1./inputsize();

/*

if(direct_in_to_out)

{

//fill_random_uniform(wdirect->value, -delta, +delta);

fill_random_normal(wdirect->value, 0, delta);

//wdirect->matValue(0).clear();

}

*/

if(nhidden>0)

{

//fill_random_uniform(w1->value, -delta, +delta);

//delta = 1./sqrt(nhidden);

fill_random_normal(w1->value, 0, delta);

if(direct_in_to_out)

{

//fill_random_uniform(wdirect->value, -delta, +delta);

fill_random_normal(wdirect->value, 0, 0.01*delta);

wdirect->matValue(0).clear();

}

delta = 1./nhidden;

w1->matValue(0).clear();

}

if(nhidden2>0)

{

//fill_random_uniform(w2->value, -delta, +delta);

//delta = 1./sqrt(nhidden2);

fill_random_normal(w2->value, 0, delta);

delta = 1./nhidden2;

w2->matValue(0).clear();

}

//fill_random_uniform(wout->value, -delta, +delta);

fill_random_normal(wout->value, 0, delta);

wout->matValue(0).clear();

// Reset optimizer

if(optimizer)

optimizer->reset();

}
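The initialization above draws each layer's weights from a zero-mean normal distribution whose scale is the reciprocal of the layer's fan-in, then clears row 0 of the matrix. The standalone sketch below reproduces that scheme for a single layer using the standard `<random>` header. `initLayer` is a hypothetical name, and two points are assumptions: that the third argument of `fill_random_normal` is a standard deviation, and that row 0 holds the biases.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Builds a (1 + fan_in) x n_out weight matrix, row 0 being the bias row,
// with the same shape convention as w1, w2 and wout in the listing.
std::vector<std::vector<double> > initLayer(std::size_t fan_in,
                                            std::size_t n_out,
                                            unsigned seed)
{
    assert(fan_in > 0);
    std::mt19937 rng(seed);
    std::normal_distribution<double> gauss(0.0, 1.0 / static_cast<double>(fan_in));

    std::vector<std::vector<double> > w(1 + fan_in, std::vector<double>(n_out));
    for (std::size_t r = 0; r < w.size(); ++r)
        for (std::size_t c = 0; c < n_out; ++c)
            w[r][c] = gauss(rng);

    // Zero the bias row, as w1->matValue(0).clear() does in the listing.
    for (std::size_t c = 0; c < n_out; ++c)
        w[0][c] = 0.0;
    return w;
}
```

Using a fixed seed makes the result reproducible, which corresponds to the `seed_ >= 0` branch at the top of initializeParams().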

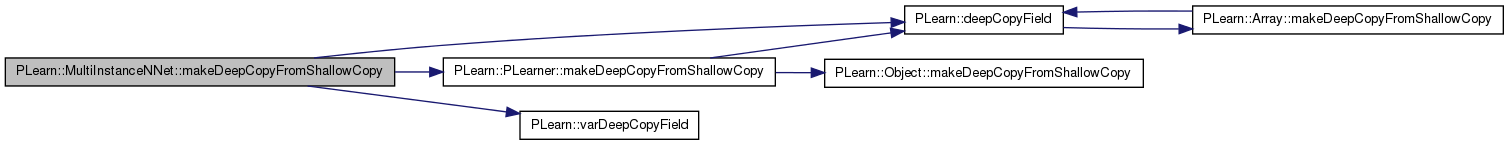

| void PLearn::MultiInstanceNNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 647 of file MultiInstanceNNet.cc.

References bag_inputs, bag_output, bag_size, costs, PLearn::deepCopyField(), f, input, input_to_logP0, inputs_and_targets_to_test_costs, inputs_and_targets_to_training_costs, instance_logP0, invars, PLearn::PLearner::makeDeepCopyFromShallowCopy(), nll, optimizer, output, output_and_target_to_cost, params, paramsvalues, penalties, sampleweight, target, test_costf, test_costs, training_cost, PLearn::varDeepCopyField(), w1, w2, wdirect, and wout.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(instance_logP0, copies);

varDeepCopyField(input, copies);

varDeepCopyField(target, copies);

varDeepCopyField(sampleweight, copies);

varDeepCopyField(w1, copies);

varDeepCopyField(w2, copies);

varDeepCopyField(wout, copies);

varDeepCopyField(wdirect, copies);

varDeepCopyField(output, copies);

varDeepCopyField(bag_size, copies);

varDeepCopyField(bag_inputs, copies);

varDeepCopyField(bag_output, copies);

deepCopyField(inputs_and_targets_to_test_costs, copies);

deepCopyField(inputs_and_targets_to_training_costs, copies);

deepCopyField(input_to_logP0, copies);

varDeepCopyField(nll, copies);

deepCopyField(costs, copies);

deepCopyField(penalties, copies);

varDeepCopyField(training_cost, copies);

varDeepCopyField(test_costs, copies);

deepCopyField(invars, copies);

deepCopyField(params, copies);

deepCopyField(paramsvalues, copies);

deepCopyField(f, copies);

deepCopyField(test_costf, copies);

deepCopyField(output_and_target_to_cost, copies);

deepCopyField(optimizer, copies);

}

| int PLearn::MultiInstanceNNet::outputsize | ( | ) | const [virtual] |

SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options.

Implements PLearn::PLearner.

Definition at line 403 of file MultiInstanceNNet.cc.

Referenced by build_().

{ return 1; }

| void PLearn::MultiInstanceNNet::setTrainingSet | ( | VMat | training_set, |

| bool | call_forget = true | ||

| ) | [virtual] |

Declares the training set.

Then calls build() and forget() if necessary. Also sets this learner's inputsize_, targetsize_ and weightsize_ from those of the training_set. Note: You shouldn't have to override this in subclasses, except perhaps to forward the call to an underlying learner.

Reimplemented from PLearn::PLearner.

Definition at line 199 of file MultiInstanceNNet.cc.

References build(), forget(), PLearn::PLearner::inputsize_, PLearn::VMat::length(), PLearn::PLearner::targetsize_, PLearn::PLearner::train_set, training_set_has_changed, PLearn::PLearner::weightsize_, and PLearn::VMat::width().

{

training_set_has_changed =

!train_set || train_set->width()!=training_set->width() ||

train_set->length()!=training_set->length() || train_set->inputsize()!=training_set->inputsize()

|| train_set->weightsize()!= training_set->weightsize();

train_set = training_set;

if (training_set_has_changed)

{

inputsize_ = train_set->inputsize();

targetsize_ = train_set->targetsize();

weightsize_ = train_set->weightsize();

}

if (training_set_has_changed || call_forget)

{

build(); // CHANGE MADE BY YOSHUA: otherwise, after a setTrainingSet, the build is not completed in a MultiInstanceNNet train_set = training_set;

if (call_forget) forget();

}

}
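The change test above compares only four shape properties of the old and new training sets, then rebuilds when either the shape changed or a reset was requested. A minimal sketch of that decision logic follows. `DatasetShape`, `trainingSetHasChanged` and `mustRebuild` are hypothetical names standing in for the VMat accessors and the inline conditions of the listing.

```cpp
// Hypothetical summary of the four VMat properties compared by
// setTrainingSet().
struct DatasetShape {
    int width;
    int length;
    int inputsize;
    int weightsize;
};

// has_current is false when no training set has been assigned yet.
bool trainingSetHasChanged(bool has_current,
                           const DatasetShape& current,
                           const DatasetShape& incoming)
{
    return !has_current
        || current.width != incoming.width
        || current.length != incoming.length
        || current.inputsize != incoming.inputsize
        || current.weightsize != incoming.weightsize;
}

// build() runs when the shape changed or when a reset was requested.
bool mustRebuild(bool has_changed, bool call_forget)
{
    return has_changed || call_forget;
}
```

One consequence of this test is that a new data set with identical dimensions but different contents is not reported as changed, so with `call_forget` set to false no rebuild is triggered.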

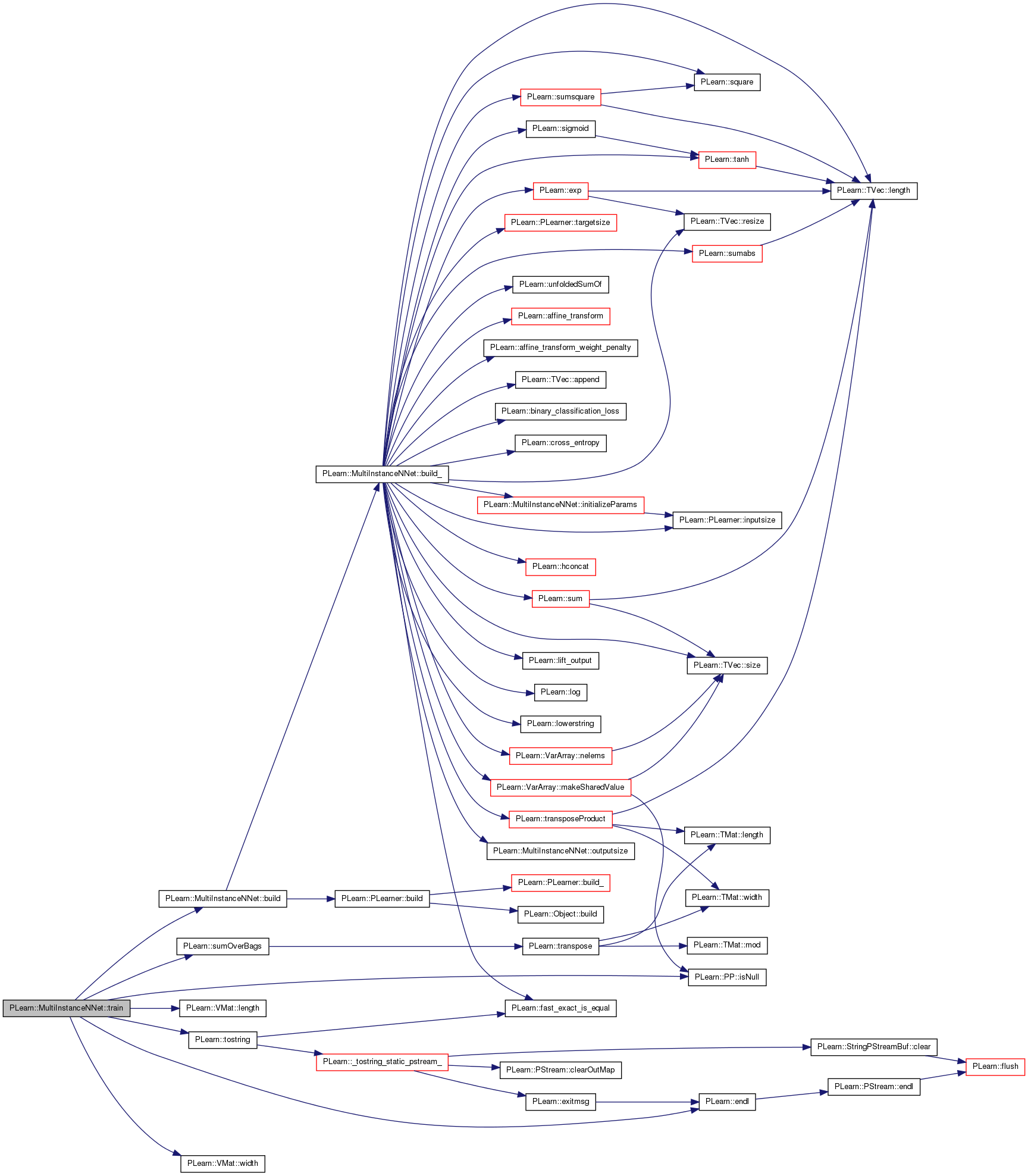

| void PLearn::MultiInstanceNNet::train | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process.

TYPICAL CODE:

static Vec input; // static so we don't reallocate/deallocate memory each time...

static Vec target; // (but be careful that static means shared!)

input.resize(inputsize()); // the train_set's inputsize()

target.resize(targetsize()); // the train_set's targetsize()

real weight;

if(!train_stats) // make a default stats collector, in case there's none

train_stats = new VecStatsCollector();

if(nstages<stage) // asking to revert to a previous stage!

forget(); // reset the learner to stage=0

while(stage<nstages)

{

// clear statistics of previous epoch

train_stats->forget();

//... train for 1 stage, and update train_stats,

// using train_set->getSample(input, target, weight);

// and train_stats->update(train_costs)

++stage;

train_stats->finalize(); // finalize statistics for this epoch

}
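The stage bookkeeping in the typical code above can be exercised on its own: train() brings `stage` up to `nstages`, does nothing if it is already there, and restarts from a fresh state if `nstages` is lower than the current stage. The toy class below is a sketch under those assumptions. `ToyLearner` and `epochs_run` are hypothetical, and the actual training pass is replaced by a counter.

```cpp
// Hypothetical toy learner; only the stage / nstages logic follows the
// typical code described above.
struct ToyLearner {
    int stage;        // number of epochs the current model has seen
    int nstages;      // requested number of epochs
    int epochs_run;   // total epochs executed since construction

    ToyLearner() : stage(0), nstages(0), epochs_run(0) {}

    // Resets the learner to its fresh state.
    void forget() { stage = 0; }

    void train()
    {
        if (nstages < stage)   // asked to revert to an earlier stage
            forget();
        while (stage < nstages) {
            // ... one pass over the training set would go here ...
            ++epochs_run;
            ++stage;
        }
    }
};
```

Raising `nstages` from 3 to 5 and calling `train()` again runs only the two missing epochs, whereas lowering it to 2 forces a restart and two fresh epochs.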

Implements PLearn::PLearner.

Definition at line 426 of file MultiInstanceNNet.cc.

References batch_size, build(), PLearn::endl(), f, i, inputs_and_targets_to_training_costs, PLearn::PP< T >::isNull(), PLearn::VMat::length(), max_n_instances, PLearn::PLearner::nstages, optimizer, optstage_per_lstage, params, PLERROR, PLearn::PLearner::report_progress, PLearn::PLearner::stage, PLearn::sumOverBags(), PLearn::SumOverBagsVariable::TARGET_COLUMN_FIRST, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_set_has_changed, PLearn::PLearner::verbosity, and PLearn::VMat::width().

{

// MultiInstanceNNet nstages is the number of epochs (full passes through the training set)

// while optimizer nstages is number of weight updates.

// So relationship between the 2 depends whether we are in stochastic, batch or minibatch mode

if(!train_set)

PLERROR("In MultiInstanceNNet::train, you did not setTrainingSet");

if(!train_stats)

PLERROR("In MultiInstanceNNet::train, you did not setTrainStatsCollector");

if(f.isNull()) // Net has not been properly built yet (because build was called before the learner had a proper training set)

build();

if (training_set_has_changed)

{

// number of optimiser stages corresponding to one learner stage (one epoch)

optstage_per_lstage = 0;

int n_bags = -1;

if (batch_size<=0)

optstage_per_lstage = 1;

else // must count the nb of bags in the training set

{

n_bags=0;

int l = train_set->length();

PP<ProgressBar> pb;

if(report_progress)

pb = new ProgressBar("Counting nb bags in train_set for MultiInstanceNNet ", l);

Vec row(train_set->width());

int tag_column = train_set->inputsize() + train_set->targetsize() - 1;

for (int i=0;i<l;i++) {

train_set->getRow(i,row);

int tag = (int)row[tag_column];

if (tag & SumOverBagsVariable::TARGET_COLUMN_FIRST) {

// indicates the beginning of a new bag.

n_bags++;

}

if(pb)

pb->update(i);

}

optstage_per_lstage = n_bags/batch_size;

}

training_set_has_changed = false;

}

Var totalcost = sumOverBags(train_set, inputs_and_targets_to_training_costs, max_n_instances, batch_size);

if(optimizer)

{

optimizer->setToOptimize(params, totalcost);

optimizer->build();

}

PP<ProgressBar> pb;

if(report_progress)

pb = new ProgressBar("Training MultiInstanceNNet from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

int initial_stage = stage;

bool early_stop=false;

while(stage<nstages && !early_stop)

{

optimizer->nstages = optstage_per_lstage;

train_stats->forget();

optimizer->early_stop = false;

optimizer->optimizeN(*train_stats);

train_stats->finalize();

if(verbosity>2)

cout << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;

++stage;

if(pb)

pb->update(stage-initial_stage);

}

if(verbosity>1)

cout << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;

//if (batch_size==0)

// optimizer->verifyGradient(0.001);

//output_and_target_to_cost->recomputeParents();

//test_costf->recomputeParents();

// cerr << "totalcost->value = " << totalcost->value << endl;

// cout << "Result for benchmark is: " << totalcost->value << endl;

}
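The bag counting and the epoch-to-update arithmetic in the listing above can be isolated as two small functions. This is a sketch under stated assumptions: the flag values below are assumed to mirror the `SumOverBagsVariable` constants and should be checked against the PLearn headers, and `countBags` and `optStagesPerEpoch` are hypothetical helper names, not PLearn functions.

```cpp
#include <cassert>
#include <vector>

// Assumed bag-tag flag values (verify against SumOverBagsVariable.h).
const int TARGET_COLUMN_FIRST = 1;  // row is the first instance of a bag
const int TARGET_COLUMN_LAST  = 2;  // row is the last instance of a bag

// Count bags by counting the rows whose tag has the FIRST bit set.
// A single-instance bag carries both bits, so it is counted once.
int countBags(const std::vector<int>& tags) {
    int n_bags = 0;
    for (std::vector<int>::size_type i = 0; i < tags.size(); ++i)
        if (tags[i] & TARGET_COLUMN_FIRST)
            ++n_bags;
    return n_bags;
}

// Number of optimizer stages (weight updates) per learner stage (epoch),
// following the arithmetic used in train().
int optStagesPerEpoch(int n_bags, int batch_size) {
    assert(n_bags >= 0);
    if (batch_size <= 0)          // batch mode: one update per epoch
        return 1;
    return n_bags / batch_size;   // integer division, remainder is dropped
}
```

Because the division is integral, a training set with fewer bags than `batch_size` yields zero optimizer stages per epoch under this arithmetic, which may be worth checking when choosing `batch_size`.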

Reimplemented from PLearn::PLearner.

Definition at line 140 of file MultiInstanceNNet.h.

Var PLearn::MultiInstanceNNet::bag_inputs [protected] |

Definition at line 74 of file MultiInstanceNNet.h.

Referenced by build_(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::bag_output [protected] |

Definition at line 75 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::bag_size [protected] |

Definition at line 73 of file MultiInstanceNNet.h.

Referenced by build_(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Definition at line 130 of file MultiInstanceNNet.h.

Referenced by declareOptions(), and train().

Definition at line 111 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 119 of file MultiInstanceNNet.h.

VarArray PLearn::MultiInstanceNNet::costs [protected] |

Definition at line 82 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 123 of file MultiInstanceNNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 118 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Func PLearn::MultiInstanceNNet::f [mutable] |

Definition at line 95 of file MultiInstanceNNet.h.

Referenced by build_(), computeOutput(), computeOutputAndCosts(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::MultiInstanceNNet::input [protected] |

Definition at line 64 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Func PLearn::MultiInstanceNNet::input_to_logP0 [protected] |

Definition at line 79 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 77 of file MultiInstanceNNet.h.

Referenced by build_(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Definition at line 78 of file MultiInstanceNNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::MultiInstanceNNet::instance_logP0 [mutable, private] |

Used to store data between calls to computeCostsFromOutputs().

Definition at line 60 of file MultiInstanceNNet.h.

Referenced by computeCostsFromOutputs(), and makeDeepCopyFromShallowCopy().

Definition at line 124 of file MultiInstanceNNet.h.

Definition at line 124 of file MultiInstanceNNet.h.

VarArray PLearn::MultiInstanceNNet::invars [protected] |

Definition at line 86 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 122 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 113 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 112 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 115 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 114 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 105 of file MultiInstanceNNet.h.

Referenced by build_(), computeCostsFromOutputs(), declareOptions(), and train().

Definition at line 107 of file MultiInstanceNNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

Definition at line 108 of file MultiInstanceNNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

Var PLearn::MultiInstanceNNet::nll [protected] |

Definition at line 80 of file MultiInstanceNNet.h.

Referenced by computeCostsFromOutputs(), and makeDeepCopyFromShallowCopy().

Definition at line 128 of file MultiInstanceNNet.h.

Referenced by declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::MultiInstanceNNet::optstage_per_lstage [protected] |

Definition at line 91 of file MultiInstanceNNet.h.

Referenced by train().

Var PLearn::MultiInstanceNNet::output [protected] |

Definition at line 72 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 97 of file MultiInstanceNNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Definition at line 117 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Definition at line 116 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

VarArray PLearn::MultiInstanceNNet::params [protected] |

Definition at line 87 of file MultiInstanceNNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::MultiInstanceNNet::paramsvalues [protected] |

Definition at line 89 of file MultiInstanceNNet.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

VarArray PLearn::MultiInstanceNNet::penalties [protected] |

Definition at line 83 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 121 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::MultiInstanceNNet::sampleweight [protected] |

Definition at line 66 of file MultiInstanceNNet.h.

Referenced by build_(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::target [protected] |

Definition at line 65 of file MultiInstanceNNet.h.

Referenced by build_(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

int PLearn::MultiInstanceNNet::test_bag_size [mutable] |

Definition at line 125 of file MultiInstanceNNet.h.

Referenced by computeCostsFromOutputs(), and computeOutputAndCosts().

Func PLearn::MultiInstanceNNet::test_costf [mutable] |

Definition at line 96 of file MultiInstanceNNet.h.

Referenced by makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::test_costs [protected] |

Definition at line 85 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::training_cost [protected] |

Definition at line 84 of file MultiInstanceNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Definition at line 92 of file MultiInstanceNNet.h.

Referenced by setTrainingSet(), and train().

Var PLearn::MultiInstanceNNet::w1 [protected] |

Definition at line 67 of file MultiInstanceNNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::w2 [protected] |

Definition at line 68 of file MultiInstanceNNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Var PLearn::MultiInstanceNNet::wdirect [protected] |

Definition at line 70 of file MultiInstanceNNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Definition at line 110 of file MultiInstanceNNet.h.

Referenced by build_(), and declareOptions().

Var PLearn::MultiInstanceNNet::wout [protected] |

Definition at line 69 of file MultiInstanceNNet.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

1.7.4
