|

PLearn 0.1


#include <NonLocalManifoldParzen.h>

Public Member Functions | |

| NonLocalManifoldParzen () | |

| Default constructor. | |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

| virtual void | forget () |

| (Re-)initializes the PLearner to its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual NonLocalManifoldParzen * | deepCopy (CopiesMap &copies) const |

| virtual real | log_density (const Vec &x) const |

| Return log of probability density log(p(y)). | |

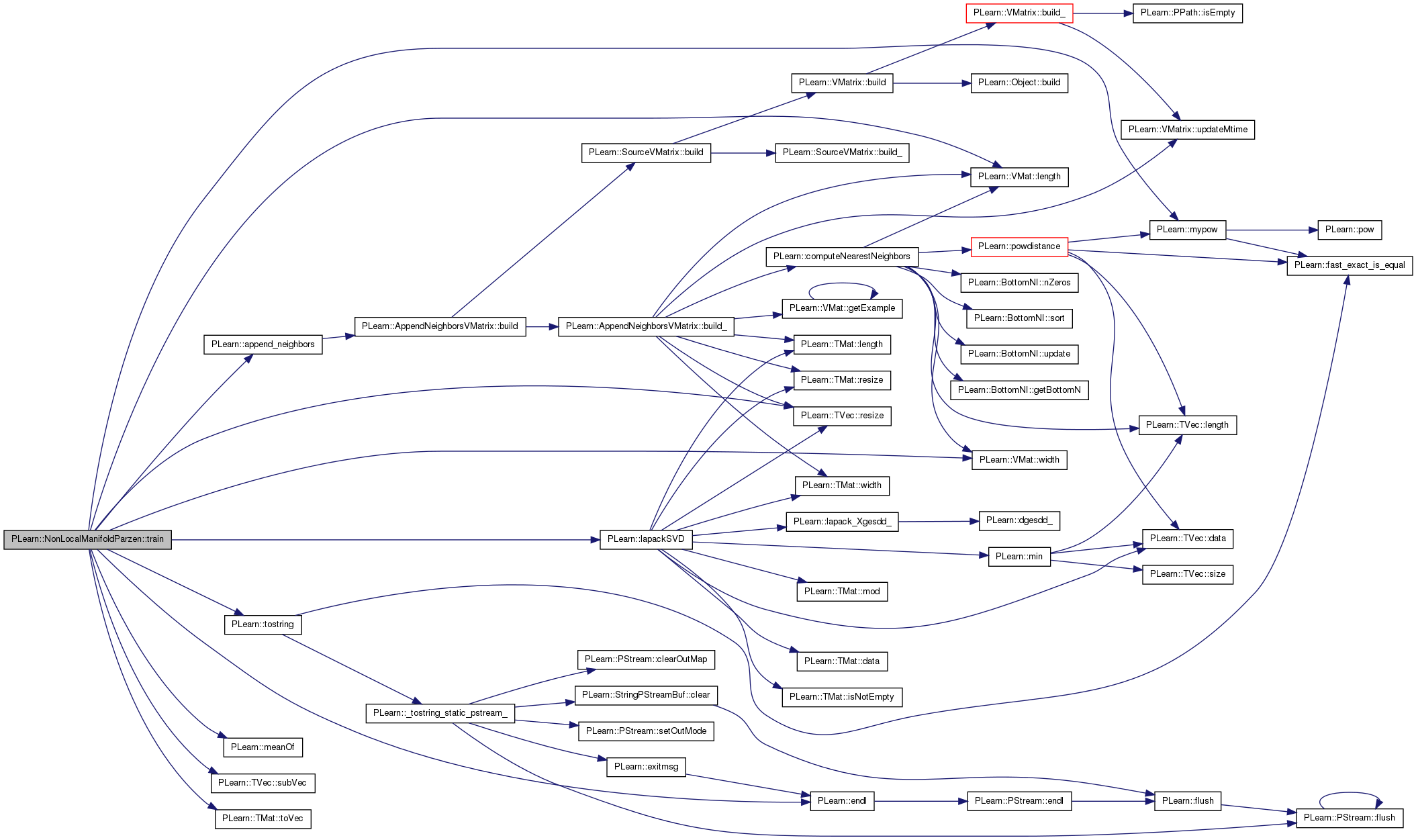

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Produce outputs according to what is specified in outputs_def. | |

| virtual int | outputsize () const |

| Returned value depends on outputs_def. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| VarArray | parameters |

| Parameters of the model. | |

| VMat | reference_set |

| Reference set of points in the gaussian mixture. | |

| int | ncomponents |

| Number of reduced dimensions (number of tangent vectors to compute) | |

| int | nneighbors |

| Number of neighbors used for gradient descent. | |

| int | nneighbors_density |

| Number of neighbors for the p(x) density estimation. | |

| bool | store_prediction |

| Indication that the predicted parameters should be stored. | |

| bool | learn_mu |

| Indication that the mean of the gaussians should be learned. | |

| real | sigma_init |

| Initial (approximate) value of sigma^2_noise. | |

| real | sigma_min |

| Minimum value of sigma^2_noise. | |

| int | mu_nneighbors |

| Number of neighbors to learn the mus. | |

| real | sigma_threshold_factor |

| Threshold applied on the update rule for sigma^2_noise. | |

| real | svd_threshold |

| SVD threshold on the eigen values. | |

| int | nhidden |

| Number of hidden units. | |

| real | weight_decay |

| Weight decay for all weights. | |

| string | penalty_type |

| Penalty type to use on the weights. | |

| PP< Optimizer > | optimizer |

| Optimizer of the neural network. | |

| int | batch_size |

| Batch size of the gradient-based optimization. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| virtual void | initializeParams () |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| int | L |

| Number of gaussians. | |

| real | log_L |

| Logarithm of number of gaussians. | |

| Func | cost_of_one_example |

| Cost of one example. | |

| Var | x |

| Input vector. | |

| Var | W |

| Parameters of the neural network. | |

| Var | V |

| Var | muV |

| Var | snV |

| Var | tangent_targets |

| Tangent vector targets. | |

| Var | components |

| Tangent vectors spanning the tangent plane, given by the neural network. | |

| Var | mu |

| Mean of the gaussian. | |

| Var | sn |

| Sigma^2_noise of the gaussian. | |

| Var | sum_nll |

| Sum of NLL cost. | |

| Var | min_sig |

| Minimum value of sigma^2_noise. | |

| Var | init_sig |

| Initial (approximate) value of sigma^2_noise. | |

| Func | predictor |

| Predictor of the parameters of the gaussian at x. | |

| Mat | U_temp |

| log_density and Kernel methods' temporary variables | |

| Mat | F |

| Mat | distances |

| Vec | mu_temp |

| log_density and Kernel methods' temporary variables | |

| Vec | sm_temp |

| Vec | sn_temp |

| Vec | diff |

| Vec | z |

| Vec | x_minus_neighbor |

| Vec | t_row |

| Vec | neighbor_row |

| Vec | log_gauss |

| Vec | t_dist |

| TVec< int > | t_nn |

| log_density and Kernel methods' temporary variables | |

| DistanceKernel | dk |

| log_density and Kernel methods' temporary variables | |

| Mat | Ut_svd |

| SVD computation variables. | |

| Mat | V_svd |

| Vec | S_svd |

| SVD computation variables. | |

| Mat | mus |

| Predictions for mu. | |

| Vec | sns |

| Predictions for sn. | |

| Mat | sms |

| Predictions for sm. | |

| TVec< Mat > | Fs |

| Predictions for F. | |

| VMat | train_set_with_targets |

| Training set concatenated with nearest neighbor targets. | |

| VMat | targets_vmat |

| Nearest neighbor differences targets. | |

| Var | totalcost |

| Total cost Var. | |

| int | nsamples |

| Batch size. | |

| Vec | paramsvalues |

| Parameter values. | |

Private Types | |

| typedef UnconditionalDistribution | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

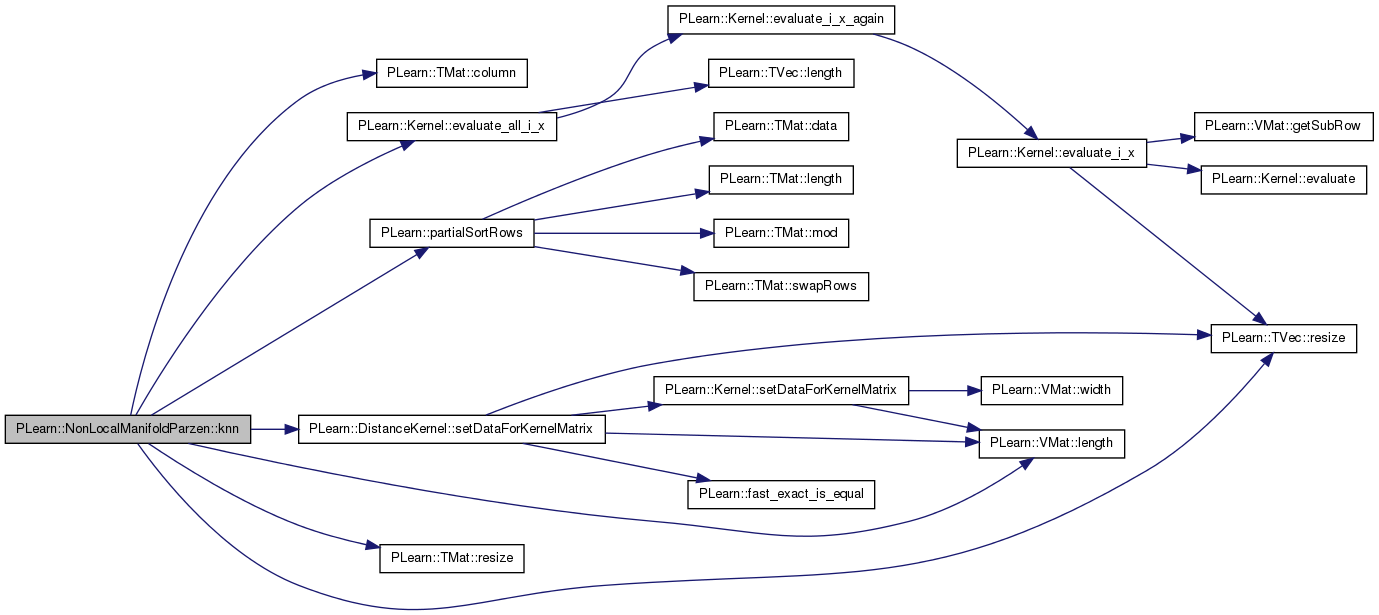

| void | knn (const VMat &vm, const Vec &x, const int &k, TVec< int > &neighbors, bool sortk) const |

| Finds nearest neighbors of "x" in set "vm" and puts their indices in "neighbors". | |

Definition at line 58 of file NonLocalManifoldParzen.h.

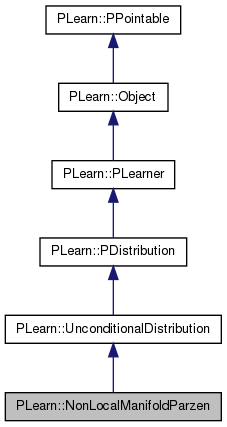

typedef UnconditionalDistribution PLearn::NonLocalManifoldParzen::inherited [private] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 63 of file NonLocalManifoldParzen.h.

| PLearn::NonLocalManifoldParzen::NonLocalManifoldParzen | ( | ) |

Default constructor.

Definition at line 67 of file NonLocalManifoldParzen.cc.

:

reference_set(0),

ncomponents(1),

nneighbors(5),

nneighbors_density(-1),

store_prediction(false),

learn_mu(false),

sigma_init(0.1),

sigma_min(0.00001),

mu_nneighbors(2),

sigma_threshold_factor(-1),

svd_threshold(1e-8),

nhidden(10),

weight_decay(0),

penalty_type("L2_square"),

batch_size(1)

{

}

| string PLearn::NonLocalManifoldParzen::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| OptionList & PLearn::NonLocalManifoldParzen::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| RemoteMethodMap & PLearn::NonLocalManifoldParzen::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| bool PLearn::NonLocalManifoldParzen::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| Object * PLearn::NonLocalManifoldParzen::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| void PLearn::NonLocalManifoldParzen::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

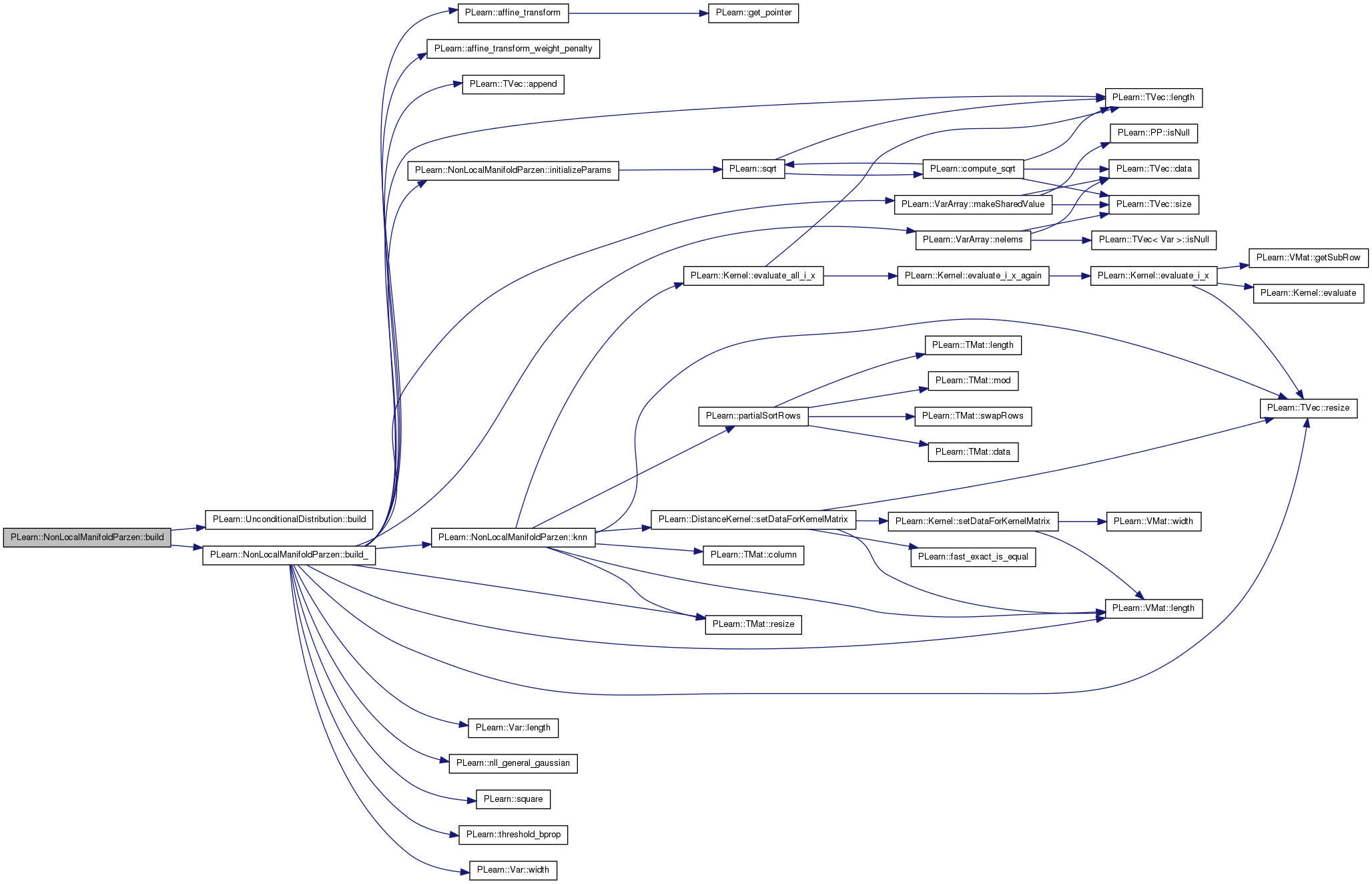

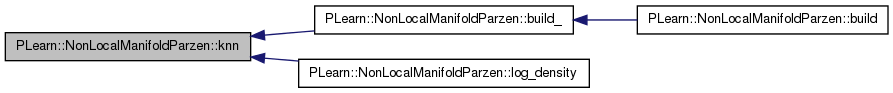

| void PLearn::NonLocalManifoldParzen::build | ( | ) | [virtual] |

Simply calls inherited::build() then build_().

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 402 of file NonLocalManifoldParzen.cc.

References PLearn::UnconditionalDistribution::build(), and build_().

{

inherited::build();

build_();

}

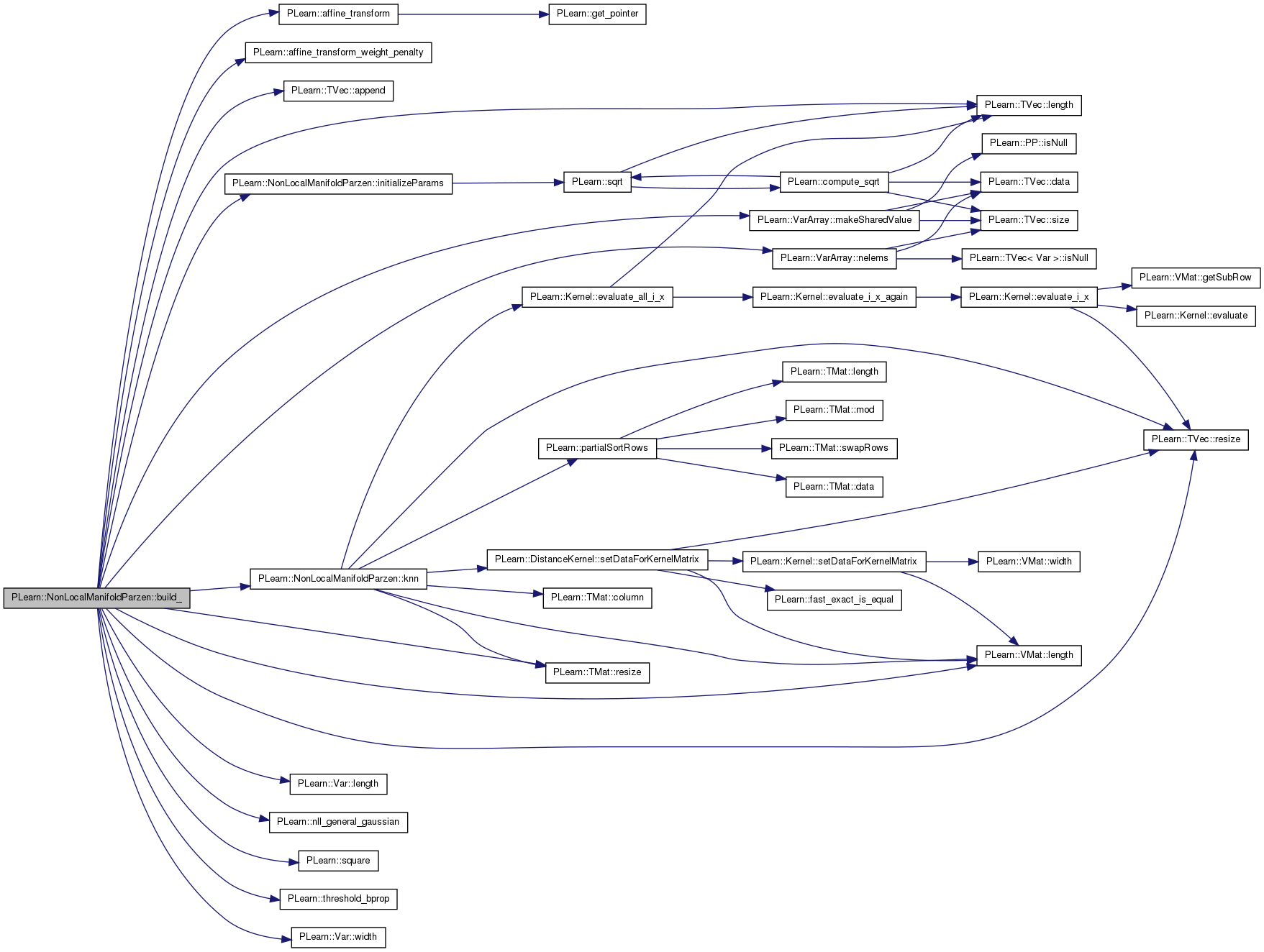

| void PLearn::NonLocalManifoldParzen::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 236 of file NonLocalManifoldParzen.cc.

References a, PLearn::affine_transform(), PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), components, cost_of_one_example, diff, F, Fs, i, init_sig, initializeParams(), PLearn::PLearner::inputsize_, knn(), L, learn_mu, PLearn::TVec< T >::length(), PLearn::Var::length(), PLearn::VMat::length(), log_L, PLearn::VarArray::makeSharedValue(), min_sig, mu, mu_nneighbors, mu_temp, mus, muV, ncomponents, neighbor_row, PLearn::VarArray::nelems(), nhidden, PLearn::nll_general_gaussian(), nneighbors, nneighbors_density, optimizer, parameters, paramsvalues, penalty_type, pl_log, PLERROR, predictor, reference_set, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sigma_min, sigma_threshold_factor, sm_temp, sms, sn, sn_temp, sns, snV, PLearn::square(), sum_nll, t_row, tangent_targets, PLearn::threshold_bprop(), PLearn::PLearner::train_set, U_temp, Ut_svd, V, V_svd, W, weight_decay, PLearn::Var::width(), x, x_minus_neighbor, and z.

Referenced by build().

{

if (inputsize_>0)

{

if (nhidden <= 0)

PLERROR("NonLocalManifoldParzen::Number of hidden units "

"should be positive, now %d\n",nhidden);

Var log_n_examples(1,1,"log(n_examples)");

if(train_set)

{

L = train_set->length();

reference_set = train_set;

}

log_L= pl_log((real) L);

parameters.resize(0);

// Neural network prediction of principal components

x = Var(inputsize_);

x->setName("x");

W = Var(nhidden+1,inputsize_,"W");

parameters.append(W);

Var a; // outputs of hidden layer

a = affine_transform(x,W);

a->setName("a");

V = Var(ncomponents*(inputsize_+1),nhidden,"V");

parameters.append(V);

// TODO: instead, make NllGeneralGaussianVariable use vector... (DONE)

//components = reshape(affine_transform(V,a),ncomponents,n);

components = affine_transform(V,a);

components->setName("components");

// Gaussian kernel parameters prediction

muV = Var(inputsize_+1,nhidden,"muV");

snV = Var(2,nhidden,"snV");

parameters.append(muV);

parameters.append(snV);

if(learn_mu)

mu = affine_transform(muV,a);

else

{

mu = new SourceVariable(inputsize_,1);

mu->value.clear();

}

mu->setName("mu");

min_sig = new SourceVariable(1,1);

min_sig->value[0] = sigma_min;

min_sig->setName("min_sig");

init_sig = Var(1,1);

init_sig->setName("init_sig");

parameters.append(init_sig);

sn = square(affine_transform(snV,a)) + min_sig + square(init_sig);

sn->setName("sn");

if(sigma_threshold_factor > 0)

sn = threshold_bprop(sn,sigma_threshold_factor);

predictor = Func(x, parameters , components & mu & sn );

Var target_index = Var(1,1);

target_index->setName("target_index");

Var neighbor_indexes = Var(nneighbors,1);

neighbor_indexes->setName("neighbor_indexes");

tangent_targets = Var(nneighbors,inputsize_);

if(mu_nneighbors < 0 ) mu_nneighbors = nneighbors;

Var nll;

nll = nll_general_gaussian(components, mu, sn, tangent_targets,

log_L, learn_mu, mu_nneighbors);

Var knn = new SourceVariable(1,1);

knn->setName("knn");

knn->value[0] = nneighbors;

sum_nll = new ColumnSumVariable(nll) / knn;

// Weight decay penalty

if(weight_decay > 0 )

{

sum_nll += affine_transform_weight_penalty(

W,weight_decay,0,penalty_type) +

affine_transform_weight_penalty(

V,weight_decay,0,penalty_type) +

affine_transform_weight_penalty(

muV,weight_decay,0,penalty_type) +

affine_transform_weight_penalty(

snV,weight_decay,0,penalty_type);

}

cost_of_one_example = Func(x & tangent_targets & target_index &

neighbor_indexes, parameters, sum_nll);

if(nneighbors_density >= L || nneighbors_density < 0)

nneighbors_density = L;

// Output storage variables

t_row.resize(inputsize_);

Ut_svd.resize(inputsize_,inputsize_);

V_svd.resize(ncomponents,ncomponents);

F.resize(components->length(),components->width());

z.resize(inputsize_);

x_minus_neighbor.resize(inputsize_);

neighbor_row.resize(inputsize_);

// log_density and Kernel methods variables

U_temp.resize(ncomponents,inputsize_);

mu_temp.resize(inputsize_);

sm_temp.resize(ncomponents);

sn_temp.resize(1);

diff.resize(inputsize_);

mus.resize(L, inputsize_);

sns.resize(L);

sms.resize(L,ncomponents);

Fs.resize(L);

for(int i=0; i<L; i++)

{

Fs[i].resize(ncomponents,inputsize_);

}

if(paramsvalues.length() == parameters.nelems())

parameters << paramsvalues;

else

{

paramsvalues.resize(parameters.nelems());

initializeParams();

if(optimizer)

optimizer->reset();

}

parameters.makeSharedValue(paramsvalues);

}

}
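The expression assigned to `sn` in the listing above adds two squared terms to `min_sig`, so the predicted noise variance cannot fall below `sigma_min`. A minimal standalone sketch of that parameterization follows, using plain `double`s rather than PLearn `Var`s. The function name `noise_variance` is hypothetical, and the output of `affine_transform(snV,a)` is assumed to be a scalar here.

```cpp
#include <cassert>

// Hypothetical helper mirroring
//   sn = square(affine_transform(snV,a)) + min_sig + square(init_sig).
// `pre_activation` stands in for the (assumed scalar) affine output.
double noise_variance(double pre_activation, double sigma_min, double init_sig)
{
    assert(sigma_min >= 0.0);
    // Both squares are non-negative, so the result is at least sigma_min.
    return pre_activation * pre_activation + sigma_min + init_sig * init_sig;
}
```

Because `initializeParams()` sets `init_sig` to `sqrt(sigma_init)`, a freshly initialized model with a near-zero affine output would start close to `sigma_init + sigma_min` under this sketch.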

| string PLearn::NonLocalManifoldParzen::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

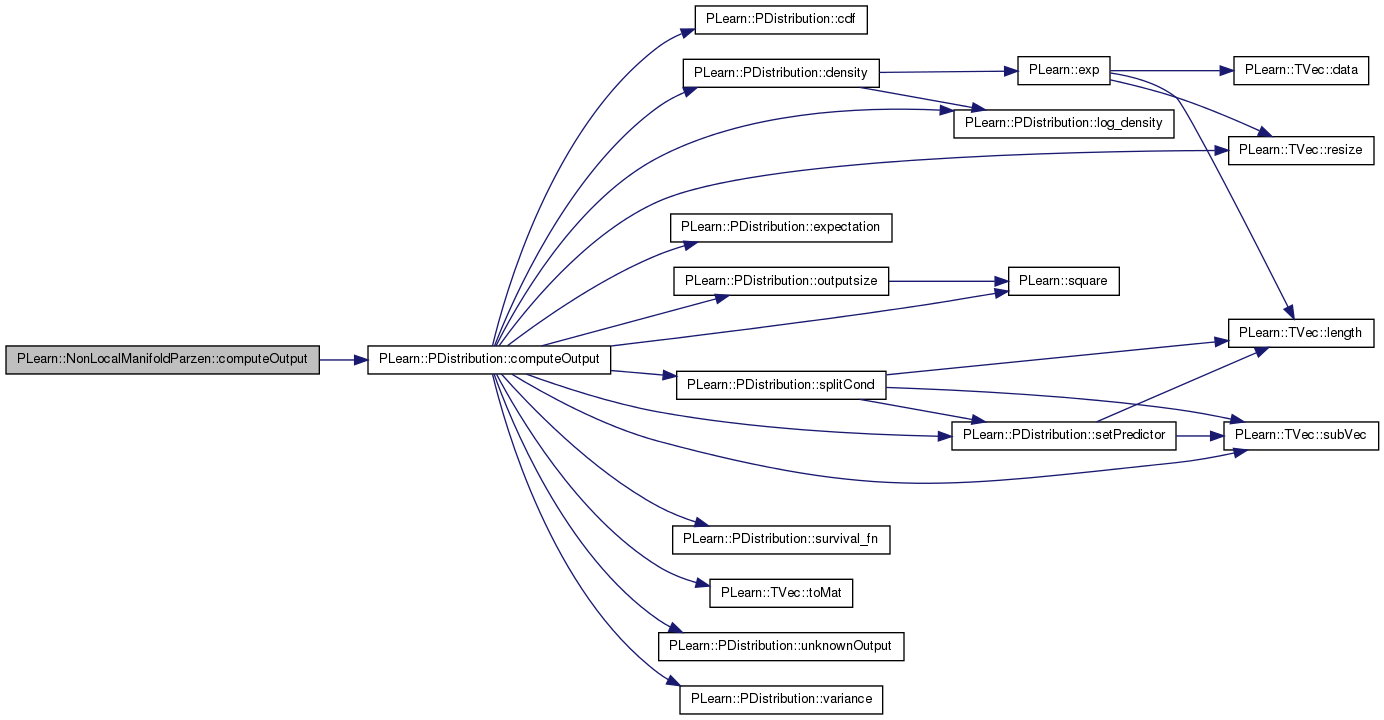

| void PLearn::NonLocalManifoldParzen::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Produce outputs according to what is specified in outputs_def.

Reimplemented from PLearn::PDistribution.

Definition at line 707 of file NonLocalManifoldParzen.cc.

References PLearn::PDistribution::computeOutput(), and PLearn::PDistribution::outputs_def.

{

switch(outputs_def[0])

{

/*

case 'r':

{

string fsave = "";

VMat temp;

real step_size = rw_size_step;

real dp;

t_row << input;

Vec last_F(inputsize());

for(int s=0; s<rw_n_step;s++)

{

if(s == 0)

{

predictor->fprop(t_row, F.toVec() & mu_temp & sn_temp);

last_F << F(rw_ith_component);

}

predictor->fprop(t_row, F.toVec() & mu_temp & sn_temp);

// N.B. this is the SVD of F'

lapackSVD(F, Ut_svd, S_svd, V_svd,'A',1.5);

F(rw_ith_component) << Ut_svd(rw_ith_component);

if(s % rw_save_every == 0)

{

fsave = rw_file_name + tostring(s) + ".amat";

temp = new MemoryVMatrix(t_row.toMat(1,t_row.length()));

temp->saveAMAT(fsave,false,true);

//PLearn::save(fsave,t_row);

}

dp = dot(last_F,F(rw_ith_component));

if(dp>0) dp = 1;

else dp = -1;

t_row += step_size*F(rw_ith_component)*abs(S_svd[rw_ith_component])*dp;

last_F << dp*F(rw_ith_component);

}

output << t_row;

t_row << input;

for(int s=0; s<rw_n_step;s++)

{

if(s == 0)

{

predictor->fprop(t_row, F.toVec() & mu_temp & sn_temp);

last_F << (-1.0)*F(rw_ith_component);

}

predictor->fprop(t_row, F.toVec() & mu_temp & sn_temp);

// N.B. this is the SVD of F'

lapackSVD(F, Ut_svd, S_svd, V_svd,'A',1.5);

F(rw_ith_component) << Ut_svd(rw_ith_component);

if(s % rw_save_every == 0)

{

fsave = rw_file_name + tostring(-s) + ".amat";

temp = new MemoryVMatrix(t_row.toMat(1,t_row.length()));

temp->saveAMAT(fsave,false,true);

//PLearn::save(fsave,t_row);

}

dp = dot(last_F,F(rw_ith_component));

if(dp>0) dp = 1;

else dp = -1;

t_row += step_size*F(rw_ith_component)*abs(S_svd[rw_ith_component])*dp;

last_F << dp*F(rw_ith_component);

}

break;

}

case 't':

{

predictor->fprop(input, F.toVec() & mu_temp & sn_temp);

output << F.toVec();

break;

}

*/

default:

inherited::computeOutput(input,output);

}

}

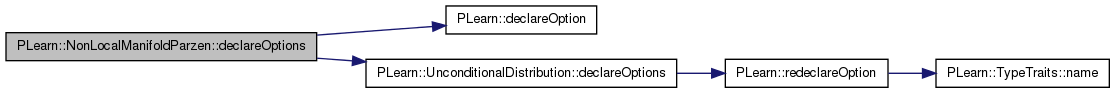

| void PLearn::NonLocalManifoldParzen::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 95 of file NonLocalManifoldParzen.cc.

References batch_size, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::UnconditionalDistribution::declareOptions(), Fs, learn_mu, PLearn::OptionBase::learntoption, mu_nneighbors, mus, ncomponents, nhidden, nneighbors, nneighbors_density, optimizer, parameters, paramsvalues, penalty_type, reference_set, sigma_init, sigma_min, sigma_threshold_factor, sms, sns, store_prediction, svd_threshold, and weight_decay.

{

declareOption(ol, "parameters", &NonLocalManifoldParzen::parameters,

OptionBase::learntoption,

"Parameters of the tangent_predictor function.\n"

);

declareOption(ol, "reference_set", &NonLocalManifoldParzen::reference_set,

OptionBase::learntoption,

"Reference points for density computation.\n"

);

declareOption(ol, "ncomponents", &NonLocalManifoldParzen::ncomponents,

OptionBase::buildoption,

"Number of \"principal components\" to predict\n"

"for kernel parameters prediction.\n"

);

declareOption(ol, "nneighbors", &NonLocalManifoldParzen::nneighbors,

OptionBase::buildoption,

"Number of nearest neighbors to consider in training procedure.\n"

);

declareOption(ol, "nneighbors_density",

&NonLocalManifoldParzen::nneighbors_density,

OptionBase::buildoption,

"Number of nearest neighbors to consider for\n"

"p(x) density estimation.\n"

);

declareOption(ol, "store_prediction",

&NonLocalManifoldParzen::store_prediction,

OptionBase::buildoption,

"Indication that the predicted parameters should be stored.\n"

"This may make testing faster. Note that the predictions are\n"

"stored after the last training stage\n"

);

declareOption(ol, "paramsvalues",

&NonLocalManifoldParzen::paramsvalues,

OptionBase::learntoption,

"The learned parameter vector.\n"

);

// ** Gaussian kernel options

declareOption(ol, "learn_mu", &NonLocalManifoldParzen::learn_mu,

OptionBase::buildoption,

"Indication that the deviation from the training point\n"

"in a Gaussian kernel (called mu) should be learned.\n"

);

declareOption(ol, "sigma_init", &NonLocalManifoldParzen::sigma_init,

OptionBase::buildoption,

"Initial minimum value for sigma noise.\n"

);

declareOption(ol, "sigma_min", &NonLocalManifoldParzen::sigma_min,

OptionBase::buildoption,

"The minimum value for sigma noise.\n"

);

declareOption(ol, "mu_nneighbors", &NonLocalManifoldParzen::mu_nneighbors,

OptionBase::buildoption,

"Number of nearest neighbors to learn the mus \n"

"(if < 0, mu_nneighbors = nneighbors).\n"

);

declareOption(ol, "sigma_threshold_factor",

&NonLocalManifoldParzen::sigma_threshold_factor,

OptionBase::buildoption,

"Threshold factor of the gradient on the sigma noise\n"

"parameter of the Gaussian kernel. If < 0, then\n"

"no threshold is used."

);

declareOption(ol, "svd_threshold",

&NonLocalManifoldParzen::svd_threshold, OptionBase::buildoption,

"Threshold to accept singular values of F in solving for\n"

"linear combination weights on tangent subspace.\n"

);

// ** Neural network predictor **

declareOption(ol, "nhidden",

&NonLocalManifoldParzen::nhidden, OptionBase::buildoption,

"Number of hidden units of the neural network.\n"

);

declareOption(ol, "weight_decay", &NonLocalManifoldParzen::weight_decay,

OptionBase::buildoption,

"Global weight decay for all layers.\n");

declareOption(ol, "penalty_type", &NonLocalManifoldParzen::penalty_type,

OptionBase::buildoption,

"Penalty to use on the weights (for weight and bias decay).\n"

"Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");

declareOption(ol, "optimizer", &NonLocalManifoldParzen::optimizer,

OptionBase::buildoption,

"Optimizer that optimizes the cost function.\n"

);

declareOption(ol, "batch_size",

&NonLocalManifoldParzen::batch_size, OptionBase::buildoption,

"How many samples to use to estimate the average gradient\n"

"before updating the weights. If <= 0, is equivalent to\n"

"specifying training_set->length() \n");

// ** Stored outputs of neural network

declareOption(ol, "mus",

&NonLocalManifoldParzen::mus, OptionBase::learntoption,

"The stored mu vectors for the reference set.\n"

);

declareOption(ol, "sns", &NonLocalManifoldParzen::sns,

OptionBase::learntoption,

"The stored sigma noise values for the reference set.\n"

);

declareOption(ol, "sms", &NonLocalManifoldParzen::sms,

OptionBase::learntoption,

"The stored sigma manifold values for the reference set.\n"

);

declareOption(ol, "Fs", &NonLocalManifoldParzen::Fs, OptionBase::learntoption,

"The stored \"principal components\" (F) values for\n"

"the reference set.\n"

);

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::NonLocalManifoldParzen::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 233 of file NonLocalManifoldParzen.h.

| NonLocalManifoldParzen * PLearn::NonLocalManifoldParzen::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

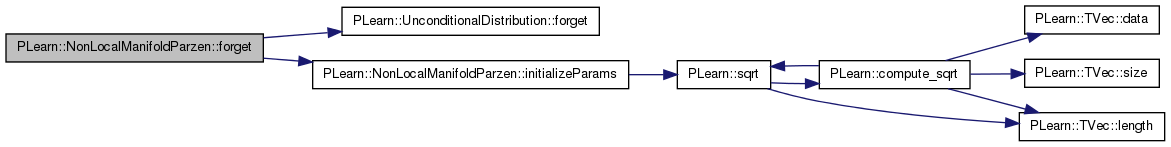

| void PLearn::NonLocalManifoldParzen::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner to its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 471 of file NonLocalManifoldParzen.cc.

References PLearn::UnconditionalDistribution::forget(), initializeParams(), optimizer, PLearn::PLearner::stage, and PLearn::PLearner::train_set.

{

inherited::forget();

if (train_set) initializeParams();

if(optimizer) optimizer->reset();

stage = 0;

}

| OptionList & PLearn::NonLocalManifoldParzen::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| OptionMap & PLearn::NonLocalManifoldParzen::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

| RemoteMethodMap & PLearn::NonLocalManifoldParzen::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 92 of file NonLocalManifoldParzen.cc.

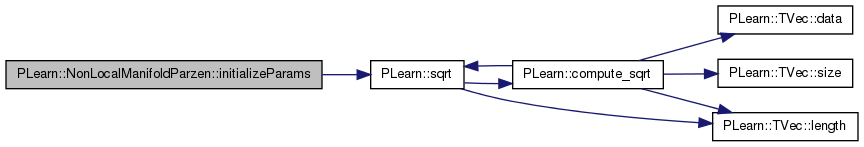

| void PLearn::NonLocalManifoldParzen::initializeParams | ( | ) | [protected, virtual] |

Definition at line 560 of file NonLocalManifoldParzen.cc.

References init_sig, PLearn::PLearner::inputsize_, muV, nhidden, PLearn::PLearner::random_gen, sigma_init, snV, PLearn::sqrt(), V, and W.

Referenced by build_(), and forget().

{

real delta = 1.0 / sqrt(real(inputsize_));

random_gen->fill_random_uniform(W->value, -delta, delta);

delta = 1.0 / real(nhidden);

random_gen->fill_random_uniform(V->matValue, -delta, delta);

random_gen->fill_random_uniform(snV->matValue, -delta, delta);

random_gen->fill_random_uniform(muV->matValue, -delta, delta);

W->matValue(0).clear();

V->matValue(0).clear();

muV->matValue(0).clear();

snV->matValue(0).clear();

init_sig->value[0] = sqrt(sigma_init);

}
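The initialization above draws every weight uniformly from a symmetric interval, then clears the first row of each matrix and sets `init_sig` from `sigma_init`. The following is a minimal sketch of the same scheme with the standard library only. `Matrix`, `init_weights` and `input_layer_delta` are hypothetical names, and `std::mt19937` stands in for PLearn's `random_gen`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical row-major matrix type standing in for a PLearn Mat.
using Matrix = std::vector<std::vector<double>>;

// Fill every entry uniformly in [-delta, delta), then zero the first row,
// mirroring fill_random_uniform(...) followed by matValue(0).clear().
Matrix init_weights(std::size_t rows, std::size_t cols, double delta,
                    std::mt19937& rng)
{
    assert(rows > 0 && delta > 0.0);
    std::uniform_real_distribution<double> uniform(-delta, delta);
    Matrix m(rows, std::vector<double>(cols));
    for (auto& row : m)
        for (auto& w : row)
            w = uniform(rng);
    for (auto& w : m[0])  // first row cleared, as in the listing
        w = 0.0;
    return m;
}

// Scale used for W in the listing: 1 / sqrt(inputsize).
double input_layer_delta(std::size_t inputsize)
{
    return 1.0 / std::sqrt(static_cast<double>(inputsize));
}
```

In the listing, `W` uses the `1/sqrt(inputsize_)` scale while `V`, `snV` and `muV` use `1/nhidden`; the sketch leaves the choice of `delta` to the caller.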

| void PLearn::NonLocalManifoldParzen::knn | ( | const VMat & | vm, |

| const Vec & | x, | ||

| const int & | k, | ||

| TVec< int > & | neighbors, | ||

| bool | sortk | ||

| ) | const [private] |

Finds nearest neighbors of "x" in set "vm" and puts their indices in "neighbors".

The neighbors are sorted by distance if "sortk" is true.

Definition at line 382 of file NonLocalManifoldParzen.cc.

References PLearn::TMat< T >::column(), distances, dk, PLearn::Kernel::evaluate_all_i_x(), i, PLearn::VMat::length(), n, PLearn::partialSortRows(), PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::DistanceKernel::setDataForKernelMatrix(), and t_dist.

Referenced by build_(), and log_density().

{

int n = vm->length();

distances.resize(n,2);

distances.column(1) << Vec(0, n-1, 1);

dk.setDataForKernelMatrix(vm);

t_dist.resize(n);

dk.evaluate_all_i_x(x, t_dist);

distances.column(0) << t_dist;

partialSortRows(distances, k, sortk);

neighbors.resize(k);

for (int i=0; i < k && i<n; i++)

{

neighbors[i] = int(distances(i,1));

}

}
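The routine above pairs each distance with its row index, partially sorts the pairs, and copies out the first `k` indices. A standalone sketch of the same idea with `std::partial_sort` is given below. The name `nearest_neighbors` is hypothetical, squared Euclidean distance is an assumption (the listing delegates the metric to `DistanceKernel`), and the result is always sorted nearest first.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical standalone analogue of knn(): returns the indices of the k
// rows of `data` closest to `x` (squared Euclidean distance), nearest first.
std::vector<int> nearest_neighbors(const std::vector<std::vector<double>>& data,
                                   const std::vector<double>& x,
                                   std::size_t k)
{
    // Pair each distance with its row index, like the two columns of `distances`.
    std::vector<std::pair<double, int>> dist;
    dist.reserve(data.size());
    for (std::size_t i = 0; i < data.size(); ++i) {
        assert(data[i].size() == x.size());
        double d2 = 0.0;
        for (std::size_t j = 0; j < x.size(); ++j) {
            const double diff = data[i][j] - x[j];
            d2 += diff * diff;
        }
        dist.emplace_back(d2, static_cast<int>(i));
    }

    // Never ask for more neighbours than there are points.
    k = std::min(k, dist.size());
    std::partial_sort(dist.begin(),
                      dist.begin() + static_cast<std::ptrdiff_t>(k),
                      dist.end());

    std::vector<int> neighbors(k);
    for (std::size_t i = 0; i < k; ++i)
        neighbors[i] = dist[i].second;
    return neighbors;
}
```

One difference is deliberate: the sketch clamps `k` to the number of points, whereas the listing resizes `neighbors` to `k` and stops its loop at `n`, so trailing entries would not be filled by that loop if `k` exceeded `n`.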

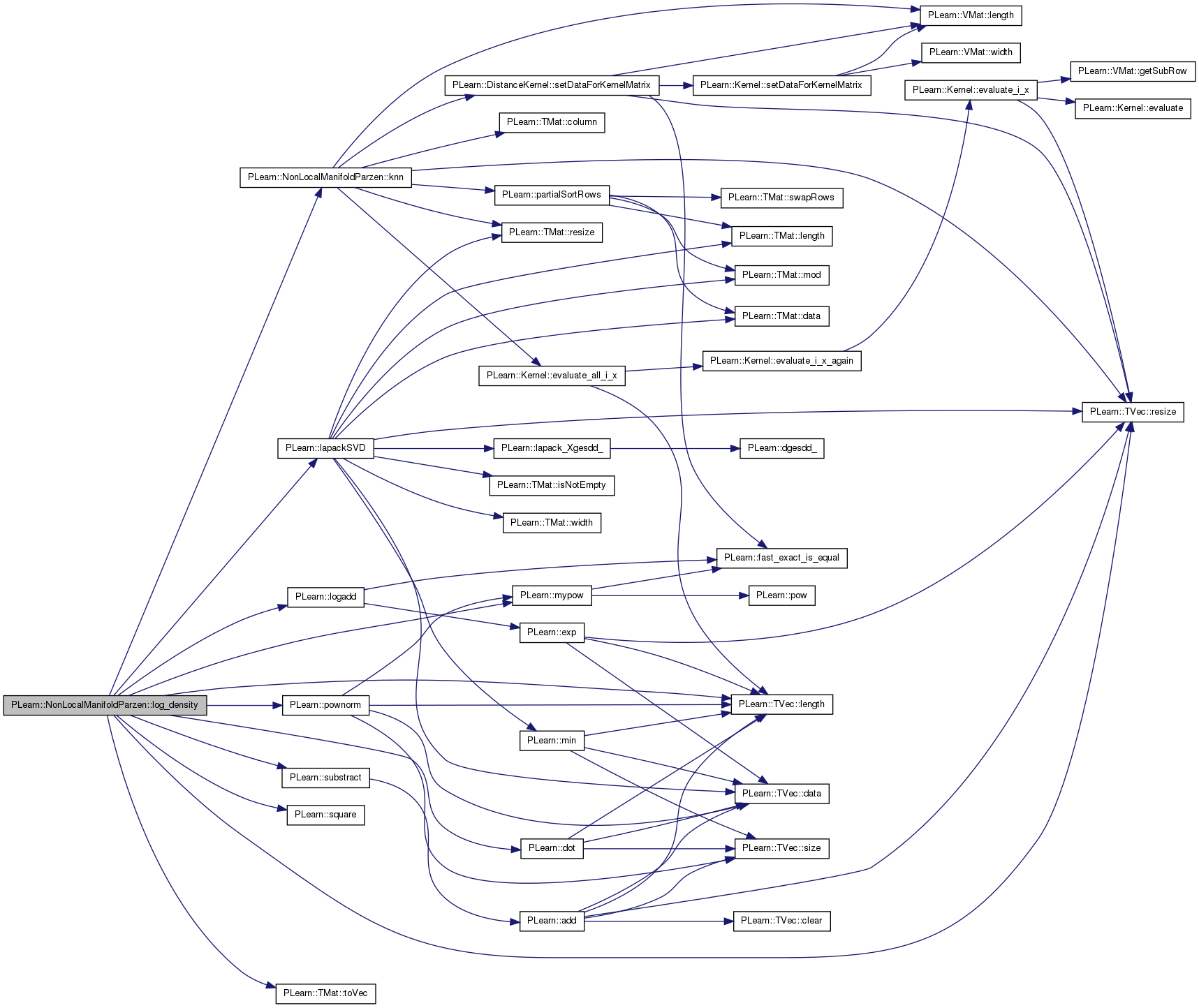

| real PLearn::NonLocalManifoldParzen::log_density | ( | const Vec & | x | ) | const [virtual] |

Return log of probability density log(p(y)).

Reimplemented from PLearn::PDistribution.

Definition at line 578 of file NonLocalManifoldParzen.cc.

References PLearn::dot(), F, Fs, i, PLearn::PLearner::inputsize_, knn(), L, PLearn::lapackSVD(), learn_mu, PLearn::TVec< T >::length(), Log2Pi, log_gauss, log_L, PLearn::logadd(), min_sig, mu_temp, mus, PLearn::mypow(), ncomponents, neighbor_row, nneighbors_density, pl_log, PLearn::pownorm(), predictor, reference_set, PLearn::TVec< T >::resize(), S_svd, sigma_min, sm_temp, sms, sn_temp, sns, PLearn::square(), store_prediction, PLearn::substract(), t_nn, t_row, PLearn::TMat< T >::toVec(), U_temp, Ut_svd, V_svd, x, x_minus_neighbor, and z.

{

// Compute log-density.

real ret = 0;

t_row << x;

real mahal = 0;

real norm_term = 0;

// Update sigma_min, in case it was changed,

// e.g. using a HyperLearner

if(store_prediction && min_sig->value[0] != sigma_min)

{

for(int i=0; i<L; i++)

{

sns[i] += sigma_min - min_sig->value[0];

}

}

min_sig->value[0] = sigma_min;

if(nneighbors_density != L)

{

// Fetching nearest neighbors for density estimation.

knn(reference_set,x,nneighbors_density,t_nn,0);

log_gauss.resize(t_nn.length());

for(int neighbor=0; neighbor<t_nn.length(); neighbor++)

{

reference_set->getRow(t_nn[neighbor],neighbor_row);

if(!store_prediction)

{

predictor->fprop(neighbor_row, F.toVec() & mu_temp & sn_temp);

// N.B. this is the SVD of F'

lapackSVD(F, Ut_svd, S_svd, V_svd,'A',1.5);

for (int k=0;k<ncomponents;k++)

{

sm_temp[k] = mypow(S_svd[k],2);

U_temp(k) << Ut_svd(k);

}

}

else

{

if(learn_mu)

mu_temp << mus(t_nn[neighbor]);

sn_temp[0] = sns[t_nn[neighbor]];

sm_temp << sms(t_nn[neighbor]);

U_temp << Fs[t_nn[neighbor]];

}

if(learn_mu)

{

substract(t_row,neighbor_row,x_minus_neighbor);

substract(x_minus_neighbor,mu_temp,z);

}

else

substract(t_row,neighbor_row,z);

mahal = -0.5*pownorm(z)/sn_temp[0];

norm_term = - inputsize_/2.0 * Log2Pi

- log_L - 0.5*(inputsize_-ncomponents)*pl_log(sn_temp[0]);

for(int k=0; k<ncomponents; k++)

{

mahal -= square(dot(z,U_temp(k)))*(0.5/(sm_temp[k]+sn_temp[0])

- 0.5/sn_temp[0]);

norm_term -= 0.5*pl_log(sm_temp[k]+sn_temp[0]);

}

log_gauss[neighbor] = mahal + norm_term;

}

}

else

{

// Using all L reference points for density estimation.

log_gauss.resize(L);

for(int t=0; t<L;t++)

{

reference_set->getRow(t,neighbor_row);

if(!store_prediction)

{

predictor->fprop(neighbor_row, F.toVec() & mu_temp & sn_temp);

// N.B. this is the SVD of F'

lapackSVD(F, Ut_svd, S_svd, V_svd,'A',1.5);

for (int k=0;k<ncomponents;k++)

{

sm_temp[k] = mypow(S_svd[k],2);

U_temp(k) << Ut_svd(k);

}

}

else

{

if(learn_mu)

mu_temp << mus(t);

sn_temp[0] = sns[t];

sm_temp << sms(t);

U_temp << Fs[t];

}

if(learn_mu)

{

substract(t_row,neighbor_row,x_minus_neighbor);

substract(x_minus_neighbor,mu_temp,z);

}

else

substract(t_row,neighbor_row,z);

mahal = -0.5*pownorm(z)/sn_temp[0];

norm_term = - inputsize_/2.0 * Log2Pi - log_L

- 0.5*(inputsize_-ncomponents)*pl_log(sn_temp[0]);

for(int k=0; k<ncomponents; k++)

{

mahal -= square(dot(z,U_temp(k)))*(0.5/(sm_temp[k]+sn_temp[0])

- 0.5/sn_temp[0]);

norm_term -= 0.5*pl_log(sm_temp[k]+sn_temp[0]);

}

log_gauss[t] = mahal + norm_term;

}

}

ret = logadd(log_gauss);

return ret;

}
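Both branches of the listing above evaluate the same per-component quantity and then combine the components with `logadd`. The arithmetic is consistent with a Gaussian whose covariance is `sn` times the identity plus `sm[k]` along each orthonormal direction `U(k)`, weighted by `1/L`. The sketch below restates that computation with standard containers. `log_component` and `log_sum_exp` are hypothetical names, and the covariance interpretation is an assumption read off the code rather than a statement from the PLearn documentation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Vec = std::vector<double>;

// Log-density of one mixture component at offset z (x - neighbor, minus mu
// when it is learned), assuming covariance sn*I + sum_k sm[k] * u_k u_k^T
// with orthonormal rows u_k of U, and including the -log(L) mixture weight.
double log_component(const Vec& z, const std::vector<Vec>& U, const Vec& sm,
                     double sn, double log_L)
{
    assert(U.size() == sm.size() && sn > 0.0);
    const double d = static_cast<double>(z.size());
    const double k = static_cast<double>(U.size());
    const double log_2pi = std::log(2.0 * std::acos(-1.0));

    double sq_norm = 0.0;
    for (double v : z) sq_norm += v * v;

    double mahal = -0.5 * sq_norm / sn;
    double norm_term = -0.5 * d * log_2pi - log_L - 0.5 * (d - k) * std::log(sn);
    for (std::size_t c = 0; c < U.size(); ++c) {
        double proj = 0.0;
        for (std::size_t j = 0; j < z.size(); ++j) proj += z[j] * U[c][j];
        // Replace the 1/sn weight by 1/(sm+sn) along direction u_c.
        mahal -= proj * proj * (0.5 / (sm[c] + sn) - 0.5 / sn);
        norm_term -= 0.5 * std::log(sm[c] + sn);
    }
    return mahal + norm_term;
}

// Numerically stable log(sum_i exp(v[i])), the role played by logadd().
double log_sum_exp(const Vec& v)
{
    double m = -std::numeric_limits<double>::infinity();
    for (double t : v) m = std::max(m, t);
    if (std::isinf(m)) return m;  // empty input or no finite maximum
    double s = 0.0;
    for (double t : v) s += std::exp(t - m);
    return m + std::log(s);
}
```

Subtracting the maximum before exponentiating keeps the sum finite even when every component log-density is very negative, which is the usual reason to combine mixture terms in log space.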

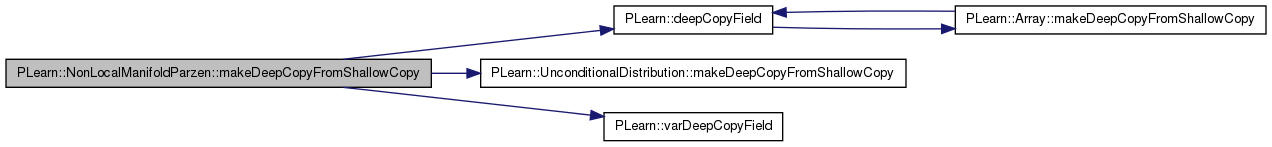

| void PLearn::NonLocalManifoldParzen::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 416 of file NonLocalManifoldParzen.cc.

References components, cost_of_one_example, PLearn::deepCopyField(), diff, distances, F, Fs, init_sig, log_gauss, PLearn::UnconditionalDistribution::makeDeepCopyFromShallowCopy(), min_sig, mu, mu_temp, mus, muV, neighbor_row, optimizer, parameters, paramsvalues, predictor, reference_set, S_svd, sm_temp, sms, sn, sn_temp, sns, snV, sum_nll, t_dist, t_nn, t_row, tangent_targets, targets_vmat, totalcost, train_set_with_targets, U_temp, Ut_svd, V, V_svd, PLearn::varDeepCopyField(), W, x, x_minus_neighbor, and z.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// Protected

deepCopyField(cost_of_one_example, copies);

varDeepCopyField(x, copies);

varDeepCopyField(W, copies);

varDeepCopyField(V, copies);

varDeepCopyField(muV, copies);

varDeepCopyField(snV, copies);

varDeepCopyField(tangent_targets, copies);

varDeepCopyField(components, copies);

varDeepCopyField(mu, copies);

varDeepCopyField(sn, copies);

varDeepCopyField(sum_nll, copies);

varDeepCopyField(min_sig, copies);

varDeepCopyField(init_sig, copies);

deepCopyField(predictor, copies);

deepCopyField(U_temp,copies);

deepCopyField(F, copies);

deepCopyField(distances,copies);

deepCopyField(mu_temp,copies);

deepCopyField(sm_temp,copies);

deepCopyField(sn_temp,copies);

deepCopyField(diff,copies);

deepCopyField(z,copies);

deepCopyField(x_minus_neighbor,copies);

deepCopyField(t_row,copies);

deepCopyField(neighbor_row,copies);

deepCopyField(log_gauss,copies);

deepCopyField(t_dist,copies);

deepCopyField(t_nn,copies);

deepCopyField(Ut_svd, copies);

deepCopyField(V_svd, copies);

deepCopyField(S_svd, copies);

deepCopyField(mus, copies);

deepCopyField(sns, copies);

deepCopyField(sms, copies);

deepCopyField(Fs, copies);

deepCopyField(train_set_with_targets, copies);

deepCopyField(targets_vmat, copies);

varDeepCopyField(totalcost, copies);

deepCopyField(paramsvalues, copies);

// Public

deepCopyField(parameters, copies);

deepCopyField(reference_set,copies);

deepCopyField(optimizer, copies);

}

| int PLearn::NonLocalManifoldParzen::outputsize | ( | ) | const [virtual] |

Returned value depends on outputs_def.

Reimplemented from PLearn::PDistribution.

Definition at line 795 of file NonLocalManifoldParzen.cc.

References PLearn::PDistribution::outputs_def, and PLearn::PDistribution::outputsize().

{

switch(outputs_def[0])

{

/*

case 'm':

return ncomponents;

break;

case 'r':

return n;

case 't':

return ncomponents*n;

*/

default:

return inherited::outputsize();

}

}

| void PLearn::NonLocalManifoldParzen::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Reimplemented from PLearn::PDistribution.

Definition at line 479 of file NonLocalManifoldParzen.cc.

References PLearn::append_neighbors(), batch_size, cost_of_one_example, PLearn::endl(), F, Fs, L, PLearn::lapackSVD(), PLearn::VMat::length(), PLearn::meanOf(), min_sig, mus, PLearn::mypow(), ncomponents, neighbor_row, nneighbors, nsamples, PLearn::PLearner::nstages, optimizer, parameters, PLERROR, predictor, reference_set, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), S_svd, sigma_min, sms, sns, PLearn::PLearner::stage, store_prediction, PLearn::TVec< T >::subVec(), t_row, targets_vmat, PLearn::tostring(), totalcost, PLearn::TMat< T >::toVec(), PLearn::PLearner::train_set, train_set_with_targets, PLearn::PLearner::train_stats, Ut_svd, V_svd, PLearn::PLearner::verbosity, and PLearn::VMat::width().

{

// Check whether gradient descent is going to be done

// If not, then we don't need to store the parameters,

// except for sn...

bool flag = (nstages == stage);

// Update sigma_min, in case it was changed,

// e.g. using a HyperLearner

min_sig->value[0] = sigma_min;

// Set train_stats if not already done.

if (!train_stats)

train_stats = new VecStatsCollector();

if (!cost_of_one_example)

PLERROR("NonLocalManifoldParzen::train: build has not been run after setTrainingSet!");

if(stage == 0)

{

targets_vmat = append_neighbors(

train_set, nneighbors, true);

nsamples = batch_size>0 ? batch_size : train_set->length();

totalcost = meanOf(train_set_with_targets, cost_of_one_example, nsamples);

if(optimizer)

{

optimizer->setToOptimize(parameters, totalcost);

optimizer->build();

}

else PLERROR("NonLocalManifoldParzen::train can't train without setting an optimizer first!");

}

int optstage_per_lstage = train_set->length()/nsamples;

PP<ProgressBar> pb;

if(report_progress>0)

pb = new ProgressBar("Training NonLocalManifoldParzen from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

t_row.resize(train_set.width());

int initial_stage = stage;

bool early_stop=false;

while(stage<nstages && !early_stop)

{

optimizer->nstages = optstage_per_lstage;

train_stats->forget();

optimizer->early_stop = false;

optimizer->optimizeN(*train_stats);

train_stats->finalize();

if(verbosity>2)

cout << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;

++stage;

if(pb)

pb->update(stage-initial_stage);

}

if(verbosity>1)

cout << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;

if(store_prediction && !flag)

{

for(int t=0; t<L;t++)

{

reference_set->getRow(t,neighbor_row);

predictor->fprop(neighbor_row, F.toVec() & mus(t) & sns.subVec(t,1));

// N.B. this is the SVD of F'

lapackSVD(F, Ut_svd, S_svd, V_svd,'A',1.5);

for (int k=0;k<ncomponents;k++)

{

sms(t,k) = mypow(S_svd[k],2);

Fs[t](k) << Ut_svd(k);

}

sns[t] += sigma_min - min_sig->value[0];

}

}

}

Reimplemented from PLearn::UnconditionalDistribution.

Definition at line 233 of file NonLocalManifoldParzen.h.

int PLearn::NonLocalManifoldParzen::batch_size

Batch size of the gradient-based optimization.

Definition at line 182 of file NonLocalManifoldParzen.h.

Referenced by declareOptions(), and train().
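
The way `batch_size` feeds into `train()` can be illustrated with a small standalone sketch. It only mirrors the two integer expressions visible in the `train()` listing (`nsamples` and `optstage_per_lstage`); the `Schedule` struct and `makeSchedule` function are hypothetical names introduced here and are not part of PLearn.

```cpp
#include <cassert>

// Hypothetical helper (not part of PLearn): reproduces the two integer
// expressions that train() uses to derive the optimizer schedule.
struct Schedule {
    int nsamples;             // examples averaged per optimizer step
    int optstage_per_lstage;  // optimizer steps per learner stage
};

Schedule makeSchedule(int batch_size, int train_length) {
    assert(train_length > 0);  // assumption: the training set is non-empty
    Schedule s;
    // A non-positive batch_size means "use the whole training set".
    s.nsamples = batch_size > 0 ? batch_size : train_length;
    // Integer division, as in train(): any remainder is dropped.
    s.optstage_per_lstage = train_length / s.nsamples;
    return s;
}
```

Under these expressions, a `batch_size` larger than the training set length yields zero optimizer steps per stage, because the integer division truncates to 0. Whether that case is guarded elsewhere is not visible in this listing.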

Var PLearn::NonLocalManifoldParzen::components [protected] |

Tangent vectors spanning the tangent plane, given by the neural network.

Definition at line 85 of file NonLocalManifoldParzen.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Cost of one example.

Definition at line 76 of file NonLocalManifoldParzen.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::NonLocalManifoldParzen::diff [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Mat PLearn::NonLocalManifoldParzen::distances [mutable, protected] |

Definition at line 100 of file NonLocalManifoldParzen.h.

Referenced by knn(), and makeDeepCopyFromShallowCopy().

DistanceKernel PLearn::NonLocalManifoldParzen::dk [mutable, protected] |

log_density and Kernel methods' temporary variables

Definition at line 107 of file NonLocalManifoldParzen.h.

Referenced by knn().

Mat PLearn::NonLocalManifoldParzen::F [mutable, protected] |

Definition at line 100 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

TVec<Mat> PLearn::NonLocalManifoldParzen::Fs [protected] |

Predictions for F.

Definition at line 121 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::init_sig [protected] |

Initial (approximate) value of sigma^2_noise.

Definition at line 95 of file NonLocalManifoldParzen.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

int PLearn::NonLocalManifoldParzen::L [protected] |

Number of gaussians.

Definition at line 72 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and train().

Indication that the mean of the gaussians should be learned.

Definition at line 159 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), and log_density().

Vec PLearn::NonLocalManifoldParzen::log_gauss [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by log_density(), and makeDeepCopyFromShallowCopy().

real PLearn::NonLocalManifoldParzen::log_L [protected] |

Logarithm of number of gaussians.

Definition at line 74 of file NonLocalManifoldParzen.h.

Referenced by build_(), and log_density().
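
As a rough illustration of why the logarithm of the number of gaussians is worth caching, the sketch below computes the log-density of an equally weighted mixture from per-component log-densities. It assumes every component has weight 1/L and that all components contribute; the actual `log_density()` may restrict the sum to a subset of neighbors. The function name `mixtureLogDensity` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: log of an equally weighted mixture density,
// log p(x) = logsumexp_t(log N_t(x)) - log(L),
// given the per-component log-densities log N_t(x).
double mixtureLogDensity(const std::vector<double>& log_gauss) {
    assert(!log_gauss.empty());
    // Subtract the maximum before exponentiating to avoid underflow.
    double m = log_gauss[0];
    for (double v : log_gauss)
        if (v > m) m = v;
    if (std::isinf(m) && m < 0)
        return m;  // every component has zero density at x
    double sum = 0.0;
    for (double v : log_gauss)
        sum += std::exp(v - m);
    const double log_L = std::log(static_cast<double>(log_gauss.size()));
    return m + std::log(sum) - log_L;
}
```

The max-subtraction step keeps the result finite even when the individual log-densities are very negative, which is common in high-dimensional inputs.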

Var PLearn::NonLocalManifoldParzen::min_sig [protected] |

Minimum value of sigma^2_noise.

Definition at line 93 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::mu [protected] |

Mean of the gaussian.

Definition at line 87 of file NonLocalManifoldParzen.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Number of neighbors to learn the mus.

Definition at line 165 of file NonLocalManifoldParzen.h.

Referenced by build_(), and declareOptions().

Vec PLearn::NonLocalManifoldParzen::mu_temp [mutable, protected] |

log_density and Kernel methods' temporary variables

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Mat PLearn::NonLocalManifoldParzen::mus [protected] |

Predictions for mu.

Definition at line 115 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::muV [protected] |

Definition at line 80 of file NonLocalManifoldParzen.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

int PLearn::NonLocalManifoldParzen::ncomponents

Number of reduced dimensions (number of tangent vectors to compute)

Definition at line 148 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), and train().

Vec PLearn::NonLocalManifoldParzen::neighbor_row [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Number of hidden units.

Definition at line 174 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), and initializeParams().

Number of neighbors used for gradient descent.

Definition at line 150 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), and train().

Number of neighbors for the p(x) density estimation.

Definition at line 152 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), and log_density().

int PLearn::NonLocalManifoldParzen::nsamples [protected] |

Optimizer of the neural network.

Definition at line 180 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

VarArray PLearn::NonLocalManifoldParzen::parameters

Parameters of the model.

Definition at line 144 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::NonLocalManifoldParzen::paramsvalues [protected] |

Parameter values.

Definition at line 133 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

Penalty type to use on the weights.

Definition at line 178 of file NonLocalManifoldParzen.h.

Referenced by build_(), and declareOptions().

Func PLearn::NonLocalManifoldParzen::predictor [protected] |

Predictor of the parameters of the gaussian at x.

Definition at line 97 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

VMat PLearn::NonLocalManifoldParzen::reference_set

Reference set of points in the gaussian mixture.

Definition at line 146 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::NonLocalManifoldParzen::S_svd [mutable, protected] |

SVD computation variables.

Definition at line 112 of file NonLocalManifoldParzen.h.

Referenced by log_density(), makeDeepCopyFromShallowCopy(), and train().

Initial (approximate) value of sigma^2_noise.

Definition at line 161 of file NonLocalManifoldParzen.h.

Referenced by declareOptions(), and initializeParams().

Minimum value of sigma^2_noise.

Definition at line 163 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), and train().

Threshold applied on the update rule for sigma^2_noise.

Definition at line 167 of file NonLocalManifoldParzen.h.

Referenced by build_(), and declareOptions().

Vec PLearn::NonLocalManifoldParzen::sm_temp [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Mat PLearn::NonLocalManifoldParzen::sms [protected] |

Predictions for sm.

Definition at line 119 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::sn [protected] |

Sigma^2_noise of the gaussian.

Definition at line 89 of file NonLocalManifoldParzen.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Vec PLearn::NonLocalManifoldParzen::sn_temp [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Vec PLearn::NonLocalManifoldParzen::sns [protected] |

Predictions for sn.

Definition at line 117 of file NonLocalManifoldParzen.h.

Referenced by build_(), declareOptions(), log_density(), makeDeepCopyFromShallowCopy(), and train().
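
The stored predictions (`mus`, `Fs`, `sms`, `sns`) describe one gaussian per reference point. The sketch below shows how such a gaussian could be evaluated, assuming its covariance is `sn * I + sum_k sm[k] * u_k u_k^T` with orthonormal tangent directions `u_k`. This form is an assumption made for illustration and is not taken from the `log_density()` source. The `Vec` alias and the function name `lowRankGaussianLogDensity` are hypothetical and unrelated to PLearn's own `Vec` type.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // stand-in, not PLearn::Vec

// Log-density of a gaussian with mean mu and covariance
// sn * I + sum_k sm[k] * u_k u_k^T, where the rows of U are assumed
// orthonormal (as rows of an SVD factor would be).
double lowRankGaussianLogDensity(const Vec& x, const Vec& mu,
                                 const std::vector<Vec>& U,
                                 const Vec& sm, double sn) {
    const std::size_t d = x.size(), K = U.size();
    assert(mu.size() == d && sm.size() == K && K <= d && sn > 0.0);
    const double two_pi = 2.0 * std::acos(-1.0);

    Vec diff(d);
    double sq_norm = 0.0;
    for (std::size_t i = 0; i < d; ++i) {
        diff[i] = x[i] - mu[i];
        sq_norm += diff[i] * diff[i];
    }

    // The d-K directions orthogonal to the tangent plane have variance sn.
    double log_det = static_cast<double>(d - K) * std::log(sn);
    double mahal = 0.0, proj_sq = 0.0;
    for (std::size_t k = 0; k < K; ++k) {
        assert(U[k].size() == d);
        double p = 0.0;  // projection of diff on tangent direction k
        for (std::size_t i = 0; i < d; ++i) p += U[k][i] * diff[i];
        const double var = sm[k] + sn;  // variance along direction k
        log_det += std::log(var);
        mahal += p * p / var;
        proj_sq += p * p;
    }
    mahal += (sq_norm - proj_sq) / sn;  // residual outside the tangent plane

    return -0.5 * (static_cast<double>(d) * std::log(two_pi) + log_det + mahal);
}
```

Working in the tangent basis avoids forming or inverting the full d-by-d covariance: the cost per gaussian is proportional to K times d rather than d cubed.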

Var PLearn::NonLocalManifoldParzen::snV [protected] |

Definition at line 80 of file NonLocalManifoldParzen.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Indication that the predicted parameters should be stored.

Definition at line 154 of file NonLocalManifoldParzen.h.

Referenced by declareOptions(), log_density(), and train().

Var PLearn::NonLocalManifoldParzen::sum_nll [protected] |

Sum of NLL cost.

Definition at line 91 of file NonLocalManifoldParzen.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

SVD threshold on the eigenvalues.

Definition at line 169 of file NonLocalManifoldParzen.h.

Referenced by declareOptions().

Vec PLearn::NonLocalManifoldParzen::t_dist [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by knn(), and makeDeepCopyFromShallowCopy().

TVec<int> PLearn::NonLocalManifoldParzen::t_nn [mutable, protected] |

log_density and Kernel methods' temporary variables

Definition at line 105 of file NonLocalManifoldParzen.h.

Referenced by log_density(), and makeDeepCopyFromShallowCopy().

Vec PLearn::NonLocalManifoldParzen::t_row [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::tangent_targets [protected] |

Tangent vector targets.

Definition at line 82 of file NonLocalManifoldParzen.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

VMat PLearn::NonLocalManifoldParzen::targets_vmat [protected] |

Nearest neighbor differences targets.

Definition at line 126 of file NonLocalManifoldParzen.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::totalcost [protected] |

Total cost Var.

Definition at line 128 of file NonLocalManifoldParzen.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

Training set concatenated with nearest neighbor targets.

Definition at line 124 of file NonLocalManifoldParzen.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

Mat PLearn::NonLocalManifoldParzen::U_temp [mutable, protected] |

log_density and Kernel methods' temporary variables

Definition at line 100 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Mat PLearn::NonLocalManifoldParzen::Ut_svd [mutable, protected] |

SVD computation variables.

Definition at line 110 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::V [protected] |

Definition at line 80 of file NonLocalManifoldParzen.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Mat PLearn::NonLocalManifoldParzen::V_svd [mutable, protected] |

Definition at line 110 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::NonLocalManifoldParzen::W [protected] |

Parameters of the neural network.

Definition at line 80 of file NonLocalManifoldParzen.h.

Referenced by build_(), initializeParams(), and makeDeepCopyFromShallowCopy().

Weight decay for all weights.

Definition at line 176 of file NonLocalManifoldParzen.h.

Referenced by build_(), and declareOptions().

Var PLearn::NonLocalManifoldParzen::x [protected] |

Input vector.

Definition at line 78 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Vec PLearn::NonLocalManifoldParzen::x_minus_neighbor [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

Vec PLearn::NonLocalManifoldParzen::z [mutable, protected] |

Definition at line 102 of file NonLocalManifoldParzen.h.

Referenced by build_(), log_density(), and makeDeepCopyFromShallowCopy().

1.7.4
