PLearn 0.1

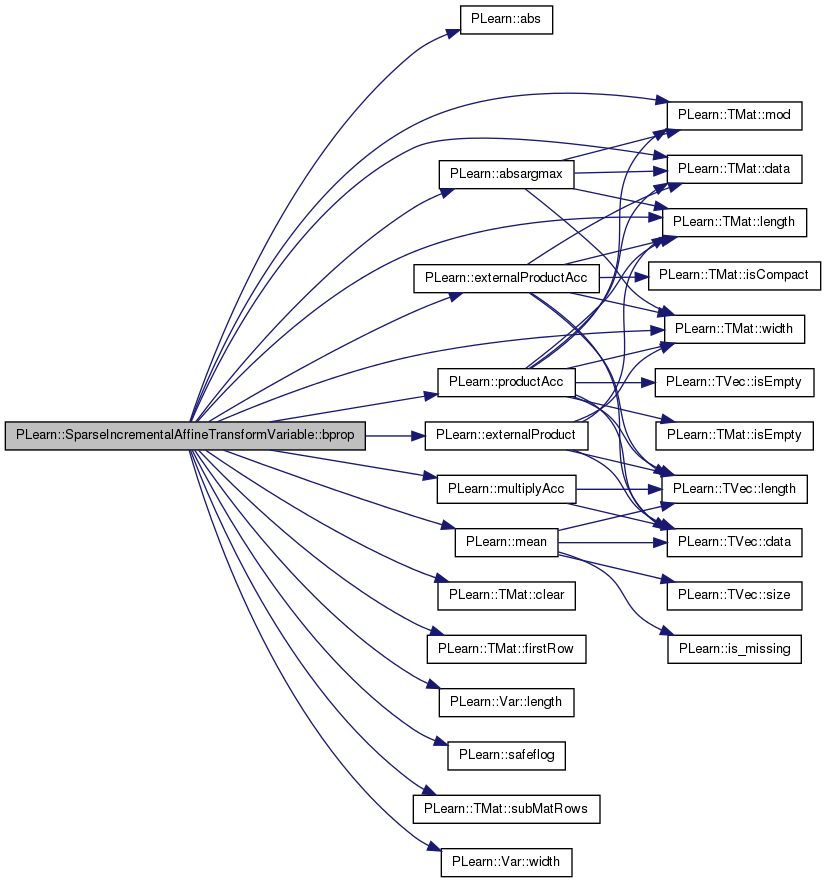

Affine transformation of a vector variable, with weights that are sparse and incrementally added. Should work for both column and row vectors: the result vector will be of the same kind (row or column). The first row of the transformation matrix contains the bias b; the following rows contain the linear transformation T. Computes b + x.T.

#include <SparseIncrementalAffineTransformVariable.h>

Public Member Functions | |

| SparseIncrementalAffineTransformVariable () | |

| Default constructor for persistence. | |

| SparseIncrementalAffineTransformVariable (Variable *vec, Variable *transformation, real the_running_average_prop, real the_start_grad_prop) | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual SparseIncrementalAffineTransformVariable * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

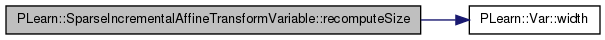

| virtual void | recomputeSize (int &l, int &w) const |

| Recomputes the length l and width w that this variable should have, according to its parent variables. | |

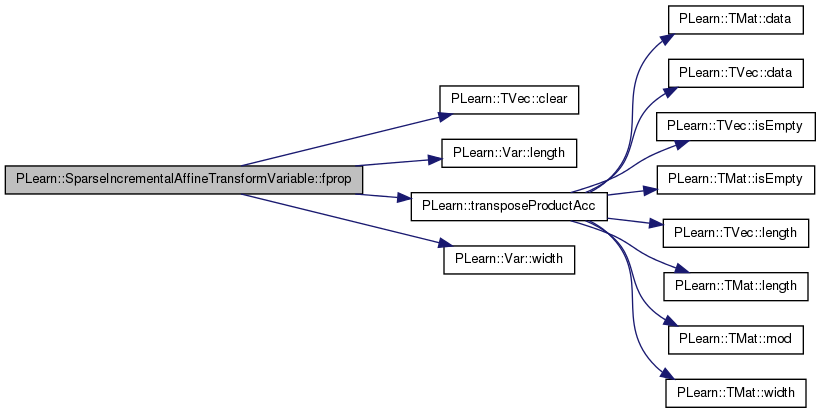

| virtual void | fprop () |

| compute output given input | |

| virtual void | bprop () |

| virtual void | symbolicBprop () |

| compute a piece of new Var graph that represents the symbolic derivative of this Var | |

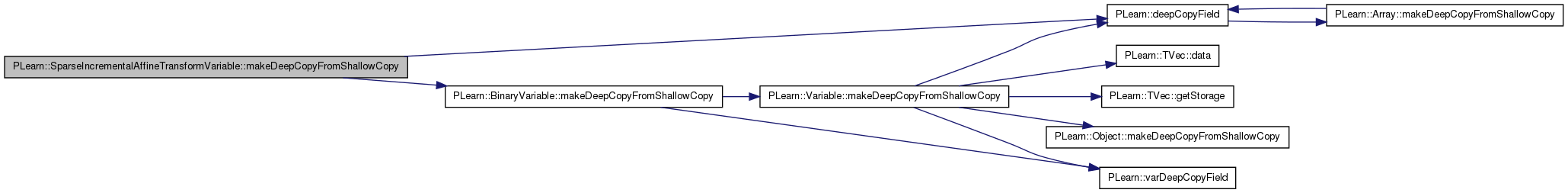

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. | |

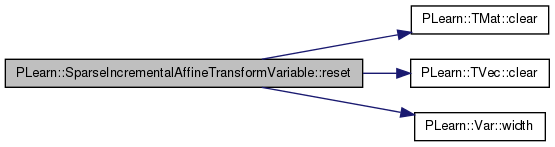

| void | reset () |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static void | declareOptions (OptionList &ol) |

| Declare options (data fields) for the class. | |

Public Attributes | |

| int | add_n_weights |

| real | start_grad_prop |

| real | running_average_prop |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | build_ () |

| This does the actual building. | |

Protected Attributes | |

| TVec< StatsCollector > | sc_input |

| TVec< StatsCollector > | sc_grad |

| TMat< StatsCollector > | sc_input_grad |

| Mat | positions |

| Mat | sums |

| Vec | input_average |

| int | n_grad_samples |

| bool | has_seen_input |

| int | n_weights |

Private Types | |

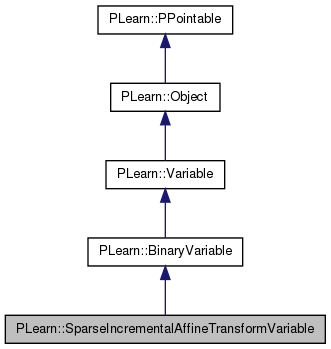

| typedef BinaryVariable | inherited |

Private Attributes | |

| Mat | temp_grad |

Affine transformation of a vector variable, with weights that are sparse and incrementally added. Should work for both column and row vectors: the result vector will be of the same kind (row or column). The first row of the transformation matrix contains the bias b; the following rows contain the linear transformation T. Computes b + x.T.

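To keep T sparse, SparseIncrementalAffineTransformVariable only considers a subset of the entries in T and only bprops to those entries, ignoring the others. The number of entries of T seen is increased incrementally, by selecting unseen entries with the highest average incoming gradient since the last addition of entries. When a new weight is added, it is set to a start_grad_prop proportion of the average incoming gradient to that weight. Note that in the bprop() listing on this page, candidate entries are scored from StatsCollector statistics (the log of the absolute input-gradient covariance minus the log of the product of the input and gradient standard deviations), and the start_grad_prop initialization of new weights is commented out.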
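The "only bprops to those entries" behaviour can be illustrated with a small standalone sketch. It does not use PLearn types: `Matrix` and `maskedAffineBprop` are hypothetical names introduced here, and the 0/1 mask is assumed to play the role of the `positions` member (row 0 for the bias, rows 1..n for T).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical dense row-major matrix type, used only for this sketch.
using Matrix = std::vector<std::vector<double>>;

// Accumulate the gradient of b + x.T into weightGrad, but only for entries
// whose mask value is 1. Row 0 holds the bias, rows 1..n hold T.
void maskedAffineBprop(const std::vector<double>& x,
                       const std::vector<double>& outGrad,
                       const Matrix& mask,
                       Matrix& weightGrad)
{
    assert(mask.size() == x.size() + 1);
    assert(weightGrad.size() == mask.size());
    for (std::size_t j = 0; j < outGrad.size(); ++j) {
        // The bias gradient passes through only for active output columns.
        weightGrad[0][j] += outGrad[j] * mask[0][j];
        for (std::size_t i = 0; i < x.size(); ++i) {
            // Outer product x_i * g_j, zeroed where the entry is not selected.
            weightGrad[i + 1][j] += x[i] * outGrad[j] * mask[i + 1][j];
        }
    }
}
```

Entries whose mask value is 0 never receive gradient, so an optimizer that only follows the gradient leaves them at their initial value.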

Definition at line 63 of file SparseIncrementalAffineTransformVariable.h.

typedef BinaryVariable PLearn::SparseIncrementalAffineTransformVariable::inherited [private] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 65 of file SparseIncrementalAffineTransformVariable.h.

| PLearn::SparseIncrementalAffineTransformVariable::SparseIncrementalAffineTransformVariable | ( | ) | [inline] |

Default constructor for persistence.

Definition at line 89 of file SparseIncrementalAffineTransformVariable.h.

: add_n_weights(0), start_grad_prop(1){}

| PLearn::SparseIncrementalAffineTransformVariable::SparseIncrementalAffineTransformVariable | ( | Variable * | vec, |

| Variable * | transformation, | ||

| real | the_running_average_prop, | ||

| real | the_start_grad_prop | ||

| ) |

Definition at line 74 of file SparseIncrementalAffineTransformVariable.cc.

References build_().

: inherited(vec, transformation, (vec->size() == 1) ? transformation->width() : (vec->isRowVec() ? 1 : transformation->width()), (vec->size() == 1) ? 1 : (vec->isRowVec() ? transformation->width() : 1)), n_grad_samples(0), has_seen_input(0), n_weights(0), add_n_weights(0), start_grad_prop(the_start_grad_prop), running_average_prop(the_running_average_prop) { build_(); }

| string PLearn::SparseIncrementalAffineTransformVariable::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| OptionList & PLearn::SparseIncrementalAffineTransformVariable::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| RemoteMethodMap & PLearn::SparseIncrementalAffineTransformVariable::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

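| bool PLearn::SparseIncrementalAffineTransformVariable::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::BinaryVariable.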

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| Object * PLearn::SparseIncrementalAffineTransformVariable::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| StaticInitializer SparseIncrementalAffineTransformVariable::_static_initializer_ & PLearn::SparseIncrementalAffineTransformVariable::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

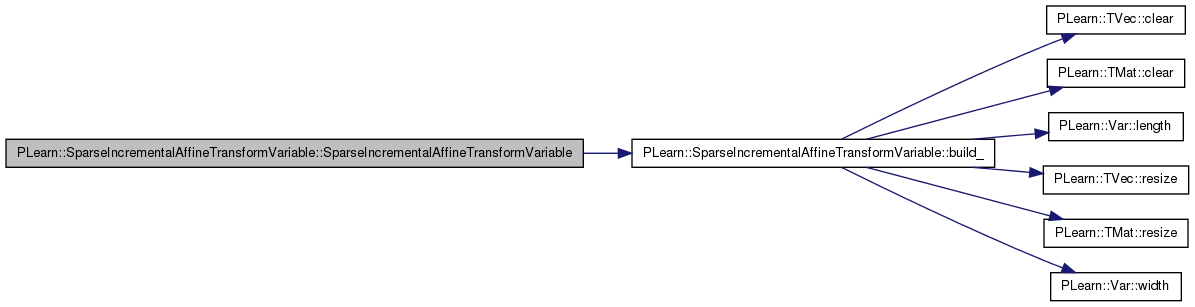

| void PLearn::SparseIncrementalAffineTransformVariable::bprop | ( | ) | [virtual] |

Implements PLearn::Variable.

Definition at line 211 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::abs(), PLearn::absargmax(), add_n_weights, PLearn::TMat< T >::clear(), PLearn::TMat< T >::data(), PLearn::externalProduct(), PLearn::externalProductAcc(), PLearn::TMat< T >::firstRow(), PLearn::Variable::gradient, i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, j, PLearn::TMat< T >::length(), PLearn::Var::length(), PLearn::mean(), PLearn::TMat< T >::mod(), PLearn::multiplyAcc(), n_grad_samples, n_weights, positions, PLearn::productAcc(), PLearn::safeflog(), sc_grad, sc_input, sc_input_grad, PLearn::TMat< T >::subMatRows(), sums, temp_grad, PLearn::TMat< T >::width(), and PLearn::Var::width().

    {
        if( n_weights >= (input2->matValue.length()-1)*input2->matValue.width())
        {
            Mat& afftr = input2->matValue;
            int l = afftr.length();
            // Vec bias = afftr.firstRow();
            Mat lintr = afftr.subMatRows(1,l-1);
            Mat& afftr_g = input2->matGradient;
            Vec bias_g = afftr_g.firstRow();
            Mat lintr_g = afftr_g.subMatRows(1,l-1);
            bias_g += gradient;
            if(!input1->dont_bprop_here)
                productAcc(input1->gradient, lintr, gradient);
            externalProductAcc(lintr_g, input1->value, gradient);
        }
        else
        {
            // Update Stats Collector
            for(int i=0; i< input1->size(); i++)
            {
                sc_input[i].update(input1->value[i]);
                for(int j=0; j< input2->width(); j++)
                {
                    if(i==0) sc_grad[j].update(gradient[j]);
                    sc_input_grad(i,j).update(input1->value[i]*gradient[j]);
                }
            }
            // Update sums of gradient
            //externalProductAcc(sums, (input1->value-input_average)/input_stddev, gradient);
            n_grad_samples++;
            int l = input2->matValue.length();
            // Set the sums for already added weights to 0
            /*
            for(int i=0; i<positions.length(); i++)
            {
                position_i = positions[i];
                for(int j=0; j<position_i.length(); j++)
                    sums(position_i[j],i) = 0;
            }
            */
            //sums *= positions.subMatRows(1,l-1);
            if(add_n_weights > 0)
            {
                // Watch out! This is not compatible with the previous version!
                sums.clear();
                Mat positions_lin = positions.subMatRows(1,l-1);
                real* sums_i = sums.data();
                real* positions_lin_i = positions_lin.data();
                for(int i=0; i<sums.length(); i++, sums_i+=sums.mod(),positions_lin_i+=positions_lin.mod())
                    for(int j=0; j<sums.width(); j++)
                    {
                        //sums_i[j] *= 1-positions_lin_i[j];
                        if(positions_lin_i[j] == 0)
                        {
                            sums_i[j] = safeflog(abs(sc_input_grad(i,j).mean() - sc_input[i].mean() * sc_grad[j].mean()))
                                - safeflog( sc_input[i].stddev() * sc_grad[j].stddev());
                        }
                    }
                while(add_n_weights >0 && n_weights < (input2->matValue.length()-1)*input2->matValue.width())
                {
                    add_n_weights--;
                    n_weights++;
                    int maxi, maxj;
                    absargmax(sums,maxi,maxj);
                    //input2->matValue(maxi+1,maxj) = start_grad_prop * sums(maxi,maxj)/n_grad_samples;
                    //positions[maxj].push_back(maxi);
                    if(positions(0,maxj) == 0)
                        positions(0,maxj) = 1;
                    positions(maxi+1,maxj) = 1;
                    sums(maxi,maxj) = 0;
                }
                // Initialize gradient cumulator
                n_grad_samples=0;
                sums.clear();
                for(int i=0; i< input1->size(); i++)
                {
                    sc_input[i].forget();
                    for(int j=0; j< input2->width(); j++)
                    {
                        if(i==0) sc_grad[j].forget();
                        sc_input_grad(i,j).forget();
                    }
                }
            }
            // Do actual bprop
            /*
            for(int i=0; i<positions.length(); i++)
            {
                position_i = positions[i];
                input2->matGradient(0,i) += position_i.length() != 0 ? gradient[i] : 0;
                for(int j=0; j<position_i.length(); j++)
                {
                    input2->matGradient(position_i[j]+1,i) += gradient[i] * input1->value[position_i[j]];
                    if(!input1->dont_bprop_here)
                        input1->gradient[position_i[j]] += gradient[i] * input2->matValue(position_i[j]+1,i);
                }
            }
            */
            Mat& afftr = input2->matValue;
            // Vec bias = afftr.firstRow();
            Mat lintr = afftr.subMatRows(1,l-1);
            Mat& afftr_g = input2->matGradient;
            Vec bias_g = afftr_g.firstRow();
            multiplyAcc(bias_g,gradient,positions.firstRow());
            if(!input1->dont_bprop_here)
                productAcc(input1->gradient, lintr, gradient);
            externalProduct(temp_grad, input1->value, gradient);
            temp_grad *= positions.subMatRows(1,l-1);
            afftr_g.subMatRows(1,l-1) += temp_grad;
        }
    }
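The score assigned to each unselected entry in the listing above is, up to the clamping done by safeflog, the log of the absolute correlation between an input component and an output-gradient component. The following standalone sketch computes that quantity from paired samples. `logAbsCorrelation` is a hypothetical helper, not a PLearn function, and it assumes population statistics and plain `std::log`; StatsCollector and safeflog may differ in detail (for example in how the standard deviation is normalised or how zero arguments are handled).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Log of the absolute correlation between paired samples of one input
// component x and one output-gradient component g:
//   log|E[xg] - E[x]E[g]| - log(std(x) * std(g))
// Assumption: population (divide-by-n) statistics and std::log are used;
// the listing relies on StatsCollector and safeflog instead.
double logAbsCorrelation(const std::vector<double>& x,
                         const std::vector<double>& g)
{
    assert(x.size() == g.size() && !x.empty());
    const double n = static_cast<double>(x.size());
    double sx = 0.0, sg = 0.0, sxg = 0.0, sxx = 0.0, sgg = 0.0;
    for (std::size_t k = 0; k < x.size(); ++k) {
        sx  += x[k];
        sg  += g[k];
        sxg += x[k] * g[k];
        sxx += x[k] * x[k];
        sgg += g[k] * g[k];
    }
    const double mx = sx / n;
    const double mg = sg / n;
    const double cov  = sxg / n - mx * mg;            // E[xg] - E[x]E[g]
    const double stdx = std::sqrt(sxx / n - mx * mx); // population std of x
    const double stdg = std::sqrt(sgg / n - mg * mg); // population std of g
    return std::log(std::fabs(cov)) - std::log(stdx * stdg);
}
```

Under these assumptions the score is at most 0 and reaches 0 only for perfectly (anti-)correlated samples. A zero covariance or zero standard deviation makes `std::log` return negative infinity or NaN here, which is presumably the case safeflog guards against in the listing.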

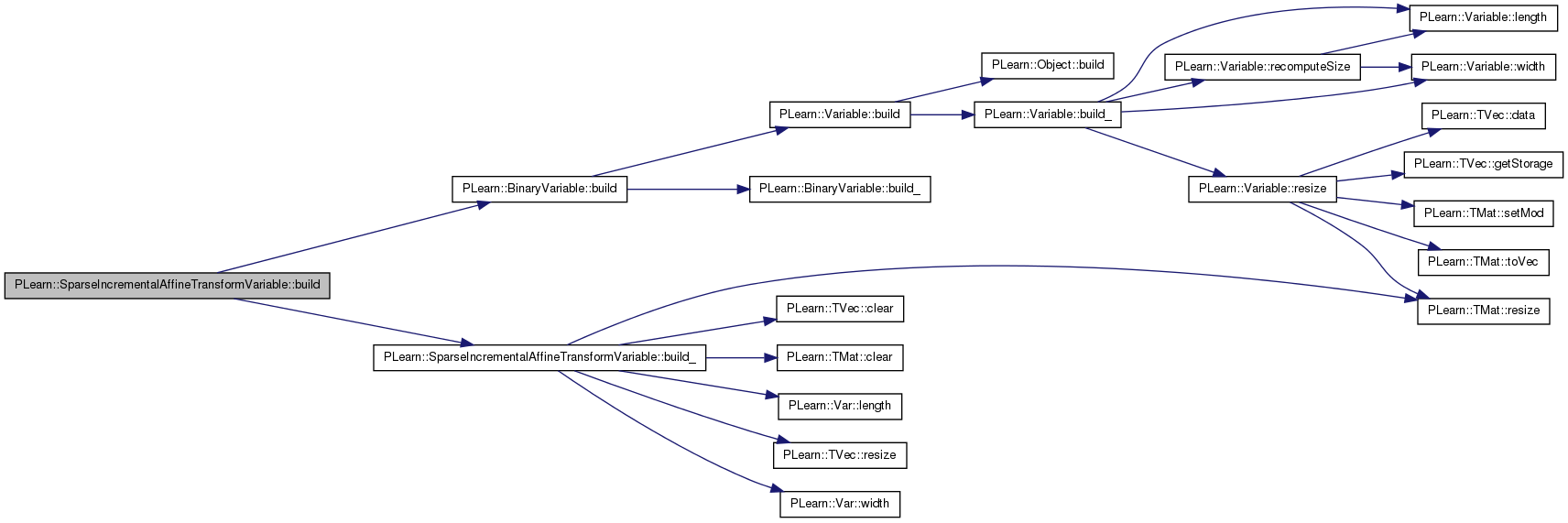

| void PLearn::SparseIncrementalAffineTransformVariable::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::BinaryVariable.

Definition at line 114 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::BinaryVariable::build(), and build_().

    {
        inherited::build();
        build_();
    }

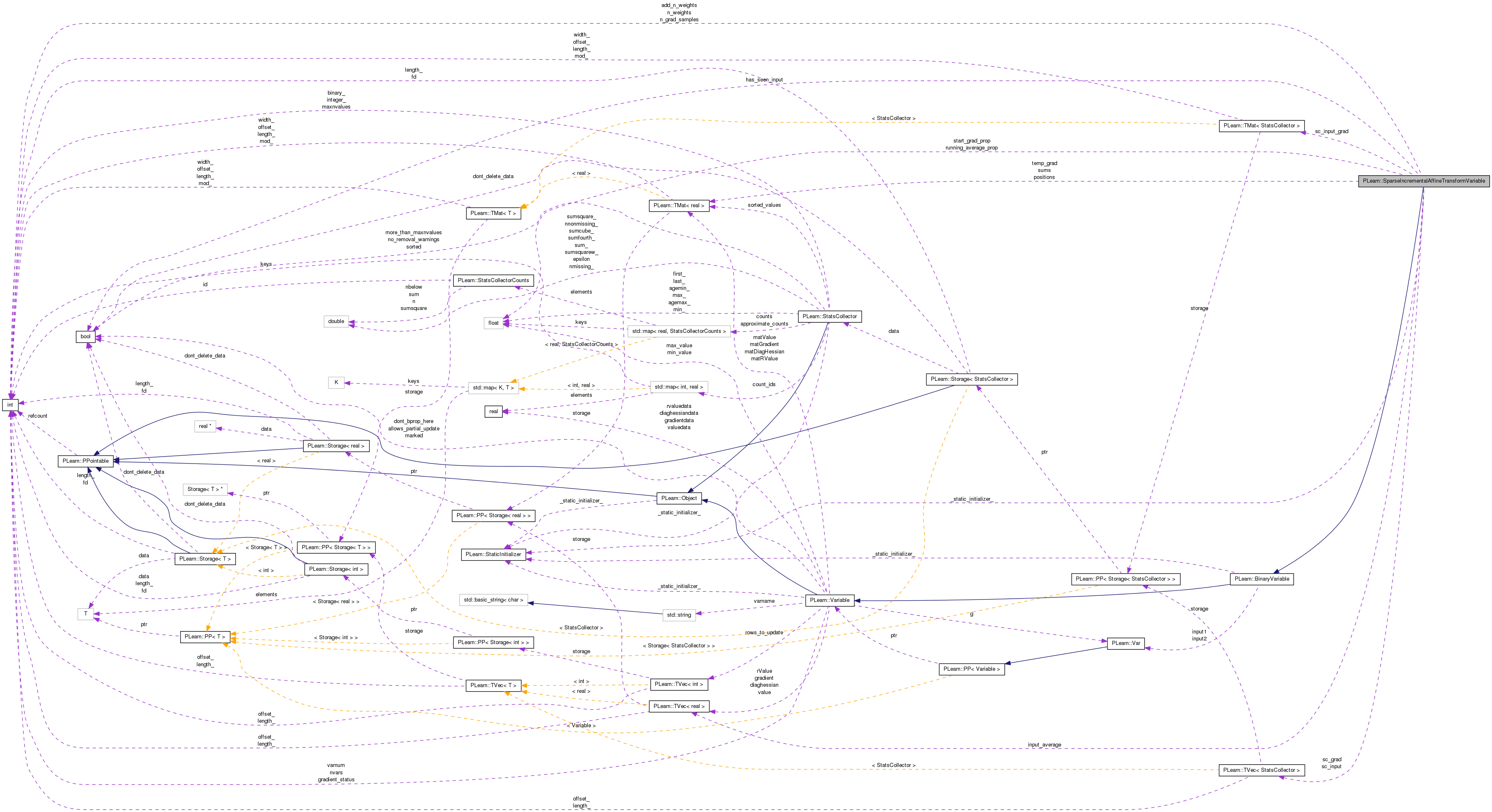

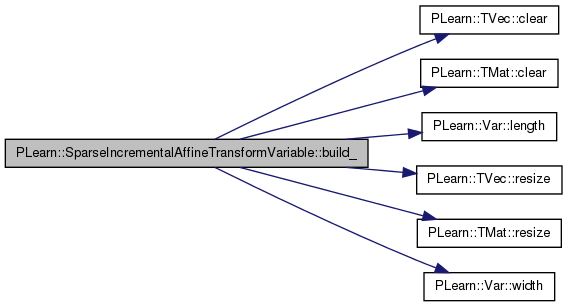

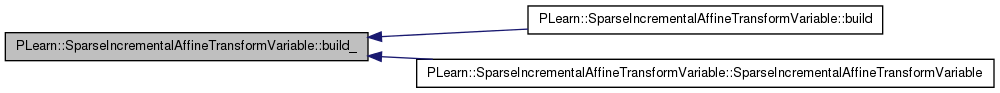

| void PLearn::SparseIncrementalAffineTransformVariable::build_ | ( | ) | [protected] |

This does the actual building.

Reimplemented from PLearn::BinaryVariable.

Definition at line 121 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::TVec< T >::clear(), PLearn::TMat< T >::clear(), has_seen_input, i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, input_average, j, PLearn::Var::length(), n_grad_samples, PLERROR, positions, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), sc_grad, sc_input, sc_input_grad, sums, temp_grad, and PLearn::Var::width().

Referenced by build(), and SparseIncrementalAffineTransformVariable().

    {
        // input1 is vec from constructor
        if (input1 && !input1->isVec())
            PLERROR("In SparseIncrementalAffineTransformVariable: expecting a vector Var (row or column) as first argument");
        if(input1->size() != input2->length()-1)
            PLERROR("In SparseIncrementalAffineTransformVariable: transformation matrix (%d+1) and input vector (%d) have incompatible lengths",input2->length()-1,input1->size());
        if(n_grad_samples == 0)
        {
            sums.resize(input2->length()-1,input2->width());
            sums.clear();
        }
        if(!has_seen_input)
        {
            input_average.resize(input2->length()-1);
            input_average.clear();
            positions.resize(input2->length(),input2->width());
            positions.clear();
            sc_input.resize(input1->size());
            sc_grad.resize(input2->width());
            sc_input_grad.resize(input2->length()-1,input2->width());
            // This may not be necessary ...
            for(int i=0; i< input1->size(); i++)
            {
                sc_input[i].forget();
                for(int j=0; j< input2->width(); j++)
                {
                    if(i==0) sc_grad[j].forget();
                    sc_input_grad(i,j).forget();
                }
            }
        }
        temp_grad.resize(input2->length()-1,input2->width());
        temp_grad.clear();
    }

| string PLearn::SparseIncrementalAffineTransformVariable::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

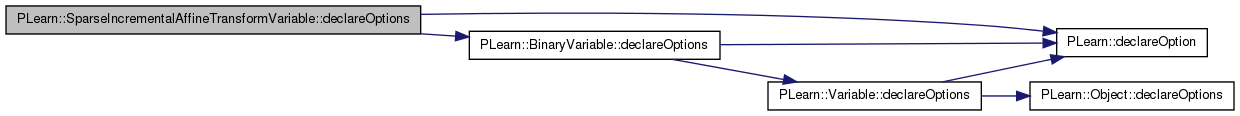

| void PLearn::SparseIncrementalAffineTransformVariable::declareOptions | ( | OptionList & | ol | ) | [static] |

Declare options (data fields) for the class.

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options). Please call the inherited method AT THE END to get the options listed in a consistent order (from most recently defined to least recently defined).

    static void MyDerivedClass::declareOptions(OptionList& ol)
    {
        declareOption(ol, "inputsize", &MyObject::inputsize_, OptionBase::buildoption,
                      "The size of the input; it must be provided");
        declareOption(ol, "weights", &MyObject::weights, OptionBase::learntoption,
                      "The learned model weights");
        inherited::declareOptions(ol);
    }

| ol | List of options that is progressively being constructed for the current class. |

Reimplemented from PLearn::BinaryVariable.

Definition at line 84 of file SparseIncrementalAffineTransformVariable.cc.

References add_n_weights, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::BinaryVariable::declareOptions(), has_seen_input, input_average, PLearn::OptionBase::learntoption, n_grad_samples, n_weights, positions, start_grad_prop, and sums.

    {
        declareOption(ol, "start_grad_prop", &SparseIncrementalAffineTransformVariable::start_grad_prop, OptionBase::buildoption,
                      "Proportion of the average incoming gradient used to initialize the added weights\n");
        declareOption(ol, "add_n_weights", &SparseIncrementalAffineTransformVariable::add_n_weights, OptionBase::buildoption,
                      "Number of weights to add after next bprop\n");
        declareOption(ol, "positions", &SparseIncrementalAffineTransformVariable::positions, OptionBase::learntoption,
                      "Positions of non-zero weights\n");
        declareOption(ol, "sums", &SparseIncrementalAffineTransformVariable::sums, OptionBase::learntoption,
                      "Sums of the incoming gradient\n");
        declareOption(ol, "input_average", &SparseIncrementalAffineTransformVariable::input_average, OptionBase::learntoption,
                      "Average of the input\n");
        declareOption(ol, "n_grad_samples", &SparseIncrementalAffineTransformVariable::n_grad_samples, OptionBase::learntoption,
                      "Number of incoming gradient summed\n");
        declareOption(ol, "has_seen_input", &SparseIncrementalAffineTransformVariable::has_seen_input, OptionBase::learntoption,
                      "Indication that this variable has seen at least one input sample\n");
        declareOption(ol, "n_weights", &SparseIncrementalAffineTransformVariable::n_weights, OptionBase::learntoption,
                      "Number of weights in the affine transform\n");
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::SparseIncrementalAffineTransformVariable::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 92 of file SparseIncrementalAffineTransformVariable.h.


| SparseIncrementalAffineTransformVariable * PLearn::SparseIncrementalAffineTransformVariable::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| void PLearn::SparseIncrementalAffineTransformVariable::fprop | ( | ) | [virtual] |

compute output given input

Implements PLearn::Variable.

Definition at line 171 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::TVec< T >::clear(), PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Var::length(), n_weights, PLearn::transposeProductAcc(), PLearn::Variable::value, and PLearn::Var::width().

    {
        if( n_weights >= (input2->matValue.length()-1)*input2->matValue.width())
        {
            value << input2->matValue.firstRow();
            Mat lintransform = input2->matValue.subMatRows(1,input2->length()-1);
            transposeProductAcc(value, lintransform, input1->value);
        }
        else
        {
            value.clear();
            /*
            if(has_seen_input)
                exponentialMovingAverageUpdate(input_average,input1->value,running_average_prop);
            else
            {
                input_average << input1->value;
                has_seen_input = true;
            }
            */
            value << input2->matValue.firstRow();
            Mat lintransform = input2->matValue.subMatRows(1,input2->length()-1);
            transposeProductAcc(value, lintransform, input1->value);
            /*
            for(int i=0; i<positions.length(); i++)
            {
                position_i = positions[i];
                value[i] = position_i.length() != 0 ? input2->matValue(0,i) : 0;
                for(int j=0; j<position_i.length(); j++)
                {
                    value[i] += input2->matValue(position_i[j]+1,i) * input1->value[position_i[j]];
                }
            }
            */
        }
    }
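Both branches of the listing above end up computing the same thing: the output is initialised with the bias row and the product of the input with the remaining rows is accumulated on top. A minimal standalone sketch of that computation, b + x.T, follows. `Matrix` and `affineForward` are hypothetical names for illustration and do not correspond to PLearn's Mat or transposeProductAcc signatures.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical dense row-major matrix type, used only for this sketch.
using Matrix = std::vector<std::vector<double>>;

// Compute b + x.T where row 0 of `affine` is the bias b and rows 1..n are T.
std::vector<double> affineForward(const std::vector<double>& x,
                                  const Matrix& affine)
{
    assert(affine.size() == x.size() + 1);
    std::vector<double> out = affine[0];           // start from the bias row
    for (std::size_t i = 0; i < x.size(); ++i) {
        assert(affine[i + 1].size() == out.size());
        for (std::size_t j = 0; j < out.size(); ++j) {
            out[j] += x[i] * affine[i + 1][j];     // accumulate x_i * T(i, j)
        }
    }
    return out;
}
```

For example, with x = (1, 2), bias (0.5, -1) and T rows (1, 0) and (0, 2), the result is (1.5, 3).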

| OptionList & PLearn::SparseIncrementalAffineTransformVariable::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| OptionMap & PLearn::SparseIncrementalAffineTransformVariable::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| RemoteMethodMap & PLearn::SparseIncrementalAffineTransformVariable::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file SparseIncrementalAffineTransformVariable.cc.

| void PLearn::SparseIncrementalAffineTransformVariable::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

This needs to be overridden by every class that adds "complex" data members to the class, such as Vec, Mat, PP<Something>, etc. Typical implementation:

    void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)
    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(complex_data_member1, copies);
        deepCopyField(complex_data_member2, copies);
        ...
    }

| copies | A map used by the deep-copy mechanism to keep track of already-copied objects. |

Reimplemented from PLearn::BinaryVariable.

Definition at line 343 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::deepCopyField(), input_average, PLearn::BinaryVariable::makeDeepCopyFromShallowCopy(), positions, sc_grad, sc_input, sc_input_grad, sums, and temp_grad.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(positions, copies);
        deepCopyField(sums, copies);
        deepCopyField(input_average, copies);
        //deepCopyField(position_i, copies);
        deepCopyField(temp_grad,copies);
        deepCopyField(sc_input,copies);
        deepCopyField(sc_grad,copies);
        deepCopyField(sc_input_grad,copies);
    }

| void PLearn::SparseIncrementalAffineTransformVariable::recomputeSize | ( | int & | l, |

| int & | w | ||

| ) | const [virtual] |

Recomputes the length l and width w that this variable should have, according to its parent variables.

This is used, for example, by sizeprop(). The default version simply returns the current dimensions, so make sure to override it in subclasses if this is not appropriate.

Reimplemented from PLearn::Variable.

Definition at line 161 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, and PLearn::Var::width().

    {
        if (input1 && input2) {
            l = input1->isRowVec() ? 1 : input2->width();
            w = input1->isColumnVec() ? 1 : input2->width();
        } else
            l = w = 0;
    }
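The sizing rule in the listing above keeps the output in the same orientation as the input vector. A standalone sketch of the rule is given below. `Shape` and `affineOutputShape` are hypothetical names, and the two boolean flags stand in for the isRowVec() and isColumnVec() queries on the input.

```cpp
#include <cassert>

// Hypothetical (length, width) pair, used only for this sketch.
struct Shape {
    int length;
    int width;
};

// A row-vector input yields a 1 x w output and a column-vector input
// yields a w x 1 output, where w is the width of the transformation matrix.
Shape affineOutputShape(bool inputIsRowVec, bool inputIsColumnVec,
                        int transformWidth)
{
    assert(transformWidth >= 0);
    Shape s;
    s.length = inputIsRowVec ? 1 : transformWidth;
    s.width  = inputIsColumnVec ? 1 : transformWidth;
    return s;
}
```

With a transformation of width 5, a row-vector input gives a 1 x 5 result and a column-vector input gives a 5 x 1 result.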

| void PLearn::SparseIncrementalAffineTransformVariable::reset | ( | ) |

Definition at line 356 of file SparseIncrementalAffineTransformVariable.cc.

References PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), has_seen_input, i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, input_average, j, n_grad_samples, n_weights, positions, sc_grad, sc_input, sc_input_grad, sums, and PLearn::Var::width().

    {
        /*
        for(int i=0; i<positions.length(); i++)
        {
            positions[i].clear();
            positions[i].resize(0);
        }
        */
        positions.clear();
        sums.clear();
        n_grad_samples = 0;
        input_average.clear();
        has_seen_input = false;
        n_weights = 0;
        for(int i=0; i< input1->size(); i++)
        {
            sc_input[i].forget();
            for(int j=0; j< input2->width(); j++)
            {
                if(i==0) sc_grad[j].forget();
                sc_input_grad(i,j).forget();
            }
        }
    }

| void PLearn::SparseIncrementalAffineTransformVariable::symbolicBprop | ( | ) | [virtual] |

compute a piece of new Var graph that represents the symbolic derivative of this Var

Reimplemented from PLearn::Variable.

Definition at line 338 of file SparseIncrementalAffineTransformVariable.cc.

References PLERROR.

    {
        PLERROR("SparseIncrementalAffineTransformVariable::symbolicBprop() not implemented");
    }

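StaticInitializer PLearn::SparseIncrementalAffineTransformVariable::_static_initializer_ [static] |

Reimplemented from PLearn::BinaryVariable.

Definition at line 92 of file SparseIncrementalAffineTransformVariable.h.

int PLearn::SparseIncrementalAffineTransformVariable::add_n_weights |

Definition at line 84 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), and declareOptions().

bool PLearn::SparseIncrementalAffineTransformVariable::has_seen_input [protected] |

Definition at line 79 of file SparseIncrementalAffineTransformVariable.h.

Referenced by build_(), declareOptions(), and reset().

Vec PLearn::SparseIncrementalAffineTransformVariable::input_average [protected] |

Definition at line 77 of file SparseIncrementalAffineTransformVariable.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and reset().

int PLearn::SparseIncrementalAffineTransformVariable::n_grad_samples [protected] |

Definition at line 78 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), declareOptions(), and reset().

int PLearn::SparseIncrementalAffineTransformVariable::n_weights [protected] |

Definition at line 80 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), declareOptions(), fprop(), and reset().

Mat PLearn::SparseIncrementalAffineTransformVariable::positions [protected] |

Definition at line 75 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and reset().

real PLearn::SparseIncrementalAffineTransformVariable::running_average_prop |

Definition at line 86 of file SparseIncrementalAffineTransformVariable.h.

TVec< StatsCollector > PLearn::SparseIncrementalAffineTransformVariable::sc_grad [protected] |

Definition at line 73 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), makeDeepCopyFromShallowCopy(), and reset().

TVec< StatsCollector > PLearn::SparseIncrementalAffineTransformVariable::sc_input [protected] |

Definition at line 72 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), makeDeepCopyFromShallowCopy(), and reset().

TMat< StatsCollector > PLearn::SparseIncrementalAffineTransformVariable::sc_input_grad [protected] |

Definition at line 74 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), makeDeepCopyFromShallowCopy(), and reset().

real PLearn::SparseIncrementalAffineTransformVariable::start_grad_prop |

Definition at line 85 of file SparseIncrementalAffineTransformVariable.h.

Referenced by declareOptions().

Mat PLearn::SparseIncrementalAffineTransformVariable::sums [protected] |

Definition at line 76 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and reset().

Mat PLearn::SparseIncrementalAffineTransformVariable::temp_grad [private] |

Definition at line 68 of file SparseIncrementalAffineTransformVariable.h.

Referenced by bprop(), build_(), and makeDeepCopyFromShallowCopy().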

1.7.4
