
PLearn 0.1


#include <DistRepNNet.h>

Public Member Functions | |

| DistRepNNet () | |

| virtual | ~DistRepNNet () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual DistRepNNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | forget () |

| *** SUBCLASS WRITING: *** | |

| virtual int | outputsize () const |

| SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

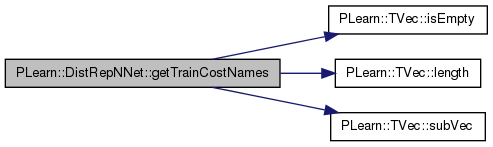

| virtual TVec< string > | getTrainCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual TVec< string > | getTestCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual void | train () |

| *** SUBCLASS WRITING: *** | |

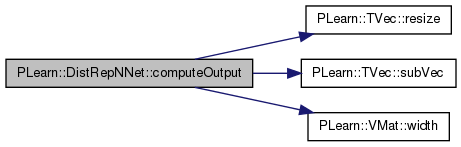

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| *** SUBCLASS WRITING: *** | |

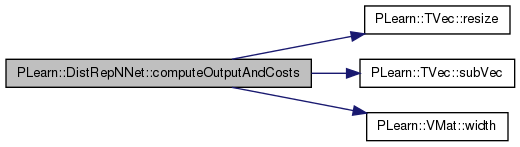

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| *** SUBCLASS WRITING: *** | |

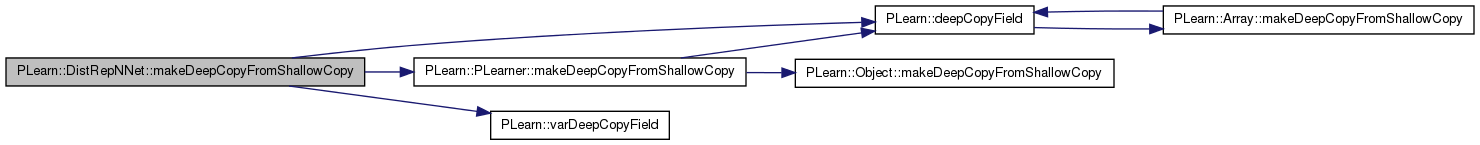

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

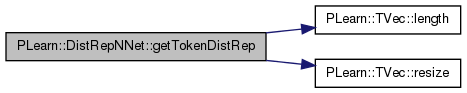

| void | getTokenDistRep (TVec< string > &token_features, Vec &dist_rep) |

| Gives distributed representation for token features. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Vec | paramsvalues |

| Parameter values (linked to params in build()). They are stored here so that they can be set directly instead of being obtained by training. | |

| Var | input |

| Input vector. | |

| Var | dp_input |

| Distributed representation input vector. | |

| Var | target |

| Target vector. | |

| Var | sampleweight |

| Sample weight. | |

| Var | w1 |

| Bias and weights of first hidden layer. | |

| VarArray | winputsparse |

| Bias and weights from input layer. | |

| Var | w1theta |

| Weights of first hidden layer. | |

| VarArray | winputdistrep |

| Weights applied to the input of distributed representation predictor. | |

| Var | woutdistrep |

| Bias and weights going to the output layer of distributed representation predictor. | |

| Var | w2 |

| Bias and weights of second hidden layer. | |

| Var | wout |

| Bias and weights of output layer. | |

| Var | direct_wout |

| Direct input to output weights. | |

| Var | wouttheta |

| Bias and weights of output layer, target part, when no hidden layers. | |

| Var | outbias |

| Bias used only if fixed_output_weights. | |

| Var | dp_all_targets |

| Distributed representation of all targets. | |

| Var | token_features |

| Features of the token. | |

| Var | dist_rep |

| Distributed representation. | |

| TVec< PP< Dictionary > > | dictionaries |

| Distributed representations. | |

| Func | f |

| Seen classes in training set. | |

| Func | test_costf |

| Function from input and target to output and test_costs. | |

| Func | token_to_dist_rep |

| Function from token features to distributed representation. | |

| Func | paramf |

| Function for training. | |

| TVec< VMat > | extra_tasks |

| Extra tasks. | |

| int | nhidden |

| Number of hidden units in first hidden layer (default:0) | |

| int | nhidden2 |

| Number of hidden units in second hidden layer (default:0) | |

| TVec< int > | nhidden_extra_tasks |

| Number of hidden units in first hidden layer for extra tasks. | |

| TVec< int > | nhidden2_extra_tasks |

| Number of hidden units in second hidden layer for extra tasks. | |

| int | nhidden_theta_predictor |

| Number of hidden units of the neural network predictor for the hidden to output weights. | |

| int | nhidden_dist_rep_predictor |

| Number of hidden units of the neural network predictor for the distributed representation. | |

| real | weight_decay |

| Weight decay (default:0) | |

| real | bias_decay |

| Bias decay (default:0) | |

| real | input_dist_rep_predictor_bias_decay |

| Bias decay for weights going from input layer. | |

| real | output_dist_rep_predictor_bias_decay |

| Bias decay for weights going from hidden layer. | |

| real | input_dist_rep_predictor_weight_decay |

| Weight decay for weights going from input layer. | |

| real | output_dist_rep_predictor_weight_decay |

| Weight decay for weights going from hidden layer. | |

| real | layer1_weight_decay |

| Weight decay for weights from input layer to first hidden layer (default:0) | |

| real | layer1_bias_decay |

| Bias decay for weights from input layer to first hidden layer (default:0) | |

| real | layer1_theta_predictor_weight_decay |

| Weight decay for weights from input layer to first hidden layer of the theta-predictor (default:0) | |

| real | layer1_theta_predictor_bias_decay |

| Bias decay for weights from input layer to first hidden layer of the theta-predictor (default:0) | |

| real | layer2_weight_decay |

| Weight decay for weights from first hidden layer to second hidden layer (default:0) | |

| real | layer2_bias_decay |

| Bias decay for weights from first hidden layer to second hidden layer (default:0) | |

| real | output_layer_weight_decay |

| Weight decay for weights from last hidden layer to output layer (default:0) | |

| real | output_layer_bias_decay |

| Bias decay for weights from last hidden layer to output layer (default:0) | |

| real | output_layer_theta_predictor_weight_decay |

| Weight decay for weights from last hidden layer to output layer of the theta-predictor (default:0) | |

| real | output_layer_theta_predictor_bias_decay |

| Bias decay for weights from last hidden layer to output layer of the theta-predictor (default:0) | |

| real | direct_in_to_out_weight_decay |

| Weight decay for weights from input directly to output layer (default:0) | |

| real | direct_in_to_out_bias_decay |

| Bias decay for weights from input directly to output layer (default:0) | |

| real | margin |

| Margin requirement, used only with the margin_perceptron_cost cost function (default:1) | |

| bool | fixed_output_weights |

| If true then the output weights are not learned. They are initialized to +1 or -1 randomly (default:false) | |

| bool | direct_in_to_out |

| If true then direct input to output weights will be added (if nhidden > 0) | |

| string | penalty_type |

| Penalty to use on the weights (for weight and bias decay) (default:"L2_square") | |

| string | output_transfer_func |

| Transfer function to use for output layer (default:"") | |

| string | hidden_transfer_func |

| Transfer function to use for hidden units (default:"tanh") | |

| bool | do_not_change_params |

| If set to 1, the weights won't be loaded nor initialized at build time (default:false) | |

| TVec< string > | cost_funcs |

| Cost functions. | |

| PP< Optimizer > | optimizer |

| Build options related to the optimization: | |

| TVec< PP< Optimizer > > | optimizer_extra_tasks |

| Optimizers for extra tasks. | |

| int | batch_size |

| Number of samples to use to estimate gradient before an update. | |

| string | initialization_method |

| Method of initialization for neural network's weights. | |

| TVec< int > | dist_rep_dim |

| Dimensionality (number of components) of distributed representations. | |

| int | ntokens |

| Number of tokens, for which to predict a distributed representation. | |

| TVec< int > | ntokens_extra_tasks |

| Number of tokens, for which to predict a distributed representation, for extra tasks. | |

| int | nfeatures_per_token |

| Number of features per token. | |

| TVec< int > | nfeatures_for_each_token |

| Number of features for each token (nfeatures_per_token is used if nfeatures_for_each_token.length()==0) | |

| PP< Dictionary > | target_dictionary |

| Target dictionary. | |

| Mat | target_dist_rep |

| Target distributed representations. | |

| bool | use_dist_reps |

| Indication that distributed representations should be used. | |

| bool | use_output_weights_bases |

| Indication that bases for output weights should be used. | |

| bool | use_extra_tasks_only_on_first_epoch |

| Indication that the possible targets vary from one input vector to another. | |

| bool | initialize_sparse_params_to_zero |

| Indication that the parameters on the sparse input should be initialized to zero. | |
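The decay options above (`weight_decay`, `bias_decay`, the per-layer variants and `penalty_type`) combine into a penalty added to `costs[0]`. The sketch below is a minimal illustration, not the library's code: it assumes the weight matrix uses the affine layout implied by `Var(1 + n_in, n_out)`, with biases in row 0, and that "L2_square" means a decay-weighted sum of squares. The function name `decayPenalty` and the "L1" branch are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical sketch: penalty on one affine weight matrix whose first row
// holds the biases. Only "L2_square" (the documented default) and an
// assumed "L1" variant are handled.
double decayPenalty(const std::vector<std::vector<double>>& w,
                    double weight_decay, double bias_decay,
                    const std::string& penalty_type = "L2_square") {
    assert(!w.empty());
    auto term = [&](double v) {
        if (penalty_type == "L2_square") return v * v;
        if (penalty_type == "L1") return std::fabs(v);
        throw std::invalid_argument("unsupported penalty_type: " + penalty_type);
    };
    double bias_sum = 0.0, weight_sum = 0.0;
    for (double v : w[0]) bias_sum += term(v);        // row 0: biases
    for (std::size_t i = 1; i < w.size(); ++i)
        for (double v : w[i]) weight_sum += term(v);  // other rows: weights
    return weight_decay * weight_sum + bias_decay * bias_sum;
}
```

Keeping separate coefficients for the bias row mirrors the separate `*_bias_decay` options, which let biases be regularized less (or not at all) than weights.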

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| Var | buildSparseAffineTransform (VarArray weights, Var input, TVec< int > input_to_dict_index, int begin) |

| Builds a sparse affine transformation from a set of weights and an input variable. | |

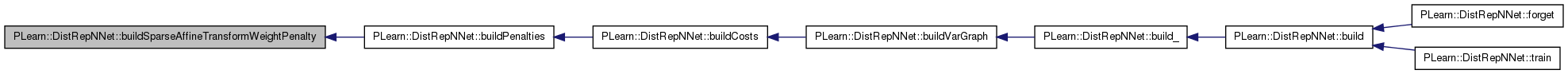

| Var | buildSparseAffineTransformWeightPenalty (VarArray weights, Var input, TVec< int > input_to_dict_index, int begin, real weight_decay, real bias_decay=0, string penalty_type="L2_square") |

| Builds a sparse affine transformation weight penalty from a set of weights and an input variable. | |

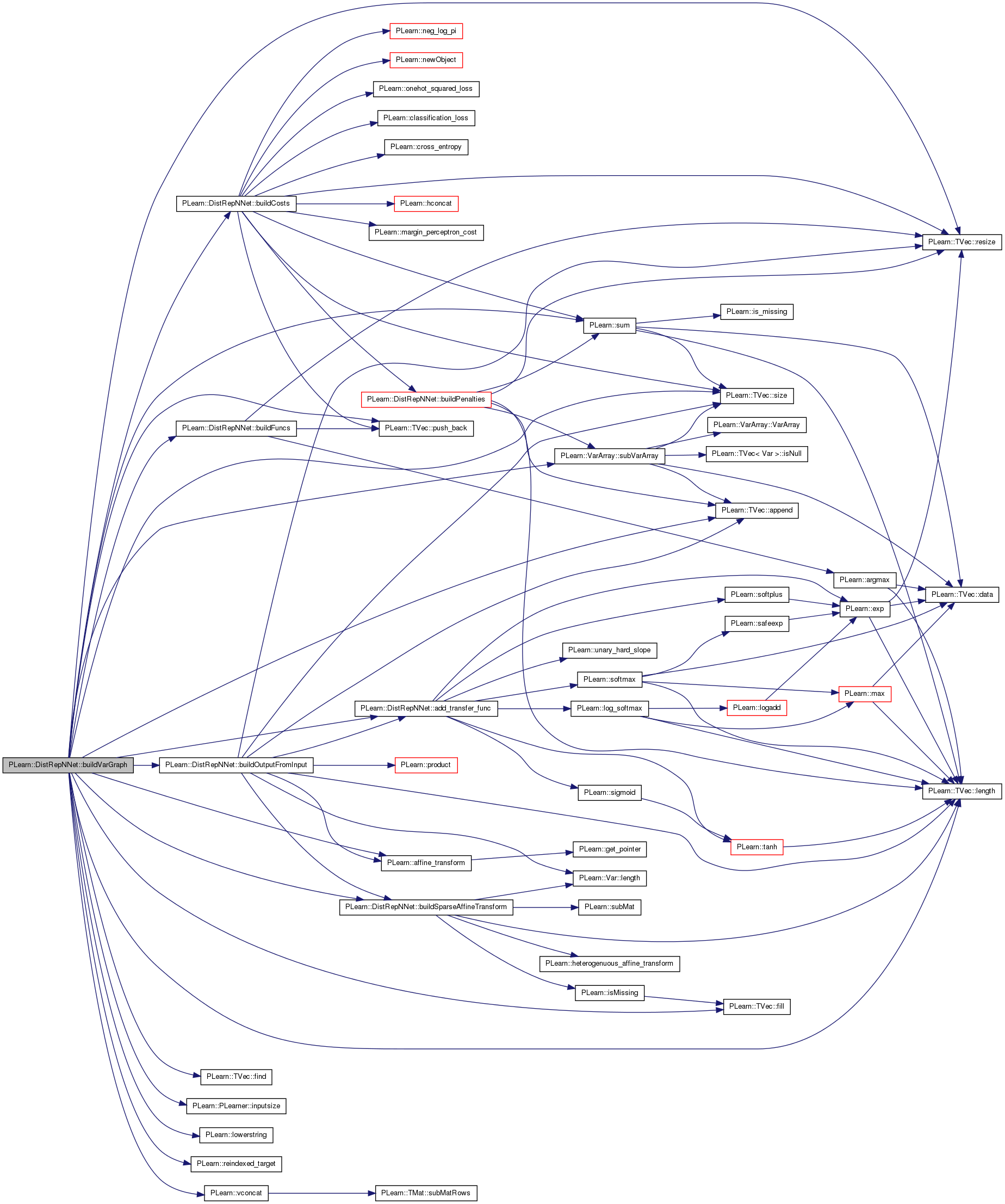

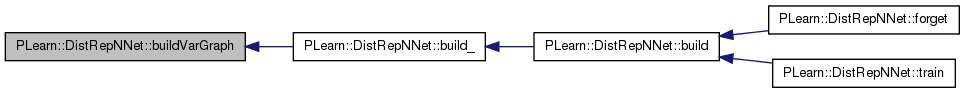

| void | buildVarGraph (int task_index) |

| Builds a var graph from a task, specified by task_index. | |

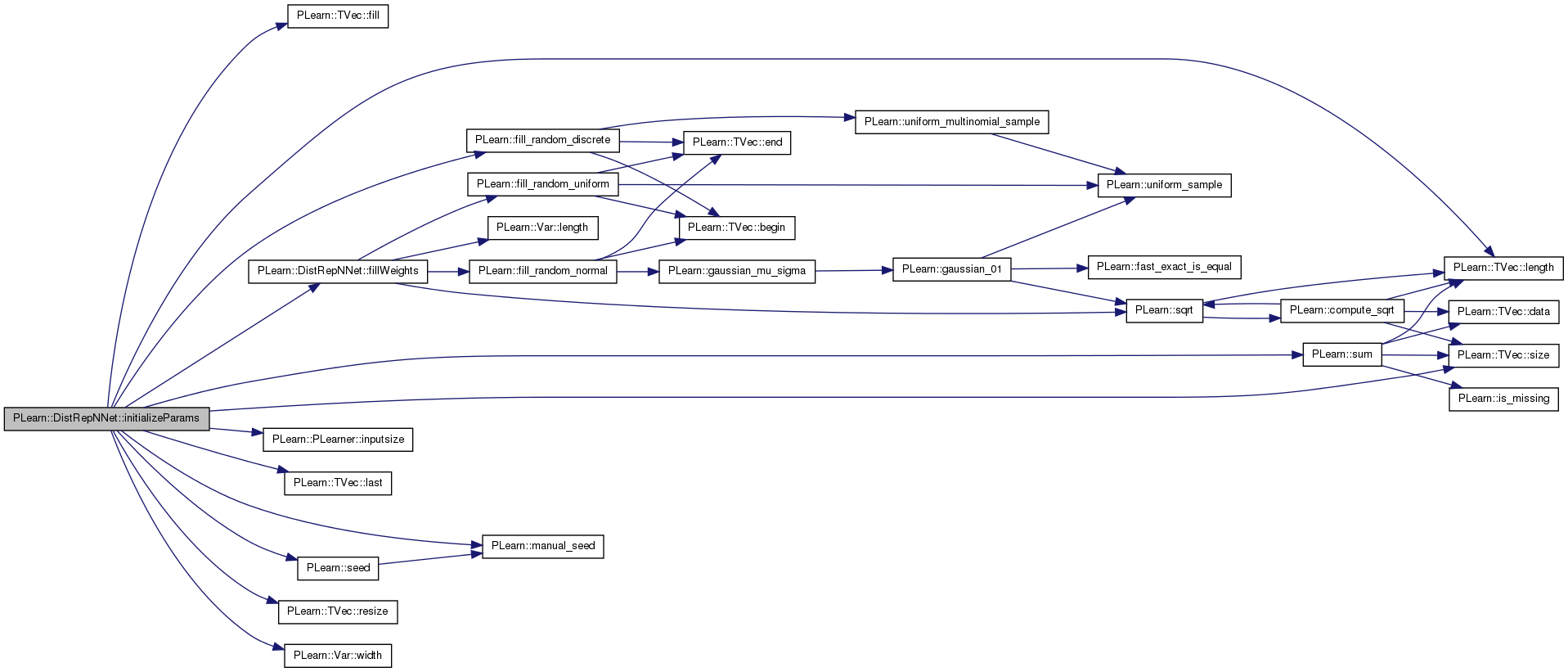

| virtual void | initializeParams (bool set_seed=true, int task_index=-1) |

| Initialize the parameters. | |

| Var | add_transfer_func (const Var &input, string transfer_func="default", VarArray mus=0, Var sigma=0) |

| Return a variable that is the result of the application of the given transfer function on the input variable. | |

| void | buildOutputFromInput (int task_index) |

| Build the output of the neural network, from the given input. | |

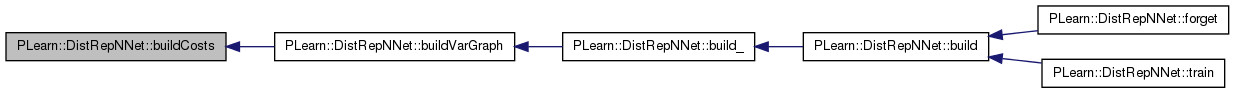

| void | buildCosts (const Var &output, const Var &target, int task_index=-1) |

| Build the costs variable from other variables. | |

| void | buildFuncs (VarArray &invars) |

| Build the various functions used in the network. | |

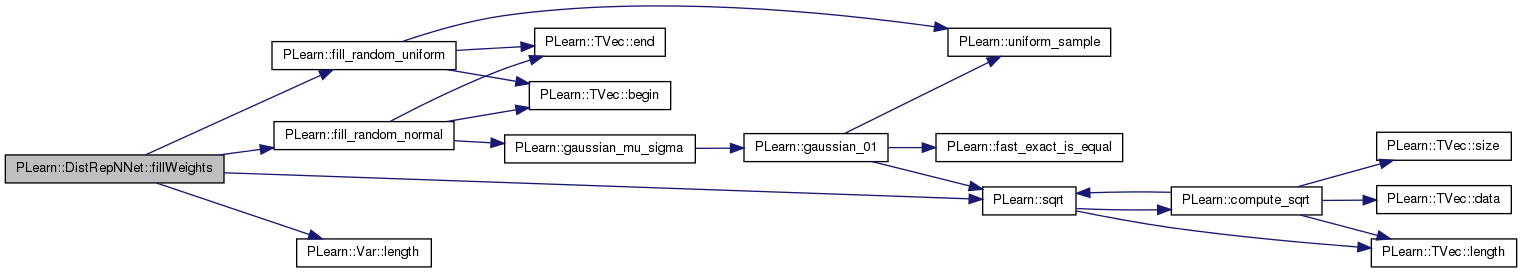

| void | fillWeights (const Var &weights, bool clear_first_row, int use_this_to_scale=-1) |

| Fill a matrix of weights according to the 'initialization_method' specified. | |

| virtual void | buildPenalties (int this_ntokens) |

| Fill the costs penalties. | |
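`fillWeights()` fills a weight matrix according to `initialization_method` (default "uniform_linear"), and its body is not shown on this page. The sketch below assumes it follows the scheme of PLearn's `NNet`: the scale is 1/fan_in for "*_linear" methods and 1/sqrt(fan_in) otherwise, and values are drawn uniformly. Only the uniform variants are modeled, and `use_this_to_scale` is ignored. The function name `fillWeightsSketch` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Hypothetical sketch, ASSUMING an NNet-style scheme: scale = 1/fan_in for
// "*_linear" and 1/sqrt(fan_in) otherwise, values uniform in [-scale, scale).
// Row 0 (bias row of an affine weight matrix) does not count toward fan_in
// and is zeroed when clear_first_row is true.
void fillWeightsSketch(std::vector<std::vector<double>>& w, const std::string& method,
                       bool clear_first_row, std::mt19937& rng) {
    assert(!w.empty());
    int fan_in = static_cast<int>(w.size()) - (clear_first_row ? 1 : 0);
    assert(fan_in > 0);
    double scale = method.find("linear") != std::string::npos
                       ? 1.0 / fan_in
                       : 1.0 / std::sqrt(static_cast<double>(fan_in));
    std::uniform_real_distribution<double> unif(-scale, scale);
    for (auto& row : w)
        for (double& v : row) v = unif(rng);
    if (clear_first_row)
        for (double& v : w[0]) v = 0.0;
}
```

Scaling by fan-in keeps the initial pre-activations of roughly constant magnitude regardless of layer width.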

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Methods to get the network's (learned) parameters. | |

Protected Attributes | |

| Var | output |

| Output of the neural network. | |

| VarArray | costs |

| Costs of the neural network. | |

| VarArray | partial_update_vars |

| Vars that use partial updates. | |

| VarArray | penalties |

| Penalties on the neural network's weights. | |

| Var | training_cost |

| Training cost of the neural network. It corresponds to costs[0] + weighted penalties. | |

| VarArray | training_cost_extra_tasks |

| Training cost of the neural network for extra tasks. | |

| Var | test_costs |

| Test costs. | |

| VarArray | invars |

| Input variables for training cost Func. | |

| TVec< VarArray > | invars_extra_tasks |

| Input variables for extra tasks training cost Func. | |

| VarArray | params |

| Parameters of the neural network. | |

| TVec< int > | input_to_dict_index |

| Weights of the activated features, in the distributed representation predictor. | |

| int | target_dict_index |

| Target Dictionary index. | |

| real | winputsparse_weight_decay |

| Temporary variables... | |

| real | winputsparse_bias_decay |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| Architecture of the neural network. | |

Private Attributes | |

| Vec | target_values |

| Vector of possible target values. | |

| Vec | output_comp |

| Vector for output computations. | |

| Vec | row |

| Row vector. | |

| Vec | tf |

| Token features vector. | |

| TVec< string > | options |

Definition at line 51 of file DistRepNNet.h.

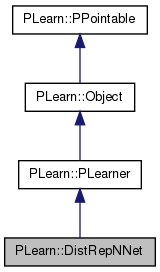

| typedef PLearner PLearn::DistRepNNet::inherited | [private] |

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DistRepNNet.h.

| PLearn::DistRepNNet::DistRepNNet | ( | ) |

Definition at line 99 of file DistRepNNet.cc.

: nhidden(0), nhidden2(0), nhidden_theta_predictor(0), nhidden_dist_rep_predictor(0),

weight_decay(0), bias_decay(0),

input_dist_rep_predictor_bias_decay(0), output_dist_rep_predictor_bias_decay(0),

input_dist_rep_predictor_weight_decay(0), output_dist_rep_predictor_weight_decay(0),

layer1_weight_decay(0), layer1_bias_decay(0),

layer1_theta_predictor_weight_decay(0), layer1_theta_predictor_bias_decay(0),

layer2_weight_decay(0), layer2_bias_decay(0),

output_layer_weight_decay(0), output_layer_bias_decay(0),

output_layer_theta_predictor_weight_decay(0), output_layer_theta_predictor_bias_decay(0),

direct_in_to_out_weight_decay(0), direct_in_to_out_bias_decay(0),

margin(1), fixed_output_weights(0), direct_in_to_out(0),

penalty_type("L2_square"), output_transfer_func(""), hidden_transfer_func("tanh"),

do_not_change_params(false), batch_size(1), initialization_method("uniform_linear"),

ntokens(-1), nfeatures_per_token(-1),

//consider_unseen_classes(0),

use_dist_reps(1), use_output_weights_bases(0),

use_extra_tasks_only_on_first_epoch(false), initialize_sparse_params_to_zero(false)

{}

| PLearn::DistRepNNet::~DistRepNNet | ( | ) | [virtual] |

Definition at line 141 of file DistRepNNet.cc.

{

}

| string PLearn::DistRepNNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file DistRepNNet.cc.

| OptionList & PLearn::DistRepNNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file DistRepNNet.cc.

| RemoteMethodMap & PLearn::DistRepNNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file DistRepNNet.cc.

| bool PLearn::DistRepNNet::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file DistRepNNet.cc.

| Object * PLearn::DistRepNNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 97 of file DistRepNNet.cc.

| void PLearn::DistRepNNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file DistRepNNet.cc.

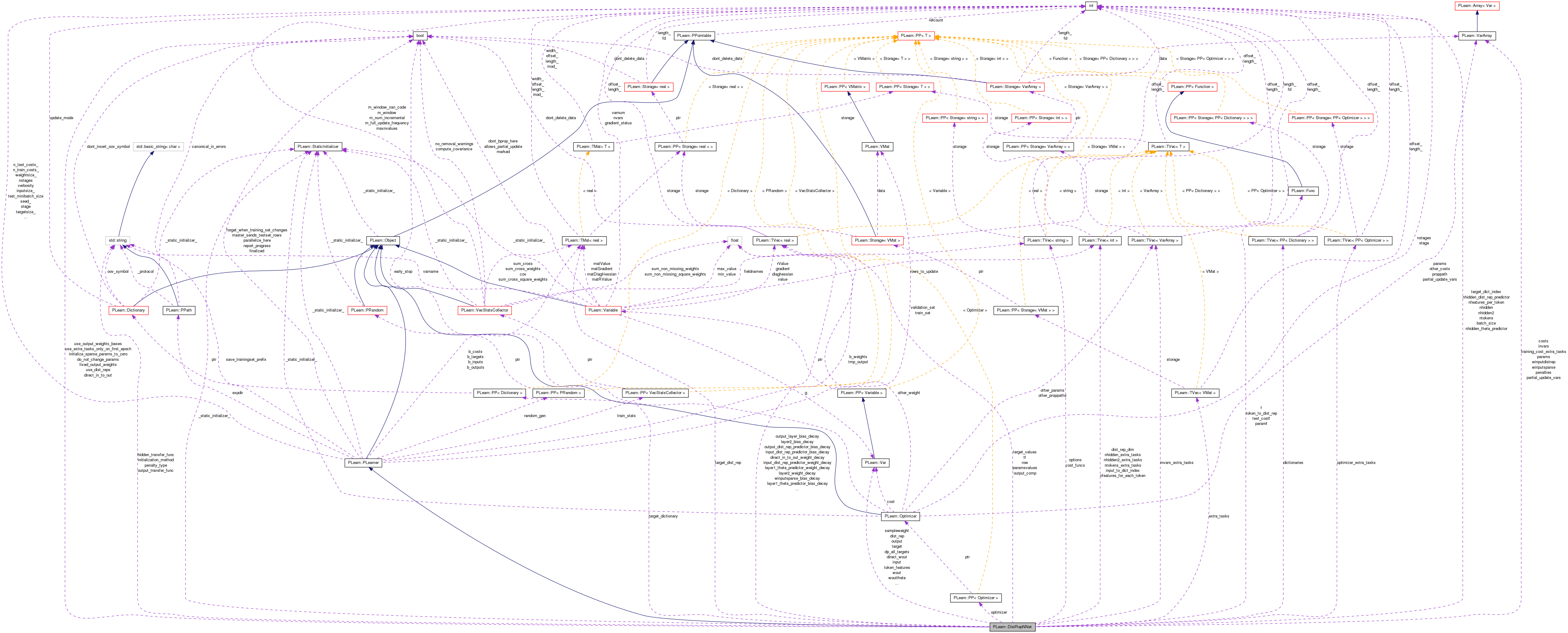

| Var PLearn::DistRepNNet::add_transfer_func | ( | const Var & input, string transfer_func = "default", VarArray mus = 0, Var sigma = 0 | ) | [protected] |

Return a variable that is the result of the application of the given transfer function on the input variable.

Definition at line 1516 of file DistRepNNet.cc.

References PLearn::exp(), hidden_transfer_func, input, PLearn::log_softmax(), PLERROR, PLearn::sigmoid(), PLearn::softmax(), PLearn::softplus(), PLearn::tanh(), and PLearn::unary_hard_slope().

Referenced by buildOutputFromInput(), and buildVarGraph().

{

Var result;

if (transfer_func == "default")

transfer_func = hidden_transfer_func;

if(transfer_func=="linear")

result = input;

else if(transfer_func=="tanh")

result = tanh(input);

else if(transfer_func=="sigmoid")

result = sigmoid(input);

else if(transfer_func=="softplus")

result = softplus(input);

else if(transfer_func=="exp")

result = exp(input);

else if(transfer_func=="softmax")

result = softmax(input);

else if (transfer_func == "log_softmax")

result = log_softmax(input);

else if(transfer_func=="hard_slope")

result = unary_hard_slope(input,0,1);

else if(transfer_func=="symm_hard_slope")

result = unary_hard_slope(input,-1,1);

else PLERROR("In DistRepNNet::add_transfer_func(): Unknown value for transfer_func: %s",transfer_func.c_str());

return result;

}
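The dispatch above can be mirrored on plain vectors to show what each name computes. This is a minimal sketch, not PLearn code: `applyTransfer` is a hypothetical name, `std::vector<double>` stands in for `Var`, and the `hard_slope` variants are left out because their exact PLearn semantics are not documented on this page.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical plain-vector analogue of add_transfer_func(): "default" is
// replaced by hidden_func (as the original substitutes hidden_transfer_func);
// softmax/log_softmax act on the whole vector, the others element-wise.
std::vector<double> applyTransfer(const std::vector<double>& x, std::string func,
                                  const std::string& hidden_func = "tanh") {
    if (func == "default") func = hidden_func;
    std::vector<double> y(x.size());
    if (func == "softmax" || func == "log_softmax") {
        assert(!x.empty());
        // Shift by the max before exponentiating, for numerical stability.
        double m = *std::max_element(x.begin(), x.end());
        double z = 0.0;
        for (double v : x) z += std::exp(v - m);
        double log_z = m + std::log(z);
        for (std::size_t i = 0; i < x.size(); ++i)
            y[i] = (func == "softmax") ? std::exp(x[i] - log_z) : x[i] - log_z;
        return y;
    }
    const bool known = func == "linear" || func == "tanh" || func == "sigmoid" ||
                       func == "softplus" || func == "exp";
    if (!known) throw std::invalid_argument("Unknown value for transfer_func: " + func);
    for (std::size_t i = 0; i < x.size(); ++i) {
        double v = x[i];
        if (func == "linear")        y[i] = v;
        else if (func == "tanh")     y[i] = std::tanh(v);
        else if (func == "sigmoid")  y[i] = 1.0 / (1.0 + std::exp(-v));
        else if (func == "softplus") // log(1 + e^v), computed without overflow
            y[i] = v > 0 ? v + std::log1p(std::exp(-v)) : std::log1p(std::exp(v));
        else                         y[i] = std::exp(v);  // "exp"
    }
    return y;
}
```

Computing `log_softmax` directly as `x - log_z` is why the "NLL" cost in `buildCosts()` can simply negate the selected output when `output_transfer_func` is "log_softmax".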

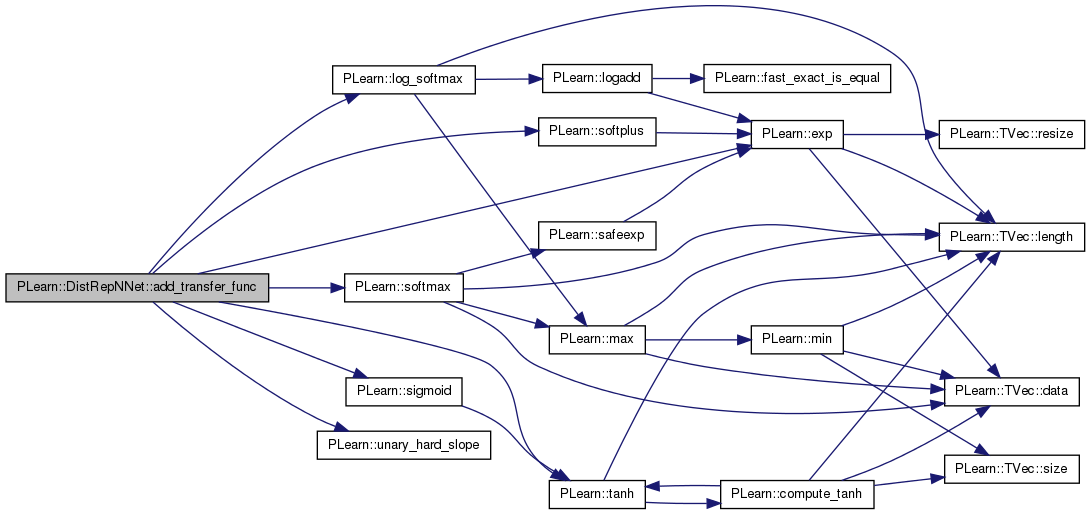

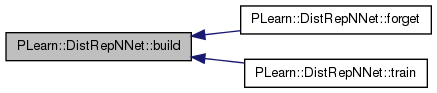

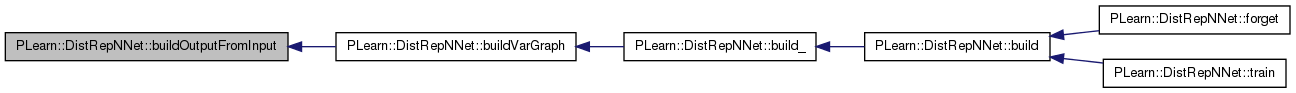

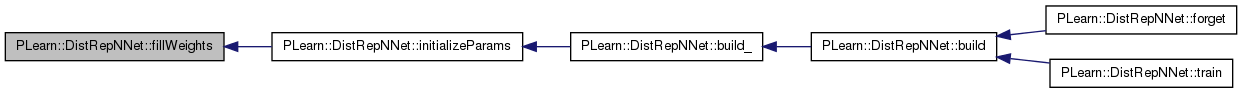

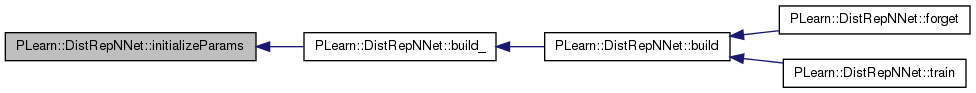

| void PLearn::DistRepNNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 365 of file DistRepNNet.cc.

References PLearn::PLearner::build(), and build_().

Referenced by forget(), and train().

{

inherited::build();

build_();

}
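`build()` follows the usual PLearn two-phase idiom: the public virtual `build()` chains to the parent's `build()` and then calls this class's private, non-virtual `build_()`. The sketch below reproduces only that call order with two hypothetical classes (`BaseLearner`, `DerivedLearner`); the `log` member exists purely to record the order.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical minimal reproduction of the build()/build_() idiom.
class BaseLearner {
public:
    virtual ~BaseLearner() = default;
    virtual void build() { build_(); }
    std::vector<std::string> log;  // records call order for illustration
private:
    // Initializes only BaseLearner's own members.
    void build_() { log.push_back("BaseLearner::build_"); }
};

class DerivedLearner : public BaseLearner {
    typedef BaseLearner inherited;
public:
    void build() override {
        inherited::build();  // parent state first
        build_();            // then this class's own state
    }
private:
    void build_() { log.push_back("DerivedLearner::build_"); }
};
```

Because each `build_()` is private and non-virtual, a class never accidentally runs a subclass's initialization while its own state is incomplete.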

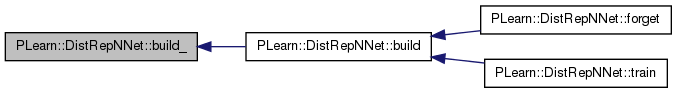

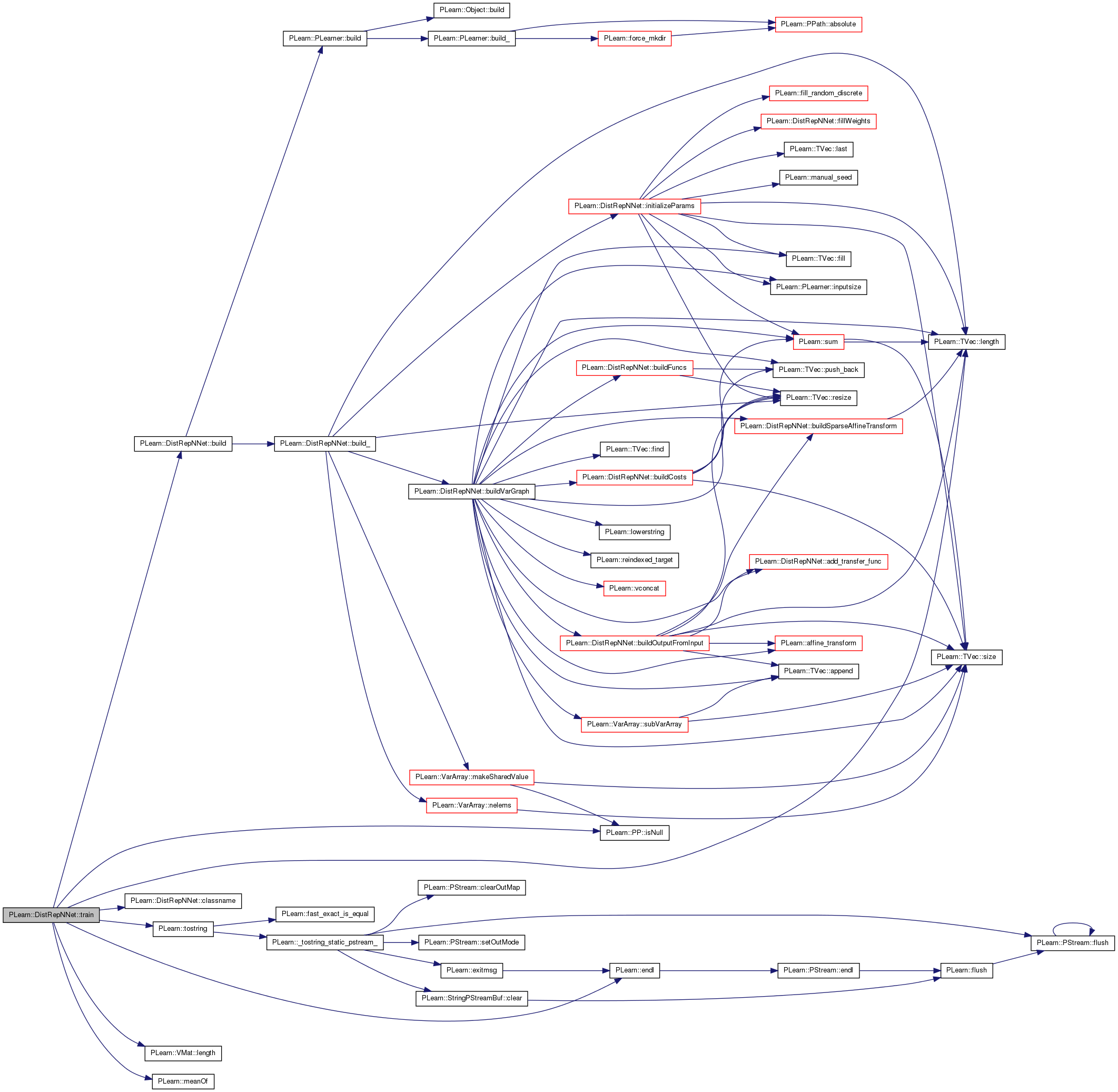

| void PLearn::DistRepNNet::build_ | ( | ) | [private] |

Architecture of the neural network.

Reimplemented from PLearn::PLearner.

Definition at line 733 of file DistRepNNet.cc.

References buildVarGraph(), dictionaries, direct_in_to_out, direct_wout, do_not_change_params, extra_tasks, fixed_output_weights, initializeParams(), PLearn::PLearner::inputsize_, invars_extra_tasks, PLearn::TVec< T >::length(), PLearn::VarArray::makeSharedValue(), PLearn::VarArray::nelems(), optimizer, optimizer_extra_tasks, options, outbias, output_comp, params, paramsvalues, partial_update_vars, PLERROR, PLearn::TVec< T >::resize(), PLearn::PLearner::targetsize_, training_cost_extra_tasks, use_dist_reps, use_output_weights_bases, w1, w1theta, w2, PLearn::PLearner::weightsize_, winputdistrep, winputsparse, winputsparse_bias_decay, winputsparse_weight_decay, wout, woutdistrep, and wouttheta.

Referenced by build().

{

/*

* Create Topology Var Graph

*/

// Don't do anything if we don't have a train_set

// It's the only one who knows the inputsize, targetsize and weightsize,

// and it contains the Dictionaries...

if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)

{

if(targetsize_ != 1)

PLERROR("In DistRepNNet::build_(): targetsize_ must be 1, not %d",targetsize_);

if(fixed_output_weights && use_output_weights_bases)

PLERROR("In DistRepNNet::build_(): output weights cannot be fixed (i.e. fixed_output_weights=1) and predicted (i.e. use_output_weights_bases=1)");

if(direct_in_to_out && use_output_weights_bases)

PLERROR("In DistRepNNet::build_(): direct input to output weights cannot be used with output weights bases");

if(!use_output_weights_bases && !use_dist_reps

&& extra_tasks.length() != 0)

PLERROR("In DistRepNNet::build_(): it is useless to have extra tasks and not use distributed\n"

"representations or output weights bases.");

dictionaries.resize(0);

partial_update_vars.resize(0);

//partial_update_vars_extra_tasks.resize(extra_tasks.length());

//for(int t=0; t<partial_update_vars_extra_tasks.length(); t++)

// partial_update_vars_extra_tasks[t].resize(0);

params.resize(0);

training_cost_extra_tasks.resize(0);

invars_extra_tasks.resize(extra_tasks.length());

// Reset shared parameters

winputdistrep.resize(0);

woutdistrep = (Variable*) NULL;

w1theta = (Variable*) NULL;

wouttheta = (Variable*) NULL;

for(int t=0; t<extra_tasks.length(); t++)

{

// Reset parameters variable

w1 = (Variable*) NULL;

w2 = (Variable*) NULL;

wout = (Variable*) NULL;

direct_wout = (Variable*) NULL;

outbias = (Variable*) NULL;

winputsparse.resize(0);

winputsparse_weight_decay = 0;

winputsparse_bias_decay = 0;

buildVarGraph(t);

initializeParams(true,t);

}

// Reset parameters variable

w1 = (Variable*) NULL;

w2 = (Variable*) NULL;

wout = (Variable*) NULL;

direct_wout = (Variable*) NULL;

outbias = (Variable*) NULL;

winputsparse.resize(0);

winputsparse_weight_decay = 0;

winputsparse_bias_decay = 0;

buildVarGraph(-1);

initializeParams();

// Shared values hack...

if (!do_not_change_params) {

if(paramsvalues.length() == params.nelems())

params << paramsvalues;

else

{

paramsvalues.resize(params.nelems());

if(optimizer)

optimizer->reset();

for(int t=0; t<optimizer_extra_tasks.length(); t++)

optimizer_extra_tasks[t]->reset();

}

params.makeSharedValue(paramsvalues);

}

output_comp.resize(1);

options.resize(0);

//output_comp.resize(outputsize());

}

}
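The "shared values hack" at the end of `build_()` makes every parameter block a view into the single flat `paramsvalues` vector. The sketch below models that step with plain vectors, assuming `makeSharedValue()` preserves each block's current values. If `paramsvalues` already has the right length (for example, loaded from a saved model), its contents win. Otherwise it is resized and receives the freshly initialized values, which is the branch where the original also resets the optimizers. `ParamView` and `shareParams` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical (offset, size) view of one parameter block in the flat buffer.
struct ParamView {
    std::size_t offset;
    std::size_t size;
};

// Returns true if the existing paramsvalues were reused.
bool shareParams(const std::vector<std::vector<double>>& blocks,
                 std::vector<double>& paramsvalues,
                 std::vector<ParamView>& views) {
    std::size_t total = 0;
    for (const auto& b : blocks) total += b.size();
    bool reuse = (paramsvalues.size() == total);
    if (!reuse) paramsvalues.assign(total, 0.0);
    views.clear();
    std::size_t off = 0;
    for (const auto& b : blocks) {
        if (!reuse)  // keep the freshly initialized block values
            for (std::size_t i = 0; i < b.size(); ++i) paramsvalues[off + i] = b[i];
        views.push_back({off, b.size()});
        off += b.size();
    }
    return reuse;
}
```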

| void PLearn::DistRepNNet::buildCosts | ( | const Var & | output, |

| const Var & | target, | ||

| int | task_index = -1 |

||

| ) | [protected] |

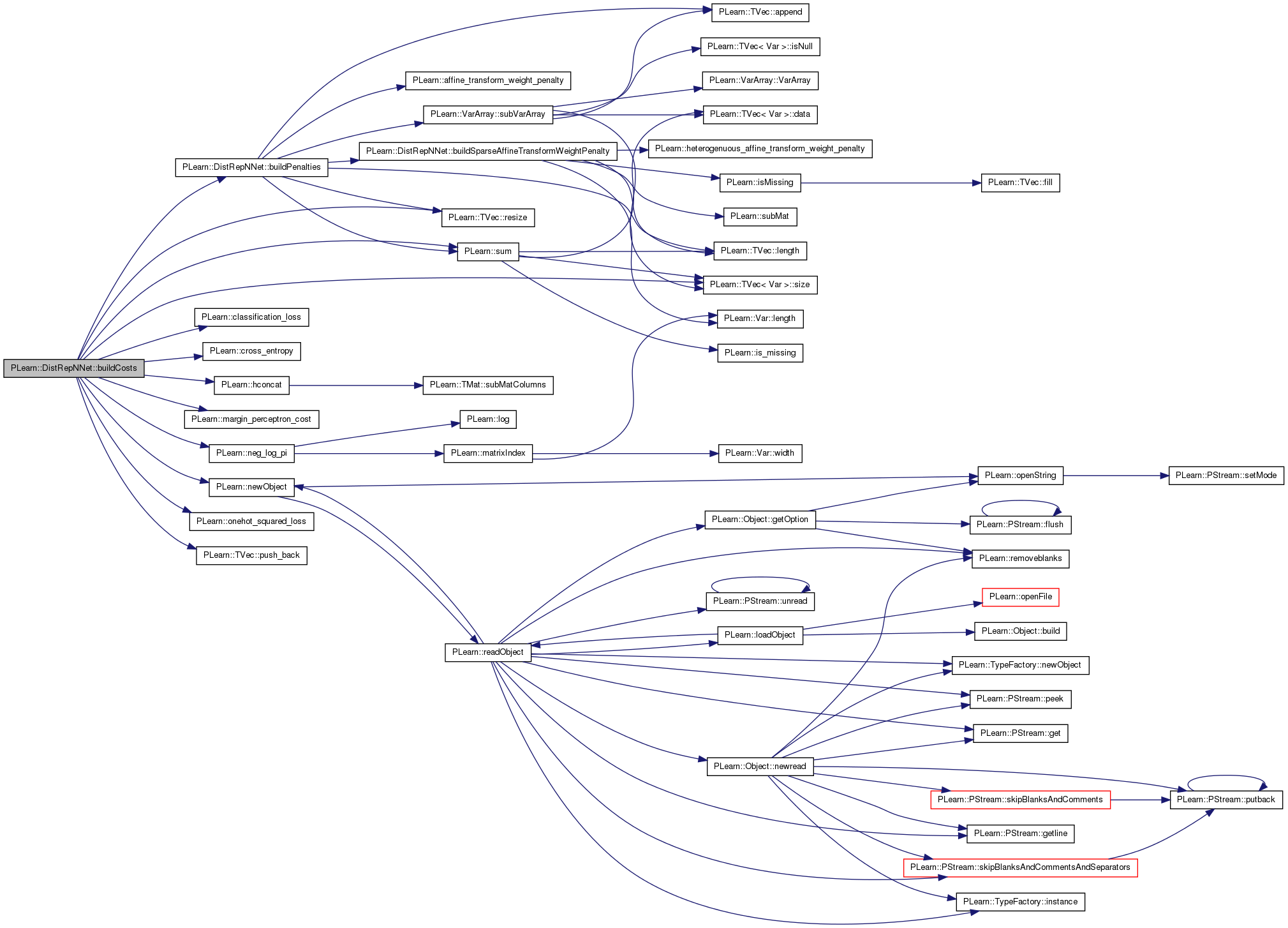

Build the costs variable from other variables.

Definition at line 824 of file DistRepNNet.cc.

References buildPenalties(), PLearn::classification_loss(), cost_funcs, costs, PLearn::cross_entropy(), PLearn::hconcat(), margin, PLearn::margin_perceptron_cost(), PLearn::neg_log_pi(), PLearn::newObject(), ntokens, ntokens_extra_tasks, PLearn::onehot_squared_loss(), output_transfer_func, penalties, PLERROR, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::resize(), sampleweight, PLearn::TVec< T >::size(), PLearn::sum(), test_costs, training_cost, training_cost_extra_tasks, and PLearn::PLearner::weightsize_.

Referenced by buildVarGraph().

{

int ncosts = cost_funcs.size();

if(ncosts<=0)

PLERROR("In DistRepNNet::buildCosts - Empty cost_funcs : must at least specify the cost function to optimize!");

costs.resize(ncosts);

for(int k=0; k<ncosts; k++)

{

// create costfuncs and apply individual weights if weightpart > 1

if(cost_funcs[k]=="mse_onehot")

costs[k] = onehot_squared_loss(the_output, the_target);

else if(cost_funcs[k]=="NLL")

{

if (the_output->size() == 1) {

// Assume sigmoid output here!

costs[k] = cross_entropy(the_output, the_target);

} else {

if (output_transfer_func == "log_softmax")

costs[k] = -the_output[the_target];

else

costs[k] = neg_log_pi(the_output, the_target);

}

}

else if(cost_funcs[k]=="class_error")

costs[k] = classification_loss(the_output, the_target);

else if (cost_funcs[k]=="margin_perceptron_cost")

costs[k] = margin_perceptron_cost(the_output,the_target,margin);

else // Assume we got a Variable name and its options

{

costs[k]= dynamic_cast<Variable*>(newObject(cost_funcs[k]));

if(costs[k].isNull())

PLERROR("In DistRepNNet::build_() unknown cost_func option: %s",cost_funcs[k].c_str());

costs[k]->setParents(the_output & the_target);

costs[k]->build();

}

}

/*

* weight and bias decay penalty

*/

// create penalties

int this_ntokens;

if(task_index < 0)

this_ntokens = ntokens;

else

this_ntokens = ntokens_extra_tasks[task_index];

buildPenalties(this_ntokens);

test_costs = hconcat(costs);

// Apply penalty to cost.

// If there is no penalty, we still add costs[0] as the first cost, in

// order to keep the same number of costs as if there was a penalty.

if(penalties.size() != 0) {

if (weightsize_>0)

// only multiply by sampleweight if there are weights

training_cost = hconcat(sampleweight*sum(hconcat(costs[0] & penalties))

& (test_costs*sampleweight));

else {

training_cost = hconcat(sum(hconcat(costs[0] & penalties)) & test_costs);

}

}

else {

if(weightsize_>0) {

// only multiply by sampleweight if there are weights

training_cost = hconcat(costs[0]*sampleweight & test_costs*sampleweight);

} else {

training_cost = hconcat(costs[0] & test_costs);

}

}

if(task_index >= 0) training_cost_extra_tasks.push_back(training_cost);

training_cost->setName("training_cost");

test_costs->setName("test_costs");

the_output->setName("output");

}
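The layout of `training_cost` built above can be checked on scalars. Element 0 is `costs[0]` plus all penalties, which is the quantity actually optimized. The remaining elements repeat every test cost, so the vector has the same length whether or not penalties exist. When `weightsize_ > 0`, every entry is scaled by the sample weight. This is a hypothetical scalar sketch (`trainingCost` is not a PLearn function).

```cpp
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Hypothetical scalar sketch of the training_cost layout from buildCosts().
std::vector<double> trainingCost(const std::vector<double>& costs,
                                 const std::vector<double>& penalties,
                                 bool has_weight, double sampleweight) {
    assert(!costs.empty());
    double w = has_weight ? sampleweight : 1.0;
    double first = costs[0] + std::accumulate(penalties.begin(), penalties.end(), 0.0);
    std::vector<double> out{w * first};
    for (double c : costs) out.push_back(w * c);  // the test_costs part
    return out;
}
```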

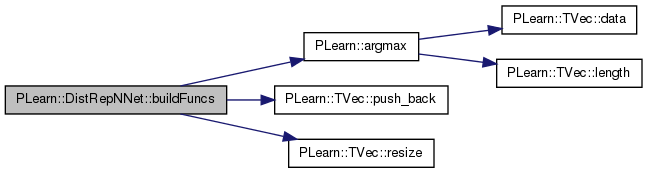

| void PLearn::DistRepNNet::buildFuncs | ( | VarArray & | invars | ) | [protected] |

Build the various functions used in the network.

Definition at line 908 of file DistRepNNet.cc.

References PLearn::argmax(), dist_rep, f, input, output, paramf, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::resize(), sampleweight, target, test_costf, test_costs, token_features, token_to_dist_rep, and training_cost.

Referenced by buildVarGraph().

{

invars.resize(0);

VarArray outvars;

VarArray testinvars;

if (input)

{

invars.push_back(input);

testinvars.push_back(input);

}

if (output)

outvars.push_back(output);

if(target)

{

invars.push_back(target);

testinvars.push_back(target);

outvars.push_back(target);

}

if(sampleweight)

{

invars.push_back(sampleweight);

}

f = Func(input, argmax(output));

//f = Func(input, output);

test_costf = Func(testinvars, argmax(output)&test_costs);

//test_costf = Func(testinvars,output&test_costs);

test_costf->recomputeParents();

if(dist_rep)

token_to_dist_rep = Func(token_features,dist_rep);

paramf = Func(invars, training_cost);

//displayFunction(paramf, true, false, 250);

}
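Because `f` is built as `Func(input, argmax(output))`, the learner's output is a single value, the index of the highest-scoring class, rather than the full score vector. This is consistent with `output_comp.resize(1)` in `build_()`. The following is a minimal plain-vector analogue with a hypothetical name. It keeps the first maximum on ties, which may differ from PLearn's `argmax`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical analogue of argmax(output): index of the largest score.
std::size_t argmaxIndex(const std::vector<double>& scores) {
    assert(!scores.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i)
        if (scores[i] > scores[best]) best = i;  // first maximum wins ties
    return best;
}
```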

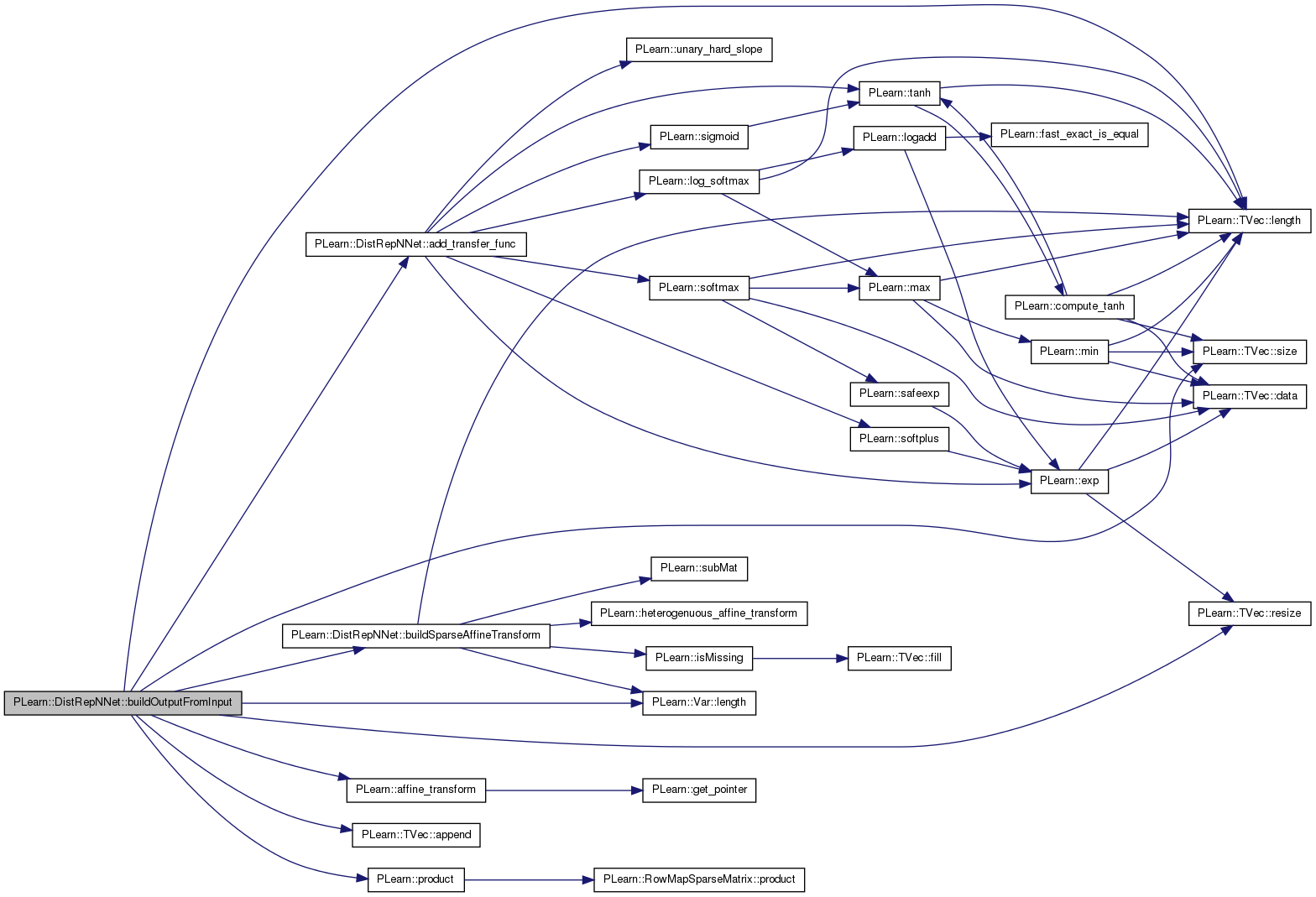

| void PLearn::DistRepNNet::buildOutputFromInput | ( | int | task_index | ) | [protected] |

Build the output of the neural network, from the given input.

Definition at line 943 of file DistRepNNet.cc.

References add_transfer_func(), PLearn::affine_transform(), PLearn::TVec< T >::append(), bias_decay, buildSparseAffineTransform(), dictionaries, direct_in_to_out, direct_wout, dp_all_targets, dp_input, fixed_output_weights, input, input_to_dict_index, j, layer1_bias_decay, layer1_weight_decay, PLearn::Var::length(), PLearn::TVec< T >::length(), nhidden, nhidden2, nhidden2_extra_tasks, nhidden_extra_tasks, nhidden_theta_predictor, outbias, output, output_layer_bias_decay, output_layer_weight_decay, output_transfer_func, params, partial_update_vars, PLERROR, PLearn::product(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), target_dict_index, use_dist_reps, use_output_weights_bases, w1, w1theta, w2, weight_decay, winputsparse, winputsparse_bias_decay, winputsparse_weight_decay, wout, and wouttheta.

Referenced by buildVarGraph().
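When `use_dist_reps` is false, this function sizes `winputsparse` with one weight matrix per input plus a final 1 x dim bias. A real-valued input (`input_to_dict_index[j] < 0`) gets a 1 x dim matrix, and a symbolic input gets one row per dictionary entry plus one. The body of `buildSparseAffineTransform()` is not shown here, so the sketch below is an assumption about its semantics: a real input scales its single row, and a symbolic input uses its value as a dictionary index that selects one row, so the one-hot product is never materialized. `Matrix` and `sparseAffine` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // rows x dim

// Hypothetical sketch of a sparse affine transform (assumed semantics).
std::vector<double> sparseAffine(const std::vector<Matrix>& weights,
                                 const std::vector<double>& input,
                                 const std::vector<int>& input_to_dict_index) {
    assert(weights.size() == input.size() + 1);
    assert(input_to_dict_index.size() == input.size());
    std::vector<double> out = weights.back()[0];  // start from the bias row
    for (std::size_t j = 0; j < input.size(); ++j) {
        const std::vector<double>* row;
        double scale = 1.0;
        if (input_to_dict_index[j] < 0) {         // real-valued feature
            row = &weights[j][0];
            scale = input[j];
        } else {                                  // symbolic feature: row lookup
            row = &weights[j][static_cast<std::size_t>(input[j])];
        }
        for (std::size_t d = 0; d < out.size(); ++d) out[d] += scale * (*row)[d];
    }
    return out;
}
```

The row lookup costs O(dim) per symbolic feature instead of O(dictionary size x dim). This is also why these weights are registered in `partial_update_vars`, since only the selected rows receive gradient.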

{

/*

if(nnet_architecture == "csMTL")

{

// The idea is to output a "potential" for each

// target possibility...

// Hence, we need to make a propagation path from

// the computations using only the input part

// (and hence common to all targets) and the

// target distributed representation, to the potential output.

// In order to know what are the possible targets,

// the train_set vmat, which contains the target

// Dictionary, will be used.

// Computations common to all targets

if(nhidden>0)

{

w1 = Var(1 + dp_input->size(), nhidden, "w1");

params.append(w1);

output = affine_transform(dp_input, w1);

}

else

{

wout = Var(1 + dp_input->size(), outputsize(), "wout");

output = affine_transform(dp_input, wout);

if(!fixed_output_weights)

{

params.append(wout);

}

else

{

outbias = Var(output->size(),"outbias");

output = output + outbias;

params.append(outbias);

}

}

Var comp_input = output;

Var dp_target = Var(1,dist_rep_dim[target_dict_index]);

VarArray proppath_params;

if(nhidden>0)

{

w1target = Var( dp_target->size(),nhidden, "w1target");

params.append(w1target);

proppath_params.append(w1target);

output = output + product(dp_target, w1target);

output = add_transfer_func(output);

}

else

{

wouttarget = Var(dp_target->size(),outputsize(), "wouttarget");

if (!fixed_output_weights)

{

params.append(wouttarget);

proppath_params.append(wouttarget);

}

output = output + product(dp_target,wouttarget);

//output = add_transfer_func(output);

}

// second hidden layer

if(nhidden2>0)

{

w2 = Var(1 + output.length(), nhidden2, "w2");

params.append(w2);

proppath_params.append(w2);

output = affine_transform(output,w2);

output = add_transfer_func(output);

}

if (nhidden2>0 && nhidden==0)

PLERROR("DistRepNNet:: can't have nhidden2 (=%d) > 0 while nhidden=0",nhidden2);

// output layer before transfer function when there is at least one hidden layer

if(nhidden > 0)

{

wout = Var(1 + output->size(), outputsize(), "wout");

output = affine_transform(output, wout);

if (!fixed_output_weights)

{

params.append(wout);

proppath_params.append(wout);

}

else

{

outbias = Var(output->size(),"outbias");

output = output + outbias;

params.append(outbias);

proppath_params.append(outbias);

}

}

output = potentials(input,comp_input,dp_target,dist_reps[target_dict_index], output, proppath_params, train_set);

partial_update_vars.push_back(dist_reps[target_dict_index]);

}

else

*/

int this_nhidden;

int this_nhidden2;

if(task_index < 0)

{

this_nhidden = nhidden;

this_nhidden2 = nhidden2;

}

else

{

this_nhidden = nhidden_extra_tasks[task_index];

this_nhidden2 = nhidden2_extra_tasks[task_index];

}

if(!use_dist_reps)

{

if(!use_output_weights_bases)

{

// These weights will be used as the input weights of the neural

// network, instead of w1.

int dim;

if(this_nhidden > 0) dim = this_nhidden;

else dim = dictionaries[target_dict_index]->size(); //+ (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1);

winputsparse.resize(input->length()+1);

winputsparse[input->length()] = Var(1,dim);

for(int j=0; j<winputsparse.length()-1; j++)

{

if(input_to_dict_index[j] < 0)

winputsparse[j] = Var(1,dim);

else

winputsparse[j] = Var(dictionaries[input_to_dict_index[j]]->size()+1,dim);

}

params.append(winputsparse);

partial_update_vars.append(winputsparse);

}

if(this_nhidden>0)

{

output = buildSparseAffineTransform(winputsparse,input,input_to_dict_index,0);

output = add_transfer_func(output);

winputsparse_weight_decay = weight_decay + layer1_weight_decay;

winputsparse_bias_decay = bias_decay + layer1_bias_decay;

// Here: implement direct input-to-output connections

if(direct_in_to_out)

{

PLERROR("In buildOutputFromInput(): direct_in_to_out option not implemented for sparse input.");

direct_wout = Var(dictionaries[target_dict_index]->size()

//+ (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1)

, dp_input->size(), "direct_wout");

params.append(direct_wout);

}

}

// second hidden layer

if(this_nhidden2>0)

{

w2 = Var(1 + output.length(), this_nhidden2, "w2");

params.append(w2);

output = affine_transform(output,w2);

output = add_transfer_func(output);

wout = Var(1 + this_nhidden2, dictionaries[target_dict_index]->size()

//+ (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1)

, "wout");

}

else if(this_nhidden > 0) wout = Var(1 + this_nhidden, dictionaries[target_dict_index]->size()

//+ (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1)

, "wout");

if (this_nhidden2>0 && this_nhidden==0)

PLERROR("DistRepNNet:: can't have nhidden2 (=%d) > 0 while nhidden=0",this_nhidden2);

if(this_nhidden > 0)

{

// Here: add an option without bias for the sparse product...

if(direct_in_to_out)

output = affine_transform(output, wout) + product(direct_wout,dp_input);

else

output = affine_transform(output, wout);

}

else

{

output = buildSparseAffineTransform(winputsparse,input,input_to_dict_index,0);

winputsparse_weight_decay = weight_decay + output_layer_weight_decay;

winputsparse_bias_decay = bias_decay + output_layer_bias_decay;

}

if(fixed_output_weights)

{

outbias = Var(output->size(),"outbias");

output = output + outbias;

params.append(outbias);

}

else if(this_nhidden>0) params.append(wout);

//output = transpose(output);

}

else

{

int before_output_size = dp_input->size();

if(this_nhidden > 0) before_output_size = this_nhidden;

if(this_nhidden2 > 0) before_output_size = this_nhidden2;

/*if(possible_targets_varies)

{

PLERROR("In DistRepNNet::buildOutputFromInput(): possible_targets_varies is not implemented yet");

if(use_output_weights_bases)

;

}

else*/

{

if(use_output_weights_bases)

{

PLERROR("In DistRepNNet::buildOutputFromInput(): use_output_weights_bases is not implemented yet");

// To do: add a check so that the representations

// are shared when the dictionaries are the same...

// Don't forget to share the weights w1theta and wouttheta

//dp_all_targets = Var(dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1,dist_rep_dim.last(),"dp_all_targets");

// Think about the initialization!!!! How should it be done!!!

params.append(dp_all_targets);

if(nhidden_theta_predictor>0)

{

if(!w1theta)

{

w1theta = Var(dp_all_targets->length()+1,nhidden_theta_predictor,"w1theta");

params.append(w1theta);

}

wout = new MatrixAffineTransformVariable(dp_all_targets,w1theta);

wout = add_transfer_func(wout);

}

else

wout = dp_all_targets;

if(!wouttheta)

{

wouttheta = Var(wout->length()+1,before_output_size+1, "wouttheta");

params.append(wouttheta);

}

wout = new MatrixAffineTransformVariable(wout,wouttheta);

}

else

{

wout = Var(1 + before_output_size, dictionaries[target_dict_index]->size()

//+ (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1)

, "wout");

}

}

if(this_nhidden>0)

{

w1 = Var(1 + dp_input->size(), this_nhidden, "w1");

params.append(w1);

output = affine_transform(dp_input, w1);

output = add_transfer_func(output);

if(direct_in_to_out)

{

direct_wout = Var(dictionaries[target_dict_index]->size()

//+ (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1)

, dp_input->size(), "direct_wout");

params.append(direct_wout);

}

}

// second hidden layer

if(this_nhidden2>0)

{

w2 = Var(1 + output.length(), this_nhidden2, "w2");

params.append(w2);

output = affine_transform(output,w2);

output = add_transfer_func(output);

}

if (this_nhidden2>0 && this_nhidden==0)

PLERROR("DistRepNNet:: can't have nhidden2 (=%d) > 0 while nhidden=0",this_nhidden2);

if(this_nhidden > 0)

{

if(direct_in_to_out)

output = affine_transform(output, wout) + product(direct_wout,dp_input);

else

output = affine_transform(output, wout);

}

else

{

if(use_dist_reps)

output = affine_transform(dp_input, wout);

else

output = buildSparseAffineTransform(winputsparse,input,input_to_dict_index,0);

}

if(fixed_output_weights)

{

outbias = Var(output->size(),"outbias");

output = output + outbias;

params.append(outbias);

}

else if(use_dist_reps)

params.append(wout);

//output = transpose(output);

}

/*

TVec<bool> class_tag(dictionaries[target_dict_index]->size() + (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1));

Vec row(train_set.width());

int target;

class_tag.fill(0);

for(int i=0; i<train_set.length(); i++)

{

train_set->getRow(i,row);

target = (int) row[train_set->inputsize()];

class_tag[target] = 1;

}

Vec seen_target_vec(0);

seen_target.resize(0);

unseen_target.resize(0);

for(int i=0; i<class_tag.length(); i++)

if(class_tag[i])

{

seen_target_vec.push_back(i);

seen_target.push_back(i);

}

else unseen_target.push_back(i);

if(seen_target_vec.length() != class_tag.length())

train_output = new VarRowsVariable(output,new SourceVariable(seen_target_vec));

*/

// output_transfer_func

if(output_transfer_func!="" && output_transfer_func!="none")

{

/*

if(consider_unseen_classes)

output = insert_zeros(add_transfer_func(output, output_transfer_func),seen_target);

else

*/

output = add_transfer_func(output, output_transfer_func);

}

/*

else

{

if(consider_unseen_classes)

output = insert_zeros(output,seen_target);

}

*/

/*

if(train_output)

if(output_transfer_func!="" && output_transfer_func!="none")

train_output = insert_zeros(add_transfer_func(train_output, output_transfer_func),unseen_target);

else

train_output = insert_zeros(train_output,unseen_target);

*/

}
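The last layer built above combines an affine map of the last hidden layer with an optional direct input-to-output term (`direct_in_to_out`). The following standalone sketch mirrors that computation with plain `std::vector`s. It assumes PLearn's `affine_transform` convention that the first row of the weight matrix holds the biases and the remaining rows the linear weights (hence the `1 + nhidden` rows of `wout`), and that `direct_wout` is stored as an `noutputs x ninputs` matrix, as its `Var` declaration suggests. The names `affine`, `product` and `outputScores` are hypothetical helpers, not PLearn functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: Mat[row][col]

// affine(x, W): W has 1 + x.size() rows; row 0 holds the biases and
// rows 1..n the weights, so y[k] = W[0][k] + sum_i x[i] * W[i + 1][k].
Vec affine(const Vec& x, const Mat& W) {
    assert(W.size() == x.size() + 1);
    Vec y = W[0];
    for (std::size_t i = 0; i < x.size(); ++i)
        for (std::size_t k = 0; k < y.size(); ++k)
            y[k] += x[i] * W[i + 1][k];
    return y;
}

// product(D, x) for D of size noutputs x ninputs (assumed layout of direct_wout).
Vec product(const Mat& D, const Vec& x) {
    Vec y(D.size(), 0.0);
    for (std::size_t k = 0; k < D.size(); ++k) {
        assert(D[k].size() == x.size());
        for (std::size_t i = 0; i < x.size(); ++i)
            y[k] += D[k][i] * x[i];
    }
    return y;
}

// Output scores: affine(hidden, wout), plus product(direct_wout, dp_input)
// when the direct input-to-output connections are enabled.
Vec outputScores(const Vec& hidden, const Mat& wout, const Vec& dp_input,
                 const Mat& direct_wout, bool direct_in_to_out) {
    Vec out = affine(hidden, wout);
    if (direct_in_to_out) {
        const Vec direct = product(direct_wout, dp_input);
        assert(direct.size() == out.size());
        for (std::size_t k = 0; k < out.size(); ++k) out[k] += direct[k];
    }
    return out;
}
```

In `buildOutputFromInput()` the same `wout` layout also covers the case without hidden layers when `use_dist_reps` is set, where `affine_transform(dp_input, wout)` is applied directly to the distributed representations.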

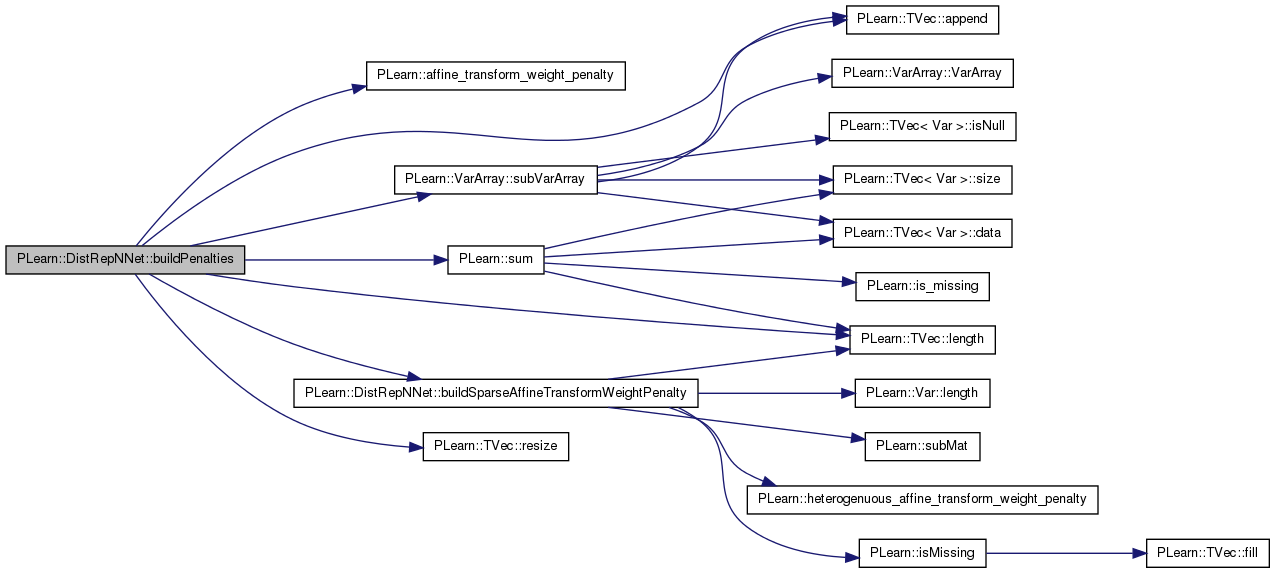

| void PLearn::DistRepNNet::buildPenalties | ( | int | this_ntokens | ) | [protected, virtual] |

Fills the cost penalties.

Definition at line 1310 of file DistRepNNet.cc.

References PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), bias_decay, buildSparseAffineTransformWeightPenalty(), direct_in_to_out_bias_decay, direct_in_to_out_weight_decay, direct_wout, i, input, input_dist_rep_predictor_bias_decay, input_dist_rep_predictor_weight_decay, input_to_dict_index, layer1_bias_decay, layer1_theta_predictor_bias_decay, layer1_theta_predictor_weight_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::TVec< T >::length(), nfeatures_for_each_token, nfeatures_per_token, output_dist_rep_predictor_bias_decay, output_dist_rep_predictor_weight_decay, output_layer_bias_decay, output_layer_theta_predictor_bias_decay, output_layer_theta_predictor_weight_decay, output_layer_weight_decay, penalties, penalty_type, PLearn::TVec< T >::resize(), PLearn::VarArray::subVarArray(), PLearn::sum(), use_output_weights_bases, w1, w1theta, w2, weight_decay, winputdistrep, winputsparse, winputsparse_bias_decay, winputsparse_weight_decay, wout, woutdistrep, and wouttheta.

Referenced by buildCosts().

{

penalties.resize(0); // prevents penalties from being added twice by consecutive builds

if(w1 && ((layer1_weight_decay + weight_decay)!=0 || (layer1_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(w1, (layer1_weight_decay + weight_decay), (layer1_bias_decay + bias_decay), penalty_type));

if(w1theta && ((layer1_theta_predictor_weight_decay + weight_decay)!=0 || (layer1_theta_predictor_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(w1theta, (layer1_theta_predictor_weight_decay + weight_decay), (layer1_theta_predictor_bias_decay + bias_decay), penalty_type));

if(w2 && ((layer2_weight_decay + weight_decay)!=0 || (layer2_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(w2, (layer2_weight_decay + weight_decay), (layer2_bias_decay + bias_decay), penalty_type));

if(!use_output_weights_bases && wout && ((output_layer_weight_decay + weight_decay)!=0 || (output_layer_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(wout, (output_layer_weight_decay + weight_decay),

(output_layer_bias_decay + bias_decay), penalty_type));

if(wouttheta && ((output_layer_theta_predictor_weight_decay + weight_decay)!=0 || (output_layer_theta_predictor_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(wouttheta, (output_layer_theta_predictor_weight_decay + weight_decay),

(output_layer_theta_predictor_bias_decay + bias_decay), penalty_type));

if(woutdistrep && ((output_dist_rep_predictor_weight_decay + weight_decay)!=0 || (output_dist_rep_predictor_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(woutdistrep, (output_dist_rep_predictor_weight_decay + weight_decay),

(output_dist_rep_predictor_bias_decay + bias_decay), penalty_type));

if(direct_wout && ((direct_in_to_out_weight_decay + weight_decay)!=0 || (direct_in_to_out_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(direct_wout, (direct_in_to_out_weight_decay + weight_decay),

(direct_in_to_out_bias_decay + bias_decay), penalty_type));

// Here, affine_transform_weight_penalty is not used, since the weight variables don't correspond

// to an affine_transform (i.e. they don't contain both biases AND weights)

if(winputdistrep.length() != 0 && (input_dist_rep_predictor_weight_decay + weight_decay + input_dist_rep_predictor_bias_decay + bias_decay))

{

/*

for(int i=0; i<activated_weights.length(); i++)

{

if(input_to_dict_index[i%nfeatures_per_token] < 0)

{

// Add those weights in the penalties only once

if(i<nfeatures_per_token)

penalties.append(affine_transform_weight_penalty(winputdistrep[i], (input_dist_rep_predictor_weight_decay + weight_decay),

(input_dist_rep_predictor_weight_decay + weight_decay), penalty_type));

}

else

// Approximate version of the weight decay on the input weights, which is more computationally efficient

penalties.append(affine_transform_weight_penalty(activated_weights[i], (input_dist_rep_predictor_weight_decay + weight_decay),

(input_dist_rep_predictor_weight_decay + weight_decay), penalty_type));

}

*/

// Apply bias decay only for the first token, since these biases are shared by all dist. rep. predictions

if(nfeatures_for_each_token.length() != 0)

{

int sum=0;

int sum_dict = 0;

for(int i=0; i<nfeatures_for_each_token.length(); i++)

{

penalties.append(buildSparseAffineTransformWeightPenalty(winputdistrep.subVarArray(sum,nfeatures_for_each_token[i]+1), input, input_to_dict_index, sum_dict, input_dist_rep_predictor_weight_decay + weight_decay, input_dist_rep_predictor_bias_decay + bias_decay, penalty_type));

sum += nfeatures_for_each_token[i]+1;

sum_dict += nfeatures_for_each_token[i];

}

}

else

{

for(int i=0; i<this_ntokens; i++)

penalties.append(buildSparseAffineTransformWeightPenalty(winputdistrep, input, input_to_dict_index, i*nfeatures_per_token, input_dist_rep_predictor_weight_decay + weight_decay, (i==0 ? input_dist_rep_predictor_bias_decay + bias_decay : 0), penalty_type));

}

}

//if(winputdistrep.length() != 0 && (input_dist_rep_predictor_bias_decay + bias_decay))

// penalties.append(affine_transform_weight_penalty(winputdistrep[nfeatures_per_token], (input_dist_rep_predictor_bias_decay + bias_decay),

// (input_dist_rep_predictor_bias_decay + bias_decay), penalty_type));

if(winputsparse.length() != 0 && (winputsparse_weight_decay + winputsparse_bias_decay != 0))

{

penalties.append(buildSparseAffineTransformWeightPenalty(winputsparse, input, input_to_dict_index, 0, winputsparse_weight_decay, winputsparse_bias_decay, penalty_type));

}

// if(winputsparse.length() != 0 && winputsparse_weight_decay)

// {

// for(int i=0; i<activated_weights.length(); i++)

// {

// penalties.append(affine_transform_weight_penalty(activated_weights[i], winputsparse_weight_decay,

// winputsparse_weight_decay, penalty_type));

// }

// }

// if(winputsparse.length() != 0 && winputsparse_bias_decay)

// penalties.append(affine_transform_weight_penalty(winputsparse.last(), winputsparse_bias_decay,

// winputsparse_bias_decay, penalty_type));

}
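Each penalty term above combines a global decay with a layer-specific one (for example `layer1_weight_decay + weight_decay`), with separate coefficients for the bias row and the weight rows. The sketch below shows what such a term amounts to for one weight matrix, including the `penalty_type` normalization performed in `buildVarGraph()`. It assumes the biases are stored in row 0 (the `affine_transform` layout) and handles only `"L1"` and `"L2_square"`; the exact formulas inside `affine_transform_weight_penalty()` (including `"L1_square"`) are not shown in this section, so treat this as an illustration rather than a reimplementation. `normalizePenaltyType` and `weightPenalty` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

using Mat = std::vector<std::vector<double>>;  // row 0 = biases

// Mirrors the penalty_type normalization in buildVarGraph() for the
// types handled by this sketch ("L1_square" is deliberately omitted).
std::string normalizePenaltyType(std::string pt) {
    std::transform(pt.begin(), pt.end(), pt.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    if (pt == "l1") return "L1";
    if (pt == "l2_square" || pt == "l2 square" || pt == "l2square" || pt == "l2")
        return "L2_square";  // plain "L2" is mapped to L2 square, as in the source
    throw std::invalid_argument("penalty_type not supported in this sketch: " + pt);
}

// weight_decay applies to rows 1..n, bias_decay to row 0.
double weightPenalty(const Mat& W, double weight_decay, double bias_decay,
                     const std::string& penalty_type) {
    assert(!W.empty());
    const bool l1 = normalizePenaltyType(penalty_type) == "L1";
    double bias_sum = 0.0, weight_sum = 0.0;
    for (std::size_t r = 0; r < W.size(); ++r) {
        for (double w : W[r]) {
            const double t = l1 ? std::fabs(w) : w * w;
            if (r == 0) bias_sum += t;
            else weight_sum += t;
        }
    }
    return weight_decay * weight_sum + bias_decay * bias_sum;
}
```

For instance, the first-layer term would correspond to `weightPenalty(w1, layer1_weight_decay + weight_decay, layer1_bias_decay + bias_decay, penalty_type)` under these assumptions.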

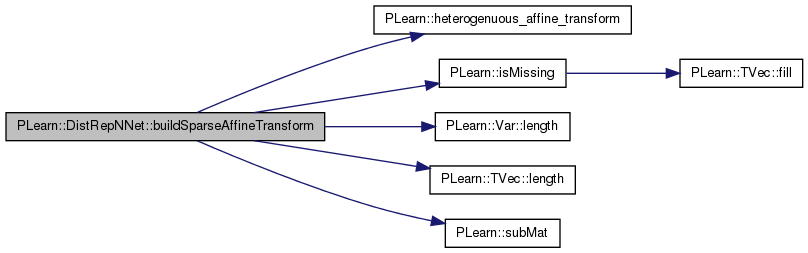

| Var PLearn::DistRepNNet::buildSparseAffineTransform | ( | VarArray weights, Var input, TVec< int > input_to_dict_index, int begin | ) | [protected] |

Builds a sparse affine transformation from a set of weights and an input variable.

Definition at line 371 of file DistRepNNet.cc.

References dictionaries, PLearn::heterogenuous_affine_transform(), PLearn::isMissing(), j, PLearn::Var::length(), PLearn::TVec< T >::length(), and PLearn::subMat().

Referenced by buildOutputFromInput(), and buildVarGraph().

{

TVec<bool> input_is_discrete(weights->length()-1);

Vec missing_replace(weights->length()-1);

for(int j=0; j<weights->length()-1; j++)

{

if(input_to_dict_index[begin+j] < 0)

{

input_is_discrete[j] = false;

missing_replace[j] = 0;

}

else

{

input_is_discrete[j] = true;

missing_replace[j] = dictionaries[input_to_dict_index[begin+j]]->getId(dictionaries[input_to_dict_index[begin+j]]->oov_symbol);

}

}

if(weights.length()-1 == input->length())

return heterogenuous_affine_transform(isMissing(input,true, true, missing_replace), weights, input_is_discrete);

else

return heterogenuous_affine_transform(isMissing(subMat(input,begin,0,weights.length()-1,1),true, true, missing_replace), weights, input_is_discrete);

}
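`buildSparseAffineTransform()` treats each input column as either discrete (it has a `Dictionary`, i.e. `input_to_dict_index >= 0`) or continuous, and replaces missing values by the OOV id or by 0. The sketch below illustrates the kind of computation this enables, under assumptions not spelled out in this section: a discrete column selects the row of its weight matrix given by the symbol id (equivalent to multiplying a one-hot vector), a continuous column scales a `1 x d` weight row, and the last element of the weight list is a `1 x d` bias, matching how `winputdistrep` is sized in `buildVarGraph()`. NaN stands in for a missing value. `sparseAffine` is a hypothetical name and this is not the code of `heterogenuous_affine_transform()`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// weights: one matrix per input column plus a final 1 x d bias matrix.
// is_discrete[j]: column j holds a symbol id; otherwise a real value.
// missing_replace[j]: value substituted for a missing (NaN) entry.
Vec sparseAffine(const Vec& input, const std::vector<Mat>& weights,
                 const std::vector<bool>& is_discrete, const Vec& missing_replace) {
    const std::size_t n = input.size();
    assert(weights.size() == n + 1 && is_discrete.size() == n &&
           missing_replace.size() == n);
    Vec out = weights[n][0];  // bias row
    for (std::size_t j = 0; j < n; ++j) {
        const double x = std::isnan(input[j]) ? missing_replace[j] : input[j];
        if (is_discrete[j]) {
            // Row lookup: same result as a one-hot vector times weights[j].
            const Vec& row = weights[j].at(static_cast<std::size_t>(x));
            for (std::size_t k = 0; k < out.size(); ++k) out[k] += row[k];
        } else {
            for (std::size_t k = 0; k < out.size(); ++k) out[k] += x * weights[j][0][k];
        }
    }
    return out;
}
```

The row lookup is what makes the transform "sparse": a discrete column touches only one row of its matrix, however large the dictionary is.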

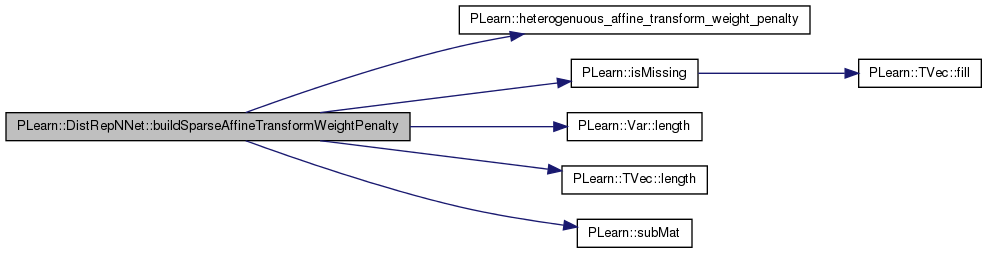

| Var PLearn::DistRepNNet::buildSparseAffineTransformWeightPenalty | ( | VarArray weights, Var input, TVec< int > input_to_dict_index, int begin, real weight_decay, real bias_decay = 0, string penalty_type = "L2_square" | ) | [protected] |

Builds a sparse affine transformation weight penalty from a set of weights and an input variable.

Definition at line 394 of file DistRepNNet.cc.

References bias_decay, dictionaries, PLearn::heterogenuous_affine_transform_weight_penalty(), PLearn::isMissing(), j, PLearn::Var::length(), PLearn::TVec< T >::length(), penalty_type, PLearn::subMat(), and weight_decay.

Referenced by buildPenalties().

{

TVec<bool> input_is_discrete(weights.length()-1);

Vec missing_replace(weights.length()-1);

for(int j=0; j<weights->length()-1; j++)

{

if(input_to_dict_index[begin+j] < 0)

{

input_is_discrete[j] = false;

missing_replace[j] = 0;

}

else

{

input_is_discrete[j] = true;

missing_replace[j] = dictionaries[input_to_dict_index[begin+j]]->getId(dictionaries[input_to_dict_index[begin+j]]->oov_symbol);

}

}

if(weights.length()-1 == input->length())

return heterogenuous_affine_transform_weight_penalty(isMissing(input,true, true, missing_replace), weights, input_is_discrete, weight_decay, bias_decay, penalty_type);

else

return heterogenuous_affine_transform_weight_penalty(isMissing(subMat(input,begin,0,weights.length()-1,1),true, true, missing_replace), weights, input_is_discrete, weight_decay, bias_decay, penalty_type);

}
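The penalty counterpart uses the same column layout. One plausible reading, sketched below purely as an assumption (the body of `heterogenuous_affine_transform_weight_penalty()` is not part of this section), is an L2-square penalty on the weights touched by the current input: for a discrete column only the row selected by the symbol id, for a continuous column its single row, plus `bias_decay` on the bias row. The commented-out code in `buildPenalties()` mentions a similar "approximate", cheaper weight decay on the activated weights. `activatedL2Penalty` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

static double sumSquares(const Vec& v) {
    double s = 0.0;
    for (double w : v) s += w * w;
    return s;
}

// L2-square penalty restricted to the weight rows activated by `input`.
// weights: one matrix per input column plus a final 1 x d bias matrix.
double activatedL2Penalty(const Vec& input, const std::vector<Mat>& weights,
                          const std::vector<bool>& is_discrete,
                          const Vec& missing_replace,
                          double weight_decay, double bias_decay) {
    const std::size_t n = input.size();
    assert(weights.size() == n + 1 && is_discrete.size() == n);
    double penalty = bias_decay * sumSquares(weights[n][0]);
    for (std::size_t j = 0; j < n; ++j) {
        const double x = std::isnan(input[j]) ? missing_replace[j] : input[j];
        // Discrete column: penalize only the looked-up row; continuous: row 0.
        const std::size_t row = is_discrete[j] ? static_cast<std::size_t>(x) : 0;
        penalty += weight_decay * sumSquares(weights[j].at(row));
    }
    return penalty;
}
```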

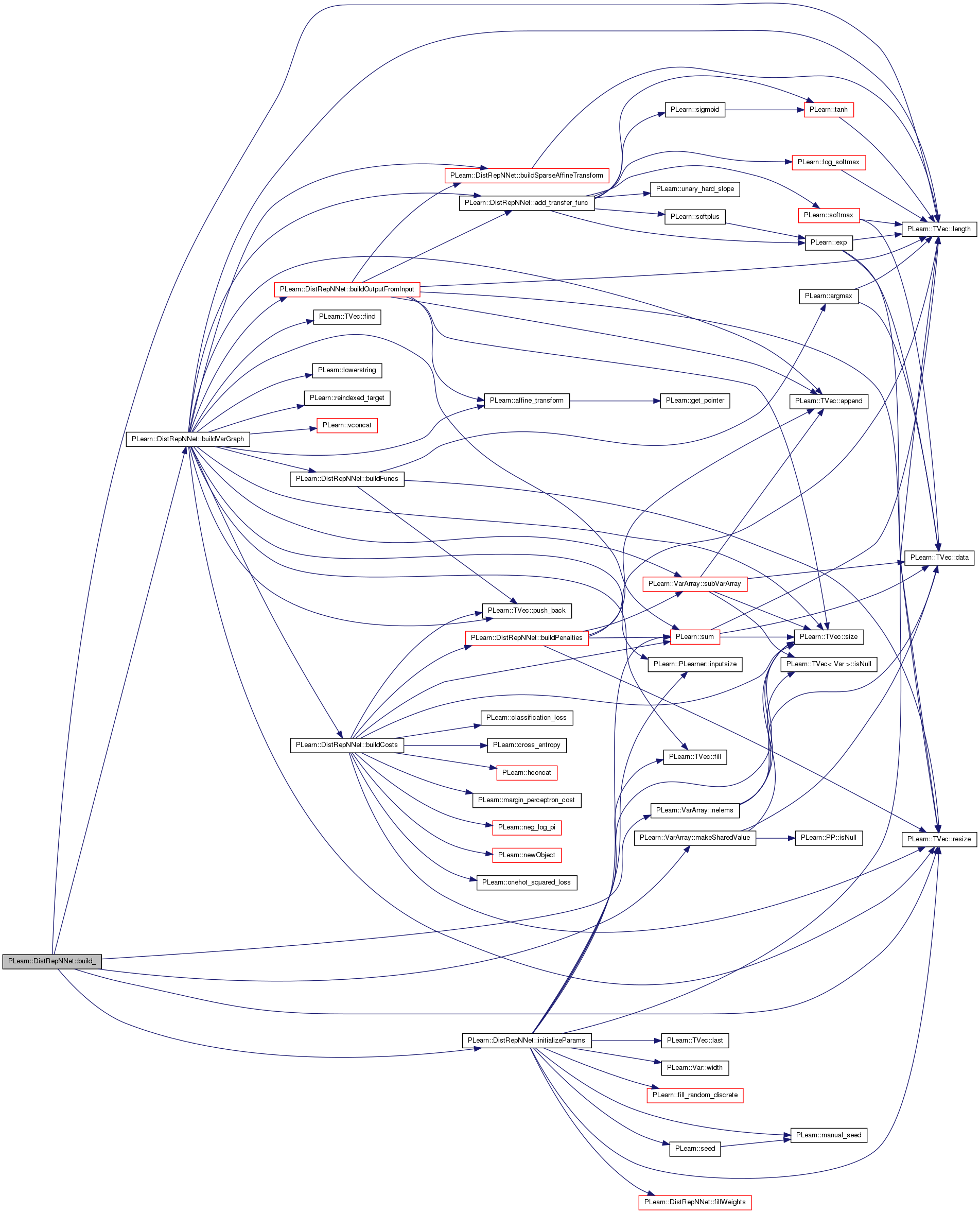

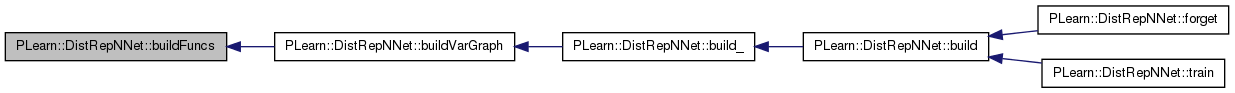

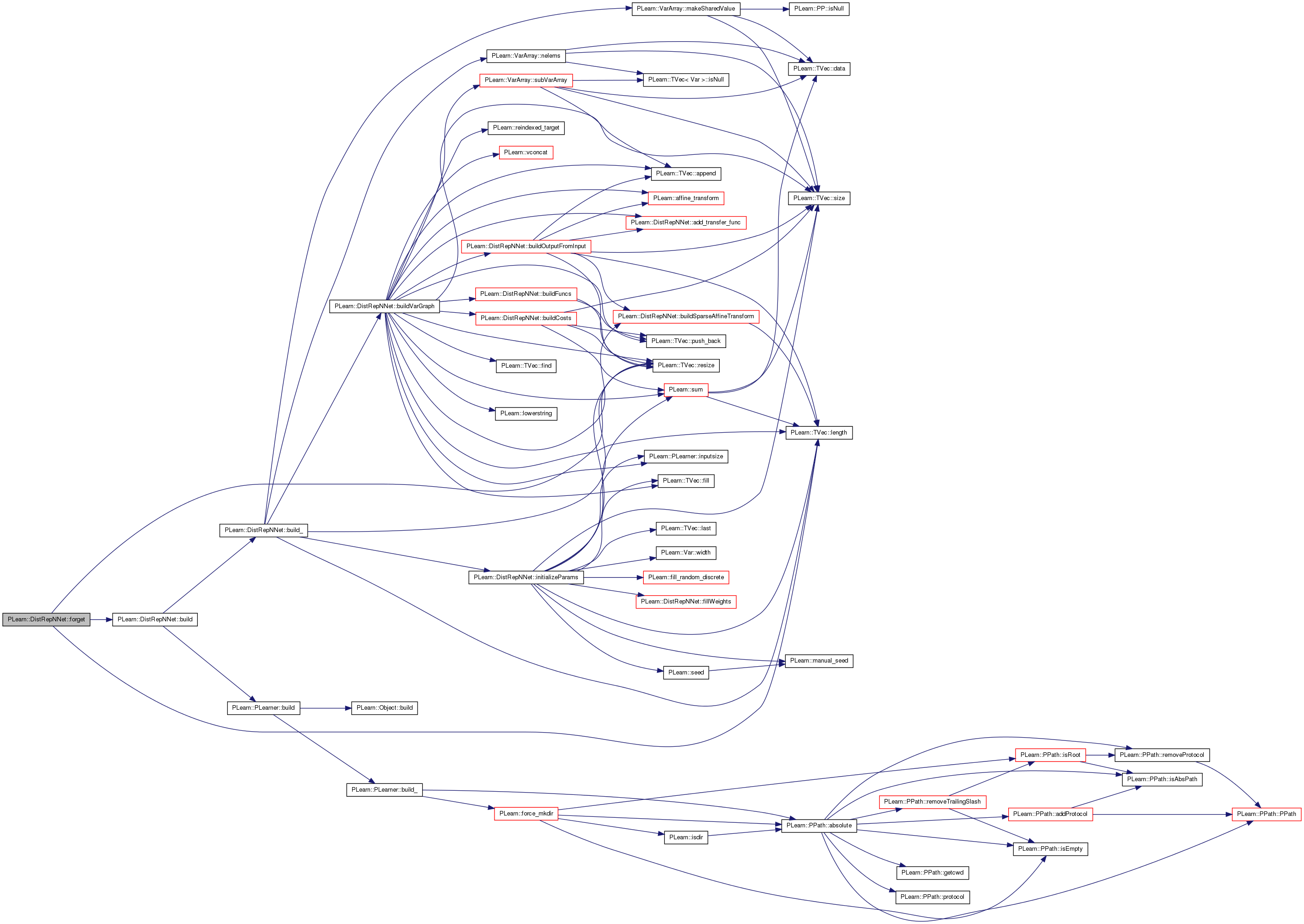

| void PLearn::DistRepNNet::buildVarGraph | ( | int | task_index | ) | [protected] |

Builds a var graph from a task, specified by task_index.

TODO: reuse weight matrices when dictionary is the same...

Definition at line 418 of file DistRepNNet.cc.

References add_transfer_func(), PLearn::affine_transform(), PLearn::TVec< T >::append(), buildCosts(), buildFuncs(), buildOutputFromInput(), buildSparseAffineTransform(), dictionaries, dist_rep, dist_rep_dim, dp_input, extra_tasks, f, PLearn::TVec< T >::fill(), PLearn::TVec< T >::find(), fixed_output_weights, i, input, input_to_dict_index, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, invars, invars_extra_tasks, j, PLearn::TVec< T >::length(), PLearn::lowerstring(), nfeatures_for_each_token, nfeatures_per_token, nhidden_dist_rep_predictor, nhidden_extra_tasks, ntokens, ntokens_extra_tasks, output, params, partial_update_vars, penalty_type, PLERROR, PLWARNING, PLearn::TVec< T >::push_back(), PLearn::reindexed_target(), PLearn::TVec< T >::resize(), sampleweight, PLearn::TVec< T >::size(), PLearn::VarArray::subVarArray(), PLearn::sum(), target, target_dict_index, target_dictionary, PLearn::PLearner::targetsize_, token_features, PLearn::PLearner::train_set, use_dist_reps, PLearn::vconcat(), PLearn::PLearner::weightsize_, winputdistrep, and woutdistrep.

Referenced by build_().

{

VMat task_set;

if(task_index < 0)

task_set = train_set;

else

task_set = extra_tasks[task_index];

if(task_set->targetsize() != 1)

PLERROR("In DistRepNNet::buildVarGraph(): task_set->targetsize() must be 1, not %d",task_set->targetsize());

// Initialize the input.

// This is where we construct the distributed representation

// mappings (matrices).

// The input is separated in two parts, one which corresponds

// to symbolic data (uses distributed representations) and

// one which corresponds to real valued data

// Finally, in order to figure out how many representation

// mappings have to be constructed (since several input elements

// might share the same Dictionary), we use the pointer

// value of the Dictionaries

int n_dist_rep_input = 0;

input_to_dict_index.resize(inputsize_);

input_to_dict_index.fill(-1);

target_dict_index = -1;

//if(direct_in_to_out && nnet_architecture == "csMTL")

// PLERROR("In DistRepNNet::buildVarGraph(): direct_in_to_out cannot be used with \"csMTL\" architecture");

// Associate input components with their corresponding

// Dictionary and distributed representation

for(int i=0; i<task_set->inputsize(); i++)

{

PP<Dictionary> dict = task_set->getDictionary(i);

// Check if component has Dictionary

if(dict)

{

// Find if Dictionary has already been added

int f = dictionaries.find(dict);

if(f<0)

{

dictionaries.push_back(dict);

input_to_dict_index[i] = dictionaries.size()-1;

}

else input_to_dict_index[i] = f;

n_dist_rep_input++;

}

}

// Add target Dictionary

{

PP<Dictionary> dict;

if(target_dictionary && task_index < 0) dict = target_dictionary;

else dict = task_set->getDictionary(task_set->inputsize());

// Check if component has Dictionary

if(!dict) PLERROR("In DistRepNNet::buildVarGraph(): target component of task set has no Dictionary");

// Find if Dictionary has already been added

int f = dictionaries.find(dict);

if(f<0)

{

dictionaries.push_back(dict);

target_dict_index = dictionaries.size()-1;

}

else

target_dict_index = f;

}

// if(dist_rep_dim.length() != dist_reps.length())

// PLWARNING("In DistRepNNet::buildVarGraph(): number of distributed representation sets (%d) and dimensionality specification (dist_rep_dim.length()=%d) isn't the same", dist_reps.length(), dist_rep_dim.length());

input = Var(task_set->inputsize(), "input");

//if(nnet_architecture == "dist_rep_predictor" || nnet_architecture == "linear")

//if(!use_dist_reps)

//{

// ntokens = 1;

// nfeatures_per_token = task_set->inputsize();

// nhidden_dist_rep_predictor = -1;

// dim = dictionaries[target_dict_index]->size() + (dictionaries[target_dict_index]->oov_not_in_possible_values ? 0 : 1);

//}

if(use_dist_reps)

{

int dim = dist_rep_dim[0];

int this_ntokens;

if(task_index < 0)

{

if(nfeatures_for_each_token.length() == 0)

{

if(ntokens <= 0) PLERROR("In DistRepNNet::buildVarGraph(): ntokens should be > 0");

if(nfeatures_per_token <= 0) PLERROR("In DistRepNNet::buildVarGraph(): nfeatures_per_token should be > 0");

if(ntokens * nfeatures_per_token != task_set->inputsize()) PLERROR("In DistRepNNet::buildVarGraph(): ntokens * nfeatures_per_token != task_set->inputsize()");

}

else

{

int sum_feat = 0;

for(int f=0; f<nfeatures_for_each_token.length(); f++)

{

if(nfeatures_for_each_token[f] <= 0) PLERROR("In DistRepNNet::buildVarGraph(): nfeatures_for_each_token[%d] should be > 0", f);

sum_feat += nfeatures_for_each_token[f];

}

if(sum_feat != inputsize())

PLERROR("In DistRepNNet::buildVarGraph(): sum of nfeatures_for_each_token should be equal to inputsize");

if(nfeatures_for_each_token.length() != ntokens)

PLERROR("In DistRepNNet::buildVarGraph(): nfeatures_for_each_token should be of size ntokens=%d", ntokens);

}

this_ntokens = ntokens;

}

else

{

if(nfeatures_for_each_token.length() != 0)

PLERROR("In DistRepNNet::buildVarGraph(): usage of nfeatures_for_each_token with extra tasks is not supported yet");

if(task_index >= ntokens_extra_tasks.length()) PLERROR("In DistRepNNet::buildVarGraph(): ntokens not defined for task %d", task_index);

if(ntokens_extra_tasks[task_index] <= 0) PLERROR("In DistRepNNet::buildVarGraph(): ntokens[%d] should be > 0", task_index);

if(nfeatures_per_token <= 0) PLERROR("In DistRepNNet::buildVarGraph(): nfeatures_per_token should be > 0");

if(ntokens_extra_tasks[task_index] * nfeatures_per_token != task_set->inputsize()) PLERROR("In DistRepNNet::buildVarGraph(): ntokens_extra_task[%d] * nfeatures_per_token != task_set->inputsize()",task_index);

this_ntokens = ntokens_extra_tasks[task_index];

}

//activated_weights.resize(0);

VarArray dist_reps(this_ntokens);

VarArray dist_rep_hids(this_ntokens);

if(nfeatures_for_each_token.length() != 0)

{

if(winputdistrep.length() == 0)

{

winputdistrep.resize(sum(nfeatures_for_each_token)+this_ntokens);

int sum = 0;

int sum_dict = 0;

if(nhidden_dist_rep_predictor>0)

PLERROR("In DistRepNNet::buildVarGraph(): nhidden_dist_rep_predictor>0 is not supported with nfeatures_for_each_token");

for(int t=0; t<this_ntokens; t++)

{

if(nhidden_dist_rep_predictor > 0) winputdistrep[sum+nfeatures_for_each_token[t]] = Var(1,nhidden_dist_rep_predictor);

else winputdistrep[sum+nfeatures_for_each_token[t]] = Var(1,dim);

for(int j=0; j<nfeatures_for_each_token[t]; j++)

{

if(input_to_dict_index[sum_dict+j] < 0)

if(nhidden_dist_rep_predictor > 0) winputdistrep[sum+j] = Var(1,nhidden_dist_rep_predictor);

else winputdistrep[sum+j] = Var(1,dim);

else

if(nhidden_dist_rep_predictor > 0) winputdistrep[sum+j] = Var(dictionaries[input_to_dict_index[sum_dict+j]]->size()+1,nhidden_dist_rep_predictor);

else winputdistrep[sum+j] = Var(dictionaries[input_to_dict_index[sum_dict+j]]->size()+1,dim);

if(nhidden_dist_rep_predictor > 0) woutdistrep = Var(nhidden_dist_rep_predictor+1,dim);

}

sum += nfeatures_for_each_token[t]+1;

sum_dict += nfeatures_for_each_token[t];

}

params.append(winputdistrep);

partial_update_vars.append(winputdistrep);

if(nhidden_dist_rep_predictor > 0) params.append(woutdistrep);

}

// Building var graph from input to distributed representations

int sum = 0;

int sum_dict = 0;

for(int i=0; i<this_ntokens; i++)

{

//if(nhidden_dist_rep_predictor > 0) dist_rep_hids[i] = buildSparseAffineTransform(winputdistrep, input, input_to_dict_index, i*nfeatures_per_token);

//else

dist_reps[i] = buildSparseAffineTransform(winputdistrep.subVarArray(sum,nfeatures_for_each_token[i]+1), input, input_to_dict_index, sum_dict);

//if(nhidden_dist_rep_predictor > 0)

//{

// dist_rep_hids[i] = add_transfer_func(dist_rep_hids[i]);

// dist_reps.append(affine_transform(dist_rep_hids[i],woutdistrep));

//}

sum += nfeatures_for_each_token[i]+1;

sum_dict += nfeatures_for_each_token[i];

}

}

else

{

if(winputdistrep.length() == 0)

{

winputdistrep.resize(nfeatures_per_token+1);

if(nhidden_dist_rep_predictor > 0) winputdistrep[nfeatures_per_token] = Var(1,nhidden_dist_rep_predictor);

else winputdistrep[nfeatures_per_token] = Var(1,dim);

for(int j=0; j<nfeatures_per_token; j++)

{

if(input_to_dict_index[j] < 0)

if(nhidden_dist_rep_predictor > 0) winputdistrep[j] = Var(1,nhidden_dist_rep_predictor);

else winputdistrep[j] = Var(1,dim);

else

if(nhidden_dist_rep_predictor > 0) winputdistrep[j] = Var(dictionaries[input_to_dict_index[j]]->size()+1,nhidden_dist_rep_predictor);

else winputdistrep[j] = Var(dictionaries[input_to_dict_index[j]]->size()+1,dim);

if(nhidden_dist_rep_predictor > 0) woutdistrep = Var(nhidden_dist_rep_predictor+1,dim);

}

params.append(winputdistrep);

partial_update_vars.append(winputdistrep);

if(nhidden_dist_rep_predictor > 0) params.append(woutdistrep);

}

// Building var graph from input to distributed representations

for(int i=0; i<this_ntokens; i++)

{

if(nhidden_dist_rep_predictor > 0) dist_rep_hids[i] = buildSparseAffineTransform(winputdistrep, input, input_to_dict_index, i*nfeatures_per_token);

else dist_reps[i] = buildSparseAffineTransform(winputdistrep, input, input_to_dict_index, i*nfeatures_per_token);

if(nhidden_dist_rep_predictor > 0)

{

dist_rep_hids[i] = add_transfer_func(dist_rep_hids[i]);

dist_reps[i] = affine_transform(dist_rep_hids[i],woutdistrep);

}

}

}

if(task_index < 0)

{

// To construct the Func...

if(nfeatures_for_each_token.length() != 0)

token_features = Var(sum(nfeatures_for_each_token));

else

token_features = Var(nfeatures_per_token);

//VarArray aw;

if(nfeatures_for_each_token.length() != 0)

{

int sum = 0;

int sum_dict = 0;

VarArray dist_reps(this_ntokens);

for(int i=0; i<this_ntokens; i++)

{

//if(nhidden_dist_rep_predictor > 0) dist_rep_hids[i] = buildSparseAffineTransform(winputdistrep, input, input_to_dict_index, i*nfeatures_per_token);

//else

dist_reps[i] = buildSparseAffineTransform(winputdistrep.subVarArray(sum,nfeatures_for_each_token[i]+1), token_features, input_to_dict_index, sum_dict);

//if(nhidden_dist_rep_predictor > 0)

//{

// dist_rep_hids[i] = add_transfer_func(dist_rep_hids[i]);

// dist_reps.append(affine_transform(dist_rep_hids[i],woutdistrep));

//}

sum += nfeatures_for_each_token[i]+1;

sum_dict += nfeatures_for_each_token[i];

}

dist_rep = vconcat(dist_reps);

}

else

{

Var dist_rep_hid;

if(nhidden_dist_rep_predictor > 0) dist_rep_hid = buildSparseAffineTransform(winputdistrep, token_features, input_to_dict_index, 0);

else dist_rep = buildSparseAffineTransform(winputdistrep, token_features, input_to_dict_index, 0);

if(nhidden_dist_rep_predictor > 0)

{

dist_rep_hid = add_transfer_func(dist_rep_hid);

dist_rep = affine_transform(dist_rep_hid,woutdistrep);

}

}

}

dp_input = vconcat(dist_reps);

}

if(fixed_output_weights && !use_dist_reps && ((task_index < 0 && nhidden <= 0) || (task_index>=0 && nhidden_extra_tasks[task_index] <= 0)))

PLERROR("In DistRepNNet::buildVarGraph(): fixed output weights is not implemented for sparse input and no hidden layers");

// Build main network graph.

buildOutputFromInput(task_index);

target = Var(targetsize_);

TVec<int> target_cols(1);

target_cols[0] = task_set->inputsize();

Var reind_target;

if(target_dictionary && task_index < 0)

reind_target = reindexed_target(target,input,target_dictionary,target_cols);

else

reind_target = reindexed_target(target,input,task_set,target_cols);

//reind_target = target;

if(weightsize_>0)

{

if (weightsize_!=1)

PLERROR("In DistRepNNet::buildVarGraph(): expected weightsize to be 1 or 0 (or unspecified = -1, meaning 0), got %d",weightsize_);

sampleweight = Var(1, "weight");

}

string pt = lowerstring( penalty_type );

if( pt == "l1" )

penalty_type = "L1";

else if( pt == "l1_square" || pt == "l1 square" || pt == "l1square" )

penalty_type = "L1_square";

else if( pt == "l2_square" || pt == "l2 square" || pt == "l2square" )

penalty_type = "L2_square";

else if( pt == "l2" )

{

PLWARNING("L2 penalty not supported, assuming you want L2 square");

penalty_type = "L2_square";

}

else

PLERROR("penalty_type \"%s\" not supported", penalty_type.c_str());

buildCosts(output, reind_target, task_index);

// Build functions.

if(task_index < 0)

buildFuncs(invars);

else

buildFuncs(invars_extra_tasks[task_index]);

// if(consider_unseen_classes) cost_paramf.resize(getTrainCostNames().length());

}
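`buildVarGraph()` assigns each input column a dictionary index, deduplicating dictionaries by pointer so that columns sharing a `Dictionary` share an index. When `nfeatures_for_each_token` is set, it lays out `winputdistrep` as one block per token: `nfeatures_for_each_token[t]` feature weight matrices followed by one bias, hence the `sum += n + 1` and `sum_dict += n` offsets. The sketch below reproduces this bookkeeping with standard containers; `Dict`, `assignDictIndices` and `tokenOffsets` are hypothetical stand-ins for `PP<Dictionary>` and the PLearn code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

struct Dict {};  // stand-in for PLearn's Dictionary
using DictPtr = std::shared_ptr<Dict>;

// Returns input_to_dict_index: -1 for columns without a dictionary,
// otherwise the position of the (pointer-deduplicated) dictionary.
std::vector<int> assignDictIndices(const std::vector<DictPtr>& column_dicts,
                                   std::vector<DictPtr>& dictionaries) {
    std::vector<int> input_to_dict_index(column_dicts.size(), -1);
    for (std::size_t i = 0; i < column_dicts.size(); ++i) {
        if (!column_dicts[i]) continue;
        auto it = std::find(dictionaries.begin(), dictionaries.end(), column_dicts[i]);
        if (it == dictionaries.end()) {
            dictionaries.push_back(column_dicts[i]);
            input_to_dict_index[i] = static_cast<int>(dictionaries.size()) - 1;
        } else {
            input_to_dict_index[i] = static_cast<int>(it - dictionaries.begin());
        }
    }
    return input_to_dict_index;
}

// For each token t: (offset of its weight block in winputdistrep,
//                    offset of its first feature column in the input).
std::vector<std::pair<int, int>> tokenOffsets(const std::vector<int>& nfeatures_for_each_token) {
    std::vector<std::pair<int, int>> offsets;
    int sum = 0, sum_dict = 0;
    for (int n : nfeatures_for_each_token) {
        assert(n > 0);
        offsets.emplace_back(sum, sum_dict);
        sum += n + 1;  // n feature weight matrices + 1 bias
        sum_dict += n;
    }
    return offsets;
}
```

The total block size is therefore `sum(nfeatures_for_each_token) + ntokens`, which is the size `winputdistrep` is resized to in the listing above.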

| string PLearn::DistRepNNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 97 of file DistRepNNet.cc.

Referenced by train().

| void PLearn::DistRepNNet::computeCostsFromOutputs | ( | const Vec & input, const Vec & output, const Vec & target, Vec & costs | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some information in the input; that's why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 1395 of file DistRepNNet.cc.

References PLERROR.

{

PLERROR("In DistRepNNet::computeCostsFromOutputs: output is not enough to compute cost");

//computeOutputAndCosts(inputv,targetv,outputv,costsv);

}

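| void PLearn::DistRepNNet::computeOutput | ( | const Vec & input, Vec & output | ) | const [virtual] |

*** SUBCLASS WRITING: ***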

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 1405 of file DistRepNNet.cc.

References f, PLearn::PLearner::inputsize_, MISSING_VALUE, options, output_comp, PLearn::TVec< T >::resize(), row, PLearn::TVec< T >::subVec(), target_dictionary, target_values, PLearn::PLearner::train_set, and PLearn::VMat::width().

{

f->sizefprop(inputv,output_comp);

row.resize(inputsize_);

row << inputv;

row.resize(train_set->width());

row.subVec(inputsize_,train_set->width()-inputsize_).fill(MISSING_VALUE);

if(target_dictionary)

target_dictionary->getValues(options,target_values);

else

train_set->getValues(row,inputsize_,target_values);

// TO REMOVE!!!

//for(int i=0; i<outputv.length(); i++)

//{

// outputv[(int)target_values[i]] = output->valuedata[i];

//}

outputv[0] = target_values[(int)output_comp[0]];

//outputv[0] = (int)output_comp[0];

}
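`computeOutput()` does not return the network scores directly: `f` produces an index (`output_comp[0]`) into the list of candidate target values obtained from `target_dictionary` or `train_set->getValues()`, and that index is mapped back to the actual target value. Assuming the index is the argmax over one score per candidate (how `f` computes it is not shown in this section), the decision step reduces to the following sketch; `predictTargetValue` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Picks the highest-scoring candidate and returns its target value,
// analogous to outputv[0] = target_values[(int)output_comp[0]].
double predictTargetValue(const std::vector<double>& scores,
                          const std::vector<double>& target_values) {
    assert(!scores.empty() && scores.size() == target_values.size());
    const auto best = std::max_element(scores.begin(), scores.end());
    const auto index = static_cast<std::size_t>(std::distance(scores.begin(), best));
    return target_values[index];
}
```

Returning the target value rather than the index is what lets the learner's output be compared with the raw target column of the dataset.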

| void PLearn::DistRepNNet::computeOutputAndCosts | ( | const Vec & input, const Vec & target, Vec & output, Vec & costs | ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 1428 of file DistRepNNet.cc.

References PLearn::PLearner::inputsize_, MISSING_VALUE, options, output_comp, PLearn::TVec< T >::resize(), row, PLearn::TVec< T >::subVec(), target_dictionary, target_values, test_costf, PLearn::PLearner::train_set, and PLearn::VMat::width().

{

test_costf->sizefprop(inputv&targetv, output_comp&costsv);

row.resize(inputsize_);

row << inputv;

row.resize(train_set->width());

row.subVec(inputsize_,train_set->width()-inputsize_).fill(MISSING_VALUE);

if(target_dictionary)

target_dictionary->getValues(options,target_values);

else

train_set->getValues(row,inputsize_,target_values);

outputv[0] = target_values[(int)output_comp[0]];

//outputv[0] = (int)output_comp[0];

//for(int i=0; i<costsv.length(); i++)

// if(is_missing(costsv[i]))

// cout << "WHAT THE FUCK!!!" << endl;

}

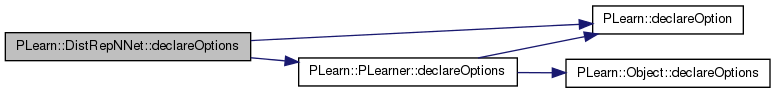

| void PLearn::DistRepNNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the options of this class (through declareOption()).

Reimplemented from PLearn::PLearner.

Definition at line 145 of file DistRepNNet.cc.

References batch_size, bias_decay, PLearn::OptionBase::buildoption, cost_funcs, PLearn::declareOption(), PLearn::PLearner::declareOptions(), direct_in_to_out, direct_in_to_out_bias_decay, direct_in_to_out_weight_decay, dist_rep_dim, do_not_change_params, extra_tasks, fixed_output_weights, hidden_transfer_func, initialization_method, initialize_sparse_params_to_zero, input_dist_rep_predictor_bias_decay, input_dist_rep_predictor_weight_decay, layer1_bias_decay, layer1_theta_predictor_bias_decay, layer1_theta_predictor_weight_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::OptionBase::learntoption, margin, nfeatures_for_each_token, nfeatures_per_token, nhidden, nhidden2, nhidden2_extra_tasks, nhidden_dist_rep_predictor, nhidden_extra_tasks, nhidden_theta_predictor, ntokens, ntokens_extra_tasks, optimizer, optimizer_extra_tasks, output_dist_rep_predictor_bias_decay, output_dist_rep_predictor_weight_decay, output_layer_bias_decay, output_layer_theta_predictor_bias_decay, output_layer_theta_predictor_weight_decay, output_layer_weight_decay, output_transfer_func, paramsvalues, penalty_type, target_dictionary, target_dist_rep, PLearn::PLearner::train_set, use_dist_reps, use_extra_tasks_only_on_first_epoch, use_output_weights_bases, and weight_decay.

{

declareOption(ol, "nhidden", &DistRepNNet::nhidden, OptionBase::buildoption,

"Number of hidden units in first hidden layer (0 means no hidden layer).\n");

declareOption(ol, "nhidden2", &DistRepNNet::nhidden2, OptionBase::buildoption,

"Number of hidden units in second hidden layer (0 means no hidden layer).\n");

declareOption(ol, "weight_decay", &DistRepNNet::weight_decay, OptionBase::buildoption,

"Global weight decay for all layers.\n");

declareOption(ol, "bias_decay", &DistRepNNet::bias_decay, OptionBase::buildoption,

"Global bias decay for all layers.\n");

declareOption(ol, "layer1_weight_decay", &DistRepNNet::layer1_weight_decay, OptionBase::buildoption,

"Additional weight decay for the first hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer1_bias_decay", &DistRepNNet::layer1_bias_decay, OptionBase::buildoption,

"Additional bias decay for the first hidden layer. Is added to bias_decay.\n");

declareOption(ol, "layer2_weight_decay", &DistRepNNet::layer2_weight_decay, OptionBase::buildoption,

"Additional weight decay for the second hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer2_bias_decay", &DistRepNNet::layer2_bias_decay, OptionBase::buildoption,

"Additional bias decay for the second hidden layer. Is added to bias_decay.\n");

declareOption(ol, "layer1_theta_predictor_weight_decay", &DistRepNNet::layer1_theta_predictor_weight_decay, OptionBase::buildoption,

"Additional weight decay for the first hidden layer of the theta-predictor. Is added to weight_decay.\n");

declareOption(ol, "layer1_theta_predictor_bias_decay", &DistRepNNet::layer1_theta_predictor_bias_decay, OptionBase::buildoption,

"Additional bias decay for the first hidden layer of the theta-predictor. Is added to bias_decay.\n");

declareOption(ol, "output_layer_weight_decay", &DistRepNNet::output_layer_weight_decay, OptionBase::buildoption,

"Additional weight decay for the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_bias_decay", &DistRepNNet::output_layer_bias_decay, OptionBase::buildoption,

"Additional bias decay for the output layer. Is added to 'bias_decay'.\n");

declareOption(ol, "output_layer_theta_predictor_weight_decay", &DistRepNNet::output_layer_theta_predictor_weight_decay, OptionBase::buildoption,

"Additional weight decay for the output layer of the theta-predictor. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_theta_predictor_bias_decay", &DistRepNNet::output_layer_theta_predictor_bias_decay, OptionBase::buildoption,

"Additional bias decay for the output layer of the theta-predictor. Is added to 'bias_decay'.\n");

declareOption(ol, "output_dist_rep_predictor_weight_decay", &DistRepNNet::output_dist_rep_predictor_weight_decay, OptionBase::buildoption,

"Additional weight decay for the weights going from the hidden layer of the distributed representation predictor. Is added to 'weight_decay'.\n");

declareOption(ol, "output_dist_rep_predictor_bias_decay", &DistRepNNet::output_dist_rep_predictor_bias_decay, OptionBase::buildoption,

"Additional bias decay for the weights going from the hidden layer of the distributed representation predictor. Is added to 'bias_decay'.\n");

declareOption(ol, "input_dist_rep_predictor_weight_decay", &DistRepNNet::input_dist_rep_predictor_weight_decay, OptionBase::buildoption,

"Additional weight decay for the weights going from the input layer of the distributed representation predictor. Is added to 'weight_decay'.\n");

declareOption(ol, "input_dist_rep_predictor_bias_decay", &DistRepNNet::input_dist_rep_predictor_bias_decay, OptionBase::buildoption,

"Additional bias decay for the weights going from the input layer of the distributed representation predictor. Is added to 'bias_decay'.\n");

declareOption(ol, "direct_in_to_out_weight_decay", &DistRepNNet::direct_in_to_out_weight_decay, OptionBase::buildoption,

"Additional weight decay for the weights going from the input directly to the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "direct_in_to_out_bias_decay", &DistRepNNet::direct_in_to_out_bias_decay, OptionBase::buildoption,

"Additional bias decay for the weights going from the input directly to the output layer. Is added to 'bias_decay'.\n");
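The decay options above all follow one additive rule: each layer-specific value "Is added to" the global `weight_decay` or `bias_decay`. Below is a minimal sketch of that rule. `DecayConfig`, the layer-name keys and both helper functions are hypothetical and are not part of PLearn.

```cpp
#include <map>
#include <string>

// Hypothetical container for the global decays plus optional per-layer extras.
struct DecayConfig {
    double weight_decay = 0.0;  // global weight decay for all layers
    double bias_decay = 0.0;    // global bias decay for all layers
    // Additional decays keyed by a hypothetical layer name (e.g. "layer1").
    std::map<std::string, double> extra_weight_decay;
    std::map<std::string, double> extra_bias_decay;
};

// Effective weight decay = global value + the layer's additional value (0 if none).
inline double effectiveWeightDecay(const DecayConfig& c, const std::string& layer) {
    auto it = c.extra_weight_decay.find(layer);
    return c.weight_decay + (it == c.extra_weight_decay.end() ? 0.0 : it->second);
}

// Effective bias decay = global value + the layer's additional value (0 if none).
inline double effectiveBiasDecay(const DecayConfig& c, const std::string& layer) {
    auto it = c.extra_bias_decay.find(layer);
    return c.bias_decay + (it == c.extra_bias_decay.end() ? 0.0 : it->second);
}
```

With `weight_decay = 0.5` and an additional `0.25` for `"layer1"`, that layer's effective weight decay is `0.75`, while any layer without an entry keeps `0.5`.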

declareOption(ol, "penalty_type", &DistRepNNet::penalty_type,

OptionBase::buildoption,

"Penalty to use on the weights (for weight and bias decay).\n"

"Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L1_square\": square of the L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");
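The three `penalty_type` values differ only in how the weights are aggregated before the decay coefficient is applied. The sketch below evaluates each of them on a flat weight vector. The function name and the exception on unknown names are illustrative assumptions, not PLearn's implementation.

```cpp
#include <cmath>
#include <stdexcept>
#include <string>
#include <vector>

// Returns decay * penalty(w) for the penalty types listed for penalty_type.
inline double weightPenalty(const std::vector<double>& w, double decay,
                            const std::string& penalty_type = "L2_square") {
    double l1 = 0.0, l2sq = 0.0;
    for (double x : w) {
        l1 += std::fabs(x);  // L1 norm: sum of |x|
        l2sq += x * x;       // squared L2 norm: sum of x^2
    }
    if (penalty_type == "L1") return decay * l1;
    if (penalty_type == "L1_square") return decay * l1 * l1;
    if (penalty_type == "L2_square") return decay * l2sq;
    throw std::invalid_argument("unknown penalty_type: " + penalty_type);
}
```

For `w = {1, -2}` and a decay of `0.5`, the penalties are `1.5` (L1), `4.5` (L1_square) and `2.5` (L2_square).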

declareOption(ol, "fixed_output_weights", &DistRepNNet::fixed_output_weights, OptionBase::buildoption,

"If true then the output weights are not learned. They are initialized to +1 or -1 randomly.\n");
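When `fixed_output_weights` is set, the option text says each output weight starts at +1 or -1 at random. A minimal sketch of that initialization, assuming equal probability for both signs; the function name and the use of `std::mt19937` are illustrative choices, not PLearn's.

```cpp
#include <cstddef>
#include <random>
#include <vector>

// Draws n weights, each +1 or -1 with probability 1/2 (assumed), from a seeded generator.
inline std::vector<double> randomSignWeights(std::size_t n, unsigned seed) {
    std::mt19937 gen(seed);
    std::bernoulli_distribution coin(0.5);
    std::vector<double> w(n);
    for (auto& x : w) x = coin(gen) ? 1.0 : -1.0;
    return w;
}
```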

declareOption(ol, "direct_in_to_out", &DistRepNNet::direct_in_to_out, OptionBase::buildoption,

"If true then direct input to output weights will be added (if nhidden > 0).\n");

declareOption(ol, "output_transfer_func", &DistRepNNet::output_transfer_func, OptionBase::buildoption,

"What transfer function to use for the output layer? One of: \n"

" - \"tanh\" \n"

" - \"sigmoid\" \n"

" - \"exp\" \n"

" - \"softplus\" \n"

" - \"softmax\" \n"

" - \"log_softmax\" \n"

" - \"hard_slope\" \n"

" - \"symm_hard_slope\" \n"

"An empty string or \"none\" means no output transfer function \n");

declareOption(ol, "hidden_transfer_func", &DistRepNNet::hidden_transfer_func, OptionBase::buildoption,

"What transfer function to use for the hidden units? One of: \n"

" - \"linear\" \n"

" - \"tanh\" \n"

" - \"sigmoid\" \n"

" - \"exp\" \n"

" - \"softplus\" \n"

" - \"softmax\" \n"

" - \"log_softmax\" \n"

" - \"hard_slope\" \n"

" - \"symm_hard_slope\" \n");
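Most of the transfer-function names accepted by `output_transfer_func` and `hidden_transfer_func` have standard definitions. The sketch below applies them to a whole vector. It treats `""`, `"none"` and `"linear"` as the identity and leaves out `hard_slope` and `symm_hard_slope` because their parameterization is not given here. It is an illustration, not PLearn's code.

```cpp
#include <algorithm>
#include <cmath>
#include <stdexcept>
#include <string>
#include <vector>

// Applies the named transfer function to v and returns the result.
inline std::vector<double> applyTransfer(std::vector<double> v, const std::string& name) {
    if (name.empty() || name == "none" || name == "linear") return v;  // identity
    if (v.empty()) return v;
    if (name == "softmax" || name == "log_softmax") {
        // Subtract the max before exponentiating for numerical stability.
        double m = *std::max_element(v.begin(), v.end());
        double sum = 0.0;
        for (double x : v) sum += std::exp(x - m);
        double log_z = m + std::log(sum);  // log of the normalizing constant
        for (double& x : v) x = (name == "softmax") ? std::exp(x - log_z) : x - log_z;
        return v;
    }
    for (double& x : v) {
        if (name == "tanh") x = std::tanh(x);
        else if (name == "sigmoid") x = 1.0 / (1.0 + std::exp(-x));
        else if (name == "exp") x = std::exp(x);
        // softplus(x) = log(1 + e^x), written to avoid overflow for large x.
        else if (name == "softplus") x = (x > 0) ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
        else throw std::invalid_argument("unsupported transfer function: " + name);
    }
    return v;
}
```

`softmax` and `log_softmax` act on the whole vector. The other functions act element by element, so `log_softmax` is simply the elementwise log of `softmax`.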

declareOption(ol, "cost_funcs", &DistRepNNet::cost_funcs, OptionBase::buildoption,

"A list of cost functions to use\n"

"in the form \"[ cf1; cf2; cf3; ... ]\" where each function is one of: \n"

" - \"mse_onehot\" (for classification)\n"

" - \"NLL\" (negative log likelihood -log(p[c]) for classification) \n"

" - \"class_error\" (classification error) \n"

" - \"margin_perceptron_cost\" (a hard version of the cross_entropy, uses the 'margin' option)\n"

"The FIRST function of the list will be used as \n"

"the objective function to optimize \n"

"(possibly with an added weight decay penalty) \n");
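Three of the listed classification costs can be written directly from their descriptions. The sketch below assumes that `probs` holds class probabilities (for example a softmax output, not log-probabilities) and that `c` is the target class index. The 1/0 one-hot coding used by `mseOneHot` is also an assumption. All three function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

// "NLL": -log(p[c]).
inline double nllCost(const std::vector<double>& probs, std::size_t c) {
    assert(c < probs.size());
    return -std::log(probs[c]);
}

// "class_error": 0 if the most probable class is c, 1 otherwise.
inline double classError(const std::vector<double>& probs, std::size_t c) {
    assert(c < probs.size());
    auto best = std::distance(probs.begin(), std::max_element(probs.begin(), probs.end()));
    return static_cast<std::size_t>(best) == c ? 0.0 : 1.0;
}

// "mse_onehot": squared distance to a one-hot target (1 at c, 0 elsewhere, assumed).
inline double mseOneHot(const std::vector<double>& out, std::size_t c) {
    assert(c < out.size());
    double sum = 0.0;
    for (std::size_t k = 0; k < out.size(); ++k) {
        double target = (k == c) ? 1.0 : 0.0;
        sum += (out[k] - target) * (out[k] - target);
    }
    return sum;
}
```

For `probs = {0.25, 0.5, 0.25}` and target class 1, the sketch gives an NLL of `log 2`, a classification error of `0` and a one-hot MSE of `0.375`. If `cost_funcs` were `"[ NLL; class_error ]"`, only the first entry (NLL) would be optimized.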

declareOption(ol, "margin", &DistRepNNet::margin, OptionBase::buildoption,

"Margin requirement, used only with the margin_perceptron_cost cost function.\n"

"It should be positive, and larger values regularize more.\n");
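The source describes `margin_perceptron_cost` only as a hard version of the cross-entropy that uses `margin`. One common formulation of such a cost for a single binary output is a hinge. The sketch below uses that form and assumes a target `t` in {0, 1} mapped to the sign `y = 2t - 1`. This is an assumed formulation, not necessarily PLearn's exact definition.

```cpp
#include <algorithm>
#include <cassert>

// Hinge-style margin cost for one binary output (assumed formulation).
inline double marginPerceptronCost(double output, int t, double margin) {
    assert(t == 0 || t == 1);
    assert(margin > 0.0);  // the option text says the margin should be positive
    double y = 2.0 * t - 1.0;  // map target {0, 1} to sign {-1, +1}
    // Zero once the signed output clears the margin; grows linearly otherwise.
    return std::max(0.0, margin - y * output);
}
```

Under this form, a larger `margin` keeps the cost nonzero for more examples, so training keeps pushing their outputs further from zero.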

declareOption(ol, "do_not_change_params", &DistRepNNet::do_not_change_params, OptionBase::buildoption,

"If set to 1, the weights will be neither loaded nor initialized at build time.\n");

declareOption(ol, "optimizer", &DistRepNNet::optimizer, OptionBase::buildoption,

"Specify the optimizer to use\n");

declareOption(ol, "batch_size", &DistRepNNet::batch_size, OptionBase::buildoption,

"How many samples to use to estimate the average gradient before updating the weights.\n"

"0 is equivalent to specifying training_set->length() \n");
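Here is a minimal sketch of what `batch_size` controls. One pass over the data is split into mini-batches, and the gradients within each batch are averaged before one weight update. `batch_size == 0` stands for the whole training set, as the option text says. The helper names, and the choice to let the last batch be smaller, are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Sizes of the mini-batches in one pass; batch_size == 0 means the full set.
// The last batch is allowed to be smaller (an assumption).
inline std::vector<std::size_t> batchSizes(std::size_t n_examples, std::size_t batch_size) {
    assert(n_examples > 0);
    std::size_t bs = (batch_size == 0) ? n_examples : batch_size;
    std::vector<std::size_t> sizes;
    for (std::size_t start = 0; start < n_examples; start += bs)
        sizes.push_back(std::min(bs, n_examples - start));
    return sizes;
}

// Averages the per-example gradients of one mini-batch before a weight update.
inline std::vector<double> averageGradient(const std::vector<std::vector<double>>& grads) {
    assert(!grads.empty());
    std::vector<double> avg(grads[0].size(), 0.0);
    for (const auto& g : grads)
        for (std::size_t i = 0; i < avg.size(); ++i) avg[i] += g[i];
    for (double& x : avg) x /= static_cast<double>(grads.size());
    return avg;
}
```

With 10 examples and `batch_size = 4`, a pass makes three updates from batches of 4, 4 and 2 examples. With `batch_size = 0`, it makes a single update from all 10.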

declareOption(ol, "dist_rep_dim", &DistRepNNet::dist_rep_dim, OptionBase::buildoption,

"Dimensionality (number of components) of distributed representations.\n"

"The first element is the dimensionality of the input distributed representations\n"

"and the last one is the dimensionality of the target distributed representations.\n"

// "Those values are taken one by one, as the Dictionary objects are extracted.\n"

// "When nnet_architecture == \"dist_rep_predictor\", the first element of dist_rep_dim\n"

// "indicates the dimensionality to the predicted distributed representation.\n"

);

declareOption(ol, "initialization_method", &DistRepNNet::initialization_method, OptionBase::buildoption,

"The method used to initialize the weights:\n"

" - \"normal_linear\" = a normal law with variance 1/n_inputs\n"

" - \"normal_sqrt\" = a normal law with variance 1/sqrt(n_inputs)\n"

" - \"uniform_linear\" = a uniform law in [-1/n_inputs, 1/n_inputs]\n"

" - \"uniform_sqrt\" = a uniform law in [-1/sqrt(n_inputs), 1/sqrt(n_inputs)]\n"

" - \"zero\" = all weights are set to 0\n");
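The five `initialization_method` values map directly onto standard `<random>` distributions. Note that "a normal law with variance v" needs a standard deviation of `sqrt(v)`. The sketch below follows the option text for a layer with `n_inputs` inputs. The function name and the seeded `std::mt19937` are illustrative choices, not PLearn's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <stdexcept>
#include <string>
#include <vector>

// Draws n_weights initial weights using one of the listed methods.
inline std::vector<double> initWeights(std::size_t n_weights, std::size_t n_inputs,
                                       const std::string& method, unsigned seed) {
    std::vector<double> w(n_weights, 0.0);
    if (method == "zero") return w;  // all weights set to 0
    assert(n_inputs > 0);
    std::mt19937 gen(seed);
    const double n = static_cast<double>(n_inputs);
    if (method == "normal_linear" || method == "normal_sqrt") {
        // Variance 1/n or 1/sqrt(n); std::normal_distribution takes a standard deviation.
        double variance = (method == "normal_linear") ? 1.0 / n : 1.0 / std::sqrt(n);
        std::normal_distribution<double> dist(0.0, std::sqrt(variance));
        for (double& x : w) x = dist(gen);
    } else if (method == "uniform_linear" || method == "uniform_sqrt") {
        // Uniform in [-b, b) with b = 1/n or 1/sqrt(n).
        double bound = (method == "uniform_linear") ? 1.0 / n : 1.0 / std::sqrt(n);
        std::uniform_real_distribution<double> dist(-bound, bound);
        for (double& x : w) x = dist(gen);
    } else {
        throw std::invalid_argument("unknown initialization_method: " + method);
    }
    return w;
}
```

For a layer with 4 inputs, `uniform_linear` draws from [-0.25, 0.25) and `uniform_sqrt` from [-0.5, 0.5).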

declareOption(ol, "use_dist_reps", &DistRepNNet::use_dist_reps, OptionBase::buildoption,

"Whether distributed representations should be used.\n");

declareOption(ol, "use_output_weights_bases", &DistRepNNet::use_output_weights_bases, OptionBase::buildoption,

"Whether bases for the output weights should be used.\n");

declareOption(ol, "use_extra_tasks_only_on_first_epoch", &DistRepNNet::use_extra_tasks_only_on_first_epoch, OptionBase::buildoption,

"Whether the extra tasks should be used only during the first epoch.\n");

declareOption(ol, "initialize_sparse_params_to_zero", &DistRepNNet::initialize_sparse_params_to_zero, OptionBase::buildoption,

"Whether the parameters on the sparse input should be initialized to zero.\n");

/*

declareOption(ol, "nnet_architecture", &DistRepNNet::nnet_architecture, OptionBase::buildoption,

"Architecture of the neural network:\n"

" - \"standard\"\n"

//" - \"csMTL\" (context-sensitive Multiple Task Learning, at NIPS 2005 Inductive Transfer Workshop)\n"

" - \"theta_predictor\" (standard NNet with output weights being PREDICTED) \n"

" - \"dist_rep_predictor\" (standard NNet with distributed representation being PREDICTED) \n"

" - \"linear\" (linear classifier that doesn't learn distributed representations) \n"

);

*/

declareOption(ol, "ntokens", &DistRepNNet::ntokens, OptionBase::buildoption,

"Number of tokens for which to predict a distributed representation.\n");

declareOption(ol, "nfeatures_per_token", &DistRepNNet::nfeatures_per_token, OptionBase::buildoption,

"Number of features per token.\n");

declareOption(ol, "nfeatures_for_each_token", &DistRepNNet::nfeatures_for_each_token, OptionBase::buildoption,

"Number of features for each token (nfeatures_per_token is used if nfeatures_for_each_token.length()==0).\n");
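The fallback between `nfeatures_for_each_token` and `nfeatures_per_token` is stated in the option text. When the per-token vector is empty, every token gets `nfeatures_per_token` features. The sketch below shows that rule. The function name is hypothetical, and the check that a non-empty vector has one entry per token is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of features for each of the ntokens tokens.
inline std::vector<int> featuresPerToken(int ntokens, int nfeatures_per_token,
                                         const std::vector<int>& nfeatures_for_each_token) {
    if (nfeatures_for_each_token.empty())  // fall back to nfeatures_per_token
        return std::vector<int>(static_cast<std::size_t>(ntokens), nfeatures_per_token);
    // Assumed: a non-empty vector gives exactly one count per token.
    assert(nfeatures_for_each_token.size() == static_cast<std::size_t>(ntokens));
    return nfeatures_for_each_token;
}
```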

declareOption(ol, "nhidden_dist_rep_predictor", &DistRepNNet::nhidden_dist_rep_predictor, OptionBase::buildoption,

"Number of hidden units of the neural network predictor for the distributed representation.\n");

declareOption(ol, "target_dictionary", &DistRepNNet::target_dictionary, OptionBase::buildoption,

"User specified Dictionary for the target field. If null, then it is extracted from the training set VMatrix.\n");

declareOption(ol, "target_dist_rep", &DistRepNNet::target_dist_rep, OptionBase::buildoption,

"User specified distributed representation for the target field. If null, then it is learned from the training set VMatrix.\n");

declareOption(ol, "paramsvalues", &DistRepNNet::paramsvalues, OptionBase::learntoption,

"The learned parameter vector\n");

declareOption(ol, "nhidden_theta_predictor", &DistRepNNet::nhidden_theta_predictor, OptionBase::buildoption,

"Number of hidden units of the neural network predictor for the hidden to output weights.\n");