PLearn 0.1

#include <NeuralNet.h>

Public Types | |

| typedef Learner | inherited |

Public Member Functions | |

| NeuralNet () | |

| virtual | ~NeuralNet () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual NeuralNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| **** SUBCLASS WRITING: **** This method should be redefined in subclasses, to just call inherited::build() and then build_() | |

| virtual void | forget () |

| void | initializeParams () |

| virtual void | train (VMat training_set) |

| virtual void | use (const Vec &inputvec, Vec &prediction) |

| virtual int | costsize () const |

| **** SUBCLASS WRITING: should be re-defined if user re-defines computeCost default version returns | |

| virtual Array< string > | costNames () const |

| virtual Array< string > | testResultsNames () |

| virtual void | useAndCost (const Vec &inputvec, const Vec &targetvec, Vec outputvec, Vec costvec) |

| void | computeCost (const Vec &inputvec, const Vec &targetvec, const Vec &outputvec, const Vec &costvec) |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. | |

Static Public Member Functions | |

| static string | _classname_ () |


| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Func | f |

| Func | costf |

| Func | output_and_target_to_cost |

| int | nhidden |

| int | nhidden2 |

| real | weight_decay |

| real | bias_decay |

| real | layer1_weight_decay |

| real | layer1_bias_decay |

| real | layer2_weight_decay |

| real | layer2_bias_decay |

| real | output_layer_weight_decay |

| real | output_layer_bias_decay |

| real | direct_in_to_out_weight_decay |

| bool | global_weight_decay |

| bool | direct_in_to_out |

| string | output_transfer_func |

| int | iseed |

| Array< string > | cost_funcs |

| a list of cost functions to use in the form "[ cf1; cf2; cf3; ... ]" | |

| real | semisupervised_flatten_factor |

| Vec | semisupervised_prior |

| PP< Optimizer > | optimizer |

| int | batch_size |

| int | nepochs |

| string | saveparams |

| Array< Vec > | normalization |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declare options (data fields) for the class. | |

Protected Attributes | |

| Var | input |

| Var | target |

| Var | costweights |

| Var | target_and_weights |

| Var | w1 |

| Var | w2 |

| Var | wout |

| Var | wdirect |

| Var | output |

| VarArray | costs |

| Var | cost |

| VarArray | params |

| Vec | paramsvalues |

| Vec | initial_paramsvalues |

Private Member Functions | |

| void | build_ () |

Consider using NNet instead.

Definition at line 55 of file NeuralNet.h.

| typedef Learner PLearn::NeuralNet::inherited |

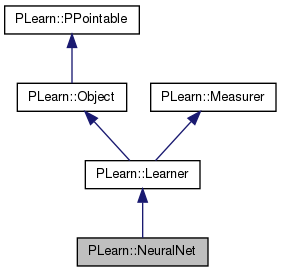

Reimplemented from PLearn::Learner.

Definition at line 83 of file NeuralNet.h.

| PLearn::NeuralNet::NeuralNet | ( | ) |

Definition at line 83 of file NeuralNet.cc.

: nhidden(0), nhidden2(0),

weight_decay(0), bias_decay(0),

layer1_weight_decay(0), layer1_bias_decay(0),

layer2_weight_decay(0), layer2_bias_decay(0),

output_layer_weight_decay(0), output_layer_bias_decay(0),

direct_in_to_out_weight_decay(0),

direct_in_to_out(false),

output_transfer_func(""),

iseed(-1),

semisupervised_flatten_factor(1),

batch_size(1),

nepochs(10000),

saveparams("")

{}

| PLearn::NeuralNet::~NeuralNet | ( | ) | [virtual] |

Definition at line 104 of file NeuralNet.cc.

{

}

| string PLearn::NeuralNet::_classname_ | ( | ) | [static] |


Reimplemented from PLearn::Learner.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

| OptionList & PLearn::NeuralNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Learner.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

| RemoteMethodMap & PLearn::NeuralNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Learner.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

Reimplemented from PLearn::Learner.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

| Object * PLearn::NeuralNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

| void PLearn::NeuralNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Learner.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

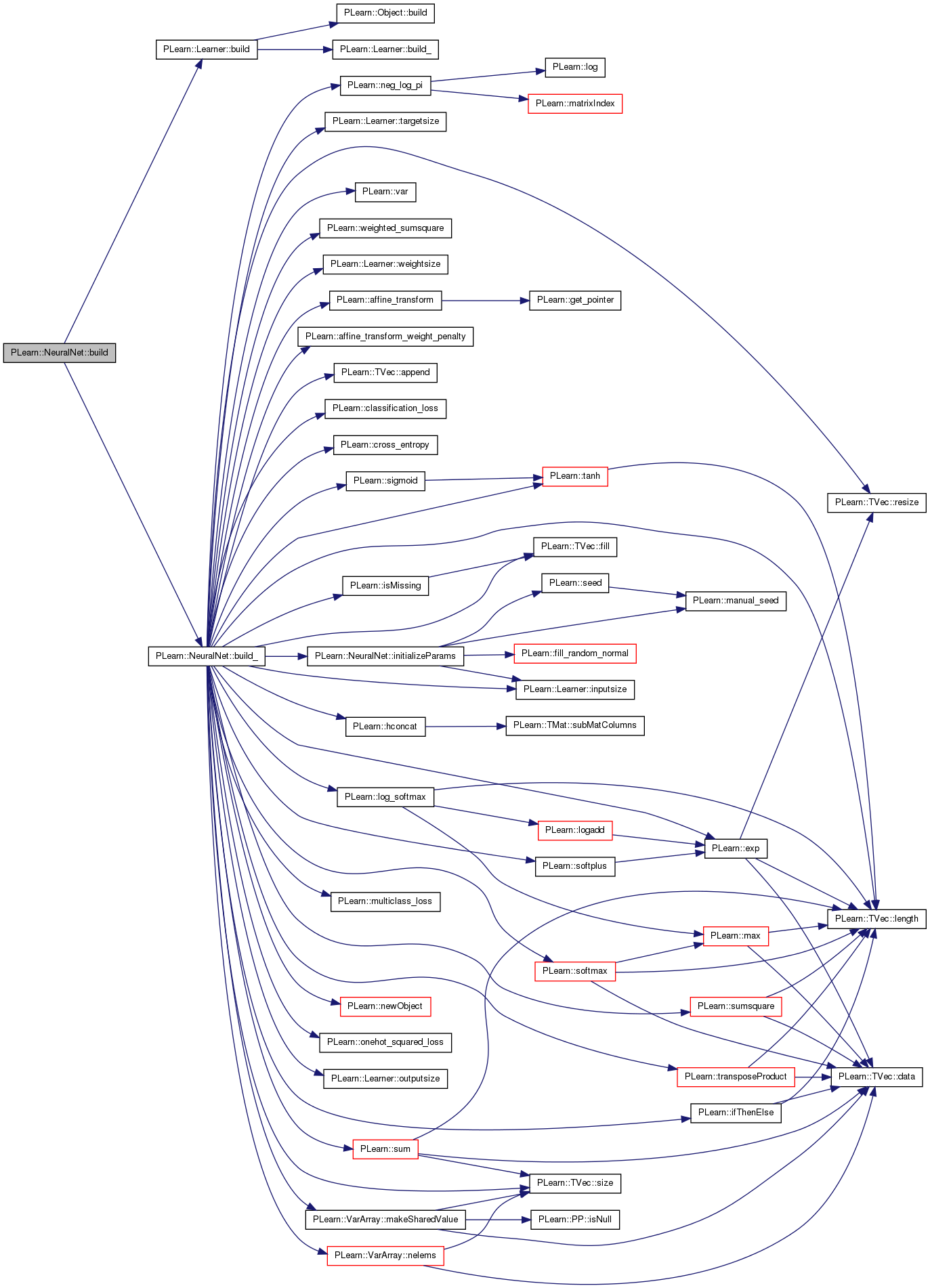

| void PLearn::NeuralNet::build | ( | ) | [virtual] |

**** SUBCLASS WRITING: **** This method should be redefined in subclasses, to just call inherited::build() and then build_()

Reimplemented from PLearn::Learner.

Definition at line 199 of file NeuralNet.cc.

References PLearn::Learner::build(), and build_().

{

inherited::build();

build_();

}
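The chaining convention can be illustrated with a small standalone sketch. It does not use PLearn: `BaseLearner` and `TinyNet` are hypothetical stand-ins, and the only behaviour modelled is that a subclass `build()` first calls `inherited::build()` and then its own private `build_()`, which resets its state so that repeated builds are safe.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for a base class such as PLearn::Learner.
class BaseLearner {
public:
    virtual ~BaseLearner() {}
    virtual void build() { build_(); }
    std::vector<std::string> log;   // records which build_ ran, in order
private:
    void build_() { log.push_back("BaseLearner::build_"); }
};

// Hypothetical subclass following the build()/build_() convention.
class TinyNet : public BaseLearner {
    typedef BaseLearner inherited;
public:
    int nhidden;                     // a "build option"
    std::vector<std::string> params; // rebuilt from scratch on every build

    TinyNet() : nhidden(0) {}

    virtual void build() {
        inherited::build();  // let the parent classes build first
        build_();            // then do this class's own construction
    }
private:
    void build_() {
        params.clear();      // start clean so that rebuilding is safe
        if (nhidden > 0) params.push_back("w1");
        params.push_back("wout");
        log.push_back("TinyNet::build_");
    }
};
```

Changing `nhidden` and calling `build()` again yields a parameter list that reflects only the new option value, which is the property the real class obtains by calling `params.resize(0)` at the start of `build_()`.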

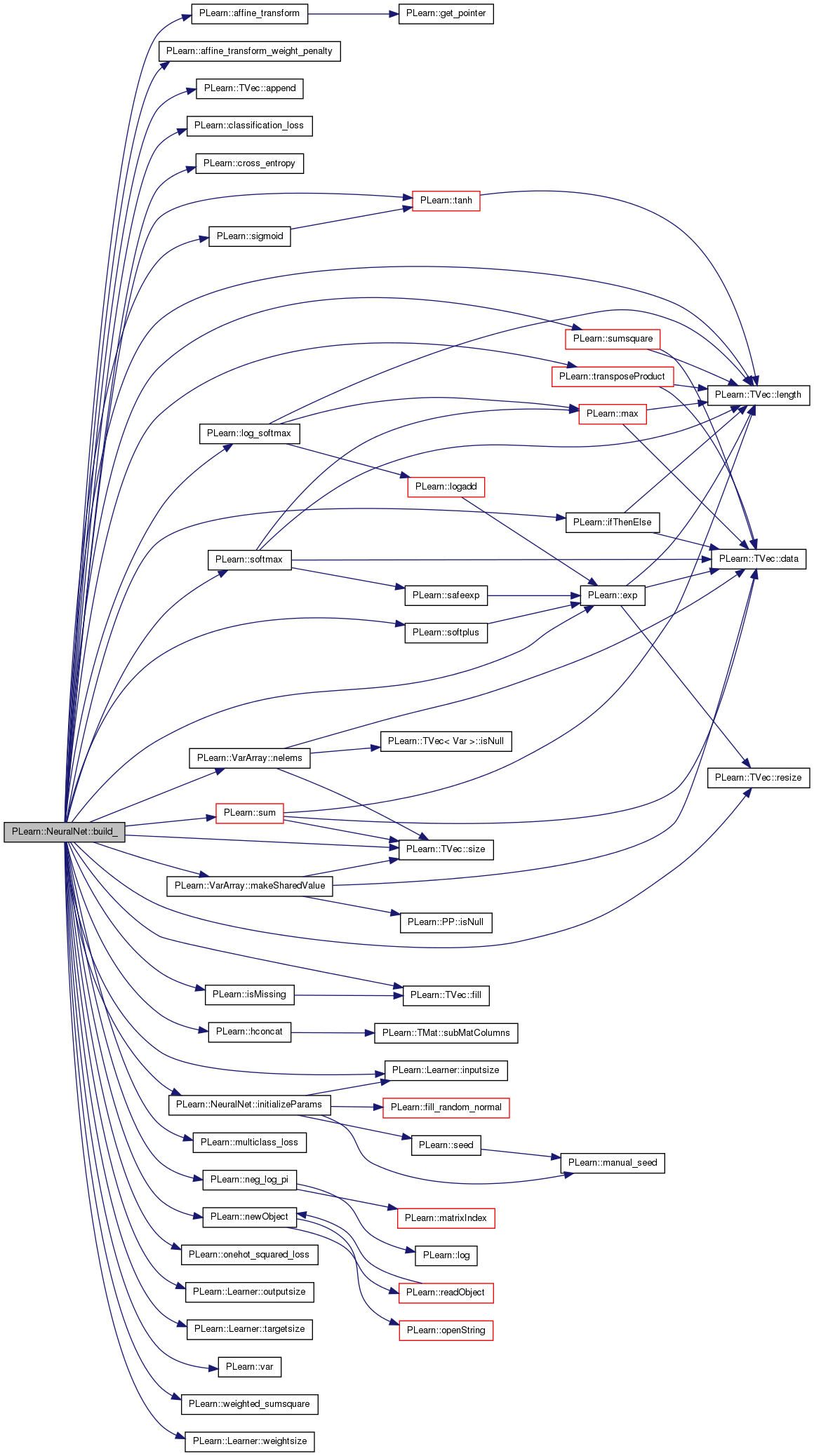

| void PLearn::NeuralNet::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: **** The build_ and build methods should be redefined in subclasses. build_ should do the actual building of the Learner according to build options (member variables) previously set. (These may have been set by hand, by a constructor, by the load method, or by setOption.) As build() may be called several times (after changing options, to "rebuild" an object with different build options), make sure your implementation can handle this properly.

Reimplemented from PLearn::Learner.

Definition at line 205 of file NeuralNet.cc.

References PLearn::affine_transform(), PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), bias_decay, PLearn::classification_loss(), cost, cost_funcs, costf, costs, costweights, PLearn::cross_entropy(), direct_in_to_out, direct_in_to_out_weight_decay, PLearn::exp(), f, PLearn::TVec< T >::fill(), PLearn::hconcat(), PLearn::ifThenElse(), initial_paramsvalues, initializeParams(), input, PLearn::Learner::inputsize(), PLearn::isMissing(), layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::TVec< T >::length(), PLearn::log_softmax(), PLearn::VarArray::makeSharedValue(), MISSING_VALUE, PLearn::multiclass_loss(), PLearn::neg_log_pi(), PLearn::VarArray::nelems(), PLearn::newObject(), nhidden, nhidden2, normalization, PLearn::onehot_squared_loss(), output, output_and_target_to_cost, output_layer_bias_decay, output_layer_weight_decay, output_transfer_func, PLearn::Learner::outputsize(), params, paramsvalues, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), semisupervised_flatten_factor, semisupervised_prior, PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::softmax(), PLearn::softplus(), PLearn::sum(), PLearn::sumsquare(), PLearn::tanh(), target, target_and_weights, PLearn::Learner::targetsize(), PLearn::transposeProduct(), PLearn::var(), w1, w2, wdirect, weight_decay, PLearn::weighted_sumsquare(), PLearn::Learner::weightsize(), and wout.

Referenced by build().

{

/*

* Create Topology Var Graph

*/

// init. basic vars

input = Var(inputsize(), "input");

if (normalization.length()) {

Var means(normalization[0]);

Var stddevs(normalization[1]);

output = (input - means) / stddevs;

} else

output = input;

params.resize(0);

// first hidden layer

if(nhidden>0)

{

w1 = Var(1+inputsize(), nhidden, "w1");

output = tanh(affine_transform(output,w1));

params.append(w1);

}

// second hidden layer

if(nhidden2>0)

{

w2 = Var(1+nhidden, nhidden2, "w2");

output = tanh(affine_transform(output,w2));

params.append(w2);

}

// output layer before transfer function

wout = Var(1+output->size(), outputsize(), "wout");

output = affine_transform(output,wout);

params.append(wout);

// direct in-to-out layer

if(direct_in_to_out)

{

wdirect = Var(inputsize(), outputsize(), "wdirect");// Var(1+inputsize(), outputsize(), "wdirect");

output += transposeProduct(wdirect, input);// affine_transform(input,wdirect);

params.append(wdirect);

}

/*

* output_transfer_func

*/

if(output_transfer_func!="")

{

if(output_transfer_func=="tanh")

output = tanh(output);

else if(output_transfer_func=="sigmoid")

output = sigmoid(output);

else if(output_transfer_func=="softplus")

output = softplus(output);

else if(output_transfer_func=="exp")

output = exp(output);

else if(output_transfer_func=="softmax")

output = softmax(output);

else if (output_transfer_func == "log_softmax")

output = log_softmax(output);

else

PLERROR("In NeuralNet::build_() unknown output_transfer_func option: %s",output_transfer_func.c_str());

}

/*

* target & weights

*/

if(weightsize() != 0 && weightsize() != 1 && targetsize()/2 != weightsize())

PLERROR("In NeuralNet::build_() weightsize must be either:\n"

"\t0: no weights on costs\n"

"\t1: single weight applied on total cost\n"

"\ttargetsize/2: vector of weights applied individually to each component of the cost\n"

"weightsize= %d; targetsize= %d.", weightsize(), targetsize());

target_and_weights= Var(targetsize(), "target_and_weights");

target = new SubMatVariable(target_and_weights, 0, 0, targetsize()-weightsize(), 1);

target->setName("target");

if(0 < weightsize())

{

costweights = new SubMatVariable(target_and_weights, targetsize()-weightsize(), 0, weightsize(), 1);

costweights->setName("costweights");

}

/*

* costfuncs

*/

int ncosts = cost_funcs.size();

if(ncosts<=0)

PLERROR("In NeuralNet::build_() Empty cost_funcs : must at least specify the cost function to optimize!");

costs.resize(ncosts);

for(int k=0; k<ncosts; k++)

{

bool handles_missing_target=false;

// create costfuncs and apply individual weights if weightsize() > 1

if(cost_funcs[k]=="mse")

if(weightsize() < 2)

costs[k]= sumsquare(output-target);

else

costs[k]= weighted_sumsquare(output-target, costweights);

else if(cost_funcs[k]=="mse_onehot")

costs[k] = onehot_squared_loss(output, target);

else if(cost_funcs[k]=="NLL") {

if (output_transfer_func == "log_softmax")

costs[k] = -output[target];

else

costs[k] = neg_log_pi(output, target);

} else if(cost_funcs[k]=="class_error")

costs[k] = classification_loss(output, target);

else if(cost_funcs[k]=="multiclass_error")

if(weightsize() < 2)

costs[k] = multiclass_loss(output, target);

else

PLERROR("In NeuralNet::build() weighted multiclass error cost not implemented.");

else if(cost_funcs[k]=="cross_entropy")

if(weightsize() < 2)

costs[k] = cross_entropy(output, target);

else

PLERROR("In NeuralNet::build() weighted cross entropy cost not implemented.");

else if (cost_funcs[k]=="semisupervised_prob_class")

{

if (output_transfer_func!="softmax")

PLWARNING("To properly use the semisupervised_prob_class criterion, the transfer function should probably be a softmax, to guarantee positive probabilities summing to 1");

if (semisupervised_prior.length()==0) // default value is (1,1,1...)

{

semisupervised_prior.resize(outputsize());

semisupervised_prior.fill(1.0);

}

costs[k] = new SemiSupervisedProbClassCostVariable(output,target,new SourceVariable(semisupervised_prior),

semisupervised_flatten_factor);

handles_missing_target=true;

}

else

{

costs[k]= dynamic_cast<Variable*>(newObject(cost_funcs[k]));

if(costs[k].isNull())

PLERROR("In NeuralNet::build_() unknown cost_func option: %s",cost_funcs[k].c_str());

if(weightsize() < 2)

costs[k]->setParents(output & target);

else

costs[k]->setParents(output & target & costweights);

costs[k]->build();

}

// apply a single global weight if weightsize() == 1

if(1 == weightsize())

costs[k]= costs[k] * costweights;

if (!handles_missing_target)

costs[k] = ifThenElse(isMissing(target),var(MISSING_VALUE),costs[k]);

}

/*

* weight and bias decay penalty

*/

// create penalties

VarArray penalties;

if(w1 && ((layer1_weight_decay + weight_decay)!=0 || (layer1_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(w1, (layer1_weight_decay + weight_decay), (layer1_bias_decay + bias_decay)));

if(w2 && ((layer2_weight_decay + weight_decay)!=0 || (layer2_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(w2, (layer2_weight_decay + weight_decay), (layer2_bias_decay + bias_decay)));

if(wout && ((output_layer_weight_decay + weight_decay)!=0 || (output_layer_bias_decay + bias_decay)!=0))

penalties.append(affine_transform_weight_penalty(wout, (output_layer_weight_decay + weight_decay), (output_layer_bias_decay + bias_decay)));

if(wdirect && (direct_in_to_out_weight_decay + weight_decay) != 0)

penalties.append(sumsquare(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

// apply penalty to cost

if(penalties.size() != 0)

cost = hconcat( sum(hconcat(costs[0] & penalties)) & costs );

else

cost = hconcat(costs[0] & costs);

cost->setName("cost");

output->setName("output");

// norman: ambiguous conversion (bool or char*?)

//if(paramsvalues && (paramsvalues.size() == params.nelems()))

if((bool)(paramsvalues) && (paramsvalues.size() == params.nelems()))

{

params << paramsvalues;

initial_paramsvalues.resize(paramsvalues.length());

initial_paramsvalues << paramsvalues;

}

else

{

paramsvalues.resize(params.nelems());

initializeParams();

}

params.makeSharedValue(paramsvalues);

// Funcs

f = Func(input, output);

costf = Func(input&target_and_weights, output&cost);

costf->recomputeParents();

output_and_target_to_cost = Func(output&target_and_weights, cost);

output_and_target_to_cost->recomputeParents();

}
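The topology built above can be sketched outside PLearn with plain vectors. This is only an illustration under stated assumptions: `Vec`, `Mat`, `affine`, `tanh_all` and `forward` are hypothetical names, and the weight layout (row 0 holds the biases, row i+1 the weights leaving input i) is inferred from the `1+inputsize()` row count and from `initializeParams()` clearing row 0. Only one hidden layer is modelled; the second hidden layer, the direct connections and the output transfer function are left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

typedef std::vector<double> Vec;
typedef std::vector<Vec> Mat;   // Mat[row][col]

// Assumed layout: W has (1 + x.size()) rows; row 0 holds the biases and
// row i+1 holds the weights leaving input i. Returns bias + W^T x.
Vec affine(const Vec& x, const Mat& W) {
    assert(W.size() == x.size() + 1);
    Vec y(W[0]);                               // start from the bias row
    for (std::size_t i = 0; i < x.size(); ++i)
        for (std::size_t j = 0; j < y.size(); ++j)
            y[j] += x[i] * W[i + 1][j];
    return y;
}

// Element-wise tanh.
Vec tanh_all(Vec v) {
    for (std::size_t j = 0; j < v.size(); ++j) v[j] = std::tanh(v[j]);
    return v;
}

// One optional tanh hidden layer followed by a linear output layer.
// An empty w1 means "no hidden layer", mirroring nhidden == 0.
Vec forward(const Vec& input, const Mat& w1, const Mat& wout) {
    Vec h = input;
    if (!w1.empty()) h = tanh_all(affine(h, w1));
    return affine(h, wout);
}
```

With an empty `w1` the function reduces to a single affine map, which corresponds to the case where `build_()` skips the hidden layers and only creates `wout`.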

| string PLearn::NeuralNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

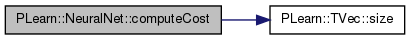

| void PLearn::NeuralNet::computeCost | ( | const Vec & inputvec, const Vec & targetvec, const Vec & outputvec, const Vec & costvec | ) |

Definition at line 284 of file Learner.cc.

References PLearn::TVec< T >::size(), and PLearn::Learner::test_costfuncs.

{

for (int k=0; k<test_costfuncs.size(); k++)

cost[k] = test_costfuncs[k](output, target);

}

| Array< string > PLearn::NeuralNet::costNames | ( | ) | const [virtual] |

Returns an Array of strings for the names of the components of the cost. The default version returns the info() strings of the cost functions in test_costfuncs.

Reimplemented from PLearn::Learner.

Definition at line 409 of file NeuralNet.cc.

References cost_funcs.

{

return (cost_funcs[0]+"+penalty") & cost_funcs;

}
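The layout of the cost vector follows from `costNames()` together with the `hconcat` calls at the end of `build_()`: element 0 is the first cost plus any penalties (the training objective), followed by every raw cost, so the first cost appears twice. A minimal standalone sketch, with hypothetical helper names `cost_names` and `cost_values`:

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Names: "<first>+penalty" followed by every entry of cost_funcs.
std::vector<std::string> cost_names(const std::vector<std::string>& cost_funcs) {
    assert(!cost_funcs.empty());
    std::vector<std::string> names;
    names.push_back(cost_funcs[0] + "+penalty");
    names.insert(names.end(), cost_funcs.begin(), cost_funcs.end());
    return names;
}

// Values: element 0 is the first cost plus the summed penalties,
// the remaining elements are the raw costs in their original order.
std::vector<double> cost_values(const std::vector<double>& costs,
                                const std::vector<double>& penalties) {
    assert(!costs.empty());
    double objective = costs[0];
    for (std::size_t i = 0; i < penalties.size(); ++i) objective += penalties[i];
    std::vector<double> values;
    values.push_back(objective);
    values.insert(values.end(), costs.begin(), costs.end());
    return values;
}
```

The resulting vector therefore has one more element than `cost_funcs`, which is the size that `costsize()` reports through `cost->size()`.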

| int PLearn::NeuralNet::costsize | ( | ) | const [virtual] |

**** SUBCLASS WRITING: should be re-defined if user re-defines computeCost default version returns

Reimplemented from PLearn::Learner.

Definition at line 414 of file NeuralNet.cc.

References cost.

{ return cost->size(); }

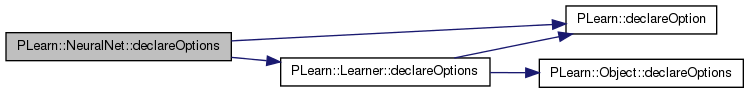

| void PLearn::NeuralNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declare options (data fields) for the class.

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(options). Please call the inherited method AT THE END to get the options listed in a consistent order (from most recently defined to least recently defined).

static void MyDerivedClass::declareOptions(OptionList& ol)

{

declareOption(ol, "inputsize", &MyObject::inputsize_, OptionBase::buildoption,

"The size of the input; it must be provided");

declareOption(ol, "weights", &MyObject::weights, OptionBase::learntoption,

"The learned model weights");

inherited::declareOptions(ol);

}

| ol | List of options that is progressively being constructed for the current class. |

Reimplemented from PLearn::Learner.

Definition at line 108 of file NeuralNet.cc.

References batch_size, bias_decay, PLearn::OptionBase::buildoption, cost_funcs, PLearn::declareOption(), PLearn::Learner::declareOptions(), direct_in_to_out, direct_in_to_out_weight_decay, iseed, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::OptionBase::learntoption, nepochs, nhidden, nhidden2, normalization, optimizer, output_layer_bias_decay, output_layer_weight_decay, output_transfer_func, paramsvalues, saveparams, semisupervised_flatten_factor, semisupervised_prior, and weight_decay.

{

declareOption(ol, "nhidden", &NeuralNet::nhidden, OptionBase::buildoption,

" number of hidden units in first hidden layer (0 means no hidden layer)\n");

declareOption(ol, "nhidden2", &NeuralNet::nhidden2, OptionBase::buildoption,

" number of hidden units in second hidden layer (0 means no hidden layer)\n");

declareOption(ol, "weight_decay", &NeuralNet::weight_decay, OptionBase::buildoption,

" global weight decay for all layers\n");

declareOption(ol, "bias_decay", &NeuralNet::bias_decay, OptionBase::buildoption,

" global bias decay for all layers\n");

declareOption(ol, "layer1_weight_decay", &NeuralNet::layer1_weight_decay, OptionBase::buildoption,

" Additional weight decay for the first hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer1_bias_decay", &NeuralNet::layer1_bias_decay, OptionBase::buildoption,

" Additional bias decay for the first hidden layer. Is added to bias_decay.\n");

declareOption(ol, "layer2_weight_decay", &NeuralNet::layer2_weight_decay, OptionBase::buildoption,

" Additional weight decay for the second hidden layer. Is added to weight_decay.\n");

declareOption(ol, "layer2_bias_decay", &NeuralNet::layer2_bias_decay, OptionBase::buildoption,

" Additional bias decay for the second hidden layer. Is added to bias_decay.\n");

declareOption(ol, "output_layer_weight_decay", &NeuralNet::output_layer_weight_decay, OptionBase::buildoption,

" Additional weight decay for the output layer. Is added to 'weight_decay'.\n");

declareOption(ol, "output_layer_bias_decay", &NeuralNet::output_layer_bias_decay, OptionBase::buildoption,

" Additional bias decay for the output layer. Is added to 'bias_decay'.\n");

declareOption(ol, "direct_in_to_out_weight_decay", &NeuralNet::direct_in_to_out_weight_decay, OptionBase::buildoption,

" Additional weight decay for the direct in-to-out layer. Is added to 'weight_decay'.\n");

declareOption(ol, "direct_in_to_out", &NeuralNet::direct_in_to_out, OptionBase::buildoption,

" should we include direct input to output connections?\n");

declareOption(ol, "output_transfer_func", &NeuralNet::output_transfer_func, OptionBase::buildoption,

" what transfer function to use for output layer? \n"

" one of: tanh, sigmoid, exp, softmax \n"

" an empty string means no output transfer function \n");

declareOption(ol, "seed", &NeuralNet::iseed, OptionBase::buildoption,

" Seed for the random number generator used to initialize parameters. If -1 then use time of day.\n");

declareOption(ol, "cost_funcs", &NeuralNet::cost_funcs, OptionBase::buildoption,

" a list of cost functions to use\n"

" in the form \"[ cf1; cf2; cf3; ... ]\" where each function is one of: \n"

" mse (for regression)\n"

" mse_onehot (for classification)\n"

" NLL (negative log likelihood -log(p[c]) for classification) \n"

" class_error (classification error) \n"

" semisupervised_prob_class\n"

" The first function of the list will be used as \n"

" the objective function to optimize \n"

" (possibly with an added weight decay penalty) \n"

" If semisupervised_prob_class is chosen, then the options\n"

" semisupervised_{flatten_factor,prior} will be used. Note that\n"

" the output_transfer_func should be the softmax, in that case.\n"

);

declareOption(ol, "semisupervised_flatten_factor", &NeuralNet::semisupervised_flatten_factor, OptionBase::buildoption,

" Hyper-parameter of the semi-supervised criterion for probabilistic classifiers\n");

declareOption(ol, "semisupervised_prior", &NeuralNet::semisupervised_prior, OptionBase::buildoption,

" Hyper-parameter of the semi-supervised criterion = prior classes probabilities\n");

declareOption(ol, "optimizer", &NeuralNet::optimizer, OptionBase::buildoption,

" specify the optimizer to use\n");

declareOption(ol, "batch_size", &NeuralNet::batch_size, OptionBase::buildoption,

" how many samples to use to estimate the average gradient before updating the weights\n"

" 0 is equivalent to specifying training_set->length() \n"

" NOTE: this overrides the optimizer's 'n_updates' and 'every_iterations'.\n");

declareOption(ol, "nepochs", &NeuralNet::nepochs, OptionBase::buildoption,

" how many times the optimizer gets to see the whole training set.\n");

declareOption(ol, "paramsvalues", &NeuralNet::paramsvalues, OptionBase::learntoption,

" The learned parameter vector (in which order?)\n");

declareOption(ol, "saveparams", &NeuralNet::saveparams, OptionBase::learntoption,

" This string, if not empty, indicates where in the expdir directory\n"

" to save the final paramsvalues\n");

declareOption(ol, "normalization", &NeuralNet::normalization, OptionBase::buildoption,

" The normalization to be applied to the data\n");

inherited::declareOptions(ol);

}
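The decay options combine additively: each layer's effective coefficient is its own value plus the global one, and `build_()` only adds a penalty term when one of the two sums is non-zero. The sketch below illustrates that rule in standalone C++. The names `Decay`, `effective_decay`, `needs_penalty` and `l2_penalty` are hypothetical, and the penalty formula (a squared L2 norm, with row 0 of the matrix treated as the biases) is an assumption about what `affine_transform_weight_penalty` computes, not something stated on this page.

```cpp
#include <cstddef>
#include <vector>

typedef std::vector<std::vector<double> > Mat;  // Mat[row][col], row 0 = biases (assumed)

struct Decay {
    double weight;  // coefficient applied to the non-bias rows
    double bias;    // coefficient applied to the bias row
};

// Per-layer decay is added to the global decay, as the option help says.
Decay effective_decay(double global_weight, double global_bias,
                      double layer_weight, double layer_bias) {
    Decay d;
    d.weight = layer_weight + global_weight;
    d.bias = layer_bias + global_bias;
    return d;
}

// Mirrors the test in build_(): skip the penalty when both sums are zero.
bool needs_penalty(const Decay& d) {
    return d.weight != 0 || d.bias != 0;
}

// Assumed form of the penalty: squared L2 norm, biases weighted separately.
double l2_penalty(const Mat& W, const Decay& d) {
    double total = 0.0;
    for (std::size_t r = 0; r < W.size(); ++r) {
        const double coeff = (r == 0) ? d.bias : d.weight;
        for (std::size_t c = 0; c < W[r].size(); ++c)
            total += coeff * W[r][c] * W[r][c];
    }
    return total;
}
```

Keeping the bias coefficient separate allows the biases to stay unregularised (`bias_decay` = 0) while the weights are still shrunk.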

| static const PPath& PLearn::NeuralNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::Learner.

Definition at line 133 of file NeuralNet.h.


Reimplemented from PLearn::Learner.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

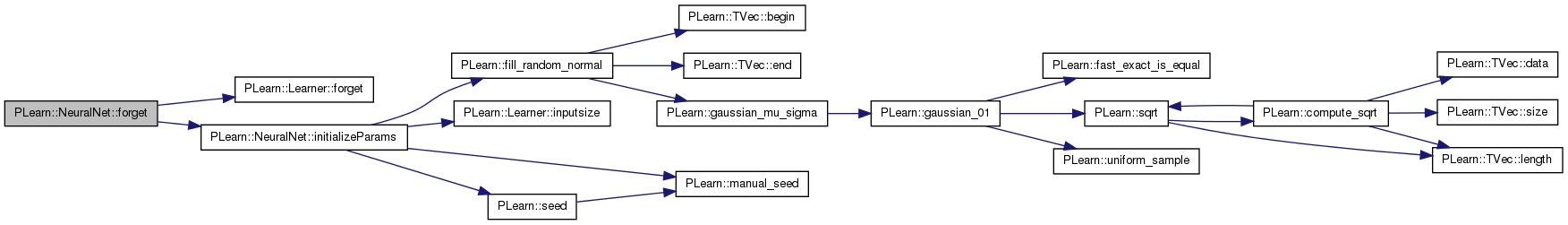

| void PLearn::NeuralNet::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: *** This method should be called AFTER or inside the build method, e.g. in order to re-initialize parameters. It should put the Learner in a 'fresh' state, not being influenced by any past call to train (everything learned is forgotten!).

Reimplemented from PLearn::Learner.

Definition at line 498 of file NeuralNet.cc.

References PLearn::Learner::forget(), initial_paramsvalues, initializeParams(), and params.

{

if(initial_paramsvalues)

params << initial_paramsvalues;

else

initializeParams();

inherited::forget();

}
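The two branches of `forget()` can be mimicked in a few lines of standalone C++. `TinyModel` is a hypothetical type, and its `initialize_params()` is a placeholder that zeroes the parameters, whereas the real class draws random weights.

```cpp
#include <cstddef>
#include <vector>

struct TinyModel {
    std::vector<double> params;
    std::vector<double> initial_params;  // empty when no snapshot exists
    int init_calls;                      // counts re-initialisations

    TinyModel() : init_calls(0) {}

    // Placeholder initializer: the real class draws random weights instead.
    void initialize_params() {
        for (std::size_t i = 0; i < params.size(); ++i) params[i] = 0.0;
        ++init_calls;
    }

    // Restore the stored starting point if there is one, else re-initialise.
    void forget() {
        if (!initial_params.empty())
            params = initial_params;
        else
            initialize_params();
    }
};
```

In the listing for `build_()`, `initial_paramsvalues` is only filled when `paramsvalues` was supplied with the right size, so a network built without preset parameters takes the re-initialisation branch.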

| OptionList & PLearn::NeuralNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

| OptionMap & PLearn::NeuralNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

| RemoteMethodMap & PLearn::NeuralNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 81 of file NeuralNet.cc.

: Use NNet instead", "NO HELP");

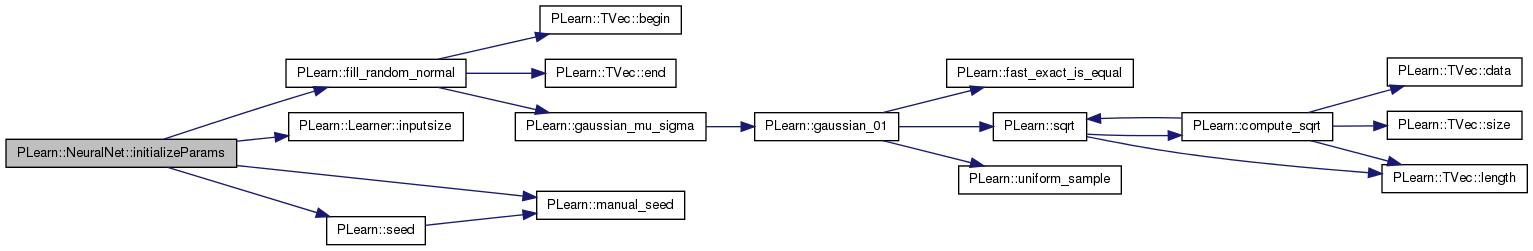

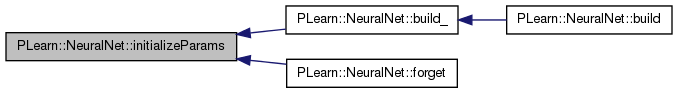

| void PLearn::NeuralNet::initializeParams | ( | ) |

Definition at line 440 of file NeuralNet.cc.

References direct_in_to_out, PLearn::fill_random_normal(), PLearn::Learner::inputsize(), iseed, PLearn::manual_seed(), nhidden, nhidden2, PLearn::seed(), w1, w2, wdirect, and wout.

Referenced by build_(), and forget().

{

if (iseed<0)

seed();

else

manual_seed(iseed);

//real delta = 1./sqrt(inputsize());

real delta = 1./inputsize();

/*

if(direct_in_to_out)

{

//fill_random_uniform(wdirect->value, -delta, +delta);

fill_random_normal(wdirect->value, 0, delta);

//wdirect->matValue(0).clear();

}

*/

if(nhidden>0)

{

//fill_random_uniform(w1->value, -delta, +delta);

//delta = 1./sqrt(nhidden);

fill_random_normal(w1->value, 0, delta);

if(direct_in_to_out)

{

//fill_random_uniform(wdirect->value, -delta, +delta);

fill_random_normal(wdirect->value, 0, delta);

wdirect->matValue(0).clear();

}

delta = 1./nhidden;

w1->matValue(0).clear();

}

if(nhidden2>0)

{

//fill_random_uniform(w2->value, -delta, +delta);

//delta = 1./sqrt(nhidden2);

fill_random_normal(w2->value, 0, delta);

delta = 1./nhidden2;

w2->matValue(0).clear();

}

//fill_random_uniform(wout->value, -delta, +delta);

fill_random_normal(wout->value, 0, delta);

wout->matValue(0).clear();

}
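The initialisation scheme amounts to drawing each layer's weights from a zero-mean normal distribution whose scale is the reciprocal of the size of the layer feeding it, then zeroing the bias row. A standalone sketch using `<random>` follows. `InitScales`, `init_scales` and `init_layer` are hypothetical names, and reading the third argument of `fill_random_normal` as a standard deviation is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

typedef std::vector<std::vector<double> > Mat;  // Mat[row][col], row 0 = biases

struct InitScales { double w1, w2, wout; };     // 0 means the layer is absent

// Follows the order of the delta updates in initializeParams().
InitScales init_scales(int inputsize, int nhidden, int nhidden2) {
    assert(inputsize > 0);
    InitScales s = {0.0, 0.0, 0.0};
    double delta = 1.0 / inputsize;
    if (nhidden > 0)  { s.w1 = delta; delta = 1.0 / nhidden; }
    if (nhidden2 > 0) { s.w2 = delta; delta = 1.0 / nhidden2; }
    s.wout = delta;
    return s;
}

// Fill W with N(0, scale) draws (scale assumed to be a standard deviation),
// then clear the bias row.
void init_layer(Mat& W, double scale, std::mt19937& rng) {
    assert(scale > 0.0);
    std::normal_distribution<double> gauss(0.0, scale);
    for (std::size_t r = 0; r < W.size(); ++r)
        for (std::size_t c = 0; c < W[r].size(); ++c)
            W[r][c] = gauss(rng);
    if (!W.empty()) std::fill(W[0].begin(), W[0].end(), 0.0);
}
```

Seeding the generator with a fixed value plays the role of a non-negative `iseed`, while the `iseed < 0` case corresponds to seeding from a time-dependent source.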

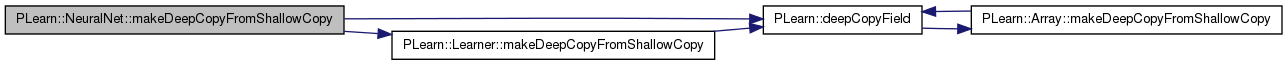

| void PLearn::NeuralNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

This needs to be overridden by every class that adds "complex" data members to the class, such as Vec, Mat, PP<Something>, etc. Typical implementation:

void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(complex_data_member1, copies);

deepCopyField(complex_data_member2, copies);

...

}

| copies | A map used by the deep-copy mechanism to keep track of already-copied objects. |

Reimplemented from PLearn::Learner.

Definition at line 507 of file NeuralNet.cc.

References PLearn::deepCopyField(), PLearn::Learner::makeDeepCopyFromShallowCopy(), and optimizer.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(optimizer, copies);

}
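The idea behind the copies map can be shown with `std::shared_ptr` in place of PLearn's `PP<>`. This is a sketch of the general technique, not PLearn's implementation: `Optimizer`, `Net`, `deep_copy_field` and this `CopiesMap` typedef are hypothetical stand-ins. A shallow copy shares the pointed-to object, and the deep-copy step replaces the pointer with a clone while reusing any clone already made during the same pass.

```cpp
#include <map>
#include <memory>

// Hypothetical stand-in for an object held through a smart pointer.
struct Optimizer {
    double learning_rate;
    Optimizer() : learning_rate(0.01) {}
};

// Maps an original object's address to its copy.
typedef std::map<const void*, std::shared_ptr<void> > CopiesMap;

// Deep-copies one shared member, reusing an earlier copy if the same
// object was already cloned during this deep-copy pass.
template <class T>
void deep_copy_field(std::shared_ptr<T>& field, CopiesMap& copies) {
    if (!field) return;
    CopiesMap::iterator it = copies.find(field.get());
    if (it != copies.end()) {
        field = std::static_pointer_cast<T>(it->second);
    } else {
        std::shared_ptr<T> clone = std::make_shared<T>(*field);
        copies[field.get()] = clone;   // remember: original -> clone
        field = clone;
    }
}

struct Net {
    std::shared_ptr<Optimizer> optimizer;

    Net deep_copy(CopiesMap& copies) const {
        Net c(*this);                          // shallow copy: pointer is shared
        deep_copy_field(c.optimizer, copies);  // now c refers to a clone
        return c;
    }
};
```

Two networks that share one optimizer end up sharing one cloned optimizer after being deep-copied with the same map, which preserves the sharing structure of the original object graph.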

| virtual Array<string> PLearn::NeuralNet::testResultsNames | ( | ) | [inline, virtual] |

Definition at line 146 of file NeuralNet.h.

{ return costNames(); }

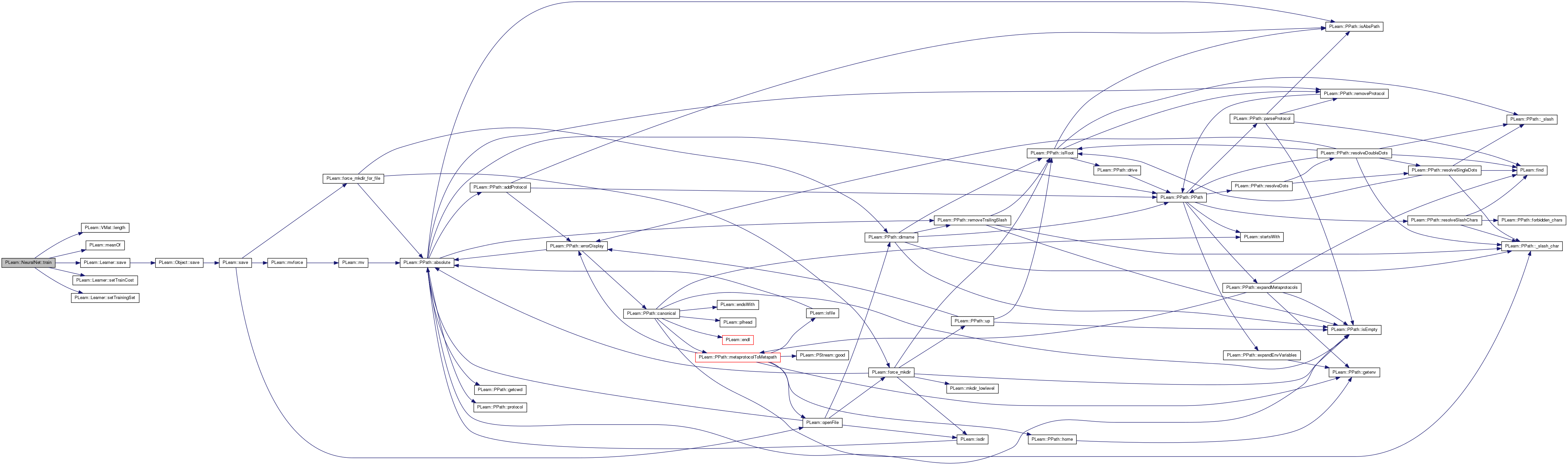

| void PLearn::NeuralNet::train | ( | VMat | training_set | ) | [virtual] |

*** SUBCLASS WRITING: *** Does the actual training. Subclasses must implement this method. Upon entry, the method should call setTrainingSet(training_set). Make sure that if(measure(step, objective_value)) is evaluated after each training step, and that training stops if it returns true.

Implements PLearn::Learner.

Definition at line 417 of file NeuralNet.cc.

References batch_size, cost, costf, PLearn::Learner::expdir, input, PLearn::VMat::length(), PLearn::meanOf(), nepochs, optimizer, output_and_target_to_cost, params, paramsvalues, PLearn::Learner::save(), saveparams, PLearn::Learner::setTrainCost(), PLearn::Learner::setTrainingSet(), and target_and_weights.

{

setTrainingSet(training_set);

int l = training_set->length();

int nsamples = batch_size>0 ? batch_size : l;

Func paramf = Func(input&target_and_weights, cost); // parameterized function to optimize

Var totalcost = meanOf(training_set,paramf, nsamples);

optimizer->setToOptimize(params, totalcost);

optimizer->nupdates = (nepochs*l)/nsamples;

optimizer->every = l/nsamples;

optimizer->addMeasurer(*this);

optimizer->build();

optimizer->optimize();

output_and_target_to_cost->recomputeParents();

costf->recomputeParents();

// cerr << "totalcost->value = " << totalcost->value << endl;

setTrainCost(totalcost->value);

if (saveparams!="")

PLearn::save(expdir+saveparams,paramsvalues);

}
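The update schedule passed to the optimizer is plain integer arithmetic on the training-set length, `batch_size` and `nepochs`. The sketch below reproduces it in standalone C++; `Schedule` and `make_schedule` are hypothetical names.

```cpp
#include <cassert>

struct Schedule {
    int nsamples;  // examples averaged per gradient estimate
    int nupdates;  // total number of parameter updates
    int every;     // updates per pass over the training set
};

// Same arithmetic as in train(); all divisions are integer divisions.
Schedule make_schedule(int l, int batch_size, int nepochs) {
    assert(l > 0);
    Schedule s;
    s.nsamples = batch_size > 0 ? batch_size : l;  // 0 means full batch
    s.nupdates = (nepochs * l) / s.nsamples;
    s.every = l / s.nsamples;
    return s;
}
```

For example, 1000 training examples with `batch_size` 10 and `nepochs` 5 give 500 updates, measured every 100 updates. When the batch size does not divide the training-set length, the integer divisions truncate: 1000 examples with `batch_size` 300 and `nepochs` 10 give 33 updates and `every` equal to 3.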

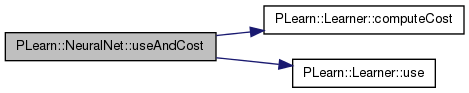

| void PLearn::NeuralNet::useAndCost | ( | const Vec & inputvec, const Vec & targetvec, Vec outputvec, Vec costvec | ) | [virtual] |

Definition at line 278 of file Learner.cc.

References PLearn::Learner::computeCost(), and PLearn::Learner::use().

| StaticInitializer PLearn::NeuralNet::_static_initializer_ [static] |

Reimplemented from PLearn::Learner.

Definition at line 133 of file NeuralNet.h.

| int PLearn::NeuralNet::batch_size |

Definition at line 118 of file NeuralNet.h.

Referenced by declareOptions(), and train().

| real PLearn::NeuralNet::bias_decay |

Definition at line 93 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| Var PLearn::NeuralNet::cost [protected] |

Definition at line 69 of file NeuralNet.h.

Referenced by build_(), costsize(), and train().

| Array<string> PLearn::NeuralNet::cost_funcs |

a list of cost functions to use in the form "[ cf1; cf2; cf3; ... ]"

Definition at line 111 of file NeuralNet.h.

Referenced by build_(), costNames(), and declareOptions().

| Func PLearn::NeuralNet::costf |

Definition at line 78 of file NeuralNet.h.

| VarArray PLearn::NeuralNet::costs [protected] |

Definition at line 68 of file NeuralNet.h.

Referenced by build_().

| Var PLearn::NeuralNet::costweights [protected] |

Definition at line 60 of file NeuralNet.h.

Referenced by build_().

| bool PLearn::NeuralNet::direct_in_to_out |

Definition at line 103 of file NeuralNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

| real PLearn::NeuralNet::direct_in_to_out_weight_decay |

Definition at line 100 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| Func PLearn::NeuralNet::f |

Definition at line 77 of file NeuralNet.h.

| bool PLearn::NeuralNet::global_weight_decay |

Definition at line 102 of file NeuralNet.h.

| Vec PLearn::NeuralNet::initial_paramsvalues [protected] |

Definition at line 74 of file NeuralNet.h.

| Var PLearn::NeuralNet::input [protected] |

Definition at line 58 of file NeuralNet.h.

| int PLearn::NeuralNet::iseed |

Definition at line 105 of file NeuralNet.h.

Referenced by declareOptions(), and initializeParams().

| real PLearn::NeuralNet::layer1_bias_decay |

Definition at line 95 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| real PLearn::NeuralNet::layer1_weight_decay |

Definition at line 94 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| real PLearn::NeuralNet::layer2_bias_decay |

Definition at line 97 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| real PLearn::NeuralNet::layer2_weight_decay |

Definition at line 96 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| int PLearn::NeuralNet::nepochs |

Definition at line 121 of file NeuralNet.h.

Referenced by declareOptions(), and train().

| int PLearn::NeuralNet::nhidden |

Definition at line 89 of file NeuralNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

| int PLearn::NeuralNet::nhidden2 |

Definition at line 90 of file NeuralNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

| Array<Vec> PLearn::NeuralNet::normalization |

Definition at line 125 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| PP<Optimizer> PLearn::NeuralNet::optimizer |

Definition at line 116 of file NeuralNet.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

| Var PLearn::NeuralNet::output [protected] |

Definition at line 67 of file NeuralNet.h.

Referenced by build_().

| Func PLearn::NeuralNet::output_and_target_to_cost |

Definition at line 79 of file NeuralNet.h.

| real PLearn::NeuralNet::output_layer_bias_decay |

Definition at line 99 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| real PLearn::NeuralNet::output_layer_weight_decay |

Definition at line 98 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| string PLearn::NeuralNet::output_transfer_func |

Definition at line 104 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| VarArray PLearn::NeuralNet::params [protected] |

Definition at line 71 of file NeuralNet.h.

| Vec PLearn::NeuralNet::paramsvalues [protected] |

Definition at line 73 of file NeuralNet.h.

Referenced by build_(), declareOptions(), and train().

| string PLearn::NeuralNet::saveparams |

Definition at line 123 of file NeuralNet.h.

Referenced by declareOptions(), and train().

| real PLearn::NeuralNet::semisupervised_flatten_factor |

Definition at line 112 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| Vec PLearn::NeuralNet::semisupervised_prior |

Definition at line 113 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| Var PLearn::NeuralNet::target [protected] |

Definition at line 59 of file NeuralNet.h.

Referenced by build_().

| Var PLearn::NeuralNet::target_and_weights [protected] |

Definition at line 61 of file NeuralNet.h.

| Var PLearn::NeuralNet::w1 [protected] |

Definition at line 62 of file NeuralNet.h.

Referenced by build_(), and initializeParams().

| Var PLearn::NeuralNet::w2 [protected] |

Definition at line 63 of file NeuralNet.h.

Referenced by build_(), and initializeParams().

| Var PLearn::NeuralNet::wdirect [protected] |

Definition at line 65 of file NeuralNet.h.

Referenced by build_(), and initializeParams().

| real PLearn::NeuralNet::weight_decay |

Definition at line 92 of file NeuralNet.h.

Referenced by build_(), and declareOptions().

| Var PLearn::NeuralNet::wout [protected] |

Definition at line 64 of file NeuralNet.h.

Referenced by build_(), and initializeParams().
