
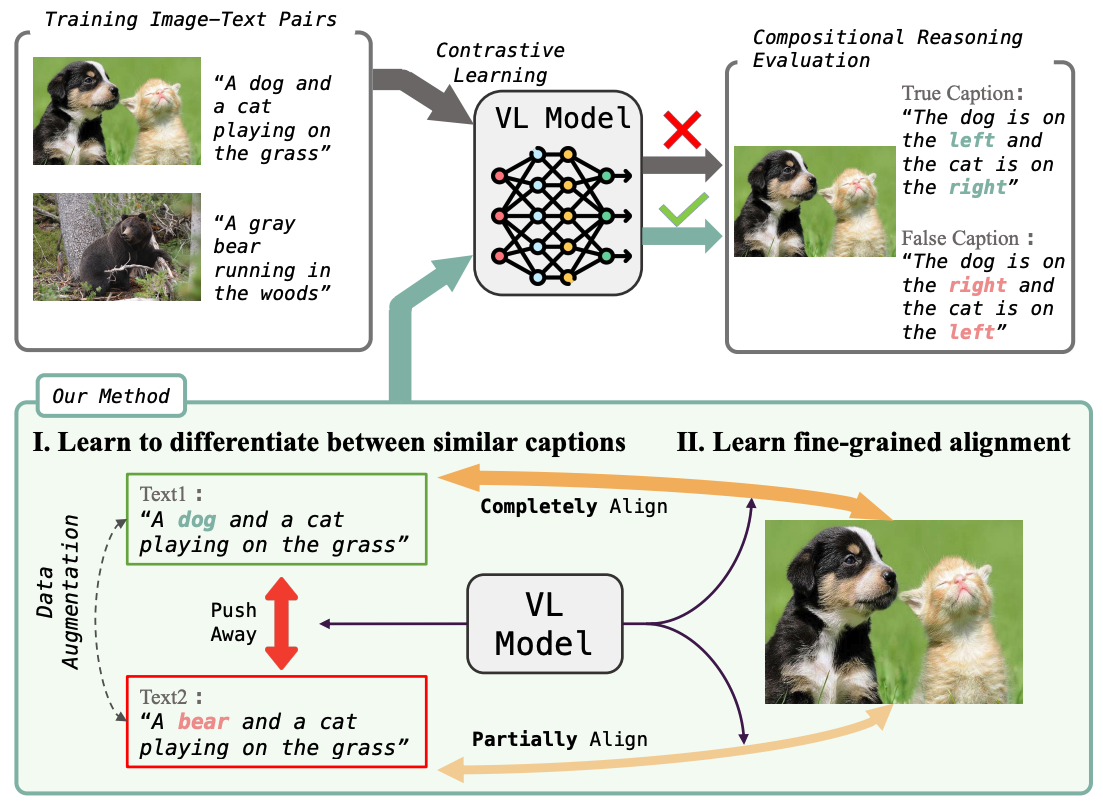

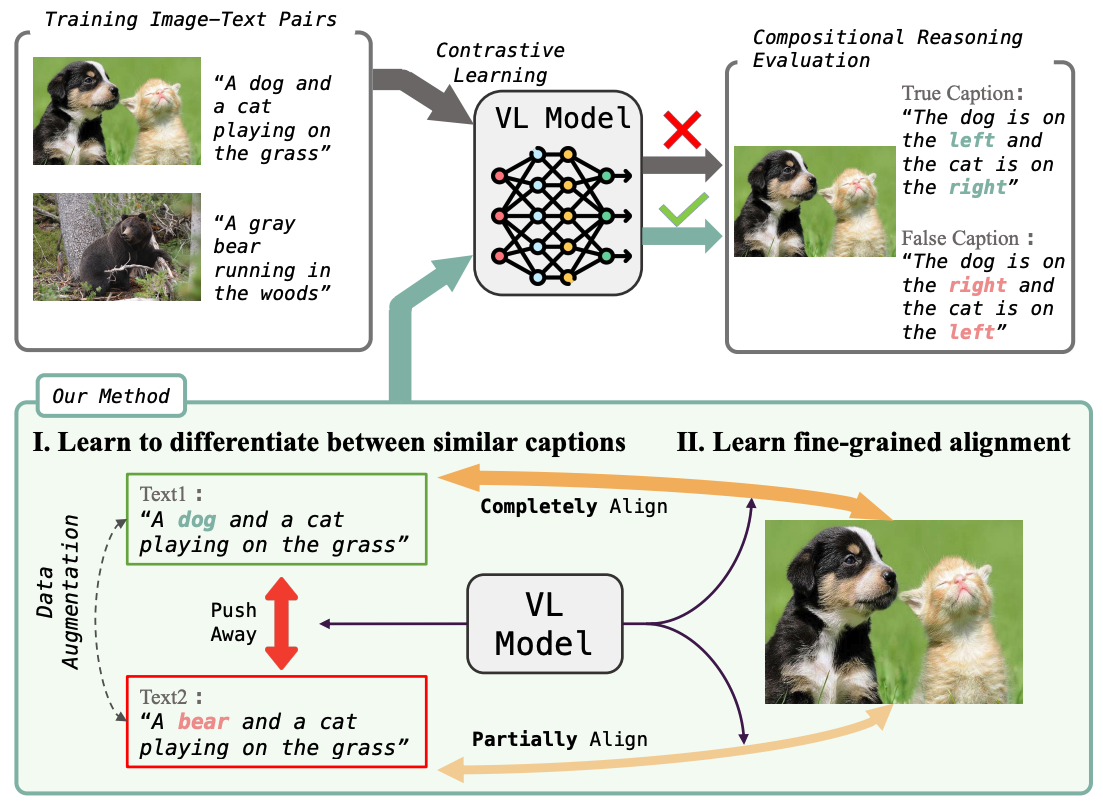

Contrasting Intra-Modal and Ranking Cross-Modal Hard Negatives to Enhance Visio-Linguistic Compositional Understanding

Le Zhang,

Rabiul Awal,

Aishwarya Agrawal

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024

[ArXiv]


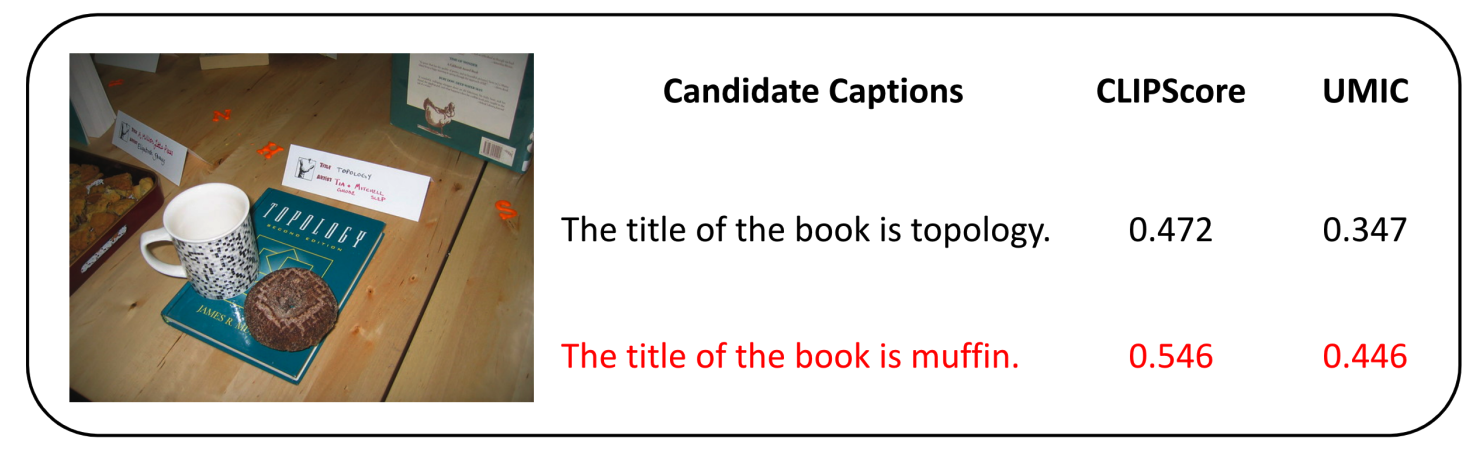

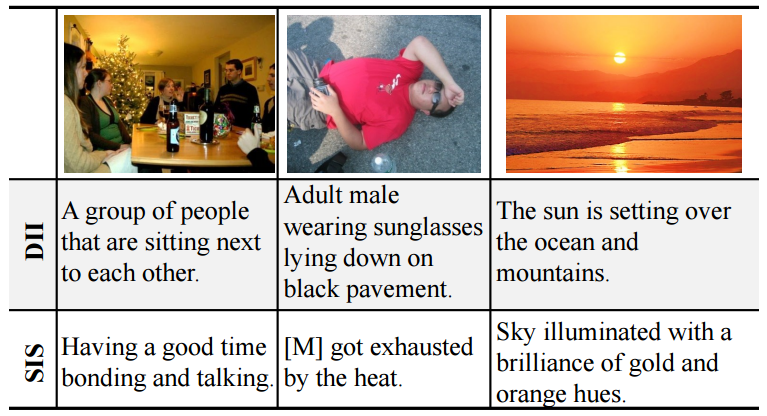

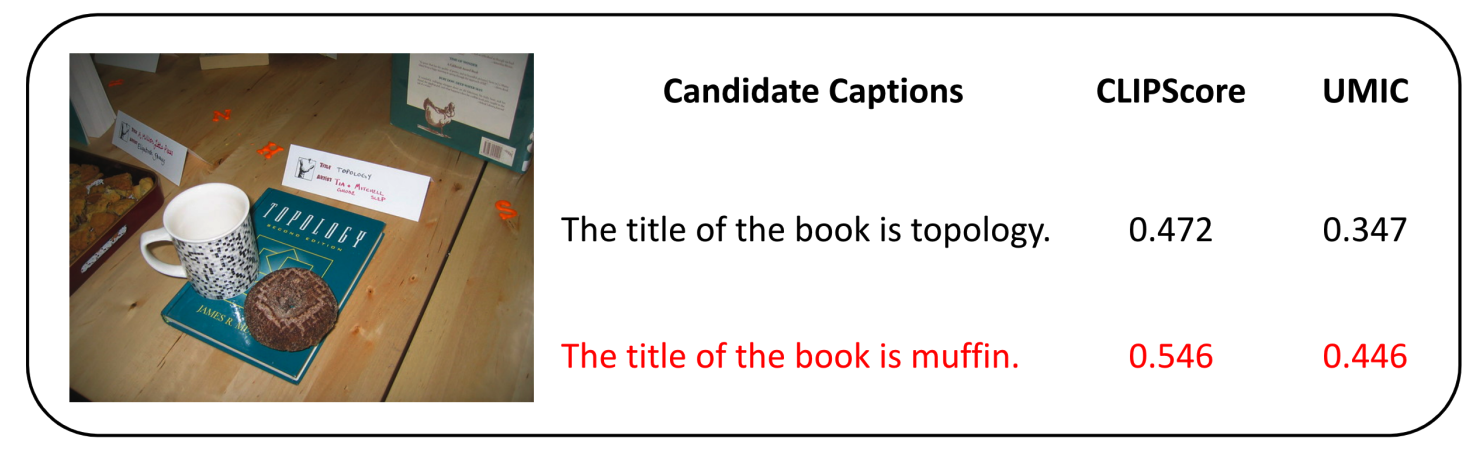

An Examination of the Robustness of Reference-Free Image Captioning Evaluation Metrics

Saba Ahmadi,

Aishwarya Agrawal

Findings of the Association for Computational Linguistics: EACL 2024

[ArXiv]


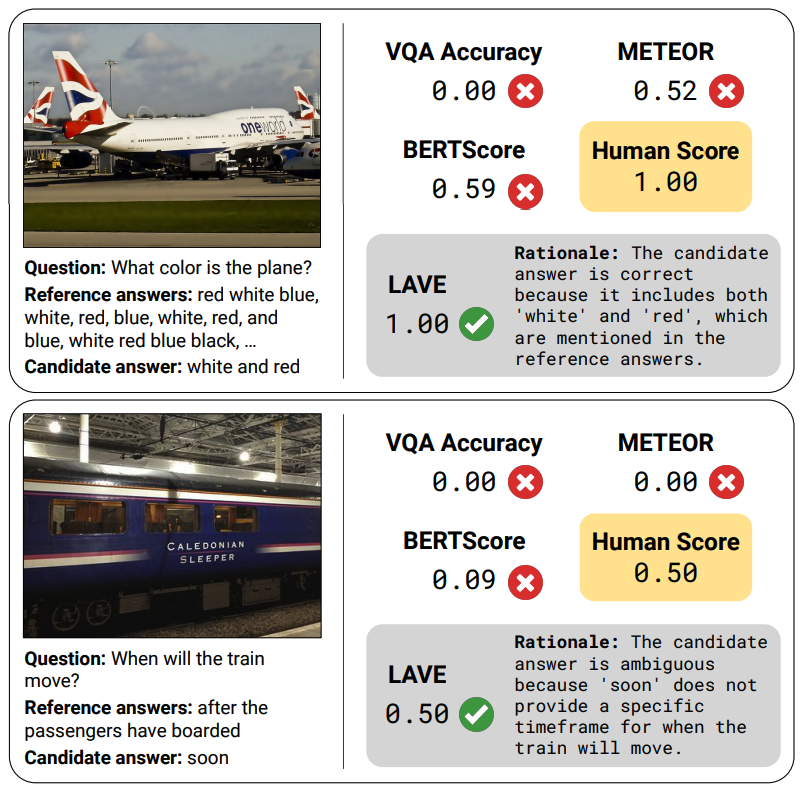

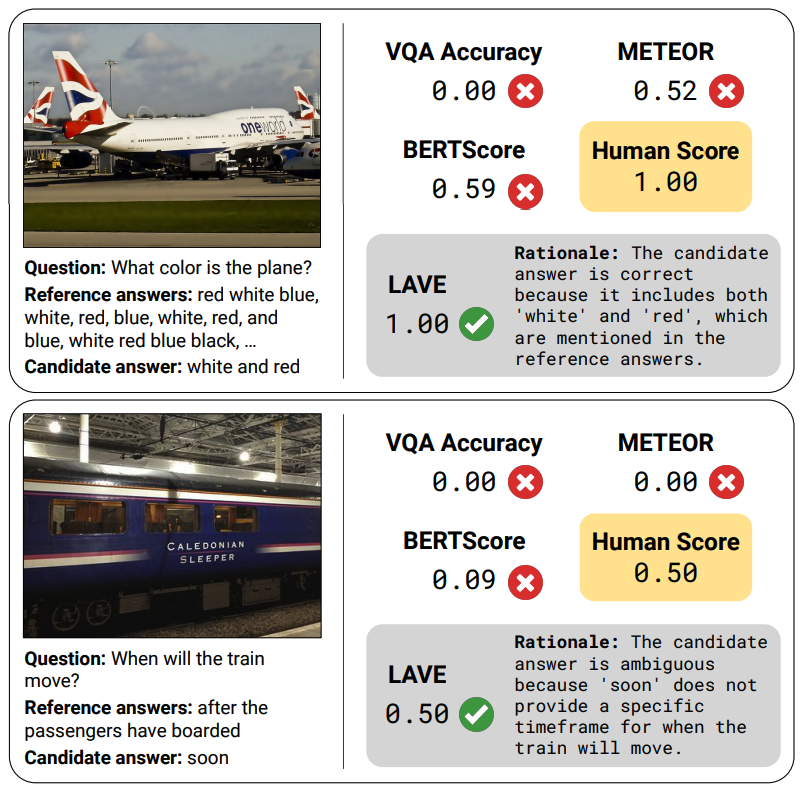

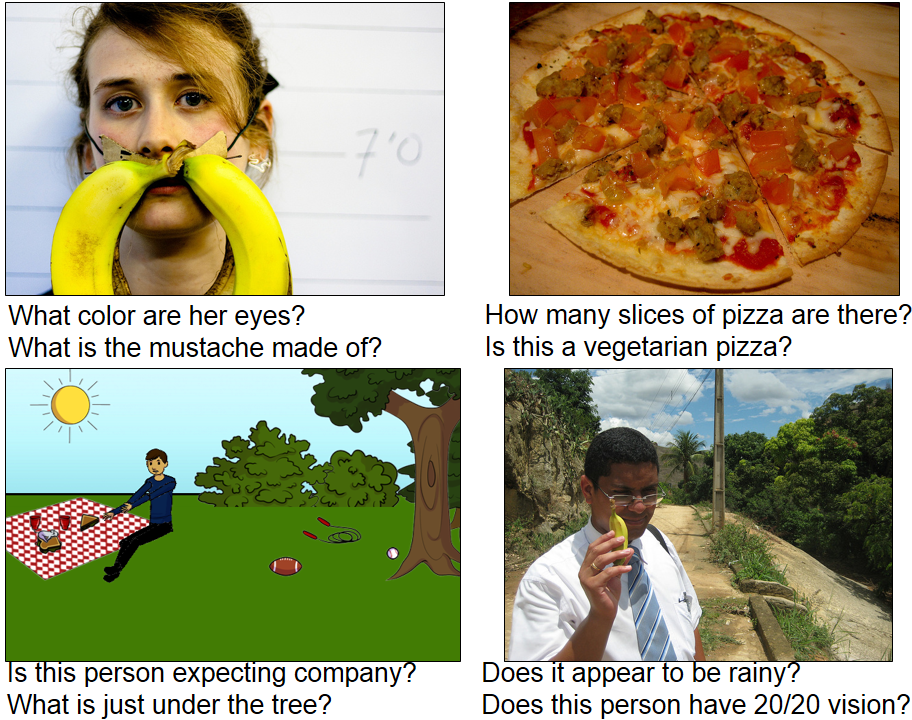

Improving Automatic VQA Evaluation Using Large Language Models

Oscar Mañas,

Benno Krojer,

Aishwarya Agrawal

38th Annual AAAI Conference on Artificial Intelligence (AAAI), 2024

[ArXiv]


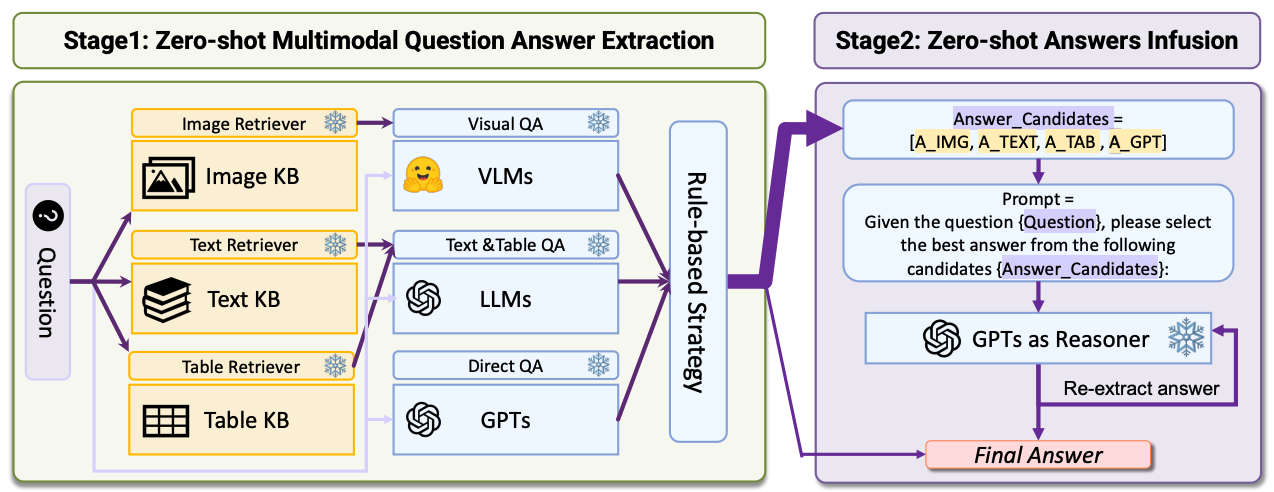

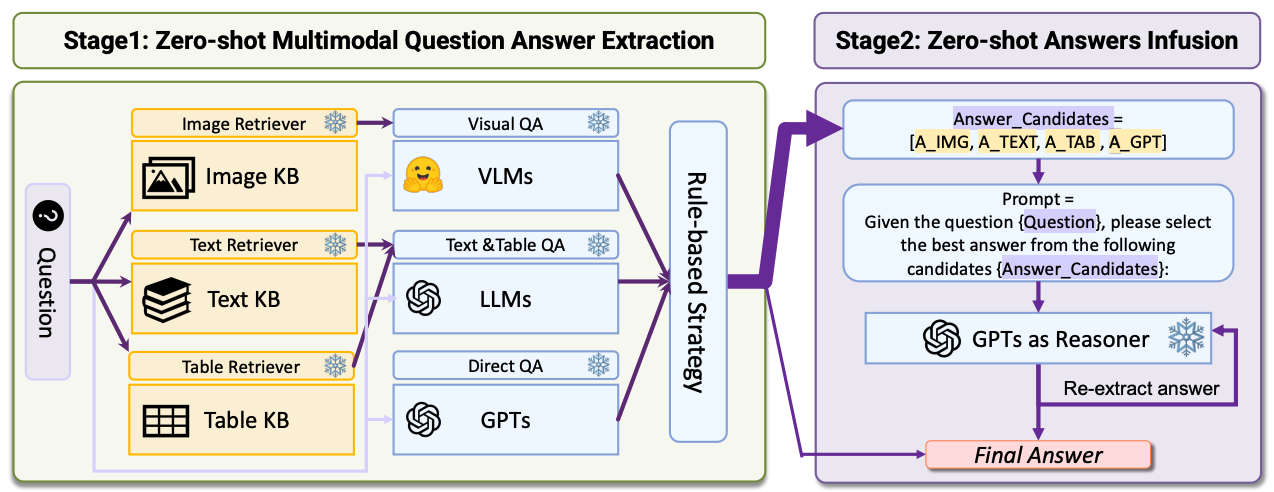

MoqaGPT: Zero-Shot Multi-modal Open-domain Question Answering with Large Language Model

Le Zhang,

Yihong Wu,

Fengran Mo,

Jian-Yun Nie,

Aishwarya Agrawal

Findings of the Association for Computational Linguistics: EMNLP 2023

[ArXiv]


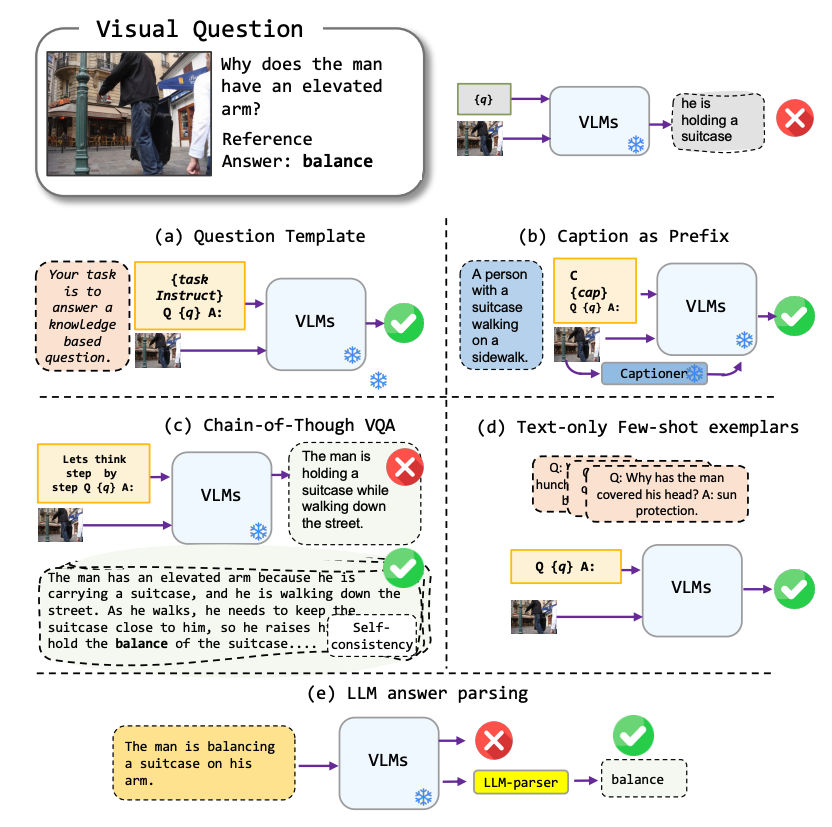

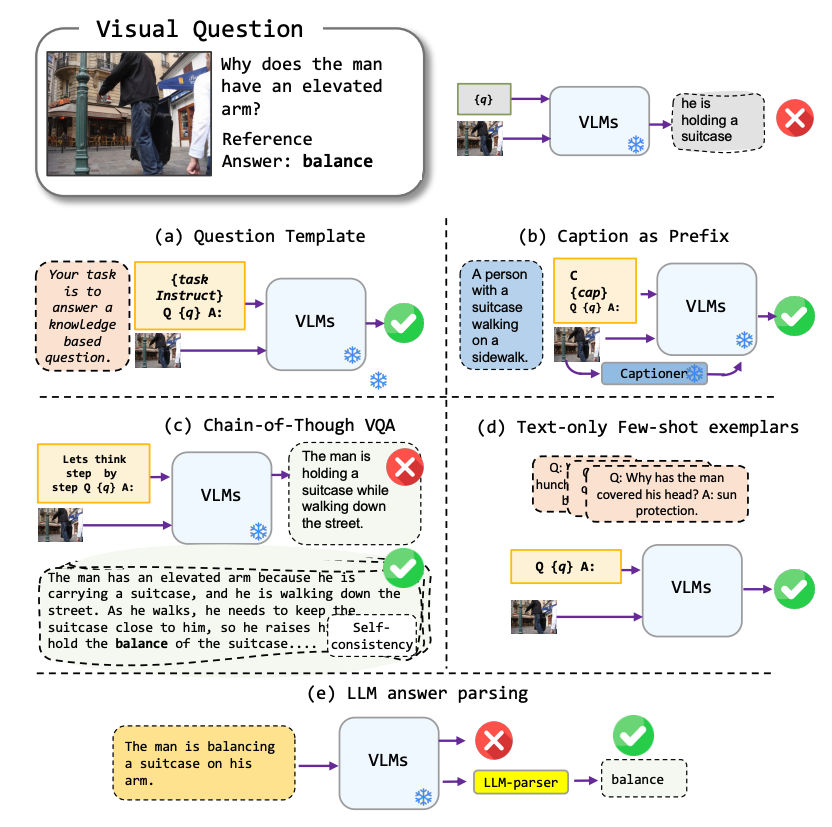

Investigating Prompting Techniques for Zero- and Few-Shot Visual Question Answering

Rabiul Awal,

Le Zhang,

Aishwarya Agrawal

arXiv preprint, arXiv:2306.09996, 2023

[ArXiv]


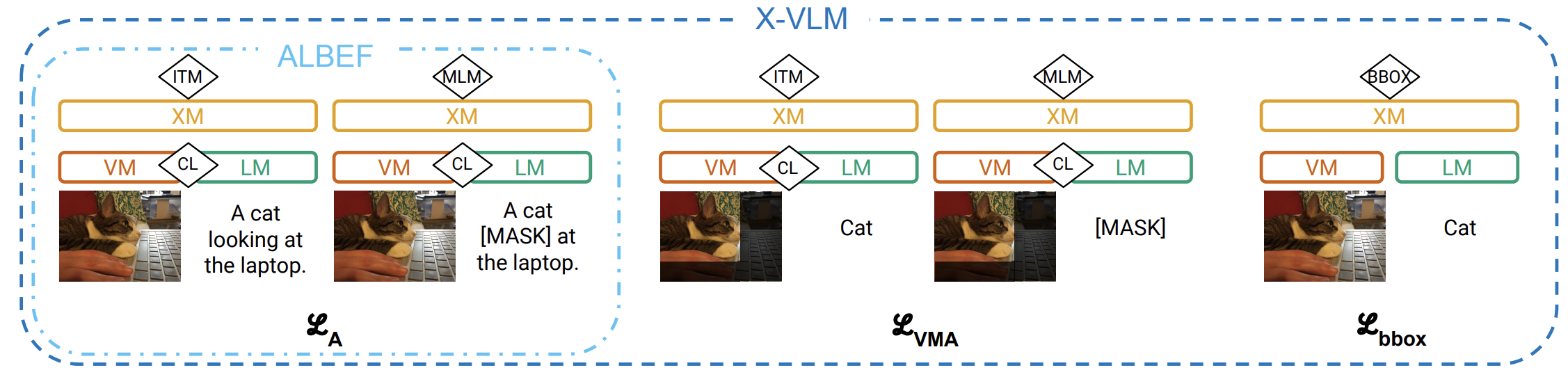

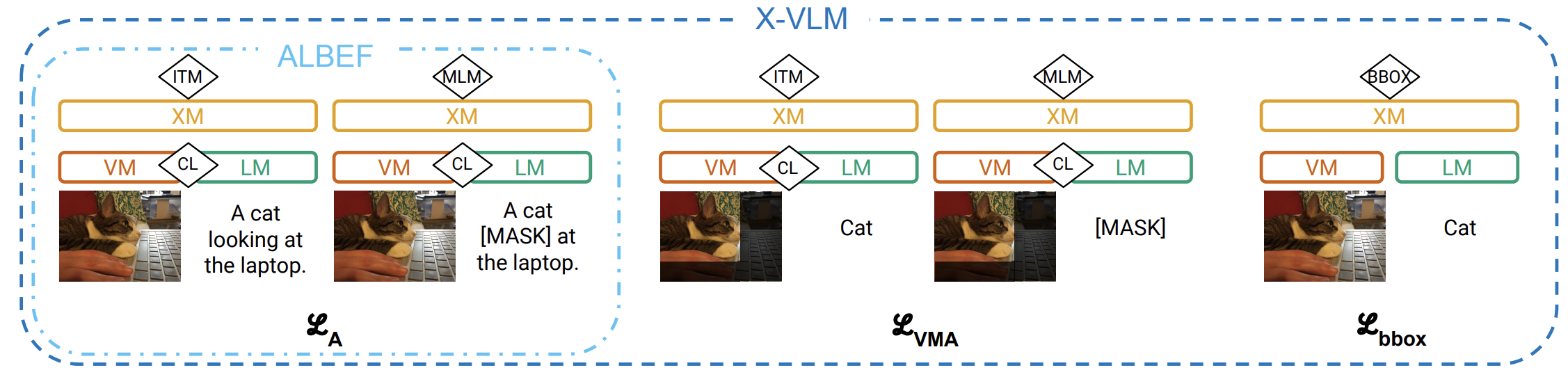

Measuring Progress in Fine-grained Vision-and-Language Understanding

Emanuele Bugliarello,

Laurent Sartran,

Aishwarya Agrawal,

Lisa Anne Hendricks,

Aida Nematzadeh

Annual Meeting of the Association for Computational Linguistics (ACL), 2023

[ArXiv]


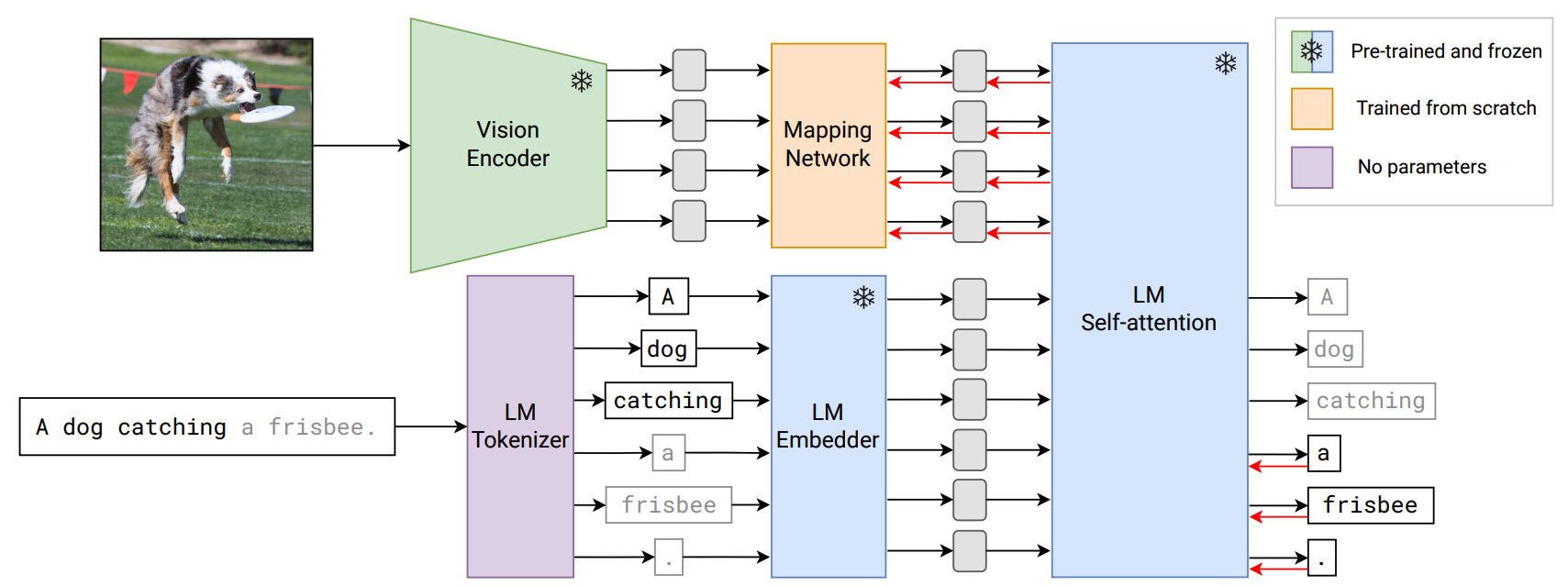

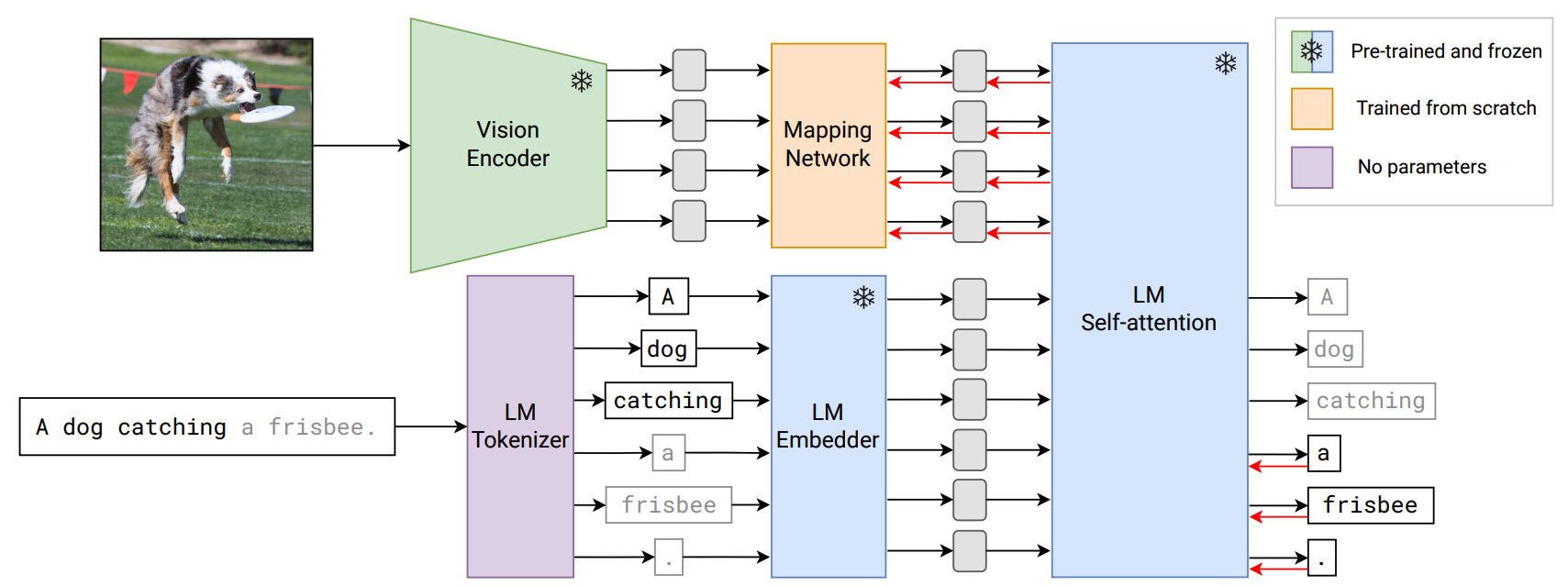

MAPL: Parameter-Efficient Adaptation of Unimodal Pre-Trained Models for Vision-Language Few-Shot Prompting

Oscar Mañas,

Pau Rodríguez*,

Saba Ahmadi*,

Aida Nematzadeh,

Yash Goyal,

Aishwarya Agrawal

*equal contribution

Conference of the European Chapter of the Association for Computational Linguistics (EACL), 2023

[ArXiv | Code | Live Demo]


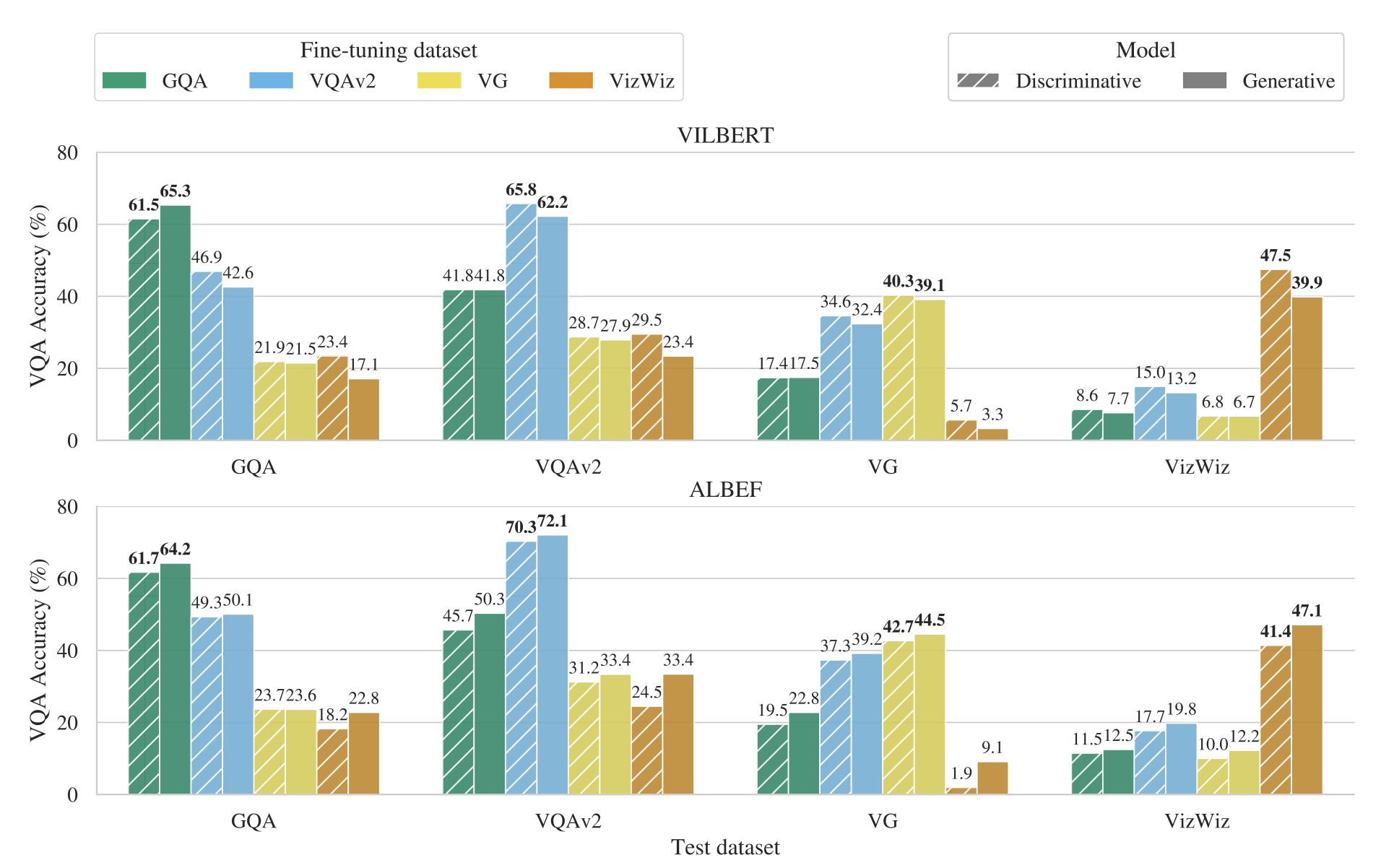

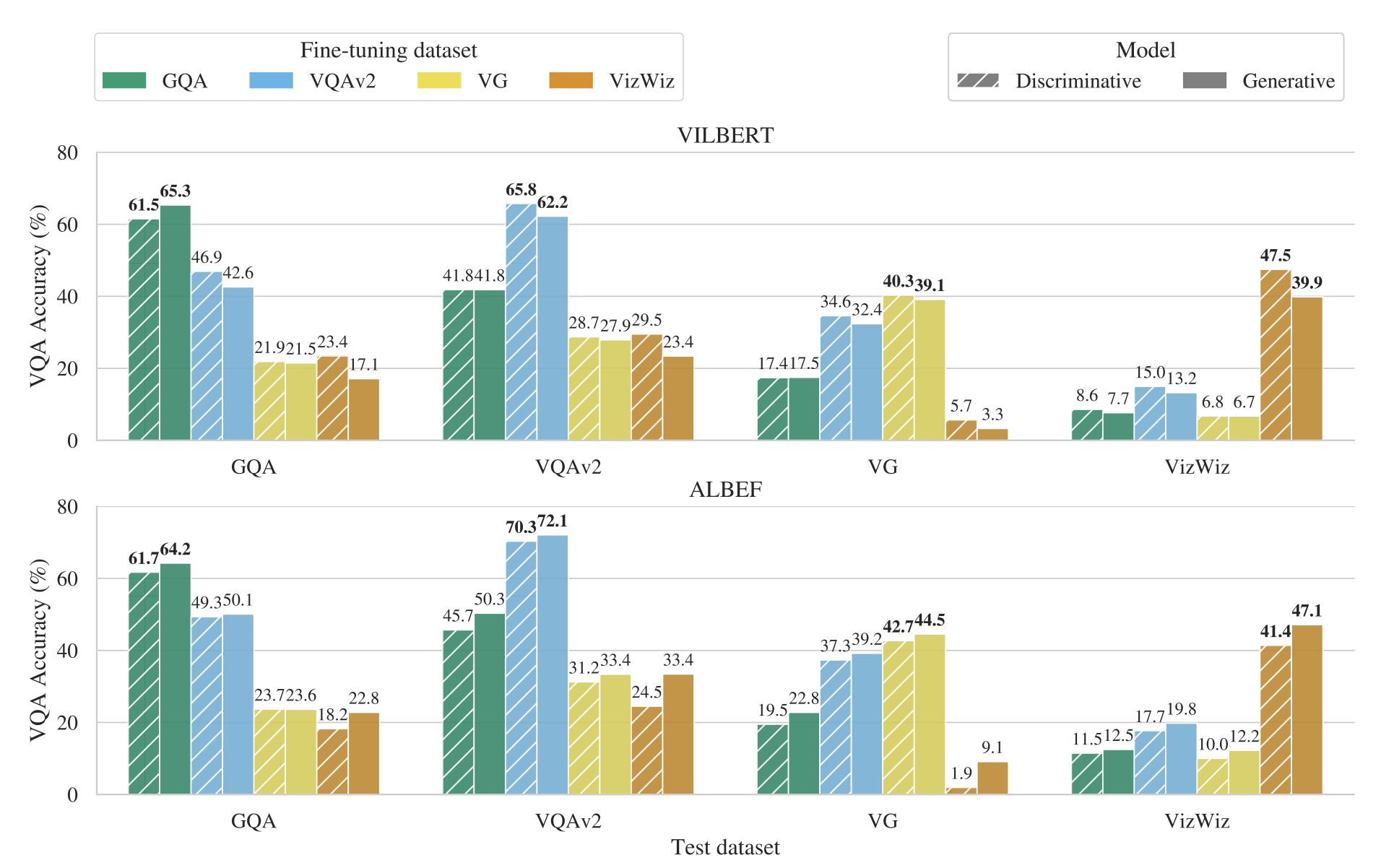

Rethinking Evaluation Practices in Visual Question Answering: A Case Study on Out-of-Distribution Generalization

Aishwarya Agrawal,

Ivana Kajić,

Emanuele Bugliarello,

Elnaz Davoodi,

Anita Gergely,

Phil Blunsom,

Aida Nematzadeh

(see paper for equal contributions)

Findings of the Association for Computational Linguistics: EACL 2023

[ArXiv]


Visual Question Answering and Beyond

Aishwarya Agrawal

PhD Dissertation, 2019

[PDF]


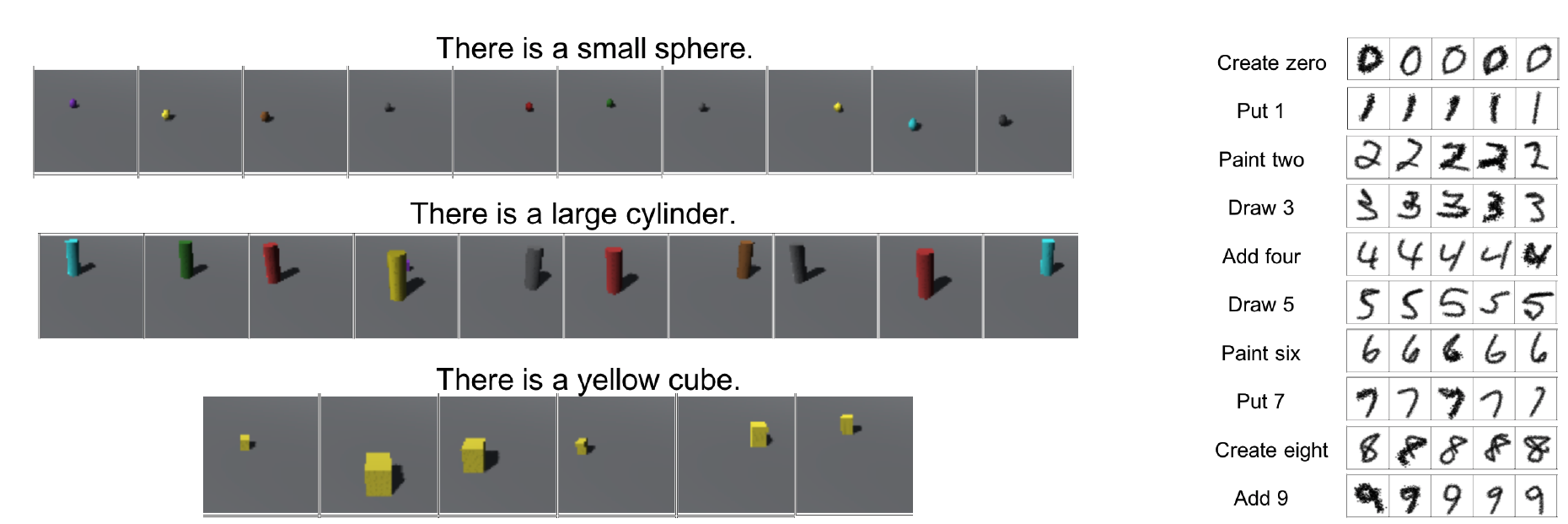

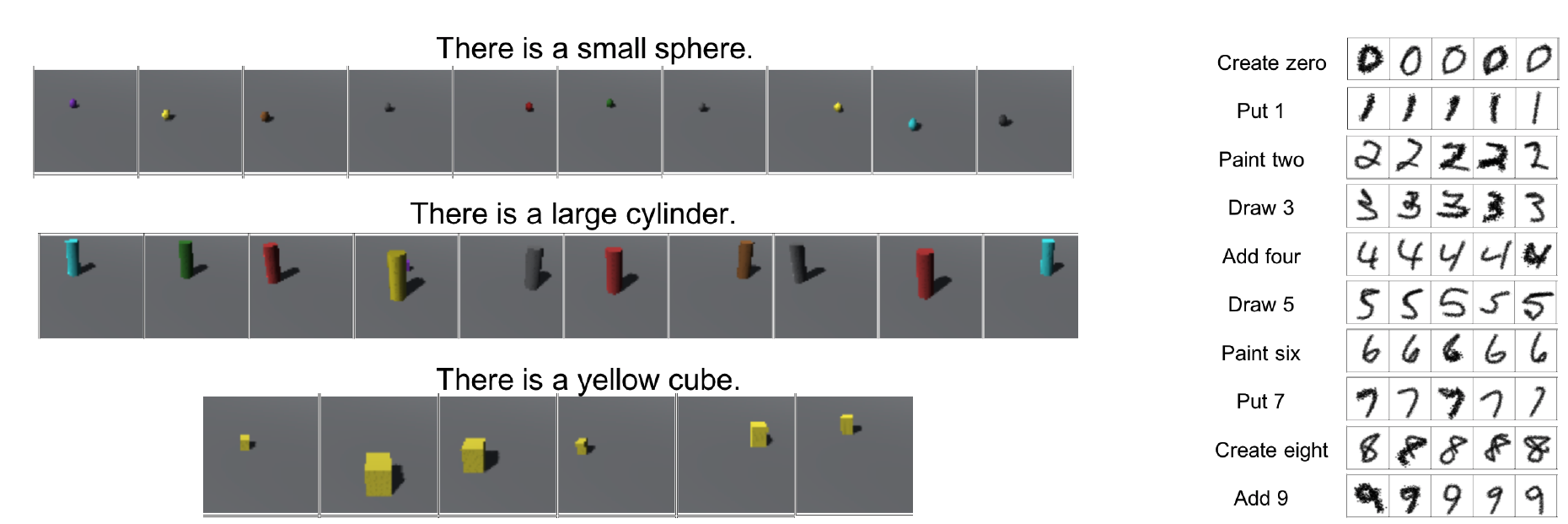

Generating Diverse Programs with Instruction Conditioned Reinforced Adversarial Learning

Aishwarya Agrawal,

Mateusz Malinowski,

Felix Hill,

Ali Eslami,

Oriol Vinyals,

Tejas Kulkarni

Visually-Grounded Interaction and Language workshop (spotlight), NIPS 2018

Learning by Instruction workshop, NIPS 2018

[ArXiv]


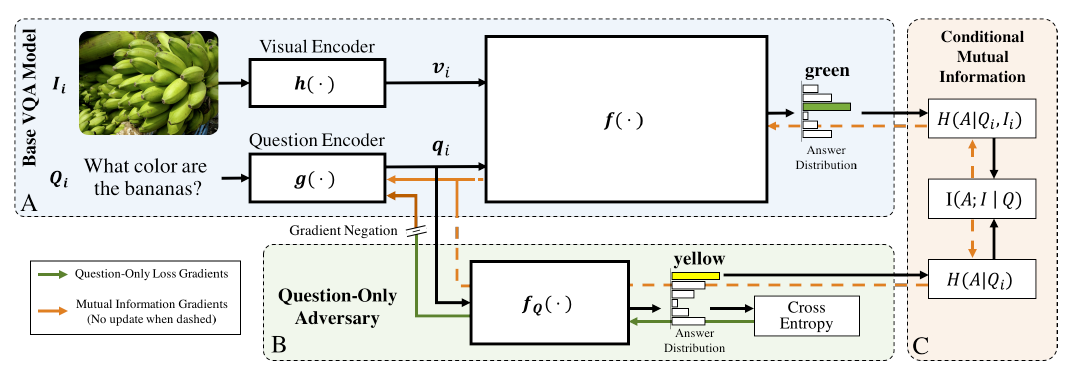

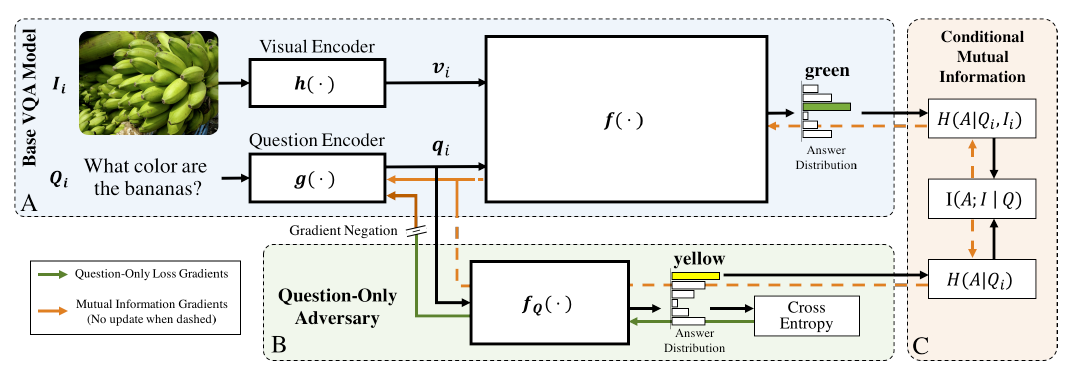

Overcoming Language Priors in Visual Question Answering with Adversarial Regularization

Sainandan Ramakrishnan,

Aishwarya Agrawal,

Stefan Lee

Neural Information Processing Systems (NIPS), 2018

[ArXiv]


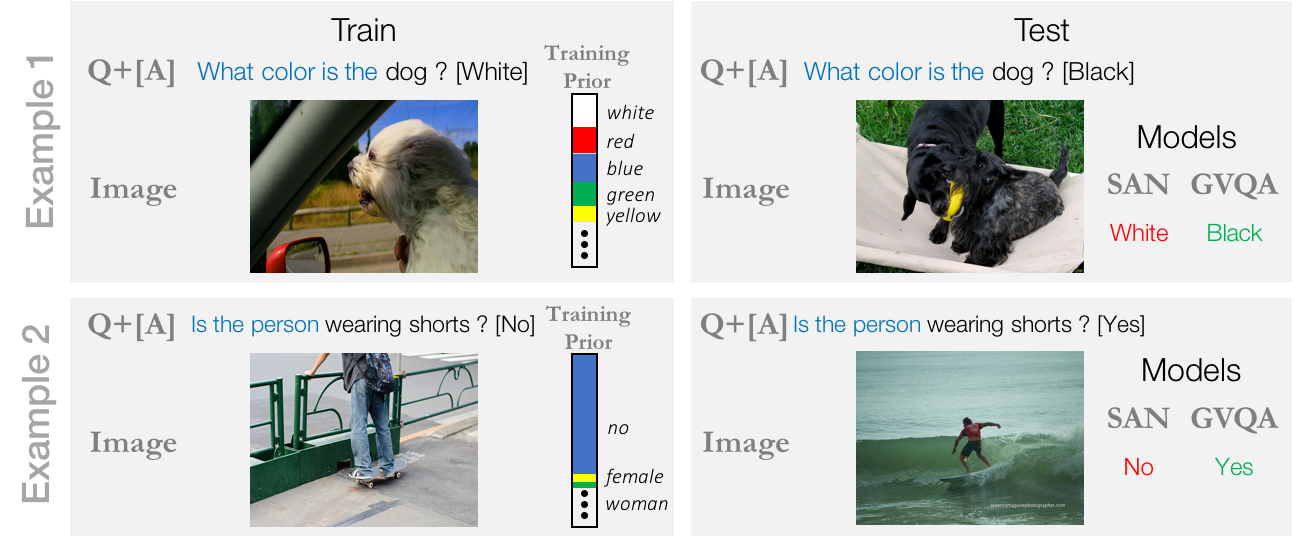

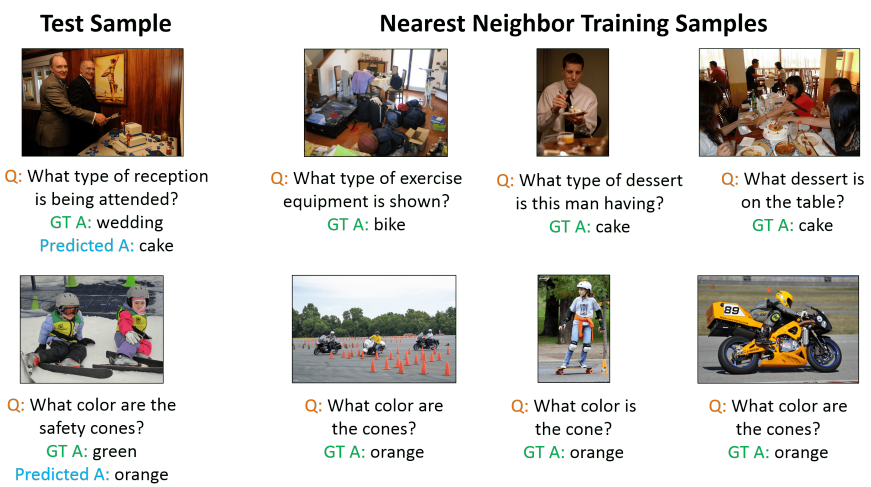

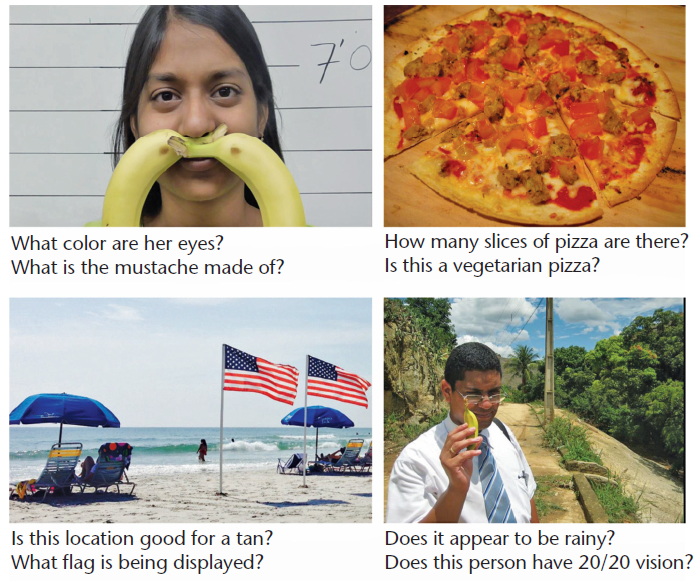

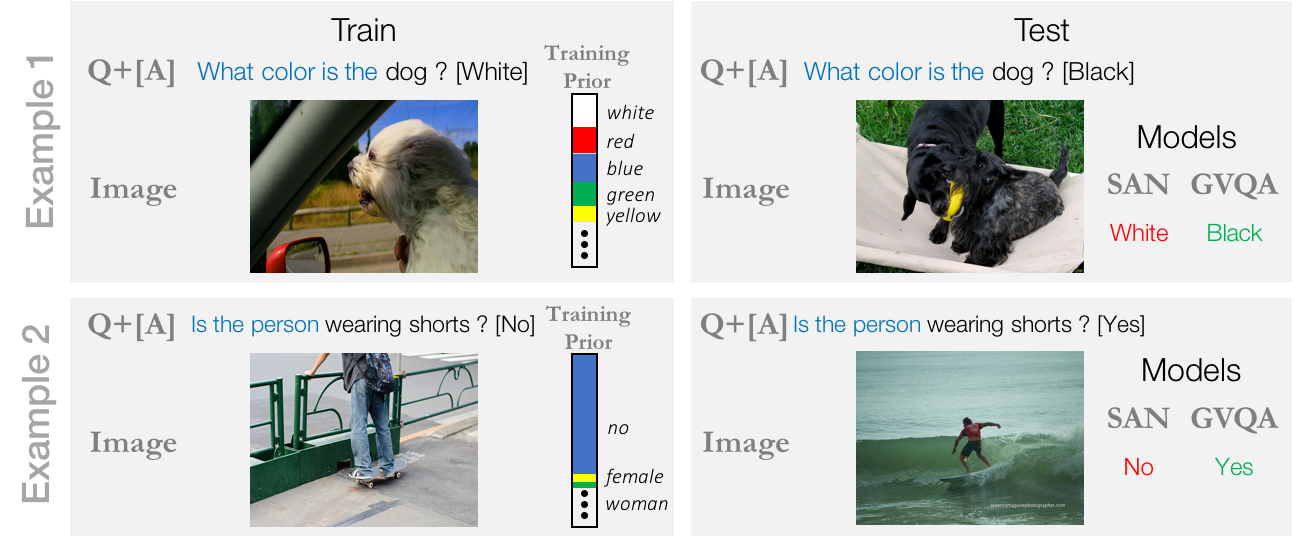

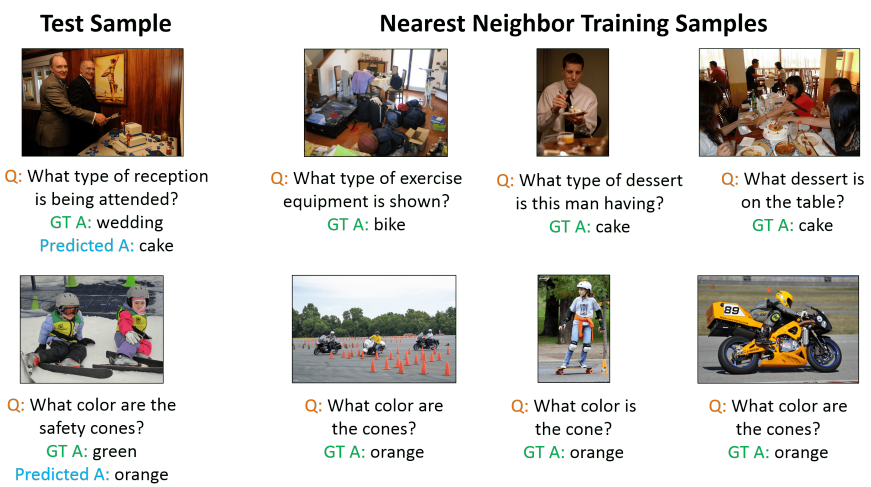

Don't Just Assume; Look and Answer: Overcoming Priors for Visual Question Answering

Aishwarya Agrawal,

Dhruv Batra,

Devi Parikh,

Aniruddha Kembhavi

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018

[ArXiv | Project Page]


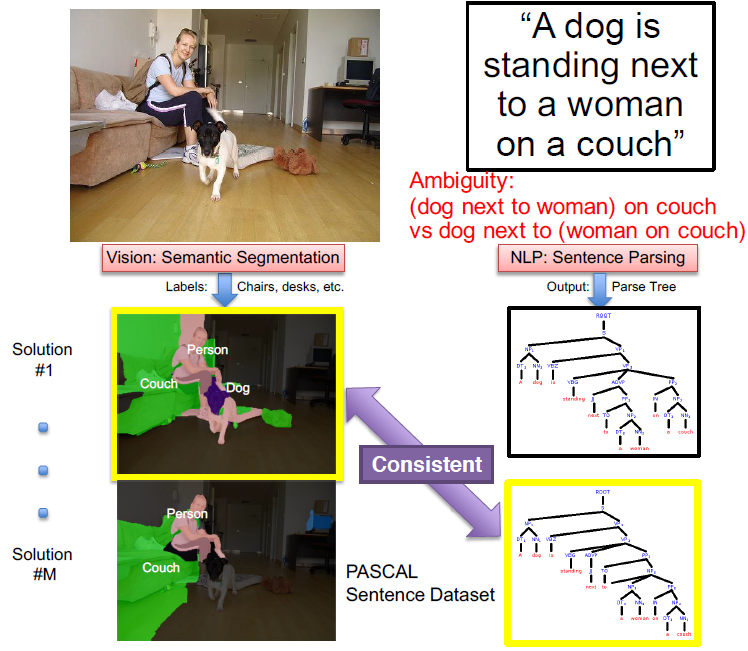

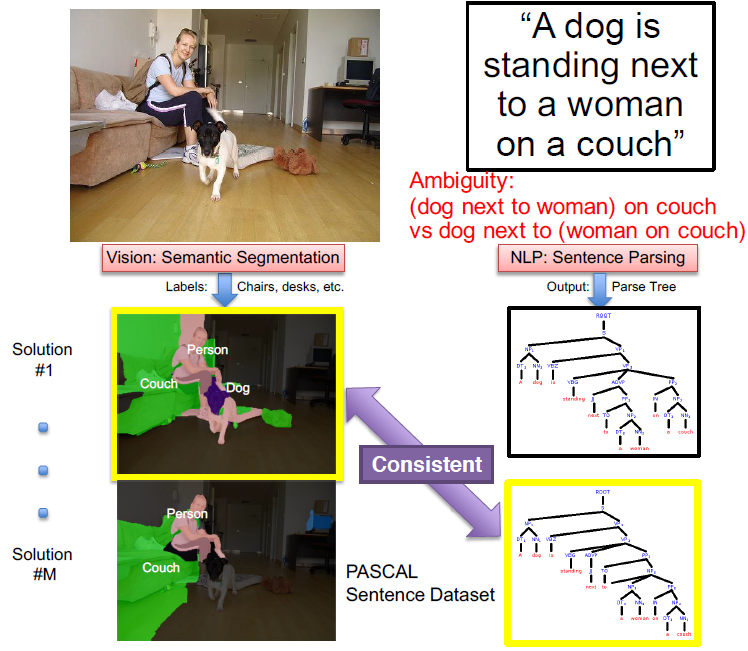

Resolving Language and Vision Ambiguities Together: Joint Segmentation & Prepositional Attachment Resolution in Captioned Scenes

Gordon Christie*,

Ankit Laddha*,

Aishwarya Agrawal,

Stanislaw Antol,

Yash Goyal,

Kevin Kochersberger,

Dhruv Batra

*equal contribution

Computer Vision and Image Understanding (CVIU), 2017

[ArXiv | Project Page]


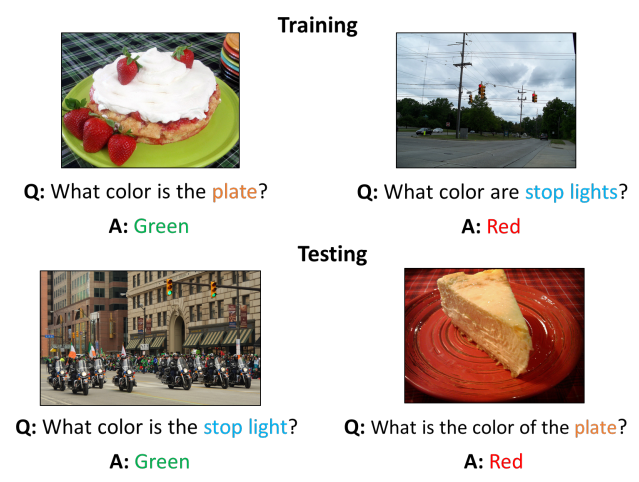

C-VQA: A Compositional Split of the Visual Question Answering (VQA) v1.0 Dataset

Aishwarya Agrawal,

Aniruddha Kembhavi,

Dhruv Batra,

Devi Parikh

arXiv preprint, arXiv:1704.08243, 2017

[ArXiv]


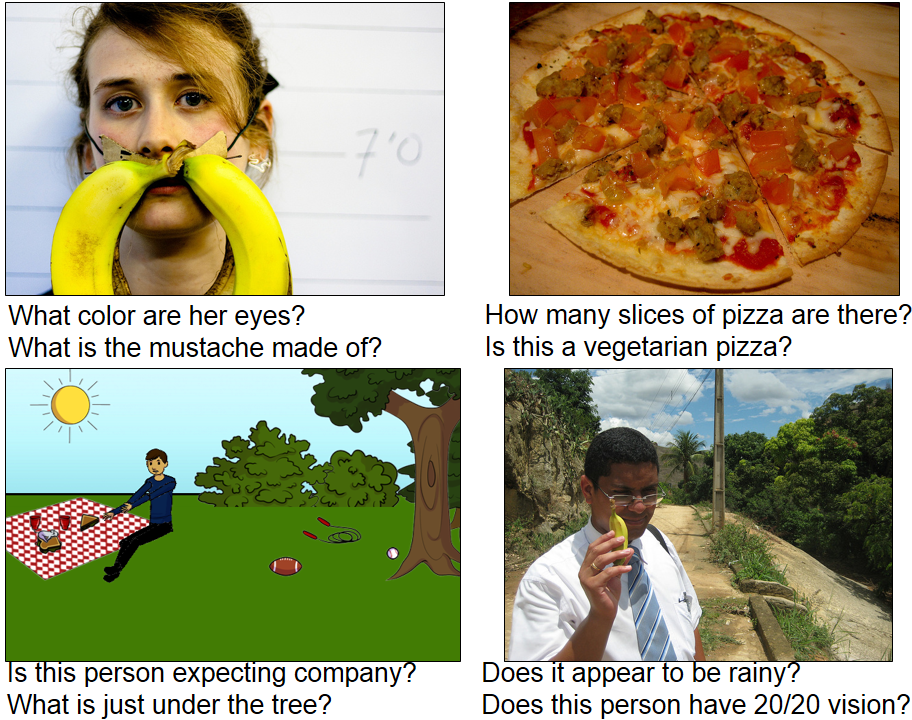

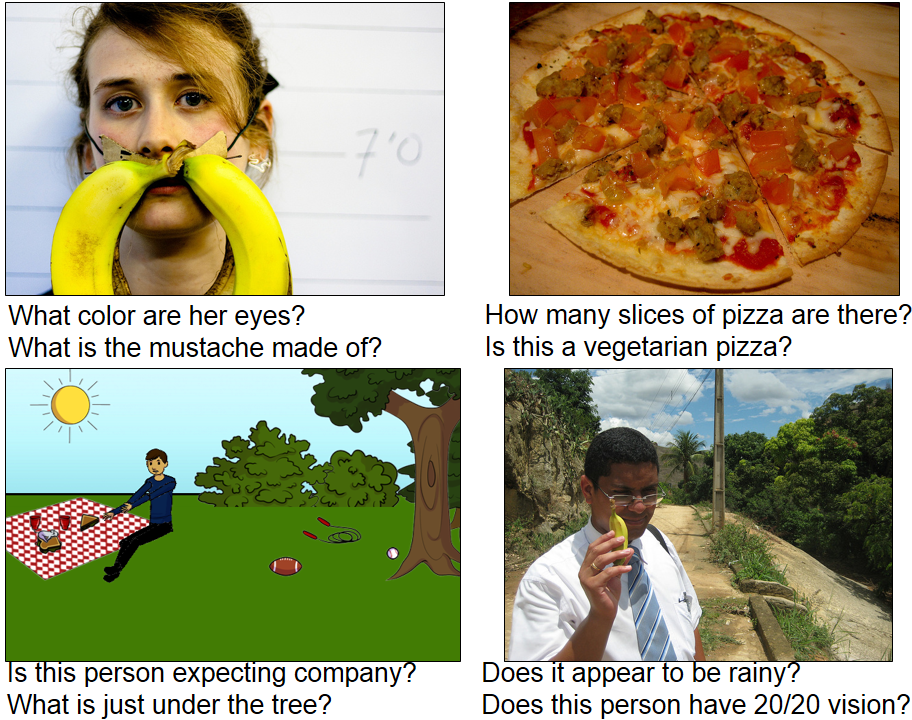

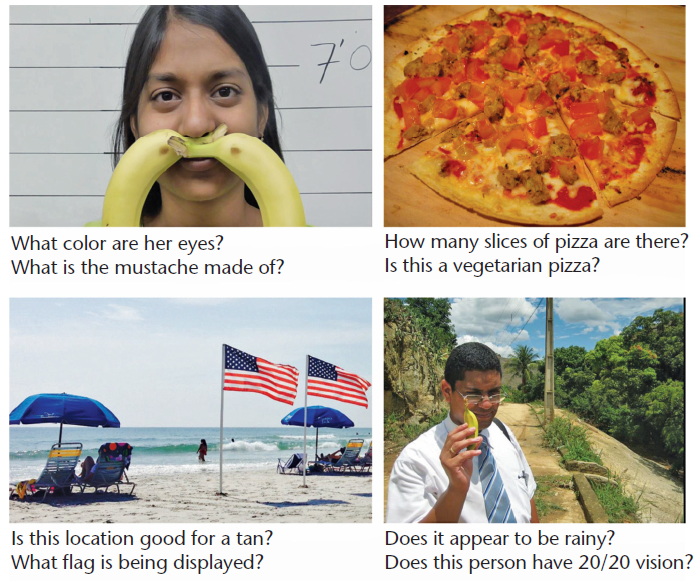

VQA: Visual Question Answering

Aishwarya Agrawal*,

Jiasen Lu*,

Stanislaw Antol*,

Margaret Mitchell,

Larry Zitnick,

Devi Parikh,

Dhruv Batra

*equal contribution

Special Issue on Combined Image and Language Understanding, International Journal of Computer Vision (IJCV), 2017

[ArXiv | visualqa.org (data, code, challenge) | slides | talk at GPU Technology Conference (GTC) 2016]


Analyzing the Behavior of Visual Question Answering Models

Aishwarya Agrawal,

Dhruv Batra,

Devi Parikh

Conference on Empirical Methods in Natural Language Processing (EMNLP), 2016

[ArXiv | slides | talk at Deep Learning Summer School, Montreal, 2016]


Resolving Language and Vision Ambiguities Together: Joint Segmentation & Prepositional Attachment Resolution in Captioned Scenes

Gordon Christie*,

Ankit Laddha*,

Aishwarya Agrawal,

Stanislaw Antol,

Yash Goyal,

Kevin Kochersberger,

Dhruv Batra

*equal contribution

Conference on Empirical Methods in Natural Language Processing (EMNLP), 2016

[ArXiv | Project Page]


Measuring Machine Intelligence Through Visual Question Answering

Larry Zitnick,

Aishwarya Agrawal,

Stanislaw Antol,

Margaret Mitchell,

Dhruv Batra,

Devi Parikh

AI Magazine, 2016

[Paper | ArXiv]


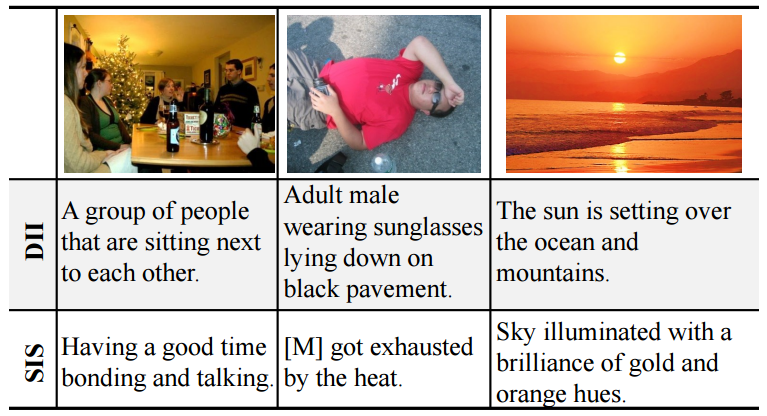

Visual Storytelling

Ting-Hao Huang,

Francis Ferraro,

Nasrin Mostafazadeh,

Ishan Misra,

Aishwarya Agrawal,

Jacob Devlin,

Ross Girshick,

Xiaodong He,

Pushmeet Kohli,

Dhruv Batra,

Larry Zitnick,

Devi Parikh,

Lucy Vanderwende,

Michel Galley,

Margaret Mitchell

Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT), 2016

[ArXiv | Project Page]


VQA: Visual Question Answering

Stanislaw Antol*,

Aishwarya Agrawal*,

Jiasen Lu,

Margaret Mitchell,

Dhruv Batra,

Larry Zitnick,

Devi Parikh

*equal contribution

International Conference on Computer Vision (ICCV), 2015

[ICCV Camera Ready Paper | ArXiv | ICCV Spotlight | visualqa.org (data, code, challenge) | slides | talk at GPU Technology Conference (GTC) 2016]


A Novel LBP Based Operator for Tone Mapping HDR Images

Aishwarya Agrawal,

Shanmuganathan Raman

International Conference on Signal Processing and Communications (SPCOM), 2014

[Paper | Poster]


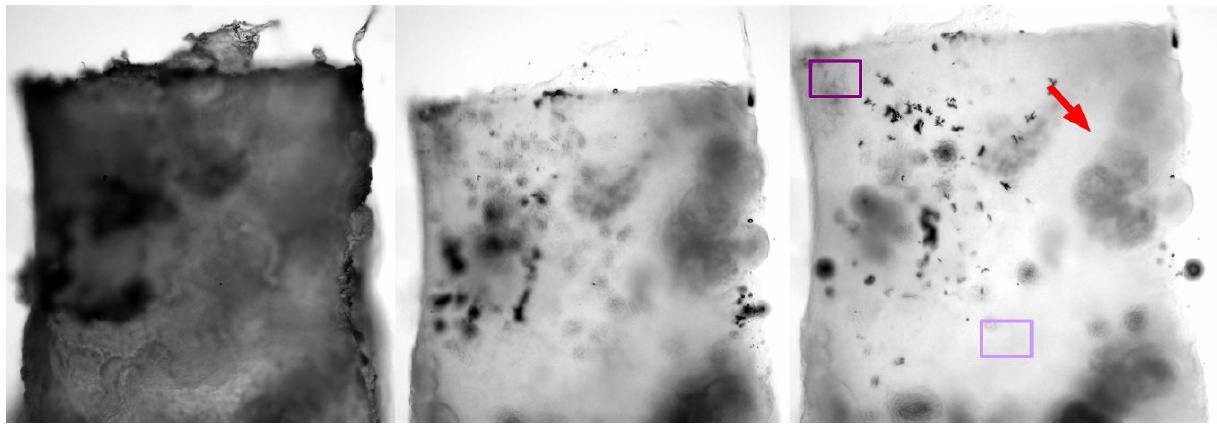

Optically clearing tissue as an initial step for 3D imaging of core biopsies to diagnose pancreatic cancer

Ronnie Das,

Aishwarya Agrawal,

Melissa P. Upton,

Eric J. Seibel

SPIE BiOS, International Society for Optics and Photonics, 2014

[Paper]
