|

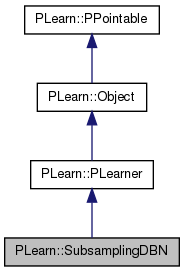

PLearn 0.1


Neural net, learned layer-wise in a greedy fashion.

#include <SubsamplingDBN.h>

Public Member Functions | |

| SubsamplingDBN () | |

| Default constructor. | |

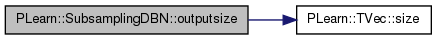

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). | |

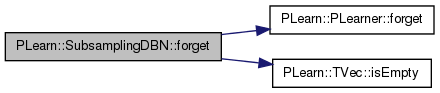

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

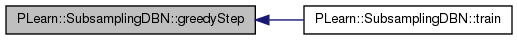

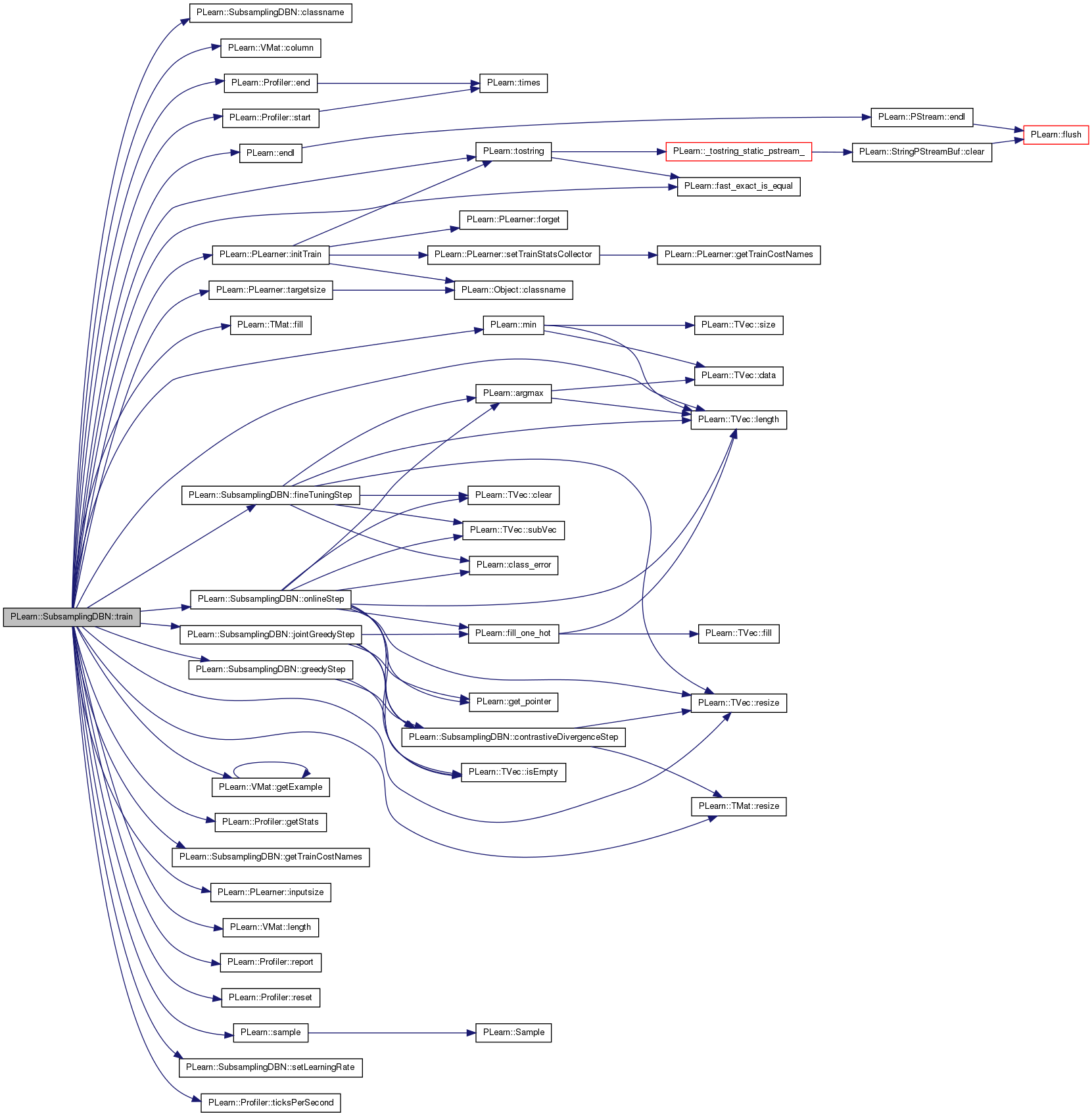

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

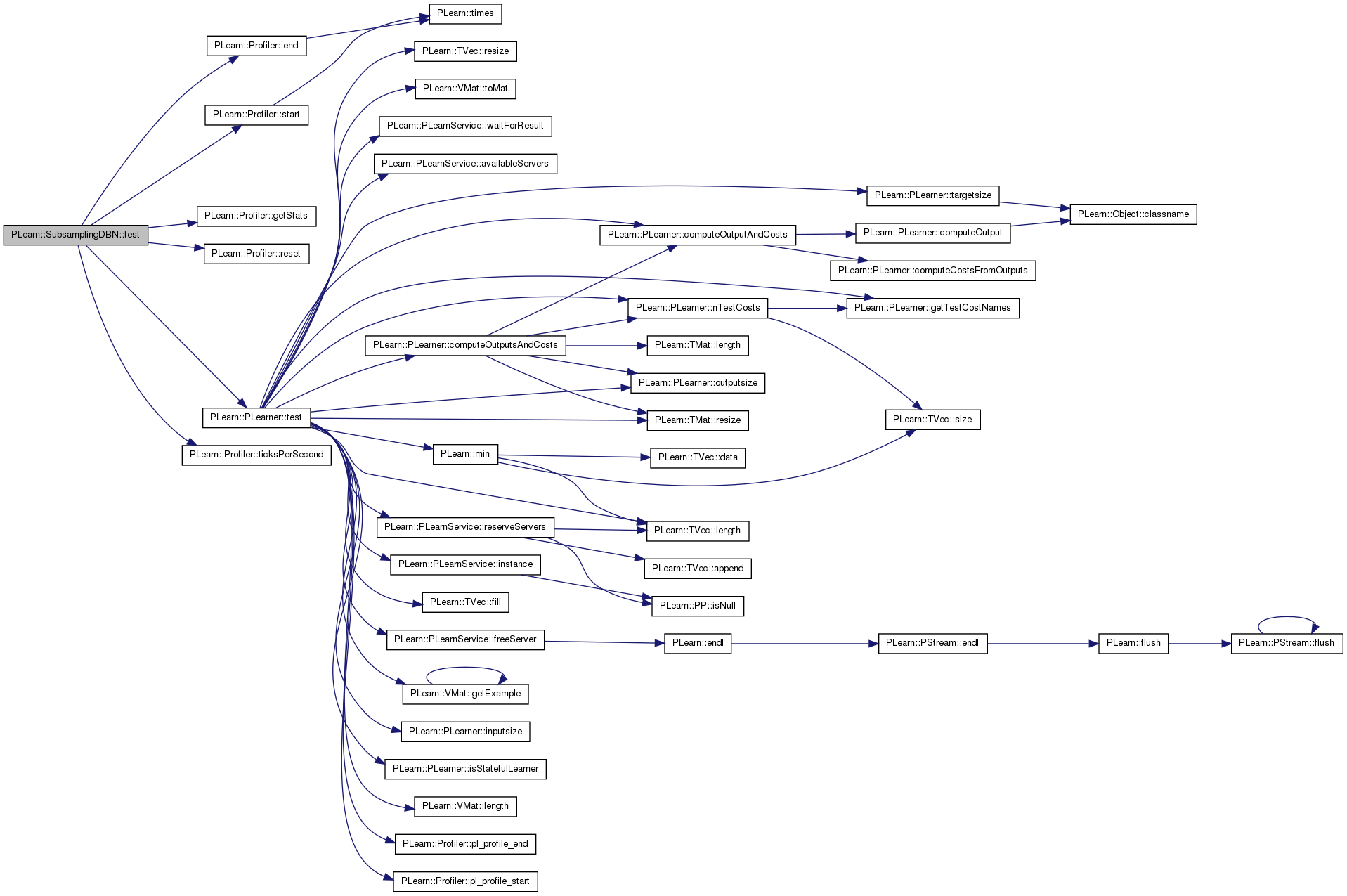

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Re-implementation of the PLearner test() for profiling purposes. | |

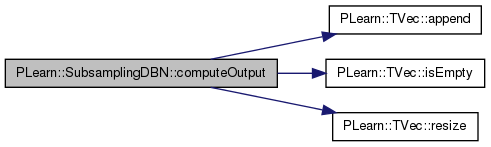

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

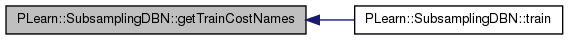

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

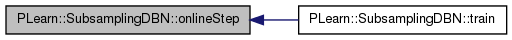

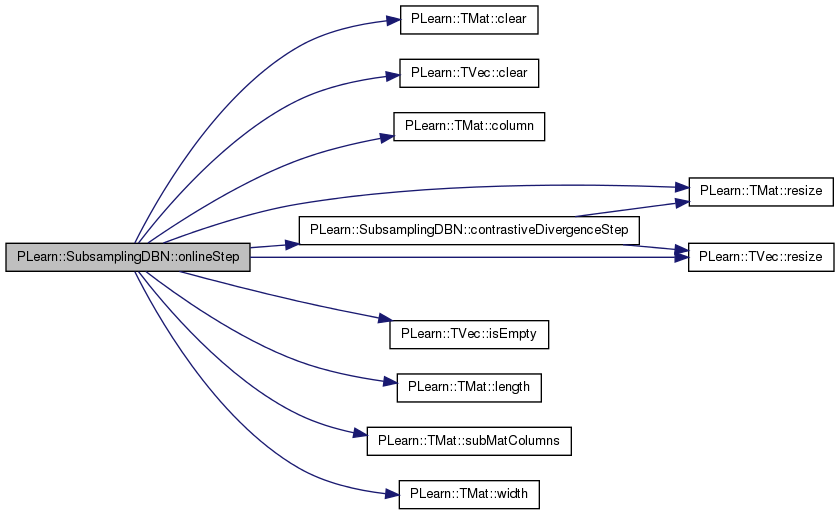

| void | onlineStep (const Vec &input, const Vec &target, Vec &train_costs) |

| void | onlineStep (const Mat &inputs, const Mat &targets, Mat &train_costs) |

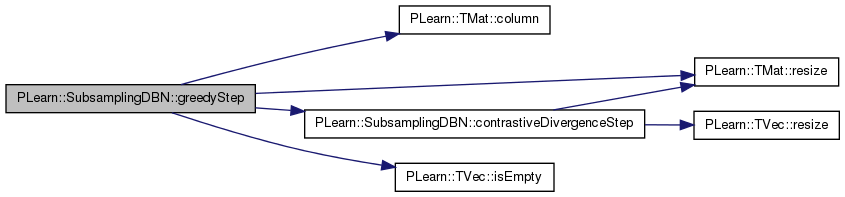

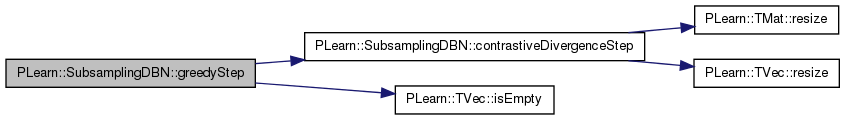

| void | greedyStep (const Vec &input, const Vec &target, int index) |

| void | greedyStep (const Mat &inputs, const Mat &targets, int index, Mat &train_costs_m) |

| Greedy step with mini-batches. | |

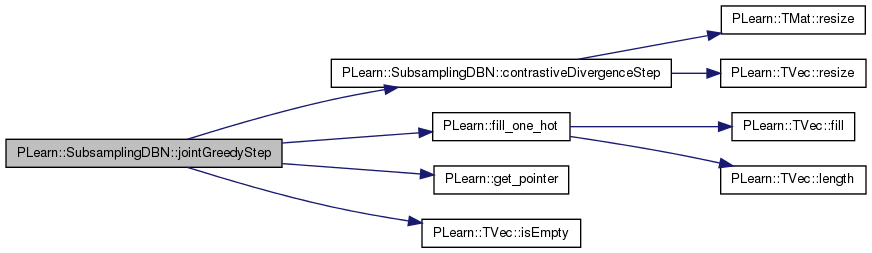

| void | jointGreedyStep (const Vec &input, const Vec &target) |

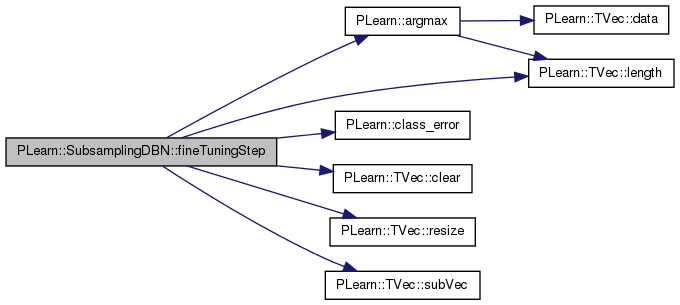

| void | fineTuningStep (const Vec &input, const Vec &target, Vec &train_costs) |

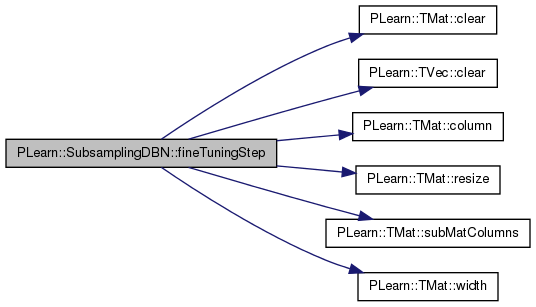

| void | fineTuningStep (const Mat &inputs, const Mat &targets, Mat &train_costs) |

| Fine tuning step with mini-batches. | |

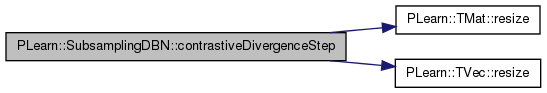

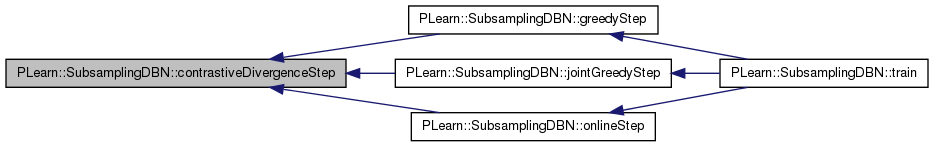

| void | contrastiveDivergenceStep (const PP< RBMLayer > &down_layer, const PP< RBMConnection > &connection, const PP< RBMLayer > &up_layer, int layer_index, bool nofprop=false) |

| Perform a step of contrastive divergence, assuming that down_layer->expectation(s) is set. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual SubsamplingDBN * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

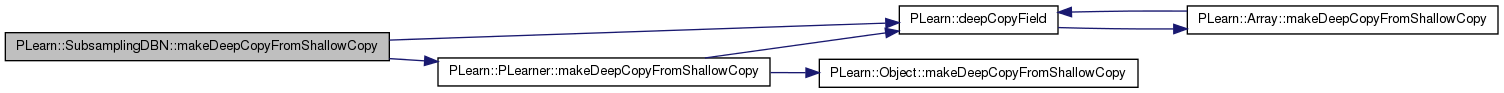

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | cd_learning_rate |

| The learning rate used during contrastive divergence learning. | |

| real | grad_learning_rate |

| The learning rate used during the gradient descent. | |

| int | batch_size |

| real | grad_decrease_ct |

| The decrease constant of the learning rate used during gradient descent. | |

| int | n_classes |

| Number of classes in the training set. | |

| TVec< int > | training_schedule |

| Number of examples to use during each phase of learning: first the greedy phases, and then the fine-tuning phase. | |

| bool | use_classification_cost |

| Whether the first cost function is the NLL in classification, pre-trained with CD, and using the last *two* layers to get a better approximation (undirected softmax) than layer-wise mean-field. | |

| bool | reconstruct_layerwise |

| Minimize reconstruction error of each layer as an auto-encoder. | |

| TVec< PP< RBMLayer > > | layers |

| The layers of units in the network. | |

| TVec< PP< RBMConnection > > | connections |

| The weights of the connections between the layers. | |

| PP< OnlineLearningModule > | final_module |

| Optional module that takes as input the output of the last layer (layers[n_layers-1]); its output is fed to final_cost and concatenated with that of classification_cost (if present) to form the output of the learner. | |

| PP< CostModule > | final_cost |

| The cost function to be applied on top of the DBN (or of final_module if provided). | |

| TVec< PP< CostModule > > | partial_costs |

| The different cost functions to be applied on top of each layer (except the first one, which has to be null) of the RBM. | |

| bool | independent_biases |

| In an RBMLayer, do we want the bias during up and down propagations to be potentially different? | |

| TVec< PP< OnlineLearningModule > > | subsampling_modules |

| Different subsampling modules, to be applied on top of RBMs when they're already learned. | |

| PP< RBMClassificationModule > | classification_module |

| The module computing the probabilities of the different classes. | |

| int | n_layers |

| Number of layers. | |

| TVec< string > | cost_names |

| The computed cost names. | |

| bool | online |

| Whether to do things by stages, including fine-tuning, or on-line. | |

| real | background_gibbs_update_ratio |

| bool | top_layer_joint_cd |

| Whether we do a step of joint contrastive divergence on the top layer. Only used if online for the moment. | |

| int | gibbs_chain_reinit_freq |

| Number of examples after which the Gibbs chains are re-initialized (if == INT_MAX, the default, then NEVER re-initialize except when stage==0). | |

| TVec< PP< RBMLayer > > | reduced_layers |

| Layers of reduced size, to be put on top of subsampling modules. If the subsampling module is null, it will be either the same as the one in 'layers' (default), or a copy of it (with independent biases) if 'independent_biases' is true. | |

| PP< PTimer > | timer |

| Timer for monitoring the speed. | |

| PP< NLLCostModule > | classification_cost |

| The module computing the classification cost function (NLL) on top of classification_module. | |

| PP< RBMMixedLayer > | joint_layer |

| Concatenation of layers[n_layers-2] and the target layer (that is inside classification_module), if use_classification_cost. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| int | minibatch_size |

| bool | initialize_gibbs_chain |

| TVec< Vec > | activation_gradients |

| Stores the gradient of the cost wrt the activations (at the input of the layers) | |

| TVec< Mat > | activations_gradients |

| For mini-batch. | |

| TVec< Vec > | expectation_gradients |

| Stores the gradient of the cost wrt the expectations (at the output of the layers) | |

| TVec< Mat > | expectations_gradients |

| For mini-batch. | |

| TVec< Vec > | subsampling_gradients |

| Vec | final_cost_input |

| Mat | final_cost_inputs |

| For mini-batch. | |

| Vec | final_cost_value |

| Mat | final_cost_values |

| For mini-batch. | |

| Vec | final_cost_output |

| Vec | class_output |

| Vec | class_gradient |

| Vec | final_cost_gradient |

| Stores the gradient of the cost at the input of final_cost. | |

| Mat | final_cost_gradients |

| For mini-batch. | |

| Vec | save_layer_activation |

| Buffers the bottom layer activation during onlineStep. | |

| Mat | save_layer_activations |

| For mini-batches. | |

| Vec | save_layer_expectation |

| Buffers the bottom layer expectation during onlineStep. | |

| Mat | save_layer_expectations |

| For mini-batches. | |

| bool | final_module_has_learning_rate |

| Does final_module exist and have a "learning_rate" option. | |

| bool | final_cost_has_learning_rate |

| Does final_cost exist and have a "learning_rate" option. | |

| Vec | pos_down_val |

| Store a copy of the positive phase values. | |

| Vec | pos_up_val |

| Mat | pos_down_vals |

| Mat | pos_up_vals |

| Mat | cd_neg_down_vals |

| Mat | cd_neg_up_vals |

| TVec< Mat > | gibbs_down_state |

| Store the state of the Gibbs chain for each RBM. | |

| Vec | optimized_costs |

| Used to store the costs optimized by the final cost module. | |

| Vec | reconstruction_costs |

| Stores reconstruction costs. | |

| int | nll_cost_index |

| Keeps the index of the NLL cost in train_costs. | |

| int | class_cost_index |

| Keeps the index of the class_error cost in train_costs. | |

| int | final_cost_index |

| Keeps the beginning index of the final costs in train_costs. | |

| TVec< int > | partial_costs_indices |

| Keeps the beginning indices of the partial costs in train_costs. | |

| int | reconstruction_cost_index |

| Keeps the beginning index of the reconstruction costs in train_costs. | |

| int | training_cpu_time_cost_index |

| Index of the CPU time cost (for each call of train()). | |

| int | cumulative_training_time_cost_index |

| The index of the cumulative training time cost. | |

| int | cumulative_testing_time_cost_index |

| The index of the cumulative testing time cost. | |

| real | cumulative_training_time |

| Holds the total training (cpu)time. | |

| real | cumulative_testing_time |

| Holds the total testing (cpu)time. | |

| TVec< int > | cumulative_schedule |

| Cumulative training schedule. | |

| Vec | layer_input |

| Mat | layer_inputs |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | build_layers_and_connections () |

| void | build_costs () |

| void | build_classification_cost () |

| void | build_final_cost () |

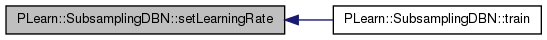

| void | setLearningRate (real the_learning_rate) |

Neural net, learned layer-wise in a greedy fashion.

This version supports different unit types, different connection types, and different cost functions, including the NLL in classification.

Definition at line 61 of file SubsamplingDBN.h.

| typedef PLearner PLearn::SubsamplingDBN::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 63 of file SubsamplingDBN.h.

| PLearn::SubsamplingDBN::SubsamplingDBN | ( | ) |

Default constructor.

Definition at line 59 of file SubsamplingDBN.cc.

References PLearn::PLearner::random_gen.

:

cd_learning_rate( 0. ),

grad_learning_rate( 0. ),

batch_size( 1 ),

grad_decrease_ct( 0. ),

// grad_weight_decay( 0. ),

n_classes( -1 ),

use_classification_cost( true ),

reconstruct_layerwise( false ),

independent_biases( false ),

n_layers( 0 ),

online ( false ),

background_gibbs_update_ratio(0),

gibbs_chain_reinit_freq( INT_MAX ),

minibatch_size( 0 ),

initialize_gibbs_chain( false ),

final_module_has_learning_rate( false ),

final_cost_has_learning_rate( false ),

nll_cost_index( -1 ),

class_cost_index( -1 ),

final_cost_index( -1 ),

reconstruction_cost_index( -1 ),

training_cpu_time_cost_index ( -1 ),

cumulative_training_time_cost_index ( -1 ),

cumulative_testing_time_cost_index ( -1 ),

cumulative_training_time( 0 ),

cumulative_testing_time( 0 )

{

random_gen = new PRandom();

}

| string PLearn::SubsamplingDBN::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file SubsamplingDBN.cc.

| OptionList & PLearn::SubsamplingDBN::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file SubsamplingDBN.cc.

| RemoteMethodMap & PLearn::SubsamplingDBN::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file SubsamplingDBN.cc.

| bool PLearn::SubsamplingDBN::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file SubsamplingDBN.cc.

| Object * PLearn::SubsamplingDBN::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 54 of file SubsamplingDBN.cc.

| void PLearn::SubsamplingDBN::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file SubsamplingDBN.cc.

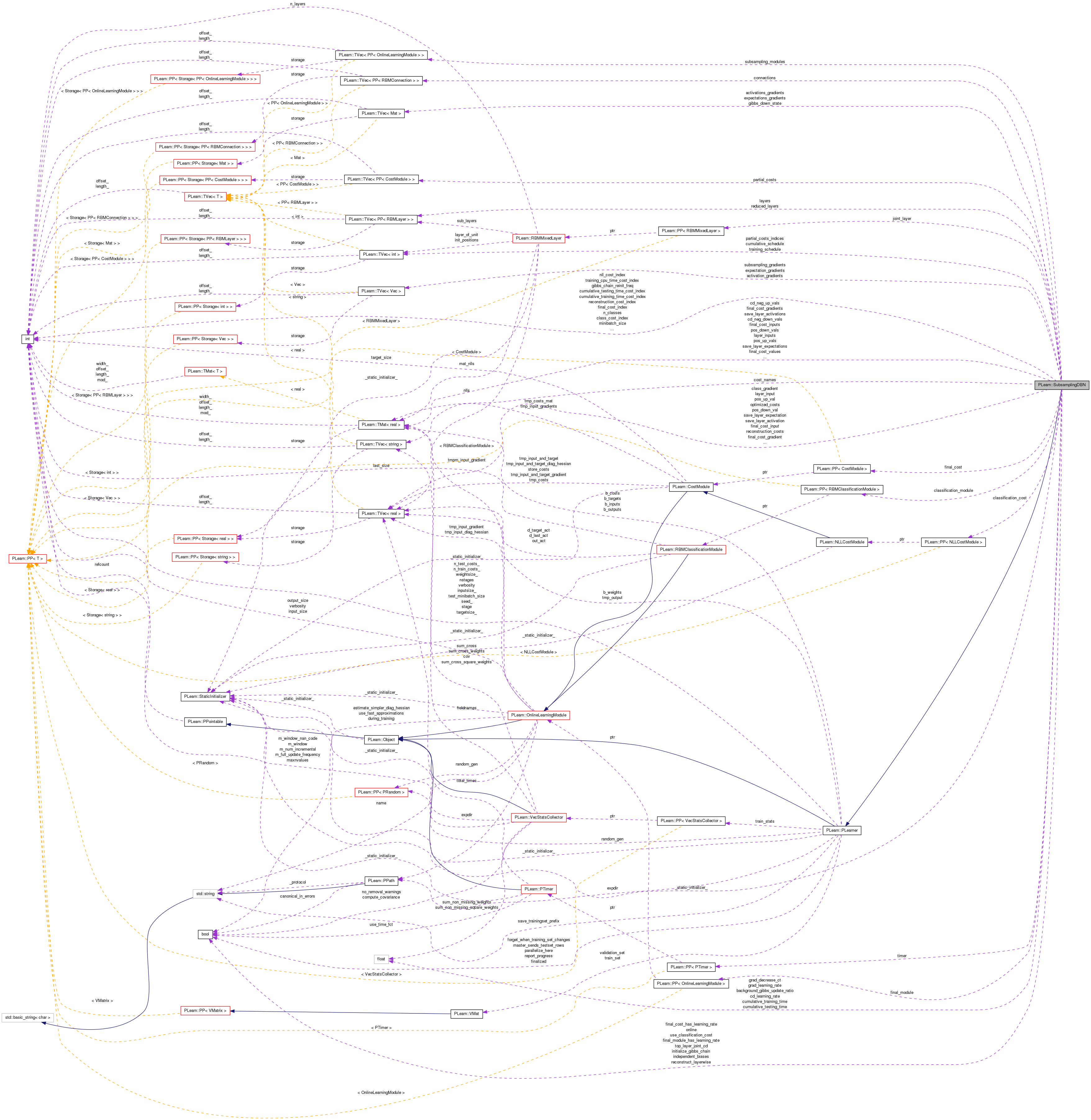

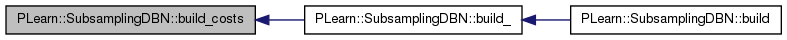

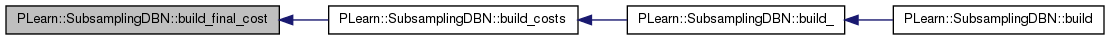

| void PLearn::SubsamplingDBN::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 681 of file SubsamplingDBN.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

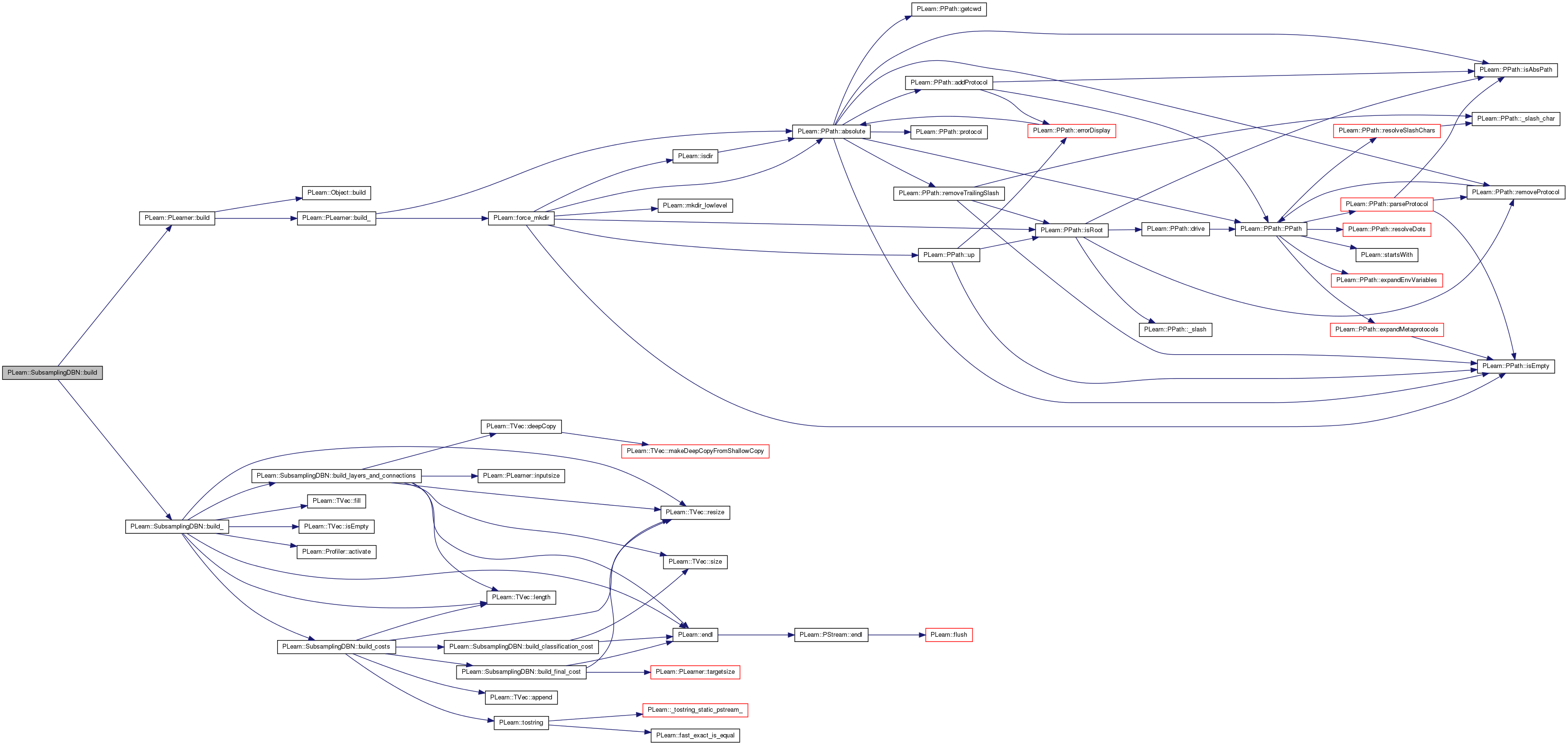

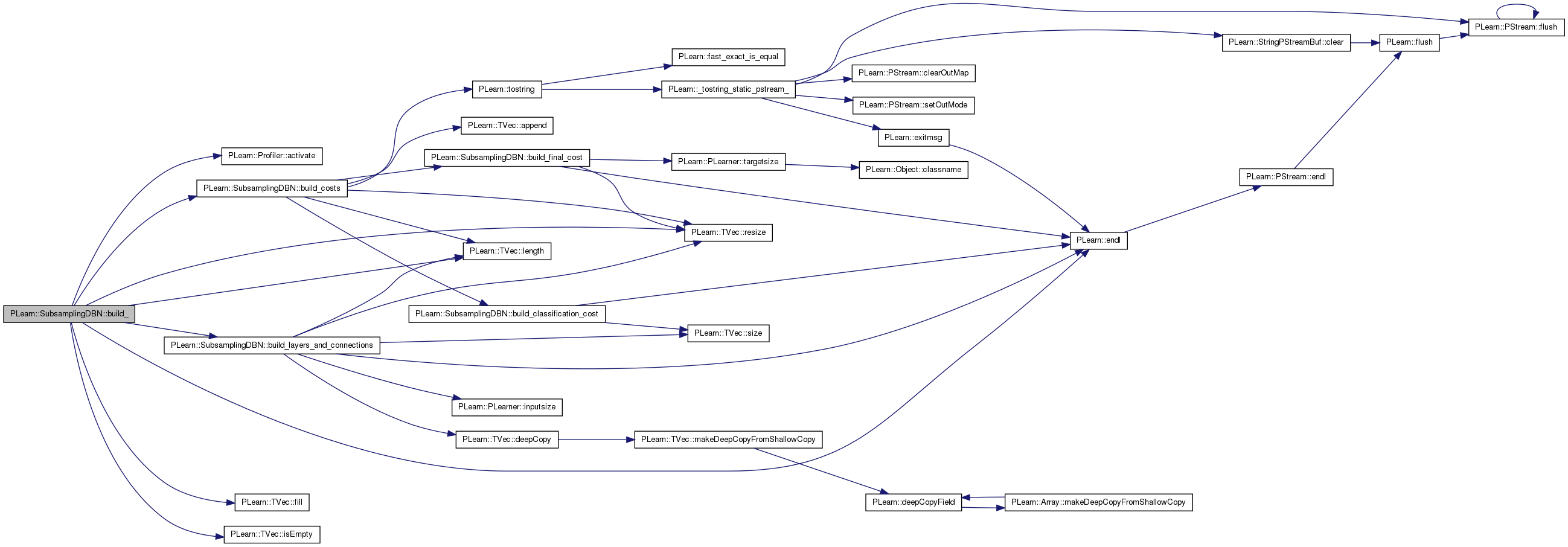

| void PLearn::SubsamplingDBN::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 306 of file SubsamplingDBN.cc.

References PLearn::Profiler::activate(), batch_size, build_costs(), build_layers_and_connections(), cumulative_schedule, PLearn::endl(), PLearn::TVec< T >::fill(), i, PLearn::TVec< T >::isEmpty(), layers, PLearn::TVec< T >::length(), n_layers, online, PLASSERT, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), and training_schedule.

Referenced by build().

{

PLASSERT( batch_size >= 0 );

MODULE_LOG << "build_() called" << endl;

// Initialize some learnt variables

if (layers.isEmpty())

PLERROR("In SubsamplingDBN::build_ - You must provide at least one RBM "

"layer through the 'layers' option");

else

n_layers = layers.length();

if( !online )

{

if( training_schedule.length() != n_layers )

{

PLWARNING("In SubsamplingDBN::build_ - training_schedule.length() "

"!= n_layers, resizing and zeroing");

training_schedule.resize( n_layers );

training_schedule.fill( 0 );

}

cumulative_schedule.resize( n_layers+1 );

cumulative_schedule[0] = 0;

for( int i=0 ; i<n_layers ; i++ )

{

cumulative_schedule[i+1] = cumulative_schedule[i] +

training_schedule[i];

}

}

build_layers_and_connections();

// Activate the profiler

Profiler::activate();

build_costs();

}
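The schedule bookkeeping in build_() amounts to a running sum over training_schedule. The sketch below reproduces that loop with standard containers only; `cumulativeSchedule` and `phaseForStage` are hypothetical helpers, not PLearn functions, and the phase lookup is only an assumption about how such a table might be consulted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): mirrors the loop in build_().
// cumulative[i] is the running total of examples scheduled before phase i,
// and the last entry is the total over all phases.
std::vector<int> cumulativeSchedule(const std::vector<int>& training_schedule)
{
    std::vector<int> cumulative(training_schedule.size() + 1, 0);
    for (std::size_t i = 0; i < training_schedule.size(); ++i)
        cumulative[i + 1] = cumulative[i] + training_schedule[i];
    return cumulative;
}

// Hypothetical helper: index of the phase whose half-open interval
// [cumulative[i], cumulative[i+1]) contains `stage`, or -1 if none does.
int phaseForStage(const std::vector<int>& cumulative, int stage)
{
    for (std::size_t i = 0; i + 1 < cumulative.size(); ++i)
        if (stage >= cumulative[i] && stage < cumulative[i + 1])
            return static_cast<int>(i);
    return -1;
}
```

With a schedule of 100, 200 and 50 examples, the cumulative table is 0, 100, 300, 350, so stage 100 falls in phase 1 under this reading.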

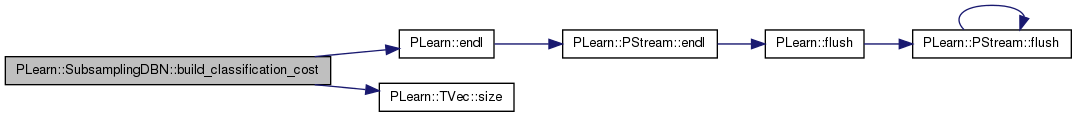

| void PLearn::SubsamplingDBN::build_classification_cost | ( | ) | [private] |

Definition at line 556 of file SubsamplingDBN.cc.

References batch_size, classification_cost, classification_module, connections, PLearn::endl(), joint_layer, layers, n_classes, n_layers, PLASSERT_MSG, PLERROR, PLearn::PLearner::random_gen, and PLearn::TVec< T >::size().

Referenced by build_costs().

{

MODULE_LOG << "build_classification_cost() called" << endl;

PLERROR( "classification_cost doesn't work with subsampling yet" );

PLASSERT_MSG(batch_size == 1, "SubsamplingDBN::build_classification_cost - "

"This method has not been verified yet for minibatch "

"compatibility");

PP<RBMMatrixConnection> last_to_target = new RBMMatrixConnection();

last_to_target->up_size = layers[n_layers-1]->size;

last_to_target->down_size = n_classes;

last_to_target->random_gen = random_gen;

last_to_target->build();

PP<RBMMultinomialLayer> target_layer = new RBMMultinomialLayer();

target_layer->size = n_classes;

target_layer->random_gen = random_gen;

target_layer->build();

PLASSERT_MSG(n_layers >= 2, "You must specify at least two layers (the "

"input layer and one hidden layer)");

classification_module = new RBMClassificationModule();

classification_module->previous_to_last = connections[n_layers-2];

classification_module->last_layer =

(RBMBinomialLayer*) (RBMLayer*) layers[n_layers-1];

classification_module->last_to_target = last_to_target;

classification_module->target_layer = target_layer;

classification_module->random_gen = random_gen;

classification_module->build();

classification_cost = new NLLCostModule();

classification_cost->input_size = n_classes;

classification_cost->target_size = 1;

classification_cost->build();

joint_layer = new RBMMixedLayer();

joint_layer->sub_layers.resize( 2 );

joint_layer->sub_layers[0] = layers[ n_layers-2 ];

joint_layer->sub_layers[1] = target_layer;

joint_layer->random_gen = random_gen;

joint_layer->build();

}

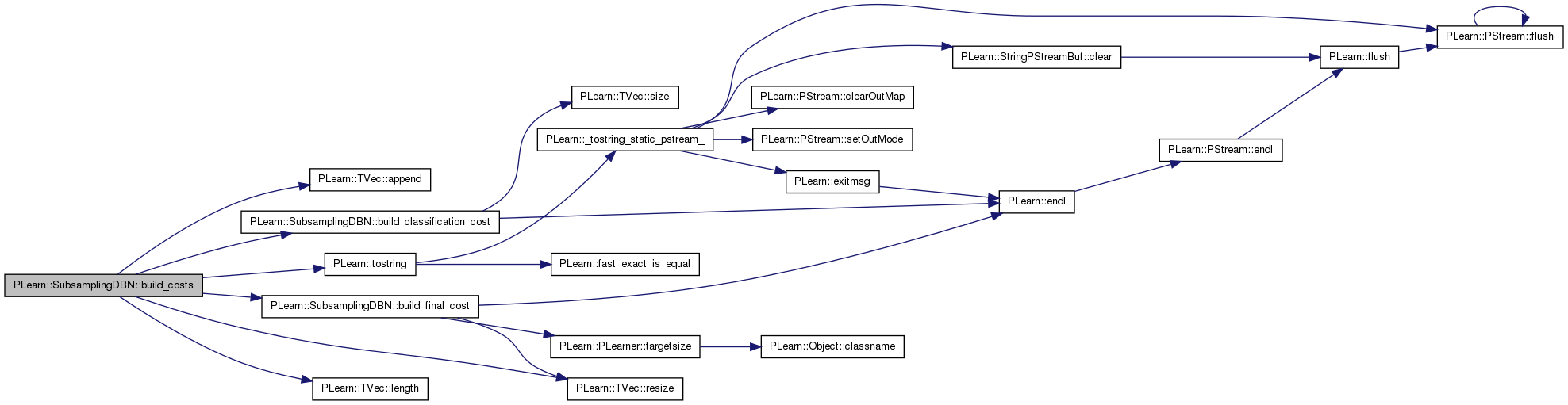

| void PLearn::SubsamplingDBN::build_costs | ( | ) | [private] |

Definition at line 349 of file SubsamplingDBN.cc.

References PLearn::TVec< T >::append(), build_classification_cost(), build_final_cost(), class_cost_index, cost_names, cumulative_testing_time_cost_index, cumulative_training_time_cost_index, final_cost, final_cost_index, i, j, PLearn::TVec< T >::length(), n_classes, n_layers, nll_cost_index, partial_costs, partial_costs_indices, PLASSERT, PLearn::PLearner::random_gen, reconstruct_layerwise, reconstruction_cost_index, reconstruction_costs, PLearn::TVec< T >::resize(), PLearn::tostring(), training_cpu_time_cost_index, and use_classification_cost.

Referenced by build_().

{

cost_names.resize(0);

int current_index = 0;

// build the classification module, its cost and the joint layer

if( use_classification_cost )

{

PLASSERT( n_classes >= 2 );

build_classification_cost();

cost_names.append("NLL");

nll_cost_index = current_index;

current_index++;

cost_names.append("class_error");

class_cost_index = current_index;

current_index++;

}

if( final_cost )

{

build_final_cost();

TVec<string> final_names = final_cost->name();

int n_final_costs = final_names.length();

for( int i=0; i<n_final_costs; i++ )

cost_names.append("final." + final_names[i]);

final_cost_index = current_index;

current_index += n_final_costs;

}

if( partial_costs )

{

int n_partial_costs = partial_costs.length();

partial_costs_indices.resize(n_partial_costs);

for( int i=0; i<n_partial_costs; i++ )

if( partial_costs[i] )

{

TVec<string> names = partial_costs[i]->name();

int n_partial_costs_i = names.length();

for( int j=0; j<n_partial_costs_i; j++ )

cost_names.append("partial"+tostring(i)+"."+names[j]);

partial_costs_indices[i] = current_index;

current_index += n_partial_costs_i;

// Share random_gen with partial_costs[i], unless it already

// has one

if( !(partial_costs[i]->random_gen) )

{

partial_costs[i]->random_gen = random_gen;

partial_costs[i]->forget();

}

}

else

partial_costs_indices[i] = -1;

}

else

partial_costs_indices.resize(0);

if( reconstruct_layerwise )

{

reconstruction_costs.resize(n_layers);

cost_names.append("layerwise_reconstruction_error");

reconstruction_cost_index = current_index;

current_index++;

for( int i=0; i<n_layers-1; i++ )

cost_names.append("layer"+tostring(i)+".reconstruction_error");

current_index += n_layers-1;

}

else

reconstruction_costs.resize(0);

cost_names.append("cpu_time");

cost_names.append("cumulative_train_time");

cost_names.append("cumulative_test_time");

training_cpu_time_cost_index = current_index;

current_index++;

cumulative_training_time_cost_index = current_index;

current_index++;

cumulative_testing_time_cost_index = current_index;

current_index++;

PLASSERT( current_index == cost_names.length() );

}
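The index bookkeeping in build_costs() can be hard to follow in the listing, so here is a reduced sketch of the same ordering using only the standard library. `CostLayout` and `layoutCosts` are hypothetical names; partial costs are left out for brevity, and a non-empty `final_names` list stands in for the presence of final_cost.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record of where each group of costs starts (-1 = absent).
struct CostLayout {
    std::vector<std::string> names;
    int nll_index = -1;
    int class_error_index = -1;
    int final_index = -1;
    int reconstruction_index = -1;
    int cpu_time_index = -1;
};

// Hypothetical helper following the order used in build_costs():
// classification costs, final costs, reconstruction costs, timing costs.
CostLayout layoutCosts(bool use_classification_cost,
                       const std::vector<std::string>& final_names,
                       bool reconstruct_layerwise,
                       int n_layers)
{
    CostLayout out;
    // The next free index is always the current number of names.
    auto next = [&out]() { return static_cast<int>(out.names.size()); };

    if (use_classification_cost) {
        out.nll_index = next();
        out.names.push_back("NLL");
        out.class_error_index = next();
        out.names.push_back("class_error");
    }
    if (!final_names.empty()) {
        out.final_index = next();
        for (const std::string& name : final_names)
            out.names.push_back("final." + name);
    }
    if (reconstruct_layerwise) {
        // One aggregate cost followed by one cost per connection.
        out.reconstruction_index = next();
        out.names.push_back("layerwise_reconstruction_error");
        for (int i = 0; i < n_layers - 1; ++i)
            out.names.push_back("layer" + std::to_string(i) +
                                ".reconstruction_error");
    }
    out.cpu_time_index = next();
    out.names.push_back("cpu_time");
    out.names.push_back("cumulative_train_time");
    out.names.push_back("cumulative_test_time");
    return out;
}
```

Because every index is taken from the current length of the name list, the final consistency check in the listing (current_index equal to the number of names) holds by construction in this sketch.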

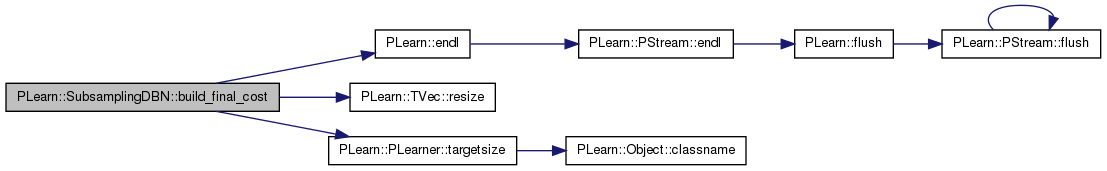

| void PLearn::SubsamplingDBN::build_final_cost | ( | ) | [private] |

Definition at line 604 of file SubsamplingDBN.cc.

References PLearn::endl(), final_cost, final_cost_gradient, final_module, grad_learning_rate, layers, n_classes, n_layers, PLASSERT_MSG, PLERROR, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::PLearner::targetsize(), and PLearn::PLearner::targetsize_.

Referenced by build_costs().

{

MODULE_LOG << "build_final_cost() called" << endl;

PLASSERT_MSG(final_cost->input_size >= 0, "The input size of the final "

"cost must be non-negative");

final_cost_gradient.resize( final_cost->input_size );

final_cost->setLearningRate( grad_learning_rate );

if( final_module )

{

if( layers[n_layers-1]->size != final_module->input_size )

PLERROR("SubsamplingDBN::build_final_cost() - "

"layers[%i]->size (%d) != final_module->input_size (%d)."

"\n", n_layers-1, layers[n_layers-1]->size,

final_module->input_size);

if( final_module->output_size != final_cost->input_size )

PLERROR("SubsamplingDBN::build_final_cost() - "

"final_module->output_size (%d) != final_cost->input_size (%d)."

"\n", final_module->output_size,

final_cost->input_size);

final_module->setLearningRate( grad_learning_rate );

// Share random_gen with final_module, unless it already has one

if( !(final_module->random_gen) )

{

final_module->random_gen = random_gen;

final_module->forget();

}

}

else

{

if( layers[n_layers-1]->size != final_cost->input_size )

PLERROR("SubsamplingDBN::build_final_cost() - "

"layers[%i]->size (%d) != final_cost->input_size (%d)."

"\n", n_layers-1, layers[n_layers-1]->size,

final_cost->input_size);

}

// check target size and final_cost->input_size

if( n_classes == 0 ) // regression

{

if( final_cost->input_size != targetsize() )

PLERROR("SubsamplingDBN::build_final_cost() - "

"final_cost->input_size (%d) != targetsize() (%d), "

"although we are doing regression (n_classes == 0).\n",

final_cost->input_size, targetsize());

}

else

{

if( final_cost->input_size != n_classes )

PLERROR("SubsamplingDBN::build_final_cost() - "

"final_cost->input_size (%d) != n_classes (%d), "

"although we are doing classification (n_classes != 0).\n",

final_cost->input_size, n_classes);

if( targetsize_ >= 0 && targetsize() != 1 )

PLERROR("SubsamplingDBN::build_final_cost() - "

"targetsize() (%d) != 1, "

"although we are doing classification (n_classes != 0).\n",

targetsize());

}

// Share random_gen with final_cost, unless it already has one

if( !(final_cost->random_gen) )

{

final_cost->random_gen = random_gen;

final_cost->forget();

}

}

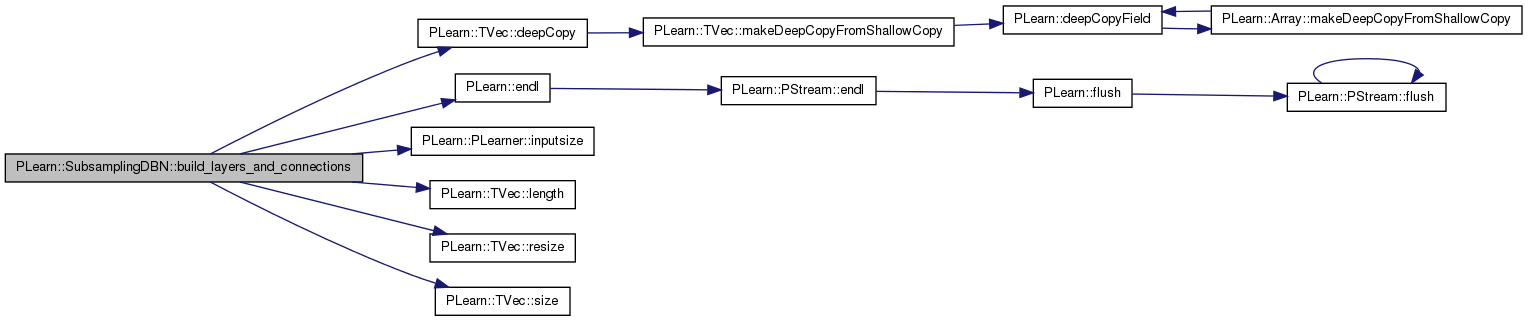

| void PLearn::SubsamplingDBN::build_layers_and_connections | ( | ) | [private] |

Definition at line 445 of file SubsamplingDBN.cc.

References activation_gradients, activations_gradients, connections, PLearn::TVec< T >::deepCopy(), PLearn::endl(), expectation_gradients, expectations_gradients, gibbs_down_state, i, independent_biases, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, layers, PLearn::TVec< T >::length(), n_layers, PLASSERT, PLASSERT_MSG, PLERROR, PLearn::PLearner::random_gen, reduced_layers, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), subsampling_gradients, and subsampling_modules.

Referenced by build_().

{

MODULE_LOG << "build_layers_and_connections() called" << endl;

if( connections.length() != n_layers-1 )

PLERROR("SubsamplingDBN::build_layers_and_connections() - \n"

"connections.length() (%d) != n_layers-1 (%d).\n",

connections.length(), n_layers-1);

if( subsampling_modules.length() == 0 )

subsampling_modules.resize(n_layers-1);

if( subsampling_modules.length() != n_layers-1 )

PLERROR("SubsamplingDBN::build_layers_and_connections() - \n"

"subsampling_modules.length() (%d) != n_layers-1 (%d).\n",

subsampling_modules.length(), n_layers-1);

if( inputsize_ >= 0 )

PLASSERT( layers[0]->size == inputsize() );

activation_gradients.resize( n_layers );

activations_gradients.resize( n_layers );

expectation_gradients.resize( n_layers );

expectations_gradients.resize( n_layers );

subsampling_gradients.resize( n_layers );

gibbs_down_state.resize( n_layers-1 );

reduced_layers.resize(n_layers-1);

for( int i=0 ; i<n_layers-1 ; i++ )

{

if( !(reduced_layers[i]) )

{

if( (independent_biases || subsampling_modules[i]) && i!=0 )

{

CopiesMap map;

reduced_layers[i] = layers[i]->deepCopy(map);

if( subsampling_modules[i] )

{

reduced_layers[i]->size =

subsampling_modules[i]->output_size;

reduced_layers[i]->build();

}

}

else

reduced_layers[i] = layers[i];

}

if( subsampling_modules[i] )

{

if( layers[i]->size != subsampling_modules[i]->input_size )

PLERROR("SubsamplingDBN::build_layers_and_connections() - \n"

"layers[%i]->size (%d) != subsampling_modules[%i]->input_size (%d)."

"\n", i, layers[i]->size, i,

subsampling_modules[i]->input_size);

}

else

{

if( layers[i]->size != reduced_layers[i]->size )

PLERROR("SubsamplingDBN::build_layers_and_connections() - \n"

"layers[%i]->size (%d) != reduced_layers[%i]->size (%d)."

"\n", i, layers[i]->size, i, reduced_layers[i]->size);

}

if( reduced_layers[i]->size != connections[i]->down_size )

PLERROR("SubsamplingDBN::build_layers_and_connections() - \n"

"reduced_layers[%i]->size (%d) != connections[%i]->down_size (%d)."

"\n", i, reduced_layers[i]->size, i, connections[i]->down_size);

if( connections[i]->up_size != layers[i+1]->size )

PLERROR("SubsamplingDBN::build_layers_and_connections() - \n"

"connections[%i]->up_size (%d) != layers[%i]->size (%d)."

"\n", i, connections[i]->up_size, i+1, layers[i+1]->size);

// Assign random_gen to layers[i] and connections[i], unless they

// already have one

if( !(layers[i]->random_gen) )

{

layers[i]->random_gen = random_gen;

layers[i]->forget();

}

if( !(reduced_layers[i]->random_gen) )

{

reduced_layers[i]->random_gen = random_gen;

reduced_layers[i]->forget();

}

if( !(connections[i]->random_gen) )

{

connections[i]->random_gen = random_gen;

connections[i]->forget();

}

activation_gradients[i].resize( layers[i]->size );

expectation_gradients[i].resize( layers[i]->size );

subsampling_gradients[i].resize( reduced_layers[i]->size );

}

if( !(layers[n_layers-1]->random_gen) )

{

layers[n_layers-1]->random_gen = random_gen;

layers[n_layers-1]->forget();

}

int last_layer_size = layers[n_layers-1]->size;

PLASSERT_MSG(last_layer_size >= 0,

"Size of last layer must be non-negative");

activation_gradients[n_layers-1].resize(last_layer_size);

expectation_gradients[n_layers-1].resize(last_layer_size);

}
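The size checks above describe a chain: layers[i] feeds an optional subsampling module, whose output size becomes the size of reduced_layers[i], which must match connections[i]->down_size, whose up_size must match layers[i+1]. The sketch below restates that chain over plain integers. `SubsamplingSpec`, `ConnectionSpec` and `checkSizes` are hypothetical stand-ins, not PLearn types, and the sketch assumes reduced_layers was not supplied by the user.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical plain-data stand-ins for the PLearn objects.
struct SubsamplingSpec { bool present; int input_size; int output_size; };
struct ConnectionSpec  { int down_size; int up_size; };

// Returns an empty string when all sizes chain correctly, otherwise a short
// description of the first mismatch.
std::string checkSizes(const std::vector<int>& layer_sizes,
                       const std::vector<SubsamplingSpec>& subsampling,
                       const std::vector<ConnectionSpec>& connections)
{
    const std::size_t n_layers = layer_sizes.size();
    if (n_layers == 0)
        return "at least one layer is required";
    if (connections.size() != n_layers - 1)
        return "connections.size() != n_layers-1";
    if (subsampling.size() != n_layers - 1)
        return "subsampling.size() != n_layers-1";

    for (std::size_t i = 0; i + 1 < n_layers; ++i) {
        const std::string at = " at index " + std::to_string(i);
        int reduced_size = layer_sizes[i];
        if (subsampling[i].present) {
            if (subsampling[i].input_size != layer_sizes[i])
                return "layer size != subsampling input size" + at;
            // As in the listing, the bottom layer (i == 0) is never resized.
            if (i != 0)
                reduced_size = subsampling[i].output_size;
        }
        if (connections[i].down_size != reduced_size)
            return "reduced layer size != connection down_size" + at;
        if (connections[i].up_size != layer_sizes[i + 1])
            return "connection up_size != next layer size" + at;
    }
    return "";
}
```

For example, layers of sizes 8, 6 and 3 with a 6-to-4 subsampling module on the middle layer need connections of shape 8-to-6 and 4-to-3; a second connection with down_size 6 would be rejected.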

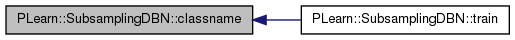

| string PLearn::SubsamplingDBN::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file SubsamplingDBN.cc.

Referenced by train().

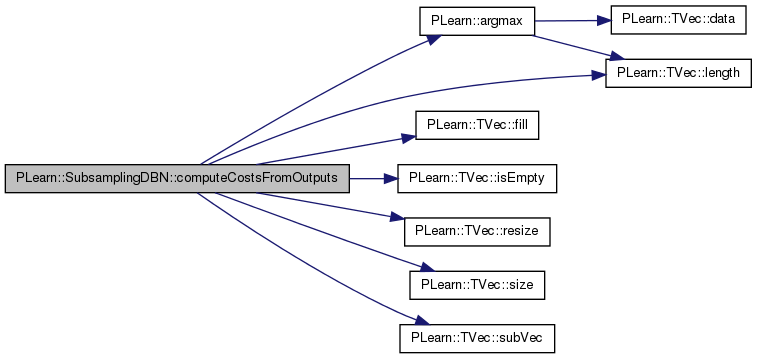

| void PLearn::SubsamplingDBN::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 2205 of file SubsamplingDBN.cc.

References PLearn::argmax(), class_cost_index, classification_cost, cost_names, PLearn::TVec< T >::fill(), final_cost, final_cost_index, final_cost_value, i, PLearn::TVec< T >::isEmpty(), layers, PLearn::TVec< T >::length(), MISSING_VALUE, n_classes, n_layers, nll_cost_index, partial_costs, partial_costs_indices, reconstruct_layerwise, reconstruction_cost_index, reconstruction_costs, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::TVec< T >::subVec(), and use_classification_cost.

{

// Compute the costs from *already* computed output.

costs.resize( cost_names.length() );

costs.fill( MISSING_VALUE );

// TO MAKE FOR CLEANER CODE INDEPENDENT OF ORDER OF CALLING THIS

// METHOD AND computeOutput, THIS SHOULD BE IN A REDEFINITION OF computeOutputAndCosts

if( use_classification_cost )

{

classification_cost->CostModule::fprop( output.subVec(0, n_classes),

target, costs[nll_cost_index] );

costs[class_cost_index] =

(argmax(output.subVec(0, n_classes)) == (int) round(target[0]))? 0

: 1;

}

if( final_cost )

{

int init = use_classification_cost ? n_classes : 0;

final_cost->fprop( output.subVec( init, output.size() - init ),

target, final_cost_value );

costs.subVec(final_cost_index, final_cost_value.length())

<< final_cost_value;

}

if( !partial_costs.isEmpty() )

{

Vec pcosts;

for( int i=0 ; i<n_layers-1 ; i++ )

// propagate into local cost associated to output of layer i+1

if( partial_costs[ i ] )

{

partial_costs[ i ]->fprop( layers[ i+1 ]->expectation,

target, pcosts);

costs.subVec(partial_costs_indices[i], pcosts.length())

<< pcosts;

}

}

if (reconstruct_layerwise)

costs.subVec(reconstruction_cost_index, reconstruction_costs.length())

<< reconstruction_costs;

}
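Two details of the listing above are easy to miss: the class_error cost only looks at the first n_classes entries of the output, and the final cost receives whatever follows them. A minimal sketch of both rules, using `std::vector<double>` in place of PLearn's Vec, could look as follows. `classError` and `finalCostInput` are hypothetical helpers, and both assume that n_classes does not exceed the output length.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <iterator>
#include <vector>

// Hypothetical helper: 0 when the arg-max over the first n_classes outputs
// equals the rounded target, 1 otherwise.
double classError(const std::vector<double>& output, int n_classes,
                  double target)
{
    auto first = output.begin();
    auto last = first + n_classes;
    int predicted =
        static_cast<int>(std::distance(first, std::max_element(first, last)));
    int expected = static_cast<int>(std::lround(target));
    return predicted == expected ? 0.0 : 1.0;
}

// Hypothetical helper: the part of the output handed to the final cost.
// When the classification cost is used, its n_classes outputs come first
// and are skipped.
std::vector<double> finalCostInput(const std::vector<double>& output,
                                   bool use_classification_cost,
                                   int n_classes)
{
    int init = use_classification_cost ? n_classes : 0;
    return std::vector<double>(output.begin() + init, output.end());
}
```

With an output of (0.1, 0.7, 0.2, 9.0) and n_classes equal to 3, the predicted class is 1 and the final cost sees only the trailing 9.0.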

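| void PLearn::SubsamplingDBN::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.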

Reimplemented from PLearn::PLearner.

Definition at line 2126 of file SubsamplingDBN.cc.

References PLearn::TVec< T >::append(), classification_module, connections, final_cost, final_cost_input, final_module, i, independent_biases, PLearn::TVec< T >::isEmpty(), layer_input, layers, n_layers, partial_costs, PLERROR, reconstruct_layerwise, reconstruction_costs, reduced_layers, PLearn::TVec< T >::resize(), subsampling_modules, and use_classification_cost.

{

// Compute the output from the input.

output.resize(0);

// fprop

reduced_layers[0]->expectation << input;

if(reconstruct_layerwise)

reconstruction_costs[0]=0;

for( int i=0 ; i<n_layers-2 ; i++ )

{

connections[i]->setAsDownInput( reduced_layers[i]->expectation );

layers[i+1]->getAllActivations( connections[i] );

layers[i+1]->computeExpectation();

if( subsampling_modules[i+1] )

{

subsampling_modules[i+1]->fprop(layers[i+1]->expectation,

reduced_layers[i+1]->expectation);

reduced_layers[i+1]->expectation_is_up_to_date = true;

}

else if( independent_biases )

{

reduced_layers[i+1]->expectation << layers[i+1]->expectation;

reduced_layers[i+1]->expectation_is_up_to_date = true;

}

if (reconstruct_layerwise)

{

PLERROR( "reconstruct_layerwise and subsampling don't work yet" );

layer_input.resize(layers[i]->size);

layer_input << layers[i]->expectation;

connections[i]->setAsUpInput(layers[i+1]->expectation);

layers[i]->getAllActivations(connections[i]);

real rc = reconstruction_costs[i+1] = layers[i]->fpropNLL( layer_input );

reconstruction_costs[0] += rc;

}

}

if( use_classification_cost )

classification_module->fprop( layers[ n_layers-2 ]->expectation,

output );

if( final_cost || (!partial_costs.isEmpty() && partial_costs[n_layers-2] ))

{

connections[ n_layers-2 ]->setAsDownInput(

reduced_layers[ n_layers-2 ]->expectation );

layers[ n_layers-1 ]->getAllActivations( connections[ n_layers-2 ] );

layers[ n_layers-1 ]->computeExpectation();

if( final_module )

{

final_module->fprop( layers[ n_layers-1 ]->expectation,

final_cost_input );

output.append( final_cost_input );

}

else

{

output.append( layers[ n_layers-1 ]->expectation );

}

if (reconstruct_layerwise)

{

PLERROR( "reconstruct_layerwise and subsampling don't work yet" );

layer_input.resize(layers[n_layers-2]->size);

layer_input << layers[n_layers-2]->expectation;

connections[n_layers-2]->setAsUpInput(layers[n_layers-1]->expectation);

layers[n_layers-2]->getAllActivations(connections[n_layers-2]);

real rc = reconstruction_costs[n_layers-1] = layers[n_layers-2]->fpropNLL( layer_input );

reconstruction_costs[0] += rc;

}

}

}

| void PLearn::SubsamplingDBN::contrastiveDivergenceStep | ( | const PP< RBMLayer > & | down_layer, |

| const PP< RBMConnection > & | connection, | ||

| const PP< RBMLayer > & | up_layer, | ||

| int | layer_index, | ||

| bool | nofprop = false | ||

| ) |

Perform a step of contrastive divergence, assuming that down_layer->expectation(s) is set.
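As a rough illustration of what one contrastive divergence step computes, the sketch below runs a single CD-1 update on a tiny RBM with sigmoid units. It is a simplified stand-in, not the PLearn code: `TinyRBM`, `upExpectation`, `downExpectation` and `cdStep` are hypothetical names, the update rule and learning-rate handling are assumptions, and the down pass uses expectations instead of samples so that the result is deterministic.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical minimal RBM: W[j][i] links up unit j to down unit i.
struct TinyRBM {
    std::vector<Vec> W;
    Vec b_down;
    Vec b_up;
};

double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Expectation of the up layer given down-layer values.
Vec upExpectation(const TinyRBM& m, const Vec& down)
{
    Vec up(m.b_up.size());
    for (std::size_t j = 0; j < up.size(); ++j) {
        double a = m.b_up[j];
        for (std::size_t i = 0; i < down.size(); ++i)
            a += m.W[j][i] * down[i];
        up[j] = sigmoid(a);
    }
    return up;
}

// Expectation of the down layer given up-layer values.
Vec downExpectation(const TinyRBM& m, const Vec& up)
{
    Vec down(m.b_down.size());
    for (std::size_t i = 0; i < down.size(); ++i) {
        double a = m.b_down[i];
        for (std::size_t j = 0; j < up.size(); ++j)
            a += m.W[j][i] * up[j];
        down[i] = sigmoid(a);
    }
    return down;
}

// One CD-1 step: positive statistics from the data, negative statistics
// from a single down/up pass (mean-field here, sampled in a real RBM).
void cdStep(TinyRBM& m, const Vec& pos_down, double lr)
{
    const Vec pos_up = upExpectation(m, pos_down);
    const Vec neg_down = downExpectation(m, pos_up);
    const Vec neg_up = upExpectation(m, neg_down);

    for (std::size_t j = 0; j < pos_up.size(); ++j) {
        for (std::size_t i = 0; i < pos_down.size(); ++i)
            m.W[j][i] += lr * (pos_up[j] * pos_down[i] -
                               neg_up[j] * neg_down[i]);
        m.b_up[j] += lr * (pos_up[j] - neg_up[j]);
    }
    for (std::size_t i = 0; i < pos_down.size(); ++i)
        m.b_down[i] += lr * (pos_down[i] - neg_down[i]);
}
```

Starting from all-zero parameters with two down units and one up unit, an input of (1, 0) and a learning rate of 0.1 move the weights to 0.025 and -0.025, which shows the positive and negative statistics pulling in opposite directions.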

Definition at line 1968 of file SubsamplingDBN.cc.

References background_gibbs_update_ratio, cd_neg_down_vals, cd_neg_up_vals, gibbs_down_state, initialize_gibbs_chain, minibatch_hack, minibatch_size, pos_down_val, pos_down_vals, pos_up_val, pos_up_vals, PLearn::TMat< T >::resize(), and PLearn::TVec< T >::resize().

Referenced by greedyStep(), jointGreedyStep(), and onlineStep().

{

bool mbatch = minibatch_size > 1 || minibatch_hack;

// positive phase

if (!nofprop)

{

if (mbatch) {

connection->setAsDownInputs( down_layer->getExpectations() );

up_layer->getAllActivations( connection, 0, true );

up_layer->computeExpectations();

} else {

connection->setAsDownInput( down_layer->expectation );

up_layer->getAllActivations( connection );

up_layer->computeExpectation();

}

}

if (mbatch) {

// accumulate positive stats using the expectation

// we deep-copy because the value will change during negative phase

pos_down_vals.resize(minibatch_size, down_layer->size);

pos_up_vals.resize(minibatch_size, up_layer->size);

pos_down_vals << down_layer->getExpectations();

pos_up_vals << up_layer->getExpectations();

// down propagation, starting from a sample of up_layer

if (background_gibbs_update_ratio<1)

// then do some contrastive divergence, o/w only background Gibbs

{

up_layer->generateSamples();

connection->setAsUpInputs( up_layer->samples );

down_layer->getAllActivations( connection, 0, true );

down_layer->generateSamples();

// negative phase

connection->setAsDownInputs( down_layer->samples );

up_layer->getAllActivations( connection, 0, mbatch );

up_layer->computeExpectations();

// accumulate negative stats

// no need to deep-copy because the values won't change before update

Mat neg_down_vals = down_layer->samples;

Mat neg_up_vals = up_layer->getExpectations();

if (background_gibbs_update_ratio==0)

// update here only if there is ONLY contrastive divergence

{

down_layer->update( pos_down_vals, neg_down_vals );

connection->update( pos_down_vals, pos_up_vals,

neg_down_vals, neg_up_vals );

up_layer->update( pos_up_vals, neg_up_vals );

}

else

{

connection->accumulatePosStats(pos_down_vals,pos_up_vals);

cd_neg_down_vals.resize(minibatch_size, down_layer->size);

cd_neg_up_vals.resize(minibatch_size, up_layer->size);

cd_neg_down_vals << neg_down_vals;

cd_neg_up_vals << neg_up_vals;

}

}

//

if (background_gibbs_update_ratio>0)

{

Mat down_state = gibbs_down_state[layer_index];

if (initialize_gibbs_chain) // initializing or re-initializing the chain

{

if (background_gibbs_update_ratio==1) // if <1 just use the CD state

{

up_layer->generateSamples();

connection->setAsUpInputs(up_layer->samples);

down_layer->getAllActivations(connection, 0, true);

down_layer->generateSamples();

down_state << down_layer->samples;

}

initialize_gibbs_chain=false;

}

// sample up state given down state

connection->setAsDownInputs(down_state);

up_layer->getAllActivations(connection, 0, true);

up_layer->generateSamples();

// sample down state given up state, to prepare for next time

connection->setAsUpInputs(up_layer->samples);

down_layer->getAllActivations(connection, 0, true);

down_layer->generateSamples();

// update using the down_state and up_layer->expectations for moving average in negative phase

// (and optionally

if (background_gibbs_update_ratio<1)

{

down_layer->updateCDandGibbs(pos_down_vals,cd_neg_down_vals,

down_state,

background_gibbs_update_ratio);

connection->updateCDandGibbs(pos_down_vals,pos_up_vals,

cd_neg_down_vals, cd_neg_up_vals,

down_state,

up_layer->getExpectations(),

background_gibbs_update_ratio);

up_layer->updateCDandGibbs(pos_up_vals,cd_neg_up_vals,

up_layer->getExpectations(),

background_gibbs_update_ratio);

}

else

{

down_layer->updateGibbs(pos_down_vals,down_state);

connection->updateGibbs(pos_down_vals,pos_up_vals,down_state,

up_layer->getExpectations());

up_layer->updateGibbs(pos_up_vals,up_layer->getExpectations());

}

// Save Gibbs chain's state.

down_state << down_layer->samples;

}

} else {

up_layer->generateSample();

// accumulate positive stats using the expectation

// we deep-copy because the value will change during negative phase

pos_down_val.resize( down_layer->size );

pos_up_val.resize( up_layer->size );

pos_down_val << down_layer->expectation;

pos_up_val << up_layer->expectation;

// down propagation, starting from a sample of up_layer

connection->setAsUpInput( up_layer->sample );

down_layer->getAllActivations( connection );

down_layer->generateSample();

// negative phase

connection->setAsDownInput( down_layer->sample );

up_layer->getAllActivations( connection, 0, mbatch );

up_layer->computeExpectation();

// accumulate negative stats

// no need to deep-copy because the values won't change before update

Vec neg_down_val = down_layer->sample;

Vec neg_up_val = up_layer->expectation;

// update

down_layer->update( pos_down_val, neg_down_val );

connection->update( pos_down_val, pos_up_val,

neg_down_val, neg_up_val );

up_layer->update( pos_up_val, neg_up_val );

}

}
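
The listing above distinguishes three cases for background_gibbs_update_ratio: 0 (contrastive divergence only), strictly between 0 and 1 (both), and 1 (background Gibbs chain only). The following standalone sketch illustrates that idea for a single example and is not PLearn's code. The name `weightDelta`, its parameters, the `std::vector` types and the plain convex combination are assumptions made for illustration; the real updateCDandGibbs() may weight or average the statistics differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // indexed as Mat[up_unit][down_unit]

// Hypothetical helper (not part of PLearn): weight change for one example.
// Positive statistics come from the data; negative statistics are blended as
// (1 - ratio) * CD negative phase + ratio * background Gibbs chain.
Mat weightDelta(const Vec& pos_down, const Vec& pos_up,
                const Vec& cd_neg_down, const Vec& cd_neg_up,
                const Vec& gibbs_down, const Vec& gibbs_up,
                double ratio, double learning_rate)
{
    assert(ratio >= 0.0 && ratio <= 1.0);
    assert(pos_down.size() == cd_neg_down.size());
    assert(pos_down.size() == gibbs_down.size());
    assert(pos_up.size() == cd_neg_up.size());
    assert(pos_up.size() == gibbs_up.size());

    Mat delta(pos_up.size(), Vec(pos_down.size(), 0.0));
    for (std::size_t j = 0; j < pos_up.size(); ++j) {
        for (std::size_t i = 0; i < pos_down.size(); ++i) {
            const double positive = pos_up[j] * pos_down[i];
            const double negative =
                (1.0 - ratio) * cd_neg_up[j] * cd_neg_down[i]
                + ratio * gibbs_up[j] * gibbs_down[i];
            delta[j][i] = learning_rate * (positive - negative);
        }
    }
    return delta;
}
```

With `ratio` equal to 0 the Gibbs terms vanish and the expression reduces to the usual positive-minus-negative CD update; with `ratio` equal to 1 only the chain statistics contribute.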

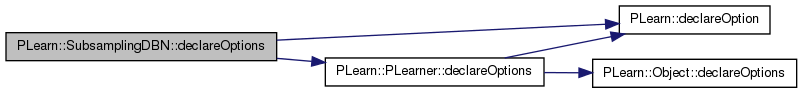

| void PLearn::SubsamplingDBN::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 93 of file SubsamplingDBN.cc.

References background_gibbs_update_ratio, batch_size, PLearn::OptionBase::buildoption, cd_learning_rate, classification_cost, classification_module, connections, cumulative_testing_time, cumulative_training_time, PLearn::declareOption(), PLearn::PLearner::declareOptions(), final_cost, final_module, gibbs_chain_reinit_freq, gibbs_down_state, grad_decrease_ct, grad_learning_rate, independent_biases, joint_layer, layers, PLearn::OptionBase::learntoption, minibatch_size, n_classes, n_layers, PLearn::OptionBase::nosave, online, partial_costs, reconstruct_layerwise, reduced_layers, subsampling_modules, top_layer_joint_cd, training_schedule, and use_classification_cost.

{

declareOption(ol, "cd_learning_rate", &SubsamplingDBN::cd_learning_rate,

OptionBase::buildoption,

"The learning rate used during contrastive divergence"

" learning");

declareOption(ol, "grad_learning_rate", &SubsamplingDBN::grad_learning_rate,

OptionBase::buildoption,

"The learning rate used during gradient descent");

declareOption(ol, "grad_decrease_ct", &SubsamplingDBN::grad_decrease_ct,

OptionBase::buildoption,

"The decrease constant of the learning rate used during "

"gradient descent");

declareOption(ol, "batch_size", &SubsamplingDBN::batch_size,

OptionBase::buildoption,

"Training batch size (1=stochastic learning, 0=full batch learning).");

/* NOT IMPLEMENTED YET

declareOption(ol, "grad_weight_decay", &SubsamplingDBN::grad_weight_decay,

OptionBase::buildoption,

"The weight decay used during the gradient descent");

*/

declareOption(ol, "n_classes", &SubsamplingDBN::n_classes,

OptionBase::buildoption,

"Number of classes in the training set:\n"

" - 0 means we are doing regression,\n"

" - 1 means we have two classes, but only one output,\n"

" - 2 means we also have two classes, but two outputs"

" summing to 1,\n"

" - >2 is the usual multiclass case.\n"

);

declareOption(ol, "training_schedule", &SubsamplingDBN::training_schedule,

OptionBase::buildoption,

"Number of examples to use during each phase of learning:\n"

"first the greedy phases, and then the fine-tuning phase.\n"

"However, the learning will stop as soon as we reach nstages.\n"

"For example for 2 hidden layers, with 1000 examples in each\n"

"greedy phase, and 500 in the fine-tuning phase, this option\n"

"should be [1000 1000 500], and nstages should be at least 2500.\n"

"When online = true, this vector is ignored and should be empty.\n");

declareOption(ol, "use_classification_cost",

&SubsamplingDBN::use_classification_cost,

OptionBase::buildoption,

"Put the class target as an extra input of the top-level RBM\n"

"and compute and maximize conditional class probability in that\n"

"top layer (probability of the correct class given the other input\n"

"of the top-level RBM, which is the output of the rest of the network).\n");

declareOption(ol, "reconstruct_layerwise",

&SubsamplingDBN::reconstruct_layerwise,

OptionBase::buildoption,

"Compute reconstruction error of each layer as an auto-encoder.\n"

"This is done using cross-entropy between actual and reconstructed.\n"

"This option automatically adds the following cost names:\n"

" layerwise_reconstruction_error (sum over all layers)\n"

" layer0.reconstruction_error (only layers[0])\n"

" layer1.reconstruction_error (only layers[1])\n"

" etc.\n");

declareOption(ol, "layers", &SubsamplingDBN::layers,

OptionBase::buildoption,

"The layers of units in the network (including the input layer).");

declareOption(ol, "connections", &SubsamplingDBN::connections,

OptionBase::buildoption,

"The weights of the connections between the layers");

declareOption(ol, "classification_module",

&SubsamplingDBN::classification_module,

OptionBase::learntoption,

"The module computing the class probabilities (if"

" use_classification_cost)\n"

);

declareOption(ol, "classification_cost",

&SubsamplingDBN::classification_cost,

OptionBase::nosave,

"The module computing the classification cost function (NLL)"

" on top\n"

"of classification_module.\n"

);

declareOption(ol, "joint_layer", &SubsamplingDBN::joint_layer,

OptionBase::nosave,

"Concatenation of layers[n_layers-2] and the target layer\n"

"(that is inside classification_module), if"

" use_classification_cost.\n"

);

declareOption(ol, "final_module", &SubsamplingDBN::final_module,

OptionBase::buildoption,

"Optional module that takes as input the output of the last"

" layer\n"

"(layers[n_layers-1]), and its output is fed to final_cost,"

" and\n"

"concatenated with the one of classification_cost (if"

" present)\n"

"as output of the learner.\n"

"If it is not provided, then the last layer will directly be"

" put as\n"

"input of final_cost.\n"

);

declareOption(ol, "final_cost", &SubsamplingDBN::final_cost,

OptionBase::buildoption,

"The cost function to be applied on top of the DBN (or of\n"

"final_module if provided). Its gradients will be"

" backpropagated\n"

"to final_module, then combined with the one of"

" classification_cost and\n"

"backpropagated to the layers.\n"

);

declareOption(ol, "partial_costs", &SubsamplingDBN::partial_costs,

OptionBase::buildoption,

"The different cost functions to be applied on top of each"

" layer\n"

"(except the first one) of the RBM. These costs are not\n"

"back-propagated to previous layers.\n");

declareOption(ol, "independent_biases",

&SubsamplingDBN::independent_biases,

OptionBase::buildoption,

"In an RBMLayer, do we want the bias during up and down\n"

"propagations to be potentially different?\n");

declareOption(ol, "subsampling_modules",

&SubsamplingDBN::subsampling_modules,

OptionBase::buildoption,

"Different subsampling modules, to be applied on top of\n"

"RBMs when they're already learned. subsampling_modules[0]\n"

"is null.\n");

declareOption(ol, "reduced_layers", &SubsamplingDBN::reduced_layers,

OptionBase::learntoption,

"Layers of reduced size, to be put on top of subsampling\n"

"modules. If the subsampling module is null, it will be\n"

"either the same as the one in 'layers' (default), or a\n"

"copy of it (with independent biases) if\n"

"'independent_biases' is true.\n");

declareOption(ol, "online", &SubsamplingDBN::online,

OptionBase::buildoption,

"If true then all unsupervised training stages (as well as\n"

"the fine-tuning stage) are done simultaneously.\n");

declareOption(ol, "background_gibbs_update_ratio", &SubsamplingDBN::background_gibbs_update_ratio,

OptionBase::buildoption,

"Coefficient between 0 and 1. If non-zero, run a background Gibbs chain and use\n"

"the visible-hidden statistics to contribute in the negative phase update\n"

"(in proportion background_gibbs_update_ratio wrt the contrastive divergence\n"

"negative phase statistics). If = 1, then do not perform any contrastive\n"

"divergence negative phase (use only the Gibbs chain statistics).\n");

declareOption(ol, "gibbs_chain_reinit_freq",

&SubsamplingDBN::gibbs_chain_reinit_freq,

OptionBase::buildoption,

"After how many training examples to re-initialize the Gibbs chains.\n"

"If == INT_MAX, the default value of this option, then NEVER\n"

"re-initialize except at the beginning, when stage==0.\n");

declareOption(ol, "top_layer_joint_cd", &SubsamplingDBN::top_layer_joint_cd,

OptionBase::buildoption,

"Whether we do a step of joint contrastive divergence on"

" top-layer.\n"

"Only used if online for the moment.\n");

declareOption(ol, "n_layers", &SubsamplingDBN::n_layers,

OptionBase::learntoption,

"Number of layers");

declareOption(ol, "minibatch_size", &SubsamplingDBN::minibatch_size,

OptionBase::learntoption,

"Size of a mini-batch.");

declareOption(ol, "gibbs_down_state", &SubsamplingDBN::gibbs_down_state,

OptionBase::learntoption,

"State of visible units of RBMs at each layer in background Gibbs chain.");

declareOption(ol, "cumulative_training_time", &SubsamplingDBN::cumulative_training_time,

OptionBase::learntoption | OptionBase::nosave,

"Cumulative training time since age=0, in seconds.\n");

declareOption(ol, "cumulative_testing_time", &SubsamplingDBN::cumulative_testing_time,

OptionBase::learntoption | OptionBase::nosave,

"Cumulative testing time since age=0, in seconds.\n");

/*

declareOption(ol, "n_final_costs", &SubsamplingDBN::n_final_costs,

OptionBase::learntoption,

"Number of final costs");

*/

/*

declareOption(ol, "", &SubsamplingDBN::,

OptionBase::learntoption,

"");

*/

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
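
The training_schedule help text above gives the example [1000 1000 500], for which nstages should be at least 2500. A minimal sketch of that bookkeeping follows, assuming a cumulative sum is used to decide which phase a given stage falls in. `cumulativeSchedule` and `phaseForStage` are hypothetical names chosen for illustration, not PLearn functions.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: running totals of the schedule, with a leading 0,
// so that phase p covers stages [cumulative[p], cumulative[p + 1]).
std::vector<int> cumulativeSchedule(const std::vector<int>& schedule)
{
    std::vector<int> cumulative(schedule.size() + 1, 0);
    std::partial_sum(schedule.begin(), schedule.end(), cumulative.begin() + 1);
    return cumulative;
}

// Hypothetical helper: index of the phase that contains `stage`,
// or -1 once every phase of the schedule has been consumed.
int phaseForStage(const std::vector<int>& schedule, int stage)
{
    assert(stage >= 0);
    const std::vector<int> cumulative = cumulativeSchedule(schedule);
    for (std::size_t p = 0; p < schedule.size(); ++p)
        if (stage < cumulative[p + 1])
            return static_cast<int>(p);
    return -1;
}
```

For the schedule in the help text, phases 0 and 1 would be the two greedy phases and phase 2 the fine-tuning phase; the last entry of the cumulative vector is the minimum nstages needed to run all of them.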

| static const PPath& PLearn::SubsamplingDBN::declaringFile | ( | ) | [inline, static] |

| SubsamplingDBN * PLearn::SubsamplingDBN::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file SubsamplingDBN.cc.

| void PLearn::SubsamplingDBN::fineTuningStep | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | train_costs | ||

| ) |

Definition at line 1697 of file SubsamplingDBN.cc.

References activation_gradients, PLearn::argmax(), class_cost_index, PLearn::class_error(), class_gradient, class_output, classification_cost, classification_module, PLearn::TVec< T >::clear(), connections, expectation_gradients, final_cost, final_cost_gradient, final_cost_index, final_cost_input, final_cost_value, final_module, i, independent_biases, layers, PLearn::TVec< T >::length(), n_layers, nll_cost_index, PLERROR, reduced_layers, PLearn::TVec< T >::resize(), subsampling_gradients, subsampling_modules, PLearn::TVec< T >::subVec(), and use_classification_cost.

Referenced by train().

{

final_cost_value.resize(0);

// fprop

reduced_layers[0]->expectation << input;

for( int i=0 ; i<n_layers-2 ; i++ )

{

connections[i]->setAsDownInput( reduced_layers[i]->expectation );

layers[i+1]->getAllActivations( connections[i] );

layers[i+1]->computeExpectation();

if( subsampling_modules[i+1] )

{

subsampling_modules[i+1]->fprop(layers[i+1]->expectation,

reduced_layers[i+1]->expectation);

reduced_layers[i+1]->expectation_is_up_to_date = true;

}

else if( independent_biases )

{

reduced_layers[i+1]->expectation << layers[i+1]->expectation;

reduced_layers[i+1]->expectation_is_up_to_date = true;

}

}

if( final_cost )

{

connections[ n_layers-2 ]->setAsDownInput(

reduced_layers[ n_layers-2 ]->expectation );

layers[ n_layers-1 ]->getAllActivations( connections[ n_layers-2 ] );

layers[ n_layers-1 ]->computeExpectation();

if( final_module )

{

final_module->fprop( layers[ n_layers-1 ]->expectation,

final_cost_input );

final_cost->fprop( final_cost_input, target, final_cost_value );

final_cost->bpropUpdate( final_cost_input, target,

final_cost_value[0],

final_cost_gradient );

final_module->bpropUpdate( layers[ n_layers-1 ]->expectation,

final_cost_input,

expectation_gradients[ n_layers-1 ],

final_cost_gradient );

}

else

{

final_cost->fprop( layers[ n_layers-1 ]->expectation, target,

final_cost_value );

final_cost->bpropUpdate( layers[ n_layers-1 ]->expectation,

target, final_cost_value[0],

expectation_gradients[ n_layers-1 ] );

}

train_costs.subVec(final_cost_index, final_cost_value.length())

<< final_cost_value;

layers[ n_layers-1 ]->bpropUpdate( layers[ n_layers-1 ]->activation,

layers[ n_layers-1 ]->expectation,

activation_gradients[ n_layers-1 ],

expectation_gradients[ n_layers-1 ]

);

connections[ n_layers-2 ]->bpropUpdate(

reduced_layers[ n_layers-2 ]->expectation,

layers[ n_layers-1 ]->activation,

subsampling_gradients[ n_layers-2 ],

activation_gradients[ n_layers-1 ] );

}

else {

subsampling_gradients[ n_layers-2 ].clear();

}

if( use_classification_cost )

{

PLERROR("classification_cost doesn't work with subsampling yet");

classification_module->fprop( layers[ n_layers-2 ]->expectation,

class_output );

real nll_cost;

// This doesn't work. gcc bug?

// classification_cost->fprop( class_output, target, cost );

classification_cost->CostModule::fprop( class_output, target,

nll_cost );

real class_error =

( argmax(class_output) == (int) round(target[0]) ) ? 0

: 1;

train_costs[nll_cost_index] = nll_cost;

train_costs[class_cost_index] = class_error;

classification_cost->bpropUpdate( class_output, target, nll_cost,

class_gradient );

classification_module->bpropUpdate( layers[ n_layers-2 ]->expectation,

class_output,

expectation_gradients[n_layers-2],

class_gradient,

true );

}

for( int i=n_layers-2 ; i>0 ; i-- )

{

if( subsampling_modules[i] )

{

subsampling_modules[i]->bpropUpdate( layers[i]->expectation,

reduced_layers[i]->expectation,

expectation_gradients[i],

subsampling_gradients[i] );

layers[i]->bpropUpdate( layers[i]->activation,

layers[i]->expectation,

activation_gradients[i],

expectation_gradients[i] );

}

else

{

layers[i]->bpropUpdate( layers[i]->activation,

reduced_layers[i]->expectation,

activation_gradients[i],

subsampling_gradients[i] );

}

connections[i-1]->bpropUpdate( reduced_layers[i-1]->expectation,

layers[i]->activation,

expectation_gradients[i-1],

activation_gradients[i] );

}

}
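
The class_error expression in the listing above compares the index of the largest class output with the rounded first element of the target. The sketch below restates that rule in standalone form, using `std::vector` in place of PLearn's `Vec` and a hypothetical function name `classificationError`; it is an illustration, not the library's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <iterator>
#include <vector>

// Hypothetical helper: 0 if the highest-scoring class matches the target
// label (rounded to the nearest integer), 1 otherwise.
int classificationError(const std::vector<double>& class_output, double target)
{
    assert(!class_output.empty());
    const auto best = std::max_element(class_output.begin(), class_output.end());
    const int predicted =
        static_cast<int>(std::distance(class_output.begin(), best));
    const int expected = static_cast<int>(std::lround(target));
    return predicted == expected ? 0 : 1;
}
```

Rounding the target guards against labels stored as floating-point values that are slightly off an integer.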

| void PLearn::SubsamplingDBN::fineTuningStep | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| Mat & | train_costs | ||

| ) |

Fine tuning step with mini-batches.

Definition at line 1828 of file SubsamplingDBN.cc.

References activations_gradients, PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), PLearn::TMat< T >::column(), connections, expectations_gradients, final_cost, final_cost_gradients, final_cost_index, final_cost_inputs, final_cost_values, final_module, i, layer_inputs, layers, minibatch_size, n_layers, optimized_costs, PLERROR, reconstruct_layerwise, reconstruction_cost_index, PLearn::TMat< T >::resize(), PLearn::TMat< T >::subMatColumns(), use_classification_cost, and PLearn::TMat< T >::width().

{

PLERROR("minibatch doesn't work with subsampling yet");

final_cost_values.resize(0, 0);

// fprop

layers[0]->getExpectations() << inputs;

for( int i=0 ; i<n_layers-2 ; i++ )

{

connections[i]->setAsDownInputs( layers[i]->getExpectations() );

layers[i+1]->getAllActivations( connections[i], 0, true );

layers[i+1]->computeExpectations();

}

if( final_cost )

{

connections[ n_layers-2 ]->setAsDownInputs(

layers[ n_layers-2 ]->getExpectations() );

// TODO Also ensure getAllActivations fills everything.

layers[ n_layers-1 ]->getAllActivations(connections[n_layers-2],

0, true);

layers[ n_layers-1 ]->computeExpectations();

if( final_module )

{

final_cost_inputs.resize(minibatch_size,

final_module->output_size);

final_module->fprop( layers[ n_layers-1 ]->getExpectations(),

final_cost_inputs );

final_cost->fprop( final_cost_inputs, targets, final_cost_values );

// TODO This extra memory copy is annoying: how can we avoid it?

optimized_costs << final_cost_values.column(0);

final_cost->bpropUpdate( final_cost_inputs, targets,

optimized_costs,

final_cost_gradients );

final_module->bpropUpdate( layers[ n_layers-1 ]->getExpectations(),

final_cost_inputs,

expectations_gradients[ n_layers-1 ],

final_cost_gradients );

}

else

{

final_cost->fprop( layers[ n_layers-1 ]->getExpectations(), targets,

final_cost_values );

optimized_costs << final_cost_values.column(0);

final_cost->bpropUpdate( layers[ n_layers-1 ]->getExpectations(),

targets, optimized_costs,

expectations_gradients[ n_layers-1 ] );

}

train_costs.subMatColumns(final_cost_index, final_cost_values.width())

<< final_cost_values;

layers[ n_layers-1 ]->bpropUpdate( layers[ n_layers-1 ]->activations,

layers[ n_layers-1 ]->getExpectations(),

activations_gradients[ n_layers-1 ],

expectations_gradients[ n_layers-1 ]

);

connections[ n_layers-2 ]->bpropUpdate(

layers[ n_layers-2 ]->getExpectations(),

layers[ n_layers-1 ]->activations,

expectations_gradients[ n_layers-2 ],

activations_gradients[ n_layers-1 ] );

}

else {

expectations_gradients[ n_layers-2 ].clear();

}

if( use_classification_cost )

{

PLERROR("SubsamplingDBN::fineTuningStep - Not implemented for "

"mini-batches");

/*

classification_module->fprop( layers[ n_layers-2 ]->expectation,

class_output );

real nll_cost;

// This doesn't work. gcc bug?

// classification_cost->fprop( class_output, target, cost );

classification_cost->CostModule::fprop( class_output, target,

nll_cost );

real class_error =

( argmax(class_output) == (int) round(target[0]) ) ? 0

: 1;

train_costs[nll_cost_index] = nll_cost;

train_costs[class_cost_index] = class_error;

classification_cost->bpropUpdate( class_output, target, nll_cost,

class_gradient );

classification_module->bpropUpdate( layers[ n_layers-2 ]->expectation,

class_output,

expectation_gradients[n_layers-2],

class_gradient,

true );

*/

}

for( int i=n_layers-2 ; i>0 ; i-- )

{

layers[i]->bpropUpdate( layers[i]->activations,

layers[i]->getExpectations(),

activations_gradients[i],

expectations_gradients[i] );

connections[i-1]->bpropUpdate( layers[i-1]->getExpectations(),

layers[i]->activations,

expectations_gradients[i-1],

activations_gradients[i] );

}

// do it AFTER the bprop to avoid interfering with activations used in bprop

// (and do not worry that the weights have changed a bit). This is inconsistent

// with the current implementation in the greedy stage.

if (reconstruct_layerwise)

{

Mat rc = train_costs.column(reconstruction_cost_index);

rc.clear();

for( int index=0 ; index<n_layers-1 ; index++ )

{

layer_inputs.resize(minibatch_size,layers[index]->size);

layer_inputs << layers[index]->getExpectations();

connections[index]->setAsUpInputs(layers[index+1]->getExpectations());

layers[index]->getAllActivations(connections[index], 0, true);

layers[index]->fpropNLL(layer_inputs, train_costs.column(reconstruction_cost_index+index+1));

rc += train_costs.column(reconstruction_cost_index+index+1);

}

}

}
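
The reconstruct_layerwise block above stores one reconstruction cost per layer and accumulates their sum in the first slot. According to the option's help text, the cost is a cross-entropy between the actual and the reconstructed values. The sketch below shows one way to compute such a cost, assuming binomial (sigmoid) units; `softplus`, `reconstructionNLL` and `layerwiseCosts` are hypothetical names, and the formula actually used by fpropNLL() depends on the layer type.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Numerically stable log(1 + exp(x)).
double softplus(double x)
{
    return x > 0.0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Cross-entropy between targets in [0, 1] and sigmoid(activation), summed
// over units. Uses -log(sigmoid(a)) = softplus(-a) and
// -log(1 - sigmoid(a)) = softplus(a).
double reconstructionNLL(const Vec& activation, const Vec& target)
{
    assert(activation.size() == target.size());
    double nll = 0.0;
    for (std::size_t k = 0; k < activation.size(); ++k)
        nll += target[k] * softplus(-activation[k])
             + (1.0 - target[k]) * softplus(activation[k]);
    return nll;
}

// Hypothetical helper mirroring the accumulation in the listing:
// slot 0 holds the sum over layers, slot i + 1 the cost of layer i.
Vec layerwiseCosts(const std::vector<Vec>& activations,
                   const std::vector<Vec>& targets)
{
    assert(activations.size() == targets.size());
    Vec costs(activations.size() + 1, 0.0);
    for (std::size_t i = 0; i < activations.size(); ++i) {
        costs[i + 1] = reconstructionNLL(activations[i], targets[i]);
        costs[0] += costs[i + 1];
    }
    return costs;
}
```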

| void PLearn::SubsamplingDBN::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 759 of file SubsamplingDBN.cc.

References classification_cost, classification_module, connections, cumulative_testing_time, cumulative_training_time, final_cost, final_module, PLearn::PLearner::forget(), i, PLearn::TVec< T >::isEmpty(), layers, n_layers, partial_costs, reduced_layers, and use_classification_cost.

{

inherited::forget();

for( int i=0 ; i<n_layers ; i++ )

layers[i]->forget();

for( int i=0 ; i<n_layers-1 ; i++ )

{

reduced_layers[i]->forget();

connections[i]->forget();

}

if( use_classification_cost )

{

classification_cost->forget();

classification_module->forget();

}

if( final_module )

final_module->forget();

if( final_cost )

final_cost->forget();

if( !partial_costs.isEmpty() )

for( int i=0 ; i<n_layers-1 ; i++ )

if( partial_costs[i] )

partial_costs[i]->forget();

cumulative_training_time = 0;

cumulative_testing_time = 0;

}

| OptionList & PLearn::SubsamplingDBN::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file SubsamplingDBN.cc.

| OptionMap & PLearn::SubsamplingDBN::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file SubsamplingDBN.cc.

| RemoteMethodMap & PLearn::SubsamplingDBN::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file SubsamplingDBN.cc.

| TVec< string > PLearn::SubsamplingDBN::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 2301 of file SubsamplingDBN.cc.

References cost_names.

{

// Return the names of the costs computed by computeCostsFromOutputs

// (these may or may not be exactly the same as what's returned by

// getTrainCostNames).

return cost_names;

}

| TVec< string > PLearn::SubsamplingDBN::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 2310 of file SubsamplingDBN.cc.

References cost_names.

Referenced by train().

{

return cost_names;

}

| void PLearn::SubsamplingDBN::greedyStep | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| int | index, | ||

| Mat & | train_costs_m | ||

| ) |

Greedy step with mini-batches.

Definition at line 1566 of file SubsamplingDBN.cc.

References activations_gradients, cd_learning_rate, PLearn::TMat< T >::column(), connections, contrastiveDivergenceStep(), expectations_gradients, grad_learning_rate, i, PLearn::TVec< T >::isEmpty(), layer_inputs, layers, minibatch_size, n_layers, partial_costs, PLASSERT, PLERROR, reconstruct_layerwise, reconstruction_cost_index, and PLearn::TMat< T >::resize().

{

PLERROR("minibatch doesn't work with subsampling yet");

PLASSERT( index < n_layers );

layers[0]->setExpectations(inputs);

for( int i=0 ; i<=index ; i++ )

{

connections[i]->setAsDownInputs( layers[i]->getExpectations() );

layers[i+1]->getAllActivations( connections[i], 0, true );

layers[i+1]->computeExpectations();

}

// TODO: add another learning rate?

if( !partial_costs.isEmpty() && partial_costs[ index ] )

{

// put appropriate learning rate

connections[ index ]->setLearningRate( grad_learning_rate );

layers[ index+1 ]->setLearningRate( grad_learning_rate );

// Backward pass

Vec costs;

partial_costs[ index ]->fprop( layers[ index+1 ]->getExpectations(),

targets, costs );

partial_costs[ index ]->bpropUpdate(layers[index+1]->getExpectations(),

targets, costs,

expectations_gradients[ index+1 ]

);

layers[ index+1 ]->bpropUpdate( layers[ index+1 ]->activations,

layers[ index+1 ]->getExpectations(),

activations_gradients[ index+1 ],

expectations_gradients[ index+1 ] );

connections[ index ]->bpropUpdate( layers[ index ]->getExpectations(),

layers[ index+1 ]->activations,

expectations_gradients[ index ],

activations_gradients[ index+1 ] );

// put back old learning rate

connections[ index ]->setLearningRate( cd_learning_rate );

layers[ index+1 ]->setLearningRate( cd_learning_rate );

}

if (reconstruct_layerwise)

{

layer_inputs.resize(minibatch_size,layers[index]->size);

layer_inputs << layers[index]->getExpectations(); // we will perturb these, so save them

connections[index]->setAsUpInputs(layers[index+1]->getExpectations());

layers[index]->getAllActivations(connections[index], 0, true);

layers[index]->fpropNLL(layer_inputs, train_costs_m.column(reconstruction_cost_index+index+1));

Mat rc = train_costs_m.column(reconstruction_cost_index);

rc += train_costs_m.column(reconstruction_cost_index+index+1);

layers[index]->setExpectations(layer_inputs); // and restore them here

}

contrastiveDivergenceStep( layers[ index ],

connections[ index ],

layers[ index+1 ],

index, true);

}

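| void PLearn::SubsamplingDBN::greedyStep | ( | const Vec & | input, |

| const Vec & | target, | ||

| int | index | ||

| ) |

Definition at line 1497 of file SubsamplingDBN.cc.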

References activation_gradients, cd_learning_rate, connections, contrastiveDivergenceStep(), expectation_gradients, grad_learning_rate, i, independent_biases, PLearn::TVec< T >::isEmpty(), layers, n_layers, partial_costs, PLASSERT, PLERROR, reduced_layers, and subsampling_modules.

Referenced by train().

{

PLASSERT( index < n_layers );

reduced_layers[0]->expectation << input;

for( int i=0 ; i<=index ; i++ )

{

connections[i]->setAsDownInput( reduced_layers[i]->expectation );

layers[i+1]->getAllActivations( connections[i] );

layers[i+1]->computeExpectation();

if( i+1<n_layers-1 )

{

if( subsampling_modules[i+1] )

{

subsampling_modules[i+1]->fprop(layers[i+1]->expectation,

reduced_layers[i+1]->expectation);

reduced_layers[i+1]->expectation_is_up_to_date = true;

}

else if( independent_biases )

{

reduced_layers[i+1]->expectation << layers[i+1]->expectation;

reduced_layers[i+1]->expectation_is_up_to_date = true;

}

}

}

// TODO: add another learning rate?

if( !partial_costs.isEmpty() && partial_costs[ index ] )

{

PLERROR("partial_costs doesn't work with subsampling yet");

// put appropriate learning rate

connections[ index ]->setLearningRate( grad_learning_rate );

layers[ index+1 ]->setLearningRate( grad_learning_rate );

// Backward pass

real cost;

partial_costs[ index ]->fprop( layers[ index+1 ]->expectation,

target, cost );

partial_costs[ index ]->bpropUpdate( layers[ index+1 ]->expectation,

target, cost,

expectation_gradients[ index+1 ]

);

layers[ index+1 ]->bpropUpdate( layers[ index+1 ]->activation,

layers[ index+1 ]->expectation,

activation_gradients[ index+1 ],

expectation_gradients[ index+1 ] );

connections[ index ]->bpropUpdate( layers[ index ]->expectation,

layers[ index+1 ]->activation,

expectation_gradients[ index ],

activation_gradients[ index+1 ] );

// put back old learning rate

connections[ index ]->setLearningRate( cd_learning_rate );

layers[ index+1 ]->setLearningRate( cd_learning_rate );

}

contrastiveDivergenceStep( reduced_layers[ index ],

connections[ index ],

layers[ index+1 ],

index, true);

}
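
In the forward pass above, each hidden layer's expectation is passed through its subsampling module when one is set, and the resulting reduced expectation is what feeds the next connection. The sketch below illustrates that selection only. The pair-averaging module `averagePairs`, the `Subsampler` alias and `reducedExpectation` are hypothetical stand-ins; the source does not say what computation an actual subsampling module performs.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Subsampler = std::function<Vec(const Vec&)>;  // empty means "no module"

// Hypothetical subsampling module: average of non-overlapping pairs,
// halving the size of the representation.
Vec averagePairs(const Vec& expectation)
{
    assert(expectation.size() % 2 == 0);
    Vec reduced(expectation.size() / 2);
    for (std::size_t k = 0; k < reduced.size(); ++k)
        reduced[k] = 0.5 * (expectation[2 * k] + expectation[2 * k + 1]);
    return reduced;
}

// Value fed to the next connection: the subsampled expectation if a module
// is present, otherwise the layer's expectation unchanged.
Vec reducedExpectation(const Vec& expectation, const Subsampler& module)
{
    if (module)
        return module(expectation);
    return expectation;
}
```

An empty `Subsampler` plays the role of a null entry in subsampling_modules, in which case the reduced layer carries the same values as the full layer.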

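| void PLearn::SubsamplingDBN::jointGreedyStep | ( | const Vec & | input, |

| const Vec & | target | ||

| ) |

Definition at line 1633 of file SubsamplingDBN.cc.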

References activation_gradients, batch_size, cd_learning_rate, classification_module, connections, contrastiveDivergenceStep(), expectation_gradients, PLearn::fill_one_hot(), PLearn::get_pointer(), grad_learning_rate, i, PLearn::TVec< T >::isEmpty(), joint_layer, layers, n_layers, partial_costs, PLASSERT, PLASSERT_MSG, and PLERROR.

Referenced by train().

{

PLERROR("classification_module doesn't work with subsampling yet");

PLASSERT( joint_layer );

PLASSERT_MSG(batch_size == 1, "Not implemented for mini-batches");

layers[0]->expectation << input;

for( int i=0 ; i<n_layers-2 ; i++ )

{

connections[i]->setAsDownInput( layers[i]->expectation );

layers[i+1]->getAllActivations( connections[i] );

layers[i+1]->computeExpectation();

}

if( !partial_costs.isEmpty() && partial_costs[ n_layers-2 ] )

{

// Deterministic forward pass

connections[ n_layers-2 ]->setAsDownInput(

layers[ n_layers-2 ]->expectation );

layers[ n_layers-1 ]->getAllActivations( connections[ n_layers-2 ] );

layers[ n_layers-1 ]->computeExpectation();

// put appropriate learning rate

connections[ n_layers-2 ]->setLearningRate( grad_learning_rate );

layers[ n_layers-1 ]->setLearningRate( grad_learning_rate );

// Backward pass

real cost;

partial_costs[ n_layers-2 ]->fprop( layers[ n_layers-1 ]->expectation,

target, cost );

partial_costs[ n_layers-2 ]->bpropUpdate(

layers[ n_layers-1 ]->expectation, target, cost,

expectation_gradients[ n_layers-1 ] );

layers[ n_layers-1 ]->bpropUpdate( layers[ n_layers-1 ]->activation,

layers[ n_layers-1 ]->expectation,

activation_gradients[ n_layers-1 ],

expectation_gradients[ n_layers-1 ]

);

connections[ n_layers-2 ]->bpropUpdate(

layers[ n_layers-2 ]->expectation,

layers[ n_layers-1 ]->activation,

expectation_gradients[ n_layers-2 ],

activation_gradients[ n_layers-1 ] );

// put back old learning rate

connections[ n_layers-2 ]->setLearningRate( cd_learning_rate );

layers[ n_layers-1 ]->setLearningRate( cd_learning_rate );

}

Vec target_exp = classification_module->target_layer->expectation;

fill_one_hot( target_exp, (int) round(target[0]), real(0.), real(1.) );

contrastiveDivergenceStep(

get_pointer( joint_layer ),

get_pointer( classification_module->joint_connection ),

layers[ n_layers-1 ], n_layers-2);

}
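The listing above ends by turning the scalar class label in `target[0]` into a one-hot vector on the target layer before running a contrastive divergence step. The following is a minimal sketch of that encoding, assuming `std::vector<double>` in place of PLearn's `Vec`. The names `fill_one_hot_sketch` and `class_index_from_target` are hypothetical stand-ins for illustration and are not part of PLearn.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for PLearn::fill_one_hot, written against
// std::vector<double> rather than PLearn's Vec. Every entry is set to
// cold_value except the entry at hot_pos, which is set to hot_value.
void fill_one_hot_sketch(std::vector<double>& vec, int hot_pos,
                         double cold_value, double hot_value)
{
    if (hot_pos < 0 || static_cast<std::size_t>(hot_pos) >= vec.size())
        throw std::out_of_range("hot_pos is outside the vector");
    for (std::size_t k = 0; k < vec.size(); ++k)
        vec[k] = cold_value;
    vec[static_cast<std::size_t>(hot_pos)] = hot_value;
}

// Mirrors the (int) round(target[0]) idiom in the listing: the class label
// is stored as a real number and rounded to the nearest integer index.
int class_index_from_target(double target0)
{
    return static_cast<int>(std::round(target0));
}
```

Rounding before the cast matters because a label stored as a real number may not be exactly integral; a plain cast would truncate 1.999 to 1 instead of 2.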

void PLearn::SubsamplingDBN::makeDeepCopyFromShallowCopy ( CopiesMap & copies ) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 690 of file SubsamplingDBN.cc.

References activation_gradients, activations_gradients, cd_neg_down_vals, cd_neg_up_vals, class_gradient, class_output, classification_cost, classification_module, connections, cost_names, cumulative_schedule, PLearn::deepCopyField(), expectation_gradients, expectations_gradients, final_cost, final_cost_gradient, final_cost_gradients, final_cost_input, final_cost_inputs, final_cost_output, final_cost_value, final_cost_values, final_module, gibbs_down_state, joint_layer, layer_input, layer_inputs, layers, PLearn::PLearner::makeDeepCopyFromShallowCopy(), optimized_costs, partial_costs, partial_costs_indices, pos_down_val, pos_up_val, reconstruction_costs, reduced_layers, save_layer_activation, save_layer_activations, save_layer_expectation, save_layer_expectations, subsampling_gradients, subsampling_modules, timer, and training_schedule.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(training_schedule, copies);

deepCopyField(layers, copies);

deepCopyField(connections, copies);

deepCopyField(final_module, copies);

deepCopyField(final_cost, copies);

deepCopyField(partial_costs, copies);

deepCopyField(subsampling_modules, copies);

deepCopyField(classification_module, copies);

deepCopyField(cost_names, copies);

deepCopyField(reduced_layers, copies);

deepCopyField(timer, copies);

deepCopyField(classification_cost, copies);

deepCopyField(joint_layer, copies);

deepCopyField(activation_gradients, copies);

deepCopyField(activations_gradients, copies);

deepCopyField(expectation_gradients, copies);

deepCopyField(expectations_gradients, copies);

deepCopyField(subsampling_gradients, copies);

deepCopyField(final_cost_input, copies);

deepCopyField(final_cost_inputs, copies);

deepCopyField(final_cost_value, copies);

deepCopyField(final_cost_values, copies);

deepCopyField(final_cost_output, copies);

deepCopyField(class_output, copies);

deepCopyField(class_gradient, copies);

deepCopyField(final_cost_gradient, copies);

deepCopyField(final_cost_gradients, copies);

deepCopyField(save_layer_activation, copies);

deepCopyField(save_layer_expectation, copies);

deepCopyField(save_layer_activations, copies);

deepCopyField(save_layer_expectations, copies);

deepCopyField(pos_down_val, copies);

deepCopyField(pos_up_val, copies);

deepCopyField(cd_neg_up_vals, copies);

deepCopyField(cd_neg_down_vals, copies);

deepCopyField(gibbs_down_state, copies);

deepCopyField(optimized_costs, copies);

deepCopyField(reconstruction_costs, copies);

deepCopyField(partial_costs_indices, copies);

deepCopyField(cumulative_schedule, copies);

deepCopyField(layer_input, copies);

deepCopyField(layer_inputs, copies);

}
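The function above calls `deepCopyField` once per member, passing the same `copies` map each time. The sketch below illustrates the general idea behind that pattern, under the assumption that the map records which objects have already been duplicated so that two fields pointing at the same object still share a single copy afterwards. All types and names here (`Buffer`, `CopiesMapSketch`, `deep_copy_field_sketch`, `Learner`) are hypothetical and built on `std::shared_ptr`; they do not reproduce PLearn's actual implementation.

```cpp
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-ins: Buffer plays the role of a reference-counted
// field, CopiesMapSketch plays the role of the 'copies' argument.
struct Buffer { std::vector<double> data; };
using BufferPtr = std::shared_ptr<Buffer>;
using CopiesMapSketch = std::map<const Buffer*, BufferPtr>;

// Replace 'field' with a private copy of the object it points to. If that
// object was already copied, reuse the existing copy so sharing is preserved.
void deep_copy_field_sketch(BufferPtr& field, CopiesMapSketch& copies)
{
    if (!field)
        return;
    auto it = copies.find(field.get());
    if (it != copies.end()) {
        field = it->second;
        return;
    }
    const Buffer* original = field.get();
    BufferPtr clone = std::make_shared<Buffer>(*field);
    copies[original] = clone;
    field = clone;
}

struct Learner {
    BufferPtr weights;
    BufferPtr gradients;

    // Called on an object obtained by member-wise (shallow) copy.
    void make_deep_copy_from_shallow_copy(CopiesMapSketch& copies)
    {
        deep_copy_field_sketch(weights, copies);
        deep_copy_field_sketch(gradients, copies);
    }
};
```

A usage pattern would be `Learner b = a;` followed by `b.make_deep_copy_from_shallow_copy(copies);` with an empty map, after which `b` no longer shares storage with `a`. A field left out of the list would keep pointing at the original object's storage, which is presumably why the listing enumerates every member.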

void PLearn::SubsamplingDBN::onlineStep ( const Vec & input, const Vec & target, Vec & train_costs )

Definition at line 1057 of file SubsamplingDBN.cc.

References activation_gradients, PLearn::argmax(), batch_size, cd_learning_rate, class_cost_index, PLearn::class_error(), class_gradient, class_output, classification_cost, classification_module, PLearn::TVec< T >::clear(), connections, contrastiveDivergenceStep(), expectation_gradients, PLearn::fill_one_hot(), final_cost, final_cost_gradient, final_cost_index, final_cost_input, final_cost_value, final_module, PLearn::get_pointer(), grad_learning_rate, i, PLearn::TVec< T >::isEmpty(), joint_layer, layers, PLearn::TVec< T >::length(), n_layers, nll_cost_index, partial_costs, partial_costs_indices, PLASSERT, PLearn::TVec< T >::resize(), save_layer_activation, save_layer_expectation, PLearn::TVec< T >::subVec(), top_layer_joint_cd, and use_classification_cost.

Referenced by train().
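The forward phase of this function propagates expectations upward one layer at a time: each connection takes the lower layer's expectation as input, the upper layer collects the resulting activations, and then computes its own expectation. The sketch below shows that loop in isolation. It assumes dense weight matrices and sigmoid (binomial) units, which is only one of the layer types such a network could use. `ConnectionSketch`, `mean_field_up`, and `forward_pass` are hypothetical names, not PLearn classes.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // one row per upper-layer unit

// Hypothetical dense connection plus the bias of the layer above it.
struct ConnectionSketch {
    Matrix weights;
    Vector bias;
};

// One mean-field step: activation = W * down + bias, then an element-wise
// sigmoid gives the expectation of each upper unit (assumed binomial).
Vector mean_field_up(const ConnectionSketch& c, const Vector& down)
{
    Vector up(c.bias.size());
    for (std::size_t j = 0; j < up.size(); ++j) {
        double activation = c.bias[j];
        for (std::size_t k = 0; k < down.size(); ++k)
            activation += c.weights[j][k] * down[k];
        up[j] = 1.0 / (1.0 + std::exp(-activation));
    }
    return up;
}

// Deterministic forward pass through all connections, bottom to top.
Vector forward_pass(const std::vector<ConnectionSketch>& connections,
                    Vector expectation)
{
    for (const ConnectionSketch& c : connections)
        expectation = mean_field_up(c, expectation);
    return expectation;
}
```

The pass is deterministic because each layer forwards its expectation rather than a sampled state, which is what allows gradients to be backpropagated through it afterwards.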

{

PLASSERT(batch_size == 1);

TVec<Vec> cost;

if (!partial_costs.isEmpty())

cost.resize(n_layers-1);

layers[0]->expectation << input;

// FORWARD PHASE

//Vec layer_input;

for( int i=0 ; i<n_layers-1 ; i++ )

{

// mean-field fprop from layer i to layer i+1

connections[i]->setAsDownInput( layers[i]->expectation );

// this does the actual matrix-vector computation

layers[i+1]->getAllActivations( connections[i] );

layers[i+1]->computeExpectation();

// propagate into local cost associated to output of layer i+1

if( !partial_costs.isEmpty() && partial_costs[ i ] )

{

partial_costs[ i ]->fprop( layers[ i+1 ]->expectation,

target, cost[i] );

// Backward pass

// first time we set these gradients: do not accumulate

partial_costs[ i ]->bpropUpdate( layers[ i+1 ]->expectation,

target, cost[i][0],

expectation_gradients[ i+1 ] );

train_costs.subVec(partial_costs_indices[i], cost[i].length())

<< cost[i];

}

else

expectation_gradients[i+1].clear();

}

// top layer may be connected to a final_module followed by a

// final_cost and / or may be used to predict class probabilities

// through a joint classification_module

if ( final_cost )

{

if( final_module )

{

final_module->fprop( layers[ n_layers-1 ]->expectation,

final_cost_input );

final_cost->fprop( final_cost_input, target,

final_cost_value );

final_cost->bpropUpdate( final_cost_input, target,

final_cost_value[0],

final_cost_gradient );

final_module->bpropUpdate(

layers[ n_layers-1 ]->expectation,

final_cost_input,

expectation_gradients[ n_layers-1 ],

final_cost_gradient, true );

}

else

{

final_cost->fprop( layers[ n_layers-1 ]->expectation,

target,

final_cost_value );

final_cost->bpropUpdate( layers[ n_layers-1 ]->expectation,

target, final_cost_value[0],

expectation_gradients[n_layers-1],

true);

}

train_costs.subVec(final_cost_index, final_cost_value.length())

<< final_cost_value;

}

if (final_cost || (!partial_costs.isEmpty() && partial_costs[n_layers-2]))

{

layers[n_layers-1]->setLearningRate( grad_learning_rate );

connections[n_layers-2]->setLearningRate( grad_learning_rate );

layers[ n_layers-1 ]->bpropUpdate( layers[ n_layers-1 ]->activation,

layers[ n_layers-1 ]->expectation,

activation_gradients[ n_layers-1 ],

expectation_gradients[ n_layers-1 ],

false);

connections[ n_layers-2 ]->bpropUpdate(

layers[ n_layers-2 ]->expectation,

layers[ n_layers-1 ]->activation,

expectation_gradients[ n_layers-2 ],

activation_gradients[ n_layers-1 ],

true);

// accumulate into expectation_gradients[n_layers-2]

// because a partial cost may have already put a gradient there

}

if( use_classification_cost )

{

classification_module->fprop( layers[ n_layers-2 ]->expectation,

class_output );

real nll_cost;

// This doesn't work. gcc bug?

// classification_cost->fprop( class_output, target, cost );

classification_cost->CostModule::fprop( class_output, target,

nll_cost );

real class_error =

( argmax(class_output) == (int) round(target[0]) ) ? 0: 1;

train_costs[nll_cost_index] = nll_cost;

train_costs[class_cost_index] = class_error;

classification_cost->bpropUpdate( class_output, target, nll_cost,

class_gradient );

classification_module->bpropUpdate( layers[ n_layers-2 ]->expectation,

class_output,

expectation_gradients[n_layers-2],

class_gradient,

true );

if( top_layer_joint_cd )

{

// set the input of the joint layer

Vec target_exp = classification_module->target_layer->expectation;

fill_one_hot( target_exp, (int) round(target[0]), real(0.), real(1.) );

joint_layer->setLearningRate( cd_learning_rate );