PLearn 0.1

Linear classifier that uses class representations in order to make use of inductive transfer between classes.

#include <LinearInductiveTransferClassifier.h>

Public Member Functions | |

| LinearInductiveTransferClassifier () | |

| Default constructor. | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual LinearInductiveTransferClassifier * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Mat | class_reps |

| Class representations. | |

| PP< Optimizer > | optimizer |

| Optimizer of the neural network. | |

| int | batch_size |

| Batch size. | |

| real | weight_decay |

| Weight decay for all weights. | |

| string | penalty_type |

| Penalty to use on the weights for weight decay. | |

| string | initialization_method |

| The method used to initialize the weights. | |

| string | model_type |

| Model type. | |

| bool | dont_consider_train_targets |

| Indication that the targets seen in the training set should not be considered when tagging a new set. | |

| bool | use_bias_in_weights_prediction |

| Indication that a bias should be used for weights prediction. | |

| bool | multi_target_classifier |

| Indication that the classifier works with multiple targets, possibly ON simultaneously. | |

| real | sigma_min |

| Minimum variance for all coordinates, which is added to the maximum likelihood estimates. | |

| int | nhidden |

| Number of hidden units for neural network. | |

| int | rbm_nstages |

| Number of RBM training stages used to initialize the hidden layer weights. | |

| real | rbm_learning_rate |

| Learning rate for the RBM. | |

| PP< RBMLayer > | visible_layer |

| Visible layer of the RBM. | |

| PP< RBMLayer > | hidden_layer |

| Hidden layer of the RBM. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| Var | hiddenLayer (const Var &input, const Var &weights, string transfer_func, Var &before_transfer_function, bool use_cubed_value=false) |

| Return a variable that is the hidden layer corresponding to given input and weights. | |

| void | buildOutputFromInput (const Var &the_input, Var &hidden_layer, Var &before_transfer_func) |

| Build the output of the neural network, from the given input. | |

| void | buildTargetAndWeight () |

| Builds the target and sampleweight variables. | |

| void | buildFuncs (const Var &the_input, const Var &the_output, const Var &the_target, const Var &the_sampleweight) |

| Build the various functions used in the network. | |

| void | fillWeights (const Var &weights, bool zero_first_row, real scale_with_this=-1) |

| Fill a matrix of weights according to the 'initialization_method' specified. | |

| virtual void | buildPenalties () |

| Fill the costs penalties. | |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| int | noutputs |

| Number of outputs for the neural network. | |

| Var | input |

| Input variable. | |

| Var | output |

| Output variable. | |

| Var | sup_output |

| Sup output variable. | |

| Var | new_output |

| New output variable. | |

| Var | target |

| Target variable. | |

| Var | sup_target |

| Sup target variable. | |

| Var | new_target |

| New target variable. | |

| Var | sampleweight |

| Sample weight variable. | |

| Var | A |

| Linear classifier parameters. | |

| Var | s |

| Linear classifier scale parameter. | |

| Var | class_reps_var |

| Class representations. | |

| VarArray | costs |

| Costs variables. | |

| VarArray | new_costs |

| Costs variables for new tasks. | |

| VarArray | params |

| Parameters. | |

| Vec | paramsvalues |

| Parameters vec. | |

| VarArray | penalties |

| Penalties variables. | |

| Var | training_cost |

| Training cost variable. | |

| Var | test_costs |

| Test costs variable. | |

| VarArray | invars |

| Input variables. | |

| Vec | seen_targets |

| Vec of seen targets in the training set. | |

| Vec | unseen_targets |

| Vec of unseen targets in the training set. | |

| Func | f |

| Function: input -> output. | |

| Func | test_costf |

| Function: input & target -> output & test_costs. | |

| Func | output_and_target_to_cost |

| Function: output & target -> cost. | |

| Func | sup_test_costf |

| Function: input & target -> output & test_costs. | |

| Func | sup_output_and_target_to_cost |

| Function: output & target -> cost. | |

| Var | W |

| Input to hidden layer weights. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Linear classifier that uses class representations in order to make use of inductive transfer between classes.

Definition at line 58 of file LinearInductiveTransferClassifier.h.

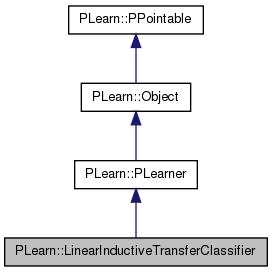

typedef PLearner PLearn::LinearInductiveTransferClassifier::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 60 of file LinearInductiveTransferClassifier.h.

| PLearn::LinearInductiveTransferClassifier::LinearInductiveTransferClassifier | ( | ) |

Default constructor.

Definition at line 95 of file LinearInductiveTransferClassifier.cc.

References PLearn::PLearner::random_gen.

: batch_size(1),

weight_decay(0),

penalty_type("L2_square"),

initialization_method("uniform_linear"),

model_type("discriminative"),

dont_consider_train_targets(false),

use_bias_in_weights_prediction(false),

multi_target_classifier(false),

sigma_min(1e-5),

nhidden(-1),

rbm_nstages(0),

rbm_learning_rate(0.01)

{

random_gen = new PRandom();

}

| string PLearn::LinearInductiveTransferClassifier::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| OptionList & PLearn::LinearInductiveTransferClassifier::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| RemoteMethodMap & PLearn::LinearInductiveTransferClassifier::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| bool PLearn::LinearInductiveTransferClassifier::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| Object * PLearn::LinearInductiveTransferClassifier::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| void PLearn::LinearInductiveTransferClassifier::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

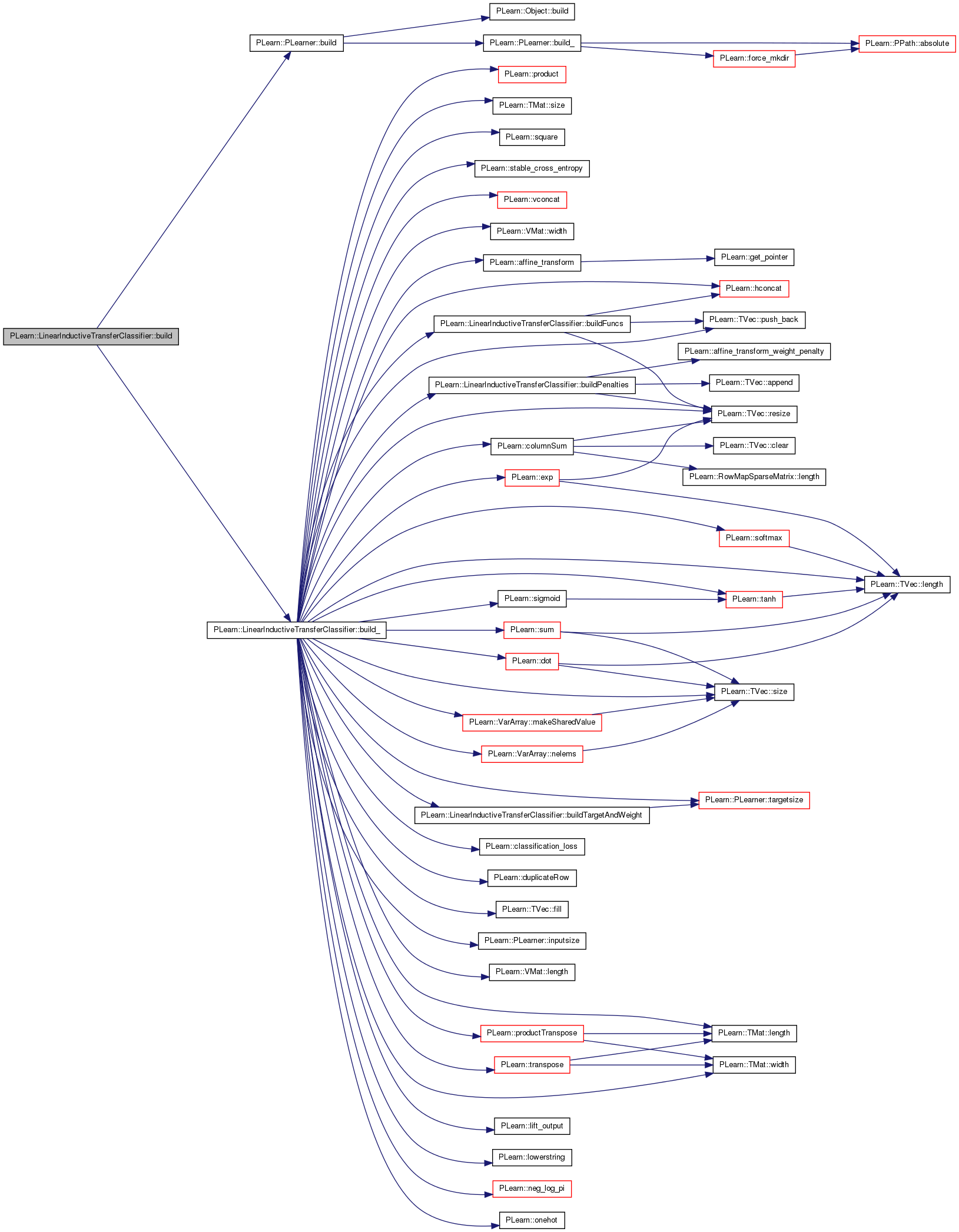

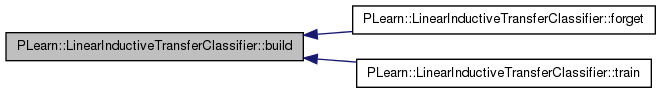

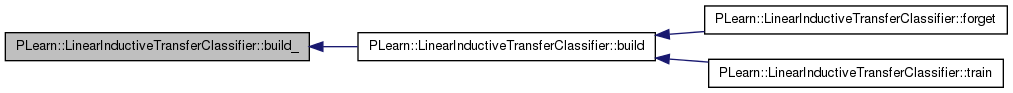

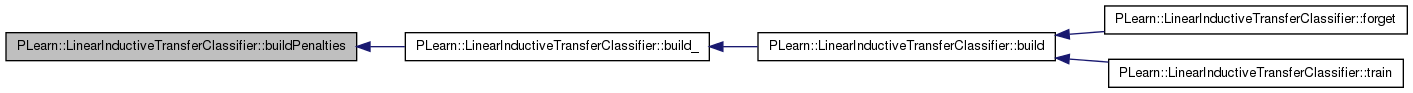

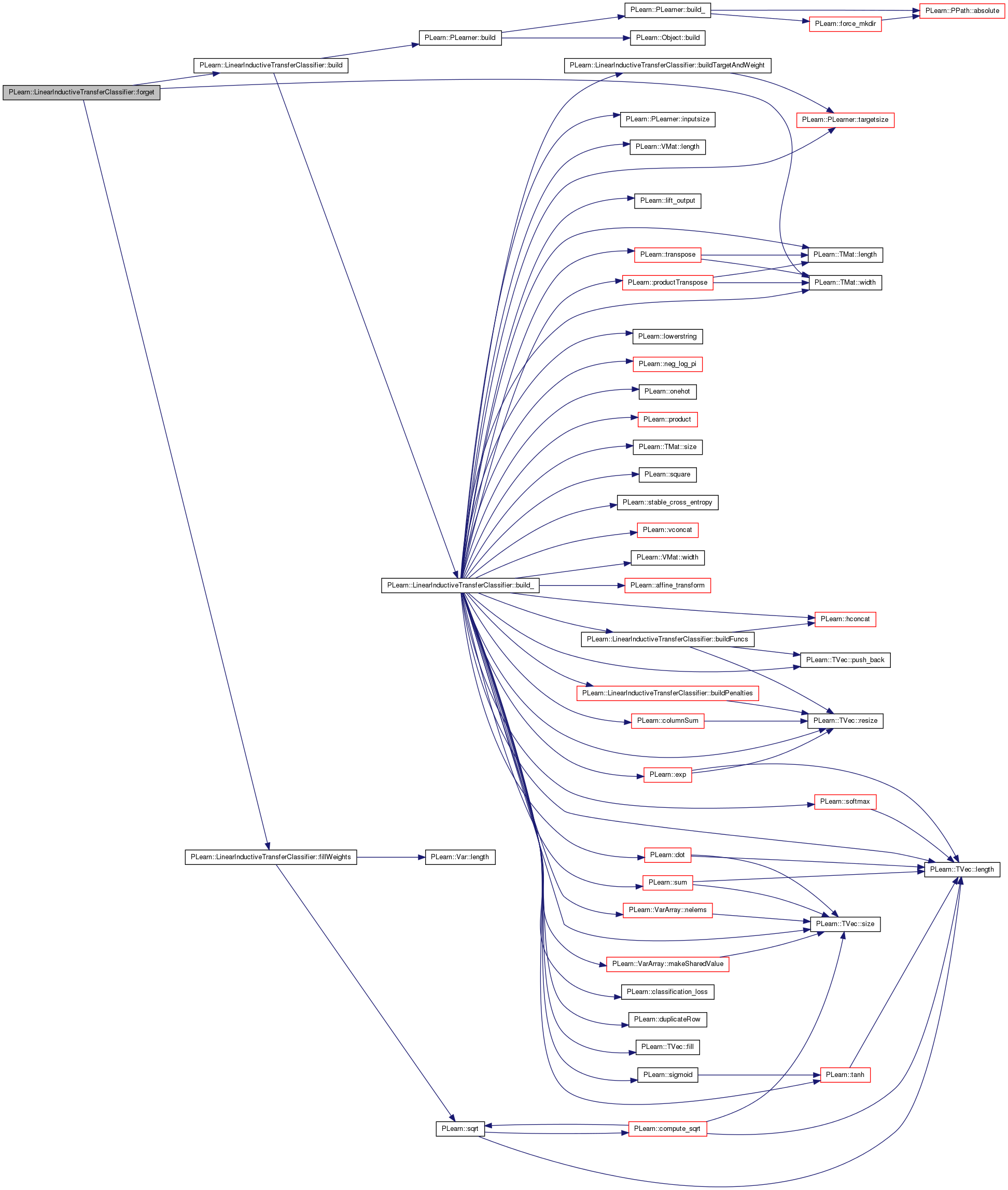

| void PLearn::LinearInductiveTransferClassifier::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 553 of file LinearInductiveTransferClassifier.cc.

References PLearn::PLearner::build(), and build_().

Referenced by forget(), and train().

{

inherited::build();

build_();

}

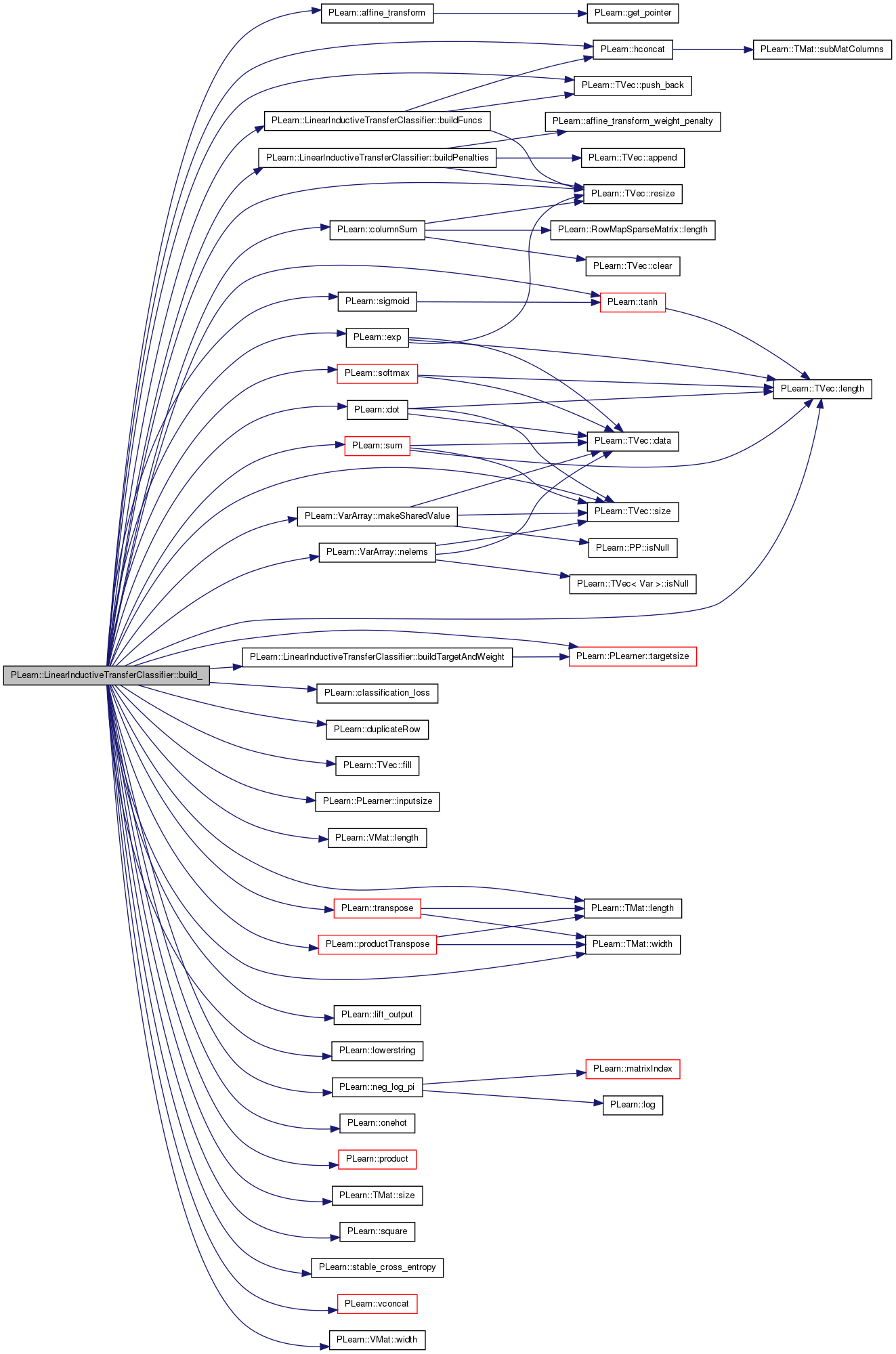

| void PLearn::LinearInductiveTransferClassifier::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 200 of file LinearInductiveTransferClassifier.cc.

References A, PLearn::affine_transform(), buildFuncs(), buildPenalties(), buildTargetAndWeight(), class_reps, class_reps_var, PLearn::classification_loss(), PLearn::columnSum(), costs, dont_consider_train_targets, PLearn::dot(), PLearn::duplicateRow(), PLearn::exp(), PLearn::TVec< T >::fill(), PLearn::hconcat(), i, input, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, j, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::TMat< T >::length(), PLearn::lift_output(), PLearn::lowerstring(), PLearn::VarArray::makeSharedValue(), MISSING_VALUE, model_type, multi_target_classifier, PLearn::neg_log_pi(), PLearn::VarArray::nelems(), new_costs, new_output, new_target, nhidden, noutputs, PLearn::onehot(), optimizer, output, params, paramsvalues, penalties, penalty_type, PLERROR, PLWARNING, PLearn::product(), PLearn::productTranspose(), PLearn::TVec< T >::push_back(), PLearn::PLearner::random_gen, rbm_nstages, PLearn::TVec< T >::resize(), s, sampleweight, PLearn::PLearner::seed_, seen_targets, PLearn::sigmoid(), PLearn::TVec< T >::size(), PLearn::TMat< T >::size(), PLearn::softmax(), PLearn::square(), PLearn::stable_cross_entropy(), PLearn::PLearner::stage, PLearn::sum(), sup_output, sup_target, PLearn::tanh(), target, PLearn::PLearner::targetsize(), PLearn::PLearner::targetsize_, test_costs, PLearn::PLearner::train_set, training_cost, PLearn::transpose(), unseen_targets, use_bias_in_weights_prediction, PLearn::vconcat(), W, PLearn::PLearner::weightsize_, PLearn::VMat::width(), and PLearn::TMat< T >::width().

Referenced by build().

{

/*

* Create Topology Var Graph

*/

// Don't do anything if we don't have a train_set

// It's the only one who knows the inputsize and targetsize anyway...

// Also, nothing is done if no layers need to be added

if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)

{

if (seed_ != 0) random_gen->manual_seed(seed_);//random_gen->manual_seed(seed_);

input = Var(inputsize(), "input");

target = Var(targetsize(),"target");

if(class_reps.size()<=0)

PLERROR("LinearInductiveTransferClassifier::build_(): class_reps is empty");

noutputs = class_reps.length();

buildTargetAndWeight();

params.resize(0);

Mat class_reps_to_use;

if(use_bias_in_weights_prediction)

{

// Add column with 1s, to include bias

Mat class_reps_with_bias(class_reps.length(), class_reps.width()+1);

for(int i=0; i<class_reps_with_bias.length(); i++)

for(int j=0; j<class_reps_with_bias.width(); j++)

{

if(j==0)

class_reps_with_bias(i,j) = 1;

else

class_reps_with_bias(i,j) = class_reps(i,j-1);

}

class_reps_to_use = class_reps_with_bias;

}

else

{

class_reps_to_use = class_reps;

}

if(model_type == "nnet_discriminative_1_vs_all")

{

if(nhidden <= 0)

PLERROR("In LinearInductiveTransferClassifier::build_(): nhidden "

"must be > 0.");

// Ws.resize(nhidden);

// As.resize(nhidden);

// s_hids.resize(nhidden);

// s = Var(1,nhidden,"sigma_square");

// for(int i=0; i<Ws.length(); i++)

// {

// Ws[i] = Var(inputsize_,class_reps_to_use.width());

// As[i] = Var(1,class_reps_to_use.width());

// s_hids[i] = Var(1,inputsize_);

// }

W = Var(inputsize_+1,nhidden,"hidden_weights");

A = Var(nhidden,class_reps_to_use.width());

s = Var(1,nhidden,"sigma_square");

params.push_back(W);

params.push_back(A);

params.push_back(s);

// params.append(Ws);

// params.append(As);

// params.append(s);

// params.append(s_hids);

// A = vconcat(As);

}

else

{

A = Var(inputsize_,class_reps_to_use.width());

s = Var(1,inputsize_,"sigma_square");

//fillWeights(A,false);

params.push_back(A);

params.push_back(s);

}

class_reps_var = new SourceVariable(class_reps_to_use);

Var weights = productTranspose(A,class_reps_var);

if(model_type == "discriminative" || model_type == "discriminative_1_vs_all")

{

weights =vconcat(-product(exp(s),square(weights)) & weights); // Making sure that the scaling factor is going to be positive

output = affine_transform(input, weights);

}

else if(model_type == "generative_0-1")

{

PLERROR("Not implemented yet");

//weights = vconcat(columnSum(log(A/(exp(A)-1))) & weights);

//output = affine_transform(input, weights);

}

else if(model_type == "generative")

{

weights = vconcat(-columnSum(square(weights)/transpose(duplicateRow(s,noutputs))) & 2*weights/transpose(duplicateRow(s,noutputs)));

if(targetsize() == 1)

output = affine_transform(input, weights);

else

output = exp(affine_transform(input, weights) - duplicateRow(dot(transpose(input)/s,input),noutputs))+REAL_EPSILON;

}

else if(model_type == "nnet_discriminative_1_vs_all")

{

//hidden_neurons.resize(nhidden);

//Var weights;

//for(int i=0; i<nhidden; i++)

//{

// weights = productTranspose(Ws[i],class_reps_var);

// weights = vconcat(-product(exp(s_hids[i]),square(weights))

// & weights);

// hidden_neurons[i] = tanh(affine_transform(input, weights));

//}

//

//weights = productTranspose(A,class_reps_var);

//output = -transpose(product(exp(s),square(weights)));

//

//for(int i=0; i<nhidden; i++)

//{

// output = output + times(productTranspose(class_reps_var,As[i]),

// hidden_neurons[i]);

//}

weights =vconcat(-product(exp(s),square(weights)) & weights); // Making sure that the scaling factor is going to be positive

if(rbm_nstages>0)

output = affine_transform(tanh(affine_transform(input,W)), weights);

else

output = affine_transform(sigmoid(affine_transform(input,W)), weights);

}

else

PLERROR("In LinearInductiveTransferClassifier::build_(): model_type %s is not valid", model_type.c_str());

TVec<bool> class_tags(noutputs);

if(targetsize() == 1)

{

Vec row(train_set.width());

int target_class;

class_tags.fill(0);

for(int i=0; i<train_set.length(); i++)

{

train_set->getRow(i,row);

target_class = (int) row[train_set->inputsize()];

class_tags[target_class] = 1;

}

seen_targets.resize(0);

unseen_targets.resize(0);

for(int i=0; i<class_tags.length(); i++)

if(class_tags[i])

seen_targets.push_back(i);

else

unseen_targets.push_back(i);

}

if(targetsize() != 1 && !multi_target_classifier)

PLERROR("In LinearInductiveTransferClassifier::build_(): when targetsize() != 1, multi_target_classifier should be true.");

if(targetsize() == 1 && multi_target_classifier)

PLERROR("In LinearInductiveTransferClassifier::build_(): when targetsize() == 1, multi_target_classifier should be false.");

if(targetsize() == 1 && seen_targets.length() != class_tags.length())

{

sup_output = new VarRowsVariable(output,new SourceVariable(seen_targets));

if(dont_consider_train_targets)

new_output = new VarRowsVariable(output,new SourceVariable(unseen_targets));

else

new_output = output;

Var sup_mapping = new SourceVariable(noutputs,1);

Var new_mapping = new SourceVariable(noutputs,1);

int sup_id = 0;

int new_id = 0;

for(int k=0; k<class_tags.length(); k++)

{

if(class_tags[k])

{

sup_mapping->value[k] = sup_id;

new_mapping->value[k] = MISSING_VALUE;

sup_id++;

}

else

{

sup_mapping->value[k] = MISSING_VALUE;

new_mapping->value[k] = new_id;

new_id++;

}

}

sup_target = new VarRowsVariable(sup_mapping, target);

if(dont_consider_train_targets)

new_target = new VarRowsVariable(new_mapping, target);

else

new_target = target;

}

else

{

sup_output = output;

new_output = output;

sup_target = target;

new_target = target;

}

// Build costs

if(model_type == "discriminative" || model_type == "discriminative_1_vs_all" || model_type == "generative_0-1" || model_type == "nnet_discriminative_1_vs_all")

{

if(model_type == "discriminative")

{

if(targetsize() != 1)

PLERROR("In LinearInductiveTransferClassifier::build_(): can't use discriminative model with targetsize() != 1");

costs.resize(2);

new_costs.resize(2);

sup_output = softmax(sup_output);

costs[0] = neg_log_pi(sup_output,sup_target);

costs[1] = classification_loss(sup_output, sup_target);

new_output = softmax(new_output);

new_costs[0] = neg_log_pi(new_output,new_target);

new_costs[1] = classification_loss(new_output, new_target);

}

if(model_type == "discriminative_1_vs_all"

|| model_type == "nnet_discriminative_1_vs_all")

{

costs.resize(2);

new_costs.resize(2);

if(targetsize() == 1)

{

costs[0] = stable_cross_entropy(sup_output, onehot(seen_targets.length(),sup_target));

costs[1] = classification_loss(sigmoid(sup_output), sup_target);

}

else

{

costs[0] = stable_cross_entropy(sup_output, sup_target, true);

costs[1] = transpose(lift_output(sigmoid(sup_output)+0.001, sup_target));

}

if(targetsize() == 1)

{

if(dont_consider_train_targets)

new_costs[0] = stable_cross_entropy(new_output, onehot(unseen_targets.length(),new_target));

else

new_costs[0] = stable_cross_entropy(new_output, onehot(noutputs,new_target));

new_costs[1] = classification_loss(sigmoid(new_output), new_target);

}

else

{

new_costs.resize(costs.length());

for(int i=0; i<new_costs.length(); i++)

new_costs[i] = costs[i];

}

}

if(model_type == "generative_0-1")

{

costs.resize(2);

new_costs.resize(2);

if(targetsize() == 1)

{

costs[0] = sup_output;

costs[1] = classification_loss(sigmoid(sup_output), sup_target);

}

else

{

PLERROR("In LinearInductiveTransferClassifier::build_(): can't use generative_0-1 model with targetsize() != 1");

costs[0] = sup_output;

costs[1] = transpose(lift_output(sigmoid(exp(sup_output)+REAL_EPSILON), sup_target));

}

if(targetsize() == 1)

{

new_costs[0] = new_output;

new_costs[1] = classification_loss(new_output, new_target);

}

else

{

new_costs.resize(costs.length());

for(int i=0; i<new_costs.length(); i++)

new_costs[i] = costs[i];

}

}

}

else if(model_type == "generative")

{

costs.resize(1);

if(targetsize() == 1)

costs[0] = classification_loss(sup_output, sup_target);

else

costs[0] = transpose(lift_output(sigmoid(sup_output), sup_target));

if(targetsize() == 1)

{

new_costs.resize(1);

new_costs[0] = classification_loss(new_output, new_target);

}

else

{

new_costs.resize(costs.length());

for(int i=0; i<new_costs.length(); i++)

new_costs[i] = costs[i];

}

}

else PLERROR("LinearInductiveTransferClassifier::build_(): model_type \"%s\" invalid",model_type.c_str());

string pt = lowerstring( penalty_type );

if( pt == "l1" )

penalty_type = "L1";

else if( pt == "l1_square" || pt == "l1 square" || pt == "l1square" )

penalty_type = "L1_square";

else if( pt == "l2_square" || pt == "l2 square" || pt == "l2square" )

penalty_type = "L2_square";

else if( pt == "l2" )

{

PLWARNING("L2 penalty not supported, assuming you want L2 square");

penalty_type = "L2_square";

}

else

PLERROR("penalty_type \"%s\" not supported", penalty_type.c_str());

buildPenalties();

Var train_costs = hconcat(costs);

test_costs = hconcat(new_costs);

// Apply penalty to cost.

// If there is no penalty, we still add costs[0] as the first cost, in

// order to keep the same number of costs as if there was a penalty.

if(penalties.size() != 0) {

if (weightsize_>0)

training_cost = hconcat(sampleweight*sum(hconcat(costs[0] & penalties))

& (train_costs*sampleweight));

else

training_cost = hconcat(sum(hconcat(costs[0] & penalties)) & train_costs);

}

else {

if(weightsize_>0) {

training_cost = hconcat(costs[0]*sampleweight & train_costs*sampleweight);

} else {

training_cost = hconcat(costs[0] & train_costs);

}

}

training_cost->setName("training_cost");

test_costs->setName("test_costs");

if((bool)paramsvalues && (paramsvalues.size() == params.nelems()))

params << paramsvalues;

else

paramsvalues.resize(params.nelems());

params.makeSharedValue(paramsvalues);

// Build functions.

buildFuncs(input, output, target, sampleweight);

// Reinitialize the optimization phase

if(optimizer)

optimizer->reset();

stage = 0;

}

}
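
The core of build_() for the "discriminative" model types is that the per-class linear weights are not free parameters: they are predicted from the class representations as A times the transpose of class_reps, and each class bias is set to minus the exp(s)-scaled squared norm of its weights. The following is a minimal standalone sketch of that computation, not the PLearn implementation. `Matrix`, `withBiasColumn`, `predictWeights` and `discriminativeScores` are hypothetical names introduced here for illustration, and plain `std::vector` stands in for PLearn's `Mat` and `Var`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Row-major dense matrix; a hypothetical stand-in for PLearn's Mat.
using Matrix = std::vector<std::vector<double>>;

// Prepend a column of ones to every class representation, as build_() does
// when use_bias_in_weights_prediction is true.
Matrix withBiasColumn(const Matrix& class_reps) {
    Matrix out;
    out.reserve(class_reps.size());
    for (const std::vector<double>& row : class_reps) {
        std::vector<double> extended;
        extended.reserve(row.size() + 1);
        extended.push_back(1.0);
        extended.insert(extended.end(), row.begin(), row.end());
        out.push_back(extended);
    }
    return out;
}

// weights = A * class_reps^T, i.e. (inputsize x d) times (d x noutputs).
Matrix predictWeights(const Matrix& A, const Matrix& class_reps) {
    Matrix weights(A.size(), std::vector<double>(class_reps.size(), 0.0));
    for (std::size_t i = 0; i < A.size(); ++i) {
        for (std::size_t k = 0; k < class_reps.size(); ++k) {
            assert(A[i].size() == class_reps[k].size());
            for (std::size_t j = 0; j < A[i].size(); ++j) {
                weights[i][k] += A[i][j] * class_reps[k][j];
            }
        }
    }
    return weights;
}

// score_k = sum_i weights[i][k] * x[i] - sum_i exp(s[i]) * weights[i][k]^2.
// The second term plays the role of the bias row that build_() stacks on top
// of the weights before calling affine_transform.
std::vector<double> discriminativeScores(const Matrix& weights,
                                         const std::vector<double>& s,
                                         const std::vector<double>& x) {
    assert(weights.size() == s.size() && weights.size() == x.size());
    const std::size_t noutputs = weights.empty() ? 0 : weights[0].size();
    std::vector<double> scores(noutputs, 0.0);
    for (std::size_t i = 0; i < weights.size(); ++i) {
        for (std::size_t k = 0; k < noutputs; ++k) {
            const double w = weights[i][k];
            scores[k] += w * x[i] - std::exp(s[i]) * w * w;
        }
    }
    return scores;
}
```

Because the weights of a class depend only on its representation, a class that never appears in the training set still gets a score as soon as its row is present in class_reps, which is what makes transfer to unseen classes possible.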

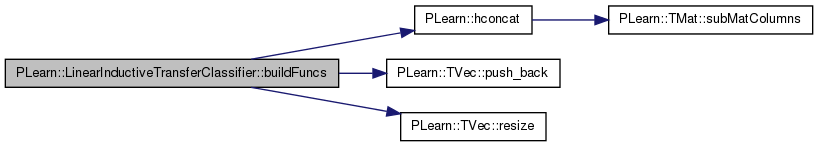

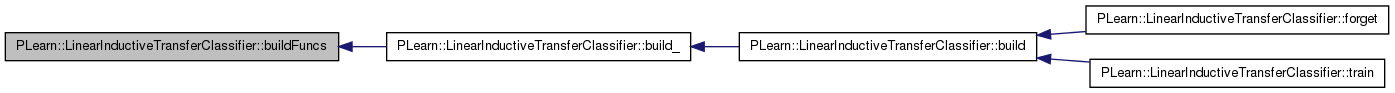
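
When targetsize() is 1, build_() also scans the training targets to split the classes into seen_targets and unseen_targets, and builds two index mappings (sup_mapping and new_mapping) that renumber each group from 0 and mark the other group as missing. The sketch below reproduces that bookkeeping under a few assumptions: `TargetSplit` and `splitTargets` are hypothetical names, NaN stands in for PLearn's MISSING_VALUE, and out-of-range targets are skipped, whereas the listing above performs no such check. Unlike build_(), the sketch always builds the mappings, even when every class has been seen.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Result of partitioning class indices by presence in the training targets.
struct TargetSplit {
    std::vector<int> seen;            // classes that occur in the training set
    std::vector<int> unseen;          // classes that never occur
    std::vector<double> sup_mapping;  // class -> position in `seen`, NaN if unseen
    std::vector<double> new_mapping;  // class -> position in `unseen`, NaN if seen
};

TargetSplit splitTargets(const std::vector<int>& train_targets,
                         std::size_t noutputs) {
    // Tag every class that appears at least once among the training targets.
    std::vector<bool> class_tags(noutputs, false);
    for (int t : train_targets) {
        if (t >= 0 && static_cast<std::size_t>(t) < noutputs) {
            class_tags[static_cast<std::size_t>(t)] = true;
        }
    }

    const double missing = std::nan("");  // stand-in for MISSING_VALUE
    TargetSplit split;
    split.sup_mapping.assign(noutputs, missing);
    split.new_mapping.assign(noutputs, missing);
    for (std::size_t k = 0; k < noutputs; ++k) {
        if (class_tags[k]) {
            split.sup_mapping[k] = static_cast<double>(split.seen.size());
            split.seen.push_back(static_cast<int>(k));
        } else {
            split.new_mapping[k] = static_cast<double>(split.unseen.size());
            split.unseen.push_back(static_cast<int>(k));
        }
    }
    assert(split.seen.size() + split.unseen.size() == noutputs);
    return split;
}
```

With training targets 0, 2, 2, 0 and four classes, classes 0 and 2 are seen and renumbered 0 and 1 in sup_mapping, while classes 1 and 3 are unseen and renumbered 0 and 1 in new_mapping.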

| void PLearn::LinearInductiveTransferClassifier::buildFuncs | ( | const Var & | the_input, |

| const Var & | the_output, | ||

| const Var & | the_target, | ||

| const Var & | the_sampleweight | ||

| ) | [protected] |

Build the various functions used in the network.

Definition at line 1073 of file LinearInductiveTransferClassifier.cc.

References costs, f, PLearn::hconcat(), invars, output_and_target_to_cost, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::resize(), sup_output_and_target_to_cost, sup_test_costf, test_costf, and test_costs.

Referenced by build_().

{

invars.resize(0);

VarArray outvars;

VarArray testinvars;

if (the_input)

{

invars.push_back(the_input);

testinvars.push_back(the_input);

}

if (the_output)

outvars.push_back(the_output);

if(the_target)

{

invars.push_back(the_target);

testinvars.push_back(the_target);

outvars.push_back(the_target);

}

if(the_sampleweight)

{

invars.push_back(the_sampleweight);

}

f = Func(the_input, the_output);

test_costf = Func(testinvars, the_output&test_costs);

test_costf->recomputeParents();

output_and_target_to_cost = Func(outvars, test_costs);

output_and_target_to_cost->recomputeParents();

VarArray sup_outvars;

sup_test_costf = Func(testinvars, the_output&hconcat(costs));

sup_test_costf->recomputeParents();

sup_output_and_target_to_cost = Func(outvars, hconcat(costs));

sup_output_and_target_to_cost->recomputeParents();

}

| void PLearn::LinearInductiveTransferClassifier::buildOutputFromInput | ( | const Var & | the_input, |

| Var & | hidden_layer, | ||

| Var & | before_transfer_func | ||

| ) | [protected] |

Build the output of the neural network, from the given input.

The hidden layer is also made available in the 'hidden_layer' parameter. The output before the transfer function is applied is also made available in the 'before_transfer_func' parameter.

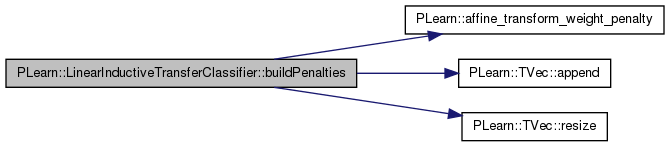

| void PLearn::LinearInductiveTransferClassifier::buildPenalties | ( | ) | [protected, virtual] |

Fill the costs penalties.

Definition at line 1027 of file LinearInductiveTransferClassifier.cc.

References A, PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), model_type, penalties, penalty_type, PLearn::TVec< T >::resize(), W, and weight_decay.

Referenced by build_().

{

penalties.resize(0); // prevents penalties from being added twice by consecutive builds

if(weight_decay > 0)

{

if(model_type == "nnet_discriminative_1_vs_all")

{

//for(int i=0; i<Ws.length(); i++)

//{

// penalties.append(affine_transform_weight_penalty(Ws[i], weight_decay, weight_decay, penalty_type));

//}

penalties.append(affine_transform_weight_penalty(W, weight_decay, 0, penalty_type));

}

penalties.append(affine_transform_weight_penalty(A, weight_decay, weight_decay, penalty_type));

}

}
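
As a rough illustration of what the penalty terms amount to, the sketch below normalizes a penalty_type string the way build_() does and evaluates the three documented penalties on a weight matrix. It is written under assumptions rather than taken from PLearn: `normalizePenaltyType`, `rowsPenalty` and `weightPenalty` are hypothetical helpers, and the convention that the first row holds the biases (penalized with a separate bias decay) is assumed to match affine_transform_weight_penalty.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // hypothetical stand-in for Mat

// Map the accepted spellings to the canonical names used by build_().
std::string normalizePenaltyType(std::string pt) {
    std::transform(pt.begin(), pt.end(), pt.begin(), [](unsigned char c) {
        return static_cast<char>(std::tolower(c));
    });
    if (pt == "l1") return "L1";
    if (pt == "l1_square" || pt == "l1 square" || pt == "l1square") return "L1_square";
    if (pt == "l2_square" || pt == "l2 square" || pt == "l2square") return "L2_square";
    if (pt == "l2") return "L2_square";  // build_() warns and falls back to L2 square
    throw std::invalid_argument("penalty_type \"" + pt + "\" not supported");
}

// Penalty over rows [first, last) of m, for a canonical penalty type.
double rowsPenalty(const Matrix& m, std::size_t first, std::size_t last,
                   const std::string& penalty_type) {
    double sum_abs = 0.0;
    double sum_sq = 0.0;
    for (std::size_t i = first; i < last && i < m.size(); ++i) {
        for (double v : m[i]) {
            sum_abs += std::fabs(v);
            sum_sq += v * v;
        }
    }
    if (penalty_type == "L1") return sum_abs;
    if (penalty_type == "L1_square") return sum_abs * sum_abs;
    assert(penalty_type == "L2_square");
    return sum_sq;
}

// First row is treated as biases (bias_decay), remaining rows as weights.
double weightPenalty(const Matrix& m, double weight_decay, double bias_decay,
                     const std::string& penalty_type) {
    if (m.empty()) return 0.0;
    return bias_decay * rowsPenalty(m, 0, 1, penalty_type) +
           weight_decay * rowsPenalty(m, 1, m.size(), penalty_type);
}
```

In buildPenalties(), W is penalized with a bias decay of 0, so only its non-bias rows contribute, while A is penalized with the same decay on every row.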

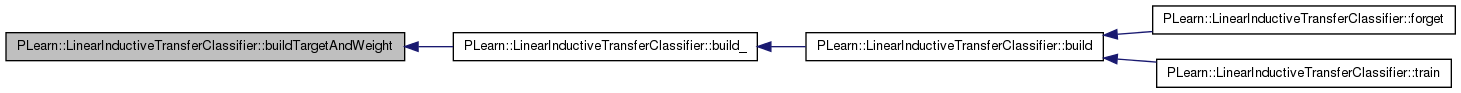

| void PLearn::LinearInductiveTransferClassifier::buildTargetAndWeight | ( | ) | [protected] |

Builds the target and sampleweight variables.

Definition at line 1013 of file LinearInductiveTransferClassifier.cc.

References PLERROR, sampleweight, target, PLearn::PLearner::targetsize(), and PLearn::PLearner::weightsize_.

Referenced by build_().

{

//if(nhidden_schedule_current_position >= nhidden_schedule.length())

if(targetsize() > 0)

{

target = Var(targetsize(), "target");

if(weightsize_>0)

{

if (weightsize_!=1)

PLERROR("In LinearInductiveTransferClassifier::buildTargetAndWeight - Expected weightsize to be 1 or 0 (or unspecified = -1, meaning 0), got %d",weightsize_);

sampleweight = Var(1, "weight");

}

}

}

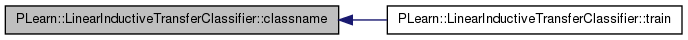

| string PLearn::LinearInductiveTransferClassifier::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

Referenced by train().

| void PLearn::LinearInductiveTransferClassifier::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

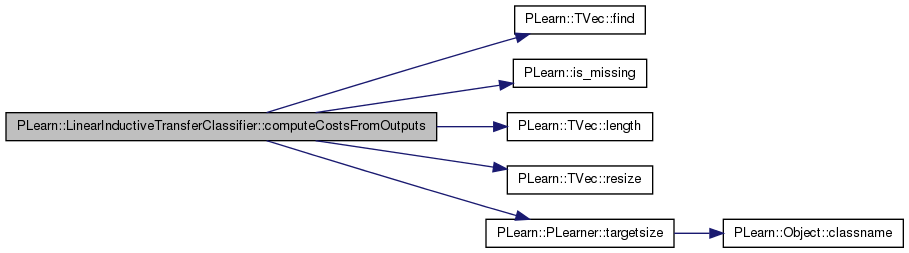

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 903 of file LinearInductiveTransferClassifier.cc.

References PLearn::TVec< T >::find(), i, PLearn::is_missing(), PLearn::TVec< T >::length(), model_type, output_and_target_to_cost, PLERROR, PLearn::TVec< T >::resize(), seen_targets, sup_output_and_target_to_cost, and PLearn::PLearner::targetsize().

{

if(targetsize() != 1)

costs.resize(costs.length()-1+targetsize());

if(seen_targets.find(target[0])>=0)

sup_output_and_target_to_cost->fprop(output&target, costs);

else

output_and_target_to_cost->fprop(output&target, costs);

if(targetsize() != 1)

{

costs.resize(costs.length()+1);

int i;

for(i=0; i<target.length(); i++)

if(!is_missing(target[i]))

break;

if(i>= target.length())

PLERROR("In LinearInductiveTransferClassifier::computeCostsFromOutputs(): all targets are missing, can't compute cost");

if(model_type == "generative")

costs[costs.length()-1] = costs[i];

else

costs[costs.length()-1] = costs[i+1];

costs[costs.length()-targetsize()-1] = costs[costs.length()-1];

costs.resize(costs.length()-targetsize());

}

}
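
In the multi-target case (targetsize() != 1), the listing above temporarily widens the cost vector to hold one entry per target, locates the first non-missing target, and then shrinks the vector back so that only the cost for that target is reported. The sketch below shows the same selection in isolation. `firstNonMissing` and `compactCosts` are hypothetical helpers, NaN is assumed to represent a missing target, and the raw cost layout (one per-target cost for "generative", a leading cross-entropy followed by per-target costs otherwise) is inferred from build_().

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Index of the first target that is not missing (NaN is assumed to mean missing).
std::size_t firstNonMissing(const std::vector<double>& target) {
    for (std::size_t i = 0; i < target.size(); ++i) {
        if (!std::isnan(target[i])) return i;
    }
    throw std::runtime_error("all targets are missing, can't compute cost");
}

// Reduce the widened cost vector to the costs that are actually reported.
//  - generative:  raw_costs = [c_0, ..., c_{T-1}]      -> [c_i]
//  - otherwise:   raw_costs = [ce, c_0, ..., c_{T-1}]  -> [ce, c_i]
// where i is the first non-missing target and T is the number of targets.
std::vector<double> compactCosts(const std::vector<double>& raw_costs,
                                 const std::vector<double>& target,
                                 bool generative) {
    const std::size_t i = firstNonMissing(target);
    if (generative) {
        assert(raw_costs.size() == target.size());
        return {raw_costs[i]};
    }
    assert(raw_costs.size() == target.size() + 1);
    return {raw_costs[0], raw_costs[i + 1]};
}
```

Returning a fresh vector avoids the in-place resize and copy sequence of the original, at the price of one small allocation per call.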

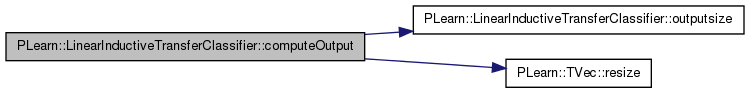

| void PLearn::LinearInductiveTransferClassifier::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 897 of file LinearInductiveTransferClassifier.cc.

References f, outputsize(), and PLearn::TVec< T >::resize().

{

output.resize(outputsize());

f->fprop(input,output);

}

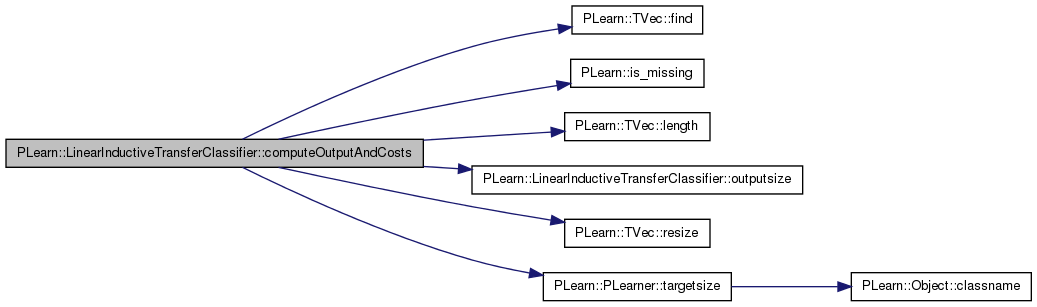

| void PLearn::LinearInductiveTransferClassifier::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 930 of file LinearInductiveTransferClassifier.cc.

References PLearn::TVec< T >::find(), i, PLearn::is_missing(), PLearn::TVec< T >::length(), model_type, outputsize(), PLERROR, PLearn::TVec< T >::resize(), seen_targets, sup_test_costf, PLearn::PLearner::targetsize(), and test_costf.

{

if(targetsize() != 1)

costsv.resize(costsv.length()-1+targetsize());

outputv.resize(outputsize());

if(seen_targets.find(targetv[0])>=0)

sup_test_costf->fprop(inputv&targetv, outputv&costsv);

else

test_costf->fprop(inputv&targetv, outputv&costsv);

if(targetsize() != 1)

{

costsv.resize(costsv.length()+1);

int i;

for(i=0; i<targetv.length(); i++)

if(!is_missing(targetv[i]))

break;

if(i>= targetv.length())

PLERROR("In LinearInductiveTransferClassifier::computeOutputAndCosts(): all targets are missing, can't compute cost");

//for(int j=i+1; j<targetv.length(); j++)

// if(!is_missing(targetv[j]))

// PLERROR("In LinearInductiveTransferClassifier::computeCostsFromOutputs(): there should be only one non-missing target");

//cout << "i=" << i << " ";

if(model_type == "generative")

costsv[costsv.length()-1] = costsv[i];

else

costsv[costsv.length()-1] = costsv[i+1];

costsv[costsv.length()-targetsize()-1] = costsv[costsv.length()-1];

costsv.resize(costsv.length()-targetsize());

}

}

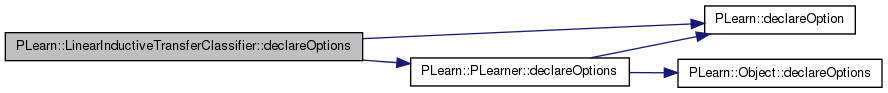

| void PLearn::LinearInductiveTransferClassifier::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 112 of file LinearInductiveTransferClassifier.cc.

References batch_size, PLearn::OptionBase::buildoption, class_reps, PLearn::declareOption(), PLearn::PLearner::declareOptions(), dont_consider_train_targets, hidden_layer, initialization_method, PLearn::OptionBase::learntoption, model_type, multi_target_classifier, nhidden, optimizer, paramsvalues, penalty_type, rbm_learning_rate, rbm_nstages, sigma_min, use_bias_in_weights_prediction, visible_layer, and weight_decay.

{

declareOption(ol, "optimizer", &LinearInductiveTransferClassifier::optimizer,

OptionBase::buildoption,

"Optimizer of the discriminative classifier");

declareOption(ol, "rbm_nstages",

&LinearInductiveTransferClassifier::rbm_nstages,

OptionBase::buildoption,

"Number of RBM training to initialize hidden layer weights");

declareOption(ol, "rbm_learning_rate",

&LinearInductiveTransferClassifier::rbm_learning_rate,

OptionBase::buildoption,

"Learning rate for the RBM");

declareOption(ol, "visible_layer",

&LinearInductiveTransferClassifier::visible_layer,

OptionBase::buildoption,

"Visible layer of the RBM");

declareOption(ol, "hidden_layer",

&LinearInductiveTransferClassifier::hidden_layer,

OptionBase::buildoption,

"Hidden layer of the RBM");

declareOption(ol, "batch_size", &LinearInductiveTransferClassifier::batch_size,

OptionBase::buildoption,

"How many samples to use to estimate the average gradient before updating the weights\n"

"0 is equivalent to specifying training_set->length() \n");

declareOption(ol, "weight_decay",

&LinearInductiveTransferClassifier::weight_decay,

OptionBase::buildoption,

"Global weight decay for all layers\n");

declareOption(ol, "model_type", &LinearInductiveTransferClassifier::model_type,

OptionBase::buildoption,

"Model type. Choose between:\n"

" - \"discriminative\" (multiclass classifier)\n"

" - \"discriminative_1_vs_all\" (1 vs all multitask classifier)\n"

" - \"generative\" (gaussian input)\n"

" - \"generative_0-1\" ([0,1] input)\n"

" - \"nnet_discriminative_1_vs_all\" ([0,1] input)\n"

);

declareOption(ol, "penalty_type",

&LinearInductiveTransferClassifier::penalty_type,

OptionBase::buildoption,

"Penalty to use on the weights (for weight and bias decay).\n"

"Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L1_square\": square of the L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");

declareOption(ol, "initialization_method",

&LinearInductiveTransferClassifier::initialization_method,

OptionBase::buildoption,

"The method used to initialize the weights:\n"

" - \"normal_linear\" = a normal law with variance 1/n_inputs\n"

" - \"normal_sqrt\" = a normal law with variance 1/sqrt(n_inputs)\n"

" - \"uniform_linear\" = a uniform law in [-1/n_inputs, 1/n_inputs]\n"

" - \"uniform_sqrt\" = a uniform law in [-1/sqrt(n_inputs), 1/sqrt(n_inputs)]\n"

" - \"zero\" = all weights are set to 0\n");

declareOption(ol, "paramsvalues",

&LinearInductiveTransferClassifier::paramsvalues,

OptionBase::learntoption,

"The learned parameters\n");

declareOption(ol, "class_reps", &LinearInductiveTransferClassifier::class_reps,

OptionBase::buildoption,

"Class vector representations\n");

declareOption(ol, "dont_consider_train_targets",

&LinearInductiveTransferClassifier::dont_consider_train_targets,

OptionBase::buildoption,

"Indication that the targets seen in the training set\n"

"should not be considered when tagging a new set\n");

declareOption(ol, "use_bias_in_weights_prediction",

&LinearInductiveTransferClassifier::use_bias_in_weights_prediction,

OptionBase::buildoption,

"Indication that a bias should be used for weights prediction\n");

declareOption(ol, "multi_target_classifier",

&LinearInductiveTransferClassifier::multi_target_classifier,

OptionBase::buildoption,

"Indication that the classifier works with multiple targets,\n"

"possibly ON simultaneously.\n");

declareOption(ol, "sigma_min", &LinearInductiveTransferClassifier::sigma_min,

OptionBase::buildoption,

"Minimum variance for all coordinates, which is added\n"

"to the maximum likelihood estimates.\n");

declareOption(ol, "nhidden", &LinearInductiveTransferClassifier::nhidden,

OptionBase::buildoption,

"Number of hidden units for neural network.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::LinearInductiveTransferClassifier::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 159 of file LinearInductiveTransferClassifier.h.

:

//##### Protected Options ###############################################

| LinearInductiveTransferClassifier * PLearn::LinearInductiveTransferClassifier::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| void PLearn::LinearInductiveTransferClassifier::fillWeights | ( | const Var & | weights, |

| bool | zero_first_row, | ||

| real | scale_with_this = -1 |

||

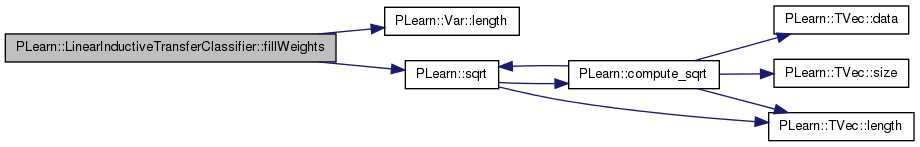

| ) | [protected] |

Fill a matrix of weights according to the 'initialization_method' specified.

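The 'zero_first_row' boolean indicates whether the first row should be filled with zeros. If 'scale_with_this' is non-negative, it is used as the scale of the distribution instead of the value derived from the number of inputs.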

Definition at line 1044 of file LinearInductiveTransferClassifier.cc.

References initialization_method, PLearn::Var::length(), PLearn::PLearner::random_gen, and PLearn::sqrt().

Referenced by forget().

{

if (initialization_method == "zero") {

weights->value->clear();

return;

}

real delta;

if(scale_with_this < 0)

{

int is = weights.length();

if (zero_first_row)

is--; // -1 to get the same result as before.

if (initialization_method.find("linear") != string::npos)

delta = 1.0 / real(is);

else

delta = 1.0 / sqrt(real(is));

}

else

delta = scale_with_this;

if (initialization_method.find("normal") != string::npos)

random_gen->fill_random_normal(weights->value, 0, delta);

else

random_gen->fill_random_uniform(weights->value, -delta, delta);

if(zero_first_row)

weights->matValue(0).clear();

}
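
The scale selection in the listing above can be isolated as a small function. The following is a minimal sketch, not PLearn code: `initScale` is a hypothetical helper, plain `double` stands in for `real`, and the "zero" method is assumed to have been handled before the call, as in fillWeights(). Whether the random generator treats the returned value as a standard deviation or a variance is not shown in this listing.

```cpp
#include <cassert>
#include <cmath>
#include <string>

// Hypothetical helper (not part of PLearn): returns the scale 'delta' that
// fillWeights() would hand to the random generator.
double initScale(const std::string& method, int n_rows, bool zero_first_row,
                 double scale_with_this = -1.0)
{
    if (scale_with_this >= 0.0)
        return scale_with_this;  // an explicit scale overrides the rule below

    // When the first row is zeroed, it is not counted as an input.
    const int n_inputs = zero_first_row ? n_rows - 1 : n_rows;
    assert(n_inputs > 0);

    if (method.find("linear") != std::string::npos)
        return 1.0 / n_inputs;                              // "*_linear"
    return 1.0 / std::sqrt(static_cast<double>(n_inputs));  // "*_sqrt"
}
```

For example, `initScale("uniform_linear", 11, true)` counts 10 inputs and yields a scale of 1/10, while passing a non-negative `scale_with_this` bypasses the rule, which is how forget() scales the matrix A.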

| void PLearn::LinearInductiveTransferClassifier::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 617 of file LinearInductiveTransferClassifier.cc.

References A, build(), class_reps, fillWeights(), model_type, nhidden, optimizer, s, PLearn::PLearner::stage, W, and PLearn::TMat< T >::width().

{

if(optimizer)

optimizer->reset();

stage = 0;

if(model_type == "nnet_discriminative_1_vs_all")

{

// for(int i=0; i<Ws.length(); i++)

// {

// fillWeights(Ws[i],false,1./(inputsize_*class_reps.width()));

// fillWeights(As[i],false,1./(nhidden*class_reps.width()));

// s_hids[i]->value.fill(1);

// }

fillWeights(W,true);

fillWeights(A,false,1./(nhidden*class_reps.width()));

s->value.fill(1);

}

else

{

//A = Var(inputsize_,class_reps_to_use.width());

A->value.fill(0);

s->value.fill(1);

}

// Might need to recompute proppaths (if number of task representations changed

// for instance)

build();

}

| OptionList & PLearn::LinearInductiveTransferClassifier::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| OptionMap & PLearn::LinearInductiveTransferClassifier::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| RemoteMethodMap & PLearn::LinearInductiveTransferClassifier::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 93 of file LinearInductiveTransferClassifier.cc.

| TVec< string > PLearn::LinearInductiveTransferClassifier::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 964 of file LinearInductiveTransferClassifier.cc.

References model_type, multi_target_classifier, and PLearn::TVec< T >::resize().

Referenced by getTrainCostNames().

{

TVec<string> costs_str;

if(model_type == "discriminative" || model_type == "discriminative_1_vs_all" || model_type == "generative_0-1" || model_type == "nnet_discriminative_1_vs_all")

{

if(model_type == "discriminative" || model_type == "generative_0-1")

{

costs_str.resize(2);

costs_str[0] = "NLL";

costs_str[1] = "class_error";

}

if(model_type == "discriminative_1_vs_all"

|| model_type == "nnet_discriminative_1_vs_all")

{

costs_str.resize(1);

costs_str[0] = "cross_entropy";

if(!multi_target_classifier)

{

costs_str.resize(2);

costs_str[1] = "class_error";

}

else

{

costs_str.resize(2);

costs_str[1] = "lift_first";

}

}

}

else if(model_type == "generative")

{

if(!multi_target_classifier)

{

costs_str.resize(1);

costs_str[0] = "class_error";

}

else

{

costs_str.resize(1);

costs_str[0] = "lift_first";

}

}

return costs_str;

}
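
The mapping from options to cost names can be summarized compactly. The sketch below is an illustrative restatement of the listing above using only the standard library; `testCostNames` is a hypothetical free function and `std::vector<std::string>` stands in for `TVec<string>`.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical restatement of getTestCostNames() with standard containers.
std::vector<std::string> testCostNames(const std::string& model_type,
                                       bool multi_target_classifier)
{
    // Cost that depends on whether several targets may be ON at once.
    const std::string second =
        multi_target_classifier ? "lift_first" : "class_error";

    if (model_type == "discriminative" || model_type == "generative_0-1")
        return {"NLL", "class_error"};
    if (model_type == "discriminative_1_vs_all" ||
        model_type == "nnet_discriminative_1_vs_all")
        return {"cross_entropy", second};
    if (model_type == "generative")
        return {second};
    return {};  // unknown model type: no costs, as in the original listing
}
```

Since getTrainCostNames() forwards to getTestCostNames(), the same table applies to the training costs.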

| TVec< string > PLearn::LinearInductiveTransferClassifier::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1008 of file LinearInductiveTransferClassifier.cc.

References getTestCostNames().

{

return getTestCostNames();

}

| Var PLearn::LinearInductiveTransferClassifier::hiddenLayer | ( | const Var & | input, | const Var & | weights, | string | transfer_func, | Var & | before_transfer_function, | bool | use_cubed_value = false | ) | [protected] |

Return a variable that is the hidden layer corresponding to given input and weights.

If the 'default' transfer_func is used, we use the hidden_transfer_func option.

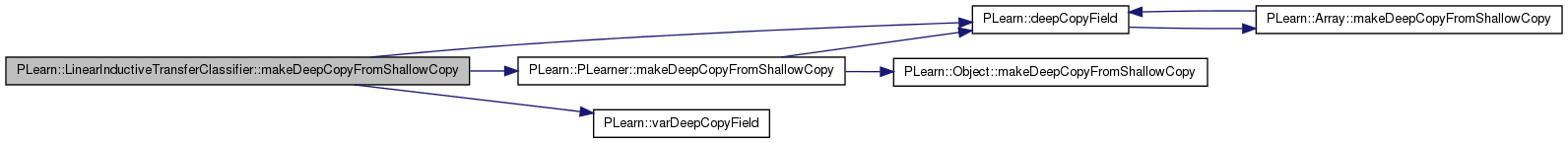

| void PLearn::LinearInductiveTransferClassifier::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 560 of file LinearInductiveTransferClassifier.cc.

References A, class_reps, class_reps_var, costs, PLearn::deepCopyField(), f, hidden_layer, input, invars, PLearn::PLearner::makeDeepCopyFromShallowCopy(), new_costs, new_output, new_target, optimizer, output, output_and_target_to_cost, params, paramsvalues, penalties, s, sampleweight, seen_targets, sup_output, sup_output_and_target_to_cost, sup_target, sup_test_costf, target, test_costf, test_costs, training_cost, unseen_targets, PLearn::varDeepCopyField(), visible_layer, and W.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(class_reps, copies);

deepCopyField(optimizer, copies);

deepCopyField(visible_layer, copies);

deepCopyField(hidden_layer, copies);

varDeepCopyField(input, copies);

varDeepCopyField(output, copies);

varDeepCopyField(sup_output, copies);

varDeepCopyField(new_output, copies);

varDeepCopyField(target, copies);

varDeepCopyField(sup_target, copies);

varDeepCopyField(new_target, copies);

varDeepCopyField(sampleweight, copies);

varDeepCopyField(A, copies);

varDeepCopyField(s, copies);

varDeepCopyField(class_reps_var, copies);

deepCopyField(costs, copies);

deepCopyField(new_costs, copies);

deepCopyField(params, copies);

deepCopyField(paramsvalues, copies);

deepCopyField(penalties, copies);

varDeepCopyField(training_cost, copies);

varDeepCopyField(test_costs, copies);

deepCopyField(invars, copies);

deepCopyField(seen_targets, copies);

deepCopyField(unseen_targets, copies);

deepCopyField(f, copies);

deepCopyField(test_costf, copies);

deepCopyField(output_and_target_to_cost, copies);

deepCopyField(sup_test_costf, copies);

deepCopyField(sup_output_and_target_to_cost, copies);

varDeepCopyField(W, copies);

//deepCopyField(As, copies);

//deepCopyField(Ws, copies);

//deepCopyField(s_hids, copies);

//deepCopyField(hidden_neurons, copies);

//PLERROR("LinearInductiveTransferClassifier::makeDeepCopyFromShallowCopy not fully (correctly) implemented yet!");

}

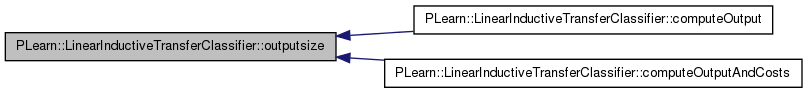

| int PLearn::LinearInductiveTransferClassifier::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 609 of file LinearInductiveTransferClassifier.cc.

References output.

Referenced by computeOutput(), and computeOutputAndCosts().

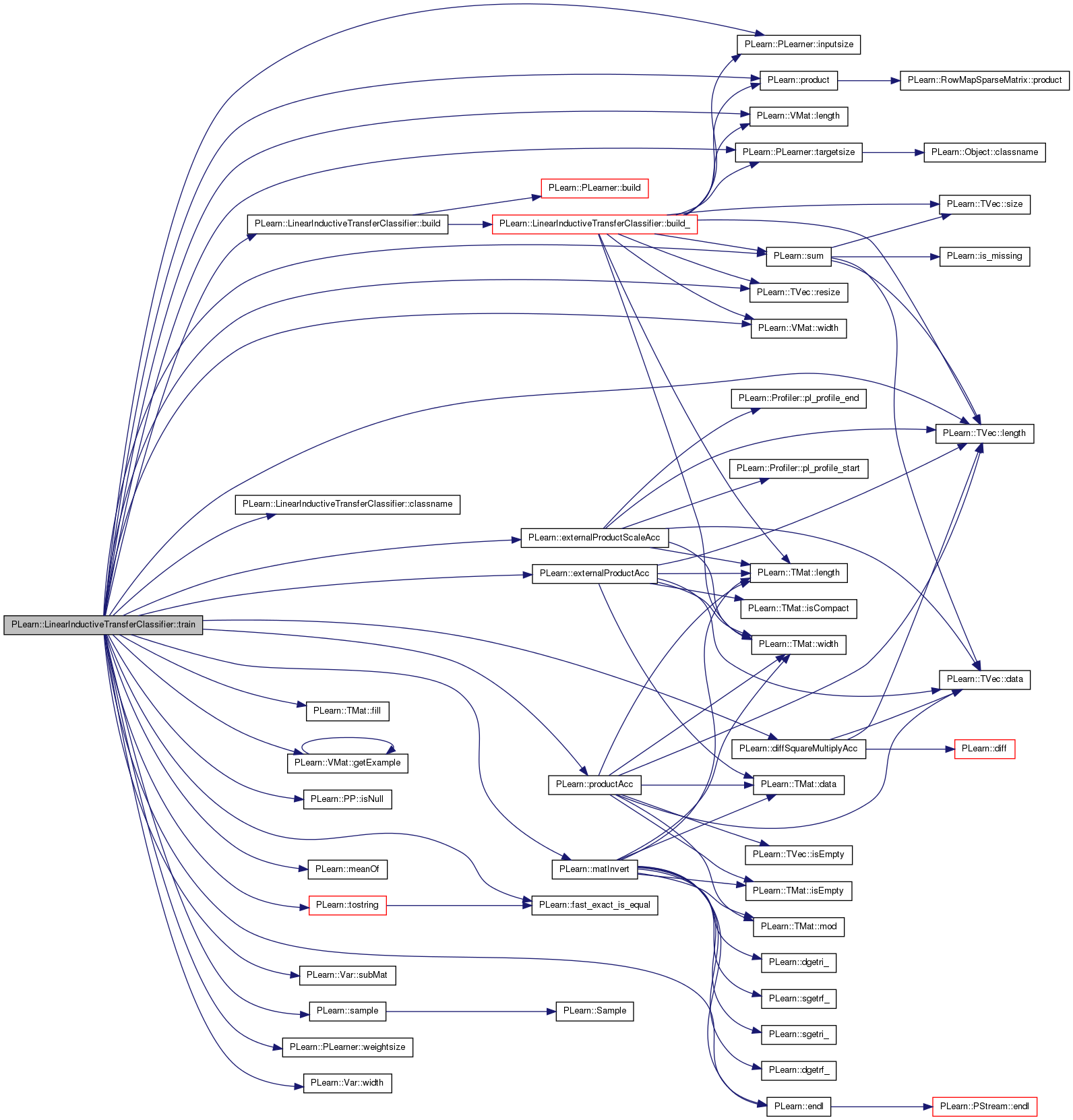

| void PLearn::LinearInductiveTransferClassifier::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 647 of file LinearInductiveTransferClassifier.cc.

References A, batch_size, build(), c, class_reps_var, classname(), PLearn::diffSquareMultiplyAcc(), PLearn::endl(), PLearn::externalProductAcc(), PLearn::externalProductScaleAcc(), f, PLearn::fast_exact_is_equal(), PLearn::TMat< T >::fill(), PLearn::VMat::getExample(), hidden_layer, i, input, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, invars, PLearn::PP< T >::isNull(), j, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::matInvert(), PLearn::meanOf(), model_type, multi_target_classifier, nhidden, PLearn::PLearner::nstages, optimizer, output_and_target_to_cost, params, PLERROR, PLearn::product(), PLearn::productAcc(), PLearn::PLearner::random_gen, rbm_learning_rate, rbm_nstages, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), s, PLearn::sample(), sigma_min, PLearn::PLearner::stage, PLearn::Var::subMat(), PLearn::sum(), target, PLearn::PLearner::targetsize(), test_costf, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_cost, PLearn::PLearner::verbosity, visible_layer, W, weight_decay, PLearn::PLearner::weightsize(), PLearn::VMat::width(), and PLearn::Var::width().

{

if(!train_set)

PLERROR("In LinearInductiveTransferClassifier::train, you did not setTrainingSet");

if(!train_stats)

PLERROR("In LinearInductiveTransferClassifier::train, you did not setTrainStatsCollector");

int l = train_set->length();

if(f.isNull()) // Net has not been properly built yet (because build was called before the learner had a proper training set)

build();

if(rbm_nstages>0 && stage == 0 && nstages > 0 && model_type == "nnet_discriminative_1_vs_all")

{

if(!visible_layer)

PLERROR("In LinearInductiveTransferClassifier::train(): "

"visible_layer must be provided.");

if(!hidden_layer)

PLERROR("In LinearInductiveTransferClassifier::train(): "

"hidden_layer must be provided.");

Vec input, target;

real example_weight;

real recons = 0;

RBMMatrixConnection* c = new RBMMatrixConnection();

PP<RBMMatrixConnection> layer_matrix_connections = c;

PP<RBMConnection> layer_connections = c;

hidden_layer->size = nhidden;

visible_layer->size = inputsize_;

layer_connections->up_size = inputsize_;

layer_connections->down_size = nhidden;

hidden_layer->random_gen = random_gen;

visible_layer->random_gen = random_gen;

layer_connections->random_gen = random_gen;

visible_layer->setLearningRate(rbm_learning_rate);

hidden_layer->setLearningRate(rbm_learning_rate);

layer_connections->setLearningRate(rbm_learning_rate);

hidden_layer->build();

visible_layer->build();

layer_connections->build();

Vec pos_visible,pos_hidden,neg_visible,neg_hidden;

pos_visible.resize(inputsize_);

pos_hidden.resize(nhidden);

neg_visible.resize(inputsize_);

neg_hidden.resize(nhidden);

for(int i = 0; i < rbm_nstages; i++)

{

for(int j=0; j<train_set->length(); j++)

{

train_set->getExample(j,input,target,example_weight);

pos_visible = input;

layer_connections->setAsUpInput( input );

hidden_layer->getAllActivations( layer_connections );

hidden_layer->computeExpectation();

hidden_layer->generateSample();

pos_hidden << hidden_layer->expectation;

layer_connections->setAsDownInput( hidden_layer->sample );

visible_layer->getAllActivations( layer_connections );

visible_layer->computeExpectation();

visible_layer->generateSample();

neg_visible = visible_layer->sample;

layer_connections->setAsUpInput( visible_layer->sample );

hidden_layer->getAllActivations( layer_connections );

hidden_layer->computeExpectation();

neg_hidden = hidden_layer->expectation;

// Compute reconstruction error

layer_connections->setAsDownInput( pos_hidden );

visible_layer->getAllActivations( layer_connections );

visible_layer->computeExpectation();

recons += visible_layer->fpropNLL(input);

// Update

visible_layer->update(pos_visible, neg_visible);

hidden_layer->update(pos_hidden, neg_hidden);

layer_connections->update(pos_hidden, pos_visible,

neg_hidden, neg_visible);

}

if(verbosity > 2)

cout << "Reconstruction error = " << recons/train_set->length() << endl;

recons = 0;

}

W->matValue.subMat(1,0,inputsize_,nhidden) << layer_matrix_connections->weights;

W->matValue(0) << hidden_layer->bias;

}

if(model_type == "discriminative" || model_type == "discriminative_1_vs_all" || model_type == "generative_0-1" || model_type == "nnet_discriminative_1_vs_all")

{

// number of samples seen by optimizer before each optimizer update

int nsamples = batch_size>0 ? batch_size : l;

Func paramf = Func(invars, training_cost); // parameterized function to optimize

Var totalcost = meanOf(train_set, paramf, nsamples);

if(optimizer)

{

optimizer->setToOptimize(params, totalcost);

optimizer->build();

}

else PLERROR("LinearInductiveTransferClassifier::train can't train without setting an optimizer first!");

// number of optimizer stages corresponding to one learner stage (one epoch)

int optstage_per_lstage = l/nsamples;

PP<ProgressBar> pb;

if(report_progress)

pb = new ProgressBar("Training " + classname() + " from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

int initial_stage = stage;

bool early_stop=false;

//displayFunction(paramf, true, false, 250);

while(stage<nstages && !early_stop)

{

optimizer->nstages = optstage_per_lstage;

train_stats->forget();

optimizer->early_stop = false;

optimizer->optimizeN(*train_stats);

// optimizer->verifyGradient(1e-4); // Uncomment if you want to check your new Var.

train_stats->finalize();

if(verbosity>2)

cout << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;

++stage;

if(pb)

pb->update(stage-initial_stage);

}

if(verbosity>1)

cout << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;

}

else

{

Mat ww(class_reps_var->width(),class_reps_var->width()); ww.fill(0);

Mat ww_inv(class_reps_var->width(),class_reps_var->width());

Mat xw(inputsize(),class_reps_var->width()); xw.fill(0);

Vec input, target;

real weight;

input.resize(train_set->inputsize());

target.resize(train_set->targetsize());

for(int i=0; i<train_set->length(); i++)

{

train_set->getExample(i,input,target,weight);

if(targetsize() == 1)

{

if(weightsize()>0)

{

externalProductScaleAcc(ww,class_reps_var->matValue((int)target[0]),class_reps_var->matValue((int)target[0]),weight);

externalProductScaleAcc(xw,input,class_reps_var->matValue((int)target[0]),weight);

}

else

{

externalProductAcc(ww,class_reps_var->matValue((int)target[0]),class_reps_var->matValue((int)target[0]));

externalProductAcc(xw,input,class_reps_var->matValue((int)target[0]));

}

}

else

for(int j=0; j<target.length(); j++)

{

if(fast_exact_is_equal(target[j], 1)){

if(weightsize()>0)

{

externalProductScaleAcc(ww,class_reps_var->matValue(j),class_reps_var->matValue(j),weight);

externalProductScaleAcc(xw,input,class_reps_var->matValue(j),weight);

}

else

{

externalProductAcc(ww,class_reps_var->matValue(j),class_reps_var->matValue(j));

externalProductAcc(xw,input,class_reps_var->matValue(j));

}

}

}

}

if(weight_decay > 0)

for(int i=0; i<ww.length(); i++)

ww(i,i) = ww(i,i) + weight_decay;

matInvert(ww,ww_inv);

A->value.fill(0);

productAcc(A->matValue, xw, ww_inv);

s->value.fill(0);

Vec sample(s->size());

Vec weights(inputsize());

real sum = 0;

for(int i=0; i<train_set->length(); i++)

{

train_set->getExample(i,input,target,weight);

if(targetsize() == 1)

{

product(weights,A->matValue,class_reps_var->matValue((int)target[0]));

if(weightsize()>0)

{

diffSquareMultiplyAcc(s->value,weights,input,weight);

sum += weight;

}

else

{

diffSquareMultiplyAcc(s->value,weights,input,real(1.0));

sum++;

}

}

else

for(int j=0; j<target.length(); j++)

{

if(fast_exact_is_equal(target[j], 1))

{

product(weights,A->matValue,class_reps_var->matValue(j));

if(weightsize()>0)

{

diffSquareMultiplyAcc(s->value,weights,input,weight);

sum += weight;

}

else

{

diffSquareMultiplyAcc(s->value,weights,input,real(1.0));

sum++;

}

}

}

}

s->value /= sum;

s->value += sigma_min;

if(verbosity > 2 && !multi_target_classifier)

{

Func paramf = Func(invars, training_cost);

paramf->recomputeParents();

real mean_cost = 0;

Vec cost(2);

Vec row(train_set->width());

for(int i=0; i<train_set->length(); i++)

{

train_set->getRow(i,row);

paramf->fprop(row.subVec(0,inputsize()+targetsize()),cost);

mean_cost += cost[1];

}

mean_cost /= train_set->length();

cout << "Train class error: " << mean_cost << endl;

}

}

// Hugo: I don't know why we have to do this?!?

output_and_target_to_cost->recomputeParents();

test_costf->recomputeParents();

}
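
The RBM pre-training loop at the start of train() follows the pattern of one-step contrastive divergence: a positive phase on the input, one sampled reconstruction, and an update from the difference of the two. The sketch below illustrates that pattern for binary units with the standard library only. `TinyRBM`, `cd1Step` and the other names are hypothetical and do not correspond to the PLearn RBM classes, whose update conventions are not shown in this listing.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Hypothetical stand-in for the RBM layers and their connection.
struct TinyRBM {
    Mat W;      // W[j][i] links hidden unit j to visible unit i
    Vec vbias;  // visible biases
    Vec hbias;  // hidden biases
    TinyRBM(std::size_t n_visible, std::size_t n_hidden)
        : W(n_hidden, Vec(n_visible, 0.0)),
          vbias(n_visible, 0.0), hbias(n_hidden, 0.0) {}
};

double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

Vec hiddenExpectation(const TinyRBM& m, const Vec& v)
{
    Vec h(m.hbias.size());
    for (std::size_t j = 0; j < h.size(); ++j) {
        double a = m.hbias[j];
        for (std::size_t i = 0; i < v.size(); ++i)
            a += m.W[j][i] * v[i];
        h[j] = sigmoid(a);
    }
    return h;
}

Vec visibleExpectation(const TinyRBM& m, const Vec& h)
{
    Vec v(m.vbias.size());
    for (std::size_t i = 0; i < v.size(); ++i) {
        double a = m.vbias[i];
        for (std::size_t j = 0; j < h.size(); ++j)
            a += m.W[j][i] * h[j];
        v[i] = sigmoid(a);
    }
    return v;
}

Vec sampleBernoulli(const Vec& p, std::mt19937& rng)
{
    std::uniform_real_distribution<double> unif(0.0, 1.0);
    Vec s(p.size());
    for (std::size_t k = 0; k < p.size(); ++k)
        s[k] = unif(rng) < p[k] ? 1.0 : 0.0;
    return s;
}

// One contrastive-divergence step on a single example v0.
void cd1Step(TinyRBM& m, const Vec& v0, double lr, std::mt19937& rng)
{
    assert(v0.size() == m.vbias.size());
    const Vec h0 = hiddenExpectation(m, v0);         // positive hidden
    const Vec h0_sample = sampleBernoulli(h0, rng);  // sampled hidden state
    const Vec v1 = sampleBernoulli(visibleExpectation(m, h0_sample), rng);
    const Vec h1 = hiddenExpectation(m, v1);         // negative hidden

    for (std::size_t j = 0; j < h0.size(); ++j) {
        for (std::size_t i = 0; i < v0.size(); ++i)
            m.W[j][i] += lr * (h0[j] * v0[i] - h1[j] * v1[i]);
        m.hbias[j] += lr * (h0[j] - h1[j]);
    }
    for (std::size_t i = 0; i < v0.size(); ++i)
        m.vbias[i] += lr * (v0[i] - v1[i]);
}
```

As in the listing, the hidden expectations (not the samples) enter the update, while the negative visible vector is a sample. After pre-training, train() copies the learned connection weights and hidden biases into W.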

Reimplemented from PLearn::PLearner.

Definition at line 159 of file LinearInductiveTransferClassifier.h.

Var PLearn::LinearInductiveTransferClassifier::A [protected] |

Linear classifier parameters.

Definition at line 189 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildPenalties(), forget(), makeDeepCopyFromShallowCopy(), and train().

Batch size.

Definition at line 70 of file LinearInductiveTransferClassifier.h.

Referenced by declareOptions(), and train().
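
To make the effect of this option concrete, the arithmetic used in train() can be sketched as follows. `BatchPlan` and `planBatches` are hypothetical names introduced for illustration; the two expressions mirror `nsamples` and `optstage_per_lstage` in the train() listing.

```cpp
#include <cassert>

// Hypothetical helper: how batch_size translates into optimizer updates.
struct BatchPlan {
    int nsamples;           // samples seen before each optimizer update
    int updates_per_epoch;  // optimizer stages per learner stage (one epoch)
};

BatchPlan planBatches(int batch_size, int train_length)
{
    assert(train_length > 0);
    // batch_size == 0 means "use the whole training set".
    const int nsamples = batch_size > 0 ? batch_size : train_length;
    // Integer division: a trailing partial batch is not counted.
    return BatchPlan{nsamples, train_length / nsamples};
}
```

With 1000 training examples, a batch size of 0 gives one update per epoch and a batch size of 32 gives 31. In this sketch, a batch size larger than the training set yields zero updates per epoch because of the integer division.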

Class representations.

Definition at line 66 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), declareOptions(), forget(), and makeDeepCopyFromShallowCopy().

Class representations.

Definition at line 193 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Costs variables.

Definition at line 195 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildFuncs(), and makeDeepCopyFromShallowCopy().

Indication that the targets seen in the training set should not be considered when tagging a new set.

Definition at line 84 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and declareOptions().

Func PLearn::LinearInductiveTransferClassifier::f [mutable, protected] |

Function: input -> output.

Definition at line 216 of file LinearInductiveTransferClassifier.h.

Referenced by buildFuncs(), computeOutput(), makeDeepCopyFromShallowCopy(), and train().

Hidden layer of the RBM.

Definition at line 102 of file LinearInductiveTransferClassifier.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

The method used to initialize the weights.

Definition at line 76 of file LinearInductiveTransferClassifier.h.

Referenced by declareOptions(), and fillWeights().

Var PLearn::LinearInductiveTransferClassifier::input [protected] |

Input variable.

Definition at line 173 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Input variables.

Definition at line 209 of file LinearInductiveTransferClassifier.h.

Referenced by buildFuncs(), makeDeepCopyFromShallowCopy(), and train().

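Model type.

Choose between "discriminative" (multiclass classifier), "discriminative_1_vs_all" (1 vs all multitask classifier), "generative" (Gaussian input), "generative_0-1" ([0,1] input), and "nnet_discriminative_1_vs_all" ([0,1] input).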

Definition at line 81 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildPenalties(), computeCostsFromOutputs(), computeOutputAndCosts(), declareOptions(), forget(), getTestCostNames(), and train().

Indication that the classifier works with multiple targets, possibly ON simultaneously.

Definition at line 89 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), declareOptions(), getTestCostNames(), and train().

Costs variables for new tasks.

Definition at line 197 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

New output variable.

Definition at line 179 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

New target variable.

Definition at line 185 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Number of hidden units for neural network.

Definition at line 94 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), declareOptions(), forget(), and train().

Number of outputs for the neural network.

Definition at line 171 of file LinearInductiveTransferClassifier.h.

Referenced by build_().

Optimizer of the neural network.

Definition at line 68 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::LinearInductiveTransferClassifier::output [protected] |

Output variable.

Definition at line 175 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and outputsize().

Func PLearn::LinearInductiveTransferClassifier::output_and_target_to_cost [mutable, protected] |

Function: output & target -> cost.

Definition at line 220 of file LinearInductiveTransferClassifier.h.

Referenced by buildFuncs(), computeCostsFromOutputs(), makeDeepCopyFromShallowCopy(), and train().

Parameters.

Definition at line 199 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Parameters vec.

Definition at line 201 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

Penalties variables.

Definition at line 203 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildPenalties(), and makeDeepCopyFromShallowCopy().

Penalty to use on the weights for weight decay.

Definition at line 74 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildPenalties(), and declareOptions().
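
The three penalty types named in declareOptions() ("L1", "L1_square", "L2_square") can be illustrated on a flat weight vector. This is a minimal sketch with a hypothetical `penalty` function. How buildPenalties() combines the result with weight_decay is not shown in this listing, so no scaling is applied here.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: value of each penalty type on a weight vector.
double penalty(const std::vector<double>& w, const std::string& type)
{
    double l1 = 0.0;     // sum of absolute values
    double l2_sq = 0.0;  // sum of squares
    for (double v : w) {
        l1 += std::fabs(v);
        l2_sq += v * v;
    }
    if (type == "L1")        return l1;
    if (type == "L1_square") return l1 * l1;
    if (type == "L2_square") return l2_sq;
    throw std::invalid_argument("unknown penalty type: " + type);
}
```

For the weights (1, -2, 2) the L1 norm is 5, its square is 25, and the squared L2 norm is 9.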

Learning rate for the RBM.

Definition at line 98 of file LinearInductiveTransferClassifier.h.

Referenced by declareOptions(), and train().

Number of RBM training stages (passes over the training set) used to initialize the hidden layer weights.

Definition at line 96 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), declareOptions(), and train().

Var PLearn::LinearInductiveTransferClassifier::s [protected] |

Linear classifier scale parameter.

Definition at line 191 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), forget(), makeDeepCopyFromShallowCopy(), and train().

Sample weight variable.

Definition at line 187 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildTargetAndWeight(), and makeDeepCopyFromShallowCopy().

Vec of seen targets in the training set.

Definition at line 211 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), computeCostsFromOutputs(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Minimum variance for all coordinates, which is added to the maximum likelihood estimates.

Definition at line 92 of file LinearInductiveTransferClassifier.h.

Referenced by declareOptions(), and train().
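
For the "generative" model, train() estimates one variance per input coordinate and then adds sigma_min. The sketch below shows that computation on plain vectors, assuming that diffSquareMultiplyAcc accumulates weighted squared differences, as its name suggests. `estimateVariance` is a hypothetical helper, and `predicted[n]` plays the role of the product of A with the class representation of example n.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical helper: weighted per-coordinate variance plus a floor.
Vec estimateVariance(const std::vector<Vec>& inputs,
                     const std::vector<Vec>& predicted,
                     const Vec& example_weights,
                     double sigma_min)
{
    assert(!inputs.empty());
    assert(inputs.size() == predicted.size());
    assert(inputs.size() == example_weights.size());

    Vec s(inputs[0].size(), 0.0);
    double sum = 0.0;  // total example weight
    for (std::size_t n = 0; n < inputs.size(); ++n) {
        for (std::size_t i = 0; i < s.size(); ++i) {
            const double diff = predicted[n][i] - inputs[n][i];
            s[i] += example_weights[n] * diff * diff;
        }
        sum += example_weights[n];
    }
    assert(sum > 0.0);
    for (double& v : s)
        v = v / sum + sigma_min;  // maximum likelihood estimate plus floor
    return s;
}
```

A coordinate that is reconstructed perfectly on the training set still receives a variance of sigma_min, which keeps the scale parameter s strictly positive when sigma_min is positive.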

Sup output variable.

Definition at line 177 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Func PLearn::LinearInductiveTransferClassifier::sup_output_and_target_to_cost [mutable, protected] |

Function: output & target -> cost.

Definition at line 224 of file LinearInductiveTransferClassifier.h.

Referenced by buildFuncs(), computeCostsFromOutputs(), and makeDeepCopyFromShallowCopy().

Sup target variable.

Definition at line 183 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Func PLearn::LinearInductiveTransferClassifier::sup_test_costf [mutable, protected] |

Function: input & target -> output & test_costs.

Definition at line 222 of file LinearInductiveTransferClassifier.h.

Referenced by buildFuncs(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Var PLearn::LinearInductiveTransferClassifier::target [protected] |

Target variable.

Definition at line 181 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildTargetAndWeight(), makeDeepCopyFromShallowCopy(), and train().

Func PLearn::LinearInductiveTransferClassifier::test_costf [mutable, protected] |

Function: input & target -> output & test_costs.

Definition at line 218 of file LinearInductiveTransferClassifier.h.

Referenced by buildFuncs(), computeOutputAndCosts(), makeDeepCopyFromShallowCopy(), and train().

Test costs variable.

Definition at line 207 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildFuncs(), and makeDeepCopyFromShallowCopy().

Training cost variable.

Definition at line 205 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

Vec of unseen targets in the training set.

Definition at line 213 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

Indication that a bias should be used for weights prediction.

Definition at line 86 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), and declareOptions().

Visible layer of the RBM.

Definition at line 100 of file LinearInductiveTransferClassifier.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Var PLearn::LinearInductiveTransferClassifier::W [protected] |

Input to hidden layer weights.

Definition at line 228 of file LinearInductiveTransferClassifier.h.

Referenced by build_(), buildPenalties(), forget(), makeDeepCopyFromShallowCopy(), and train().

Weight decay for all weights.

Definition at line 72 of file LinearInductiveTransferClassifier.h.

Referenced by buildPenalties(), declareOptions(), and train().
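
In the "generative" branch of train(), weight_decay is added to the diagonal of the accumulated matrix ww before inversion, so that A is obtained as xw multiplied by the inverse of (ww + weight_decay * I). The following sketch reproduces this closed form for class representations of width 2, where the inverse can be written explicitly. `fitGenerative` is a hypothetical helper; a single integer target per example and unit example weights are assumed.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Hypothetical helper: A = xw * inverse(ww + weight_decay * I), with
//   ww = sum_n r_n r_n^T  and  xw = sum_n x_n r_n^T,
// where r_n is the (2-dimensional) representation of example n's class.
Mat fitGenerative(const Mat& inputs, const std::vector<int>& targets,
                  const Mat& class_reps, double weight_decay)
{
    assert(!inputs.empty() && inputs.size() == targets.size());
    const std::size_t d = inputs[0].size();

    double ww[2][2] = {{0.0, 0.0}, {0.0, 0.0}};
    Mat xw(d, Vec(2, 0.0));
    for (std::size_t n = 0; n < inputs.size(); ++n) {
        const Vec& r = class_reps.at(static_cast<std::size_t>(targets[n]));
        assert(r.size() == 2);
        for (int a = 0; a < 2; ++a)
            for (int b = 0; b < 2; ++b)
                ww[a][b] += r[a] * r[b];
        for (std::size_t i = 0; i < d; ++i)
            for (int b = 0; b < 2; ++b)
                xw[i][b] += inputs[n][i] * r[b];
    }

    // Ridge term on the diagonal.
    ww[0][0] += weight_decay;
    ww[1][1] += weight_decay;

    // Explicit 2x2 inverse.
    const double det = ww[0][0] * ww[1][1] - ww[0][1] * ww[1][0];
    assert(std::fabs(det) > 1e-12);
    const double inv[2][2] = {{ ww[1][1] / det, -ww[0][1] / det},
                              {-ww[1][0] / det,  ww[0][0] / det}};

    Mat A(d, Vec(2, 0.0));
    for (std::size_t i = 0; i < d; ++i)
        for (int b = 0; b < 2; ++b)
            for (int a = 0; a < 2; ++a)
                A[i][b] += xw[i][a] * inv[a][b];
    return A;
}
```

With one-hot class representations and no weight decay, each column of A reduces to the mean input of the corresponding class. A positive weight_decay shrinks those columns toward zero and keeps the matrix invertible when some class is absent from the training set.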

1.7.4
