PLearn 0.1

#include <WeightedLogGaussian.h>

Public Member Functions | |

| WeightedLogGaussian () | |

| Default constructor for persistence. | |

| WeightedLogGaussian (bool training_mode, int class_label, Var input_index, Var mu, Var sigma, MoleculeTemplate templates) | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual WeightedLogGaussian * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | recomputeSize (int &l, int &w) const |

| Recomputes the length l and width w that this variable should have, according to its parent variables. | |

| virtual void | fprop () |

| compute output given input | |

| virtual void | bprop () |

| virtual void | symbolicBprop () |

| compute a piece of new Var graph that represents the symbolic derivative of this Var | |

| Var & | input_index () |

| Var & | mu () |

| Var & | sigma () |

Static Public Member Functions | |

| static string | _classname_ () |

| WeightedLogGaussian. | |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| int | class_label |

| bool | training_mode |

| VMat | test_set |

| MoleculeTemplate | current_template |

| PMolecule | molecule |

| Mat | W_lp |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | build_ () |

| Object-specific post-constructor. | |

Private Types | |

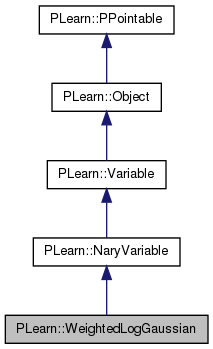

| typedef NaryVariable | inherited |

Variable that computes log P(X | C_k) for the MoleculeClassifier.

Definition at line 56 of file WeightedLogGaussian.h.

typedef NaryVariable PLearn::WeightedLogGaussian::inherited [private] |

Reimplemented from PLearn::NaryVariable.

Definition at line 58 of file WeightedLogGaussian.h.

| PLearn::WeightedLogGaussian::WeightedLogGaussian | ( | ) | [inline] |

| PLearn::WeightedLogGaussian::WeightedLogGaussian | ( | bool | training_mode, |

| int | class_label, | ||

| Var | input_index, | ||

| Var | mu, | ||

| Var | sigma, | ||

| MoleculeTemplate | templates | ||

| ) |

Definition at line 59 of file WeightedLogGaussian.cc.

References build_(), class_label, current_template, and training_mode.

    : inherited(input_index & mu & sigma, 1, 1)
    {
        build_();
        class_label = the_class_label;
        current_template = the_template;
        training_mode = the_training_mode;
    }

| string PLearn::WeightedLogGaussian::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::NaryVariable.

Definition at line 57 of file WeightedLogGaussian.cc.

| OptionList & PLearn::WeightedLogGaussian::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::NaryVariable.

Definition at line 57 of file WeightedLogGaussian.cc.

| RemoteMethodMap & PLearn::WeightedLogGaussian::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::NaryVariable.

Definition at line 57 of file WeightedLogGaussian.cc.

| bool PLearn::WeightedLogGaussian::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::NaryVariable.

Definition at line 57 of file WeightedLogGaussian.cc.

| Object * PLearn::WeightedLogGaussian::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file WeightedLogGaussian.cc.

| void PLearn::WeightedLogGaussian::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::NaryVariable.

Definition at line 57 of file WeightedLogGaussian.cc.

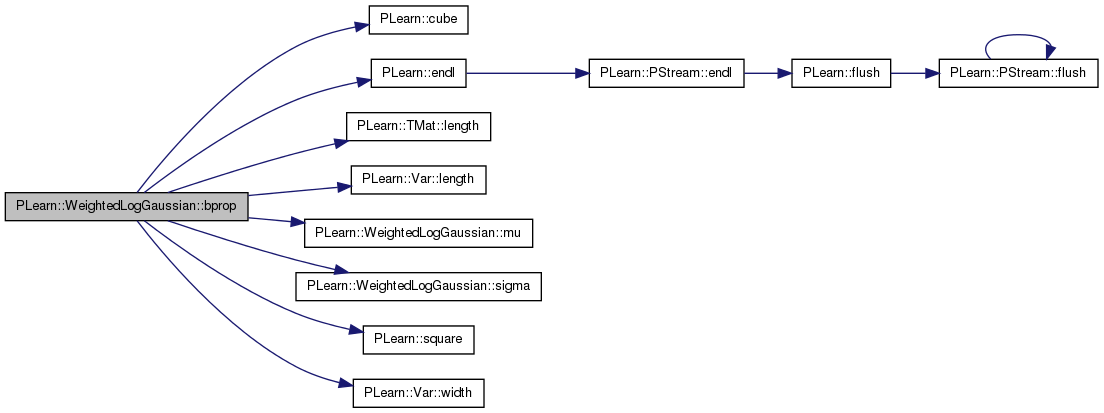

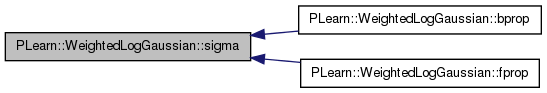

| void PLearn::WeightedLogGaussian::bprop | ( | ) | [virtual] |

Implements PLearn::Variable.

Definition at line 142 of file WeightedLogGaussian.cc.

References PLearn::cube(), PLearn::endl(), PLearn::Variable::gradientdata, i, j, PLearn::TMat< T >::length(), PLearn::Var::length(), m, PLearn::Variable::matGradient, PLearn::Variable::matValue, molecule, mu(), n, sigma(), PLearn::square(), W_lp, and PLearn::Var::width().

    {
        int n = mu()->length() ;
        int p = mu()->width() ;
        // Mat W_lp ;
        Mat input ;
        // int training_index = input_index()->value[0] ;
        input = (molecule)->chem ;
        int m = W_lp.length() ;
        // cout << "MATGRDIENT" << class_label<< endl ;
        for (int i=0 ; i<n ; ++i) {
            for (int k=0 ; k<p ; ++k) {
                sigma()->matGradient[i][k] -= gradientdata[0] / sigma()->matValue[i][k] ;
                for (int j=0 ; j<m ; ++j) {
                    mu()->matGradient[i][k] += gradientdata[0] * W_lp[j][i] * (input[j][k] - mu()->matValue[i][k]) / square(sigma()->matValue[i][k]) ;
                    sigma()->matGradient[i][k] += gradientdata[0] * W_lp[j][i] * square(input[j][k] - mu()->matValue[i][k]) / cube(sigma()->matValue[i][k]) ;
                    if (fabs(sigma()->matGradient[i][k]) > 5) {
                        // char buf[20] ;
                        // fprintf(buf , "error-%d-%d",, ) ;
                        // FILE * fo = fopen("error.txt","wt") ;
                        // fprintf(fo , "gradientdata[0] = %lf \n" , gradientdata[0]) ;
                        // fprintf(fo , " W_lp[training_index]->matValue[j][i]= %lf \n" , W_lp[training_index]->matValue[j][i]) ;
                        // fprintf(fo , " input[j][k] = %lf \n" , input[j][k]) ;
                        // fprintf(fo , " mu()->matValue[i][k] = %lf \n" , mu()->matValue[i][k]) ;
                        // printf(" sigma()->matValue[i][k] = %lf \n" , sigma()->matValue[i][k]) ;
                        // fclose(fo) ;
                        // exit(1) ;
                    }
                }
                // cout << sigma()->matValue[i][k] << endl ;
            }
        }
        cout << "Grad Data "<< gradientdata[0] << endl ;
        // cout << "sigmagrad " <<sigma()->matGradient << endl ;
        // sigma()->matGradiant =
    }
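The gradient updates in the listing above can be checked outside PLearn. The following is a minimal sketch, not the library's code: `Matrix`, `Gradients`, `weightedLogGaussian` and `weightedLogGaussianGrad` are hypothetical names introduced here, plain `std::vector` stands in for PLearn's `Mat`, and `outGrad` plays the role of `gradientdata[0]`. It assumes `W` has one row per input row (`m`) and one column per row of `mu` (`n`), as the indexing `W_lp[j][i]` suggests.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: a row-major matrix of doubles.
using Matrix = std::vector<std::vector<double>>;

// Forward value with the same loop structure as fprop():
//   -0.5 * sum_{i,j,k} W[j][i] * ((x[j][k] - mu[i][k]) / sigma[i][k])^2
//   - sum_{i,k} log(sigma[i][k])
double weightedLogGaussian(const Matrix& W, const Matrix& x,
                           const Matrix& mu, const Matrix& sigma)
{
    const std::size_t n = mu.size(), p = mu[0].size(), m = W.size();
    assert(x.size() == m && sigma.size() == n);
    double quad = 0.0, logTerm = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < p; ++k) {
            logTerm += std::log(sigma[i][k]);
            for (std::size_t j = 0; j < m; ++j) {
                const double d = (x[j][k] - mu[i][k]) / sigma[i][k];
                quad += W[j][i] * d * d;
            }
        }
    }
    return -0.5 * quad - logTerm;
}

struct Gradients {
    Matrix dMu;     // derivative of the output with respect to mu
    Matrix dSigma;  // derivative of the output with respect to sigma
};

// Gradient accumulation with the same three updates as bprop(),
// starting from zero-initialised gradient matrices.
Gradients weightedLogGaussianGrad(const Matrix& W, const Matrix& x,
                                  const Matrix& mu, const Matrix& sigma,
                                  double outGrad)
{
    const std::size_t n = mu.size(), p = mu[0].size(), m = W.size();
    assert(x.size() == m && sigma.size() == n);
    Gradients g{Matrix(n, std::vector<double>(p, 0.0)),
                Matrix(n, std::vector<double>(p, 0.0))};
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < p; ++k) {
            const double s = sigma[i][k];
            g.dSigma[i][k] -= outGrad / s;  // from the -log(sigma) term
            for (std::size_t j = 0; j < m; ++j) {
                const double d = x[j][k] - mu[i][k];
                g.dMu[i][k]    += outGrad * W[j][i] * d / (s * s);
                g.dSigma[i][k] += outGrad * W[j][i] * d * d / (s * s * s);
            }
        }
    }
    return g;
}
```

Comparing `weightedLogGaussianGrad(...)` with a central finite difference of `weightedLogGaussian(...)` on a small example is a quick way to confirm that the three update lines agree with the forward formula.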

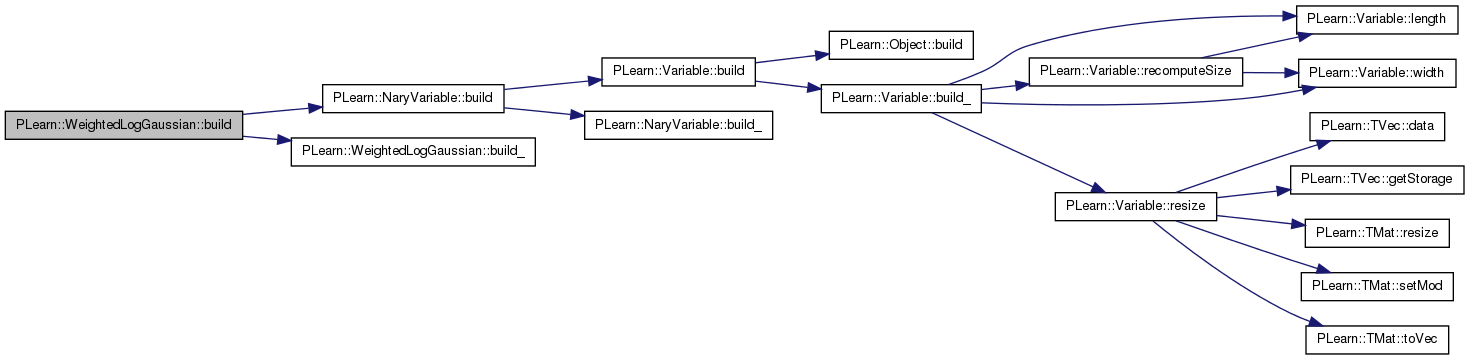

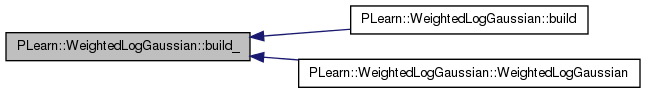

| void PLearn::WeightedLogGaussian::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again later, after some option fields have been modified to change the "architecture" of the object.

Reimplemented from PLearn::NaryVariable.

Definition at line 69 of file WeightedLogGaussian.cc.

References PLearn::NaryVariable::build(), and build_().

    {
        inherited::build();
        build_();
    }

| void PLearn::WeightedLogGaussian::build_ | ( | ) | [protected] |

Object-specific post-constructor.

This method should be redefined in subclasses and do the actual building of the object according to previously set option fields. Constructors can just set option fields, and then call build_. This method is NOT virtual, and will typically be called only from three places: a constructor, the public virtual build() method, and possibly the public virtual read method (which calls its parent's read). build_() can assume that its parent's build_() has already been called.

Reimplemented from PLearn::NaryVariable.

Definition at line 76 of file WeightedLogGaussian.cc.

Referenced by build(), and WeightedLogGaussian().

{

}
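The build()/build_() convention described above can be illustrated without PLearn. This is a minimal sketch under stated assumptions: `Base`, `Derived`, `size`, `buf` and `log` are hypothetical names, not PLearn types or members. It shows a non-virtual `build_()` per class, a virtual `build()` that first delegates to the parent, and constructors that call only their own `build_()`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical base class: it imitates the convention, it is not a PLearn class.
class Base {
public:
    Base() { build_(); }
    virtual ~Base() = default;

    // Public virtual entry point; may be called again after options change.
    virtual void build() { build_(); }

    std::vector<std::string> log;  // records the order of build_() calls

private:
    // Non-virtual, class-specific building step.
    void build_() { log.push_back("Base::build_"); }
};

class Derived : public Base {
    typedef Base inherited;

public:
    int size;                 // an "option field" set by the caller
    std::vector<double> buf;  // state derived from the option fields

    // The constructor sets option fields, then calls its own build_();
    // the parent's build_() has already run in the Base constructor.
    explicit Derived(int the_size) : size(the_size) { build_(); }

    void build() override {
        inherited::build();  // parent first
        build_();            // then this class
    }

private:
    void build_() {
        assert(size >= 0);
        buf.assign(static_cast<std::size_t>(size), 0.0);
        log.push_back("Derived::build_");
    }
};
```

With these definitions, constructing `Derived d(3)`, setting `d.size = 5` and calling `d.build()` resizes `d.buf` to five elements, and `d.log` shows the parent's `build_()` running before the derived one each time.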

| string PLearn::WeightedLogGaussian::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file WeightedLogGaussian.cc.

| static const PPath& PLearn::WeightedLogGaussian::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::NaryVariable.

Definition at line 69 of file WeightedLogGaussian.h.

{ return varray[0]; }

| WeightedLogGaussian * PLearn::WeightedLogGaussian::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::NaryVariable.

Definition at line 57 of file WeightedLogGaussian.cc.

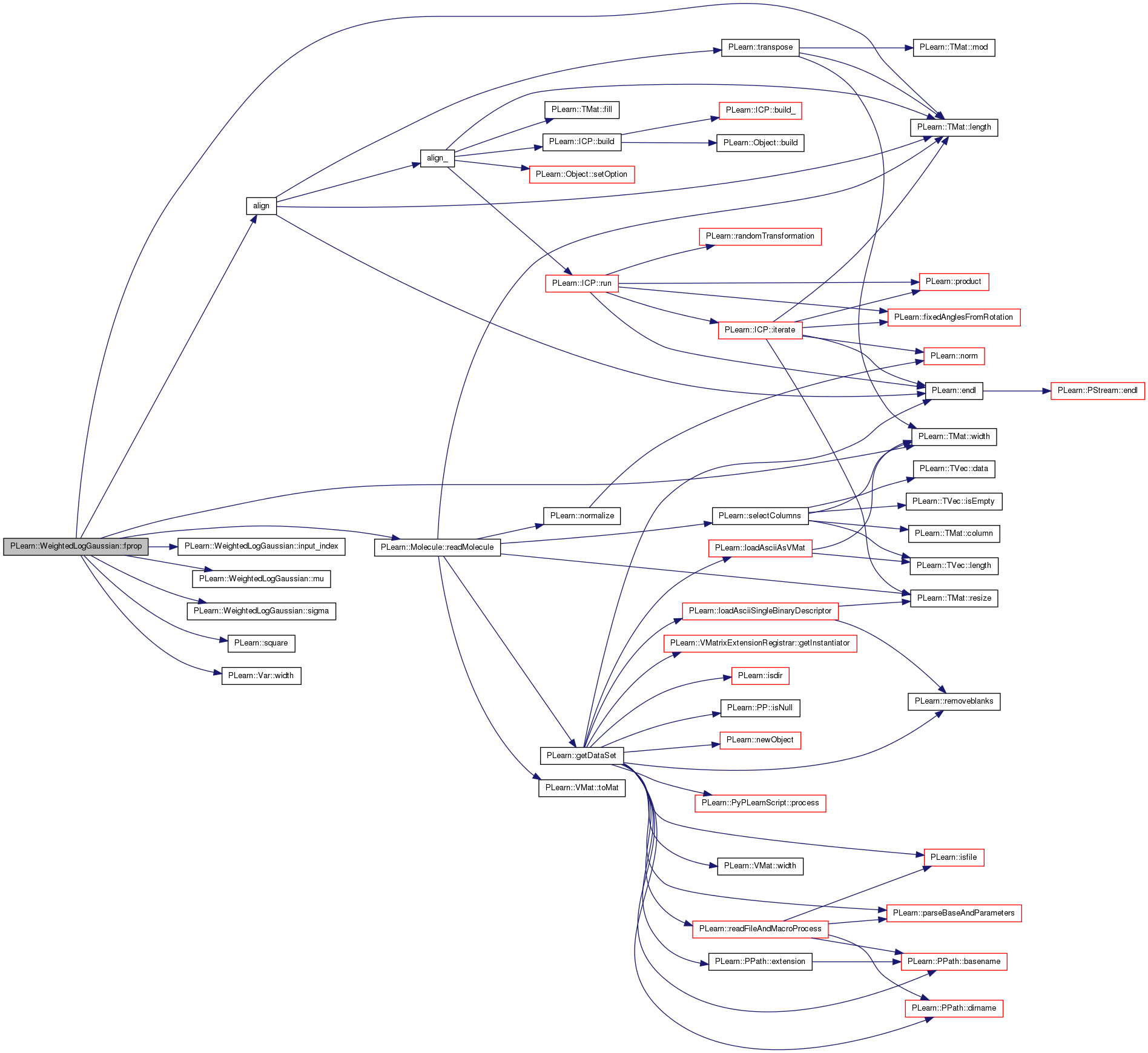

| void PLearn::WeightedLogGaussian::fprop | ( | ) | [virtual] |

compute output given input

Implements PLearn::Variable.

Definition at line 89 of file WeightedLogGaussian.cc.

References align(), PLearn::Molecule::chem, current_template, i, input_index(), j, PLearn::TMat< T >::length(), m, PLearn::Variable::matValue, molecule, mu(), n, pl_log, PLERROR, PLearn::Molecule::readMolecule(), sigma(), PLearn::square(), test_set, training_mode, PLearn::Variable::value, PLearn::Variable::valuedata, PLearn::Molecule::vrml_file, W_lp, PLearn::TMat< T >::width(), and PLearn::Var::width().

    {
        real ret = 0.0 ;
        int p = mu()->width() ;
        int training_index = input_index()->value[0] ;
        if (! training_mode ) // read in the file only once
        {
            string filename = test_set->getValString(0, input_index()->value[0]) ;
            molecule = Molecule::readMolecule(filename) ;
            molecule->vrml_file = filename+".vrml" ;
        }
        ::align(molecule->vrml_file,molecule->chem,current_template->vrml_file , current_template->chem, W_lp) ;
        int n = W_lp.width() ;
        int m = W_lp.length() ;
        // printf("(%d %d) -> length allignment = (%d %d) \n" ,training_index , class_label,n,m) ;
        Mat input ;
        input = molecule->chem ;
        for (int i=0 ; i<n ; ++i) {
            for (int j=0 ; j<m ; ++j) {
                for (int k=0 ; k<p ; ++k) {
                    ret += W_lp[j][i] * square((input[j][k] - mu()->matValue[i][k]))/square(sigma()->matValue[i][k]) ;
                }
            }
        }
        ret *= - 0.5 ;
        for (int i=0 ; i<n ; ++i) {
            for (int k=0 ; k<p ; ++k) {
                ret -= pl_log(sigma()->matValue[i][k]) ;
                // if (sigma()->matValue[i][k] > 20){
                // cout << sigma()->matValue[i][k] ;
                // cout << ret ;
                // }
            }
        }
        if (isnan(ret))
            PLERROR("NAN") ;
        valuedata[0] = ret ;
    }
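Read directly off the loops in the listing above, the scalar written to `valuedata[0]` can be summarised as follows. The symbols are notation introduced here, not names from the source: $W$ is `W_lp` (with $m$ rows and $n$ columns), $x$ is `molecule->chem`, and $\mu$, $\sigma$ are the `matValue` matrices of `mu()` and `sigma()`, each with $p$ columns.

```latex
\[
\mathrm{value}
  = -\frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{m} \sum_{k=1}^{p}
      W_{ji}\,\frac{\left(x_{jk} - \mu_{ik}\right)^{2}}{\sigma_{ik}^{2}}
    \;-\; \sum_{i=1}^{n} \sum_{k=1}^{p} \log \sigma_{ik}
\]
```

Two details follow from the code as shown: the additive constant $-\tfrac{1}{2}\log 2\pi$ of a Gaussian log-density is not included, and the $\log \sigma_{ik}$ term is summed once per $(i, k)$ pair rather than being weighted by $W$.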

| OptionList & PLearn::WeightedLogGaussian::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file WeightedLogGaussian.cc.

| OptionMap & PLearn::WeightedLogGaussian::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file WeightedLogGaussian.cc.

| RemoteMethodMap & PLearn::WeightedLogGaussian::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file WeightedLogGaussian.cc.

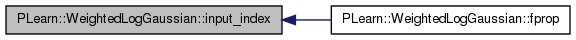

| Var& PLearn::WeightedLogGaussian::input_index | ( | ) | [inline] |

Definition at line 78 of file WeightedLogGaussian.h.

Referenced by fprop().

{ return varray[0]; }

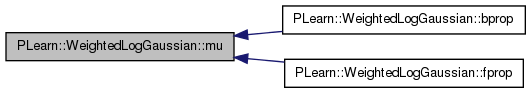

| Var& PLearn::WeightedLogGaussian::mu | ( | ) | [inline] |

Definition at line 79 of file WeightedLogGaussian.h.

Referenced by bprop(), and fprop().

{ return varray[1]; }

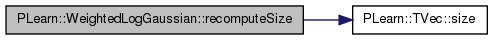

| void PLearn::WeightedLogGaussian::recomputeSize | ( | int & | l, |

| int & | w | ||

| ) | const [virtual] |

Recomputes the length l and width w that this variable should have, according to its parent variables.

This is used, for example, by sizeprop(). The default version simply returns the current dimensions, so be sure to override it in subclasses if that is not appropriate.

Reimplemented from PLearn::Variable.

Definition at line 80 of file WeightedLogGaussian.cc.

References PLearn::TVec< T >::size(), and PLearn::NaryVariable::varray.

| Var& PLearn::WeightedLogGaussian::sigma | ( | ) | [inline] |

Definition at line 80 of file WeightedLogGaussian.h.

Referenced by bprop(), and fprop().

{ return varray[2]; }

| void PLearn::WeightedLogGaussian::symbolicBprop | ( | ) | [virtual] |

compute a piece of new Var graph that represents the symbolic derivative of this Var

Reimplemented from PLearn::Variable.

Definition at line 189 of file WeightedLogGaussian.cc.

{

// input->accg(g * (input<=threshold));

}

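StaticInitializer PLearn::WeightedLogGaussian::_static_initializer_ [static]

Reimplemented from PLearn::NaryVariable.

Definition at line 69 of file WeightedLogGaussian.h.

int PLearn::WeightedLogGaussian::class_label

Definition at line 65 of file WeightedLogGaussian.h.

Referenced by WeightedLogGaussian().

MoleculeTemplate PLearn::WeightedLogGaussian::current_template

Definition at line 82 of file WeightedLogGaussian.h.

Referenced by fprop(), and WeightedLogGaussian().

PMolecule PLearn::WeightedLogGaussian::molecule

Definition at line 83 of file WeightedLogGaussian.h.

VMat PLearn::WeightedLogGaussian::test_set

Definition at line 67 of file WeightedLogGaussian.h.

Referenced by fprop().

bool PLearn::WeightedLogGaussian::training_mode

Definition at line 66 of file WeightedLogGaussian.h.

Referenced by fprop(), and WeightedLogGaussian().

Mat PLearn::WeightedLogGaussian::W_lp

Definition at line 84 of file WeightedLogGaussian.h.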

1.7.4
