| Learning about images |

Suggested readings

-

[Background]

Some Informational Aspects Of Visual Perception. F. Attneave. Psychological Review 1954. [pdf]

-

[Background]

Possible Principles Underlying the Transformations of Sensory Messages. H.B. Barlow. Sensory communication 1961. [pdf]

-

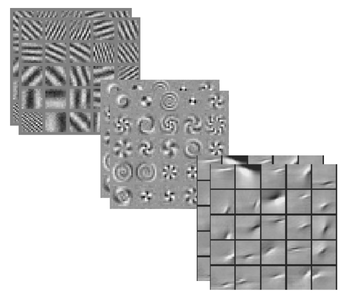

[Background] Emergence of Simple-Cell Receptive Field Properties by Learning a Sparse Code for Natural Images. B.A. Olshausen, D.J. Field. Nature, 1996. [pdf]

-

[Background] The `Independent Components' of Natural Scenes are Edge Filters. T. Bell, T. Sejnowski. Vis. Res. 1997 [pdf]

-

[Background]

Independent Component Analysis: Algorithms and Applications. A. Hyvarinen, E. Oja. Neural Computation 2000. [pdf]

-

[Background] Sparse Coding. P. Foldiak, D. Endres. Scholarpedia. [link]

-

Do We Know What the Early Visual System Does? M. Carrandini, et al. The Journal of Neuroscience 2005. [pdf].

-

Slow Feature Analysis: Unsupervised Learning of Invariances. L. Wiskott, T. Sejnowski. Neural Computation 2002. [pdf]

-

Natural Images, Gaussian Mixtures and Dead Leaves. D. Zoran, Y. Weiss. NIPS 2012 [pdf]

|

|

Date

|

Topic

|

Readings

|

Materials

|

|

Jan. 22

|

Lecture: Introduction

|

|

notes

|

|

Jan. 25

|

Lecture: Review of basic statistics, linear algebra

|

|

notes

Python logsumexp implementation.

|

|

Jan. 29

|

Lecture: Fourier representation and frequency analysis

|

- useful reading: textbook chapters 2, 20.

|

notes

|

|

Jan. 31

|

Lecture: Fourier part II

|

|

notes

|

|

Feb. 5

|

Lecture: Fourier part III, Preprocessing, PCA, whitening

|

- Possible Principles Underlying the Transformations of Sensory Messages. H.B. Barlow. [pdf]

- Interesting, not required: Some Informational Aspects Of Visual Perception. F. Attneave. [pdf]

- Useful background on PCA: textbook, sections 5.1 - 5.9.2.

|

assignment 1 (worth 10%)

notes

|

|

Feb. 8

|

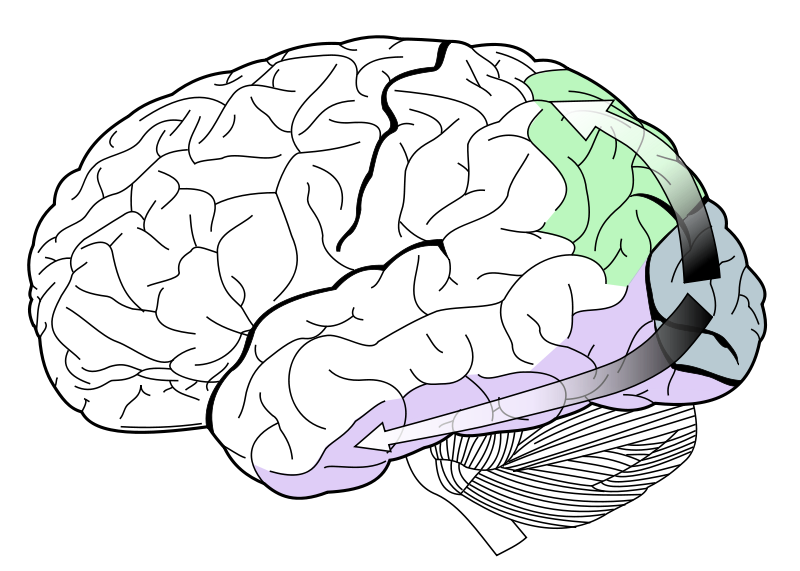

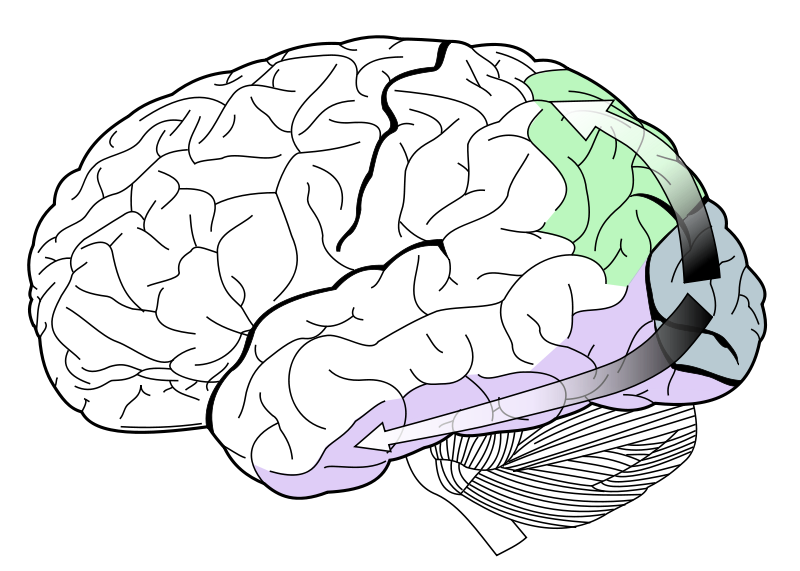

Lecture: PCA and Fourier representation, some biological aspects of image processing

|

- Textbook chapter 3.

- Sparse Coding. P. Foldiak, D. Endres. Scholarpedia. [link]

|

notes

|

|

Feb. 12

|

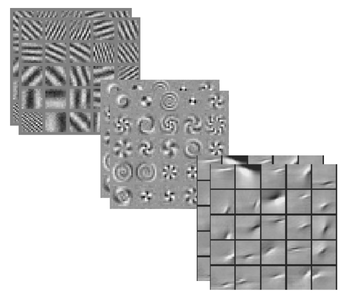

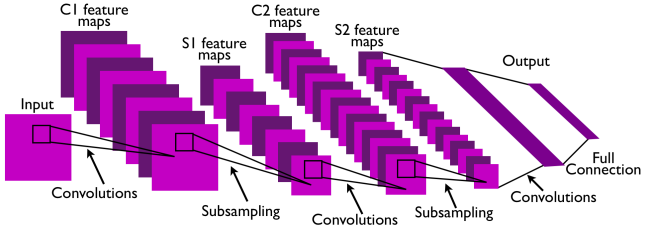

Lecture: ICA, sparse coding

|

- useful background reading: The 'Independent Components' of Natural Scenes are Edge Filters. A.J. Bell, T.J. Sejnowski. Vis. Res. 1997. [pdf]

|

assignment 1 due at the beginning of class

notes

|

|

Feb. 15

|

Lecture: Feature Learning II

|

- read the presentation guidelines

- Emergence of Simple-Cell Receptive Field Properties by Learning a Sparse Code for Natural Images. B.A. Olshausen, D.J. Field. Nature, 1996. [pdf]

|

notes

|

|

Feb. 19

|

Lecture: Feature Learning III

review of assignment 1

Presentations/Discussion

|

- An analysis of single-layer networks in unsupervised feature learning. A. Coates, H. Lee, A. Y. Ng. AISTATS 2011. [pdf] (Salah R.)

|

assignment 2 (worth 10%)

A1 solution Q2

A1 solution Q4

notes

|

|

Feb. 22

|

Lecture: More on k-means, energy-based models

Presentations/Discussion

|

-

Building high-level features using large scale unsupervised learning. Q. Le, M.A. Ranzato, R. Monga, M. Devin, K. Chen, G. Corrado, J. Dean, A. Ng. ICML 2012. [pdf] (Pierre Luc C.)

-

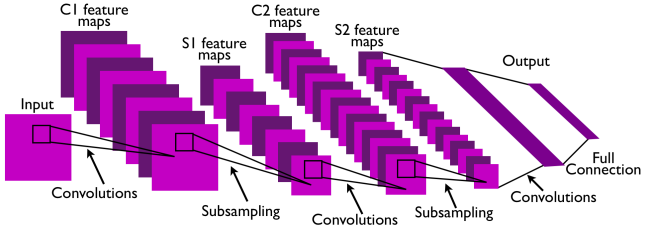

ImageNet Classification with Deep Convolutional Neural Networks. A. Krizhevsky, I. Sutskever, G.E. Hinton. NIPS 2012. [pdf]

|

notes

|

|

Feb. 26

|

Lecture: Restricted Boltzmann Machines

Presentations/Discussion

|

- Noise-contrastive estimation: A new estimation principle for unnormalized statistical models. M. Gutmann, A. Hyvarinen. AISTATS 2010. [pdf] (Sebastien J.)

- Sparse filtering. J. Ngiam, et al. NIPS 2011. [pdf] (Antoine M.)

|

assignment 2 due at the beginning of class

notes

|

|

Mar. 1

|

Lecture: more on RBMs

MRF models

Presentations/Discussion

|

- Fields of Experts. S. Roth, M. Black. IJCV 2007. [pdf] (Francis Q. L.)

- Deconvolutional Networks. M. Zeiler, D. Krishnan, G. Taylor, R. Fergus. CVPR 2010. [pdf] (Vincent A.)

|

|

| Learning about motion, geometry and invariance |

Suggested readings

- [Background] Spatiotemporal energy models for the perception of motion. E.H. Adelson and J.R. Bergen. Journal Opt. Soc. Am. [pdf]

- [Background] Neural Encoding of Binocular Disparity: Energy Models, Position Shifts and Phase Shifts. D. Fleet, H. Wagner, D. Heeger. Vision Research 1996. [pdf]

- [Background] Learning Invariance from Transformation Sequences. P. Foldiak. Neural Computation 1991. [pdf]

- Topographic Independent Component Analysis. A. Hyvarinen, P.O. Hoyer, M. Inki. Neural Computation 2001. [pdf]

- Unsupervised learning of image transformations. R. Memisevic and G.E. Hinton. CVPR 2007. [pdf]

- A multi-layer sparse coding network learns contour coding from natural images. P.O. Hoyer and A. Hyvarinen. Vision Research 2002. [ps]

- Emergence of Phase- and Shift-Invariant Features by Decomposition of Natural Images into Independent Feature Subspaces. A. Hyvarinen, P. Hoyer. Neural Computation 2000. [pdf]

- Dynamic Scene Understanding: The Role of Orientation Features in Space and Time in Scene Classification. K.G. Derpanis, M. Lecce, K. Daniilidis, R.P. Wildes. CVPR 2012. [pdf]

- Action spotting and recognition based on a spatiotemporal orientation analysis. K.G. Derpanis, M. Sizintsev, K. Cannons, R.P. Wildes. PAMI 2013. [pdf]

|

|

Date

|

Topic

|

Readings

|

Materials

|

|

Mar. 12

|

review of assignment 2

brief review of squaring and complex cells

|

Spatiotemporal energy models for the perception of motion. E.H. Adelson and J.R. Bergen. Journal Opt. Soc. Am. [pdf]

|

assignment 3 (worth 10%)

A2 solution Q3 (online k-means)

A2 solution Q3 (k-means on CIFAR)

|

|

Mar. 15

|

Lecture: Relations, gating and complex cells I

|

Learning to relate images. R. Memisevic. TPAMI 2013. [pdf]

|

notes

|

|

Mar. 19

|

Lecture: Relations, gating and complex cells II

|

|

assignment 3 due at the beginning of class

|

|

Mar. 22

|

Presentations/Discussion

Energy models on single images

|

- What is the Best Multi-Stage Architecture for Object Recognition? K. Jarrett, K. Kavukcuoglu, M.A. Ranzato, Y. LeCun. CVPR 2009. [pdf] (Nicholas L.)

- On Random Weights and Unsupervised Feature Learning. A. Saxe, et al. ICML 2011. [pdf] (Pierre Luc C.)

|

A3 solution Q3

|

|

Mar. 26

|

Discussion of assignment 3 Q3

Lecture: Relations, gating and complex cells III

|

|

The slides from my IPAM tutorial:

part 1 and

part 2.

|

|

Apr. 2

|

Discussion of assignment 3 Q1/Q2

Presentations/Discussion

Energy models on single images II

|

- Generating more realistic images using gated MRF's. M.A. Ranzato, V. Mnih, G. Hinton. NIPS 2010. [pdf] (Francis Q. L.)

|

|

|

Apr. 5

|

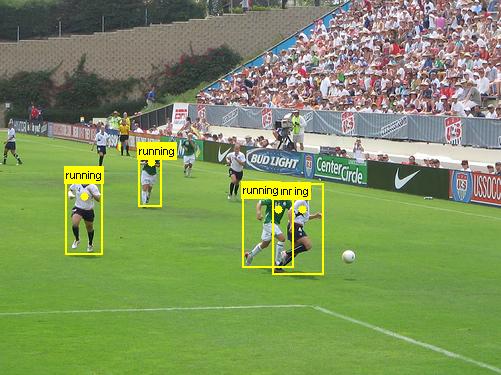

Presentations/Discussion

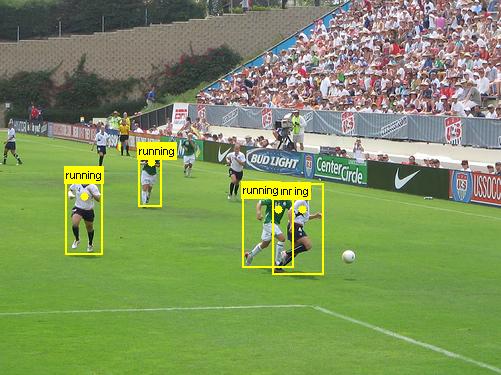

Motion features and activity recognition

|

- Convolutional Learning of Spatio-temporal Features. G. Taylor, R. Fergus, Y. LeCun and C. Bregler. ECCV 2010. [pdf] (Xavier B.)

- Learning hierarchical invariant spatio-temporal features for action recognition with independent subspace analysis. Q.V. Le, W.Y. Zou, S.Y. Yeung, A.Y. Ng. CVPR 2011. [pdf] (Antoine M.)

|

|

|

Apr. 9

|

Presentations/Discussion

Learning about images from movies

|

- Learning Intermediate-Level Representations of Form and Motion from Natural Movies. C.F. Cadieu, B.A. Olshausen. Neural Computation 2012. [pdf] (Xavier B.)

- Deep Learning of Invariant Features via Simulated Fixations in Video. W. Zou, A. Ng, S. Zhu, K. Yu. NIPS 2012. [pdf] (Sebastien J.)

|

|

|

Apr. 12

|

Presentations/Discussion

Fixations

Group structure and topography

|

- Topographic Independent Component Analysis. A. Hyvarinen, P.O. Hoyer, M. Inki. Neural Computation 2001. [pdf]

- Learning Invariant Features through Topographic Filter Maps. K. Kavukcuoglu, M.A. Ranzato, R. Fergus, Y. LeCun. CVPR 2009. [pdf] (Magatte D.)

- Learning to combine foveal glimpses with a third-order Boltzmann machine. H. Larochelle and G. Hinton. NIPS 2010. [pdf] (Vincent A.)

|

|

| Learning about shape, learning where to look, miscellaneous. |

Suggested readings

-

A Model of Saliency-Based Visual Attention for Rapid Scene Analysis. L. Itti, C. Koch, E. Niebur. PAMI 1998. [pdf]

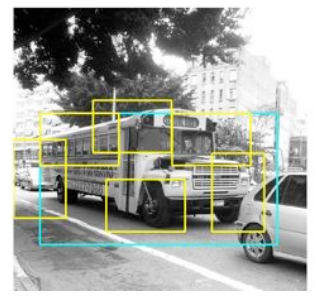

- Searching for objects driven by context. Alexe, et al. NIPS 2012 [pdf]

-

Convolutional Deep Belief Networks for Scalable Unsupervised Learning of Hierarchical Representations. H. Lee, R. Grosse, R. Ranganath, and A. Y. Ng. ICML 2009. [pdf]

-

Learning where to attend with deep architectures for image tracking. M. Denil, L. Bazzani, H. Larochelle, N. de Freitas. Neural Computation 2012. [pdf]

-

Factored Conditional Restricted Boltzmann Machines for Modeling Motion Style. G. Taylor, G. Hinton. ICML 2009. [pdf]

-

Learning a Generative Model of Images by Factoring Appearance and Shape. N. Le Roux, N. Heess, J. Shotton, J. Winn. Neural Computation 2012. [pdf]

|

|

Date

|

Topic

|

Readings

|

Materials

|

|

Apr. 16

|

Presentations/Discussion

Miscellaneous topics

|

- WSABIE: Scaling Up To Large Vocabulary Image Annotation. J. Weston, et al. IJCAI [pdf] (Salah R.)

- The Shape Boltzmann Machine: a Strong Model of Object Shape. S.M.A. Eslami, N. Heess, J. Winn. CVPR 2012. [pdf] (Nicholas L.)

- Emergence of Object-Selective Features in Unsupervised Feature Learning. A. Coates et al. NIPS 2012. [pdf] (Magatte D.)

|

|

|

Apr. 19

|

Wrap-up

Final Project Presentations and Discussions

|

- Transforming Auto-encoders. G. Hinton, A. Krizhevsky, S. Wang. ICANN 2011. [pdf]

- Project Nicholas L.

- Project Francis Q. L.

- Project Antoine M.

|

|

|

Apr. 22

|

Project office hour

|

|

|

|

Apr. 23

|

Final Project Presentations and Discussions

|

- Project Sebastien J.

- Project Xavier B.

- Project Vincent A.B.

- Project Pierre Luc C.

- Project Salah R.

- Project Magatte D.

|

|

|

Apr. 30

|

|

Final Projects Due

|

|