| Introduction / background |

|

Date

|

Topic

|

Readings

|

Notes

|

|

Jan 6

|

Intro

|

|

notes

|

|

Jan 19

|

Review of basic probability, linear algebra, and ML

|

|

notes

|

|

Jan 20

|

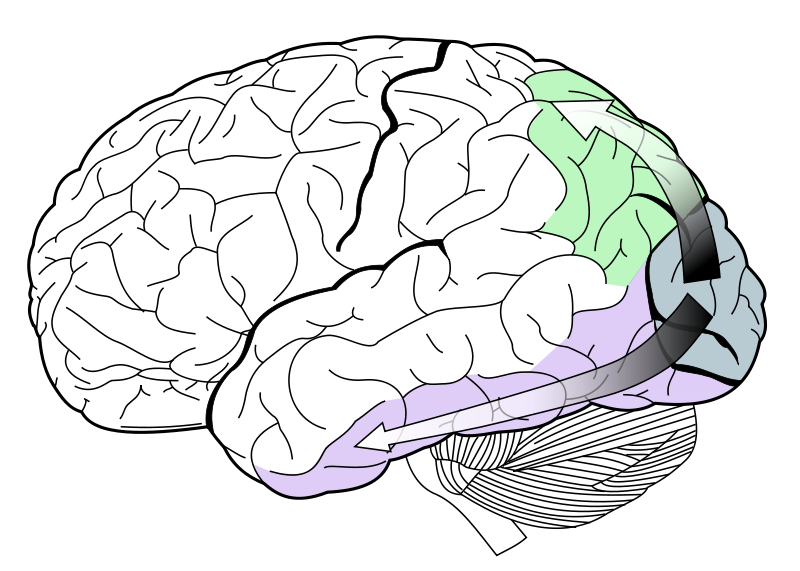

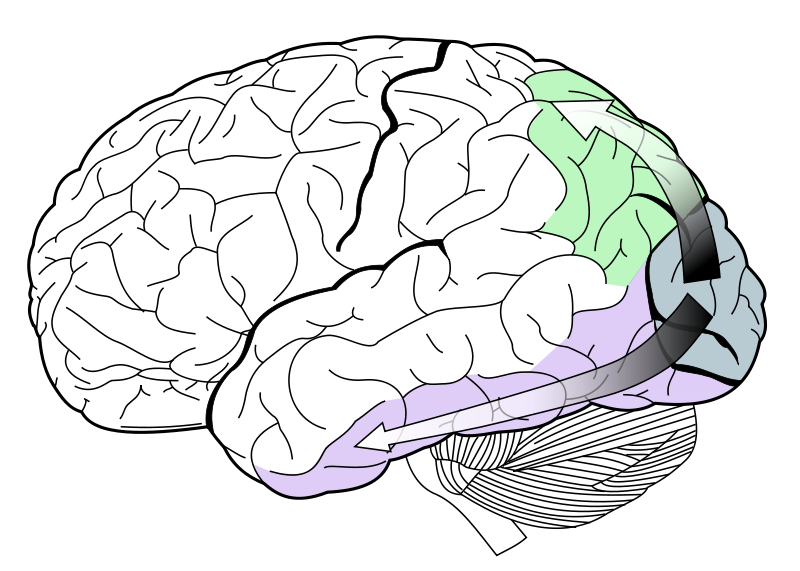

Lecture: Some biological aspects of vision

|

Reading 1: Attneave 1954

|

notes

|

|

Jan 26

|

Lecture: Back-prop and neural networks

|

Reading 2: Sparse coding (Földiák, Endres; Scholarpedia 2008)

|

notes

Reading 1 due

|

|

Feb 2

|

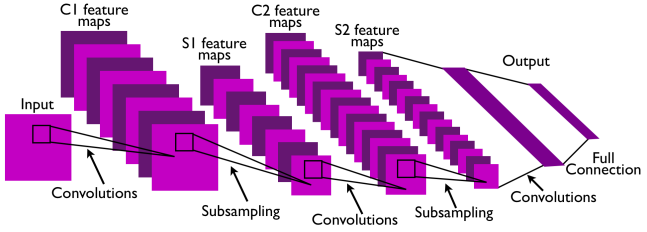

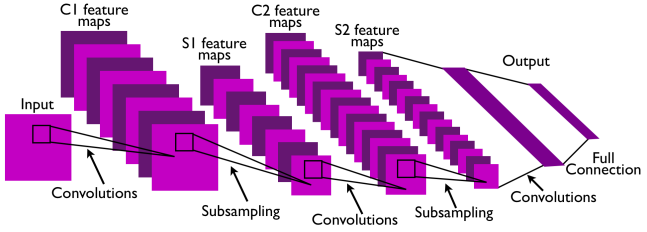

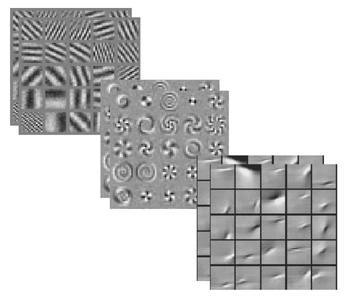

Lecture: Convolutional networks

|

Reading 3: cuDNN

|

Reading 2 due

notes

|

|

Feb 3

|

Lecture: Convolutional networks (contd.)

|

|

|

|

Feb 9

|

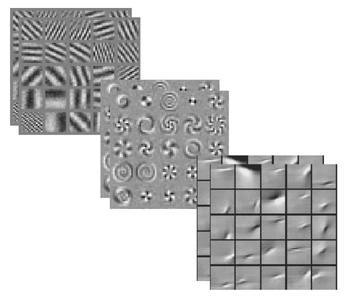

Lecture: Convnets and Fourier (I)

|

Reading 4: ImageNet, Sections 1 and 2; skim the rest.

|

Reading 3 due

notes

|

|

Feb 10

|

Lecture: Fourier (II)

|

|

notes

(A1 deferred to next week)

|

|

Feb 16

|

Lecture: Fourier (III)

|

Reading 5: "alex-net"

|

notes

Assignment 1

Reading 4 due

|

|

Feb 17

|

Lecture: Fourier (III) contd.

|

|

|

|

Feb 23

|

Paper presentations and discussion

|

Reading 6: Thrun 1996

papers:

-

Going Deeper with Convolutions Szegedy et al. 2014 [pdf]

The paper describes GoogLeNet which won the 2014 ImageNet competition with a performance most people doubted would be possible anytime soon. (see also this related paper) (Florian B.)

-

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift Ioffe, Szegedy 2015 [pdf] (Guillaume B.)

-

Very Deep Convolutional Networks for Large-Scale Image Recognition Simonyan, Zisserman 2015 [pdf]

A CNN architecture that has become very common. (Olexa B.)

|

Reading 5 due

|

|

Feb 24

|

Paper presentations and discussion

|

papers:

-

Deep Residual Learning for Image Recognition He et al., 2015 [pdf]

Training very very deep nets, ILSVRC 2015 winner. (Thomas G.)

-

Spatial Transformer Networks Jaderberg et al., 2016 [pdf]

(Vincent M.)

|

A1 due

|

|

Mar 8

|

Paper presentations and discussion

|

papers:

-

Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-Scale Convolutional Architecture Eigen, Fergus 2014 [pdf]

(dataset)

Extract richer scene information with conv-nets. (Chinna S.)

-

DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition Donahue et al. [pdf]

One of several papers demonstrating the generality of learned convnet features. (also see: this related paper) (Luiz G. H.)

-

Learning Visual Features from Large Weakly Supervised Data (Joulin, van der Maaten, Jabri, Vasilache; 2015) [pdf] (Daniel E.)

Can we do well without ImageNet?

|

Reading 6 due

|

|

Mar 9

|

Paper presentations and discussion

|

Reading 7: MS COCO (skim the details)

papers:

-

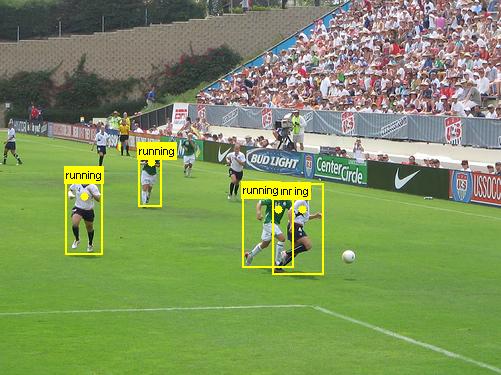

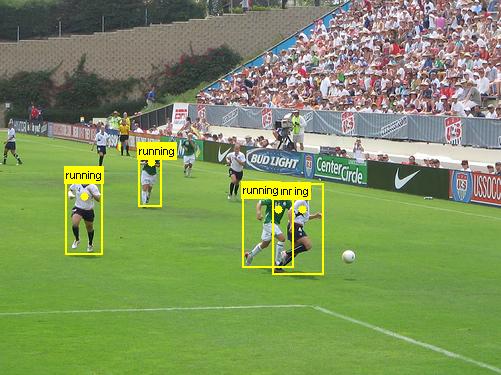

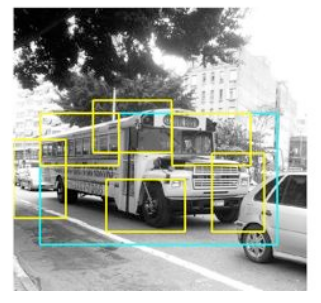

Rich feature hierarchies for accurate object detection and semantic segmentation (Girshick et al. 2014) [pdf]

-

Fast R-CNN (Girshick 2015) [pdf]

See also Faster R-CNN.

(Danlan C.)

-

You Only Look Once: Unified, Real-Time Object Detection (Redmon et al. 2015) [pdf] (Sarath C.)

-

C3D: Generic Features for Video Analysis Tran et al. 2015 [pdf] (Faruk A.)

|

Assignment 2

|

|

Mar 15

|

-- class canceled --

|

|

|

|

Mar 16

|

-- class canceled --

|

|

|

|

Mar 22

|

Lecture: Reinforcement Learning

|

Reading 8: VQA: Visual Question Answering (without appendix)

|

notes

|

|

Mar 23

|

Paper presentations and discussion

|

*** No more reaction reports required. ***

-

Image Super-Resolution Using Deep Convolutional Networks (Dong et al. 2015) [pdf] (Mario B.)

-

DenseCap: Fully Convolutional Localization Networks for Dense Captioning (Johnson et al. 2015) [pdf] (Sarath C.)

-

Visual7W: Grounded Question Answering in Images Zhu et al 2015 [pdf] (Vincent M.)

|

A2 due

Reading 7 due

|

|

Mar 29

|

Paper presentations and discussion

|

Reading 9: Deep Dream blog post; also have a look at the code

*** No more reaction reports required. ***

-

Ask Your Neurons: A Neural-based Approach to Answering Questions about Images (Malinowski et al, 2015) [pdf]

see also the DAQUAR dataset (Faruk A.)

-

Natural Language Object Retrieval (Hu et al, 2015) [pdf] (Luiz H.)

see also the dataset

|

Reading 8 due

|

|

Mar 30

|

Paper presentations and discussion

|

*** No more reaction reports required. ***

-

Multiple Object Recognition with Visual Attention (Ba et al., 2015) [pdf] see also this earlier paper (Mnih et al) (Olexa B.)

-

DRAW: A Recurrent Neural Network For Image Generation (Gregor et al., 2015) [pdf] (Daniel E.)

-

A Neural Algorithm of Artistic Style (Gatys et al, 2015) [pdf] (Thomas G.)

|

|

|

April 5

|

Paper presentations and discussion

|

Reading 10: Carefully read one paper that is highly relevant to your final project. In your email state which paper you read.

*** No more reaction reports required. ***

-

DeepStereo: Learning to Predict New Views from the World's Imagery (Flynn et al, 2015) [pdf] (Florian B.)

-

Pixel Recurrent Neural Networks (van den Oord, et al, 2016) [pdf] (Guillaume B.)

-

Render for CNN: Viewpoint Estimation in Images Using CNNs Trained with Rendered 3D Model Views (Su et al, 2015) [pdf] (Mario B.)

|

Reading 9 due

|

|

April 6

|

|

*** No more reaction reports required. ***

-

MovieQA: Understanding Stories in Movies through Question-Answering (Tapaswi et al, 2015) [pdf] (Danlan C.)

-

Unsupervised Representation Learning With Deep Convolutional Generative Adversarial Networks (Radford et al, 2016) [pdf] (Chinna S.)

-

Visual Genome (Krishna et al. 2016) [pdf]

-

Dynamic Memory Networks for Visual and Textual Question Answering (Xiong et al, 2016) [pdf]

|

|

|

April 12

|

Lecture: Generative and variational methods

|

|

Reading 10 due

notes

|

|

April 13

|

Final project presentations and discussions

|

|

|

|

|