|

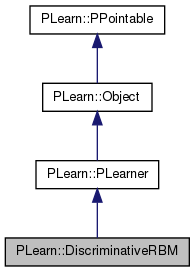

PLearn 0.1


Discriminative Restricted Boltzmann Machine classifier.

#include <DiscriminativeRBM.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | DiscriminativeRBM () | Default constructor. |
| virtual int | outputsize () const | Returns the size of this learner's output, which typically depends on its inputsize(), targetsize() and the options set. |
| virtual void | forget () | (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner. |
| virtual void | train () | Brings the learner up to stage == nstages, updating the train_stats collector with training costs measured online in the process. |
| virtual void | computeOutput (const Vec &input, Vec &output) const | Computes the output from the input. |
| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const | Computes the costs from an already computed output. |
| virtual TVec< std::string > | getTestCostNames () const | Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method). |
| virtual TVec< std::string > | getTrainCostNames () const | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |
| virtual string | classname () const | |
| virtual OptionList & | getOptionList () const | |
| virtual OptionMap & | getOptionMap () const | |
| virtual RemoteMethodMap & | getRemoteMethodMap () const | |
| virtual DiscriminativeRBM * | deepCopy (CopiesMap &copies) const | |
| virtual void | build () | Finishes building the object: calls inherited::build() followed by build_(). |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Type | Member |
|---|---|
| static string | _classname_ () |
| static OptionList & | _getOptionList_ () |
| static RemoteMethodMap & | _getRemoteMethodMap_ () |
| static Object * | _new_instance_for_typemap_ () |
| static bool | _isa_ (const Object *o) |
| static void | _static_initialize_ () |
| static const PPath & | declaringFile () |

Public Attributes

| Type | Member | Description |
|---|---|---|
| real | disc_learning_rate | The learning rate used for discriminative learning. |
| real | disc_decrease_ct | The decrease constant of the discriminative learning rate. |
| bool | use_exact_disc_gradient | Whether to use the exact gradient for discriminative learning instead of the contrastive divergence (CD) gradient. |
| real | gen_learning_weight | The weight of the generative learning term, for hybrid discriminative/generative learning. |
| bool | use_multi_conditional_learning | Whether to use multi-conditional learning instead of generative learning. |
| real | semi_sup_learning_weight | The weight of the semi-supervised learning term, for unsupervised learning on unlabeled data. |
| int | n_classes | Number of classes in the training set. |
| PP< RBMLayer > | input_layer | The input layer of the RBM. |
| PP< RBMBinomialLayer > | hidden_layer | The hidden layer of the RBM. |
| PP< RBMConnection > | connection | The connection weights between the input and hidden layers. |
| real | target_weights_L1_penalty_factor | L1 penalty factor applied to the hidden-to-target weights (last_to_target). |
| real | target_weights_L2_penalty_factor | L2 penalty factor applied to the hidden-to-target weights (last_to_target). |
| bool | do_not_use_discriminative_learning | Whether to disable discriminative learning. |
| int | unlabeled_class_index_begin | The smallest index for the classes of the unlabeled data. |
| int | n_classes_at_test_time | The number of classes to discriminate between at test time (classes 0 to n_classes_at_test_time - 1). |
| int | n_mean_field_iterations | Number of mean-field iterations for the approximate computation of p(y\|x) in multitask learning. |
| int | gen_learning_every_n_samples | How often a generative learning update is performed (e.g. 100 for one update every 100 samples, in which case gen_learning_weight is multiplied by 100). |
| PP< RBMClassificationModule > | classification_module | The module computing the probabilities of the different classes. |
| PP< RBMMultitaskClassificationModule > | multitask_classification_module | The module approximating the probabilities of the different classes in the multitask setting. |
| TVec< string > | cost_names | The names of the computed costs. |
| PP< NLLCostModule > | classification_cost | The module computing the classification cost function (NLL) on top of classification_module. |
| PP< RBMMixedLayer > | joint_layer | Concatenation of input_layer and the target layer (the one inside classification_module). |
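Read together, the learning-weight options above suggest a per-example objective of the following shape. This is a hedged reading of the option descriptions, not a transcription of train() (which is not listed in this section); the symbols $\delta$, $\alpha$, $\beta$ and the exact form of each term are assumptions.

$$
\mathcal{L}(x, y) = \delta\,\bigl[-\log p(y \mid x)\bigr] + \alpha\,\mathcal{L}_{\mathrm{gen}}(x, y),
\qquad
\mathcal{L}_{\mathrm{gen}}(x, y) =
\begin{cases}
-\log p(x \mid y) & \text{if use\_multi\_conditional\_learning,} \\
-\log p(x, y) & \text{otherwise,}
\end{cases}
$$

$$
\mathcal{L}_{\mathrm{unsup}}(x) = \beta\,\bigl[-\log p(x)\bigr] = -\beta \log \sum_{y} p(x, y) \qquad \text{for unlabeled } x,
$$

where $\delta = 0$ if do_not_use_discriminative_learning is true and $1$ otherwise, $\alpha$ = gen_learning_weight and $\beta$ = semi_sup_learning_weight. Because $p(x, y)$ involves the RBM partition function, the generative terms are presumably trained with contrastive divergence estimates (the class keeps CD temporary vectors for the disc, gen and semi_sup phases), and use_exact_disc_gradient selects between the exact gradient and a CD gradient for the discriminative term.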

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares the class options. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| PP< RBMMatrixConnection > | last_to_target | Matrix connection weights between the hidden layer and the target layer (pointer to classification_module->last_to_target). |
| PP< RBMConnection > | last_to_target_connection | Connection weights between the hidden layer and the target layer (pointer to classification_module->last_to_target). |
| PP< RBMConnection > | joint_connection | Connection weights between the hidden layer and the visible layer (pointer to classification_module->joint_connection). |
| PP< RBMLayer > | target_layer | Part of the RBM visible layer corresponding to the target (pointer to classification_module->target_layer). |
| PP< RBMClassificationModule > | unlabeled_classification_module | Classification module used when unlabeled_class_index_begin != 0. |
| PP< RBMClassificationModule > | test_time_classification_module | Classification module used when n_classes_at_test_time != n_classes. |
| Vec | target_one_hot | Temporary variables for contrastive divergence (this vector and the disc_, gen_ and semi_sup_ vectors that follow). |
| Vec | disc_pos_down_val | |
| Vec | disc_pos_up_val | |
| Vec | disc_neg_down_val | |
| Vec | disc_neg_up_val | |
| Vec | gen_pos_down_val | |
| Vec | gen_pos_up_val | |
| Vec | gen_neg_down_val | |
| Vec | gen_neg_up_val | |
| Vec | semi_sup_pos_down_val | |
| Vec | semi_sup_pos_up_val | |
| Vec | semi_sup_neg_down_val | |
| Vec | semi_sup_neg_up_val | |
| Vec | input_gradient | Temporary variables for gradient descent (this vector and the class output and gradient vectors that follow). |
| Vec | class_output | |
| Vec | before_class_output | |
| Vec | unlabeled_class_output | |
| Vec | test_time_class_output | |
| Vec | class_gradient | |
| int | nll_cost_index | Index of the NLL cost in train_costs. |
| int | class_cost_index | Index of the class_error cost in train_costs. |
| int | hamming_loss_index | Index of the hamming_loss cost in train_costs. |

Private Types

| Type | Member |
|---|---|
| typedef PLearner | inherited |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |
| void | build_layers_and_connections () | |
| void | build_costs () | |
| void | build_classification_cost () | |
| void | setLearningRate (real the_learning_rate) | |

Discriminative Restricted Boltzmann Machine classifier.

Definition at line 61 of file DiscriminativeRBM.h.
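As background for the class description: in the standard discriminative RBM with binary hidden units and a one-hot class layer, the hidden units can be summed out analytically, so the class posterior is exact: p(y|x) ∝ exp(d_y + Σ_j softplus(c_j + U_jy + Σ_i W_ji x_i)). The standalone sketch below computes this with plain std::vector types. The names W, c, U, d and the helper functions are illustrative, and we assume, without having checked the RBMClassificationModule source, that classification_module computes an equivalent quantity from connection, hidden_layer and last_to_target.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical plain-C++ types for illustration; PLearn itself uses Vec/Mat.
using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // Matrix[row][col]

// Numerically stable softplus(a) = log(1 + exp(a)).
double softplus(double a) {
    return a > 0 ? a + std::log1p(std::exp(-a)) : std::log1p(std::exp(a));
}

// p(y|x) for a discriminative RBM with binary hidden units.
// W: hidden x input weights, c: hidden biases,
// U: hidden x classes weights (hidden-to-target), d: class biases.
Vector drbm_class_probabilities(const Vector& x, const Matrix& W, const Vector& c,
                                const Matrix& U, const Vector& d) {
    const std::size_t n_hidden = c.size();
    const std::size_t n_classes = d.size();
    assert(W.size() == n_hidden && U.size() == n_hidden && n_classes > 0);

    // Class-independent part of each hidden pre-activation: c_j + sum_i W_ji x_i.
    Vector base(n_hidden);
    for (std::size_t j = 0; j < n_hidden; ++j) {
        base[j] = c[j];
        for (std::size_t i = 0; i < x.size(); ++i) base[j] += W[j][i] * x[i];
    }

    // Negative free energy of each (x, y) pair, up to a class-independent constant.
    Vector score(n_classes);
    for (std::size_t y = 0; y < n_classes; ++y) {
        score[y] = d[y];
        for (std::size_t j = 0; j < n_hidden; ++j) score[y] += softplus(base[j] + U[j][y]);
    }

    // Softmax over classes, shifted by the maximum score for numerical stability.
    const double max_score = *std::max_element(score.begin(), score.end());
    double total = 0.0;
    for (double& s : score) { s = std::exp(s - max_score); total += s; }
    for (double& s : score) s /= total;
    return score;
}
```

The input-bias term b·x of the free energy is the same for every class, so it cancels in the softmax and the sketch omits it.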

typedef PLearner PLearn::DiscriminativeRBM::inherited [private]

Reimplemented from PLearn::PLearner.

Definition at line 63 of file DiscriminativeRBM.h.

PLearn::DiscriminativeRBM::DiscriminativeRBM ()

Default constructor.

Definition at line 60 of file DiscriminativeRBM.cc.

References PLearn::PLearner::random_gen.

```cpp
:
    disc_learning_rate( 0. ),
    disc_decrease_ct( 0. ),
    use_exact_disc_gradient( 0. ),
    gen_learning_weight( 0. ),
    use_multi_conditional_learning( false ),
    semi_sup_learning_weight( 0. ),
    n_classes( -1 ),
    target_weights_L1_penalty_factor( 0. ),
    target_weights_L2_penalty_factor( 0. ),
    do_not_use_discriminative_learning( false ),
    unlabeled_class_index_begin( 0 ),
    n_classes_at_test_time( -1 ),
    n_mean_field_iterations( 1 ),
    gen_learning_every_n_samples( 1 )
{
    random_gen = new PRandom();
}
```

string PLearn::DiscriminativeRBM::_classname_ () [static]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DiscriminativeRBM.cc.

OptionList & PLearn::DiscriminativeRBM::_getOptionList_ () [static]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DiscriminativeRBM.cc.

RemoteMethodMap & PLearn::DiscriminativeRBM::_getRemoteMethodMap_ () [static]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DiscriminativeRBM.cc.

bool PLearn::DiscriminativeRBM::_isa_ (const Object * o) [static]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DiscriminativeRBM.cc.

Object * PLearn::DiscriminativeRBM::_new_instance_for_typemap_ () [static]

Reimplemented from PLearn::Object.

Definition at line 55 of file DiscriminativeRBM.cc.

void PLearn::DiscriminativeRBM::_static_initialize_ () [static]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DiscriminativeRBM.cc.

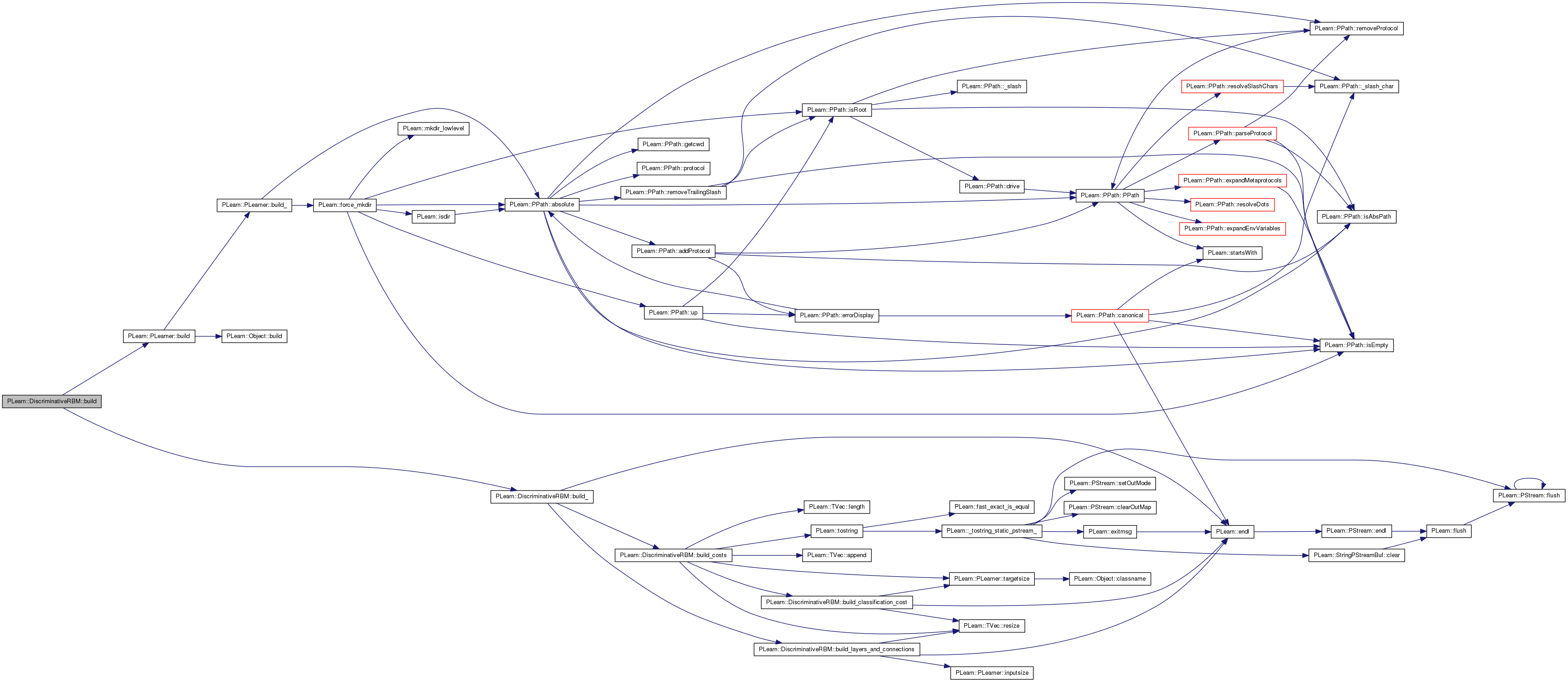

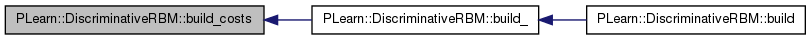

void PLearn::DiscriminativeRBM::build () [virtual]

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 513 of file DiscriminativeRBM.cc.

References PLearn::PLearner::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

void PLearn::DiscriminativeRBM::build_ () [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 206 of file DiscriminativeRBM.cc.

References build_costs(), build_layers_and_connections(), PLearn::endl(), gen_learning_weight, PLearn::PLearner::inputsize_, n_classes, PLASSERT, semi_sup_learning_weight, and PLearn::PLearner::targetsize_.

Referenced by build().

```cpp
{
    MODULE_LOG << "build_() called" << endl;

    if( inputsize_ > 0 && targetsize_ > 0)
    {
        PLASSERT( n_classes >= 2 );
        PLASSERT( gen_learning_weight >= 0 );
        PLASSERT( semi_sup_learning_weight >= 0 );

        build_layers_and_connections();
        build_costs();
    }
}
```

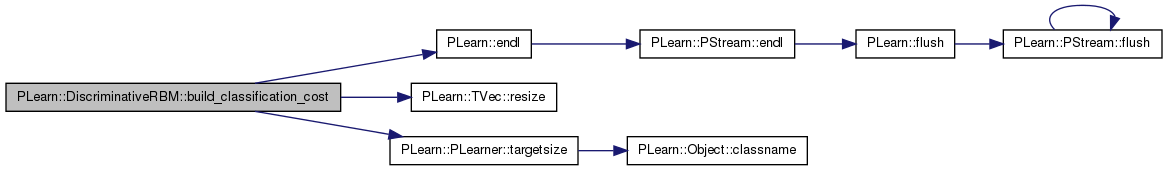

void PLearn::DiscriminativeRBM::build_classification_cost () [private]

Definition at line 330 of file DiscriminativeRBM.cc.

References classification_cost, classification_module, connection, PLearn::endl(), hidden_layer, input_layer, joint_connection, joint_layer, last_to_target, last_to_target_connection, multitask_classification_module, n_classes, n_classes_at_test_time, n_mean_field_iterations, PLERROR, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), target_layer, target_weights_L1_penalty_factor, target_weights_L2_penalty_factor, PLearn::PLearner::targetsize(), test_time_class_output, test_time_classification_module, unlabeled_class_index_begin, unlabeled_class_output, and unlabeled_classification_module.

Referenced by build_costs().

```cpp
{
    MODULE_LOG << "build_classification_cost() called" << endl;

    if( targetsize() == 1 )
    {
        if (!classification_module ||
            classification_module->target_layer->size != n_classes ||
            classification_module->last_layer != hidden_layer ||
            classification_module->previous_to_last != connection )
        {
            // We need to (re-)create 'last_to_target', and thus the classification
            // module too.
            // This is not systematically done so that the learner can be
            // saved and loaded without losing learned parameters.
            last_to_target = new RBMMatrixConnection();
            last_to_target->up_size = hidden_layer->size;
            last_to_target->down_size = n_classes;
            last_to_target->L1_penalty_factor = target_weights_L1_penalty_factor;
            last_to_target->L2_penalty_factor = target_weights_L2_penalty_factor;
            last_to_target->random_gen = random_gen;
            last_to_target->build();

            target_layer = new RBMMultinomialLayer();
            target_layer->size = n_classes;
            target_layer->random_gen = random_gen;
            target_layer->build();

            classification_module = new RBMClassificationModule();
            classification_module->previous_to_last = connection;
            classification_module->last_layer = hidden_layer;
            classification_module->last_to_target = last_to_target;
            classification_module->target_layer =
                dynamic_cast<RBMMultinomialLayer*>((RBMLayer*) target_layer);
            classification_module->random_gen = random_gen;
            classification_module->build();
        }

        classification_cost = new NLLCostModule();
        classification_cost->input_size = n_classes;
        classification_cost->target_size = 1;
        classification_cost->build();

        last_to_target = classification_module->last_to_target;
        last_to_target_connection =
            (RBMMatrixConnection*) classification_module->last_to_target;
        target_layer = classification_module->target_layer;
        joint_connection = classification_module->joint_connection;

        joint_layer = new RBMMixedLayer();
        joint_layer->sub_layers.resize( 2 );
        joint_layer->sub_layers[0] = input_layer;
        joint_layer->sub_layers[1] = target_layer;
        joint_layer->random_gen = random_gen;
        joint_layer->build();

        if( unlabeled_class_index_begin != 0 )
        {
            unlabeled_class_output.resize( n_classes - unlabeled_class_index_begin );

            PP<RBMMultinomialLayer> sub_layer = new RBMMultinomialLayer();
            sub_layer->bias = target_layer->bias.subVec(
                unlabeled_class_index_begin,
                n_classes - unlabeled_class_index_begin);
            sub_layer->size = n_classes - unlabeled_class_index_begin;
            sub_layer->random_gen = random_gen;
            sub_layer->build();

            PP<RBMMatrixConnection> sub_connection = new RBMMatrixConnection();
            sub_connection->weights = last_to_target->weights.subMatColumns(
                unlabeled_class_index_begin,
                n_classes - unlabeled_class_index_begin);
            sub_connection->up_size = hidden_layer->size;
            sub_connection->down_size = n_classes - unlabeled_class_index_begin;
            sub_connection->random_gen = random_gen;
            sub_connection->build();

            unlabeled_classification_module = new RBMClassificationModule();
            unlabeled_classification_module->previous_to_last = connection;
            unlabeled_classification_module->last_layer = hidden_layer;
            unlabeled_classification_module->last_to_target = sub_connection;
            unlabeled_classification_module->target_layer = sub_layer;
            unlabeled_classification_module->random_gen = random_gen;
            unlabeled_classification_module->build();
        }

        if( n_classes_at_test_time > 0 && n_classes_at_test_time != n_classes )
        {
            test_time_class_output.resize( n_classes_at_test_time );

            PP<RBMMultinomialLayer> sub_layer = new RBMMultinomialLayer();
            sub_layer->bias = target_layer->bias.subVec(
                0, n_classes_at_test_time );
            sub_layer->size = n_classes_at_test_time;
            sub_layer->random_gen = random_gen;
            sub_layer->build();

            PP<RBMMatrixConnection> sub_connection = new RBMMatrixConnection();
            sub_connection->weights = last_to_target->weights.subMatColumns(
                0, n_classes_at_test_time );
            sub_connection->up_size = hidden_layer->size;
            sub_connection->down_size = n_classes_at_test_time;
            sub_connection->random_gen = random_gen;
            sub_connection->build();

            test_time_classification_module = new RBMClassificationModule();
            test_time_classification_module->previous_to_last = connection;
            test_time_classification_module->last_layer = hidden_layer;
            test_time_classification_module->last_to_target = sub_connection;
            test_time_classification_module->target_layer = sub_layer;
            test_time_classification_module->random_gen = random_gen;
            test_time_classification_module->build();
        }
        else
        {
            test_time_classification_module = 0;
        }
    }
    else
    {
        if( n_classes != targetsize() )
            PLERROR("In DiscriminativeRBM::build_classification_cost(): "
                    "n_classes should be equal to targetsize()");

        // Multitask setting
        if (!multitask_classification_module ||
            multitask_classification_module->target_layer->size != n_classes ||
            multitask_classification_module->last_layer != hidden_layer ||
            multitask_classification_module->previous_to_last != connection )
        {
            // We need to (re-)create 'last_to_target', and thus the
            // multitask_classification module too.
            // This is not systematically done so that the learner can be
            // saved and loaded without losing learned parameters.
            last_to_target = new RBMMatrixConnection();
            last_to_target->up_size = hidden_layer->size;
            last_to_target->down_size = n_classes;
            last_to_target->L1_penalty_factor = target_weights_L1_penalty_factor;
            last_to_target->L2_penalty_factor = target_weights_L2_penalty_factor;
            last_to_target->random_gen = random_gen;
            last_to_target->build();

            target_layer = new RBMBinomialLayer();
            target_layer->size = n_classes;
            target_layer->random_gen = random_gen;
            target_layer->build();

            multitask_classification_module =
                new RBMMultitaskClassificationModule();
            multitask_classification_module->previous_to_last = connection;
            multitask_classification_module->last_layer = hidden_layer;
            multitask_classification_module->last_to_target = last_to_target;
            multitask_classification_module->target_layer =
                dynamic_cast<RBMBinomialLayer*>((RBMLayer*) target_layer);
            multitask_classification_module->fprop_outputs_activation = true;
            multitask_classification_module->n_mean_field_iterations = n_mean_field_iterations;
            multitask_classification_module->random_gen = random_gen;
            multitask_classification_module->build();
        }

        last_to_target = multitask_classification_module->last_to_target;
        last_to_target_connection =
            (RBMMatrixConnection*) multitask_classification_module->last_to_target;
        target_layer = multitask_classification_module->target_layer;
        joint_connection = multitask_classification_module->joint_connection;

        joint_layer = new RBMMixedLayer();
        joint_layer->sub_layers.resize( 2 );
        joint_layer->sub_layers[0] = input_layer;
        joint_layer->sub_layers[1] = target_layer;
        joint_layer->random_gen = random_gen;
        joint_layer->build();

        if( unlabeled_class_index_begin != 0 )
            PLERROR("In DiscriminativeRBM::build_classification_cost(): "
                    "can't use unlabeled_class_index_begin != 0 in multitask setting");

        if( n_classes_at_test_time > 0 && n_classes_at_test_time != n_classes )
            PLERROR("In DiscriminativeRBM::build_classification_cost(): "
                    "can't use n_classes_at_test_time in multitask setting");
    }
}
```
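Both test_time_classification_module and unlabeled_classification_module are built from a contiguous slice of the target biases and of the last_to_target weight columns: [0, n_classes_at_test_time) and [unlabeled_class_index_begin, n_classes) respectively. In a discriminative RBM each class's score depends only on its own bias and its own weight column, so restricting the model to a slice amounts to renormalizing the full model's class scores over that slice. The standalone sketch below illustrates this; it takes precomputed per-class scores (negative free energies) as input, and the function name is hypothetical rather than part of PLearn.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Softmax over the contiguous class range [begin, begin + length) of
// precomputed per-class scores (e.g. negative free energies -F(x, y)).
// Element k of the result corresponds to class begin + k.
std::vector<double> softmax_over_class_range(const std::vector<double>& scores,
                                             std::size_t begin, std::size_t length) {
    assert(length > 0 && begin + length <= scores.size());
    const auto first = scores.begin() + static_cast<std::ptrdiff_t>(begin);
    const auto last = first + static_cast<std::ptrdiff_t>(length);
    const double max_score = *std::max_element(first, last);

    std::vector<double> probs(first, last);
    double total = 0.0;
    for (double& p : probs) { p = std::exp(p - max_score); total += p; }
    for (double& p : probs) p /= total;
    return probs;
}
```

With scores holding the values for all n_classes classes, the test-time module corresponds to softmax_over_class_range(scores, 0, n_classes_at_test_time) and the unlabeled module to softmax_over_class_range(scores, unlabeled_class_index_begin, n_classes - unlabeled_class_index_begin). The listing slices with subVec and subMatColumns, which, as far as we know, return views sharing storage with the full bias and weights, so the sub-modules should follow the parameters learned by the full model without copying.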

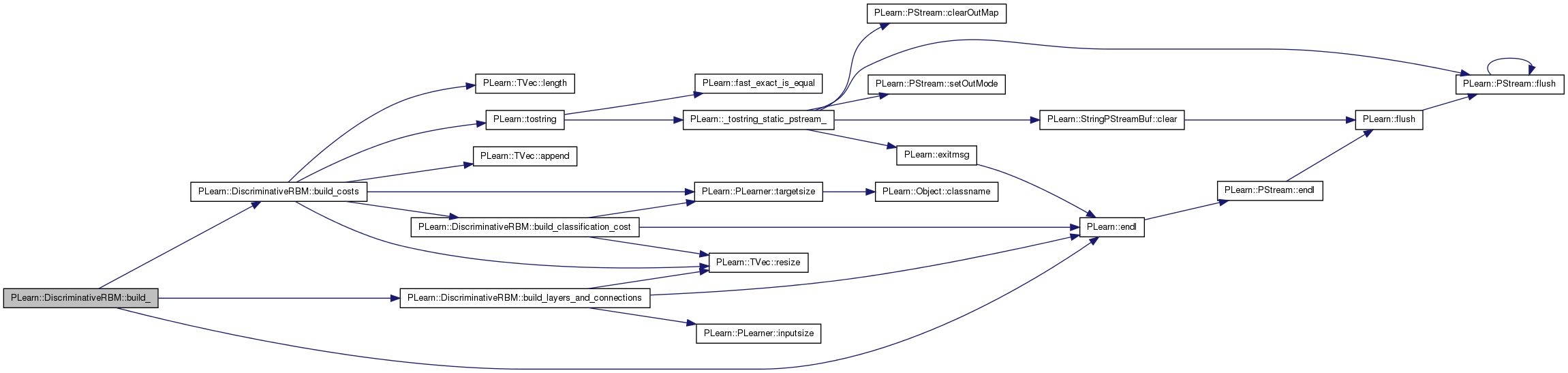

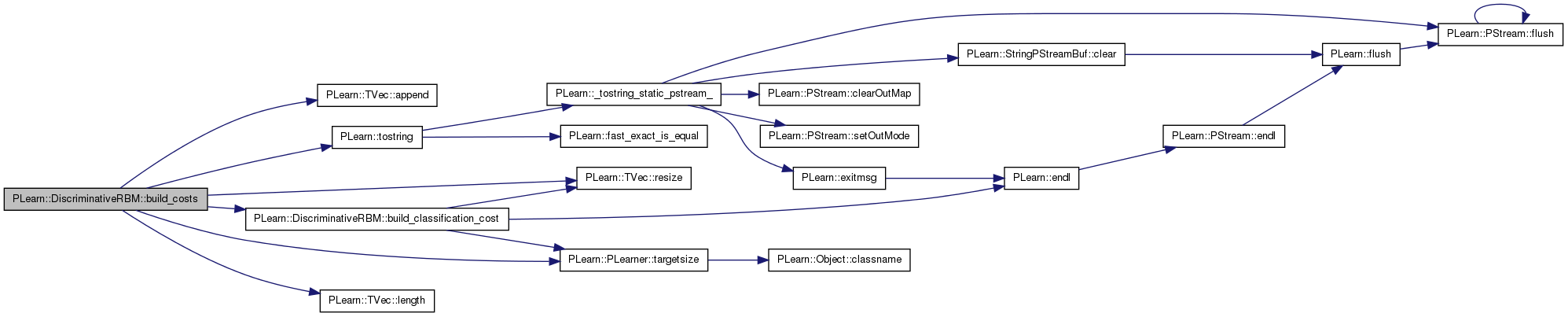

void PLearn::DiscriminativeRBM::build_costs () [private]

Definition at line 224 of file DiscriminativeRBM.cc.

References PLearn::TVec< T >::append(), build_classification_cost(), class_cost_index, cost_names, hamming_loss_index, i, PLearn::TVec< T >::length(), nll_cost_index, PLASSERT, PLearn::TVec< T >::resize(), PLearn::PLearner::targetsize(), and PLearn::tostring().

Referenced by build_().

```cpp
{
    cost_names.resize(0);

    // build the classification module, its cost and the joint layer
    build_classification_cost();

    int current_index = 0;
    cost_names.append("NLL");
    nll_cost_index = current_index;
    current_index++;

    cost_names.append("class_error");
    class_cost_index = current_index;
    current_index++;

    if( targetsize() > 1 )
    {
        cost_names.append("hamming_loss");
        hamming_loss_index = current_index;
        current_index++;
    }

    for( int i=0; i<targetsize(); i++ )
    {
        cost_names.append("class_error_" + tostring(i));
        current_index++;
    }

    PLASSERT( current_index == cost_names.length() );
}
```
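The cost layout produced by build_costs() can be mirrored in a few lines of standalone C++, which helps when reading the columns of a test-cost file. The struct and function names below are hypothetical; only the cost names and their order come from the listing above. Unlike the member variables, the sketch sets hamming_loss_index to -1 in the single-target case, where build_costs() leaves it untouched.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical mirror of the cost layout built by DiscriminativeRBM::build_costs().
struct CostLayout {
    std::vector<std::string> names;
    int nll_index = -1;
    int class_error_index = -1;
    int hamming_loss_index = -1;  // stays -1 when targetsize == 1
};

CostLayout make_cost_layout(int targetsize) {
    assert(targetsize >= 1 && "targetsize must be positive");
    CostLayout layout;
    layout.names.push_back("NLL");
    layout.nll_index = 0;
    layout.names.push_back("class_error");
    layout.class_error_index = 1;
    if (targetsize > 1) {
        layout.names.push_back("hamming_loss");
        layout.hamming_loss_index = 2;
    }
    // One per-target error column, as in the original loop.
    for (int i = 0; i < targetsize; ++i)
        layout.names.push_back("class_error_" + std::to_string(i));
    return layout;
}
```

For targetsize 1 this yields NLL, class_error, class_error_0; for targetsize 3 it yields NLL, class_error, hamming_loss, class_error_0, class_error_1, class_error_2. In the single-target case, computeCostsFromOutputs() fills only NLL and class_error, so the class_error_0 column stays at MISSING_VALUE; in the multitask case the per-task columns sit at hamming_loss_index + task + 1, as the listing of that method expects.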

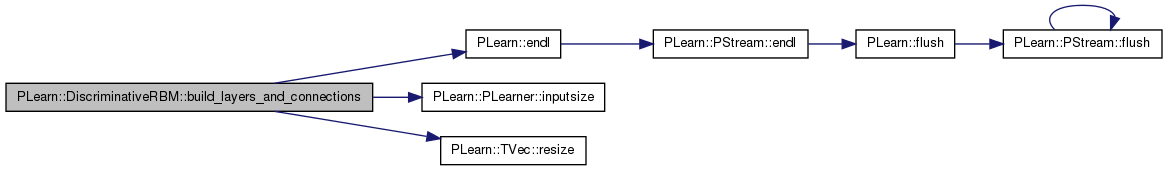

void PLearn::DiscriminativeRBM::build_layers_and_connections () [private]

Definition at line 259 of file DiscriminativeRBM.cc.

References before_class_output, class_gradient, class_output, connection, disc_neg_down_val, disc_neg_up_val, disc_pos_down_val, disc_pos_up_val, PLearn::endl(), gen_neg_down_val, gen_neg_up_val, gen_pos_down_val, gen_pos_up_val, hidden_layer, input_gradient, input_layer, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, n_classes, PLASSERT, PLERROR, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), semi_sup_neg_down_val, semi_sup_neg_up_val, semi_sup_pos_down_val, semi_sup_pos_up_val, and target_one_hot.

Referenced by build_().

```cpp
{
    MODULE_LOG << "build_layers_and_connections() called" << endl;

    if( !input_layer )
        PLERROR("In DiscriminativeRBM::build_layers_and_connections(): "
                "input_layer must be provided");

    if( !hidden_layer )
        PLERROR("In DiscriminativeRBM::build_layers_and_connections(): "
                "hidden_layer must be provided");

    if( !connection )
        PLERROR("DiscriminativeRBM::build_layers_and_connections(): \n"
                "connection must be provided");

    if( connection->up_size != hidden_layer->size ||
        connection->down_size != input_layer->size )
        PLERROR("DiscriminativeRBM::build_layers_and_connections(): \n"
                "connection's size (%d x %d) should be %d x %d",
                connection->up_size, connection->down_size,
                hidden_layer->size, input_layer->size);

    if( inputsize_ >= 0 )
        PLASSERT( input_layer->size == inputsize() );

    input_gradient.resize( inputsize() );
    class_output.resize( n_classes );
    before_class_output.resize( n_classes );
    class_gradient.resize( n_classes );

    target_one_hot.resize( n_classes );

    disc_pos_down_val.resize( inputsize() + n_classes );
    disc_pos_up_val.resize( hidden_layer->size );
    disc_neg_down_val.resize( inputsize() + n_classes );
    disc_neg_up_val.resize( hidden_layer->size );
    gen_pos_down_val.resize( inputsize() + n_classes );
    gen_pos_up_val.resize( hidden_layer->size );
    gen_neg_down_val.resize( inputsize() + n_classes );
    gen_neg_up_val.resize( hidden_layer->size );
    semi_sup_pos_down_val.resize( inputsize() + n_classes );
    semi_sup_pos_up_val.resize( hidden_layer->size );
    semi_sup_neg_down_val.resize( inputsize() + n_classes );
    semi_sup_neg_up_val.resize( hidden_layer->size );

    if( !input_layer->random_gen )
    {
        input_layer->random_gen = random_gen;
        input_layer->forget();
    }

    if( !hidden_layer->random_gen )
    {
        hidden_layer->random_gen = random_gen;
        hidden_layer->forget();
    }

    if( !connection->random_gen )
    {
        connection->random_gen = random_gen;
        connection->forget();
    }
}
```
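The checks and buffer sizes in build_layers_and_connections() reduce to simple arithmetic on the layer sizes: every *_down_val buffer holds an input vector concatenated with a class vector, and every *_up_val buffer holds one hidden-layer vector. The hypothetical helper below (not part of PLearn) reproduces the connection-shape check with the same error message and reports those lengths; the n_classes >= 2 precondition is borrowed from the PLASSERT in build_().

```cpp
#include <cassert>
#include <cstdio>
#include <stdexcept>

// Hypothetical summary of the sizes used by build_layers_and_connections().
struct BufferSizes {
    int down_val;   // inputsize + n_classes: visible units of the joint (x, y) layer
    int up_val;     // hidden_layer->size
    int per_class;  // n_classes: class_output, class_gradient, target_one_hot, ...
};

BufferSizes check_and_size(int input_size, int hidden_size, int n_classes,
                           int conn_up_size, int conn_down_size) {
    assert(input_size > 0 && hidden_size > 0 && n_classes >= 2);
    // Same condition as the PLERROR in the listing: connection must be hidden x input.
    if (conn_up_size != hidden_size || conn_down_size != input_size) {
        char msg[128];
        std::snprintf(msg, sizeof msg, "connection's size (%d x %d) should be %d x %d",
                      conn_up_size, conn_down_size, hidden_size, input_size);
        throw std::invalid_argument(msg);
    }
    return BufferSizes{input_size + n_classes, hidden_size, n_classes};
}
```

For example, a 784-input, 500-hidden, 10-class model needs a 500 x 784 connection and 794-element down buffers; passing a 784 x 500 connection throws, just as the listing raises a PLERROR.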

string PLearn::DiscriminativeRBM::classname () const [virtual]

Reimplemented from PLearn::Object.

Definition at line 55 of file DiscriminativeRBM.cc.

Referenced by train().

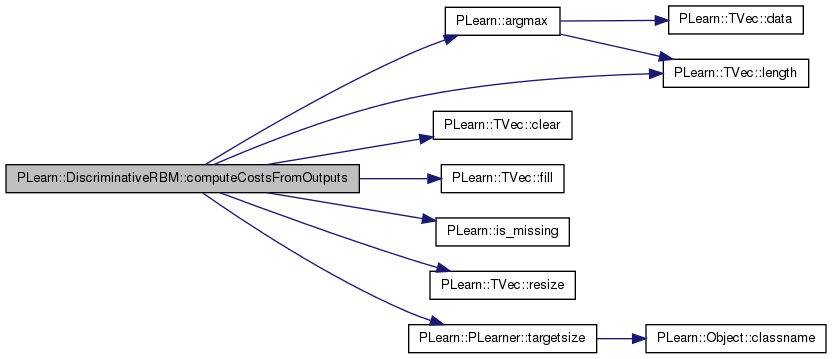

| void PLearn::DiscriminativeRBM::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 981 of file DiscriminativeRBM.cc.

References PLearn::argmax(), class_cost_index, class_output, PLearn::TVec< T >::clear(), cost_names, PLearn::TVec< T >::fill(), hamming_loss_index, PLearn::is_missing(), PLearn::TVec< T >::length(), MISSING_VALUE, nll_cost_index, pl_log, PLearn::TVec< T >::resize(), target_layer, and PLearn::PLearner::targetsize().

```cpp
{
    // Compute the costs from *already* computed output.
    costs.resize( cost_names.length() );
    costs.fill( MISSING_VALUE );

    if( targetsize() == 1 )
    {
        if( !is_missing(target[0]) && (target[0] >= 0) )
        {
            //classification_cost->fprop( output, target, costs[nll_cost_index] );
            //classification_cost->CostModule::fprop( output, target, costs[nll_cost_index] );
            costs[nll_cost_index] = -pl_log(output[(int) round(target[0])]);
            costs[class_cost_index] =
                (argmax(output) == (int) round(target[0]))? 0 : 1;
        }
    }
    else
    {
        costs.clear();

        // This doesn't work. gcc bug?
        //multitask_classification_cost->fprop( output, target,
        //                                      costs[nll_cost_index] );
        //multitask_classification_cost->CostModule::fprop( output,
        //                                                  target,
        //                                                  nll_cost );

        target_layer->fprop( output, class_output );
        target_layer->activation << output;
        target_layer->activation += target_layer->bias;
        target_layer->setExpectation( class_output );
        costs[ nll_cost_index ] = target_layer->fpropNLL( target );

        for( int task=0; task<targetsize(); task++)
        {
            if( class_output[task] > 0.5 && target[task] != 1)
            {
                costs[ hamming_loss_index ]++;
                costs[ hamming_loss_index + task + 1 ] = 1;
            }

            if( class_output[task] <= 0.5 && target[task] != 0)
            {
                costs[ hamming_loss_index ]++;
                costs[ hamming_loss_index + task + 1 ] = 1;
            }
        }

        if( costs[ hamming_loss_index ] > 0 )
            costs[ class_cost_index ] = 1;

        costs[ hamming_loss_index ] /= targetsize();
    }
}
```
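The two branches of computeCostsFromOutputs() can be restated without PLearn types. In this hypothetical standalone sketch, the single-target function mirrors the -log(output[target]) and argmax comparison directly (omitting the missing-target check, which in the original leaves the costs at MISSING_VALUE). The multitask function reproduces only the 0.5 thresholding and hamming-loss bookkeeping; it takes per-task probabilities as input and does not recompute the NLL that the original obtains from target_layer->fpropNLL().

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct SingleTargetCosts { double nll; double class_error; };

// Single-target branch: `probs` are class probabilities, `target` a class index.
SingleTargetCosts single_target_costs(const std::vector<double>& probs, double target) {
    const int label = static_cast<int>(std::lround(target));
    assert(label >= 0 && label < static_cast<int>(probs.size()));
    const auto predicted = std::max_element(probs.begin(), probs.end()) - probs.begin();
    return {-std::log(probs[label]), predicted == label ? 0.0 : 1.0};
}

struct MultitaskCosts {
    double class_error;               // 1 if any task is wrong
    double hamming_loss;              // fraction of wrong tasks
    std::vector<double> task_errors;  // per-task 0/1 errors (class_error_i)
};

// Multitask branch: `probs[t]` approximates p(y_t = 1 | x), `targets[t]` is 0 or 1.
MultitaskCosts multitask_costs(const std::vector<double>& probs,
                               const std::vector<double>& targets) {
    assert(!probs.empty() && probs.size() == targets.size());
    MultitaskCosts c{0.0, 0.0, std::vector<double>(probs.size(), 0.0)};
    for (std::size_t t = 0; t < probs.size(); ++t) {
        const bool wrong = (probs[t] > 0.5 && targets[t] != 1) ||
                           (probs[t] <= 0.5 && targets[t] != 0);
        if (wrong) { c.hamming_loss += 1; c.task_errors[t] = 1; }
    }
    if (c.hamming_loss > 0) c.class_error = 1;
    c.hamming_loss /= static_cast<double>(probs.size());
    return c;
}
```

For example, per-task probabilities {0.9, 0.2, 0.6, 0.4} against targets {1, 0, 0, 1} give errors on tasks 2 and 3, a hamming loss of 0.5 and a class_error of 1.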

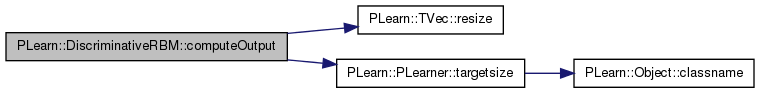

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 956 of file DiscriminativeRBM.cc.

References classification_module, multitask_classification_module, PLearn::TVec< T >::resize(), PLearn::PLearner::targetsize(), and test_time_classification_module.

```cpp
{
    // Compute the output from the input.
    output.resize(0);

    if( targetsize() == 1 )
    {
        if( test_time_classification_module )
        {
            test_time_classification_module->fprop( input,
                                                    output );
        }
        else
        {
            classification_module->fprop( input,
                                          output );
        }
    }
    else
    {
        multitask_classification_module->fprop( input,
                                                output );
    }
}
```
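For targetsize() > 1 the output comes from multitask_classification_module, which the option documentation describes as an approximate computation of p(y|x) using n_mean_field_iterations mean-field iterations. The standalone sketch below shows a generic mean-field scheme for an RBM with binary hidden units and binary target units. The update order, the all-zero initialization and all names are assumptions, not taken from the RBMMultitaskClassificationModule source. Note also that build_classification_cost() sets fprop_outputs_activation = true on that module, so its real output is target activations rather than the probabilities returned here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // Matrix[row][col]

double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Generic mean-field approximation of the marginals p(y_k = 1 | x) in an RBM
// whose visible layer is [x, y], with binary targets y and binary hidden units h.
// W: hidden x input, U: hidden x targets, c: hidden biases, d: target biases.
Vector mean_field_marginals(const Vector& x, const Matrix& W, const Vector& c,
                            const Matrix& U, const Vector& d, int n_iterations) {
    assert(n_iterations >= 1 && W.size() == c.size() && U.size() == c.size());
    const std::size_t n_hidden = c.size(), n_targets = d.size();
    Vector mu_y(n_targets, 0.0);  // start with all targets "off" (assumption)
    Vector mu_h(n_hidden);

    for (int it = 0; it < n_iterations; ++it) {
        // Hidden means given x and the current target means.
        for (std::size_t j = 0; j < n_hidden; ++j) {
            double a = c[j];
            for (std::size_t i = 0; i < x.size(); ++i) a += W[j][i] * x[i];
            for (std::size_t k = 0; k < n_targets; ++k) a += U[j][k] * mu_y[k];
            mu_h[j] = sigmoid(a);
        }
        // Target means given the hidden means.
        for (std::size_t k = 0; k < n_targets; ++k) {
            double a = d[k];
            for (std::size_t j = 0; j < n_hidden; ++j) a += U[j][k] * mu_h[j];
            mu_y[k] = sigmoid(a);
        }
    }
    return mu_y;
}
```

The 0.5 threshold applied in computeCostsFromOutputs() would then be applied to marginals of this kind.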

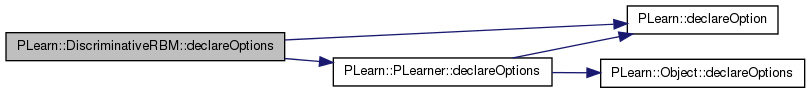

| void PLearn::DiscriminativeRBM::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 82 of file DiscriminativeRBM.cc.

References PLearn::OptionBase::buildoption, classification_cost, classification_module, connection, PLearn::declareOption(), PLearn::PLearner::declareOptions(), disc_decrease_ct, disc_learning_rate, do_not_use_discriminative_learning, gen_learning_every_n_samples, gen_learning_weight, hidden_layer, input_layer, joint_layer, PLearn::OptionBase::learntoption, multitask_classification_module, n_classes, n_classes_at_test_time, n_mean_field_iterations, PLearn::OptionBase::nosave, semi_sup_learning_weight, target_weights_L1_penalty_factor, target_weights_L2_penalty_factor, unlabeled_class_index_begin, use_exact_disc_gradient, and use_multi_conditional_learning.

```cpp
{
    declareOption(ol, "disc_learning_rate", &DiscriminativeRBM::disc_learning_rate,
                  OptionBase::buildoption,
                  "The learning rate used for discriminative learning.\n");

    declareOption(ol, "disc_decrease_ct", &DiscriminativeRBM::disc_decrease_ct,
                  OptionBase::buildoption,
                  "The decrease constant of the discriminative learning rate.\n");

    declareOption(ol, "use_exact_disc_gradient",
                  &DiscriminativeRBM::use_exact_disc_gradient,
                  OptionBase::buildoption,
                  "Indication that the exact gradient should be used for\n"
                  "discriminative learning (instead of the CD gradient).\n");

    declareOption(ol, "gen_learning_weight", &DiscriminativeRBM::gen_learning_weight,
                  OptionBase::buildoption,
                  "The weight of the generative learning term, for\n"
                  "hybrid discriminative/generative learning.\n");

    declareOption(ol, "use_multi_conditional_learning",
                  &DiscriminativeRBM::use_multi_conditional_learning,
                  OptionBase::buildoption,
                  "Indication that multi-conditional learning should\n"
                  "be used instead of generative learning.\n");

    declareOption(ol, "semi_sup_learning_weight",
                  &DiscriminativeRBM::semi_sup_learning_weight,
                  OptionBase::buildoption,
                  "The weight of the semi-supervised learning term, for\n"
                  "unsupervised learning on unlabeled data.\n");

    declareOption(ol, "n_classes", &DiscriminativeRBM::n_classes,
                  OptionBase::buildoption,
                  "Number of classes in the training set.\n"
                  );

    declareOption(ol, "input_layer", &DiscriminativeRBM::input_layer,
                  OptionBase::buildoption,
                  "The input layer of the RBM.\n");

    declareOption(ol, "hidden_layer", &DiscriminativeRBM::hidden_layer,
                  OptionBase::buildoption,
                  "The hidden layer of the RBM.\n");

    declareOption(ol, "connection", &DiscriminativeRBM::connection,
                  OptionBase::buildoption,
                  "The connection weights between the input and hidden layer.\n");

    declareOption(ol, "target_weights_L1_penalty_factor",
                  &DiscriminativeRBM::target_weights_L1_penalty_factor,
                  OptionBase::buildoption,
                  "Target weights' L1_penalty_factor.\n");

    declareOption(ol, "target_weights_L2_penalty_factor",
                  &DiscriminativeRBM::target_weights_L2_penalty_factor,
                  OptionBase::buildoption,
                  "Target weights' L2_penalty_factor.\n");

    declareOption(ol, "do_not_use_discriminative_learning",
                  &DiscriminativeRBM::do_not_use_discriminative_learning,
                  OptionBase::buildoption,
                  "Indication that discriminative learning should not be used.\n");

    declareOption(ol, "unlabeled_class_index_begin",
                  &DiscriminativeRBM::unlabeled_class_index_begin,
                  OptionBase::buildoption,
                  "The smallest index for the classes of the unlabeled data.\n");

    declareOption(ol, "n_classes_at_test_time",
                  &DiscriminativeRBM::n_classes_at_test_time,
                  OptionBase::buildoption,
                  "The number of classes to discriminate from during test.\n"
                  "The classes that will be discriminated are indexed\n"
                  "from 0 to n_classes_at_test_time.\n");

    declareOption(ol, "n_mean_field_iterations",
                  &DiscriminativeRBM::n_mean_field_iterations,
                  OptionBase::buildoption,
                  "Number of mean field iterations for the approximate computation of p(y|x)\n"
                  "for multitask learning.\n");

    declareOption(ol, "gen_learning_every_n_samples",
                  &DiscriminativeRBM::gen_learning_every_n_samples,
                  OptionBase::buildoption,
                  "Determines the frequency of a generative learning update.\n"
                  "For example, set this option to 100 in order to do an\n"
                  "update every 100 samples. The gen_learning_weight will\n"
                  "then be multiplied by 100.");

    declareOption(ol, "classification_module",
                  &DiscriminativeRBM::classification_module,
                  OptionBase::learntoption,
                  "The module computing the class probabilities.\n"
                  );

    declareOption(ol, "multitask_classification_module",
                  &DiscriminativeRBM::multitask_classification_module,
                  OptionBase::learntoption,
                  "The module approximating the multitask class probabilities.\n"
                  );

    declareOption(ol, "classification_cost",
                  &DiscriminativeRBM::classification_cost,
                  OptionBase::nosave,
                  "The module computing the classification cost function (NLL)"
                  " on top\n"
                  "of classification_module.\n"
                  );

    declareOption(ol, "joint_layer", &DiscriminativeRBM::joint_layer,
                  OptionBase::nosave,
                  "Concatenation of input_layer and the target layer\n"
                  "(that is inside classification_module).\n"
                  );

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```
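Two of the options declared above imply small schedules. The first helper below assumes the inverse-time decay disc_learning_rate / (1 + disc_decrease_ct * t), with t the number of samples seen; this is a common convention for such "decrease constants" but is an assumption here, since train() and setLearningRate() are not listed in this section. The second helper follows the gen_learning_every_n_samples description directly (one generative update every n samples, with gen_learning_weight multiplied by n); which sample in each block of n triggers the update is also an assumption. Both function names are hypothetical.

```cpp
#include <cassert>

// Assumed inverse-time decay of the discriminative learning rate;
// `samples_seen` plays the role of the learner's stage.
double disc_learning_rate_at(double disc_learning_rate, double disc_decrease_ct,
                             long samples_seen) {
    assert(disc_decrease_ct >= 0.0 && samples_seen >= 0);
    return disc_learning_rate /
           (1.0 + disc_decrease_ct * static_cast<double>(samples_seen));
}

// Weight of the generative term for sample index t (0-based): zero except on
// the last sample of each block of `every_n`, where it is scaled by `every_n`
// so that the total weight over many samples matches an update with weight
// gen_learning_weight on every sample.
double gen_weight_for_sample(double gen_learning_weight, int every_n, long t) {
    assert(every_n >= 1 && t >= 0);
    return (t % every_n == every_n - 1) ? gen_learning_weight * every_n : 0.0;
}
```

With disc_decrease_ct = 0 the learning rate stays constant; with gen_learning_every_n_samples = 1 every sample receives a generative update with weight gen_learning_weight, which matches the default set in the constructor.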

static const PPath& PLearn::DiscriminativeRBM::declaringFile () [inline, static]

DiscriminativeRBM * PLearn::DiscriminativeRBM::deepCopy (CopiesMap & copies) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DiscriminativeRBM.cc.

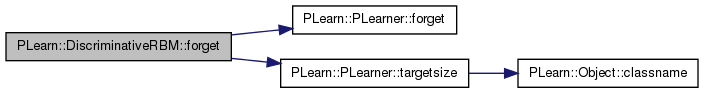

| void PLearn::DiscriminativeRBM::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 573 of file DiscriminativeRBM.cc.

References classification_cost, classification_module, connection, PLearn::PLearner::forget(), hidden_layer, input_layer, multitask_classification_module, and PLearn::PLearner::targetsize().

```cpp
{
    inherited::forget();

    input_layer->forget();
    hidden_layer->forget();
    connection->forget();

    if( targetsize() > 1 )
    {
        multitask_classification_module->forget();
    }
    else
    {
        classification_cost->forget();
        classification_module->forget();
    }
}
```

OptionList & PLearn::DiscriminativeRBM::getOptionList () const [virtual]

Reimplemented from PLearn::Object.

Definition at line 55 of file DiscriminativeRBM.cc.

OptionMap & PLearn::DiscriminativeRBM::getOptionMap () const [virtual]

Reimplemented from PLearn::Object.

Definition at line 55 of file DiscriminativeRBM.cc.

RemoteMethodMap & PLearn::DiscriminativeRBM::getRemoteMethodMap () const [virtual]

Reimplemented from PLearn::Object.

Definition at line 55 of file DiscriminativeRBM.cc.

TVec< string > PLearn::DiscriminativeRBM::getTestCostNames () const [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus by the test method).

Implements PLearn::PLearner.

Definition at line 1040 of file DiscriminativeRBM.cc.

References cost_names.

```cpp
{
    // Return the names of the costs computed by computeCostsFromOutputs
    // (these may or may not be exactly the same as what's returned by
    // getTrainCostNames).
    return cost_names;
}
```

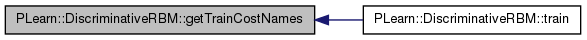

TVec< string > PLearn::DiscriminativeRBM::getTrainCostNames () const [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1049 of file DiscriminativeRBM.cc.

References cost_names.

Referenced by train().

    {
        return cost_names;
    }

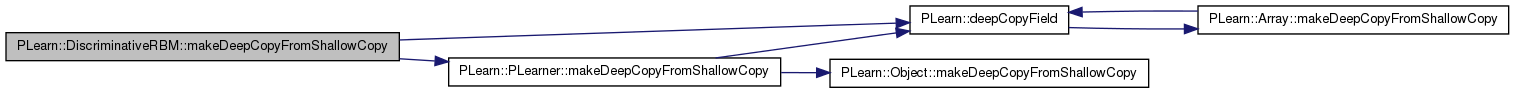

void PLearn::DiscriminativeRBM::makeDeepCopyFromShallowCopy (CopiesMap &copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 522 of file DiscriminativeRBM.cc.

References before_class_output, class_gradient, class_output, classification_cost, classification_module, connection, cost_names, PLearn::deepCopyField(), disc_neg_down_val, disc_neg_up_val, disc_pos_down_val, disc_pos_up_val, gen_neg_down_val, gen_neg_up_val, gen_pos_down_val, gen_pos_up_val, hidden_layer, input_gradient, input_layer, joint_connection, joint_layer, last_to_target, last_to_target_connection, PLearn::PLearner::makeDeepCopyFromShallowCopy(), multitask_classification_module, semi_sup_neg_down_val, semi_sup_neg_up_val, semi_sup_pos_down_val, semi_sup_pos_up_val, target_layer, target_one_hot, test_time_class_output, test_time_classification_module, unlabeled_class_output, and unlabeled_classification_module.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);

        deepCopyField(input_layer, copies);
        deepCopyField(hidden_layer, copies);
        deepCopyField(connection, copies);
        deepCopyField(classification_module, copies);
        deepCopyField(multitask_classification_module, copies);
        deepCopyField(cost_names, copies);
        deepCopyField(classification_cost, copies);
        deepCopyField(joint_layer, copies);
        deepCopyField(last_to_target, copies);
        deepCopyField(last_to_target_connection, copies);
        deepCopyField(joint_connection, copies);
        deepCopyField(target_layer, copies);
        deepCopyField(unlabeled_classification_module, copies);
        deepCopyField(test_time_classification_module, copies);
        deepCopyField(target_one_hot, copies);
        deepCopyField(disc_pos_down_val, copies);
        deepCopyField(disc_pos_up_val, copies);
        deepCopyField(disc_neg_down_val, copies);
        deepCopyField(disc_neg_up_val, copies);
        deepCopyField(gen_pos_down_val, copies);
        deepCopyField(gen_pos_up_val, copies);
        deepCopyField(gen_neg_down_val, copies);
        deepCopyField(gen_neg_up_val, copies);
        deepCopyField(semi_sup_pos_down_val, copies);
        deepCopyField(semi_sup_pos_up_val, copies);
        deepCopyField(semi_sup_neg_down_val, copies);
        deepCopyField(semi_sup_neg_up_val, copies);
        deepCopyField(input_gradient, copies);
        deepCopyField(class_output, copies);
        deepCopyField(before_class_output, copies);
        deepCopyField(unlabeled_class_output, copies);
        deepCopyField(test_time_class_output, copies);
        deepCopyField(class_gradient, copies);
    }
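As a rough illustration of why makeDeepCopyFromShallowCopy routes every pointer field through the same copies map, here is a self-contained sketch. Layer, ToyLearner, CopyMemo and this deepCopyField are hypothetical stand-ins, not PLearn's classes or its actual deepCopyField(). The point is only that a memo keyed on the original object keeps aliases such as target_layer (a pointer into classification_module) pointing at the copied module rather than at the original.

```cpp
#include <cassert>
#include <memory>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a PLearn sub-module (e.g. an RBM layer).
struct Layer {
    std::vector<double> bias;
};

// Memo from an original object to its copy; plays the role of the CopiesMap
// argument, but is not PLearn's type.
using CopyMemo = std::unordered_map<const Layer*, std::shared_ptr<Layer>>;

// Replaces 'field' by a deep copy, reusing the copy already made for the same
// original object so that two fields sharing an object still share it afterwards.
void deepCopyField(std::shared_ptr<Layer>& field, CopyMemo& copies) {
    if (!field) return;
    auto found = copies.find(field.get());
    if (found != copies.end()) {
        assert(found->second != nullptr);  // the memo never stores null copies
        field = found->second;
        return;
    }
    auto clone = std::make_shared<Layer>(*field);  // first visit: clone the object
    copies.emplace(field.get(), clone);
    field = clone;
}

// Hypothetical learner holding a module and a second pointer into it,
// like target_layer pointing at classification_module->target_layer.
struct ToyLearner {
    std::shared_ptr<Layer> module;
    std::shared_ptr<Layer> alias;

    // Called on a freshly made shallow copy: each pointer field is deep-copied
    // through the same memo, so 'alias' ends up pointing at the new 'module'.
    void makeDeepCopyFromShallowCopy(CopyMemo& copies) {
        deepCopyField(module, copies);
        deepCopyField(alias, copies);
    }
};
```

Without the shared memo, the two fields would each get their own clone and the copy would silently stop sharing state between them.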

int PLearn::DiscriminativeRBM::outputsize () const [virtual]

Returns the size of this learner's output (which may depend on its inputsize(), targetsize() and the options that are set).

Implements PLearn::PLearner.

Definition at line 565 of file DiscriminativeRBM.cc.

References n_classes, and n_classes_at_test_time.

    {
        return n_classes_at_test_time > 0 ? n_classes_at_test_time : n_classes;
    }

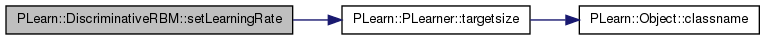

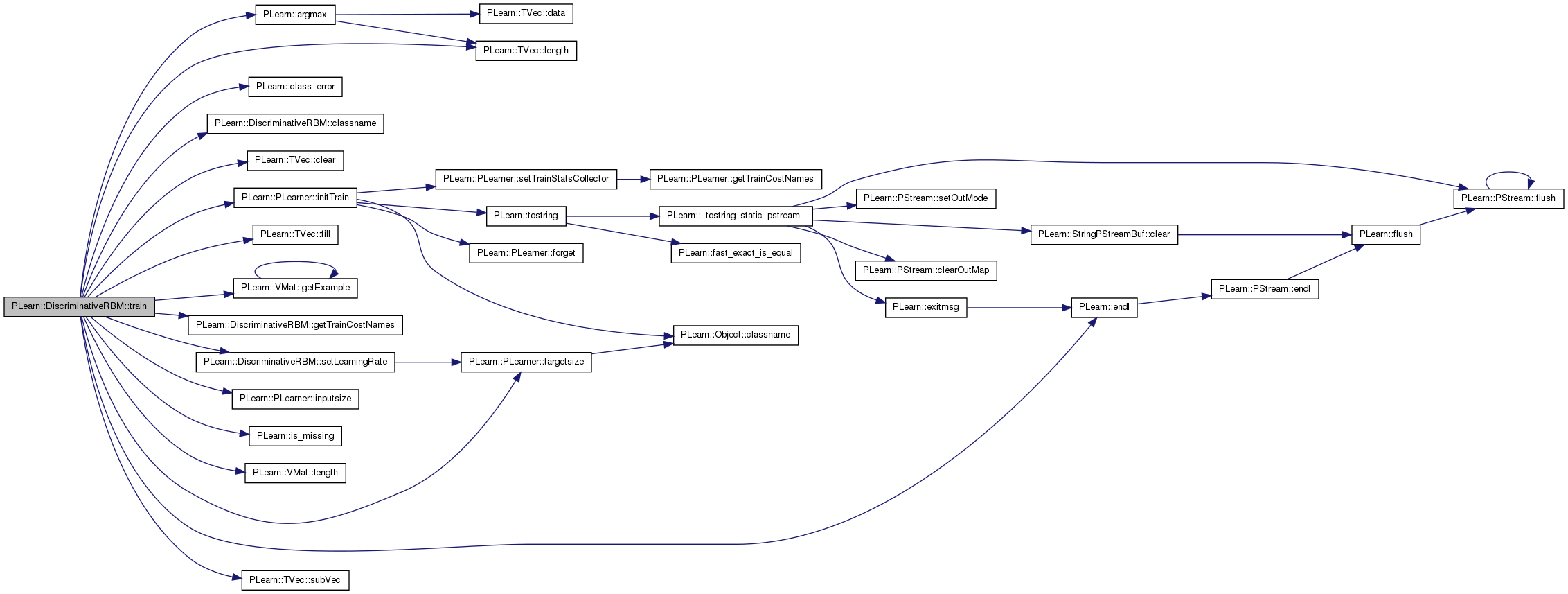

void PLearn::DiscriminativeRBM::setLearningRate (real the_learning_rate) [private]

Definition at line 1057 of file DiscriminativeRBM.cc.

References classification_cost, connection, hidden_layer, input_layer, last_to_target, target_layer, and PLearn::PLearner::targetsize().

Referenced by train().

    {
        input_layer->setLearningRate( the_learning_rate );
        hidden_layer->setLearningRate( the_learning_rate );
        connection->setLearningRate( the_learning_rate );
        target_layer->setLearningRate( the_learning_rate );
        last_to_target->setLearningRate( the_learning_rate );
        if( targetsize() == 1)
            classification_cost->setLearningRate( the_learning_rate );
        //else
        //    multitask_classification_cost->setLearningRate( the_learning_rate );
        //classification_module->setLearningRate( the_learning_rate );
    }
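train() does not pass disc_learning_rate to setLearningRate() unchanged: it decays it with disc_decrease_ct and, for the generative and semi-supervised updates, scales it further. The sketch below restates those expressions, read off the train() listing, as a free function. RateOptions, UpdateKind, learningRateFor and doesGenerativeUpdate are illustrative names, not PLearn API.

```cpp
#include <cassert>

// Which of train()'s updates a learning rate is computed for.
enum class UpdateKind { Discriminative, Generative, SemiSupervised };

// Hypothetical bundle of the options train() reads; the field names follow the
// option names documented on this page, but the struct is not part of PLearn.
struct RateOptions {
    double disc_learning_rate;
    double disc_decrease_ct;
    double gen_learning_weight;
    int gen_learning_every_n_samples;
    double semi_sup_learning_weight;
};

// Learning rate passed to setLearningRate() at 'stage', following the
// expressions in train(). 'weighted' stands for train_set->weightsize() != 0,
// in which case the example's weight multiplies the rate.
double learningRateFor(UpdateKind kind, const RateOptions& o, int stage,
                       bool weighted, double sample_weight) {
    assert(o.gen_learning_every_n_samples > 0);
    // Decayed base rate shared by all three updates.
    double rate = o.disc_learning_rate / (1.0 + o.disc_decrease_ct * stage);
    if (kind == UpdateKind::Generative)
        // Generative updates happen only every n samples, so their rate is scaled by n.
        rate *= o.gen_learning_every_n_samples * o.gen_learning_weight;
    else if (kind == UpdateKind::SemiSupervised)
        rate *= o.semi_sup_learning_weight;
    return weighted ? sample_weight * rate : rate;
}

// True at the stages where train() applies a generative update.
bool doesGenerativeUpdate(int stage, int offset, int every_n) {
    assert(every_n > 0);
    return (stage + offset) % every_n == 0;
}
```

For example, with disc_learning_rate = 0.5 and disc_decrease_ct = 1, the discriminative rate at stage 1 is 0.25; with gen_learning_every_n_samples = 4 and gen_learning_weight = 0.5, the generative rate at the same stage is 0.5.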

void PLearn::DiscriminativeRBM::train () [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

This makes sure that the samples on which generative learning is done change from one epoch to another.

Implements PLearn::PLearner.

Definition at line 594 of file DiscriminativeRBM.cc.

References PLearn::argmax(), before_class_output, class_cost_index, PLearn::class_error(), class_gradient, class_output, classification_cost, classification_module, classname(), PLearn::TVec< T >::clear(), connection, disc_decrease_ct, disc_learning_rate, disc_neg_down_val, disc_neg_up_val, disc_pos_down_val, disc_pos_up_val, do_not_use_discriminative_learning, PLearn::endl(), PLearn::TVec< T >::fill(), gen_learning_every_n_samples, gen_learning_weight, gen_neg_down_val, gen_neg_up_val, gen_pos_down_val, gen_pos_up_val, PLearn::VMat::getExample(), getTrainCostNames(), hamming_loss_index, hidden_layer, PLearn::PLearner::initTrain(), input_gradient, input_layer, PLearn::PLearner::inputsize(), PLearn::is_missing(), joint_connection, joint_layer, last_to_target_connection, PLearn::VMat::length(), PLearn::TVec< T >::length(), MISSING_VALUE, multitask_classification_module, n_classes, nll_cost_index, PLearn::PLearner::nstages, PLASSERT, PLERROR, PLearn::PLearner::report_progress, semi_sup_learning_weight, semi_sup_neg_down_val, semi_sup_neg_up_val, semi_sup_pos_down_val, semi_sup_pos_up_val, setLearningRate(), PLearn::PLearner::stage, PLearn::TVec< T >::subVec(), target_layer, target_one_hot, PLearn::PLearner::targetsize(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, unlabeled_class_index_begin, unlabeled_class_output, unlabeled_classification_module, use_exact_disc_gradient, and use_multi_conditional_learning.

    {
        MODULE_LOG << "train() called " << endl;
        MODULE_LOG << "stage = " << stage
                   << ", target nstages = " << nstages << endl;

        PLASSERT( train_set );

        Vec input( inputsize() );
        Vec target( targetsize() );
        int target_index = -1;
        real weight;
        real nll_cost;
        real class_error;

        TVec<string> train_cost_names = getTrainCostNames() ;
        Vec train_costs( train_cost_names.length() );
        train_costs.fill(MISSING_VALUE) ;

        int nsamples = train_set->length();
        int init_stage = stage;
        if( !initTrain() )
        {
            MODULE_LOG << "train() aborted" << endl;
            return;
        }

        PP<ProgressBar> pb;

        // clear stats of previous epoch
        train_stats->forget();

        if( report_progress )
            pb = new ProgressBar( "Training "
                                  + classname(),
                                  nstages - stage );

        int offset = (int)round(stage/nstages) % gen_learning_every_n_samples;

        for( ; stage<nstages ; stage++ )
        {
            train_set->getExample(stage%nsamples, input, target, weight);

            if( pb )
                pb->update( stage - init_stage + 1 );

            if( targetsize() == 1 )
            {
                target_one_hot.clear();
                if( !is_missing(target[0]) && (target[0] >= 0) )
                {
                    target_index = (int)round( target[0] );
                    target_one_hot[ target_index ] = 1;
                }
            }
            else
            {
                target_one_hot << target;
            }

            // Get CD stats...

            // ... for discriminative learning
            if( !do_not_use_discriminative_learning &&
                !use_exact_disc_gradient &&
                ( ( !is_missing(target[0]) && (target[0] >= 0) ) || targetsize() > 1 ) )
            {
                // Positive phase

                // Clamp visible units
                target_layer->sample << target_one_hot;
                input_layer->sample << input ;

                // Up pass
                joint_connection->setAsDownInput( joint_layer->sample );
                hidden_layer->getAllActivations( joint_connection );
                hidden_layer->computeExpectation();
                hidden_layer->generateSample();

                disc_pos_down_val << joint_layer->sample;
                disc_pos_up_val << hidden_layer->expectation;

                // Negative phase

                // Down pass
                last_to_target_connection->setAsUpInput( hidden_layer->sample );
                target_layer->getAllActivations( last_to_target_connection );
                target_layer->computeExpectation();
                target_layer->generateSample();

                // Up pass
                joint_connection->setAsDownInput( joint_layer->sample );
                hidden_layer->getAllActivations( joint_connection );
                hidden_layer->computeExpectation();

                disc_neg_down_val << joint_layer->sample;
                disc_neg_up_val << hidden_layer->expectation;
            }

            // ... for generative learning
            if( (stage + offset) % gen_learning_every_n_samples == 0 )
            {
                if( ( ( !is_missing(target[0]) && (target[0] >= 0) ) || targetsize() > 1 ) &&
                    gen_learning_weight > 0 )
                {
                    // Positive phase
                    if( !use_exact_disc_gradient && !do_not_use_discriminative_learning )
                    {
                        // Use previous computations
                        gen_pos_down_val << disc_pos_down_val;
                        gen_pos_up_val << disc_pos_up_val;
                        hidden_layer->setExpectation( gen_pos_up_val );
                        hidden_layer->generateSample();
                    }
                    else
                    {
                        // Clamp visible units
                        target_layer->sample << target_one_hot;
                        input_layer->sample << input ;

                        // Up pass
                        joint_connection->setAsDownInput( joint_layer->sample );
                        hidden_layer->getAllActivations( joint_connection );
                        hidden_layer->computeExpectation();
                        hidden_layer->generateSample();

                        gen_pos_down_val << joint_layer->sample;
                        gen_pos_up_val << hidden_layer->expectation;
                    }

                    // Negative phase
                    if( !use_multi_conditional_learning )
                    {
                        // Down pass
                        joint_connection->setAsUpInput( hidden_layer->sample );
                        joint_layer->getAllActivations( joint_connection );
                        joint_layer->computeExpectation();
                        joint_layer->generateSample();

                        // Up pass
                        joint_connection->setAsDownInput( joint_layer->sample );
                        hidden_layer->getAllActivations( joint_connection );
                        hidden_layer->computeExpectation();
                    }
                    else
                    {
                        target_layer->sample << target_one_hot;

                        // Down pass
                        connection->setAsUpInput( hidden_layer->sample );
                        input_layer->getAllActivations( connection );
                        input_layer->computeExpectation();
                        input_layer->generateSample();

                        // Up pass
                        joint_connection->setAsDownInput( joint_layer->sample );
                        hidden_layer->getAllActivations( joint_connection );
                        hidden_layer->computeExpectation();
                    }

                    gen_neg_down_val << joint_layer->sample;
                    gen_neg_up_val << hidden_layer->expectation;
                }
            }

            // ... and for semi-supervised learning
            if( targetsize() > 1 && semi_sup_learning_weight > 0 )
                PLERROR("DiscriminativeRBM::train(): semi-supervised learning "
                        "is not implemented yet for multi-task learning.");

            if( ( is_missing(target[0]) || target[0] < 0 ) && semi_sup_learning_weight > 0 )
            {
                // Positive phase

                // Clamp visible units and sample from p(y|x)
                if( unlabeled_classification_module )
                {
                    unlabeled_classification_module->fprop( input,
                                                            unlabeled_class_output );
                    class_output.clear();
                    class_output.subVec( unlabeled_class_index_begin,
                                         n_classes - unlabeled_class_index_begin )
                        << unlabeled_class_output;
                }
                else
                {
                    classification_module->fprop( input,
                                                  class_output );
                }
                target_layer->setExpectation( class_output );
                target_layer->generateSample();
                input_layer->sample << input ;

                // Up pass
                joint_connection->setAsDownInput( joint_layer->sample );
                hidden_layer->getAllActivations( joint_connection );
                hidden_layer->computeExpectation();
                hidden_layer->generateSample();

                semi_sup_pos_down_val << joint_layer->sample;
                semi_sup_pos_up_val << hidden_layer->expectation;

                // Negative phase

                // Down pass
                joint_connection->setAsUpInput( hidden_layer->sample );
                joint_layer->getAllActivations( joint_connection );
                joint_layer->computeExpectation();
                joint_layer->generateSample();

                // Up pass
                joint_connection->setAsDownInput( joint_layer->sample );
                hidden_layer->getAllActivations( joint_connection );
                hidden_layer->computeExpectation();

                semi_sup_neg_down_val << joint_layer->sample;
                semi_sup_neg_up_val << hidden_layer->expectation;
            }

            if( train_set->weightsize() == 0 )
                setLearningRate( disc_learning_rate / (1. + disc_decrease_ct * stage ));
            else
                setLearningRate( weight * disc_learning_rate / (1. + disc_decrease_ct * stage ));

            // Get gradient and update
            if( !do_not_use_discriminative_learning &&
                use_exact_disc_gradient &&
                ( ( !is_missing(target[0]) && (target[0] >= 0) ) || targetsize() > 1 ) )
            {
                if( targetsize() == 1)
                {
                    PLASSERT( target_index >= 0 );
                    classification_module->fprop( input, class_output );
                    // This doesn't work. gcc bug?
                    //classification_cost->fprop( class_output, target, nll_cost );
                    classification_cost->CostModule::fprop( class_output, target,
                                                            nll_cost );

                    class_error = ( argmax(class_output) == target_index ) ? 0: 1;
                    train_costs[nll_cost_index] = nll_cost;
                    train_costs[class_cost_index] = class_error;

                    classification_cost->bpropUpdate( class_output, target, nll_cost,
                                                      class_gradient );
                    classification_module->bpropUpdate( input, class_output,
                                                        input_gradient, class_gradient );

                    train_stats->update( train_costs );
                }
                else
                {
                    multitask_classification_module->fprop( input, before_class_output );
                    // This doesn't work. gcc bug?
                    //multitask_classification_cost->fprop( class_output, target,
                    //                                      nll_cost );
                    //multitask_classification_cost->CostModule::fprop( class_output,
                    //                                                  target,
                    //                                                  nll_cost );
                    target_layer->fprop( before_class_output, class_output );
                    target_layer->activation << before_class_output;
                    target_layer->activation += target_layer->bias;
                    target_layer->setExpectation( class_output );
                    nll_cost = target_layer->fpropNLL( target );

                    train_costs.clear();
                    train_costs[nll_cost_index] = nll_cost;
                    for( int task=0; task<targetsize(); task++)
                    {
                        if( class_output[task] > 0.5 && target[task] != 1)
                        {
                            train_costs[ hamming_loss_index ]++;
                            train_costs[ hamming_loss_index + task + 1 ] = 1;
                        }
                        if( class_output[task] <= 0.5 && target[task] != 0)
                        {
                            train_costs[ hamming_loss_index ]++;
                            train_costs[ hamming_loss_index + task + 1 ] = 1;
                        }
                    }

                    if( train_costs[ hamming_loss_index ] > 0 )
                        train_costs[ class_cost_index ] = 1;
                    train_costs[ hamming_loss_index ] /= targetsize();

                    //multitask_classification_cost->bpropUpdate(
                    //    class_output, target, nll_cost,
                    //    class_gradient );
                    class_gradient.clear();
                    target_layer->bpropNLL( target, nll_cost, class_gradient );
                    target_layer->update( class_gradient );
                    multitask_classification_module->bpropUpdate(
                        input, before_class_output,
                        input_gradient, class_gradient );

                    train_stats->update( train_costs );
                }
            }

            // CD Updates
            if( !do_not_use_discriminative_learning &&
                !use_exact_disc_gradient && ( !is_missing(target[0]) && (target[0] >= 0) ) )
            {
                joint_layer->update( disc_pos_down_val, disc_neg_down_val );
                hidden_layer->update( disc_pos_up_val, disc_neg_up_val );
                joint_connection->update( disc_pos_down_val, disc_pos_up_val,
                                          disc_neg_down_val, disc_neg_up_val);
            }

            if( (stage + offset) % gen_learning_every_n_samples == 0 )
            {
                if( ( !is_missing(target[0]) && (target[0] >= 0) ) && gen_learning_weight > 0 )
                {
                    if( train_set->weightsize() == 0 )
                        setLearningRate( gen_learning_every_n_samples * gen_learning_weight * disc_learning_rate /
                                         (1. + disc_decrease_ct * stage ));
                    else
                        setLearningRate( weight * gen_learning_every_n_samples * gen_learning_weight * disc_learning_rate /
                                         (1. + disc_decrease_ct * stage ));
                    joint_layer->update( gen_pos_down_val, gen_neg_down_val );
                    hidden_layer->update( gen_pos_up_val, gen_neg_up_val );
                    joint_connection->update( gen_pos_down_val, gen_pos_up_val,
                                              gen_neg_down_val, gen_neg_up_val);
                }
            }

            if( ( is_missing(target[0]) || (target[0] < 0) ) && semi_sup_learning_weight > 0 )
            {
                if( train_set->weightsize() == 0 )
                    setLearningRate( semi_sup_learning_weight * disc_learning_rate /
                                     (1. + disc_decrease_ct * stage ));
                else
                    setLearningRate( weight * semi_sup_learning_weight * disc_learning_rate /
                                     (1. + disc_decrease_ct * stage ));
                joint_layer->update( semi_sup_pos_down_val, semi_sup_neg_down_val );
                hidden_layer->update( semi_sup_pos_up_val, semi_sup_neg_up_val );
                joint_connection->update( semi_sup_pos_down_val, semi_sup_pos_up_val,
                                          semi_sup_neg_down_val, semi_sup_neg_up_val);
            }
        }

        train_stats->finalize();
    }
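The positive and negative phases in train() follow the usual one-step Contrastive Divergence (CD-1) recipe: clamp the visible units, take hidden expectations and a hidden sample, do one down pass and one up pass, then update with the difference between positive and negative statistics. The standalone sketch below shows that recipe for a plain binary RBM using std::vector. TinyRBM and its methods are hypothetical and do not reproduce PLearn's RBMLayer/RBMConnection classes or their update conventions. One difference worth noting: in the discriminative branch of train() only the target part of the visible layer is resampled in the negative phase, whereas this generic version resamples the whole visible vector, as the generative branch does.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

// Minimal binary-binary RBM with a single CD-1 update (illustrative only).
struct TinyRBM {
    int n_visible, n_hidden;
    std::vector<double> W;      // n_hidden x n_visible, row-major
    std::vector<double> b_vis;  // visible biases
    std::vector<double> c_hid;  // hidden biases

    TinyRBM(int nv, int nh)
        : n_visible(nv), n_hidden(nh), W(nv * nh, 0.0), b_vis(nv, 0.0), c_hid(nh, 0.0) {}

    static double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

    // p(h_j = 1 | v) for every hidden unit (up pass + expectation).
    std::vector<double> hiddenExpectation(const std::vector<double>& v) const {
        std::vector<double> h(n_hidden);
        for (int j = 0; j < n_hidden; ++j) {
            double a = c_hid[j];
            for (int i = 0; i < n_visible; ++i) a += W[j * n_visible + i] * v[i];
            h[j] = sigmoid(a);
        }
        return h;
    }

    // p(v_i = 1 | h) for every visible unit (down pass + expectation).
    std::vector<double> visibleExpectation(const std::vector<double>& h) const {
        std::vector<double> v(n_visible);
        for (int i = 0; i < n_visible; ++i) {
            double a = b_vis[i];
            for (int j = 0; j < n_hidden; ++j) a += W[j * n_visible + i] * h[j];
            v[i] = sigmoid(a);
        }
        return v;
    }

    // Bernoulli sample from a vector of probabilities.
    static std::vector<double> sample(const std::vector<double>& p, std::mt19937& rng) {
        std::uniform_real_distribution<double> u(0.0, 1.0);
        std::vector<double> s(p.size());
        for (size_t k = 0; k < p.size(); ++k) s[k] = u(rng) < p[k] ? 1.0 : 0.0;
        return s;
    }

    // One CD-1 step on a single visible vector v0 with learning rate lr.
    void cd1Update(const std::vector<double>& v0, double lr, std::mt19937& rng) {
        assert(static_cast<int>(v0.size()) == n_visible);
        // Positive phase: clamp v0, keep p(h|v0) as statistics, sample h0.
        std::vector<double> pos_up = hiddenExpectation(v0);
        std::vector<double> h0 = sample(pos_up, rng);
        // Negative phase: down pass to sample v1, then up pass for p(h|v1).
        std::vector<double> v1 = sample(visibleExpectation(h0), rng);
        std::vector<double> neg_up = hiddenExpectation(v1);
        // Update with lr * (positive statistics - negative statistics).
        for (int j = 0; j < n_hidden; ++j) {
            for (int i = 0; i < n_visible; ++i)
                W[j * n_visible + i] += lr * (pos_up[j] * v0[i] - neg_up[j] * v1[i]);
            c_hid[j] += lr * (pos_up[j] - neg_up[j]);
        }
        for (int i = 0; i < n_visible; ++i) b_vis[i] += lr * (v0[i] - v1[i]);
    }
};
```

Calling cd1Update repeatedly on one binary pattern can only raise the visible biases of its active units and only lower those of its inactive units, which is the expected pull toward reconstructing that pattern.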

Reimplemented from PLearn::PLearner.

Definition at line 214 of file DiscriminativeRBM.h.

Vec PLearn::DiscriminativeRBM::before_class_output [mutable, protected]

Definition at line 270 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::DiscriminativeRBM::class_cost_index [protected]

Keeps the index of the class_error cost in train_costs.

Definition at line 279 of file DiscriminativeRBM.h.

Referenced by build_costs(), computeCostsFromOutputs(), and train().

Vec PLearn::DiscriminativeRBM::class_gradient [mutable, protected]

Definition at line 273 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::class_output [mutable, protected]

Definition at line 269 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), computeCostsFromOutputs(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::classification_cost

The module computing the classification cost function (NLL) on top of classification_module.

Definition at line 142 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().
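When use_exact_disc_gradient is set and there is a single target, train() records two costs per example: the NLL produced by classification_cost and a 0/1 error obtained by comparing argmax(class_output) with the target index. A minimal sketch of those two numbers, assuming class_output already holds normalized class probabilities and that the NLL is -log p(target | x). ClassCosts and computeClassCosts are illustrative names, not PLearn functions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <iterator>
#include <vector>

// The two costs recorded on the exact-gradient, single-target path.
struct ClassCosts {
    double nll;          // negative log-likelihood of the true class
    double class_error;  // 0 if the most probable class is the target, else 1
};

// 'probs' plays the role of class_output (p(y|x) for every class);
// 'target_index' is the integer class label.
ClassCosts computeClassCosts(const std::vector<double>& probs, int target_index) {
    assert(target_index >= 0 && target_index < static_cast<int>(probs.size()));
    double nll = -std::log(probs[target_index]);
    // std::max_element returns the first position holding the largest probability.
    int predicted = static_cast<int>(std::distance(
        probs.begin(), std::max_element(probs.begin(), probs.end())));
    return {nll, predicted == target_index ? 0.0 : 1.0};
}
```

For class probabilities (0.25, 0.5, 0.25) and target 1, this gives an NLL of log 2 and a classification error of 0.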

PLearn::DiscriminativeRBM::classification_module

The module computing the probabilities of the different classes.

Definition at line 131 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().
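With a single categorical target and binary hidden units, p(y|x) can be computed exactly because the hidden units sum out analytically: p(y|x) is proportional to exp(d_y + sum_j softplus(c_j + U_jy + sum_i W_ji x_i)). The sketch below evaluates that expression, assuming the standard discriminative RBM parameterization (input weights W, target weights U, hidden biases c, class biases d). These names and the row-major layout are illustrative; the code is not PLearn's classification_module.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Numerically stable softplus(a) = log(1 + exp(a)).
double softplus(double a) {
    return a > 0 ? a + std::log1p(std::exp(-a)) : std::log1p(std::exp(a));
}

// Exact p(y|x) for a discriminative RBM. W is n_hidden x n_input,
// U is n_hidden x n_classes (both row-major), c has n_hidden entries, d has n_classes.
std::vector<double> classProbabilities(const std::vector<double>& x,
                                       const std::vector<double>& W,
                                       const std::vector<double>& U,
                                       const std::vector<double>& c,
                                       const std::vector<double>& d) {
    const size_t n_hidden = c.size(), n_classes = d.size(), n_input = x.size();
    assert(n_classes > 0);
    assert(W.size() == n_hidden * n_input && U.size() == n_hidden * n_classes);
    // Input contribution to each hidden unit, shared by all classes.
    std::vector<double> act(n_hidden);
    for (size_t j = 0; j < n_hidden; ++j) {
        act[j] = c[j];
        for (size_t i = 0; i < n_input; ++i) act[j] += W[j * n_input + i] * x[i];
    }
    // Unnormalized log-probabilities (negative free energies) for each class.
    std::vector<double> logp(n_classes);
    for (size_t y = 0; y < n_classes; ++y) {
        logp[y] = d[y];
        for (size_t j = 0; j < n_hidden; ++j) logp[y] += softplus(act[j] + U[j * n_classes + y]);
    }
    // Softmax, subtracting the maximum for numerical stability.
    double m = *std::max_element(logp.begin(), logp.end());
    double z = 0.0;
    for (double& v : logp) { v = std::exp(v - m); z += v; }
    for (double& v : logp) v /= z;
    return logp;
}
```

The cost is linear in the number of classes, which is why an exact computation is practical here while the multi-task case relies on an approximation.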

PLearn::DiscriminativeRBM::connection

The connection weights between the input and hidden layer.

Definition at line 100 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), build_layers_and_connections(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().

PLearn::DiscriminativeRBM::cost_names

The computed cost names.

Definition at line 138 of file DiscriminativeRBM.h.

Referenced by build_costs(), computeCostsFromOutputs(), getTestCostNames(), getTrainCostNames(), and makeDeepCopyFromShallowCopy().

PLearn::DiscriminativeRBM::disc_decrease_ct

The decrease constant of the discriminative learning rate.

Definition at line 72 of file DiscriminativeRBM.h.

Referenced by declareOptions(), and train().

PLearn::DiscriminativeRBM::disc_learning_rate

The learning rate used for discriminative learning.

Definition at line 69 of file DiscriminativeRBM.h.

Referenced by declareOptions(), and train().

Vec PLearn::DiscriminativeRBM::disc_neg_down_val [mutable, protected]

Definition at line 254 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::disc_neg_up_val [mutable, protected]

Definition at line 255 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::disc_pos_down_val [mutable, protected]

Definition at line 252 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::disc_pos_up_val [mutable, protected]

Definition at line 253 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::do_not_use_discriminative_learning

Indication that discriminative learning should not be used.

Definition at line 109 of file DiscriminativeRBM.h.

Referenced by declareOptions(), and train().

PLearn::DiscriminativeRBM::gen_learning_every_n_samples

Determines the frequency of a generative learning update.

For example, set this option to 100 in order to do an update every 100 samples. The gen_learning_weight will then be multiplied by 100.

Definition at line 127 of file DiscriminativeRBM.h.

Referenced by declareOptions(), and train().

PLearn::DiscriminativeRBM::gen_learning_weight

The weight of the generative learning term, for hybrid discriminative/generative learning.

Definition at line 80 of file DiscriminativeRBM.h.

Referenced by build_(), declareOptions(), and train().

Vec PLearn::DiscriminativeRBM::gen_neg_down_val [mutable, protected]

Definition at line 259 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::gen_neg_up_val [mutable, protected]

Definition at line 260 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::gen_pos_down_val [mutable, protected]

Definition at line 257 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::gen_pos_up_val [mutable, protected]

Definition at line 258 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::DiscriminativeRBM::hamming_loss_index [protected]

Keeps the index of the hamming_loss cost in train_costs.

Definition at line 282 of file DiscriminativeRBM.h.

Referenced by build_costs(), computeCostsFromOutputs(), and train().

PLearn::DiscriminativeRBM::hidden_layer

The hidden layer of the RBM.

Definition at line 97 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), build_layers_and_connections(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().

Vec PLearn::DiscriminativeRBM::input_gradient [mutable, protected]

Temporary variables for gradient descent.

Definition at line 268 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::input_layer

The input layer of the RBM.

Definition at line 94 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), build_layers_and_connections(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().

PP<RBMConnection> PLearn::DiscriminativeRBM::joint_connection [protected]

Connection weights between the hidden layer and the visible layer (pointer to classification_module->joint_connection)

Definition at line 237 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::joint_layer

Concatenation of input_layer and the target layer (which is inside classification_module).

Definition at line 146 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::last_to_target

Matrix connection weights between the hidden layer and the target layer (pointer to classification_module->last_to_target).

Definition at line 229 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), makeDeepCopyFromShallowCopy(), and setLearningRate().

PLearn::DiscriminativeRBM::last_to_target_connection

Connection weights between the hidden layer and the target layer (pointer to classification_module->last_to_target).

Definition at line 233 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::multitask_classification_module

The module for approximate computation of the probabilities of the different classes.

Definition at line 135 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::n_classes

Number of classes in the training set.

Definition at line 91 of file DiscriminativeRBM.h.

Referenced by build_(), build_classification_cost(), build_layers_and_connections(), declareOptions(), outputsize(), and train().

PLearn::DiscriminativeRBM::n_classes_at_test_time

The number of classes to discriminate between at test time.

The classes that will be discriminated are indexed from 0 to n_classes_at_test_time - 1.

Definition at line 117 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), declareOptions(), and outputsize().

Number of mean field iterations for the approximate computation of p(y|x) for multitask learning.

Definition at line 121 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), and declareOptions().
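This page does not show how the multi-task module carries out its mean field iterations, so what follows is only a sketch of one standard mean-field scheme for several binary targets sharing a binary hidden layer. Hidden means and target means are updated alternately, each through a sigmoid of its total input, for a fixed number of iterations. meanFieldTargets, the 0.5 starting point for the targets and the row-major U layout are assumptions, not PLearn's code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

double sigm(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Mean-field approximation of p(y_k = 1 | x) for n_tasks binary targets.
// input_act[j] = c_j + sum_i W_ji x_i is assumed to be precomputed from x;
// U is n_hidden x n_tasks (row-major) and d holds the target biases.
std::vector<double> meanFieldTargets(const std::vector<double>& input_act,
                                     const std::vector<double>& U,
                                     const std::vector<double>& d,
                                     int n_iterations) {
    const size_t n_hidden = input_act.size(), n_tasks = d.size();
    assert(U.size() == n_hidden * n_tasks && n_iterations > 0);
    std::vector<double> tau(n_tasks, 0.5), mu(n_hidden);  // targets start at 0.5
    for (int it = 0; it < n_iterations; ++it) {
        // Hidden means given the current target means.
        for (size_t j = 0; j < n_hidden; ++j) {
            double a = input_act[j];
            for (size_t k = 0; k < n_tasks; ++k) a += U[j * n_tasks + k] * tau[k];
            mu[j] = sigm(a);
        }
        // Target means given the current hidden means.
        for (size_t k = 0; k < n_tasks; ++k) {
            double a = d[k];
            for (size_t j = 0; j < n_hidden; ++j) a += U[j * n_tasks + k] * mu[j];
            tau[k] = sigm(a);
        }
    }
    return tau;
}
```

With all target weights at zero the targets decouple from the hidden layer and each tau_k reduces to sigm(d_k), a convenient sanity check.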

int PLearn::DiscriminativeRBM::nll_cost_index [protected]

Keeps the index of the NLL cost in train_costs.

Definition at line 276 of file DiscriminativeRBM.h.

Referenced by build_costs(), computeCostsFromOutputs(), and train().

PLearn::DiscriminativeRBM::semi_sup_learning_weight

The weight of the semi-supervised learning term, for unsupervised learning on unlabeled data.

Definition at line 88 of file DiscriminativeRBM.h.

Referenced by build_(), declareOptions(), and train().

Vec PLearn::DiscriminativeRBM::semi_sup_neg_down_val [mutable, protected]

Definition at line 264 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::semi_sup_neg_up_val [mutable, protected]

Definition at line 265 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::semi_sup_pos_down_val [mutable, protected]

Definition at line 262 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::DiscriminativeRBM::semi_sup_pos_up_val [mutable, protected]

Definition at line 263 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().

PP<RBMLayer> PLearn::DiscriminativeRBM::target_layer [protected]

Part of the RBM visible layer corresponding to the target (pointer to classification_module->target_layer)

Definition at line 241 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), computeCostsFromOutputs(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().

Vec PLearn::DiscriminativeRBM::target_one_hot [mutable, protected]

Temporary variables for Contrastive Divergence.

Definition at line 250 of file DiscriminativeRBM.h.

Referenced by build_layers_and_connections(), makeDeepCopyFromShallowCopy(), and train().
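For a single target, train() rebuilds target_one_hot at every stage. The vector is cleared, and if the label is present and non-negative, the entry at round(target[0]) is set to 1. A missing or negative label leaves it at zero, which is also how the example is recognized as unlabeled for semi-supervised learning. A small sketch of that rule, assuming NaN stands in for MISSING_VALUE; fillOneHot is a hypothetical helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Fills 'one_hot' from a scalar label and returns the class index, or -1 when the
// label is missing (NaN here, as an assumption) or negative, i.e. unlabeled.
int fillOneHot(double label, std::vector<double>& one_hot) {
    std::fill(one_hot.begin(), one_hot.end(), 0.0);  // mirrors target_one_hot.clear()
    if (std::isnan(label) || label < 0) return -1;
    int index = static_cast<int>(std::lround(label));
    assert(index < static_cast<int>(one_hot.size()));
    one_hot[index] = 1.0;
    return index;
}
```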

Target weights' L1_penalty_factor.

Definition at line 103 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), and declareOptions().

Target weights' L2_penalty_factor.

Definition at line 106 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), and declareOptions().

Vec PLearn::DiscriminativeRBM::test_time_class_output [mutable, protected]

Definition at line 272 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), and makeDeepCopyFromShallowCopy().

PLearn::DiscriminativeRBM::test_time_classification_module

Classification module for when n_classes_at_test_time != n_classes.

Definition at line 247 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), computeOutput(), and makeDeepCopyFromShallowCopy().

PLearn::DiscriminativeRBM::unlabeled_class_index_begin

The smallest index for the classes of the unlabeled data.

Definition at line 112 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), declareOptions(), and train().
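For unlabeled examples, train() samples a target from p(y|x) before the positive phase. When unlabeled_classification_module is used, its output covers only the classes from unlabeled_class_index_begin to n_classes - 1, so train() copies it into the tail of a zeroed class_output vector. The sketch below mirrors that step and then draws a label, assuming the target layer's generateSample() amounts to drawing one class index from these probabilities. embedUnlabeledProbs and sampleClass are hypothetical names.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Copies probabilities over classes [begin, n_classes) into a full n_classes-long
// vector whose first 'begin' entries stay zero (as done with class_output.subVec).
std::vector<double> embedUnlabeledProbs(const std::vector<double>& unlabeled_probs,
                                        int n_classes, int begin) {
    assert(begin >= 0 && n_classes - begin == static_cast<int>(unlabeled_probs.size()));
    std::vector<double> full(n_classes, 0.0);
    for (int k = begin; k < n_classes; ++k) full[k] = unlabeled_probs[k - begin];
    return full;
}

// Draws a class index with probability proportional to 'probs'; classes with
// zero probability (those below 'begin') are never drawn.
int sampleClass(const std::vector<double>& probs, std::mt19937& rng) {
    std::discrete_distribution<int> dist(probs.begin(), probs.end());
    return dist(rng);
}
```

Because the leading entries are zero, a sampled label for an unlabeled example always falls in the range reserved for unlabeled classes.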

Vec PLearn::DiscriminativeRBM::unlabeled_class_output [mutable, protected]

Definition at line 271 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::unlabeled_classification_module

Classification module for when unlabeled_class_index_begin != 0.

Definition at line 244 of file DiscriminativeRBM.h.

Referenced by build_classification_cost(), makeDeepCopyFromShallowCopy(), and train().

PLearn::DiscriminativeRBM::use_exact_disc_gradient

Indication that the exact gradient should be used for discriminative learning (instead of the CD gradient).

Definition at line 76 of file DiscriminativeRBM.h.

Referenced by declareOptions(), and train().

PLearn::DiscriminativeRBM::use_multi_conditional_learning

Indication that multi-conditional learning should be used instead of generative learning.

Definition at line 84 of file DiscriminativeRBM.h.

Referenced by declareOptions(), and train().

1.7.4
