PLearn 0.1

Neural net, learned layer-wise in a greedy fashion.

#include <DeepBeliefNet.h>

Public Member Functions | |

| DeepBeliefNet () | |

| Default constructor. | |

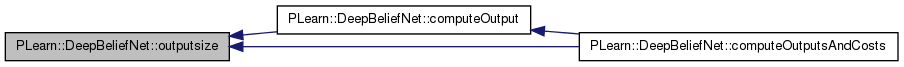

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). | |

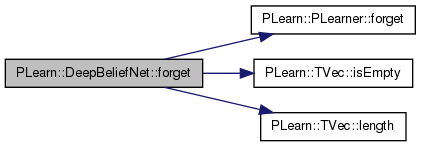

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

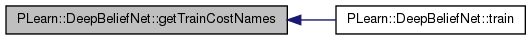

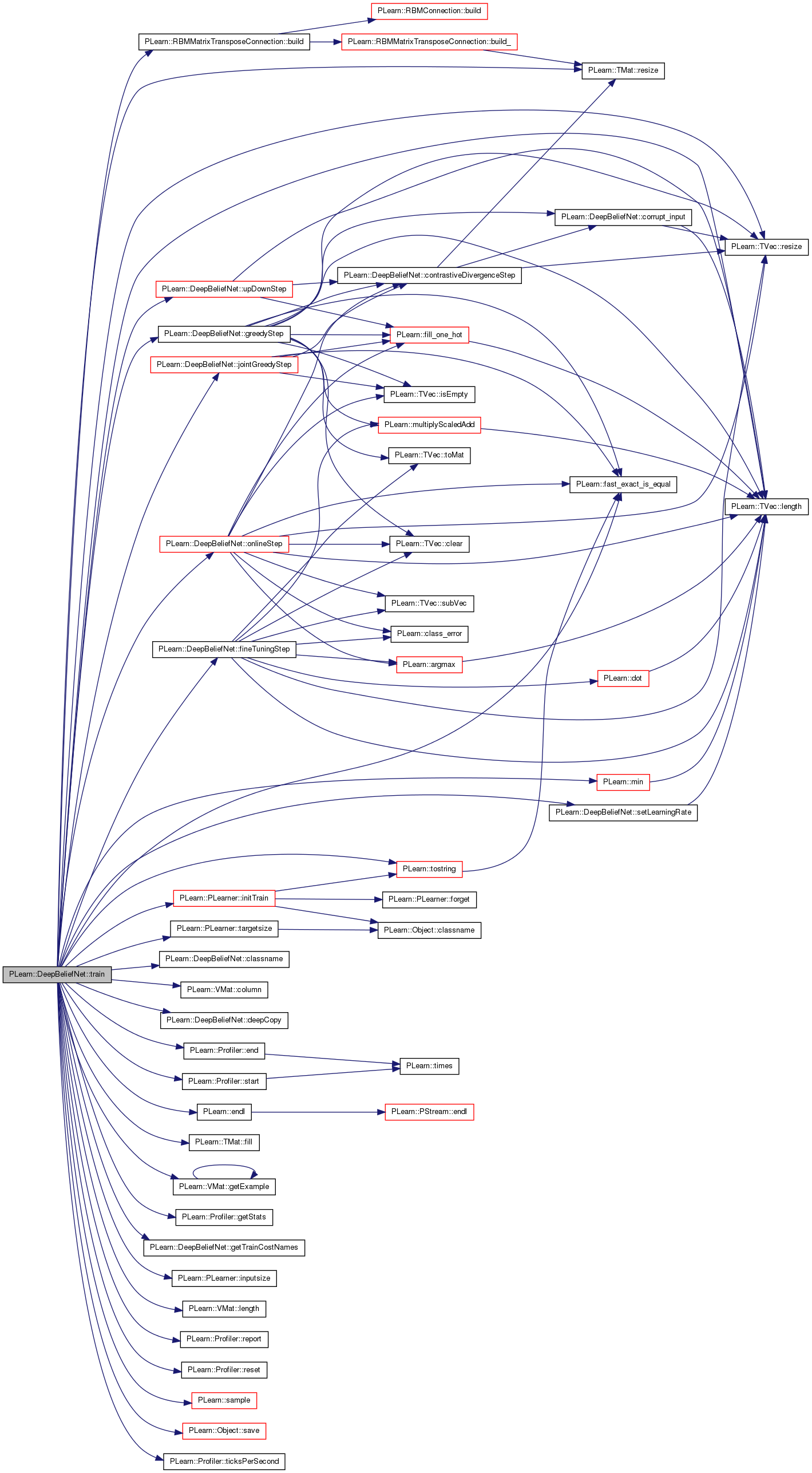

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |
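A minimal sketch of the stage/nstages contract described above. It uses a hypothetical `ToyLearner` (not a PLearn class), in which a vector of per-stage costs stands in for `train_stats`. Because `train()` starts from the current `stage`, raising `nstages` and calling `train()` again resumes training rather than restarting it. Only `forget()` resets `stage` to 0.

```cpp
#include <cassert>
#include <vector>

// Hypothetical toy learner illustrating the stage/nstages contract;
// ToyLearner and trainOneStage() are illustrative names, not PLearn API.
struct ToyLearner {
    int stage = 0;                    // number of training stages completed so far
    int nstages = 0;                  // target number of stages
    std::vector<double> train_costs;  // stands in for the train_stats collector

    // Back to a fresh state: stage 0, no recorded costs.
    void forget() {
        stage = 0;
        train_costs.clear();
    }

    // Placeholder for one on-line update; returns a made-up cost.
    double trainOneStage() { return 1.0 / (stage + 1); }

    // Resume from the current stage and stop exactly at nstages,
    // recording one cost per stage performed.
    void train() {
        while (stage < nstages) {
            train_costs.push_back(trainOneStage());
            ++stage;
        }
    }
};
```

For example, training to `nstages = 3` and then to `nstages = 5` performs five stages in total, not eight.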

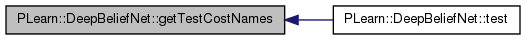

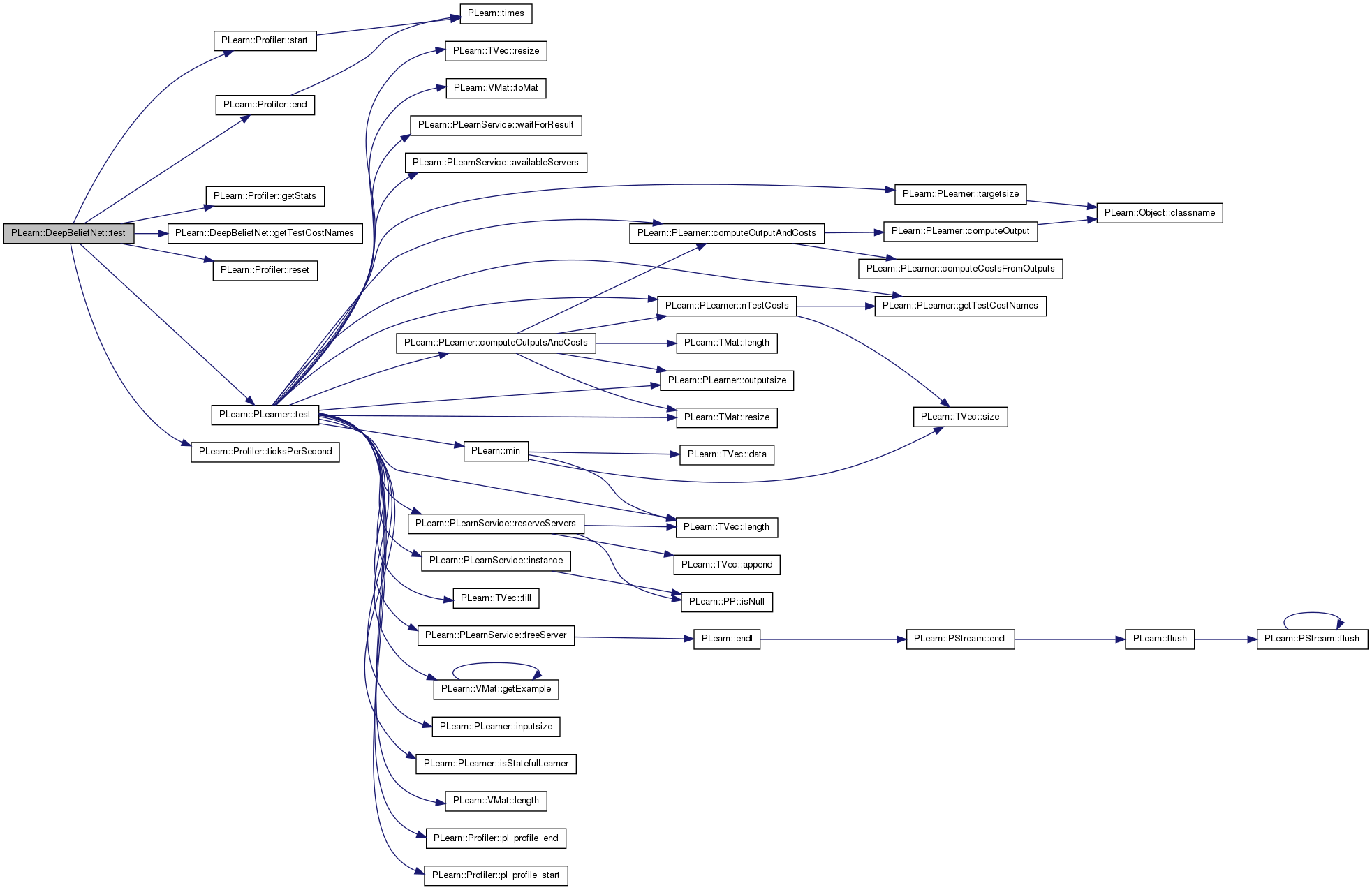

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Re-implementation of the PLearner test() for profiling purposes. | |

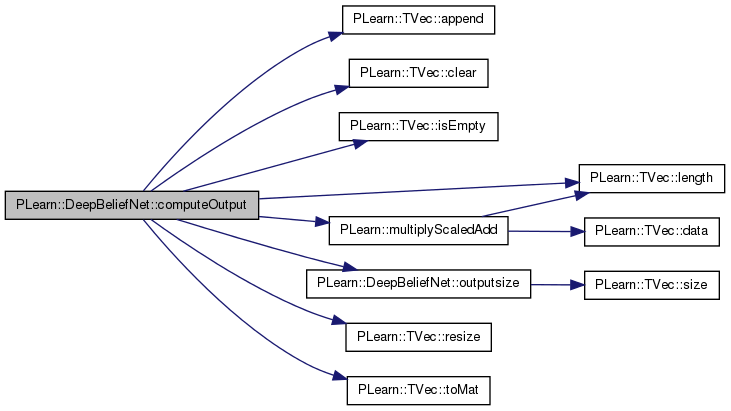

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

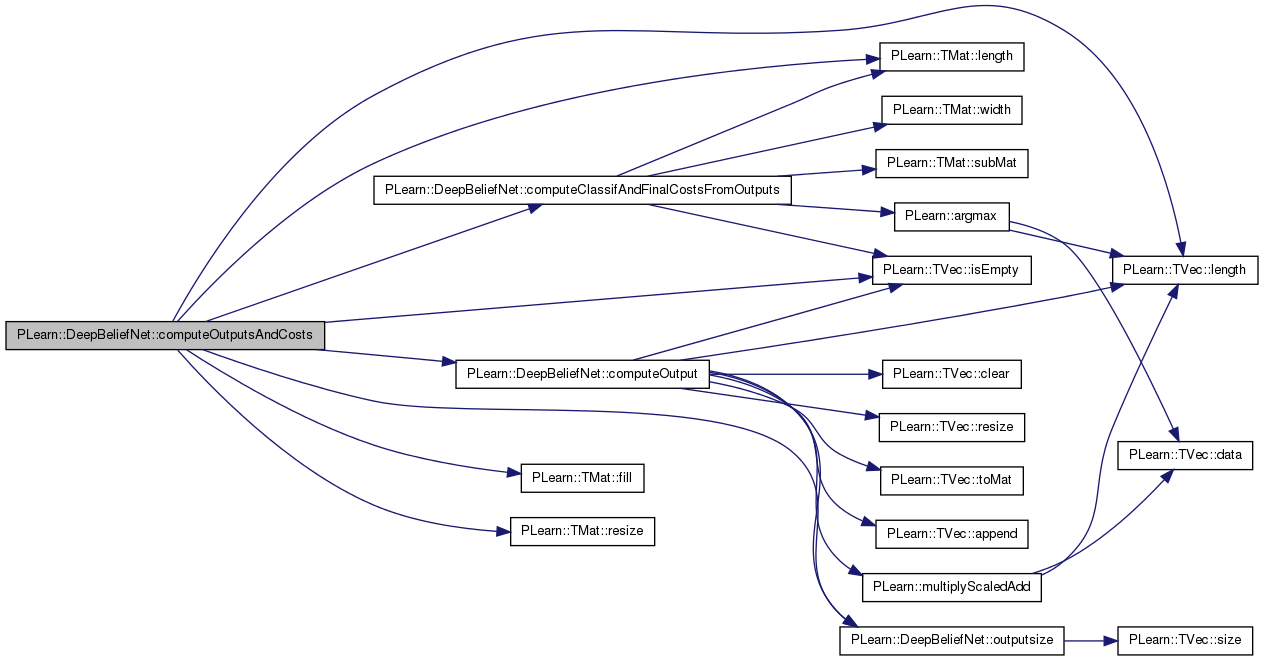

| virtual void | computeOutputsAndCosts (const Mat &inputs, const Mat &targets, Mat &outputs, Mat &costs) const |

| This function is useful when the NLL CostModule and/or the final_cost module are more efficient with batch computation (or need to be computed on a bunch of examples, as LayerCostModule does). | |

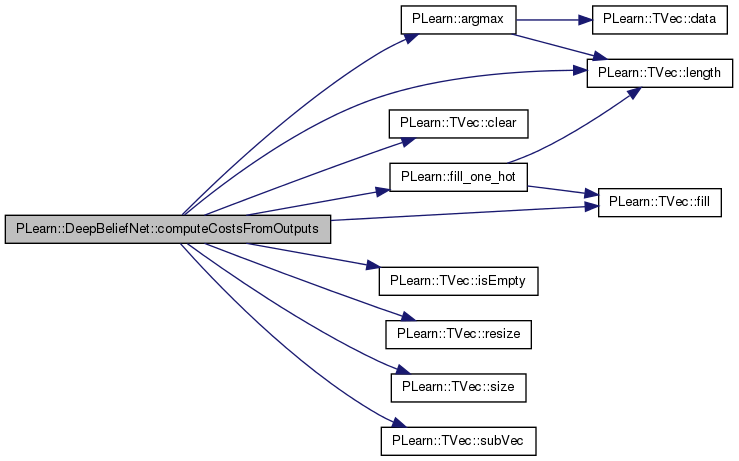

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual void | computeClassifAndFinalCostsFromOutputs (const Mat &inputs, const Mat &outputs, const Mat &targets, Mat &costs) const |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

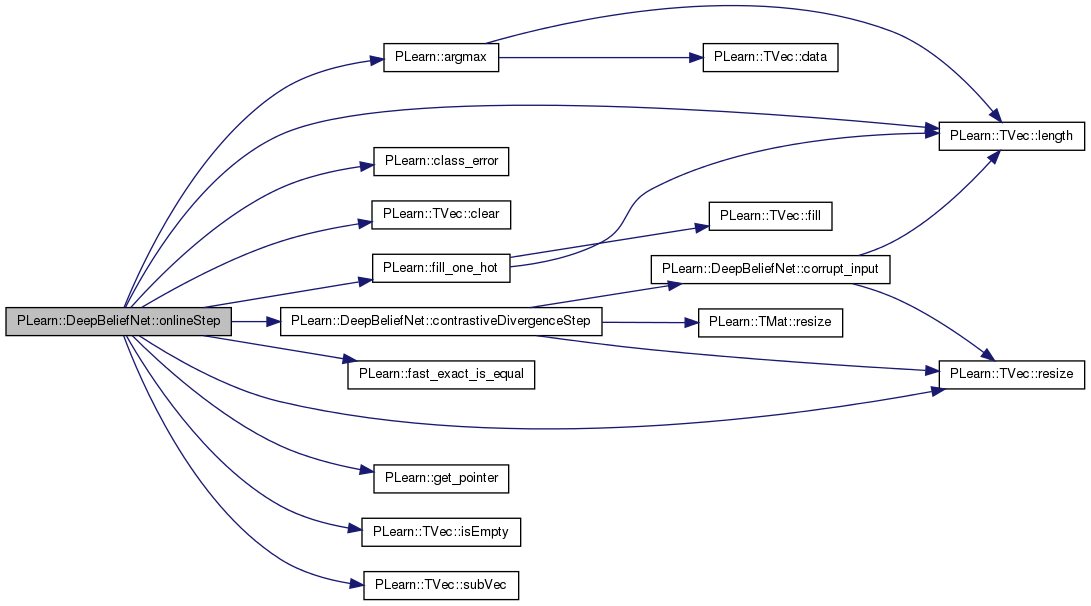

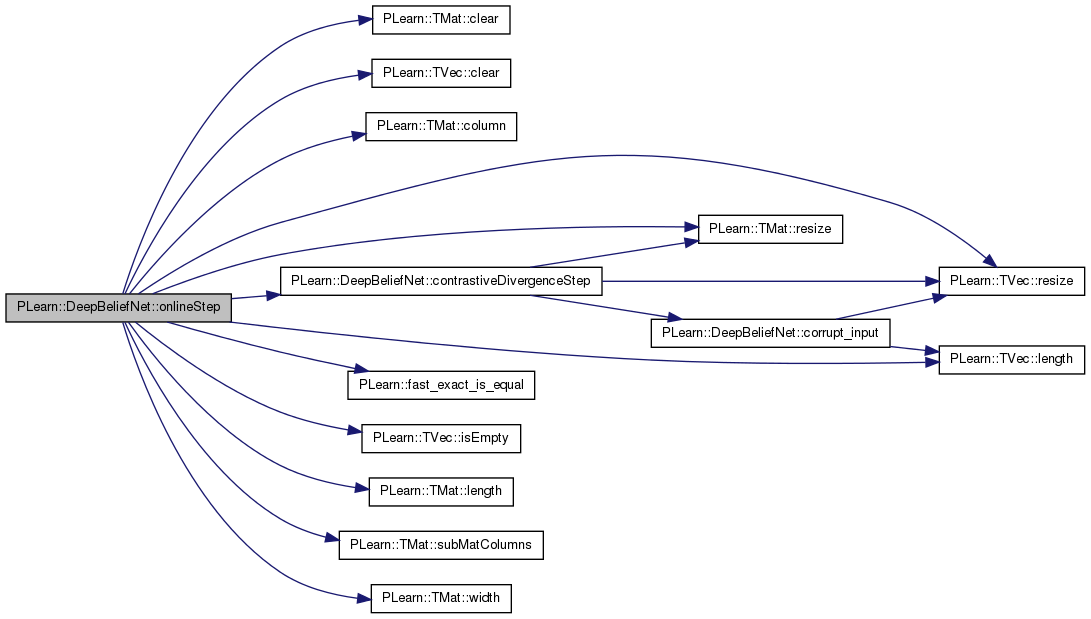

| void | onlineStep (const Vec &input, const Vec &target, Vec &train_costs) |

| void | onlineStep (const Mat &inputs, const Mat &targets, Mat &train_costs) |

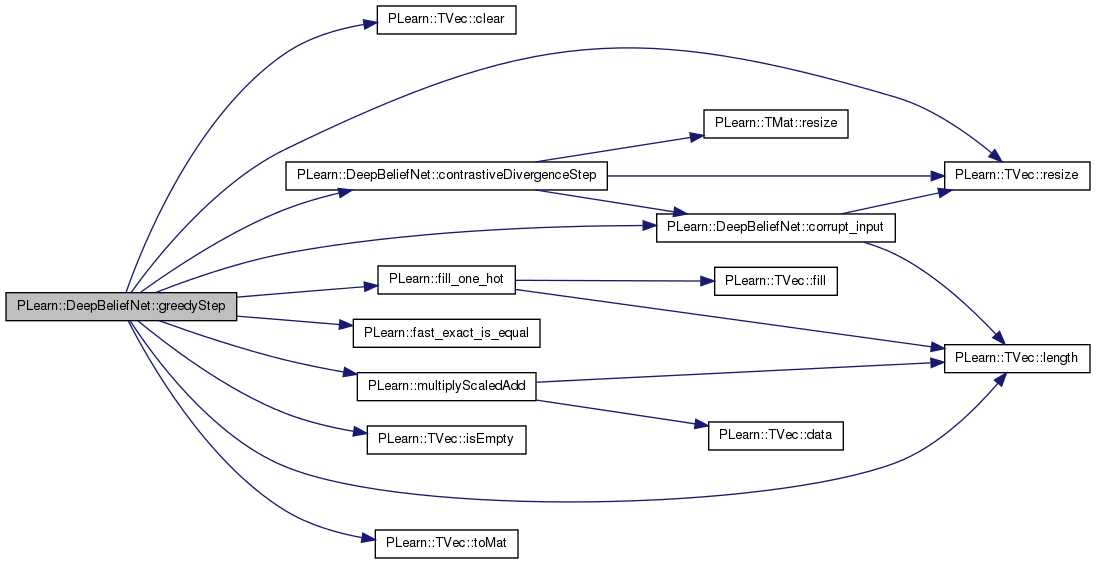

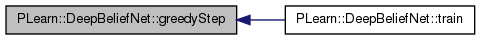

| void | greedyStep (const Vec &input, const Vec &target, int index) |

| void | greedyStep (const Mat &inputs, const Mat &targets, int index, Mat &train_costs_m) |

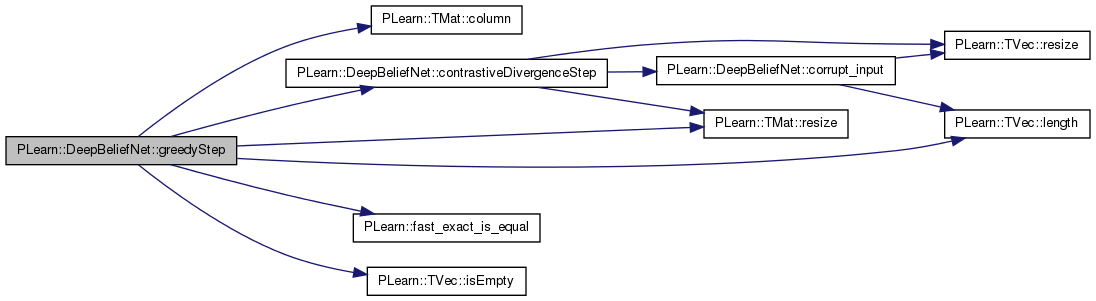

| void | jointGreedyStep (const Vec &input, const Vec &target) |

| void | jointGreedyStep (const Mat &inputs, const Mat &targets) |

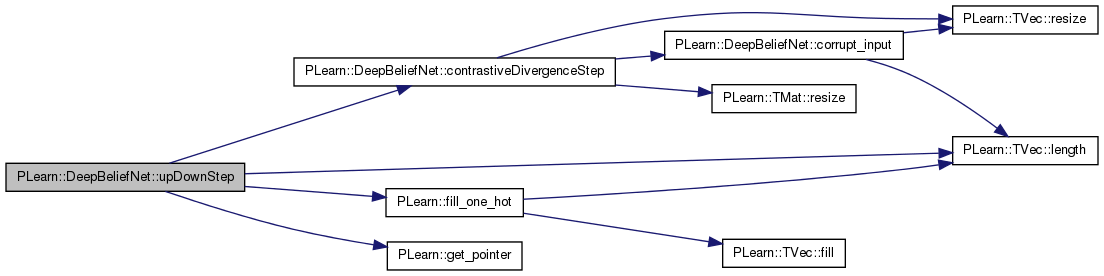

| void | upDownStep (const Vec &input, const Vec &target, Vec &train_costs) |

| void | upDownStep (const Mat &inputs, const Mat &targets, Mat &train_costs) |

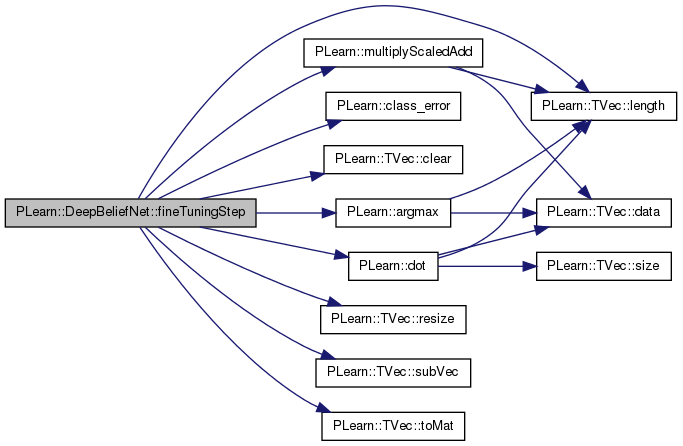

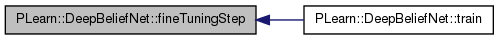

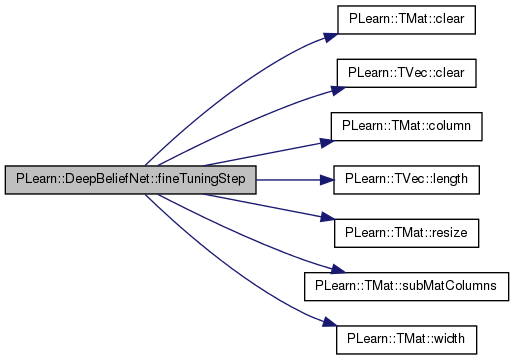

| void | fineTuningStep (const Vec &input, const Vec &target, Vec &train_costs) |

| void | fineTuningStep (const Mat &inputs, const Mat &targets, Mat &train_costs) |

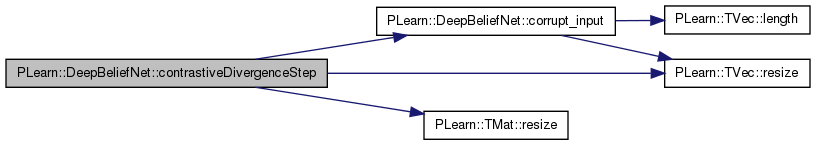

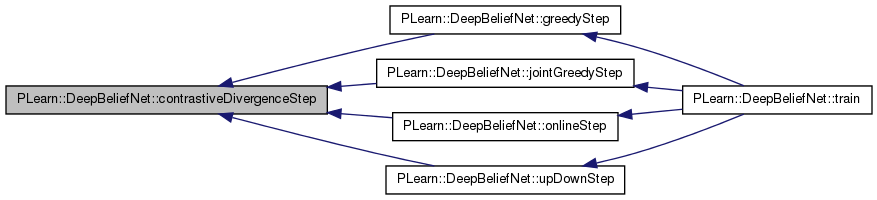

| void | contrastiveDivergenceStep (const PP< RBMLayer > &down_layer, const PP< RBMConnection > &connection, const PP< RBMLayer > &up_layer, int layer_index, bool nofprop=false) |

| Perform a step of contrastive divergence, assuming that down_layer->expectation(s) is set. | |
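For readers unfamiliar with contrastive divergence, the following is a textbook CD-1 update for a binary-binary RBM, written as a standalone sketch. Several details are assumptions of this illustration: the weight layout `W[j][i]`, the bias names `b` (down) and `c` (up), and the use of expectations rather than a binary sample for the reconstructed down layer. It does not describe how `contrastiveDivergenceStep`, `RBMLayer` or `RBMConnection` are actually implemented.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Textbook CD-1 sketch for a binary-binary RBM (assumed layout: W[j][i] links
// up unit j to down unit i; b = down biases, c = up biases). Not PLearn code.
using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// p(h_j = 1 | v) for every up unit j
Vec upExpectation(const Mat& W, const Vec& c, const Vec& v) {
    Vec h(c.size());
    for (std::size_t j = 0; j < c.size(); ++j) {
        double a = c[j];
        for (std::size_t i = 0; i < v.size(); ++i) a += W[j][i] * v[i];
        h[j] = sigmoid(a);
    }
    return h;
}

// p(v_i = 1 | h) for every down unit i
Vec downExpectation(const Mat& W, const Vec& b, const Vec& h) {
    Vec v(b.size());
    for (std::size_t i = 0; i < b.size(); ++i) {
        double a = b[i];
        for (std::size_t j = 0; j < h.size(); ++j) a += W[j][i] * h[j];
        v[i] = sigmoid(a);
    }
    return v;
}

// Draw a binary sample from independent Bernoulli probabilities.
Vec sampleBinary(const Vec& p, std::mt19937& rng) {
    std::uniform_real_distribution<double> u(0.0, 1.0);
    Vec s(p.size());
    for (std::size_t k = 0; k < p.size(); ++k) s[k] = u(rng) < p[k] ? 1.0 : 0.0;
    return s;
}

// One CD-1 update, given the positive down value v0 (the down-layer
// expectation that contrastiveDivergenceStep assumes is already set).
void cd1Step(Mat& W, Vec& b, Vec& c, const Vec& v0, double lr, std::mt19937& rng) {
    Vec h0 = upExpectation(W, c, v0);                      // positive phase
    Vec v1 = downExpectation(W, b, sampleBinary(h0, rng)); // reconstruction (expectation)
    Vec h1 = upExpectation(W, c, v1);                      // negative phase
    for (std::size_t j = 0; j < c.size(); ++j) {
        for (std::size_t i = 0; i < b.size(); ++i)
            W[j][i] += lr * (h0[j] * v0[i] - h1[j] * v1[i]);
        c[j] += lr * (h0[j] - h1[j]);
    }
    for (std::size_t i = 0; i < b.size(); ++i) b[i] += lr * (v0[i] - v1[i]);
}
```

Starting from all-zero parameters, one step moves each down bias toward its input value, whatever hidden sample is drawn.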

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual DeepBeliefNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

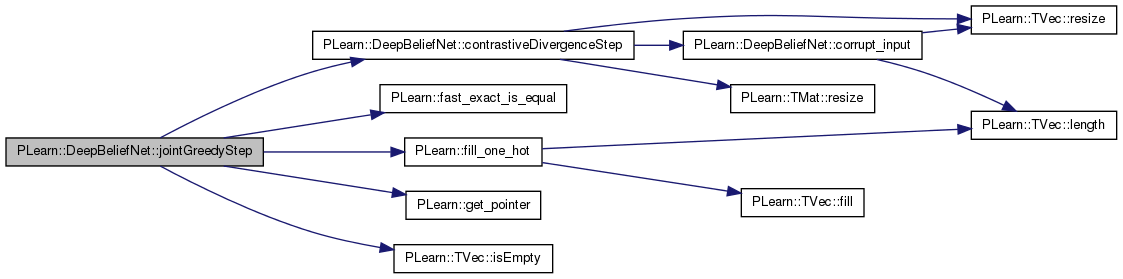

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |
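`deepCopy()` and `makeDeepCopyFromShallowCopy()` both take a `CopiesMap`. The toy code below sketches why such a map is useful. It uses hypothetical `Rng` and `Layer` types, with a `std::map` standing in for PLearn's `CopiesMap`. Each original object is copied only once, so an object that several members shared before copying (such as a common `random_gen`) is still shared afterwards.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Toy illustration of copying with a "copies map" (hypothetical types; the
// std::map merely stands in for PLearn's CopiesMap). Every original object is
// copied at most once, so sharing between members survives the deep copy.
struct Rng { unsigned seed = 0; };

using CopiesMap = std::map<const void*, std::shared_ptr<void>>;

std::shared_ptr<Rng> deepCopy(const std::shared_ptr<Rng>& src, CopiesMap& copies) {
    if (!src) return nullptr;
    auto it = copies.find(src.get());
    if (it != copies.end())                       // already copied: reuse that copy
        return std::static_pointer_cast<Rng>(it->second);
    auto dup = std::make_shared<Rng>(*src);       // first visit: copy and record it
    copies[src.get()] = dup;
    return dup;
}

struct Layer { std::shared_ptr<Rng> random_gen; };

Layer deepCopy(const Layer& src, CopiesMap& copies) {
    Layer dst = src;                                    // shallow copy first...
    dst.random_gen = deepCopy(src.random_gen, copies);  // ...then deepen the members
    return dst;
}
```

Copying two layers that share one generator, using the same map, yields two new layers that share one new generator.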

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | cd_learning_rate |

| The learning rate used during contrastive divergence learning. | |

| real | cd_decrease_ct |

| The decrease constant of the learning rate used during contrastive divergence learning. | |

| real | up_down_learning_rate |

| The learning rate used in the up-down algorithm during the unsupervised fine tuning gradient descent. | |

| real | up_down_decrease_ct |

| The decrease constant of the learning rate used in the up-down algorithm during the unsupervised fine tuning gradient descent. | |

| real | grad_learning_rate |

| The learning rate used during the gradient descent. | |

| real | grad_decrease_ct |

| The decrease constant of the learning rate used during gradient descent. | |
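The `*_decrease_ct` options suggest a learning rate that shrinks as training proceeds. Assume the common schedule lr_t = lr_0 / (1 + decrease_ct * t), where t is the number of examples seen. The exact formula DeepBeliefNet uses is not stated here, so treat this as an assumption. Under it, the schedule can be sketched as follows; a decrease constant of 0 keeps the rate fixed.

```cpp
#include <cassert>
#include <cmath>

// Assumed schedule: lr_t = lr0 / (1 + decrease_ct * t), t = examples seen.
// This is a common choice, not a statement of DeepBeliefNet's exact formula.
double decayedLearningRate(double lr0, double decrease_ct, long t) {
    return lr0 / (1.0 + decrease_ct * static_cast<double>(t));
}
```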

| int | batch_size |

| Training batch size (1=stochastic learning, 0=full batch learning) | |

| int | n_classes |

| Number of classes in the training set. | |

| TVec< int > | training_schedule |

| Number of examples to use during each phase of learning: first the greedy phases, and then the fine-tuning phase. | |

| int | up_down_nstages |

| Number of samples to use for unsupervised fine-tuning with the up-down algorithm. | |

| bool | use_classification_cost |

| Whether the first cost function is the NLL in classification, pre-trained with CD and using the last *two* layers to get a better approximation (undirected softmax) than layer-wise mean-field. | |

| bool | reconstruct_layerwise |

| Minimize reconstruction error of each layer as an auto-encoder. | |

| TVec< PP< RBMLayer > > | layers |

| The layers of units in the network. | |

| int | i_output_layer |

| The index of the output layer (when the final module is external) | |

| string | learnerExpdir |

| Experiment directory where the learner will be saved if save_learner_before_fine_tuning is true. | |

| bool | save_learner_before_fine_tuning |

| Saves the learner before the supervised fine-tuning (in learnerExpdir). | |

| TVec< PP< RBMConnection > > | connections |

| The weights of the connections between the layers. | |
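`build_layers_and_connections()` requires exactly n_layers-1 connections. Each `connections[i]` must link `layers[i]` (its down side) to `layers[i+1]` (its up side). The sketch below restates those size checks on plain integers; `ConnSize` and `checkStack()` are illustrative names only.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Restates the size checks of build_layers_and_connections() on plain sizes.
// ConnSize stands in for an RBMConnection; only down_size/up_size matter here.
struct ConnSize { int down_size; int up_size; };

// Returns an empty string when the stack is consistent, otherwise a
// description of the first mismatch found.
std::string checkStack(const std::vector<int>& layer_sizes,
                       const std::vector<ConnSize>& conns) {
    if (layer_sizes.empty()) return "need at least one layer";
    if (conns.size() != layer_sizes.size() - 1)
        return "connections.length() != n_layers-1";
    for (std::size_t i = 0; i < conns.size(); ++i) {
        if (conns[i].down_size != layer_sizes[i])
            return "layers[" + std::to_string(i) + "]->size != connections[" +
                   std::to_string(i) + "]->down_size";
        if (conns[i].up_size != layer_sizes[i + 1])
            return "connections[" + std::to_string(i) + "]->up_size != layers[" +
                   std::to_string(i + 1) + "]->size";
    }
    return "";
}
```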

| TVec< PP< RBMMultinomialLayer > > | greedy_target_layers |

| Optional target layers for greedy layer-wise pretraining. | |

| TVec< PP< RBMMatrixConnection > > | greedy_target_connections |

| Optional target matrix connections for greedy layer-wise pretraining. | |

| PP< OnlineLearningModule > | final_module |

| Optional module that takes as input the output of the last layer (layers[n_layers-1]); its output is fed to final_cost and concatenated with that of classification_cost (if present) as the output of the learner. | |

| PP< CostModule > | final_cost |

| The cost function to be applied on top of the DBN (or of final_module if provided). | |

| TVec< PP< CostModule > > | partial_costs |

| The cost functions to be applied on top of each layer of the RBM (except the first one, which has to be null). | |

| bool | use_sample_for_up_layer |

| Indication that the update of the top layer during CD uses a sample, not the expectation. | |

| string | use_corrupted_posDownVal |

| Indicates whether (and where) a corrupted version of the positive down value is used during the CD step: one of "for_cd_fprop", "for_cd_update" or "none" (the default). | |

| string | noise_type |

| Type of noise that corrupts the pos_down_val. | |

| real | fraction_of_masked_inputs |

| Fraction of components that will be corrupted in function corrupt_input. | |

| bool | mask_with_pepper_salt |

| Indication that inputs should be masked with 0 or 1 according to prob_salt_noise. | |

| real | prob_salt_noise |

| Probability that we mask by 1 instead of 0 when using mask_with_pepper_salt. | |
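A sketch of masking noise in the spirit of `corrupt_input()`. It assumes that exactly round(fraction_of_masked_inputs * n) components, chosen at random, are corrupted; the real function may instead mask each component independently. Masked components are set to 0. When `mask_with_pepper_salt` is true, each masked component is instead set to 1 with probability `prob_salt_noise` and to 0 otherwise.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Masking-noise sketch (assumption: a fixed count of randomly chosen
// components is masked). maskInput() is a hypothetical name.
std::vector<double> maskInput(const std::vector<double>& input, double fraction,
                              bool pepper_salt, double prob_salt, std::mt19937& rng) {
    std::vector<double> out = input;
    std::vector<std::size_t> idx(input.size());
    std::iota(idx.begin(), idx.end(), 0);        // 0, 1, ..., n-1
    std::shuffle(idx.begin(), idx.end(), rng);   // random order of components
    std::size_t n_masked =
        static_cast<std::size_t>(std::lround(fraction * input.size()));
    std::bernoulli_distribution salt(prob_salt); // true -> mask with 1
    for (std::size_t k = 0; k < n_masked && k < idx.size(); ++k)
        out[idx[k]] = (pepper_salt && salt(rng)) ? 1.0 : 0.0;
    return out;
}
```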

| PP< RBMClassificationModule > | classification_module |

| The module computing the probabilities of the different classes. | |
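One exact way to obtain class probabilities from the last two layers is the free-energy softmax of an RBM whose visible side includes a one-hot target: p(y|x) proportional to exp(d_y + sum_j softplus(a_j + U[j][y])). The sketch below assumes three inputs are given: the hidden activations `a` (computed from the previous layer), the hidden-to-target weights `U`, and the target biases `d`. Whether `RBMClassificationModule` computes exactly this is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Class posterior of an RBM with a one-hot target on its visible side:
//   p(y|x) proportional to exp(d_y + sum_j softplus(a_j + U[j][y])),
// with a_j = c_j + sum_i W[j][i] x_i assumed precomputed. Names illustrative.
static double softplus(double z) {
    // numerically stable log(1 + exp(z))
    return z > 0 ? z + std::log1p(std::exp(-z)) : std::log1p(std::exp(z));
}

std::vector<double> classPosterior(const std::vector<double>& a,
                                   const std::vector<std::vector<double>>& U,
                                   const std::vector<double>& d) {
    std::vector<double> logit(d.size());
    for (std::size_t y = 0; y < d.size(); ++y) {
        logit[y] = d[y];
        for (std::size_t j = 0; j < a.size(); ++j) logit[y] += softplus(a[j] + U[j][y]);
    }
    // softmax, subtracting the max logit for numerical stability
    double m = *std::max_element(logit.begin(), logit.end());
    double z = 0.0;
    for (double& l : logit) { l = std::exp(l - m); z += l; }
    for (double& l : logit) l /= z;
    return logit;
}
```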

| int | n_layers |

| Number of layers. | |

| TVec< string > | cost_names |

| The computed cost names. | |

| bool | online |

| Whether training is done on-line rather than by stages (including fine-tuning). | |

| real | background_gibbs_update_ratio |

| bool | top_layer_joint_cd |

| Whether we do a step of joint contrastive divergence on the top layer. Only used if online, for the moment. | |

| int | gibbs_chain_reinit_freq |

| After how many examples the Gibbs chains should be re-initialized (if == INT_MAX, the default, they are NEVER re-initialized except when stage==0). | |
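The re-initialization rule can be sketched as a predicate. The sketch assumes that `stage` counts examples seen and that chains are restarted whenever `stage` is a multiple of `gibbs_chain_reinit_freq`. The guard against non-positive frequencies is an addition of this sketch.

```cpp
#include <cassert>
#include <climits>

// Assumed rule: always (re)start at stage 0; otherwise restart every `freq`
// examples, never when freq == INT_MAX (the default). Hypothetical helper.
bool shouldReinitGibbsChains(long stage, int freq) {
    if (stage == 0) return true;
    if (freq == INT_MAX || freq <= 0) return false;  // freq <= 0: sketch-only guard
    return stage % freq == 0;
}
```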

| real | mean_field_contrastive_divergence_ratio |

| Coefficient between 0 and 1. | |

| int | train_stats_window |

| The number of samples to use to compute training stats. | |

| PP< PTimer > | timer |

| Timer for monitoring the speed. | |

| PP< NLLCostModule > | classification_cost |

| The module computing the classification cost function (NLL) on top of classification_module. | |
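When `use_classification_cost` is true, `build_costs()` registers "NLL" and "class_error" as the first two costs. Given class probabilities such as those produced by `classification_module`, these are commonly computed as -log p(target) and a 0/1 misclassification indicator. The standalone sketch below shows this; `ClassifCosts` and `classificationCosts()` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

// NLL = -log p(target); class_error = 1 if the most probable class differs
// from the target, else 0. Hypothetical helper, not PLearn's NLLCostModule.
struct ClassifCosts { double nll; double class_error; };

ClassifCosts classificationCosts(const std::vector<double>& probs, int target) {
    auto best = std::max_element(probs.begin(), probs.end());
    int predicted = static_cast<int>(std::distance(probs.begin(), best));
    return { -std::log(probs[static_cast<std::size_t>(target)]),
             predicted == target ? 0.0 : 1.0 };
}
```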

| PP< RBMMixedLayer > | joint_layer |

| Concatenation of layers[n_layers-2] and the target layer (that is inside classification_module), if use_classification_cost. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

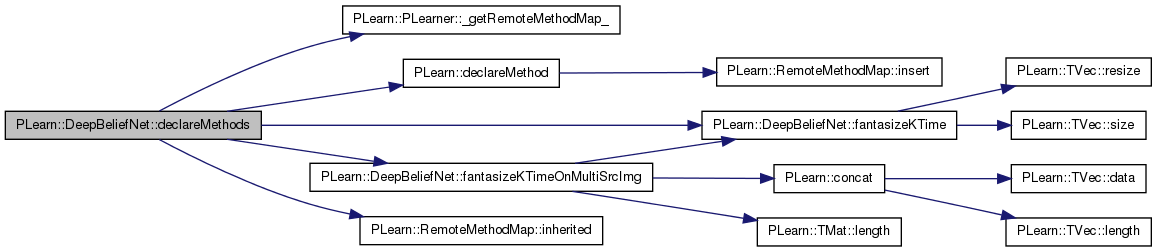

| static void | declareMethods (RemoteMethodMap &rmm) |

| Declare the methods that are remote-callable. | |

Protected Attributes | |

| int | minibatch_size |

| Actual size of a mini-batch (size of the training set if batch_size==0). | |
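The `batch_size` option uses 1 for stochastic learning and 0 for full batch learning. Following that convention, the effective mini-batch size can be sketched as below; the helper name is hypothetical.

```cpp
#include <cassert>

// Assumed mapping from the batch_size option to the effective mini-batch
// size: 0 means "whole training set", positive values are used as is.
int effectiveMinibatchSize(int batch_size, int train_set_length) {
    return batch_size > 0 ? batch_size : train_set_length;
}
```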

| bool | initialize_gibbs_chain |

| TVec< Vec > | activation_gradients |

| Stores the gradient of the cost wrt the activations (at the input of the layers) | |

| TVec< Mat > | activations_gradients |

| For mini-batch. | |

| TVec< Vec > | expectation_gradients |

| Stores the gradient of the cost wrt the expectations (at the output of the layers) | |

| TVec< Mat > | expectations_gradients |

| For mini-batch. | |

| TVec< TVec< Vec > > | greedy_target_expectations |

| For the fprop with greedy_target_layers. | |

| TVec< TVec< Vec > > | greedy_target_activations |

| TVec< TVec< Vec > > | greedy_target_expectation_gradients |

| TVec< TVec< Vec > > | greedy_target_activation_gradients |

| TVec< Vec > | greedy_target_probability_gradients |

| TVec< PP< RBMLayer > > | greedy_joint_layers |

| TVec< PP< RBMConnection > > | greedy_joint_connections |

| Vec | final_cost_input |

| Mat | final_cost_inputs |

| For mini-batch. | |

| Vec | final_cost_value |

| Mat | final_cost_values |

| For mini-batch. | |

| Vec | final_cost_output |

| Vec | class_output |

| Vec | class_gradient |

| Vec | final_cost_gradient |

| Stores the gradient of the cost at the input of final_cost. | |

| Mat | final_cost_gradients |

| For mini-batch. | |

| Vec | save_layer_activation |

| Buffers the bottom layer activation during onlineStep. | |

| Mat | save_layer_activations |

| For mini-batches. | |

| Vec | save_layer_expectation |

| Buffers the bottom layer expectation during onlineStep. | |

| Mat | save_layer_expectations |

| For mini-batches. | |

| Vec | pos_down_val |

| Store a copy of the positive phase values. | |

| Vec | corrupted_pos_down_val |

| Vec | pos_up_val |

| Mat | pos_down_vals |

| Mat | pos_up_vals |

| Mat | cd_neg_down_vals |

| Mat | cd_neg_up_vals |

| Mat | mf_cd_neg_down_vals |

| Mat | mf_cd_neg_up_vals |

| Vec | mf_cd_neg_down_val |

| Vec | mf_cd_neg_up_val |

| TVec< Mat > | gibbs_down_state |

| Store the state of the Gibbs chain for each RBM. | |

| Vec | optimized_costs |

| Used to store the costs optimized by the final cost module. | |

| Vec | target_one_hot |

| One-hot representation of the target. | |

| Vec | reconstruction_costs |

| Stores reconstruction costs. | |

| int | nll_cost_index |

| Keeps the index of the NLL cost in train_costs. | |

| int | class_cost_index |

| Keeps the index of the class_error cost in train_costs. | |

| int | final_cost_index |

| Keeps the beginning index of the final costs in train_costs. | |

| TVec< int > | partial_costs_indices |

| Keeps the beginning indices of the partial costs in train_costs. | |

| int | reconstruction_cost_index |

| Keeps the beginning index of the reconstruction costs in train_costs. | |

| int | greedy_target_layer_nlls_index |

| Keeps the beginning index of the greedy target layer NLLs. | |

| int | training_cpu_time_cost_index |

| Index of the CPU time cost (per call of train()). | |

| int | cumulative_training_time_cost_index |

| The index of the cumulative training time cost. | |

| int | cumulative_testing_time_cost_index |

| The index of the cumulative testing time cost. | |

| real | cumulative_training_time |

| Holds the total training (CPU) time. | |

| real | cumulative_testing_time |

| Holds the total testing (CPU) time. | |

| TVec< int > | cumulative_schedule |

| Cumulative training schedule. | |

| int | up_down_stage |

| Number of samples visited so far during unsupervised fine-tuning. | |

| Vec | layer_input |

| Mat | layer_inputs |

| TVec< PP< RBMConnection > > | generative_connections |

| The untied generative weights of the connections between the layers for the up-down algorithm. | |

| TVec< Vec > | up_sample |

| TVec< Vec > | down_sample |

| TVec< TVec< int > > | expectation_indices |

| Indices of the expectation components. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | build_layers_and_connections () |

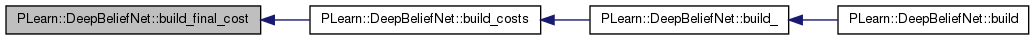

| void | build_costs () |

| void | build_classification_cost () |

| void | build_final_cost () |

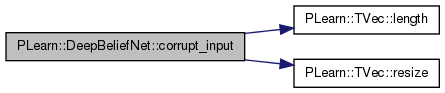

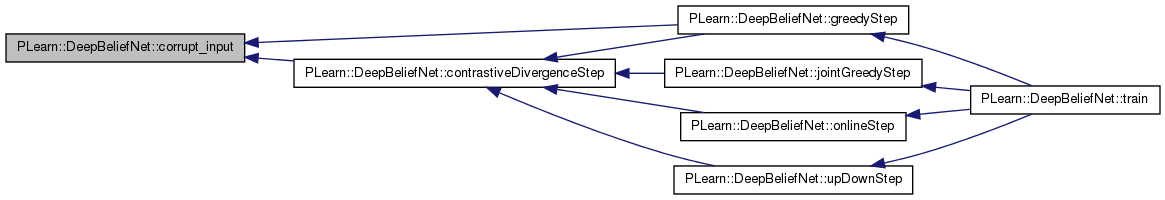

| void | corrupt_input (const Vec &input, Vec &corrupted_input, int layer) |

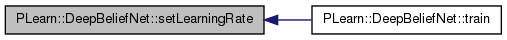

| void | setLearningRate (real the_learning_rate) |

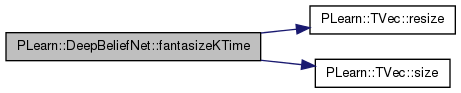

| TVec< Vec > | fantasizeKTime (const int KTime, const Vec &srcImg, const Vec &sample, bool alwaysFromSrcImg) |

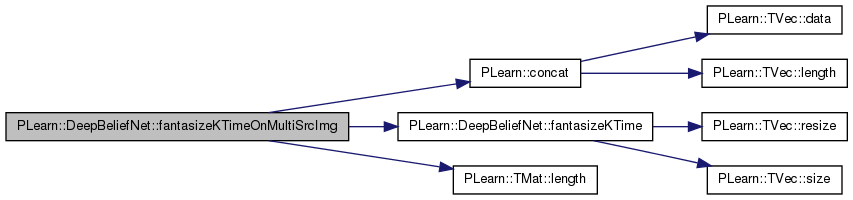

| TVec< Vec > | fantasizeKTimeOnMultiSrcImg (const int KTime, const Mat &srcImg, const Vec &sample, bool alwaysFromSrcImg) |

Neural net, learned layer-wise in a greedy fashion.

This version supports different unit types, different connection types, and different cost functions, including the NLL in classification.

Definition at line 61 of file DeepBeliefNet.h.

| typedef PLearner PLearn::DeepBeliefNet::inherited | [private] |

Reimplemented from PLearn::PLearner.

Definition at line 63 of file DeepBeliefNet.h.

| PLearn::DeepBeliefNet::DeepBeliefNet | ( | ) |

Default constructor.

Definition at line 60 of file DeepBeliefNet.cc.

References n_layers, and PLearn::PLearner::random_gen.

    :
        cd_learning_rate( 0. ),
        cd_decrease_ct( 0. ),
        up_down_learning_rate( 0. ),
        up_down_decrease_ct( 0. ),
        grad_learning_rate( 0. ),
        grad_decrease_ct( 0. ),
        // grad_weight_decay( 0. ),
        batch_size( 1 ),
        n_classes( -1 ),
        up_down_nstages( 0 ),
        use_classification_cost( true ),
        reconstruct_layerwise( false ),
        i_output_layer( -1 ),
        learnerExpdir(""),
        save_learner_before_fine_tuning( false ),
        use_sample_for_up_layer( false ),
        use_corrupted_posDownVal( "none" ),
        noise_type( "masking_noise" ),
        fraction_of_masked_inputs( 0 ),
        mask_with_pepper_salt( false ),
        prob_salt_noise( 0.5 ),
        online ( false ),
        background_gibbs_update_ratio(0),
        gibbs_chain_reinit_freq( INT_MAX ),
        mean_field_contrastive_divergence_ratio( 0 ),
        train_stats_window( -1 ),
        minibatch_size( 0 ),
        initialize_gibbs_chain( false ),
        nll_cost_index( -1 ),
        class_cost_index( -1 ),
        final_cost_index( -1 ),
        reconstruction_cost_index( -1 ),
        training_cpu_time_cost_index ( -1 ),
        cumulative_training_time_cost_index ( -1 ),
        cumulative_testing_time_cost_index ( -1 ),
        cumulative_training_time( 0 ),
        cumulative_testing_time( 0 ),
        up_down_stage( 0 )
    {
        random_gen = new PRandom();
        n_layers = 0;
    }

| string PLearn::DeepBeliefNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DeepBeliefNet.cc.

| OptionList & PLearn::DeepBeliefNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DeepBeliefNet.cc.

| RemoteMethodMap & PLearn::DeepBeliefNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DeepBeliefNet.cc.

| bool PLearn::DeepBeliefNet::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DeepBeliefNet.cc.

| Object * PLearn::DeepBeliefNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 55 of file DeepBeliefNet.cc.

| void PLearn::DeepBeliefNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file DeepBeliefNet.cc.

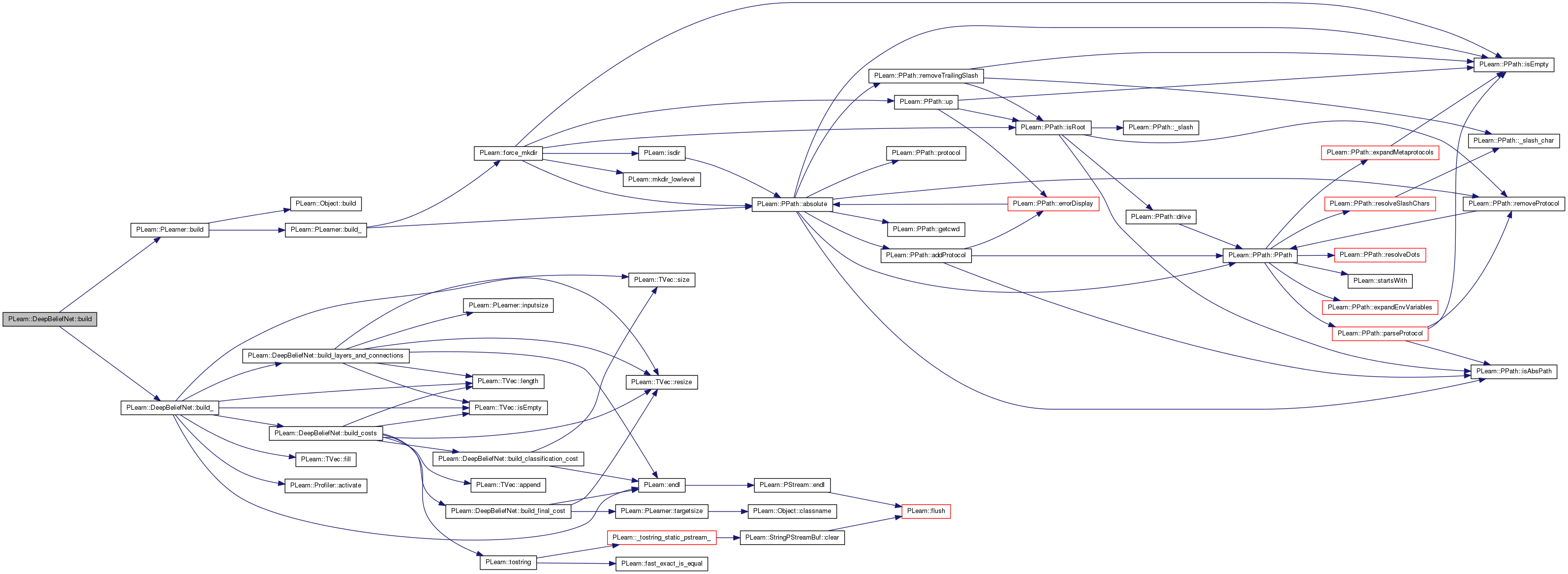

| void PLearn::DeepBeliefNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 943 of file DeepBeliefNet.cc.

References PLearn::PLearner::build(), and build_().

    {
        inherited::build();
        build_();
    }
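The `build()`/`build_()` split shown here recurs throughout PLearn. The toy classes below (hypothetical `Base` and `Derived`) sketch the idiom in plain C++. The public virtual `build()` first runs the parent's building, then the class's own private, non-virtual `build_()`. As a result, each level of the hierarchy builds its own part exactly once, in base-to-derived order.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy version of the build()/build_() idiom; Base/Derived are hypothetical.
struct Base {
    std::vector<std::string> log;  // records which build_() ran, in order
    virtual ~Base() = default;
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("Base::build_"); }   // non-virtual on purpose
};

struct Derived : Base {
    using inherited = Base;        // same alias convention as DeepBeliefNet
    void build() override {
        inherited::build();        // parent's part first
        build_();                  // then this class's own part
    }
private:
    void build_() { log.push_back("Derived::build_"); }
};
```

Making `build_()` non-virtual is the key design choice. If it were virtual, the parent's call inside `Base::build()` would dispatch to the derived override, and the derived part would run twice while the base part never ran.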

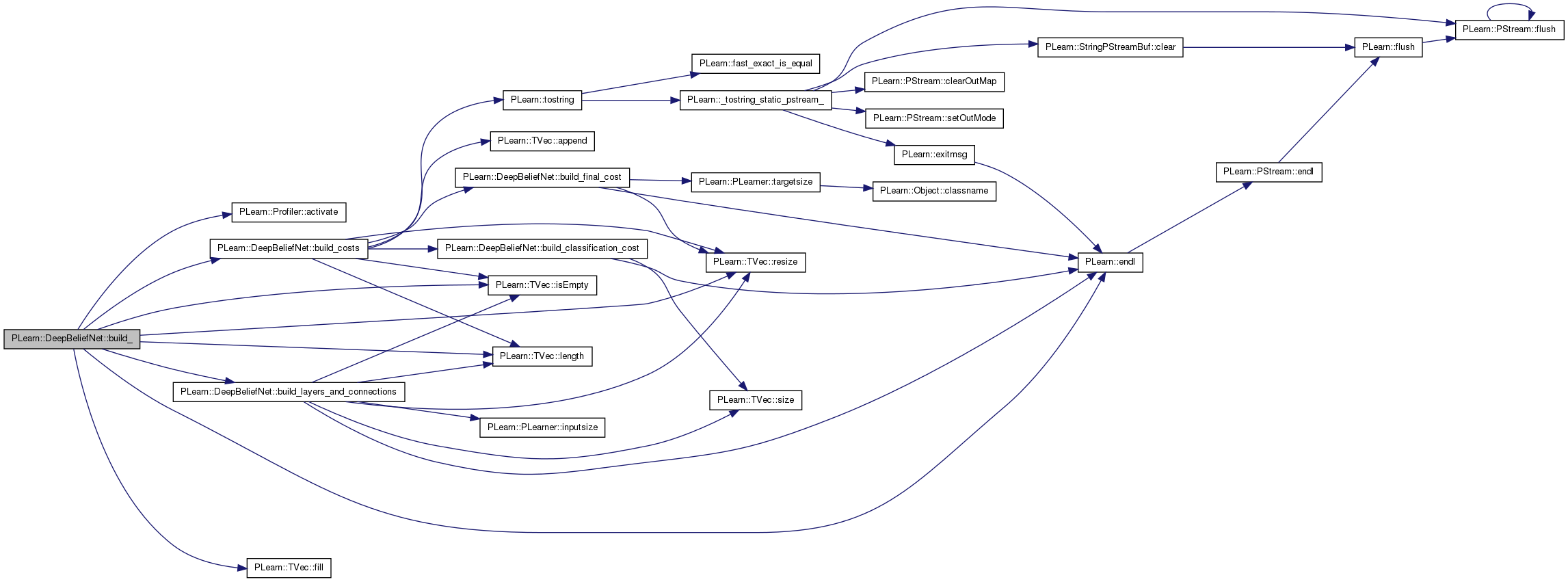

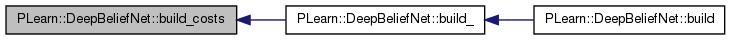

| void PLearn::DeepBeliefNet::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 451 of file DeepBeliefNet.cc.

References PLearn::Profiler::activate(), background_gibbs_update_ratio, batch_size, build_costs(), build_layers_and_connections(), cumulative_schedule, PLearn::endl(), PLearn::TVec< T >::fill(), i, i_output_layer, PLearn::TVec< T >::isEmpty(), layers, PLearn::TVec< T >::length(), mean_field_contrastive_divergence_ratio, n_layers, online, PLASSERT, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), training_schedule, up_down_nstages, use_corrupted_posDownVal, and use_sample_for_up_layer.

Referenced by build().

    {
        PLASSERT( batch_size >= 0 );

        MODULE_LOG << "build_() called" << endl;

        // Initialize some learnt variables
        if (layers.isEmpty())
            PLERROR("In DeepBeliefNet::build_ - You must provide at least one RBM "
                    "layer through the 'layers' option");
        else
            n_layers = layers.length();

        if( i_output_layer < 0)
            i_output_layer = n_layers - 1;

        if( online && up_down_nstages > 0)
            PLERROR("In DeepBeliefNet::build_ - up-down algorithm not implemented "
                    "for online setting.");

        if( batch_size != 1 && up_down_nstages > 0 )
            PLERROR("In DeepBeliefNet::build_ - up-down algorithm not implemented "
                    "for minibatch setting.");

        if( mean_field_contrastive_divergence_ratio > 0 &&
            background_gibbs_update_ratio != 0 )
            PLERROR("In DeepBeliefNet::build_ - mean-field CD cannot be used "
                    "with background_gibbs_update_ratio != 0.");

        if( mean_field_contrastive_divergence_ratio > 0 &&
            use_sample_for_up_layer )
            PLERROR("In DeepBeliefNet::build_ - mean-field CD cannot be used "
                    "with use_sample_for_up_layer.");

        if( mean_field_contrastive_divergence_ratio < 0 ||
            mean_field_contrastive_divergence_ratio > 1 )
            PLERROR("In DeepBeliefNet::build_ - mean_field_contrastive_divergence_ratio should "
                    "be in [0,1].");

        if( use_corrupted_posDownVal != "for_cd_fprop" &&
            use_corrupted_posDownVal != "for_cd_update" &&
            use_corrupted_posDownVal != "none" )
            PLERROR("In DeepBeliefNet::build_ - use_corrupted_posDownVal should "
                    "be chosen among {\"for_cd_fprop\",\"for_cd_update\",\"none\"}.");

        if( !online )
        {
            if( training_schedule.length() != n_layers )
            {
                PLWARNING("In DeepBeliefNet::build_ - training_schedule.length() "
                          "!= n_layers, resizing and zeroing");
                training_schedule.resize( n_layers );
                training_schedule.fill( 0 );
            }

            cumulative_schedule.resize( n_layers+1 );
            cumulative_schedule[0] = 0;
            for( int i=0 ; i<n_layers ; i++ )
            {
                cumulative_schedule[i+1] = cumulative_schedule[i] +
                    training_schedule[i];
            }
        }

        build_layers_and_connections();

        // Activate the profiler
        Profiler::activate();

        build_costs();
    }
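`build_()` turns `training_schedule` (one entry per phase: the greedy phases first, then fine-tuning) into a prefix sum, `cumulative_schedule`, with n_layers+1 entries. The sketch below reproduces that computation. It also adds a hypothetical `phaseOf()` helper showing how such a prefix sum can map a stage to the phase it falls in. How `train()` actually consumes `cumulative_schedule` is not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// cumulative[0] = 0, cumulative[i+1] = cumulative[i] + training_schedule[i],
// mirroring the loop in build_() above.
std::vector<int> cumulativeSchedule(const std::vector<int>& training_schedule) {
    std::vector<int> cumulative(training_schedule.size() + 1, 0);
    for (std::size_t i = 0; i < training_schedule.size(); ++i)
        cumulative[i + 1] = cumulative[i] + training_schedule[i];
    return cumulative;
}

// Hypothetical helper: returns i such that cumulative[i] <= stage < cumulative[i+1],
// skipping empty phases, or the number of phases once the schedule is exhausted.
std::size_t phaseOf(const std::vector<int>& cumulative, int stage) {
    std::size_t i = 0;
    while (i + 1 < cumulative.size() && stage >= cumulative[i + 1]) ++i;
    return i;
}
```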

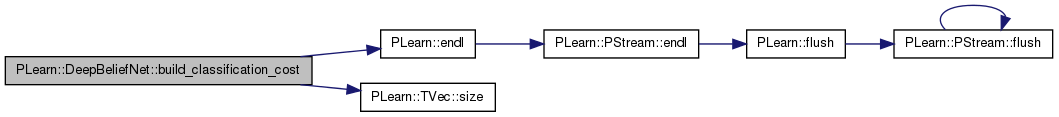

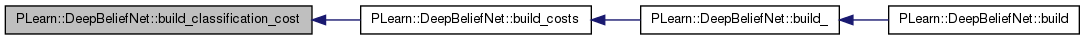

| void PLearn::DeepBeliefNet::build_classification_cost | ( | ) | [private] |

Definition at line 805 of file DeepBeliefNet.cc.

References batch_size, classification_cost, classification_module, connections, PLearn::endl(), joint_layer, layers, n_classes, n_layers, PLASSERT_MSG, PLearn::PLearner::random_gen, and PLearn::TVec< T >::size().

Referenced by build_costs().

    {
        MODULE_LOG << "build_classification_cost() called" << endl;

        PLASSERT_MSG(batch_size == 1, "DeepBeliefNet::build_classification_cost - "
                     "This method has not been verified yet for minibatch "
                     "compatibility");

        PP<RBMMatrixConnection> last_to_target;
        if (classification_module)
            last_to_target = classification_module->last_to_target;

        if (!last_to_target ||
            last_to_target->up_size != layers[n_layers-1]->size ||
            last_to_target->down_size != n_classes ||
            last_to_target->random_gen != random_gen)
        {
            // We need to (re-)create 'last_to_target', and thus the classification
            // module too.
            // This is not systematically done so that the learner can be
            // saved and loaded without losing learned parameters.
            last_to_target = new RBMMatrixConnection();
            last_to_target->up_size = layers[n_layers-1]->size;
            last_to_target->down_size = n_classes;
            last_to_target->random_gen = random_gen;
            last_to_target->build();

            PP<RBMMultinomialLayer> target_layer = new RBMMultinomialLayer();
            target_layer->size = n_classes;
            target_layer->random_gen = random_gen;
            target_layer->build();

            PLASSERT_MSG(n_layers >= 2, "You must specify at least two layers (the "
                         "input layer and one hidden layer)");

            classification_module = new RBMClassificationModule();
            classification_module->previous_to_last = connections[n_layers-2];
            classification_module->last_layer =
                (RBMBinomialLayer*) (RBMLayer*) layers[n_layers-1];
            classification_module->last_to_target = last_to_target;
            classification_module->target_layer = target_layer;
            classification_module->random_gen = random_gen;
            classification_module->build();
        }

        classification_cost = new NLLCostModule();
        classification_cost->input_size = n_classes;
        classification_cost->target_size = 1;
        classification_cost->build();

        joint_layer = new RBMMixedLayer();
        joint_layer->sub_layers.resize( 2 );
        joint_layer->sub_layers[0] = layers[ n_layers-2 ];
        joint_layer->sub_layers[1] = classification_module->target_layer;
        joint_layer->random_gen = random_gen;
        joint_layer->build();
    }

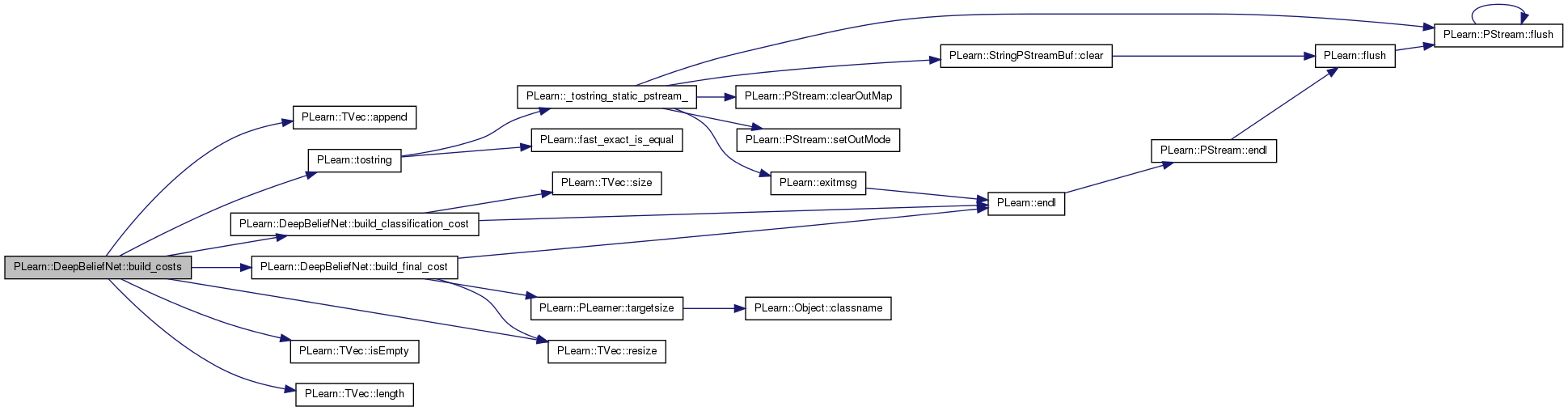

| void PLearn::DeepBeliefNet::build_costs | ( | ) | [private] |

Definition at line 526 of file DeepBeliefNet.cc.

References PLearn::TVec< T >::append(), build_classification_cost(), build_final_cost(), class_cost_index, cost_names, cumulative_testing_time_cost_index, cumulative_training_time_cost_index, final_cost, final_cost_index, greedy_target_layer_nlls_index, greedy_target_layers, i, PLearn::TVec< T >::isEmpty(), j, PLearn::TVec< T >::length(), n_classes, n_layers, nll_cost_index, partial_costs, partial_costs_indices, PLASSERT, PLERROR, PLearn::PLearner::random_gen, reconstruct_layerwise, reconstruction_cost_index, reconstruction_costs, PLearn::TVec< T >::resize(), target_one_hot, PLearn::tostring(), training_cpu_time_cost_index, and use_classification_cost.

Referenced by build_().

    {
        cost_names.resize(0);

        int current_index = 0;

        // build the classification module, its cost and the joint layer
        if( use_classification_cost )
        {
            PLASSERT( n_classes >= 2 );
            build_classification_cost();

            cost_names.append("NLL");
            nll_cost_index = current_index;
            current_index++;

            cost_names.append("class_error");
            class_cost_index = current_index;
            current_index++;
        }

        if( final_cost )
        {
            build_final_cost();

            TVec<string> final_names = final_cost->costNames();
            int n_final_costs = final_names.length();

            for( int i=0; i<n_final_costs; i++ )
                cost_names.append("final." + final_names[i]);

            final_cost_index = current_index;
            current_index += n_final_costs;
        }

        if( partial_costs )
        {
            int n_partial_costs = partial_costs.length();
            if( n_partial_costs != n_layers - 1)
                PLERROR("DeepBeliefNet::build_costs() - \n"
                        "partial_costs.length() (%d) != n_layers-1 (%d).\n",
                        n_partial_costs, n_layers-1);
            partial_costs_indices.resize(n_partial_costs);

            for( int i=0; i<n_partial_costs; i++ )
                if( partial_costs[i] )
                {
                    TVec<string> names = partial_costs[i]->costNames();
                    int n_partial_costs_i = names.length();
                    for( int j=0; j<n_partial_costs_i; j++ )
                        cost_names.append("partial"+tostring(i)+"."+names[j]);
                    partial_costs_indices[i] = current_index;
                    current_index += n_partial_costs_i;

                    // Share random_gen with partial_costs[i], unless it already
                    // has one
                    if( !(partial_costs[i]->random_gen) )
                    {
                        partial_costs[i]->random_gen = random_gen;
                        partial_costs[i]->forget();
                    }
                }
                else
                    partial_costs_indices[i] = -1;
        }
        else
            partial_costs_indices.resize(0);

        if( reconstruct_layerwise )
        {
            reconstruction_costs.resize(n_layers);

            cost_names.append("layerwise_reconstruction_error");
            reconstruction_cost_index = current_index;
            current_index++;

            for( int i=0; i<n_layers-1; i++ )
                cost_names.append("layer"+tostring(i)+".reconstruction_error");
            current_index += n_layers-1;
        }
        else
            reconstruction_costs.resize(0);

        if( !greedy_target_layers.isEmpty() )
        {
            greedy_target_layer_nlls_index = current_index;
            target_one_hot.resize(n_classes);
            for( int i=0; i<n_layers-1; i++ )
            {
                cost_names.append("layer"+tostring(i)+".nll");
                current_index++;
            }
        }

        cost_names.append("cpu_time");
        cost_names.append("cumulative_train_time");
        cost_names.append("cumulative_test_time");

        training_cpu_time_cost_index = current_index;
        current_index++;
        cumulative_training_time_cost_index = current_index;
        current_index++;
        cumulative_testing_time_cost_index = current_index;
        current_index++;

        PLASSERT( current_index == cost_names.length() );
    }
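A simplified sketch of the index bookkeeping in `build_costs()`. Each cost group is appended to `cost_names` and its starting index recorded, and the three timing costs always come last. Partial and greedy-target costs are omitted. The presence of a final cost is approximated by a non-empty list of final cost names. The `CostLayout` struct and `layoutCosts()` function are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Simplified cost layout (partial and greedy-target costs omitted).
// CostLayout and layoutCosts() are hypothetical names.
struct CostLayout {
    std::vector<std::string> names;
    int nll = -1, class_error = -1, final_begin = -1, reconstruction = -1, cpu_time = -1;
};

CostLayout layoutCosts(bool use_classification_cost,
                       const std::vector<std::string>& final_cost_names,
                       bool reconstruct_layerwise, int n_layers) {
    CostLayout L;
    // Append a cost name and return its index in the cost vector.
    auto add = [&L](const std::string& n) {
        L.names.push_back(n);
        return static_cast<int>(L.names.size()) - 1;
    };
    if (use_classification_cost) {
        L.nll = add("NLL");
        L.class_error = add("class_error");
    }
    if (!final_cost_names.empty()) {           // stands in for "if( final_cost )"
        L.final_begin = static_cast<int>(L.names.size());
        for (const auto& n : final_cost_names) add("final." + n);
    }
    if (reconstruct_layerwise) {
        L.reconstruction = add("layerwise_reconstruction_error");
        for (int i = 0; i < n_layers - 1; ++i)
            add("layer" + std::to_string(i) + ".reconstruction_error");
    }
    L.cpu_time = add("cpu_time");               // timing costs always come last
    add("cumulative_train_time");
    add("cumulative_test_time");
    return L;
}
```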

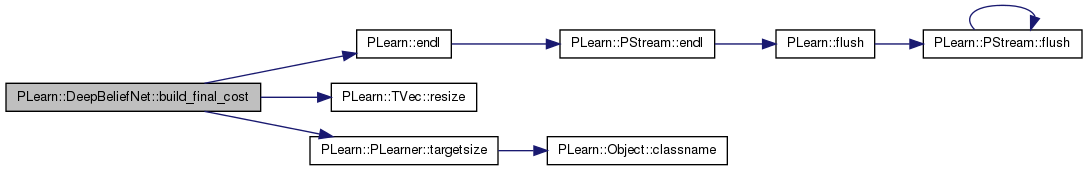

| void PLearn::DeepBeliefNet::build_final_cost | ( | ) | [private] |

Definition at line 865 of file DeepBeliefNet.cc.

References PLearn::endl(), final_cost, final_cost_gradient, final_module, grad_learning_rate, layers, n_classes, n_layers, PLASSERT_MSG, PLERROR, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::PLearner::targetsize(), and PLearn::PLearner::targetsize_.

Referenced by build_costs().

    {
        MODULE_LOG << "build_final_cost() called" << endl;

        PLASSERT_MSG(final_cost->input_size >= 0, "The input size of the final "
                     "cost must be non-negative");

        final_cost_gradient.resize( final_cost->input_size );
        final_cost->setLearningRate( grad_learning_rate );

        if( final_module )
        {
            if( layers[n_layers-1]->size != final_module->input_size )
                PLERROR("DeepBeliefNet::build_final_cost() - "
                        "layers[%i]->size (%d) != final_module->input_size (%d)."
                        "\n", n_layers-1, layers[n_layers-1]->size,
                        final_module->input_size);

            if( final_module->output_size != final_cost->input_size )
                PLERROR("DeepBeliefNet::build_final_cost() - "
                        "final_module->output_size (%d) != final_cost->input_size (%d)."
                        "\n", final_module->output_size,
                        final_cost->input_size);

            final_module->setLearningRate( grad_learning_rate );

            // Share random_gen with final_module, unless it already has one
            if( !(final_module->random_gen) )
            {
                final_module->random_gen = random_gen;
                final_module->forget();
            }

            // check target size and final_cost->input_size
            if( n_classes == 0 ) // regression
            {
                if( targetsize_ >= 0 && final_cost->input_size != targetsize() )
                    PLERROR("DeepBeliefNet::build_final_cost() - "
                            "final_cost->input_size (%d) != targetsize() (%d), "
                            "although we are doing regression (n_classes == 0).\n",
                            final_cost->input_size, targetsize());
            }
            else
            {
                if( final_cost->input_size != n_classes )
                    PLERROR("DeepBeliefNet::build_final_cost() - "
                            "final_cost->input_size (%d) != n_classes (%d), "
                            "although we are doing classification (n_classes != 0).\n",
                            final_cost->input_size, n_classes);

                if( targetsize_ >= 0 && targetsize() != 1 )
                    PLERROR("DeepBeliefNet::build_final_cost() - "
                            "targetsize() (%d) != 1, "
                            "although we are doing classification (n_classes != 0).\n",
                            targetsize());
            }
        }
        else
        {
            if( layers[n_layers-1]->size != final_cost->input_size )
                PLERROR("DeepBeliefNet::build_final_cost() - "
                        "layers[%i]->size (%d) != final_cost->input_size (%d)."
                        "\n", n_layers-1, layers[n_layers-1]->size,
                        final_cost->input_size);
        }

        // Share random_gen with final_cost, unless it already has one
        if( !(final_cost->random_gen) )
        {
            final_cost->random_gen = random_gen;
            final_cost->forget();
        }
    }

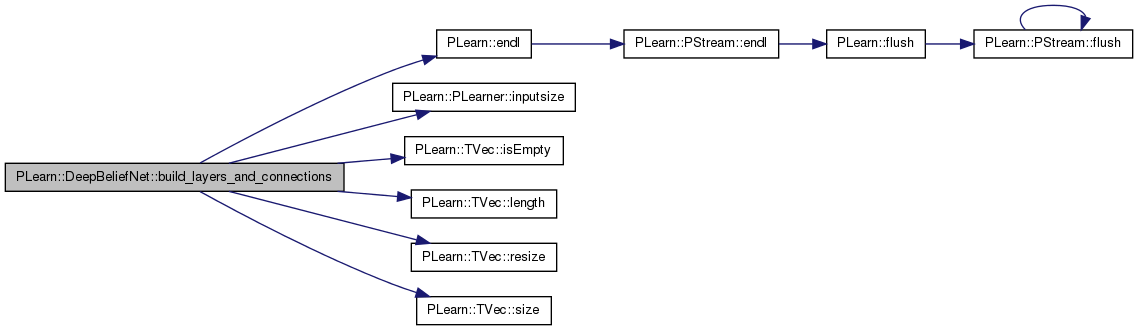

| void PLearn::DeepBeliefNet::build_layers_and_connections | ( | ) | [private] |

Definition at line 637 of file DeepBeliefNet.cc.

References activation_gradients, activations_gradients, background_gibbs_update_ratio, batch_size, c, connections, PLearn::endl(), expectation_gradients, expectation_indices, expectations_gradients, fraction_of_masked_inputs, gibbs_down_state, greedy_joint_connections, greedy_joint_layers, greedy_target_activation_gradients, greedy_target_activations, greedy_target_connections, greedy_target_expectation_gradients, greedy_target_expectations, greedy_target_layers, greedy_target_probability_gradients, i, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, PLearn::TVec< T >::isEmpty(), j, layers, PLearn::TVec< T >::length(), minibatch_hack, n_classes, n_layers, noise_type, online, partial_costs, PLASSERT, PLASSERT_MSG, PLERROR, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), use_classification_cost, and use_corrupted_posDownVal.

Referenced by build_().

{

MODULE_LOG << "build_layers_and_connections() called" << endl;

if( connections.length() != n_layers-1 )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"connections.length() (%d) != n_layers-1 (%d).\n",

connections.length(), n_layers-1);

if( inputsize_ >= 0 )

PLASSERT( layers[0]->size == inputsize() );

activation_gradients.resize( n_layers );

activations_gradients.resize( n_layers );

expectation_gradients.resize( n_layers );

expectations_gradients.resize( n_layers );

gibbs_down_state.resize( n_layers-1 );

expectation_indices.resize( n_layers-1 );

for( int i=0 ; i<n_layers-1 ; i++ )

{

if( layers[i]->size != connections[i]->down_size )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"layers[%i]->size (%d) != connections[%i]->down_size (%d)."

"\n", i, layers[i]->size, i, connections[i]->down_size);

if( connections[i]->up_size != layers[i+1]->size )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"connections[%i]->up_size (%d) != layers[%i]->size (%d)."

"\n", i, connections[i]->up_size, i+1, layers[i+1]->size);

// Assign random_gen to layers[i] and connections[i], unless they

// already have one

if( !(layers[i]->random_gen) )

{

layers[i]->random_gen = random_gen;

layers[i]->forget();

}

if( !(connections[i]->random_gen) )

{

connections[i]->random_gen = random_gen;

connections[i]->forget();

}

activation_gradients[i].resize( layers[i]->size );

expectation_gradients[i].resize( layers[i]->size );

if( greedy_target_layers.length()>i && greedy_target_layers[i] )

{

if( use_classification_cost )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_classification_cost not implemented for greedy_target_layers.");

if( greedy_target_connections.length()>i && !greedy_target_connections[i] )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"some greedy_target_connections are missing.");

if( greedy_target_layers[i]->size != n_classes)

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"greedy_target_layers[%d] should be of size %d.",i,n_classes);

if( greedy_target_connections[i]->down_size != n_classes ||

greedy_target_connections[i]->up_size != layers[i+1]->size )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"greedy_target_connections[%d] should be of size (down_size=%d, up_size=%d).",

i,n_classes,layers[i+1]->size);

if( partial_costs.length() != 0 )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"greedy_target_layers can't be used with partial_costs.");

greedy_target_expectations.resize(n_layers-1);

greedy_target_activations.resize(n_layers-1);

greedy_target_expectation_gradients.resize(n_layers-1);

greedy_target_activation_gradients.resize(n_layers-1);

greedy_target_probability_gradients.resize(n_layers-1);

greedy_target_expectations[i].resize(n_classes);

greedy_target_activations[i].resize(n_classes);

greedy_target_expectation_gradients[i].resize(n_classes);

greedy_target_activation_gradients[i].resize(n_classes);

greedy_target_probability_gradients[i].resize(n_classes);

for( int c=0; c<n_classes; c++)

{

greedy_target_expectations[i][c].resize(layers[i+1]->size);

greedy_target_activations[i][c].resize(layers[i+1]->size);

greedy_target_expectation_gradients[i][c].resize(layers[i+1]->size);

greedy_target_activation_gradients[i][c].resize(layers[i+1]->size);

}

greedy_joint_layers.resize(n_layers-1);

PP<RBMMixedLayer> ml = new RBMMixedLayer();

ml->sub_layers.resize(2);

ml->sub_layers[0] = layers[ i ];

ml->sub_layers[1] = greedy_target_layers[ i ];

ml->random_gen = random_gen;

ml->build();

greedy_joint_layers[i] = (RBMMixedLayer *)ml;

greedy_joint_connections.resize(n_layers-1);

PP<RBMMixedConnection> mc = new RBMMixedConnection();

mc->sub_connections.resize(1,2);

mc->sub_connections(0,0) = connections[i];

mc->sub_connections(0,1) = greedy_target_connections[i];

mc->build();

greedy_joint_connections[i] = (RBMMixedConnection *)mc;

if( !(greedy_target_connections[i]->random_gen) )

{

greedy_target_connections[i]->random_gen = random_gen;

greedy_target_connections[i]->forget();

}

if( !(greedy_target_layers[i]->random_gen) )

{

greedy_target_layers[i]->random_gen = random_gen;

greedy_target_layers[i]->forget();

}

}

if( use_corrupted_posDownVal != "none" )

{

if( greedy_target_layers.length() != 0 )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_corrupted_posDownVal not implemented for greedy_target_layers.");

if( online )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_corrupted_posDownVal not implemented for online.");

if( use_classification_cost )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_classification_cost not implemented for use_corrupted_posDownVal.");

if( background_gibbs_update_ratio != 0 )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_corrupted_posDownVal not implemented with background_gibbs_update_ratio!=0.");

if( batch_size != 1 || minibatch_hack )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_corrupted_posDownVal not implemented for batch_size != 1 or minibatch_hack.");

if( !partial_costs.isEmpty() )

PLERROR("DeepBeliefNet::build_layers_and_connections() - \n"

"use_corrupted_posDownVal not implemented for partial_costs.");

if( noise_type == "masking_noise" && fraction_of_masked_inputs > 0 )

{

expectation_indices[i].resize( layers[i]->size );

for( int j=0 ; j < expectation_indices[i].length() ; j++ )

expectation_indices[i][j] = j;

}

}

}

if( !(layers[n_layers-1]->random_gen) )

{

layers[n_layers-1]->random_gen = random_gen;

layers[n_layers-1]->forget();

}

int last_layer_size = layers[n_layers-1]->size;

PLASSERT_MSG(last_layer_size >= 0,

"Size of last layer must be non-negative");

activation_gradients[n_layers-1].resize(last_layer_size);

expectation_gradients[n_layers-1].resize(last_layer_size);

}
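The size checks in build_layers_and_connections() enforce one invariant: there are exactly n_layers-1 connections, and connection i maps layers[i] (its down side) onto layers[i+1] (its up side). The sketch below restates that invariant in standalone C++. LayerSpec, ConnectionSpec, checkStack and isValidStack are hypothetical names, not PLearn classes, and std::invalid_argument stands in for PLERROR.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-ins for RBMLayer / RBMConnection: only the sizes matter here.
struct LayerSpec { int size; };
struct ConnectionSpec { int down_size; int up_size; };

// Mirrors the checks in build_layers_and_connections(): n_layers-1 connections,
// and connection i must go from layers[i] (down) to layers[i+1] (up).
// Throws std::invalid_argument where the original calls PLERROR.
void checkStack(const std::vector<LayerSpec>& layers,
                const std::vector<ConnectionSpec>& connections)
{
    if (layers.empty())
        throw std::invalid_argument("at least one layer is required");
    if (connections.size() != layers.size() - 1)
        throw std::invalid_argument("connections.size() != n_layers-1");
    for (std::size_t i = 0; i < connections.size(); ++i) {
        if (layers[i].size != connections[i].down_size)
            throw std::invalid_argument(
                "layers[" + std::to_string(i) + "].size != connections[" +
                std::to_string(i) + "].down_size");
        if (connections[i].up_size != layers[i + 1].size)
            throw std::invalid_argument(
                "connections[" + std::to_string(i) + "].up_size != layers[" +
                std::to_string(i + 1) + "].size");
    }
}

// Convenience wrapper: true when the stack is consistent, false otherwise.
bool isValidStack(const std::vector<LayerSpec>& layers,
                  const std::vector<ConnectionSpec>& connections)
{
    try { checkStack(layers, connections); return true; }
    catch (const std::invalid_argument&) { return false; }
}
```

For example, a 784-500-10 stack is accepted with connections of sizes (784, 500) and (500, 10), but rejected if the second connection declares a down size of 400.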

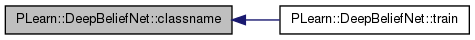

| string PLearn::DeepBeliefNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 55 of file DeepBeliefNet.cc.

Referenced by train().

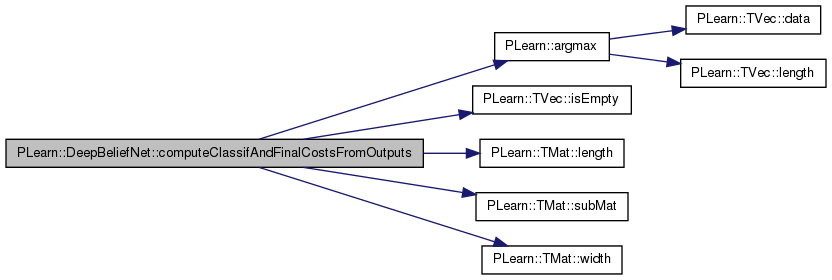

| void PLearn::DeepBeliefNet::computeClassifAndFinalCostsFromOutputs | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| const Mat & | targets, | ||

| Mat & | costs | ||

| ) | const [virtual] |

Definition at line 3387 of file DeepBeliefNet.cc.

References PLearn::argmax(), class_cost_index, classification_cost, final_cost, final_cost_index, final_cost_values, PLearn::TVec< T >::isEmpty(), PLearn::TMat< T >::length(), n_classes, nll_cost_index, partial_costs, PLASSERT, PLERROR, PLearn::TMat< T >::subMat(), use_classification_cost, and PLearn::TMat< T >::width().

Referenced by computeOutputsAndCosts().

{

// Compute the costs from *already* computed output.

int nsamples = inputs.length();

PLASSERT( nsamples > 0 );

PLASSERT( targets.length() == nsamples );

PLASSERT( targets.width() == 1 );

PLASSERT( outputs.length() == nsamples );

PLASSERT( costs.length() == nsamples );

if( use_classification_cost )

{

Vec pcosts;

classification_cost->CostModule::fprop( outputs.subMat(0, 0, nsamples, n_classes),

targets, pcosts );

costs.subMat( 0, nll_cost_index, nsamples, 1) << pcosts;

for (int isample = 0; isample < nsamples; isample++ )

costs(isample,class_cost_index) =

(argmax(outputs(isample).subVec(0, n_classes)) == (int) round(targets(isample,0))) ? 0 : 1;

}

if( final_cost )

{

int init = use_classification_cost ? n_classes : 0;

final_cost->fprop( outputs.subMat(0, init, nsamples, outputs(0).size() - init ),

targets, final_cost_values );

costs.subMat(0, final_cost_index, nsamples, final_cost_values.width())

<< final_cost_values;

}

if( !partial_costs.isEmpty() )

PLERROR("cannot compute partial costs in DeepBeliefNet::computeClassifAndFinalCostsFromOutputs(Mat&, Mat&, Mat&, Mat&) "

"(expectations are not up to date in the batch version)");

}
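The class-error column computed above is a 0/1 loss: the prediction is the argmax over the first n_classes entries of each output row, compared with the target rounded to an integer. A minimal standalone sketch using std::vector in place of PLearn's Mat/Vec; argmaxIndex, classError and classErrors are hypothetical helpers, and breaking ties in favour of the lowest index is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Index of the largest of the first n entries of v (lowest index wins ties here).
std::size_t argmaxIndex(const std::vector<double>& v, std::size_t n)
{
    assert(n > 0 && n <= v.size());
    std::size_t best = 0;
    for (std::size_t k = 1; k < n; ++k)
        if (v[k] > v[best]) best = k;
    return best;
}

// 0/1 error for one sample: the first n_classes entries of `output` are class
// scores; `target` is a class index stored as a real, so it is rounded first.
// Entries after n_classes (e.g. a final module's output) are ignored.
double classError(const std::vector<double>& output, double target, std::size_t n_classes)
{
    const long predicted = static_cast<long>(argmaxIndex(output, n_classes));
    return predicted == std::lround(target) ? 0.0 : 1.0;
}

// Batch version: one error per row, like the loop over isample.
std::vector<double> classErrors(const std::vector<std::vector<double>>& outputs,
                                const std::vector<double>& targets,
                                std::size_t n_classes)
{
    assert(outputs.size() == targets.size());
    std::vector<double> errs(outputs.size());
    for (std::size_t i = 0; i < outputs.size(); ++i)
        errs[i] = classError(outputs[i], targets[i], n_classes);
    return errs;
}
```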

| void PLearn::DeepBeliefNet::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 3288 of file DeepBeliefNet.cc.

References PLearn::argmax(), class_cost_index, classification_cost, PLearn::TVec< T >::clear(), cost_names, PLearn::TVec< T >::fill(), PLearn::fill_one_hot(), final_cost, final_cost_index, final_cost_value, greedy_target_layer_nlls_index, greedy_target_layers, i, PLearn::TVec< T >::isEmpty(), layers, PLearn::TVec< T >::length(), MISSING_VALUE, n_classes, n_layers, nll_cost_index, partial_costs, partial_costs_indices, reconstruct_layerwise, reconstruction_cost_index, reconstruction_costs, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::TVec< T >::subVec(), target_one_hot, and use_classification_cost.

{

// Compute the costs from *already* computed output.

costs.resize( cost_names.length() );

costs.fill( MISSING_VALUE );

// For cleaner code that does not depend on the order in which this method and

// computeOutput are called, this should be in a redefinition of computeOutputAndCosts.

if( use_classification_cost )

{

classification_cost->CostModule::fprop( output.subVec(0, n_classes),

target, costs[nll_cost_index] );

costs[class_cost_index] =

(argmax(output.subVec(0, n_classes)) == (int) round(target[0]))? 0 : 1;

}

if( final_cost )

{

int init = use_classification_cost ? n_classes : 0;

final_cost->fprop( output.subVec( init, output.size() - init ),

target, final_cost_value );

costs.subVec(final_cost_index, final_cost_value.length())

<< final_cost_value;

}

if( !partial_costs.isEmpty() )

{

Vec pcosts;

for( int i=0 ; i<n_layers-1 ; i++ )

// propagate into local cost associated to output of layer i+1

if( partial_costs[ i ] )

{

partial_costs[ i ]->fprop( layers[ i+1 ]->expectation,

target, pcosts);

costs.subVec(partial_costs_indices[i], pcosts.length())

<< pcosts;

}

}

if( !greedy_target_layers.isEmpty() )

{

target_one_hot.clear();

fill_one_hot( target_one_hot,

(int) round(target[0]), real(0.), real(1.) );

for( int i=0 ; i<n_layers-1 ; i++ )

if( greedy_target_layers[i] )

costs[greedy_target_layer_nlls_index+i] =

greedy_target_layers[i]->fpropNLL(target_one_hot);

else

costs[greedy_target_layer_nlls_index+i] = MISSING_VALUE;

}

if (reconstruct_layerwise)

costs.subVec(reconstruction_cost_index, reconstruction_costs.length())

<< reconstruction_costs;

}
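computeCostsFromOutputs() starts from a cost vector filled with MISSING_VALUE and writes each family of costs into its own slice (nll_cost_index, final_cost_index, partial_costs_indices, and so on); for greedy target layers it also builds a one-hot encoding of the target. The sketch below imitates those two pieces with the standard library. It assumes MISSING_VALUE behaves like a quiet NaN, and oneHot, writeCosts and kMissing are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <stdexcept>
#include <vector>

// Assumed stand-in for PLearn's MISSING_VALUE: a quiet NaN.
const double kMissing = std::numeric_limits<double>::quiet_NaN();

// Like target_one_hot.clear() followed by fill_one_hot(target_one_hot, c, 0, 1).
std::vector<double> oneHot(double target, std::size_t n_classes)
{
    const long c = std::lround(target);
    if (c < 0 || static_cast<std::size_t>(c) >= n_classes)
        throw std::out_of_range("target class out of range");
    std::vector<double> v(n_classes, 0.0);
    v[static_cast<std::size_t>(c)] = 1.0;
    return v;
}

// Copies `values` into `costs` starting at `index`, like
// costs.subVec(index, values.length()) << values.
void writeCosts(std::vector<double>& costs, std::size_t index,
                const std::vector<double>& values)
{
    if (index + values.size() > costs.size())
        throw std::out_of_range("cost slice out of range");
    for (std::size_t k = 0; k < values.size(); ++k)
        costs[index + k] = values[k];
}
```

Slots that no cost module writes stay missing, which is why the vector is filled first.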

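| void PLearn::DeepBeliefNet::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.

We haven't computed the expectations of the top layer.

Reconstruction error of the top layer.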

Reimplemented from PLearn::PLearner.

Definition at line 3115 of file DeepBeliefNet.cc.

References PLearn::TVec< T >::append(), c, classification_module, PLearn::TVec< T >::clear(), connections, final_cost, final_cost_input, final_module, greedy_target_connections, greedy_target_expectations, greedy_target_layers, i, i_output_layer, PLearn::TVec< T >::isEmpty(), layer_input, layers, PLearn::TVec< T >::length(), PLearn::multiplyScaledAdd(), n_classes, n_layers, outputsize(), partial_costs, reconstruct_layerwise, reconstruction_costs, PLearn::TVec< T >::resize(), PLearn::TVec< T >::toMat(), and use_classification_cost.

Referenced by computeOutputsAndCosts().

{

// Compute the output from the input.

output.resize(0);

// fprop

layers[0]->expectation << input;

if(reconstruct_layerwise)

reconstruction_costs[0]=0;

for( int i=0 ; i<n_layers-2 ; i++ )

{

if( greedy_target_layers.length() && greedy_target_layers[i] )

{

connections[i]->setAsDownInput( layers[i]->expectation );

layers[i+1]->getAllActivations( connections[i] );

greedy_target_layers[i]->activation.clear();

greedy_target_layers[i]->activation += greedy_target_layers[i]->bias;

for( int c=0; c<n_classes; c++ )

{

// Compute class free-energy

layers[i+1]->activation.toMat(layers[i+1]->size,1) += greedy_target_connections[i]->weights.column(c);

greedy_target_layers[i]->activation[c] -= layers[i+1]->freeEnergyContribution(layers[i+1]->activation);

// Compute class dependent expectation and store it

layers[i+1]->expectation_is_not_up_to_date();

layers[i+1]->computeExpectation();

greedy_target_expectations[i][c] << layers[i+1]->expectation;

// Remove class-dependent energy for next free-energy computations

layers[i+1]->activation.toMat(layers[i+1]->size,1) -= greedy_target_connections[i]->weights.column(c);

}

greedy_target_layers[i]->expectation_is_not_up_to_date();

greedy_target_layers[i]->computeExpectation();

// Computing next layer representation

layers[i+1]->expectation.clear();

Vec expectation = layers[i+1]->expectation;

for( int c=0; c<n_classes; c++ )

{

Vec expectation_c = greedy_target_expectations[i][c];

real p_c = greedy_target_layers[i]->expectation[c];

multiplyScaledAdd(expectation_c, real(1.), p_c, expectation);

}

}

else

{

connections[i]->setAsDownInput( layers[i]->expectation );

layers[i+1]->getAllActivations( connections[i] );

layers[i+1]->computeExpectation();

}

if( i_output_layer==i && (!use_classification_cost && !final_module))

{

output.resize(outputsize());

output << layers[ i ]->expectation;

}

if (reconstruct_layerwise)

{

layer_input.resize(layers[i]->size);

layer_input << layers[i]->expectation;

connections[i]->setAsUpInput(layers[i+1]->expectation);

layers[i]->getAllActivations(connections[i]);

real rc = reconstruction_costs[i+1] = layers[i]->fpropNLL( layer_input );

reconstruction_costs[0] += rc;

}

}

if( i_output_layer>=n_layers-2 && (!use_classification_cost && !final_module))

{

if(i_output_layer==n_layers-1)

{

connections[ n_layers-2 ]->setAsDownInput(layers[ n_layers-2 ]->expectation );

layers[ n_layers-1 ]->getAllActivations( connections[ n_layers-2 ] );

layers[ n_layers-1 ]->computeExpectation();

}

output.resize(outputsize());

output << layers[ i_output_layer ]->expectation;

}

if( use_classification_cost )

classification_module->fprop( layers[ n_layers-2 ]->expectation,

output );

if( final_cost || (!partial_costs.isEmpty() && partial_costs[n_layers-2] ))

{

if( greedy_target_layers.length() && greedy_target_layers[n_layers-2] )

{

connections[n_layers-2]->setAsDownInput( layers[n_layers-2]->expectation );

layers[n_layers-1]->getAllActivations( connections[n_layers-2] );

greedy_target_layers[n_layers-2]->activation.clear();

greedy_target_layers[n_layers-2]->activation +=

greedy_target_layers[n_layers-2]->bias;

for( int c=0; c<n_classes; c++ )

{

// Compute class free-energy

layers[n_layers-1]->activation.toMat(layers[n_layers-1]->size,1) +=

greedy_target_connections[n_layers-2]->weights.column(c);

greedy_target_layers[n_layers-2]->activation[c] -=

layers[n_layers-1]->freeEnergyContribution(layers[n_layers-1]->activation);

// Compute class dependent expectation and store it

layers[n_layers-1]->expectation_is_not_up_to_date();

layers[n_layers-1]->computeExpectation();

greedy_target_expectations[n_layers-2][c] << layers[n_layers-1]->expectation;

// Remove class-dependent energy for next free-energy computations

layers[n_layers-1]->activation.toMat(layers[n_layers-1]->size,1) -=

greedy_target_connections[n_layers-2]->weights.column(c);

}

greedy_target_layers[n_layers-2]->expectation_is_not_up_to_date();

greedy_target_layers[n_layers-2]->computeExpectation();

// Computing next layer representation

layers[n_layers-1]->expectation.clear();

Vec expectation = layers[n_layers-1]->expectation;

for( int c=0; c<n_classes; c++ )

{

Vec expectation_c = greedy_target_expectations[n_layers-2][c];

real p_c = greedy_target_layers[n_layers-2]->expectation[c];

multiplyScaledAdd(expectation_c,real(1.), p_c, expectation);

}

}

else

{

connections[ n_layers-2 ]->setAsDownInput(

layers[ n_layers-2 ]->expectation );

layers[ n_layers-1 ]->getAllActivations( connections[ n_layers-2 ] );

layers[ n_layers-1 ]->computeExpectation();

}

if( final_module )

{

final_module->fprop( layers[ n_layers-1 ]->expectation,

final_cost_input );

output.append( final_cost_input );

}

else

{

output.append( layers[ n_layers-1 ]->expectation );

}

if (reconstruct_layerwise)

{

layer_input.resize(layers[n_layers-2]->size);

layer_input << layers[n_layers-2]->expectation;

connections[n_layers-2]->setAsUpInput(layers[n_layers-1]->expectation);

layers[n_layers-2]->getAllActivations(connections[n_layers-2]);

real rc = reconstruction_costs[n_layers-1] = layers[n_layers-2]->fpropNLL( layer_input );

reconstruction_costs[0] += rc;

}

}

if(!use_classification_cost && !final_module)

{

if (reconstruct_layerwise)

{

layer_input.resize(layers[n_layers-2]->size);

layer_input << layers[n_layers-2]->expectation;

connections[n_layers-2]->setAsUpInput(layers[n_layers-1]->expectation);

layers[n_layers-2]->getAllActivations(connections[n_layers-2]);

real rc = reconstruction_costs[n_layers-1] = layers[n_layers-2]->fpropNLL( layer_input );

reconstruction_costs[0] += rc;

}

}

}
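When greedy_target_layers[i] is set, computeOutput() does not use a plain up-propagation. It scores each class c as the class bias minus the free-energy contribution of the hidden activation shifted by column c of the target weights, turns the scores into class probabilities through the target layer, and averages the class-conditional hidden expectations with those probabilities. The sketch assumes binomial (sigmoid) hidden units, whose free-energy contribution is taken to be minus the sum of softplus over the activations, and a softmax target layer; mixtureExpectation and the row-major Mat alias are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // W[j][c]: hidden unit j, class c (assumed layout)

double softplus(double x) { return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x)); }
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// a: hidden activations from the ordinary connection (biases included),
// W: hidden x n_classes target weights, b: target-layer biases.
// Fills p_class with p(c|x) and returns E[h|x] = sum_c p(c|x) * sigmoid(a + W[:,c]).
Vec mixtureExpectation(const Vec& a, const Mat& W, const Vec& b, Vec& p_class)
{
    const std::size_t H = a.size(), C = b.size();
    assert(C > 0 && W.size() == H);
    Vec score(b);                        // score_c starts at the class bias
    Mat cond(C, Vec(H));                 // class-conditional expectations
    for (std::size_t c = 0; c < C; ++c)
        for (std::size_t j = 0; j < H; ++j) {
            assert(W[j].size() == C);
            const double ajc = a[j] + W[j][c];
            score[c] += softplus(ajc);   // minus the (assumed) free-energy contribution
            cond[c][j] = sigmoid(ajc);
        }
    // Softmax over classes, subtracting the max for numerical stability.
    const double m = *std::max_element(score.begin(), score.end());
    double z = 0.0;
    p_class.assign(C, 0.0);
    for (std::size_t c = 0; c < C; ++c) {
        p_class[c] = std::exp(score[c] - m);
        z += p_class[c];
    }
    for (double& p : p_class) p /= z;
    // Mixture of class-conditional expectations (the multiplyScaledAdd loop).
    Vec h(H, 0.0);
    for (std::size_t c = 0; c < C; ++c)
        for (std::size_t j = 0; j < H; ++j)
            h[j] += p_class[c] * cond[c][j];
    return h;
}
```

With all-zero target weights and biases the class posterior is uniform and the result reduces to sigmoid(a), which is a quick sanity check.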

| void PLearn::DeepBeliefNet::computeOutputsAndCosts | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| Mat & | outputs, | ||

| Mat & | costs | ||

| ) | const [virtual] |

This function is useful when the NLL CostModule and/or the final_cost module are more efficient with batch computation (or need to be computed on a bunch of examples, as with LayerCostModule).

Reimplemented from PLearn::PLearner.

Definition at line 3353 of file DeepBeliefNet.cc.

References computeClassifAndFinalCostsFromOutputs(), computeOutput(), cost_names, PLearn::TMat< T >::fill(), i, PLearn::TVec< T >::isEmpty(), layers, PLearn::TVec< T >::length(), PLearn::TMat< T >::length(), MISSING_VALUE, n_layers, outputsize(), partial_costs, partial_costs_indices, PLASSERT, reconstruct_layerwise, reconstruction_cost_index, reconstruction_costs, and PLearn::TMat< T >::resize().

{

int nsamples = inputs.length();

PLASSERT( targets.length() == nsamples );

outputs.resize( nsamples, outputsize() );

costs.resize( nsamples, cost_names.length() );

costs.fill( MISSING_VALUE );

for (int isample = 0; isample < nsamples; isample++ )

{

Vec in_i = inputs(isample);

Vec out_i = outputs(isample);

computeOutput(in_i, out_i);

if( !partial_costs.isEmpty() )

{

Vec pcosts;

for( int i=0 ; i<n_layers-1 ; i++ )

// propagate into local cost associated to output of layer i+1

if( partial_costs[ i ] )

{

partial_costs[ i ]->fprop( layers[ i+1 ]->expectation,

targets(isample), pcosts);

costs(isample).subVec(partial_costs_indices[i], pcosts.length())

<< pcosts;

}

}

if (reconstruct_layerwise)

costs(isample).subVec(reconstruction_cost_index, reconstruction_costs.length())

<< reconstruction_costs;

}

computeClassifAndFinalCostsFromOutputs(inputs, outputs, targets, costs);

}
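computeOutputsAndCosts() runs computeOutput() row by row and then hands the whole output matrix to computeClassifAndFinalCostsFromOutputs(), so that cost modules can work on the batch. The sketch keeps only that two-phase structure and leaves out the per-sample partial and reconstruction costs; outputsAndCosts and its two std::function callbacks are hypothetical stand-ins for the PLearn methods.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <limits>
#include <vector>

using Row = std::vector<double>;
using Rows = std::vector<Row>;

// Phase 1: per-sample forward pass (stands in for computeOutput).
// Phase 2: one batched cost call (stands in for computeClassifAndFinalCostsFromOutputs).
void outputsAndCosts(const Rows& inputs, const Rows& targets,
                     std::size_t outputsize, std::size_t n_costs,
                     const std::function<void(const Row&, Row&)>& forward,
                     const std::function<void(const Rows&, const Rows&, const Rows&, Rows&)>& batchCosts,
                     Rows& outputs, Rows& costs)
{
    assert(targets.size() == inputs.size());
    const double missing = std::numeric_limits<double>::quiet_NaN();
    outputs.assign(inputs.size(), Row(outputsize, 0.0));
    costs.assign(inputs.size(), Row(n_costs, missing));  // costs.fill(MISSING_VALUE)
    for (std::size_t i = 0; i < inputs.size(); ++i)
        forward(inputs[i], outputs[i]);
    batchCosts(inputs, outputs, targets, costs);
}
```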

| void PLearn::DeepBeliefNet::contrastiveDivergenceStep | ( | const PP< RBMLayer > & | down_layer, |

| const PP< RBMConnection > & | connection, | ||

| const PP< RBMLayer > & | up_layer, | ||

| int | layer_index, | ||

| bool | nofprop = false | ||

| ) | |

Performs a step of contrastive divergence, assuming that down_layer->expectation (or down_layer's expectations, in mini-batch mode) is already set.

Definition at line 2802 of file DeepBeliefNet.cc.

References background_gibbs_update_ratio, cd_neg_down_vals, cd_neg_up_vals, corrupt_input(), corrupted_pos_down_val, gibbs_down_state, initialize_gibbs_chain, mean_field_contrastive_divergence_ratio, mf_cd_neg_down_val, mf_cd_neg_down_vals, mf_cd_neg_up_val, mf_cd_neg_up_vals, minibatch_hack, minibatch_size, pos_down_val, pos_down_vals, pos_up_val, pos_up_vals, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), use_corrupted_posDownVal, and use_sample_for_up_layer.

Referenced by greedyStep(), jointGreedyStep(), onlineStep(), and upDownStep().

{

bool mbatch = minibatch_size > 1 || minibatch_hack;

// positive phase

if (!nofprop)

{

if (mbatch) {

connection->setAsDownInputs( down_layer->getExpectations() );

up_layer->getAllActivations( connection, 0, true );

up_layer->computeExpectations();

} else {

if( use_corrupted_posDownVal == "for_cd_fprop" )

{

corrupted_pos_down_val.resize( down_layer->size );

corrupt_input( down_layer->expectation, corrupted_pos_down_val, layer_index );

connection->setAsDownInput( corrupted_pos_down_val );

}

else

connection->setAsDownInput( down_layer->expectation );

up_layer->getAllActivations( connection );

up_layer->computeExpectation();

}

}

if (mbatch)

{

// accumulate positive stats using the expectation

// we deep-copy because the value will change during negative phase

pos_down_vals.resize(minibatch_size, down_layer->size);

pos_up_vals.resize(minibatch_size, up_layer->size);

pos_down_vals << down_layer->getExpectations();

pos_up_vals << up_layer->getExpectations();

up_layer->generateSamples();

// down propagation, starting from a sample of up_layer

if (background_gibbs_update_ratio<1)

// then do some contrastive divergence, o/w only background Gibbs

{

Mat neg_down_vals;

Mat neg_up_vals;

if( mean_field_contrastive_divergence_ratio > 0 )

{

mf_cd_neg_down_vals.resize(minibatch_size, down_layer->size);

mf_cd_neg_up_vals.resize(minibatch_size, up_layer->size);

connection->setAsUpInputs( up_layer->getExpectations() );

down_layer->getAllActivations( connection, 0, true );

down_layer->computeExpectations();

// negative phase

connection->setAsDownInputs( down_layer->getExpectations() );

up_layer->getAllActivations( connection, 0, mbatch );

up_layer->computeExpectations();

mf_cd_neg_down_vals << down_layer->getExpectations();

mf_cd_neg_up_vals << up_layer->getExpectations();

}

if( mean_field_contrastive_divergence_ratio < 1 )

{

if( use_sample_for_up_layer )

pos_up_vals << up_layer->samples;

connection->setAsUpInputs( up_layer->samples );

down_layer->getAllActivations( connection, 0, true );

down_layer->computeExpectations();

down_layer->generateSamples();

// negative phase

connection->setAsDownInputs( down_layer->samples );

up_layer->getAllActivations( connection, 0, mbatch );

up_layer->computeExpectations();

neg_down_vals = down_layer->samples;

if( use_sample_for_up_layer)

{

up_layer->generateSamples();

neg_up_vals = up_layer->samples;

}

else

neg_up_vals = up_layer->getExpectations();

}

if (background_gibbs_update_ratio==0)

// update here only if there is ONLY contrastive divergence

{

if( mean_field_contrastive_divergence_ratio < 1 )

{

real lr_dl = down_layer->learning_rate;

real lr_ul = up_layer->learning_rate;

real lr_c = connection->learning_rate;

down_layer->setLearningRate(lr_dl * (1-mean_field_contrastive_divergence_ratio));

up_layer->setLearningRate(lr_ul * (1-mean_field_contrastive_divergence_ratio));

connection->setLearningRate(lr_c * (1-mean_field_contrastive_divergence_ratio));

down_layer->update( pos_down_vals, neg_down_vals );

connection->update( pos_down_vals, pos_up_vals,

neg_down_vals, neg_up_vals );

up_layer->update( pos_up_vals, neg_up_vals );

down_layer->setLearningRate(lr_dl);

up_layer->setLearningRate(lr_ul);

connection->setLearningRate(lr_c);

}

if( mean_field_contrastive_divergence_ratio > 0 )

{

real lr_dl = down_layer->learning_rate;

real lr_ul = up_layer->learning_rate;

real lr_c = connection->learning_rate;

down_layer->setLearningRate(lr_dl * mean_field_contrastive_divergence_ratio);

up_layer->setLearningRate(lr_ul * mean_field_contrastive_divergence_ratio);

connection->setLearningRate(lr_c * mean_field_contrastive_divergence_ratio);

down_layer->update( pos_down_vals, mf_cd_neg_down_vals );

connection->update( pos_down_vals, pos_up_vals,

mf_cd_neg_down_vals, mf_cd_neg_up_vals );

up_layer->update( pos_up_vals, mf_cd_neg_up_vals );

down_layer->setLearningRate(lr_dl);

up_layer->setLearningRate(lr_ul);

connection->setLearningRate(lr_c);

}

}

else

{

connection->accumulatePosStats(pos_down_vals,pos_up_vals);

cd_neg_down_vals.resize(minibatch_size, down_layer->size);

cd_neg_up_vals.resize(minibatch_size, up_layer->size);

cd_neg_down_vals << neg_down_vals;

cd_neg_up_vals << neg_up_vals;

}

}

//

if (background_gibbs_update_ratio>0)

{

Mat down_state = gibbs_down_state[layer_index];

if (initialize_gibbs_chain) // initializing or re-initializing the chain

{

if (background_gibbs_update_ratio==1) // if <1 just use the CD state

{

up_layer->generateSamples();

connection->setAsUpInputs(up_layer->samples);

down_layer->getAllActivations(connection, 0, true);

down_layer->generateSamples();

down_state << down_layer->samples;

}

initialize_gibbs_chain=false;

}

// sample up state given down state

connection->setAsDownInputs(down_state);

up_layer->getAllActivations(connection, 0, true);

up_layer->generateSamples();

// sample down state given up state, to prepare for next time

connection->setAsUpInputs(up_layer->samples);

down_layer->getAllActivations(connection, 0, true);

down_layer->generateSamples();

// update using the down_state and up_layer->expectations for moving average in negative phase

// (and optionally

if (background_gibbs_update_ratio<1)

{

down_layer->updateCDandGibbs(pos_down_vals,cd_neg_down_vals,

down_state,

background_gibbs_update_ratio);

connection->updateCDandGibbs(pos_down_vals,pos_up_vals,

cd_neg_down_vals, cd_neg_up_vals,

down_state,

up_layer->getExpectations(),

background_gibbs_update_ratio);

up_layer->updateCDandGibbs(pos_up_vals,cd_neg_up_vals,

up_layer->getExpectations(),

background_gibbs_update_ratio);

}

else

{

down_layer->updateGibbs(pos_down_vals,down_state);

connection->updateGibbs(pos_down_vals,pos_up_vals,down_state,

up_layer->getExpectations());

up_layer->updateGibbs(pos_up_vals,up_layer->getExpectations());

}

// Save Gibbs chain's state.

down_state << down_layer->samples;

}

} else {

// accumulate positive stats using the expectation

// we deep-copy because the value will change during negative phase

pos_down_val.resize( down_layer->size );

pos_up_val.resize( up_layer->size );

Vec neg_down_val;

Vec neg_up_val;

pos_down_val << down_layer->expectation;

pos_up_val << up_layer->expectation;

up_layer->generateSample();

// negative phase

// down propagation, starting from a sample of up_layer

if( mean_field_contrastive_divergence_ratio > 0 )

{

connection->setAsUpInput( up_layer->expectation );

down_layer->getAllActivations( connection );

down_layer->computeExpectation();

connection->setAsDownInput( down_layer->expectation );

up_layer->getAllActivations( connection, 0, mbatch );

up_layer->computeExpectation();

mf_cd_neg_down_val.resize( down_layer->size );

mf_cd_neg_up_val.resize( up_layer->size );

mf_cd_neg_down_val << down_layer->expectation;

mf_cd_neg_up_val << up_layer->expectation;

}

if( mean_field_contrastive_divergence_ratio < 1 )

{

if( use_sample_for_up_layer )

pos_up_val << up_layer->sample;

connection->setAsUpInput( up_layer->sample );

down_layer->getAllActivations( connection );

down_layer->computeExpectation();

down_layer->generateSample();

connection->setAsDownInput( down_layer->sample );

up_layer->getAllActivations( connection, 0, mbatch );

up_layer->computeExpectation();

neg_down_val = down_layer->sample;

if( use_sample_for_up_layer )

{

up_layer->generateSample();

neg_up_val = up_layer->sample;

}

else

neg_up_val = up_layer->expectation;

}

// update

if( mean_field_contrastive_divergence_ratio < 1 )

{

real lr_dl = down_layer->learning_rate;

real lr_ul = up_layer->learning_rate;

real lr_c = connection->learning_rate;

down_layer->setLearningRate(lr_dl * (1-mean_field_contrastive_divergence_ratio));

up_layer->setLearningRate(lr_ul * (1-mean_field_contrastive_divergence_ratio));

connection->setLearningRate(lr_c * (1-mean_field_contrastive_divergence_ratio));

if( use_corrupted_posDownVal == "for_cd_update" )

{

corrupted_pos_down_val.resize( down_layer->size );

corrupt_input( pos_down_val, corrupted_pos_down_val, layer_index );

down_layer->update( corrupted_pos_down_val, neg_down_val );

connection->update( corrupted_pos_down_val, pos_up_val,

neg_down_val, neg_up_val );

}

else

{

down_layer->update( pos_down_val, neg_down_val );

connection->update( pos_down_val, pos_up_val,

neg_down_val, neg_up_val );

}

up_layer->update( pos_up_val, neg_up_val );

down_layer->setLearningRate(lr_dl);

up_layer->setLearningRate(lr_ul);

connection->setLearningRate(lr_c);

}

if( mean_field_contrastive_divergence_ratio > 0 )

{

real lr_dl = down_layer->learning_rate;

real lr_ul = up_layer->learning_rate;

real lr_c = connection->learning_rate;

down_layer->setLearningRate(lr_dl * mean_field_contrastive_divergence_ratio);

up_layer->setLearningRate(lr_ul * mean_field_contrastive_divergence_ratio);

connection->setLearningRate(lr_c * mean_field_contrastive_divergence_ratio);

if( use_corrupted_posDownVal == "for_cd_update" )

{

corrupted_pos_down_val.resize( down_layer->size );

corrupt_input( pos_down_val, corrupted_pos_down_val, layer_index );

down_layer->update( corrupted_pos_down_val, mf_cd_neg_down_val );

connection->update( corrupted_pos_down_val, pos_up_val,

mf_cd_neg_down_val, mf_cd_neg_up_val );

}

else

{

down_layer->update( pos_down_val, mf_cd_neg_down_val );

connection->update( pos_down_val, pos_up_val,

mf_cd_neg_down_val, mf_cd_neg_up_val );

}

up_layer->update( pos_up_val, mf_cd_neg_up_val );

down_layer->setLearningRate(lr_dl);

up_layer->setLearningRate(lr_ul);

connection->setLearningRate(lr_c);

}

}

}
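In the single-example branch of contrastiveDivergenceStep(), both negative phases are computed first; then the learning rates are scaled by (1 - mean_field_contrastive_divergence_ratio) for the update built from the sampled negative phase and by mean_field_contrastive_divergence_ratio for the update built from the mean-field negative phase, and finally restored. The sketch below reproduces that split for a tiny binomial-binomial RBM trained with CD-1. TinyRBM, cdStep and the other helpers are hypothetical; parameters are updated in place rather than through RBMLayer/RBMConnection objects, and the negative up statistics use expectations (the use_sample_for_up_layer == false case).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical binomial-binomial RBM: W[j*D + i] links hidden j and visible i;
// b = visible biases, c = hidden biases.
struct TinyRBM {
    std::size_t D, H;
    Vec W, b, c;
    TinyRBM(std::size_t d, std::size_t h) : D(d), H(h), W(d * h, 0.0), b(d, 0.0), c(h, 0.0) {}
};

double sigm(double x) { return 1.0 / (1.0 + std::exp(-x)); }

Vec upExpect(const TinyRBM& r, const Vec& v) {   // E[h | v]
    Vec h(r.H);
    for (std::size_t j = 0; j < r.H; ++j) {
        double a = r.c[j];
        for (std::size_t i = 0; i < r.D; ++i) a += r.W[j * r.D + i] * v[i];
        h[j] = sigm(a);
    }
    return h;
}

Vec downExpect(const TinyRBM& r, const Vec& h) { // E[v | h]
    Vec v(r.D);
    for (std::size_t i = 0; i < r.D; ++i) {
        double a = r.b[i];
        for (std::size_t j = 0; j < r.H; ++j) a += r.W[j * r.D + i] * h[j];
        v[i] = sigm(a);
    }
    return v;
}

Vec sample(const Vec& p, std::mt19937& g) {      // independent Bernoulli draws
    Vec s(p.size());
    for (std::size_t k = 0; k < p.size(); ++k)
        s[k] = std::bernoulli_distribution(p[k])(g) ? 1.0 : 0.0;
    return s;
}

// Positive minus negative statistics, scaled by lr.
void update(TinyRBM& r, double lr, const Vec& v0, const Vec& h0, const Vec& v1, const Vec& h1) {
    for (std::size_t j = 0; j < r.H; ++j)
        for (std::size_t i = 0; i < r.D; ++i)
            r.W[j * r.D + i] += lr * (h0[j] * v0[i] - h1[j] * v1[i]);
    for (std::size_t i = 0; i < r.D; ++i) r.b[i] += lr * (v0[i] - v1[i]);
    for (std::size_t j = 0; j < r.H; ++j) r.c[j] += lr * (h0[j] - h1[j]);
}

// One CD-1 step on one example with the learning rate split by mf_ratio.
void cdStep(TinyRBM& r, const Vec& v0, double lr, double mf_ratio, std::mt19937& g) {
    assert(mf_ratio >= 0.0 && mf_ratio <= 1.0);
    const Vec h0 = upExpect(r, v0);                // positive phase
    const Vec hs = sample(h0, g);                  // sample of the up layer
    Vec mf_v1, mf_h1, v1, h1;
    if (mf_ratio > 0) {                            // mean-field negative phase
        mf_v1 = downExpect(r, h0);
        mf_h1 = upExpect(r, mf_v1);
    }
    if (mf_ratio < 1) {                            // sampled negative phase
        v1 = sample(downExpect(r, hs), g);
        h1 = upExpect(r, v1);
    }
    if (mf_ratio < 1) update(r, lr * (1 - mf_ratio), v0, h0, v1, h1);
    if (mf_ratio > 0) update(r, lr * mf_ratio, v0, h0, mf_v1, mf_h1);
}
```

With mf_ratio = 1 the step is deterministic, which makes it convenient for checking the update arithmetic by hand.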

| void PLearn::DeepBeliefNet::corrupt_input | ( | const Vec & | input, |

| Vec & | corrupted_input, | ||

| int | layer | ||

| ) | [private] |

Definition at line 3430 of file DeepBeliefNet.cc.

References expectation_indices, fraction_of_masked_inputs, j, PLearn::TVec< T >::length(), mask_with_pepper_salt, noise_type, PLERROR, prob_salt_noise, PLearn::PLearner::random_gen, and PLearn::TVec< T >::resize().

Referenced by contrastiveDivergenceStep(), and greedyStep().

{

corrupted_input.resize(input.length());

if( noise_type == "masking_noise" )

{

corrupted_input << input;

if( fraction_of_masked_inputs != 0 )

{

random_gen->shuffleElements(expectation_indices[layer]);

if( mask_with_pepper_salt )

for( int j=0 ; j < round(fraction_of_masked_inputs*input.length()) ; j++)

corrupted_input[ expectation_indices[layer][j] ] = random_gen->binomial_sample(prob_salt_noise);

else

for( int j=0 ; j < round(fraction_of_masked_inputs*input.length()) ; j++)

corrupted_input[ expectation_indices[layer][j] ] = 0;

}

}

/* else if( noise_type == "binary_sampling" )

{

for( int i=0; i<corrupted_input.length(); i++ )

corrupted_input[i] = random_gen->binomial_sample((input[i]-0.5)*binary_sampling_noise_parameter+0.5);

}

else if( noise_type == "gaussian" )

{

for( int i=0; i<corrupted_input.length(); i++ )

corrupted_input[i] = input[i] +

random_gen->gaussian_01() * gaussian_std;

}

else

PLERROR("In StackedAutoassociatorsNet::corrupt_input(): "

"missing_data_method %s not valid with noise_type %s",

missing_data_method.c_str(), noise_type.c_str());

}*/

else if( noise_type == "none" )

corrupted_input << input;

else

PLERROR("In DeepBeliefNet::corrupt_input(): noise_type %s not valid", noise_type.c_str());

}
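The masking-noise branch of corrupt_input() shuffles a list of unit indices and overwrites the first round(fraction_of_masked_inputs * n) chosen units, either with 0 or, when mask_with_pepper_salt is true, with a Bernoulli(prob_salt_noise) draw. A standalone sketch with <random>; maskInput is a hypothetical name, and unlike the original it rebuilds the index list on every call instead of reusing expectation_indices[layer].

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Returns a copy of `input` with round(fraction * n) randomly chosen entries
// set to 0, or to a Bernoulli(prob_salt) value when pepper_salt is true.
std::vector<double> maskInput(const std::vector<double>& input, double fraction,
                              bool pepper_salt, double prob_salt, std::mt19937& g)
{
    assert(fraction >= 0.0 && fraction <= 1.0);
    std::vector<double> out(input);
    std::vector<std::size_t> idx(input.size());
    std::iota(idx.begin(), idx.end(), std::size_t{0});  // role of expectation_indices[layer]
    std::shuffle(idx.begin(), idx.end(), g);
    const std::size_t n_mask = static_cast<std::size_t>(std::lround(fraction * input.size()));
    std::bernoulli_distribution salt(prob_salt);
    for (std::size_t k = 0; k < n_mask && k < idx.size(); ++k)
        out[idx[k]] = pepper_salt ? (salt(g) ? 1.0 : 0.0) : 0.0;
    return out;
}
```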

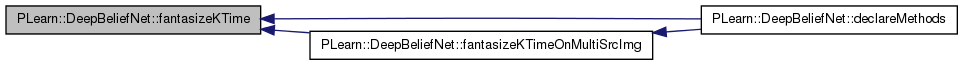

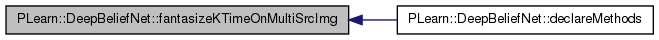

| void PLearn::DeepBeliefNet::declareMethods | ( | RemoteMethodMap & | rmm | ) | [static, protected] |

Declare the methods that are remote-callable.

Reimplemented from PLearn::PLearner.

Definition at line 105 of file DeepBeliefNet.cc.

References PLearn::PLearner::_getRemoteMethodMap_(), PLearn::declareMethod(), fantasizeKTime(), fantasizeKTimeOnMultiSrcImg(), and PLearn::RemoteMethodMap::inherited().

{

// Insert a backpointer to remote methods; note that this is different from declareOptions().

rmm.inherited(inherited::_getRemoteMethodMap_());

declareMethod(

rmm, "fantasizeKTime",

&DeepBeliefNet::fantasizeKTime,

(BodyDoc("On a trained learner, computes an encoding-decoding pass (fantasy) through a specified number of hidden layers."),

ArgDoc ("kTime", "Number of times to fantasize.\n"

"The next input image is either the source image again (if alwaysFromSrcImg is True)\n"

"or the last fantasized image (if alwaysFromSrcImg is False), and so on for kTime iterations."),

ArgDoc ("srcImg", "Source image vector (should have the same width as the raw input layer)"),

ArgDoc ("sampling", "Vector of bools indicating whether sampling is done for each hidden layer\n"

"during decoding. Its width indicates how many hidden layers are used.\n"

"(It should have the same width as maskNoiseFractOrProb.)\n"

"Lower-indexed elements correspond to lower layers."),

ArgDoc ("alwaysFromSrcImg", "Boolean indicating whether each encode-decode \n"

"step starts from the source image (True) or \n"

"from the last fantasized image (False). "),

RetDoc ("Fantasized images obtained for each of the kTime iterations.")));

declareMethod(

rmm, "fantasizeKTimeOnMultiSrcImg",

&DeepBeliefNet::fantasizeKTimeOnMultiSrcImg,

(BodyDoc("Calls 'fantasizeKTime' for each source image found in the matrix 'srcImg'."),

ArgDoc ("kTime", "Number of times to fantasize for each source image.\n"

"The next input image is either the source image again (if alwaysFromSrcImg is True)\n"

"or the last fantasized image (if alwaysFromSrcImg is False), and so on for kTime iterations."),

ArgDoc ("srcImg", "Matrix of source images (should have the same width as the raw input layer)"),

ArgDoc ("sampling", "Vector of bools indicating whether sampling is done for each hidden layer\n"

"during decoding. Its width indicates how many hidden layers are used.\n"

"(It should have the same width as maskNoiseFractOrProb.)\n"

"Lower-indexed elements correspond to lower layers."),

ArgDoc ("alwaysFromSrcImg", "Boolean indicating whether each encode-decode \n"

"step starts from the source image (True) or \n"

"from the preceding fantasized image (False). "),

RetDoc ("For each source image, the fantasized images obtained for each of the kTime iterations.")));

}
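The remote-method documentation above describes fantasizeKTime as kTime encode-decode passes, each starting either from the source image or from the previous fantasy, depending on alwaysFromSrcImg. The sketch below shows only that control flow. fantasize and its encodeDecode callback are hypothetical, and the per-layer sampling flags are not modelled.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Image = std::vector<double>;

// Runs kTime encode-decode passes and returns every fantasy.
// encodeDecode is a hypothetical callback for one up-and-down pass.
std::vector<Image> fantasize(int kTime, const Image& srcImg, bool alwaysFromSrcImg,
                             const std::function<Image(const Image&)>& encodeDecode)
{
    assert(kTime >= 0);
    std::vector<Image> fantasies;
    Image current = srcImg;
    for (int k = 0; k < kTime; ++k) {
        // Restart from the source image, or chain from the previous fantasy.
        Image next = encodeDecode(alwaysFromSrcImg ? srcImg : current);
        fantasies.push_back(next);
        current = next;
    }
    return fantasies;
}
```

fantasizeKTimeOnMultiSrcImg then amounts to calling this loop once per row of the source-image matrix.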

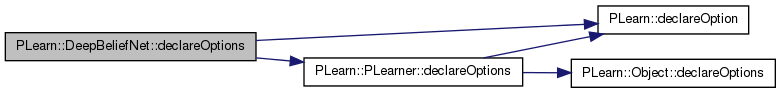

| void PLearn::DeepBeliefNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 150 of file DeepBeliefNet.cc.

References background_gibbs_update_ratio, batch_size, PLearn::OptionBase::buildoption, cd_decrease_ct, cd_learning_rate, classification_cost, classification_module, connections, cumulative_testing_time, cumulative_training_time, PLearn::declareOption(), PLearn::PLearner::declareOptions(), final_cost, final_module, fraction_of_masked_inputs, generative_connections, gibbs_chain_reinit_freq, gibbs_down_state, grad_decrease_ct, grad_learning_rate, greedy_target_connections, greedy_target_layers, i_output_layer, joint_layer, layers, learnerExpdir, PLearn::OptionBase::learntoption, mask_with_pepper_salt, mean_field_contrastive_divergence_ratio, minibatch_size, n_classes, n_layers, noise_type, PLearn::OptionBase::nosave, online, partial_costs, prob_salt_noise, reconstruct_layerwise, save_learner_before_fine_tuning, top_layer_joint_cd, train_stats_window, training_schedule, up_down_decrease_ct, up_down_learning_rate, up_down_nstages, up_down_stage, use_classification_cost, use_corrupted_posDownVal, and use_sample_for_up_layer.
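The `training_schedule` option declared below lays its phases end to end, so each phase ends at a cumulative example count. The following minimal sketch assumes, as the option description implies, that a stage counts training examples; the helper names `minNstages` and `phaseOfStage` are hypothetical and not part of PLearn. It reproduces the documented example [1000 1000 500]: phase boundaries at 1000, 2000 and 2500, hence an nstages of at least 2500.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Smallest nstages that lets every phase of the schedule run to completion.
long minNstages(const std::vector<long>& schedule) {
    return std::accumulate(schedule.begin(), schedule.end(), 0L);
}

// Returns the index of the phase that a given stage (examples seen so far)
// falls in: greedy phases come first, the fine-tuning phase last.
// Returns schedule.size() once the whole schedule has been consumed.
std::size_t phaseOfStage(const std::vector<long>& schedule, long stage) {
    assert(stage >= 0);
    long phaseEnd = 0;
    for (std::size_t i = 0; i < schedule.size(); ++i) {
        phaseEnd += schedule[i];  // cumulative boundary of phase i
        if (stage < phaseEnd) return i;
    }
    return schedule.size();
}
```

With that schedule, stage 1500 falls in phase 1 (the second greedy phase), and stage 2000 is the first example of the fine-tuning phase.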

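The `reconstruct_layerwise` option declared below reports a cross-entropy between each layer's actual and reconstructed values. The following is a minimal sketch, assuming every component lies in [0, 1] as for binomial units (PLearn's other layer types may compute this cost differently). The function names are hypothetical, and the small clamp `eps` is an addition made here to keep `log` finite.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Cross-entropy between a layer's actual values x and their reconstruction r,
// assuming every component lies in [0, 1] (e.g. binomial units).
double reconstructionCrossEntropy(const std::vector<double>& x,
                                  const std::vector<double>& r) {
    assert(x.size() == r.size());
    const double eps = 1e-12;  // clamp r away from 0 and 1 so log() stays finite
    double cost = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double ri = std::min(std::max(r[i], eps), 1.0 - eps);
        cost -= x[i] * std::log(ri) + (1.0 - x[i]) * std::log(1.0 - ri);
    }
    return cost;
}

// Analogue of layerwise_reconstruction_error: the per-layer costs summed.
double layerwiseReconstructionError(
    const std::vector<std::vector<double>>& actual,
    const std::vector<std::vector<double>>& reconstructed) {
    assert(actual.size() == reconstructed.size());
    double total = 0.0;
    for (std::size_t k = 0; k < actual.size(); ++k)
        total += reconstructionCrossEntropy(actual[k], reconstructed[k]);
    return total;
}
```

For example, an actual value {1, 0} reconstructed as {0.5, 0.5} costs 2 ln 2, while a perfect reconstruction costs (almost exactly) zero.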
{

declareOption(ol, "cd_learning_rate", &DeepBeliefNet::cd_learning_rate,

OptionBase::buildoption,

"The learning rate used during contrastive divergence"

" learning");

declareOption(ol, "cd_decrease_ct", &DeepBeliefNet::cd_decrease_ct,

OptionBase::buildoption,

"The decrease constant of the learning rate used during"

" contrastive divergence");

declareOption(ol, "up_down_learning_rate",

&DeepBeliefNet::up_down_learning_rate,

OptionBase::buildoption,

"The learning rate used in the up-down algorithm during the\n"

"unsupervised fine tuning gradient descent.\n");

declareOption(ol, "up_down_decrease_ct", &DeepBeliefNet::up_down_decrease_ct,

OptionBase::buildoption,

"The decrease constant of the learning rate used in the\n"

"up-down algorithm during the unsupervised fine tuning\n"

"gradient descent.\n");

declareOption(ol, "grad_learning_rate", &DeepBeliefNet::grad_learning_rate,

OptionBase::buildoption,

"The learning rate used during gradient descent");

declareOption(ol, "grad_decrease_ct", &DeepBeliefNet::grad_decrease_ct,

OptionBase::buildoption,

"The decrease constant of the learning rate used during"

" gradient descent");

declareOption(ol, "batch_size", &DeepBeliefNet::batch_size,

OptionBase::buildoption,

"Training batch size (1=stochastic learning, 0=full batch learning).");

/* NOT IMPLEMENTED YET

declareOption(ol, "grad_weight_decay", &DeepBeliefNet::grad_weight_decay,

OptionBase::buildoption,

"The weight decay used during the gradient descent");

*/

declareOption(ol, "n_classes", &DeepBeliefNet::n_classes,

OptionBase::buildoption,

"Number of classes in the training set:\n"

" - 0 means we are doing regression,\n"

" - 1 means we have two classes, but only one output,\n"

" - 2 means we also have two classes, but two outputs"

" summing to 1,\n"

" - >2 is the usual multiclass case.\n"

);

declareOption(ol, "training_schedule", &DeepBeliefNet::training_schedule,

OptionBase::buildoption,

"Number of examples to use during each phase of learning:\n"

"first the greedy phases, and then the fine-tuning phase.\n"

"However, the learning will stop as soon as we reach nstages.\n"

"For example, for 2 hidden layers, with 1000 examples in each\n"

"greedy phase, and 500 in the fine-tuning phase, this option\n"

"should be [1000 1000 500], and nstages should be at least 2500.\n"

"When online = true, this vector is ignored and should be empty.\n");

declareOption(ol, "up_down_nstages", &DeepBeliefNet::up_down_nstages,

OptionBase::buildoption,

"Number of samples to use for unsupervised fine-tuning\n"

"with the up-down algorithm. The unsupervised fine-tuning will\n"

"be executed between the greedy layer-wise learning and the\n"

"supervised fine-tuning. The up-down algorithm only works for\n"

"RBMMatrixConnection connections.\n");

declareOption(ol, "use_classification_cost",

&DeepBeliefNet::use_classification_cost,

OptionBase::buildoption,

"Put the class target as an extra input of the top-level RBM\n"

"and compute and maximize the conditional class probability in that\n"

"top layer (probability of the correct class given the other input\n"

"of the top-level RBM, which is the output of the rest of the network).\n");

declareOption(ol, "reconstruct_layerwise",

&DeepBeliefNet::reconstruct_layerwise,

OptionBase::buildoption,

"Compute reconstruction error of each layer as an auto-encoder.\n"

"This is done using the cross-entropy between the actual and reconstructed values.\n"

"This option automatically adds the following cost names:\n"

" layerwise_reconstruction_error (sum over all layers)\n"

" layer0.reconstruction_error (only layers[0])\n"

" layer1.reconstruction_error (only layers[1])\n"

" etc.\n");

declareOption(ol, "layers", &DeepBeliefNet::layers,

OptionBase::buildoption,

"The layers of units in the network (including the input layer).");

declareOption(ol, "i_output_layer", &DeepBeliefNet::i_output_layer,

OptionBase::buildoption,

"The index of the layer from which the output is computed\n"

"when there is neither a final_module nor a final_cost.\n"

"If -1, the output (with this setting) will be\n"

"the expectations of the last layer.");

declareOption(ol, "connections", &DeepBeliefNet::connections,

OptionBase::buildoption,

"The weights of the connections between the layers");

declareOption(ol, "greedy_target_layers", &DeepBeliefNet::greedy_target_layers,

OptionBase::buildoption,

"Optional target layers for greedy layer-wise pretraining");

declareOption(ol, "greedy_target_connections", &DeepBeliefNet::greedy_target_connections,

OptionBase::buildoption,

"Optional target matrix connections for greedy layer-wise pretraining");

declareOption(ol, "learnerExpdir",

&DeepBeliefNet::learnerExpdir,

OptionBase::buildoption,

"Experiment directory where the learner will be saved\n"

"if save_learner_before_fine_tuning is true."

);

declareOption(ol, "save_learner_before_fine_tuning",

&DeepBeliefNet::save_learner_before_fine_tuning,

OptionBase::buildoption,

"Saves the learner before the supervised fine-tuning."

);

declareOption(ol, "classification_module",

&DeepBeliefNet::classification_module,

OptionBase::learntoption,

"The module computing the class probabilities (if"

" use_classification_cost)\n"

);

declareOption(ol, "classification_cost",

&DeepBeliefNet::classification_cost,

OptionBase::nosave,

"The module computing the classification cost function (NLL)"

" on top\n"

"of classification_module.\n"

);

declareOption(ol, "joint_layer", &DeepBeliefNet::joint_layer,

OptionBase::nosave,

"Concatenation of layers[n_layers-2] and the target layer\n"

"(that is inside classification_module), if"

" use_classification_cost.\n"

);

declareOption(ol, "final_module", &DeepBeliefNet::final_module,

OptionBase::buildoption,

"Optional module that takes as input the output of the last"

" layer\n"

"(layers[n_layers-1]), and its output is fed to final_cost,"

" and\n"

"concatenated with that of classification_cost (if"

" present)\n"

"as output of the learner.\n"

"If it is not provided, then the last layer will directly be"

" put as\n"

"input of final_cost.\n"

);

declareOption(ol, "final_cost", &DeepBeliefNet::final_cost,

OptionBase::buildoption,

"The cost function to be applied on top of the DBN (or of\n"

"final_module if provided). Its gradients will be"

" backpropagated\n"

"to final_module, then combined with those of"

" classification_cost and\n"

"backpropagated to the layers.\n"

);

declareOption(ol, "partial_costs", &DeepBeliefNet::partial_costs,

OptionBase::buildoption,

"The different cost functions to be applied on top of each"

" layer\n"

"(except the first one) of the RBM. These costs are not\n"

"back-propagated to previous layers.\n");

declareOption(ol, "use_sample_for_up_layer", &DeepBeliefNet::use_sample_for_up_layer,

OptionBase::buildoption,

"Indicates whether the update of the top layer during CD uses\n"

"a sample instead of the expectation.\n");

declareOption(ol, "use_corrupted_posDownVal",

&DeepBeliefNet::use_corrupted_posDownVal,

OptionBase::buildoption,

"Indicates whether we will use a corrupted version of the\n"

"positive down value during the CD step.\n"

"Choose among:\n"

" - \"for_cd_fprop\"\n"

" - \"for_cd_update\"\n"

" - \"none\"\n");

declareOption(ol, "noise_type",

&DeepBeliefNet::noise_type,

OptionBase::buildoption,

"Type of noise that corrupts the pos_down_val. "

"Choose among:\n"

" - \"masking_noise\"\n"

" - \"none\"\n");

declareOption(ol, "fraction_of_masked_inputs",