
PLearn 0.1

|

|

PLearn 0.1

|

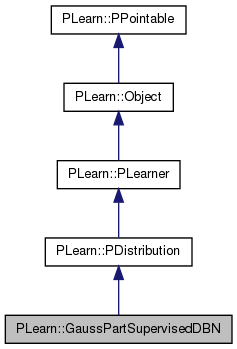

Hinton's DBN plus supervised gradient from a logistic regression layer.

#include <GaussPartSupervisedDBN.h>

Public Member Functions | |

| GaussPartSupervisedDBN () | |

| Default constructor. | |

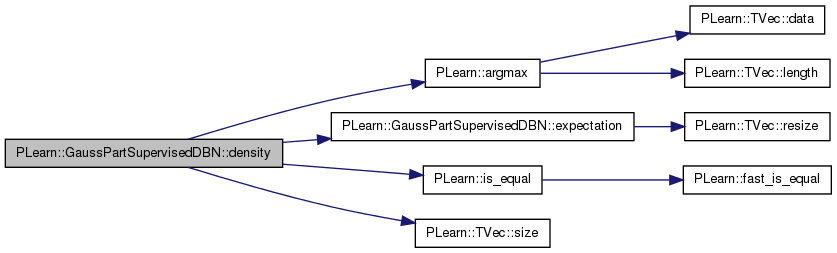

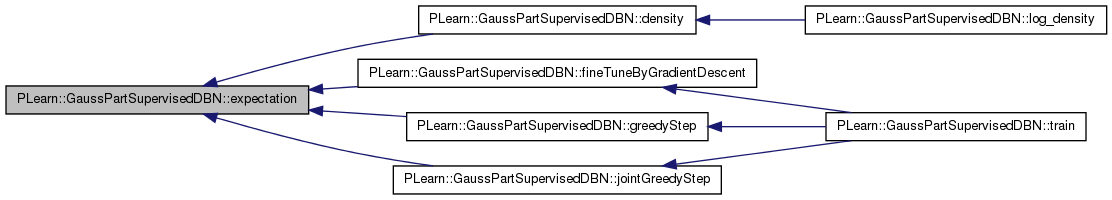

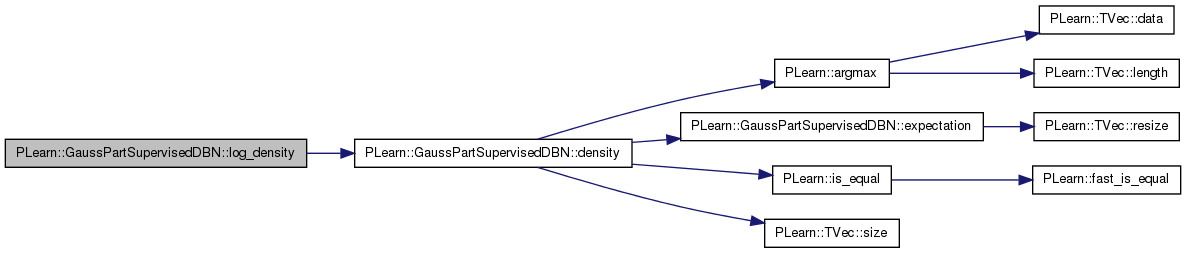

| virtual real | density (const Vec &y) const |

| Return probability density p(y | x) | |

| virtual real | log_density (const Vec &y) const |

| Return log of probability density log(p(y | x)). | |

| virtual real | survival_fn (const Vec &y) const |

| Return survival function: P(Y>y | x). | |

| virtual real | cdf (const Vec &y) const |

| Return cdf: P(Y<y | x). | |

| virtual void | expectation (Vec &mu) const |

| Return E[Y | x]. | |

| virtual void | variance (Mat &cov) const |

| Return Var[Y | x]. | |

| virtual void | generate (Vec &y) const |

| Return a pseudo-random sample generated from the conditional distribution, of density p(y | x). | |

| virtual bool | setPredictorPredictedSizes (int the_predictor_size, int the_predicted_size, bool call_parent=true) |

| Generates a pseudo-random sample x from the reversed conditional distribution, of density p(x | y) (and NOT p(y | x)). | |

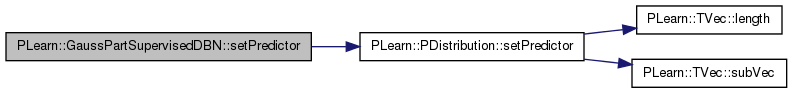

| virtual void | setPredictor (const Vec &predictor, bool call_parent=true) const |

| Set the value for the predictor part of a conditional probability. | |

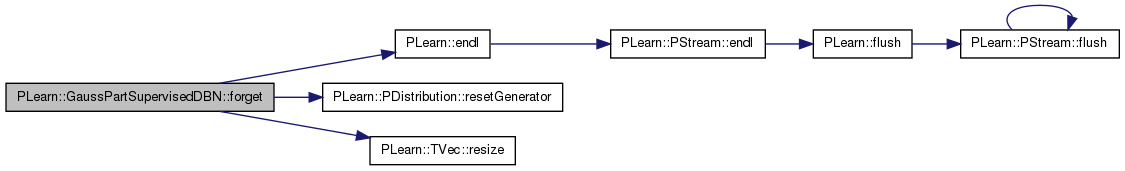

| virtual void | forget () |

| (Re-)initializes the PDistribution in its fresh state (that state may depend on the 'seed' option). | |

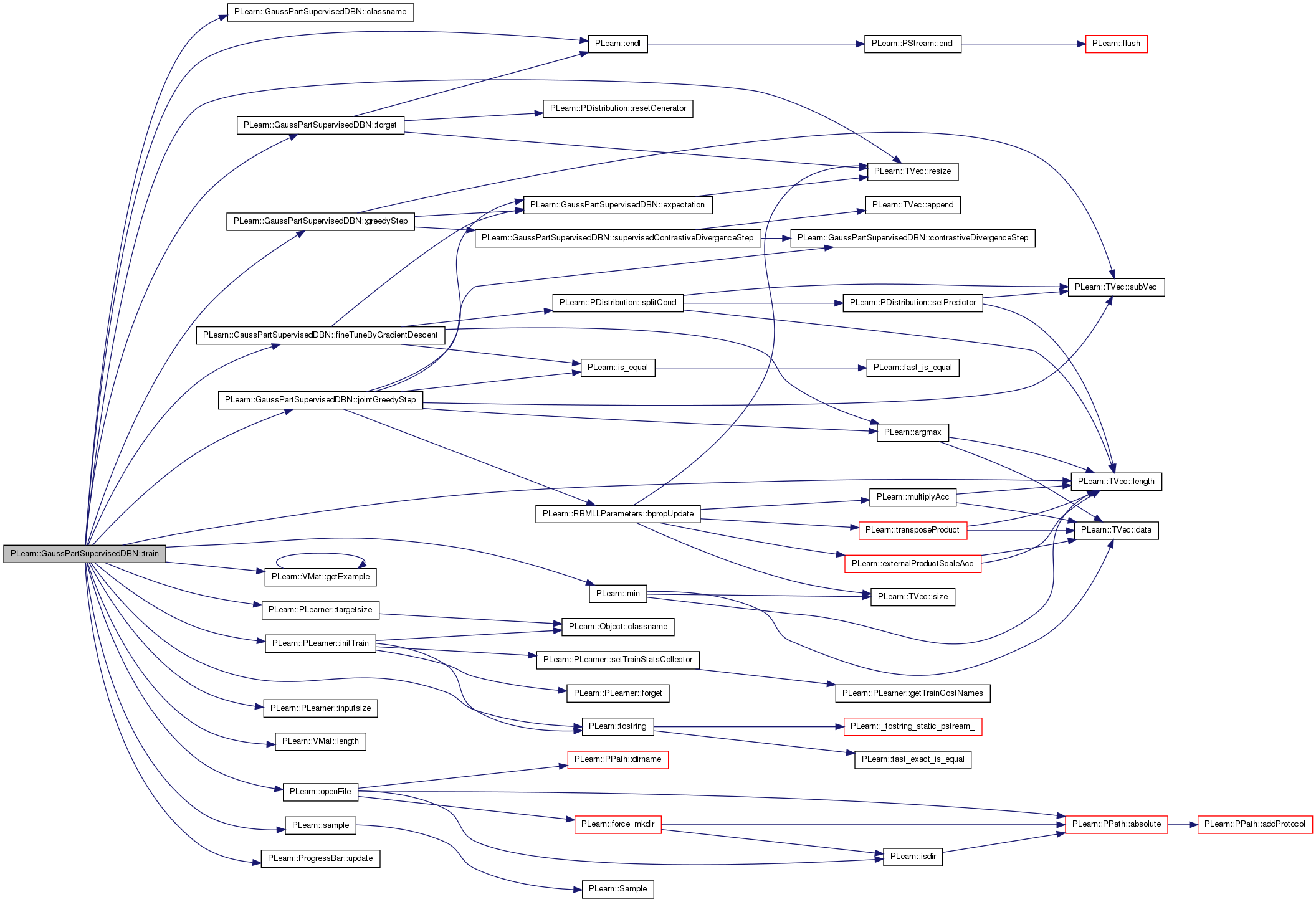

| virtual void | train () |

| The role of the train method is to bring the learner up to stage == nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Compute a cost, depending on the type of the first output : if it is the density or the log-density: NLL if it is the expectation: NLL and class error. | |

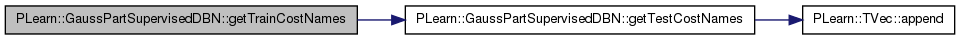

| virtual TVec< string > | getTestCostNames () const |

| Return [ "NLL" ] (the only cost computed by a PDistribution). | |

| virtual TVec< string > | getTrainCostNames () const |

| Return [ ]. | |

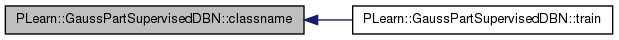

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual GaussPartSupervisedDBN * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

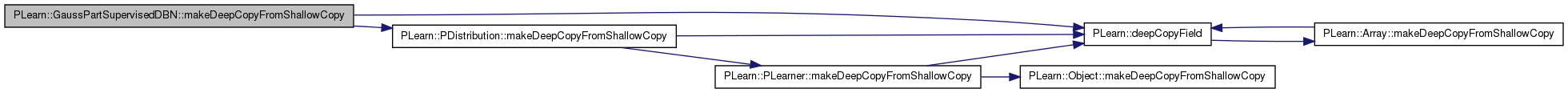

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| static string | _classname_ () | REDEFINE test FOR PARALLELIZATION OF THE TEST. |
| static OptionList & | _getOptionList_ () | |
| static RemoteMethodMap & | _getRemoteMethodMap_ () | |
| static Object * | _new_instance_for_typemap_ () | |
| static bool | _isa_ (const Object *o) | |
| static void | _static_initialize_ () | |
| static const PPath & | declaringFile () | |

Public Attributes

| Type | Member | Description |
| --- | --- | --- |
| real | learning_rate | The learning rate used during greedy learning. |
| Vec | supervised_learning_rates | The learning rates used for the supervised part during greedy learning (one per layer). |
| real | fine_tuning_learning_rate | The learning rate used during gradient-descent fine-tuning (defaults to learning_rate when negative). |
| real | initial_momentum | Initial momentum. |
| real | final_momentum | Final momentum. |
| int | momentum_switch_time | Number of samples to be seen by layer i before its momentum switches from initial_momentum to final_momentum. |
| real | weight_decay | The weight decay. |
| string | initialization_method | The method used to initialize the weights: "uniform_linear", "uniform_sqrt" (the default) or "zero". |
| int | n_layers | Number of layers, including the input layer and the last layer, but not the target layer. |
| TVec< PP< RBMLayer > > | layers | Layers that learn representations of the input; layers[0] is the input layer and layers[n_layers-1] is the last layer. |
| PP< RBMLayer > | last_layer | Last layer, learning joint representations of input and target. |
| PP< RBMMultinomialLayer > | target_layer | Target (or label) layer. |
| PP< RBMMixedLayer > | joint_layer | Concatenation of target_layer and layers[n_layers-2]. |
| TVec< PP< RBMLLParameters > > | params | RBMParameters linking the unsupervised layers. |
| PP< RBMQLParameters > | input_params | Parameters linking layers[0] and layers[1]. |
| PP< RBMLLParameters > | target_params | Parameters linking target_layer and last_layer. |
| PP< RBMJointLLParameters > | joint_params | Parameters linking joint_layer and last_layer. |
| TVec< PP< OnlineLearningModule > > | regressors | Logistic regressors that provide the supervised gradient for each RBMParameters. |
| int | parallelization_minibatch_size | Only used when USING_MPI for parallelization: the number of examples seen by one process during training after which the weight updates are shared among all the processes. |
| bool | sum_parallel_contributions | Only used when USING_MPI for parallelization: whether the delta-w contributions from different processes are summed or averaged. |
| TVec< int > | training_schedule | Number of examples to use during each of the different greedy steps of the training phase. |
| string | fine_tuning_method | Method for fine-tuning the whole network after greedy learning. |
| TVec< int > | use_sample_or_expectation | For each of the four steps of contrastive divergence, whether to use the expectation only (0), to sample but use the expectation in the update formula (1), or to use the sample only (2). |
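Because training_schedule holds cumulative example counts, it can help to see how a running count maps to the parameter set being trained greedily. The sketch below assumes that `stage` counts the training examples seen so far and that greedy training of entry i stops once training_schedule[i] examples have been seen; `greedyLayerForStage` is a hypothetical helper, not a PLearn function, and the real bookkeeping lives in train(), which is not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): returns the index of the
// parameter set being trained greedily after `stage` examples, or -1 once
// every entry of `schedule` has been reached (greedy phase over).
// Assumes `schedule` holds cumulative example counts in strictly
// increasing order, as the training_schedule option documentation requires.
int greedyLayerForStage(const std::vector<int>& schedule, int stage)
{
    for (std::size_t i = 0; i < schedule.size(); ++i) {
        if (i > 0)
            assert(schedule[i - 1] < schedule[i]);
        if (stage < schedule[i])
            return static_cast<int>(i);
    }
    return -1;
}
```

With a schedule of {1000, 3000, 6000}, examples 0 to 999 go to the first parameter set, 1000 to 2999 to the second, and 3000 to 5999 to the third.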

Static Public Attributes

| Type | Member | Description |
| --- | --- | --- |
| static StaticInitializer | _static_initializer_ | |

Protected Member Functions | |

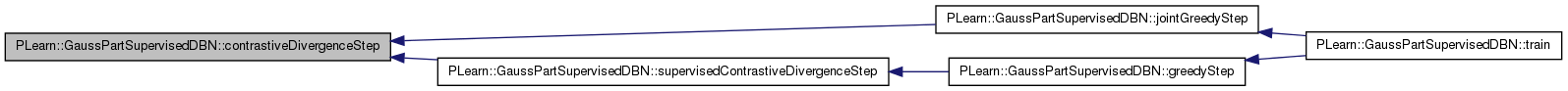

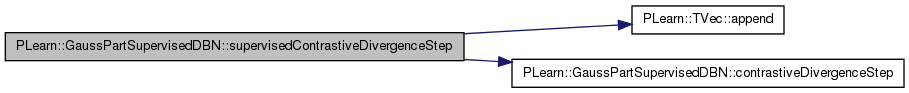

| virtual void | contrastiveDivergenceStep (const PP< RBMLayer > &down_layer, const PP< RBMParameters > ¶meters, const PP< RBMLayer > &up_layer) |

| virtual real | supervisedContrastiveDivergenceStep (const PP< RBMLayer > &down_layer, const PP< RBMParameters > ¶meters, const PP< RBMLayer > &up_layer, const Vec &target, int index) |

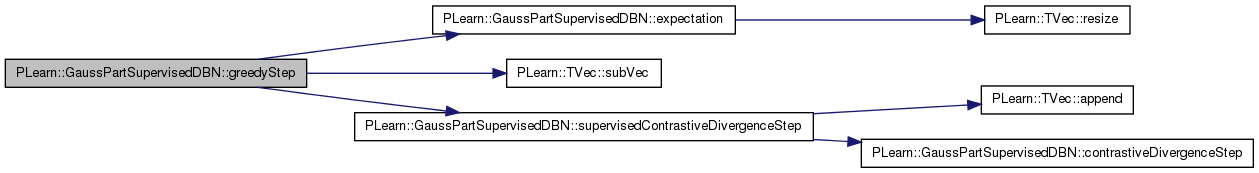

| virtual real | greedyStep (const Vec &predictor, int params_index) |

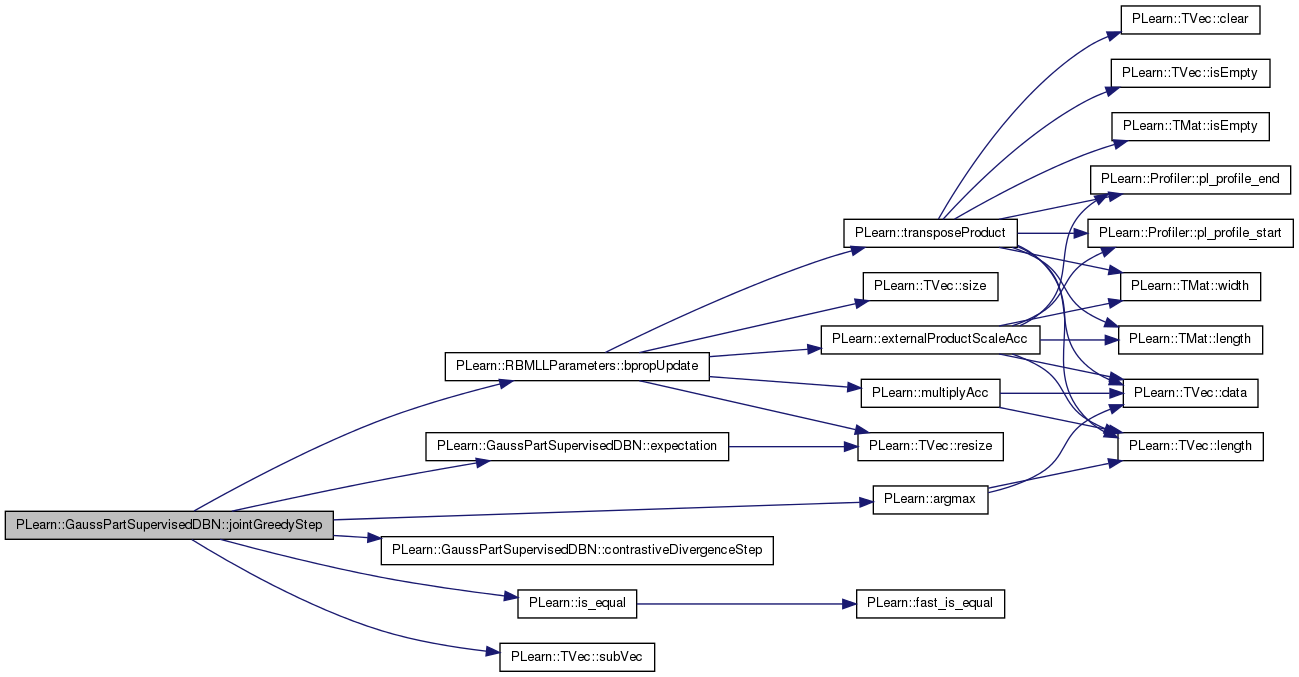

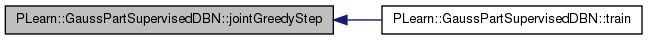

| virtual real | jointGreedyStep (const Vec &input) |

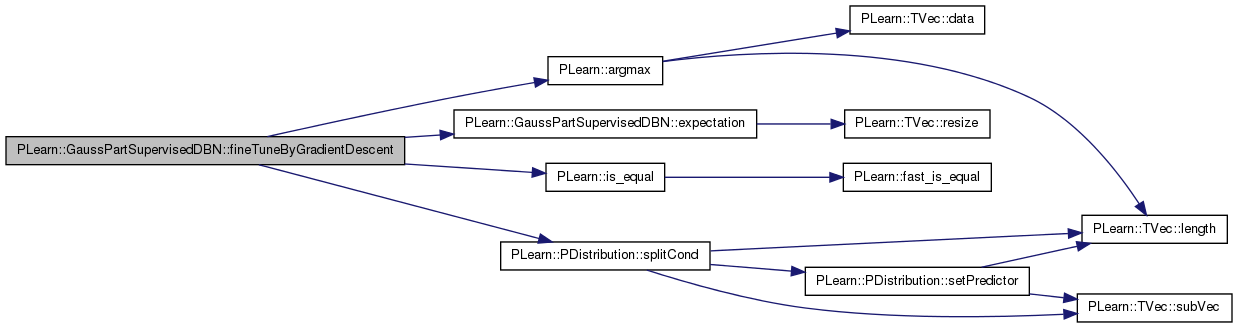

| virtual void | fineTuneByGradientDescent (const Vec &input, const Vec &train_costs) |

Static Protected Member Functions | |

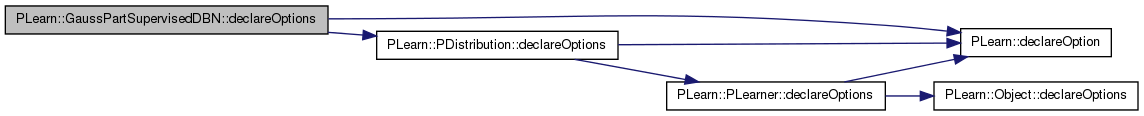

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes

| Type | Member | Description |
| --- | --- | --- |
| TVec< Vec > | activation_gradients | Gradients of the cost with respect to the activations (outputs of params). |
| TVec< Vec > | expectation_gradients | Gradients of the cost with respect to the expectations (outputs of layers). |
| Vec | output_gradient | Gradient with respect to the output activations. |

Private Types

| Type | Member | Description |
| --- | --- | --- |
| typedef PDistribution | inherited | |

Private Member Functions | |

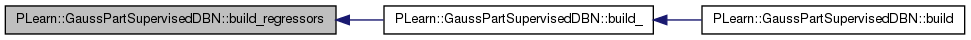

| void | build_ () |

| This does the actual building. | |

| void | build_layers () |

| Build the layers. | |

| void | build_params () |

| Build the parameters if needed. | |

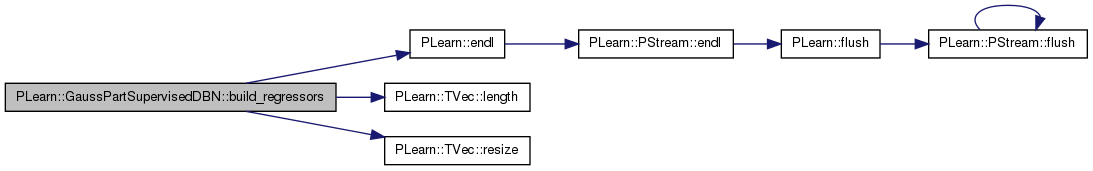

| void | build_regressors () |

| Build the regressors if needed. | |

Hinton's DBN plus supervised gradient from a logistic regression layer.

Definition at line 63 of file GaussPartSupervisedDBN.h.

typedef PDistribution PLearn::GaussPartSupervisedDBN::inherited [private]

Reimplemented from PLearn::PDistribution.

Definition at line 65 of file GaussPartSupervisedDBN.h.

PLearn::GaussPartSupervisedDBN::GaussPartSupervisedDBN()

Default constructor.

Definition at line 76 of file GaussPartSupervisedDBN.cc.

References PLearn::PLearner::random_gen, and use_sample_or_expectation.

```cpp
GaussPartSupervisedDBN::GaussPartSupervisedDBN() :
    learning_rate(0.),
    fine_tuning_learning_rate(-1.),
    initial_momentum(0.),
    final_momentum(0.),
    momentum_switch_time(-1),
    weight_decay(0.),
    parallelization_minibatch_size(100),
    sum_parallel_contributions(0),
    use_sample_or_expectation(4)
{
    use_sample_or_expectation[0] = 0;
    use_sample_or_expectation[1] = 1;
    use_sample_or_expectation[2] = 2;
    use_sample_or_expectation[3] = 0;
    random_gen = new PRandom();
}
```

string PLearn::GaussPartSupervisedDBN::_classname_() [static]

REDEFINE test FOR PARALLELIZATION OF THE TEST.

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

OptionList & PLearn::GaussPartSupervisedDBN::_getOptionList_() [static]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

RemoteMethodMap & PLearn::GaussPartSupervisedDBN::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

bool PLearn::GaussPartSupervisedDBN::_isa_(const Object *o) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

Object * PLearn::GaussPartSupervisedDBN::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

void PLearn::GaussPartSupervisedDBN::_static_initialize_() [static]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

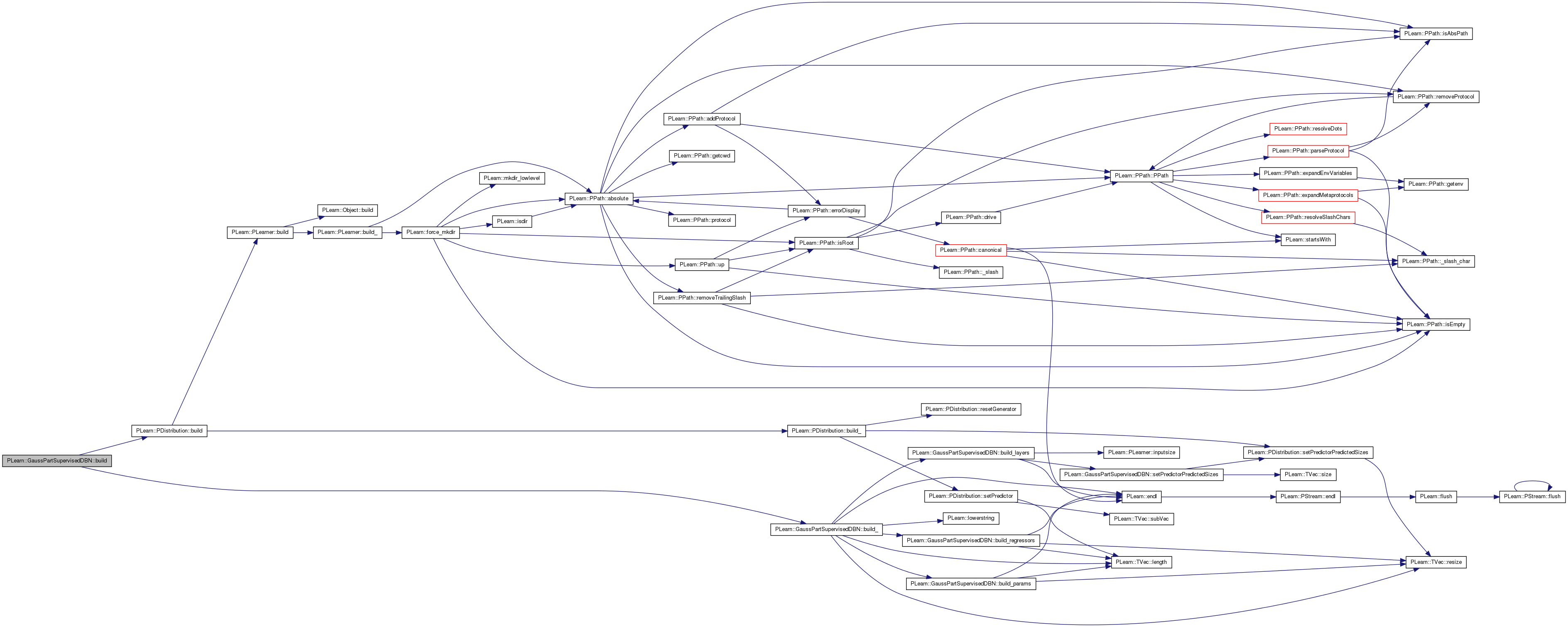

void PLearn::GaussPartSupervisedDBN::build() [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PDistribution.

Definition at line 262 of file GaussPartSupervisedDBN.cc.

References PLearn::PDistribution::build(), and build_().

```cpp
{
    // ### Nothing to add here, simply calls build_().
    inherited::build();
    build_();
}
```

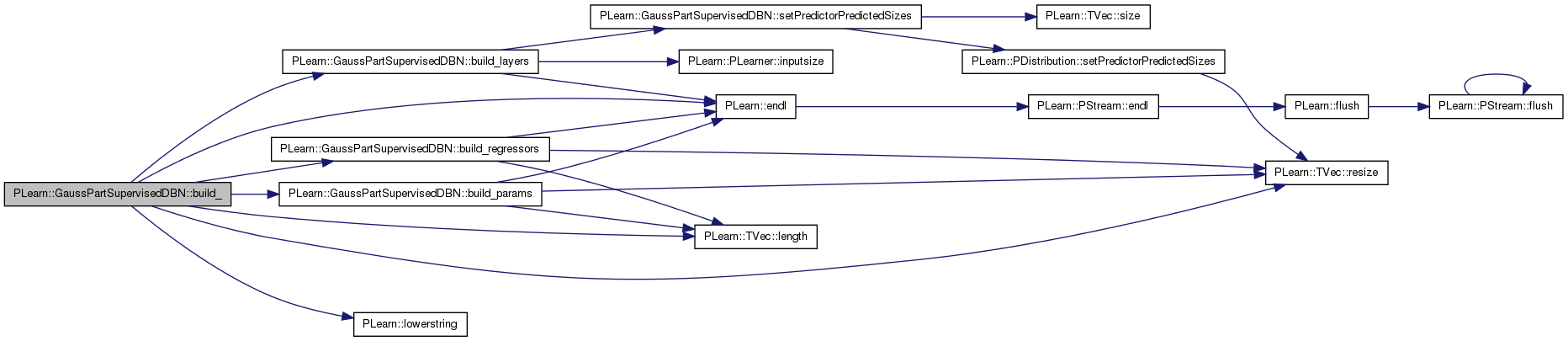

void PLearn::GaussPartSupervisedDBN::build_() [private]

This does the actual building.

Reimplemented from PLearn::PDistribution.

Definition at line 272 of file GaussPartSupervisedDBN.cc.

References build_layers(), build_params(), build_regressors(), PLearn::endl(), fine_tuning_learning_rate, fine_tuning_method, initialization_method, layers, learning_rate, PLearn::TVec< T >::length(), PLearn::lowerstring(), n_layers, PLERROR, PLearn::TVec< T >::resize(), supervised_learning_rates, and training_schedule.

Referenced by build().

```cpp
{
    MODULE_LOG << "build_() called" << endl;
    n_layers = layers.length();
    if( n_layers <= 1 )
        return;

    if( fine_tuning_learning_rate < 0. )
        fine_tuning_learning_rate = learning_rate;

    // check value of initialization_method
    string im = lowerstring( initialization_method );
    if( im == "" || im == "uniform_sqrt" )
        initialization_method = "uniform_sqrt";
    else if( im == "uniform_linear" )
        initialization_method = im;
    else if( im == "zero" )
        initialization_method = im;
    else
        PLERROR( "GaussPartSupervisedDBN::build_ - initialization_method\n"
                 "\"%s\" unknown.\n", initialization_method.c_str() );
    MODULE_LOG << " initialization_method = \"" << initialization_method
               << "\"" << endl;

    // check value of fine_tuning_method (logical ||, not bitwise |)
    string ftm = lowerstring( fine_tuning_method );
    if( ftm == "" || ftm == "none" )
        fine_tuning_method = "";
    else if( ftm == "cd" || ftm == "contrastive_divergence" )
        fine_tuning_method = "CD";
    else if( ftm == "egd" || ftm == "error_gradient_descent" )
        fine_tuning_method = "EGD";
    else if( ftm == "ws" || ftm == "wake_sleep" )
        fine_tuning_method = "WS";
    else
        PLERROR( "GaussPartSupervisedDBN::build_ - fine_tuning_method \"%s\"\n"
                 "is unknown.\n", fine_tuning_method.c_str() );
    MODULE_LOG << " fine_tuning_method = \"" << fine_tuning_method << "\""
               << endl;

    //TODO: build structure to store gradients during gradient descent

    if( training_schedule.length() != n_layers-1 )
        training_schedule = TVec<int>( n_layers-1, 1000000 );

    // fills with 0's if too short
    supervised_learning_rates.resize( n_layers-1 );

    MODULE_LOG << " training_schedule = " << training_schedule << endl;
    MODULE_LOG << "learning_rate = " << learning_rate << endl;
    MODULE_LOG << "fine_tuning_learning_rate = "
               << fine_tuning_learning_rate << endl;
    MODULE_LOG << "supervised_learning_rates = "
               << supervised_learning_rates << endl;
    MODULE_LOG << endl;

    build_layers();
    build_params();
    build_regressors();
}
```
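The option normalization in build_() can be exercised outside PLearn with standard C++. The sketch below reproduces only the fine_tuning_method checks: the value is lower-cased, then each accepted spelling is mapped to its canonical form. `canonicalFineTuningMethod` is a hypothetical name, and the exception stands in for PLERROR.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <stdexcept>
#include <string>

// Hypothetical helper mirroring the fine_tuning_method checks of build_():
// lower-case the option, then map every accepted spelling to its
// canonical value ("" means no fine-tuning).
std::string canonicalFineTuningMethod(const std::string& value)
{
    std::string ftm = value;
    std::transform(ftm.begin(), ftm.end(), ftm.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    if (ftm.empty() || ftm == "none") return "";
    if (ftm == "cd" || ftm == "contrastive_divergence") return "CD";
    if (ftm == "egd" || ftm == "error_gradient_descent") return "EGD";
    if (ftm == "ws" || ftm == "wake_sleep") return "WS";
    // build_() reports this case through PLERROR; an exception stands in here.
    throw std::invalid_argument("unknown fine_tuning_method: " + value);
}
```

For example, "Contrastive_Divergence" and "cd" both become "CD", while "NONE" becomes the empty string.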

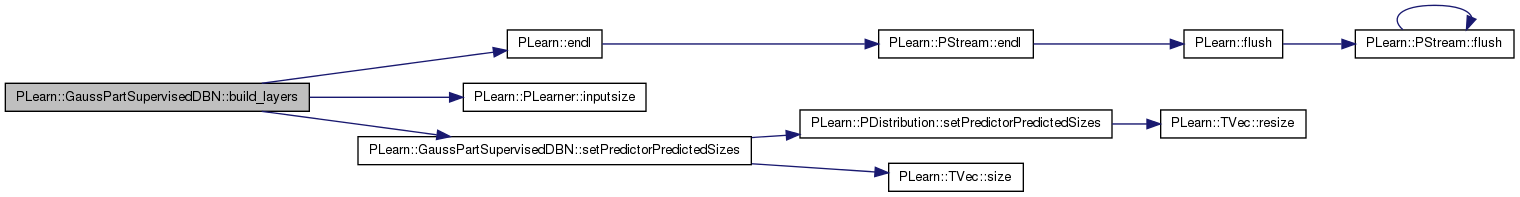

void PLearn::GaussPartSupervisedDBN::build_layers() [private]

Build the layers.

Definition at line 332 of file GaussPartSupervisedDBN.cc.

References PLearn::endl(), i, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, joint_layer, last_layer, layers, n_layers, PLearn::PDistribution::n_predicted, PLearn::PDistribution::n_predictor, PLASSERT, PLearn::PLearner::random_gen, setPredictorPredictedSizes(), and target_layer.

Referenced by build_().

```cpp
{
    MODULE_LOG << "build_layers() called" << endl;
    if( inputsize_ >= 0 )
    {
        PLASSERT( layers[0]->size + target_layer->size == inputsize() );
        setPredictorPredictedSizes( layers[0]->size,
                                    target_layer->size, false );
        MODULE_LOG << " n_predictor = " << n_predictor << endl;
        MODULE_LOG << " n_predicted = " << n_predicted << endl;
    }

    for( int i=0 ; i<n_layers ; i++ )
        layers[i]->random_gen = random_gen;
    target_layer->random_gen = random_gen;

    last_layer = layers[n_layers-1];

    // concatenate target_layer and layers[n_layers-2] into joint_layer,
    // if it is not already done
    if( !joint_layer
        || joint_layer->sub_layers.size() !=2
        || joint_layer->sub_layers[0] != target_layer
        || joint_layer->sub_layers[1] != layers[n_layers-2] )
    {
        TVec< PP<RBMLayer> > the_sub_layers( 2 );
        the_sub_layers[0] = target_layer;
        the_sub_layers[1] = layers[n_layers-2];
        joint_layer = new RBMMixedLayer( the_sub_layers );
    }
    joint_layer->random_gen = random_gen;
}
```

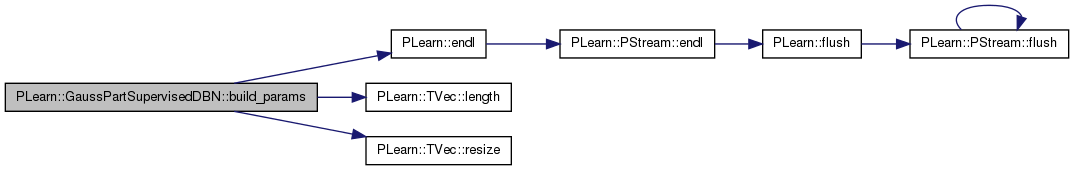

void PLearn::GaussPartSupervisedDBN::build_params() [private]

Build the parameters if needed.

Definition at line 365 of file GaussPartSupervisedDBN.cc.

References activation_gradients, PLearn::endl(), expectation_gradients, i, initialization_method, input_params, joint_params, last_layer, layers, learning_rate, PLearn::TVec< T >::length(), n_layers, PLearn::PDistribution::n_predicted, output_gradient, params, PLERROR, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), target_layer, and target_params.

Referenced by build_().

```cpp
{
    MODULE_LOG << "build_params() called" << endl;
    if( params.length() == 0 )
    {
        input_params = new RBMQLParameters() ;
        params.resize( n_layers-1 );
        for( int i=1 ; i<n_layers-1 ; i++ )
            params[i] = new RBMLLParameters();
    }
    else if( params.length() != n_layers-1 )
        PLERROR( "GaussPartSupervisedDBN::build_params - params.length() should\n"
                 "be equal to layers.length()-1 (%d != %d).\n",
                 params.length(), n_layers-1 );

    activation_gradients.resize( n_layers-1 );
    expectation_gradients.resize( n_layers-1 );
    output_gradient.resize( n_predicted );

    input_params->down_units_types = layers[0]->units_types;
    input_params->up_units_types = layers[1]->units_types;
    input_params->learning_rate = learning_rate;
    input_params->initialization_method = initialization_method;
    input_params->random_gen = random_gen;
    input_params->build();

    activation_gradients[0].resize( input_params->down_layer_size );
    expectation_gradients[0].resize( input_params->down_layer_size );

    for( int i=1 ; i<n_layers-1 ; i++ )
    {
        //TODO: call changeOptions instead
        params[i]->down_units_types = layers[i]->units_types;
        params[i]->up_units_types = layers[i+1]->units_types;
        params[i]->initialization_method = initialization_method;
        params[i]->random_gen = random_gen;
        params[i]->build();

        activation_gradients[i].resize( params[i]->down_layer_size );
        expectation_gradients[i].resize( params[i]->down_layer_size );
    }

    if( target_layer && !target_params )
        target_params = new RBMLLParameters();

    //TODO: call changeOptions instead
    target_params->down_units_types = target_layer->units_types;
    target_params->up_units_types = last_layer->units_types;
    target_params->initialization_method = initialization_method;
    target_params->random_gen = random_gen;
    target_params->build();

    // build joint_params from params[n_layers-2] and target_params
    // if it is not already done
    if( !joint_params
        || joint_params->target_params != target_params
        || joint_params->cond_params != params[n_layers-2] )
    {
        joint_params = new RBMJointLLParameters( target_params,
                                                 params[n_layers-2] );
    }
    joint_params->random_gen = random_gen;

    // share the biases
    for( int i=1 ; i<n_layers-2 ; i++ )
        params[i]->up_units_bias = params[i+1]->down_units_bias;
    input_params->up_units_bias = params[1]->down_units_bias;
}
```
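The "share the biases" loop at the end of build_params() relies on assignment making two parameter objects refer to the same bias storage, so the hidden layer between them has a single set of biases. The sketch below models that aliasing with std::shared_ptr; `Bias`, `Params` and `shareBiases` are hypothetical stand-ins, and the assumption that PLearn's Vec assignment shares storage (rather than copying values) is inferred from the source comment, not verified here.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-ins (not PLearn classes): each bias vector is held
// through a shared handle, loosely mimicking a storage-sharing Vec.
using Bias = std::shared_ptr<std::vector<double>>;

struct Params {
    Bias down_units_bias;
    Bias up_units_bias;
};

// Makes the up-bias of each parameter set alias the down-bias of the next
// one, so both handles refer to the biases of the same hidden layer.
void shareBiases(std::vector<Params>& params)
{
    for (std::size_t i = 0; i + 1 < params.size(); ++i)
        params[i].up_units_bias = params[i + 1].down_units_bias;
}
```

After sharing, an update made through params[i+1].down_units_bias is immediately visible through params[i].up_units_bias.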

void PLearn::GaussPartSupervisedDBN::build_regressors() [private]

Build the regressors if needed.

Definition at line 435 of file GaussPartSupervisedDBN.cc.

References PLearn::endl(), i, input_params, PLearn::TVec< T >::length(), n_layers, PLearn::PDistribution::n_predicted, params, regressors, PLearn::TVec< T >::resize(), and supervised_learning_rates.

Referenced by build_().

```cpp
{
    MODULE_LOG << "build_regressors() called" << endl;
    if( regressors.length() != n_layers-1 )
        regressors.resize( n_layers-1 );

    for( int i=0 ; i<n_layers-1 ; i++ )
        if( !(regressors[i]))
            // || regressors[i]->input_size != i>0?
            //    params[i]->up_layer_size : input_params->up_layer_size )
        {
            MODULE_LOG << "creating regressor " << i << endl;

            // A linear layer of the appropriate size, that will be trained by
            // stochastic gradient descent, initial weights are 0.
            PP<GradNNetLayerModule> p_gnnlm = new GradNNetLayerModule();
            p_gnnlm->input_size = i > 0 ? params[i]->up_layer_size :
                                          input_params->up_layer_size;
            p_gnnlm->output_size = n_predicted;
            p_gnnlm->start_learning_rate = supervised_learning_rates[i];
            MODULE_LOG << "start_learning_rate = "
                       << p_gnnlm->start_learning_rate << endl;
            p_gnnlm->init_weights_random_scale = 0.;
            p_gnnlm->build();

            // The softmax+NLL part
            PP<NLLErrModule> p_nll = new NLLErrModule();
            p_nll->input_size = n_predicted;
            p_nll->output_size = 1;
            p_nll->build();

            // Stack them, and...
            TVec< PP<OnlineLearningModule> > stack(2);
            stack[0] = (GradNNetLayerModule*) p_gnnlm;
            stack[1] = (NLLErrModule*) p_nll;

            // ... encapsulate them in another Module, that will compute
            // and backprop the NLL
            PP<StackedModulesModule> p_smm = new StackedModulesModule();
            p_smm->modules = stack;
            p_smm->last_layer_is_cost = true;
            p_smm->target_size = n_predicted;
            p_smm->build();

            regressors[i] = (StackedModulesModule*) p_smm;
        }
}
```
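Each regressor stacks a linear layer under a softmax+NLL cost, as the source comments describe. The sketch below shows what that cost and its gradient with respect to the linear layer's outputs look like, assuming NLLErrModule applies a softmax before the NLL; `softmaxNLL` is a hypothetical name. The gradient has the form softmax(a) minus onehot(target), the same shape fineTuneByGradientDescent() builds when it subtracts 1 from output_gradient at the true class.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: softmax followed by negative log-likelihood.
// Returns the NLL of class `target` and writes d(NLL)/d(activations)
// into `grad`, which for softmax+NLL equals softmax(a) - onehot(target).
double softmaxNLL(const std::vector<double>& activations, std::size_t target,
                  std::vector<double>& grad)
{
    assert(target < activations.size());
    const double max_a = *std::max_element(activations.begin(), activations.end());
    double sum = 0.0;
    grad.resize(activations.size());
    for (std::size_t k = 0; k < activations.size(); ++k) {
        grad[k] = std::exp(activations[k] - max_a);  // shift for numerical stability
        sum += grad[k];
    }
    for (double& g : grad) g /= sum;                  // grad now holds the softmax
    const double nll = -std::log(grad[target]);
    grad[target] -= 1.0;                              // softmax - onehot(target)
    return nll;
}
```

With two equal activations the softmax is uniform, so the NLL is log 2 and the gradient is {-0.5, 0.5} for target 0.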

real PLearn::GaussPartSupervisedDBN::cdf(const Vec &y) const [virtual]

Return cdf: P(Y<y | x).

Reimplemented from PLearn::PDistribution.

Definition at line 524 of file GaussPartSupervisedDBN.cc.

References PLERROR.

```cpp
{
    PLERROR("cdf not implemented for GaussPartSupervisedDBN"); return 0;
}
```

string PLearn::GaussPartSupervisedDBN::classname() const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

Referenced by train().

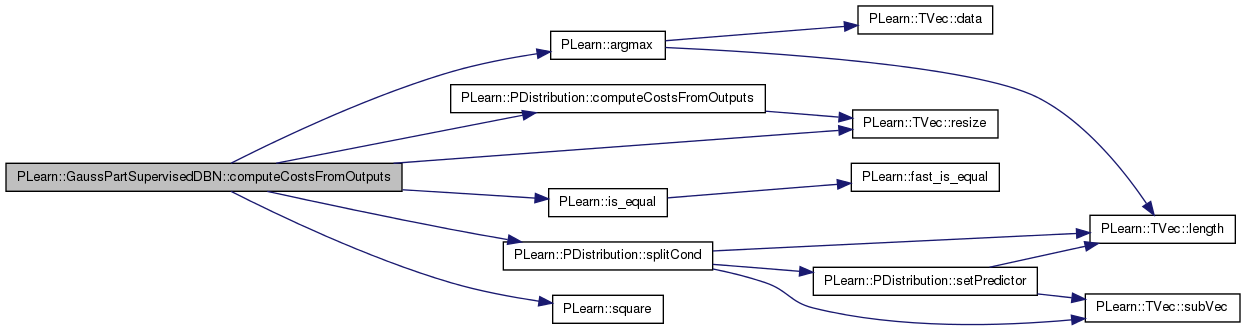

void PLearn::GaussPartSupervisedDBN::computeCostsFromOutputs(const Vec &input, const Vec &output, const Vec &target, Vec &costs) const [virtual]

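Compute costs according to the type of the first output (outputs_def[0]). For the log-density ('l') or the density ('d'), the NLL is computed by PDistribution. For the expectation ('e'), three costs are computed: the NLL of the true class, the 0/1 classification error, and the squared difference between the expected class index under the output and under the target.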

Reimplemented from PLearn::PDistribution.

Definition at line 1254 of file GaussPartSupervisedDBN.cc.

References PLearn::argmax(), c, PLearn::PDistribution::computeCostsFromOutputs(), i, PLearn::is_equal(), PLearn::PDistribution::n_predicted, PLearn::PDistribution::outputs_def, pl_log, PLASSERT, PLearn::PDistribution::predicted_part, PLearn::TVec< T >::resize(), PLearn::PDistribution::splitCond(), and PLearn::square().

```cpp
{
    char c = outputs_def[0];
    if( c == 'l' || c == 'd' )
        inherited::computeCostsFromOutputs(input, output, target, costs);
    else if( c == 'e' )
    {
        costs.resize( 3 );
        splitCond(input);

        // actual_index is the actual 'target'
        int actual_index = argmax(predicted_part);
#ifdef BOUNDCHECK
        for( int i=0 ; i<n_predicted ; i++ )
            PLASSERT( is_equal( predicted_part[i], 0. ) ||
                      ( i == actual_index && is_equal( predicted_part[i], 1. ) ) );
#endif
        costs[0] = -pl_log( output[actual_index] );

        // predicted_index is the most probable predicted class
        int predicted_index = argmax(output);
        if( predicted_index == actual_index )
            costs[1] = 0;
        else
            costs[1] = 1;

        real expected_output = .0 ;
        real expected_teacher = .0 ;
        for(int i=0 ; i<n_predicted ; ++i) {
            expected_output += output[i] * i;
            expected_teacher += predicted_part[i] * i ;
        }
        costs[2] = square(expected_output - expected_teacher) ;
    }
}
```
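The 'e' branch above can be restated with standard containers to make the three costs explicit. In this sketch `expectationCosts` is a hypothetical name, std::log stands in for PLearn's pl_log, and the target is assumed to be the one-hot predicted_part, as the BOUNDCHECK assertion requires.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical restatement of the 'e' (expectation) branch:
// `output` is the predicted class distribution, `target` the one-hot
// predicted_part. Returns {NLL, class error, squared error on E[class index]}.
std::array<double, 3> expectationCosts(const std::vector<double>& output,
                                       const std::vector<double>& target)
{
    assert(!output.empty() && output.size() == target.size());
    const auto argmax = [](const std::vector<double>& v) {
        return static_cast<std::size_t>(
            std::distance(v.begin(), std::max_element(v.begin(), v.end())));
    };
    const std::size_t actual = argmax(target);
    const std::size_t predicted = argmax(output);

    std::array<double, 3> costs{};
    costs[0] = -std::log(output[actual]);          // NLL of the true class
    costs[1] = (predicted == actual) ? 0.0 : 1.0;  // 0/1 classification error
    double expected_output = 0.0, expected_teacher = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        expected_output += output[i] * static_cast<double>(i);
        expected_teacher += target[i] * static_cast<double>(i);
    }
    const double diff = expected_output - expected_teacher;
    costs[2] = diff * diff;                        // squared error on expected index
    return costs;
}
```

For output {0.7, 0.2, 0.1} and true class 2, the prediction is class 0 (error 1) and the expected index is 0.4 instead of 2, giving a squared error of 2.56.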

void PLearn::GaussPartSupervisedDBN::contrastiveDivergenceStep(const PP< RBMLayer > &down_layer, const PP< RBMParameters > &parameters, const PP< RBMLayer > &up_layer) [protected, virtual]

Definition at line 1015 of file GaussPartSupervisedDBN.cc.

References use_sample_or_expectation.

Referenced by jointGreedyStep(), and supervisedContrastiveDivergenceStep().

```cpp
{
    // Re-initialize values in down_layer
    if( use_sample_or_expectation[0] == 0 )
        parameters->setAsDownInput( down_layer->expectation );
    else
    {
        down_layer->generateSample();
        parameters->setAsDownInput( down_layer->sample );
    }

    // positive phase
    up_layer->getAllActivations( parameters );
    up_layer->computeExpectation();
    up_layer->generateSample();

    // accumulate stats using the right vector (sample or expectation)
    if( use_sample_or_expectation[0] == 2 )
    {
        if( use_sample_or_expectation[1] == 2 )
            parameters->accumulatePosStats(down_layer->sample,
                                           up_layer->sample );
        else
            parameters->accumulatePosStats(down_layer->sample,
                                           up_layer->expectation );
    }
    else
    {
        if( use_sample_or_expectation[1] == 2 )
            parameters->accumulatePosStats(down_layer->expectation,
                                           up_layer->sample);
        else
            parameters->accumulatePosStats(down_layer->expectation,
                                           up_layer->expectation );
    }

    // down propagation
    if( use_sample_or_expectation[1] == 0 )
        parameters->setAsUpInput( up_layer->expectation );
    else
        parameters->setAsUpInput( up_layer->sample );

    down_layer->getAllActivations( parameters );
    down_layer->computeExpectation();
    down_layer->generateSample();

    if( use_sample_or_expectation[2] == 0 )
        parameters->setAsDownInput( down_layer->expectation );
    else
        parameters->setAsDownInput( down_layer->sample );

    up_layer->getAllActivations( parameters );
    up_layer->computeExpectation();

    // accumulate stats using the right vector (sample or expectation)
    if( use_sample_or_expectation[3] == 2 )
    {
        up_layer->generateSample();
        if( use_sample_or_expectation[2] == 2 )
            parameters->accumulateNegStats( down_layer->sample,
                                            up_layer->sample );
        else
            parameters->accumulateNegStats( down_layer->expectation,
                                            up_layer->sample );
    }
    else
    {
        if( use_sample_or_expectation[2] == 2 )
            parameters->accumulateNegStats( down_layer->sample,
                                            up_layer->expectation );
        else
            parameters->accumulateNegStats( down_layer->expectation,
                                            up_layer->expectation );
    }

    // update
    parameters->update();
}
```
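The control flow of contrastiveDivergenceStep() can be reproduced on a tiny Bernoulli-Bernoulli RBM to see how each use_sample_or_expectation entry selects between expectation and sample. This is a simplified sketch, not the PLearn classes: `TinyRBM` and `cdStep` are hypothetical, both layers are assumed binary with sigmoid units, and the update is assumed to be the standard CD rule lr * (positive statistics - negative statistics) on weights and biases, which parameters->update() is not shown to implement exactly.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for one RBMParameters plus its two layers:
// weights W (n_hid x n_vis, row-major), visible biases b, hidden biases c.
struct TinyRBM {
    std::size_t n_vis, n_hid;
    std::vector<double> W, b, c;
    TinyRBM(std::size_t v, std::size_t h)
        : n_vis(v), n_hid(h), W(v * h, 0.0), b(v, 0.0), c(h, 0.0) {}
};

namespace tiny_rbm_detail {
inline double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// p(h_j = 1 | v) for every hidden unit.
inline std::vector<double> up(const TinyRBM& r, const std::vector<double>& v) {
    std::vector<double> p(r.n_hid);
    for (std::size_t j = 0; j < r.n_hid; ++j) {
        double a = r.c[j];
        for (std::size_t i = 0; i < r.n_vis; ++i) a += r.W[j * r.n_vis + i] * v[i];
        p[j] = sigmoid(a);
    }
    return p;
}

// p(v_i = 1 | h) for every visible unit.
inline std::vector<double> down(const TinyRBM& r, const std::vector<double>& h) {
    std::vector<double> p(r.n_vis);
    for (std::size_t i = 0; i < r.n_vis; ++i) {
        double a = r.b[i];
        for (std::size_t j = 0; j < r.n_hid; ++j) a += r.W[j * r.n_vis + i] * h[j];
        p[i] = sigmoid(a);
    }
    return p;
}

// Independent Bernoulli draws with the given probabilities.
inline std::vector<double> draw(const std::vector<double>& p, std::mt19937& rng) {
    std::vector<double> s(p.size());
    for (std::size_t k = 0; k < p.size(); ++k) {
        std::bernoulli_distribution coin(p[k]);
        s[k] = coin(rng) ? 1.0 : 0.0;
    }
    return s;
}
}  // namespace tiny_rbm_detail

// One CD-1 update. `flags` plays the role of use_sample_or_expectation:
// {visible+, hidden+, visible-, hidden-}, each 0, 1 or 2.
void cdStep(TinyRBM& r, const std::vector<double>& v_exp,
            const std::array<int, 4>& flags, double lr, std::mt19937& rng)
{
    using namespace tiny_rbm_detail;
    assert(v_exp.size() == r.n_vis);

    // Positive phase: propagate the expectation (flag 0) or a sample of it.
    const std::vector<double> v_in = (flags[0] == 0) ? v_exp : draw(v_exp, rng);
    const std::vector<double> h_exp = up(r, v_in);
    const std::vector<double> h_smp = draw(h_exp, rng);
    const std::vector<double>& v_pos = (flags[0] == 2) ? v_in : v_exp;
    const std::vector<double>& h_pos = (flags[1] == 2) ? h_smp : h_exp;

    // Negative phase: down from expectation (flag 0) or sample, then back up.
    const std::vector<double> v2_exp = down(r, (flags[1] == 0) ? h_exp : h_smp);
    const std::vector<double> v2_smp = draw(v2_exp, rng);
    const std::vector<double> h2_exp = up(r, (flags[2] == 0) ? v2_exp : v2_smp);
    const std::vector<double>& v_neg = (flags[2] == 2) ? v2_smp : v2_exp;
    const std::vector<double> h_neg = (flags[3] == 2) ? draw(h2_exp, rng) : h2_exp;

    // Update: lr * (positive statistics - negative statistics).
    for (std::size_t j = 0; j < r.n_hid; ++j)
        for (std::size_t i = 0; i < r.n_vis; ++i)
            r.W[j * r.n_vis + i] += lr * (h_pos[j] * v_pos[i] - h_neg[j] * v_neg[i]);
    for (std::size_t i = 0; i < r.n_vis; ++i) r.b[i] += lr * (v_pos[i] - v_neg[i]);
    for (std::size_t j = 0; j < r.n_hid; ++j) r.c[j] += lr * (h_pos[j] - h_neg[j]);
}
```

With all flags at 0 the step is deterministic: starting from zero weights and v = {1, 0, 1}, every unit has probability 0.5, so each weight moves by lr * (0.5 * v_i - 0.25).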

void PLearn::GaussPartSupervisedDBN::declareOptions(OptionList &ol) [static, protected]

Declares the class options.

Reimplemented from PLearn::PDistribution.

Definition at line 97 of file GaussPartSupervisedDBN.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PDistribution::declareOptions(), final_momentum, fine_tuning_learning_rate, fine_tuning_method, initial_momentum, initialization_method, input_params, joint_layer, joint_params, last_layer, layers, learning_rate, PLearn::OptionBase::learntoption, momentum_switch_time, n_layers, PLearn::OptionBase::nosave, parallelization_minibatch_size, params, regressors, sum_parallel_contributions, supervised_learning_rates, target_layer, target_params, training_schedule, use_sample_or_expectation, and weight_decay.

```cpp
{
    declareOption(ol, "learning_rate", &GaussPartSupervisedDBN::learning_rate,
                  OptionBase::buildoption,
                  "Learning rate used during greedy learning");

    declareOption(ol, "supervised_learning_rates",
                  &GaussPartSupervisedDBN::supervised_learning_rates,
                  OptionBase::buildoption,
                  "The learning rates used for the supervised part during"
                  " greedy learning\n"
                  "(layer by layer).\n");

    declareOption(ol, "fine_tuning_learning_rate",
                  &GaussPartSupervisedDBN::fine_tuning_learning_rate,
                  OptionBase::buildoption,
                  "Learning rate used during the gradient descent");

    declareOption(ol, "initial_momentum",
                  &GaussPartSupervisedDBN::initial_momentum,
                  OptionBase::buildoption,
                  "Initial momentum factor (should be between 0 and 1)");

    declareOption(ol, "final_momentum",
                  &GaussPartSupervisedDBN::final_momentum,
                  OptionBase::buildoption,
                  "Final momentum factor (should be between 0 and 1)");

    declareOption(ol, "momentum_switch_time",
                  &GaussPartSupervisedDBN::momentum_switch_time,
                  OptionBase::buildoption,
                  "Number of samples to be seen by layer i before its momentum"
                  " switches\n"
                  "from initial_momentum to final_momentum.\n");

    declareOption(ol, "weight_decay", &GaussPartSupervisedDBN::weight_decay,
                  OptionBase::buildoption,
                  "Weight decay");

    declareOption(ol, "initialization_method",
                  &GaussPartSupervisedDBN::initialization_method,
                  OptionBase::buildoption,
                  "The method used to initialize the weights:\n"
                  " - \"uniform_linear\" = a uniform law in [-1/d, 1/d]\n"
                  " - \"uniform_sqrt\" = a uniform law in [-1/sqrt(d),"
                  " 1/sqrt(d)]\n"
                  " - \"zero\" = all weights are set to 0,\n"
                  "where d = max( up_layer_size, down_layer_size ).\n");

    declareOption(ol, "training_schedule",
                  &GaussPartSupervisedDBN::training_schedule,
                  OptionBase::buildoption,
                  "Total number of examples that should be seen until each"
                  " layer\n"
                  "has been greedily trained.\n"
                  "We should always have training_schedule[i] <"
                  " training_schedule[i+1].\n");

    declareOption(ol, "fine_tuning_method",
                  &GaussPartSupervisedDBN::fine_tuning_method,
                  OptionBase::buildoption,
                  "Method for fine-tuning the whole network after greedy"
                  " learning.\n"
                  "One of:\n"
                  " - \"none\"\n"
                  " - \"CD\" or \"contrastive_divergence\"\n"
                  " - \"EGD\" or \"error_gradient_descent\"\n"
                  " - \"WS\" or \"wake_sleep\".\n");

    declareOption(ol, "layers", &GaussPartSupervisedDBN::layers,
                  OptionBase::buildoption,
                  "Layers that learn representations of the input,"
                  " unsupervisedly.\n"
                  "layers[0] is input layer.\n");

    declareOption(ol, "input_params", &GaussPartSupervisedDBN::input_params,
                  OptionBase::buildoption,
                  "Parameters linking layer[0] and layer[1]");

    declareOption(ol, "target_layer", &GaussPartSupervisedDBN::target_layer,
                  OptionBase::buildoption,
                  "Target (or label) layer");

    declareOption(ol, "params", &GaussPartSupervisedDBN::params,
                  OptionBase::buildoption,
                  "RBMParameters linking the unsupervised layers.\n"
                  "params[i] links layers[i] and layers[i+1], except for "
                  "params[n_layers-1],\n"
                  "that links layers[n_layers-1] and last_layer.\n");

    declareOption(ol, "target_params", &GaussPartSupervisedDBN::target_params,
                  OptionBase::buildoption,
                  "Parameters linking target_layer and last_layer");

    /*
    declareOption(ol, "use_sample_rather_than_expectation_in_positive_phase_statistics",
                  &GaussPartSupervisedDBN::use_sample_rather_than_expectation_in_positive_phase_statistics,
                  OptionBase::buildoption,
                  "In positive phase statistics use output->sample * input\n"
                  "rather than output->expectation * input.\n");
    */

    declareOption(ol, "use_sample_or_expectation",
                  &GaussPartSupervisedDBN::use_sample_or_expectation,
                  OptionBase::buildoption,
                  "Vector providing information on which information to use"
                  " during the\n"
                  "contrastive divergence step:\n"
                  " - 0 means that we use the expectation only,\n"
                  " - 1 means that we sample (for the next step), but we use"
                  " the\n"
                  " expectation in the CD update formula,\n"
                  " - 2 means that we use the sample only.\n"
                  "The order of the arguments matches the steps of CD:\n"
                  " - visible unit during positive phase (you should keep it"
                  " to 0),\n"
                  " - hidden unit during positive phase,\n"
                  " - visible unit during negative phase,\n"
                  " - hidden unit during negative phase (you should keep it"
                  " to 0).\n");

    declareOption(ol, "parallelization_minibatch_size",
                  &GaussPartSupervisedDBN::parallelization_minibatch_size,
                  OptionBase::buildoption,
                  "Only used when USING_MPI for parallelization.\n"
                  "This is the number of examples seen by one process\n"
                  "during training after which the weight updates are shared\n"
                  "among all the processes.\n");

    declareOption(ol, "sum_parallel_contributions",
                  &GaussPartSupervisedDBN::sum_parallel_contributions,
                  OptionBase::buildoption,
                  "Only used when USING_MPI for parallelization.\n"
                  "sum or average the delta-w contributions from different processes?\n");

    declareOption(ol, "n_layers", &GaussPartSupervisedDBN::n_layers,
                  OptionBase::learntoption,
                  "Number of unsupervised layers, including input layer");

    declareOption(ol, "last_layer", &GaussPartSupervisedDBN::last_layer,
                  OptionBase::learntoption,
                  "Last layer, learning joint representations of input and"
                  " target");

    declareOption(ol, "joint_layer", &GaussPartSupervisedDBN::joint_layer,
                  OptionBase::nosave,
                  "Concatenation of target_layer and layers[n_layers-2]");

    declareOption(ol, "joint_params", &GaussPartSupervisedDBN::joint_params,
                  OptionBase::nosave,
                  "Parameters linking joint_layer and last_layer");

    declareOption(ol, "regressors", &GaussPartSupervisedDBN::regressors,
                  OptionBase::learntoption,
                  "Logistic regressors that will provide the supervised"
                  " gradient\n"
                  "for each RBMParameters\n");

    // Now call the parent class' declareOptions().
    inherited::declareOptions(ol);
}
```
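The initialization_method help text defines each weight range in terms of d = max(up_layer_size, down_layer_size). The sketch below computes only the half-width r of the interval [-r, r] for each method; the actual sampling happens in the RBMParameters classes and is not shown. `initWeightBound` is a hypothetical helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical helper: half-width r of the uniform interval [-r, r] used
// for the initial weights, following the initialization_method help text,
// with d = max(up_layer_size, down_layer_size).
double initWeightBound(const std::string& method,
                       std::size_t up_layer_size, std::size_t down_layer_size)
{
    const double d = static_cast<double>(std::max(up_layer_size, down_layer_size));
    assert(d > 0.0);
    if (method == "uniform_linear") return 1.0 / d;
    if (method == "uniform_sqrt") return 1.0 / std::sqrt(d);
    if (method == "zero") return 0.0;
    throw std::invalid_argument("unknown initialization_method: " + method);
}
```

For a 100-unit layer on top of a 25-unit layer, "uniform_sqrt" draws weights in [-0.1, 0.1] while "uniform_linear" uses the narrower [-0.01, 0.01].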

static const PPath& PLearn::GaussPartSupervisedDBN::declaringFile() [inline, static]

Reimplemented from PLearn::PDistribution.

Definition at line 298 of file GaussPartSupervisedDBN.h.


GaussPartSupervisedDBN * PLearn::GaussPartSupervisedDBN::deepCopy(CopiesMap &copies) const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

real PLearn::GaussPartSupervisedDBN::density(const Vec &y) const [virtual]

Return probability density p(y | x).

Reimplemented from PLearn::PDistribution.

Definition at line 564 of file GaussPartSupervisedDBN.cc.

References PLearn::argmax(), expectation(), i, PLearn::is_equal(), PLearn::PDistribution::n_predicted, PLASSERT, PLearn::TVec< T >::size(), and PLearn::PDistribution::store_expect.

Referenced by log_density().

```cpp
{
    PLASSERT( y.size() == n_predicted );

    // TODO: 'y'[0] should rather be the integer "index" itself!
    int index = argmax( y );

    // If y != onehot( index ), then density is 0
    if( !is_equal( y[index], 1. ) )
        return 0;
    for( int i=0 ; i<n_predicted ; i++ )
        if( !is_equal( y[i], 0 ) && i != index )
            return 0;

    expectation( store_expect );
    return store_expect[index];
}
```
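density() treats y as a one-hot class indicator: any other vector has density 0, and a valid one-hot vector gets the probability that expectation() assigns to its class. The sketch below restates that rule with standard containers; `oneHotDensity` and its `tol` parameter are hypothetical, with `tol` standing in for the tolerance of PLearn's is_equal().

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical restatement of density(): `expected` stands for the output
// of expectation(), i.e. p(class | x). Non-one-hot inputs get density 0.
double oneHotDensity(const std::vector<double>& y,
                     const std::vector<double>& expected, double tol = 1e-8)
{
    assert(!y.empty() && y.size() == expected.size());
    const std::size_t index = static_cast<std::size_t>(
        std::distance(y.begin(), std::max_element(y.begin(), y.end())));
    if (std::fabs(y[index] - 1.0) > tol) return 0.0;      // largest entry must be 1
    for (std::size_t i = 0; i < y.size(); ++i)
        if (i != index && std::fabs(y[i]) > tol) return 0.0;  // all others must be 0
    return expected[index];
}
```

For instance, y = {0, 1, 0} returns the predicted probability of class 1, while y = {0.5, 0.5, 0} returns 0.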

void PLearn::GaussPartSupervisedDBN::expectation(Vec &mu) const [virtual]

Return E[Y | x].

Reimplemented from PLearn::PDistribution.

Definition at line 532 of file GaussPartSupervisedDBN.cc.

References i, input_params, joint_params, layers, n_layers, params, PLearn::PDistribution::predicted_size, PLearn::PDistribution::predictor_part, PLearn::TVec< T >::resize(), and target_layer.

Referenced by density(), fineTuneByGradientDescent(), greedyStep(), and jointGreedyStep().

```cpp
{
    mu.resize( predicted_size );

    // Propagate input (predictor_part) until penultimate layer
    layers[0]->expectation << predictor_part;
    input_params->setAsDownInput( layers[0]->expectation );
    layers[1]->getAllActivations( (RBMQLParameters*) input_params );
    layers[1]->computeExpectation();
    for( int i=1 ; i<n_layers-2 ; i++ )
    {
        params[i]->setAsDownInput( layers[i]->expectation );
        layers[i+1]->getAllActivations( (RBMLLParameters*) params[i] );
        layers[i+1]->computeExpectation();
    }

    // Set layers[n_layers-2]->expectation (penultimate) as conditioning input
    // of joint_params
    joint_params->setAsCondInput( layers[n_layers-2]->expectation );

    // Get all activations on target_layer from joint_params
    target_layer->getAllActivations( (RBMLLParameters*) joint_params );
    target_layer->computeExpectation();

    mu << target_layer->expectation;
}
```
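expectation() performs a deterministic forward pass: each layer's expectation is computed from the previous one, and the target layer's expectation is then used as p(class | x). A simplified sketch follows, assuming sigmoid hidden units, a softmax target layer and plain dense weights (the hypothetical DenseLayer below). In the real class the unit types come from the configured RBMLayer objects, and the target activations are computed through joint_params rather than a single dense layer.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical dense connection: act[j] = bias[j] + sum_i W[j][i] * in[i]
struct DenseLayer {
    std::vector<Vec> W;   // W[j] holds the weights into unit j
    Vec bias;
    Vec activations(const Vec& in) const {
        assert(bias.size() == W.size());
        Vec act(bias);
        for (std::size_t j = 0; j < W.size(); ++j) {
            assert(W[j].size() == in.size());
            for (std::size_t i = 0; i < in.size(); ++i)
                act[j] += W[j][i] * in[i];
        }
        return act;
    }
};

Vec sigmoid(const Vec& a) {
    Vec out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
        out[i] = 1.0 / (1.0 + std::exp(-a[i]));
    return out;
}

Vec softmax(const Vec& a) {
    assert(!a.empty());
    // subtract the max for numerical stability
    const double m = *std::max_element(a.begin(), a.end());
    Vec out(a.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        out[i] = std::exp(a[i] - m);
        sum += out[i];
    }
    for (double& v : out)
        v /= sum;
    return out;
}

// Propagate x through the hidden layers (sigmoid expectations), then map
// the penultimate expectation to class probabilities (softmax).
Vec forward_expectation(const Vec& x,
                        const std::vector<DenseLayer>& hidden,
                        const DenseLayer& target)
{
    Vec h = x;
    for (const DenseLayer& layer : hidden)
        h = sigmoid(layer.activations(h));
    return softmax(target.activations(h));
}
```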

void PLearn::GaussPartSupervisedDBN::fineTuneByGradientDescent(const Vec & input, const Vec & train_costs) [protected, virtual]

Definition at line 1195 of file GaussPartSupervisedDBN.cc.

References activation_gradients, PLearn::argmax(), expectation(), expectation_gradients, i, input_params, PLearn::is_equal(), joint_params, layers, n_layers, PLearn::PDistribution::n_predicted, output_gradient, params, pl_log, PLASSERT, PLearn::PDistribution::predicted_part, PLearn::PDistribution::splitCond(), and target_layer.

Referenced by train().

```cpp
{
    // split input into predictor_part and predicted_part
    splitCond(input);

    // compute predicted_part expectation, conditioned on predictor_part
    // (forward pass)
    expectation( output_gradient );

    int actual_index = argmax(predicted_part);

    // update train_costs
#ifdef BOUNDCHECK
    for( int i=0 ; i<n_predicted ; i++ )
        PLASSERT( is_equal( predicted_part[i], 0. ) ||
                  ( i == actual_index && is_equal( predicted_part[i], 1. ) ) );
#endif

    train_costs[0] = -pl_log( target_layer->expectation[actual_index] );

    int predicted_index = argmax( target_layer->expectation );
    if( predicted_index == actual_index )
        train_costs[1] = 0;
    else
        train_costs[1] = 1;

    // output gradient: expectation - onehot( actual_index )
    output_gradient[actual_index] -= 1.;

    joint_params->bpropUpdate( layers[n_layers-2]->expectation,
                               target_layer->expectation,
                               expectation_gradients[n_layers-2],
                               output_gradient );

    for( int i=n_layers-2 ; i>1 ; i-- )
    {
        layers[i]->bpropUpdate( layers[i]->activations,
                                layers[i]->expectation,
                                activation_gradients[i],
                                expectation_gradients[i] );

        params[i-1]->bpropUpdate( layers[i-1]->expectation,
                                  layers[i]->activations,
                                  expectation_gradients[i-1],
                                  activation_gradients[i] );
    }

    layers[1]->bpropUpdate( layers[1]->activations,
                            layers[1]->expectation,
                            activation_gradients[1],
                            expectation_gradients[1] );

    input_params->bpropUpdate( layers[0]->expectation,
                               layers[1]->activations,
                               expectation_gradients[0],
                               activation_gradients[1] );
}
```
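fineTuneByGradientDescent() and jointGreedyStep() both use -log p(actual class | x) as the supervised cost and start back-propagation from output_gradient = expectation - onehot(actual_index). Assuming the target layer is a softmax, that vector is exactly the gradient of the negative log-likelihood with respect to the target activations. A minimal sketch of the two train costs and of this gradient; the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct SupervisedCosts {
    double nll;                // like train_costs[0]: -log p(actual class | x)
    double class_error;        // like train_costs[1]: 0 if argmax is right, else 1
    std::vector<double> grad;  // d nll / d activations, for a softmax output
};

SupervisedCosts softmax_nll(const std::vector<double>& probs,
                            std::size_t actual_index)
{
    assert(actual_index < probs.size());
    SupervisedCosts c;
    c.nll = -std::log(probs[actual_index]);
    std::size_t predicted = 0;
    for (std::size_t i = 1; i < probs.size(); ++i)
        if (probs[i] > probs[predicted])
            predicted = i;
    c.class_error = (predicted == actual_index) ? 0.0 : 1.0;
    // gradient of -log softmax_k(a) with respect to a is softmax(a) - onehot(k)
    c.grad = probs;
    c.grad[actual_index] -= 1.0;
    return c;
}
```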

void PLearn::GaussPartSupervisedDBN::forget() [virtual]

(Re-)initializes the PDistribution in its fresh state (that state may depend on the 'seed' option).

It also sets 'stage' back to 0 (the stage of a fresh learner). Here this means resetting the random generator with seed_, calling forget() on input_params, params and target_params, and calling reset() on every layer, including target_layer.

Reimplemented from PLearn::PDistribution.

Definition at line 487 of file GaussPartSupervisedDBN.cc.

References PLearn::endl(), i, input_params, layers, n_layers, params, PLearn::PDistribution::resetGenerator(), PLearn::TVec< T >::resize(), PLearn::PLearner::seed_, PLearn::PLearner::stage, target_layer, and target_params.

Referenced by train().

```cpp
{
    MODULE_LOG << "forget() called" << endl;

    resetGenerator(seed_);

    input_params->forget();
    for( int i=1 ; i<n_layers-1 ; i++ )
        params[i]->forget();

    for( int i=0 ; i<n_layers ; i++ )
        layers[i]->reset();

#if USING_MPI
    global_params.resize(0);
#endif

    target_params->forget();
    target_layer->reset();

    stage = 0;
}
```

void PLearn::GaussPartSupervisedDBN::generate(Vec & y) const [virtual]

Return a pseudo-random sample generated from the conditional distribution, of density p(y | x).

Reimplemented from PLearn::PDistribution.

Definition at line 516 of file GaussPartSupervisedDBN.cc.

References PLERROR.

```cpp
{
    PLERROR("generate not implemented for GaussPartSupervisedDBN");
}
```

OptionList & PLearn::GaussPartSupervisedDBN::getOptionList() const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

OptionMap & PLearn::GaussPartSupervisedDBN::getOptionMap() const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

RemoteMethodMap & PLearn::GaussPartSupervisedDBN::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 71 of file GaussPartSupervisedDBN.cc.

TVec< string > PLearn::GaussPartSupervisedDBN::getTestCostNames() const [virtual]

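Return the names of the test costs, which depend on the first character of outputs_def: [ "NLL" ] if the first output is the log-density ('l') or the density ('d'), and [ "NLL", "class_error", "WMSE" ] if it is the expectation ('e').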

Reimplemented from PLearn::PDistribution.

Definition at line 1294 of file GaussPartSupervisedDBN.cc.

References PLearn::TVec< T >::append(), c, and PLearn::PDistribution::outputs_def.

Referenced by getTrainCostNames().

```cpp
{
    char c = outputs_def[0];
    TVec<string> result;

    if( c == 'l' || c == 'd' )
        result.append( "NLL" );
    else if( c == 'e' )
    {
        result.append( "NLL" );
        result.append( "class_error" );
        result.append( "WMSE" );
    }

    return result;
}
```
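The cost names above are selected from the first character of outputs_def alone. A hypothetical standalone version of that rule, using std::string and std::vector in place of PLearn's string and TVec:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical: same selection rule as getTestCostNames().
std::vector<std::string> test_cost_names(const std::string& outputs_def)
{
    assert(!outputs_def.empty());
    const char c = outputs_def[0];
    if (c == 'l' || c == 'd')        // log-density or density
        return {"NLL"};
    if (c == 'e')                    // expectation
        return {"NLL", "class_error", "WMSE"};
    return {};                       // any other output type: no cost names
}
```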

TVec< string > PLearn::GaussPartSupervisedDBN::getTrainCostNames() const [virtual]

Return the same cost names as getTestCostNames().

Reimplemented from PLearn::PDistribution.

Definition at line 1309 of file GaussPartSupervisedDBN.cc.

References getTestCostNames().

```cpp
{
    return getTestCostNames();
}
```

real PLearn::GaussPartSupervisedDBN::greedyStep(const Vec & predictor, int params_index) [protected, virtual]

Definition at line 1097 of file GaussPartSupervisedDBN.cc.

References expectation(), i, input_params, layers, PLearn::PDistribution::n_predicted, PLearn::PDistribution::n_predictor, params, PLearn::TVec< T >::subVec(), and supervisedContrastiveDivergenceStep().

Referenced by train().

```cpp
{
    // deterministic propagation until we reach index
    layers[0]->expectation << input.subVec(0, n_predictor);
    input_params->setAsDownInput( layers[0]->expectation );
    layers[1]->getAllActivations( (RBMQLParameters*) input_params );
    layers[1]->computeExpectation();
    for( int i=1 ; i<index ; i++ )
    {
        params[i]->setAsDownInput( layers[i]->expectation );
        layers[i+1]->getAllActivations( (RBMLLParameters*) params[i] );
        layers[i+1]->computeExpectation();
    }

    // perform one step of CD + partially supervised gradient
    real sup_cost;
    if( index == 0 )
        sup_cost = supervisedContrastiveDivergenceStep(
            layers[index],
            (RBMQLParameters*) input_params,
            layers[index+1],
            input.subVec(n_predictor, n_predicted),
            index );
    else
        sup_cost = supervisedContrastiveDivergenceStep(
            layers[index],
            (RBMLLParameters*) params[index],
            layers[index+1],
            input.subVec(n_predictor, n_predicted),
            index );

    return sup_cost;
}
```

real PLearn::GaussPartSupervisedDBN::jointGreedyStep(const Vec & input)

Definition at line 1134 of file GaussPartSupervisedDBN.cc.

References PLearn::argmax(), PLearn::RBMLLParameters::bpropUpdate(), contrastiveDivergenceStep(), expectation(), expectation_gradients, i, input_params, PLearn::is_equal(), joint_layer, joint_params, last_layer, layers, PLearn::RBMParameters::learning_rate, learning_rate, MISSING_VALUE, n_layers, PLearn::PDistribution::n_predicted, PLearn::PDistribution::n_predictor, output_gradient, params, pl_log, PLASSERT, PLearn::TVec< T >::subVec(), supervised_learning_rates, and target_layer.

Referenced by train().

```cpp
{
    // deterministic propagation until we reach n_layers-2, setting the input
    // of the "input" part of joint_layer
    layers[0]->expectation << input.subVec( 0, n_predictor );
    input_params->setAsDownInput( layers[0]->expectation );
    layers[1]->getAllActivations( (RBMQLParameters*) input_params );
    layers[1]->computeExpectation();
    for( int i=1 ; i<n_layers-2 ; i++ )
    {
        params[i]->setAsDownInput( layers[i]->expectation );
        layers[i+1]->getAllActivations( (RBMLLParameters*) params[i] );
        layers[i+1]->computeExpectation();
    }

    real supervised_cost = MISSING_VALUE;
    if( supervised_learning_rates[n_layers-2] > 0 )
    {
        // deterministic forward pass
        joint_params->setAsCondInput( layers[n_layers-2]->expectation );
        target_layer->getAllActivations( (RBMLLParameters*) joint_params );
        target_layer->computeExpectation();

        // now get the actual index of the target
        int actual_index = argmax( input.subVec( n_predictor, n_predicted ) );

#ifdef BOUNDCHECK
        for( int i=0 ; i<n_predicted ; i++ )
            PLASSERT( is_equal( input[n_predictor+i], 0. ) ||
                      ( i == actual_index && is_equal( input[n_predictor+i], 1 ) ) );
#endif

        // get supervised cost (= train cost) and output gradient
        supervised_cost = -pl_log( target_layer->expectation[actual_index] );
        output_gradient << target_layer->expectation;
        output_gradient[actual_index] -= 1.;

        // use the supervised learning rate for this update
        joint_params->learning_rate = supervised_learning_rates[n_layers-2];

        // backprop and update
        joint_params->bpropUpdate( layers[n_layers-2]->expectation,
                                   target_layer->expectation,
                                   expectation_gradients[n_layers-2],
                                   output_gradient );

        // restore the greedy learning rate
        joint_params->learning_rate = learning_rate;
    }

    // now fill the "target" part of joint_layer
    target_layer->expectation << input.subVec( n_predictor, n_predicted );

    // do contrastive divergence step with the new weights and actual target
    contrastiveDivergenceStep( (RBMLayer*) joint_layer,
                               (RBMLLParameters*) joint_params,
                               last_layer );

    // return supervised cost
    return supervised_cost;
}
```
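jointGreedyStep() (like supervisedContrastiveDivergenceStep()) swaps in the supervised learning rate before bpropUpdate() and resets learning_rate by hand afterwards. One idiomatic C++ alternative is a scope guard that restores the rate automatically, even on an early return or an exception. This is a design sketch, not how PLearn does it: the class and function names are hypothetical, and the guard restores the previous value, whereas the code above resets it to the learner's learning_rate option.

```cpp
#include <cassert>
#include <utility>

// Hypothetical RAII guard: overrides a learning rate for the lifetime of
// the guard and restores the previous value when it goes out of scope.
class LearningRateOverride {
public:
    LearningRateOverride(double& rate, double temporary)
        : rate_(rate), saved_(rate)
    {
        rate_ = temporary;
    }
    ~LearningRateOverride() { rate_ = saved_; }
    LearningRateOverride(const LearningRateOverride&) = delete;
    LearningRateOverride& operator=(const LearningRateOverride&) = delete;
private:
    double& rate_;
    double saved_;
};

// Usage sketch: returns {rate seen during the update, rate afterwards}.
std::pair<double, double> demo_override(double base, double supervised)
{
    double learning_rate = base;
    double during;
    {
        LearningRateOverride guard(learning_rate, supervised);
        during = learning_rate;   // stand-in for joint_params->bpropUpdate(...)
    }
    return {during, learning_rate};
}
```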

real PLearn::GaussPartSupervisedDBN::log_density(const Vec & y) const [virtual]

Return log of probability density log(p(y | x)).

Reimplemented from PLearn::PDistribution.

Definition at line 586 of file GaussPartSupervisedDBN.cc.

References density(), and pl_log.
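log_density() is documented as relying on density() and pl_log. Because density() returns 0 whenever y is not one-hot, the log-density is then minus infinity. A sketch of that composition with the standard library, assuming pl_log behaves like std::log on these inputs; the helper name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Hypothetical: log of an already-computed density value.
// A zero density maps explicitly to -infinity.
double log_of_density(double density)
{
    assert(density >= 0.0);
    if (density == 0.0)
        return -std::numeric_limits<double>::infinity();
    return std::log(density);
}
```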

void PLearn::GaussPartSupervisedDBN::makeDeepCopyFromShallowCopy(CopiesMap & copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PDistribution.

Definition at line 610 of file GaussPartSupervisedDBN.cc.

References PLearn::deepCopyField(), input_params, joint_layer, joint_params, last_layer, layers, PLearn::PDistribution::makeDeepCopyFromShallowCopy(), params, target_layer, target_params, and training_schedule.

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);

    deepCopyField(layers, copies);
    deepCopyField(last_layer, copies);
    deepCopyField(target_layer, copies);
    deepCopyField(joint_layer, copies);
    deepCopyField(params, copies);
    deepCopyField(joint_params, copies);
    deepCopyField(target_params, copies);
    deepCopyField(input_params, copies);
    deepCopyField(training_schedule, copies);
}
```

void PLearn::GaussPartSupervisedDBN::setPredictor(const Vec & predictor, bool call_parent = true) const [virtual]

Set the value for the predictor part of a conditional probability.

Reimplemented from PLearn::PDistribution.

Definition at line 628 of file GaussPartSupervisedDBN.cc.

References PLearn::PDistribution::setPredictor().

```cpp
{
    if (call_parent)
        inherited::setPredictor(predictor, true);

    // ### Add here any specific code required by your subclass.
}
```

bool PLearn::GaussPartSupervisedDBN::setPredictorPredictedSizes(int the_predictor_size, int the_predicted_size, bool call_parent = true) [virtual]

Set the 'predictor' and 'predicted' sizes for this distribution.

A non-negative predictor size must equal layers[0]->size and a non-negative predicted size must equal target_layer->size, otherwise PLERROR is raised; n_predictor and n_predicted are then set from those layer sizes. Returns the value reported by the parent class (whether the sizes have changed), or false when call_parent is false.

Reimplemented from PLearn::PDistribution.

Definition at line 639 of file GaussPartSupervisedDBN.cc.

References layers, PLearn::PDistribution::n_predicted, PLearn::PDistribution::n_predictor, PLERROR, PLearn::PDistribution::setPredictorPredictedSizes(), PLearn::TVec< T >::size(), and target_layer.

Referenced by build_layers().

```cpp
{
    bool sizes_have_changed = false;
    if (call_parent)
        sizes_have_changed = inherited::setPredictorPredictedSizes(
            the_predictor_size, the_predicted_size, true);

    // ### Add here any specific code required by your subclass.
    if( ( the_predictor_size >= 0 && the_predictor_size != layers[0]->size ) ||
        ( the_predicted_size >= 0 && the_predicted_size != target_layer->size ) )
        PLERROR( "GaussPartSupervisedDBN::setPredictorPredictedSizes - \n"
                 "n_predictor should be equal to layers[0]->size (%d)\n"
                 "n_predicted should be equal to target_layer->size (%d).\n",
                 layers[0]->size, target_layer->size );

    n_predictor = layers[0]->size;
    n_predicted = target_layer->size;

    // Returned value.
    return sizes_have_changed;
}
```

real PLearn::GaussPartSupervisedDBN::supervisedContrastiveDivergenceStep(const PP< RBMLayer > & down_layer, const PP< RBMParameters > & parameters, const PP< RBMLayer > & up_layer, const Vec & target, int index) [protected, virtual]

Definition at line 962 of file GaussPartSupervisedDBN.cc.

References activation_gradients, PLearn::TVec< T >::append(), contrastiveDivergenceStep(), expectation_gradients, learning_rate, MISSING_VALUE, regressors, and supervised_learning_rates.

Referenced by greedyStep().

```cpp
{
    real supervised_cost = MISSING_VALUE;

    if( supervised_learning_rates[index] > 0 )
    {
        // (Deterministic) forward pass
        parameters->setAsDownInput( down_layer->expectation );
        up_layer->getAllActivations( parameters );
        up_layer->computeExpectation();

        Vec supervised_input = up_layer->expectation.copy();
        supervised_input.append( target );

        // Compute supervised cost and gradient
        Vec sup_cost(1);
        regressors[index]->fprop( supervised_input, sup_cost );
        regressors[index]->bpropUpdate( supervised_input, sup_cost,
                                        expectation_gradients[index+1],
                                        Vec() );

        // propagate gradient to params
        up_layer->bpropUpdate( up_layer->activations,
                               up_layer->expectation,
                               activation_gradients[index+1],
                               expectation_gradients[index+1] );

        // use the supervised learning rate for this update
        parameters->learning_rate = supervised_learning_rates[index];

        // update the parameters
        parameters->bpropUpdate( down_layer->expectation,
                                 up_layer->activations,
                                 expectation_gradients[index],
                                 activation_gradients[index+1] );

        // restore the greedy learning rate
        parameters->learning_rate = learning_rate;

        // keep the supervised cost to return it
        supervised_cost = sup_cost[0];
    }

    // We have to do another forward pass because the weights have changed
    contrastiveDivergenceStep( down_layer, parameters, up_layer );

    // return supervised cost
    return supervised_cost;
}
```
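supervisedContrastiveDivergenceStep() finishes with a call to contrastiveDivergenceStep(), whose body is not listed on this page. For reference, a minimal sketch of one CD-1 update for a binary RBM. Assumptions: binary units with sigmoid conditionals, and mean-field probabilities used in place of samples so that the example is deterministic. The real step may use samples or expectations depending on the learner's options, and all names below are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical binary-binary RBM: W[j][i] connects hidden j to visible i.
struct RBM {
    std::vector<Vec> W;
    Vec b;  // visible biases
    Vec c;  // hidden biases
    RBM(std::size_t n_visible, std::size_t n_hidden)
        : W(n_hidden, Vec(n_visible, 0.0)), b(n_visible, 0.0), c(n_hidden, 0.0) {}
};

static double sigm(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// p(h_j = 1 | v) for every hidden unit
Vec hidden_probs(const RBM& r, const Vec& v) {
    Vec h(r.c);
    for (std::size_t j = 0; j < h.size(); ++j) {
        for (std::size_t i = 0; i < v.size(); ++i)
            h[j] += r.W[j][i] * v[i];
        h[j] = sigm(h[j]);
    }
    return h;
}

// p(v_i = 1 | h) for every visible unit
Vec visible_probs(const RBM& r, const Vec& h) {
    Vec v(r.b);
    for (std::size_t i = 0; i < v.size(); ++i) {
        for (std::size_t j = 0; j < h.size(); ++j)
            v[i] += r.W[j][i] * h[j];
        v[i] = sigm(v[i]);
    }
    return v;
}

// One mean-field CD-1 update: positive phase on v0, negative phase on the
// one-step reconstruction v1. All statistics use the old weights.
RBM cd1_step(RBM r, const Vec& v0, double lr) {
    assert(v0.size() == r.b.size());
    const Vec h0 = hidden_probs(r, v0);
    const Vec v1 = visible_probs(r, h0);
    const Vec h1 = hidden_probs(r, v1);
    for (std::size_t j = 0; j < h0.size(); ++j) {
        for (std::size_t i = 0; i < v0.size(); ++i)
            r.W[j][i] += lr * (h0[j] * v0[i] - h1[j] * v1[i]);
        r.c[j] += lr * (h0[j] - h1[j]);
    }
    for (std::size_t i = 0; i < v0.size(); ++i)
        r.b[i] += lr * (v0[i] - v1[i]);
    return r;
}

// Squared error between v and its mean-field reconstruction.
double reconstruction_error(const RBM& r, const Vec& v) {
    const Vec rec = visible_probs(r, hidden_probs(r, v));
    double err = 0.0;
    for (std::size_t i = 0; i < v.size(); ++i)
        err += (v[i] - rec[i]) * (v[i] - rec[i]);
    return err;
}
```

With zero initial weights the reconstruction of any input is 0.5 everywhere, so a single update on one pattern should already lower that pattern's reconstruction error.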

real PLearn::GaussPartSupervisedDBN::survival_fn(const Vec & y) const [virtual]

Return survival function: P(Y>y | x).

Reimplemented from PLearn::PDistribution.

Definition at line 594 of file GaussPartSupervisedDBN.cc.

References PLERROR.

```cpp
{
    PLERROR("survival_fn not implemented for GaussPartSupervisedDBN");
    return 0;
}
```

void PLearn::GaussPartSupervisedDBN::train() [virtual]

The role of the train method is to bring the learner up to stage == nstages, updating the train_stats collector with training costs measured on-line in the process.

Reimplemented from PLearn::PDistribution.

Definition at line 667 of file GaussPartSupervisedDBN.cc.

References classname(), PLearn::endl(), PLearn::PLearner::expdir, final_momentum, fine_tuning_learning_rate, fine_tuning_method, fineTuneByGradientDescent(), forget(), PLearn::VMat::getExample(), greedyStep(), i, initial_momentum, PLearn::PLearner::initTrain(), input_params, PLearn::PLearner::inputsize(), joint_params, jointGreedyStep(), learning_rate, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::min(), momentum_switch_time, n_layers, PLearn::PLearner::nstages, PLearn::openFile(), parallelization_minibatch_size, params, PLERROR, PLearn::PLMPI::rank, PLearn::PStream::raw_ascii, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), PLearn::sample(), PLearn::PLMPI::size, PLearn::PLearner::stage, sum_parallel_contributions, target_params, PLearn::PLearner::targetsize(), PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_schedule, and PLearn::ProgressBar::update().

```cpp
{
    MODULE_LOG << "train() called" << endl;

    // The role of the train method is to bring the learner up to
    // stage==nstages, updating train_stats with training costs measured
    // on-line in the process.

    /* TYPICAL CODE:

    static Vec input;  // static so we don't reallocate memory each time...
    static Vec target; // (but be careful that static means shared!)
    input.resize(inputsize());    // the train_set's inputsize()
    target.resize(targetsize());  // the train_set's targetsize()
    real weight;

    // This generic PLearner method does a number of standard things useful
    // for (almost) any learner, and returns 'false' if no training should
    // take place. See PLearner.h for more details.
    if (!initTrain())
        return;

    while(stage<nstages)
    {
        // clear statistics of previous epoch
        train_stats->forget();

        //... train for 1 stage, and update train_stats,
        // using train_set->getExample(input, target, weight)
        // and train_stats->update(train_costs)

        ++stage;
        train_stats->finalize(); // finalize statistics for this epoch
    }
    */

    Vec input( inputsize() );
    Vec target( targetsize() ); // unused
    real weight; // unused

    Vec train_costs(2);

    // hack for supervised cost
    real sum_sup_cost = 0;
    PStream sup_cost_file = openFile( expdir/"sup_cost.amat",
                                      PStream::raw_ascii, "a" );

    int nsamples = train_set->length();

#if USING_MPI
    // initialize global parameters so that they can easily be shared
    // across multiple CPUs

    // wait until we can attach a gdb process
    //pout << "START WAITING..." << endl;
    //sleep(20);
    //pout << "DONE WAITING!" << endl;
    MPI_Barrier(MPI_COMM_WORLD);

    int total_bsize=parallelization_minibatch_size*PLMPI::size;
//#endif

    forget(); // DEBUGGING TO GET REPRODUCIBLE RESULTS
    if (global_params.size()==0)
    {
        int n_params = joint_params->nParameters(1,1);
        for (int i=0;i<params.length()-1;i++)
            n_params += params[i]->nParameters(0,1);
        global_params.resize(n_params);
        previous_global_params.resize(n_params);
        Vec p=global_params;
        for (int i=0;i<params.length()-1;i++)
            p=params[i]->makeParametersPointHere(p,0,1);
        p=joint_params->makeParametersPointHere(p,1,1);
        if (p.length()!=0)
            PLERROR("HintonDeepBeliefNet: Inconsistencies between nParameters and makeParametersPointHere!");
    }
#endif

    MODULE_LOG << " nsamples = " << nsamples << endl;
    MODULE_LOG << " initial stage = " << stage << endl;
    MODULE_LOG << " objective: nstages = " << nstages << endl;

    if( !initTrain() )
    {
        MODULE_LOG << "train() aborted" << endl;
        return;
    }

    ProgressBar* pb = 0;

    // clear stats of previous epoch
    train_stats->forget();

    /***** initial greedy training *****/
    for( int layer=0 ; layer < n_layers-2 ; layer++ )
    {
        MODULE_LOG << "Training parameters between layers " << layer
                   << " and " << layer+1 << endl;

        int end_stage = min( training_schedule[layer], nstages );

        MODULE_LOG << " stage = " << stage << endl;
        MODULE_LOG << " end_stage = " << end_stage << endl;

        if( report_progress && stage < end_stage )
        {
            pb = new ProgressBar( "Training layer "+tostring(layer)
                                  +" of "+classname(),
                                  end_stage - stage );
        }

        if( layer > 0 )
        {
            params[layer]->learning_rate = learning_rate;
            int momentum_switch_stage = momentum_switch_time;
            if( layer > 0 )
                momentum_switch_stage += training_schedule[layer-1];
            if( stage <= momentum_switch_stage )
                params[layer]->momentum = initial_momentum;
            else
                params[layer]->momentum = final_momentum;
        }
        else
        {
            // note: no momentum is assigned to input_params here
            input_params->learning_rate = learning_rate;
            int momentum_switch_stage = momentum_switch_time;
            if( layer > 0 )
                momentum_switch_stage += training_schedule[layer-1];
        }

#if USING_MPI
        // make a copy of the parameters as they were at the beginning of
        // the minibatch
        if (sum_parallel_contributions)
            previous_global_params << global_params;
#endif

        int begin_sample = stage % nsamples;
        for( ; stage<end_stage ; stage++ )
        {
#if USING_MPI
            // only look at some of the examples, associated with this process
            // number (rank)
            if (stage%PLMPI::size==PLMPI::rank)
            {
#endif
                // resetGenerator(1); // DEBUGGING HACK TO MAKE SURE RESULTS ARE INDEPENDENT OF PARALLELIZATION
                int sample = stage % nsamples;
                if( sample == begin_sample )
                {
                    sup_cost_file << sum_sup_cost / nsamples << endl;
                    sum_sup_cost = 0;
                }
                train_set->getExample(sample, input, target, weight);
                sum_sup_cost += greedyStep( input, layer );

                if( pb )
                {
                    if( layer == 0 )
                        pb->update( stage + 1 );
                    else
                        pb->update( stage - training_schedule[layer-1] + 1 );
                }
#if USING_MPI
            }
            // time to share among processors
            if (stage%total_bsize==0 || stage==end_stage-1)
                shareParamsMPI();
#endif
        }
    }

    /***** joint training *****/
    MODULE_LOG << "Training joint parameters, between target,"
               << " penultimate (" << n_layers-2 << ")," << endl
               << "and last (" << n_layers-1 << ") layers." << endl;

    int end_stage = min( training_schedule[n_layers-2], nstages );

    MODULE_LOG << " stage = " << stage << endl;
    MODULE_LOG << " end_stage = " << end_stage << endl;

    if( report_progress && stage < end_stage )
        pb = new ProgressBar( "Training joint layer (target and "
                              +tostring(n_layers-2)+") of "+classname(),
                              end_stage - stage );

    joint_params->learning_rate = learning_rate;
    // target_params->learning_rate = learning_rate;

    int previous_stage = (n_layers < 3) ? 0 : training_schedule[n_layers-3];
    int momentum_switch_stage = momentum_switch_time + previous_stage;
    if( stage <= momentum_switch_stage )
        joint_params->momentum = initial_momentum;
    else
        joint_params->momentum = final_momentum;

    int begin_sample = stage % nsamples;
    int last = min(training_schedule[n_layers-2],nstages);
    for( ; stage<last ; stage++ )
    {
#if USING_MPI
        // only look at some of the examples, associated with this process
        // number (rank)
        if (stage%PLMPI::size==PLMPI::rank)
        {
#endif
            int sample = stage % nsamples;
            if( sample == begin_sample )
            {
                sup_cost_file << sum_sup_cost / nsamples << endl;
                sum_sup_cost = 0;
            }
            train_set->getExample(sample, input, target, weight);
            sum_sup_cost += jointGreedyStep( input );

            if( stage == momentum_switch_stage )
                joint_params->momentum = final_momentum;

            if( pb )
                pb->update( stage - previous_stage + 1 );
#if USING_MPI
        }
        // time to share among processors
        if (stage%total_bsize==0 || stage==last-1)
            shareParamsMPI();
#endif
    }

    /***** fine-tuning *****/
    MODULE_LOG << "Fine-tuning all parameters, using method "
               << fine_tuning_method << endl;

    int init_stage = stage;
    if( report_progress && stage < nstages )
        pb = new ProgressBar( "Fine-tuning parameters of all layers of "
                              +classname(),
                              nstages - init_stage );

    MODULE_LOG << " fine_tuning_learning_rate = "
               << fine_tuning_learning_rate << endl;

    input_params->learning_rate = fine_tuning_learning_rate;
    for( int i=1 ; i<n_layers-1 ; i++ )
        params[i]->learning_rate = fine_tuning_learning_rate;
    joint_params->learning_rate = fine_tuning_learning_rate;
    target_params->learning_rate = fine_tuning_learning_rate;

    if( fine_tuning_method == "" ) // do nothing
    {
        stage = nstages;
        if( pb )
            pb->update( nstages - init_stage + 1 );
    }
    else if( fine_tuning_method == "EGD" )
    {
        begin_sample = stage % nsamples;
        for( ; stage<nstages ; stage++ )
        {
#if USING_MPI
            // only look at some of the examples, associated with
            // this process number (rank)
            if (stage%PLMPI::size==PLMPI::rank)
            {
#endif
                int sample = stage % nsamples;
                if( sample == begin_sample )
                    train_stats->forget();
                train_set->getExample(sample, input, target, weight);
                fineTuneByGradientDescent( input, train_costs );
                train_stats->update( train_costs );

                if( pb )
                    pb->update( stage - init_stage + 1 );
#if USING_MPI
            }
            // time to share among processors
            if (stage%total_bsize==0 || stage==nstages-1)
                shareParamsMPI();
#endif
        }
        train_stats->finalize(); // finalize statistics for this epoch
    }
    else
        PLERROR( "Fine-tuning methods other than \"EGD\" are not"
                 " implemented yet." );

    if( pb )
        delete pb;

    MODULE_LOG << "Training finished" << endl << endl;
}
```
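train() reads training_schedule as cumulative stage boundaries: greedy training of layer k runs until stage training_schedule[k], the joint step until training_schedule[n_layers-2], and fine-tuning until nstages. Every stage processes one example, stage % nsamples, and under USING_MPI a process only handles the stages with stage % PLMPI::size == PLMPI::rank. A hypothetical sketch of that bookkeeping, assuming training_schedule is non-decreasing; the function names are illustrative only.

```cpp
#include <cassert>
#include <vector>

// Hypothetical: which phase of train() a given stage belongs to.
// Phases 0..n_layers-3 are greedy layer-wise training, phase n_layers-2
// is the joint (target + penultimate) step, and n_layers-1 is fine-tuning.
int phase_of_stage(int stage, const std::vector<int>& schedule, int n_layers)
{
    assert(n_layers >= 2);
    assert(static_cast<int>(schedule.size()) >= n_layers - 1);
    for (int k = 0; k <= n_layers - 2; ++k)
        if (stage < schedule[k])
            return k;
    return n_layers - 1;
}

// Each stage processes exactly one training example, cycling through the set.
int sample_of_stage(int stage, int nsamples) { return stage % nsamples; }

// Under USING_MPI, a process only handles the stages assigned to its rank.
bool stage_belongs_to(int stage, int rank, int n_procs)
{
    return stage % n_procs == rank;
}
```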

void PLearn::GaussPartSupervisedDBN::variance(Mat & cov) const [virtual]

Return Var[Y | x].

Reimplemented from PLearn::PDistribution.

Definition at line 602 of file GaussPartSupervisedDBN.cc.

References PLERROR.

```cpp
{
    PLERROR("variance not implemented for GaussPartSupervisedDBN");
}
```

Reimplemented from PLearn::PDistribution.

Definition at line 298 of file GaussPartSupervisedDBN.h.

TVec< Vec > PLearn::GaussPartSupervisedDBN::activation_gradients [mutable, protected]

Gradients of the cost with respect to the activations (outputs of params).

Definition at line 314 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), fineTuneByGradientDescent(), and supervisedContrastiveDivergenceStep().

TVec< Vec > PLearn::GaussPartSupervisedDBN::expectation_gradients [mutable, protected]

Gradients of the cost with respect to the expectations (outputs of layers).

Definition at line 317 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), fineTuneByGradientDescent(), jointGreedyStep(), and supervisedContrastiveDivergenceStep().

Final momentum.

Definition at line 83 of file GaussPartSupervisedDBN.h.

Referenced by declareOptions(), and train().

The learning rate used during the gradient descent.

Definition at line 77 of file GaussPartSupervisedDBN.h.

Referenced by build_(), declareOptions(), and train().

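Method for fine-tuning the whole network after greedy learning.

As implemented in train(), one of: "" (no fine-tuning) or "EGD" (gradient descent with one example per stage, using fineTuneByGradientDescent()); any other value raises an error.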

Definition at line 155 of file GaussPartSupervisedDBN.h.

Referenced by build_(), declareOptions(), and train().

Initial momentum.

Definition at line 80 of file GaussPartSupervisedDBN.h.

Referenced by declareOptions(), and train().

The method used to initialize the weights:

Definition at line 97 of file GaussPartSupervisedDBN.h.

Referenced by build_(), build_params(), and declareOptions().

RBMParameters linking the unsupervised layers.

params[i] links layers[i] and layers[i+1]

Definition at line 122 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), build_regressors(), declareOptions(), expectation(), fineTuneByGradientDescent(), forget(), greedyStep(), jointGreedyStep(), makeDeepCopyFromShallowCopy(), and train().

Concatenation of target_layer and layers[n_layers-2].

Definition at line 114 of file GaussPartSupervisedDBN.h.

Referenced by build_layers(), declareOptions(), jointGreedyStep(), and makeDeepCopyFromShallowCopy().

Parameters linking joint_layer and last_layer.

Contains params[n_layers-2] and target_params.

Definition at line 129 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), declareOptions(), expectation(), fineTuneByGradientDescent(), jointGreedyStep(), makeDeepCopyFromShallowCopy(), and train().

Last layer, learning joint representations of input and target.

Definition at line 108 of file GaussPartSupervisedDBN.h.

Referenced by build_layers(), build_params(), declareOptions(), jointGreedyStep(), and makeDeepCopyFromShallowCopy().

Layers that learn representations of the input: layers[0] is the input layer and layers[n_layers-1] is the last layer.

Definition at line 105 of file GaussPartSupervisedDBN.h.

Referenced by build_(), build_layers(), build_params(), declareOptions(), expectation(), fineTuneByGradientDescent(), forget(), greedyStep(), jointGreedyStep(), makeDeepCopyFromShallowCopy(), and setPredictorPredictedSizes().

The learning rate used during greedy learning.

Definition at line 71 of file GaussPartSupervisedDBN.h.

Referenced by build_(), build_params(), declareOptions(), jointGreedyStep(), supervisedContrastiveDivergenceStep(), and train().

Number of samples to be seen by layer i before its momentum switches from initial_momentum to final_momentum.

Definition at line 87 of file GaussPartSupervisedDBN.h.

Referenced by declareOptions(), and train().
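In train(), momentum_switch_time is counted from the start of each phase: for greedy layer k > 0 the switch stage is momentum_switch_time + training_schedule[k-1], and for the joint step it is momentum_switch_time + training_schedule[n_layers-3] (or momentum_switch_time alone when n_layers < 3). Momentum stays at initial_momentum up to and including that stage. For greedy layers the value is chosen once, when the layer's training starts, and the listed code assigns no momentum to input_params. A hypothetical sketch of the computation (function names are illustrative):

```cpp
#include <cassert>
#include <vector>

// Hypothetical: stage at which phase k (greedy layer k, or the joint step
// when k == n_layers-2) switches momentum, counted from the phase start.
int momentum_switch_stage(int k, const std::vector<int>& schedule,
                          int momentum_switch_time)
{
    assert(k >= 0 && k < static_cast<int>(schedule.size()));
    const int phase_start = (k == 0) ? 0 : schedule[k - 1];
    return momentum_switch_time + phase_start;
}

// Initial momentum up to and including switch_stage, final momentum after.
double momentum_at(int stage, int switch_stage,
                   double initial_momentum, double final_momentum)
{
    return stage <= switch_stage ? initial_momentum : final_momentum;
}
```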

Number of layers, including input layer and last layer, but not target layer.

Definition at line 101 of file GaussPartSupervisedDBN.h.

Referenced by build_(), build_layers(), build_params(), build_regressors(), declareOptions(), expectation(), fineTuneByGradientDescent(), forget(), jointGreedyStep(), and train().

Vec PLearn::GaussPartSupervisedDBN::output_gradient [mutable, protected]

Gradient with respect to the output activations.

Definition at line 320 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), fineTuneByGradientDescent(), and jointGreedyStep().

Only used when USING_MPI is set, for parallelization: the number of examples seen by one process during training after which the weight updates are shared among all the processes.

Definition at line 139 of file GaussPartSupervisedDBN.h.

Referenced by declareOptions(), and train().

RBMParameters linking the unsupervised layers.

params[i] links layers[i] and layers[i+1]

Definition at line 118 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), build_regressors(), declareOptions(), expectation(), fineTuneByGradientDescent(), forget(), greedyStep(), jointGreedyStep(), makeDeepCopyFromShallowCopy(), and train().

Logistic regressors that will provide the supervised gradient for each RBMParameters.

Definition at line 133 of file GaussPartSupervisedDBN.h.

Referenced by build_regressors(), declareOptions(), and supervisedContrastiveDivergenceStep().

Only used when USING_MPI is set, for parallelization: whether to sum (rather than average) the delta-w contributions from different processes.

Definition at line 143 of file GaussPartSupervisedDBN.h.

Referenced by declareOptions(), and train().

The learning rates used for the supervised part during greedy learning.

Definition at line 74 of file GaussPartSupervisedDBN.h.

Referenced by build_(), build_regressors(), declareOptions(), jointGreedyStep(), and supervisedContrastiveDivergenceStep().

Target (or label) layer.

Definition at line 111 of file GaussPartSupervisedDBN.h.

Referenced by build_layers(), build_params(), declareOptions(), expectation(), fineTuneByGradientDescent(), forget(), jointGreedyStep(), makeDeepCopyFromShallowCopy(), and setPredictorPredictedSizes().

Parameters linking target_layer and last_layer.

Definition at line 125 of file GaussPartSupervisedDBN.h.

Referenced by build_params(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

Number of examples to use during each of the different greedy steps of the training phase.

Definition at line 147 of file GaussPartSupervisedDBN.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vector specifying which information to use during the contrastive divergence step:

Definition at line 170 of file GaussPartSupervisedDBN.h.

Referenced by contrastiveDivergenceStep(), declareOptions(), and GaussPartSupervisedDBN().

The weight decay.

Definition at line 90 of file GaussPartSupervisedDBN.h.

Referenced by declareOptions().

1.7.4
