|

Date

|

Topic

|

Readings

|

Notes

|

| Introduction / background |

|

Jan 13

|

Lecture: Introduction

|

|

notes

|

|

Jan 14

|

Probability/linear algebra review

|

|

notes

|

|

Jan 20

|

Python review

|

|

notes

logsumexp.py

|

| Learning about images |

|

Jan 21

|

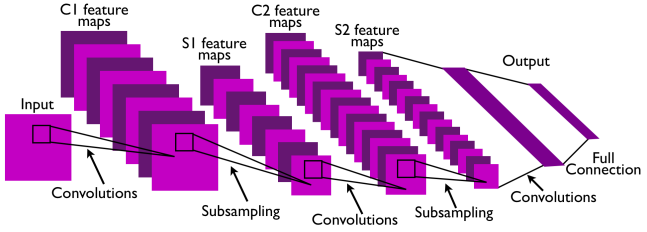

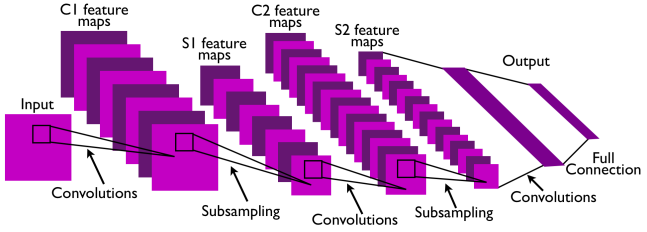

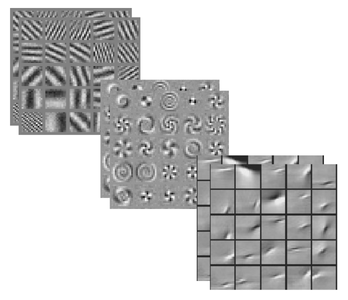

Filtering, convolution, phasor signals

|

Reading 1:

barlow

or

attneave

|

notes

|

|

Jan 27

|

Fourier transforms on images

|

|

notes

Reading 1 due

|

|

Jan 28

|

Fourier transforms on images (cont.)

|

Reading 2: sparse coding (Foldiak, Endres; scholarpedia 2008)

|

|

|

Feb 3

|

Sampling, leakage, windowing

|

|

notes

Reading 2 due

|

|

Feb 4

|

PCA/whitening and Fourier transform

|

|

notes

assignment 1

|

|

Feb 10

|

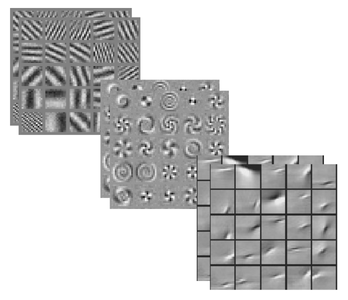

Whitening vs independence, ICA

|

Reading 3: Emergence of Simple-Cell Receptive Field Properties by Learning a Sparse Code for Natural Images. (Olshausen, Field 1996)

|

notes

|

|

Feb 11

|

ICA (cont.)

|

Reading 4: The "independent components" of natural scenes are edge filters (Bell, Sejnowski 1997)

|

Assignment 1 due

assignment 2

|

|

Feb 17

|

Overcompleteness and energy-based models,

A1 discussion

|

|

notes

Reading 3 due

convolve.py

solution A1 Q3

solution A1 Q4

|

|

Feb 18

|

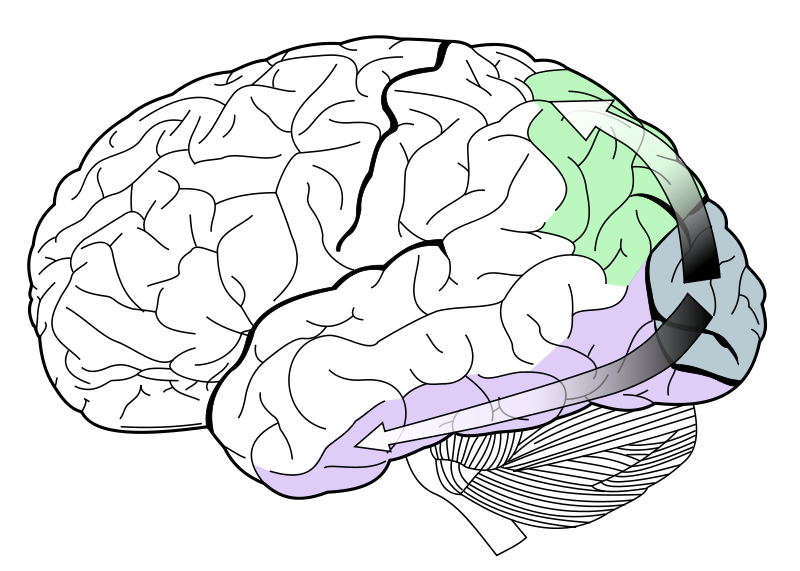

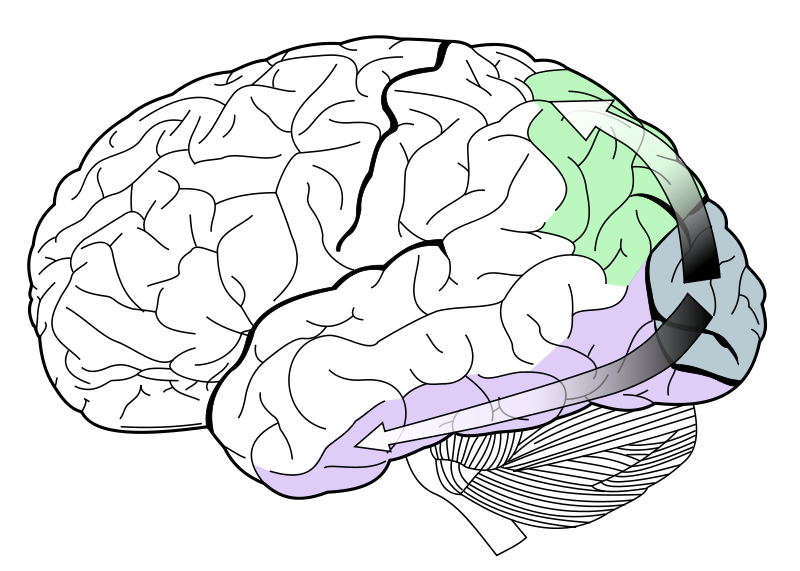

Some biological aspects of vision

|

Reading 5: Feature Discovery by Competitive Learning (Rumelhart, Zipser; 1985) (email instructor if you cannot access the pdf)

|

notes

Reading 4 due

|

|

Feb 24

|

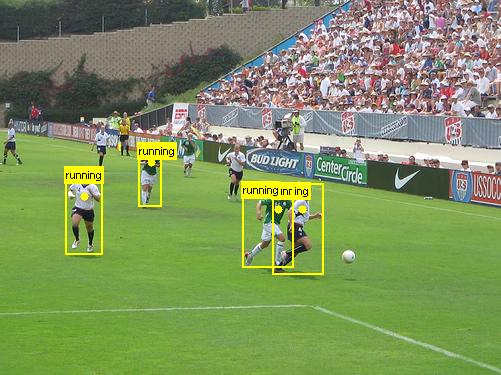

K-means/Hebbian-style feature learning

|

|

notes

Reading 5 due

|

|

Feb 25

|

Backprop review,

autoencoders and energy functions

|

|

notes

Assignment 2 due

|

|

Mar 10

|

A2 discussion

|

|

logreg.py

solution A2 Q4

solution A2 Q5

solution A2 Q6 global features

solution A2 Q6 local features

|

|

Mar 11

|

* no class *

|

* no class *

|

|

|

Mar 17

|

Paper presentations and discussion

|

Schedule:

- Fast Inference in Sparse Coding Algorithms with Applications to Object Recognition. K. Kavukcuoglu et al. 2008. [pdf] (Samira S.)

- An analysis of single-layer networks in unsupervised feature learning. A. Coates, H. Lee, A. Y. Ng. AISTATS 2011. [pdf]

Using unsupervised local features for recognition. (Soroush M.)

|

|

|

Mar 18

|

Paper presentations and discussion

|

Schedule:

- Building high-level features using large scale unsupervised learning. Q. Le, M.A. Ranzato, R. Monga, M. Devin, K. Chen, G. Corrado, J. Dean, A. Ng. ICML 2012. [pdf]

The "google-brain" paper that got a lot of publicity: a stack of autoencoders for unsupervised learning. (Jose S.)

- On Random Weights and Unsupervised Feature Learning. A. Saxe et al. ICML 2011. [pdf]

This paper shows why, in certain architectures, random filters work just fine for recognition. (Ishmael B.)

- Transforming Auto-encoders. G. Hinton, et al. ICANN 2011. [pdf]

Another (somewhat speculative) paper about what's wrong with conv-nets: how to learn about geometric relationships instead of just statistics over local structure. (Dong Huyn L.)

|

assignment 3

reaction report due, on one of the papers presented this week

|

|

Mar 24

|

Paper presentations and discussion

|

Schedule:

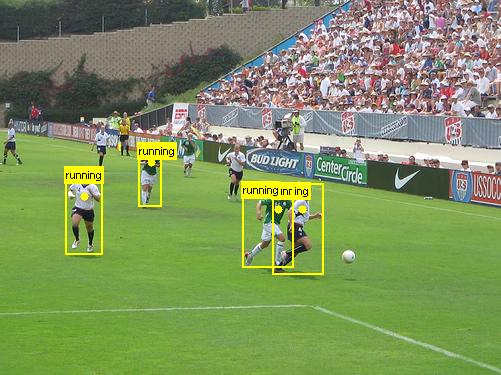

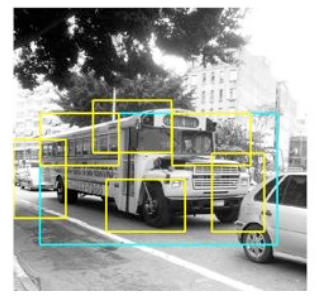

- ImageNet Classification with Deep Convolutional Neural Networks. A. Krizhevsky, I. Sutskever, G.E. Hinton. NIPS 2012. [pdf] (Alexandre N.)

The paper describing the model that re-ignited interest in conv-nets by winning the ImageNet competition in 2012.

- Going Deeper with Convolutions. Szegedy et al. 2014. [pdf]

The paper describes GoogLeNet, which won the 2014 ImageNet competition with a performance most people doubted would be possible anytime soon. (see also this related paper)

(Ying Z)

- Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. Ioffe, Szegedy 2015. [pdf] (Zhouhan L.)

|

|

|

Mar 25

|

Paper presentations and discussion

|

Schedule:

- Disjunctive Normal Networks. Sajjadi et al. 2014. [pdf] (Mohammad P.)

- DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. Donahue et al. [pdf]

One (of many) follow-up works demonstrating the generality of learned features. (also see: this related paper) (Mat R.)

- C3D: Generic Features for Video Analysis. Tran et al. 2015. [pdf] (Thomas B.)

|

|

|

Mar 31

|

Paper presentations and discussion

|

cancelled due to strike

|

A3 due

|

|

Apr 1

|

Paper presentations and discussion

|

cancelled due to strike

|

|

|

Apr 7

|

Paper presentations and discussion

|

Preliminary schedule:

- Teaching Deep Convolutional Neural Networks to Play Go (C. Clark, A. Storkey) 2015 [pdf] (Kyle K.)

- Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-Scale Convolutional Architecture. Eigen, Fergus 2014. [pdf]

Extract richer scene information with conv-nets. (Faezeh A.)

- Recurrent Models of Visual Attention (Mnih et al.) [pdf]

Attention as a way to (potentially) go beyond conv-nets (see also this follow-up work) (Junyoung C)

- DRAW: A Recurrent Neural Network For Image Generation [pdf]

Generating natural images is a common approach to visualizing what a network "knows" about images. This very recent paper gives the best existing result of this kind. It uses an RNN to literally "draw" the image. (Jeremy Z)

- Long-term Recurrent Convolutional Networks for Visual Recognition and Description (Donahue et al.) [pdf]

Combine CNNs with LSTM RNNs for generating image and video descriptions. (Matthieu C.)

|

|

|

Apr 8

|

Paper presentations and discussion

|

Preliminary schedule:

- WSABIE: Scaling Up To Large Vocabulary Image Annotation. Weston et al. 2011. [pdf] (Bart v. M.) (See also this paper)

- Video (Language) Modeling: A Baseline for Generative Models of Natural Videos (Ranzato et al.) [pdf]

A baseline and experiments on unsupervised visual learning by predicting the future of natural videos. (Didier N.)

- Computing the Stereo Matching Cost with a Convolutional Neural Network. Zbontar, LeCun 2014. [pdf] (Benjamin P.)

- How transferable are features in deep neural networks? Yosinski et al. 2014. [pdf] (Harm d. V.)

- Explaining and Harnessing Adversarial Examples. Goodfellow et al. 2015. [pdf] (Saizheng Z)

|

solution A3 Q3 pooling

solution A3 Q3 backprop

A3 cnn

reaction report due (1 for this week)

|

|

Apr 14

|

Project presentations and discussion

Wrap-up

|

|

|

|

|